Abstract

We prove that the Nelder–Mead simplex method converges in the sense that the simplex vertices converge to a common limit point with a probability of one. The result may explain the practical usefulness of the Nelder–Mead method.

MSC:

65K10; 90C56

1. Introduction

The Nelder–Mead (NM) simplex method [1] is a direct method for the solution of the minimization problem

$$ \min_{x \in \mathbb{R}^n} f(x), \qquad f: \mathbb{R}^n \to \mathbb{R}, $$

where f is continuous. It is an “incredibly popular method” (see [2]) in derivative-free optimization [3,4,5,6,7] and in various application areas (see, e.g., [8]). The Nelder–Mead simplex method became popular especially in computational chemistry, as shown by the book [3] and the references therein. The original Nelder–Mead paper [1] has 36,809 citations in Google Scholar as of 26 February 2023, reflecting a great variety of applications and a great number of mainly heuristic variants, occasionally combined with other techniques. The Nelder–Mead algorithm can also be found in many software libraries and systems, such as IMSL, NAG, Matlab, Scilab, Python SciPy and R [9]. The popularity of the method is due to its observed good performance in practice. Despite this, only a few theoretical results are known on its convergence (see, e.g., [2,10,11,12]).

The counterexample of McKinnon [13] is a strictly convex function with continuous derivatives on which the Nelder–Mead algorithm converges to a nonstationary point of f. For strictly convex functions with bounded level sets, Lagarias, Reeds, Wright and Wright [10] proved that the function values at all simplex vertices converge to the same value and that the diameters of the simplices converge to zero. Kelley [4,14] gave a sufficient-decrease condition for the average of the objective function values (evaluated at the simplex vertices) and proved that if this condition is satisfied during the process, then any accumulation point of the simplices is a critical point of f. Han and Neumann [15] investigated the convergence to 0 and the effect of dimensionality on the function $f(x) = x^T x$ ($x \in \mathbb{R}^n$). For the restricted Nelder–Mead algorithm, Lagarias, Poonen and Wright [11] later proved that if $f: \mathbb{R}^2 \to \mathbb{R}$ is a twice-continuously differentiable function with bounded level sets and an everywhere positive definite Hessian, then the algorithm converges to the unique minimizer of f.

If the objective function f does not satisfy the conditions of [10] or [11], then a number of counterexamples show that the Nelder–Mead method may have different types of convergence behavior. It is possible that the function values at the simplex vertices converge to a common value, while the function f has no finite minimum and the simplex sequence is unbounded (Examples 1 and 2 of [16]). It is also possible that the simplex vertices converge to the same point, but the limit point is not a stationary point of f ([13], Examples 3 and 4 of [16]). Other examples indicate that the simplex sequence may converge to a limit simplex of positive diameter, resulting in different limit values of f at the vertices of the limit simplex (Examples 4 and 5 of [16]).

Here, we study the convergence of the simplex vertices to a common limit point. In papers [16,17], we proved this type of convergence under sufficient conditions for and , respectively. However, the key assumption of papers [16,17] was related to an algorithmically undecidable problem and required ways to circumvent it.

In this paper, we prove two new theorems for the convergence of the Nelder–Mead method in low-dimensional spaces (). Theorem 1 replaces the key assumption of [16,17] with an algorithmically computable one. It is the basis of Theorem 2 of Section 6, which proves that, under a simple random model of the steps, the Nelder–Mead method converges with a probability of one. This result may explain the good behavior of the Nelder–Mead method observed in practice. The case of two-dimensional strictly convex functions is treated in Remarks 2 and 4.

2. The Nelder–Mead Simplex Method

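There are several forms and variants of the Nelder–Mead method. We use the version of Lagarias, Reeds, Wright and Wright [10]. The vertices of the initial simplex are denoted by $x_1^{(0)}, \ldots, x_{n+1}^{(0)} \in \mathbb{R}^n$. It is assumed that the vertices are ordered such that

$$ f(x_1^{(0)}) \le f(x_2^{(0)}) \le \cdots \le f(x_{n+1}^{(0)}), $$

and this condition is maintained during the iterations of the Nelder–Mead algorithm. The simplex of iteration k is denoted by $S^{(k)} = [x_1^{(k)}, \ldots, x_{n+1}^{(k)}] \in \mathbb{R}^{n \times (n+1)}$. Define $x_c^{(k)} = \frac{1}{n}\sum_{i=1}^{n} x_i^{(k)}$ and $x^{(k)}(\lambda) = (1+\lambda)\,x_c^{(k)} - \lambda\,x_{n+1}^{(k)}$. The reflection, expansion and contraction points of simplex $S^{(k)}$ are defined by

$$ x_r^{(k)} = x^{(k)}(\alpha), \quad x_e^{(k)} = x^{(k)}(\alpha\beta), \quad x_{oc}^{(k)} = x^{(k)}(\alpha\gamma), \quad x_{ic}^{(k)} = x^{(k)}(-\gamma), $$

respectively, where $\alpha > 0$, $\beta > 1$ and $0 < \gamma < 1$ are the reflection, expansion and contraction parameters (the standard choice is $\alpha = 1$, $\beta = 2$, $\gamma = 1/2$). The function values at the vertices and at the points $x_r^{(k)}$, $x_e^{(k)}$, $x_{oc}^{(k)}$ and $x_{ic}^{(k)}$ are denoted by $f_i^{(k)} = f(x_i^{(k)})$ ($i = 1, \ldots, n+1$), $f_r^{(k)}$, $f_e^{(k)}$, $f_{oc}^{(k)}$ and $f_{ic}^{(k)}$, respectively.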

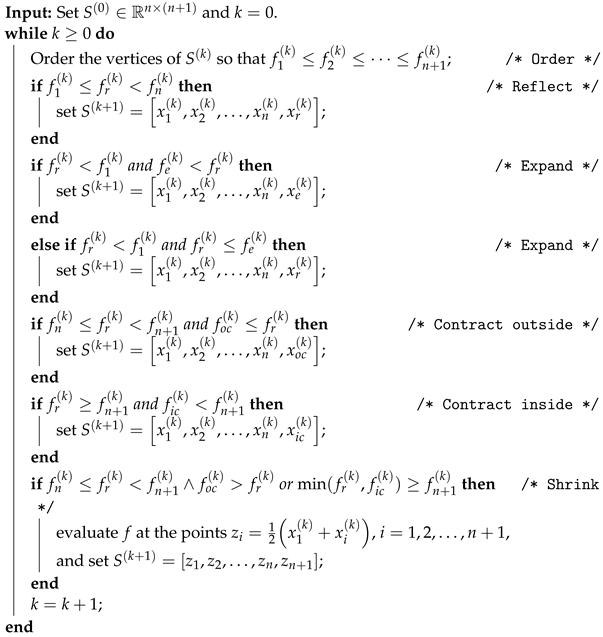

The Nelder–Mead simplex method (Algorithm 1) is a nonstationary iterative method where the kernel of the iteration loop consists of ordering the simplex vertices and exactly one of four possible operations. The logical conditions for these operations (reflection, expansion, contraction and shrinking) are mutually exclusive.

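For the order operation, there are two rules that apply to reindexing after each iteration. If a nonshrink step occurs, then the worst vertex $x_{n+1}^{(k)}$ is replaced by a new point $v \in \mathbb{R}^n$ with $f(v) < f(x_{n+1}^{(k)})$. Let

$$ j = \min_{0 \le \ell \le n} \{\ell \mid f(v) < f(x_{\ell+1}^{(k)})\}. $$

Then the new simplex vertices are

$$ x_i^{(k+1)} = x_i^{(k)} \ (1 \le i \le j), \qquad x_{j+1}^{(k+1)} = v, \qquad x_i^{(k+1)} = x_{i-1}^{(k)} \ (j+2 \le i \le n+1). \tag{2} $$

This rule inserts v into the ordering with the highest possible index. If shrinking occurs, then the points

$$ v_i = x_1^{(k)} + \delta\,(x_i^{(k)} - x_1^{(k)}) \quad (i = 2, \ldots, n+1), $$

with shrink parameter $0 < \delta < 1$ (standard choice $\delta = 1/2$), replace $x_2^{(k)}, \ldots, x_{n+1}^{(k)}$, and a reordering takes place. If $\min_{2 \le i \le n+1} f(v_i) = f(x_1^{(k)})$, then by convention $x_1^{(k+1)} = x_1^{(k)}$. Hence, it is guaranteed that

$$ f(x_1^{(k+1)}) \le f(x_2^{(k+1)}) \le \cdots \le f(x_{n+1}^{(k+1)}). \tag{3} $$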

The insertion rule (2) implies that if the function f is bounded below on $\mathbb{R}^n$ and only a finite number of shrink iterations occur, then each sequence $\{f(x_i^{(k)})\}_{k \ge 0}$ converges to some limit $f_i^*$ for $i = 1, \ldots, n+1$ (see Lemma 3.3 of [10]).
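The insertion rule (2) can be sketched in a few lines of Python. This is a minimal illustration, not the author's code: it assumes that the vertices are kept in a list ordered by nondecreasing function value, and the function name `insert_vertex` is hypothetical. The worst vertex is dropped and v is placed in front of the first vertex whose value is strictly larger than f(v), so that among ties v receives the highest possible index.

```python
def insert_vertex(vertices, fvals, v, fv):
    """Apply the insertion rule (2) after a nonshrink step.

    vertices, fvals: the n+1 current vertices and their function values,
    ordered so that fvals is nondecreasing. The worst vertex is dropped and
    v is placed before the first vertex whose value is strictly larger than
    f(v), i.e. with the highest possible index among ties.
    """
    if not fv < fvals[-1]:
        raise ValueError("a nonshrink step requires f(v) < f(x_{n+1})")
    # 0-based version of j = min{l : 0 <= l <= n, f(v) < f(x_{l+1})}
    j = next(l for l in range(len(fvals)) if fv < fvals[l])
    kept_x, kept_f = vertices[:-1], fvals[:-1]
    return kept_x[:j] + [v] + kept_x[j:], kept_f[:j] + [fv] + kept_f[j:]


# Ties: v with f(v) = 2 goes after both existing vertices with value 2.
print(insert_vertex(["a", "b", "c", "d"], [1, 2, 2, 5], "v", 2))
# (['a', 'b', 'c', 'v'], [1, 2, 2, 2])
print(insert_vertex(["a", "b", "c", "d"], [1, 2, 2, 5], "v", 1.5))
# (['a', 'v', 'b', 'c'], [1, 1.5, 2, 2])
```

Because the kept values before position j are all at most f(v) and the value at position j is strictly larger, the returned list stays ordered, which is exactly what condition (3) requires.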

Algorithm 1: Nelder–Mead algorithm.
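For readers who prefer executable pseudocode, the following is a compact Python sketch of one possible implementation of Algorithm 1, following the rules of Lagarias, Reeds, Wright and Wright [10] as summarized in this section. It assumes reflection, expansion, contraction and shrink parameters α = 1, β = 2, γ = 1/2, δ = 1/2 and runs a fixed number of iterations instead of using a stopping test; the function name `nelder_mead` and its signature are hypothetical and not taken from any library.

```python
def nelder_mead(f, simplex, alpha=1.0, beta=2.0, gamma=0.5, delta=0.5, iters=500):
    """Run a fixed number of Nelder-Mead iterations (LRWW-style rules).

    simplex: list of n+1 points (lists of floats) in R^n.
    Returns the final vertices and values, ordered by nondecreasing f.
    """
    xs = sorted((list(p) for p in simplex), key=f)   # stable sort
    fs = [f(p) for p in xs]
    n = len(xs) - 1

    def point(xc, xw, lam):
        # x(lam) = (1 + lam) * x_c - lam * x_{n+1}
        return [(1.0 + lam) * c - lam * w for c, w in zip(xc, xw)]

    for _ in range(iters):
        xc = [sum(coords) / n for coords in zip(*xs[:n])]   # centroid of the n best
        xw = xs[n]
        xr = point(xc, xw, alpha)
        fr = f(xr)
        v = fv = None
        if fs[0] <= fr < fs[n - 1]:                # reflection
            v, fv = xr, fr
        elif fr < fs[0]:                           # expansion
            xe = point(xc, xw, alpha * beta)
            fe = f(xe)
            v, fv = (xe, fe) if fe < fr else (xr, fr)
        elif fr < fs[n]:                           # outside contraction
            xoc = point(xc, xw, alpha * gamma)
            foc = f(xoc)
            if foc <= fr:
                v, fv = xoc, foc
        else:                                      # inside contraction
            xic = point(xc, xw, -gamma)
            fic = f(xic)
            if fic < fs[n]:
                v, fv = xic, fic

        if v is not None:
            # insertion rule (2): drop the worst vertex, insert v with the highest possible index
            j = next(l for l in range(n + 1) if fv < fs[l])
            xs = xs[:j] + [v] + xs[j:n]
            fs = fs[:j] + [fv] + fs[j:n]
        else:
            # shrink toward x_1; the stable sort keeps x_1 first when it ties for the best value
            pts = [xs[0]] + [[a + delta * (b - a) for a, b in zip(xs[0], p)] for p in xs[1:]]
            vals = [fs[0]] + [f(p) for p in pts[1:]]
            order = sorted(range(n + 1), key=lambda i: vals[i])
            xs = [pts[i] for i in order]
            fs = [vals[i] for i in order]
    return xs, fs


# Example: a strictly convex quadratic in R^2 with minimizer (1, -2).
quad = lambda x: (x[0] - 1.0) ** 2 + 3.0 * (x[1] + 2.0) ** 2
xs, fs = nelder_mead(quad, [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
print(xs[0], fs[0])
```

Exactly one of the four operations is performed per iteration, and the ordering of the vertex list is maintained after every step, mirroring the structure described for Algorithm 1.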

3. A Matrix Form of the Nelder–Mead Method

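Assume that simplex $S = S^{(k)} = [x_1, \ldots, x_{n+1}]$ is such that condition (3) holds. If the incoming vertex v is of the form

$$ v = (1+\lambda)\,x_c - \lambda\,x_{n+1}, \qquad x_c = \frac{1}{n}\sum_{i=1}^{n} x_i, $$

for some $\lambda \ne 0$, we can define the transformation matrix

$$ T(\lambda) = \begin{bmatrix} I_n & \frac{1+\lambda}{n}\,e \\ 0 & -\lambda \end{bmatrix} \in \mathbb{R}^{(n+1)\times(n+1)}, \qquad e = [1, \ldots, 1]^T \in \mathbb{R}^n, $$

so that $ST(\lambda) = [x_1, \ldots, x_n, v]$. Since v is not necessarily the worst vertex of $ST(\lambda)$, we have to reorder the matrix columns according to the insertion rule (2). Define the permutation matrix

$$ P_j = [e_1, \ldots, e_{j-1}, e_{n+1}, e_j, \ldots, e_n] \quad (j = 1, \ldots, n+1), $$

where $e_i$ is the ith unit vector of $\mathbb{R}^{n+1}$. Then, $ST(\lambda)P_j = [x_1, \ldots, x_{j-1}, v, x_j, \ldots, x_n]$ is the new simplex $S^{(k+1)}$ when v takes position j. The following cases are possible: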

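| Operation | New simplex |
|---|---|
| Reflection ($\lambda = \alpha$) | $ST(\alpha)P_j$ ($2 \le j \le n$) |
| Expansion ($f_e < f_r$) | $ST(\alpha\beta)P_1$ |
| Expansion ($f_e \ge f_r$) | $ST(\alpha)P_1$ |
| Outside contraction ($\lambda = \alpha\gamma$) | $ST(\alpha\gamma)P_j$ ($1 \le j \le n+1$) |
| Inside contraction ($\lambda = -\gamma$) | $ST(-\gamma)P_j$ ($1 \le j \le n+1$) |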

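For shrinking, the new simplex is

$$ S^{(k+1)} = S^{(k)}\,T_{shr}\,P, $$

where

$$ T_{shr} = \begin{bmatrix} 1 & (1-\delta)\,e^T \\ 0 & \delta I_n \end{bmatrix}, $$

the permutation matrix $P \in \mathcal{P}_{n+1}$ is defined by the ordering condition (3), and $\mathcal{P}_{n+1}$ is the set of all possible permutation matrices of order $n+1$.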

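Hence, for $k \ge 0$,

$$ S^{(k+1)} = S^{(k)} T_k, \qquad T_k \in \mathcal{T}, $$

where

$$ \mathcal{T} = \{T(\alpha)P_j\}_{j=1}^{n} \cup \{T(\alpha\beta)P_1\} \cup \{T(\alpha\gamma)P_j\}_{j=1}^{n+1} \cup \{T(-\gamma)P_j\}_{j=1}^{n+1} \cup \mathcal{T}_{shr} $$

and

$$ \mathcal{T}_{shr} = \{T_{shr}P : P \in \mathcal{P}_{n+1}\}. $$

Note that $\mathcal{T}$ contains at most $3(n+1) + (n+1)!$ matrices.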

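Observe that the transformation matrices $T(\lambda)$ ($\lambda \ne 0$), $T_{shr}$ and $P$ ($P \in \mathcal{P}_{n+1}$) are nonsingular and have the property that their column sums are equal to one (see, e.g., [16,17]). The latter property means that $e^T T = e^T$ with $e = [1, \ldots, 1]^T \in \mathbb{R}^{n+1}$; hence, 1 is an eigenvalue of every $T \in \mathcal{T}$, and $\|T\| \ge \rho(T) \ge 1$ ($T \in \mathcal{T}$) holds in any induced matrix norm.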
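The matrix form can be checked numerically. The pure-Python sketch below assumes n = 2 and the standard parameters (α, β, γ, δ) = (1, 2, 1/2, 1/2); it builds $T(\lambda) = \begin{bmatrix} I_n & \frac{1+\lambda}{n}e \\ 0 & -\lambda\end{bmatrix}$, the permutation $P_j$ that moves the last column to position j, and $T_{shr} = \begin{bmatrix} 1 & (1-\delta)e^T \\ 0 & \delta I_n\end{bmatrix}$. All helper names (`T_lam`, `P_j`, `T_shr`, `transformation_set`) are hypothetical. It verifies one reflection step, one shrink step, the unit column sums and the bound $\|T\|_1 \ge 1$.

```python
import itertools


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def T_lam(n, lam):
    """T(lam) = [[I_n, (1 + lam)/n * e], [0, -lam]]."""
    T = [[1.0 if i == j else 0.0 for j in range(n + 1)] for i in range(n + 1)]
    for i in range(n):
        T[i][n] = (1.0 + lam) / n
    T[n][n] = -lam
    return T


def P_j(n, j):
    """P_j = [e_1, ..., e_{j-1}, e_{n+1}, e_j, ..., e_n] for 1 <= j <= n+1."""
    cols = list(range(j - 1)) + [n] + list(range(j - 1, n))   # 0-based unit-vector indices
    return [[1.0 if cols[c] == r else 0.0 for c in range(n + 1)] for r in range(n + 1)]


def T_shr(n, delta):
    """T_shr = [[1, (1 - delta) e^T], [0, delta I_n]]."""
    T = [[0.0] * (n + 1) for _ in range(n + 1)]
    T[0][0] = 1.0
    for j in range(1, n + 1):
        T[0][j] = 1.0 - delta
        T[j][j] = delta
    return T


def perm_matrix(p):
    return [[1.0 if p[c] == r else 0.0 for c in range(len(p))] for r in range(len(p))]


def transformation_set(n, alpha=1.0, beta=2.0, gamma=0.5, delta=0.5):
    Ts = [matmul(T_lam(n, alpha), P_j(n, j)) for j in range(1, n + 1)]
    Ts.append(matmul(T_lam(n, alpha * beta), P_j(n, 1)))
    Ts += [matmul(T_lam(n, alpha * gamma), P_j(n, j)) for j in range(1, n + 2)]
    Ts += [matmul(T_lam(n, -gamma), P_j(n, j)) for j in range(1, n + 2)]
    Ts += [matmul(T_shr(n, delta), perm_matrix(p)) for p in itertools.permutations(range(n + 1))]
    return Ts


def col_sums(A):
    return [sum(row[j] for row in A) for j in range(len(A[0]))]


def norm1(A):   # induced 1-norm = maximum absolute column sum
    return max(sum(abs(row[j]) for row in A) for j in range(len(A[0])))


# Simplex with vertices (0,0), (1,0), (0,1), stored column-wise as an n x (n+1) matrix.
S = [[0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
# Reflection (lam = 1): v = 2*x_c - x_3 = (1, -1); inserting v at position 2 gives [x_1, v, x_2].
print(matmul(matmul(S, T_lam(2, 1.0)), P_j(2, 2)))   # [[0.0, 1.0, 1.0], [0.0, -1.0, 0.0]]
# Shrink with delta = 1/2: vertices x_1 + (x_i - x_1)/2.
print(matmul(S, T_shr(2, 0.5)))                       # [[0.0, 0.5, 0.0], [0.0, 0.0, 0.5]]

Ts = transformation_set(2)
print(len(Ts))                                                      # 15
print(all(abs(s - 1.0) < 1e-12 for T in Ts for s in col_sums(T)))   # True
print(min(norm1(T) for T in Ts) >= 1.0 - 1e-12)                     # True
```

The 1-norm is used here only because it is easy to compute by hand; the column-sum argument gives $\|T\| \ge 1$ in every induced norm.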

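We seek conditions that guarantee that

$$ S^{(k)} \to \hat{x}\,e^T \quad (k \to \infty) $$

holds for some vector $\hat{x} \in \mathbb{R}^n$, where $e = [1, \ldots, 1]^T \in \mathbb{R}^{n+1}$. If so, then $x_i^{(k)} \to \hat{x}$ for $i = 1, \ldots, n+1$, and both $f(x_i^{(k)}) \to f(\hat{x})$ (provided f is continuous at $\hat{x}$) and $\operatorname{diam}(S^{(k)}) \to 0$ follow. In fact, we prove the convergence of the right infinite matrix product

$$ T_0 T_1 T_2 \cdots \tag{11} $$

to a rank-one matrix of the form $w e^T$ ($w \in \mathbb{R}^{n+1}$), from which $S^{(k)} = S^{(0)} T_0 \cdots T_{k-1} \to S^{(0)} w e^T$, that is, $\hat{x} = S^{(0)} w$, and a corresponding speed estimate also follow (see also [16,17]).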

Counterexamples of [16,17,18] show that even if the simplex sequence converges to some limit $S^*$, it may happen that $\operatorname{diam}(S^*) > 0$. If $\operatorname{diam}(S^*) = 0$ holds, that is, $S^* = \hat{x}e^T$ for some vector $\hat{x}$, it may happen that $\hat{x}$ is not a stationary or minimum point (see McKinnon [13] and also [16,17,18]).

4. Properties of the Transformation Matrices

The spectra of the transformation matrices and are fully characterized in Section 3 of [16]. Furthermore, these matrices have a common similarity form (9). Define the matrix

Lemma 1

([16,17]). For all , matrix has the form

where and depends on .

For a more general result, see Hartfiel [19]. Note that a constant exists such that holds for all . For later use, we make the following numbering of the elements and their corresponding matrices :

where the numbering of the permutations follows the perms function of Matlab (in actual computations).

| ↔ | ||

| ↔ | ||

| ↔ | ||

| ↔ | ||

| ↔ | ||

| ↔ | ||

| ↔ |

5. An Improved Convergence Result

Here, we prove a new convergence theorem whose key condition, unlike in [16,17], is numerically checkable, at least in low dimensions; in [16,17], the key assumption was algorithmically undecidable and required ways to circumvent this problem. The new result is the basis of the stochastic convergence result of Section 6, which may, in a sense, explain the good behavior of the Nelder–Mead method observed in practice.

We use the following simple result (see, e.g., [16,17]). For , let

Lemma 2.

Assume that , is convergent () and for all k. Then, converges and

for some .

Proof.

It is easy to see that

If is convergent, then . Hence, as . Since is convergent, for any there is a number such that for , . Thus, for , we obtain

Hence, for some . □

If for , then and the series is convergent.

Assume now that and consider all possible products of of fixed length ℓ (). For each product , there is a corresponding product . Define the sets and

where . Consider the norm of the elements of and decompose the set in the form , where

is a fixed number, and for simplicity, Q is selected such that also holds for all . That such a q exists, and are not empty follow from [16]. This fact is also indicated by Equation (21).

We investigate the product (11). For any , write with and . Note that , where stands for the floor function. Then,

and

Assume that ℓ-products belong to and ℓ-products belong to . Clearly . Then, . There exists an integer such that . Hence, . Moreover, assume that for some . This assumption guarantees that the elements from counterbalance the effect of those from . It also implies the density inequalities

It follows that

Now, and . Hence,

since and . Hence, by Lemma 2

holds for some vector . Since there exists a constant such that ,

holds with a suitable constant . It follows that

and

We can summarize the obtained results in the following.

Theorem 1.

Assume that , is nondegenerate, is fixed and is not empty. Let be the number of ℓ-products that belong to and be the number of those ℓ-products that belong to during the first k iterations of the Nelder–Mead method. Moreover, assume that for , and for some , holds (). Then, the Nelder–Mead algorithm converges in the sense that

with a convergence speed proportional to . If f is continuous at , then

holds as well.

A simple but time-consuming computation of the elements of shows the feasibility of the assumptions of Theorem 1. Equation (21) shows the ratios of for the specified pairs, in case of spectral norm and .

The greater the ratio , the better the chance for convergence since more elements can be selected from than from . For any , there are problems on which the NM algorithm does not converge in the above sense (see [16]). Hence, in the general case, the density assumption seems to be necessary.

Corollary 1.

For , Equation (21) implies the convergence of the Nelder–Mead method under the density condition of Theorem 1.

For strictly convex functions $f: \mathbb{R}^n \to \mathbb{R}$, Lagarias et al. proved ([10], Lemma 3.5) that no shrinking occurs when the Nelder–Mead algorithm is applied to f. The following observation is of some importance when certain operations or steps of the Nelder–Mead method can be ruled out for the function f at hand.

Remark 1.

Theorem 1 remains true if the sets , , and are replaced by the subsets , and , such that ,

and is nonempty.

Remark 2.

Assume that $n = 2$ and the operation set is restricted to

(no shrinking occurs). For , the ratios are given in the next equation

Hence, we have convergence for strictly convex functions under the conditions of Theorem 1.

Theorem 1 is based on the behavior of ℓ consecutive steps of the Nelder–Mead method. The difference between Theorem 1 and Theorem 9 of [16] is the following. In the case of Theorem 9 of [16], we identified a subset and constructed a matrix norm , such that for every , held. The existence of such norm was related to an algorithmically undecidable problem. The ratio of operations from and then decided the convergence. Here, in Theorem 1, we avoided the construction of the matrix norm , by computing the (spectral) norms of ℓ consecutive steps (products of ℓ operators) and sorting them into two subsets and . The rest of the proof was quite similar to that of Theorem 9 of [16]. The norms of ℓ-products can be easily computed, although the computation time and required memory quickly increase with n and ℓ.
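The enumeration of ℓ-products can be sketched as follows for n = 2. Lemma 1 guarantees a block form of the similarity-transformed matrices, but the matrix used in the text is not reproduced here, so this sketch assumes the hypothetical choice $B = \begin{bmatrix} 1 & -e^T \\ 0 & I_n \end{bmatrix}$. For any T with unit column sums, $B^{-1}TB$ then has first row $[1, 0, \ldots, 0]$, its lower-right $n \times n$ block $\hat{T}$ has entries $\hat{T}_{ij} = T_{i+1,j+1} - T_{i+1,1}$, and these blocks multiply like the original matrices. The threshold q = 0.9 and all function names are illustrative assumptions; with this B, the counts and means need not coincide with the values of Equation (21) or (23).

```python
import itertools
import math


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def T_lam(n, lam):   # T(lam) = [[I_n, (1 + lam)/n * e], [0, -lam]]
    T = [[1.0 if i == j else 0.0 for j in range(n + 1)] for i in range(n + 1)]
    for i in range(n):
        T[i][n] = (1.0 + lam) / n
    T[n][n] = -lam
    return T


def P_j(n, j):       # moves the last column to position j (1-based)
    cols = list(range(j - 1)) + [n] + list(range(j - 1, n))
    return [[1.0 if cols[c] == r else 0.0 for c in range(n + 1)] for r in range(n + 1)]


def T_shr(n, delta):  # T_shr = [[1, (1 - delta) e^T], [0, delta I_n]]
    T = [[0.0] * (n + 1) for _ in range(n + 1)]
    T[0][0] = 1.0
    for j in range(1, n + 1):
        T[0][j], T[j][j] = 1.0 - delta, delta
    return T


def perm_matrix(p):
    return [[1.0 if p[c] == r else 0.0 for c in range(len(p))] for r in range(len(p))]


def transformation_set(n, alpha=1.0, beta=2.0, gamma=0.5, delta=0.5):
    Ts = [matmul(T_lam(n, alpha), P_j(n, j)) for j in range(1, n + 1)]
    Ts.append(matmul(T_lam(n, alpha * beta), P_j(n, 1)))
    Ts += [matmul(T_lam(n, alpha * gamma), P_j(n, j)) for j in range(1, n + 2)]
    Ts += [matmul(T_lam(n, -gamma), P_j(n, j)) for j in range(1, n + 2)]
    Ts += [matmul(T_shr(n, delta), perm_matrix(p)) for p in itertools.permutations(range(n + 1))]
    return Ts


def reduced(T):
    """Lower-right n x n block of B^{-1} T B for the assumed B = [[1, -e^T], [0, I_n]]."""
    n = len(T) - 1
    return [[T[i + 1][j + 1] - T[i + 1][0] for j in range(n)] for i in range(n)]


def spectral_norm_2x2(A):
    (a, b), (c, d) = A
    s = a * a + b * b + c * c + d * d          # sigma_1^2 + sigma_2^2
    det = a * d - b * c                        # sigma_1 * sigma_2 = |det|
    return math.sqrt((s + math.sqrt(max(s * s - 4.0 * det * det, 0.0))) / 2.0)


def classify_products(ell, q):
    """Split all ell-products of reduced matrices (n = 2) by spectral norm <= q."""
    hats = [reduced(T) for T in transformation_set(2)]
    norms = []
    for combo in itertools.product(hats, repeat=ell):
        M = combo[0]
        for H in combo[1:]:
            M = matmul(M, H)
        norms.append(spectral_norm_2x2(M))
    k1 = sum(1 for x in norms if x <= q)
    return k1, len(norms) - k1, sum(norms) / len(norms)   # mean = mu under a uniform choice


for ell in (1, 2, 3):
    k1, k2, mean = classify_products(ell, q=0.9)
    print(ell, k1, k2, round(mean, 4))
```

The number of ℓ-products is $|\mathcal{T}|^\ell$ (here $15^\ell$), which illustrates why the computation time and memory grow quickly with n and ℓ.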

If one can show that, for a given function f, the NM method takes only steps that belong to for some ℓ, then the convergence immediately follows. Otherwise, one has to impose an additional condition, as in Theorem 1, or seek a statistical characterization such as Theorem 2 of the next section.

6. A Random Convergence Result

The counterexamples of [16,17,18] showed that there was no sure convergence for the NM method. One may ask, however, if Theorem 1 is sharp enough in some sense. Here, we study a simple random model using the proof of Theorem 1. Formulas (15) and (16) imply that it is enough to study the infinite product

Assume that the ℓ-products are randomly chosen with the probability and . Moreover, assume that the subsequent ℓ-products () are randomly chosen independently of each other.

Let (, ). Note that for and there are numbers such that (see also Section 3 of [16]). Let X be a random variable and let be the values which it assumes. Let () be the probability distribution of X. Assume that for the expected value of X, holds.

If X is uniformly distributed, the expected values belonging to the cases of Equation (21) are given in the following equation (with four-decimal digit precision).

Hence, the condition holds in these cases.

We need the following simple results.

Lemma 3.

Let the positive random variables $X_1, X_2, \ldots$ be independent and identically distributed with the same distribution as X, and assume that $\mu = E(X) < 1$. Then, $\prod_{i=1}^{k} X_i \to 0$ ($k \to \infty$) holds with a probability of one. Moreover, there exist numbers q such that $\mu < q < 1$ and only a finite number of the events $\prod_{i=1}^{k} X_i \ge q^k$ can occur with a probability of one.

Proof.

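The independence of the $X_i$'s implies that $E\big(\prod_{i=1}^{k} X_i\big) = \mu^k$. For any $\varepsilon > 0$, it follows from the Markov inequality that $P\big(\prod_{i=1}^{k} X_i \ge \varepsilon\big) \le \mu^k/\varepsilon$. Select $\varepsilon = q^k$ ($\mu < q < 1$). Then,

$$ \sum_{k=1}^{\infty} P\Big(\prod_{i=1}^{k} X_i \ge q^k\Big) \le \sum_{k=1}^{\infty} \Big(\frac{\mu}{q}\Big)^k < \infty. $$

It follows from the Borel–Cantelli lemma (see, e.g., Theorem 1.5.1 of [20] or Borovkov [21]) that, with a probability of one, only a finite number of the events $\prod_{i=1}^{k} X_i \ge q^k$ ($k = 1, 2, \ldots$) can occur. Hence, $\prod_{i=1}^{k} X_i < q^k$ for all sufficiently large k, and $\prod_{i=1}^{k} X_i \to 0$ with a probability of one. □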
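The statement of Lemma 3 can be illustrated by simulation. The sketch below assumes a hypothetical two-point distribution for X (values 0.5 and 1.2 with probability 1/2 each, so that μ = 0.85 < 1 although one value exceeds 1); these numbers are illustrative and are not taken from Equations (21) or (23). It tracks the logarithm of the product to avoid floating-point underflow, records the last k at which the event $\prod_{i=1}^{k} X_i \ge q^k$ occurs for q = (μ + 1)/2, and evaluates the Markov bound $\sum_k (\mu/q)^k$ used in the proof. The function name `simulate_products` is hypothetical.

```python
import math
import random


def simulate_products(values, probs, k, seed=0):
    """Track log(X_1 * ... * X_m) for i.i.d. positive X_i, m = 1..k.

    Returns mu = E(X), q = (mu + 1)/2, the final log-product and the last m
    for which X_1 * ... * X_m >= q**m (0 if that never happens).
    """
    mu = sum(p * v for p, v in zip(probs, values))
    if not mu < 1.0:
        raise ValueError("Lemma 3 needs E(X) < 1")
    q = (mu + 1.0) / 2.0                  # any q with mu < q < 1 would do
    rng = random.Random(seed)
    log_prod, last_exceed = 0.0, 0
    for m in range(1, k + 1):
        log_prod += math.log(rng.choices(values, weights=probs)[0])   # logs avoid underflow
        if log_prod >= m * math.log(q):
            last_exceed = m
    return mu, q, log_prod, last_exceed


# Hypothetical two-point distribution: one value above 1, mean 0.85 < 1.
mu, q, log_prod, last = simulate_products([0.5, 1.2], [0.5, 0.5], k=2000, seed=1)
print(mu, q, log_prod, last)
# Markov + Borel-Cantelli: expected number of events is at most sum_m (mu/q)**m
print((mu / q) / (1.0 - mu / q))
```

In a typical run, the exceedances of $q^k$ occur only during the first few steps, and the log-product then decreases roughly linearly. By Jensen's inequality, $E(\log X) \le \log E(X) < 0$, which is consistent with this behavior.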

Corollary 2.

Under the conditions of Lemma 3, there exist numbers () such that () and .

Proof.

There is a random index such that with a probability of one, and if . Since and for any k, , there is no fixed number such that , for and for all . However, for , and for . Thus, it follows that () and . □

Theorem 2.

Assume that , is nondegenerate, is fixed, , and the ℓ-products are randomly chosen with probability and . Furthermore, assume that the subsequent ℓ-products are randomly chosen independently of each other. If , then for the Nelder–Mead algorithm,

holds with a probability of one. If, in addition, f is continuous at , then

also holds with a probability of one.

Proof.

Let X be a random variable defined on the positive numbers with the probability distribution . By assumption, . Lemma 3 and Corollary 2 imply that

and . The result now follows from Lemma 2, the proof of Theorem 1 and the “continuity theorem” (see, e.g., Borovkov [21], Theorem 6.1.4, p. 134). □

Note that assumption does not occur here. Instead, we use the assumption . Furthermore, note that the smaller the , the faster the convergence.

If we assume a uniform distribution for X, then we have the following.

Corollary 3.

Under the assumption of uniform distribution, Equation (23) implies Theorem 2 for .

Theorem 2 and Corollary 3 ensure the convergence of the NM algorithm with a probability of one, although the limit point is not necessarily a minimum point of f. Concerning the mode of convergence, we note that some classical stochastic approximation algorithms (the Robbins–Monro and Kiefer–Wolfowitz methods) also converge with a probability of one (see, e.g., [22]).

Remark 3.

Theorem 2 remains true if the sets and are replaced by the subsets and , respectively, and the probability assumptions for these also hold.

Remark 4.

Assume that $n = 2$ and the operation set is restricted to

(no shrinking occurs). For and a uniform distribution, the expected values μ are given in the next equation

Hence, Theorem 2 holds for . It follows that for strictly convex functions $f: \mathbb{R}^2 \to \mathbb{R}$, the Nelder–Mead method converges with a probability of one under the assumption of uniform distribution.

7. Conclusions

Although the convergence of the Nelder–Mead simplex method to a minimum point of a function f cannot be guaranteed in general, the main result indicates that, in the stochastic sense of Section 6, the method converges with a probability of one to a point at which the value of f does not exceed the best function value at the start. This may also explain the good behavior of the Nelder–Mead method on average.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

The author is highly indebted to László Szeidl for his help and comments on Section 6. The author is also indebted to the anonymous referees for their observations and remarks, which improved the paper.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Nelder, J.A.; Mead, R. A simplex method for function minimization. Comput. J. 1965, 7, 308–313.

2. Larson, J.; Menickelly, M.; Wild, S. Derivative-free optimization methods. Acta Numer. 2019, 28, 287–404.

3. Walters, F.; Morgan, S.; Parker, L.P.L.; Deming, S. Sequential Simplex Optimization; CRC Press LLC: Boca Raton, FL, USA, 1991.

4. Kelley, C. Iterative Methods for Optimization; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 1999.

5. Conn, A.; Scheinberg, K.; Vicente, L. Introduction to Derivative-Free Optimization; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 2009.

6. Audet, C.; Hare, W. Derivative-Free and Blackbox Optimization; Springer: Berlin/Heidelberg, Germany, 2017.

7. Kochenderfer, M.; Wheeler, T. Algorithms for Optimization; The MIT Press: Cambridge, MA, USA, 2019.

8. Tekile, H.; Fedrizzi, M.; Brunelli, M. Constrained Eigenvalue Minimization of Incomplete Pairwise Comparison Matrices by Nelder-Mead Algorithm. Algorithms 2021, 14, 222.

9. Nash, J.C. On Best Practice Optimization Methods in R. J. Stat. Softw. 2014, 60, 1–14.

10. Lagarias, J.; Reeds, J.; Wright, M.; Wright, P. Convergence properties of the Nelder-Mead simplex method in low dimensions. SIAM J. Optimiz. 1998, 9, 112–147.

11. Lagarias, J.; Poonen, B.; Wright, M. Convergence of the restricted Nelder-Mead algorithm in two dimensions. SIAM J. Optimiz. 2012, 22, 501–532.

12. Wright, M. Nelder, Mead, and the other simplex method. Extra Volume: Optimization Stories. Doc. Math. 2012, 271–276.

13. McKinnon, K. Convergence of the Nelder-Mead simplex method to a nonstationary point. SIAM J. Optimiz. 1998, 9, 148–158.

14. Kelley, C. Detection and remediation of stagnation in the Nelder-Mead algorithm using a sufficient decrease condition. SIAM J. Optimiz. 1999, 10, 43–55.

15. Han, L.; Neumann, M. Effect of dimensionality on the Nelder-Mead simplex method. Optim. Method. Softw. 2006, 21, 1–16.

16. Galántai, A. Convergence of the Nelder-Mead method. Numer. Algorithms 2022, 90, 1043–1072.

17. Galántai, A. Convergence theorems for the Nelder-Mead method. J. Comput. Appl. Mech. 2020, 15, 115–133.

18. Galántai, A. A convergence analysis of the Nelder-Mead simplex method. Acta Polytech. Hung. 2021, 18, 93–105.

19. Hartfiel, D. Nonhomogeneous Matrix Products; World Scientific: Singapore, 2002.

20. Chandra, T. The Borel–Cantelli Lemma; Springer: Berlin/Heidelberg, Germany, 2012.

21. Borovkov, A. Probability Theory; Springer: Berlin/Heidelberg, Germany, 2013.

22. Kushner, H.; Clark, D. Stochastic Approximation Methods for Constrained and Unconstrained Systems; Springer: New York, NY, USA, 1978.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).