Abstract

The linguistic time-series forecasting model (LTS-FM), which has been recently proposed, uses linguistic words of linguistic variable domains generated by hedge algebras (HAs) to describe historical numeric time-series data. Then, the LTS-FM was established by utilizing real numeric semantics of words induced by the fuzziness parameter values (FPVs) of HAs. In the existing LTS-FMs, just the FPVs of HAs are optimized, while the used word set is still chosen by human experts. This paper proposes a co-optimization method of selecting the optimal used word set that best describes numeric time-series data in parallel with choosing the best FPVs of HAs to improve the accuracy of LTS-FMs by utilizing particle swarm optimization (PSO). In this co-optimization method, the outer loop optimizes the FPVs of HAs, while the inner loop optimizes the used word set. The experimental results on three datasets, i.e., the “enrollments of the University of Alabama” (EUA), the “killed in car road accidents in Belgium” (CAB), and the “spot gold in Turkey” (SGT), showed that our proposed forecasting model outperformed the existing forecasting models in terms of forecast accuracy.

Keywords:

linguistic time series; hedge algebras; linguistic logical relationship; particle swarm optimization; forecasting model

MSC:

37M10; 62M10; 68W25

1. Introduction

The human ability to predict future events and phenomena has attracted the interest of the scientific community for many years, with many forecasting methodologies proposed based on observed historical data. In particular, the forecasting method based on time-series analysis has been investigated by numerous researchers using various models, such as ARMA, ARIMA, and so on. In time-series forecasting models, future values can be forecasted based on only past data. The characteristics of time-series data, such as trends, seasonality, stability, outliers, etc., have been considered to establish forecasting models for future values.

The fuzzy time series proposed by Q. Song and B. S. Chissom [1,2,3] is a forecasting method of time-series analysis that combines the principles of the fuzzy set theory proposed by L. A. Zadeh [4] with traditional time-series techniques. The fuzzy time series is particularly useful in handling the uncertainty, vagueness, and imprecision of data, which is often encountered in real-world applications. The approach allows for modeling the uncertainty and vagueness of the data by representing the relationships between linguistic variables as fuzzy sets rather than crisp sets. In a fuzzy time series, the forecasted values are not simply single-point estimates, but rather a range of values that reflect the uncertainty in the data. This provides a more realistic and robust representation of future trends in the time series, especially when dealing with non-linear and complex data. Fuzzy time series have been applied in a variety of domains, including finance, economics, environmental science, and engineering, where traditional time-series methods may not be adequate. Recently, many proposed models use optimization algorithms, such as genetic algorithm [5,6], particle swarm optimization (PSO) [7,8], etc., to optimize forecasting model parameter values to improve the forecasting accuracy.

People often use natural language as a tool to communicate effectively with each other. They also store and process recorded information through linguistic variables with their values. Linguistic values are also used by humans to forecast events occurring in nature and society so that they can better prepare for future planning. In addition to the fuzzy set theory for handling linguistic variables, hedge algebras (HAs), proposed by N. C. Ho and W. Wechler [9,10], exploit the order-based semantics structure of the word domains of linguistic variables, which provides a mathematical formalism for generating the computational semantics of words that can be applied to solve real-life application problems in various domains, such as image processing [11], fuzzy control [12], data mining [13,14,15,16,17], etc. HAs are qualitative models; therefore, they need to be quantified by measurable quantities based on qualitative semantics. The words of linguistic variables convey their qualitative semantics in relation to the other words in the whole variable domain. Because the linguistic words of a variable are interdependent, they only disclose their full qualitative semantics in the comparative context of the whole variable domain. The fuzziness measure of linguistic words [18] is the crucial concept of fuzzy information and plays an important role in quantification by hedge algebras. The quantitative or numerical semantics of a word induced by its fuzziness measure is the semantically quantifying mapping (SQM) value that is the basis for generating computational semantics for solving real-world application problems. Recently, hedge algebras have been applied to solve time-series forecasting problems in such a way that historical numeric time-series data are transformed into linguistic data by using the real numeric semantics of words defined based on their corresponding SQM values. Then, the LTS-FMs were established [19,20,21,22].

In LTS-FMs, instead of partitioning historical data into intervals, a datum of the historical data is assigned a linguistic word based on the nearest real numeric semantic. By doing so for all historical data, the numeric time series is transformed into an LTS. The linguistic words used to describe historical data are used to define the logical relationship between the data of the current year and those of the next year. Then, the linguistic logical relationships (LLRs) and linguistic logical relationship groups (LLRGs) are established. The fuzzy forecasted values are induced and, finally, based on them, the corresponding crisp forecasted values are computed. It can be seen that the SQM values of words determined by the FPVs of HAs are crucial in establishing LTS-FMs. Obtaining an optimal set of FPVs is not a trivial task, so an optimization algorithm should be applied to obtain it automatically. Among the optimization algorithms, PSO is efficient and is applied to solving many real-world problems [7,8,14,16,21]. It needs few algorithm parameters, so it is easy to implement. In [21], the FPVs of HAs were automatically optimized for LTS-FMs by applying PSO. However, the word set used to describe numeric time-series data was chosen by human experts, so the selected words may not have reflected the nature of the historical data. To overcome this drawback, this paper presents a method of selecting an optimal word set that best describes numeric time-series data in parallel with optimizing the FPVs of HAs by applying a co-optimization algorithm of particle swarm optimization (Co-PSO). In Co-PSO, the outer loop optimizes the FPVs of HAs, while the inner loop optimizes the used word set. 
The experimental results for three datasets, i.e., the “enrollments of the University of Alabama” (EUA), the “killed in car road accidents in Belgium” (CAB), and the “spot gold in Turkey” (SGT), showed that our proposed forecasting model had better forecasting accuracy than the existing models.

The remainder of this paper is organized as follows. Section 2 briefly restates the theory of hedge algebras and some concepts related to LTS-FM and PSO. The proposed forecasting model, called COLTS, is introduced in Section 3. In Section 4, some experiments with three datasets and discussions about the forecasted results are addressed. Finally, the summary of this work and some suggestions for future work are provided in Section 5.

2. Background

2.1. A Brief Introduction of Hedge Algebras

Each linguistic domain of a variable 𝒳, denoted by Dom(𝒳), consists of a word set that can be generated from two generator words, e.g., “cold” and “hot”, by the action of linguistic hedges on them. For example, with the two hedges “very” and “rather”, the words generated by the action of those two hedges on the generator words “cold” and “hot” include “very cold”, “rather cold”, “very hot”, “very very hot”, and so on. These words are linearly ordered and therefore comparable, e.g., “very cold” ≤ “cold” ≤ “rather cold” ≤ “hot” ≤ “very hot” ≤ “very very hot”.

From the above intuitive observation, Ho et al. introduced hedge algebras (HAs) in 1990 [9,10], a mathematical structure that can directly manipulate the word domain of 𝒳. An HA of 𝒳, denoted by AX, is an order-based structure AX = (X, G, C, H, ≤), where X ⊆ Dom(𝒳) is a word set of 𝒳; G = {c−, c+} is a set of two generators, where c− ≤ c+; C = {0, W, 1} is a set of three constants satisfying the order relationship 0 ≤ c− ≤ W ≤ c+ ≤ 1, where 0, 1, and W are the lowest, highest, and neutral constants, respectively; H = H− ∪ H+ is a set of hedges, where H− and H+ are the sets of negative and positive hedges, respectively; and ≤ is the order relation induced by the inherent semantics of the words of 𝒳.

String representation can be used to represent the words in X, so a word x ∈ X is either c or ωc, where c ∈ {c−, c+}, ω = hn…h1, hi ∈ H, i = 1, …, n. H(x) denotes the set of all words generated from x by the hedges, so H(x) = {ωx, ω ∈ H*}, where H* is the set of all strings of hedges in H, including the empty string. If all hedges in H are linearly ordered, all words in X are also linearly ordered. In this case, a linear HA is obtained. Some main properties of linear HAs are as follows:

- The signs of the negative generator word c− and the positive generator word c+ are sign(c−) = −1 and sign(c+) = +1, respectively;

- Every h ∈ H+ increases the semantic of c+ and has sign(h) = +1, whereas every h ∈ H− decreases the semantic of c− and has sign(h) = −1;

- If the hedge h strengthens the semantic of the hedge k, the relative sign between h and k is sign(h, k) = +1. On the other hand, if the hedge h weakens the semantic of the hedge k, sign(h, k) = −1. Thus, the sign of a word x = hnhn−1…h2h1c is specified as follows:

sign(x) = sign(hn, hn−1) × … × sign(h2, h1) × sign(h1) × sign(c).

Based on the word sign, if sign(hx) = +1, x ≤ hx, and if sign(hx) = −1, hx ≤ x.
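The sign rule can be illustrated with a small Python sketch. It assumes two hedges, Very ("V", positive) and Little ("L", negative), and a relative-sign table in which Very strengthens and Little weakens whichever hedge it acts on; the tuple encoding of words and the names `HEDGE_SIGN`, `GEN_SIGN`, and `REL_SIGN` are hypothetical and chosen only for illustration.

```python
# Assumed sign tables for two hedges: Very ("V") and Little ("L").
HEDGE_SIGN = {"V": +1, "L": -1}        # sign(h)
GEN_SIGN = {"c-": -1, "c+": +1}        # sign(c)
REL_SIGN = {                            # sign(h, k): +1 if h strengthens k
    ("V", "V"): +1, ("V", "L"): +1,
    ("L", "V"): -1, ("L", "L"): -1,
}

def sign(word):
    """Sign of a word given as a tuple (h_n, ..., h_1, c)."""
    *hedges, c = word
    s = GEN_SIGN[c]
    if hedges:
        s *= HEDGE_SIGN[hedges[-1]]               # sign(h_1)
        for h, k in zip(hedges, hedges[1:]):      # sign(h_i, h_{i-1})
            s *= REL_SIGN[(h, k)]
    return s

# sign(hx) = +1 means x <= hx, and sign(hx) = -1 means hx <= x.
print(sign(("V", "c+")))        # Very c+ lies above c+
print(sign(("V", "c-")))        # Very c- lies below c-
print(sign(("V", "L", "c+")))   # Very Little c+ lies below Little c+
```

Under these assumptions, the sign of hx alone decides on which side of x the word hx falls, which is what allows the word domain to be ordered without any numeric semantics.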

Based on the syntactical semantics of words generated by HAs, the words in H(x), x ∈ X, induced from x, have had their semantics changed by the hedges in H, but they still convey the original semantics of x. Therefore, H(x) can be considered as the fuzziness of x and the diameter of H(x) is considered as the fuzziness measure of x, denoted by fm(x).

Assume that AX is a linear HA. The function fm: X → [0, 1] is the fuzziness measure of the words in X, provided that the following properties are satisfied [13]:

(F1): fm(c−) + fm(c+) = 1 and $\sum_{h \in H} fm(hu) = fm(u)$, for all $u \in X$;

(F2): fm(x) = 0 for all x such that H(x) = {x}; in particular, fm(0) = fm(W) = fm(1) = 0;

(F3): For all $x, y \in X$ and all $h \in H$, $\frac{fm(hx)}{fm(x)} = \frac{fm(hy)}{fm(y)}$; this proportion does not depend on the particular words and is called the fuzziness measure of hedge h, denoted by μ(h).

From the properties (F1) and (F3), fm(x), where x = hn…h1c and c ∈ {c−, c+}, is computed as fm(x) = μ(hn)…μ(h1)fm(c), where $\sum_{h \in H} \mu(h) = 1$. For a given word in X, its fuzziness measure can be computed when the values of fm(c) and μ(hj), hj ∈ H, are specified.

Semantically quantifying mapping (SQM) [13] of AX is a mapping $\nu: X \to [0, 1]$, provided that the following conditions are satisfied:

(SQM1): It preserves the order-based structure of X, i.e., $x \le y \Rightarrow \nu(x) \le \nu(y)$ for all $x, y \in X$;

(SQM2): It is a one-to-one mapping and its image $\nu(X)$ is dense in [0, 1].

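Let fm be a fuzziness measure on X, $\theta = fm(c^-)$, $\alpha = \sum_{j=-q}^{-1} \mu(h_j)$, $\beta = \sum_{j=1}^{p} \mu(h_j)$, and $\alpha + \beta = 1$, where $H^- = \{h_{-1}, \ldots, h_{-q}\}$ and $H^+ = \{h_1, \ldots, h_p\}$. The SQM $\nu$ is computed recursively based on fm as follows:

- (1) $\nu(W) = \theta$, $\nu(c^-) = \theta - \alpha\, fm(c^-) = \beta\, fm(c^-)$, and $\nu(c^+) = \theta + \alpha\, fm(c^+)$;

- (2) $\nu(h_j x) = \nu(x) + \mathrm{sign}(h_j x)\left\{\sum_{i=\mathrm{sign}(j)}^{j} fm(h_i x) - \omega(h_j x)\, fm(h_j x)\right\}$, where j ∈ [−q^p] = {j: −q ≤ j ≤ p & j ≠ 0} and $\omega(h_j x) = \frac{1}{2}\left[1 + \mathrm{sign}(h_j x)\, \mathrm{sign}(h_p h_j x)(\beta - \alpha)\right] \in \{\alpha, \beta\}$.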

The SQM values of words are the basis of computing the real numerical semantics of words, and then a time series is transformed into LTS.
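To make the recursion concrete, the following Python sketch computes fuzziness measures and SQM values for the special case of one negative hedge (Little, "L") and one positive hedge (Very, "V"), i.e., q = p = 1. It follows the commonly used recursive SQM definition from the HA literature; the tuple encoding of words, the function names, and the assumption that Very strengthens and Little weakens any hedge are illustrative choices, not taken from the paper.

```python
HEDGE_SIGN = {"L": -1, "V": +1}   # Little is negative, Very is positive (assumed)
GEN_SIGN = {"c-": -1, "c+": +1}

def sign(word):
    """Sign of a word (h_n, ..., h_1, c); with the assumed relative signs
    it reduces to the product of the hedge signs and the generator sign."""
    s = GEN_SIGN[word[-1]]
    for h in word[:-1]:
        s *= HEDGE_SIGN[h]
    return s

def fm(word, fm_cminus, mu_little):
    """Fuzziness measure: fm(x) = mu(h_n) ... mu(h_1) fm(c)."""
    mu = {"L": mu_little, "V": 1.0 - mu_little}
    value = fm_cminus if word[-1] == "c-" else 1.0 - fm_cminus
    for h in word[:-1]:
        value *= mu[h]
    return value

def sqm(word, fm_cminus, mu_little):
    """SQM value of a word for the two FPVs fm(c-) and mu(Little)."""
    alpha, beta = mu_little, 1.0 - mu_little
    theta = fm_cminus
    if len(word) == 1:                       # base case: a generator word
        if word[0] == "c-":
            return theta - alpha * fm_cminus
        return theta + alpha * (1.0 - fm_cminus)
    s = sign(word)
    omega = 0.5 * (1.0 + s * sign(("V",) + word) * (beta - alpha))
    f = fm(word, fm_cminus, mu_little)
    # With q = p = 1 the inner sum contains the single term fm(h_j x).
    return sqm(word[1:], fm_cminus, mu_little) + s * (f - omega * f)

for w in [("V", "c-"), ("c-",), ("L", "c-"), ("L", "c+"), ("c+",), ("V", "c+")]:
    print(" ".join(w), round(sqm(w, 0.4, 0.3), 4))
```

The printed values increase along the semantic order of the words, so only the two FPVs need to be tuned to move every word's numeric semantics at once.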

2.2. Linguistic Time Series-Forecasting Model

Based on the theory of hedge algebras, Hieu et al. [19] introduced the concept of LTS and its application to the enrollment forecasting problem. The quantitative (numerical) semantics, known as SQM values, of linguistic words were directly used to establish the LTS-FM. Specifically, the numerical semantics of words were linearly transformed to the real numerical semantic domain of the universe of discourse (UD) of the linguistic variable. Thus, each datum of a time series, whether recorded in numerical or linguistic values, could be naturally associated with the corresponding real numerical semantics of the used linguistic words of the LTS. These are also the distinguishing and outstanding properties of LTS compared with fuzzy time series.

In order to have a theoretical basis for proposing a forecasting model, in this subsection, some concepts of LTS proposed by Hieu et al. [19] are presented.

Definition 1

([19]). (LTS) Let 𝕏 be a set of natural linguistic words of a variable 𝒳 defined on the UD to describe its numeric values. Then, any chronological series L(t), t = 0, 1, 2, …, where L(t) is a finite subset of 𝕏, is called a linguistic time series.

Definition 2

([19]). (LLR) Suppose Xi and Xj are the linguistic words representing the data at time t and t + 1, respectively. Then, there exists a relationship between Xi and Xj called a linguistic logical relationship (LLR), denoted by

Xi → Xj

Definition 3

([19]). (LLRG) Assume that there are LLRs, such as

Xi → Xj1,

Xi → Xj2,

…

Xi → Xjn.

Then, they can be grouped into a linguistic logical relationship group (LLRG) and are denoted by

Xi → Xj1, Xj2, …, Xjn.

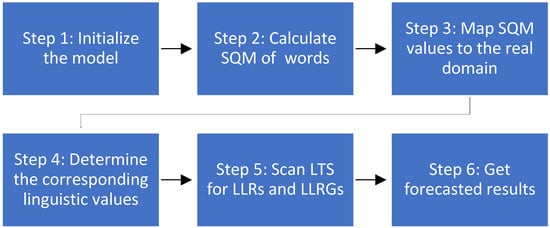

In [19], Hieu et al. also presented a forecasting model called LTS-FM. The procedure of an LTS-FM is depicted in Figure 1.

Figure 1.

The procedure of an LTS-FM.

In Figure 1, the LTS-FM procedure includes six steps, which can be briefly described as follows (for more detail, please see [19,22]):

Step 1. Determine the UD of the linguistic variable, the syntactical semantics, and the FPVs of the associated HAs, and choose the used linguistic words to describe the designated time series;

Step 2. Calculate the quantitative semantics of the used words;

Step 3. Map the quantitative semantics of the used words to the real domain of the UD to obtain the real numerical semantics;

Step 4. Transform the designated time series into LTS. For each specified datum, its semantics are specified based on the nearest real semantic;

Step 5. Establish the LLRs of words, then group them into the LLRGs;

Step 6. Forecast based on LLRGs, then compute the crisp forecasted values.
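Steps 3 to 6 can be sketched in Python as follows. The text above does not spell out the forecasting rule of Step 6, so the sketch assumes one simple choice: the crisp forecast is the average of the real numerical semantics of the words on the right-hand side of the matching LLRG. The word labels, SQM values, and function names are hypothetical.

```python
from collections import defaultdict

def to_real_semantics(sqm_values, lo, hi):
    """Step 3: map SQM values in [0, 1] linearly onto the UD [lo, hi]."""
    return {w: lo + v * (hi - lo) for w, v in sqm_values.items()}

def to_lts(series, real_sem):
    """Step 4: label each datum with the word whose real semantics is nearest."""
    return [min(real_sem, key=lambda w: abs(real_sem[w] - y)) for y in series]

def build_llrgs(lts):
    """Step 5: collect the LLRs X_i -> X_j and group them by left-hand side."""
    groups = defaultdict(list)
    for cur, nxt in zip(lts, lts[1:]):
        if nxt not in groups[cur]:
            groups[cur].append(nxt)
    return dict(groups)

def forecast(lts, llrgs, real_sem):
    """Step 6 (assumed rule): average the real semantics of the group's
    right-hand side; returns forecasts for t = 1, ..., n - 1."""
    out = []
    for cur in lts[:-1]:
        rhs = llrgs.get(cur, [cur])
        out.append(sum(real_sem[w] for w in rhs) / len(rhs))
    return out

# Toy data: three hypothetical words on the UD [0, 100].
sqm_values = {"small": 0.125, "medium": 0.5, "large": 0.75}
series = [20, 55, 80, 52, 30]
real_sem = to_real_semantics(sqm_values, 0.0, 100.0)
lts = to_lts(series, real_sem)
llrgs = build_llrgs(lts)
print(lts)
print(llrgs)
print(forecast(lts, llrgs, real_sem))
```

Because every step depends only on the real numerical semantics of the used words, changing the FPVs or the used word set changes the whole forecast, which is what the optimization in Section 3 exploits.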

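The evaluation measures of the forecasting models are the mean-square error (MSE), the root-mean-square error (RMSE), and the mean-absolute-percentage error (MAPE), which are calculated as follows:

$$MSE = \frac{1}{n}\sum_{i=1}^{n}(F_i - R_i)^2 \quad (1)$$

$$RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(F_i - R_i)^2} \quad (2)$$

$$MAPE = \frac{1}{n}\sum_{i=1}^{n}\left|\frac{F_i - R_i}{R_i}\right| \times 100\% \quad (3)$$

where n denotes the number of forecasted data, and Fi and Ri are the forecasted and real data at the time i, respectively.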
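A minimal Python sketch of the three measures, assuming their usual definitions and MAPE expressed as a percentage; the function names and sample numbers are illustrative only.

```python
import math

def mse(forecast, actual):
    """Mean-square error between forecasted and real data."""
    return sum((f - r) ** 2 for f, r in zip(forecast, actual)) / len(actual)

def rmse(forecast, actual):
    """Root-mean-square error."""
    return math.sqrt(mse(forecast, actual))

def mape(forecast, actual):
    """Mean-absolute-percentage error, in percent (real data must be non-zero)."""
    return 100.0 * sum(abs(f - r) / abs(r) for f, r in zip(forecast, actual)) / len(actual)

F = [110.0, 190.0]   # forecasted data
R = [100.0, 200.0]   # real data
print(mse(F, R), rmse(F, R), mape(F, R))
```

MSE penalizes large individual errors most heavily, whereas MAPE is scale-free, so the two measures may rank models differently.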

2.3. Standard PSO

In 1995, Kennedy and Eberhart introduced an optimization method called particle swarm optimization (PSO) [22,23]. It is a swarm intelligence-based optimization method that has been applied to many real-world problems. It mimics the way birds fly to find food sources: the birds in the swarm follow the leader that is nearest to the food source. Consider a swarm S = {x1, x2, …, xN} of N particles. The position of particle xi at generation t + 1 is computed as follows:

$$x_i^{t+1} = x_i^{t} + v_i^{t+1} \quad (4)$$

where $v_i^{t+1}$ is xi’s velocity at generation t + 1, computed as follows:

$$v_i^{t+1} = \omega v_i^{t} + c_1 r_1 (p_i^{t} - x_i^{t}) + c_2 r_2 (g^{t} - x_i^{t}) \quad (5)$$

where $g^{t}$ and $p_i^{t}$ are the best global and local solutions found so far, respectively; r1 and r2 are two random numbers uniformly distributed in [0, 1]; and c1, c2, and ω are the self-cognitive coefficient, social cognitive coefficient, and inertia weight, respectively. The standard PSO is described in brief as follows:

Step 1: Initialize a swarm S with two random vectors, the position vector X and the velocity vector V. Set the maximum number of cycles and initialize the cycle variable t;

Step 2: Calculate each particle’s objective value $f(x_i^{t})$;

Step 3: Check each particle’s objective value. If the current position $x_i^{t}$ is better than the personal best $p_i^{t}$, then update $p_i^{t}$ with $x_i^{t}$;

Step 4: Check whether the best particle position in the current cycle has an objective value better than that of the global best $g^{t}$; if so, update $g^{t}$ with that position;

Step 5: Update each particle’s velocity by Equation (5) and move it to its new position by Equation (4);

Step 6: Terminate if variable t reaches the maximum number of cycles; otherwise, let t = t + 1 and go to Step 2.
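The six steps can be sketched in Python as below. This is a minimal illustration rather than the authors' implementation: the clamping of positions to the search bounds, the scale of the initial velocities, the fixed random seed, and the sphere test function are assumptions added to make the example self-contained.

```python
import random

def pso(f, dim, lo, hi, n_particles=20, n_cycles=30, w=0.4, c1=2.0, c2=2.0, seed=0):
    """Minimise f over [lo, hi]^dim; return (best position, best value, history)."""
    rng = random.Random(seed)
    span = hi - lo
    # Step 1: random positions and (small) random velocities.
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    V = [[0.1 * rng.uniform(-span, span) for _ in range(dim)] for _ in range(n_particles)]
    P = [x[:] for x in X]                  # personal best positions
    p_val = [f(x) for x in X]              # personal best objective values
    b = min(range(n_particles), key=p_val.__getitem__)
    g, g_val = P[b][:], p_val[b]           # global best
    history = [g_val]
    for _ in range(n_cycles):              # Step 6: stop after n_cycles
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Step 5: velocity update, then position update.
                V[i][d] = (w * V[i][d] + c1 * r1 * (P[i][d] - X[i][d])
                           + c2 * r2 * (g[d] - X[i][d]))
                X[i][d] = min(hi, max(lo, X[i][d] + V[i][d]))  # clamp (assumption)
            val = f(X[i])                  # Step 2: evaluate the objective
            if val < p_val[i]:             # Step 3: update the personal best
                P[i], p_val[i] = X[i][:], val
        b = min(range(n_particles), key=p_val.__getitem__)
        if p_val[b] < g_val:               # Step 4: update the global best
            g, g_val = P[b][:], p_val[b]
        history.append(g_val)
    return g, g_val, history

def sphere(x):
    """Toy objective with its minimum at the origin."""
    return sum(v * v for v in x)

best, best_val, history = pso(sphere, dim=2, lo=-5.0, hi=5.0)
print(best, best_val)
```

The recorded history of global best values never increases, because the global best is replaced only by a strictly better personal best.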

3. Improve the LTS-FM by the Co-Optimization of PSO

In this section, a hybrid LTS-FM integrated with particle swarm co-optimization (COLTS) is presented. In the LTS-FMs, the word set in the domain of a linguistic variable is generated automatically by the hedge algebra associated with that variable. Therefore, the cardinality of the generated word set is unlimited. However, in a specific period, only a given number of words are selected to describe the numeric time series. The number of automatically generated words depends on the maximum specificity level k of the words, which is specified by human experts, and the maximum word length in the word domain is equal to k.

In the optimization model of LTS presented in [21], the word set used to describe the historical numeric time-series data (the so-called used word set) has to be selected intuitively by human experts before the FPV optimization process. Hence, the used word set depends on the human expert’s intuition and may not be optimal. To select the optimal used word set that best describes the historical numeric time-series data from the linguistic variable domain, an optimization process should be executed. The FPVs should be optimized in parallel with the word set’s selection so that the used words obtain the best numerical semantics. Therefore, a co-optimization process for concurrently selecting the optimal used word set and FPVs is applied by utilizing PSO. Specifically, the co-optimization process includes inner and outer loops. The inner loop (inner PSO) optimizes the used word set, while the outer loop (outer PSO) optimizes the FPVs. Real encoding is used for both the outer and inner loops.

As in other applications of hedge algebras [11,12,13,14,15,16,17], in our experiments, only two linguistic hedges (Little and Very) are used to generate the word set of the linguistic variable domain, so the number of optimized FPVs is only 2. They are the fuzziness measure of one of the two generator words (e.g., fm(c−)) and the fuzziness measure of one of the two hedges (e.g., μ(Little)). From the constraints fm(c−) + fm(c+) = 1 and μ(Little) + μ(Very) = 1, it can be inferred that fm(c+) = 1 − fm(c−) and μ(Very) = 1 − μ(Little). Each particle of the outer loop, $\delta_i$ = {fm(c−), μ(Little)}, i = 1, …, N, in the swarm represents those two FPVs. For the inner loop, each particle corresponds to a solution represented as an array of real numbers $w_i = (w_{i,1}, \ldots, w_{i,d_w})$ with $w_{i,l} \in [0, |W_{set}|]$. Each word xl of the used word set Xi is selected from the word set Wset of 𝒳 by the zero-based index, as follows:

$$x_l = W_{set}\left[\lfloor w_{i,l} \rfloor\right], \quad l = 1, \ldots, d_w \quad (6)$$

where $\lfloor \cdot \rfloor$ denotes the integer part of a real number.
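The decoding of an inner-loop particle into a used word set can be sketched in Python as follows. The clamping of out-of-range values (for example, a coordinate exactly equal to |Wset|) is an assumption added for safety, and the word labels are hypothetical. The sketch does not remove duplicate indices, since the text does not say how two coordinates that map to the same word are handled.

```python
import math

def decode_used_words(particle, wset):
    """Map each real coordinate to a zero-based index by taking its integer
    part, sort the indices, and pick the corresponding words from wset."""
    last = len(wset) - 1
    idx = sorted(max(0, min(int(math.floor(v)), last)) for v in particle)
    return [wset[i] for i in idx]

# Hypothetical word set listed in semantic order (two hedges, word length <= 2).
wset = ["0", "V c-", "c-", "L c-", "W", "L c+", "c+", "V c+", "1"]
print(decode_used_words([6.9, 0.2, 4.0, 2.5], wset))
```

Sorting the indices keeps the used words in their semantic order, which mirrors the "Sort the elements" step of the inner PSO.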

The details of all steps of the proposed co-optimization algorithm are as follows (Algorithms 1 and 2):

Algorithm 1. UWO (Gwmax, Wset, dw, δ) // Used word set-optimization procedure

    Input: Parameters: Gwmax, Wset, dw, δ;
        // Gwmax is the number of generations, Wset is the word set of 𝒳,
        // dw is the cardinality of the used word set selected from Wset, δ is a set of FPVs.
    Output: The optimal word set and the associated best MSE value;
    Begin
        Randomly initialize a swarm W0 = {w_i | i = 1, …, M, |w_i| = dw};
            // w_i is a subset of index values in the interval [0, |Wset|], where |Wset| is the
            // cardinality of Wset and |w_i| is the number of elements of w_i.
        Sort the elements of each w_i;
        For each particle xi in the swarm do begin
            Implement the LTS forecasting procedure from Step 2 to Step 6 based on δ and w_i;
            Evaluate the value of the MSE of xi by Equation (1);
            Assign the personal best position p_i of xi to the current position;
        End;
        Assign the global best position g to the best position in the current swarm;
        t = 1;
        Repeat
            For each particle xi in the swarm do begin
                Compute the new velocity of xi by Equation (5);
                Compute the new position w_i of xi by Equation (4);
                Sort the elements of w_i;
                Implement the LTS forecasting procedure from Step 2 to Step 6 based on δ and w_i;
                Evaluate the value of the MSE of xi by Equation (1);
                If w_i is better than p_i then
                    Update p_i of xi based on the value of MSE;
            End;
            Update g based on the values of MSE;
            t = t + 1;
        Until t = Gwmax;
        Return the best position g and its best MSE value;
    End.

Algorithm 2. PSCO_FPVO // Fuzziness parameter value optimization

    Input: The designated time-series dataset D;
        Parameters: N, Gmax, Gwmax, the syntactical semantics of HAs, kmax, dw;
        // Gmax and Gwmax are the numbers of generations of the outer and inner PSO, respectively;
        // kmax is the maximum word length; dw is the used word set’s cardinality.
    Output: The optimal FPVs and the best used word set W*set;
    Begin
        Generate the word set Wset of 𝒳 with the maximum word length kmax utilizing HA;
        Randomly initialize a swarm S0 = {δ_i | i = 1, …, N}, where δ_i = {fm(c−), μ(Little)}; // δ_i is a set of FPVs
        // The best used word set W*set and the best MSE value MSE*
        (W*set, MSE*) = (∅, +∞);
        For each particle xi do begin
            (Wtmp_set, MSE) = UWO(Gwmax, Wset, dw, δ = δ_i); // call the inner PSO
            f(δ_i) = MSE; // Fitness value associated with particle xi
            Assign the personal best position p_i of xi to the current position;
            If MSE is better than MSE* then (W*set, MSE*) = (Wtmp_set, MSE);
        End;
        Assign the global best position g to the best position in the current swarm;
        t = 1;
        Repeat
            For each particle xi do begin
                Compute the new velocity of xi by Equation (5);
                Compute the new position δ_i of xi by Equation (4);
                (Wtmp_set, MSE) = UWO(Gwmax, Wset, dw, δ = δ_i); // call the inner PSO
                f(δ_i) = MSE; // Fitness value associated with particle xi
                If f(δ_i) is better than f(p_i) then begin
                    Update the personal best p_i of xi based on f(δ_i)’s value;
                    If MSE is better than MSE* then begin
                        (W*set, MSE*) = (Wtmp_set, MSE);
                        Update the global best position g;
                    End;
                End;
            End;
            t = t + 1;
        Until t = Gmax;
        Return the best position g and W*set;
    End.

As shown in the UWO and PSCO_FPVO algorithms, PSCO_FPVO optimizes the FPVs and runs as the outer iteration of COLTS. Each particle of PSCO_FPVO represents a given FPV set, which is the input of UWO. In turn, UWO finds the optimal used word set from the word set Wset of the linguistic variable based on the FPV set given as its input. Each particle of UWO represents a given used word set. The output of UWO includes the local best used word set, contained in the global best position of the inner swarm, corresponding to the input FPV set, and the associated local best MSE value. The output of PSCO_FPVO includes the best FPV set, contained in the global best position of the outer swarm, and the global best used word set W*set, which is the best solution found so far.
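The nesting of the two loops can be sketched in Python as follows. This is a simplified illustration under several assumptions, not the authors' C# implementation: one compact PSO routine serves both loops and updates the global best as soon as a better particle is found; the FPVs are searched in [0.05, 0.95]; the swarm sizes and cycle counts are deliberately tiny; and `evaluate(delta, word_indices)` is a hypothetical callback standing in for Steps 2 to 6 of the LTS-FM followed by the MSE computation.

```python
import random

def pso(objective, dim, lo, hi, n_particles, n_cycles, rng, w=0.4, c1=2.0, c2=2.0):
    """Minimise objective(position) -> (value, payload).
    Returns (best_position, best_value, best_payload)."""
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    V = [[0.0] * dim for _ in range(n_particles)]
    P, p_val, best = [x[:] for x in X], [], None
    for x in X:
        val, payload = objective(x)
        p_val.append(val)
        if best is None or val < best[1]:
            best = (x[:], val, payload)
    for _ in range(n_cycles):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                V[i][d] = (w * V[i][d] + c1 * r1 * (P[i][d] - X[i][d])
                           + c2 * r2 * (best[0][d] - X[i][d]))
                X[i][d] = min(hi, max(lo, X[i][d] + V[i][d]))
            val, payload = objective(X[i])
            if val < p_val[i]:
                P[i], p_val[i] = X[i][:], val
                if val < best[1]:
                    best = (X[i][:], val, payload)
    return best

def co_optimise(evaluate, n_words, dw, seed=0):
    """Outer PSO over the two FPVs; each fitness call runs an inner PSO
    over dw real-coded word indices."""
    rng = random.Random(seed)

    def inner(delta):
        def word_objective(position):
            idx = sorted(min(int(v), n_words - 1) for v in position)
            return evaluate(delta, idx), idx
        _, mse, idx = pso(word_objective, dw, 0.0, float(n_words), 10, 15, rng)
        return mse, idx          # fitness of delta and its best used word set

    delta, mse, idx = pso(inner, 2, 0.05, 0.95, 6, 5, rng)
    return delta, idx, mse

def toy_mse(delta, idx):
    """Stand-in for the LTS-FM error; a real run would forecast and score."""
    return ((delta[0] - 0.4) ** 2 + (delta[1] - 0.6) ** 2
            + sum((i - t) ** 2 for i, t in zip(idx, (1, 4, 7))))

delta, words, mse = co_optimise(toy_mse, n_words=17, dw=3)
print(delta, words, mse)
```

Each outer fitness evaluation costs a full inner PSO run, so the total number of LTS-FM evaluations grows with the product of the two swarm sizes and the two cycle counts.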

4. Experimental Studies and Discussion

The experiments aim to show the necessity of optimizing the used linguistic word set that describes a numeric time series in parallel with optimizing the FPVs, and to show that our proposed forecasting models outperform the models used for comparison. To show the efficiency of our proposed models, their experimental results on the three forecasting problems of the EUA, CAB, and SGT were evaluated and compared with those of the models proposed by Uslu et al. [6], Chen et al. [8], and Phong et al. [21]. The objective function of the optimization problem is the MSE. The values of MSE, RMSE, and MAPE were used to evaluate the forecasting models used for comparison.

In the experiments, our proposed forecasting models were implemented in the C# programming language and run on 64-bit Microsoft Windows 10 with an Intel Core i5-8250U 1.6 GHz CPU and 8 GB of RAM. Each experiment was run five times, and the smallest MSE value obtained was taken as the forecasting accuracy.

To limit the running time, the parameter values of the optimization algorithm were set as in the existing forecasting models: for the inner iteration, the number of cycles was 100 and the number of particles was 30, and for the outer iteration, the number of cycles was 30 and the number of particles was 20. Based on trial and error and our experience in applying PSO in previous studies, for both the inner and outer iterations, the inertia weight ω was 0.4, and both the self-cognitive factor c1 and the social cognitive factor c2 were 2.0.

4.1. Forecast the “Enrollments of the University of Alabama”

To show the efficiency of the proposed LTS-FM described above, it was applied to forecast the EUA, as shown in Table 1. As in the counterparts, the UD of the EUA was U = [13,000, 20,000] and the number of linguistic words used to describe the numeric time series was 16. Instead of partitioning U into intervals as in the existing methods, the numeric time series was transformed into linguistic words to form an LTS in our proposed forecasting methods. The linguistic word set was generated by the associated hedge algebras, so its cardinality was not limited. In practice, however, the number of words used to describe the numeric time series in a specific period is limited. Therefore, PSO was applied to select the best used word set to improve the accuracy of the forecasting model.

Table 1.

Comparative simulation of the enrollments forecast in the case of 16 used words and a maximum word length of 5.

To facilitate a meaningful comparison with the existing methods, seven, fourteen, and sixteen linguistic words were, in turn, used to describe U, and to show the significance of the word length, the maximum word length was, in turn, limited to 3, 4, and 5. The best MSE and MAPE values in accordance with the best word set selected by the inner iteration of the linguistic word optimization process and the best FPVs optimized by the outer iteration are shown in Table 2. It can be seen that, with the same number of used words, the higher the maximum word length was, the better the MSE value we obtained. However, increasing the number of used words did not always help. In the case of a maximum word length of 3, the LTS-FM with 14 used words was better than that with 16 used words when compared by both the MSE and MAPE values. The cause of this situation will be analyzed more deeply in a future study. It may be attributed to the limited cardinality of the word domain of the linguistic variable. According to the theory of HAs [9,10], when the maximum word length is limited to 3 and two linguistic hedges are used, the number of words in the word domain generated by the associated HAs is only 17. In the case of 16 used words, PSO has to choose 16 words out of 17, so it does not have much choice, and among the chosen words, at least one has semantics that are not suitable for the natural distribution of the time-series data, leading to worse forecasted results than those obtained in the case of 14 used words. In the case of maximum word lengths of 4 and 5, the higher the number of used words was, the better the MSE value we obtained. Therefore, the best MSE was reached in the case of 16 used words and a maximum word length of 5.
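The figure of 17 words can be checked by enumeration. The Python sketch below assumes that a word of length n is a generator preceded by n − 1 hedges and that the three constants 0, W, and 1 are counted as words of the domain; the string labels are hypothetical.

```python
from itertools import product

def generate_words(hedges=("L", "V"), generators=("c-", "c+"), max_len=3):
    """Enumerate the constants plus all words with at most max_len - 1 hedges."""
    words = ["0", "W", "1"]
    for n_hedges in range(max_len):
        for hs in product(hedges, repeat=n_hedges):
            for c in generators:
                words.append(" ".join(hs + (c,)))
    return words

for k in (3, 4, 5):
    print(k, len(generate_words(max_len=k)))
```

Under these assumptions the count is 3 + 2(2^k − 1), so the word domain roughly doubles with each additional unit of word length, leaving far more candidates to choose 16 used words from when k is 4 or 5.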

Table 2.

The MSE values of the first-order LTS-FMs with different numbers of words and different maximum word lengths.

To show the efficiency of our proposed LTS-FMs with co-optimization PSO in comparison with other forecasting models, the best experimental results of the proposed LTS-FMs with maximum word lengths of 3, 4, and 5, denoted by COLTS3, COLTS4, and COLTS5, respectively, were compared with the state-of-the-art LTS-FM of Phong et al. [21] and the existing FTS-FMs, namely, the CCO6 model of Chen and Chung [5], which applies a genetic algorithm; HPSO [7], which applies a PSO algorithm; the model of Uslu et al. [6], which considers the number of recurrences of fuzzy logical relationships; and the model of Chen et al. [8], which applies PSO and a new defuzzification technique. The experimental results and their comparative presentation are shown in Table 1 and visualized in Figure 2. The results in Table 1 show that our proposed LTS-FM with a word length of 3 (COLTS3) had the same MSE and MAPE values as the LTS-FM proposed by Phong et al. [21] and had better MSE and MAPE values than those of the FTS-FMs CCO6 [5], HPSO [7], Uslu et al. [6], and Chen et al. [8]. Meanwhile, both COLTS4 and COLTS5 had better MSE and MAPE values than those of all the compared forecasting models. Recall that the word set used to describe the numeric time-series data in the LTS-FM proposed in [21] was chosen by human experts. Therefore, it depended on their cognitive recognition, and obtaining the best word set could require some trial and error. In addition, the number of cycles and the number of particles of the PSO in [21] were very large, at 1000 and 300, respectively. Based on the comparison results described above, it can be stated that the proposed LTS-FMs were better than the FTS-FMs and that COLTS5 was the best.

Figure 2.

Comparison chart of the forecasted values of the EUA of the compared forecasting models.

4.2. Forecast the “Killed in Car Road Accidents in Belgium”

The proposed LTS-FMs were also applied to the CAB’s problem to evaluate them again. The minimum and maximum values of the historical data of CAB observed from 1974 to 2004 were 953 and 1644, respectively. Thus, the UD was defined as U = [900, 1700]. The number of used words was set to 17, which was equal to the number of intervals in [6,8].

It is easy to see from the comparison results in Table 3 that the MSE values of COLTS3, COLTS4, and COLTS5 were, in turn, 794, 444, and 421, which were much better than those of the compared forecasting methods of Uslu et al. [6] and Chen et al. [8], which were 1731 and 1024, respectively. When comparing by the MAPE values, we can also see that all three of our LTS-FMs, COLTS3, COLTS4, and COLTS5, had better MAPE values than those of Uslu et al. and Chen et al. [6,8], respectively. Therefore, our proposed LTS-FMs outperformed the FTS-FMs presented in [6,8] in forecasting the problem of CAB. In addition, among our three LTS-FMs, COLTS5 was better than COLTS4 and, in turn, COLTS4 was better than COLTS3. Therefore, the statement “with the same number of used words, the longer the maximum word length is, the better MSE value we obtain” presented above is also true for the CAB problem.

Table 3.

Comparative simulation of CAB forecast in the case of 16 used words and a maximum word length of 5.

4.3. Forecast the “Spot Gold in Turkey”

The proposed LTS-FMs were applied once again to a more complex forecasting problem of SGT. The minimum and maximum of the historical data of SGT observed from December 7th to November 10th were 30,503 and 62,450, respectively, so the UD was set to [30,000, 63,000]. The number of used words was 16, which was equal to the number of intervals in [6,8].

The experimental results of our proposed LTS-FMs shown in Table 4 were compared with those of Uslu et al. and Chen et al. [6,8], respectively. It can be seen in Table 4 that, when compared by both the MSE and MAPE values, all three of our proposed LTS-FMs, COLTS3, COLTS4, and COLTS5, outperformed the FTS-FMs of Uslu et al. and Chen et al. [6,8], respectively, in forecasting the problem of SGT. When compared by the MSE or RMSE values, COLTS5 was better than COLTS4, and COLTS3 was worse than COLTS4. However, when compared by the MAPE value, COLTS5 was slightly worse than COLTS4. In general, once again, we can state that, with the same number of used words, the longer the maximum word length, the better the MSE value obtained.

Table 4.

The forecasted values of the SGT of our proposed LTS-FMs compared with those of existing forecasting models.

5. Conclusions

In this study, we proposed a hybrid LTS-FM with a co-optimization procedure using PSO to determine applicable fuzziness parameter values and the best word set describing the observed data. When compared with the former studies, our proposed model achieved better forecasting accuracies. Based on this research, we can continue studying to improve the forecasted accuracy of the forecasting model and apply it to different forecasting application problems with various datasets. In addition, research on better data representation methods using LTS to improve the accuracy and the performance of the forecasting model is a good idea for future work.

Author Contributions

Conceptualization, N.D.H. and P.D.P.; methodology, N.D.H. and P.D.P.; software, P.D.P. and M.V.L.; validation, M.V.L.; data curation, M.V.L. and N.D.H.; writing, P.D.P. and N.D.H.; visualization, M.V.L.; supervision, P.D.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Vietnam Ministry of Education and Training under grant number B2022-TTB-01.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Song, Q.; Chissom, B.S. Fuzzy Time Series and its Model. Fuzzy Sets Syst. 1993, 54, 269–277.

- Song, Q.; Chissom, B.S. Forecasting Enrollments with Fuzzy Time Series–Part I. Fuzzy Sets Syst. 1993, 54, 1–9.

- Song, Q.; Chissom, B.S. Forecasting Enrollments with Fuzzy Time Series–Part II. Fuzzy Sets Syst. 1994, 62, 1–8.

- Zadeh, L.A. Fuzzy Sets. Inf. Control 1965, 8, 338–353.

- Chen, S.M.; Chung, N.Y. Forecasting Enrollments of Students by Using Fuzzy Time Series and Genetic Algorithms. Inf. Manag. Sci. 2006, 17, 1–17.

- Uslu, V.R.; Bas, E.; Yolcu, U.; Egrioglu, E. A fuzzy time series approach based on weights determined by the number of recurrences of fuzzy relations. Swarm Evol. Comput. 2014, 15, 19–26.

- Kuo, I.H.; Horng, S.J.; Kao, T.W.; Lin, T.L.; Lee, C.L.; Pan, Y. An improved method for forecasting enrollments based on fuzzy time series and particle swarm optimization. Expert Syst. Appl. 2009, 36, 6108–6117.

- Chen, S.M.; Zou, X.Y.; Gunawan, G.C. Fuzzy time series forecasting based on proportions of intervals and particle swarm optimization techniques. Inf. Sci. 2019, 500, 127–139.

- Ho, N.C.; Wechler, W. Hedge algebras: An algebraic approach to structures of sets of linguistic domains of linguistic truth values. Fuzzy Sets Syst. 1990, 35, 281–293.

- Ho, N.C.; Wechler, W. Extended hedge algebras and their application to fuzzy logic. Fuzzy Sets Syst. 1992, 52, 259–281.

- Huy, N.H.; Ho, N.C.; Quyen, N.V. Multichannel image contrast enhancement based on linguistic rule-based intensificators. Appl. Soft Comput. J. 2019, 76, 744–762.

- Le, B.H.; Anh, L.T.; Binh, B.V. Explicit formula of hedge-algebras-based fuzzy controller and applications in structural vibration control. Appl. Soft Comput. 2017, 60, 150–166.

- Ho, N.C.; Nam, H.V.; Khang, T.D.; Chau, N.H. Hedge Algebras, Linguistic-valued logic and their application to fuzzy reasoning. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 1999, 7, 347–361.

- Ho, N.C.; Son, T.T.; Phong, P.D. Modeling of a semantics core of linguistic terms based on an extension of hedge algebra semantics and its application. Knowl.-Based Syst. 2014, 67, 244–262.

- Ho, N.C.; Thong, H.V.; Long, N.V. A discussion on interpretability of linguistic rule based systems and its application to solve regression problems. Knowl.-Based Syst. 2015, 88, 107–133.

- Phong, P.D.; Du, N.D.; Thuy, N.T.; Thong, H.V. A hedge algebras based classification reasoning method with multi-granularity fuzzy partitioning. J. Comput. Sci. Cybern. 2019, 35, 319–336.

- Hoang, V.T.; Nguyen, C.H.; Nguyen, D.D.; Pham, D.P.; Nguyen, V.L. The interpretability and scalability of linguistic-rule-based systems for solving regression problems. Int. J. Approx. Reason. 2022, 149, 131–160.

- Ho, N.C.; Long, N.V. Fuzziness measure on complete hedge algebras and quantifying semantics of terms in linear hedge algebras. Fuzzy Sets Syst. 2007, 158, 452–471.

- Hieu, N.D.; Ho, N.C.; Lan, V.N. Enrollment forecasting based on linguistic time series. J. Comput. Sci. Cybern. 2020, 36, 119–137.

- Hieu, N.D.; Ho, N.C.; Lan, V.N. An efficient fuzzy time series forecasting model based on quantifying semantics of words. In Proceedings of the 2020 RIVF International Conference on Computing and Communication Technologies (RIVF), Ho Chi Minh, Vietnam, 14–15 October 2020; pp. 1–6.

- Phong, P.D.; Hieu, N.D.; Linh, M.V. A Hybrid Linguistic Time Series Forecasting Model combined with Particle Swarm Optimization. In Proceedings of the International Conference on Electrical, Computer and Energy Technologies (ICECET 2022), Prague, Czech Republic, 20–22 July 2022.

- Kennedy, J.; Eberhart, R.C. Particle Swarm Optimization. In Proceedings of the IEEE International Conference on Neural Networks, Piscataway, NJ, USA, 27 November–1 December 1995; IEEE Service Center: Piscataway, NJ, USA, 1995; pp. 1942–1948.

- Eberhart, R.C.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the Sixth International Symposium on Micro Machine and Human Science, Nagoya, Japan, 4–6 October 1995; IEEE Service Center: Piscataway, NJ, USA, 1995; pp. 39–43.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).