1. Introduction

In recent years, multi-sensor fusion estimation has been the focus of intense research. In traditional estimation problems, information was obtained from a single sensor [1], which had limitations. With the development of science and technology, higher precision requirements have been put forward for various industrial control processes. Multi-sensor fusion estimation technology has been widely used in real environments, including intelligent robot systems, driverless cars, target tracking, and signal processing [2,3,4,5,6]. In addition, multi-sensor systems are easily affected by external disturbances during operation, which introduces uncertainty into the system model parameters [7,8]. Therefore, studying distributed state fusion estimation for uncertain systems is of both theoretical significance and engineering value.

At present, there are three basic methods for multi-sensor information fusion state estimation: centralized fusion, distributed fusion and sequential fusion. Although centralized fusion can obtain a globally optimal fusion estimate, it involves a heavy computational burden and has poor fault tolerance. Sequential fusion is more convenient for handling asynchronous sampling systems [9]. Distributed fusion has attracted wide attention because of its parallel structure and easy fault detection and isolation. In [10], an event-triggered signal selection method was proposed to deal with packet loss caused by the network and packet disorder caused by random transmission delays. Reference [11] put forward an algorithm that yields consistent local estimates when the underlying model is unknown but fixed. The authors of [12] proposed a distributed fusion Kalman filter (DFKF) algorithm based on an optimal weighted fusion criterion. In [13], the cross-covariance matrix of the estimation error between any two local prediction variables was derived, and the steady-state characteristics of these variables were analyzed. In [14], a distributed extended Kalman filter algorithm based on sensor networks was proposed to handle norm-bounded uncertain parameters in nonlinear systems. Unfortunately, the algorithms used in the above-mentioned studies are all based on Kalman or extended Kalman filters, which place strict requirements on the statistical characteristics of the noise and cannot account for system constraints. Therefore, in this study we adopt a moving horizon estimation method, which can deal with system constraints and has high estimation accuracy for complex nonlinear systems.

Moving horizon estimation (MHE) is a finite horizon estimation method that has developed rapidly in recent years. By introducing a performance index, the optimization problem is restricted to a fixed horizon window. MHE is characterized by moving optimization and explicit handling of constraints, and it has no special requirements for the statistical characteristics of the noise [15,16]. At present, moving horizon estimation has been widely used in chemical process state estimation [17], fault detection [18], system identification [19], exchange systems [20,21], large-scale systems [22,23,24], networked systems [25], uncertain systems and distributed systems [25,26,27,28,29,30,31,32,33,34]. In [22], two distributed moving horizon estimation algorithms were proposed, which can deal with interference and noise constraints. In [23], an event-triggered mechanism was introduced to govern the evaluation of given estimators and the network information exchange between the device and the estimators, which provides good estimation performance while reducing processor usage and network communication frequency. In [30], a distributed moving horizon estimation method was proposed for nonlinear constrained systems; the dynamic characteristics of the state variables that cannot be observed by each sensor were described, and the conditions of local information exchange were provided. In [34], the robust estimation problem of a linear multi-sensor system subject to additive and multiplicative uncertainties was studied, and a moving horizon fusion estimation algorithm was proposed. However, none of the above studies consider the uncertain parameters in distributed systems: when there are uncertain parameters in the system, the traditional moving horizon estimation algorithm cannot satisfy the stability requirements of the system, which reduces its estimation efficiency. Therefore, there is a need to improve the original algorithm.
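To make the moving-window idea concrete, the following is a minimal sketch and not the algorithm of this paper. It assumes a hypothetical scalar model x[k+1] = a·x[k], y[k] = x[k] + noise, ignores process noise, and uses a single prior-penalty weight `mu` as the arrival cost. All names (`mhe_scalar`, `run_mhe`, `mu`, `lo`, `hi`) are illustrative assumptions.

```python
def mhe_scalar(ys, a, x_prior, mu, lo, hi):
    """Estimate the window-start state for x[k+1] = a*x[k], y[k] = x[k] + noise.

    Minimises mu*(x0 - x_prior)**2 + sum_k (ys[k] - a**k * x0)**2
    subject to the box constraint lo <= x0 <= hi.
    """
    num = mu * x_prior
    den = mu
    for k, y in enumerate(ys):
        num += (a ** k) * y
        den += (a ** k) ** 2
    x0 = num / den                 # unconstrained minimiser of the quadratic cost
    x0 = min(max(x0, lo), hi)      # in one dimension, clipping gives the constrained minimiser
    return x0, x0 * a ** (len(ys) - 1)  # window-start estimate, estimate at newest sample


def run_mhe(measurements, a, window, x_prior, mu, lo, hi):
    """Slide a fixed-length window over the data and return current-time estimates."""
    estimates = []
    for t in range(len(measurements)):
        start = max(0, t - window + 1)
        x0, x_now = mhe_scalar(measurements[start:t + 1], a, x_prior, mu, lo, hi)
        if t - window + 1 >= 0:
            # the window moves forward next step: propagate x0 to get the next prior
            x_prior = a * x0
        estimates.append(x_now)
    return estimates
```

With noise-free data and `mu = 0`, the estimates reproduce the measurements; a nonzero `mu` trades trust in the prior against the data in the window, and the box bounds illustrate how constraints enter the optimization directly.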

Based on the above analysis, this paper presents a distributed moving horizon fusion estimation (DMHFE) for nonlinear constrained uncertain systems. The main innovations are as follows:

- (1) By constructing an augmented system, the relationship between the estimation error covariance and the state covariance of the augmented system is established, and the estimated values of the uncertain parameters are obtained by optimizing the upper bound of the error covariance matrix.

- (2) By introducing a covariance intersection fusion estimation strategy and considering the fusion estimation error of the system, a sufficient condition for the convergence of the squared norm of the local estimation error is given. Moreover, the relationship between the fusion estimation error and the local estimation error is derived.

The structure of this article is as follows. Section 2 introduces the distributed moving horizon estimation problem to be solved and gives the expression of the local performance index. Section 3 gives the estimated values of the uncertain system parameters. Section 4 gives the fusion estimation criterion and the calculation method of the weighting coefficient. Section 5 presents the stability analysis. Section 6 provides numerical simulation examples. Section 7 summarizes the conclusions.

Notation: For a column vector $x$, the notation $\|x\|_Y^2$ stands for $x^{T} Y x$, where $Y$ is a symmetric positive semidefinite matrix and $x^{T}$ represents the transpose of $x$. $\mathbb{R}^m$ represents the m-dimensional Euclidean space. The matrix $\mathrm{diag}\{Y_1, \ldots, Y_n\}$ is block diagonal with blocks $Y_1, \ldots, Y_n$, $I$ represents the identity matrix, and $\|\cdot\|$ stands for the Euclidean norm. $E\{\cdot\}$ represents the expectation of a random variable. For any matrix $S$, $S > 0$ ($S \geq 0$) means that $S$ is a positive definite (positive semidefinite) matrix, and $\sigma_{\max}(M)$ and $\sigma_{\min}(M)$ represent the maximum and minimum singular values of matrix $M$, respectively.
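As a small illustration of the notation, the sketch below evaluates a weighted quadratic form and assembles a block-diagonal matrix in plain Python. It assumes that the weighted-norm notation denotes the usual quadratic form x^T Y x; the helper names `weighted_sq_norm` and `block_diag` are hypothetical.

```python
def weighted_sq_norm(x, Y):
    """Return x^T Y x for a vector x (list) and a square matrix Y (list of rows)."""
    n = len(x)
    return sum(x[i] * Y[i][j] * x[j] for i in range(n) for j in range(n))


def block_diag(*blocks):
    """Assemble diag{B1, ..., Bk} from square blocks given as lists of rows."""
    size = sum(len(b) for b in blocks)
    out = [[0.0] * size for _ in range(size)]
    offset = 0
    for b in blocks:
        for i, row in enumerate(b):
            for j, v in enumerate(row):
                out[offset + i][offset + j] = v
        offset += len(b)
    return out
```

For example, `weighted_sq_norm([1, 2], [[2, 0], [0, 3]])` evaluates 2·1² + 3·2².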

2. Problem Description

Consider the following distributed nonlinear system model with uncertain parameters:

where is the system state and is the measured value of sensor i. , is a Lebesgue measurable unknown function matrix, satisfying . I is the identity matrix with appropriate dimensions; and are known matrices with appropriate dimensions. and represent the process noise and measurement noise of the system, respectively, with the following statistical characteristics:

where, is a sign function, and Q and represent the covariance matrices of process noise and measurement noise, respectively, satisfying . Next, we discuss the design of the proposed distributed moving horizon fusion estimator, which includes L sensors, for the distributed nonlinear system (1). A schematic of this algorithm is shown in Figure 1. Table 1 shows the meaning of each item in Equation (1). It can be seen from Figure 1 that sensor sends the measured value to the local estimator , and the local optimal estimate is obtained by solving the nonlinear optimization problem. Then is sent to the fusion center through the communication network, and the fusion estimate is obtained using the covariance intersection fusion estimation strategy. In order to realize the above distributed fusion estimation, the following optimization problem of local moving horizon estimation is presented:

Problem 1. with the following constraints: where is the set of convex polyhedra satisfying the corresponding constraints.

Table 1. The symbolic nomenclature of Formula (1).

| Symbol | Meaning |
| --- | --- |
| i | The local sensor |
| L | The number of sensors |
|  | The set of sensors adjacent to sensor i |
|  | The unknown nonlinear function |
|  | The known nominal nonlinear dynamic function |
|  | The known nominal nonlinear observation function |
|  | The system model error |

In Equation (2), considering a moving window of fixed length , , represents the performance index of a local optimization problem. is the weight coefficient, satisfying . The first two terms represent the arrival cost, which summarizes the impact of the data before the time on the current data; is a symmetric positive definite weight matrix quantifying the confidence of the prior state estimate , which penalizes the distance between the initial state estimate in the moving window and the prior state estimate . It is noted that in traditional moving horizon estimation algorithms, the arrival cost usually takes the following form:

However, in the distributed system, in order to ensure the observability of the entire state vector, we reconstruct the arrival cost as a fusion arrival cost, obtained as the weighted average of the local arrival cost of node i and the local arrival costs of node i's in-neighbors j, corresponding to the first two terms in (2), respectively. In the third term, the distance between the expected output based on the state estimate and the actual measurement is weighted using . Finally, the fourth term represents the case in which, at time t, sensor i may receive measurement information from the in-neighbor node .
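The weighted-average construction of the fusion arrival cost can be sketched for a scalar state as follows. This is only an illustration: the exact form is the one in (2), and the assumptions here are a quadratic local arrival cost and weights for each node that sum to one. The names `local_arrival_cost`, `fused_arrival_cost`, `priors`, `p_invs` and `weights` are hypothetical.

```python
def local_arrival_cost(x, x_prior, p_inv):
    """Quadratic penalty on the distance between x and a prior estimate (scalar case)."""
    return p_inv * (x - x_prior) ** 2


def fused_arrival_cost(x, i, priors, p_invs, weights):
    """Weighted average of the arrival cost of node i and those of its in-neighbours.

    weights[i] maps a node index j (node i itself or an in-neighbour) to its weight;
    the weights of one node are assumed to sum to one.
    """
    w = weights[i]
    assert abs(sum(w.values()) - 1.0) < 1e-12, "weights must sum to one"
    return sum(w_ij * local_arrival_cost(x, priors[j], p_invs[j]) for j, w_ij in w.items())
```

A node whose own prior is poor still receives a penalty term pulled toward its neighbors' priors, which is one way to read the observability argument above.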

By solving optimization Problem 1, the optimal state estimation value is obtained. It is worth noting that the estimated state at other times in optimization Problem 1 can be calculated using the following formula:

where and are matrices with appropriate dimensions. Similarly, the prior estimated state at time can also be calculated using (3). Before solving the above local optimization problem, the unknown parameters in (3) must be determined.

Remark 1. From (3), one can find that the window estimation model consists of four components, of which the last three are designed to improve estimation performance [14]. The key task of this paper is therefore to find the optimal gain matrices and the upper bound of the error covariance .

3. The Method of Solving the Estimated Value of Uncertain Parameter in System

This section, considering the system model (1), proves that the estimation error covariance matrix is bounded even if uncertain parameters exist in the system models, and the estimated values of the uncertain parameters are given.

At time t, to facilitate the handling of linearization errors and norm-bounded uncertainties, and are expanded at using a Taylor series and can be written as follows:

where ; the Lebesgue measurable unknown function matrices , satisfy , ; and are known matrices with appropriate dimensions.

Following [14], the gain matrices from (3) and in the performance index (2) should be solved. Therefore, we construct an augmented system and derive the upper bound of the estimation error covariance matrix in system (1) by finding the upper bound of the state covariance matrix of the augmented system. Letting , the augmented system is written as:

with

The state covariance matrix of the augmented system is defined as and the estimation error covariance matrix for sensor i at time is defined as . It is noted that the error covariance matrix and the augmented system state covariance matrix should satisfy the following lemma:

Lemma 1. Given error covariance matrix and augmented system state covariance matrix , the estimated values of the uncertain parameters are

which means the following inequality

holds. Here, , and is the number of sensors adjacent to sensor i.

Proof. By applying ([14], Lemma 1), the state covariance matrix of the augmented system can be transformed into the following form:

where are appropriate positive constants. It is noted that there are four terms on the right side of inequality (7); each term is analyzed separately.

For the sake of analysis, can be written as follows:

where are identity matrices of appropriate dimensions. Then, the following formula is satisfied by applying ([14], Lemma 2):

with the appropriate positive constants , and

For the fourth term on the right-hand side of inequality (7), the equation is

For the third term on the right of inequality (7), based on the basic knowledge of graph theory, we obtain

where .

To sum up, (7) can be written as:

where

It is noted from (10) that:

and

By applying ([14], Lemma 3), if the initial conditions of the state covariance matrix are satisfied

with the positive definite matrices , then, in turn, the following inequalities are satisfied:

Considering the relationship between the error covariance matrix and the augmented system state covariance matrix, it follows that

where

Then, by calculating the partial derivative of the trace of (15) with respect to , we obtain

Similarly, by calculating the partial derivative of the trace of with respect to , it follows that

Through calculation, the estimated values of the gain matrix are derived by

Based on the above analysis, we obtained the upper bound of the estimated error covariance matrix by deriving the relationship between the error covariance matrix and the augmented system state covariance matrix. Through optimizing the upper bound, estimates of unknown parameters were obtained, thus ensuring the feasibility of optimization Problem 1. In the following section, a fusion estimation criterion will be designed to compensate for the uncertainty of single sensor measurement. □

Remark 2. It is noted that in (4), the same idea as the extended Kalman filter is used to carry out Taylor expansion of the nonlinear function, and linearization error is introduced. However, different from the conventional extended Kalman filter, here a time-varying matrix considering linearization error and norm-bounded uncertainty is introduced, and then the uncertainty problem is embedded into the solution of nonlinear optimization Problem 1 to compensate for the linearization error.
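The first-order Taylor expansion and the linearization error mentioned in Remark 2 can be illustrated numerically. The sketch below uses a hypothetical two-state function `f` (not the model of this paper) and a central-difference Jacobian; it only shows what the remainder term looks like, not how the paper bounds it.

```python
import math


def f(x):
    """Hypothetical two-state nonlinear dynamics used only for this illustration."""
    return [x[0] + 0.1 * math.sin(x[1]), 0.9 * x[1] + 0.05 * x[0] ** 2]


def jacobian_fd(func, x, h=1e-6):
    """Central-difference Jacobian of func at x; entry [r][c] is d func_r / d x_c."""
    n = len(x)
    m = len(func(x))
    J = [[0.0] * n for _ in range(m)]
    for c in range(n):
        xp, xm = list(x), list(x)
        xp[c] += h
        xm[c] -= h
        fp, fm = func(xp), func(xm)
        for r in range(m):
            J[r][c] = (fp[r] - fm[r]) / (2 * h)
    return J


def linearisation_error(func, x_hat, x):
    """Remainder func(x) - [func(x_hat) + J(x_hat) (x - x_hat)] of the first-order expansion."""
    J = jacobian_fd(func, x_hat)
    fx, fh = func(x), func(x_hat)
    dx = [a - b for a, b in zip(x, x_hat)]
    return [fx[r] - fh[r] - sum(J[r][c] * dx[c] for c in range(len(dx)))
            for r in range(len(fx))]
```

The remainder shrinks quadratically as `x` approaches `x_hat`; treating it as a bounded uncertainty, rather than discarding it as in a conventional extended Kalman filter, is the idea the remark describes.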

4. Covariance Intersection Fusion Estimator

In distributed linear systems, considering the real-time requirements of estimation, we often adopt the scalar weighted linear minimum variance fusion estimation criterion, which requires the cross-covariance matrix between two adjacent nodes. However, for distributed nonlinear systems, it is very difficult to obtain the cross-covariance matrices needed by such fusion estimation algorithms. Therefore, based on the algorithm in [35], a covariance intersection (CI) fusion estimation algorithm is proposed. Furthermore, the following lemma is given:

Lemma 2 ([35]). Given an n-dimensional random vector and an unbiased estimate , and given that can be calculated using (15), a covariance intersection (CI) fusion estimation algorithm is given by:

where the scalar can be obtained from the following formula:

Based on the above analysis, the distributed moving horizon fusion estimation method can be summarized in Algorithm 1.

Algorithm 1: Distributed moving horizon fusion estimation

- 1: When , can be initialized;
- 2: At time , are calculated using (4), (13), and (17)–(19);
- 3: The prior estimation state is calculated using (3), and is calculated using (12);
- 4: Solve optimization Problem 1 to obtain the optimal state estimation value ;
- 5: Calculate the state estimate at the current moment using (3);
- 6: The fusion estimation value is calculated using (20)–(22);
- 7: At time , return to step 2 and repeat the calculation based on the new measurements.

Remark 3. When , the full information moving horizon estimation problem should be solved to obtain the local optimal state estimate. The specific solution process can be found in [31].

Remark 4. The method presented in this paper differs from traditional moving horizon estimation in that the error covariance matrix is not fixed but changes with time. Therefore, it is a fault tolerant state estimation algorithm. For the sake of the stability analysis in the next section, the calculation of the state estimate in the window should be consistent with the recursive formula of the prior state estimation model, i.e., (3) can be used to calculate both the state estimate at the current time and the prior state estimate at the initial time.

In this section, a fusion estimation criterion was introduced and the calculation method of the weighting coefficient was given. For convenience of application, the distributed moving horizon fusion estimation was summarized as Algorithm 1. In the next section, the stability of the proposed algorithm will be proven, and a sufficient condition for the convergence of the squared norm of the fusion estimation error will be given.
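For readers unfamiliar with covariance intersection, the following is a minimal sketch of the standard two-estimate CI rule for a two-dimensional state, written without external libraries. It is not a transcription of (20)–(22): the fused bound is taken as the inverse of the weighted sum of the inverse covariances, and the weight is chosen by a simple grid search that minimizes the trace, which stands in for the paper's formula for the weighting coefficient. The names `inv2`, `ci_fuse` and `steps` are hypothetical.

```python
def inv2(M):
    """Inverse of a 2x2 matrix given as [[a, b], [c, d]]."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def ci_fuse(x1, P1, x2, P2, steps=1000):
    """Covariance intersection of two 2-D estimates (x1, P1) and (x2, P2).

    Returns the fused estimate, the fused covariance bound and the weight w
    in [0, 1] that minimises the trace of the bound over a uniform grid.
    """
    I1, I2 = inv2(P1), inv2(P2)
    best = None
    for s in range(steps + 1):
        w = s / steps
        info = [[w * I1[r][c] + (1 - w) * I2[r][c] for c in range(2)] for r in range(2)]
        P = inv2(info)
        tr = P[0][0] + P[1][1]
        if best is None or tr < best[0]:
            best = (tr, w, P)
    _, w, P = best
    # information-weighted combination of the two local estimates
    z = [w * sum(I1[r][c] * x1[c] for c in range(2))
         + (1 - w) * sum(I2[r][c] * x2[c] for c in range(2)) for r in range(2)]
    x = [sum(P[r][c] * z[c] for c in range(2)) for r in range(2)]
    return x, P, w
```

The rule needs only the local covariances, never the cross-covariance between the two estimators, which is why it suits the nonlinear distributed setting discussed in this section.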

5. Algorithm Stability Analysis

The stability analysis is divided into two parts: firstly, the boundedness of the error covariance matrix is proven, and then the convergence of the error square of the fusion estimation is proven. Before proving the stability, we first define the following variables:

where the symbol “∘” represents the composition of functions, and

where

Furthermore

which satisfies

obtained from the classical mean value theorem [36].

Before proving the stability of the system, we first introduce the following definitions and assumptions:

Definition 1. A continuous function is a K-function if it is strictly monotonically increasing, for , and .

Definition 2. If a feasible solution to Problem 1 exists, then exists, such that (12) holds.

Definition 3. If a K-function exists, such that holds, then the system (1) is a fully observable system in steps.

Assumption 1. System (1) is fully observable in steps with a K-function defined by Definition 3.

Assumption 2. Functions satisfy Lipschitz continuity, i.e., scalars exist, such that the following inequalities hold:

Based on the assumptions and definitions above, the following theorem is given.

Theorem 1. If the estimation error covariance matrix can be calculated by (12) and the solution of Problem 1 exists, then the estimation error covariance is bounded for system (1).

Proof. It can be seen from Definition 2 that the recursive window estimation model given in (3) is feasible, i.e., when , the error covariance matrix obtained from (12) tends to the unique positive semidefinite matrix. If the solution of Problem 1 exists, i.e., the performance index is bounded, it is expressed as follows:

Then it can be inferred that

Let ; combining it with Assumption 2, we get:

According to , we obtain

So the upper bound of the estimation error covariance is . Next, we substitute the upper bound value into (20) and rewrite (20) in the following form:

Then the proof is complete. □

Next, we prove the boundedness of the squared norm of the fusion estimation error. Define the local estimation error at time as

Similarly, the fusion estimation error at time is defined as

Then, the necessary theorem should be introduced:

Theorem 2. Suppose that Assumptions 1 and 2 hold, and that a K-function defined in Assumption 1 exists and satisfies the following conditions:

If the error covariance matrix corresponding to in (3) and the weight matrix in (2) cause the following inequality

to hold, then

Furthermore, the squared norm of the fusion estimation error satisfies the following form:

where has the following form:

with

Proof. The performance index can be rewritten in the following form:

Since is optimal, the upper bound of the optimal performance index is given by

Each of the terms in the second term of the right-hand side of the above inequality can be written as:

is a function of , and , so the upper bound of the performance index is changed to

After a little bit of algebra, we obtain:

According to the formula of inequality of the sum of squares and the relevant definitions in (23), we obtain:

Then (32) is rewritten as

For simplicity of expression, let

So we get the upper bound of the optimal performance index as

Similarly, we give the derivation process of the lower bound of the optimal performance index. The second term on the right of the inequality can be rewritten as

Because

where,

.

Further, using Assumption 1, we obtain

For the first term in inequality (32), it is shown that

By combining (26) and (34), the lower bound of the optimal value of the performance index is

Combining (33) and (36), we obtain

According to Theorem 2, we obtain

After sorting out, we obtain

Based on (38) and (23), we have

where,

Substituting (39) into (38) obtains

If inequality (28) holds, it is easy to obtain the upper bound of the square of the local estimation error norm, because . Then the boundedness proof of local estimation error is completed. □

The stability of the local estimation error has now been analyzed. Next, we prove the stability of the fusion estimation error by introducing Definition 4.

Definition 4. For any point set , if , the convex function satisfies the following inequality:

Proof. The fusion estimation error can be rewritten as

According to Definition 4, we obtain

Let . It is easy to prove that is a convex function. We record the upper bound of as D. Then (42) is converted into

By combining (36), (38) and (40), we get

Similarly, substituting as a feasible solution into obtains

Combining (42), (44) and (45), we obtain

When , (47) can be obtained from (37):

It can be seen from (40), (46) and (47) that (30) and (31) are proven. As in the preceding stability analysis of the local estimation error, if (28) holds, then (29) and (30) hold, because . Then the proof is complete. □
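Definition 4 appears to be Jensen's inequality for convex functions. As a numerical illustration only, the sketch below checks it for the squared Euclidean norm under the assumption of non-negative scalar weights that sum to one; the fusion weights actually used in the proof are those of (20)–(22). The names `sq_norm`, `convex_combination` and `jensen_gap` are hypothetical.

```python
def sq_norm(v):
    """Squared Euclidean norm of a vector given as a list."""
    return sum(c * c for c in v)


def convex_combination(vectors, weights):
    """Return sum_i weights[i] * vectors[i]; weights are non-negative and sum to one."""
    assert all(w >= 0 for w in weights) and abs(sum(weights) - 1.0) < 1e-12
    dim = len(vectors[0])
    return [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(dim)]


def jensen_gap(vectors, weights):
    """Right-hand side minus left-hand side of Jensen's inequality for the squared norm."""
    lhs = sq_norm(convex_combination(vectors, weights))
    rhs = sum(w * sq_norm(v) for w, v in zip(weights, vectors))
    return rhs - lhs
```

A non-negative gap means the squared norm of a weighted combination of local errors never exceeds the weighted sum of their squared norms, which is the kind of step that links a fused error bound to local error bounds.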

6. Numerical Simulation

To verify the effectiveness of the proposed distributed moving horizon fusion estimation algorithm, we consider the following unstable state space model with two sensors:

We let ; similar to [14], the uncertain parameters are chosen as and the variances are chosen as . It is noted that the matrix can be calculated using (13), and the Jacobian matrix calculated according to model (48) is

and

Additionally, the constraints of the system noise are set as , the simulation number n is set to 100 and the moving horizon window length N is set to 4. The performance of the proposed algorithm is evaluated using the root mean square error (RMSE), which is written as

We compared the proposed method with the methods in [10] and [14]. In [10], a matrix-weighted fusion estimation algorithm is used, which is complex and takes a long time to calculate. In [14], a distributed robust extended Kalman filter algorithm is proposed, but fusion estimation is not carried out.

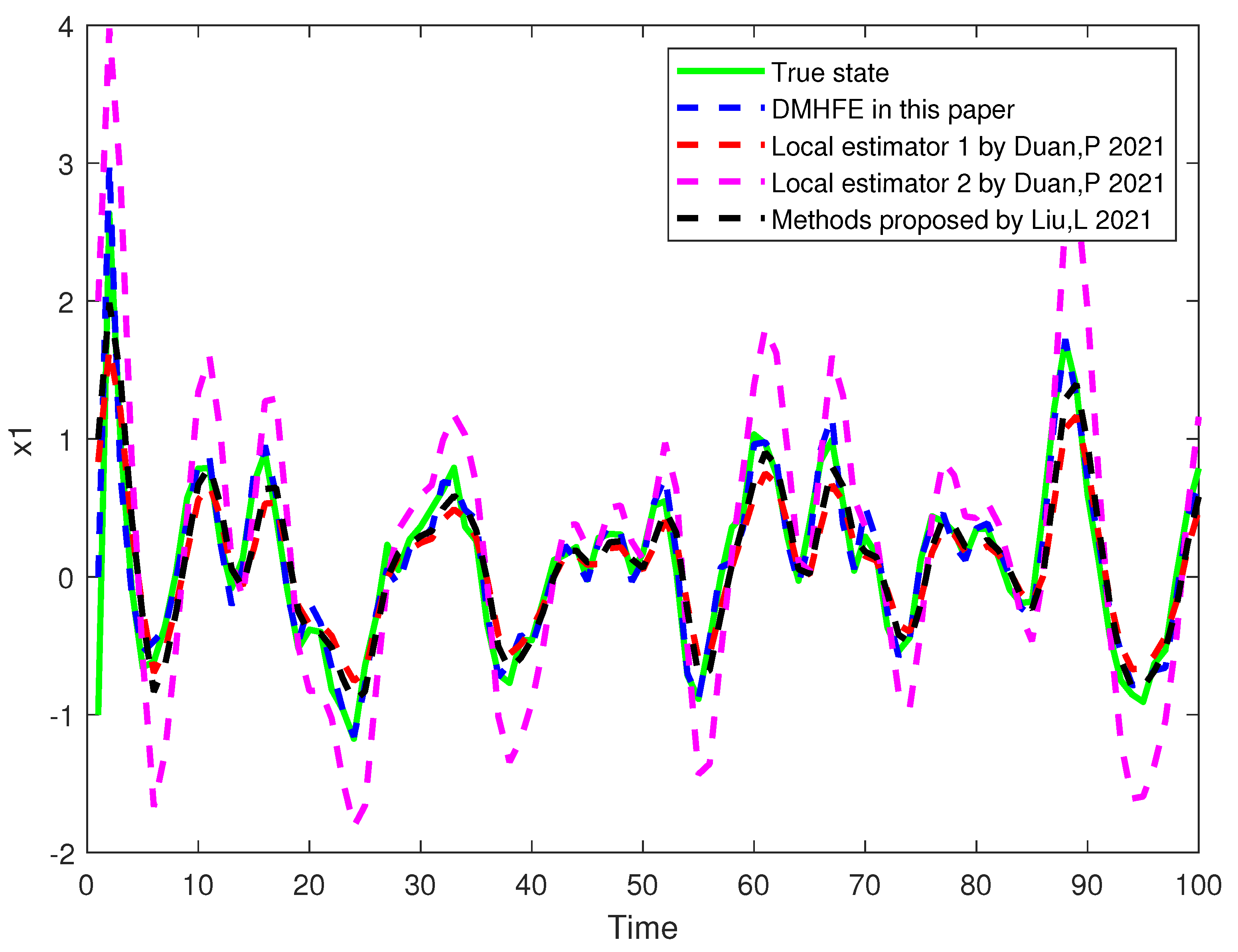

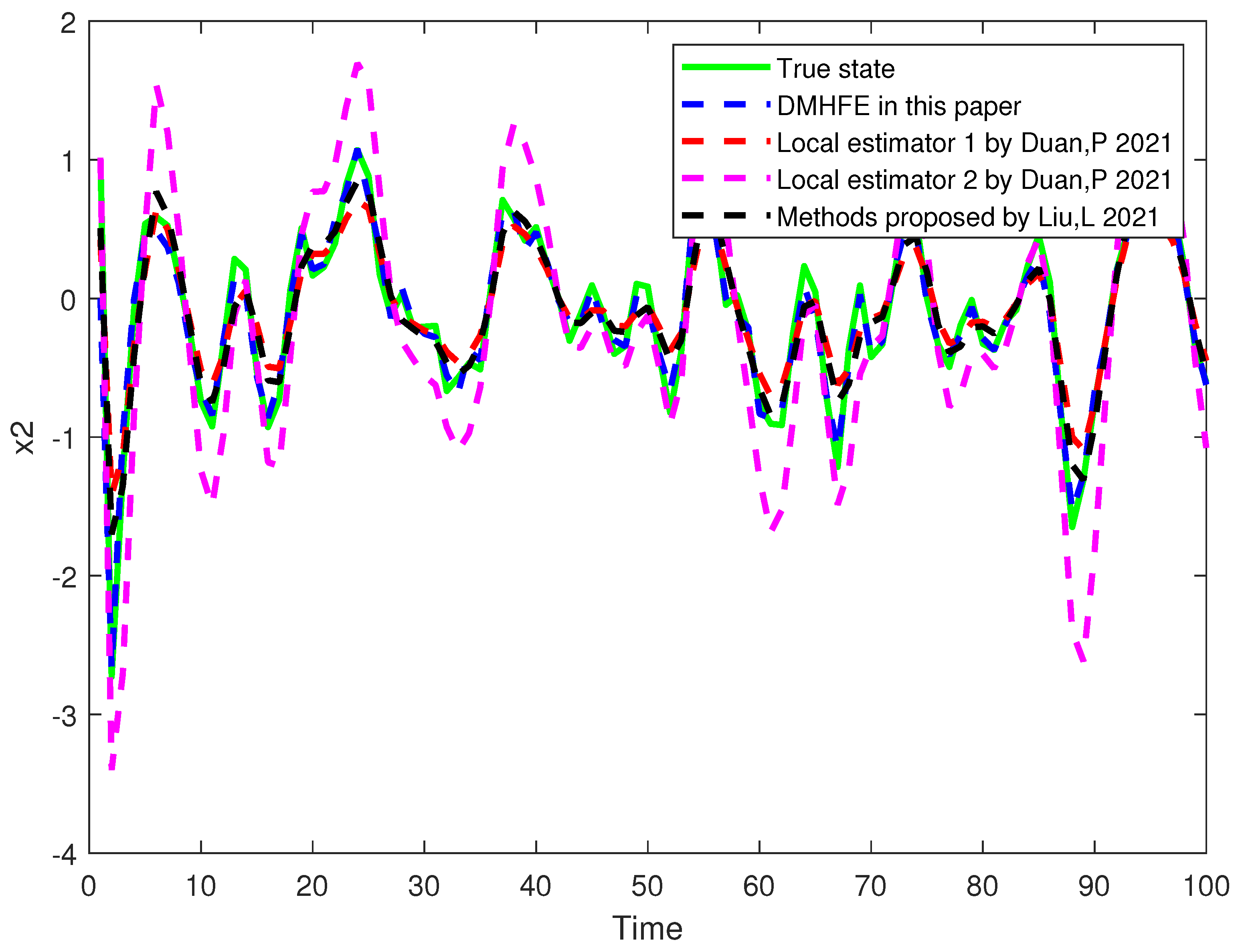

Figure 2 and Figure 3 show the state trajectory of the system, and Figure 4 shows the comparison of root mean square error. It can be seen from the figures that the state trajectory after fusion estimation has a better tracking effect and lower root mean square error than that of local state estimation in [14] and fusion estimation in [10].
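The RMSE comparison can be reproduced in outline with a few lines of Python. The sketch below assumes a common per-time-step definition, the square root of the squared error averaged over the simulation runs, for a scalar state; the paper's own RMSE expression may differ in detail, and the names `rmse_per_step` and `mean_rmse` are hypothetical.

```python
import math


def rmse_per_step(true_runs, est_runs):
    """RMSE at each time step, averaged over Monte Carlo runs.

    true_runs[j][t] and est_runs[j][t] are scalar states of run j at time t.
    """
    n_runs = len(true_runs)
    horizon = len(true_runs[0])
    out = []
    for t in range(horizon):
        sq = sum((true_runs[j][t] - est_runs[j][t]) ** 2 for j in range(n_runs))
        out.append(math.sqrt(sq / n_runs))
    return out


def mean_rmse(true_runs, est_runs):
    """Time average of the per-step RMSE, a single summary number per estimator."""
    r = rmse_per_step(true_runs, est_runs)
    return sum(r) / len(r)
```

Calling `rmse_per_step` once per estimator on the same set of true trajectories gives curves that can be compared directly, and `mean_rmse` gives one figure per window length.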

Table 2 shows the mean root mean square error under different moving horizon window lengths. It can be seen from the table that the system error decreases as the window length increases, but the amount of computation also increases. Therefore, a larger N is not necessarily better, and N is generally selected as twice the system order. Balancing computation against root mean square error, we choose N as 4. The results show that the accuracy of distributed moving horizon fusion estimation is higher than that of both the local estimation in [14] and the matrix-weighted fusion estimation method in [10]. Meanwhile, Figure 4 also shows that the estimation errors of each local estimator and of the fusion estimator are bounded. Finally, Table 3 shows the running time of different algorithms. In 100 simulations, the calculation time of the proposed method is reduced by 33.23% and 29.1%, respectively, compared to [10,14]. The results show that the proposed method not only achieves a high estimation accuracy, but also improves computation efficiency.

7. Conclusions

In this paper, the state estimation problem was solved by following the MHE paradigm for a class of distributed nonlinear systems in the presence of norm-bounded uncertainty. By constructing an augmented system, the upper bound of the estimation error covariance was derived, and the upper bound was optimized to obtain estimated values of the uncertain parameters in the system. In addition, the proposed method fully considered the system constraints: by constructing a performance index, the state estimation problem was transformed into a nonlinear optimization problem, which avoided the cumbersome recursive solution of the traditional Kalman filter. Then a fusion estimation method based on the covariance intersection (CI) strategy was proposed, which circumvented the difficulty of solving the cross-covariance matrix in the fusion estimation of nonlinear systems, reduced the computational burden of the estimation problem and improved the computational efficiency. More importantly, sufficient conditions for the convergence of the squared norm of the fusion estimation error were given. Finally, a numerical simulation example was given to verify the effectiveness of the algorithm. In future work, the correlation of noise should be fully considered, and the proposed algorithm should be applied to distributed nonlinear systems with correlated noises.