Abstract

This research focuses on designing a min–max robust control based on a neural dynamic programming approach using a class of continuous differential neural networks (DNNs). The proposed controller solves the robust optimization of a cost function that depends on the trajectories of a system with an uncertain mathematical model belonging to a class of non-linear perturbed systems. The min–max dynamic programming formulation enables robust control with respect to bounded modelling uncertainties and disturbances. The value function of the Hamilton–Jacobi–Bellman (HJB) equation, approximated by a DNN, makes it possible to estimate the closed-loop formulation of the controller. The controller design is based on an estimated state trajectory under the worst possible uncertainties/perturbations, which provides the degree of robustness of the proposed controller. The class of learning laws for the time-varying weights in the DNN is obtained by studying the HJB partial differential equation. The controller uses the solution of the obtained learning laws and a time-varying Riccati equation. A recurrent algorithm based on the Kiefer–Wolfowitz method adjusts the initial conditions of the weights to satisfy the final condition of the given cost function. The robust control suggested in this work is evaluated using a numerical example that confirms the optimizing solution based on the DNN approximation of Bellman’s value function.

Keywords: robust optimal control; artificial neural networks; approximate models; Kiefer–Wolfowitz method

MSC: 49L20

1. Introduction

The term optimal control refers to a group of techniques that can be used to create a control strategy that produces the best possible behaviour with respect to a specified criterion (i.e., a strategy that minimizes a loss function). Two main techniques can define the solution of a given optimal control problem: the maximum principle and dynamic programming. Pontryagin’s maximum principle gives a necessary condition for optimality [1]. Still, it cannot be used to derive closed-loop solutions to the optimal control problem when the system is non-linear and is affected by modelling uncertainties and external perturbations [2].

On the other hand, the Hamilton–Jacobi–Bellman (HJB) equation provides a sufficient condition that can be used to arrive at the solutions of optimal control problems (see, for example, [3,4]). Both approaches, although theoretically elegant, have significant drawbacks because they require precise knowledge of the system dynamics, which in practice include internal uncertainties and external perturbations. When a mathematical model contains uncertain parameters from an a priori given set, the formulation of necessary conditions for this case is known as the robust maximum principle [5]. The formulation of the HJB equation in the presence of uncertainties/disturbances in the model description can be found in [6,7]. This robust version of optimal control introduces novel approaches that can be exploited to handle imprecise representations of the system that must be controlled. Moreover, it allows considering the effect of modelling uncertainties and external perturbations that satisfy some predefined bounds. Hence, using the robust HJB equations represents an innovation for solving online optimization problems that could usually be interpreted as extremum-seeking control design problems. However, such solutions require a state-dependent explicit solution of the HJB equation, which is a complex task in general, considering that the HJB equation is a non-linear partial differential equation [8,9].

In general, the HJB equation cannot be solved analytically for non-linear mathematical models, even without the influence of errors or disturbances. Because of this, a numerical approximation of the relevant solution was suggested in [10,11], based on static neural networks (NNs) whose weights are adjusted online by a least-squares method [12,13]. From this point of view, this method is referred to as an adaptive realization of an optimal controller. This idea has been used in recent decades through adaptive approximation of the value function associated with the HJB equation, which can offer an adaptive version of the optimization problem for dynamical systems. When the approximation is based on artificial neural networks as an approximate model of the value function or the optimal structure, the technique is known as neural adaptive dynamic programming.

In this study, we suggest using a differential neural network (DNN) approach [14] for the online approximation of Bellman’s function corresponding to the min–max solution of the HJB equation [15], where the maximum is taken over the class of admissible uncertainties and the minimum is evaluated for the system trajectories affected by the worst internal parametric uncertainty and the external bounded perturbations. Unlike static neural networks, whose weights converge to "the optimal" approximate values, DNNs have weights that change throughout the learning process. Utilizing the acquired necessary features [16], the entire design process may be completed before applying the min–max control [17].

The main contributions of this study are:

- Min–max formulation of the problem and the analytical calculation of the worst internal parametric uncertainties and external perturbations;

- Development of the HJB equation corresponding to the considered min–max problem formulation;

- Proposition of a DNN approximation for the solution of the HJB equation for the min–max problem;

- Design of the differential equations for the adjustment of the weights (learning) in the suggested DNN structure;

- Numerical validation of the suggested method on a non-linear test system.

The structure of this manuscript is the following: Section 2 presents the problem formulation, covering the type of non-linear system to be controlled and the min–max optimal control description. Section 3 presents the min–max HJB formulation used to design the proposed controller based on approximate dynamic programming. Section 4 contains the estimation of the worst possible set of trajectories under the maximum value of external perturbations and modelling uncertainties. Section 5 establishes the robust version of the HJB equation evaluated over the estimated worst trajectories. Section 6 establishes the approximation of Bellman’s function based on the approximation capacities of the DNN. Section 7 relates the DNN approximation to its adjustment law through a recursive algorithm using the Kiefer–Wolfowitz method. Section 8 evaluates the proposed controller on a numerical example. Section 9 contains the final remarks and future trends based on the results presented in this study.

2. Problem Formulation

Let us consider the non-linear controllable plant given by the following ordinary differential equation (ODE)

where is the state vector, u is the control action to be designed. The matrix characterizes the internal linear relationship between the state and its dynamics. The matrix defines the constant effect of control action u on the dynamics of the system. Time is represented by the variable t. The time window is defined by the finite value represented by T.

The internal uncertainty matrix is supposed to be bounded as follows

here .

The non-measurable external disturbance is defined by . This term is also assumed to be bounded according to the following inequality:

Let the cost functional be given in the Bolza form:

with the quadratic cost functions

Here the state weighting matrix is symmetric and positive semi-definite, the control-associated matrix in the functional is symmetric and positive definite, and the matrix related to the final condition is symmetric and positive definite.
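To make the structure of a quadratic Bolza-form cost concrete, the following minimal sketch evaluates such a functional numerically along a sampled trajectory. The names `Q`, `R` and `G` for the state, control and terminal weighting matrices, the helper `bolza_cost`, and the left rectangle rule used for the integral are illustrative assumptions, not notation taken from the text.

```python
def quad_form(v, M):
    """Return v^T M v for a vector v (list) and a square matrix M (list of rows)."""
    n = len(v)
    return sum(v[i] * M[i][j] * v[j] for i in range(n) for j in range(n))


def bolza_cost(xs, us, dt, Q, R, G):
    """Approximate J = x(T)^T G x(T) + integral_0^T (x^T Q x + u^T R u) dt.

    xs holds N+1 state samples, us holds N control samples, and dt is the
    sampling step. The integral is approximated with the left rectangle rule,
    so only the first N state samples enter the running cost.
    """
    running = sum(quad_form(x, Q) + quad_form(u, R) for x, u in zip(xs, us))
    terminal = quad_form(xs[-1], G)
    return terminal + dt * running


# Usage with a constant scalar trajectory (purely illustrative values).
xs = [[1.0] for _ in range(11)]
us = [[0.5] for _ in range(10)]
J = bolza_cost(xs, us, 0.1, Q=[[2.0]], R=[[4.0]], G=[[3.0]])
```

With these values the running cost is 2 + 4·0.25 = 3 per unit time over a horizon of 1, and the terminal cost is 3, so `J` is approximately 6.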

This study designs the min–max control which must solve the following optimization problem

subject to the dynamics given in Equation (1).

The set defines the class of admissible internal uncertainties and external perturbations given by

The set consists of all piece-wise continuous vector functions measurable (in the Lebesgue sense) for all . This means that the min–max optimal control is as follows:

3. HJB Min–Max Formulation

According to the results presented in [6,7], the sufficient condition for a control action u to be min–max (robust) optimal (7) consists of fulfilling the following max–min HJB equation [18,19]:

where the function is called the perturbed Hamiltonian (considering the effect of the parameters and external perturbations), which is given by

The vector is referred to as the adjoint variable satisfying the following differential equation with the corresponding final condition

The value (Bellman) function V is defined for any as

with the boundary condition

Here is defined in Equation (5).

4. Determination of the Worst Possible Uncertainty

Given the min–max formulation of the optimization problem considered in this study, it is necessary to estimate the state trajectories under the worst possible evolution of parametric uncertainties and non-parametric imprecision, as well as external perturbations . This section presents this analysis.

Consider the following joint vector defined as

The following lemma presents the result regarding the estimation of the mentioned worst possible trajectory:

Lemma 1.

The worst estimation

for the uncertainties/perturbation extended vector , as follows

Proof.

Remark 1.

The "worst" trajectory, generated under the worst estimation of Lemma 1, is given by the following auxiliary ordinary differential equation

The initial condition is a given constant vector. Notice that the dynamics (15) do not contain either uncertainty or perturbations. Therefore, these trajectories could be used to obtain the minimum value of the cost function using the standard dynamic programming approach based on HJB.

5. HJB Equation under the Effect of the Worst Possible Trajectories

Given Lemma 1, the following optimization problem arises for estimating the minimizing controller under the worst possible trajectory. In the mathematical optimization technique known as dynamic programming, the Bellman equation provides a necessary condition for optimality. It expresses the value (using the proposed Bellman function) of a decision problem at a specific time in terms of the payoff from some initial decisions and the value of the decision problem that remains after these initial choices. Following Bellman’s principle of optimality, this divides a dynamic optimization problem into a series of simpler sub-problems. Based on this technique, the optimization problem considered in this study can be solved through the combined application of online optimization using the HJB equation with an approximated Bellman’s function [21,22].

According to the dynamic programming theory, and considering the application of the Hamiltonian function, the following equivalent problem for solving the optimal (under the worst possible uncertainties/perturbations) control can be proposed as follows:

Given the arguments given in Equation (8), the robust optimal control is

Substituting both and in the HJB equation leads to:

Using a simplification process on the last term of the right-hand side of the previous partial differential equation leads to

The solution for Bellman’s function in Equation (18) is difficult to find analytically. That is why we propose to use a DNN approximation of this function, which is explained in the next section.
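As a worked illustration of how a feedback law of this type follows from an HJB equation, consider the following hedged sketch under assumed notation: control-affine dynamics $\dot{x} = f(x) + Bu$ and a running cost $x^\top Q x + u^\top R u$ with $R$ symmetric and positive definite. The symbols $f$, $B$, $Q$ and $R$ are placeholders chosen here for illustration; they are not claimed to coincide term by term with the equations of this section, which additionally account for the worst-case uncertainties and perturbations.

```latex
\begin{align*}
H(x,u,\nabla V) &= x^\top Q x + u^\top R u + \nabla V^\top \bigl( f(x) + B u \bigr), \\
0 &= \frac{\partial H}{\partial u} = 2 R u + B^\top \nabla V
   \;\Longrightarrow\; u^{*} = -\tfrac{1}{2} R^{-1} B^\top \nabla V, \\
-\frac{\partial V}{\partial t} &= H(x,u^{*},\nabla V)
   = x^\top Q x + \nabla V^\top f(x) - \tfrac{1}{4}\, \nabla V^\top B R^{-1} B^\top \nabla V .
\end{align*}
```

The last line uses $u^{*\top} R u^{*} = \tfrac{1}{4} \nabla V^\top B R^{-1} B^\top \nabla V$ and $\nabla V^\top B u^{*} = -\tfrac{1}{2} \nabla V^\top B R^{-1} B^\top \nabla V$. The resulting term, quadratic in $\nabla V$, is what makes the equation non-linear and, for a value function with a quadratic component, gives rise to a Riccati-type equation.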

6. Bellman’s Function DNN Approximation

Consider the representation for the Bellman’s function. The function is an approximate solution associated with the HJB Equation (18) that satisfies the following DNN structure:

In Equation (19), the matrix term is a positive definite solution that is uniformly bounded with respect to time, and the remaining terms are associated with the DNN weights and activation functions, respectively. The components of the activation vector are selected as strictly positive sigmoidal functions.
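A structure of this kind, a quadratic term plus weighted sigmoidal activations, can be sketched as follows. The specific form V(x) = xᵀPx + Σₖ wₖ σ(cₖ·x), the direction matrix `C`, and every name in the code are assumptions made for illustration; the exact expression in Equation (19) may differ.

```python
import math


def sigmoid(z):
    """Strictly positive sigmoidal activation with values in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def value_and_gradient(x, P, w, C):
    """Evaluate V(x) = x^T P x + sum_k w[k] * sigmoid(C[k] . x) and its gradient.

    P is a symmetric matrix, w the weight vector, and C a matrix whose rows
    are fixed input directions of the activations (all hypothetical names).
    """
    n = len(x)
    Px = [sum(P[i][j] * x[j] for j in range(n)) for i in range(n)]
    value = sum(x[i] * Px[i] for i in range(n))
    grad = [2.0 * Px[i] for i in range(n)]  # gradient of x^T P x for symmetric P
    for wk, ck in zip(w, C):
        s = sigmoid(sum(ck[i] * x[i] for i in range(n)))
        value += wk * s
        for i in range(n):
            grad[i] += wk * s * (1.0 - s) * ck[i]  # chain rule through the sigmoid
    return value, grad


# Usage with illustrative numbers.
P = [[2.0, 0.5], [0.5, 1.0]]
w = [0.3, -0.2]
C = [[1.0, 0.0], [0.5, -1.0]]
V, dV = value_and_gradient([0.4, -0.7], P, w, C)
```

The gradient is returned alongside the value because a feedback law derived from an HJB equation typically depends on the gradient of the value function with respect to the state.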

The substitution of the approximate Bellman’s function in Equation (18) leads to

Introducing the unitary and finite valued term in the components that contain in Equation (20) yields

Based on the introduction of the unitary term presented above, the strategy simplifies and removes some of the practical implementations of previous robust optimal control designs using neural network approximations [7,23]. Notice that the expression presented in Equation (21) contains several terms that are proportional to the . Reorganizing the last expression as , we may conclude that . This strategy is commonly implemented in the design of learning laws for a DNN as mentioned in [14,24]. Hence, based on this strategy, it is feasible to estimate the temporal evolution of the weights according to the following ordinary differential equation, which defines the dynamics of all components in :

Hence, based on the definition of (which is known as the learning law), Equation (20) admits the following representation for the HJB equation using the approximate representation of the Bellman’s function:

where the expression described the following matrix Riccati differential equations given by

Taking into account Property (12), the initial conditions of the weights and of the Riccati matrix should be selected in such a way that the following final conditions are met

So, based on the presented results, we are now ready to formulate the principal result of this study.

Theorem 1.

Since both ODEs (22) and (24) have the terminal Conditions (25), we need to apply a recursive method (in this case, the “shooting” method) to find the appropriate initial conditions of the weights and of the Riccati matrix. Notice that ODE (24) can be solved in reverse time starting from its terminal condition, so fulfilling the terminal condition for Equation (24) does not present any problem. In the next section, we discuss the recursive method for finding the initial condition of the weights that realizes the terminal requirements. In view of the approximated model in Equation (19), the robust control may finally be represented as

where and are given by Equations (15) and (22), respectively, including as in Equation (24).
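The remark that a Riccati equation with a terminal condition can be solved in reverse time can be illustrated with a scalar analogue. The sketch below assumes a scalar plant dx/dt = a·x + b·u with cost weights q, r and terminal weight g, for which the value function p(t)·x² satisfies −dp/dt = q + 2·a·p − (b²/r)·p² with p(T) = g. These coefficients and the function name are hypothetical; the matrix equation (24) of the text is not reproduced here and may contain additional terms.

```python
def riccati_backward(a, b, q, r, g, T, dt):
    """Integrate -dp/dt = q + 2*a*p - (b*b/r)*p*p from p(T) = g back to t = 0.

    Returns the list [p(0), p(dt), ..., p(T)] obtained with explicit Euler
    steps taken in reverse time.
    """
    steps = int(round(T / dt))
    p = g
    history = [p]
    for _ in range(steps):
        # One Euler step of length dt in the reversed time variable T - t.
        p = p + dt * (q + 2.0 * a * p - (b * b / r) * p * p)
        history.append(p)
    history.reverse()  # reorder so that index k corresponds to t = k*dt
    return history


# Usage: stable scalar plant, zero terminal weight, horizon of 10 time units.
p = riccati_backward(a=-1.0, b=1.0, q=1.0, r=1.0, g=0.0, T=10.0, dt=0.001)
```

For a long horizon, `p[0]` approaches the positive root of the algebraic equation q + 2·a·p − (b²/r)·p² = 0, which equals √2 − 1 for the values above, while `p[-1]` reproduces the terminal condition exactly. No shooting is needed for this equation because its only boundary condition sits at the final time.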

7. Recursive Method to Realize the Terminal Conditions for the Weight Dynamics

The exact dependence of the performance index (4) on the initial weights is difficult to obtain analytically. This constrains the application of traditional recurrent optimization algorithms such as gradient descent [25] or the Levenberg–Marquardt methodology [26]. As an alternative, here we apply the so-called modified deterministic Kiefer–Wolfowitz method [27,28] to the optimization of the performance index J. The following describes this method’s recursive procedure:

In this function, can be selected as a small constant and . From a practical point of view, both parameters and can be selected as small constants.
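A recursion of this family can be sketched as a finite-difference descent on the initial weights. The code below is a minimal sketch under stated assumptions: the step size `gain` and the probing width `probe` are kept constant (hypothetical names), the gradient is estimated with central differences, and the objective in the usage is a toy quadratic standing in for the closed-loop cost. It is not claimed to be the exact recursion of Equation (27).

```python
def kiefer_wolfowitz(J, w0, gain=0.1, probe=1e-3, max_iter=500, tol=1e-8):
    """Minimize J by a deterministic finite-difference recursion.

    Each iteration estimates the gradient of J by central differences of
    half-width `probe` along every coordinate and moves against it with
    step `gain`. Returns the final point and the number of iterations used.
    """
    w = list(w0)
    iterations = 0
    for _ in range(max_iter):
        grad = []
        for i in range(len(w)):
            up = w[:i] + [w[i] + probe] + w[i + 1:]
            down = w[:i] + [w[i] - probe] + w[i + 1:]
            grad.append((J(up) - J(down)) / (2.0 * probe))
        w = [wi - gain * gi for wi, gi in zip(w, grad)]
        iterations += 1
        if max(abs(gi) for gi in grad) < tol:  # early stop on a small gradient estimate
            break
    return w, iterations


# Usage on a toy objective with minimizer (1.0, -0.5).
def toy_cost(w):
    return (w[0] - 1.0) ** 2 + 2.0 * (w[1] + 0.5) ** 2


w_opt, n_iter = kiefer_wolfowitz(toy_cost, [0.0, 0.0])
```

In the setting of this section, each evaluation of `J` would correspond to one full closed-loop simulation started from the candidate initial weights, so the recursion needs only cost values and no analytical gradient.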

The evolution of this algorithm allows the adjustment of the initial weights of the DNN at each step of the recurrent methodology. The combination of this recurrent strategy, running in discrete form, and the continuous evolution of the system controlled with the approximate value of Bellman’s function based on the DNN appears to form a class of hybrid system. Moreover, adjusting the initial weights in each recurrent stage, driving the performance function towards its minimum value, seems to operate as a class of reinforcement learning using the Kiefer–Wolfowitz method.

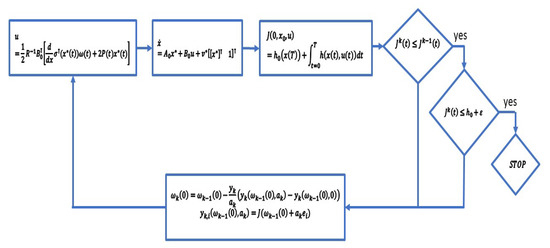

A simple diagram of the hybrid algorithm is shown in Figure 1. This diagram shows the two outer loops that define how the recurrent method works over the continuous development of the proposed min–max optimized controller.

Figure 1.

Diagram of the min–max optimization approach in its hybrid form, which combines the DNN approximation of Bellman’s function used to derive the controller with the recurrent method that enforces the final condition.

8. Numerical Example

The following parameters of the model presented in Equation (1) were considered for numerical evaluation purposes:

The model with uncertain mathematical structure was simulated in Matlab/Simulink using the ode1 (Euler) integration method with the integration step fixed to 0.001 s.
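For readers without access to Simulink, fixed-step Euler integration of this kind can be sketched in a few lines. The function name `euler_simulate` and the scalar test dynamics in the usage are illustrative assumptions; the parameters of the simulated plant are not reproduced here.

```python
def euler_simulate(f, x0, T, dt=0.001):
    """Integrate dx/dt = f(t, x) with fixed-step explicit Euler.

    Returns the list of states at t = 0, dt, 2*dt, ..., T.
    """
    steps = int(round(T / dt))
    x = list(x0)
    trajectory = [list(x)]
    for k in range(steps):
        dx = f(k * dt, x)  # right-hand side evaluated at the current sample
        x = [xi + dt * di for xi, di in zip(x, dx)]
        trajectory.append(list(x))
    return trajectory


# Usage: dx/dt = -x with x(0) = 1, integrated over one time unit.
traj = euler_simulate(lambda t, x: [-x[0]], [1.0], T=1.0)
```

With a step of 0.001, the final state of this test problem is close to exp(−1); the first-order accuracy of the Euler method is the reason such a small step is used.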

The proposed modified deterministic Kiefer–Wolfowitz method was implemented in M-language in Matlab to perform the recurrent scheme described in Equation (27). The number of cycles evaluated for this recurrent algorithm was

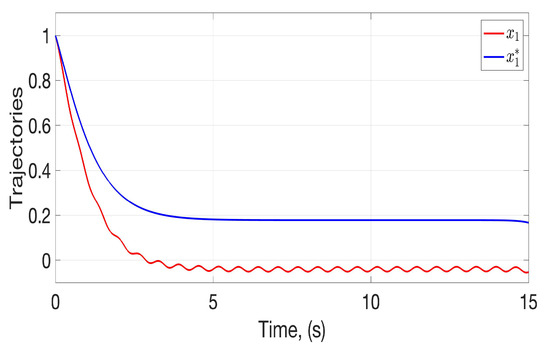

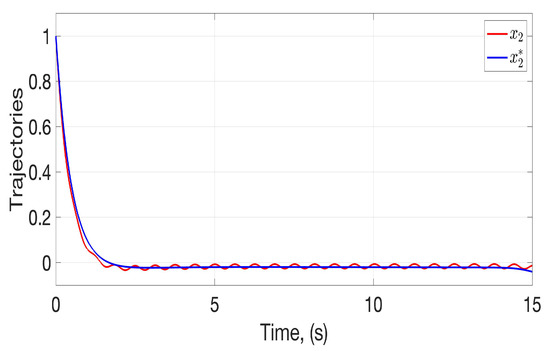

The evolution of the two states appears in Figures 2 and 3. These figures show the controlled states together with the worst trajectories obtained considering the upper bound of the admissible perturbations. Notice that both states move towards the origin. In the case of the worst trajectories, however, there are no steady-state oscillations, in contrast to the controlled states, which are affected by the selected uncertainties and perturbations. Furthermore, the first variable shows a more evident difference between the worst estimated trajectories and the controlled ones. The trajectories shown correspond to those generated with the final weights obtained at the end of the iteration process driven by the Kiefer–Wolfowitz strategy.

Figure 2.

Temporal evolution of the first state considering the initial iteration and the final iteration.

Figure 3.

Temporal evolution of the second state considering the initial iteration and the final iteration.

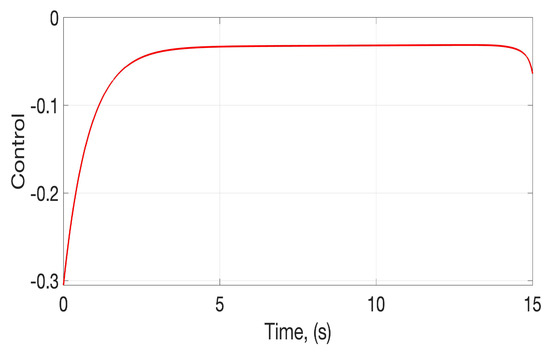

The evolution of the states shown in Figures 2 and 3 is generated by the control action depicted in Figure 4, which is the result obtained once the complete set of iterations of the Kiefer–Wolfowitz algorithm has finished. This controller also responds to the effect of uncertainties and perturbations, which limits the convergence of the control action towards the origin. Nevertheless, the obtained control action corresponds to the outcome reached after the iterative algorithm ends by meeting the early stopping criterion.

Figure 4.

Temporal evolution of the control action evaluated with the application of the approximate model based on the DNN.

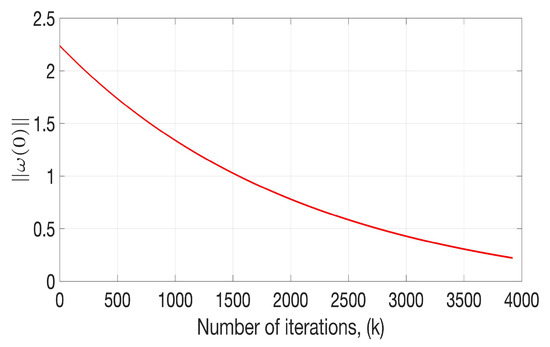

Given the dependence of the functional J on the weights, Figure 5 shows the evolution of the norm of the weights at the initial condition as a function of time. This temporal evaluation confirms the tendency of the weights towards the origin under the application of the Kiefer–Wolfowitz method. Even though the optimization strategy does not enforce that the norm of the initial conditions move to the origin, the continuous dependence of the system trajectories on the control action motivates this behaviour.

Figure 5.

Recurrent evolution of the norm of the weights at the initial moment defined by the Kiefer–Wolfowitz method.

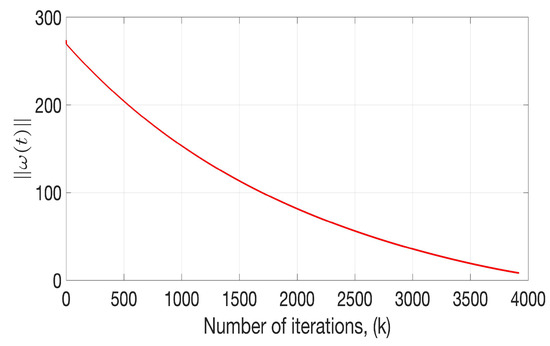

Based on the trajectories produced for the state x and the corresponding control action, the proposed functional J was estimated using Formula (4). For the case considered above for the states and the control, the evolution of the norm of the weights with respect to time is also shown in Figure 6, which confirms the effect of the optimization algorithm on the norm of the weights that also participate in the evolution of the functional. The example presented in this section details how the proposed algorithm can be applied to a given system. This suggests that the algorithm introduced in this study could work for other systems with a dynamical structure similar to the one used in the example.

Figure 6.

Recurrent evolution of the cost function defined by the Kiefer–Wolfowitz method.

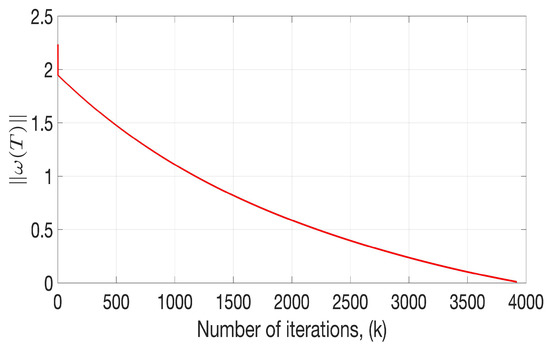

The evaluation of the Kiefer–Wolfowitz method allows for estimating the evolution of the norm of the final weights in the proposed DNN. The evolution of this norm over the discrete iteration steps is shown in Figure 7. This evolution is shown along the entire set of iterations before the weight norm becomes smaller than 0.01, which is used as an early stopping criterion. As expected, the norm of the initial weights has a monotonically decreasing tendency to reach a final value of . Because the iterative algorithm is based on the evolution of the functional J at the final time T, the temporal evolution of this function over the iterations of the Kiefer–Wolfowitz method confirms the successful application of the recurrent optimization methodology.

Figure 7.

Recurrent evolution of the norm of the weights at the time moment T defined by the Kiefer–Wolfowitz method.

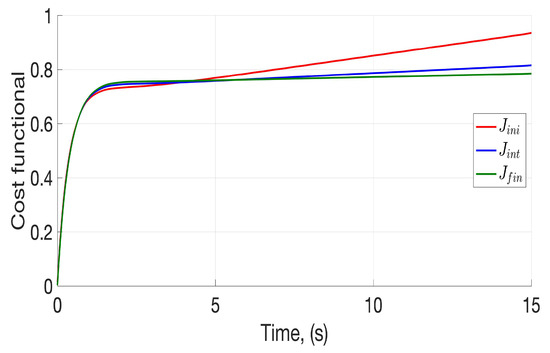

The application of the recurrent Kiefer–Wolfowitz method also implied significant effects on the temporal evolution of the cost function over the continuous time within each step (Figure 8).

Figure 8.

Temporal behaviour of the cost function depending on the sequence of stages evaluated over the sequence of iterations.

The lines shown in Figure 8 represent the evolution of the performance function J evaluated with the weights proposed at the beginning of the iterative simulation (red line), one generated when the iterative process was evaluated with (the middle of the iterative sequence, blue line) and one corresponding to the case when the iterative process was evaluated with corresponding to the end of the process (green line). This figure confirms the iterative process leads to reducing the final value of the performance function, showing a non-increasing behaviour with respect to the number of iterative cycles. These characteristics confirm the sub-optimal construction of the proposed controller.

The variation of the cost function starts at the first round of the recurrent sequence with a non-convergent behaviour after 15 s. When the recurrent algorithm reaches its 1900th step (in the middle of the detected maximum number of steps), the cost function does not grow as fast as in the first step. When the recurrent algorithm reaches the last step, the cost function converges to an almost constant value of 0.8, which indirectly confirms the state convergence to the origin. Moreover, this final value of the cost function within each stage of the recurrent algorithm confirms the result shown previously. Notice that this asymptotic behaviour of the performance function at the last step of the iteration algorithm is forced by the robustness of the developed controller to the class of admissible perturbations and modelling uncertainties.

9. Conclusions

The following are the main conclusions of this study:

This study provides a robust optimal controller for a class of perturbed non-linear systems using an approximate model of Bellman’s function based on a DNN formulation. The approximation of the min–max value function for the HJB equation allows the development of the robust optimal control for non-linear systems affected by non-measurable uncertainties and perturbations. The analytical representation of the system trajectories under the worst admissible uncertainties and perturbations is obtained. The analysis of the HJB equation using these trajectories and the approximated value function leads to the learning laws for the DNN weights. The numerical procedure for adjusting the initial value of the weight dynamics is developed based on the modified deterministic version of the Kiefer–Wolfowitz method. The evaluated numerical example confirms the workability of the proposed robust optimal control for a wide class of non-linear systems with an uncertain mathematical model.

Author Contributions

Conceptualization, A.P. and I.C.; methodology, A.H.-S. and M.B.-E.; software, S.N.-M.; validation, A.P., M.B.-E. and I.C.; formal analysis, A.P.; investigation, M.B.-E.; resources, I.C.; data curation, A.H.-S.; writing—original draft preparation, S.N.-M.; writing—review and editing, I.C.; visualization, S.N.-M. and A.H.-S.; supervision, A.P.; project administration, I.C.; funding acquisition, I.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Tecnologico de Monterrey and the Institute of Advanced Materials for Sustainable Manufacturing under the grant Challenge-Based Research Funding Program 2022, number I006-IAMSM004-C4-T2-T.

Data Availability Statement

Data will be made available upon reasonable request to the corresponding author.

Acknowledgments

The author acknowledges the scholarship provided by the Consejo Nacional de Ciencia y Tecnologia of the Mexican government.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| DNN | Differential Neural Networks |
| HJB | Hamilton–Jacobi–Bellman Equation |
| NN | Neural Network |

References

1. Onori, S.; Serrao, L.; Rizzoni, G. Pontryagin’s minimum principle. In Hybrid Electric Vehicles; Springer: Berlin/Heidelberg, Germany, 2016; pp. 51–63.

2. Cannon, M.; Liao, W.; Kouvaritakis, B. Efficient MPC optimization using Pontryagin’s minimum principle. Int. J. Robust Nonlinear Control: IFAC-Affil. J. 2008, 18, 831–844.

3. Kirk, D.E. Optimal control theory: An introduction; Courier Corporation: Englewood Cliffs, NJ, USA, 2004.

4. Gadewadikar, J.; Lewis, F.L.; Xie, L.; Kucera, V.; Abu-Khalaf, M. Parameterization of all stabilizing H∞ static state-feedback gains: Application to output-feedback design. Automatica 2007, 43, 1597–1604.

5. Boltyanski, V.G.; Poznyak, A.S. The Robust Maximum Principle: Theory and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2011.

6. Azhmyakov, V.; Boltyanski, V.; Poznyak, A. The dynamic programming approach to multi-model robust optimization. Nonlinear Anal. Theory Methods Appl. 2010, 72, 1110–1119.

7. Ballesteros, M.; Chairez, I.; Poznyak, A. Robust min–max optimal control design for systems with uncertain models: A neural dynamic programming approach. Neural Netw. 2020, 125, 153–164.

8. Munos, R. A study of reinforcement learning in the continuous case by the means of viscosity solutions. Mach. Learn. 2000, 40, 265–299.

9. Swiech, A. Viscosity Solutions to HJB Equations for Boundary-Noise and Boundary-Control Problems. SIAM J. Control Optim. 2020, 58, 303–326.

10. Vrabie, D.; Lewis, F. Adaptive dynamic programming for online solution of a zero-sum differential game. J. Control Theory Appl. 2011, 9, 353–360.

11. Zhao, D.; Liu, D.; Lewis, F.L.; Principe, J.C.; Squartini, S. Special issue on deep reinforcement learning and adaptive dynamic programming. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2038–2041.

12. Liu, D.; Wei, Q.; Wang, D.; Yang, X.; Li, H. Adaptive Dynamic Programming with Applications in Optimal Control; Springer: Berlin/Heidelberg, Germany, 2017.

13. Bertsekas, D.; Tsitsiklis, J.N. Neuro-Dynamic Programming; Athena Scientific: Belmont, MA, USA, 1996.

14. Poznyak, A.S.; Sanchez, E.N.; Yu, W. Differential Neural Networks for Robust Nonlinear Control: Identification, State Estimation and Trajectory Tracking; World Scientific: Singapore, 2001.

15. Bertsekas, D.P.; Ioffe, S. Temporal Differences-Based Policy Iteration and Applications in Neuro-Dynamic Programming; Laboratory for Information and Decision Systems Report LIDS-P-2349; MIT: Cambridge, MA, USA, 1996; Volume 14.

16. Han, Y.; Huang, G.; Song, S.; Yang, L.; Wang, H.; Wang, Y. Dynamic neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7436–7456.

17. Ballesteros, M.; Chairez, I.; Poznyak, A. Robust optimal feedback control design for uncertain systems based on artificial neural network approximation of the Bellman’s value function. Neurocomputing 2020, 413, 134–144.

18. Peng, S. A generalized Hamilton–Jacobi–Bellman equation. In Proceedings of the Control Theory of Distributed Parameter Systems and Applications: Proceedings of the IFIP WG 7.2 Working Conference, Shanghai, China, 6–9 May 1990; pp. 126–134.

19. Kundu, S.; Kunisch, K. Policy iteration for Hamilton–Jacobi–Bellman equations with control constraints. Comput. Optim. Appl. 2021, 78, 1–25.

20. Poznyak, A. Advanced Mathematical Tools for Control Engineers: Volume 1: Deterministic Systems; Elsevier: Amsterdam, The Netherlands, 2010; Volume 1.

21. Murray, J.J.; Cox, C.J.; Lendaris, G.G.; Saeks, R. Adaptive dynamic programming. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2002, 32, 140–153.

22. Wang, F.Y.; Zhang, H.; Liu, D. Adaptive dynamic programming: An introduction. IEEE Comput. Intell. Mag. 2009, 4, 39–47.

23. Lewis, F.L.; Liu, D. Reinforcement Learning and Approximate Dynamic Programming for Feedback Control; John Wiley & Sons: Hoboken, NJ, USA, 2013.

24. Chairez, I. Wavelet differential neural network observer. IEEE Trans. Neural Netw. 2009, 20, 1439–1449.

25. Haji, S.H.; Abdulazeez, A.M. Comparison of optimization techniques based on gradient descent algorithm: A review. PalArch’s J. Archaeol. Egypt/Egyptology 2021, 18, 2715–2743.

26. Yu, H.; Wilamowski, B.M. Levenberg–Marquardt training. In Intelligent Systems; CRC Press: Boca Raton, FL, USA, 2018; pp. 12–31.

27. Kiefer, J.; Wolfowitz, J. Stochastic estimation of the maximum of a regression function. Ann. Math. Stat. 1952, 23, 462–466.

28. Larson, J.; Menickelly, M.; Wild, S.M. Derivative-free optimization methods. Acta Numer. 2019, 28, 287–404.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).