Abstract

Physics-informed neural networks (PINNs) have been widely adopted to solve partial differential equations (PDEs), which can be used to simulate physical systems. However, the accuracy of PINNs does not yet meet the needs of industry and degrades severely, especially when the PDE solution has sharp transitions. In this paper, we propose a ResNet block-enhanced network architecture to better capture such transitions. Meanwhile, a constrained self-adaptive PINN (cSPINN) scheme is developed to shift the PINN's training objective toward the regions of the physical domain that are difficult to learn. To demonstrate the performance of our method, we present numerical experiments on the Allen–Cahn equation, the Burgers equation, and the Helmholtz equation. We also solve the Poisson equation with cSPINNs on different geometries to illustrate their strong geometric adaptivity. Finally, we report the performance of cSPINNs on a high-dimensional Poisson equation to further demonstrate the capability of our method.

Keywords:

physics-informed neural networks; constrained self-adaptive; bounded weights; ResNet block-enhanced network

MSC: 65M99

1. Introduction

Deep learning has achieved breakthroughs in many scientific fields and has impacted data analysis, decision making, and pattern recognition. Recently, deep learning methods have also been applied to solve partial differential equations (PDEs), most prominently through physics-informed neural networks (PINNs) [1,2]. The main idea is to represent the solution of a PDE with a neural network and to train the network by minimizing a physics-informed loss whose derivative terms are computed by automatic differentiation (AD). In the last few years, PINNs have been employed to solve PDEs from many fields, including mechanical engineering, geophysics [3], vascular fluid dynamics [4,5], and biomedicine [6].

To further enhance the accuracy and efficiency of PINNs, a series of extensions to the original formulation of Raissi et al. [1] have been proposed [7,8,9,10,11]. For example, on the sampling side, re-sampling methods adaptively change the distribution of residual points during training [7,8], which helps to improve the accuracy of PINNs on stiff PDEs. The standard loss function in PINNs is the mean squared error (MSE), which is not always suitable for training when solving PDEs [12,13]. In [9], a method for balancing the different loss terms was proposed to mitigate gradient pathologies. Causal PINNs [10] were proposed for time-dependent PDEs; they adaptively adjust the loss weights along the temporal domain. Meanwhile, regularization on the derivatives of the PDE residual was also shown to improve accuracy over the original PINNs [14]. In addition, the architecture of a PINN greatly influences its final predictions [9,11,15,16], and some works [15,17] have focused on embedding methods, which enrich the input features of PINNs and can even be used to enforce boundary conditions in a soft or hard manner [18].

PINNs with fully connected neural networks are widely used to solve PDEs, because the derivatives appearing in the PDE can be computed directly by AD. Other architectures have also been used, e.g., CNN [19] and UNet [20] architectures; however, these approaches compute the derivatives in the PDE with finite differences. Bayesian neural networks (BNNs) [21] and generative adversarial networks (GANs) [22] have also been applied to PDE problems. Despite these developments, fully connected feed-forward neural networks remain the most widely used architecture in PINNs, and their hyper-parameters, such as depth, width, and the connectivity between hidden layers, greatly influence the final results. In [16], adjusting the width and depth of fully connected neural networks (FCNNs) led to different accuracies of PINNs. In [11], ResNet blocks were used to enhance the connections between hidden layers and performed better than FCNNs in identifying the parameters of the Navier–Stokes equations. In [9], a modified network was proposed that projects the input variables into a high-dimensional feature space and fuses them in a manner similar to neural attention mechanisms. All these studies show that the choice of architecture is essential to the predictive accuracy of PINNs.

In this paper, we propose a constrained self-adaptive weighting scheme for PINNs. Its main idea is to attach a bounded trainable weight to each residual point in the residual loss function and to update these pointwise weights adaptively during training. Because the architecture also influences the predictions and is essential for improving the accuracy of PINNs, we additionally propose an enhanced network architecture. Our contributions are summarized below:

- We develop a constrained self-adaptive physics-informed neural network (cSPINN), which achieves better accuracy in our numerical experiments. Moreover, because the residual weights are bounded, they evolve more steadily during training.

- To better capture solutions with sharp transitions in the physical domain, we develop a ResNet block-enhanced modified MLP architecture, whose identity mappings also help mitigate the vanishing gradient problem, even in deep architectures.

2. Related Works

In this section, we first introduce the model problem and then briefly review physics-informed neural networks (PINNs) for solving forward PDE problems.

2.1. Model Problem

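We consider a spatial domain $\Omega \subset \mathbb{R}^d$, a temporal domain $[0, T]$, and a partial differential equation (PDE) of the general form

$$
\begin{aligned}
&\mathcal{N}_t[u](\mathbf{x}, t) + \mathcal{N}_{\mathbf{x}}[u](\mathbf{x}, t) = 0, && \mathbf{x} \in \Omega,\ t \in [0, T],\\
&u(\mathbf{x}, 0) = h(\mathbf{x}), && \mathbf{x} \in \Omega,\\
&\mathcal{B}[u](\mathbf{x}, t) = g(\mathbf{x}, t), && \mathbf{x} \in \partial\Omega,\ t \in [0, T],
\end{aligned}
$$

where $\mathcal{N}_t$ and $\mathcal{N}_{\mathbf{x}}$ are general differential operators, which may include any combination of linear and nonlinear terms of temporal and spatial derivatives. The second line is the initial condition at $t = 0$. In the third line, $\mathcal{B}$ is a boundary operator, which may represent Dirichlet, Neumann, Robin, or periodic boundary conditions, and enforces the condition on the boundary $\partial\Omega$.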

2.2. PINNs Formulation

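To solve the PDE via PINNs [1], we construct a neural network $u_\theta(\mathbf{x}, t)$ with trainable parameters $\theta$, which takes the spatial and temporal coordinates as inputs and approximates the solution $u(\mathbf{x}, t)$. A physics-informed model is then trained by minimizing the loss function

$$
\mathcal{L}(\theta) = \lambda_r \mathcal{L}_r(\theta) + \lambda_{ic} \mathcal{L}_{ic}(\theta) + \lambda_{bc} \mathcal{L}_{bc}(\theta),
$$

where

$$
\begin{aligned}
\mathcal{L}_r(\theta) &= \frac{1}{N_r} \sum_{i=1}^{N_r} \left| \mathcal{N}_t[u_\theta](\mathbf{x}_r^i, t_r^i) + \mathcal{N}_{\mathbf{x}}[u_\theta](\mathbf{x}_r^i, t_r^i) \right|^2,\\
\mathcal{L}_{ic}(\theta) &= \frac{1}{N_{ic}} \sum_{i=1}^{N_{ic}} \left| u_\theta(\mathbf{x}_{ic}^i, 0) - h(\mathbf{x}_{ic}^i) \right|^2,\\
\mathcal{L}_{bc}(\theta) &= \frac{1}{N_{bc}} \sum_{i=1}^{N_{bc}} \left| \mathcal{B}[u_\theta](\mathbf{x}_{bc}^i, t_{bc}^i) - g(\mathbf{x}_{bc}^i, t_{bc}^i) \right|^2.
\end{aligned}
$$

Here, $\mathcal{L}_r$, $\mathcal{L}_{ic}$, and $\mathcal{L}_{bc}$ are the losses due to the PDE residual in the physical domain, the initial condition, and the boundary condition, respectively, and $u_\theta$ denotes the output of the neural network parameterized by $\theta$. The weights $\lambda_r$, $\lambda_{ic}$, and $\lambda_{bc}$ influence the convergence rate of the different loss components and the final accuracy of PINNs [9,12], so an appropriate weighting strategy is important during training. Finally, $\{(\mathbf{x}_r^i, t_r^i)\}_{i=1}^{N_r}$, $\{\mathbf{x}_{ic}^i\}_{i=1}^{N_{ic}}$, and $\{(\mathbf{x}_{bc}^i, t_{bc}^i)\}_{i=1}^{N_{bc}}$ are the training points inside the domain, on the initial slice, and on the boundary, respectively.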
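To make the composite loss concrete, here is a minimal NumPy sketch that combines precomputed pointwise errors into the weighted PINN loss. It assumes the residuals at the interior points and the mismatches at the initial and boundary points have already been evaluated (in practice via AD on the network); the function name `pinn_loss` and its arguments are hypothetical.

```python
import numpy as np

def pinn_loss(res, ic_err, bc_err, lam_r=1.0, lam_ic=1.0, lam_bc=1.0):
    """Weighted PINN loss built from pointwise errors (hypothetical helper).

    res    : PDE residuals at the N_r interior points
    ic_err : u_theta(x, 0) - h(x) at the N_ic initial points
    bc_err : B[u_theta] - g at the N_bc boundary points
    """
    l_r = np.mean(np.square(res))      # L_r: mean squared PDE residual
    l_ic = np.mean(np.square(ic_err))  # L_ic: mean squared initial-condition error
    l_bc = np.mean(np.square(bc_err))  # L_bc: mean squared boundary-condition error
    return lam_r * l_r + lam_ic * l_ic + lam_bc * l_bc

loss = pinn_loss(np.array([1.0, -1.0]), np.array([0.0]), np.array([2.0]), lam_bc=0.5)
```

With these toy inputs the three terms are 1, 0, and 0.5 · 4, so `loss` is 3.0.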

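In this paper, we use the relative $L^2$ error between the PINN prediction and the reference solution,

$$
\epsilon = \frac{\sqrt{\sum_{i=1}^{N} \left| \hat{u}(\mathbf{x}^i, t^i) - u(\mathbf{x}^i, t^i) \right|^2}}{\sqrt{\sum_{i=1}^{N} \left| u(\mathbf{x}^i, t^i) \right|^2}},
$$

where $u$ is the reference solution and $\hat{u}$ is the neural network prediction on a set of $N$ testing points $\{(\mathbf{x}^i, t^i)\}_{i=1}^{N}$.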
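The relative error can be computed as in the following minimal sketch, in which `relative_l2_error` is a hypothetical helper and both arrays hold values at the same testing points.

```python
import numpy as np

def relative_l2_error(u_pred, u_ref):
    """Relative L2 error ||u_pred - u_ref||_2 / ||u_ref||_2 over the testing points."""
    u_pred = np.asarray(u_pred, dtype=float).ravel()
    u_ref = np.asarray(u_ref, dtype=float).ravel()
    return np.linalg.norm(u_pred - u_ref) / np.linalg.norm(u_ref)

err = relative_l2_error([3.0, 4.5], [3.0, 4.0])  # 0.5 / 5 = 0.1
```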

3. Formulation of Constrained Self-Adaptive PINNs (cSPINNs)

In this section, we first present the constrained self-adaptive weighting scheme for PINNs, which adaptively adjusts the weights of the residual points during training. Next, we propose a modified network architecture enhanced by ResNet blocks to further improve the performance of cSPINNs.

3.1. Constrained Self-Adaptive Weighting Scheme

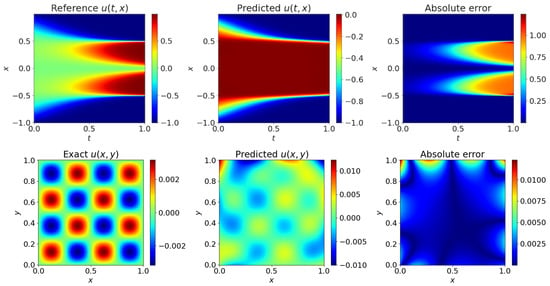

In PINNs, the residual loss $\mathcal{L}_r$ enforces the governing equation at the sample points inside the domain. However, this formulation attaches the same weight to every residual point, so PINNs cannot focus on the regions that are difficult to learn during training (as shown in Figure 1). One effective remedy is to attach an individual adjustable weight to each residual point according to the distribution of the residual over the physical domain, so that interior points with relatively large residuals automatically receive larger weights. This self-adaptive adjustment can be formulated as a min–max optimization problem:

$$
\min_{\theta} \max_{\boldsymbol{\lambda}} \ \mathcal{L}(\theta, \boldsymbol{\lambda}) \quad \text{s.t.} \quad \sum_{i=1}^{N_r} \lambda_i = C, \quad \lambda_i \ge 0,
$$

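where $\boldsymbol{\lambda} = (\lambda_1, \dots, \lambda_{N_r})$ are the trainable adaptive weights of the corresponding residual points, and $C$ is a constant that constrains the range of the weights. Here, we set $C$ according to the expectation of the weights in the original PINNs. The loss function is formulated as

$$
\mathcal{L}(\theta, \boldsymbol{\lambda}) = \mathcal{L}_r^{\boldsymbol{\lambda}}(\theta, \boldsymbol{\lambda}) + \lambda_{ic} \mathcal{L}_{ic}(\theta) + \lambda_{bc} \mathcal{L}_{bc}(\theta),
$$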

where $\mathcal{L}_r^{\boldsymbol{\lambda}}$ is the weighted residual loss, in which the squared residual at the $i$th point is multiplied by $\lambda_i$, and the other terms are the same as in the original formulation. The inner maximization of this min–max problem is easy to solve: attach the weight $C$ to the residual point with the largest residual loss and set the weights of all other points to zero.
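The degenerate inner maximization can be checked directly. The sketch below assumes the weighted residual loss is the sum of the pointwise weights times the squared residuals, with nonnegative weights summing to C (our reading of the constraint); `inner_max_weights` is a hypothetical name. Because the objective is linear in the weights, its maximum over this set sits at a vertex, so all of C goes to the single worst point.

```python
import numpy as np

def inner_max_weights(residuals, C):
    """Maximizer of sum_i lam_i * r_i**2 subject to lam_i >= 0 and sum_i lam_i = C.

    The objective is linear in lam, so the optimum puts all mass C on the point
    with the largest squared residual and zero weight everywhere else.
    """
    r2 = np.square(np.asarray(residuals, dtype=float))
    lam = np.zeros_like(r2)
    lam[np.argmax(r2)] = C
    return lam

lam = inner_max_weights([0.1, -2.0, 0.5], C=1.0)  # only the second point is weighted
```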

Figure 1.

PINN results for the Allen–Cahn equation (first row) and the 2D Poisson equation (second row).

However, with such a strategy, PINNs would optimize only a single point in each training iteration, which defeats the purpose of balancing the different residual points. In [12], McClenny et al. proposed a self-adaptive method that approximately solves the inner maximization with a single gradient-ascent step per iteration. In this way, appropriate weights can be attached to the different residual points during training. They updated the weights $\boldsymbol{\lambda}$ during training as

$$
\boldsymbol{\lambda}^{k+1} = \boldsymbol{\lambda}^{k} + \eta_k \nabla_{\boldsymbol{\lambda}} \mathcal{L}(\theta^{k}, \boldsymbol{\lambda}^{k}),
$$

where $\eta_k$ is the learning rate at iteration $k$. However, the weight vector $\boldsymbol{\lambda}$ is unbounded during training, and the rapidly changing weights can make the training of PINNs unstable. We therefore modified the update rule of $\boldsymbol{\lambda}$ as follows:

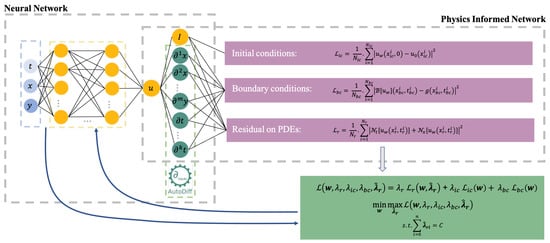

where $\lambda_i$ denotes the weight of the $i$th residual point, the superscripts denote training iterations, and the unnormalized weights serve as an intermediate variable before normalization. In other words, we first normalize this intermediate variable and then obtain the final weights as a weighted sum of the weights from the previous iteration $k$ and the normalized weights of the current iteration. The framework of cSPINN is shown in Figure 2.
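To contrast the two update rules, the following sketch runs both on fixed toy residuals. The exact cSPINN formula is not reproduced in this text, so `cs_step` is a hypothetical reading of the description above: a gradient-ascent step, rescaling so the weights sum to C, then blending with the previous weights through an assumed mixing factor `beta`. The weighted residual loss is again assumed to be the sum of weights times squared residuals, and all names are illustrative.

```python
import numpy as np

def sa_step(lam, residuals, lr):
    """SA-PINN style step: plain gradient ascent on the weights.

    For sum_i lam_i * r_i**2 the gradient w.r.t. lam_i is r_i**2 >= 0,
    so the weights never decrease and grow without bound.
    """
    return lam + lr * np.square(residuals)

def cs_step(lam, residuals, lr, C, beta=0.9):
    """Hypothetical constrained step (our reading of the cSPINN description).

    1) gradient ascent gives intermediate, unnormalized weights lam_hat
    2) lam_hat is rescaled so that its entries sum to C
    3) the result is blended with the previous weights using beta (assumed)
    If sum(lam) == C on entry, the output also sums to C, so each lam_i <= C.
    """
    lam_hat = lam + lr * np.square(residuals)
    lam_norm = C * lam_hat / np.sum(lam_hat)
    return beta * lam + (1.0 - beta) * lam_norm

# Fixed toy residuals at three points; in a real PINN they change every step.
r = np.array([0.1, 1.0, 3.0])
C = 1.0
lam_sa = np.full(r.shape, C / r.size)  # both schemes start from uniform weights
lam_cs = np.full(r.shape, C / r.size)
for k in range(100):
    lam_sa = sa_step(lam_sa, r, lr=0.1)
    lam_cs = cs_step(lam_cs, r, lr=0.1, C=C)

print(lam_sa)        # keeps growing with the number of steps
print(lam_cs.sum())  # stays at C (up to rounding)
```

With these toy numbers the SA weights grow linearly with the iteration count, while the constrained weights stay on the set of nonnegative weights summing to C and still rank the points by residual size.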

Figure 2.

Framework of constrained self-adaptive physics-informed neural networks. A neural network outputs the solution u of the PDE; the derivatives are then computed by automatic differentiation to obtain the losses of the initial conditions, boundary conditions, and governing equation. The parameters of the neural network and the constrained self-adaptive weights of the residual points are updated simultaneously by a gradient method.

3.2. ResNet Block-Enhanced Modified Network

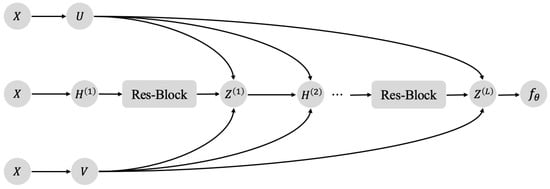

Continuous improvement of network structure design is one of the drivers of the development of deep learning methods and their applications. For example, convolutional neural networks [23,24,25] were designed for, and have been widely used in, computer vision tasks such as image classification, image segmentation, and object recognition. Similarly, recurrent neural networks and their variants [26,27,28] perform well in natural language processing and sequence modeling because they can capture long-term dependencies in sequences. In [9], a modified MLP was proposed to capture the solutions of complex PDEs, and it has since been widely used. PINNs with ResNet blocks have also been used to improve the representational capacity of the network when solving PDEs [11]. Inspired by these ideas, we propose a ResNet block-enhanced modified MLP to better represent the solutions of PDEs. The ResNet block-enhanced modified network is defined as follows:

where σ is an activation function, ⊙ denotes element-wise multiplication, and f is the final output of the network. The update of the hidden features follows residual learning, which was first proposed in [23] and has achieved great success, i.e.,

$$
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}.
$$

More specifically, $\mathbf{x}$ and $\mathbf{y}$ are the input and output of the ResNet block, respectively. $\mathcal{F}$ is an operation consisting of a fully connected layer and an activation function, defined here as $\mathcal{F}(X) = \sigma(XW + b)$ with input $X$, hidden-layer parameters $W$ and $b$, and activation function $\sigma$. The element-wise addition $\mathcal{F} + \mathbf{x}$ is implemented by a shortcut connection. The effectiveness of such a block in PINNs was demonstrated in [11], and here we use it as a feature-enhancing sub-structure of our network. The features are fused by the shortcut connection and then used to update the hidden layers through element-wise multiplication with U and V, as shown in Figure 3. Compared with plain fully connected neural networks, our architecture enhances the representational ability of the hidden layers through ResNet blocks, which makes it easier for the network to learn the desired solution. Meanwhile, embedding the inputs from a low-dimensional space into a higher-dimensional feature space can also be incorporated here, with the resulting features fused by an attention-like mechanism during the forward pass.
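A minimal NumPy sketch of the ResNet block described above, y = F(X) + X with F(X) = σ(XW + b) and σ = tanh. The helper names `dense` and `resnet_block` are hypothetical; note that the shortcut only works when the block maps a width-w feature vector back to width w, i.e. W is square.

```python
import numpy as np

def dense(X, W, b, act=np.tanh):
    """F(X) = act(X @ W + b): one fully connected layer followed by an activation."""
    return act(X @ W + b)

def resnet_block(X, W, b, act=np.tanh):
    """y = F(X) + X. The '+ X' identity shortcut requires W to be square (width -> width)."""
    return dense(X, W, b, act) + X

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 64))              # a batch of 5 hidden feature vectors
W = rng.normal(0.0, 0.1, size=(64, 64))
b = np.zeros(64)
Y = resnet_block(X, W, b)                 # same shape as X: (5, 64)
```

Because tanh(0) = 0, a block with all-zero parameters reduces to the identity map, which is what lets gradients flow through deep stacks of such blocks.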

Figure 3.

The ResNet block-enhanced modified architecture for physics-informed neural networks.

4. Numerical Experiments

We demonstrate the performance of the proposed cSPINN on several PDE problems. In all examples, unless stated otherwise, we use the ResNet block-enhanced modified network with the tanh activation function σ. The network has 2 input neurons and consists of 4 ResNet blocks, each with a width of 64. The output layer contains a single neuron for the solution of the PDE.
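The configuration above can be sketched as a forward pass. This is a hypothetical reconstruction, not the authors' code: it follows the modified MLP of [9] (encoders U and V, and hidden updates H = (1 − Z) ⊙ U + Z ⊙ V) and assumes that the gate Z is produced by the ResNet block Z = F(H) + H. The Glorot-style initialization and all function names are assumptions.

```python
import numpy as np

def init_params(rng, d_in=2, width=64, n_blocks=4, d_out=1):
    """Glorot-normal weights and zero biases (initialization scheme is an assumption)."""
    def layer(m, n):
        return rng.normal(0.0, np.sqrt(2.0 / (m + n)), size=(m, n)), np.zeros(n)
    return {
        "U": layer(d_in, width),    # encoder U
        "V": layer(d_in, width),    # encoder V
        "H0": layer(d_in, width),   # first hidden layer
        "blocks": [layer(width, width) for _ in range(n_blocks)],
        "out": layer(width, d_out), # linear output layer
    }

def resmnet_forward(params, X, act=np.tanh):
    """Hypothetical ResNet block-enhanced modified MLP forward pass."""
    U = act(X @ params["U"][0] + params["U"][1])
    V = act(X @ params["V"][0] + params["V"][1])
    H = act(X @ params["H0"][0] + params["H0"][1])
    for W, b in params["blocks"]:
        Z = act(H @ W + b) + H          # ResNet block: F(H) + H
        H = (1.0 - Z) * U + Z * V       # element-wise gating with U and V
    W, b = params["out"]
    return H @ W + b                    # network output f

rng = np.random.default_rng(0)
params = init_params(rng)                # 2 inputs, 4 blocks of width 64, 1 output
X = rng.uniform(-1.0, 1.0, size=(8, 2))  # e.g. 8 (x, t) collocation points
out = resmnet_forward(params, X)
print(out.shape)                         # (8, 1)
```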

4.1. 1D Allen–Cahn Equation

The Allen–Cahn equation is a stiff PDE whose solution has sharp transitions in both space and time. We write the 1D Allen–Cahn equation as follows:

We use the same physical parameters of the Allen–Cahn equation as in [7] to allow a direct comparison of the results. For this problem, we use the ResNet block-enhanced modified network described above to better fit the sharp transitions. To implement cSPINNs for the Allen–Cahn equation, we use the following loss terms:

- The constrained self-adaptive loss for the residual of the governing equation

- Mean squared loss on the initial condition

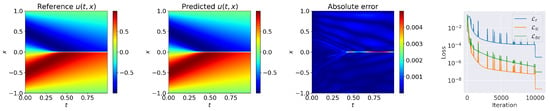

- Mean squared loss on the boundary condition

where $u_\theta$ is the prediction of the neural network. We sampled $N_r$ = 25,600 residual points, together with boundary points and points on the initial condition. We trained the network with the Adam optimizer for 10,000 epochs followed by the L-BFGS optimizer for 1000 epochs. During training, the boundary weight $\lambda_{bc}$ and the initial weight $\lambda_{ic}$ were set to fixed values, which helps expedite convergence. Figure 4 shows the numerical results of constrained self-adaptive PINNs (cSPINNs) compared with the reference solution obtained through the Chebfun method [29], together with the training loss history. The relative error was 1.472 × 10, which is better than that of the time-adaptive approach in [7] and the original PINNs [1].

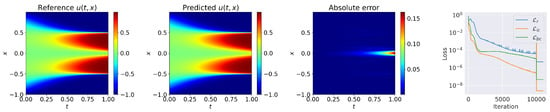

Figure 4. cSPINN results (with the ResNet block-enhanced modified neural network) for the 1D Allen–Cahn equation.

4.2. 1D Viscous Burgers’ Equation

The viscous Burgers equation is widely used in various areas of applied mathematics, such as fluid mechanics, traffic flow, and gas dynamics. The 1D viscous Burgers equation can be written as follows:

To allow a direct comparison of the results, we use the same physical parameters of the Burgers equation as in [1]. To implement the cSPINN scheme for the Burgers equation, we use the following loss terms, with the residual loss modified as in the cSPINN formulation:

- The constrained self-adaptive loss for the residual of the governing equation

- Mean squared loss on the initial condition

- Mean squared loss on the boundary conditions

Here, we trained the network with the constrained self-adaptive scheme, and $u_\theta$ is the prediction of the neural network. In this case, we sampled $N_r$ = 25,600 residual points, together with boundary points and points on the initial condition. The weights of the initial and boundary conditions, $\lambda_{ic}$ and $\lambda_{bc}$, were set to fixed values. Training was performed using 10,000 Adam iterations and 1000 L-BFGS epochs. The predicted solution of cSPINNs and the loss history are shown in Figure 5. Despite the sharp transition in the center of the domain, the cSPINN solution remains accurate over the whole domain, yielding a relative error of 4.796 × 10.

Figure 5. cSPINNs results (with ResNet block-enhanced modified neural network) for solving 1D viscous Burgers equation.

4.3. 2D Helmholtz Equation

The Helmholtz equation is widely used to describe wave propagation and can be formulated mathematically as follows:

where the forcing term is chosen such that the problem admits a closed-form analytical solution:

This exact solution is the same as in [9], which allows a direct comparison of the results. The following loss terms are used:

- The constrained self-adaptive loss for the residual of the governing equation

- Mean squared loss on the boundary conditions

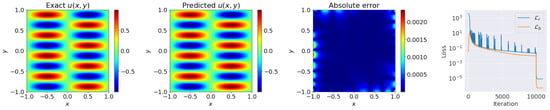

Here, the parameters of the problem were set as in [9] to allow a direct comparison with the reported results. In this case, the ResNet block-enhanced modified network was trained with 10,000 Adam epochs and 1000 L-BFGS epochs. For training, we sampled $N_r$ = 10,000 residual points and $N_{bc}$ boundary points (100 points per boundary). The predictions of cSPINNs are shown in Figure 6. We achieved a relative error of 1.626 × 10, which is better than that of the learning-rate annealing weighting scheme proposed in [9] and the self-adaptive PINNs proposed in [12]. Moreover, our method required less computational cost, owing to the stability of the bounded self-adaptive weights.

Figure 6.

cSPINNs results (with ResNet block-enhanced modified neural network) for solving the 2D Helmholtz equation with an exact solution.

4.4. 2D Poisson Equation on Different Geometries

Poisson's equation is an elliptic partial differential equation widely used to describe potential fields. The 2D Poisson problem can be written as follows:

To further demonstrate the performance of cSPINNs, we used a periodic exact solution and derived the source term and the boundary data directly from it:

The loss terms are then:

- The constrained self-adaptive loss for the residual of the governing equation:

- Mean squared loss on the boundary conditions (taking the rectangular domain as an example):

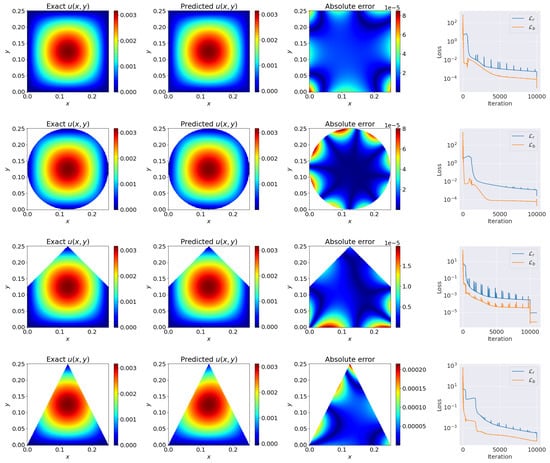

In this case, we first tested cSPINNs on a rectangular domain. We sampled $N_r$ = 10,000 residual points in the interior and $N_{bc}$ = 1000 points on the boundary. The ResNet block-enhanced modified network was trained with 10,000 Adam epochs and 1000 L-BFGS epochs. We also tested other geometries, including circular, triangular, and pentagonal domains, to demonstrate the advantages of cSPINNs. The errors between the predicted and reference solutions on the different geometries are listed in Table 1. Note that we magnified the loss by a constant factor c = 10,000 because the true solution is small (its maximum value is about 0.003); this keeps the gradients at a normal magnitude during backpropagation. For the irregular domains, we sampled the same number of points as for the rectangular domain. cSPINNs achieved good performance on this problem, as shown in Figure 7, whereas the original PINNs failed, as shown in Figure 1.
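The text does not say how points were drawn on the irregular domains. One simple option, shown here as an assumption, is rejection sampling: draw uniform points in a bounding box and keep those inside the geometry. The unit circle and the regular triangle and pentagon inscribed in it are hypothetical stand-ins for the actual domains, the helper names are illustrative, and boundary points would need a separate sampler.

```python
import numpy as np

def regular_polygon(n, radius=1.0):
    """Vertices (counter-clockwise) of a regular n-gon centred at the origin."""
    ang = np.pi / 2 + 2 * np.pi * np.arange(n) / n
    return np.stack([radius * np.cos(ang), radius * np.sin(ang)], axis=1)

def inside_convex_polygon(P, verts):
    """True where points P lie strictly inside a convex polygon with CCW vertices."""
    inside = np.ones(len(P), dtype=bool)
    for a, b in zip(verts, np.roll(verts, -1, axis=0)):
        edge, rel = b - a, P - a
        cross = edge[0] * rel[:, 1] - edge[1] * rel[:, 0]  # > 0: point is left of edge
        inside &= cross > 0
    return inside

def sample_interior(mask_fn, n, rng, box=(-1.0, 1.0)):
    """Rejection sampling: uniform candidates in the box, keep those inside the domain."""
    pts = np.empty((0, 2))
    while len(pts) < n:
        cand = rng.uniform(box[0], box[1], size=(2 * n, 2))
        pts = np.vstack([pts, cand[mask_fn(cand)]])
    return pts[:n]

rng = np.random.default_rng(0)
domains = {
    "circle": lambda P: np.sum(P**2, axis=1) < 1.0,
    "triangle": lambda P: inside_convex_polygon(P, regular_polygon(3)),
    "pentagon": lambda P: inside_convex_polygon(P, regular_polygon(5)),
}
for name, mask in domains.items():
    X_r = sample_interior(mask, 10_000, rng)  # N_r = 10,000 interior points
    print(name, X_r.shape)
```

Rejection sampling keeps the interior points uniformly distributed regardless of the shape, at the cost of discarding the candidates that fall outside.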

Table 1.

The 2D Poisson equation: relative errors obtained by cSPINN on different geometries.

Figure 7.

cSPINNs results (with ResNet block-enhanced modified neural network) for solving the 2D Poisson equation with an exact solution in .

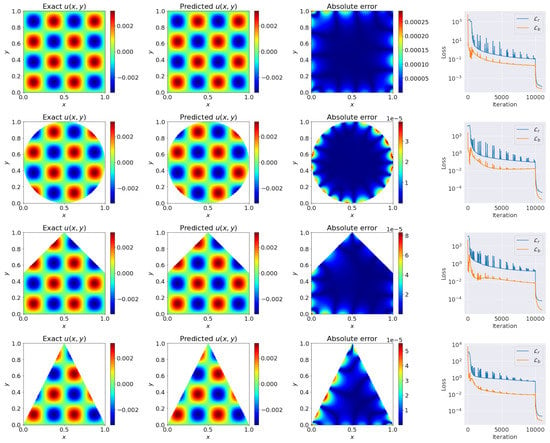

Moreover, we compared cSPINNs with the reference solution in another domain, where the high frequency of the solution makes the problem hard for PINNs, as shown in Figure 8. Table 2 lists the relative errors between the predicted and exact solutions obtained with cSPINNs on different geometries. To further test cSPINNs, we also solved the problem on the L-shaped domain, a classic concave geometry. In this case, the problem setup follows [30] to allow a direct comparison with PINNs. The loss terms are as follows:

Figure 8.

cSPINNs results (with ResNet block-enhanced modified neural network) for solving the 2D Poisson equation with an exact solution in .

Table 2.

The 2D Poisson equation: relative errors obtained by cSPINN on different geometries.

- The constrained self-adaptive loss for the residual of the governing equation

- Mean squared loss on the boundary condition

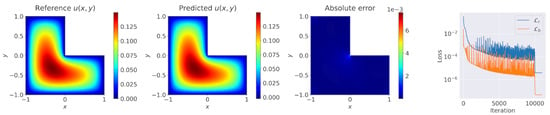

We tested cSPINN on the L-shaped domain; the results are shown in Figure 9. The maximum point-wise error was about 6 × 10 and the relative error was 4.257 × 10. In [30], PINNs achieved accurate results with a maximum point-wise error of about 0.02 in the same L-shaped case. In [31], hp-VPINNs were also tested on this case and achieved a maximum point-wise error of about 0.02. Therefore, cSPINNs also perform well on such a concave geometry.

Figure 9.

cSPINNs results (with ResNet block-enhanced modified neural network) for solving the L-shaped Poisson equation with the reference solution.

4.5. High Dimensional Poisson Equation

Consider Poisson’s equation in high dimension (d = 10):

The exact solution of this problem is available in closed form, and we used it to compute the error of cSPINN.

- The constrained self-adaptive loss for the residual of the governing equation:

- Mean squared loss on the boundary condition:

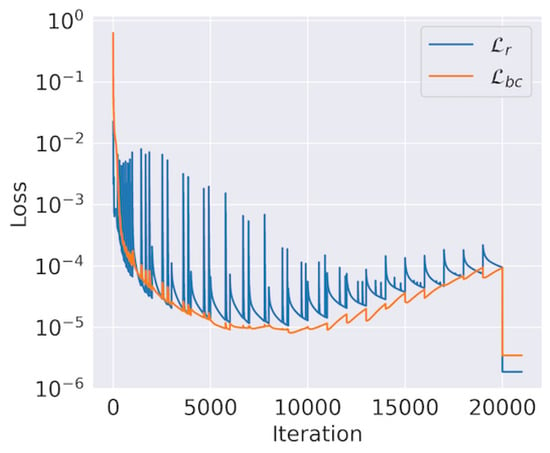

We computed the relative error between the cSPINN solution and the exact solution. The relative error of cSPINN was 1.028 × 10, which is smaller than that of the Deep Ritz method [32] (about 0.4%). In this case, we sampled $N_r$ = 1000 residual points in the interior and $N_{bc}$ = 100 points on the boundary. The ResNet block-enhanced modified network was trained with 20,000 Adam epochs and 1000 L-BFGS epochs. The training loss history of cSPINN is shown in Figure 10. Finally, Table 3 reports the relative computational cost of cSPINNs and PINNs in different cases. For a fair comparison, cSPINNs and PINNs used the same network depth, width, and number of training epochs when the cost was measured.
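The domain of the 10-D problem is not restated here, so the following sketch assumes a unit hypercube [0, 1]^10 purely for illustration. Interior points are uniform; each boundary point is uniform in the cube with one randomly chosen coordinate pinned to 0 or 1, which is uniform over the boundary because all 2d faces have equal area. `sample_hypercube` is a hypothetical helper.

```python
import numpy as np

def sample_hypercube(n_interior, n_boundary, d, rng, lo=0.0, hi=1.0):
    """Uniform interior points and uniform boundary points of the cube [lo, hi]^d."""
    X_r = rng.uniform(lo, hi, size=(n_interior, d))
    X_b = rng.uniform(lo, hi, size=(n_boundary, d))
    face_dim = rng.integers(0, d, size=n_boundary)    # coordinate fixed on each point's face
    face_val = rng.choice([lo, hi], size=n_boundary)  # lower or upper face
    X_b[np.arange(n_boundary), face_dim] = face_val
    return X_r, X_b

rng = np.random.default_rng(0)
X_r, X_b = sample_hypercube(1000, 100, d=10, rng=rng)  # N_r = 1000, N_bc = 100
```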

Figure 10.

cSPINN training loss for the high-dimensional Poisson equation (with the ResNet block-enhanced modified neural network).

Table 3.

The relative computational cost of solving different PDEs with cSPINNs and PINNs.

At the end of this section, we also tested the impact of three architectures: the multilayer perceptron (MLP), the modified multilayer perceptron (MMLP), and the ResNet block-enhanced modified network (ResMNet). In these tests, the depth and width of all networks were fixed at 6 and 128, respectively. We also report results demonstrating the effectiveness of the proposed constrained self-adaptive weighting scheme (cSA) compared with the standard loss function. As shown in Table 4, the ResMNet yields the highest accuracy compared with the MLP and the MMLP. Therefore, both the constrained self-adaptive weighting scheme (cSA) and the ResNet block-enhanced modified network (ResMNet) are desirable components of cSPINNs.

Table 4.

Relative errors of different loss functions and different architectures.

5. Conclusions and Future Work

In this paper, we proposed constrained self-adaptive PINNs (cSPINNs), which adaptively adjust the weights of individual residual points and, owing to the bounded weights, train more robustly. Meanwhile, a ResNet block-enhanced modified neural network was proposed to enhance the predictive ability of PINNs.

We demonstrated the effectiveness of our method on various PDEs, including the Allen–Cahn, Burgers, Poisson, and Helmholtz equations. Our method performed well in all cases and outperformed PINNs, especially on the Poisson equation with a periodic solution, regardless of the geometry of the computational domain. Even with sharp transitions in the physical domain, cSPINNs remained robust when solving the Allen–Cahn equation, which is difficult for the original PINNs. Compared with PINNs, cSPINNs improve accuracy and can be implemented with just a few lines of code, which makes it possible to combine our method with other extensions of PINNs to further improve performance.

The constrained self-adaptive weighting scheme attaches higher weights to difficult-to-learn regions during training, which makes it possible to solve complicated problems. In this paper, we presented numerical results of cSPINNs on the 10D Poisson equation, where they outperformed the Deep Ritz method. In the future, we will further generalize cSPINNs to higher-dimensional PDEs and multi-physics problems.

Author Contributions

Methodology, G.Z.; Software, G.Z., H.Y., G.P., Y.D. and F.Z.; Writing — original draft, G.Z.; Writing — review & editing, G.Z.; Project administration, G.Z., H.Y. and Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded under the Auto-PINNs Research program of SandGold AI Research, Guangdong, China.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Raissi, M.; Perdikaris, P.; Karniadakis, G. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

- Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics Informed Deep Learning (Part II): Data-driven Discovery of Nonlinear Partial Differential Equations. arXiv 2017, arXiv:1711.10566.

- Song, C.; Alkhalifah, T.; Waheed, U.B. Solving the frequency-domain acoustic VTI wave equation using physics-informed neural networks. Geophys. J. Int. 2021, 225, 846–859.

- Zheng, X.; Yazdani, A.; Li, H.; Humphrey, J.D.; Karniadakis, G.E. A three-dimensional phase-field model for multiscale modeling of thrombus biomechanics in blood vessels. PLoS Comput. Biol. 2020, 16, e1007709.

- Yin, M.; Zheng, X.; Humphrey, J.D.; Karniadakis, G.E. Non-invasive inference of thrombus material properties with physics-informed neural networks. Comput. Methods Appl. Mech. Eng. 2021, 375, 113603.

- Sahli Costabal, F.; Yang, Y.; Perdikaris, P.; Hurtado, D.E.; Kuhl, E. Physics-informed neural networks for cardiac activation mapping. Front. Phys. 2020, 8, 42.

- Wight, C.L.; Zhao, J. Solving Allen-Cahn and Cahn-Hilliard Equations using the Adaptive Physics Informed Neural Networks. arXiv 2020, arXiv:2007.04542.

- Nabian, M.A.; Gladstone, R.; Meidani, H. Efficient training of physics-informed neural networks via importance sampling. arXiv 2021, arXiv:2104.12325.

- Wang, S.; Teng, Y.; Perdikaris, P. Understanding and Mitigating Gradient Flow Pathologies in Physics-Informed Neural Networks. SIAM J. Sci. Comput. 2021, 43, A3055–A3081.

- Wang, S.; Sankaran, S.; Perdikaris, P. Respecting causality is all you need for training physics-informed neural networks. arXiv 2022, arXiv:2203.07404.

- Cheng, C.; Zhang, G.T. Deep Learning Method Based on Physics Informed Neural Network with Resnet Block for Solving Fluid Flow Problems. Water 2021, 13, 423.

- McClenny, L.; Braga-Neto, U. Self-adaptive physics-informed neural networks using a soft attention mechanism. arXiv 2020, arXiv:2009.04544.

- Wang, C.; Li, S.; He, D.; Wang, L. Is L2 Physics-Informed Loss Always Suitable for Training Physics-Informed Neural Network? arXiv 2022, arXiv:2206.02016.

- Yu, J.; Lu, L.; Meng, X.; Karniadakis, G.E. Gradient-enhanced physics-informed neural networks for forward and inverse PDE problems. Comput. Methods Appl. Mech. Eng. 2022, 393, 114823.

- Cai, W.; Li, X.; Liu, L. A Phase Shift Deep Neural Network for High Frequency Approximation and Wave Problems. SIAM J. Sci. Comput. 2020, 42, A3285–A3312.

- Wang, Y.; Han, X.; Chang, C.Y.; Zha, D.; Braga-Neto, U.; Hu, X. Auto-PINN: Understanding and Optimizing Physics-Informed Neural Architecture. arXiv 2022, arXiv:2205.13748.

- Wang, S.; Wang, H.; Perdikaris, P. On the eigenvector bias of Fourier feature networks: From regression to solving multi-scale PDEs with physics-informed neural networks. Comput. Methods Appl. Mech. Eng. 2021, 384, 113938.

- Dong, S.; Ni, N. A method for representing periodic functions and enforcing exactly periodic boundary conditions with deep neural networks. J. Comput. Phys. 2021, 435, 110242.

- Gao, H.; Sun, L.; Wang, J.X. PhyGeoNet: Physics-informed geometry-adaptive convolutional neural networks for solving parameterized steady-state PDEs on irregular domain. J. Comput. Phys. 2021, 428, 110079.

- Wandel, N.; Weinmann, M.; Klein, R. Learning Incompressible Fluid Dynamics from Scratch - Towards Fast, Differentiable Fluid Models that Generalize. arXiv 2021, arXiv:2006.08762.

- Yang, L.; Meng, X.; Karniadakis, G.E. B-PINNs: Bayesian physics-informed neural networks for forward and inverse PDE problems with noisy data. J. Comput. Phys. 2021, 425, 109913.

- Yang, L.; Zhang, D.; Karniadakis, G.E. Physics-Informed Generative Adversarial Networks for Stochastic Differential Equations. SIAM J. Sci. Comput. 2020, 42, A292–A317.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Su, Y.; Zhao, Y.; Niu, C.; Liu, R.; Sun, W.; Pei, D. Robust anomaly detection for multivariate time series through stochastic recurrent neural network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019.

- Berland, H.; Skaflestad, B.; Wright, W.M. EXPINT—A MATLAB package for exponential integrators. ACM Trans. Math. Softw. 2007, 33, 4-es.

- Lu, L.; Meng, X.; Mao, Z.; Karniadakis, G.E. DeepXDE: A deep learning library for solving differential equations. SIAM Rev. 2021, 63, 208–228.

- Kharazmi, E.; Zhang, Z.; Karniadakis, G.E. hp-VPINNs: Variational physics-informed neural networks with domain decomposition. Comput. Methods Appl. Mech. Eng. 2021, 374, 113547.

- E, W.; Yu, B. The Deep Ritz Method: A Deep Learning-Based Numerical Algorithm for Solving Variational Problems. Commun. Math. Stat. 2018, 6, 1–12.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).