1. Introduction

Deep neural networks (DNNs) [1, 2, 3, 4] have revolutionized a variety of computer vision tasks, e.g., image classification and semantic segmentation, and have set new state-of-the-art records. However, since these DNN models are designed under the closed-world assumption [5], they can hardly be deployed directly in real-world scenarios. One of the major obstacles is the out-of-distribution (OOD) samples that inevitably exist. Indeed, many recent studies have reported that DNN models make overconfident predictions on OOD samples [6] and thus pose hidden safety risks.

Current solutions to the OOD overconfidence issue can be broadly divided into two categories. One branch of research focuses on alleviating the issue by suppressing the prediction confidence on a small set of typical OOD samples [7, 8]. However, since the suppression on this typical set can hardly generalize to all OOD samples, the OOD overconfidence issue still exists. The other branch of research instead conducts OOD detection as a preliminary step, and thus can reject OOD samples before feeding them to the DNN models.

To conduct OOD detection, many OOD scores that indicate how likely a sample is to be OOD have recently been devised. Hendrycks et al. [9] proposed utilizing probabilities from the softmax distributions of DNN classifiers. Though simple, this solution has been validated as often being sufficient for OOD detection. Later, researchers investigated improving OOD detection by utilizing temperature scaling and small input perturbations [10], Mahalanobis distance [11], energy-based models [12], logit normalization [13], etc. Although these scores show good detection performance, it is hard to explain how that performance is attributable to them.
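To make the notion of an OOD score concrete, the following minimal sketch computes two of the scores mentioned above from raw logits: one based on the maximum softmax probability and one based on the energy, taken here as the negative temperature-scaled log-sum-exp of the logits. The function names, the sign convention (higher means more OOD-like), and the example logits are illustrative assumptions, not taken from the cited works.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over the last axis."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def msp_score(logits):
    """Score from the maximum softmax probability: higher means more OOD-like."""
    return 1.0 - softmax(logits).max(axis=-1)

def energy_score(logits, temperature=1.0):
    """Energy -T * logsumexp(o / T): higher (less negative) means more OOD-like."""
    z = logits / temperature
    m = z.max(axis=-1, keepdims=True)
    lse = m.squeeze(-1) + np.log(np.exp(z - m).sum(axis=-1))
    return -temperature * lse

# Hypothetical logits: one peaked (ID-like) and one flat (OOD-like) prediction.
logits = np.array([[9.0, 0.5, 0.2], [1.1, 1.0, 0.9]])
msp = msp_score(logits)
energy = energy_score(logits)
```

In practice, a sample would be rejected as OOD when its score exceeds a threshold chosen on held-out ID data; the threshold choice is outside the scope of this sketch.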

An orthogonal yet more appealing direction is to employ Bayesian models to reformulate the problem [14], since this view is more explainable. The rationale behind this direction is that the uncertainty of input samples, i.e., the likelihood of being OOD, is usually highly related to the uncertainty of DNN parameters, which can be measured by Bayesian approximation. Recently, sketching curvature for OOD detection (SCOD) [15] made this approach efficient and effective by using the local sketched curvature (Hessian/Fisher) of DNN models as a bridge. Specifically, it measures the final uncertainty based on both the uncertainty of the model parameters and the influence of input samples on the DNN models. However, since a plethora of approximations is applied in the process, and the influence of an input sample on DNN models can hardly be estimated stably, we observe that the estimated uncertainty deviates for a certain number of input samples.

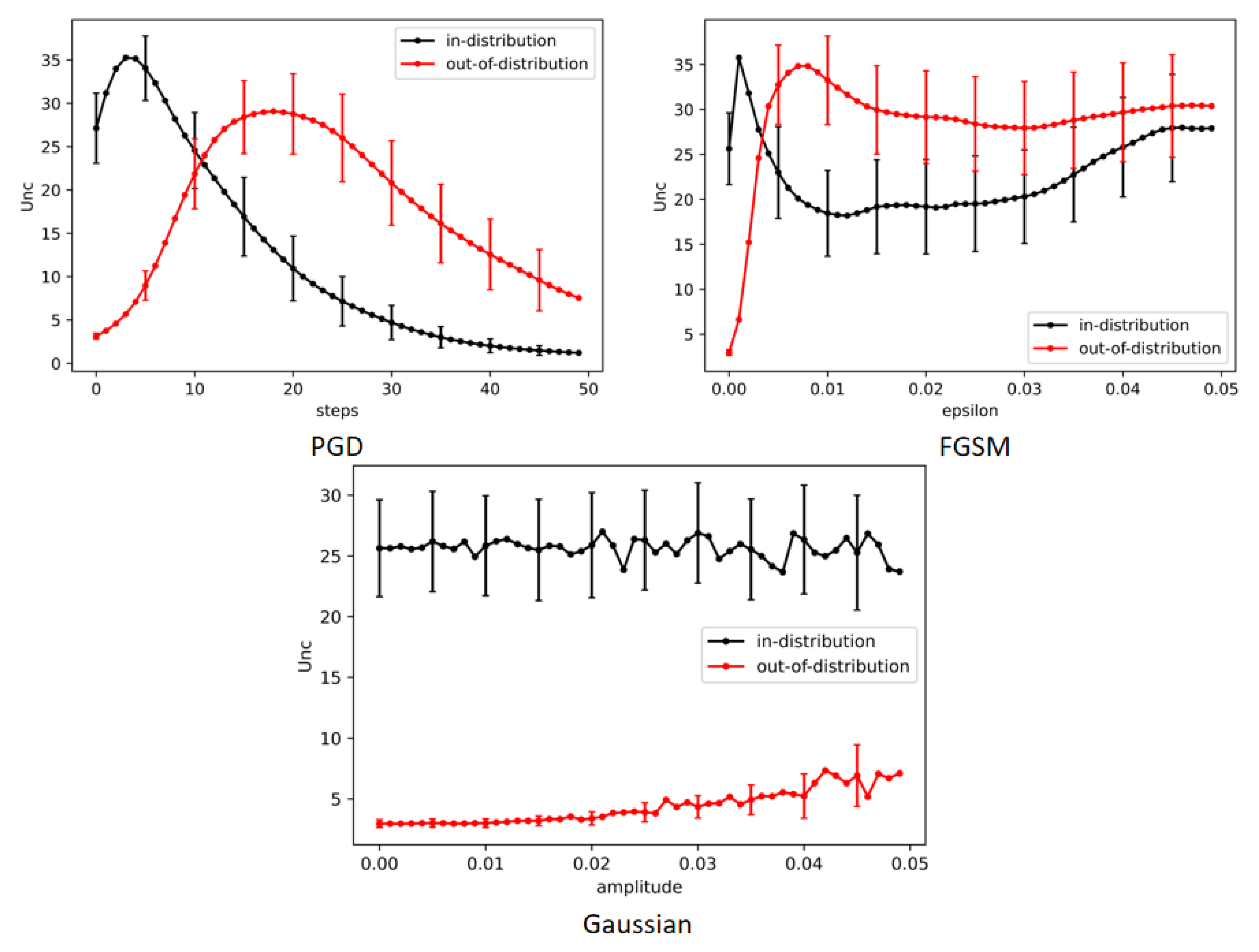

To resolve the above issue, we propose a novel AdvSCOD framework that estimates the uncertainty for each input sample by aggregating that of a small set of samples in its neighborhood. In particular, inspired by the fact that adversarial attacks with imperceptible perturbations can lead DNN models to make totally different predictions, we enrich the input sample by applying adversarial perturbations to it, which we believe better reflects the influence of the input sample on DNN models. Extensive experiments validate that our proposed AdvSCOD outperforms the state-of-the-art Bayesian-based methods on OOD detection. Furthermore, we experimentally demonstrate that adversarial perturbation is better than several other types of perturbation.

Overall, our key contributions are summarized as follows:

We propose a novel AdvSCOD framework that estimates the uncertainty of each input sample by aggregating the uncertainties of a small set of samples in its neighborhood.

We conduct extensive experiments to validate the effectiveness of AdvSCOD and its superiority to state-of-the-art Bayesian-based OOD detection methods.

We provide a new perspective on evaluating the sample’s influence on DNN models in view of adversarial attacks.

3. Background and Problem Formulation

3.1. Problem Statement of OOD Detection

We formulate the problem in the context of image classification with DNNs. Given a DNN classifier $f_\theta$ trained on the dataset $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$ with pairs of images and their corresponding labels, where $x_i \in \mathcal{X}$ and $y_i \in \mathcal{Y}$ and $\theta$ represents the parameters of $f$, we aim to define a measurement $S(x)$ that can determine whether an input image $x$ belongs to the in-distribution (ID) or OOD samples by using $f_\theta$.

3.2. Bayesian Neural Networks and OOD Detection

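Probabilistic DNN. The DNN classifier $f_\theta$ can be considered as a probabilistic model $p_\theta(y \mid x)$. Given an input image $x$, the classifier $f$ outputs $o$ using weights $\theta$,

$$o = f(x; \theta), \tag{1}$$

where $o$ is the predicted logit, and then a distributional family $\mathcal{P}$ maps $o$ to a distribution over the targets, i.e.,

$$p_\theta(y \mid x) = \mathcal{P}(y; o). \tag{2}$$

In this view, we can learn the weights $\theta$ by maximum likelihood estimation (MLE),

$$\theta_{\mathrm{MLE}} = \arg\max_{\theta} \sum_{i=1}^{N} \log p_\theta(y_i \mid x_i). \tag{3}$$

By giving a prior $p(\theta)$ of $\theta$, it further becomes a maximum a posteriori (MAP) estimate,

$$\theta^{*} = \arg\max_{\theta} \left[ \sum_{i=1}^{N} \log p_\theta(y_i \mid x_i) + \log p(\theta) \right]. \tag{4}$$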
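As a worked instance of the step from MLE to MAP, assume an isotropic Gaussian prior $\theta \sim \mathcal{N}(0, \sigma^2 I)$; the symbols $\theta$, $\sigma$, and $\theta^{*}$ are notation chosen for this illustration. The log-prior then reduces to a squared-norm penalty, so the MAP estimate coincides with maximum likelihood under L2 regularization (weight decay) with coefficient $1/(2\sigma^2)$:

$$\log p(\theta) = -\frac{1}{2\sigma^{2}} \lVert \theta \rVert_2^{2} + \text{const}
\quad\Longrightarrow\quad
\theta^{*} = \arg\max_{\theta} \left[ \sum_{i=1}^{N} \log p_\theta(y_i \mid x_i) - \frac{1}{2\sigma^{2}} \lVert \theta \rVert_2^{2} \right].$$

A smaller $\sigma$ therefore corresponds to a stronger pull of the weights toward zero.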

Bayesian Neural Networks. Instead of estimating a single optimal point as in Equation (4), Bayesian neural networks (BNNs) find the posterior distribution $p(\theta \mid \mathcal{D})$ of the parameter $\theta$ and thus introduce uncertainty in the prediction.

Formally, the predictive distribution of an unknown label $y$ of a test data item $x$ is given by

$$p(y \mid x, \mathcal{D}) = \int p_\theta(y \mid x)\, p(\theta \mid \mathcal{D})\, d\theta. \tag{5}$$

However, directly calculating the above equation is usually intractable. Therefore, researchers usually use the Laplace approximation to estimate the posterior distribution and Monte Carlo sampling to calculate the final expected value.
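The combination of a Gaussian (Laplace-style) posterior and Monte Carlo sampling can be sketched as follows for a toy linear softmax classifier. The weights, the posterior covariance, and the input below are hypothetical values chosen for illustration; in a real setting the covariance would come from the Laplace approximation rather than being fixed by hand.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def mc_predictive(x, theta_map, cov, num_classes, n_samples=2000, seed=0):
    """Approximate the predictive distribution by averaging softmax outputs
    over weights sampled from the Gaussian posterior N(theta_map, cov)."""
    rng = np.random.default_rng(seed)
    samples = rng.multivariate_normal(theta_map, cov, size=n_samples)
    weights = samples.reshape(n_samples, num_classes, x.shape[0])  # (S, C, D)
    logits = weights @ x                                            # (S, C)
    return softmax(logits).mean(axis=0)

# Hypothetical 3-class linear classifier on 2-D inputs (toy values).
theta_map = np.array([2.0, 0.0, -2.0, 0.0, 0.0, 2.0])  # flattened (3, 2) weights
cov = 0.5 * np.eye(theta_map.size)                       # assumed posterior covariance
x = np.array([1.5, 0.2])

point = softmax(theta_map.reshape(3, 2) @ x)             # single point-estimate prediction
bayes = mc_predictive(x, theta_map, cov, num_classes=3)  # Monte Carlo average
```

In this toy setting the averaged prediction keeps the same top class as the point estimate but assigns it a lower probability, which is the kind of softened confidence that the Bayesian treatment is meant to provide.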

BNN-based OOD Detection. Many recent works show that the uncertainty of BNNs can be utilized for OOD detection, since the predictions on ID and OOD samples have different uncertainties [39, 40, 41, 42, 43].

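Suppose the prior of $\theta$ follows a Gaussian distribution with a standard deviation of $\sigma$; the Laplace approximation of the posterior covariance of $\theta$ can be calculated by

$$\Sigma = \left( H + \sigma^{-2} I \right)^{-1}, \tag{6}$$

where $H$ is the Hessian matrix of the training loss $\mathcal{L}$ (the negative log-likelihood) with respect to the parameters of the pretrained DNN classifier $f$, i.e., $H = \nabla_\theta^{2} \mathcal{L}(\theta) \big|_{\theta = \theta^{*}}$, and $I$ is an identity matrix. Therefore, we could utilize $\Sigma$ to measure the uncertainty of DNNs, so as to conduct OOD detection [23, 44].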

However, for complex DNN models, calculating this exact Bayesian posterior approximation can still be challenging, since it requires estimating and inverting huge matrices to obtain the posterior covariance.

3.3. SCOD [15]

The framework of sketching curvature for OOD Detection (SCOD) estimates the uncertainty in two respects: (1) the uncertainty of the model parameters on the prediction and (2) the influence of input samples on the DNN models.

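For the uncertainty of the model parameters, SCOD re-estimates the variance of the parameters by replacing the Hessian matrix in Equation (6) with the Fisher information matrix, whose calculation is more efficient and numerically stable,

$$\Sigma \approx \left( \sum_{i=1}^{N} F_{\theta^{*}}(x_i) + \sigma^{-2} I \right)^{-1}, \tag{7}$$

where $F_{\theta^{*}}(x)$ denotes the weight-space Fisher evaluated for a particular input $x$ and the trained weights $\theta^{*}$, which is calculated by

$$F_{\theta^{*}}(x) = J_{\theta^{*}}(x)^{\top} F_o(x)\, J_{\theta^{*}}(x), \tag{8}$$

with $J_{\theta^{*}}(x)$ denoting the Jacobian matrix evaluated for data $x$ under the parameter $\theta^{*}$, and $F_o(x)$ the Fisher of the output distribution with respect to the logit $o$. That is, $J_{\theta^{*}}(x) = \partial f(x; \theta) / \partial \theta \,\big|_{\theta = \theta^{*}}$. Note that the process of estimating the parameter uncertainty can be conducted offline.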

As for the influence of input samples, i.e., the uncertainty determined by a given input sample $x$, we directly measure it using Equation (8), that is, $F_{\theta^{*}}(x)$. Note that this process is conducted online.

Therefore, the final uncertainty can be calculated as follows,

$$\mathrm{Unc}(x) = \mathrm{Tr}\left( F_{\theta^{*}}(x)\, \Sigma \right), \tag{9}$$

where $\mathrm{Tr}(\cdot)$ calculates the trace. Note that, although the calculation of the Fisher matrix is much more efficient than that of the original Hessian matrix, SCOD further accelerates the process by splitting the original matrix into smaller ones. Since it is not the focus of this paper, we refer the reader to the original SCOD paper [15] for details.
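The two-stage computation described in this subsection (an offline posterior covariance built from training-set Fisher matrices, and an online trace with the Fisher of the test input) can be illustrated on a toy linear softmax classifier. This is only a sketch under stated assumptions: it forms and inverts the dense matrices exactly rather than using the sketching that SCOD relies on, and the weights `W`, the training inputs, and `prior_std` are hypothetical.

```python
import numpy as np

def softmax(o):
    """Numerically stable softmax of a 1-D logit vector."""
    e = np.exp(o - o.max())
    return e / e.sum()

def weight_space_fisher(W, x):
    """Fisher J^T F_o J of a linear softmax classifier o = W x at input x."""
    C = W.shape[0]
    p = softmax(W @ x)
    fisher_logits = np.diag(p) - np.outer(p, p)  # Fisher of the categorical output w.r.t. logits
    jac = np.kron(np.eye(C), x[None, :])          # d o / d vec(W), shape (C, C*D)
    return jac.T @ fisher_logits @ jac

def posterior_covariance(W, train_x, prior_std=1.0):
    """Offline step: (sum_i F(x_i) + prior_std^-2 I)^-1 over the training inputs."""
    precision = np.eye(W.size) / prior_std**2
    for x in train_x:
        precision += weight_space_fisher(W, x)
    return np.linalg.inv(precision)

def trace_uncertainty(W, cov, x):
    """Online step: Tr(F(x) Sigma) for a test input x."""
    return float(np.trace(weight_space_fisher(W, x) @ cov))

# Hypothetical trained weights; the training inputs vary only along the first axis.
W = np.array([[1.0, 0.0], [-1.0, 0.0]])
train_x = np.array([[1.0, 0.0], [-1.0, 0.0], [2.0, 0.0], [-2.0, 0.0]])
cov = posterior_covariance(W, train_x)

unc_id = trace_uncertainty(W, cov, np.array([1.0, 0.0]))   # along the training direction
unc_ood = trace_uncertainty(W, cov, np.array([0.0, 1.0]))  # orthogonal to all training data
```

Because the training data never constrain the weights along the second input axis, the covariance there stays at the prior level, and the input orthogonal to the training data receives the larger uncertainty.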

Discussion and Our Solution. However, since much information is discarded in the approximation, the calculated Fisher information matrix may not be accurate. Furthermore, as reported in the field of adversarial attacks, a slight change in the input image can lead the classifier to make a totally different prediction. In that case, the estimation of the per-sample Fisher $F_{\theta^{*}}(x)$ is also quite unstable. Both factors make the calculated uncertainty inaccurate.

To handle the above issues, we propose averaging the uncertainty over an enriched set of samples in the small neighborhood of the input, instead of using the value evaluated on a single sample. In particular, since applying an adversarial attack is expected to bring about the largest deviation in $F_{\theta^{*}}(x)$, we construct the enriched sample set by applying adversarial perturbations.
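The averaging idea can be sketched on the same kind of toy linear softmax classifier. Everything specific here is an assumption made for illustration: the attack is a single-step sign-gradient (FGSM-style) perturbation that uses the predicted class as the label, the perturbation sizes in `epsilons` are arbitrary, the average is uniform, and the posterior covariance is a fixed placeholder. The section above does not specify these choices.

```python
import numpy as np

def softmax(o):
    """Numerically stable softmax of a 1-D logit vector."""
    e = np.exp(o - o.max())
    return e / e.sum()

def fisher(W, x):
    """Weight-space Fisher J^T F_o J for the linear softmax model o = W x."""
    p = softmax(W @ x)
    jac = np.kron(np.eye(W.shape[0]), x[None, :])
    return jac.T @ (np.diag(p) - np.outer(p, p)) @ jac

def fgsm_neighbors(W, x, epsilons):
    """Sign-gradient perturbations of x, using the predicted class as the label."""
    p = softmax(W @ x)
    onehot = np.eye(W.shape[0])[p.argmax()]
    grad_x = W.T @ (p - onehot)  # gradient of the cross-entropy loss w.r.t. x
    return [x + eps * np.sign(grad_x) for eps in epsilons]

def averaged_uncertainty(W, cov, x, epsilons=(0.01, 0.02, 0.05)):
    """Average Tr(F(x') Sigma) over x and its adversarial neighbors."""
    samples = [x] + fgsm_neighbors(W, x, epsilons)
    return float(np.mean([np.trace(fisher(W, s) @ cov) for s in samples]))

W = np.array([[1.0, 0.0], [-1.0, 0.0]])  # hypothetical trained weights
cov = 0.5 * np.eye(W.size)                # assumed precomputed posterior covariance
x = np.array([1.0, 0.3])

single = float(np.trace(fisher(W, x) @ cov))  # uncertainty from the input alone
averaged = averaged_uncertainty(W, cov, x)     # uncertainty averaged over the neighborhood
```

The averaged value stays close to the single-sample value for a stable input, while inputs whose Fisher changes sharply under small perturbations would see a larger shift.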

6. Conclusions

In this paper, we have proposed a novel Bayesian-based AdvSCOD framework for robust OOD detection. It is derived from our observation that the uncertainty estimated by SCOD is not robust, since many approximations are involved and the influence of the input samples on DNN models can hardly be measured stably. Inspired by the fact that adversarial attacks with imperceptible perturbations can significantly affect the predictions of DNN models, we propose enriching the input sample with neighbors generated by applying adversarial perturbations, which we believe can better reflect the influence on model predictions, and then averaging their uncertainties. Extensive experiments validate the effectiveness of AdvSCOD and its superiority to state-of-the-art Bayesian-based OOD detection solutions. We hope that our work can inspire more research on handling OOD detection from a more explainable Bayesian view.

Limitation and Future Work. Due to the complexity of sketching curvature in Bayesian-based methods, we found that AdvSCOD is quite slow when the ID dataset is large. In the future, we plan to accelerate AdvSCOD so that it can handle more difficult and larger-scale settings.