Outlier Detection of Crowdsourcing Trajectory Data Based on Spatial and Temporal Characterization

Abstract

1. Introduction

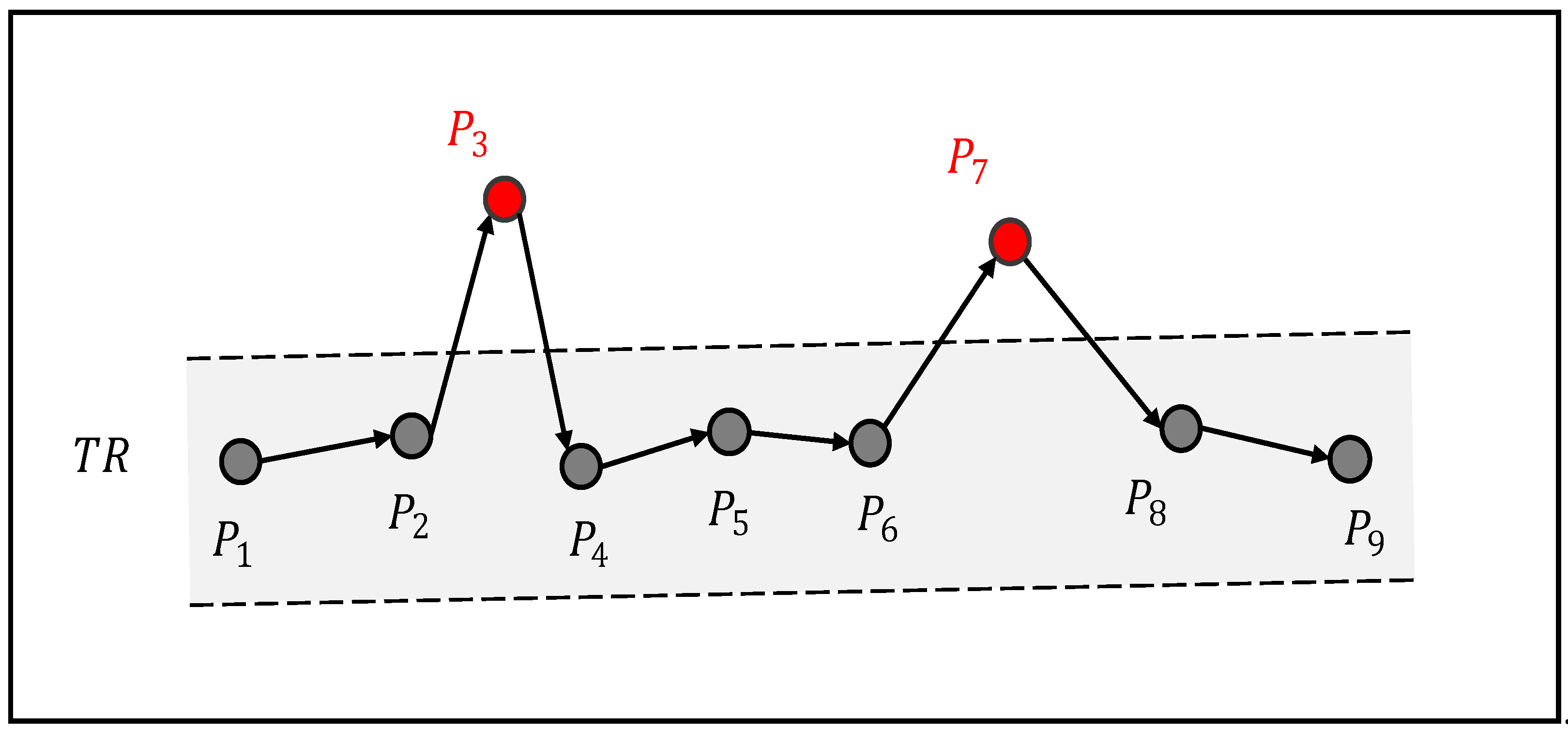

- We discuss and categorize common problems in crowdsourcing trajectory points, including trajectory point offsets caused by navigation device errors and significant inconsistencies in the movement features of trajectory points caused by errors in the acquisition process.

- We present two trajectory outlier definitions: Location Offset outliers (LO-outliers) and Movement Property outliers (MP-outliers).

- We propose a two-phase trajectory outlier detection framework (denoted as CTOD) to identify both types of trajectory outliers.

- We conduct comprehensive experiments on a real-world vehicle trajectory dataset to demonstrate the effectiveness and superiority of our approach over comparable approaches.

2. Preliminaries

2.1. Classification of Crowdsourcing Trajectory Outliers

2.2. Challenges in Trajectory Outlier Detection

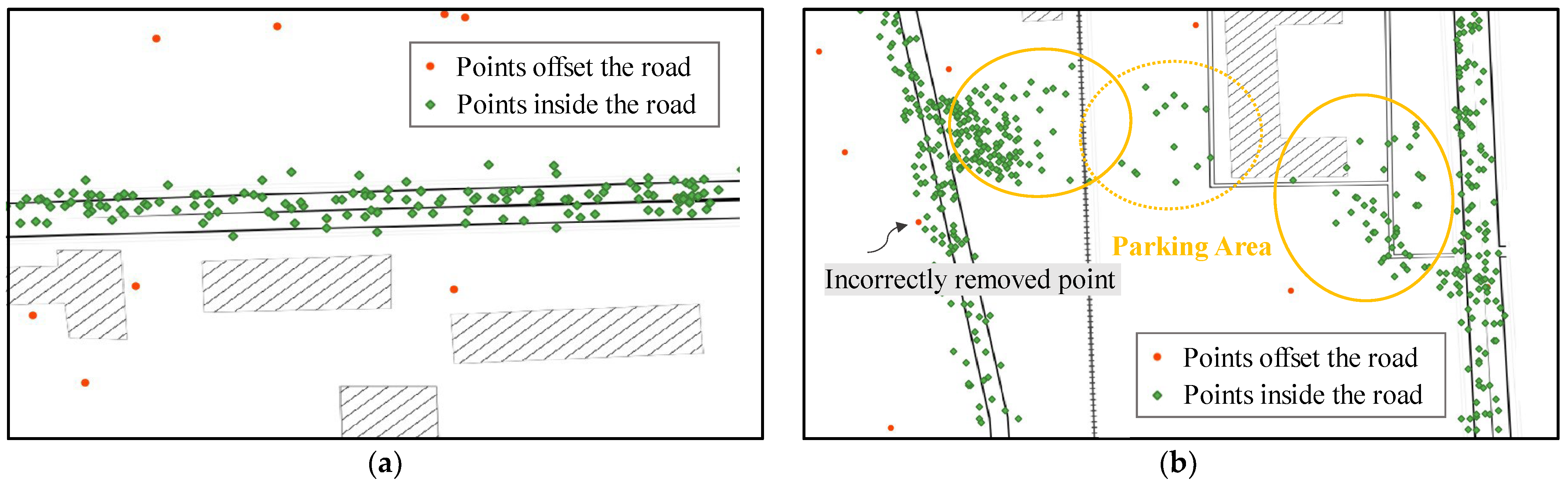

- In general, LO-outliers have a lower point density than points inside the roads. However, since trajectory points are distributed unevenly, some points inside sparsely covered roads also have a low point density, so they risk being wrongly removed as LO-outliers.

- Trajectory contributors use various navigation devices, so the recorded attributes differ. Some trajectory data record attributes such as velocity and direction angle for each point, whereas others record only coordinates and timestamps. Extracting multidimensional movement features from such limited attributes is challenging.

- Trajectories are spatial sequences generated over time, so there is a spatial and temporal correlation between trajectory points. To mine the temporal correlation implied, it is necessary to explore the association between the independent movement features of the points within the trajectory. Moreover, since different movement features contribute differently to the temporal correlation extraction, extracting representative movement features for each trajectory point is challenging.

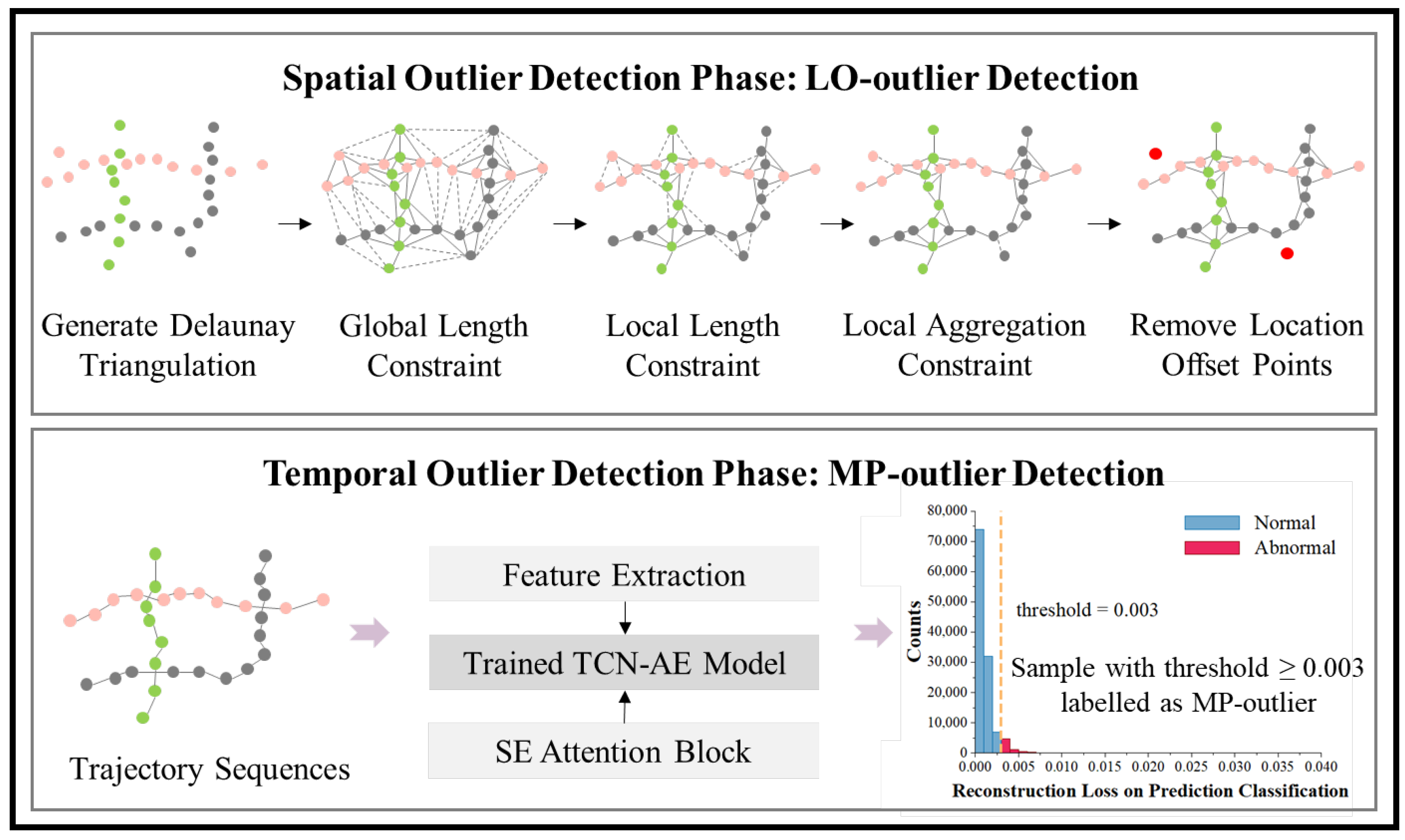

3. Framework: Spatial and Temporal Outlier Detection in Trajectory Data

3.1. Spatial Outlier Detection Phase: LO-Outlier Detection

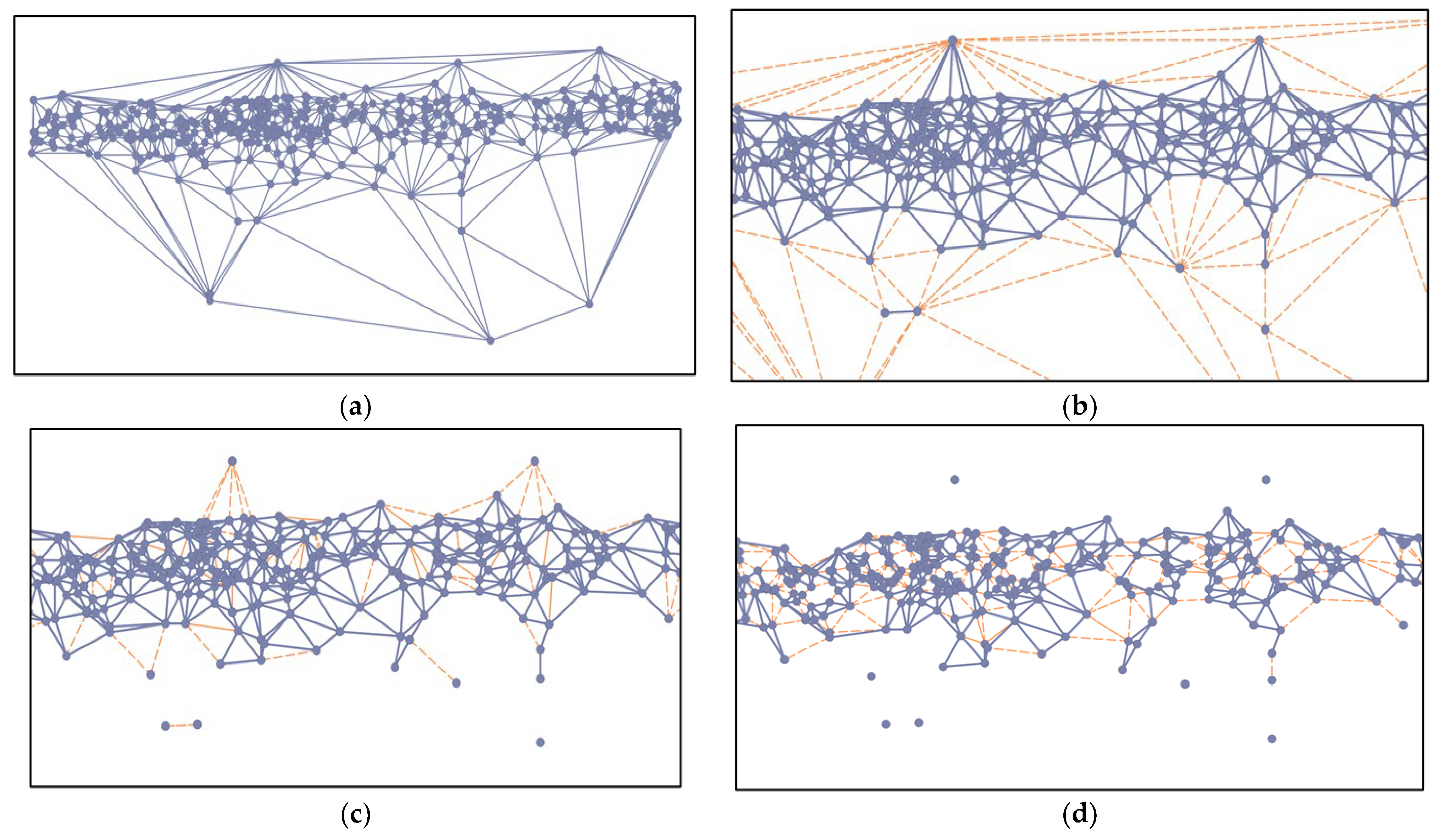

3.1.1. Delaunay Triangulation Generation

3.1.2. Global Length Constraint in Delaunay Triangulation

3.1.3. Local Length Constraint in Delaunay Triangulation

3.1.4. Local Aggregation Constraint in Delaunay Triangulation

3.1.5. Algorithm Description

- Input: A spatial point dataset S, which contains N spatial points with coordinates.

- Output: Spatial points after outlier removal.

- Step 1: Remove first-order long edges at a global level:

  - For each point, calculate the and in the Delaunay triangulation and . The time complexity is linear in N.

  - Remove to separate global outliers (Figure 3b). The time complexity is O(N).

- Step 2: Remove second-order long edges at a local level:

  - For each point, calculate and . The time complexity is linear in N.

  - Remove to separate local outliers (Figure 3c). The time complexity is O(N).

- Step 3: Deal with necks and chains:

  - Remove and separate final outliers (Figure 3d). The time complexity is O(N).
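The edge-removal idea behind these steps can be illustrated with a minimal sketch. It assumes the Delaunay edges are already available as index pairs (here they are written by hand), and it uses a plain global cutoff of mean plus `k` standard deviations of edge length. That cutoff, the parameter `k`, the `min_size` rule, and all function names are assumptions made for illustration; they are not the constraints defined by the framework.

```python
import math
from collections import defaultdict

def edge_length(points, edge):
    """Euclidean length of an edge given as a pair of point indices."""
    (x1, y1), (x2, y2) = points[edge[0]], points[edge[1]]
    return math.hypot(x2 - x1, y2 - y1)

def prune_long_edges(points, edges, k=1.0):
    """Keep edges no longer than mean + k * std of all edge lengths.

    Assumption: this global cutoff is illustrative only.
    """
    lengths = [edge_length(points, e) for e in edges]
    mean = sum(lengths) / len(lengths)
    std = math.sqrt(sum((l - mean) ** 2 for l in lengths) / len(lengths))
    cutoff = mean + k * std
    return [e for e, l in zip(edges, lengths) if l <= cutoff]

def connected_components(n_points, edges):
    """Group point indices into connected components of the pruned graph."""
    adjacency = defaultdict(set)
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen, components = set(), []
    for start in range(n_points):
        if start in seen:
            continue
        stack, component = [start], []
        seen.add(start)
        while stack:
            node = stack.pop()
            component.append(node)
            for nxt in adjacency[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        components.append(sorted(component))
    return components

def flag_outliers(points, edges, k=1.0, min_size=2):
    """Flag points that end up in components smaller than min_size."""
    kept = prune_long_edges(points, edges, k)
    comps = connected_components(len(points), kept)
    return sorted(i for c in comps if len(c) < min_size for i in c)

# Four points on a unit square plus one distant point (index 4).
points = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (10.0, 10.0)]
# Hypothetical triangulation edges, written by hand for this example.
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2), (1, 4), (2, 4), (3, 4)]
outliers = flag_outliers(points, edges)
print(outliers)
```

In this toy input the three edges that reach the distant point exceed the cutoff, so that point is isolated once they are removed. Each pass over the edges is linear in the number of edges, which is itself linear in N for a planar triangulation.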

3.2. Temporal Outlier Detection Phase: MP-Outlier Detection

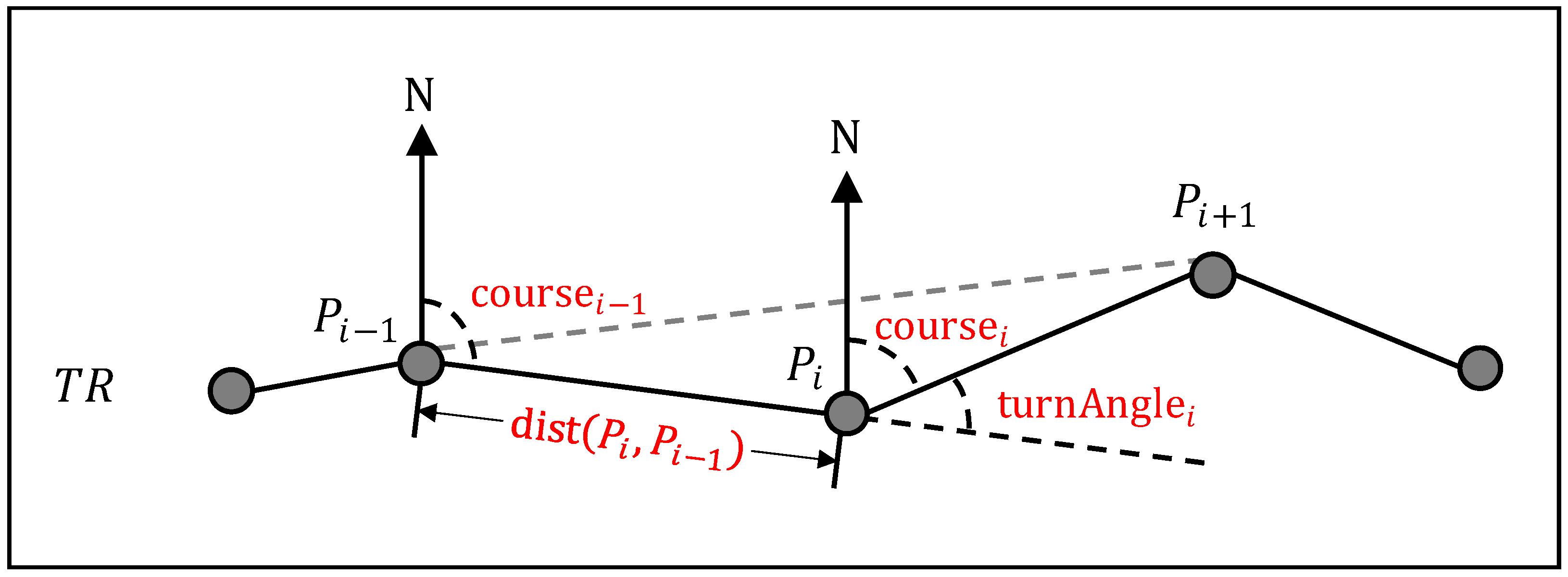

3.2.1. Feature Extraction

- Velocity

- Acceleration

- Course

- Turning Angle

- Turning Rate

- Sinuosity
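As a rough illustration of how these six features could be derived when only coordinates and timestamps are available, the sketch below uses common textbook definitions. The exact formulas, the three-point window, the planar (metre) coordinates, and the function names `wrap_angle` and `movement_features` are assumptions for this example rather than the definitions used in the framework.

```python
import math

def wrap_angle(deg):
    """Wrap an angle difference into the interval [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0

def movement_features(track):
    """Compute movement features from (x, y, t) samples.

    x and y are planar coordinates in metres, t is in seconds.
    Returns one dict per interior point (indices 1 .. n-2).
    """
    features = []
    for i in range(1, len(track) - 1):
        (x0, y0, t0), (x1, y1, t1), (x2, y2, t2) = track[i - 1], track[i], track[i + 1]
        d_in = math.hypot(x1 - x0, y1 - y0)    # length of incoming segment
        d_out = math.hypot(x2 - x1, y2 - y1)   # length of outgoing segment
        v_in = d_in / (t1 - t0)
        v_out = d_out / (t2 - t1)
        # Course: compass-style bearing, clockwise from the +y (north) axis.
        c_in = math.degrees(math.atan2(x1 - x0, y1 - y0)) % 360.0
        c_out = math.degrees(math.atan2(x2 - x1, y2 - y1)) % 360.0
        turn = wrap_angle(c_out - c_in)
        chord = math.hypot(x2 - x0, y2 - y0)
        features.append({
            "velocity": v_out,
            "acceleration": (v_out - v_in) / (t2 - t1),
            "course": c_out,
            "turning_angle": turn,
            "turning_rate": turn / (t2 - t1),
            # Path length over straight-line distance across the window.
            "sinuosity": (d_in + d_out) / chord if chord > 0 else float("inf"),
        })
    return features

# A vehicle heading north at 10 m/s, then turning east at 20 m/s.
track = [(0.0, 0.0, 0.0), (0.0, 10.0, 1.0), (20.0, 10.0, 2.0)]
f = movement_features(track)[0]
print(f)
```

For geographic coordinates, the planar distance and bearing would need to be replaced by geodesic equivalents or the points projected first.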

3.2.2. MP-Outlier Detection with TCN-AE

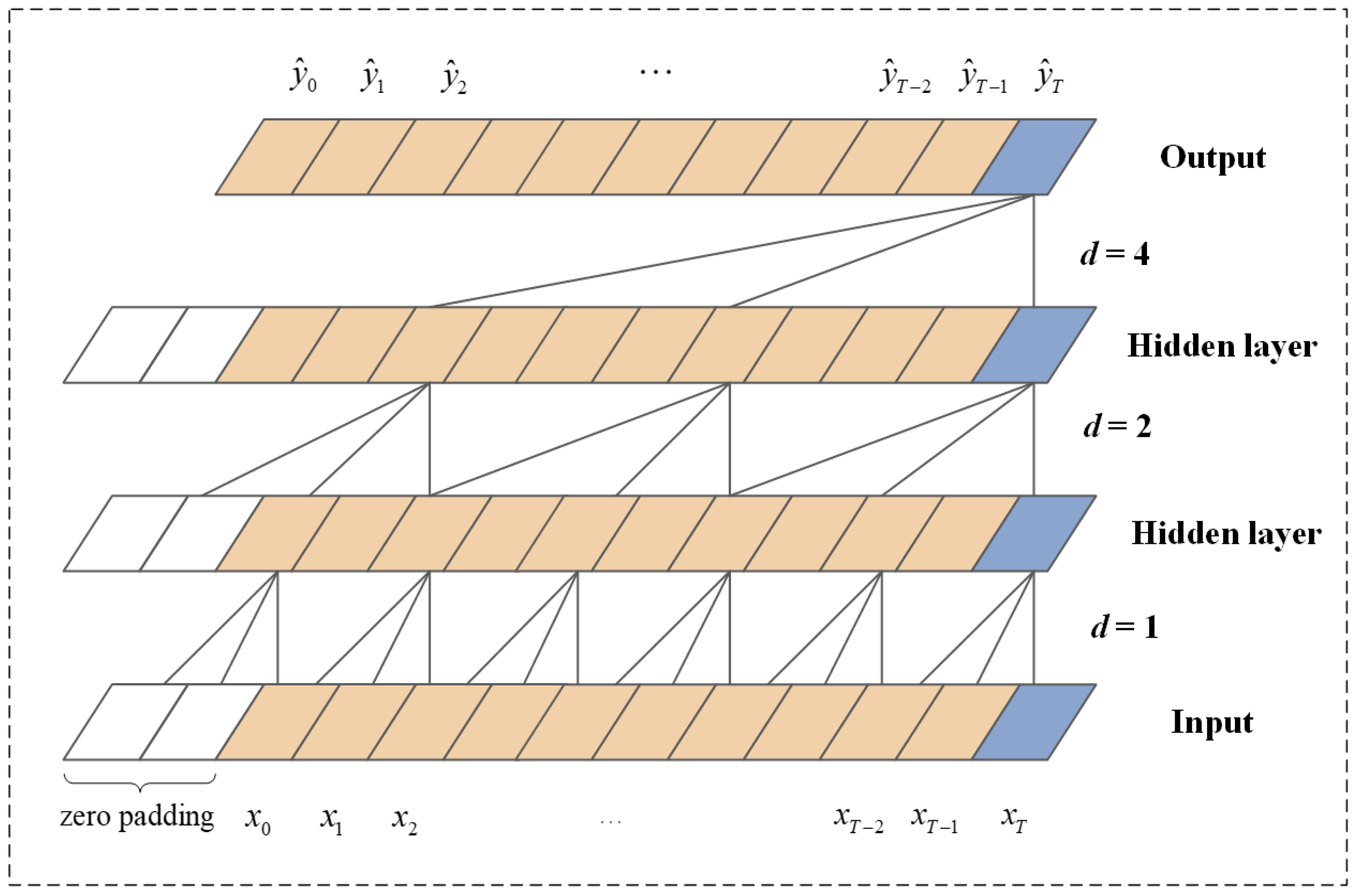

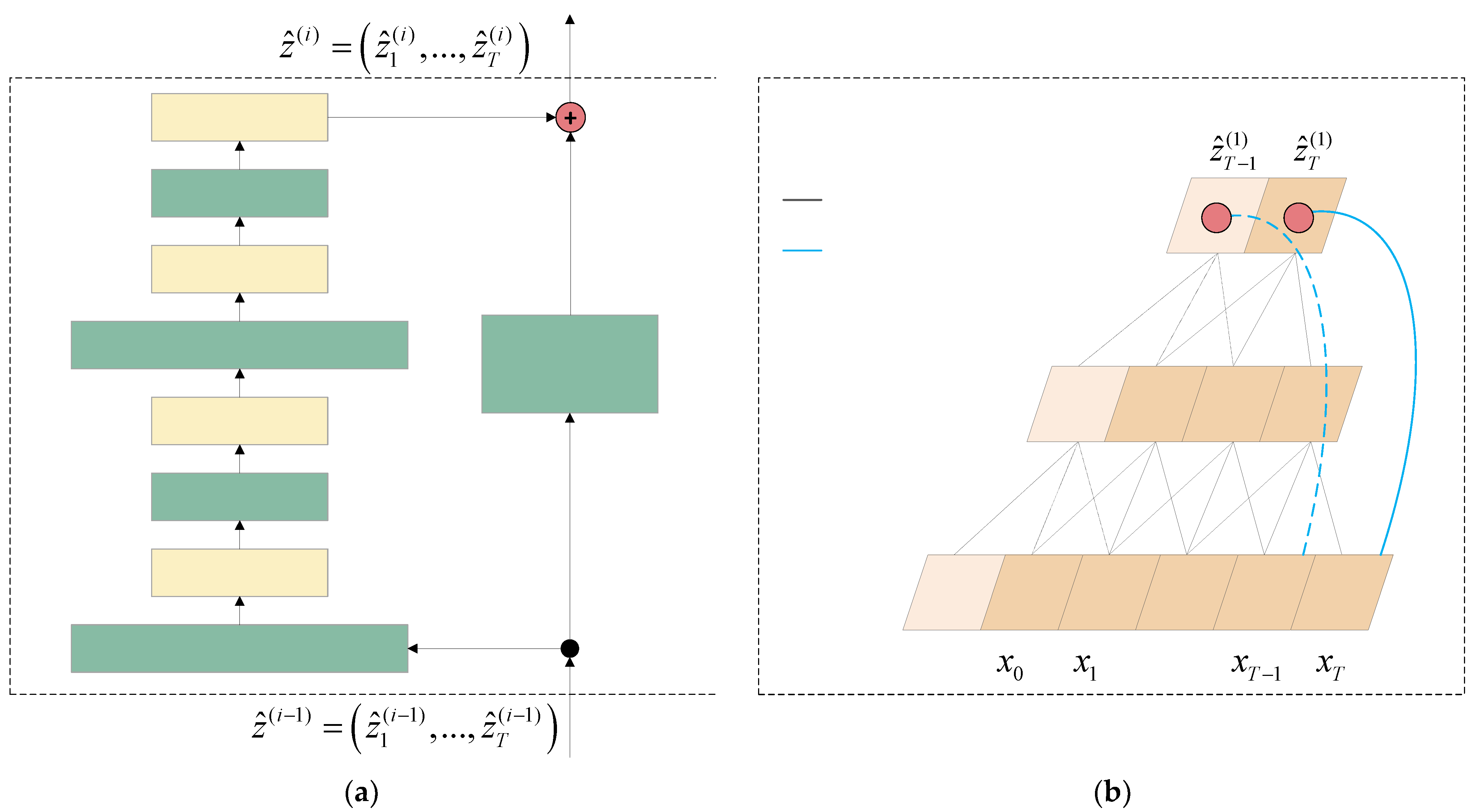

- Temporal Convolutional Network (TCN)

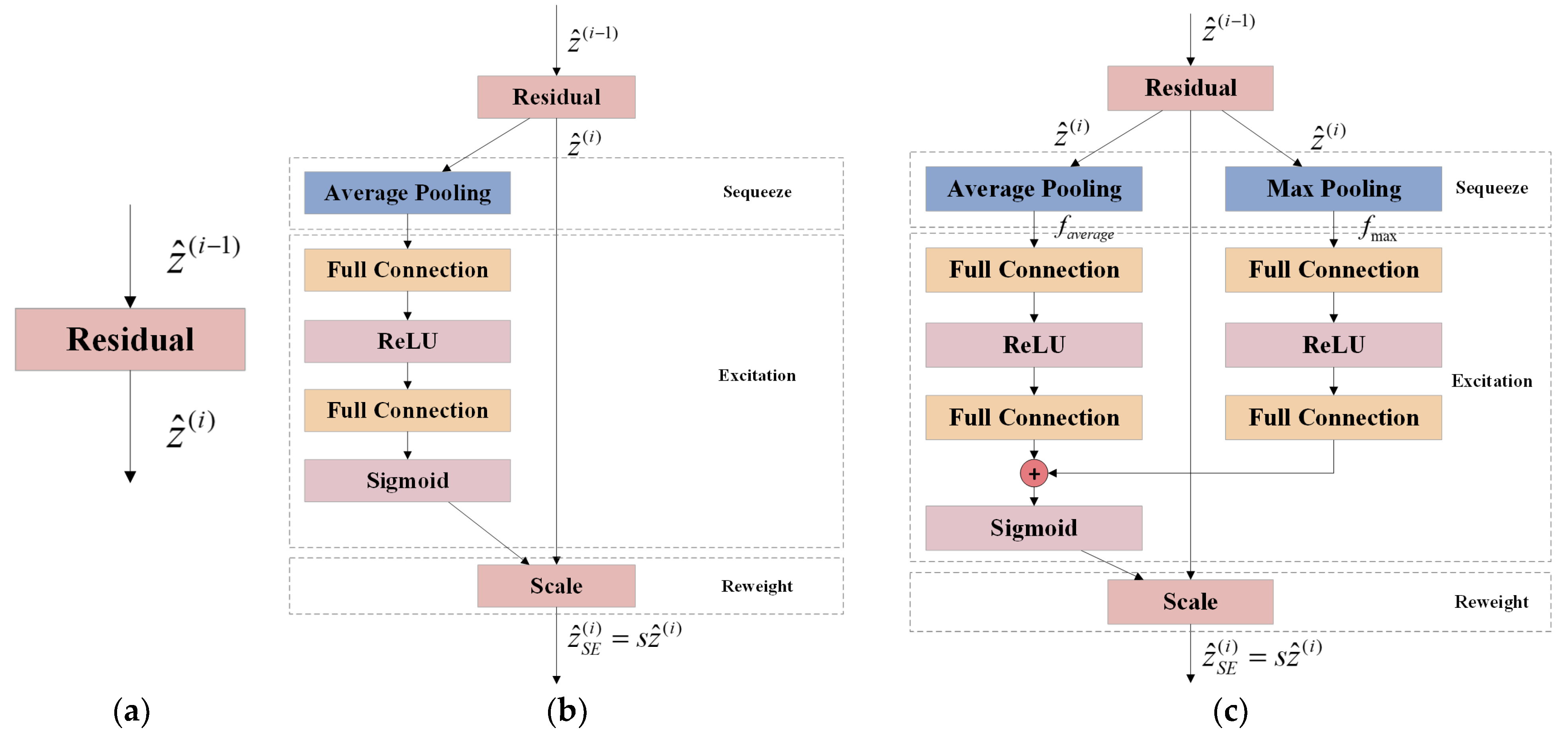

- SE Attention Mechanism

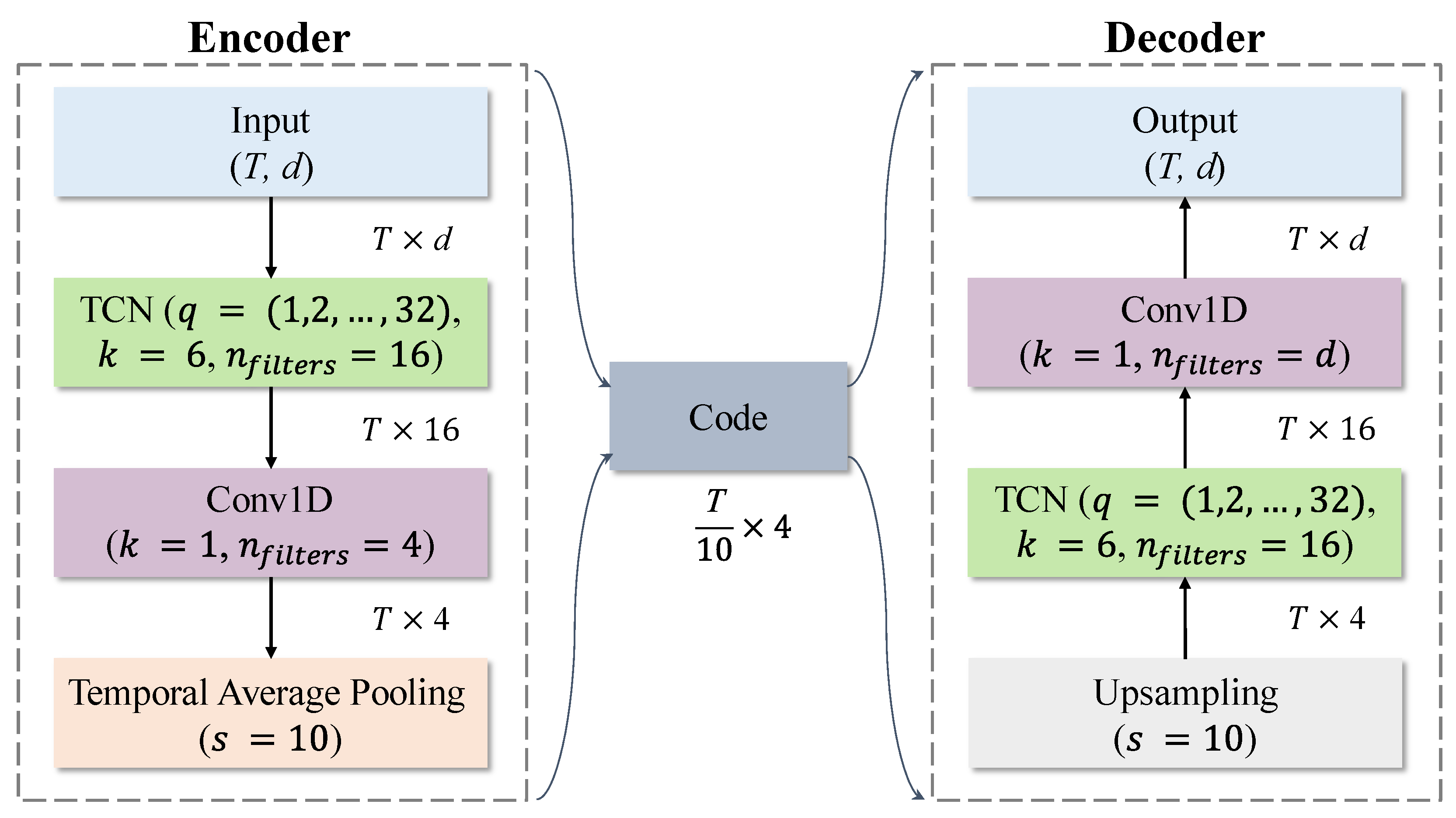

- Outlier Detection Model with TCN-AE
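To make the channel-reweighting idea of the SE attention mechanism concrete, here is a dependency-free sketch. The experiment settings state that both global max pooling and global average pooling are used in the SE residual block; how the two are combined is not stated, so the shared bottleneck MLP with summed outputs below is an assumption, as are the function names and the fixed toy weights.

```python
import math

def dense(vec, weights, bias):
    """Fully connected layer; weights holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weights, bias)]

def relu(vec):
    return [max(0.0, v) for v in vec]

def sigmoid(vec):
    return [1.0 / (1.0 + math.exp(-v)) for v in vec]

def se_attention(x, w1, b1, w2, b2):
    """Reweight the channels of x (channels x time steps) with SE-style gates."""
    # Squeeze: summarise each channel by its mean and its max over time.
    avg_pool = [sum(ch) / len(ch) for ch in x]
    max_pool = [max(ch) for ch in x]

    # Excitation: a shared bottleneck MLP scores both summaries (assumed).
    def mlp(v):
        return dense(relu(dense(v, w1, b1)), w2, b2)

    gates = sigmoid([a + m for a, m in zip(mlp(avg_pool), mlp(max_pool))])
    # Scale: multiply every channel by its gate, which lies in (0, 1).
    scaled = [[g * v for v in ch] for g, ch in zip(gates, x)]
    return scaled, gates

# Six feature channels over four time steps (toy values).
x = [[0.1 * (c + 1) * (t + 1) for t in range(4)] for c in range(6)]
# Hypothetical fixed weights for a 6 -> 2 -> 6 bottleneck.
w1 = [[0.1] * 6, [-0.1] * 6]
b1 = [0.0, 0.0]
w2 = [[0.2 * (c + 1), -0.1 * c] for c in range(6)]
b2 = [0.0] * 6
scaled, gates = se_attention(x, w1, b1, w2, b2)
print([round(g, 3) for g in gates])
```

In a trained model the weights are learned, so the gates express how much each of the six movement features contributes to the reconstruction.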

4. Experiment

4.1. Dataset

4.2. Evaluation Criteria
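The results tables report accuracy, precision, recall, and F1-score. Assuming these follow the usual confusion-matrix definitions, a minimal sketch looks as follows; the counts in the example are hypothetical, and the function names are chosen for illustration.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1

def f1_from(precision, recall):
    """F1 is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# Hypothetical counts: 90 true outliers found, 10 false alarms,
# 880 normal points kept, 20 outliers missed.
acc, prec, rec, f1 = classification_metrics(tp=90, fp=10, tn=880, fn=20)
print(round(acc, 4), round(prec, 4), round(rec, 4), round(f1, 4))

# Consistency check against a reported row: precision 87.47 %, recall 92.11 %.
print(round(f1_from(87.47, 92.11), 2))
```

Because outliers are a small fraction of all points, accuracy alone can stay high even when recall collapses, which is why F1-score is the more informative criterion in the threshold comparison.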

4.3. Experiment Settings

- IF [25]: IF (scikit-learn, v0.23.2) uses 1000 base estimators in the ensemble and a sliding window of size w = 50.

- VAE [26]: VAE is implemented using the PyTorch framework. Both the encoder and decoder use a single hidden layer with 400 dimensions, and the latent space has 200 dimensions.

- LSTM-AE [27]: LSTM-AE is implemented using the PyTorch framework. The encoder uses a 2-layer LSTM network with 128 units in the first layer and 64 units in the second layer. The decoder mirrors the encoder in reverse order.

- TCN-AE (baseline) [28]: The baseline TCN-AE is also implemented using the PyTorch framework. The encoder and decoder each use six dilated convolutional layers with sixteen filters and a kernel size of k = 6.

- CTOD: TCN-AE is implemented using the PyTorch framework. The encoder and decoder each use six dilated convolutional layers with sixteen filters and a kernel size of k = 6. Both global max pooling and global average pooling are added in the SE residual block.
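To give a feel for what six dilated layers with k = 6 can cover, the sketch below computes the receptive field of a stack of stride-1 dilated 1-D convolutions with one convolution per layer. The dilation rates 1, 2, 4, 8, 16, 32 are an assumption (the settings above do not list them), and `receptive_field` is a name chosen for this example.

```python
def receptive_field(kernel_size, dilations):
    """Receptive field of stacked stride-1 dilated 1-D convolutions.

    Each layer with dilation d widens the field by (kernel_size - 1) * d.
    """
    return 1 + (kernel_size - 1) * sum(dilations)

# Assumed dilation schedule: powers of two over six layers.
dilations = [2 ** i for i in range(6)]
rf_dilated = receptive_field(6, dilations)

# The same depth without dilation, for comparison.
rf_plain = receptive_field(6, [1] * 6)

print(rf_dilated, rf_plain)
```

Under these assumptions the dilated stack sees roughly ten times more time steps than an undilated stack of equal depth and parameter count. If each residual block contained two convolutions instead of one, the widening per layer would double.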

4.4. Experiment Results

4.4.1. Experiment 1: Location Offset Point Cleaning Effectiveness Evaluation

4.4.2. Experiment 2: Extracted Outlier Points Evaluation

- Overall Performance

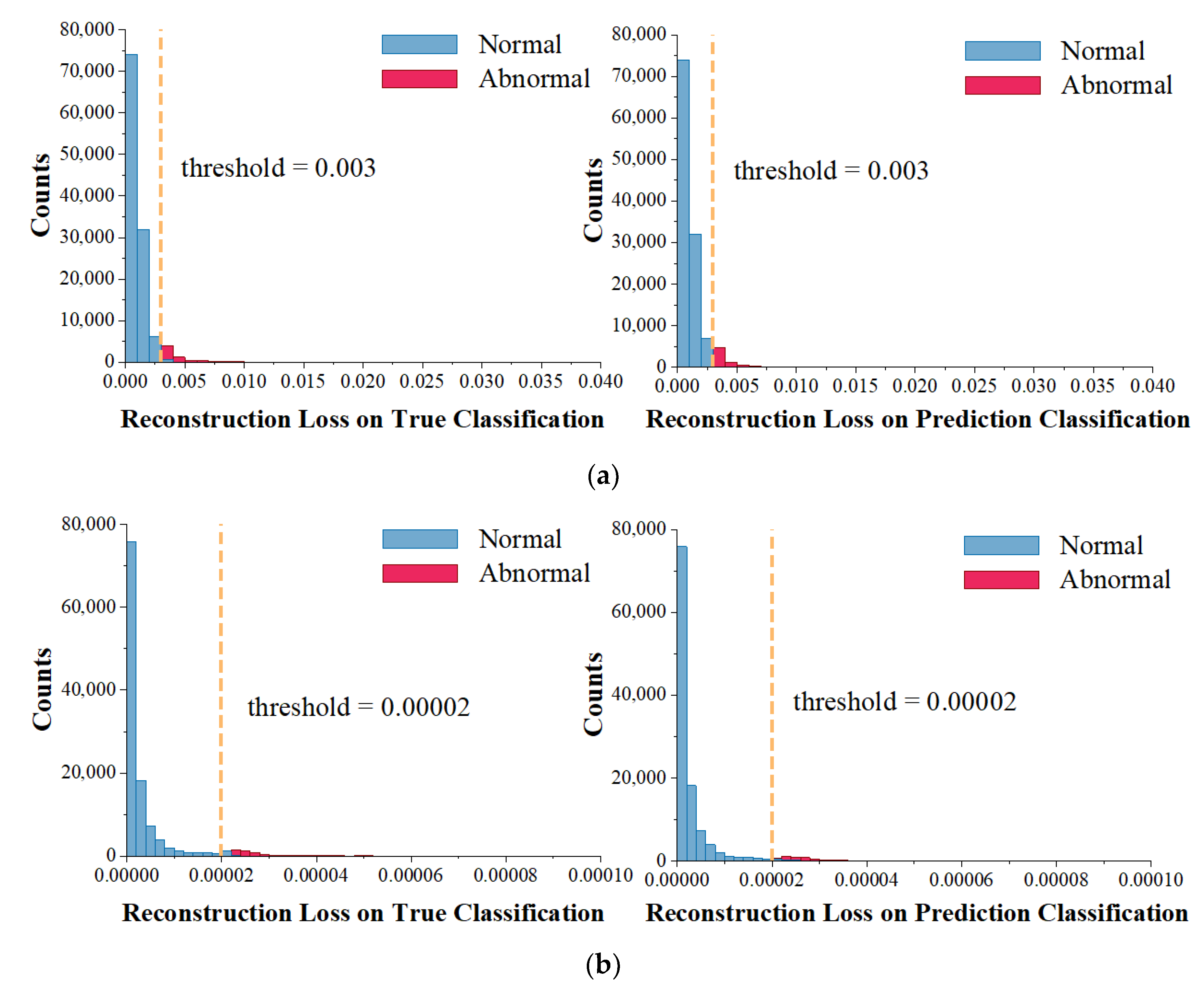

- Impact of Different Outlier Thresholds

- Impact of Reconstruction Loss Functions
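The threshold and loss-function comparisons both rest on the same decision rule: a point is flagged when its reconstruction error exceeds a threshold. A minimal sketch of that rule is given below, using the three loss functions and thresholds listed in the results table (MAE 0.003, RMSE 0.0045, MSE 0.00002). The toy feature vectors, the assumption that features are normalised, and the name `detect_mp_outliers` are illustrative only.

```python
import math

def mae(x, y):
    """Mean absolute error between two equal-length vectors."""
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)

def mse(x, y):
    """Mean squared error between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)

def rmse(x, y):
    """Root mean squared error between two equal-length vectors."""
    return math.sqrt(mse(x, y))

def detect_mp_outliers(inputs, reconstructions, threshold, loss=mae):
    """Flag points whose reconstruction error exceeds the threshold."""
    errors = [loss(x, r) for x, r in zip(inputs, reconstructions)]
    return [e > threshold for e in errors], errors

# Three points with six normalised features each (toy values).
inputs = [[0.50] * 6, [0.50] * 6, [0.50] * 6]
# Hypothetical autoencoder outputs; the second point is poorly reconstructed.
reconstructions = [[0.501] * 6, [0.51] * 6, [0.499] * 6]

flags_mae, _ = detect_mp_outliers(inputs, reconstructions, 0.003, mae)
flags_rmse, _ = detect_mp_outliers(inputs, reconstructions, 0.0045, rmse)
flags_mse, _ = detect_mp_outliers(inputs, reconstructions, 0.00002, mse)
print(flags_mae, flags_rmse, flags_mse)
```

Raising the threshold flags fewer points, which trades recall for precision; this is the pattern visible in the threshold table.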

5. Conclusions

- Receptive field: With the dilated convolutional structure, the receptive field can easily be scaled to the required size, allowing the model to capture long-term temporal dependencies more effectively.

- Skip connection: With skip connections, TCN-AE is less sensitive to the choice of dilation factors; different sets of dilation factors yield similar results.

- Hidden representations: By exploiting the output of the intermediate dilated convolutional layers, the input features can be accurately reconstructed at different timescales.

- Number of weights: TCN-AE requires fewer trainable weights than other architectures, such as recurrent neural networks.

- SE attention mechanism: With the SE attention mechanism, different contribution levels can be assigned to the constructed 6-dimensional input features, resulting in a more effective feature compression.

- In this paper, the threshold for outlier detection was obtained through repeated experimental testing, and it produces good outlier detection results. In future work, we intend to explore trajectory outlier detection algorithms that set sensitive parameters automatically.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Yuan, G.; Sun, P.; Zhao, J.; Li, D.; Wang, C. A Review of Moving Object Trajectory Clustering Algorithms. Artif. Intell. Rev. 2017, 47, 123–144.

2. Xiao, P.; Ang, M.; Jiawei, Z.; Lei, W. Approximate Similarity Measurements on Multi-Attributes Trajectories Data. IEEE Access 2019, 7, 10905–10915.

3. Wang, C.; Ma, L.; Li, R.; Durrani, T.S.; Zhang, H. Exploring Trajectory Prediction Through Machine Learning Methods. IEEE Access 2019, 7, 101441–101452.

4. Kim, J.; Mahmassani, H.S. Spatial and Temporal Characterization of Travel Patterns in a Traffic Network Using Vehicle Trajectories. Transp. Res. Procedia 2015, 9, 164–184.

5. Meng, F.; Yuan, G.; Lv, S.; Wang, Z.; Xia, S. An Overview on Trajectory Outlier Detection. Artif. Intell. Rev. 2019, 52, 2437–2456.

6. Guo, T.; Iwamura, K.; Koga, M. Towards High Accuracy Road Maps Generation from Massive GPS Traces Data. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–28 July 2007; pp. 667–670.

7. Cao, K.; Shi, L.; Wang, G.; Han, D.; Bai, M. Density-Based Local Outlier Detection on Uncertain Data. In Web-Age Information Management; Li, F., Li, G., Hwang, S., Yao, B., Zhang, Z., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2014; Volume 8485, pp. 67–71. ISBN 978-3-319-08009-3.

8. Liu, Z.; Pi, D.; Jiang, J. Density-Based Trajectory Outlier Detection Algorithm. J. Syst. Eng. Electron. 2013, 24, 335–340.

9. Wang, J.; Rui, X.; Song, X.; Tan, X.; Wang, C.; Raghavan, V. A Novel Approach for Generating Routable Road Maps from Vehicle GPS Traces. Int. J. Geogr. Inf. Sci. 2015, 29, 69–91.

10. Yang, X.; Tang, L. Crowdsourcing Big Trace Data Filtering: A Partition-and-filter model. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B2, 257–262.

11. Choi, M.-K.; Lee, H.-G.; Lee, S.-C. Weighted SVM with Classification Uncertainty for Small Training Samples. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 4438–4442.

12. Xu, S.; Zhu, J.; Shui, P.; Xia, X. Floating Small Target Detection in Sea Clutter by One-Class SVM Based on Three Detection Features. In Proceedings of the 2019 International Applied Computational Electromagnetics Society Symposium-China (ACES), Nanjing, China, 8–11 August 2019; pp. 1–2.

13. Degirmenci, A.; Karal, O. Robust Incremental Outlier Detection Approach Based on a New Metric in Data Streams. IEEE Access 2021, 9, 160347–160360.

14. Liu, B.; Yanshan, X.; Yu, P.S.; Zhifeng, H.; Longbing, C. An Efficient Approach for Outlier Detection with Imperfect Data Labels. IEEE Trans. Knowl. Data Eng. 2014, 26, 1602–1616.

15. Bhatti, M.A.; Riaz, R.; Rizvi, S.S.; Shokat, S.; Riaz, F.; Kwon, S.J. Outlier Detection in Indoor Localization and Internet of Things (IoT) Using Machine Learning. J. Commun. Netw. 2020, 22, 236–243.

16. Abdallah, M.; An Le Khac, N.; Jahromi, H.; Delia Jurcut, A. A Hybrid CNN-LSTM Based Approach for Anomaly Detection Systems in SDNs. In Proceedings of the 16th International Conference on Availability, Reliability and Security, Vienna, Austria, 17 August 2021; pp. 1–7.

17. Canizo, M.; Triguero, I.; Conde, A.; Onieva, E. Multi-Head CNN–RNN for Multi-Time Series Anomaly Detection: An Industrial Case Study. Neurocomputing 2019, 363, 246–260.

18. Yang, D.; Hwang, M. Unsupervised and Ensemble-Based Anomaly Detection Method for Network Security. In Proceedings of the 2022 14th International Conference on Knowledge and Smart Technology (KST), Chon buri, Thailand, 26 January 2022; pp. 75–79.

19. Yao, R.; Liu, C.; Zhang, L.; Peng, P. Unsupervised Anomaly Detection Using Variational Auto-Encoder Based Feature Extraction. In Proceedings of the 2019 IEEE International Conference on Prognostics and Health Management (ICPHM), San Francisco, CA, USA, 17–20 June 2019; pp. 1–7.

20. Provotar, O.I.; Linder, Y.M.; Veres, M.M. Unsupervised Anomaly Detection in Time Series Using LSTM-Based Autoencoders. In Proceedings of the 2019 IEEE International Conference on Advanced Trends in Information Theory (ATIT), Kyiv, Ukraine, 18–20 December 2019; pp. 513–517.

21. Deng, M.; Liu, Q.; Cheng, T.; Shi, Y. An Adaptive Spatial Clustering Algorithm Based on Delaunay Triangulation. Comput. Environ. Urban Syst. 2011, 35, 320–332.

22. Zhaorong, H.A.; Tinglei, H.U.; Wenjuan, R.E.; Guangluan, X. Trajectory Outlier Detection Algorithm Based on Bi-LSTM Model. J. Radars 2019, 8, 36.

23. Bai, S.; Kolter, J.Z.; Koltun, V. An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling. arXiv 2018, arXiv:1803.01271.

24. Naser, M.Z.; Alavi, A.H. Error Metrics and Performance Fitness Indicators for Artificial Intelligence and Machine Learning in Engineering and Sciences. Archit. Struct. Constr. 2021, 1–19.

25. Liu, F.T.; Ting, K.M.; Zhou, Z.-H. Isolation Forest. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422.

26. Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2022, arXiv:1312.6114.

27. Jia, Y.; Zhou, C.; Motani, M. Spatio-Temporal Autoencoder for Feature Learning in Patient Data with Missing Observations. In Proceedings of the 2017 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Kansas City, MO, USA, 13–16 November 2017; pp. 886–890.

28. Thill, M.; Konen, W.; Wang, H.; Bäck, T. Temporal Convolutional Autoencoder for Unsupervised Anomaly Detection in Time Series. Appl. Soft Comput. 2021, 112, 107751.

29. Wilcoxon, F. Individual Comparisons by Ranking Methods. In Breakthroughs in Statistics; Kotz, S., Johnson, N.L., Eds.; Springer Series in Statistics: New York, NY, USA, 1992; pp. 196–202. ISBN 978-0-387-94039-7.

| Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| 96.74 | 87.47 | 92.11 | 89.73 |

| Algorithm | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | p |
|---|---|---|---|---|---|
| IF | 97.05 | 79.61 | 67.22 | 72.89 | 9.26 × 10⁻⁶ |
| VAE | 98.26 | 87.24 | 82.70 | 84.91 | 9.26 × 10⁻⁶ |
| LSTM-AE | 98.56 | 86.13 | 90.08 | 88.06 | 9.26 × 10⁻⁶ |
| TCN-AE (baseline) | 98.31 | 86.12 | 85.03 | 85.57 | 9.26 × 10⁻⁶ |
| CTOD | 98.79 | 89.40 | 90.31 | 89.85 | - |

| No. | Threshold | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| 1 | 0.001 | 67.59 | 15.40 | 99.86 | 26.69 |
| 2 | 0.002 | 94.23 | 50.60 | 99.86 | 67.17 |
| 3 | 0.003 | 98.79 | 89.40 | 90.31 | 89.85 |
| 4 | 0.0032 | 98.27 | 92.89 | 76.66 | 84.00 |
| 5 | 0.0034 | 97.59 | 95.57 | 62.14 | 75.31 |
| 6 | 0.0036 | 96.94 | 97.21 | 49.67 | 65.75 |
| 7 | 0.0038 | 96.45 | 97.99 | 40.67 | 57.48 |
| 8 | 0.004 | 96.07 | 98.17 | 34.05 | 50.56 |
| 9 | 0.005 | 95.13 | 98.52 | 17.89 | 30.28 |
| 10 | 0.006 | 94.71 | 97.81 | 10.70 | 19.28 |
| 11 | 0.007 | 94.46 | 96.59 | 6.39 | 11.99 |
| 12 | 0.008 | 94.33 | 95.25 | 4.25 | 8.13 |
| 13 | 0.009 | 94.25 | 95.35 | 2.89 | 5.61 |
| 14 | 0.01 | 94.19 | 95.31 | 1.72 | 3.38 |

| No. | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| 1 | 98.56 | 83.98 | 93.63 | 88.54 |
| 2 | 98.68 | 88.51 | 88.76 | 88.63 |
| 3 | 98.52 | 83.68 | 90.79 | 87.09 |
| 4 | 98.43 | 82.35 | 92.79 | 87.26 |
| 5 | 98.57 | 84.29 | 93.46 | 88.64 |
| 6 | 98.78 | 89.49 | 90.11 | 89.79 |
| 7 | 98.13 | 78.20 | 95.41 | 85.95 |
| 8 | 98.42 | 82.54 | 93.46 | 87.66 |
| 9 | 98.67 | 88.21 | 89.88 | 89.04 |
| 10 | 98.73 | 88.91 | 90.02 | 89.46 |
| Average | 98.55 | 85.02 | 91.83 | 88.21 |

| Metric | Threshold | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| RMSE | 0.00450 | 98.52 | 88.38 | 86.20 | 87.28 |
| MAE | 0.00300 | 98.79 | 89.40 | 90.31 | 89.85 |
| MSE | 0.00002 | 98.54 | 88.36 | 86.67 | 87.51 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zheng, X.; Yu, D.; Xie, C.; Wang, Z. Outlier Detection of Crowdsourcing Trajectory Data Based on Spatial and Temporal Characterization. Mathematics 2023, 11, 620. https://doi.org/10.3390/math11030620
