1. Introduction

Due to the dominance of digital systems, almost all data are represented in binary formats. As the number of bits in a binary representation is limited, special attention should be paid to ensuring the accuracy required by a specific application, taking into account the dynamic range and statistical characteristics of the data. In addition, due to the increasing amount of data being generated, it is necessary to find efficient binary representations that provide sufficient accuracy with as few bits as possible. All of this underlines the importance of studying binary formats.

The 32-bit floating-point (FP32) binary format defined by the IEEE 754 standard [1] is commonly used in practice, especially for data representation in computers. Thanks to its large number of bits, FP32 provides a highly accurate binary representation over a very wide range of input-data variances. Nevertheless, floating-point formats (including FP32) introduce high complexity, requiring powerful and expensive hardware for data processing, as well as high energy consumption [2]. Furthermore, FP32 requires a large amount of memory for data storage. It is especially impractical to implement FP32 on widespread sensor nodes, edge devices, and other devices with limited hardware resources (i.e., with limited processing power, memory capacity, and available energy). In fact, many embedded devices do not support floating-point formats at all [3].

FP32 is also the standard format for representing the parameters of deep neural networks (DNNs) [4], which are currently among the most powerful techniques for solving problems such as object detection [5], autonomous driving [6], natural language processing [7], computer vision [8], and speech recognition [9]. A particularly active research direction is the implementation of DNNs on sensor nodes (yielding smart sensors) and edge devices, in order to increase their availability and applicability [10]. However, the fact that DNN parameters are represented in FP32 significantly limits the implementation of DNNs on sensor nodes and edge devices [3,11].

Given the common practice of using digital words whose length is an integer multiple of 8 bits, the first candidate replacement for 32-bit formats is a 24-bit format, which reduces complexity without significantly degrading performance. However, the complexity reduction achieved by using the FP24 (24-bit floating-point) format [12] instead of FP32 is often insufficient, since FP24 still belongs to the class of floating-point formats.

This paper proposes the 24-bit fixed-point format as a better replacement for FP32 than FP24. This reduces complexity in two ways: by decreasing the number of bits, and by using a fixed-point format, which has significantly lower computational complexity, consumes less power, requires less chip area, and provides faster calculations than floating-point formats [11,13,14,15,16,17,18,19,20,21,22,23]. The fixed-point format is therefore much more suitable for implementation on sensor nodes and devices with limited hardware resources (a typical microcontroller has 128 KB of RAM and 1 MB of flash, while a mobile phone can have 4 GB of RAM and 64 GB of storage [24]). Accordingly, the main goal of the paper is to optimize the 24-bit fixed-point format and to examine its performance. To achieve this goal, we exploit the analogy between fixed-point binary representation and uniform quantization established in [25], which allows us to express the accuracy of fixed-point formats using the objective performance measures of the uniform quantizer (distortion and SQNR, the signal-to-quantization-noise ratio).

To find an optimal binary representation of a dataset, the probability density function (PDF) of the data should be taken into account. In this paper, the Laplacian PDF is considered, since it can be used for statistical modeling of a number of data types [26,27].

The approach applied in the paper, based on the above-mentioned analogy, is to first optimize the 24-bit uniform quantizer and then to optimize the 24-bit fixed-point format so that it achieves the same performance as the 24-bit optimal uniform quantizer. Therefore, the design and performance calculation of the 24-bit optimal uniform quantizer are considered first, and two new closed-form approximate formulas for a very accurate calculation of its key parameter (the maximal amplitude) are derived as a significant result of the paper. It is worth noting that the derived approximate formulas are valid for any bit-rate R and are thus of general importance. Then, the 24-bit fixed-point format is optimized by exploring the influence of the parameter n (the number of bits used to represent the integer part of the data) on performance. The optimal value n = 5 is obtained for the unit-variance Laplacian PDF. An important conclusion of the analysis is that a wrong choice of n can drastically reduce the accuracy of the fixed-point representation.

Even with the optimal value n = 5, the 24-bit fixed-point format achieves a lower SQNR than the optimal uniform quantizer, due to a mismatch in the maximal amplitude. Therefore, the paper proposes an adaptation procedure (called Adaptation_1) that enables the 24-bit fixed-point format to achieve the maximal possible SQNR of 122.194 dB for the unit variance, just like the optimal uniform quantizer.

However, the SQNR of the 24-bit fixed-point format changes as the variance of the data changes. To solve this problem, the paper proposes an additional adaptation procedure (called Adaptation_2) that converts the variance of the input data to 1. The proposed joint application of Adaptation_1 and Adaptation_2 allows the 24-bit fixed-point quantizer to achieve the maximal SQNR for any variance of the input data, which is a notable result of the paper.

Finally, a comparison with the FP24 format is performed, where the performance of FP24 is calculated using the analogy between floating-point representation and piecewise uniform quantization established in [28]. It is shown that the proposed 24-bit fixed-point format with double adaptation achieves an SQNR 18.425 dB higher than that of the FP24 format. An important conclusion based on these results is that the proposed 24-bit fixed-point format with double adaptation is a much better solution than the FP24 format, for two reasons: it achieves a significantly higher SQNR while having significantly lower implementation complexity.

2. Design of the Optimal Uniform Quantizer

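An R-bit uniform quantizer with $N = 2^R$ levels and with the support region $[-x_{\max}, x_{\max}]$ is defined by its decision thresholds $x_i = -x_{\max} + i\Delta$ ($i = 0, 1, \ldots, N$) and representation levels $y_i = -x_{\max} + (i - 1/2)\Delta$ ($i = 1, 2, \ldots, N$), where $x_{\max}$ represents the maximal amplitude and $\Delta = 2x_{\max}/N$ represents the quantization step-size. The uniform quantizer maps each quantization interval $[x_{i-1}, x_i)$ into the representation level $y_i$ placed in the middle of that interval [25].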

In order to design an optimal quantizer, the PDF of the input data has to be taken into account. This paper considers the Laplacian PDF defined as [27]:

$$ p(x) = \frac{1}{\sqrt{2}\,\sigma}\exp\left(-\frac{\sqrt{2}\,|x|}{\sigma}\right), \tag{1} $$

where $\sigma^2$ represents the variance of the input data.

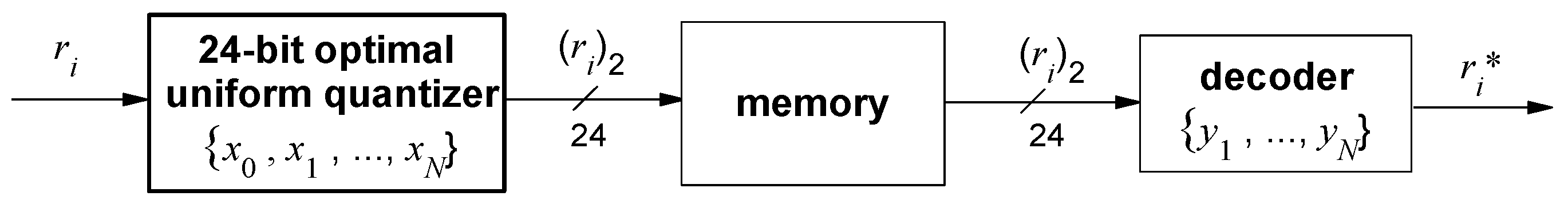

The quantization process is shown in Figure 1. Let the input dataset consist of M elements $x_j$ ($j = 1, \ldots, M$). The variance of the (zero-mean) input data is calculated as [26]:

$$ \sigma^2 = \frac{1}{M}\sum_{j=1}^{M} x_j^2. \tag{2} $$

During quantization, each input element $x_j$ is compared with the decision thresholds $x_i$ of the quantizer to determine the quantization interval to which it belongs, and an R-bit code-word (in our case, R = 24) corresponding to that interval is generated. The code-word is stored in memory. To reconstruct the data from the binary form, decoding has to be performed. Let $\hat{x}_j$ denote the reconstructed value of the input element $x_j$. Decoding assigns to $\hat{x}_j$ the one of the N representation levels $y_i$ of the quantizer that is nearest to the value of the input element $x_j$. An irreversible error occurs during quantization, since the reconstructed values $\hat{x}_j$ differ from the original values $x_j$ ($j = 1, \ldots, M$). The distortion D represents the mean-square quantization error and, based on Figure 1, it can be defined as:

$$ D = \frac{1}{M}\sum_{j=1}^{M}\left(x_j - \hat{x}_j\right)^2. \tag{3} $$

The distortion D can also be defined in another way, based on the statistical approach, as [26]:

$$ D = \sum_{i=1}^{N}\int_{x_{i-1}}^{x_i}(x - y_i)^2 p(x)\,dx + 2\int_{x_{\max}}^{\infty}(x - y_N)^2 p(x)\,dx, \tag{4} $$

where p(x) denotes the PDF of the input data.
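The definitions above can be illustrated with a short Python sketch (our own illustration, not the authors' code). It builds the decision thresholds and representation levels of an R-bit uniform quantizer, maps a value to the representation level of its interval (sending overload values to $y_1$ or $y_N$), and evaluates the empirical variance (2) and distortion (3). The function names are hypothetical, and zero-mean data are assumed, as in (2).

```python
import math


def uniform_quantizer(x_max: float, R: int) -> tuple[list[float], list[float]]:
    """Decision thresholds x_0..x_N and representation levels y_1..y_N, N = 2^R."""
    N = 2 ** R
    delta = 2 * x_max / N                                      # step-size
    thresholds = [-x_max + i * delta for i in range(N + 1)]
    levels = [-x_max + (i - 0.5) * delta for i in range(1, N + 1)]
    return thresholds, levels


def quantize(x: float, x_max: float, R: int) -> float:
    """Level y_i of the interval [x_{i-1}, x_i) containing x; overload goes to y_1 or y_N."""
    N = 2 ** R
    delta = 2 * x_max / N
    i = math.floor((x + x_max) / delta) + 1
    i = min(max(i, 1), N)                                      # clip to the outermost cells
    return -x_max + (i - 0.5) * delta


def variance(data: list[float]) -> float:
    """Eq. (2), assuming zero-mean data."""
    return sum(v * v for v in data) / len(data)


def distortion(data: list[float], recon: list[float]) -> float:
    """Eq. (3): mean-square quantization error."""
    return sum((a - b) ** 2 for a, b in zip(data, recon)) / len(data)


# Usage: a 3-bit quantizer with x_max = 2 applied to a few values (two of them overload)
data = [0.1, -0.7, 2.5, -3.2]
recon = [quantize(v, x_max=2.0, R=3) for v in data]
print(recon, distortion(data, recon))
```

Building the full threshold list is only practical for small R; `quantize` computes the interval index directly and therefore also works for R = 24.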

In the asymptotic analysis, which assumes that the number of quantization levels N is large enough, Formulas (3) and (4) for the distortion D are equivalent. For the further analysis in this section, we use Formula (4), which for the uniform quantizer becomes [25,29]:

$$ D = \frac{\Delta^2}{12} + 2\int_{x_{\max}}^{\infty}(x - x_{\max})^2 p(x)\,dx. \tag{5} $$

The first term in (5) represents the granular distortion that occurs during quantization of data from the support region $[-x_{\max}, x_{\max}]$, while the second term represents the overload distortion that occurs during quantization of data outside the support region. For p(x) defined by (1) and for $\Delta = 2x_{\max}/2^R$, expression (5) becomes:

$$ D = \frac{x_{\max}^2}{3\cdot 2^{2R}} + \sigma^2\exp\left(-\frac{\sqrt{2}\,x_{\max}}{\sigma}\right). \tag{6} $$

The quality of quantization is usually measured by the SQNR, which is defined as [16]:

$$ \mathrm{SQNR} = 10\log_{10}\frac{\sigma^2}{D}\ \text{[dB]}. \tag{7} $$

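The standard approach to designing quantizers is to minimize the distortion D, or equivalently to maximize the SQNR, for some reference variance $\sigma_{\text{ref}}^2$. A common practice in the literature [26], which is also applied in this paper, is to take the unit variance as the reference variance ($\sigma_{\text{ref}}^2 = 1$). For $\sigma^2 = 1$, expressions (6) and (7) for the distortion and SQNR of the uniform quantizer become, respectively:

$$ D = \frac{x_{\max}^2}{3\cdot 2^{2R}} + \exp\left(-\sqrt{2}\,x_{\max}\right), \tag{8} $$

$$ \mathrm{SQNR} = -10\log_{10} D. \tag{9} $$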

The maximal amplitude $x_{\max}$ can be considered the key parameter of the uniform quantizer, since all other parameters ($\Delta$, $x_i$, $y_i$) can be calculated from $x_{\max}$. Therefore, the key task in designing the uniform quantizer is to determine the optimal value of $x_{\max}$ by minimizing the distortion D.
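As a numerical sanity check of the step from (5) to (6) (a sketch with our own choice of integration rule and truncation point, not part of the original derivation), the overload term of (5) for the Laplacian PDF (1) can be integrated with the composite Simpson rule and compared with the closed form $\sigma^2\exp(-\sqrt{2}x_{\max}/\sigma)$. The code also evaluates (6) and (7). Truncating the integral at $x_{\max} + 60\sigma$ and all function names are assumptions made for illustration.

```python
import math

SQRT2 = math.sqrt(2)


def laplace_pdf(x: float, sigma: float) -> float:
    """Laplacian PDF, Eq. (1)."""
    return math.exp(-SQRT2 * abs(x) / sigma) / (SQRT2 * sigma)


def distortion_eq6(x_max: float, sigma: float, R: int) -> float:
    """Eq. (6): granular term x_max^2 / (3 * 2^(2R)) plus the closed-form overload term."""
    return x_max ** 2 / (3 * 2 ** (2 * R)) + sigma ** 2 * math.exp(-SQRT2 * x_max / sigma)


def sqnr_eq7(x_max: float, sigma: float, R: int) -> float:
    """Eq. (7) in dB, using the distortion (6)."""
    return 10 * math.log10(sigma ** 2 / distortion_eq6(x_max, sigma, R))


def overload_simpson(x_max: float, sigma: float, span: float = 60.0, steps: int = 20_000) -> float:
    """Second term of Eq. (5), 2 * integral of (x - x_max)^2 p(x) over [x_max, inf),
    truncated at x_max + span * sigma; `steps` must be even for Simpson's rule."""
    a, b = x_max, x_max + span * sigma
    h = (b - a) / steps

    def f(x: float) -> float:
        return (x - x_max) ** 2 * laplace_pdf(x, sigma)

    odd = sum(f(a + (2 * k - 1) * h) for k in range(1, steps // 2 + 1))
    even = sum(f(a + 2 * k * h) for k in range(1, steps // 2))
    return 2 * (h / 3) * (f(a) + 4 * odd + 2 * even + f(b))


# Numerical integral vs. closed form of the overload term
for x_max, sigma in [(3.0, 1.0), (2.0, 2.0)]:
    print(overload_simpson(x_max, sigma), sigma ** 2 * math.exp(-SQRT2 * x_max / sigma))
```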

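Lemma 1. The distortion D of the uniform quantizer, given by (8), has a unique global minimum with respect to $x_{\max} > 0$.

Proof of Lemma 1. For D defined with (8), we have that:

$$ \frac{\partial D}{\partial x_{\max}} = \frac{2x_{\max}}{3\cdot 2^{2R}} - \sqrt{2}\exp\left(-\sqrt{2}\,x_{\max}\right), \tag{10} $$

$$ \frac{\partial^2 D}{\partial x_{\max}^2} = \frac{2}{3\cdot 2^{2R}} + 2\exp\left(-\sqrt{2}\,x_{\max}\right). \tag{11} $$

From the condition $\partial D/\partial x_{\max} = 0$ we obtain the equation $\frac{2x_{\max}}{3\cdot 2^{2R}} = \sqrt{2}\exp(-\sqrt{2}\,x_{\max})$. As the increasing linear function on the left-hand side and the decreasing exponential function on the right-hand side have a unique intersection point for $x_{\max} > 0$, the distortion D must have a unique extremum. It follows from (11) that $\partial^2 D/\partial x_{\max}^2 > 0$, meaning that this unique extremum is in fact a unique minimum of D, i.e., the global minimum of D. This proves Lemma 1. □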

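Let $x_{\max}^{\text{opt}}$ denote the value of $x_{\max}$ at which D achieves its global minimum. For $x_{\max} = x_{\max}^{\text{opt}}$, the uniform quantizer achieves the maximal SQNR, which is expressed, using (9), as:

$$ \mathrm{SQNR}_{\max} = -10\log_{10}\left(\frac{\left(x_{\max}^{\text{opt}}\right)^2}{3\cdot 2^{2R}} + \exp\left(-\sqrt{2}\,x_{\max}^{\text{opt}}\right)\right). \tag{12} $$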

The value of $x_{\max}^{\text{opt}}$ can be calculated numerically in the Mathematica software package. For the 24-bit uniform quantizer, we obtain $x_{\max}^{\text{opt}} = 21.876$, which achieves $\mathrm{SQNR}_{\max} = 122.194$ dB.
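The text obtains $x_{\max}^{\text{opt}}$ in Mathematica. As a tool-agnostic alternative (our own choice, not the authors' procedure), the unique positive root of the derivative (10), guaranteed by Lemma 1, can be found by bisection, after which (12) gives $\mathrm{SQNR}_{\max}$. The bracket [0, 100] is a hypothetical default that is adequate for R = 24 and other practical bit-rates.

```python
import math

SQRT2 = math.sqrt(2)


def d_prime(x: float, R: int) -> float:
    """Eq. (10): derivative of the unit-variance distortion (8) with respect to x_max."""
    return 2 * x / (3 * 2 ** (2 * R)) - SQRT2 * math.exp(-SQRT2 * x)


def x_max_opt(R: int, lo: float = 0.0, hi: float = 100.0, iters: int = 100) -> float:
    """Bisection for the unique positive root of Eq. (10) (Lemma 1)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if d_prime(mid, R) < 0:      # D still decreasing: the optimum lies to the right
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def sqnr_max(R: int) -> float:
    """Eq. (12): SQNR in dB at the optimal maximal amplitude (unit variance)."""
    x = x_max_opt(R)
    return -10 * math.log10(x ** 2 / (3 * 2 ** (2 * R)) + math.exp(-SQRT2 * x))


print(round(x_max_opt(24), 3), round(sqnr_max(24), 3))  # 21.876 122.194
```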

Nevertheless, to facilitate the design of the uniform quantizer, it is desirable to derive an approximate closed-form formula for calculating $x_{\max}^{\text{opt}}$. The following approximate formula for $x_{\max}^{\text{opt}}$ was proposed in [29]:

$$ x_{\max}^{[29]} = \sqrt{2}\,R\ln 2. \tag{13} $$

However, this formula is not accurate enough: for R = 24 bits it gives $x_{\max}^{[29]} = 23.526$, an error of 7.542% relative to the optimal value $x_{\max}^{\text{opt}}$, which can be too high for a number of applications.

As an important contribution of the paper, we derive below two closed-form approximate formulas for a very accurate calculation of $x_{\max}^{\text{opt}}$. For the optimal value of $x_{\max}$, the distortion D should be minimal, meaning that its first derivative, defined by (10), should be equal to 0. Equating expression (10) with 0, it follows that $x_{\max} = \frac{3\cdot 2^{2R}}{\sqrt{2}}\exp(-\sqrt{2}\,x_{\max})$. Taking the logarithm of this expression, we obtain:

$$ x_{\max} = \frac{1}{\sqrt{2}}\ln\left(\frac{3\cdot 2^{2R}}{\sqrt{2}\,x_{\max}}\right). \tag{14} $$

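Based on Equation (14), we can define the iterative process

$$ x_{\max}^{(i+1)} = \frac{1}{\sqrt{2}}\ln\left(\frac{3\cdot 2^{2R}}{\sqrt{2}\,x_{\max}^{(i)}}\right), \quad i = 0, 1, 2, \ldots \tag{15} $$

for the calculation of $x_{\max}^{\text{opt}}$. If we take the value defined by (13) as the starting point $x_{\max}^{(0)}$ of the iterative process (15), i.e., $x_{\max}^{(0)} = \sqrt{2}\,R\ln 2$, we obtain a value $x_{\max}^{(1)}$ that is very close to $x_{\max}^{\text{opt}}$ after just the first iteration. Therefore, we can take the expression for the first iteration $x_{\max}^{(1)}$ as a closed-form approximate formula for $x_{\max}^{\text{opt}}$:

$$ x_{\max}^{(1)} = \frac{1}{\sqrt{2}}\ln\left(\frac{3\cdot 2^{2R}}{2R\ln 2}\right). \tag{16} $$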

For R = 24 bits, Formula (16) gives $x_{\max}^{(1)} = 21.825$, an error of only 0.235% relative to the optimal value $x_{\max}^{\text{opt}}$. Thus, Formula (16) is much more accurate than Formula (13), providing satisfactory accuracy for many applications. However, if an even more accurate calculation of $x_{\max}^{\text{opt}}$ is required, the second iteration $x_{\max}^{(2)}$ of the iterative process (15), obtained by substituting (16) into (15), can be used as a closed-form approximate formula for a very accurate calculation of $x_{\max}^{\text{opt}}$:

$$ x_{\max}^{(2)} = \frac{1}{\sqrt{2}}\ln\left(\frac{3\cdot 2^{2R}}{\ln\left(\dfrac{3\cdot 2^{2R}}{2R\ln 2}\right)}\right). \tag{17} $$

For R = 24 bits, Formula (17) gives $x_{\max}^{(2)} = 21.878$, which differs from $x_{\max}^{\text{opt}}$ by only 0.009%.
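The three approximations can be compared directly in code. This is a minimal sketch (function names are hypothetical) that evaluates (13), (16), and (17), checks that (16) and (17) are indeed the first and second steps of the iteration (15), and uses (15) iterated to convergence as the reference value of $x_{\max}^{\text{opt}}$.

```python
import math

SQRT2 = math.sqrt(2)


def x_29(R: int) -> float:
    """Eq. (13): approximation from [29]."""
    return SQRT2 * R * math.log(2)


def step15(x: float, R: int) -> float:
    """One step of the iterative process (15)."""
    return math.log(3 * 2 ** (2 * R) / (SQRT2 * x)) / SQRT2


def x_first(R: int) -> float:
    """Eq. (16): first iteration of (15) started from (13)."""
    return math.log(3 * 2 ** (2 * R) / (2 * R * math.log(2))) / SQRT2


def x_second(R: int) -> float:
    """Eq. (17): second iteration of (15)."""
    c = 3 * 2 ** (2 * R)
    return math.log(c / math.log(c / (2 * R * math.log(2)))) / SQRT2


def x_fixed_point(R: int, iters: int = 50) -> float:
    """Iterate (15) to convergence; used here as the reference x_max^opt."""
    x = x_29(R)
    for _ in range(iters):
        x = step15(x, R)
    return x


R = 24
ref = x_fixed_point(R)
for name, value in [("(13)", x_29(R)), ("(16)", x_first(R)), ("(17)", x_second(R))]:
    print(name, round(value, 3), f"{100 * abs(value - ref) / ref:.3f} %")
```

The iteration converges quickly because the derivative of the right-hand side of (15) has magnitude $1/(\sqrt{2}x_{\max})$, which is about 0.03 near the optimum for R = 24.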

3. 24-Bit Fixed-Point Quantizer

A real number x can be represented in the R-bit fixed-point representation as [25]:

$$ x \;\leftrightarrow\; s\,a_{n-1}a_{n-2}\ldots a_0\,.\,a_{-1}a_{-2}\ldots a_{-m}, \tag{18} $$

where one bit s is used to encode the sign of x, n bits ($a_i$, $i = 0, \ldots, n-1$) encode the integer part of x, and m bits ($a_i$, $i = -m, \ldots, -1$) encode the fractional part of x. Hence, R = n + m + 1. Each bit $a_i$ ($i = -m, \ldots, n-1$) in the fixed-point representation has the weight $2^i$, which allows x to be easily calculated from the fixed-point binary form as $x = (-1)^s\sum_{i=-m}^{n-1} a_i 2^i$. The number 0 is represented with all bits equal to 0. The largest positive number that can be represented in the fixed-point format is $\sum_{i=-m}^{n-1} 2^i = 2^n - 2^{-m}$. Due to the symmetry with respect to 0, the negative number with the largest magnitude that can be represented is $-(2^n - 2^{-m})$.

Let us consider the first few positive numbers represented in the R-bit fixed-point format. The smallest positive number that can be represented is $2^{-m}$, the next number is $2\cdot 2^{-m}$, the next is $3\cdot 2^{-m}$, and so on. All these numbers are equidistant, with mutual distance $2^{-m}$. Hence, the R-bit fixed-point representation can represent uniformly placed numbers from the range $[-(2^n - 2^{-m}), 2^n - 2^{-m}]$ with the step-size $2^{-m}$, and all other real numbers are rounded to the nearest of these numbers. Therefore, the R-bit fixed-point representation can be considered as a uniform quantizer with parameters $x_{\max}^{\text{fxp}} = 2^n$ and $\Delta^{\text{fxp}} = 2x_{\max}^{\text{fxp}}/2^R = 2^{-m}$. This uniform quantizer, which corresponds to the R-bit fixed-point representation, will be called the R-bit fixed-point quantizer. This analogy between fixed-point binary representation and uniform quantization is very important, since it allows us to use the SQNR of the R-bit fixed-point quantizer as an objective measure of the quality of the R-bit fixed-point representation [25].
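The correspondence between the R-bit fixed-point format and a uniform quantizer with $x_{\max}^{\text{fxp}} = 2^n$ and step $2^{-m}$ can be checked with a small encoder and decoder. This is a minimal sketch assuming a sign-magnitude layout (one sign bit followed by the n + m magnitude bits $a_{n-1}\ldots a_{-m}$, stored here as one integer), round-to-nearest with Python's ties-to-even rule, and saturation at $\pm(2^n - 2^{-m})$; the function names are hypothetical.

```python
def fxp_encode(x: float, n: int, m: int) -> tuple[int, int]:
    """Return (s, code): sign bit and the n + m magnitude bits a_{n-1}..a_{-m} as an integer."""
    s = 1 if x < 0 else 0
    code = round(abs(x) * 2 ** m)            # nearest multiple of 2^-m
    code = min(code, 2 ** (n + m) - 1)       # saturate at 2^n - 2^-m
    return s, code


def fxp_decode(s: int, code: int, m: int) -> float:
    """x = (-1)^s * sum_{i=-m}^{n-1} a_i 2^i, i.e., the magnitude integer scaled by 2^-m."""
    return (-1) ** s * code * 2.0 ** -m


def fxp_quantize(x: float, n: int, m: int) -> float:
    """Encode then decode: the R-bit fixed-point quantizer with R = n + m + 1."""
    return fxp_decode(*fxp_encode(x, n, m), m)


n, m = 5, 18                                  # R = 24
bits = format(fxp_encode(-1.5, n, m)[1], f"0{n + m}b")
print(bits[:n], bits[n:])                     # 00001 100000000000000000
```

The printed split shows the integer part (00001) and the fractional part (.1, i.e., 0.5) of the magnitude of −1.5; the sign is carried by the separate bit s = 1.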

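The distortion of the fixed-point quantizer can be calculated using (6) as:

$$ D^{\text{fxp}} = \frac{2^{2n}}{3\cdot 2^{2R}} + \sigma^2\exp\left(-\frac{\sqrt{2}\,2^n}{\sigma}\right). \tag{19} $$

For the unit variance, expression (19) becomes:

$$ D^{\text{fxp}} = \frac{2^{2(n-R)}}{3} + \exp\left(-\sqrt{2}\,2^n\right). \tag{20} $$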

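Our goal is to examine the influence of the parameter n on the performance of the fixed-point quantizer. The optimal value of n can be found by minimizing the distortion $D^{\text{fxp}}$ from the condition

$$ \frac{\partial D^{\text{fxp}}}{\partial n} = \frac{2\ln 2}{3}\,2^{2(n-R)} - \sqrt{2}\ln 2\cdot 2^n\exp\left(-\sqrt{2}\,2^n\right) = 0. \tag{21} $$

From (21), we obtain the condition $\frac{2}{3}\,2^{2(n-R)} = \sqrt{2}\cdot 2^n\exp(-\sqrt{2}\,2^n)$, which leads to the equation:

$$ 2^n = \frac{3\cdot 2^{2R}}{\sqrt{2}}\exp\left(-\sqrt{2}\,2^n\right). \tag{22} $$

For R = 24, Equation (22) becomes

$$ 2^n = \frac{3\cdot 2^{48}}{\sqrt{2}}\exp\left(-\sqrt{2}\,2^n\right). \tag{23} $$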

By solving Equation (23) numerically, we obtain n = 4.45. This agrees with Section 2: Equation (22) is the optimality condition obtained by equating (10) with 0, with $x_{\max}$ replaced by $2^n$, so its solution is $n = \log_2 x_{\max}^{\text{opt}} = \log_2 21.876 \approx 4.45$. However, n must be an integer, so the optimal value of n can be 4 or 5. To determine the optimal value of n, and also to better understand how n affects performance, we calculate the SQNR for different values of n using (9) and (20). The results are shown in Table 1, where we can see that n = 5 gives the highest SQNR and is therefore the optimal value of n. To summarize: for the 24-bit fixed-point representation, the optimal parameter values are n = 5, m = 18, $x_{\max}^{\text{fxp}} = 32$, and $\Delta^{\text{fxp}} = 2^{-18}$, achieving the maximal SQNR of 119.163 dB. Another important conclusion from Table 1 is that a wrong choice of n drastically reduces the SQNR (i.e., the accuracy of the fixed-point representation).
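Because the SQNR values in Table 1 follow from (9) and (20), they should be reproducible with a few lines of Python. The sketch below (our own illustration) evaluates the SQNR for integer n from 1 to 10 and solves (22) for a real-valued n by bisection. It assumes unit variance and R = 24; the bisection bracket [0, 10] and the function names are hypothetical choices.

```python
import math

SQRT2 = math.sqrt(2)
R_BITS = 24


def d_fxp(n: int, R: int = R_BITS) -> float:
    """Eq. (20): unit-variance distortion of the R-bit fixed-point quantizer."""
    return 2.0 ** (2 * (n - R)) / 3 + math.exp(-SQRT2 * 2.0 ** n)


def sqnr_fxp(n: int, R: int = R_BITS) -> float:
    """Eq. (9) evaluated with Eq. (20), in dB."""
    return -10 * math.log10(d_fxp(n, R))


def n_real(R: int = R_BITS, lo: float = 0.0, hi: float = 10.0) -> float:
    """Bisection on Eq. (22): 2^n - (3 * 2^(2R) / sqrt(2)) * exp(-sqrt(2) * 2^n) = 0."""
    c = 3 * 2.0 ** (2 * R) / SQRT2
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if 2.0 ** mid - c * math.exp(-SQRT2 * 2.0 ** mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


table = {n: sqnr_fxp(n) for n in range(1, 11)}
best_n = max(table, key=table.get)
for n, q in table.items():
    print(f"n = {n:2d}, m = {R_BITS - 1 - n:2d}: SQNR = {q:8.3f} dB")
print("real-valued solution of (22):", round(n_real(), 2), "| best integer n:", best_n)
```

For small n the overload (exponential) term dominates, while for large n the granular term $2^{2(n-R)}/3$ grows by about 6 dB per extra integer bit, which is why the SQNR falls off on both sides of n = 5.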

4. Adaptive 24-Bit Fixed-Point Quantizer

Adaptation procedures that further improve the performance of the 24-bit fixed-point format are presented below.

4.1. Adaptation for Data with the Unit Variance

In this subsection, we consider data with the unit variance. To recall: for data modeled by the unit-variance Laplacian PDF, the 24-bit fixed-point quantizer with parameters n = 5 and $x_{\max}^{\text{fxp}} = 32$ achieves an SQNR of 119.163 dB, while the 24-bit optimal uniform quantizer with the maximal amplitude $x_{\max}^{\text{opt}} = 21.876$ achieves $\mathrm{SQNR}_{\max} = 122.194$ dB. Thus, the SQNR of the 24-bit fixed-point quantizer is 3.031 dB lower than that of the 24-bit optimal uniform quantizer, due to the mismatch of the maximal amplitudes. To enable the 24-bit fixed-point quantizer to achieve the maximal SQNR of 122.194 dB, we need to adapt the input data as follows.

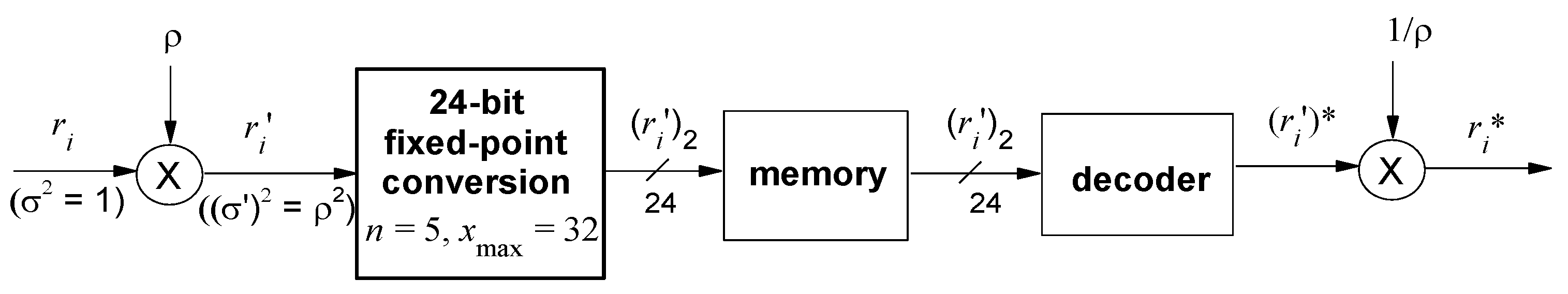

Adaptation_1. Input data modeled by the unit-variance Laplacian PDF should first be multiplied by $32/x_{\max}^{\text{opt}} = 32/21.876$ before conversion to the 24-bit fixed-point format, as shown in Figure 2, where $x_j$ ($j = 1, \ldots, M$) denotes an element of the input data, $y_j = 32x_j/x_{\max}^{\text{opt}}$ denotes the adapted element, $I_j$ denotes the 24-bit binary code-word of the adapted element $y_j$, $\hat{y}_j$ denotes the reconstructed value of the adapted element $y_j$, and $\hat{x}_j = x_{\max}^{\text{opt}}\hat{y}_j/32$ denotes the reconstructed value of the input element $x_j$.

Lemma 2. If the variance of the input data ($x_j$) is $\sigma_x^2 = 1$, then the variance of the adapted data ($y_j$) is $\sigma_y^2 = (32/x_{\max}^{\text{opt}})^2$.

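Proof of Lemma 2. It is given that the variance of the input data is equal to 1, i.e., $\sigma_x^2 = \frac{1}{M}\sum_{j=1}^{M} x_j^2 = 1$. Then, we have that:

$$ \sigma_y^2 = \frac{1}{M}\sum_{j=1}^{M} y_j^2 = \left(\frac{32}{x_{\max}^{\text{opt}}}\right)^2\frac{1}{M}\sum_{j=1}^{M} x_j^2 = \left(\frac{32}{x_{\max}^{\text{opt}}}\right)^2\sigma_x^2 = \left(\frac{32}{x_{\max}^{\text{opt}}}\right)^2, \tag{24} $$

which proves Lemma 2. □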

Theorem 1. For input data with the unit variance, the SQNR of the adapted 24-bit fixed-point quantizer (according to Adaptation_1), denoted as $\mathrm{SQNR}^{\text{a1}}$, is equal to the maximal SQNR of the optimal uniform quantizer defined with (12), i.e., $\mathrm{SQNR}^{\text{a1}} = \mathrm{SQNR}_{\max}$.

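Proof of Theorem 1. According to Figure 2, and since $x_j = (x_{\max}^{\text{opt}}/32)\,y_j$ and $\hat{x}_j = (x_{\max}^{\text{opt}}/32)\,\hat{y}_j$, the distortion of the adapted 24-bit fixed-point quantizer is calculated as:

$$ D^{\text{a1}} = \frac{1}{M}\sum_{j=1}^{M}\left(x_j - \hat{x}_j\right)^2 = \left(\frac{x_{\max}^{\text{opt}}}{32}\right)^2\frac{1}{M}\sum_{j=1}^{M}\left(y_j - \hat{y}_j\right)^2. \tag{25} $$

The input of the 24-bit fixed-point quantizer consists of the adapted data elements $y_j$, whose reconstructed values are $\hat{y}_j$, $j = 1, \ldots, M$; hence, according to (3), the distortion of the 24-bit fixed-point quantizer is calculated as:

$$ D^{\text{fxp}} = \frac{1}{M}\sum_{j=1}^{M}\left(y_j - \hat{y}_j\right)^2. \tag{26} $$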

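On the other hand, the distortion of the 24-bit fixed-point quantizer can be calculated by (19), where we should use $\sigma_y$ instead of $\sigma$, since the variance of the adapted data $y_j$ is denoted as $\sigma_y^2$. Since it follows from Lemma 2 that $\sigma_y = 32/x_{\max}^{\text{opt}}$, the distortion of the 24-bit fixed-point quantizer (n = 5, R = 24) can be expressed, based on (19), as:

$$ D^{\text{fxp}} = \frac{32^2}{3\cdot 2^{48}} + \left(\frac{32}{x_{\max}^{\text{opt}}}\right)^2\exp\left(-\sqrt{2}\,x_{\max}^{\text{opt}}\right). \tag{27} $$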

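Due to the equivalence of Formulas (3) and (4), and knowing that expression (26) comes from Formula (3) while expression (27) is derived from Formula (4), it follows that expressions (26) and (27) for the distortion of the 24-bit fixed-point quantizer are equivalent. Based on this equivalence, we have that:

$$ \frac{1}{M}\sum_{j=1}^{M}\left(y_j - \hat{y}_j\right)^2 = \frac{32^2}{3\cdot 2^{48}} + \left(\frac{32}{x_{\max}^{\text{opt}}}\right)^2\exp\left(-\sqrt{2}\,x_{\max}^{\text{opt}}\right). \tag{28} $$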

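Putting (28) into (25), we obtain:

$$ D^{\text{a1}} = \left(\frac{x_{\max}^{\text{opt}}}{32}\right)^2\left(\frac{32^2}{3\cdot 2^{48}} + \left(\frac{32}{x_{\max}^{\text{opt}}}\right)^2\exp\left(-\sqrt{2}\,x_{\max}^{\text{opt}}\right)\right). \tag{29} $$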

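Since $(x_{\max}^{\text{opt}}/32)^2\cdot 32^2 = (x_{\max}^{\text{opt}})^2$ and $(x_{\max}^{\text{opt}}/32)^2\,(32/x_{\max}^{\text{opt}})^2 = 1$, expression (29) becomes:

$$ D^{\text{a1}} = \frac{\left(x_{\max}^{\text{opt}}\right)^2}{3\cdot 2^{48}} + \exp\left(-\sqrt{2}\,x_{\max}^{\text{opt}}\right). \tag{30} $$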

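Since the variance of the input data $x_j$ ($j = 1, \ldots, M$) is equal to 1, the SQNR of the adaptive 24-bit fixed-point quantizer can be calculated based on (9) and (30) as:

$$ \mathrm{SQNR}^{\text{a1}} = -10\log_{10}\left(\frac{\left(x_{\max}^{\text{opt}}\right)^2}{3\cdot 2^{48}} + \exp\left(-\sqrt{2}\,x_{\max}^{\text{opt}}\right)\right). \tag{31} $$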

Comparing (31) with (12) for R = 24, it follows that $\mathrm{SQNR}^{\text{a1}} = \mathrm{SQNR}_{\max}$, thus completing the proof of Theorem 1. □

Theorem 1 shows that Adaptation_1 increases the SQNR of the 24-bit fixed-point representation by 3.031 dB for the unit-variance Laplacian PDF, achieving the maximal possible value $\mathrm{SQNR}_{\max} = 122.194$ dB.
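Theorem 1 can also be probed with a Monte Carlo experiment. The sketch below is our own illustration, not the authors' setup: it draws unit-variance Laplacian samples with a fixed seed, quantizes them with the 24-bit fixed-point quantizer (n = 5, m = 18) with and without Adaptation_1, and compares the empirical SQNRs. The overload term of (6), although part of the analytical $\mathrm{SQNR}_{\max}$, corresponds to samples beyond about $\pm 21.9\sigma$, which a finite simulation practically never produces; the measured gain is therefore expected to be close to $20\log_{10}(32/21.876) \approx 3.3$ dB rather than exactly 3.031 dB. The sample size, seed, and names such as `SCALE` are arbitrary, hypothetical choices.

```python
import math
import random

N_INT, M_FRAC = 5, 18                   # n = 5 integer bits, m = 18 fractional bits (R = 24)
X_MAX_OPT = 21.876                      # optimal amplitude of the 24-bit uniform quantizer, unit variance
SCALE = 2 ** N_INT / X_MAX_OPT          # Adaptation_1 factor 32 / 21.876


def fxp_quantize(x: float, n: int = N_INT, m: int = M_FRAC) -> float:
    """Nearest multiple of 2^-m, saturating at +/-(2^n - 2^-m)."""
    limit = 2 ** n - 2.0 ** -m
    return max(-limit, min(limit, round(x * 2 ** m) * 2.0 ** -m))


def laplacian_samples(count: int, sigma: float, seed: int) -> list[float]:
    """Zero-mean Laplacian samples with variance sigma^2 (magnitude ~ exponential, rate sqrt(2)/sigma)."""
    rng = random.Random(seed)
    rate = math.sqrt(2) / sigma
    return [rng.choice((-1.0, 1.0)) * rng.expovariate(rate) for _ in range(count)]


def sqnr_db(x: list[float], x_hat: list[float]) -> float:
    """Eq. (7), with the signal power (2) and distortion (3) estimated from the samples."""
    signal = sum(v * v for v in x) / len(x)
    noise = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
    return 10 * math.log10(signal / noise)


x = laplacian_samples(100_000, sigma=1.0, seed=0)
plain = [fxp_quantize(v) for v in x]                      # 24-bit fixed point, no adaptation
adapted = [fxp_quantize(SCALE * v) / SCALE for v in x]    # Adaptation_1: scale, quantize, rescale
gain = sqnr_db(x, adapted) - sqnr_db(x, plain)
print(f"plain {sqnr_db(x, plain):.2f} dB, adapted {sqnr_db(x, adapted):.2f} dB, gain {gain:.2f} dB")
```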

4.2. Double Adaptation for Data with the Variance $\sigma^2 \neq 1$

In this subsection, we consider the case where the variance of the input data differs from 1. Let us define the following adaptation procedure.

Adaptation_2. Calculate the variance $\sigma_x^2$ of the input data according to (2) and, if it differs from 1, divide all input elements $x_j$ ($j = 1, \ldots, M$) by $\sigma_x$.

Lemma 3. The variance of the data $z_j$ ($j = 1, \ldots, M$) obtained by applying the Adaptation_2 procedure is $\sigma_z^2 = 1$.

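Proof of Lemma 3. The variance of the input data is $\sigma_x^2 = \frac{1}{M}\sum_{j=1}^{M} x_j^2$. Then, the variance of the data $z_j = x_j/\sigma_x$ obtained by the Adaptation_2 procedure is:

$$ \sigma_z^2 = \frac{1}{M}\sum_{j=1}^{M} z_j^2 = \frac{1}{\sigma_x^2}\cdot\frac{1}{M}\sum_{j=1}^{M} x_j^2 = \frac{\sigma_x^2}{\sigma_x^2} = 1, \tag{32} $$

which proves Lemma 3. □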

Lemma 3 shows that Adaptation_2 yields data with the unit variance.

Our aim is to provide the same quality of 24-bit fixed-point representation for data whose variance differs from 1 as for data whose variance is equal to 1. To achieve this, we perform a double adaptation, as follows.

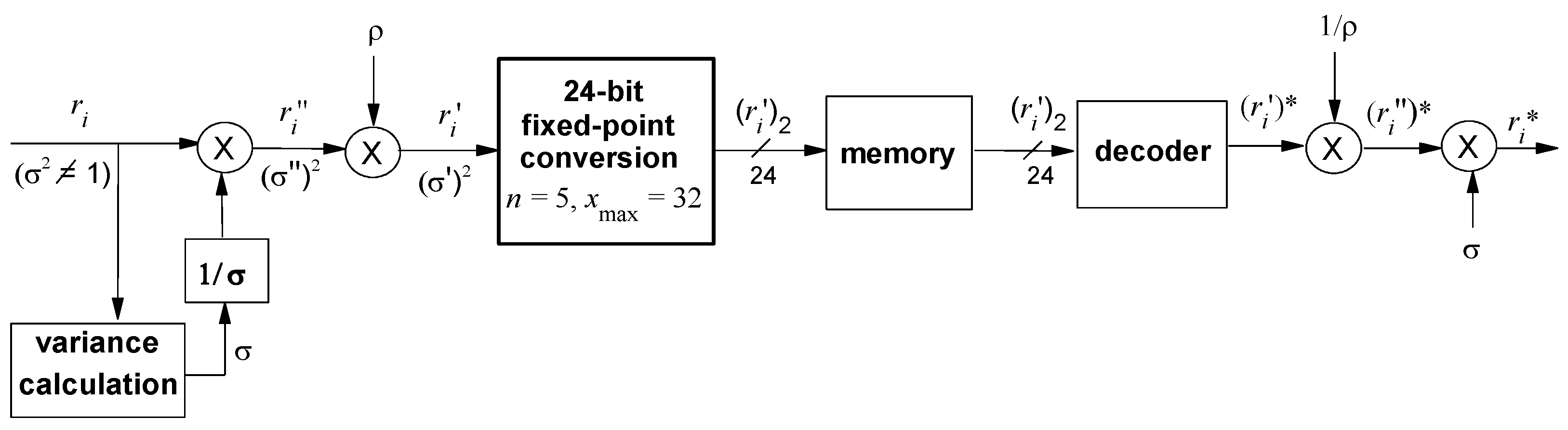

Double adaptation. If the variance of the input data differs from 1, apply Adaptation_2 followed by Adaptation_1 before conversion to the 24-bit fixed-point format. This is shown in Figure 3, where $x_j$ ($j = 1, \ldots, M$) denotes an element of the input data, $z_j = x_j/\sigma_x$ denotes the value of the element after Adaptation_2, $y_j = 32z_j/x_{\max}^{\text{opt}}$ denotes the value of the element after Adaptation_1, $I_j$ denotes the 24-bit binary code-word for $y_j$ that is stored in memory, $\hat{y}_j$ denotes the reconstructed value of $y_j$, $\hat{z}_j = x_{\max}^{\text{opt}}\hat{y}_j/32$ denotes the reconstructed value of $z_j$, and $\hat{x}_j = \sigma_x\hat{z}_j$ denotes the reconstructed value of the input element $x_j$.

Theorem 2. For an arbitrary variance of the input data, the SQNR of the 24-bit fixed-point quantizer with double adaptation, denoted as $\mathrm{SQNR}^{\text{da}}$, is equal to the maximal SQNR of the optimal uniform quantizer defined with (12), i.e., $\mathrm{SQNR}^{\text{da}} = \mathrm{SQNR}_{\max}$.

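Proof of Theorem 2. The distortion of the double adaptation system shown in Figure 3 is calculated as:

$$ D^{\text{da}} = \frac{1}{M}\sum_{j=1}^{M}\left(x_j - \hat{x}_j\right)^2 = \frac{1}{M}\sum_{j=1}^{M}\left(\sigma_x z_j - \sigma_x\hat{z}_j\right)^2 = \sigma_x^2\,\frac{1}{M}\sum_{j=1}^{M}\left(z_j - \hat{z}_j\right)^2. \tag{33} $$

The data $z_j$ ($j = 1, \ldots, M$), whose variance is equal to 1 according to Lemma 3, represent the input of the Adaptation_1 procedure; hence, the distortion of Adaptation_1 is defined as $D^{\text{a1}} = \frac{1}{M}\sum_{j=1}^{M}(z_j - \hat{z}_j)^2$. Thus, expression (33) becomes:

$$ D^{\text{da}} = \sigma_x^2\,D^{\text{a1}}. \tag{34} $$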

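Since the variance of the input data is $\sigma_x^2$, the SQNR of the double adaptation system (denoted as $\mathrm{SQNR}^{\text{da}}$) can be defined using expression (7). Starting from (7) and using (34) and (30), the following expression for $\mathrm{SQNR}^{\text{da}}$ is obtained:

$$ \mathrm{SQNR}^{\text{da}} = 10\log_{10}\frac{\sigma_x^2}{D^{\text{da}}} = 10\log_{10}\frac{1}{D^{\text{a1}}} = -10\log_{10}\left(\frac{\left(x_{\max}^{\text{opt}}\right)^2}{3\cdot 2^{48}} + \exp\left(-\sqrt{2}\,x_{\max}^{\text{opt}}\right)\right). \tag{35} $$

Comparing (35) and (12), it follows that $\mathrm{SQNR}^{\text{da}} = \mathrm{SQNR}_{\max}$, completing the proof of Theorem 2. □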

Thus, for data with $\sigma^2 \neq 1$, we perform the double adaptation: first, Adaptation_2 is applied, converting the variance of the input data to 1, and then Adaptation_1 is performed, adjusting the data to the maximal amplitude of the fixed-point quantizer. In this way, as an important result of the double adaptation, the 24-bit fixed-point quantizer achieves the maximal SQNR of 122.194 dB, i.e., the highest possible accuracy of the 24-bit fixed-point representation, for any variance of the input data.
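The double adaptation of Figure 3 can be written as a short pipeline. This is a hypothetical sketch rather than the authors' implementation: it estimates $\sigma_x$ with (2), divides by it (Adaptation_2), multiplies by $32/x_{\max}^{\text{opt}}$ (Adaptation_1), quantizes with n = 5 and m = 18, and undoes both scalings on reconstruction. Running it on Laplacian samples whose variance spans eight orders of magnitude should show the SQNR staying essentially constant, whereas the unadapted quantizer degrades. As in the previous sketch, the empirical values reflect only granular distortion; sample size, seed, and names are arbitrary choices.

```python
import math
import random

X_MAX_OPT = 21.876          # optimal amplitude of the 24-bit uniform quantizer, unit variance
SCALE = 32 / X_MAX_OPT      # Adaptation_1 factor, 2^n / x_max^opt with n = 5


def fxp_quantize(x: float, n: int = 5, m: int = 18) -> float:
    """24-bit fixed-point quantizer: nearest multiple of 2^-m, saturating at +/-(2^n - 2^-m)."""
    limit = 2 ** n - 2.0 ** -m
    return max(-limit, min(limit, round(x * 2 ** m) * 2.0 ** -m))


def double_adaptation(x: list[float]) -> list[float]:
    """Adaptation_2, then Adaptation_1, fixed-point quantization, and inverse scaling."""
    sigma_x = math.sqrt(sum(v * v for v in x) / len(x))      # Eq. (2)
    y = [SCALE * v / sigma_x for v in x]                      # z_j = x_j / sigma_x, y_j = SCALE * z_j
    return [sigma_x * fxp_quantize(v) / SCALE for v in y]     # x_hat_j = sigma_x * y_hat_j / SCALE


def laplacian(count: int, sigma: float, seed: int) -> list[float]:
    """Zero-mean Laplacian samples with variance sigma^2."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) * rng.expovariate(math.sqrt(2) / sigma) for _ in range(count)]


def sqnr_db(x: list[float], x_hat: list[float]) -> float:
    signal = sum(v * v for v in x) / len(x)
    noise = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
    return 10 * math.log10(signal / noise)


results = {}
for sigma in (0.01, 1.0, 100.0):
    x = laplacian(50_000, sigma, seed=1)
    plain = sqnr_db(x, [fxp_quantize(v) for v in x])
    adapted = sqnr_db(x, double_adaptation(x))
    results[sigma] = (plain, adapted)
    print(f"sigma = {sigma:>6}: without adaptation {plain:7.2f} dB, double adaptation {adapted:7.2f} dB")
```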

5. Comparison of 24-Bit Fixed-Point and Floating-Point Quantizer

In this section, we compare the performance of the proposed 24-bit fixed-point format with double adaptation with that of the FP24 (24-bit floating-point) format. To do so, we briefly recall some basics of floating-point representation, as defined by the IEEE 754 standard [1]. A real number x, represented in the 24-bit floating-point format by a sign bit s, an 8-bit exponent field, and a 15-bit mantissa M, is calculated as:

$$ x = (-1)^s\cdot 2^{E}\cdot\left(1 + \frac{M}{2^{15}}\right). \tag{36} $$

The exponent $E = e - 127$, where e is the biased exponent stored in the 8-bit exponent field, takes values from −126 to 127, since the values −127 and 128 (i.e., e = 0 and e = 255) are reserved for other purposes [1]. The mantissa M takes values from 0 to $2^{15} - 1$.

The FP24 format is symmetric with respect to zero. The largest positive FP24 number, obtained for E = 127 and $M = 2^{15} - 1$, is $2^{127}(2 - 2^{-15})$. For each of the 254 different values of E, there are $2^{15}$ different positive real numbers represented in the FP24 format, each corresponding to a different value of M. The difference between any two consecutive numbers that have the same value of E is constant:

$$ \Delta_E = 2^{E}\cdot\frac{1}{2^{15}} = 2^{E-15}, \tag{37} $$

meaning that the numbers with the same value of E are equidistant. Thus, among the positive numbers representable in FP24, there are 254 groups of $2^{15}$ uniformly distributed (i.e., equidistant) numbers, where each group corresponds to one value of E. Due to the symmetry, the same structure exists for negative numbers. Hence, the structure of the numbers represented in the FP24 format corresponds to the structure of a symmetric 24-bit piecewise uniform quantizer with the maximal amplitude $x_{\max}^{\text{fp}} = 2^{128}$, which has 254 linear segments $[2^E, 2^{E+1})$ in the positive part, where uniform quantization with $2^{15}$ levels and quantization step $\Delta_E$ is performed within each linear segment. This 24-bit piecewise uniform quantizer, whose structure is equivalent to the 24-bit floating-point format (FP24), will be called the 24-bit floating-point quantizer. This analogy between the FP24 format and the 24-bit floating-point quantizer allows us to express the performance of the FP24 format using the objective performance measures (distortion and SQNR) of the 24-bit floating-point quantizer [28].
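The FP24 layout assumed here (1 sign bit, an 8-bit biased exponent field e with E = e − 127, and a 15-bit mantissa M, which is what the 254 exponent values and $2^{15}$ mantissa values above imply) can be decoded with a few lines of Python. The sketch handles normal numbers only: the reserved exponent fields e = 0 and e = 255 are rejected rather than interpreted, and the function names are hypothetical.

```python
def fp24_value(s: int, e: int, mant: int) -> float:
    """Eq. (36) for normal FP24 numbers: (-1)^s * 2^(e - 127) * (1 + M / 2^15)."""
    if not 1 <= e <= 254:
        raise ValueError("exponent fields 0 and 255 are reserved")
    if not 0 <= mant < 2 ** 15:
        raise ValueError("mantissa must fit in 15 bits")
    return (-1) ** s * 2.0 ** (e - 127) * (1 + mant / 2 ** 15)


def fp24_step(E: int) -> float:
    """Eq. (37): spacing of consecutive FP24 numbers sharing the exponent E."""
    return 2.0 ** (E - 15)


largest = fp24_value(0, 254, 2 ** 15 - 1)                              # E = 127, M = 2^15 - 1
print(largest == 2.0 ** 127 * (2 - 2.0 ** -15))                        # True
print(fp24_value(0, 127, 1) - fp24_value(0, 127, 0) == fp24_step(0))   # True: segment [1, 2)
print(254 * 2 ** 15)                                                   # 8323072 positive normal values
```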

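The distortion of the 24-bit floating-point quantizer for input data modeled with the Laplacian PDF (1) can be calculated as [28]:

$$ D^{\text{fp}} = 2\sum_{E=-126}^{127}\frac{\Delta_E^2}{12}P_E + 2\int_{x_{\max}^{\text{fp}}}^{\infty}\left(x - x_{\max}^{\text{fp}}\right)^2 p(x)\,dx, \tag{38} $$

where $P_E = \int_{2^E}^{2^{E+1}} p(x)\,dx$ represents the probability of the linear segment that corresponds to a specific value of E. The first term in (38) represents the granular distortion (each member of the sum represents the granular distortion in one linear segment, and the factor 2 accounts for the symmetric negative segments), while the second term represents the overload distortion. For the Laplacian PDF p(x) defined with (1), expression (38) becomes:

$$ D^{\text{fp}} = \sum_{E=-126}^{127}\frac{2^{2(E-15)}}{12}\left[\exp\left(-\frac{\sqrt{2}\,2^{E}}{\sigma}\right) - \exp\left(-\frac{\sqrt{2}\,2^{E+1}}{\sigma}\right)\right] + \sigma^2\exp\left(-\frac{\sqrt{2}\,x_{\max}^{\text{fp}}}{\sigma}\right). \tag{39} $$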

Using (7) and (39), we find that the 24-bit floating-point quantizer achieves a constant SQNR of 103.769 dB over a very wide range of input-data variances.
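The value of 103.769 dB should be reproducible by summing (39) directly. The sketch below is our own evaluation in double precision (exponent range −126 to 127 as stated above, with $x_{\max}^{\text{fp}} = 2^{128}$ assumed for the overload term, which is negligible either way), not the authors' code. It also evaluates a few other variances to illustrate that the SQNR of the floating-point quantizer hardly changes with $\sigma$.

```python
import math

SQRT2 = math.sqrt(2)


def d_fp24(sigma: float) -> float:
    """Eq. (39): distortion of the 24-bit floating-point quantizer for a Laplacian source."""
    granular = 0.0
    for E in range(-126, 128):
        step_sq = 2.0 ** (2 * (E - 15))                          # Delta_E^2 from Eq. (37)
        two_p_e = (math.exp(-SQRT2 * 2.0 ** E / sigma)
                   - math.exp(-SQRT2 * 2.0 ** (E + 1) / sigma))  # 2 * P_E for the Laplacian PDF
        granular += step_sq / 12 * two_p_e
    overload = sigma ** 2 * math.exp(-SQRT2 * 2.0 ** 128 / sigma)  # x_max^fp = 2^128 (assumed)
    return granular + overload


def sqnr_fp24(sigma: float) -> float:
    """Eq. (7) applied to Eq. (39), in dB."""
    return 10 * math.log10(sigma ** 2 / d_fp24(sigma))


for sigma in (0.001, 1.0, 1000.0):
    print(sigma, round(sqnr_fp24(sigma), 3))
print("gap to 122.194 dB:", round(122.194 - sqnr_fp24(1.0), 3), "dB")
```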

Based on these results, the proposed 24-bit fixed-point quantizer with double adaptation achieves an SQNR 18.425 dB higher than that of the FP24 format (i.e., a higher quality of binary representation) over a wide range of data variances, as a result of the optimization of n and of the proposed double adaptation procedure. Since it also has much lower complexity, the proposed 24-bit fixed-point quantizer with double adaptation can be considered a much better solution for the binary representation of data than the FP24 format.