1. Introduction

Nonlinear dynamical system identification is important in many engineering applications [1, 2]. Several system identification approaches, encompassing both mathematical model-based and data-driven methods, have been developed to address this difficult problem, which stems from the complex characteristics of dynamical systems: high nonlinearity, uncertainties, delays, step changes, disturbances, and more [3, 4, 5, 6]. Because it is difficult to establish an accurate mathematical model for such systems, the data-driven intelligent methods considered in this study have recently been widely accepted and applied in nonlinear dynamical system identification [2, 7, 8]. Various intelligent methods, such as neural networks (NN) based on biological knowledge, fuzzy systems based on the operator’s knowledge of the dynamical system, fuzzy neural networks (FNN) [9, 10, 11, 12, 13], wavelet neural networks (WNN) [14, 15, 16], fuzzy wavelet neural networks (FWNN), and more [17, 18, 19, 20], have been used to identify nonlinear dynamical systems, and successful identification results have been attained.

Different variants of NN, including the multi-layer perceptron NN (MLPNN), radial basis function network (RBFN), recurrent neural network (RNN) [19], long short-term memory (LSTM) network, and more, have been widely used for nonlinear dynamical system identification [21, 22]. The main issue with the MLPNN model is that the weight adjustment does not exploit any local data structure, and the function approximation is very sensitive to the available training data [21]. The feedback loop in an RNN enhances its ability to approximate nonlinear dynamical systems. RNNs are regarded as some of the most attractive models for processing information generated by various dynamical systems, and they overcome the disadvantages of the MLPNN model [22]. For example, Kumar proposed a memory recurrent Elman neural network-based method for time-delayed nonlinear dynamical system identification [19]. A further requirement of the identification model is robustness, that is, the ability to compensate for the effects of uncertainties such as parameter variations and disturbance signals. An adaptive sliding-mode control system using a double-loop RNN structure was proposed for a class of nonlinear dynamic systems in Ref. [23], achieving high precision, fast speed, and strong robustness. Chu and Fei [8] proposed an adaptive dynamic global sliding-mode controller based on a proportional integral derivative sliding surface using a radial basis function NN for a three-phase active power filter to obtain global robustness. Ghahderijani et al. [24] presented a sliding-mode control scheme to provide high robustness to external disturbances and transient response under large and abrupt load changes.

Although NN-based methods can approximate any deterministic nonlinear process with little knowledge and no assumptions [3, 14, 25], random weight initialization generally incurs an extended training cost, and the training algorithm may converge to a local minimum. In particular, there is no theoretical relationship between the specific learning parameters of the network and the optimal network architecture [26]. WNN, an alternative NN, was proposed to alleviate these weaknesses [27, 28]. Usually, three types of wavelet bases, the Gaussian derivative, the second derivative of the Gaussian, and the Morlet wavelet, are suggested to construct WNN [29]. However, to reduce the number of iteration steps in training and to reach the global minimum of the loss function, various approaches have been proposed to initialize the dilation and translation parameters of the wavelet bases used as the activation functions of the network [30, 31, 32, 33]. For example, Luo et al. identified an autonomous nonlinear dynamical system based on a discrete-time multiscale wavelet neural network [16]. Emami identified nonlinear time-varying systems using wavelet neural networks [34]. However, wavelet neural networks involve adjusting multiple parameters, including the selection of wavelet functions, the determination of wavelet scales, and the number of layers and nodes in the neural network. Adjusting these parameters requires experimentation and optimization, often involving iterative attempts with different parameter combinations and evaluating the model’s performance using appropriate evaluation metrics.

Additionally, FWNN combines the advantages of fuzzy systems and WNN, which can decrease the number of rules significantly. It has been successfully applied in dynamical system identification [35, 36]. For example, Wang et al. combined the fuzzy-neural structure with a long short-term memory (LSTM) mechanism to identify a nonlinear dynamical system [37]. However, FWNN needs a large number of neurons to obtain reasonably good performance in nonlinear dynamical system identification, which increases the dimension and the number of configuration parameters of the network [38, 39, 40].

Consequently, it is necessary to design a new, effective neural network and to optimize the control parameters of the network structure in order to identify nonlinear dynamical systems effectively. Fortunately, the attractive properties of the LW bases constructed by Alpert et al. [41], such as piecewise polynomials, compact support, orthogonality, and various regularities, provide an alternative structure for WNN. These properties are very beneficial for constructing a simpler network with lower computational complexity.

In this paper, our main objective is to design an LWNN based on the advantages of LW base functions and to optimize its control parameters, i.e., the number of LW bases and the corresponding resolution level, using a simple Genetic Algorithm, which enhances the effectiveness and adaptability of the network for nonlinear dynamical system identification. More precisely, the basic structure of LWNN is composed of an input layer, a hidden layer, and an output layer, which are described in detail in Figure 1. Owing to the compact support and orthogonality of LW bases, the input data are partitioned into subsections according to the optimal resolution level, which leads to local connections and shared weights in each subsection and decreases the computational cost of network training. In the hidden layer, each main neuron is in essence a linear combination of LW bases. Therefore, the number of LW bases and the resolution level measure the complexity of the LWNN structure. These two control parameters are optimized by the simple Genetic Algorithm so that the network effectively and adaptively learns the salient features of the nonlinear dynamical system. In particular, the number of LW base functions and the resolution level are usually small positive integers, so they are easier to optimize with the simple Genetic Algorithm than the dilation and translation parameters of the traditional WNN, whose initialization relies on various complex algorithms [37]. The performance of the LWNN-GA method is supported by rigorous wavelet analysis theory, and any function in

can be approximated with any accuracy through sufficient training of LWNN [41].
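The local structure described above can be illustrated with a minimal sketch. It assumes inputs already scaled to [0, 1], 2^n equal subintervals, and p Legendre polynomials per subinterval, fitted by ordinary least squares instead of the improved gradient descent used in this paper. The function names `fit_piecewise_legendre` and `predict` are hypothetical, and the sketch works in the piecewise-polynomial space spanned by the bases rather than with the wavelet functions themselves.

```python
import numpy as np
from numpy.polynomial import legendre


def fit_piecewise_legendre(x, y, n, p):
    """Fit p Legendre polynomials on each of 2**n subintervals of [0, 1]."""
    m = 2 ** n
    # Subinterval index of every sample (x = 1 is assigned to the last one).
    idx = np.minimum((x * m).astype(int), m - 1)
    coeffs = np.zeros((m, p))
    for k in range(m):
        mask = idx == k
        if not mask.any():
            continue  # no samples here: local weights stay at zero
        # Map the subinterval [k/m, (k+1)/m] onto [-1, 1].
        t = 2.0 * (x[mask] * m - k) - 1.0
        basis = legendre.legvander(t, p - 1)  # columns P_0 ... P_{p-1}
        coeffs[k], *_ = np.linalg.lstsq(basis, y[mask], rcond=None)
    return coeffs


def predict(coeffs, x):
    """Evaluate the piecewise expansion; only the local weights are used."""
    m, p = coeffs.shape
    idx = np.minimum((x * m).astype(int), m - 1)
    t = 2.0 * (x * m - idx) - 1.0
    basis = legendre.legvander(t, p - 1)
    return np.sum(basis * coeffs[idx], axis=1)


# A quadratic target is reproduced exactly by three polynomials per subinterval.
x = np.linspace(0.0, 1.0, 400)
y = 3.0 * x ** 2 - x + 1.0
coeffs = fit_piecewise_legendre(x, y, n=3, p=3)
max_error = np.max(np.abs(predict(coeffs, x) - y))
```

Each sample touches only the p weights of its own subinterval, which is the locality that keeps the training cost low.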

To summarize, in comparison with other neural networks, the contributions of the proposed method in terms of the NN model stem mainly from its theoretical guidance, local learning structure, and adaptive adjustment mechanism, which make the network topology more compact and simpler, with a single hidden layer, and yield a high learning efficiency.

To demonstrate the effectiveness and feasibility of the proposed method, the improved gradient descent algorithm is used to learn the network weight coefficients of the structure optimized by the simple Genetic Algorithm. The resulting model effectively approximates a benchmark piecewise function and identifies three nonlinear dynamical systems. The main contributions of this paper are as follows:

- (1) This paper proposes a simplified LWNN to identify the nonlinear dynamical system. In essence, the main neuron in the hidden layer is a linear combination of orthogonal explicit LW polynomial bases instead of the traditional non-polynomial activation functions, which effectively decreases the number of learned weight coefficients and avoids numerical instability in the nonlinear dynamical system identification process;

- (2) The two control parameters of this network are optimized by the simple Genetic Algorithm. To be specific, the resolution level and the order of LW bases are optimized to attain the optimal network structure, and the improved gradient descent algorithm is used to learn the network weight coefficients. These algorithms are superior to and simpler than those used by the traditional WNN;

- (3) The essential attribute of the adaptive piecewise polynomial approximation enables the proposed method to connect locally and share weights involving only a small subset of LW coefficients. This local processing structure effectively decreases the training cost of the improved gradient descent algorithm;

- (4) Various LW bases with rich vanishing moments and regularities provide a strong approximation tool for thoroughly learning the complex characteristics exhibited by the uncertainties, steps, ramps, and disturbances in a nonlinear dynamical system. In particular, the approximation error converges exponentially with respect to the optimal resolution level and order of LW bases.
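The structure search in contribution (2) can be sketched as follows. The operators of the simple Genetic Algorithm are not specified in this section, so tournament selection, gene-swap crossover, random-reset mutation, and elitism are assumptions here, as are the search ranges and the name `simple_ga`. The toy fitness only stands in for the RMSE of a trained network.

```python
import random


def simple_ga(fitness, n_range=(1, 6), p_range=(1, 6),
              pop_size=8, generations=15, mutation_rate=0.2, seed=0):
    """Search integer pairs (n, p) that minimise `fitness` with a basic GA."""
    rng = random.Random(seed)

    def random_individual():
        return (rng.randint(*n_range), rng.randint(*p_range))

    def tournament(population):
        # Pick two individuals at random and keep the fitter one.
        a, b = rng.sample(population, 2)
        return a if fitness(a) <= fitness(b) else b

    def mutate(individual):
        # Each gene is independently reset to a random admissible value.
        n, p = individual
        if rng.random() < mutation_rate:
            n = rng.randint(*n_range)
        if rng.random() < mutation_rate:
            p = rng.randint(*p_range)
        return (n, p)

    population = [random_individual() for _ in range(pop_size)]
    best = min(population, key=fitness)
    for _ in range(generations):
        children = []
        while len(children) < pop_size - 1:
            mother, father = tournament(population), tournament(population)
            # Crossover: resolution level from one parent, order from the other.
            children.append(mutate((mother[0], father[1])))
        population = [best] + children  # elitism keeps the best pair so far
        best = min(population, key=fitness)
    return best


def toy_fitness(individual):
    # Placeholder for "train the network with (n, p) and return its RMSE".
    n, p = individual
    return (n - 5) ** 2 + (p - 3) ** 2


best_n, best_p = simple_ga(toy_fitness)
```

Because both genes are small positive integers, the search space is tiny, which is why a basic GA is sufficient for this step.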

As demonstrated by the numerical experiment results in Section 4, the proposed method attains better identification accuracy than other complex neural networks, showing great potential for complex nonlinear system identification.

The remainder of this paper is organized as follows: Section 2 introduces the properties of LW bases and designs the basic structure of LWNN. Section 3 uses the simple Genetic Algorithm to optimize the order of the adopted LW bases and the resolution level. Then, the weight coefficients of the optimal LWNN structure are learned by the improved gradient descent algorithm. Finally, the detailed flowchart of the proposed method for identifying the nonlinear dynamic system is described. In Section 4, the performance evaluation measure of the experiment results is described. Then, nonlinear dynamical system identification problems commonly used in the literature are solved to verify the effectiveness of the proposed method. Finally, Section 5 gives some conclusions of this research and prospects for future work.

4. Numerical Experiments and Results Analysis

In this section, to verify the effectiveness and efficiency of the proposed method, four representative dynamical system identification examples are simulated and their identification results are analyzed. The adopted examples include various complex features, such as uncertainties, steps, nonlinearities, and ramps, which the proposed LWNN-GA method is able to recognize effectively.

Various methods have been proposed to solve the system identification problems mentioned above. For clarity and a fair comparison, the same performance measure, the root mean squared error (RMSE), is used to compare the proposed method with other existing methods. The RMSE measures the difference between the estimated values and the actual sample data, and it is defined as follows

$$\mathrm{RMSE} = \sqrt{\frac{1}{M}\sum_{i=1}^{M}\left(y_i - \hat{y}_i\right)^2}$$

where $y_i$ is the actual value of the sample data and $\hat{y}_i$ is the estimated value of the output of LWNN at the $i$th point, and $M$ is the number of sample points.
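The RMSE described above can be computed with a few lines of NumPy. This is a minimal sketch; the function name `rmse` and its argument names are illustrative only.

```python
import numpy as np


def rmse(actual, estimated):
    """Root mean squared error between actual and estimated sample values."""
    actual = np.asarray(actual, dtype=float)
    estimated = np.asarray(estimated, dtype=float)
    # Mean of the squared point-wise differences over all sample points.
    return float(np.sqrt(np.mean((actual - estimated) ** 2)))
```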

Furthermore, the configuration parameters of the four examples simulated in this paper are given in Table 1.

To avoid results that depend on a particular choice of data, the samples are randomly selected from each of the nonlinear dynamical systems, and the training and testing samples for each example are also described in Table 1.

In addition, all numerical simulation experiments are conducted on a computer (Intel Core i5, 2.79 GHz, 8 GB RAM, Windows Vista OS) using MATLAB R2020b.

4.1. Example 1

In this simulation experiment, the benchmark piecewise function proposed by Zhang and Benveniste, Ganjefar and Tofighi, and Carrillo et al. [39] is used to compare the performance of the proposed LWNN-GA method with other existing methods. Specifically, the structure of LWNN is optimized by the simple Genetic Algorithm, and the weight coefficients are learned by the improved gradient descent algorithm, in order to approximate the function described as follows.

The data set of this experiment is composed of 200 input–output pairs uniformly distributed in the interval

. Then, 100 training samples and 100 testing samples are randomly selected for this experiment, as shown in Table 1. To be specific, the data corresponding to the variable x are transformed into the interval

according to (5) by a simple linear transformation and serve as the inputs of the proposed LWNN model, and the expected outputs of this model are the function values of

. Furthermore, the identification accuracy of the proposed model also depends on the learning rate. In this paper, the learning step term in (11) is

, where

, and

varies with the number of the sample points in the subinterval

, which means that a variable learning step is used to enhance the convergence speed of the proposed model.
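The data preparation for this example can be sketched as follows. The coefficients and the domain [-10, 10] are the commonly cited form of the Zhang and Benveniste benchmark and are an assumption here, as is the affine map onto [0, 1] standing in for the linear transformation (5). The names `benchmark`, `to_unit_interval`, and `subinterval_number` are hypothetical.

```python
import numpy as np


def benchmark(x):
    """Piecewise benchmark in its commonly cited form (coefficients assumed)."""
    x = np.asarray(x, dtype=float)
    left = -2.186 * x - 12.864
    middle = 4.246 * x
    right = 10.0 * np.exp(-0.05 * x - 0.5) * np.sin((0.03 * x + 0.7) * x)
    return np.where(x < -2.0, left, np.where(x < 0.0, middle, right))


def to_unit_interval(x, lo=-10.0, hi=10.0):
    """Affine map from [lo, hi] onto [0, 1] (assumed form of the transformation)."""
    return (np.asarray(x, dtype=float) - lo) / (hi - lo)


def subinterval_number(t, n):
    """1-based index of the subinterval of [0, 1] containing t at level n."""
    m = 2 ** n
    return min(int(t * m), m - 1) + 1


# 200 uniformly spaced input-output pairs, split at random into 100 / 100.
x = np.linspace(-10.0, 10.0, 200)
y = benchmark(x)
order = np.random.default_rng(0).permutation(len(x))
train_idx, test_idx = order[:100], order[100:]

# Location of the kink at x = -2 when [0, 1] is cut into 2**5 = 32 pieces.
kink = subinterval_number(to_unit_interval(-2.0), n=5)
```

Under these assumptions the function is continuous but not differentiable at x = -2, and with 32 subintervals that point falls in subinterval 13.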

Finally, the approximation results for different resolution levels n and orders p of LW bases are given in Table 2.

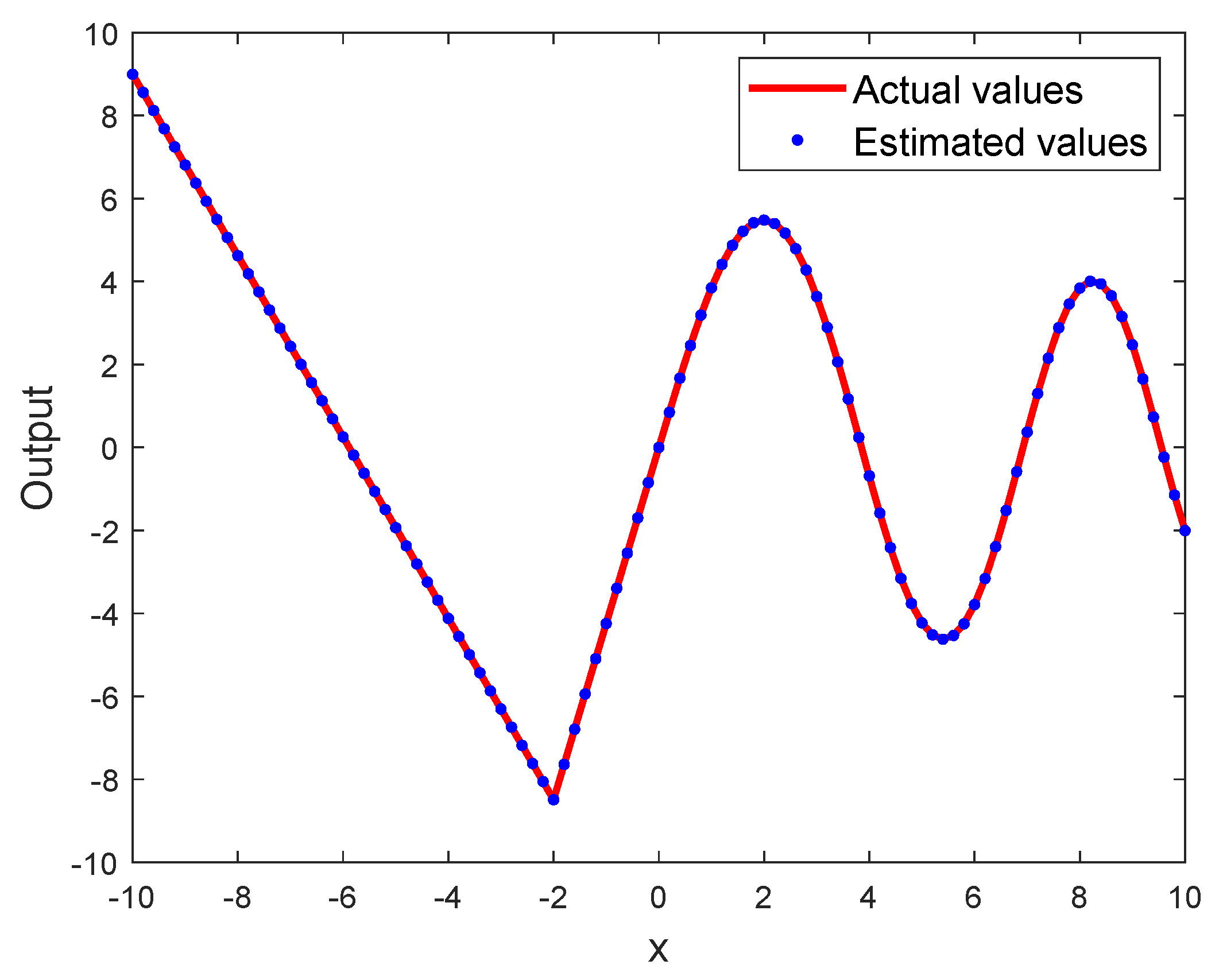

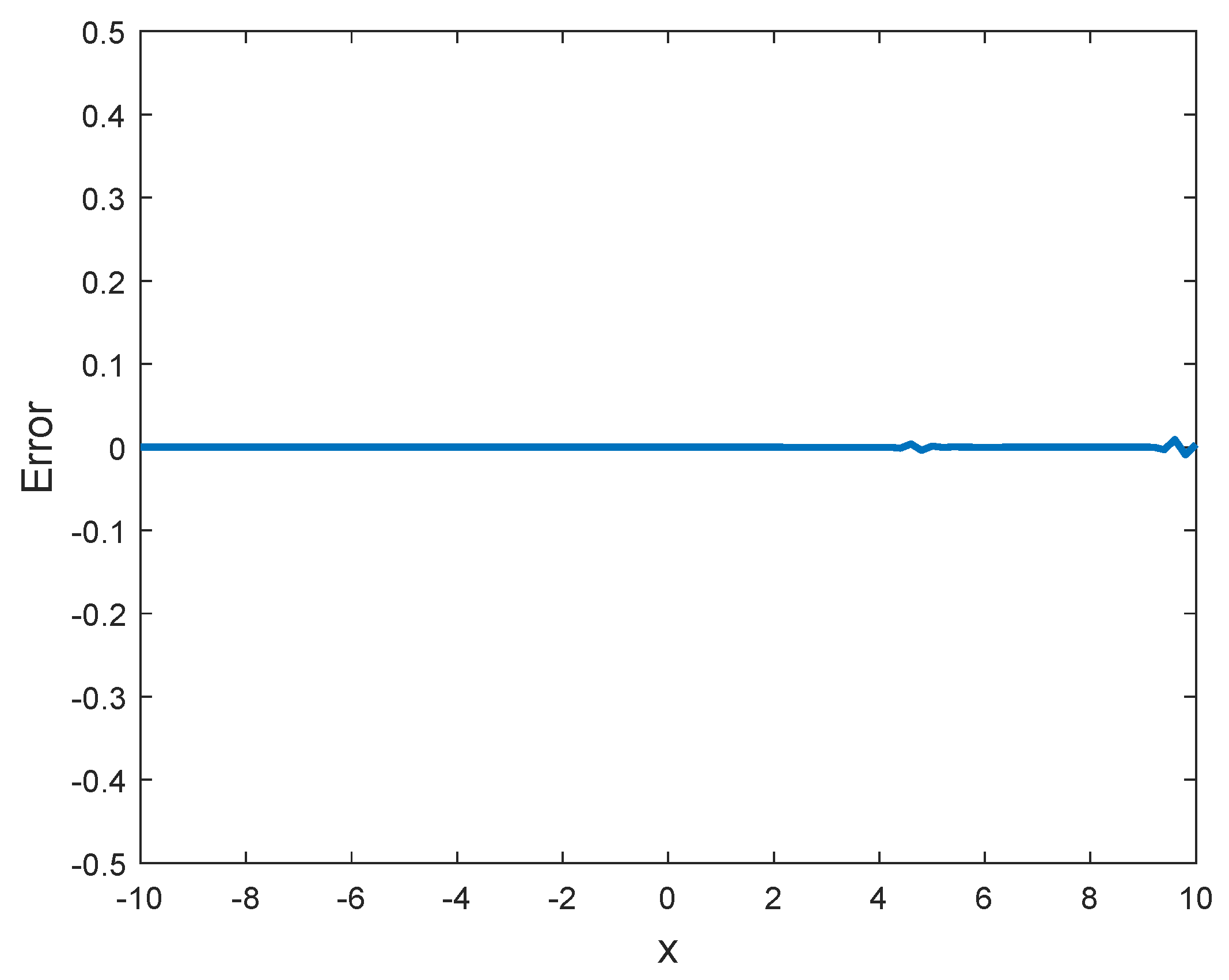

As shown in Table 2, the optimal control parameters of LWNN are attained at the resolution level

and the order

of LW bases by using the simple Genetic Algorithm to optimize the structure of LWNN. Furthermore, the simulation results show that the defined interval

is partitioned into 32 subintervals, and three polynomials on each subinterval are used to approximate the function. Accordingly, the optimal RMSE value is

. Finally, Figure 4 demonstrates the strong approximation ability of LWNN, and Figure 5 shows the approximation error at the corresponding discrete data points of the function.

In Figure 4, the solid line and dotted line denote the actual values and estimated values, respectively. As shown in Figure 5, good results are achieved using the proposed method.

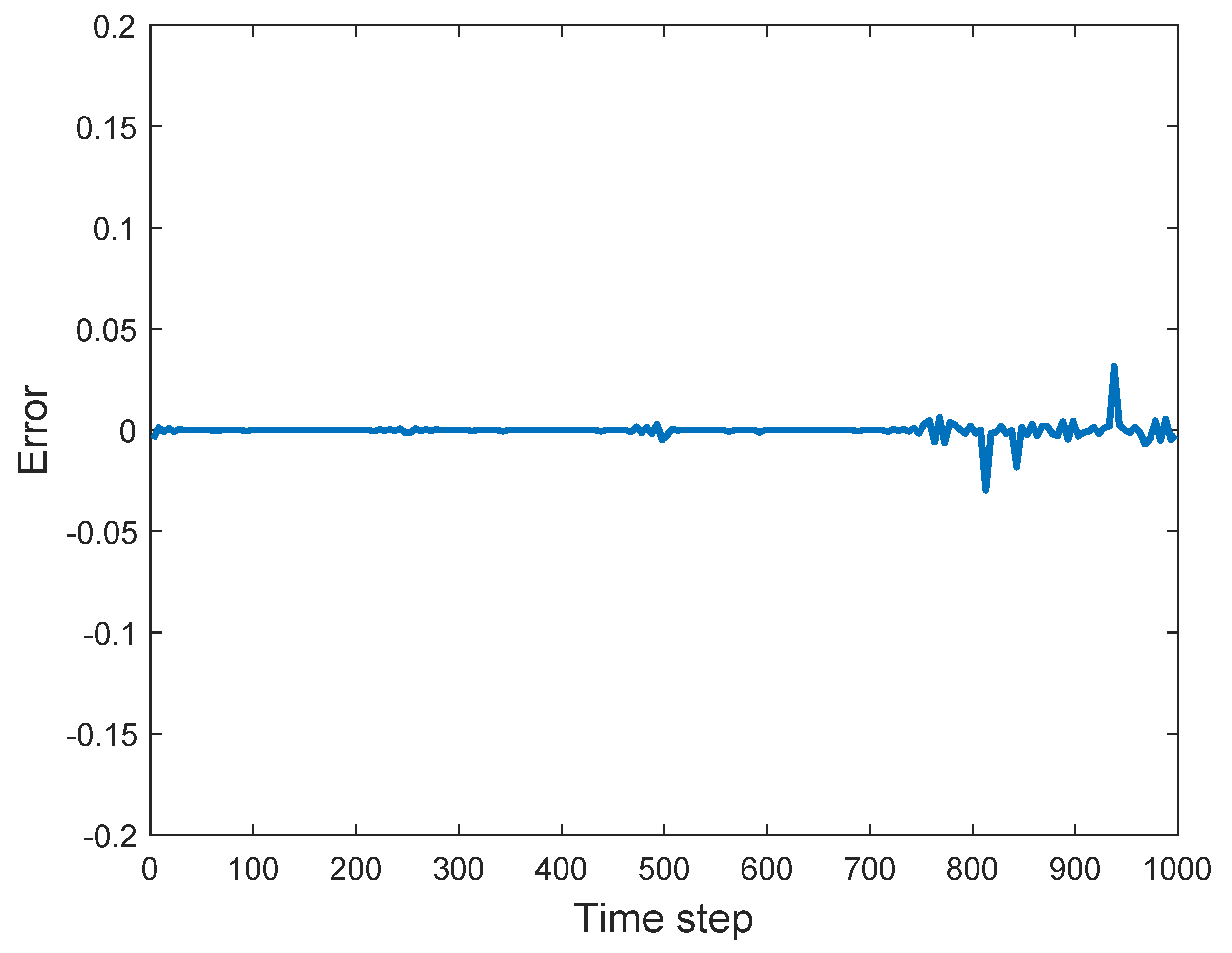

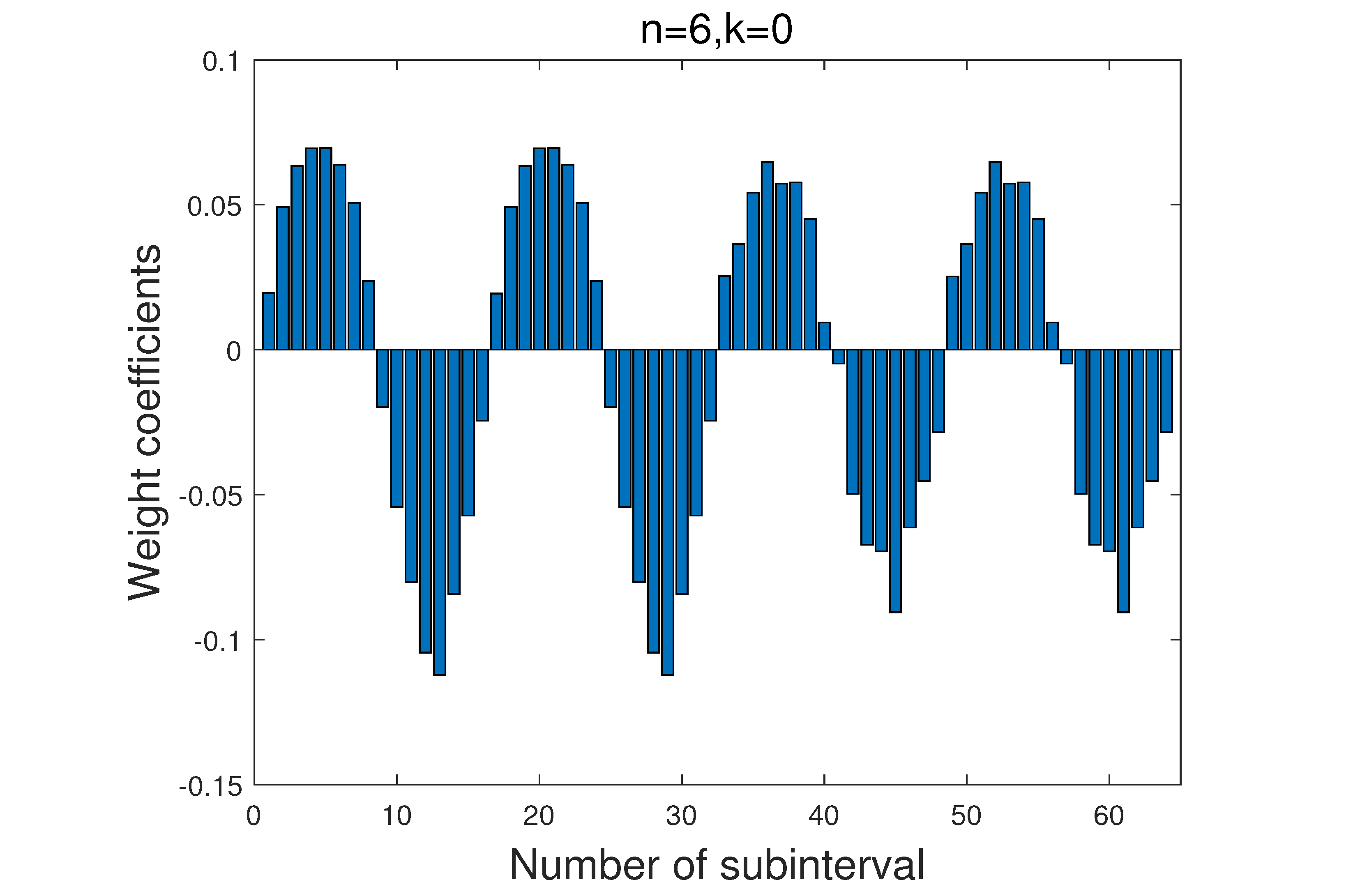

In addition, the RMSE iteration process of the proposed method is illustrated in Figure 6, and the learned network weight coefficients of the optimized structure are shown in Figure 7.

As shown in Figure 7, the low-order LW bases approximate the trend of the function, and the high-order LW base functions learn the detailed features. The learned weight coefficients of LWNN record the non-differentiable feature at the point

of the function on the 13th subinterval with the order

and

, respectively. In particular, the weight coefficients of LWNN with the order

demonstrate the step variation of the function.

Finally, the proposed method is compared with other existing methods, and Table 3 shows the comparison results.

As shown in Table 3, the proposed method provides the highest approximation accuracy among the compared methods.

4.2. Example 2

In this experiment, a nonlinear dynamical system studied with different existing methods and recognition models [36, 38, 39, 48] is identified using the proposed method. The corresponding dynamical system is described by the following difference equation

As represented by (13), the current output of the system

depends on the previous outputs of the system

and the previous input

. Correspondingly, the input

of this dynamical system is described by

Then, the training samples and testing samples of the proposed method are composed of the control variable x and the current output

values, which are the input–output sample point pairs for this dynamical system identification. By substituting the control variable data x into (14), the control sample data

are obtained and substituted into the system (13) to generate 1000 samples. Of these, 800 samples are randomly selected as the training samples and the remaining 200 are used as the testing samples. Finally, the identification results of this nonlinear dynamical system using the proposed method with different resolution levels n and orders p of LW bases are shown in Table 4.
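The sample generation and random split described above can be sketched as follows. Equations (13) and (14) are not reproduced in this sketch, so `placeholder_step` and the sinusoidal control signal are hypothetical stand-ins chosen only to make the example runnable; only the 1000-sample size and the 800/200 random split follow the text.

```python
import numpy as np


def placeholder_step(y_prev1, y_prev2, u_prev1):
    # Hypothetical stable difference equation; NOT the system (13) of the paper.
    return 0.5 * y_prev1 - 0.1 * y_prev2 + np.tanh(u_prev1)


def simulate(step, u):
    """Iterate y(k) = step(y(k-1), y(k-2), u(k-1)) from zero initial conditions."""
    y = np.zeros(len(u))
    for k in range(2, len(u)):
        y[k] = step(y[k - 1], y[k - 2], u[k - 1])
    return y


def random_split(n_samples, n_train, seed=0):
    """Return sorted index arrays for a random train/test partition."""
    order = np.random.default_rng(seed).permutation(n_samples)
    return np.sort(order[:n_train]), np.sort(order[n_train:])


k = np.arange(1000)
u = np.sin(2.0 * np.pi * k / 250.0)   # placeholder control signal, not (14)
y = simulate(placeholder_step, u)
train_idx, test_idx = random_split(len(y), n_train=800)
```

Fixing the seed makes the random partition reproducible, so different (n, p) structures are compared on identical training and testing sets.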

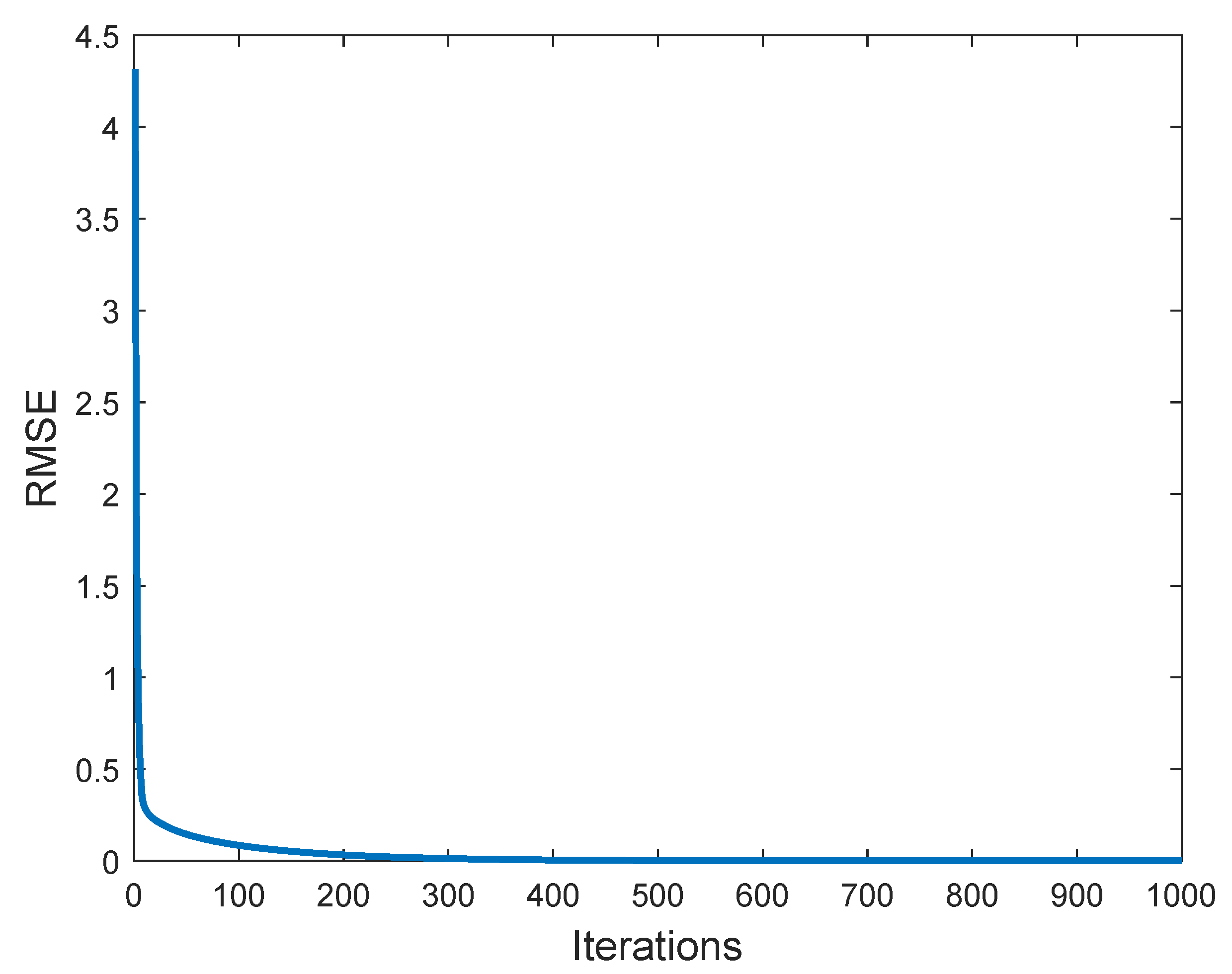

As described in Table 4, the resolution level

and the order

of LW bases give the optimal structure of LWNN. The optimal RMSE value of this example for the testing samples is

. The good performance is further illustrated by comparing the actual values (solid line) with the estimated values (dashed line) in Figure 8 and Figure 9.

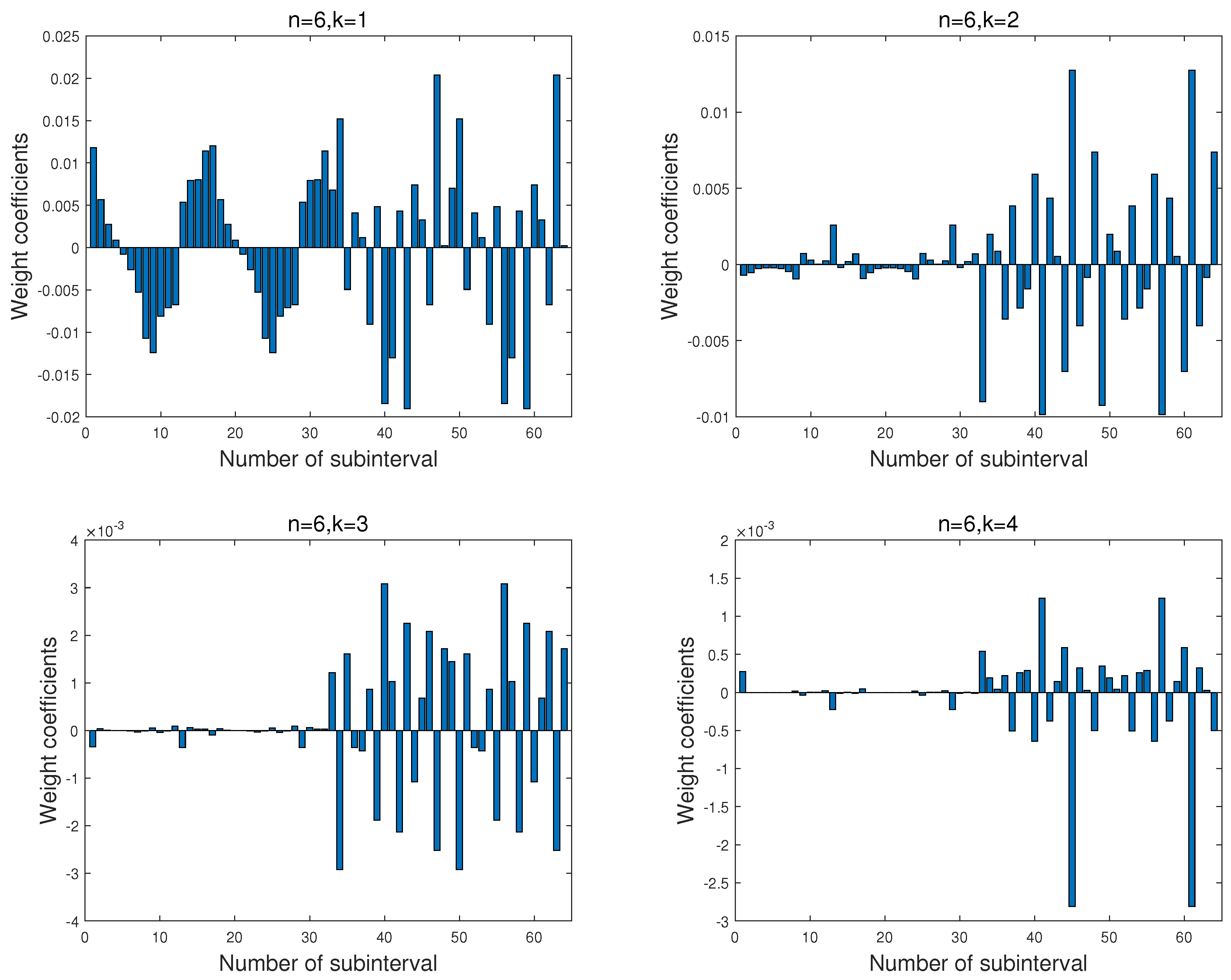

Finally, the RMSE iteration process of training LWNN to identify this dynamical system and the network weight coefficients learned by the improved gradient descent algorithm are shown in Figure 10 and Figure 11, respectively.

As demonstrated in Figure 11, the network weight coefficients for different orders of LW bases illustrate the strong ability of LWNN to learn the essential features of this dynamical system. Specifically, LWNN with the order

and

has effectively learned the step feature at the points

and

of this complex dynamical system on the

th and

th subintervals, respectively. Correspondingly, the network weight coefficients of LWNN show a sudden change between positive and negative values at the step points. Similarly, the ramp feature at the point

of this dynamical system is described by the network weight coefficients of LW bases with the order

and

on the

th subintervals, respectively. Additionally, the comparison results with other existing methods are given in Table 5.

From the results in Table 5, the proposed method significantly enhances the identification accuracy because the main neurons in LWNN are represented by polynomials, which provide the highest accuracy with the optimal control parameters of the network structure.

4.3. Example 3

In this example, the nonlinear dynamical system mentioned in Refs. [47, 57, 58] is represented by the following difference equation

where the function

f has the following form

The input

of this dynamical system is described by

The input–output data pairs of this dynamical system identification are again composed of the control variable x and the current output

of this dynamical system. Accordingly, 2000 samples are randomly generated by (15)–(17); 1800 samples are used as training data and the remaining 200 as testing data. The simulation results of system identification with different decomposition scales n and orders p of the Legendre multiwavelet basis are given in Table 6.

The optimal RMSE value of this dynamical system identification is

, which can be found in Table 6 and is attained at the decomposition scale

and order

of the Legendre multiwavelet basis. Additionally, Figure 12 and Figure 13 show that LWNN obtains a good response of this dynamical system.

Furthermore, the error iteration process of LWNN identification of this dynamical system and the network weight coefficients obtained are shown in Figure 14 and Figure 15, respectively.

In Figure 15, the obtained network weight coefficients show the ability of LWNN to learn the features of this dynamical system. When

, the impulse signal presented by this dynamical system becomes more complicated. Then, LWNN with the order

of the Legendre multiwavelet basis records the main trend characteristic of this dynamical system, and LWNN with the order

of LW bases effectively learns this complex impulse signal feature from

to

subintervals.

Table 7 compares the proposed method with the other methods.

From the results in Table 7, LWNN provides better accuracy in identifying this dynamical system.

4.4. Example 4

In this experiment, a second-order nonlinear dynamical system studied with various models [33, 36, 37, 54] is identified using the proposed LWNN-GA method. The difference equation of this dynamical system and the function f are given by (15) and (16) in Example 3. The corresponding control signal

of this complex dynamical system is given by (14). Then, 2500 data samples randomly generated using (14), (15), and (16) are used to identify this second-order nonlinear dynamical system with LWNN. Accordingly, 2000 data samples are used as training data and 500 data samples are used as testing data. The simulation results of this second-order nonlinear system identification with different decomposition scales n and orders p of the Legendre multiwavelet basis are described in Table 8.

As described in Table 8, the optimal RMSE value obtained is

, which is attained at the optimal resolution level

and order

of LW bases. Furthermore, Figure 16 and Figure 17 demonstrate that the proposed method obtains a good response in identifying this complex dynamical system.

From the obtained results, it can be seen that LWNN performs well in identifying this complex dynamical system. The error iteration process of LWNN identification of this dynamical system and the network weight coefficients are shown in Figure 18 and Figure 19, respectively.

From the variation of the network weight coefficients shown in Figure 19, the proposed method is able to thoroughly learn the complex features of this dynamical system, such as uncertainties, steps, ramps, and nonlinearities. The main reason is that LW bases have rich properties such as compact support, orthogonality, vanishing moments, and especially various regularities, which make it possible to approximate the nonlinear dynamical system effectively. Specifically, the optimal structure of LWNN with the order from

to

of LW bases effectively learns the main trend feature of this complex dynamical system. Correspondingly, the optimal structure of LWNN with the order

of LW bases still demonstrates the step and ramp characteristics at the points

of this complex dynamical system. The learned network weight coefficients describe the trend on the

th,

th and

th subintervals, respectively.

Additionally, Table 9 shows the comparison results with the other methods.

To summarize, according to the results obtained in the above four examples, the identification accuracy shown in Table 2, Table 4, Table 6, Table 8, and Table 9 using the proposed method is basically consistent with the approximation error in (4). Furthermore, as shown in Figure 6, Figure 10, Figure 14, and Figure 18, the proposed method effectively decreases the number of learning iteration steps by combining the simple Genetic Algorithm with the improved gradient descent algorithm in nonlinear dynamical system identification. Correspondingly, the learned network weight coefficients of LWNN with the optimal structure can describe the complex features of the dynamical systems, as shown in Figure 7, Figure 11, Figure 15, and Figure 19. Therefore, the proposed method demonstrates good performance and generalization ability for the identification of various complex dynamical systems.