Abstract

This paper investigates the sparse β-model with an $\ell_1$ penalty, a network data model of current interest in both statistics and social network research. We present an algorithm for parameter estimation in this model; because the penalized loss function is convex, the estimator can be computed by the proximal gradient descent method. We study the estimation consistency and establish an optimal bound for the proposed estimator. Simulation studies corroborate the efficacy of the method and indicate its potential for network data analysis.

MSC:

62E20

1. Introduction

With the advancement of science and technology, an increasing volume of network data is being collected, and there is a growing need to analyze and understand the formation and features of such network-structured data. In the field of statistics, network-structured data pose specific challenges for statistical inference and asymptotic analysis [1]. Many statisticians have studied network models. For example, the well-known Erdős–Rényi model [2,3] assumes that connections between node pairs form independently and with a uniform probability. In recent years, two core attributes have been a focus of research on network models: network sparsity and degree heterogeneity [4]. Network sparsity refers to the phenomenon where the actual number of connections between nodes is significantly lower than the potential maximum. It is common in real-world networks and presents unique challenges and opportunities for analysis. Degree heterogeneity refers to the phenomenon whereby the network is characterized by a few highly connected core nodes and many low-degree nodes. Both attributes are central to understanding network structures and dynamics (see [5]).

Two prominent statistical models that address degree heterogeneity are the stochastic block model [6] and the β-model [7,8,9]. The stochastic block model captures degree heterogeneity by grouping nodes into communities with similar connection patterns, as demonstrated in [6,10]. In contrast, the β-model addresses degree heterogeneity directly, employing node-specific parameters to characterize the variation in node connections. Node degrees are fundamental summaries of network information, and their distributions offer crucial insights into the networks’ formation processes. Recent work on modeling degree sequences in undirected networks has explored distributions within the exponential family, where vertex “potentials” are used as defining parameters (see [11,12,13,14]). For directed networks, many studies have addressed constructing and sampling graphs with specified in- and out-degree sequences, often termed “bi-degree” sequences (see [15,16,17]).

The β-model is widely applied and has well-established statistical properties. The maximum likelihood estimator of the β-model parameters is known to be consistent and asymptotically normal, albeit mainly for relatively dense networks, as indicated in [7,18]. In practice, however, observed networks are typically sparse, with considerably fewer edges than the maximum possible number of connections. This motivates the development of a sparse β-model. A significant advance in this direction is the work reported in [19], where a sparse β-model with an $\ell_0$ penalty was studied. This model not only captures node heterogeneity but also permits parameter estimation with good statistical properties, even for sparse networks. It is computationally fast, theoretically tractable, and intuitively attractive. Nevertheless, the authors of [19] pointed out a limitation of $\ell_0$-penalty-based estimation when the parameter support is unknown. They recommended that when the $\ell_0$ penalty is impractical because the parameter support is unknown, $\ell_1$-norm penalization is a preferred, efficient alternative for parameter estimation.

In this paper, we study the sparse β-model augmented with an $\ell_1$ penalty, aiming to establish optimal non-asymptotic bounds in a theoretical framework. With the $\ell_1$ penalty, the penalized loss function is convex. This allows convex optimization techniques to be applied, which improves computational efficiency. Furthermore, the consistency of the estimated $\beta$ is established theoretically, and the finite-sample performance of the proposed method is verified by numerical simulation.

The remainder of this paper is organized as follows. In Section 2, we introduce the sparse β-model with an $\ell_1$ penalty and the estimation procedure for the degree parameters ($\beta$). In Section 3, we develop theoretical results to demonstrate the consistency of the estimated $\beta$. Simulation studies are conducted in Section 4 to empirically validate the effectiveness and efficiency of the proposed method. We summarize our findings and discuss potential future directions in Section 5. Proofs of the theoretical results are provided in Appendix A.

2. Methods

In this section, we first review the formulation of the β-model for undirected graphs in Section 2.1 and then discuss the sparse β-model with $\ell_1$ penalty and its inference procedure in Section 2.2.

2.1. Review of the β-Model for Undirected Graphs

For convenience, we first fix some notation. $\|x\|_p$ denotes the $\ell_p$ norm of a vector $x$. Let $G_n$ be an undirected simple graph with no self-loops on $n$ nodes labeled $1, \ldots, n$, and let $A = (a_{ij})$ be its adjacency matrix. The element $a_{ij}$ is an indicator variable denoting whether node i is connected to node j: $a_{ij} = 1$ if nodes i and j are connected, and $a_{ij} = 0$ otherwise. Let $d_i = \sum_{j \neq i} a_{ij}$ be the degree of node i and $d = (d_1, \ldots, d_n)$ be the degree sequence of graph $G_n$. The β-model, which was named in [7], is an exponential random graph model (ERGM) in which the degree sequence is the only sufficient statistic. Specifically, the distribution of $G_n$ can be expressed as

where is a vector of parameters, and . It implies that all edges () are independent and distributed as Bernoulli random variables, with success probability defined as

where $\beta_i$ is the influence parameter of vertex i. In the β-model, $\beta_i$ has a natural interpretation: it measures the tendency of vertex i to establish connections with other vertices; that is, the larger $\beta_i$ is, the more likely vertex i is to connect to other vertices.
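For reference, the β-model of [7] is usually written in the following standard form. This is a sketch in assumed notation, with $d_i$ the degree of node i, $a_{ij}$ the adjacency indicator, and $\beta = (\beta_1, \ldots, \beta_n)$ the parameter vector; the exact symbols used in this paper may differ.

```latex
\mathbb{P}_{\beta}(G_n)
  = \exp\Big( \sum_{i=1}^{n} \beta_i d_i
      - \sum_{1 \le i < j \le n} \log\big(1 + e^{\beta_i + \beta_j}\big) \Big),
\qquad
p_{ij} = \mathbb{P}(a_{ij} = 1)
  = \frac{e^{\beta_i + \beta_j}}{1 + e^{\beta_i + \beta_j}} .
```

Under this form, the edge indicators $a_{ij}$, $i < j$, are independent Bernoulli variables, and the likelihood depends on the graph only through its degree sequence.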

The negative log-likelihood function for the β-model is

Our prediction loss is the expectation of normalized negative log likelihood (the theoretical risk), and is the optimal parameter vector, i.e.,

and

Let be the Fisher information of . We induced “Fisher risk”:

We also calculated the risk (). In addition, when the sample size is sufficiently large, we hope to obtain a reasonable estimate (), with the Fisher risk () approaching .

The corresponding empirical risk is defined as

Many researchers have studied the maximum likelihood estimation of $\beta$ by minimizing the empirical risk; theoretical asymptotic results are reported in [11,17,20]. In this paper, we study the sparse β-model with $\ell_1$ regularization on $\beta$.
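The likelihood quantities discussed in this subsection can be sketched numerically as follows. This is a minimal illustration that assumes the standard β-model form of [7] and an unnormalized negative log-likelihood; the function names `beta_model_nll` and `beta_model_grad` are hypothetical and not taken from the paper.

```python
import numpy as np

def beta_model_nll(beta, A):
    """Unnormalized negative log-likelihood of the beta-model (assumed form).

    beta : (n,) array of node parameters.
    A    : (n, n) symmetric 0/1 adjacency matrix with zero diagonal.
    """
    n = len(beta)
    iu = np.triu_indices(n, k=1)               # index pairs with i < j
    s = (beta[:, None] + beta[None, :])[iu]    # beta_i + beta_j for each pair
    # logaddexp(0, s) is a numerically stable log(1 + exp(s))
    return np.sum(np.logaddexp(0.0, s)) - np.sum(A[iu] * s)

def beta_model_grad(beta, A):
    """Gradient of the NLL: expected degree minus observed degree, per node."""
    S = beta[:, None] + beta[None, :]
    P = 1.0 / (1.0 + np.exp(-S))               # edge probabilities p_ij
    np.fill_diagonal(P, 0.0)                   # no self-loops
    return P.sum(axis=1) - A.sum(axis=1)
```

The gradient makes the role of the degree sequence explicit: at the unpenalized maximum likelihood estimate, the expected degrees match the observed degrees.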

2.2. Sparse β-Model with $\ell_1$ Penalty

In this subsection, we formulate the sparse β-model with $\ell_1$ penalty. First, we assume that the true parameter is sparse, with support (M) and a sparsity level (m) of

where $|M|$ represents the number of elements in M.

To explain why $\ell_1$ regularization algorithms attain convergence rates analogous to those of $\ell_0$ algorithms, the authors of [21] studied sparse eigenvalue conditions on the design matrix. These conditions require that the eigenvalues over minimal (sparse) subsets be bounded away from 0. The authors of [22] relaxed this condition, requiring it only for vectors whose support is concentrated on a small subset. In this paper, we also relax this condition, with our focus being the Fisher information matrix. Specifically, we constrain the Fisher eigenvalues. For , let . We define and the complement of M as , where represents the indicator function.

- Condition (A): For any such that and .

It is noteworthy that our approach specifically quantifies the support for M, marking a considerably milder condition compared to the one delineated in [21], where a more comprehensive assessment was conducted. Moreover, unlike the authors of [22], who quantified all subsets, our methodology is characterized by a focused application. In addition, the theoretical analysis presented in Section 3 shows that our subspace only needs to be considered restricted to the following set:

Under the condition of restricted eigenvalues (REs), if has a minimum eigenvalue (), we can replace it with (much less than ).

Ref. [19] adopted a penalized log-likelihood method with an $\ell_0$ penalty for the sparse β-model. They used the monotonicity lemma to show that by assigning non-zero parameters to vertices with larger degrees, the seemingly complicated computation caused by the $\ell_0$ penalty can be overcome. However, the monotonicity lemma does not always hold. When the support of the parameters is unknown, the estimator based on the $\ell_0$ penalty is no longer computationally feasible. In this situation, it is natural to consider penalized likelihood estimation with the $\ell_1$ norm. Similar to $\ell_1$-penalized logistic regression [23], we consider the following $\ell_1$ regularization problem for the β-model:

where $\lambda > 0$ is a tuning parameter.
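For concreteness, an $\ell_1$-penalized problem of this kind can be read in the following generic form. The normalization by the number of node pairs, $N = n(n-1)/2$, and the symbols $d_i$ and $\lambda$ are assumptions of this sketch, made by analogy with $\ell_1$-penalized logistic regression; the scaling used in (7) may differ.

```latex
\hat{\beta} \in \arg\min_{\beta \in \mathbb{R}^{n}}
\Big\{
  \frac{1}{N} \Big[ \sum_{1 \le i < j \le n} \log\big(1 + e^{\beta_i + \beta_j}\big)
                    - \sum_{i=1}^{n} \beta_i d_i \Big]
  + \lambda \|\beta\|_1
\Big\},
\qquad N = \frac{n(n-1)}{2} .
```

Both terms are convex in $\beta$ (a sum of log-sum-exp terms minus a linear term, plus a norm), so the objective is convex, whereas an $\ell_0$ penalty would make it combinatorial.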

A sparse estimate can capture important coefficients and eliminate redundant variables, while a non-sparse estimate may cause overfitting. The optimization problem (7) can be solved by the proximal gradient descent method, with the subdifferential of $\|\beta\|_1$ with respect to $\beta$ derived as follows:

If the Lasso estimate () is a solution of (7), then the corresponding Karush–Kuhn–Tucker (KKT) conditions (see [24]) for (7) are

Theoretically, the data-adaptive tuning parameter ($\lambda$) must be chosen so that the event on which the KKT conditions hold when evaluated at the true parameter has high probability (see Lemma 2 in Section 3 and the corresponding proof presented in Appendix A).
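The proximal gradient iteration for an $\ell_1$-penalized β-model can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the standard β-model likelihood of [7], an objective of the form (1/N)·NLL + λ‖β‖₁ with N = n(n−1)/2, and a constant step size equal to n. The names `soft_threshold` and `fit_sparse_beta_l1` are hypothetical.

```python
import numpy as np

def soft_threshold(x, tau):
    """Proximal operator of tau * ||.||_1 (componentwise shrinkage)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def fit_sparse_beta_l1(A, lam, n_iter=500):
    """Proximal gradient descent (ISTA) for an l1-penalized beta-model.

    A   : (n, n) symmetric 0/1 adjacency matrix with zero diagonal.
    lam : tuning parameter lambda > 0.
    """
    n = A.shape[0]
    N = n * (n - 1) / 2           # number of node pairs (assumed normalization)
    d = A.sum(axis=1)             # observed degree sequence
    # The Hessian of the unnormalized NLL has row sums of at most (n - 1) / 2,
    # so the gradient of the normalized loss is (1/n)-Lipschitz; step = n = 1/L.
    step = float(n)
    beta = np.zeros(n)
    for _ in range(n_iter):
        S = beta[:, None] + beta[None, :]
        P = 1.0 / (1.0 + np.exp(-S))
        np.fill_diagonal(P, 0.0)
        grad = (P.sum(axis=1) - d) / N          # gradient of the smooth part
        beta = soft_threshold(beta - step * grad, step * lam)
    return beta
```

With this scaling, the zero vector satisfies the KKT conditions whenever λ is at least the largest absolute entry of the gradient at zero, which gives a natural upper end for a grid of tuning parameters.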

3. Theoretical Analysis

In this section, we study the consistency of the estimated $\beta$ in the context of the sparse β-model with an $\ell_1$ penalty. Our primary attention is centered around the Fisher risk () and the risk ().

If the eigenvalues of the Hessian of a strictly convex function H are uniformly bounded below by a positive constant, then H is strongly convex. In general, the loss of an exponential family is strongly convex only locally, in a sufficiently small neighborhood of . Below, we quantify this behavior in the form of a theorem for the β-model.
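The link between a Hessian eigenvalue bound and strong convexity can be written out as follows. This is the standard textbook statement for a twice-differentiable function H, given in assumed notation (m is the eigenvalue lower bound), not a formula taken from the paper.

```latex
\nabla^{2} H(x) \succeq m I \ \ \text{for all } x \quad (m > 0)
\;\Longrightarrow\;
H(y) \;\ge\; H(x) + \nabla H(x)^{\top} (y - x) + \frac{m}{2}\, \|y - x\|_2^{2}
\quad \text{for all } x, y .
```

For the standard β-model likelihood, the Hessian entries are of the form $p_{ij}(1 - p_{ij})$, which tend to 0 as $|\beta_i + \beta_j| \to \infty$. No single $m > 0$ therefore works on all of $\mathbb{R}^n$, which is why strong convexity can only be expected on a bounded neighborhood.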

Theorem 1

(Almost strong convexity). Suppose μ is the analytical standardized moment or cumulant of about a certain subspace () and is an estimator of β that satisfies . If

then we have

Proof of Theorem 1.

First, we consider that if , using Lemma A2 in Appendix A, then

and the theorem is proven. Thus, we suppose that . If holds, the previous conclusion shows that the theorem has been proven. Therefore, let . Hence, . According to (A5) in Appendix A, , which is obviously contradictory. Therefore, we have completed the proof of Theorem 1. □

Remark 1.

In general, the exponential family exhibits strong convexity, primarily within localized neighborhoods of , especially in sufficiently small regions. The main outcome of Theorem 1 is to quantify when this behavior occurs. Additionally, the conditions outlined in (8) can be construed as initial “burn-in” phases. Our idea is that an initial set of samples is required until the loss of approximates the minimum loss; subsequently, quadratic convergence takes effect. This parallels the idea in Newton’s method, quantifying the steps needed to enter the quadratic convergence phase. Constants and in inequality (9) can approach , particularly with an extended “burn-in” phase. A crucial element in the proof of Theorem 1 involves expanding the prediction error in terms of moments/products.

Lemma 1.

Let and . If infinite series and converge for any , where and with . Here, represents the i-th-order derivative of the function. Then, we have

Next, we will provide the error risk bound for estimation under the RE conditions. Generally, under a specific noise model, we set the regularization parameter () as a function of the noise level. Here, the statement of our theorem is carried out in a quantitative manner, clearly showing that the choice of the appropriate value depends on the norm of the measurement error (). Therefore, under relatively mild distribution assumptions, we can easily quantify in Theorem 2. In addition, we must make the measurement error sufficiently small so that the following conditions in Lemma 2 hold.

Lemma 2.

Note that the entry of measurement errors results in a mild dimensional dependence. Therefore, Lemma 2 shows that under RE conditions, the model shows good convergence rates. According to Hoeffding’s lemma and the inequality reported in [25], below, we present Proposition 1 and quantify the mild distribution hypothesis to obtain Theorem 2.

Proposition 1.

Assuming that are independent random variables and have a common Bernoulli distribution, it is obvious that meets the boundary condition () for . Then, we have

(i) Hoeffding’s lemma: for ;

(ii) Hoeffding’s inequality: , .

Furthermore, let ; then, for any , we have

with a probability of at least .
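The concentration behavior behind Proposition 1 can be checked numerically. The sketch below compares an empirical tail probability of a node degree with the standard two-sided Hoeffding bound $2\exp(-2t^2/m)$ for a sum of m independent variables taking values in [0, 1]. The function name `hoeffding_check` and the chosen probabilities are hypothetical.

```python
import numpy as np

def hoeffding_check(probs, t, reps=20000, seed=0):
    """Compare P(|deg - E deg| >= t) with the Hoeffding bound by simulation.

    probs : edge probabilities p_ij from one node to its m potential neighbours.
    t     : deviation threshold.
    """
    rng = np.random.default_rng(seed)
    probs = np.asarray(probs, dtype=float)
    # Each row holds m independent Bernoulli(p_j) draws; row sums are degrees.
    draws = rng.random((reps, probs.size)) < probs
    deg = draws.sum(axis=1)
    empirical = np.mean(np.abs(deg - probs.sum()) >= t)
    bound = 2.0 * np.exp(-2.0 * t**2 / probs.size)
    return empirical, bound

# Example: a node with 99 potential neighbours and heterogeneous probabilities.
probs = np.linspace(0.2, 0.8, 99)
for t in (5.0, 10.0, 15.0):
    emp, bnd = hoeffding_check(probs, t)
    print(f"t={t:4.1f}  empirical={emp:.4f}  bound={bnd:.4f}")
```

The bound holds without requiring the Bernoulli variables to be identically distributed, which matches the heterogeneous edge probabilities of the β-model.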

Since each degree in the β-model is a sum of independent Bernoulli random variables, which are bounded and hence sub-Gaussian, the following theorem follows immediately.

Theorem 2.

Let be a solution of (7) for the β model (). When , K is a constant, we have

and

with a probability of at least .

Proof of Theorem 2.

Let ; then, satisfies the conditions of Lemma 2. Using (13) in Lemma 2, we have

By applying (15) in Proposition 1, for any , with a probability of at least

which proves (16). For the second claim of Theorem 2, according to (14) we can obtain

with a probability of at least . So far, we have completed the proof of Theorem 2. □

Remark 2.

Lemma 2 provides a general result, and Theorem 2 is its specialization to a particular choice of the tuning parameter; it can therefore be proven directly from Lemma 2. The main theorem established in this paper, Theorem 2, is tighter than existing bounds.

4. Simulation Study

In this section, we conduct experiments to evaluate the consistency of the estimator on networks of finite size. For an undirected graph with n nodes, we generate data from the β-model as follows. Given the n-dimensional parameter vector ($\beta$), each element of the adjacency matrix A of the undirected graph follows a Bernoulli distribution with a success probability of

In this simulation, the true values of the parameter $\beta$ are set according to the following three cases (see [19]):

- Case 1: for and for , ;

- Case 2: for and for , ;

- Case 3: for and for , .

Furthermore, we consider three scenarios for the support of $\beta$ corresponding to different sparsity levels of $\beta$, from sparse to dense, that is, , where $\lfloor a \rfloor$ denotes the largest integer not exceeding a.
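The data-generating step of such a simulation can be sketched as follows. This is an illustration under assumptions: it uses the standard β-model edge probability of [7], and the values of `n`, `s`, and `signal` below are hypothetical placeholders rather than the settings of Cases 1–3. The function name `sample_beta_graph` is also hypothetical.

```python
import numpy as np

def sample_beta_graph(beta, rng):
    """Draw a symmetric 0/1 adjacency matrix from the beta-model (assumed form)."""
    n = beta.size
    S = beta[:, None] + beta[None, :]
    P = 1.0 / (1.0 + np.exp(-S))                   # p_ij = e^{b_i+b_j} / (1 + e^{b_i+b_j})
    upper = np.triu(rng.random((n, n)) < P, k=1)   # independent edges for i < j
    return (upper | upper.T).astype(int)           # symmetrize, zero diagonal

rng = np.random.default_rng(2023)
n, s, signal = 100, 5, 1.5       # hypothetical size, support size, and signal level
beta = np.zeros(n)
beta[:s] = signal                # sparse parameter vector supported on the first s nodes
A = sample_beta_graph(beta, rng)
degrees = A.sum(axis=1)
```

Only the upper triangle is sampled, so each pair of nodes contributes exactly one Bernoulli draw, as required for an undirected simple graph.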

We generate the undirected network A based on the above settings. Then, we fit the proposed sparse β-model with $\ell_1$ penalty to obtain the estimated parameter ($\hat{\beta}$). A gradient-based method is applied directly to the convex objective function (7) defined in Section 2. During the optimization procedure, the tuning parameter ($\lambda$) is set to , with set to be . To compute support-constrained MLEs, we use the proximal gradient descent algorithm, where the time-invariant step size is set to .

We carry out simulations under four different sizes of networks: , 200, 300, and 400. Each simulation is repeated = 1000 times. denotes the estimate of from the i-th simulation results, i.e., . Two evaluation criteria are used in this paper: the error and the error, which are defined as follows:

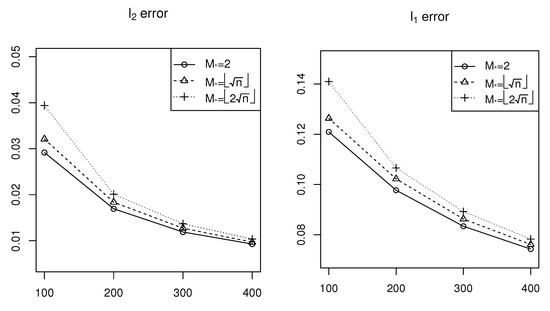

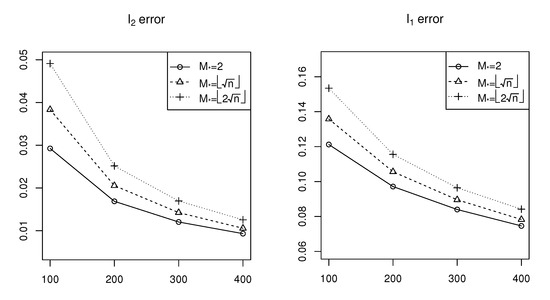

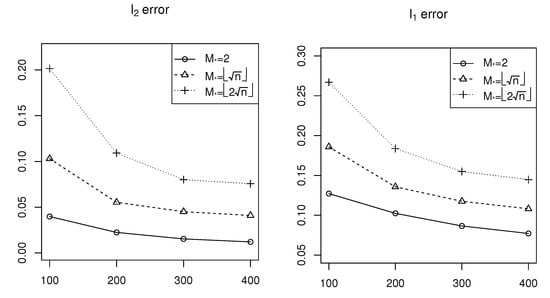

The simulation results are shown in Figure 1, Figure 2 and Figure 3 for Cases 1–3, respectively. According to Figure 1, both the error and the error decrease as the network size (n) increases, which means that the estimation accuracy generally improves as n increases. On the other hand, both the error and the error increase as increases, corresponding to a change in the sparsity level from sparse to dense; that is, the estimation accuracy is better in sparse cases. Similar conclusions can be drawn from Figure 2 and Figure 3.

Figure 1.

Simulation results of error and error for Case 1.

Figure 2.

Simulation results of error and error for Case 2.

Figure 3.

Simulation results of error and error for Case 3.

Comparing Figure 1, Figure 2 and Figure 3 across cases, we observe that as the signal strength, or signal-to-noise ratio, of $\beta$ increases, the estimation errors also increase. This indicates an inverse relationship between the estimation accuracy and the signal-to-noise ratio. These simulation results demonstrate the effectiveness of the estimation procedure for the sparse β-model with $\ell_1$ penalty proposed in this paper.

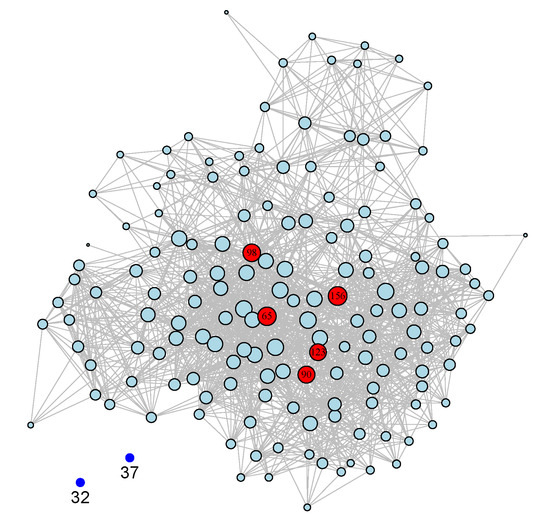

Finally, we illustrate the model with a real undirected network, the Enron email dataset [26], available from https://www.cs.cmu.edu/~enron/ (accessed on 7 May 2015). This dataset was originally acquired and made public by the Federal Energy Regulatory Commission during its investigation into fraudulent accounting practices. Some of the emails were deleted upon request from affected employees. The raw data are therefore messy and need to be cleaned before any analysis is conducted. Ref. [27] applied data cleaning strategies to compile the Enron email dataset, and we use their cleaned data for the subsequent analysis. We treat the data as a simple, undirected graph, where each edge denotes that at least one message was exchanged between the corresponding two nodes. We exclude messages with more than ten recipients, which is a subjectively chosen cutoff. The resulting network contains 156 nodes with 1634 undirected edges, as shown in Figure 4. The 0, 0.25, 0.5, 0.75, and 1 quantiles of the degrees are 0, 13, 19, 27, and 55, respectively. The sparse β-model can capture node heterogeneity. For example, the degree of the 52nd node is 1, and the degree of the 156th node is 55. It is therefore natural to associate the important nodes with their individual parameters while leaving the less important nodes as background nodes without associated parameters.

Figure 4.

Enron email dataset. The vertex sizes are proportional to nodal degrees. The red circles indicate a nodal degree of more than 43. The blue circles indicate isolated nodes.
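A degree summary of the kind reported for the Enron network can be computed from an edge list as sketched below. The toy edge list is hypothetical and is not the Enron data; the sketch also assumes that the five reported values correspond to the minimum, the three quartiles, and the maximum. The function name `degree_quantiles` is hypothetical.

```python
import numpy as np

def degree_quantiles(edges, n):
    """Build a simple undirected graph from (sender, recipient) pairs and
    return its degree sequence and the 0, 0.25, 0.5, 0.75, 1 degree quantiles."""
    A = np.zeros((n, n), dtype=int)
    for i, j in edges:
        if i != j:                       # ignore self-loops
            A[i, j] = A[j, i] = 1        # repeated messages collapse to one edge
    deg = A.sum(axis=1)
    return deg, np.quantile(deg, [0, 0.25, 0.5, 0.75, 1])

# Toy example with 5 nodes; node 4 is isolated and (1, 0) duplicates (0, 1).
edges = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 0)]
deg, q = degree_quantiles(edges, n=5)
print(deg, q)
```

Collapsing repeated messages into a single edge mirrors the convention that an edge records at least one message between two nodes.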

5. Conclusions

In this study, we investigated a sparse β-model with an $\ell_1$ penalty for network data. The degree parameter was estimated through an $\ell_1$ regularization problem, which can be solved by the proximal gradient descent method thanks to the convexity of the loss function. We established an optimal bound, corroborating the consistency of the proposed estimator, and supported it with simulation studies. One avenue for future research is to identify and integrate alternative penalties that are more adaptive and efficient, which could improve model performance and broaden the scope of application. Furthermore, more effective optimization algorithms, with better computational efficiency and adaptability to diverse network scenarios, should be investigated in future studies. The sparse β-model studied here serves as both a theoretical foundation and an algorithmic reference for future explorations into the sparse structures of other network models, such as network models with covariates [28], functional covariates [29], and partially functional covariates [30], for which we aim to establish non-asymptotic optimal prediction error bounds. We expect these tools to be adaptable to diverse network structures and scenarios.

Author Contributions

Conceptualization, X.Y. and C.L.; methodology, X.Y. and L.P.; software, L.P. and K.C.; validation, K.C.; writing—original draft preparation, X.Y.; writing—review and editing, X.Y. and C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Shenzhen University Research Start-up Fund for Young Teachers No. 868-000001032037.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

The Appendix provides the detailed proofs for the theoretical results reported in Section 3.

Proof of Lemma 1.

First, we notice that is the cumulant-generating function of the sufficient statistic. Thus, . In fact, it is known from Taylor’s formula that . For (10), given and , we have

which proves (10). Using the definition of the cumulant (), we know that . In addition, we notice that and . For (11), by performing Taylor expansion of the function at , we can obtain

This completes the proof. □

For the convenience of expression, we assume that is the k-th-order central moment of the random variable () distributed under . Before presenting the proof of the main results, we provide some lemmas that will be useful in the following sections.

Lemma A1.

Assume that μ and satisfy the conditions of Theorem 1. Let and . If μ is an analytic moment, then we have

If μ is an analytic cumulant, then we obtain

Proof.

We first prove the lower bound for (A1). According to the conditions of Theorem 1 and Lemma A1, we know that has an analytical moment (); then, we have

Then,

According to the definition of r, , and we can obtain

Thus,

For the upper bound, we can obtain

This proves (A1). For the case of an analytic cumulant, the proof of (A2) is similar, so we do not repeat it here. This completes the proof. □

Lemma A2.

Assuming that the definitions of μ and are the same as Theorem 1, then we have

Furthermore, if , then

Proof.

We only consider the case of an analytic standardized moment (the cumulant situation is similar). As uses the convexity of , we have . According to (10) in Lemma 1, we have

Using Jensen’s inequality, we know that the kurtosis satisfies according to (A3). Then, ; thus, . Note that , so

For the function on the interval , we can easily obtain . According to the definition of standardized moment, we notice that . Therefore, let ,

Then, (A5) is proven. For (A6), considering that , . Thus, according to (A8), we have

Since , . Applying Lemma A1 with , we obtain

In fact, given and , . Now, for , we have . Hence, , and according to Lemma 1, we obtain

This completes the proof of Lemma A2. □

Lemma A3.

Let , and suppose that is a solution of (7); then, for any , we have

Furthermore, if β only has support on M, then we have

Proof.

First, notice that is a solution of (7); then,

By adding to both sides of the above inequality, we can obtain

Thus,

According to the definitions of and (A13), we have

For (A12), according to the sparsity of , i.e., if and if ,

□

Lemma A4.

Proof.

We use the assumptions of Lemma A4 and (A11); then,

Thus,

Adding to both sides, we can obtain

In fact, for and for . Then,

Therefore, . Note that

Hence, . This completes the proof. □

Proof of Lemma 2.

Note that according to (7) and (A11), . According to Theorem 1,

In addition, using (A16) in Lemma A4, we know that satisfies the RE condition, so , i.e., Condition A holds. According to Schwarz’s inequality and Condition A,

According to (A12) in Lemma A3, we see that

Thus, we can obtain

Combining (A19) and (A20), we have

which proves (13). On the other hand, by applying (A20) to (A18), we can obtain

Therefore, using (A17) and (A22), we have

This completes the proof of Lemma 2. □

Proof of Proposition 1.

For , since is convex,

Then, according to ,

where , . Thus,

for all . We see that . After using Taylor’s formula to expand at , there exists such that

By applying to (A23), using the independence of , we can obtain

which proves Hoeffding’s lemma in Proposition 1. Next, we apply Hoeffding’s lemma to derive the Hoeffding’s inequality for the sum of bounded independent random variables (), with each bounded within (see [25]). We see that , and using Chernoff’s inequality for any ,

The last equality holds because the exponent is a quadratic function, so the smallest bound is attained at . Similarly, we can also prove that

Hence,

which proves Hoeffding’s inequality.
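The optimization step in the Chernoff argument can be made explicit as follows. This is the standard derivation for independent variables $X_i \in [a_i, b_i]$, written in assumed notation with $C = \sum_i (b_i - a_i)^2$ and $s > 0$ the free parameter of the exponential bound.

```latex
\mathbb{P}\Big( \sum_{i} (X_i - \mathbb{E} X_i) \ge t \Big)
  \;\le\; \inf_{s > 0} \exp\Big( -s t + \frac{s^{2} C}{8} \Big),
\qquad
\frac{d}{ds}\Big( -s t + \frac{s^{2} C}{8} \Big) = -t + \frac{s C}{4} = 0
  \;\Longrightarrow\; s^{*} = \frac{4 t}{C},
```

```latex
-s^{*} t + \frac{(s^{*})^{2} C}{8}
  = -\frac{4 t^{2}}{C} + \frac{2 t^{2}}{C}
  = -\frac{2 t^{2}}{C}
\;\Longrightarrow\;
\mathbb{P}\Big( \sum_{i} (X_i - \mathbb{E} X_i) \ge t \Big)
  \le \exp\Big( -\frac{2 t^{2}}{\sum_{i} (b_i - a_i)^{2}} \Big).
```

Applying the same argument to $-X_i$ and taking a union bound yields the two-sided version with the factor 2.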

Let us consider an event () of KKT conditions evaluated at as follows:

Note that . Then, using Hoeffding’s inequality in Proposition 1 with , for any , we have

Then, according to (A24), . Hence, .

On the other hand, since ,

Therefore, applying the value of in (A25), we have

This completes the proof of Proposition 1. □

References

- Fienberg, S.E. A brief history of statistical models for network analysis and open challenges. J. Comput. Graph. Stat. 2012, 21, 825–839.

- Erdős, P.; Rényi, A. On random graphs I. Publ. Math. Debr. 1959, 6, 18.

- Gilbert, E.N. Random graphs. Ann. Math. Stat. 1959, 30, 1141–1144.

- Kolaczyk, E.D.; Krivitsky, P.N. On the question of effective sample size in network modeling: An asymptotic inquiry. Stat. Sci. Rev. J. Inst. Math. Stat. 2015, 30, 184.

- Newman, M. Networks; Oxford University Press: Oxford, UK, 2018.

- Holland, P.W.; Laskey, K.B.; Leinhardt, S. Stochastic blockmodels: First steps. Soc. Netw. 1983, 5, 109–137.

- Chatterjee, S.; Diaconis, P.; Sly, A. Random graphs with a given degree sequence. Ann. Appl. Probab. 2011, 21, 1400–1435.

- Mukherjee, R.; Mukherjee, S.; Sen, S. Detection thresholds for the β-model on sparse graphs. Ann. Stat. 2018, 46, 1288–1317.

- Du, Y.; Qu, L.; Yan, T.; Zhang, Y. Time-varying β-model for dynamic directed networks. Scand. J. Stat. 2023.

- Abbe, E. Community Detection and Stochastic Block Models: Recent Developments. J. Mach. Learn. Res. 2018, 18, 6446–6531.

- Rinaldo, A.; Petrović, S.; Fienberg, S.E. Maximum likelihood estimation in the β-model. Ann. Stat. 2013, 41, 1085–1110.

- Pan, L.; Yan, T. Asymptotics in the β-model for networks with a differentially private degree sequence. Commun. Stat. Theory Methods 2020, 49, 4378–4393.

- Luo, J.; Liu, T.; Wu, J.; Ahmed Ali, S.W. Asymptotic in undirected random graph models with a noisy degree sequence. Commun. Stat. Theory Methods 2022, 51, 789–810.

- Fan, Y.; Zhang, H.; Yan, T. Asymptotic Theory for Differentially Private Generalized beta-models with Parameters Increasing. Stat. Interface 2020, 13, 385–398.

- Chen, N.; Olvera-Cravioto, M. Directed random graphs with given degree distributions. Stoch. Syst. 2013, 3, 147–186.

- Kim, H.; Del Genio, C.I.; Bassler, K.E.; Toroczkai, Z. Constructing and sampling directed graphs with given degree sequences. New J. Phys. 2012, 14, 023012.

- Yan, T.; Leng, C.; Zhu, J. Asymptotics in directed exponential random graph models with an increasing bi-degree sequence. Ann. Stat. 2016, 44, 31–57.

- Yan, T.; Xu, J. A central limit theorem in the β-model for undirected random graphs with a diverging number of vertices. Biometrika 2013, 100, 519–524.

- Chen, M.; Kato, K.; Leng, C. Analysis of networks via the sparse β-model. J. R. Stat. Soc. Ser. B Stat. Methodol. 2021, 83, 887–910.

- Karwa, V.; Slavković, A. Inference Using Noisy Degrees: Differentially Private Beta-Model and Synthetic Graphs. Ann. Stat. 2016, 44, 87–112.

- Meinshausen, N.; Yu, B. Lasso-type recovery of sparse representations for high-dimensional data. Ann. Stat. 2009, 37, 246–270.

- Bickel, P.J.; Ritov, Y.; Tsybakov, A.B. Simultaneous analysis of Lasso and Dantzig selector. Ann. Stat. 2009, 37, 1705–1732.

- Huang, H.; Gao, Y.; Zhang, H.; Li, B. Weighted Lasso estimates for sparse logistic regression: Non-asymptotic properties with measurement errors. Acta Math. Sci. 2021, 41, 207–230.

- Bühlmann, P.; Van De Geer, S. Statistics for High-Dimensional Data: Methods, Theory and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2011.

- Zhang, H.; Chen, S.X. Concentration inequalities for statistical inference. Commun. Math. Res. 2021, 37, 1–85.

- Cohen, W.W. Enron Email Dataset. Available online: https://www.cs.cmu.edu/enron/ (accessed on 7 May 2015).

- Zhou, Y.; Goldberg, M.; Magdon-Ismail, M.; Wallace, A. Strategies for cleaning organizational emails with an application to Enron email dataset. In Proceedings of the 5th Conference of North American Association for Computational Social and Organizational Science (No. 0621303), Atlanta, GA, USA, 7–9 June 2007.

- Stein, S.; Leng, C. A Sparse β-Model with Covariates for Networks. arXiv 2020, arXiv:2010.13604.

- Liu, C.; Zhang, H.; Jing, Y. Model-assisted estimators with auxiliary functional data. Commun. Math. Res. 2022, 38, 81–98.

- Zhang, H.; Lei, X. Growing-dimensional Partially Functional Linear Models: Non-asymptotic Optimal Prediction Error. Phys. Scr. 2023, 98, 095216.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).