Abstract

Deep learning approaches have achieved notable success in computer-aided medical image analysis, improving diagnostic precision across a range of medical disorders. However, these systems are vulnerable to adversarial attacks, which can cause incorrect diagnoses with substantial clinical implications. At the same time, the field has made notable progress in defending deep medical diagnostic systems against such targeted attacks. This article provides a comprehensive survey of recent advances in adversarial attacks and the corresponding defense strategies in the context of medical image analysis. In addition, we present a comprehensive conceptual analysis of adversarial attacks and defense strategies designed for the interpretation of medical images. Drawing on qualitative and quantitative findings, the survey concludes with a thorough discussion of the open problems in adversarial attack and defense mechanisms that are unique to medical image analysis systems, opening up new directions for future research. We identify the main open problems in adversarial attack and defense for medical imaging as datasets and labeling, computational resources, robustness against targeted attacks, evaluation of transferability and adaptability, interpretability and explainability, real-time detection and response, and adversarial attacks in multi-modal fusion. By addressing these research gaps and pursuing these future directions, research on adversarial attack and defense in medical imaging can move toward more secure, dependable, and clinically useful deep learning systems.

Keywords:

medical image analysis; deep learning; adversarial attack; adversarial defense; deep neural networks

MSC: 68T01

1. Introduction

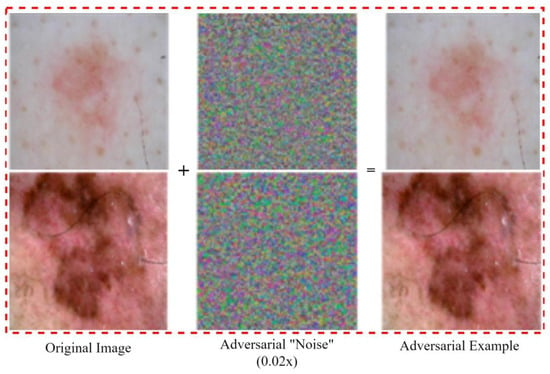

Deep neural networks (DNNs) have achieved remarkable success in natural image-processing tasks. Medical image analysis has benefited as well [1,2,3], with applications including skin lesion diagnosis [4], diabetic retinopathy detection, and tumor segmentation [5,6]. Notably, an AI-based diabetic retinopathy detection system [7,8] has been approved by the United States Food and Drug Administration (FDA) [9]. In addition to improving efficiency and patient outcomes, deep learning-driven medical diagnosis models can reduce clinical costs. Despite this promise, however, deep learning is vulnerable to adversarial attacks [10,11], which can severely disrupt DNNs (Figure 1). Adversarial examples are generated by adding imperceptible perturbations to legitimate samples, which makes them difficult to detect manually. This adversarial vulnerability poses a significant challenge for applying DNNs in safety-critical scenarios such as medical image analysis [12,13,14], as it can result in misdiagnosis, insurance fraud, and a loss of confidence in AI-based medical technology.

Figure 1.

Examples of medical image adversarial attacks.

Researchers who have analyzed the adversarial vulnerability of computer-aided diagnosis models from different perspectives have emphasized the importance of robustness against adversarial attacks in medical image analysis [13,15,16]. As a result, much research has focused on defending against these attacks. Previous work has concentrated predominantly on adversarial training to improve network resilience and on adversarial detection to identify adversarial inputs [17,18,19]. Other methods apply image-level preprocessing [20] or feature enhancement techniques [21] for adversarial defense. These defense strategies have proven effective in establishing robustness against adversarial attacks for unified pattern recognition in medical diagnosis [22]. However, the experimental settings and evaluation protocols of different defense methods vary widely, which makes comparisons difficult. Several survey papers on adversarial attacks and defenses in medical image analysis exist, but many focus on particular medical tasks or lack a detailed taxonomy and an exhaustive evaluation of existing attack and defense methods for computer-assisted diagnosis models. In addition, recent developments in adversarial attack and defense for medical image analysis systems have not yet been adequately covered.

Medical image analysis is essential to healthcare because it enables precise diagnosis, treatment planning, and disease monitoring. However, as machine learning (ML) and artificial intelligence (AI) are increasingly used to process medical images, concerns have been raised about the possible effects of adversarial attacks. Marinovich et al. highlighted the importance of minimizing false alarms through thorough image analysis in their article “Artificial Intelligence (AI) for breast cancer screening” [23], showing that an AI model for breast cancer diagnosis from mammograms had a false-positive rate of 3.5%. At the same time, human error remains costly: in 2019, a US hospital settled a lawsuit for USD 8.5 million after a radiologist failed to detect a tumor in a patient’s CT scan [24]. Accurate AI-based image analysis can provide a reliable second opinion and thereby lower the likelihood of such mistakes. In 2021, Zbrzezny et al. demonstrated an adversarial attack against a deep learning model used to diagnose diabetic retinopathy [25]: by adding barely noticeable noise to retinal images, they deceived the model into misjudging the severity of the disease. This demonstrates how susceptible AI healthcare systems are. In 2023, a major healthcare organization suffered a data breach in which malicious actors obtained patient details, including medical images [26]; such breaches can lead to misuse of personal health information and identity theft. In several African countries, AI-enabled mobile applications are being used to analyze medical images, reducing the dependence on radiology specialists and improving diagnostic precision. Medical image analysis is thus essential for precise diagnosis and treatment decisions.
However, the vulnerability of AI systems to adversarial attacks and the possibility of human error highlight the need for reliable and secure image analysis solutions that protect patient privacy and health.

The purpose of this article is to address the aforementioned research problems by providing a systematic overview of recent advances in adversarial attack and defense for medical image analysis and by discussing their benefits and limitations. In addition, we conduct experiments with widely known adversarial attack and defense strategies. The main contributions of this manuscript are summarized as follows:

- This review presents an up-to-date, comprehensive analysis of adversarial attack and defense strategies in the context of medical image analysis, covering the types of attacks and defense tactics together with state-of-the-art medical analysis models for adversarial attack and defense.

- In addition, we carry out comprehensive experiments on classification and segmentation tasks to support the findings of this survey.

- We conclude by identifying current issues and offering insightful recommendations for future research.

Following this introduction, Section 2 presents the background of medical image analysis and of adversarial attack and defense. Section 3 gives an overview of medical image adversarial attack and defense and then describes medical adversarial attacks and defenses in more detail. In Section 4, we carry out an extensive experiment to support our findings. Challenges and future work are presented in Section 5, and Section 6 concludes the paper. Figure 2 illustrates the PRISMA strategy we used to select papers for this survey.

Figure 2.

Paper selection using the PRISMA model strategy.

2. Review Background

2.1. Medical Image Analysis

Deep learning has recently been applied extensively across many domains [27,28,29], including the medical sector. It can also support the development of new medications, medical decision-making, and novel approaches to creating various forms of medicine [30,31]. Clinical diagnosis relies heavily on medical imaging modalities such as X-ray, ultrasound, computed tomography (CT), positron emission tomography (PET), and magnetic resonance imaging (MRI), which are then examined by professionals or radiologists [32,33]. The main purpose of medical image processing is to make the represented data more comprehensible [34]. Medical image analysis must be performed precisely and quickly, since any delay or incorrect diagnosis can harm a patient’s health [35]. Automated deep learning methods are needed to achieve this level of accuracy and speed. The main goal of medical image interpretation is to identify which parts of the body are affected by a disease, helping doctors understand how lesions develop. The examination of a medical image mostly consists of four steps: image preprocessing, segmentation, feature extraction, and pattern detection and classification [36,37,38]. Preprocessing corrects undesired image defects or enhances image data for later processing. Segmentation separates regions such as tumors and organs for further analysis. Feature extraction derives specific information from regions of interest (ROIs) to help identify them [39,40]. Finally, classification assigns each ROI to a category based on the extracted features.
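To make the four stages concrete, the sketch below runs a toy 4×4 "scan" through each of them. It is a minimal illustration under stated assumptions, not a clinical method: the min-max normalization, the fixed 0.5 intensity threshold, the two hand-crafted features, and the area-based decision rule (`min_area`) are all hypothetical choices, and every function name is illustrative.

```python
# Toy sketch of the four-stage pipeline described above:
# preprocessing -> segmentation -> feature extraction -> classification.
# Threshold, features, and decision rule are hypothetical, not a clinical method.


def preprocess(image):
    """Min-max normalize pixel intensities to [0, 1]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a constant image
    return [[(p - lo) / span for p in row] for row in image]


def segment(image, threshold=0.5):
    """Binary mask marking candidate region-of-interest (ROI) pixels."""
    return [[1 if p >= threshold else 0 for p in row] for row in image]


def extract_features(image, mask):
    """Hand-crafted ROI features: area (pixel count) and mean intensity."""
    roi = [p for img_row, m_row in zip(image, mask)
           for p, m in zip(img_row, m_row) if m]
    area = len(roi)
    mean_intensity = sum(roi) / area if area else 0.0
    return {"area": area, "mean_intensity": mean_intensity}


def classify(features, min_area=3):
    """Hypothetical rule: flag large bright ROIs as 'suspicious'."""
    return "suspicious" if features["area"] >= min_area else "normal"


scan = [
    [10, 12, 11, 10],
    [11, 90, 95, 12],
    [10, 88, 92, 11],
    [12, 11, 10, 10],
]
norm = preprocess(scan)
mask = segment(norm)
feats = extract_features(norm, mask)
print(feats["area"], classify(feats))  # 4 suspicious
```

In a deep learning system, the last three stages are typically learned jointly by the network rather than hand-coded as here.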

Deep learning has significantly advanced several AI disciplines, yet it remains exposed to severe security risks. Among these, adversarial attacks have received the most attention, because they reveal a range of potential security issues in deep learning applications. Because adversarial examples are visually almost identical to their clean counterparts, they can evade human inspection while also disrupting the predictions of DNNs. Computer-aided diagnostic systems may significantly amplify this risk, which could lead to fatal misinterpretations and possibly a crisis of public trust [13]. Consider a dataset in which $\mathcal{D}$ denotes the data distribution over pairs of samples $x$ and their corresponding labels $y$. We denote the deep learning model for medical analysis as $f_\theta$, where $\theta$ is the network parameter. Adversarial examples $x_{adv}$ are typically produced by adding an imperceptible perturbation $\delta$ to clean instances, which can be formally described as follows:

$$x_{adv} = x + \delta, \quad \text{s.t.} \quad \|\delta\|_p \le \epsilon, \quad f_\theta(x_{adv}) \neq y, \qquad (x, y) \sim \mathcal{D} \qquad (1)$$

where $\epsilon$ is the maximum permitted perturbation magnitude, which keeps the perturbation subtle, and $\|\cdot\|_p$ is the distance metric. By definition, adversarial samples must lie close to their clean counterparts under an established measure, such as the $\ell_p$ distance. Under the widely used $\ell_\infty$ threat model, the adversarial perturbation is norm-bounded as $\|\delta\|_\infty \le \epsilon$, and the attacker searches for the perturbation that maximizes the model's loss, as represented in Equation (2):

$$\delta^{*} = \arg\max_{\|\delta\|_\infty \le \epsilon} \mathcal{L}\big(f_\theta(x + \delta), y\big) \qquad (2)$$

where the loss function $\mathcal{L}$ largely depends on the actual task (segmentation, detection, or classification). Equation (2) can be solved using Newton-like approaches [14,41] or gradient-based algorithms. Representative white-box adversarial attack techniques include the Limited-memory BFGS (L-BFGS) method [14] and the Fast Gradient Sign Method (FGSM) [42], among others. There are also other threat models, such as the black-box attack, which in real-life situations poses a greater security risk to computer-aided diagnostic models [12,43].
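For a model whose input gradient is available in closed form, the bounded loss maximization described above can be approximated directly. The sketch below is a minimal, hypothetical example that assumes a logistic-regression classifier with binary cross-entropy loss, so the gradient with respect to the input is simply (p − y)·w. For a real DNN the gradient would come from backpropagation. `fgsm` takes a single signed-gradient step of size ε, and `pgd` takes smaller steps and projects back onto the ℓ∞ ball of radius ε after each one. The weights, input, and ε are illustrative values only.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """p(y = 1 | x) for a logistic model (hypothetical stand-in for f_theta)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def input_gradient(w, b, x, y):
    """Gradient of the binary cross-entropy loss w.r.t. the input x: (p - y) * w."""
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def clip(v, lo, hi):
    return max(lo, min(hi, v))


def fgsm(w, b, x, y, eps):
    """One step of size eps along the sign of the loss gradient, kept in [0, 1]."""
    g = input_gradient(w, b, x, y)
    return [clip(xi + eps * sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]


def pgd(w, b, x, y, eps, alpha, steps):
    """Repeated signed-gradient steps, each projected onto the l_inf eps-ball around x."""
    x_adv = list(x)
    for _ in range(steps):
        g = input_gradient(w, b, x_adv, y)
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Projection: stay within eps of the clean input and inside the valid pixel range.
        x_adv = [clip(clip(xa, xi - eps, xi + eps), 0.0, 1.0) for xa, xi in zip(x_adv, x)]
    return x_adv


w, b = [2.0, -1.0, 1.5], -1.0  # illustrative model parameters
x, y = [0.6, 0.4, 0.5], 1      # clean input, correctly classified as class 1
x_fgsm = fgsm(w, b, x, y, eps=0.15)
x_pgd = pgd(w, b, x, y, eps=0.15, alpha=0.05, steps=5)
print(round(predict(w, b, x), 3), round(predict(w, b, x_fgsm), 3))  # 0.634 0.469
```

Each pixel moves by at most 0.15, yet the predicted probability of the true class drops below 0.5.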

Several adversarial defense mechanisms have been developed to protect deep learning models from adversarial attacks [38,39,44]. The most popular among them is adversarial training [42,45], which increases the inherent resilience of a network by adding adversarial examples to the training data. At inference time, the adversarially trained model is expected to classify both clean and adversarial samples correctly. Building on Equation (2), conventional adversarial training [21] can be written as the following min–max optimization problem:

$$\min_{\theta} \; \mathbb{E}_{(x, y) \sim \mathcal{D}} \Big[ \max_{\|\delta\|_\infty \le \epsilon} \mathcal{L}\big(f_\theta(x + \delta), y\big) \Big] \qquad (3)$$

The inner maximization searches for the most harmful perturbations against the target network, while the outer minimization updates the network parameters $\theta$ to reduce the loss under these worst-case perturbations. In general, adversarial training improves the intrinsic robustness of deep neural networks (DNNs) without adding any extra components, while largely preserving their ability to make accurate inferences on clean data. Other defense strategies instead pre-process the input data (both clean and adversarial instances) without modifying the downstream computer-aided analysis network [20,46]. In simple terms, data pre-processing aims to preserve the original form of clean inputs while converting adversarial samples into benign equivalents for subsequent inference. A pre-processing-based defense can then be obtained from the following optimization problem:

$$\min_{\phi} \; \mathbb{E}_{(x, y) \sim \mathcal{D}} \Big[ \mathcal{L}\big(f_\theta(g_\phi(x_{adv})), y\big) + \lambda \, \big\| g_\phi(x) - x \big\| \Big] \qquad (4)$$

where $g_\phi$ is the pre-processing module, which may be a learned model or a fixed operator intended to mitigate the effects of adversarial perturbations, and $\lambda$ is a weight coefficient. However, the discriminative regions in medical images typically cover only a small number of pixels. Compared with natural images, their discriminative features therefore run a higher risk of being lost during pre-processing. Many adversarial attack and defense strategies have demonstrated outstanding results on natural images [47]. Nevertheless, natural-image tasks and medical-imaging tasks differ fundamentally in several respects, including data characteristics, features, and task properties. As a result, it is difficult to transfer adversarial attack and defense strategies directly from natural imagery to medicine. In addition, several studies have shown that medical images may be much more vulnerable to adversarial attacks than natural images [13,17,43]. Given the size of the healthcare industry and the significant impact of computer-aided diagnosis, the safety and reliability of computer-aided diagnostic models must be considered carefully. This paper therefore provides a thorough overview of current developments in adversarial attack and defense strategies in the field of medical image analysis.
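The min–max adversarial training objective described above can be sketched on a toy problem. This is a minimal illustration under loudly stated assumptions: the model is a hypothetical logistic-regression stand-in, the inner maximization is approximated by a single FGSM step (stronger attacks such as PGD are common in practice), and the outer minimization is plain per-sample SGD on the adversarial examples. The data, ε, learning rate, and epoch count are invented for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """Inner maximization, approximated by one signed-gradient step (l_inf budget eps)."""
    p = predict(w, b, x)
    x_adv = []
    for wi, xi in zip(w, x):
        g = (p - y) * wi  # d(BCE)/dx_i for a logistic model
        s = (g > 0) - (g < 0)
        x_adv.append(min(1.0, max(0.0, xi + eps * s)))
    return x_adv


def adversarial_train(data, eps=0.1, lr=0.5, epochs=200):
    """Outer minimization: SGD on the loss of the adversarial examples found by fgsm."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)  # worst-case input for the current parameters
            p = predict(w, b, x_adv)
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x_adv)]
            b -= lr * (p - y)
    return w, b


def robust_accuracy(w, b, data, eps):
    """Fraction of samples still classified correctly after an FGSM attack."""
    hits = sum((predict(w, b, fgsm(w, b, x, y, eps)) >= 0.5) == (y == 1) for x, y in data)
    return hits / len(data)


# Illustrative two-feature data: class 1 near (0.8, 0.8), class 0 near (0.2, 0.2).
data = [([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.7, 0.8], 1),
        ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.2], 0)]
w, b = adversarial_train(data)
print(robust_accuracy(w, b, data, eps=0.1))
```

No extra components are added to the model: robustness comes only from what the parameters learn during training, which is the property the text attributes to adversarial training.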

Pattern detection and classification are at the core of medical image analysis in contemporary healthcare. The central idea of this multidimensional approach is to use advanced technology to extract valuable information from medical images such as X-rays, MRIs, CT scans, and ultrasounds. These images, which are frequently complex and rich in fine detail, provide essential insights into the inner workings of the human body and enable the detection, categorization, and management of numerous medical conditions. Fundamentally, pattern recognition involves carefully examining these images to find abnormalities, irregularities, or specific traits that might indicate a condition, an injury, or another health-related issue. Classification goes a step further by assigning the discovered patterns to distinct groups or diagnoses. Artificial intelligence and machine learning algorithms are crucial here because they can analyze enormous volumes of image data and spot patterns that the human eye might miss [48,49]. Effective pattern recognition and classification have a significant impact on medical image analysis: they make it possible to identify diseases early, plan effective treatments, and track a patient’s progress over time, while also improving patient satisfaction and reducing diagnostic errors. Researchers and healthcare professionals continue to refine and extend the methods and tools for pattern detection and classification in this dynamic field, pushing the limits of medical image analysis and redefining how diseases are identified and treated. This overview only scratches the surface of an important field that is at the forefront of contemporary medicine and offers patients everywhere hope and innovation.

2.2. Adversarial Attack and Defense

The vulnerability of deep neural networks (DNNs) has not yet been fully explained or justified technically. A DNN is trained on a large quantity of input data and derives its predictions from its internal architecture and learned parameters. Szegedy et al. [9] were the first to reveal the susceptibility of deep neural network models in image classification: adversarial samples produced by applying a perturbation to the original image were indistinguishable from it to the human eye, yet the deep learning network misclassified them. Given the potential risks, real-world adversarial attacks on deep learning models raise serious concerns. In a notable investigation, Eykholt et al. [50] demonstrated the viability of robust physical-world attacks by placing black-and-white stickers on a real traffic sign, causing deliberate misclassification. These attacks are especially dangerous because they remain effective under a wide range of physical conditions, such as changing viewing angles, distances, and image qualities. This demonstrates how adversaries could modify real-world objects such as traffic signs to mislead deep learning algorithms. Such attacks can deceive autonomous systems such as self-driving vehicles, with potentially disastrous consequences on the road and serious ramifications for safety and security.

Real-world adversarial attacks on deep learning algorithms raise serious issues and can even be fatal, especially in the medical industry. Misclassification of medical images caused by an attack might result in improper or delayed therapy, which could put patients’ lives in danger. It is therefore essential to recognize the need to protect ML models in the medical field and to create strong defensive measures that reduce the dangers posed by adversarial attacks. Ma et al. [17] concentrated on adversarial attacks against deep learning-based algorithms for medical image analysis. Their experiments on benchmark medical imaging datasets demonstrated that, owing to the special properties of medical image data and deep neural network models, adversarial attacks on medical images are easier to craft. They also showed that medical adversarial perturbations frequently affect tissue outside the pathological regions, producing deep features that are highly distinctive and therefore easy to distinguish. In the same vein, Paul et al. [47] built an ensemble-based defense mechanism and examined the effect of adversarial attacks on the accuracy of lung nodule malignancy prediction. To increase resilience against adversarial attacks, they also investigated including adversarial images in the training set. Adversarial attacks, notably the Fast Gradient Sign Method (FGSM) and one-pixel attacks, considerably reduced the accuracy of CNN predictions. They report that training on adversarial images and using multi-initialization ensembles can improve classification accuracy. Ozbulak et al. [51] examined the effect of adversarial attacks on deep learning models for the segmentation of skin lesions and glaucoma optic discs. Furthermore, they developed the Adaptive Segmentation Mask Attack, a novel algorithm that produces adversarial examples whose predicted segmentation masks closely match attacker-chosen target masks, using perturbations that are largely invisible to the human eye.
This study shows the need for strong defenses to guarantee the dependability and accuracy of segmentation models used in healthcare settings.

3. Overview of Medical Image Adversarial Attack and Defense

This section surveys medical image adversarial attack and defense. It offers a detailed dissection of medical adversarial attacks alongside an in-depth investigation of defense strategies, examining both attack and defense methodologies in the context of medical image analysis. Figure 3 shows the yearly number of publications on adversarial attack and defense in medical image analysis.

Figure 3.

The number of papers published per year related to adversarial attack and defense for medical image analysis.

A. Medical Image Adversarial Attack and Defense: Classification Task

Classification partitions medical images into discrete groups, typically corresponding to different conditions or diseases, as shown in Figure 4. Adversarial attacks on classification tasks strategically modify input images to elicit an incorrect classification. By introducing modifications that are difficult to detect, attackers can mislead the model’s decision-making. Successful classification attacks have serious consequences, as they can result in incorrect treatment, delayed interventions, and harm to patients’ well-being. Paschali et al. [12] used an innovative methodology to assess the resilience of deep learning networks in medical imaging, examining the weaknesses of state-of-the-art networks with adversarial examples. For classification, they used techniques such as FGSM, DeepFool (DF) [52], and saliency map attacks (SMA) [53], whereas for semantic segmentation they applied dense adversarial generation (DAG) [54] with varying levels of perturbation and complexity. Their results indicate that images corrupted by noise are classified similarly to clean images.

Figure 4.

Example of an adversarial attack in a classification task scenario.

Adversarial examples, however, show a distinct tendency to be classified into other categories. Gaussian noise decreased the model’s classification confidence, whereas most adversarial attacks produced misclassifications with high confidence. Adversarial examples are therefore better suited than noisy test images for evaluating model resilience. Finlayson et al. [55] conducted a comprehensive investigation of the vulnerabilities of deep neural network models used in medicine. They applied Projected Gradient Descent (PGD) and a basic patch attack to three foundational models for classifying medical disorders: diabetic retinopathy, pneumothorax, and melanoma. Their findings suggest that both forms of attack have a high success rate and are undetectable by human observers. Moreover, these attacks appear to be effective even against advanced medical classifiers, notably the ResNet-50 model. Importantly, the attacker’s degree of access to the network does not significantly affect the efficacy of these attacks.
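The contrast between random noise and adversarial perturbations can be reproduced on a toy model. The sketch below is a hypothetical illustration, not a reproduction of the experiments in [12]. It assumes a 100-pixel logistic classifier with uniform weights and compares the average confidence under Gaussian noise (σ equal to the adversarial budget ε) with a single FGSM step of the same per-pixel magnitude. Because the FGSM step aligns every pixel change with the gradient, its effect on the logit grows with the ℓ1 norm of the weights, while zero-mean noise largely cancels out.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def prob(w, b, x):
    """p(y = 1 | x) for a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


DIM, EPS = 100, 0.1
w = [0.5] * DIM        # hypothetical weights
b = -23.0              # chosen so the clean logit is 2.0
x_clean = [0.5] * DIM  # clean input, true label y = 1

# FGSM against y = 1: the loss gradient is (p - 1) * w, so its sign is -sign(w).
x_adv = [min(1.0, max(0.0, xi - EPS * ((wi > 0) - (wi < 0)))) for xi, wi in zip(x_clean, w)]

# Gaussian noise with the same per-pixel scale, averaged over many draws.
rng = random.Random(0)
noisy = []
for _ in range(200):
    x_noisy = [min(1.0, max(0.0, xi + rng.gauss(0.0, EPS))) for xi in x_clean]
    noisy.append(prob(w, b, x_noisy))
mean_noisy = sum(noisy) / len(noisy)

print(f"clean: {prob(w, b, x_clean):.3f}")      # clean: 0.881
print(f"gaussian noise (mean): {mean_noisy:.3f}")
print(f"adversarial: {prob(w, b, x_adv):.3f}")  # adversarial: 0.047
```

The noisy inputs stay on the correct side of the decision boundary with somewhat reduced confidence, while the adversarial input is assigned to the wrong class with high confidence.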

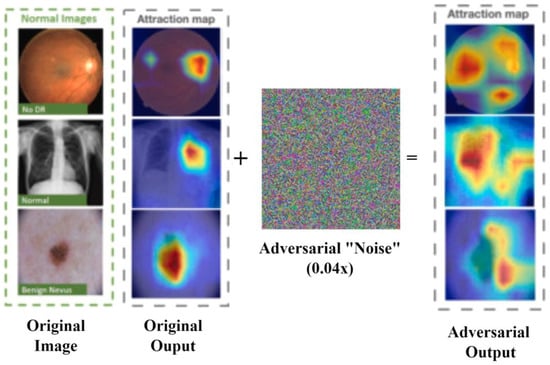

Adversarial attacks such as FGSM, the Basic Iterative Method (BIM) [56], and C&W attacks were used in the same medical settings as in [55], with a specific emphasis on fundoscopy, chest X-ray, and dermoscopy, and were applied to datasets covering both two-class and multi-class classification. Taghanaki et al. [57] conducted a thorough investigation of the susceptibility of deep learning techniques that classify chest X-ray images into different disease categories. They analyzed the performance of two deep neural networks under 10 diverse adversarial attacks. In contrast to previous studies that employed a single gradient-based attack, they examined several gradient-based, score-based, and decision-based attack models on the Inception-ResNetv2 and NasNet-large architectures, using chest X-ray images for evaluation. To examine the vulnerabilities of convolutional neural networks (CNNs) more comprehensively, Yilmaz et al. [58] carried out a pioneering experiment to assess the sensitivity of a mammographic image classifier to adversarial attacks. They measured the similarity between clean and adversarial images using the structural similarity index measure (SSIM), a perceptual model for assessing image similarity, and applied FGSM attacks to trained CNNs. More recently, a category of sophisticated attacks known as universal adversarial perturbations (UAPs) has been presented [52]. These perturbations are image-agnostic: a single perturbation can fool the model on most inputs, which makes such attacks more practical and effective. The approach uses an iterative algorithm that accumulates slight perturbations over a set of input images.
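Since [58] relies on SSIM to compare clean and adversarial mammograms, a small sketch of the index may help. The version below is a simplified, hypothetical implementation that evaluates the standard SSIM formula once over the whole image (a single global window) with the usual constants K1 = 0.01 and K2 = 0.03. The widely used formulation instead averages the index over local, typically Gaussian-weighted windows, so values will differ from library implementations. The toy images are invented for illustration.

```python
def ssim_global(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized flattened images."""
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((c - mu_y) ** 2 for c in y) / n
    cov_xy = sum((a - mu_x) * (c - mu_y) for a, c in zip(x, y)) / n
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2  # stabilizing constants
    luminance_num = 2 * mu_x * mu_y + c1
    structure_num = 2 * cov_xy + c2
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return luminance_num * structure_num / den


clean = [16.0 * i for i in range(16)]  # toy 4x4 image, flattened
perturbed = [p + (2 if i % 2 == 0 else -2) for i, p in enumerate(clean)]  # small +/-2 change
inverted = [255.0 - p for p in clean]  # strong distortion for comparison

print(round(ssim_global(clean, perturbed), 4))  # 0.9996
print(round(ssim_global(clean, inverted), 4))
```

A small adversarial-style perturbation keeps SSIM close to 1, which is why a high SSIM alone does not guarantee that an image is benign.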

The use of chest X-ray images to understand different types of disease has attracted significant attention among physicians and radiologists because of the potential for automated assessment with deep learning networks [59,60]. As a result, safeguarding the integrity of these models has become a matter of utmost importance. Rao et al. [61] conducted a comprehensive investigation of attack and defense strategies for thorax disease classification from chest X-rays, comparing five attack types: DAA, DII-FGSM, MI-FGSM, FGSM, and PGD. Prior research has also shown that deep learning networks used for COVID-19 prediction are vulnerable to adversarial attacks. Rahman et al. [62] were among the first to analyze comprehensively the effects of adversarial perturbations on deep learning networks in this setting. Their analysis covered six distinct deep learning applications designed to diagnose COVID-19, and they also applied multi-modal adversarial examples to several COVID-19 diagnostic algorithms. Image quality in medical imaging is frequently degraded by irregular illumination. Building on this observation, Cheng et al. [63] introduced a new type of attack, the “adversarial exposure attack”, which creates adversarial images by manipulating image exposure in order to mislead the deep neural networks underlying the image recognition system.
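Of the attacks compared in [61], MI-FGSM adds a momentum term to iterative FGSM. The L1-normalized gradient is accumulated across iterations, and each step follows the sign of the accumulated direction, which stabilizes the update. The sketch below is a minimal, hypothetical illustration that assumes a logistic-regression stand-in model whose input gradient is (p − y)·w. The decay factor `mu`, the per-step size ε/steps, and the iteration count are illustrative defaults, not values taken from [61].

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def mi_fgsm(w, b, x, y, eps, steps=10, mu=1.0):
    """Momentum iterative FGSM under an l_inf budget eps (logistic stand-in model)."""
    alpha = eps / steps     # per-step size so that steps * alpha = eps
    g_acc = [0.0] * len(x)  # accumulated (momentum) gradient
    x_adv = list(x)
    for _ in range(steps):
        p = predict(w, b, x_adv)
        grad = [(p - y) * wi for wi in w]      # d(BCE)/dx
        l1 = sum(abs(v) for v in grad) or 1.0  # avoid division by zero
        g_acc = [mu * ga + v / l1 for ga, v in zip(g_acc, grad)]
        x_adv = [xa + alpha * ((ga > 0) - (ga < 0)) for xa, ga in zip(x_adv, g_acc)]
        # Project onto the eps-ball around x and the valid pixel range.
        x_adv = [min(1.0, max(0.0, min(xi + eps, max(xi - eps, xa))))
                 for xa, xi in zip(x_adv, x)]
    return x_adv


w, b = [2.0, -1.0, 1.5], -1.0  # illustrative model parameters
x, y = [0.6, 0.4, 0.5], 1
x_adv = mi_fgsm(w, b, x, y, eps=0.15)
print(predict(w, b, x) > 0.5, predict(w, b, x_adv) > 0.5)  # True False
```

On a linear model the gradient direction never changes, so momentum adds little; its benefit in practice comes from smoothing noisy gradient directions of deep networks, which is also associated with better transferability.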

B. Medical Image Adversarial Attack and Defense: Segmentation Task

Segmentation delineates distinct regions of interest within medical images, facilitating the detection and characterization of abnormalities, organs, or malignancies. Adversarial attacks on segmentation tasks deliberately corrupt this pixel-wise delineation to compromise the integrity of the predicted masks, as shown in Figure 5. They typically manipulate pixel values, guided by the model’s gradients, to disturb the accurate delineation of boundaries.

Figure 5.

Example of an adversarial attack in a segmentation task scenario.

The vulnerability of segmentation models to adversarial attacks highlights the need for robust protections that guarantee an accurate representation of anatomical structures. Developing ways to create adversarial examples that specifically target segmentation models is therefore crucial. To this end, Chen et al. [64] proposed a novel approach to attacking segmentation convolutional neural networks (CNNs) through adversarial learning. Their method combines a variational auto-encoder (VAE) with generative adversarial networks (GANs) to produce images with deviations and alterations in visual characteristics that are designed to undermine medical segmentation models. The attack effect is quantified as a significant decrease in the Dice score [65], a metric commonly used to evaluate segmentation quality in medical imaging, computed against the ground-truth segmentation. With the goal of protecting medical neural networks for the benefit of patients, Cheng and Ji [63] examined the effects of universal adversarial perturbations on brain tumor segmentation models across four MRI modalities. They used the MICCAI BraTS dataset, which is recognized as the most extensive publicly available collection of MRI brain tumor images, and applied the perturbations to a U-Net model. The perturbations were generated from a Gaussian distribution.
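Because the attack effect is measured as a drop in the Dice score, the sketch below shows how that score is computed for binary masks, Dice = 2|A ∩ B| / (|A| + |B|). The masks are toy examples (the "attacked" prediction is invented for illustration), and returning 1.0 when both masks are empty is a convention assumed here.

```python
def dice(pred, target):
    """Dice coefficient between two binary masks given as nested lists of 0/1."""
    inter = sum(p & t for p_row, t_row in zip(pred, target) for p, t in zip(p_row, t_row))
    total = sum(map(sum, pred)) + sum(map(sum, target))
    return 1.0 if total == 0 else 2.0 * inter / total


ground_truth = [[0, 0, 0, 0],
                [0, 1, 1, 0],
                [0, 1, 1, 0],
                [0, 0, 0, 0]]
clean_pred = [row[:] for row in ground_truth]  # perfect segmentation
attacked_pred = [[0, 0, 0, 0],                 # hypothetical output under attack
                 [0, 1, 1, 1],
                 [0, 0, 0, 0],
                 [0, 0, 0, 0]]

print(dice(clean_pred, ground_truth))               # 1.0
print(round(dice(attacked_pred, ground_truth), 3))  # 0.571
```

The attack's effect would then be reported as the difference between the clean and attacked scores (here roughly 0.43).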

C. Medical Image Adversarial Attack and Defense: Detection Task

Detection tasks identify particular objects or anomalies within medical images, for example by accurately determining whether cancers or lesions are present, as shown in Figure 6. Adversarial attacks on detection tasks intentionally alter or obscure the visual characteristics of specific objects to evade the model’s detection capabilities. By making subtle alterations to the characteristics of an anomaly, attackers can prevent it from being detected, resulting in false negatives. Conversely, detecting the adversarial examples themselves is an important line of defense. Ma et al. [17] investigated this issue by applying four adversarial detection approaches to medical deep neural networks: kernel density (KD) [66], deep features (DFeat), local intrinsic dimensionality (LID) [67], and quantized features (QFeat) [68]. Li and Zhu [69] proposed an unsupervised learning method for detecting adversarial attacks on medical images and argued that it can operate as a self-contained component within any deep learning-based medical imaging system, thereby increasing the system’s resilience. Li et al. [70] developed a hybrid approach to build a robust artificial intelligence framework for medical imaging.

Figure 6.

Example of an adversarial attack in a detection task scenario.

Their framework combines semi-supervised adversarial training (SSAT), unsupervised adversarial detection (UAD), and a new metric for evaluating the system’s susceptibility to adversarial risks. The proposed technique effectively tackles two primary obstacles in identifying adversarial samples: the scarcity of labeled images in medical applications and the ineffectiveness of current detection approaches against new, previously unseen attacks.
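The kernel density (KD) detector evaluated by Ma et al. [17] flags an input whose deep features lie in a low-density region of the training features for its predicted class. The sketch below is a simplified, hypothetical version: the two-dimensional "deep features", class names, bandwidth, and threshold are all invented for illustration. A real detector would extract features from a trained network's last hidden layer and calibrate the threshold on clean validation data.

```python
import math


def kernel_density(feature, class_features, bandwidth=1.0):
    """Average Gaussian-kernel similarity between a feature vector and training features."""
    total = 0.0
    for f in class_features:
        sq_dist = sum((a - c) ** 2 for a, c in zip(feature, f))
        total += math.exp(-sq_dist / bandwidth ** 2)
    return total / len(class_features)


def is_adversarial(feature, predicted_class, train_features, threshold, bandwidth=1.0):
    """Flag inputs whose features are unlikely under the predicted class's training features."""
    density = kernel_density(feature, train_features[predicted_class], bandwidth)
    return density < threshold


# Hypothetical last-layer features of clean training images, grouped by class.
train_features = {
    "benign": [[0.0, 0.0], [0.2, 0.1], [-0.1, 0.2]],
    "malignant": [[3.0, 3.0], [3.2, 2.9], [2.8, 3.1]],
}
THRESHOLD = 0.1  # hypothetical; would normally be calibrated on clean data

clean_case = ([0.1, 0.1], "benign")     # features agree with the predicted label
attacked_case = ([2.9, 3.0], "benign")  # label flipped by an attack, features still look malignant
for feat, label in (clean_case, attacked_case):
    print(label, is_adversarial(feat, label, train_features, THRESHOLD))
```

The detector works only if the attack changes the prediction without moving the deep features into the target class's cluster, which matches Ma et al.'s observation that medical adversarial features tend to be highly distinguishable.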

3.1. Medical Imaging Adversarial Attacks

In medical image analysis, the integration of machine learning techniques, particularly deep neural networks, has significantly improved both diagnostic accuracy and the development of therapeutic approaches [10,22]. However, this progress has also brought new challenges, most notably adversarial attacks, which deliberately inject carefully engineered distortions into input data to deceive models. In medical image analysis, adversarial attacks pose a significant threat to the reliability and integrity of classification, segmentation, and detection tasks, which are crucial for accurate diagnosis and effective patient care.

3.1.1. White-Box Attacks

In adversarial machine learning, a notable category of attacks is referred to as “white-box attacks”. In these attacks, the attacker has in-depth knowledge of the architecture, parameters, and training data of the targeted model. This knowledge of the model's inner workings allows the adversary to craft tailored perturbations that exploit its weaknesses and induce misclassification or erroneous outputs. Because white-box attacks have the greatest potential for disruption, they are frequently used to evaluate the effectiveness of defenses and the resilience of machine learning and deep learning models.

Zhang et al.'s key work [71] was the first to show that even small, imperceptible perturbations added to an image can cause a DNN to misclassify it. The authors formulated the search for the smallest perturbation that causes a misclassification as an optimization problem. The C&W attack [72] generates adversarial samples under L0, L2, and L∞ norm constraints on the perturbation; it builds on the earlier L-BFGS-based formulation and remains one of the strongest existing methods for targeted attacks. The authors of [73] developed the fast gradient sign method (FGSM), which computes an adversarial perturbation in a single step: each input value is shifted by a fixed magnitude ε in the direction of the sign of the gradient of the model's loss with respect to the input. Because this step increases the loss of the true class, FGSM is primarily an untargeted attack. The iterative variant, I-FGSM, was extended by [74] to target the class with the lowest predicted confidence (the least-likely class). This produces adversarial samples that the model assigns to a class very different from the correct one, increasing the attack's potential for disruption. Likewise, [53] proposed a technique that limits the L0 norm of the perturbation by modifying only a small subset of image pixels rather than the entire image. This greedy method builds a saliency map from the forward derivative of the model's outputs with respect to each input feature and iteratively modifies the most salient pixels, yielding a subtle and targeted perturbation strategy (Table 1).
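To make the FGSM and least-likely-class updates described above concrete, the following minimal sketch uses a hypothetical three-class linear softmax classifier with two input features instead of a medical DNN. For this model, the input gradient of the cross-entropy loss has the closed form W^T (softmax(Wx + b) - onehot(y)), so no autodiff library is needed. The weights, ε, step size α, and step count are illustrative assumptions, not values from [73] or [74].

```python
import math

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def logits(W, b, x):
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]

def predict(W, b, x):
    z = logits(W, b, x)
    return max(range(len(z)), key=lambda k: z[k])

def input_gradient(W, b, x, label):
    """d(cross-entropy)/dx for z = Wx + b, i.e. W^T (softmax(z) - onehot(label))."""
    p = softmax(logits(W, b, x))
    delta = [pk - (1.0 if k == label else 0.0) for k, pk in enumerate(p)]
    return [sum(W[k][j] * delta[k] for k in range(len(W))) for j in range(len(x))]

def sign(v):
    return (v > 0) - (v < 0)

def clip(v, lo, hi):
    return max(lo, min(hi, v))

def fgsm(W, b, x, true_label, eps):
    """Untargeted FGSM: one step of size eps that increases the true-class loss."""
    g = input_gradient(W, b, x, true_label)
    return [clip(xi + eps * sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]

def iterative_least_likely(W, b, x, eps, alpha, steps):
    """Targeted iterative FGSM toward the least-likely class, kept in an L-inf ball of radius eps."""
    p = softmax(logits(W, b, x))
    target = min(range(len(p)), key=lambda k: p[k])
    x_adv = list(x)
    for _ in range(steps):
        g = input_gradient(W, b, x_adv, target)
        # Step against the gradient to decrease the loss of the target class.
        x_adv = [xa - alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        x_adv = [clip(clip(xa, xi - eps, xi + eps), 0.0, 1.0) for xa, xi in zip(x_adv, x)]
    return x_adv, target

# Hypothetical 3-class linear model on 2 input features in [0, 1].
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b = [0.0, 0.0, 0.5]
x = [0.6, 0.4]
print(predict(W, b, x))                      # 0
print(predict(W, b, fgsm(W, b, x, 0, 0.3)))  # 1
x_ll, target = iterative_least_likely(W, b, x, eps=0.6, alpha=0.1, steps=10)
print(target, predict(W, b, x_ll))           # 2 2
```

The only difference between the two attacks is the label used for the gradient and the direction of the step. The untargeted step climbs the true-class loss, while the targeted step descends the loss of the chosen target class.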

Table 1.

Summary of adversarial attack works in the context of medical image analysis.

3.1.2. Black-Box Attacks

Black-box attacks are those in which the attacker has minimal or no knowledge of the internal operations of the targeted model. Unlike white-box attacks, which rely on repeatedly computing backward gradients of the target model, a black-box attacker cannot treat the target DNN as a locally available model from which adversarial samples can be derived directly. The general black-box scenario is therefore arguably a more realistic setting for simulating practical adversarial attacks. The attacker regards the model as an opaque entity and can only observe the system's outputs in response to chosen inputs. Even this capability is sometimes limited, for example by restrictions on the number of queries that can be made without raising suspicion, which makes the attack more difficult. The work in [14] shows that adversarial examples crafted to cause misclassification in one model can also fool another model, even when the two are trained on distinct datasets; this property is known as the transferability of adversarial attacks. Exploiting this idea, the authors of [112] launched black-box attacks against a DNN by training a substitute model on synthetic inputs labeled by the target DNN. Adversarial examples crafted on the substitute model were then used to attack the target DNN. Ref. [113] proposed three realistic threat models for black-box scenarios that reflect real-world conditions: the query-limited, partial-information, and label-only settings.

The query-limited setting requires query-efficient methods: Natural Evolution Strategies (NES) are used to estimate gradients, which then drive a PGD attack. When only the top-k class probabilities are available, the method alternates between blending the adversarial image toward the original image and maximizing the probability of the intended target class. Similarly, when the attacker only has access to the top-k predicted labels, the attack exploits noise robustness to create targeted attacks. The feature-guided black-box (FGBB) approach [114] uses features extracted with the Scale-Invariant Feature Transform (SIFT) to guide the construction of adversarial perturbations, assigning higher probabilities to pixels that strongly affect how the human visual system perceives objects. The construction of an adversarial example is formulated as a two-player game, in which one player minimizes the distance to an adversarial example and the other player can take on cooperative or competitive roles. Square Attack [115] does not rely on local gradient information and is therefore unaffected by gradient masking. It uses a randomized search scheme that selects localized square-shaped updates at random positions, so that at each iteration the perturbation lies approximately on the boundary of the allowed perturbation set. Figure 7 summarizes the taxonomy of adversarial attacks in medical imaging.
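The query-limited setting described above can be sketched as follows: NES estimates the gradient of a black-box loss from antithetic Gaussian queries, and the estimate drives an L∞ PGD step. This is a minimal illustration under stated assumptions, not the implementation from [113]. The toy quadratic loss stands in for a target model's score, and σ, the number of sample pairs, ε, and α are hypothetical settings.

```python
import random

def nes_gradient(loss_fn, x, sigma, n_pairs, rng):
    """Antithetic NES estimate of the gradient of loss_fn at x, using 2 * n_pairs queries."""
    grad = [0.0] * len(x)
    for _ in range(n_pairs):
        u = [rng.gauss(0.0, 1.0) for _ in x]
        plus = loss_fn([xi + sigma * ui for xi, ui in zip(x, u)])
        minus = loss_fn([xi - sigma * ui for xi, ui in zip(x, u)])
        scale = (plus - minus) / (2.0 * sigma * n_pairs)
        grad = [g + scale * ui for g, ui in zip(grad, u)]
    return grad

def nes_pgd(loss_fn, x, eps, alpha, steps, sigma=0.01, n_pairs=100, seed=0):
    """L-inf PGD that maximizes a black-box loss using NES gradient estimates."""
    rng = random.Random(seed)
    x_adv = list(x)
    for _ in range(steps):
        g = nes_gradient(loss_fn, x_adv, sigma, n_pairs, rng)
        x_adv = [xa + alpha * ((gi > 0) - (gi < 0)) for xa, gi in zip(x_adv, g)]
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]  # project to eps-ball
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]                       # valid pixel range
    return x_adv

# Hypothetical black-box loss: the attacker can query it but never sees its gradient.
center = [0.2, 0.8, 0.5, 0.3]

def black_box_loss(img):
    return sum((p - c) ** 2 for p, c in zip(img, center))

x = [0.4, 0.6, 0.6, 0.5]
x_adv = nes_pgd(black_box_loss, x, eps=0.1, alpha=0.05, steps=4)
```

Each NES estimate costs 2 × `n_pairs` model queries, so the number of sample pairs directly trades gradient quality against the query budget that makes this threat model realistic.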

Figure 7.

Taxonomy of medical image adversary attack.

3.2. Medical Adversarial Defense

In light of these risks, numerous defense approaches have been proposed to counteract adversarial attacks on medical images. This survey analyzes a range of tactics and methodologies for reducing the adverse effects of adversarial attacks on medical image processing. Specifically, we examine each category of adversarial defense technique, including its prerequisites, limitations, and results. Mitigating adversarial attacks in medical image analysis requires a comprehensive strategy that integrates sophisticated methods from machine learning and medical imaging. As the healthcare industry increasingly adopts AI-powered solutions, developing and integrating effective adversarial defenses must be a priority in order to protect patient safety, uphold diagnostic accuracy, and ensure the ethical and secure use of AI in medical applications. Advancing medical adversarial defense and establishing a robust basis for AI-driven healthcare will rely heavily on collaboration among researchers, practitioners, and policymakers. Figure 8 summarizes the taxonomy of adversarial defenses in medical imaging.

Figure 8.

Taxonomy of medical image adversary defense.

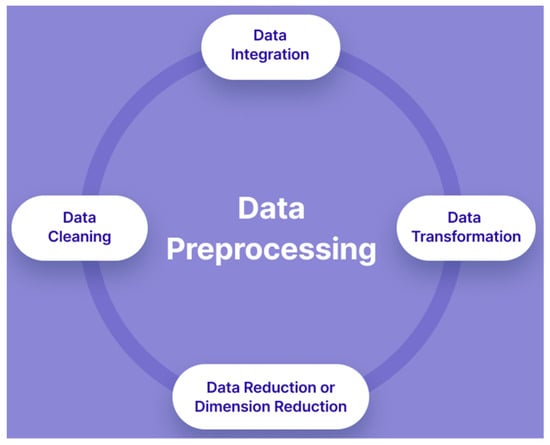

3.2.1. Image Level Preprocessing

Images need to be processed before they can be used for model training and inference. This processing includes, but is not restricted to, changes in color, size, and orientation, as shown in Figure 9. Preprocessing improves image quality so that the image can be analyzed with greater accuracy. Through preprocessing, we can eliminate undesired distortions and enhance properties that are crucial for the target application. Which properties matter depends on that application.

Figure 9.

Basic steps of image preprocessing.

Typically, an adversarial image is composed of a clean image and its corresponding adversarial perturbation. Although deep neural networks (DNNs) attain impressive performance on clean images, they remain vulnerable to adversarial attacks [14,116]. Removing the perturbation component from the adversarial example can therefore improve the subsequent network diagnosis. Moreover, image-level preprocessing requires no retraining or modification of the medical models, which makes it an advantageous and secure approach in biomedical image analysis. Most preprocessing-based defenses focus on medical classification tasks, as reflected in the majority of existing research [61,117]. Such preprocessing must nevertheless preserve the diagnostically relevant components of the image for subsequent diagnosis. The Medical Retrain-less Diagnostic Framework (MedRDF) [20] was proposed to enhance the robustness of a pre-trained diagnosis model at the inference stage. First, MedRDF generates several copies of the input image, each perturbed with isotropic noise. Each copy is then passed through a bespoke denoising algorithm, and the final prediction is obtained by majority voting over the diagnoses of the denoised copies. Furthermore, a comprehensive metric is proposed to quantify the confidence of a MedRDF diagnosis, aiding healthcare professionals in clinical practice. Kansal et al. [46] extended the High-level representation Guided Denoiser (HGD) [118] to defend medical image applications against adversarial examples in both white-box and black-box scenarios, rather than relying only on pixel-level denoising. Incorporating high-level information improves the removal of the adversarial effect at the image level, leading to a more accurate final diagnosis without visual disruption.
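The MedRDF inference pipeline described above (noisy copies, denoising, majority vote) can be sketched in a few lines. This is a hedged illustration, not the MedRDF code. `classify` and `denoise` are hypothetical placeholders for the frozen diagnosis model and the bespoke denoiser, images are flattened to lists of pixel values, and the vote share returned here is a simple stand-in for MedRDF's own confidence metric.

```python
import random
from collections import Counter

def noise_denoise_vote(image, classify, denoise, n_copies=20, sigma=0.1, seed=0):
    """Noise-and-vote inference; `classify` is never retrained.
    Returns (majority label, vote share)."""
    rng = random.Random(seed)
    votes = Counter()
    for _ in range(n_copies):
        noisy = [px + rng.gauss(0.0, sigma) for px in image]  # isotropic Gaussian noise
        votes[classify(denoise(noisy))] += 1
    label, count = votes.most_common(1)[0]
    return label, count / n_copies  # vote share used as a simple confidence proxy

# Hypothetical stand-ins for a frozen diagnosis model and a denoiser.
def classify(img):
    return int(sum(img) / len(img) > 0.5)

def denoise(img):
    return [min(max(px, 0.0), 1.0) for px in img]  # placeholder: clip to the valid range

label, confidence = noise_denoise_vote([0.8] * 16, classify, denoise)
print(label, confidence)  # 1 1.0
```

Because only inference is wrapped, the same function can sit in front of any pre-trained model, which is the "retrain-less" property the framework is named for.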

3.2.2. Feature Enhancement

Feature enhancement, a powerful strategy in machine learning, holds substantial potential as a means of mitigating adversarial risk. Adversarial attacks, which introduce subtle modifications to input data, pose significant challenges to the resilience of machine learning models. In adversarial defense, feature enhancement transforms and strengthens the model's internal data representations so that it can better resist these attacks. By emphasizing decision-relevant features, models become better at distinguishing genuine patterns from adversarial interference, which improves their effectiveness and reliability. Numerous feature enhancement approaches have been developed to improve the resilience of medical classification models [119,120,121]. Pooling layers are frequently used in neural networks to reduce the size of feature maps. Taghanaki et al. [21] modified medical classification networks by replacing max-pooling layers with average-pooling layers. One possible explanation for the observed improvement in resilience is that average pooling aggregates more global contextual information than max pooling, which keeps only the largest value in each window, and this broader context makes adversarial attacks more difficult. Additionally, an autoencoder (AE) can be integrated into computer-aided diagnosis models to perform feature-level denoising [122], which is distinct from image-level preprocessing. Feature invariance guidance is also employed to reduce the model's susceptibility to adversarial attacks. Following [123], Han et al. [120] incorporated dual batch normalization into adversarial training, which substantially improved the resilience of diagnostic models without compromising their accuracy on clean inputs.
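One simple intuition for the pooling result above can be checked numerically. If an attacker nudges only the largest activation in a pooling window, max pooling passes the full change to the next layer, while average pooling divides it by the window size. This toy example assumes a single 2 × 2 window and a single perturbed activation. An attacker could instead spread the perturbation across the whole window, so this illustrates one possible mechanism, not an analysis from [21].

```python
def max_pool(window):
    return max(window)

def avg_pool(window):
    return sum(window) / len(window)

window = [0.2, 0.5, 0.1, 0.4]  # one 2x2 pooling window, flattened
perturbed = list(window)
perturbed[1] += 0.2            # nudge the current maximum activation

max_change = max_pool(perturbed) - max_pool(window)  # ≈ 0.2: the full change passes through
avg_change = avg_pool(perturbed) - avg_pool(window)  # ≈ 0.05: the change is divided by 4
print(round(max_change, 3), round(avg_change, 3))    # 0.2 0.05
```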

Beyond conventional medical classification, feature enhancement methods have been applied to other medical imaging tasks, such as segmentation [124], object identification [21], and low-level vision [125]. The Non-Local Context Encoder (NLCE) [126] is a plug-and-play module proposed to enhance the resilience of biomedical image segmentation models. As noted by [21], the NLCE module captures global spatial dependencies and enhances features with contextual information, and it can be integrated into different deep neural network (DNN) models for medical image segmentation. Stimpel et al. [125] employed a guided filter with a learned guidance map to enhance the resolution and reduce the noise of medical images, and the guided filter proved effective at mitigating the impact of adversarial attacks on the produced outputs. Feature enhancement has thus emerged as a promising direction in adversarial defense. By learning enhanced data representations, models become more robust to adversarial perturbations as well as more precise and reliable. As research advances, feature enhancement approaches are expected to play a significant role in hardening machine learning systems against continually emerging adversarial attacks.
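The guided filter mentioned above fits, in each local window, a linear model q = a · I + b that maps the guidance signal I to the output. This structure lets it suppress noise while following edges in the guide. Below is a minimal 1-D sketch of the standard guided filter formulation. Here the guide is assumed to be an idealized clean edge map rather than the learned guidance map of Stimpel et al. [125], and the radius `r` and regularizer `eps` are illustrative.

```python
def box_mean(values, r):
    """Mean over a window of radius r, truncated at the borders."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - r), min(n, i + r + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out

def guided_filter_1d(guide, src, r=2, eps=1e-2):
    """Guided filter on a 1-D signal: locally, output = a * guide + b."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    corr_ip = box_mean([g * p for g, p in zip(guide, src)], r)
    corr_ii = box_mean([g * g for g in guide], r)
    var_i = [cii - mi * mi for cii, mi in zip(corr_ii, mean_i)]
    cov_ip = [cip - mi * mp for cip, mi, mp in zip(corr_ip, mean_i, mean_p)]
    a = [c / (v + eps) for c, v in zip(cov_ip, var_i)]   # eps regularizes flat regions
    b = [mp - ai * mi for mp, ai, mi in zip(mean_p, a, mean_i)]
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)       # average overlapping window models
    return [ma * g + mb for ma, g, mb in zip(mean_a, guide, mean_b)]

# Step edge corrupted by small alternating noise (hypothetical data).
clean = [0.0] * 10 + [1.0] * 10
noisy = [c + (0.05 if i % 2 == 0 else -0.05) for i, c in enumerate(clean)]
out = guided_filter_1d(guide=clean, src=noisy)
mae_in = sum(abs(n - c) for n, c in zip(noisy, clean)) / len(clean)
mae_out = sum(abs(o - c) for o, c in zip(out, clean)) / len(clean)
```

In flat regions the guide has zero variance, so `a` collapses to 0 and the filter averages out the noise. Near the edge the guide's variance dominates `eps`, so the output follows the edge instead of blurring it.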

3.2.3. Adversary Training

Adversarial attacks, which deliberately modify input data to deceive machine learning algorithms, have exposed the fragility of modern AI systems. Adversarial training, a proactive defense mechanism, is recognized as an effective technique for improving the resilience of these models against such attacks. By incorporating adversarial examples during the training phase, adversarial training enables models to tolerate perturbations and to produce dependable predictions even on adversarial inputs. Notably, many studies have adapted adversarial training techniques originally developed for natural images to medical classification tasks [18,22,112,113,114]. The studies in [18,127] investigated adversarial examples in medical imaging and developed multiple strategies to mitigate their impact. During adversarial training, both the FGSM [42] and JSMA [53] methods were used to generate adversarial examples. To further strengthen the system, the researchers added Gaussian noise to the adversarial training data and replaced the standard Rectified Linear Unit (ReLU) activation function with its bounded variant (Bounded ReLU).
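The two modifications described above, a bounded activation and a training batch that mixes clean, adversarial, and Gaussian-noised copies, can be sketched as follows. The upper bound of 1.0, the noise level, and the placeholder `adversarial_fn` (standing in for an FGSM or JSMA generator) are hypothetical choices, not the settings of [18,127].

```python
import random

def bounded_relu(x, upper=1.0):
    """ReLU clipped to [0, upper]; the bound limits how far a perturbation can push an activation."""
    return min(max(0.0, x), upper)

def augment_batch(images, labels, adversarial_fn, noise_sigma=0.05, seed=0):
    """Return clean, adversarial, and Gaussian-noised copies of each image with unchanged labels.
    `adversarial_fn(image, label)` is a placeholder for an attack such as FGSM or JSMA."""
    rng = random.Random(seed)
    out_x, out_y = [], []
    for img, y in zip(images, labels):
        adv = adversarial_fn(img, y)
        noisy = [min(max(px + rng.gauss(0.0, noise_sigma), 0.0), 1.0) for px in img]
        out_x += [img, adv, noisy]
        out_y += [y, y, y]
    return out_x, out_y

# Hypothetical attack used only to exercise the function.
def toy_attack(img, label):
    return [min(1.0, p + 0.1) for p in img]

xs, ys = augment_batch([[0.2, 0.9]], [1], toy_attack)
```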

Xu et al. not only evaluated the robustness of several computer-aided diagnosis models but also applied PGD-based adversarial training [45] and the Misclassification Aware adveRsarial Training (MART) approach [128,129] to improve their resilience. To better evaluate resilience to common perturbations, the researchers also established a new medical dataset called the Robust-Benchmark. These efforts primarily focus on improving the ability of individual models to withstand adversarial attacks. In addition, various adversarial defense methods have been designed specifically for medical image analysis, and defense techniques developed for natural images have been transferred to diagnostic models [130,131,132]. Liu et al. [130] examined three types of adversarial augmentation examples that can be added to the training dataset to enhance robustness. The authors use Projected Gradient Descent (PGD) [45,69] to iteratively search for the most challenging latent code, generating adversarial nodules that the target diagnosis model cannot detect. Adversarial training is a significant development in adversarial machine learning because it offers a direct means of improving a model's resilience against adversarial perturbations. By training on adversarial inputs, models learn to make stable predictions when their inputs are perturbed, which improves the robustness and reliability of AI systems. The ongoing evolution of the adversarial landscape calls for further research and innovation in adversarial training approaches, which could establish a more secure and reliable basis for deploying machine learning models in many applications (Table 2).
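PGD-based adversarial training solves a min-max problem. An inner PGD loop finds a worst-case perturbation within an L∞ ball of radius ε, and the outer loop updates the model on those perturbed inputs. The sketch below shows that structure on a hypothetical binary logistic-regression model, where the input gradient (p - y) · w has a closed form. It is not the training code of Xu et al. or [45], and all hyperparameters and data points are illustrative.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def pgd_attack(w, b, x, y, eps, alpha, steps):
    """Inner maximization: L-inf PGD on the binary cross-entropy loss."""
    x_adv = list(x)
    for _ in range(steps):
        p = predict_proba(w, b, x_adv)
        grad = [(p - y) * wi for wi in w]  # dLoss/dx for logistic regression
        x_adv = [xa + alpha * ((g > 0) - (g < 0)) for xa, g in zip(x_adv, grad)]
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv

def pgd_adversarial_training(data, eps=0.1, alpha=0.025, steps=5, lr=0.5, epochs=100, seed=0):
    """Outer minimization: SGD on PGD examples crafted against the current model."""
    rng = random.Random(seed)
    data = list(data)  # shuffle a copy, not the caller's list
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            x_adv = pgd_attack(w, b, x, y, eps, alpha, steps)
            p = predict_proba(w, b, x_adv)
            w = [wi - lr * (p - y) * xa for wi, xa in zip(w, x_adv)]
            b -= lr * (p - y)
    return w, b

# Hypothetical two-feature, two-class toy data.
data = [([0.10, 0.15], 0), ([0.15, 0.10], 0), ([0.05, 0.10], 0),
        ([0.90, 0.85], 1), ([0.85, 0.90], 1), ([0.95, 0.90], 1)]
w, b = pgd_adversarial_training(data)
```

Because the inner attack is recomputed against the current weights at every update, the model is always trained on the perturbations it is currently most vulnerable to.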

Table 2.

Summary of adversarial defense works in the context of medical image analysis.

4. Experiment

This section presents the practical experiments we conducted to evaluate adversarial attacks and defenses on medical images, with a focus on segmentation and classification. We first introduce the implemented models, the datasets, and the data preprocessing, followed by the evaluation metrics. We then present the qualitative and quantitative results and conclude the section with a discussion of the results.

4.1. Implemented Attack and Defense Model

In this review, we used ResNet-18 [165] (Figure 10) and Auto Encoder-Block Switching [166] (Figure 11) for attack and defense experiments on medical image classification, and the U-Net [3] architecture (Figure 12) for the medical image segmentation task. We primarily focus on attacks under the L∞-norm threat model, which is the most typical case. We report accuracy on both clean and adversarial examples generated with the following attack techniques: FGSM [42], PGD [45] with 20 steps and a step size of 1/255, CW [41], and AutoAttack (AA) [167]. The perturbation budget ε takes the values 2/255, 4/255, 6/255, and 8/255. We ran the experiments in a Python environment on a Windows PC with a 2.30 GHz Intel(R) Core(TM) i5-8300H processor and a 4 GB NVIDIA GeForce GTX 1050 Ti graphics card. The networks were built with the open-source TensorFlow Keras deep learning framework. To speed up training, we used distributed processing together with CUDA 8.0 and cuDNN 5.1. Table 3 summarizes the implementation hyperparameters of the models.
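The evaluation grid described above (clean accuracy, plus accuracy under each attack at ε ∈ {2, 4, 6, 8}/255) can be organized as a small harness. This is a sketch under assumptions, not our experiment code. Attacks are passed in as hypothetical callables `attack(model, x, y, eps, **params)`, the PGD parameters mirror the 20 steps of size 1/255 stated above, and the toy model and shift attack exist only to exercise the harness.

```python
EPSILONS = [k / 255 for k in (2, 4, 6, 8)]  # L-inf budgets from the text
ATTACK_PARAMS = {
    "FGSM": {},
    "PGD": {"steps": 20, "step_size": 1 / 255},
}

def accuracy(model, dataset, attack=None, eps=0.0, params=None):
    correct = 0
    for x, y in dataset:
        x_eval = attack(model, x, y, eps, **(params or {})) if attack else x
        correct += int(model(x_eval) == y)
    return correct / len(dataset)

def evaluate(model, attacks, dataset):
    """Clean accuracy plus accuracy for every (attack name, eps) pair."""
    results = {"clean": accuracy(model, dataset)}
    for name, attack in attacks.items():
        for eps in EPSILONS:
            results[(name, eps)] = accuracy(model, dataset, attack, eps, ATTACK_PARAMS.get(name))
    return results

# Hypothetical model and attack used only to exercise the harness.
def toy_model(x):
    return int(sum(x) / len(x) > 0.5)

def shift_attack(model, x, y, eps, **unused):
    """Shift every pixel by eps toward the decision threshold."""
    return [p - eps if y == 1 else p + eps for p in x]

data = [([0.51] * 4, 1), ([0.9] * 4, 1), ([0.1] * 4, 0)]
results = evaluate(toy_model, {"shift": shift_attack}, data)
```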

Figure 10.

ResNet-18 architecture used for adversarial attack implementation.

Figure 11.

Auto Encoder-block switching architecture used for adversarial defense implementation.

Figure 12.

UNET architecture used for adversarial segmentation attack and defense implementation.

Table 3.

Implementation hyperparameters of the implemented models.

4.2. Dataset and Data Preprocessing

The datasets we used are (1) the Messidor dataset [168], which contains a total of 1200 RGB eye fundus images in four classes for classifying diabetic retinopathy by retinopathy grade; (2) the ISIC 2017 dataset [169], collected by the International Skin Imaging Collaboration for skin lesion classification and segmentation, which has three classes and 2750 samples; (3) the ChestX-ray14 dataset [170], which contains 112,120 frontal-view X-ray images covering 14 thoracic disorders; and (4) the COVID-19 database [171], which contains 21,165 chest X-ray images with segmentation-ready lung masks. Figure 13 illustrates the employed datasets.

Figure 13.

Illustration of the employed dataset.

Because the experiments cover both classification and segmentation, we considered both binary and multiclass settings for the classification task. For the Messidor dataset, we used the original partition, i.e., 960 fundus images for training, augmented with techniques including random rotations and flips, and the remaining 240 for testing. The ISIC 2017 images were resized and center-cropped. Because the ChestX-ray14 dataset is imbalanced, we randomly selected 10,000 X-ray images, using 8000 for training and 2000 for testing, and applied random flipping and normalization. For the segmentation task, we used all 2750 dermoscopic images of the ISIC 2017 dataset (2000 for training), following its original split.
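The random subsetting, splitting, flipping, and normalization described above can be sketched as follows, assuming samples are identified by index and images are 2-D lists of pixel values. The seed, the flip probability of 0.5, and the normalization statistics are hypothetical choices, not the exact settings of our experiments.

```python
import random

def split_subset(samples, subset_size, train_size, seed=0):
    """Randomly draw `subset_size` samples without replacement, then split into train/test."""
    rng = random.Random(seed)
    subset = rng.sample(samples, subset_size)
    return subset[:train_size], subset[train_size:]

def random_hflip(image_rows, rng):
    """Horizontally flip a 2-D image (list of rows) with probability 0.5."""
    return [row[::-1] for row in image_rows] if rng.random() < 0.5 else image_rows

def normalize(image_rows, mean, std):
    return [[(px - mean) / std for px in row] for row in image_rows]

# ChestX-ray14: 10,000 of 112,120 images, split 8000 / 2000.
train_ids, test_ids = split_subset(list(range(112120)), 10000, 8000)
```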

4.3. Evaluation Metrics

We used different metrics for the different tasks in this manuscript. For the classification task, we used accuracy, since adversarial attacks aim to mislead a target model into incorrect predictions. Accuracy is the fraction of evaluated samples (clean or adversarial) whose predicted label matches the ground-truth label: Accuracy = (number of correctly classified samples) / (total number of samples).

For the segmentation task, since adversarial attacks lead to incorrect segmentation results, we used the mean Intersection over Union (mIoU) and the Dice coefficient (F1 score) to compare the generated segmentation mask P with the target mask G: IoU = |P ∩ G| / |P ∪ G| and Dice = 2|P ∩ G| / (|P| + |G|). The mIoU is obtained by averaging the IoU over the evaluation set.
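The two overlap metrics can be computed directly from binary masks. The sketch below assumes masks are flattened 0/1 lists and averages per image. Averaging per class is another common mIoU convention. The handling of two empty masks is a convention chosen here, not a standard.

```python
def iou_and_dice(pred, target):
    """IoU and Dice (F1) for two binary masks given as flat 0/1 lists."""
    inter = sum(1 for p, t in zip(pred, target) if p and t)
    p_sum, t_sum = sum(pred), sum(target)
    union = p_sum + t_sum - inter
    if union == 0:  # both masks empty: counted as perfect agreement (a convention)
        return 1.0, 1.0
    return inter / union, 2 * inter / (p_sum + t_sum)

def mean_iou_and_dice(pairs):
    """Average IoU and Dice over (pred, target) pairs, i.e. per-image averaging."""
    scores = [iou_and_dice(p, t) for p, t in pairs]
    n = len(scores)
    return sum(s[0] for s in scores) / n, sum(s[1] for s in scores) / n

print(iou_and_dice([1, 1, 0, 0], [1, 0, 1, 0]))  # (0.3333333333333333, 0.5)
```

For a single mask pair the two scores are linked by Dice = 2 · IoU / (1 + IoU), so they rank individual predictions identically even though their averages over a dataset can differ.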

Adversarial defenses aim to make the network's outputs on adversarial examples match its outputs on the corresponding clean inputs, thereby strengthening the network's ability to withstand adversarial examples.

4.4. Results and Analysis

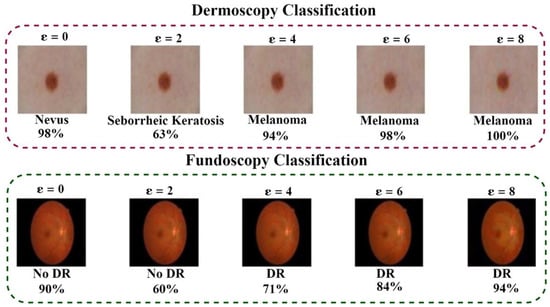

This subsection first presents several examples of adversarial attacks on various medical diagnostic classification tasks at varying attack strengths, as shown in Figure 14. The examples show that, even under small perturbations, the models are highly vulnerable to adversarial inputs. Because the attacker can mislead medical classification models into confidently false diagnoses, such attacks further disrupt clinical evaluation. Likewise, incorrect segmentation results caused by such attacks may lead to incorrect treatment recommendations.

Figure 14.

Medical adversarial instances (classification tasks) along with predictions across a range of perturbation rates.

4.4.1. Adversary Attack Experimental Results

To thoroughly assess the effect of adversarial attacks on medical classification, Table 4 summarizes the accuracy of the employed deep learning model under various attacks in both binary and multiclass settings. Note that all adversarial examples are generated using the cross-entropy loss. As Table 4 and Figure 14 show, the model's accuracy decreases noticeably as the perturbation size grows. Notably, the multiclass classification model suffers a more dramatic drop in accuracy than its binary counterpart. Since most recently proposed medical adversarial defenses focus on binary tasks, we argue that achieving adversarial robustness for multiclass medical classification is a harder and less explored problem, with ramifications for many clinical contexts.

Table 4.

Adversarial (white-box) classification result (%) using the ResNet-18 (binary and multi-class classification tasks) under various attack scenarios.

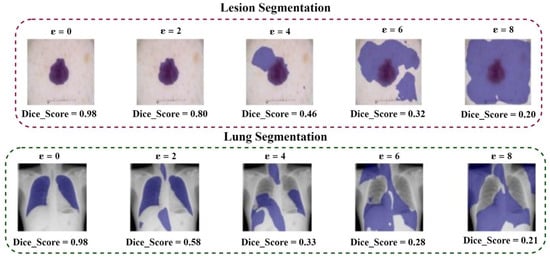

In addition to attacks on medical classification, we assess the robustness of medical segmentation models against PGD-based adversarial examples, as shown in Table 5 and Figure 15. The Dice loss and the binary cross-entropy (BCE) loss were each used as the adversarial loss when generating the attacks. Adversarial examples generated by attacking the Dice loss, that is, by driving down the Dice overlap, may be more effective against medical segmentation models.
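The difference between the two adversarial losses can be sketched on a toy problem. A hypothetical per-pixel segmenter (foreground probability = sigmoid(w · x + b)) is attacked with L∞ PGD that maximizes either a soft Dice loss or the BCE loss. Finite differences stand in for autodiff so the example stays dependency-free. The model, the smoothing constant, and the attack settings are illustrative assumptions and are not the U-Net setup behind Table 5.

```python
import math

def toy_segmenter(image, w=8.0, b=-4.0):
    """Hypothetical per-pixel model: foreground probability = sigmoid(w * x + b)."""
    return [1.0 / (1.0 + math.exp(-(w * x + b))) for x in image]

def soft_dice_loss(probs, mask, smooth=1.0):
    inter = sum(p * t for p, t in zip(probs, mask))
    return 1.0 - (2.0 * inter + smooth) / (sum(probs) + sum(mask) + smooth)

def bce_loss(probs, mask, tiny=1e-7):
    return -sum(t * math.log(p + tiny) + (1 - t) * math.log(1 - p + tiny)
                for p, t in zip(probs, mask)) / len(mask)

def numerical_grad(f, x, h=1e-4):
    """Central finite differences (stands in for autodiff in this toy)."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad

def pgd_segmentation(image, mask, loss_fn, eps=8 / 255, alpha=2 / 255, steps=10):
    """L-inf PGD that maximizes loss_fn(toy_segmenter(image), mask)."""
    def objective(img):
        return loss_fn(toy_segmenter(img), mask)
    x_adv = list(image)
    for _ in range(steps):
        g = numerical_grad(objective, x_adv)
        x_adv = [xa + alpha * ((gi > 0) - (gi < 0)) for xa, gi in zip(x_adv, g)]
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, image)]
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv

image = [0.7, 0.8, 0.6, 0.3, 0.2, 0.4]
mask = [1, 1, 1, 0, 0, 0]
clean_dice_loss = soft_dice_loss(toy_segmenter(image), mask)
for name, loss in (("Dice", soft_dice_loss), ("BCE", bce_loss)):
    adv = pgd_segmentation(image, mask, loss)
    print(name, round(clean_dice_loss, 3), "->", round(soft_dice_loss(toy_segmenter(adv), mask), 3))
```

The soft Dice loss depends on the overlap of the whole mask, so its gradient couples all pixels. BCE decomposes into independent per-pixel terms. This structural difference is one reason the choice of adversarial loss can change attack strength against segmentation models.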

Table 5.

Adversarial segmentation result (white-box) using the U-Net model based on PGD adversarial attack.

Figure 15.

Medical adversarial instances (segmentation tasks) along with predictions across a range of perturbation rates.

4.4.2. Adversary Defense Experimental Results

Modern adversarial training methods mostly focus on augmenting training with adversarial images, which improves the model's ability to make accurate predictions on both clean and adversarial inputs. This work applies the PGD-AT [45] adversarial training approach to biomedical imaging. In both binary and multiclass settings, the results show that this strategy achieves adversarial resilience for medical classification (see Table 6): the adversarially trained models remain resilient under different attack configurations. Note that PGD-AT [45] differs from other robust training methods for medical classification in how it generates the adversarial examples used during training (the inner maximization).

Table 6.

White-box accuracy (%) of adversarial trained (PGD-AT [45]) medical classification models for binary and multi-class classification in various scenarios.

Beyond single-label classification, we extend adversarial training to medical segmentation and evaluate its efficacy by analyzing how the models perform under various attack scenarios, as shown in Table 7. To improve the adversarial robustness of the medical segmentation models, we use the widely adopted PGD-AT [45] adversarial training technique. Our findings show that adversarially trained segmentation models are more resilient to various types of adversarial attacks than their naturally trained counterparts. Notably, attacking the Dice loss still yields a higher success rate than attacking the BCE loss.

Table 7.

Results of white-box resilience against PGD attack in various attack configurations using U-Net for biomedical segmentation.

5. Discussion

Adversarial examples have proven effective even against state-of-the-art deep neural networks (DNNs), regardless of how much access the attacker has to the model, and they can remain imperceptible to the human visual system. Compared with other areas of computer vision, medical DNNs have been shown to be more vulnerable to adversarial attacks. In particular, adversarial samples with small perturbations can fool advanced medical systems that otherwise perform excellently on clean data, which highlights the vulnerability of medical DNNs to adversarial inputs.

A. Dataset and Labeling

Labeled datasets for training medical imaging models are far more limited than those for general computer vision tasks, which typically contain several hundred thousand to millions of annotated images [158]. This scarcity stems from several factors, including patient privacy concerns and the absence of widely adopted procedures for sharing medical data. In addition, labeling medical images is labor-intensive and time-consuming, and the ground truth of images in medical databases is sometimes ambiguous and controversial, even among physicians and radiologists. This disparity in dataset size directly affects the performance of deep neural networks (DNNs) in medical image analysis. Limited data substantially reduces the generalizability of the network and makes it more susceptible to adversarial attacks. To address the scarcity of annotated medical datasets, several researchers have recently proposed approaches based on straightforward augmentation techniques. Basic augmentation methods such as cropping, rotating, and flipping have proven effective for generating new and diverse training images. To address vanishing gradients and overfitting, researchers have employed techniques such as improved activation functions, modified cost functions, and dropout [159]. The high computational load has been effectively mitigated by highly parallel hardware such as graphics processing units (GPUs), together with batch normalization.

Another synergistic approach combines convolutional neural networks (CNNs) with transfer learning [160]. The essence of this approach is to reuse parameters that a CNN has learned on a source task to initialize and guide the training of the model for the target medical application. Incorporating transfer learning into CNN frameworks is a significant direction for future study and shows promise in addressing the challenge of limited labeled medical data. Crowdsourcing is another potential approach for enlarging datasets [103]. In the health context, crowdsourcing distributes tasks, with skilled entities facilitating the process, from a specific research group to a wider population that includes the general public. This channel offers a compelling direction for future study, shifting work from individual effort to collective endeavor with broader societal benefits.

B. Computational Resources

Deep neural networks (DNNs) have become a fundamental component of machine learning, transforming disciplines such as computer vision, natural language processing, and medical diagnostics. This progress has not come without challenges, however: training DNNs requires substantial computing power, data processing capacity, and memory. The sophisticated architecture of DNNs, with many layers and intricate connection patterns, enables them to learn hierarchical representations from raw input, and supporting this architecture requires adequate computational resources. These resources allow the many passes through the training dataset during which the network adjusts its internal parameters to reduce the discrepancy between predicted outputs and actual labels. Effective DNN training therefore requires hardware with significant computational capability, such as graphics processing units (GPUs) or specialized accelerators like tensor processing units (TPUs), which are designed to maximize performance on the massively parallel computations that neural network training involves.

AlexNet [172] is widely recognized as one of the most influential DNN architectures and is often regarded as the foundation for many later DNN designs. It has eight learned layers (five convolutional and three fully connected), and the original implementation split the network across two GPUs because a single GPU of that time lacked sufficient memory; later GPUs made single-GPU training of the same architecture practical. VGGNet, proposed by Simonyan and Zisserman [173], repeatedly stacks convolutional layers followed by a max-pooling layer. Its commonly used variants have 16 to 19 weight layers for extracting image features, and its success in visual feature extraction is largely attributed to its uniform use of 3 × 3 convolution kernels and 2 × 2 pooling kernels.

Memory is a further constraint. Because such networks contain many layers, each with learnable parameters, training requires a substantial amount of memory to store gradients, activations, and intermediate results. Limited memory can prevent the training of large networks or force smaller batch sizes, which in turn affects how quickly training converges. Training deep convolutional neural networks (CNNs) can also take a long time, representing a significant investment of research and development effort; distributed computing setups or cloud-based resources can shorten it. Finally, the energy consumed in training DNNs is substantial because of these computing requirements. This issue matters for the field of artificial intelligence as a whole, as it bears directly on the broader goals of energy efficiency and environmental sustainability.
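To make the memory argument concrete, the following Python sketch estimates the training footprint of VGG-16 [173]. It assumes a 224 × 224 RGB input, 1000 output classes, 32-bit floats, and an Adam-style optimizer that keeps two extra values per parameter; these settings are illustrative assumptions rather than figures from the text, and the activation term counts only the convolutional outputs, so the result is a lower bound.

```python
# Back-of-the-envelope memory estimate for training VGG-16.
# Assumptions (not from the text): 224x224x3 input, 1000 classes, fp32 storage,
# and an Adam-style optimizer that keeps two moment buffers per parameter.

# VGG-16 layout: numbers are conv output channels (3x3 kernels), "M" is 2x2 max pooling.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]
FC_SIZES = [512 * 7 * 7, 4096, 4096, 1000]
BYTES_PER_FLOAT = 4


def vgg16_param_count() -> int:
    """Count weights + biases of the 13 conv and 3 fully connected layers."""
    total, in_ch = 0, 3
    for layer in VGG16_CFG:
        if layer == "M":
            continue
        total += in_ch * layer * 3 * 3 + layer  # 3x3 kernel weights + biases
        in_ch = layer
    for n_in, n_out in zip(FC_SIZES[:-1], FC_SIZES[1:]):
        total += n_in * n_out + n_out
    return total


def conv_activation_floats(side: int = 224) -> int:
    """Floats in the conv-layer outputs for one image (ignores ReLU/pool/FC buffers)."""
    total = 0
    for layer in VGG16_CFG:
        if layer == "M":
            side //= 2  # 2x2 pooling halves height and width
        else:
            total += side * side * layer
    return total


def training_memory_gib(batch_size: int) -> float:
    """Lower-bound training memory in GiB for a given batch size."""
    params = vgg16_param_count()
    # weights + gradients + two Adam moment buffers = 4 copies of the parameters
    state_bytes = 4 * params * BYTES_PER_FLOAT
    # activations must be kept from the forward pass for backpropagation
    act_bytes = batch_size * conv_activation_floats() * BYTES_PER_FLOAT
    return (state_bytes + act_bytes) / 2**30


if __name__ == "__main__":
    print(f"parameters: {vgg16_param_count():,}")
    for bs in (8, 32, 128):
        print(f"batch {bs:>3}: ~{training_memory_gib(bs):.1f} GiB (lower bound)")
```

The parameter count comes to 138,357,544, matching the commonly cited figure of about 138 million for VGG-16. Because the parameter-related term is fixed while the activation term grows linearly with batch size, a memory limit translates directly into a cap on batch size.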

C. Robustness against Target Attacks

Within medical imaging, the convergence of artificial intelligence and healthcare holds significant potential, but achieving resilience against targeted attacks remains an important frontier that requires dedicated attention. Significant progress has been made in securing medical imaging systems against non-targeted or random attacks, as shown by Bortsova et al. [174]. Targeted attacks, however, pose a new and more severe obstacle: the adversary deliberately steers the model toward a specific diagnostic error, which can directly threaten patient well-being. Addressing this requires a fundamental shift in research and development toward techniques that can not only detect but also counteract such attacks. Han et al. [175] highlight the need to close this research gap to maintain the reliability and credibility of medical imaging systems. The very properties that make AI-powered medical imaging systems so promising also expose them to adversarial attacks, in which input data are deliberately manipulated to mislead the model into producing inaccurate or harmful predictions. In non-targeted or random attacks, the attacker's primary objective is to degrade the model's performance without a predetermined target output. Such attacks are concerning, but they do not necessarily produce a specific diagnostic error.

Targeted attacks, in contrast, are deliberately designed to make the AI model produce a predetermined diagnostic error. In medical imaging, this may mean causing the model to classify a healthy individual as having a serious pathology, or to miss a critical disease. The consequences in healthcare are significant and may include delayed or inappropriate treatment, unnecessary medical procedures, or direct harm to patients. Current defensive mechanisms in medical imaging focus mostly on robustness against non-targeted or stochastic attacks. Their purpose is to make the model resilient to small perturbations of the input, so that modest changes in image quality or structure do not produce inaccurate outputs. Although these defenses are valuable, they may be less effective against targeted attacks because they do not account for the complexity and deliberate intent of such attacks. The study by Bortsova et al. [174] illustrates the field's current emphasis on non-targeted attacks, highlighting the need to improve the overall resilience of AI models against typical adversarial perturbations that may be encountered during regular operation.
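The distinction between the two attack types can be illustrated with the fast gradient sign method (FGSM), a standard single-step attack used here only as an example. The sketch below replaces a real diagnostic network with a hypothetical three-class linear softmax model: the untargeted variant increases the loss of the true class, whereas the targeted variant decreases the loss of an attacker-chosen class. The weights, the input, and the perturbation budget `eps` are illustrative assumptions.

```python
import numpy as np

# Hypothetical toy "diagnostic" model: logits = W @ x + b (3 classes, 2 features).
# Real attacks target deep CNNs, with gradients obtained by automatic differentiation.
W = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [-1.0, -1.0]])
b = np.zeros(3)


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def input_grad_ce(x, label):
    """Gradient of the cross-entropy loss w.r.t. the input x for the linear model."""
    p = softmax(W @ x + b)
    p[label] -= 1.0   # dL/dlogits = softmax - one_hot(label)
    return W.T @ p    # chain rule through logits = W @ x + b


def fgsm_untargeted(x, true_label, eps):
    # Step UP the loss of the true class: any wrong prediction counts as success.
    return x + eps * np.sign(input_grad_ce(x, true_label))


def fgsm_targeted(x, target_label, eps):
    # Step DOWN the loss of the attacker-chosen class: only that class counts.
    return x - eps * np.sign(input_grad_ce(x, target_label))


def predict(x):
    return int(np.argmax(W @ x + b))


x = np.array([1.0, 0.0])                        # clean input, predicted class 0
x_u = fgsm_untargeted(x, true_label=0, eps=1.0)
x_t = fgsm_targeted(x, target_label=2, eps=1.0)
print(predict(x), predict(x_u), predict(x_t))   # → 0 1 2
```

With the same budget, the untargeted step moves the input to whichever wrong class is easiest to reach (class 1 here), while the targeted step forces the attacker's chosen class 2, which is the kind of predetermined error that makes targeted attacks clinically dangerous.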

To strengthen medical imaging systems against deliberate attacks, future research must investigate new methodologies and approaches. One promising avenue is the development and refinement of adversarial training approaches designed specifically to address the weaknesses that targeted attacks exploit. Adversarial training incorporates adversarial examples into the training dataset so that the model learns to recognize and withstand the manipulations it may encounter in practice. Countermeasures against targeted attacks should emphasize not only detection but also active mitigation, which means equipping medical imaging systems to detect and respond promptly to suspicious input data. If a targeted attack is identified or suspected, the system needs established procedures for flagging the problem and notifying healthcare professionals, and ideally for applying countermeasures that prevent or limit the attack's effect on patient care. Han et al. [175] emphasize the urgent need to tackle targeted attacks in medical imaging. The research community must recognize the significance of this gap and prioritize robust defenses tailored to the complexities of healthcare applications.
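Adversarial training, as described above, can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: a logistic-regression model stands in for a medical imaging network, two synthetic Gaussian clusters stand in for image features, and FGSM with an L∞ budget `eps = 0.3` is the chosen attack. At every epoch the adversarial examples are regenerated against the current model and added to the clean batch with their true labels. Practical defenses usually train against stronger multi-step attacks such as projected gradient descent (PGD).

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm(X, y, w, b, eps):
    """L-infinity FGSM for logistic regression: step along sign of d(loss)/dx."""
    p = sigmoid(X @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]   # d(binary cross-entropy)/dx per sample
    return X + eps * np.sign(grad_x)


def adversarial_train(X, y, eps, epochs=300, lr=0.1):
    """Gradient descent on clean + FGSM examples regenerated each epoch."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        X_adv = fgsm(X, y, w, b, eps)        # attack the model as it is now
        X_mix = np.vstack([X, X_adv])
        y_mix = np.concatenate([y, y])       # adversarial copies keep true labels
        err = sigmoid(X_mix @ w + b) - y_mix
        w -= lr * X_mix.T @ err / len(y_mix)
        b -= lr * err.mean()
    return w, b


def accuracy(X, y, w, b):
    return float(np.mean((sigmoid(X @ w + b) > 0.5) == (y == 1)))


# Synthetic stand-in for image features: two well-separated Gaussian clusters.
X = np.vstack([rng.normal(2.0, 0.5, (100, 2)), rng.normal(-2.0, 0.5, (100, 2))])
y = np.concatenate([np.ones(100), np.zeros(100)])

w, b = adversarial_train(X, y, eps=0.3)
print("clean acc:", accuracy(X, y, w, b))
print("FGSM acc :", accuracy(fgsm(X, y, w, b, 0.3), y, w, b))
```

Regenerating the adversarial examples inside the loop, rather than computing them once up front, keeps the training signal aligned with the weaknesses of the model's current parameters.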

D. Evaluation of Transferability and Adaptability

Transferability and universality have become crucial factors in making attacks more effective, increasing the vulnerability of deep neural networks (DNNs) to misclassification. Previous research has mostly examined the vulnerability of networks to basic, non-targeted attacks. However, such basic attacks are effective only against some DNN models and can be readily detected by recently developed security techniques. Consequently, there has been a significant effort to create adversarial attacks that are not tied to a particular model or image, and this has become a central challenge in current computer vision research. Such model- and image-agnostic attacks could be applied across many medical learning tasks, yet security mechanisms and detection systems specifically designed to mitigate universal attacks remain underdeveloped. This lack of effective defenses is all the more concerning because universal attacks can fool DNNs more easily and with fewer resources. Previous work has identified adaptability (transferability) as a key metric: it measures the degree to which an attack crafted on one model carries over to other models, particularly when those models can only be accessed as black boxes. According to [63], highly adaptable attacks may have to sacrifice the quality of the adversarial image while maintaining a high (100%) attack success rate. Unsupervised learning has gained popularity as a means of enhancing adaptability, offering a feasible way to improve how networks respond to the transfer of adversarial perturbations.
The primary open issues in this research area therefore center on the transferability and universality of adversarial attacks on DNNs.
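Transferability is usually quantified as the fraction of adversarial examples crafted on one (surrogate) model that also fool a different (target) model accessed only as a black box. The sketch below computes this rate for two hypothetical linear classifiers; the models, data points, and `eps` value are illustrative assumptions, FGSM is used only as an example attack, and the rate is computed only over inputs that the target model originally classifies correctly.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm_linear(X, y, w, b, eps):
    """FGSM crafted on a linear logistic *surrogate* model (white-box access)."""
    grad_x = (sigmoid(X @ w + b) - y)[:, None] * w[None, :]
    return X + eps * np.sign(grad_x)


def predict(X, w, b):
    return (X @ w + b > 0).astype(int)


def transfer_success_rate(X, y, surrogate, target, eps):
    """Fraction of target-correct inputs whose transferred adversarial copy is misclassified.

    Gradients come only from the surrogate; the target is used only for predictions.
    """
    ws, bs = surrogate
    wt, bt = target
    X_adv = fgsm_linear(X, y, ws, bs, eps)
    correct = predict(X, wt, bt) == y      # only count inputs the target got right
    fooled = predict(X_adv, wt, bt) != y
    return float(np.mean(fooled[correct])) if correct.any() else 0.0


X = np.array([[0.2, 0.1], [0.5, 0.5], [-0.3, -0.1], [2.0, 2.0]])
y = np.array([1, 1, 0, 1])
surrogate = (np.array([1.0, 1.0]), 0.0)
target = (np.array([1.0, 0.5]), 0.0)       # a related but different model
print(transfer_success_rate(X, y, surrogate, target, eps=0.4))  # → 0.5
```

Here two of the four transferred examples flip the target's prediction. Raising `eps` in this toy setting pushes more inputs across the target's decision boundary at the cost of a larger, more visible perturbation, which is consistent with the trade-off between image quality and attack success discussed above.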

E. Interpretability and Explainability

Interpretability and explainability have become fundamental principles in artificial intelligence and machine learning, especially in healthcare applications. These two interrelated properties strongly influence the confidence that doctors and healthcare practitioners place in defensive AI systems, a subject investigated extensively by Saeed et al. [176]. A lack of trust has far-reaching consequences in healthcare, particularly for physicians' decision-making, which directly affects patient well-being. Busnatu et al. [177] argue convincingly that future defenses should emphasize interpretability and clearly explain their decision-making processes to address these problems. Interpretability not only improves the overall efficacy of AI in healthcare but also increases its acceptance among clinical professionals. Examining the importance of interpretability and explainability first requires acknowledging how closely they are related. Interpretability refers to the degree to which an AI model can be understood by people, particularly experts in the relevant field. Explainability refers to the capability of a model to provide explicit, logical justifications for the judgments it makes. Together, these properties provide transparency into the internal mechanisms of AI systems, a trait that is increasingly essential in healthcare.

The absence of interpretability in AI systems has significant implications. When AI models function as opaque “black boxes” (a phrase used by Saeed et al. [176]), healthcare professionals face a basic dilemma: they must rely on these systems for crucial healthcare decisions, sometimes without a clear understanding of the processes or rationale behind a given recommendation or diagnosis. This lack of transparency undermines clinicians' confidence in AI-driven solutions and leads to hesitancy and doubt. To address this difficulty, AI defenses must be both effective and interpretable. An interpretable AI system gives doctors a transparent view of its decision-making process, allowing them to place more trust in its recommendations and incorporate them seamlessly into their workflow. Interpretability can take several forms. For instance, an AI system can report the factors it took into account when generating a recommendation, highlighting the most significant variables and their corresponding weights. It can also provide doctors with visual representations or natural-language explanations, making the decision-making process more transparent and accessible.
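One common way to surface “the most significant variables and their corresponding weights” is a gradient-based attribution. The sketch below is an illustration rather than a method proposed in the cited works: it computes gradient × input attributions for a small hypothetical ReLU network with random weights and ranks the input features for the predicted class. The network size, the feature names, and the hand-derived gradient are assumptions; a real system would obtain the gradient from an automatic-differentiation framework.

```python
import numpy as np


def forward(x, W1, b1, W2, b2):
    """One-hidden-layer ReLU network; returns class logits and hidden pre-activations."""
    h_pre = W1 @ x + b1
    logits = W2 @ np.maximum(h_pre, 0.0) + b2
    return logits, h_pre


def input_gradient(x, cls, W1, b1, W2, b2):
    """d logits[cls] / d x, via the chain rule through the ReLU mask."""
    _, h_pre = forward(x, W1, b1, W2, b2)
    relu_mask = (h_pre > 0).astype(float)
    return W1.T @ (relu_mask * W2[cls])


def explain(x, cls, params, feature_names, top_k=3):
    """Rank input features by |gradient x input| for the chosen class."""
    attribution = input_gradient(x, cls, *params) * x
    order = np.argsort(-np.abs(attribution))[:top_k]
    return [(feature_names[i], float(attribution[i])) for i in order]


rng = np.random.default_rng(42)
n_in, n_hidden, n_cls = 5, 8, 2
# Hypothetical weights; biases are zero so the attributions sum to the logit.
params = (rng.normal(size=(n_hidden, n_in)), np.zeros(n_hidden),
          rng.normal(size=(n_cls, n_hidden)), np.zeros(n_cls))
names = ["f0", "f1", "f2", "f3", "f4"]   # hypothetical feature names
x = rng.normal(size=n_in)

logits, _ = forward(x, *params)
cls = int(np.argmax(logits))
for name, score in explain(x, cls, params, names):
    print(f"{name}: {score:+.3f}")
```

Because the biases are zero here, the network is positively homogeneous and the gradient × input attributions sum exactly to the class logit, which makes the explanation easy to check; with nonzero biases this completeness property no longer holds.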

Furthermore, the need for interpretability extends beyond individual recommendations. AI systems should also be able to explain why certain inputs were rejected or disregarded. In healthcare, where each data point can influence a patient's diagnosis or treatment plan, it is essential to understand why a particular piece of information was excluded. Such explanations give healthcare professionals the knowledge they need to make well-informed judgments and help ensure that crucial details are not unintentionally overlooked. Busnatu et al. [177] highlight the importance of this approach; by stressing interpretable AI defenses that clearly justify their judgments, their work aligns with the wider industry trend toward transparent and trustworthy AI in healthcare. The advantages of interpretable AI extend beyond greater trust and acceptance in healthcare settings: interpretability also supports ongoing efforts to address bias, fairness, and accountability in AI systems. When AI judgments are clear and intelligible, biases or errors in data or algorithms are easier to identify and correct, which helps AI systems provide equitable and dependable assistance to physicians serving different patient groups. In their 2023 study, Saeed et al. [176] rightly highlighted practitioners' understandable reluctance to use “black box” AI solutions. To overcome this reluctance and use AI effectively in healthcare, the development of interpretable AI defense mechanisms must be a priority.
Such defenses must not only give accurate recommendations but also provide physicians with clear insight into the decision-making process. This approach can bridge the gap between clinicians and AI systems, strengthen healthcare professionals' ability to make well-informed choices, and help ensure that AI becomes a beneficial partner in achieving better healthcare outcomes. The proposal by Saeed et al. [176] to implement interpretable AI defenses is therefore of significant importance in shaping the future of healthcare.

F. Real-time Detection and Response