1. Introduction

Speaker verification is the task of accepting or rejecting the identity claimed by an individual from a set of speakers [1]. Depending on the relation between the training and test text, speaker verification can be divided into text-dependent and text-independent systems [2]. In a text-dependent system, the training utterances are the same as the test phrases. In a text-independent system, the training and test phrases differ. The verification procedure relies on predefined speaker models trained on neutral (unemotional) speech. If evaluation is performed on emotional speech, the speech characteristics diverge from their estimated values. This results in a mismatch between training and test conditions [1]. Previous studies have shown that this mismatch significantly degrades the performance of automatic speech recognition systems, which makes it one of the main challenges for current speech technologies [2,3,4,5].

Over the past years, the machine learning community has witnessed the advent of innovative deep learning models [6]. These neural networks have achieved state-of-the-art performance. They have since become the tool of choice in several benchmarks and in various artificial intelligence fields, such as speaker identification and verification [7].

Nevertheless, convolutional neural network (CNN) models have several shortcomings in their implementation that may limit their performance in certain applications [8]. One fundamental challenge is that they fail to capture the spatial relationships of low-level speech features, such as pitch and energy, which characterize the physical structure of an individual [9]. This problem is commonly addressed by adding max-pooling layers or by building a deeper network, so that each high-layer neuron has a larger receptive field. Yet max-pooling may discard key information, since it ignores every neuron in a pooling window except the one with the highest activation. In addition, there is an upper bound on how far either max-pooling or network depth can enlarge the receptive field [9]. To compensate for this loss, CNNs require large amounts of training data. This makes image reconstruction a much more demanding and complex operation than in the CapsNet model [8]. CNNs are also considered more vulnerable to adversarial attacks, such as pixel perturbations, which lead to erroneous classifications [8]. Moreover, the kernels within a CNN layer must learn to recognize the presence of all related features in the input. As a result, transformations such as rotation and occlusion can have deleterious effects when the training dataset is not appropriately augmented. Even then, learning visual attributes together with all their possible transformations can place a massive burden on a traditional convolutional model [7].
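The following minimal Python sketch makes this information loss concrete. It is an illustration only, not part of the proposed system. It applies non-overlapping 2 × 2 max-pooling with stride 2 to two hypothetical 4 × 4 activation maps. The same two features sit at different positions in the two maps, yet the pooled outputs are identical, so the exact position of each feature inside its pooling window is lost.

```python
def max_pool_2x2(x):
    """Non-overlapping 2x2 max-pooling (stride 2) on a 2D list with even dimensions."""
    return [
        [max(x[r][c], x[r][c + 1], x[r + 1][c], x[r + 1][c + 1])
         for c in range(0, len(x[0]), 2)]
        for r in range(0, len(x), 2)
    ]

# Two hypothetical activation maps containing the same two features
# (values 9 and 7) at different positions.
corners = [[9, 0, 0, 0],
           [0, 0, 0, 0],
           [0, 0, 0, 0],
           [0, 0, 0, 7]]
centre = [[0, 0, 0, 0],
          [0, 9, 0, 0],
          [0, 0, 7, 0],
          [0, 0, 0, 0]]

pooled_corners = max_pool_2x2(corners)
pooled_centre = max_pool_2x2(centre)
print(pooled_corners)  # [[9, 0], [0, 7]]
print(pooled_centre)   # [[9, 0], [0, 7]]
```

The two inputs differ, but after pooling they cannot be told apart. Capsules are designed to retain this kind of pose information.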

To overcome the drawbacks of traditional deep learning architectures such as CNNs, Sabour et al. [10] introduced the capsule neural network. This scheme combines the notion of a capsule with an iterative routing-by-agreement mechanism. The network can be viewed as a new class of deep neural network architectures that better models the hierarchical relationships inside a neural network. According to Patrick et al. [8], a capsule can be represented as a group of neurons that receives and produces vectors. Most earlier deep neural networks, including CNNs, instead output scalar activations. By employing vector neurons, the capsule network can capture pose information, such as the translation and rotation of objects, and encapsulate it in a small vector. This solves the problem of spatial information loss found in CNNs [6,9]. The capsule network encodes the probability that an entity is present as the length of the vector and the entity's pose information as its orientation. Using the routing-by-agreement algorithm between vectorized features, the network then learns the hierarchical relationships between the pose information contained within it. The goal of these capsule vectors is to convey a richer description of information within the network [9].

On the other hand, the capsule network has a key limitation: the compression algorithms used for CNNs cannot be applied directly to CapsNet. In addition, CapsNet is considered a slow algorithm because of the loop in its iterative dynamic routing mechanism [11]. As capsule layers become deeper, the computational complexity becomes high compared with CNN models. To overcome these limitations of capsule networks, we propose a novel solution for speaker verification: the Dual-Channel LSTM Compressed CapsNet. Specifically, this paper studies the performance of a text-independent speaker verification system under emotional and stressful conditions. It does so using a Dual-Channel Long Short-Term Memory Compressed Capsule Neural Network (DC-LSTM-COMP CapsNet). The verification results of the proposed model are compared with those of the original capsule network and CNN. They are also compared with several classical classifiers, namely K-Nearest Neighbour (KNN), Support Vector Machine (SVM), and Multi-Layer Perceptron (MLP). All comparisons use four distinct emotional and stressful datasets. The experimental results show that the proposed model improves the average verification performance in terms of the equal error rate (EER) and the area under the curve (AUC). The obtained values are better than those attained by CNN and by the original CapsNet model. Furthermore, the proposed model has lower computational complexity than both CNN and the original CapsNet model.

2. Literature Review

Nowadays, AI-based learning methods are found in every aspect of society. For instance, Cao et al. [12] proposed a novel label distribution learning (LDL) method in the domain of computer vision. The authors implemented the LDL approach using an Anisotropic Spherical Gaussian (ASG) to predict face orientation from a single RGB image. The approach uses a spherical Gaussian distribution on a unit sphere, which produces an unbiased expectation. The findings showed that the proposed framework outperformed state-of-the-art methods on both the AFLW2000 and BIWI datasets. Liu et al. [13] investigated the performance of a one-stage Spatial Granularity Network (SG-Net) for video instance segmentation (VIS). The proposed SG-Net comprises a feature extraction backbone, a detection head, a mask head, and a tracking head. On the YouTube-VIS dataset, it showed better accuracy and inference speed than the two-stage method. Yan et al. [14] proposed a Global-Local Representation Granularity (GL-RG) framework for video captioning. The approach improves the modeling of global-local visual representations across video frames for caption generation. The authors used extensive visual representations from different video ranges to enhance linguistic expression. In addition, they designed a new global-local encoder to generate a rich semantic vocabulary. They also implemented a training strategy that organizes model learning in an incremental manner. Experimental results on the Microsoft Research Video to Text (MSR-VTT) and Microsoft Research Video Description (MSVD) databases demonstrated the effectiveness of the proposed model over conventional methods.

The advent of deep neural network (DNN) approaches during the last decade has brought notable gains in robustness over traditional classifiers to many applied domains, including speech and speaker recognition. These gains have attracted considerable research attention [15]. For instance, Hourri et al. [16] recently proposed a method that uses a CNN to extract speaker characteristics by constructing CNN filters associated with the speaker. They also proposed novel vectors called convVectors. Evaluation on a gender-dependent database in noisy environments showed that the proposed vectors improved performance over the baseline by 43%, achieving an EER of 1.05%. Zhou et al. [17] proposed two speaker embedding methods for speaker verification. Both integrate phonetic features into a deep convolutional network through an attention mechanism. The authors also explored multi-head attention and discriminative objectives to enhance overall system performance. The scheme was evaluated on the VoxCeleb database. Incorporating phonetic content yielded up to 43% improvement in EER over state-of-the-art models. Zhao et al. [18] explored two different frameworks for modeling long temporal contexts, with the aim of improving the verification performance of residual neural networks (ResNets). The first framework integrates utterance-level mean and variance normalization into the ResNet. The second merges a Bidirectional Long Short-Term Memory (BLSTM) network and a ResNet into a single scheme. Experiments used the VoxCeleb1 corpus and a Microsoft internal test set, MS-SV. With attentive pooling, the proposed approaches achieved up to 23–28% relative improvement in EER over a well-trained ResNet. Hajavi and Etemad [19] explored a Siamese capsule network for text-independent speaker verification in the wild. In their architecture, a thin-ResNet extracts speaker representations from speech samples. A Siamese capsule network with dynamic routing then computes a similarity score between two embeddings. The proposed model significantly outperformed all other benchmark models while using less training data, achieving an average EER of 3.14%. The best performance was obtained by taking representations directly from the feature aggregation module of the thin-ResNet. These representations were then passed to higher-level capsules through the iterative dynamic routing algorithm. In a recent work, Shahin et al. [20] proposed four novel DNN-based hybrid techniques for speaker verification in emotional talking environments. The hidden Markov model-deep neural network (HMM-DNN) achieved the lowest error rates among all hybrid models. The deep neural network-Gaussian mixture model (DNN-GMM) had the lowest computational complexity.

Some authors, such as [21,22,23], used data fuzzification in the signal preprocessing stage. Their studies showed that fuzzification eliminates the information loss that may be associated with preprocessing methods and improves the accuracy and efficiency of classification. Their experimental analysis demonstrated that accuracy increases with the use of fuzzy logic. Many other articles, such as [24,25,26], did not use data fuzzification in the preprocessing phase. They still obtained outstanding classification results that outperformed state-of-the-art methods. Zhang et al. [24] showed experimentally that their model performed excellently compared with existing state-of-the-art models. Tuncer et al. [25] reported that their Led-Pattern model, LEDPatNet19, reached a best classification accuracy of 99.29% on the GAMEEMO dataset. Mohebbanaaz et al. [26] showed the superior performance of their classifier, with a classification result of 98.77%. In this paper, we did not use fuzzification. We plan to employ it in future work to examine its effect on speaker recognition performance.

Recently, capsule neural networks have been applied in a variety of domains with promising results. These include action recognition [27], handwritten and text recognition [28,29,30], speech emotion recognition [6,31], and speech and speaker recognition [19,32]. Shahin et al. [33] used dual-channel LSTM capsule networks for emotion recognition. Their model yielded better accuracy than other machine learning models. Yet very few studies have employed CapsNet for speaker verification. Bae and Kim [9] worked on speech command recognition. Their study focused on using CapsNet to capture pose information from spectrogram features of speech.

This work differs from the literature in two respects. First, none of the prior studies explored speaker verification using emotional or stressful acoustic features of speech. Second, the CapsNet models in previous work did not use the model compression approach applied to CNNs. They also did not examine the computational complexity of the implementation during the testing phase.

The main contribution of our work is the implementation of a novel Dual-Channel LSTM Compressed CapsNet model. To the best of our knowledge, no previous work has used DC-LSTM-COMP CapsNet to evaluate speaker verification performance in emotional and stressful environments. We carried out a series of experiments to evaluate the performance of the proposed model. The novelty of our study can be summarized in the following points:

Proposing and implementing a novel CapsNet classifier for speaker verification;

Evaluating the verification performance on four distinct databases, using the emotional and stressful speech of the speakers;

Comparing the average verification accuracy achieved by the proposed architecture with that attained by the original CapsNet, CNN, SVM, MLP, and KNN;

Conducting a computational complexity study by measuring the testing time of the proposed method, the original CapsNet, CNN, SVM, MLP, and KNN.

The rest of the paper is structured as follows. Section 3 introduces the emotional and stressful speech databases and the feature extraction technique. Section 4 presents the concept and implementation of the proposed algorithm for speaker verification. Section 5 describes the threshold and the verification process. Section 6 discusses how the use of DC-LSTM-COMP CapsNet for speaker verification differs from its use for emotion recognition. Section 7 analyzes the experimental results. Section 8 discusses the main findings, limitations, and future research. Finally, Section 9 concludes the paper.

3. Speech Corpora and Feature Extraction

We used four different emotional and stressful databases: a private “Arabic Emirati Speech Dataset (ESD)”, the “Crowd-sourced Emotional Multimodal Actors (CREMA)” dataset, the “Speech Under Simulated and Actual Stress (SUSAS)” database [34], and the “Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS)” [35]. Six emotional states are considered across the emotional databases: neutral, anger, happiness, sadness, fear, and disgust. The selected stressful styles are neutral, angry, fast, slow, soft, and Lombard.

3.1. Emirati Speech Dataset (ESD)

ESD is an Arabic dataset of eight different utterances that are commonly used across the United Arab Emirates. It contains 31 non-expert Emirati speakers (22 female, 9 male) aged between 18 and 55 years. Each speaker was asked to utter every sentence in each of the following emotional states: neutral, anger, happiness, sadness, disgust, and fear. Every phrase is repeated nine times.

Table 1 lists the eight sentences and their English translations. The training phase uses 24 of the 31 speakers (7 males and 17 females) uttering 5 of the 8 sentences. Each speaker repeats each utterance 9 times in the neutral condition. As a result, the training set contains 1080 samples (24 speakers × 5 sentences × 9 repetitions/sentence in the neutral state). The remaining 7 speakers (2 males and 5 females) are allocated to both the enrolment and evaluation phases. In enrolment, these speakers utter the first 5 sentences in the neutral condition. This gives a total of 315 sentences (7 speakers × 5 utterances × 9 repetitions/utterance in the neutral condition). During evaluation, the same speakers utter the remaining 3 sentences (out of 8), with 9 repetitions per sentence. They do so in the neutral, angry, happy, sad, fear, and disgust emotional states. Hence, the test stage consists of 1134 samples (7 speakers × 3 sentences × 9 repetitions × 6 emotions).

3.2. CREMA-D

The emotional CREMA dataset used in this work is a multimodal audio-visual English dataset [36]. It comprises 7442 clips from 91 speakers (48 men and 43 women) of various ages and ethnicities. Each speaker was asked to record 12 different statements in six emotional categories: anger, happiness, sadness, disgust, fear, and neutral. The sentences include “I think I’ve seen this before (ITS)”, “Don’t forget a jacket (DFA)”, “We’ll stop in a couple of minutes (WSI)”, and “The surface is slick (TSI)”. In this work, 86 speakers are used, of whom 70 are allocated to training. In the neutral state, each training speaker utters 8 of the 12 sentences, with one repetition per sentence. As a result, the training phase contains 560 utterances (70 speakers × 8 utterances × 1 time/utterance in the neutral condition). The remaining 16 speakers, uttering the first 8 sentences in the neutral state, are used for enrolment. This gives 128 enrolment utterances (16 speakers × 8 utterances × 1 time/utterance in the neutral condition). During evaluation, the same 16 speakers utter the last four sentences in each of the emotional categories. As a result, the evaluation set contains 384 utterances (16 speakers × 4 sentences × 1 time/sentence × 6 emotional classes).

3.3. SUSAS

The SUSAS database is a public English corpus. It was developed by the Robust Speech Processing Laboratory at the University of Colorado Boulder and funded by the Air Force Research Laboratory. It consists of two sub-corpora: speech under simulated stress and speech under actual stress [34]. The latter contains speech produced while performing one of two tasks: (1) dual-tracking workload computer tasks or (2) subject motion-fear tasks (subjects on roller-coaster rides). The former contains the simulated speech. The database includes nine speakers representing three regional accents (Boston, General American, and New York) and 11 stressed speaking styles. The two sub-corpora encompass 35 distinct words. Each word is repeated twice by every speaker in every speaking style. The following stressful speaking styles are used in this work: neutral, angry, fast, slow, soft, and Lombard. The speech tokens were sampled at 8 kHz using a 16-bit analog-to-digital converter. The training stage uses 6 of the 9 speakers (3 speakers with a Boston accent and 3 with a General American accent) uttering 30 of the 35 words. During this phase, each speaker repeats each word twice in the neutral state. Hence, a total of 360 utterances (30 words × 2 repetitions/word × 6 speakers in the neutral state) are used for training. The remaining 3 speakers, all with a New York accent, are used for enrolment and testing. They utter the last five words in each of the six stressful conditions: neutral, angry, slow, soft, Lombard, and fast. In total, 180 speech samples (5 words × 2 repetitions × 3 speakers × 6 stressful states) are used in the testing stage.

3.4. RAVDESS

RAVDESS is an English database of 7356 recorded files from 24 professional actresses and actors, 12 female and 12 male [35]. It comprises two distinct utterances spoken in a neutral North American English accent. The corpus contains three types of files:

- 1440 speech audio files (60 trials per speaker × 24 speakers)
- 1012 song files (44 trials per speaker × 23 speakers)
- 4904 video and multimedia files

Only the speech audio and song files are used in this work. The RAVDESS dataset covers eight emotional classes, of which only six are evaluated: neutral, angry, sad, happy, fear, and disgust.

In the training stage, 20 of the 24 speakers (10 male and 10 female) speak the first utterance (out of 2), repeating it twice. The training phase contains 78 utterances in total, drawn from both audio and song files. These are 40 sentences from the audio files (20 speakers × 1 utterance × 2 attempts/utterance in the neutral condition) and 38 utterances from the song files (19 speakers × 1 utterance × 2 attempts/utterance in the neutral state). The last 4 speakers (2 male and 2 female) are used in the enrolment and evaluation phases. During enrolment, these speakers utter the first sentence in the neutral state. This gives 8 sentences from the audio files (4 speakers × 1 utterance × 2 attempts/utterance in the neutral state) and 8 sentences from the song files (4 speakers × 1 utterance × 2 attempts/utterance in the neutral state).

During evaluation, the same speakers utter the second sentence in the neutral state and in each of the emotional classes. As a result, the test phase includes 160 utterances from both audio and song files:

- Neutral and disgust: 16 sentences each (8 from audio and 8 from song files), i.e., 4 speakers × 1 utterance × 2 attempts/utterance per file type.
- Happy, sad, angry, and fear: 32 sentences each (16 from audio and 16 from song files), i.e., 4 speakers × 1 utterance × 4 attempts/utterance per file type.

Together, these give 2 × 16 + 4 × 32 = 160 test utterances.
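Every split size in Sections 3.1 to 3.4 is a product of counts. As a consistency check, the following Python sketch recomputes them from the factors stated in the text. The dictionary keys are arbitrary labels chosen for this illustration. For RAVDESS, speech audio and song files are counted separately and then summed, as described above.

```python
from math import prod

# Factors as stated in Sections 3.1-3.3:
# (speakers, items, repetitions[, emotional/stressful states]).
split_factors = {
    "ESD train": (24, 5, 9),
    "ESD enrol": (7, 5, 9),
    "ESD test": (7, 3, 9, 6),
    "CREMA-D train": (70, 8, 1),
    "CREMA-D enrol": (16, 8, 1),
    "CREMA-D test": (16, 4, 1, 6),
    "SUSAS train": (6, 30, 2),
    "SUSAS test": (3, 5, 2, 6),
}
sizes = {name: prod(factors) for name, factors in split_factors.items()}

# RAVDESS (Section 3.4): audio and song files are counted separately, then summed.
sizes["RAVDESS train"] = 20 * 1 * 2 + 19 * 1 * 2        # audio + song
sizes["RAVDESS enrol"] = 4 * 1 * 2 + 4 * 1 * 2          # audio + song
per_neutral_or_disgust = 2 * (4 * 1 * 2)                 # audio + song, per emotion
per_other_emotion = 2 * (4 * 1 * 4)                      # audio + song, per emotion
sizes["RAVDESS test"] = 2 * per_neutral_or_disgust + 4 * per_other_emotion

for name, n in sizes.items():
    print(f"{name}: {n}")
```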

3.5. Feature Extraction

Mel-frequency cepstral coefficients (MFCCs) are used as the features of the audio signals. They have been the dominant acoustic features in speech and speaker recognition because they closely approximate human auditory perception [37,38]. In this study, 40-dimensional MFCCs and their delta and delta-delta coefficients are extracted during the pre-processing phase. Experimental trials showed that concatenating the MFCC, delta, and delta-delta coefficients improves verification performance. The acoustic features are extracted using libROSA, a Python module. The frequency $f$ (in Hz) is converted to the Mel frequency $m$ using Equation (1) [39]:

$$m = 2595 \log_{10}\left(1 + \frac{f}{700}\right) \tag{1}$$

The delta coefficients are computed using the following equation [40]:

$$d_t = \frac{\sum_{n=1}^{N} n\,\left(c_{t+n} - c_{t-n}\right)}{2\sum_{n=1}^{N} n^2}$$

where $d_t$ is the delta coefficient of frame $t$, computed from the static coefficients $c_{t-N}$ to $c_{t+N}$. $N$ is typically set to 2. The delta-delta features are calculated in the same way, but from the deltas instead of the static coefficients.
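The following plain-Python sketch implements Equation (1) and the delta regression formula with N = 2. It applies the same formula to the deltas to obtain delta-delta features, then concatenates the three streams frame by frame. With 40 MFCCs, this would give 120 values per frame. This is an illustration, not the authors' pipeline. In practice, libROSA provides `librosa.feature.mfcc` and `librosa.feature.delta`, but their defaults may differ from the replicate padding at the sequence edges assumed here. These defaults include the delta window width and edge handling. The Mel conversion `librosa.hz_to_mel` also follows the form of Equation (1) only when `htk=True`.

```python
import math

def hz_to_mel(f_hz):
    """Equation (1): Mel frequency m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def deltas(frames, N=2):
    """Regression deltas d_t = sum_{n=1..N} n (c_{t+n} - c_{t-n}) / (2 sum_{n=1..N} n^2).

    frames: list of per-frame coefficient vectors (lists of floats).
    Frames beyond the edges reuse the first/last frame (replicate padding,
    an assumption made for this sketch).
    """
    T = len(frames)
    denom = 2 * sum(n * n for n in range(1, N + 1))
    out = []
    for t in range(T):
        d_t = []
        for k in range(len(frames[t])):
            num = 0.0
            for n in range(1, N + 1):
                c_next = frames[min(t + n, T - 1)][k]
                c_prev = frames[max(t - n, 0)][k]
                num += n * (c_next - c_prev)
            d_t.append(num / denom)
        out.append(d_t)
    return out

def stack_features(static, N=2):
    """Concatenate static, delta and delta-delta coefficients frame by frame."""
    d1 = deltas(static, N)
    d2 = deltas(d1, N)  # delta-delta: the same formula applied to the deltas
    return [c + d + dd for c, d, dd in zip(static, d1, d2)]

# Toy input: 10 frames of a single coefficient rising by 1 per frame.
ramp = [[float(t)] for t in range(10)]
features = stack_features(ramp)
print(features[4])  # [4.0, 1.0, 0.0]
```

For a linear ramp, the interior delta is exactly the slope (1.0) and the delta-delta is 0.0, as expected from a first-order regression.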

4. The Proposed Model

4.1. Capsule Networks Model

A capsule neural network (CapsNet) is a type of artificial neural network [41]. Regular artificial neural networks consist of layers of scalar neurons and their connections. A CapsNet is instead built from capsules: groups of neurons whose collective output is a vector. These can be seen as “capsules of knowledge”. Each CapsNet consists of an input layer, one or more hidden layers, and an output layer.

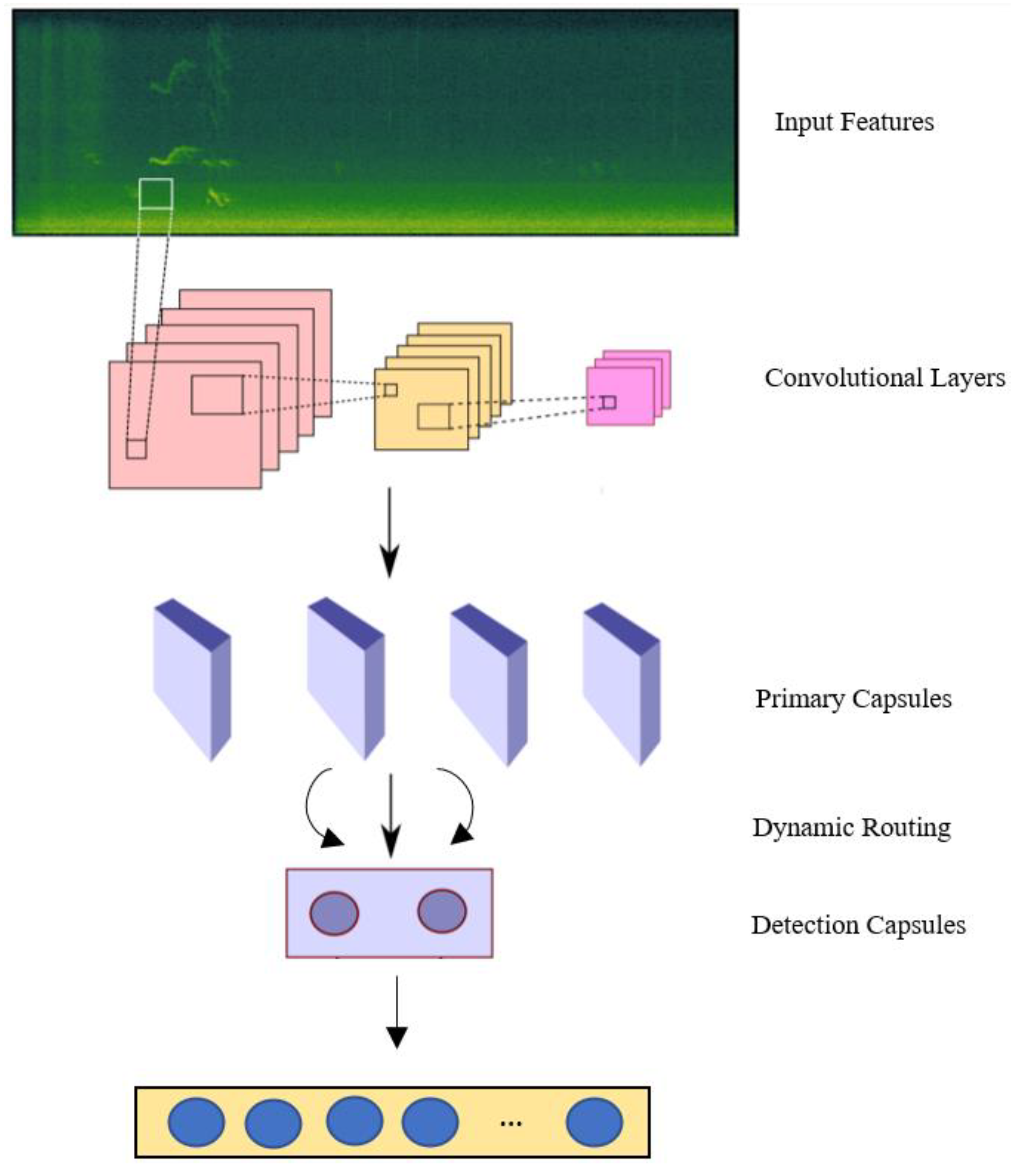

The input to a capsule passes through the other capsules in the network, much as light rays pass through the lenses of a telescope. The block diagram of CapsNet is shown in Figure 1 [42]. First, the input feature activity vector is derived from a voice spectrogram. This feature vector is the input to traditional convolutional layers that use max-pooling. Next, the output of the CNN is used as the input to the primary capsule layer. Each capsule has an independent feature vector made up of multiple neurons. A dynamic routing algorithm then computes the output, and the resulting vectors are forwarded to the detection capsules. Finally, the output layer produces the network's predictions. These are calculated by taking the Euclidean norm of the output of each detection capsule. The resulting values are the observation probabilities.

The vector inputs and outputs of a capsule are computed so that the length of a capsule's output vector represents the probability that the entity represented by the capsule is present in the input. To this end, a non-linear “squashing” function shrinks short vectors to almost zero length and long vectors to a length slightly below 1. The vector output $\mathbf{v}_j$ of capsule $j$ is obtained by applying the squashing function to the total input of that capsule, $\mathbf{s}_j$. Sabour et al. [10] define the vector output as follows:

$$\mathbf{v}_j = \frac{\lVert \mathbf{s}_j \rVert^2}{1 + \lVert \mathbf{s}_j \rVert^2}\,\frac{\mathbf{s}_j}{\lVert \mathbf{s}_j \rVert}$$

The total input $\mathbf{s}_j$ of a capsule (except in the first layer of capsules) is the sum of all prediction vectors $\hat{\mathbf{u}}_{j|i}$ from the lower-level layer, each multiplied by a coupling coefficient $c_{ij}$:

$$\mathbf{s}_j = \sum_i c_{ij}\,\hat{\mathbf{u}}_{j|i}$$

These coefficients couple capsule $i$ in the lower layer with capsule $j$ in the layer above. They are computed by the iterative dynamic routing mechanism. According to Sabour et al. [10], a prediction vector $\hat{\mathbf{u}}_{j|i}$ is formed by multiplying the output $\mathbf{u}_i$ of a capsule in the layer below by a weight matrix $\mathbf{W}_{ij}$:

$$\hat{\mathbf{u}}_{j|i} = \mathbf{W}_{ij}\,\mathbf{u}_i$$
4.2. The Perception of the Proposed Model

We implement a Dual-Channel LSTM Compressed CapsNet designed to address the limitations of the original capsule network model. The proposed approach reduces computational complexity by compressing the scale of the system's computation. This step is followed by the elimination of outliers. As a result, CapsNet is expected to perform better owing to its compressed input features. The input consists of the set of capsules in the solid capsule layer, where each capsule contains n instantiation parameters.

4.3. The Proposed Modified Model Architecture for Speaker Verification

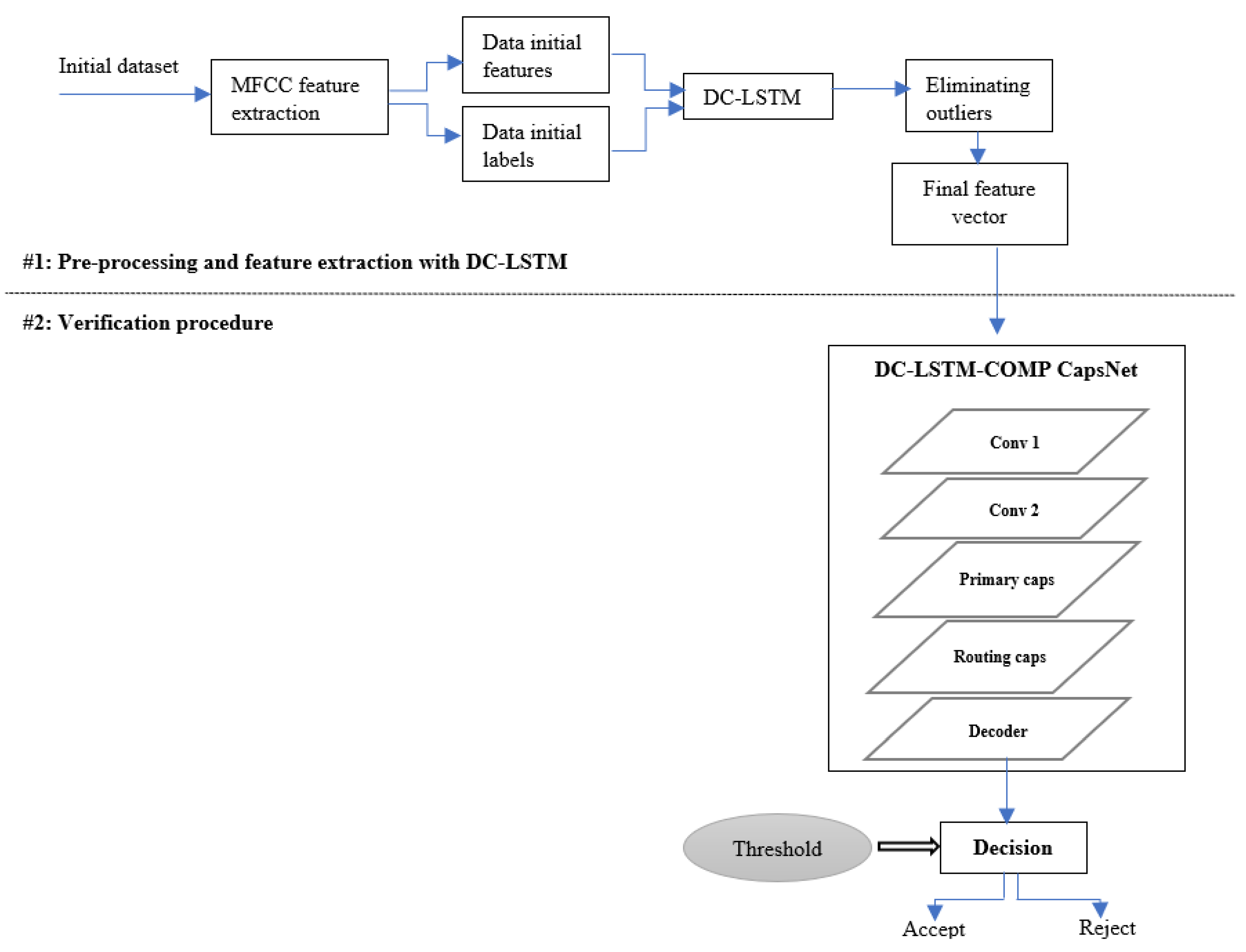

Speaker verification differs from speaker identification in that it consists of three major phases: training/development, enrolment, and evaluation. The role of each phase is explained in the following subsections. A general block diagram of the overall proposed algorithm is shown in Figure 2.

4.3.1. Training/Development

During the training phase, the proposed DC-LSTM-COMP CapsNet model is implemented and trained. First, the labelled training data are pre-processed using DC-LSTM, as shown in Figure 2. These data are the neutral utterances of the speakers allocated for training. The outcome of this step is a compressed feature vector, which is the input of the proposed DC-LSTM-COMP CapsNet model. The model is then trained on these vectors and the speaker labels, using the Adam optimizer and the Mean Squared Error (MSE) loss. Bickel and Doksum [43] define the MSE as follows:

$$\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left(Y_i - \hat{Y}_i\right)^2$$

where $n$ is the number of data points, $Y_i$ are the observed values, and $\hat{Y}_i$ are the predicted values.

The output of the model in this stage is the speaker classification result.
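The following minimal sketch evaluates the MSE criterion for a single sample. It makes the hypothetical assumption that the target is a one-hot speaker label and the prediction is a vector of capsule output lengths. The text does not specify the exact target encoding, so this pairing is an assumption. In PyTorch, `torch.nn.MSELoss()` with its default `reduction='mean'` computes the same quantity.

```python
def mse(observed, predicted):
    """Mean squared error: (1/n) * sum_i (Y_i - Y_hat_i)^2."""
    if not observed or len(observed) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((y - y_hat) ** 2 for y, y_hat in zip(observed, predicted)) / len(observed)

def one_hot(label, num_classes):
    """Hypothetical target encoding: 1.0 at the speaker index, 0.0 elsewhere."""
    return [1.0 if k == label else 0.0 for k in range(num_classes)]

# Hypothetical capsule output lengths for 3 speaker classes; the true speaker is 0.
target = one_hot(0, 3)
prediction = [0.9, 0.1, 0.2]
print(round(mse(target, prediction), 4))  # 0.02
```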

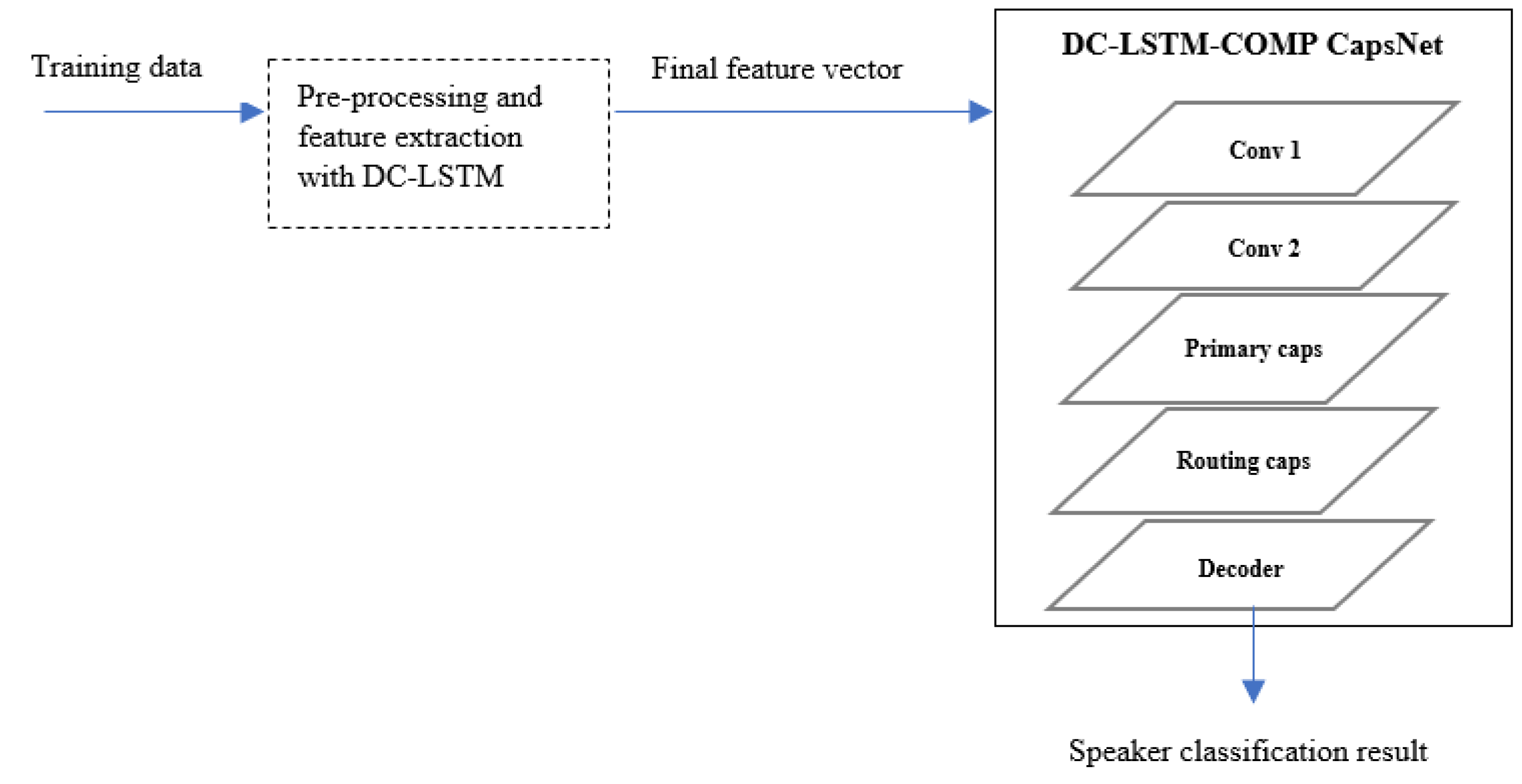

Figure 3 illustrates the architecture of the proposed approach during the training phase. Training was performed on an x86_64 Windows machine with an Intel(R) Core(TM) i5-8265U CPU @ 1.60 GHz (1.80 GHz). No graphics processing units (GPUs) were used in this work.

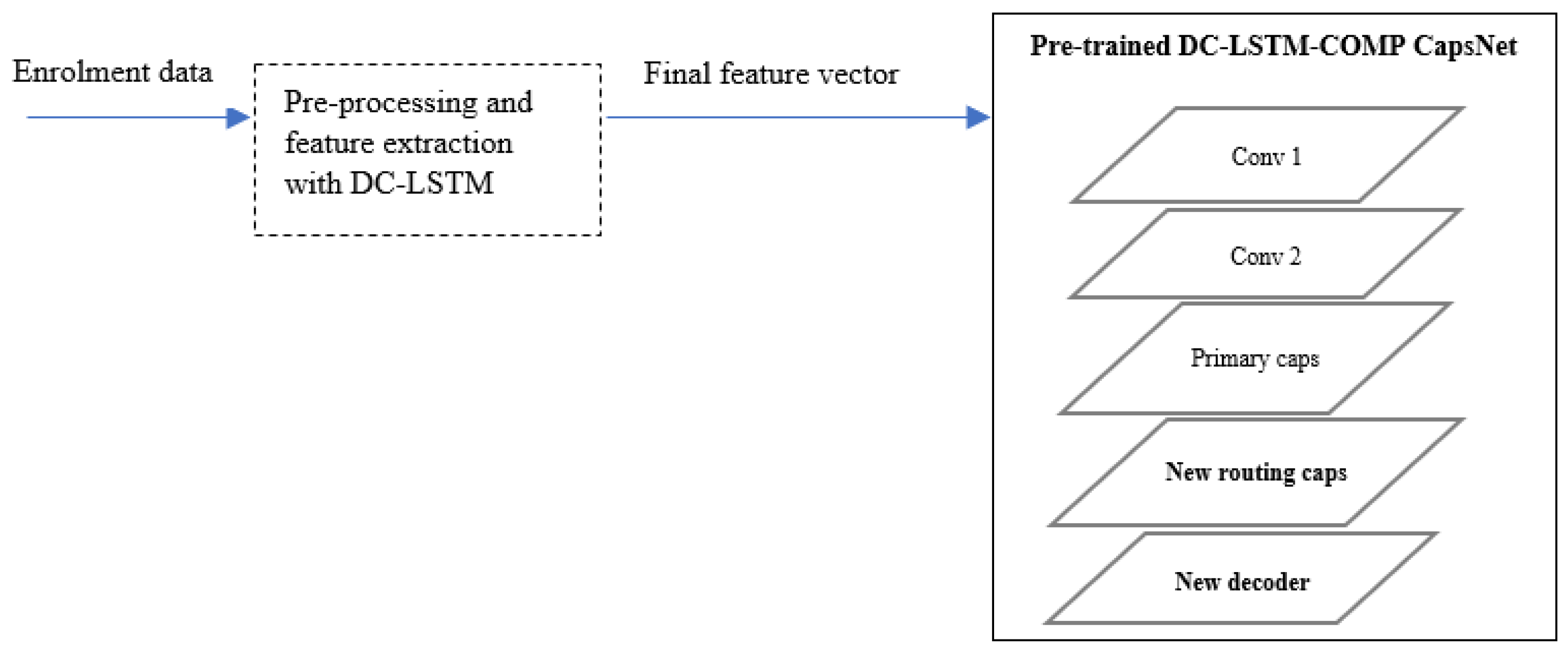

4.3.2. Enrolment

During enrolment, the neutral utterances of the enrolment speakers are used. First, these utterances undergo the pre-processing and DC-LSTM feature extraction step, which is shown in detail in Figure 2. The resulting compressed feature vector is the input of the model pre-trained during the development phase (Section 4.3.1). The routing capsules and the decoder of this loaded model are then discarded. New routing capsules are inserted in their place, with one capsule per enrolment speaker. Next, a new decoder is added, whose input sample size equals the number of speakers designated for enrolment. Finally, the new model is trained on the enrolment speakers' utterances and their corresponding labels. This model is retrained using the Adam optimizer and the MSE loss criterion for 15 epochs.

Figure 4 portrays the block diagram of our proposed model in the enrolment phase.

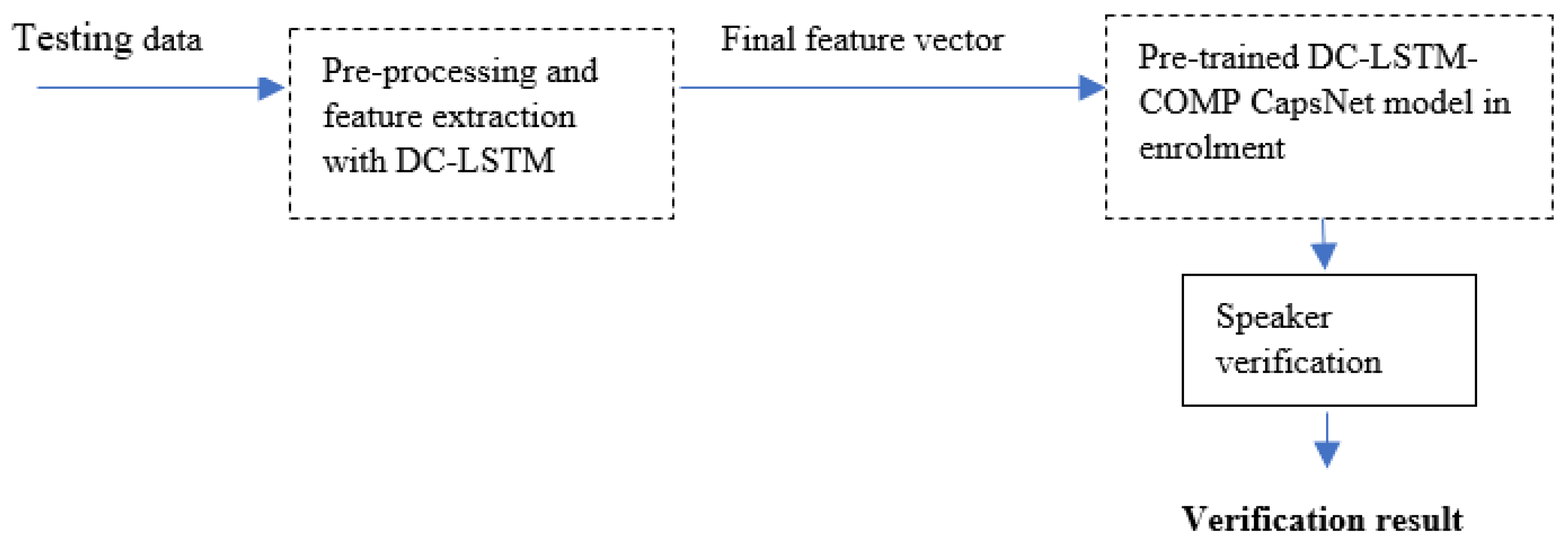

4.3.3. Evaluation

During evaluation, the MFCCs and the DC-LSTM features of the test data are derived first. The test data are the sentences spoken in the different emotional/stressful states by the speakers designated for evaluation. At the end of the pre-processing step, each test sample has its own compressed feature vector, for every emotional/stressful category of each speaker. These feature vectors are then fed into the DC-LSTM-COMP CapsNet model retrained in the enrolment phase (Section 4.3.2). Finally, speaker verification is performed: a claimed speaker identity is either accepted or rejected based on a selected threshold (see Section 5).

Figure 5 demonstrates the block diagram of the proposed architecture during the evaluation phase.

4.3.4. Architecture of Proposed Model

The first layer is a 2D convolutional layer with 512 output channels, a convolving kernel of size 9, and stride 1. The second layer has 256 units, with kernel size 5 and stride 1. The primary capsules use 8 capsule layers. Each primary capsule layer consists of 8 convolutional units with kernel size 9 and stride 2, and each capsule layer has 32 output channels. The number of digit capsules equals the number of classes used in the training phase. There is one 16D capsule per speaker class, and each of these capsules receives input from the capsules in the lower layer. The decoder consists of 3 linear layers:

- The first layer has 16 × (number of classes) input nodes and uses the ReLU activation function.
- The second layer takes 512 input nodes and uses the ReLU activation function.
- The third layer takes 1024 input nodes and uses the non-linear sigmoid activation function.

The model is implemented with the PyTorch library. It is trained with the Adam optimizer with L2 regularization and the default learning rate of 0.001.
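The layer sizes above determine how many capsules the routing step must handle. The following Python sketch does this shape bookkeeping under several assumptions:

- convolutions use no padding;
- the primary capsule grid consists of 32 channels of 8D capsules, which is one reading of the description above, in the style of Sabour et al. [10];
- the input is a hypothetical 28 × 28 map, since the input size is not stated here.

It is not the authors' PyTorch code.

```python
def conv_out(size, kernel, stride, padding=0):
    """Output length of a convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

def capsnet_shapes(height, width, num_classes,
                   primary_channels=32, primary_dim=8, digit_dim=16):
    """Shape bookkeeping for the layer sizes in Section 4.3.4 (no padding assumed)."""
    h1, w1 = conv_out(height, 9, 1), conv_out(width, 9, 1)   # conv 1: 512 channels, k=9, s=1
    h2, w2 = conv_out(h1, 5, 1), conv_out(w1, 5, 1)           # conv 2: 256 channels, k=5, s=1
    h3, w3 = conv_out(h2, 9, 2), conv_out(w2, 9, 2)           # primary capsules: k=9, s=2
    n_primary = primary_channels * h3 * w3                    # number of 8D primary capsules
    return {
        "conv1": (512, h1, w1),
        "conv2": (256, h2, w2),
        "primary_capsules": (n_primary, primary_dim),
        "digit_capsules": (num_classes, digit_dim),
        # one W_ij matrix (digit_dim x primary_dim) per (primary, digit) capsule pair
        "routing_weights": n_primary * num_classes * digit_dim * primary_dim,
        "decoder_inputs": [digit_dim * num_classes, 512, 1024],
    }

# Hypothetical 28 x 28 input map and the 24 ESD training speakers.
for name, shape in capsnet_shapes(28, 28, num_classes=24).items():
    print(name, shape)
```

The number of routing weights grows with the product of primary capsules and classes. Wider or deeper capsule layers therefore raise the cost quickly, which is the complexity concern raised in the Introduction. For a model whose digit capsules correspond to the enrolment speakers, `num_classes` would be the number of enrolment speakers (7 for ESD).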

To assess the effectiveness of the modified CapsNet, we tested the model with different configuration parameters until we obtained the best test accuracy together with the lowest testing time. We tried different numbers of layers, different numbers of filters, and different regularization techniques. The performance of the model did not improve when the depth of the model or the number of filters was increased. Similarly, the accuracy of the proposed model did not increase when we replaced the L2 loss with L1.

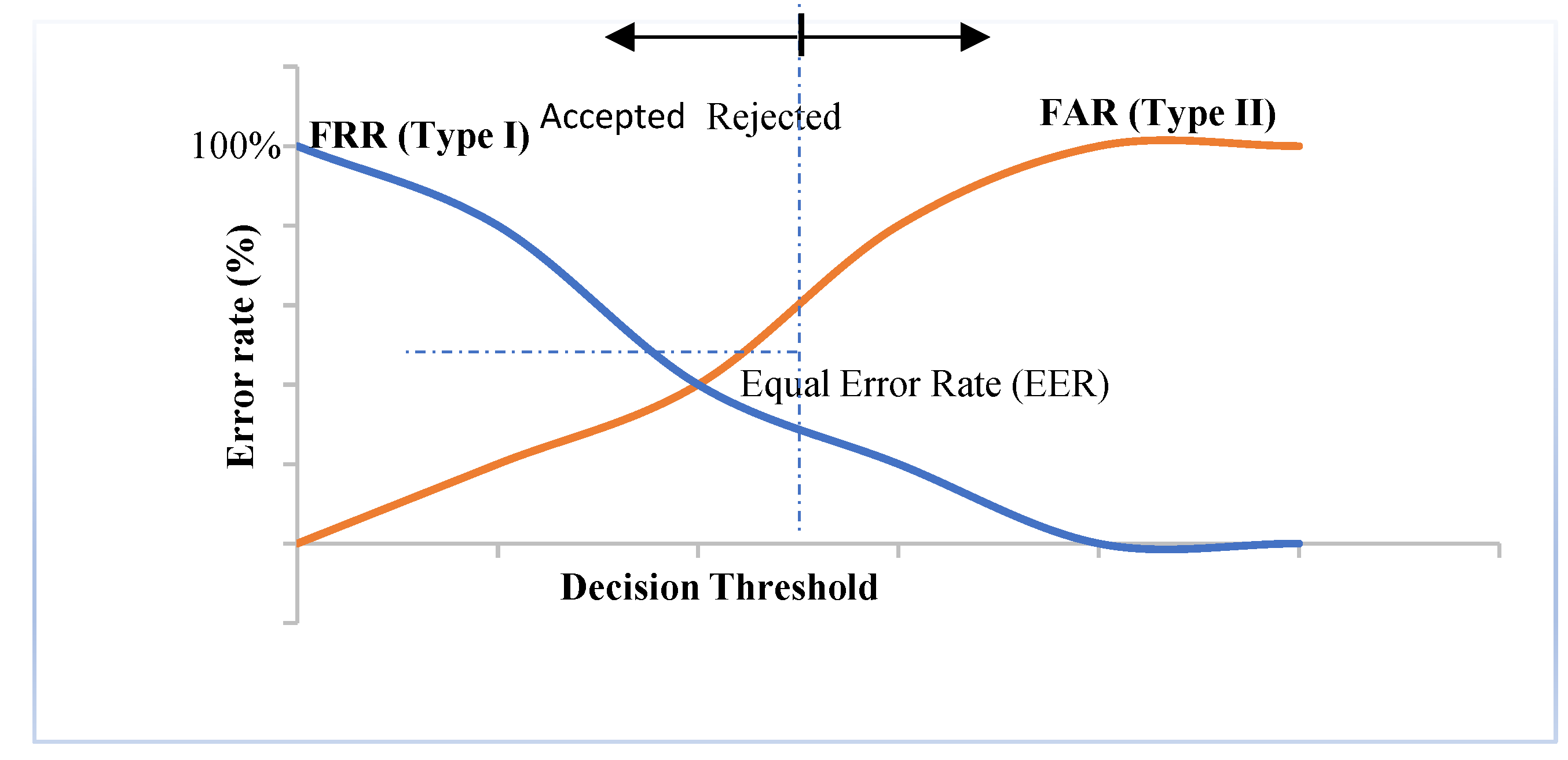

5. Threshold and Speaker Verification

In a speaker verification system, a threshold is used to decide whether a claimed identity is valid.

Figure 6 gives a graphical illustration of the EER in biometric systems. To set the decision threshold, we generate an evenly spaced sequence of threshold values and iterate over it. At each iteration, the absolute difference between the false acceptance rate (FAR) and the false rejection rate (FRR) is computed and stored with its corresponding index, following Munich and Perona [44]. The threshold with the minimum absolute difference is taken as the optimum threshold. In the last phase of verification, the score obtained is compared against the selected threshold to accept or reject the claimed identity of an individual [45], i.e.,

$$\text{decision} = \begin{cases} \text{accept}, & S \geq \theta^{*} \\ \text{reject}, & S < \theta^{*} \end{cases}$$

where $S$ is the verification score and $\theta^{*}$ is the optimum threshold.
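The threshold search described above, together with the final accept/reject comparison, can be sketched in pure Python as follows. The function names and the 1001-point grid are our own choices; we assume that higher scores indicate a genuine speaker (so a trial is accepted when its score is at least the threshold), and the EER is reported as the mean of FAR and FRR at the selected threshold, a common convention that the text does not state explicitly.

```python
def far_frr(genuine, impostor, threshold):
    """FAR and FRR at one threshold, accepting a trial when score >= threshold."""
    far = sum(s >= threshold for s in impostor) / len(impostor)  # impostors accepted
    frr = sum(s < threshold for s in genuine) / len(genuine)     # genuine rejected
    return far, frr


def select_eer_threshold(genuine, impostor, num_thresholds=1001):
    """Sweep evenly spaced thresholds; keep the one minimising |FAR - FRR|.

    Returns (threshold, eer), where eer is the mean of FAR and FRR at the
    selected threshold.
    """
    lo = min(min(genuine), min(impostor))
    hi = max(max(genuine), max(impostor))
    step = (hi - lo) / (num_thresholds - 1)
    best_gap, best_t, best_eer = float("inf"), lo, 1.0
    for i in range(num_thresholds):
        t = lo + i * step
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if gap < best_gap:  # keep the first threshold with the smallest gap
            best_gap, best_t, best_eer = gap, t, (far + frr) / 2
    return best_t, best_eer


def verify(score, threshold):
    """Accept the claimed identity when its score reaches the threshold."""
    return score >= threshold


genuine = [0.9, 0.8, 0.5, 0.7]   # hypothetical target-speaker scores
impostor = [0.1, 0.6, 0.3, 0.2]  # hypothetical impostor scores
threshold, eer = select_eer_threshold(genuine, impostor)
print(f"threshold={threshold:.4f}, EER={eer:.2f}")
```

With these toy scores one genuine and one impostor trial overlap, so the sketch reports an EER of 0.25 at a threshold just above 0.5.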

6. DC-LSTM-COMP CapsNet in Speaker Verification vs. Emotion Recognition

The DC-LSTM-COMP CapsNet framework was first used for emotion recognition, in which the emotional talking condition of the speaker is identified from his/her speech signal. Emotion recognition is treated as a classification task with two main phases: training and testing. First, pre-processing and feature extraction of the raw audio samples were performed using DC-LSTM. Classification was then performed using COMP CapsNet, which consists of a series of convolutional layers, primary capsules, routing capsules, and a decoder. The outcome of the testing phase is the recognized emotion of an unknown utterance.

In this paper, however, the DC-LSTM-COMP CapsNet model is used for speaker verification under emotional and stressful conditions. Speaker verification is a biometric classification problem that decides whether to accept or reject a claimed identity of an individual. This makes speaker verification more challenging than emotion recognition, as it requires (1) an enrolment phase in addition to the training and testing phases, and (2) the selection of an optimum threshold value during verification.

In fact, the only phase shared by emotion recognition and speaker verification is pre-processing and feature extraction with DC-LSTM. Beyond that, the architecture of our proposed framework differs from the one used for emotion recognition. Unlike emotion recognition, which consists of only two stages, the current work involves three main phases: training, enrolment, and testing. The enrolment phase is essential for building a speaker verification system with our proposed model; hence, the dataset is divided into a training set, an enrolment set, and a testing set. In enrolment, the pre-trained DC-LSTM-COMP CapsNet is employed: all routing capsules and decoders used in training are discarded, all parameters are frozen, and new routing capsules and a new decoder are inserted. See Section 4 for further details on the training, enrolment, and evaluation stages for speaker verification.

Furthermore, unlike emotion recognition, speaker verification requires a threshold to decide whether to accept or reject a claimed identity; the threshold is therefore a key element of the speaker verification model. The optimum threshold value is determined through an iterative algorithm. See Section 5 for more details on the threshold and speaker verification.

7. Experimental Results

Our proposed DC-LSTM-COMP CapsNet approach has been evaluated for text-independent speaker verification on four emotional and stressful datasets. The emotional states considered are neutral, angry, happy, sad, fear, and disgust.

Table 2 reports the average speaker verification results of CNN, the original CapsNet, and the proposed modified CapsNet under emotional talking conditions on the private Emirati-accented database. Performance is assessed with two metrics: the equal error rate (EER) and the area under the ROC curve (AUC).
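For readers reproducing these metrics: the AUC can be computed directly from verification scores without tracing the ROC curve, since it equals the probability that a randomly chosen genuine trial scores higher than a randomly chosen impostor trial (the Mann-Whitney formulation). The pure-Python sketch below assumes higher scores indicate a genuine speaker; the score lists are hypothetical, and the quadratic loop is chosen for clarity rather than speed.

```python
def auc_score(genuine, impostor):
    """ROC AUC as the probability that a genuine score beats an impostor score.

    Mann-Whitney formulation: ties count as half a win.
    """
    wins = 0.0
    for g in genuine:
        for s in impostor:
            if g > s:
                wins += 1.0
            elif g == s:
                wins += 0.5
    return wins / (len(genuine) * len(impostor))


# Hypothetical scores: 15 of the 16 genuine/impostor pairs are ordered correctly.
print(auc_score([0.9, 0.8, 0.5, 0.7], [0.1, 0.6, 0.3, 0.2]))  # 0.9375
```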

According to the results in Table 2, verification performance is highest under the neutral state, followed by happy, fear, disgust, sad, and angry. All classifiers yield their highest verification accuracy under neutral talking conditions, whereas the angry state generally yields the highest error rates and the lowest AUC scores. For anger, the EER is 12.41% with the proposed model, compared with 14.54% with the original CapsNet and 17.89% with CNN. Moreover, Table 2 shows that the proposed modified CapsNet achieves the highest AUC scores and the lowest EERs: its average EER is 10.50%, compared with 11.29% for the original CapsNet and 15.77% for CNN. Its average AUC over all emotions is 0.91, compared with 0.88 for the original CapsNet and 0.85 for CNN. The best average verification performance is highlighted in grey in the table. Although the original CapsNet is surpassed by the modified one, it still outperforms the CNN classifier by 0.03 AUC.

Based on the results in Table 3, the proposed CapsNet architecture surpasses the average verification performance of each of the classical classifiers: SVM, MLP, and KNN. The DC-LSTM-COMP CapsNet classifier reduces the average EER by 48.78%, 52.97%, and 70.69% relative to SVM, MLP, and KNN, respectively. The speaker verification code for CNN, SVM, MLP, and KNN was re-implemented by the authors.

In this work, the implementation was performed using Keras with a TensorFlow backend [46]. The MLP classifier used a hidden layer of 100 neurons with the rectified linear unit (ReLU) activation function, the Adam solver for weight optimization, and an L2 regularization term (alpha) of 1.

In the KNN classifier, 10 neighbors were used with uniform weights, so that all points in each neighborhood are weighted equally. The Euclidean distance metric was used, and the algorithm used to compute the nearest neighbors was set to ‘auto’, which lets the classifier choose the most appropriate algorithm based on the values passed to the fit method.
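To make the "uniform weights" setting concrete, here is a pure-Python k-nearest-neighbour vote with Euclidean distance in which each of the k neighbours counts equally. It is an illustrative re-implementation, not the library code used in the experiments; it uses brute-force search, whereas the ‘auto’ setting only changes how neighbours are found (and hence speed), not which neighbours are exact nearest ones up to ties. The feature vectors and speaker labels in the test are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=10):
    """Classify `query` by a uniform-weight vote of its k nearest neighbours."""
    # Sort training points by Euclidean distance to the query (brute force).
    neighbours = sorted(
        zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query)
    )[:k]
    # Uniform weights: every neighbour casts exactly one vote.
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]
```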

For the SVM, a linear support vector classifier was used, with the maximum number of iterations set to 1000.

The CNN was trained with the categorical cross-entropy criterion and the stochastic gradient descent optimizer, with a learning rate of 0.01 and a batch size of 64.

We carried out a series of further experiments to validate the results obtained on the ESD corpus, as follows:

7.1. Experiment 1: CREMA-D Database

The emotional CREMA-D dataset is analyzed to assess the efficiency of our proposed model over the original CapsNet, CNN, and shallow classifiers. Based on the results in Table 4, the modified CapsNet outperforms both the original CapsNet and CNN in terms of AUC: the average AUC scores are 0.82, 0.78, and 0.73 for the proposed model, the original CapsNet, and CNN, respectively. However, for the angry, sad, fear, and happy emotions, the proposed classifier does not achieve lower error rates than the original CapsNet. Its average EER (19.14%) is close to that of the original CapsNet (18.81%). Sentences spoken in the neutral state achieved the lowest error rates with all models.

The results in Table 5 show that the proposed model achieves a lower average EER than the SVM, MLP, and KNN classifiers, reducing the error rate by 43.70%, 56.98%, and 62.47%, respectively. Among the classical classifiers, SVM yields the best verification performance in all emotional states, followed by MLP and then KNN, with average EERs of 34.0%, 44.5%, and 51%, respectively. For each of SVM, MLP, and KNN, speaker verification performs better under the neutral talking condition than under emotional conditions, with neutral-state EERs of 22%, 30%, and 44%, respectively.

7.2. Experiment 2: SUSAS Database

In this experiment, the SUSAS database is used to assess the efficiency of the proposed DC-LSTM-COMP CapsNet model relative to all other models under stressful talking conditions.

Table 6 reports the speaker verification performance in terms of percentage EER and AUC. The modified CapsNet attains the lowest EER values and the highest AUC scores across all states, and therefore surpasses both the original CapsNet and CNN under all stressful states. Its average EER is 9.59%, compared with 14.18% for the original CapsNet and 40.83% for CNN. We attribute this gap to the limited architecture of CNN and the insufficient compression of the original CapsNet. All three classifiers achieve their highest verification accuracy when the utterances are tested under the neutral state, whereas the angry state yields the poorest average verification performance, with the highest EER and the lowest AUC.

Based on the results in Table 7, the average verification performance of the classical classifiers is inferior to that of the proposed modified CapsNet, which reduces the error rate by 75.92%, 77.86%, and 80.91% relative to SVM, MLP, and KNN, respectively. Among the conventional classifiers, KNN achieves the worst verification accuracy, with an average EER of 50.66%, compared with 39.83% for SVM and 43.33% for MLP. Across all classical models, verification under the angry emotion yields the poorest accuracy of all emotional classes, with EERs of 61%, 78%, and 87% for KNN, SVM, and MLP, respectively.

7.3. Experiment 3: RAVDESS Database

In this experiment, the RAVDESS emotional database is used to assess the effectiveness of the proposed DC-LSTM-COMP CapsNet model in comparison with the original CapsNet and CNN, as well as SVM, MLP, and KNN.

Table 8 reports speaker verification performance under emotional conditions on the RAVDESS database. The EER values and AUC scores show that the modified CapsNet outperforms both the original CapsNet and CNN, with average EERs of 13.56%, 18.81%, and 27.60% for the modified CapsNet, the original CapsNet, and CNN, respectively. The neutral state records the lowest EER values, followed by happy, sad, disgust, fear, and anger.

Based on the results in Table 9, the proposed model outperforms the conventional SVM, MLP, and KNN classifiers, reducing the error rate by 68.94%, 69.52%, and 70.83%, respectively. However, for sentences spoken in the neutral state, the classical classifiers produce lower EER values than CNN, the original CapsNet, and the modified CapsNet. We attribute this to the small size of the RAVDESS database, whose training set comprises a single sentence. Averaged over all states, on the other hand, the classical classifiers perform poorly compared with CNN, the original CapsNet, and the proposed model.

7.4. Wilcoxon Signed-Rank Test

To validate our conclusions and determine whether the differences in EER are genuine or simply due to statistical fluctuations, we carried out statistical significance tests between the proposed DC-LSTM-COMP CapsNet model and the competing original CapsNet and CNN models. A Kolmogorov–Smirnov test indicated that the EERs obtained are not normally distributed, so we employed the non-parametric Wilcoxon signed-rank test, which compares two paired samples.

Table 10 lists the p-values computed for the proposed framework against CNN and the original CapsNet. Most of the computed p-values are below α = 0.05; consequently, the proposed framework is statistically different from both models on most of the datasets.
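The Wilcoxon signed-rank statistic and its exact two-sided p-value can be computed by enumerating all sign assignments, which is feasible for the small paired samples involved here. The pure-Python sketch below (function names are ours) drops zero differences and averages tied ranks. As an illustration only, it pairs the per-emotion EERs of the original and modified CapsNet from Table 2; the text does not specify how the samples behind Table 10 were formed, so this is not a reproduction of those p-values.

```python
from itertools import product


def average_ranks(values):
    """1-based ranks of `values`, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend over a run of tied values
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Wilcoxon signed-rank statistic W and exact two-sided p for paired x, y."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences dropped
    ranks = average_ranks([abs(d) for d in diffs])
    total = sum(ranks)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w = min(w_plus, total - w_plus)
    # Under H0 each rank is equally likely to carry a + or - sign.
    extreme = 0
    for signs in product((False, True), repeat=len(ranks)):
        wp = sum(r for r, s in zip(ranks, signs) if s)
        if min(wp, total - wp) <= w + 1e-9:
            extreme += 1
    return w, extreme / 2 ** len(ranks)


# Per-emotion EERs (%) on ESD from Table 2, used purely as an illustration.
original_capsnet = [8.33, 14.54, 12.10, 15.05, 13.74, 14.00]
modified_capsnet = [7.02, 12.41, 9.20, 12.00, 10.00, 11.04]
print(wilcoxon_signed_rank(original_capsnet, modified_capsnet))  # (0.0, 0.03125)
```

Because all six differences favour the modified CapsNet, the statistic is 0 and the exact two-sided p-value is 2/64 = 0.03125, which is also the smallest value attainable with six pairs.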

7.5. Experiment 4: Testing Time Comparison

This experiment compares the verification testing times of the proposed DC-LSTM-COMP CapsNet, original CapsNet, CNN, SVM, MLP, and KNN models on the ESD, CREMA-D, SUSAS, and RAVDESS databases.

Table 11 reports the testing time, in seconds, of the proposed framework, original CapsNet, CNN, SVM, MLP, and KNN on the ESD, CREMA-D, SUSAS, and RAVDESS datasets. The testing time is the time elapsed between the launch and the end of the verification procedure. Based on the results in Table 11, the testing times of the proposed modified CapsNet are very close to those of the original CapsNet and CNN on all corpora, and the computational complexity of the proposed algorithm, as reflected in testing time, is slightly lower than that of both. On the Emirati database, the recorded testing times are 4.0092 s, 4.0097 s, and 4.6138 s for the modified CapsNet, the original CapsNet, and CNN, respectively. On the CREMA-D dataset, they are 4.912 s, 4.954 s, and 4.998 s, respectively.
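The testing time is defined above as the elapsed time between launch and end of verification, but the text does not say how it was measured. One reasonable approach, assuming a Python pipeline, is to wrap the verification call with a monotonic high-resolution clock, repeat it a few times, and report the median to damp scheduling noise. In the sketch below, `verify_fn` is a hypothetical stand-in for whatever function runs verification over the test set.

```python
import statistics
import time


def time_verification(verify_fn, *args, repeats=5):
    """Time verify_fn(*args) over several runs.

    Returns the result of the last run and the median elapsed wall-clock
    time in seconds, measured from launch to end of each call.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    timings = []
    result = None
    for _ in range(repeats):
        start = time.perf_counter()  # launch of the verification procedure
        result = verify_fn(*args)
        timings.append(time.perf_counter() - start)  # end of the procedure
    return result, statistics.median(timings)
```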

Based on the testing times in Table 11, the proposed architecture also has lower testing times than the conventional SVM, MLP, and KNN classifiers across all databases. The testing times of the modified CapsNet are close to those of the MLP classifier on ESD, CREMA-D, and SUSAS. KNN records the highest testing time on all databases, at 12.441 s, 7.281 s, 5.783 s, and 24.37 s on ESD, CREMA-D, SUSAS, and RAVDESS, respectively.

7.6. Experiment 5: Comparison with Previous Work

In this experiment, the verification results of the proposed DC-LSTM-COMP CapsNet model are compared with those of classifiers from previous work: First-Order Hidden Markov Models (HMM1s) and Second-Order Hidden Markov Models (HMM2s) [45]; the Third-Order Circular Hidden Markov Model (CHMM3) and the Circular Suprasegmental Hidden Markov Model (CSPHMM) [2]; and the hybrid DNN-based models implemented in [20], as shown in Table 12. For a meaningful and unbiased comparison, we restrict it to studies that used the ESD, CREMA-D, SUSAS, or RAVDESS databases for speaker verification in neutral, emotional, or stressful talking environments. Our proposed model reduces the EER by 38.95%, 26.87%, 62.31%, and 49.74% relative to HMM1, HMM2, CHMM3, and CSPHMM, respectively. In addition, the proposed classifier outperforms the DNN-GMM, GMM-DNN, HMM-DNN, and DNN-HMM hybrid models on the emotional RAVDESS corpus. On the SUSAS dataset, our modified CapsNet also outperforms GMM-DNN and DNN-HMM, reducing the error rate by 4.29% and 52.00%, respectively.

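The percentage decrease in error rate is computed as:

$$\text{Decrease in EER}~(\%) = \frac{\text{EER}_{\text{baseline}} - \text{EER}_{\text{proposed}}}{\text{EER}_{\text{baseline}}} \times 100$$

where $\text{EER}_{\text{baseline}}$ is the EER of the compared classifier and $\text{EER}_{\text{proposed}}$ is the EER of the proposed model.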
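The relative-reduction formula, (EER_baseline − EER_proposed) / EER_baseline × 100, can be checked against the average EERs of Tables 2 and 3 in a few lines of Python. This is an illustrative recomputation from the tabulated averages, not the authors' script.

```python
def percent_decrease(baseline_eer: float, proposed_eer: float) -> float:
    """Relative EER reduction of the proposed model over a baseline, in percent."""
    return (baseline_eer - proposed_eer) / baseline_eer * 100.0


# Average EERs (%) on the Emirati-accented dataset (ESD), from Tables 2 and 3.
proposed_eer = 10.50
for name, baseline_eer in [("SVM", 20.5), ("MLP", 22.33), ("KNN", 35.83)]:
    print(f"{name}: {percent_decrease(baseline_eer, proposed_eer):.2f}%")
```

This gives 48.78% for SVM and 70.69% for KNN, matching the values reported in Section 7; the MLP figure comes out at 52.98%, within rounding of the reported 52.97%.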

8. Discussion

The outcomes of the experiments reveal that the proposed model surpasses both the original CapsNet and CNN classifiers in terms of AUC on all four databases, and in terms of average EER on the ESD, SUSAS, and RAVDESS databases. In addition, the results indicate a statistically significant difference between the proposed model and each of the original CapsNet and CNN on the ESD, SUSAS, and RAVDESS databases. The proposed model also surpasses the classical SVM, MLP, and KNN classifiers under emotional and stressful conditions, and its testing times are lower than, or very close to, those of all other classifiers. Finally, the proposed model achieves better verification accuracy than HMM1, HMM2, CHMM3, and CSPHMM on the ESD database in emotional talking environments.

Nevertheless, our work has limitations. On the RAVDESS database, the error rates of the conventional classifiers in the neutral state are lower than those of CNN, the original CapsNet, and the proposed model, which we attribute to the small size of the dataset. In addition, the proposed DC-LSTM-COMP CapsNet model is not statistically different from the original CapsNet on the CREMA-D dataset. Furthermore, the recorded testing times of the proposed model are very close to those of the original CapsNet.

In future work, we plan to modify the proposed CapsNet model to improve verification accuracy in the angry emotional state while reducing computational complexity, in terms of testing time, relative to CNN and the original CapsNet for text-independent speaker verification. We also plan to test our algorithm with different routing mechanisms and examine their effect on verification performance across the different emotional and stressful states. Moreover, we intend to propose and implement novel, efficient, and robust CapsNet-based hybrid algorithms with low testing time for speaker verification under emotional and stressful conditions. Finally, we aim to apply initial data fuzzification in the pre-processing stage and study its effect on speaker recognition accuracy and efficiency under neutral and emotional acoustic conditions.

9. Conclusions

In this research, we propose a novel modified CapsNet model for text-independent speaker verification. The modified DC-LSTM-COMP CapsNet incorporates Dual-Channel LSTM layers in the pre-processing phase to extract the sequence features of the amplitude/phase components together with the in-phase/quadrature signal components. The proposed architecture consists of three main phases: training, enrolment, and evaluation. The experimental results demonstrate that the proposed framework improves speaker verification performance on four different emotional and stressful datasets: the Emirati-accented database, CREMA-D, SUSAS, and RAVDESS. We attribute this to the ability of the proposed model to overcome the limitations of CNN models and of the original CapsNet; in particular, the developed classifier performs the compression step effectively while preserving sufficient energy.

Author Contributions

A.B.N.: Conceptualization, Methodology, Supervision, Writing—Original Draft, Writing—Review and Editing. I.S.: Data Curation, Methodology, Writing—Review and Editing. N.N.: Investigation, Writing—Original Draft. N.H.: Methodology, Writing—Review and Editing. A.E.: Methodology, Writing—Review and Editing. All authors have read and agreed to the published version of the manuscript.

Funding

This project was funded by the machine learning and Arabic language processing research group at the University of Sharjah.

Institutional Review Board Statement

The authors have authorization from the University of Sharjah to gather speech databases from UAE nationals based on the competitive research project titled Emirati-Accented Speaker and Emotion Recognition Based on Deep Neural Network, No. 19020403139.

Informed Consent Statement

This study did not involve any experiments on animals.

Data Availability Statement

Acknowledgments

The authors would like to convey their thanks and appreciation to the University of Sharjah for supporting this work through the Machine Learning and Arabic Language Processing research group.

Conflicts of Interest

The authors declare that they have no conflict of interest.

References

- Parthasarathy, S.; Busso, C. Predicting speaker recognition reliability by considering emotional content. In Proceedings of the 2017 7th International Conference on Affective Computing and Intelligent Interaction (ACII), San Antonio, TX, USA, 23–26 October 2017; pp. 434–439.

- Shahin, I.; Nassif, A.B. Speaker Verification in Emotional Talking Environments based on Third-Order Circular Suprasegmental Hidden Markov Model. In Proceedings of the 2019 International Conference on Electrical and Computing Technologies and Applications (ICECTA), Ras Al Khaimah, United Arab Emirates, 19–21 November 2019; pp. 1–6.

- Parthasarathy, S.; Lotfian, R.; Busso, C. A study of speaker verification performance with expressive speech. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing, New Orleans, LA, USA, 5–9 March 2017; pp. 4995–4999.

- Nassif, A.B.; Shahin, I.; Hamsa, S.; Nemmour, N.; Hirose, K. CASA-based speaker identification using cascaded GMM-CNN classifier in noisy and emotional talking conditions. Appl. Soft Comput. 2021, 103, 107141.

- Nassif, A.B.; Shahin, I.; Elnagar, A.; Velayudhan, D.; Alhudhaif, A.; Polat, K. Emotional speaker identification using a novel capsule nets model. Expert Syst. Appl. 2022, 193, 116469.

- Zhong, X.; Liu, J.; Li, L.; Chen, S.; Lu, W.; Dong, Y.; Wu, B.; Zhong, L. An emotion classification algorithm based on SPT-CapsNet. Neural Comput. Appl. 2020, 32, 1823–1837.

- Punjabi, A.; Schmid, J.; Katsaggelos, A.K. Examining the Benefits of Capsule Neural Networks. arXiv 2020, arXiv:2001.10964.

- Kwabena Patrick, M.; Felix Adekoya, A.; Abra Mighty, A.; Edward, B.Y. Capsule Networks—A survey. J. King Saud Univ.—Comput. Inf. Sci. 2022, 34, 1295–1310.

- Bae, J.; Kim, D.S. End-to-end speech command recognition with capsule network. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH 2018, Hyderabad, India, 2–6 September 2018; pp. 776–780.

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 3857–3867.

- Jain, A.; Fandango, A.; Kapoor, A. TensorFlow Machine Learning Projects: Build 13 Real-World Projects with Advanced Numerical Computations Using the Python Ecosystem; Packt Publishing Ltd.: Birmingham, UK, 2018.

- Cao, Z.; Liu, D.; Wang, Q.; Chen, Y. Towards Unbiased Label Distribution Learning for Facial Pose Estimation Using Anisotropic Spherical Gaussian; Springer: Cham, Switzerland, 2022; pp. 737–753.

- Liu, D.; Cui, Y.; Tan, W.; Chen, Y. SG-Net: Spatial Granularity Network for One-Stage Video Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9811–9820.

- Yan, L.; Wang, Q.; Cui, Y.; Feng, F.; Quan, X.; Zhang, X.; Liu, D. GL-RG: Global-Local Representation Granularity for Video Captioning. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI-22, Vienna, Austria, 23–29 July 2022; pp. 2769–2775.

- Nassif, A.B.; Shahin, I.; Attili, I.; Azzeh, M.; Shaalan, K. Speech Recognition Using Deep Neural Networks: A Systematic Review. IEEE Access 2019, 7, 19143–19165.

- Hourri, S.; Nikolov, N.S.; Kharroubi, J. Convolutional neural network vectors for speaker recognition. Int. J. Speech Technol. 2021, 24, 389–400.

- Zhou, T.; Zhao, Y.; Li, J.; Gong, Y.; Wu, J. CNN with Phonetic Attention for Text-Independent Speaker Verification. In Proceedings of the 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Singapore, 14–18 December 2019; pp. 718–725.

- Zhao, Y.; Zhou, T.; Chen, Z.; Wu, J. Improving Deep CNN Networks with Long Temporal Context for Text-Independent Speaker Verification. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6834–6838.

- Hajavi, A.; Etemad, A. Siamese capsule network for end-to-end speaker recognition in the wild. arXiv 2020, arXiv:2009.13480.

- Shahin, I.; Nassif, A.B.; Nemmour, N.; Elnagar, A.; Alhudhaif, A.; Polat, K. Novel hybrid DNN approaches for speaker verification in emotional and stressful talking environments. Neural Comput. Appl. 2021, 33, 16033–16055.

- Levashenko, V.; Zaitseva, E.; Puuronen, S. Fuzzy Classifier Based on Fuzzy Decision Tree. In Proceedings of the EUROCON 2007—The International Conference on “Computer as a Tool”, Warsaw, Poland, 9–12 September 2007; pp. 823–827.

- Ivanova, M.S. Fuzzy Set Theory and Fuzzy Logic for Activities Automation in Engineering Education. In Proceedings of the 2019 IEEE XXVIII International Scientific Conference Electronics (ET), Sozopol, Bulgaria, 12–14 September 2019; pp. 1–4.

- Geiger, B.C.; Kubin, G. Information Loss in Deterministic Signal Processing Systems; Springer International Publishing: Cham, Switzerland, 2018; ISBN 978-3-319-59532-0.

- Zhang, Z.; Luo, H.; Wang, C.; Gan, C.; Xiang, Y. Automatic Modulation Classification Using CNN-LSTM Based Dual-Stream Structure. IEEE Trans. Veh. Technol. 2020, 69, 13521–13531.

- Tuncer, T.; Dogan, S.; Subasi, A. LEDPatNet19: Automated Emotion Recognition Model based on Nonlinear LED Pattern Feature Extraction Function using EEG Signals. Cogn. Neurodyn. 2022, 16, 779–790.

- Mohebbanaaz; Kumari, L.V.R.; Sai, Y.P. Classification of ECG beats using optimized decision tree and adaptive boosted optimized decision tree. Signal Image Video Process. 2022, 16, 695–703.

- Ha, M.-H.; Chen, O.T.-C. Deep Neural Networks Using Capsule Networks and Skeleton-Based Attentions for Action Recognition. IEEE Access 2021, 9, 6164–6178.

- Mandal, B.; Dubey, S.; Ghosh, S.; Sarkhel, R.; Das, N. Handwritten Indic Character Recognition using Capsule Networks. In Proceedings of the 2018 IEEE Applied Signal Processing Conference (ASPCON), Kolkata, India, 7–9 December 2018; pp. 304–308.

- Wu, Y.; Li, J.; Wu, J.; Chang, J. Siamese capsule networks with global and local features for text classification. Neurocomputing 2020, 390, 88–98.

- Yang, M.; Zhao, W.; Ye, J.; Lei, Z.; Zhao, Z.; Zhang, S. Investigating Capsule Networks with Dynamic Routing for Text Classification. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3110–3119.

- Wu, X.; Liu, S.; Cao, Y.; Li, X.; Yu, J.; Dai, D.; Ma, X.; Hu, S.; Wu, Z.; Liu, X.; et al. Speech Emotion Recognition Using Capsule Networks. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6695–6699.

- Lee, K.; Joe, H.; Lim, H.; Kim, K.; Kim, S.; Han, C.W.; Kima, H.G. Sequential routing framework: Fully capsule network-based speech recognition. arXiv 2020, arXiv:2007.11747.

- Shahin, I.; Hindawi, N.; Nassif, A.B.; Alhudhaif, A.; Polat, K. Novel dual-channel long short-term memory compressed capsule networks for emotion recognition. Expert Syst. Appl. 2022, 188, 116080.

- Hansen, J.H.; Bou-Ghazale, S.E.; Sarikaya, R.; Pellom, B. Getting Started with SUSAS: A Speech Under Simulated and Actual Stress Database. In Proceedings of the Eurospeech, Rhodes, Greece, 22–25 September 1997.

- Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A dynamic, multimodal set of facial and vocal expressions in North American English. PLoS ONE 2018, 13, e0196391.

- Cao, H.; Cooper, D.G.; Keutmann, M.K.; Gur, R.C.; Nenkova, A.; Verma, R. CREMA-D: Crowd-sourced emotional multimodal actors dataset. IEEE Trans. Affect. Comput. 2014, 5, 377–390.

- Li, L.; Wang, D.; Zhang, Z.; Zheng, T.F. Deep Speaker Vectors for Semi Text-independent Speaker Verification. arXiv 2015, arXiv:1505.06427.

- Shahin, I.; Nassif, A.B. Three-stage speaker verification architecture in emotional talking environments. Int. J. Speech Technol. 2018, 21, 915–930.

- O’Shaughnessy, D. Speech Communications: Human And Machine; Addison-Wesley: Boston, MA, USA, 1987; ISBN 978-0-201-16520-3.

- Furui, S. Speaker-Independent Isolated Word Recognition Using Dynamic Features of Speech Spectrum. IEEE Trans. Acoust. Speech Signal Process. 1986, ASP-34, 3–9.

- Hinton, G.E.; Krizhevsky, A.; Wang, S.D. Transforming Auto-Encoders. In Proceedings of the Artificial Neural Networks and Machine Learning—ICANN 2011, Espoo, Finland, 14–17 June 2011; pp. 44–51.

- Vesperini, F.; Gabrielli, L.; Principi, E.; Squartini, S. Polyphonic Sound Event Detection by Using Capsule Neural Networks. IEEE J. Sel. Top. Signal Process. 2019, 13, 310–322.

- Bickel, P.J.; Doksum, K.A. Mathematical Statistics; Chapman and Hall/CRC: Boca Raton, FL, USA, 2015; ISBN 9781315369266.

- Munich, M.E.; Perona, P. Visual identification by signature tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 200–217.

- Shahin, I. Emirati speaker verification based on HMM1s, HMM2s, and HMM3s. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing, Chengdu, China, 6–10 November 2016; pp. 562–567.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation OSDI 2016, Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

Figure 1.

The architecture of the original CapsNet [42].


Figure 2.

The overall architecture of speaker verification using the proposed modified CapsNet model.


Figure 3.

Block diagram of speaker verification of the proposed DC-LSTM-COMP CapsNet in the training phase.


Figure 4.

Block diagram of the enrolment phase of speaker verification using the proposed DC-LSTM-COMP CapsNet model.

Figure 5.

Block diagram of the evaluation phase of speaker verification using the proposed DC-LSTM-COMP CapsNet model.

Figure 6.

Graphical illustration of equal error rate (EER) in verification systems.
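Figure 6 depicts the EER as the operating point at which the false acceptance rate (FAR) equals the false rejection rate (FRR). The following is a minimal sketch of that definition, assuming that higher scores indicate the claimed speaker and that a trial is accepted when its score is at or above the threshold. It sweeps the observed scores as thresholds and reports the point where FAR and FRR are closest. The function name `equal_error_rate` and the toy scores are illustrative, not taken from the paper; the tables report the EER as a percentage, i.e., the returned fraction times 100.

```python
def equal_error_rate(genuine, impostor):
    """Approximate EER from genuine (target) and impostor (non-target) scores.

    A trial is accepted when score >= threshold. Each observed score is tried
    as a threshold; the EER is the mean of FAR and FRR at the threshold where
    the two rates are closest. Returns (eer_fraction, threshold).
    """
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    best_gap, best_eer, best_t = None, None, None
    for t in sorted(set(genuine) | set(impostor)):
        far = sum(s >= t for s in impostor) / len(impostor)  # impostors accepted
        frr = sum(s < t for s in genuine) / len(genuine)     # genuine trials rejected
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, best_eer, best_t = gap, (far + frr) / 2, t
    return best_eer, best_t


# Toy scores (illustrative only, not from the paper).
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.6, 0.3, 0.2, 0.1]
eer, threshold = equal_error_rate(genuine_scores, impostor_scores)
print(f"EER = {100 * eer:.2f}% at threshold {threshold}")  # EER = 25.00% at threshold 0.6
```

With finite score lists, FAR and FRR move in discrete steps, so they may never be exactly equal; averaging the two rates at the closest threshold is one common convention. Interpolating the ROC curve is another.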

Table 1.

Emirati Speech Dataset.

Table 2.

Speaker verification performance in terms of EER and AUC of CNN, the original CapsNet, and the modified CapsNet using the Emirati dataset.

| Emotion | CNN EER (%) | CNN AUC | Original CapsNet EER (%) | Original CapsNet AUC | Modified CapsNet EER (%) | Modified CapsNet AUC |
|---|---|---|---|---|---|---|
| Neutral | 9.57 | 0.95 | 8.33 | 0.96 | 7.02 | 0.98 |
| Anger | 17.89 | 0.82 | 14.54 | 0.85 | 12.41 | 0.87 |
| Happy | 15.77 | 0.85 | 12.10 | 0.88 | 9.20 | 0.91 |
| Sad | 17.40 | 0.83 | 15.05 | 0.85 | 12.00 | 0.88 |
| Fear | 16.14 | 0.84 | 13.74 | 0.86 | 10.00 | 0.90 |
| Disgust | 17.85 | 0.82 | 14.00 | 0.86 | 11.04 | 0.89 |
| Average | 15.77 | 0.85 | 11.29 | 0.88 | 10.50 | 0.91 |
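Alongside the EER, Tables 2, 4, 6, and 8 report the area under the ROC curve (AUC). The AUC equals the probability that a randomly chosen genuine trial scores higher than a randomly chosen impostor trial, with ties counted as one half, so it can be computed directly from pairwise comparisons. The sketch below does this. The function name `roc_auc` and the toy scores are illustrative; the paper does not state how its AUC values were computed.

```python
def roc_auc(genuine, impostor):
    """ROC AUC as the Mann-Whitney probability P(genuine score > impostor score).

    Every (genuine, impostor) pair is compared; a tie counts as half a win.
    Cost is O(len(genuine) * len(impostor)), which is fine for small lists.
    """
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    wins = 0.0
    for g in genuine:
        for i in impostor:
            if g > i:
                wins += 1.0
            elif g == i:
                wins += 0.5
    return wins / (len(genuine) * len(impostor))


# Toy scores (illustrative only): 15 of the 16 pairs are ordered correctly.
print(roc_auc([0.9, 0.8, 0.7, 0.4], [0.6, 0.3, 0.2, 0.1]))  # 0.9375
```

For large trial lists, a rank-based formulation (sort all scores once, then sum the ranks of the genuine trials) gives the same value in O(n log n).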

Table 3.

Percentage EER of the SVM, MLP, and KNN classifiers on the ESD database.

| Emotion | SVM | MLP | KNN |
|---|---|---|---|
| Neutral | 9.00 | 10.00 | 19.00 |
| Anger | 29.00 | 37.00 | 42.00 |
| Happy | 21.00 | 23.00 | 35.00 |
| Sad | 25.00 | 25.00 | 45.00 |
| Fear | 24.00 | 23.00 | 45.00 |
| Disgust | 15.00 | 16.00 | 29.00 |
| Average | 20.50 | 22.33 | 35.83 |
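The Average rows appear to be the unweighted mean of the six per-condition values. The tables do not state this, so treat it as an assumption. The sketch below recomputes the Average row of Table 3 from its six rows; the names `TABLE3_EER` and `average_row` are hypothetical.

```python
from statistics import mean

# Percentage EERs from Table 3 (ESD), ordered Neutral, Anger, Happy, Sad, Fear, Disgust.
TABLE3_EER = {
    "SVM": [9.00, 29.00, 21.00, 25.00, 24.00, 15.00],
    "MLP": [10.00, 37.00, 23.00, 25.00, 23.00, 16.00],
    "KNN": [19.00, 42.00, 35.00, 45.00, 45.00, 29.00],
}


def average_row(table, decimals=2):
    """Unweighted mean of each column's per-condition values, rounded."""
    return {name: round(mean(values), decimals) for name, values in table.items()}


print(average_row(TABLE3_EER))  # {'SVM': 20.5, 'MLP': 22.33, 'KNN': 35.83}
```

Because every condition contributes equally, a single hard condition (e.g., anger) shifts the average as much as the neutral baseline does. A weighted mean would instead need per-condition trial counts, which these tables do not list.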

Table 4.

Speaker verification performance in terms of EER and AUC of CNN, original CapsNet, and the proposed modified CapsNet using Crema-D.

| Emotion | CNN EER (%) | CNN AUC | Original CapsNet EER (%) | Original CapsNet AUC | Modified CapsNet EER (%) | Modified CapsNet AUC |
|---|---|---|---|---|---|---|
| Neutral | 21.94 | 0.86 | 15.00 | 0.82 | 10.10 | 0.91 |
| Anger | 38.54 | 0.66 | 20.92 | 0.75 | 23.72 | 0.77 |
| Happy | 32.29 | 0.73 | 17.00 | 0.80 | 17.00 | 0.83 |
| Sad | 32.33 | 0.74 | 18.50 | 0.78 | 20.00 | 0.80 |
| Fear | 38.54 | 0.69 | 20.00 | 0.76 | 21.14 | 0.79 |
| Disgust | 34.38 | 0.72 | 21.41 | 0.76 | 23.00 | 0.77 |
| Average | 32.99 | 0.73 | 18.81 | 0.78 | 19.14 | 0.82 |

Table 5.

Percentage EER of the SVM, MLP, and KNN classifiers on Crema-D.

| Emotion | SVM | MLP | KNN |
|---|---|---|---|
| Neutral | 22.00 | 30.00 | 44.00 |
| Anger | 40.00 | 47.00 | 50.00 |
| Happy | 35.00 | 47.00 | 53.00 |
| Sad | 32.00 | 47.00 | 54.00 |
| Fear | 38.00 | 50.00 | 53.00 |
| Disgust | 37.00 | 46.00 | 52.00 |
| Average | 34.00 | 44.50 | 51.00 |

Table 6.

Speaker verification performance in terms of EER and AUC of each of CNN, original CapsNet, and the proposed model using the SUSAS database.

| Talking condition | CNN EER (%) | CNN AUC | Original CapsNet EER (%) | Original CapsNet AUC | Modified CapsNet EER (%) | Modified CapsNet AUC |
|---|---|---|---|---|---|---|
| Neutral | 26.67 | 0.78 | 9.71 | 0.90 | 5.50 | 0.96 |
| Anger | 46.67 | 0.55 | 18.88 | 0.81 | 13.09 | 0.87 |
| Fast | 33.33 | 0.64 | 12.00 | 0.88 | 7.57 | 0.93 |
| Slow | 46.67 | 0.56 | 13.96 | 0.86 | 10.50 | 0.91 |
| Soft | 45.00 | 0.51 | 15.50 | 0.85 | 11.00 | 0.89 |
| Lombard | 46.67 | 0.49 | 15.00 | 0.85 | 9.85 | 0.90 |
| Average | 40.83 | 0.59 | 14.18 | 0.86 | 9.59 | 0.91 |

Table 7.

Percentage EER of the SVM, MLP, and KNN classifiers on the SUSAS database.

| Talking condition | SVM | MLP | KNN |
|---|---|---|---|
| Neutral | 6.00 | 10.00 | 27.00 |
| Anger | 78.00 | 87.00 | 61.00 |
| Fast | 20.00 | 22.00 | 46.00 |
| Slow | 23.00 | 30.00 | 49.00 |
| Soft | 42.00 | 42.00 | 58.00 |
| Lombard | 70.00 | 69.00 | 60.00 |
| Average | 39.83 | 43.33 | 50.15 |

Table 8.

Speaker verification performance in terms of EER and AUC of each of CNN, original CapsNet and the proposed modified CapsNet using the RAVDESS database.

| Emotion | CNN EER (%) | CNN AUC | Original CapsNet EER (%) | Original CapsNet AUC | Modified CapsNet EER (%) | Modified CapsNet AUC |
|---|---|---|---|---|---|---|
| Neutral | 22.92 | 0.88 | 15.00 | 0.85 | 9.03 | 0.91 |
| Anger | 36.46 | 0.66 | 20.92 | 0.78 | 16.50 | 0.83 |
| Happy | 31.25 | 0.71 | 17.00 | 0.83 | 12.70 | 0.88 |
| Sad | 12.50 | 0.87 | 18.50 | 0.81 | 13.11 | 0.87 |
| Fear | 31.25 | 0.67 | 20.00 | 0.80 | 15.51 | 0.85 |
| Disgust | 31.25 | 0.81 | 21.41 | 0.77 | 14.50 | 0.85 |
| Average | 27.60 | 0.77 | 18.81 | 0.81 | 13.56 | 0.87 |

Table 9.

Percentage EER of the SVM, MLP, and KNN classifiers on the RAVDESS database.

| Emotion | SVM | MLP | KNN |
|---|---|---|---|
| Neutral | 7.00 | 2.00 | 4.00 |
| Anger | 51.00 | 57.00 | 62.00 |
| Happy | 45.00 | 47.00 | 46.00 |
| Sad | 41.00 | 47.00 | 40.00 |
| Fear | 54.00 | 59.00 | 63.00 |
| Disgust | 64.00 | 61.00 | 64.00 |
| Average | 43.66 | 44.50 | 46.50 |

Table 10.

p-values between the proposed model and each of CNN and the original CapsNet.

| Database | CNN | Original CapsNet |
|---|---|---|
| ESD | 0.027 | 0.027 |
| CREMA-D | 0.027 | 0.500 |
| SUSAS | 0.027 | 0.027 |
| RAVDESS | 0.046 | 0.027 |
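Table 10 does not name the test behind these p-values. One reading that fits the numbers is a two-sided Wilcoxon signed-rank test on the six paired per-condition EERs of each database, using the normal approximation with zero differences dropped and no continuity correction. This is an assumption, not the authors' stated procedure. The stdlib-only sketch below implements that test; the function name `wilcoxon_signed_rank` is illustrative. On the ESD EERs of Table 2 (CNN versus the modified CapsNet) it gives p ≈ 0.0277. For the RAVDESS (CNN) and CREMA-D (original CapsNet) comparisons it gives p ≈ 0.046 and p ≈ 0.50, in line with the tabulated entries. SciPy's `scipy.stats.wilcoxon` is the usual library route; the version here keeps the arithmetic visible.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test with the normal approximation.

    Zero differences are discarded, tied |differences| get average ranks,
    and no continuity or tie-variance correction is applied.
    Returns (W, p), where W is the smaller of the two signed-rank sums.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank |d| from smallest to largest, averaging ranks within tied groups.
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        while end + 1 < n and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1  # 1-based average rank
        start = end + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)

    # Normal approximation to the null distribution of W.
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w, p


# Per-condition EERs (%) from Table 2 (ESD), Neutral through Disgust.
esd_cnn = [9.57, 17.89, 15.77, 17.40, 16.14, 17.85]
esd_modified = [7.02, 12.41, 9.20, 12.00, 10.00, 11.04]
w, p = wilcoxon_signed_rank(esd_cnn, esd_modified)
print(f"W = {w}, p = {p:.4f}")
```

With only six pairs per database, the normal approximation is coarse. Under the exact null distribution, the smallest attainable two-sided p-value for n = 6 is 2/2^6 = 0.03125.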

Table 11.

Speaker verification testing times (in seconds) of the different classifiers on ESD, CREMA-D, SUSAS, and RAVDESS.

| Classifier | ESD | CREMA-D | SUSAS | RAVDESS |
|---|---|---|---|---|
| DC-LSTM-COMP CapsNet | 4.0092 | 4.912 | 3.0001 | 2.3900 |
| Original CapsNet | 4.0097 | 4.954 | 3.0010 | 2.5100 |
| CNN | 4.613 | 4.998 | 3.0010 | 2.7814 |
| SVM | 8.808 | 6.790 | 4.564 | 18.441 |
| MLP | 4.719 | 4.453 | 3.282 | 15.609 |
| KNN | 12.441 | 7.281 | 5.783 | 24.37 |

Table 12.

Comparison with related work.

| References | Talking Condition | Database | Classifier | EER of Previous Work | EER of the Proposed Model | Percentage Decrease in Error Rate |
|---|---|---|---|---|---|---|
| [45] | Neutral | Emirati | HMM1 | 11.5% | 7.02% | 38.95% |
| [45] | Neutral | Emirati | HMM2 | 9.6% | 7.02% | 26.87% |
| [2] | Emotional | Emirati | CHMM3 | 29% | 10.93% | 62.31% |
| [2] | Emotional | Emirati | CSPHMM | 21.75% | 10.93% | 49.74% |
| [20] | Emotional | RAVDESS | DNN-GMM | 20.83% | 13.56% | 34.90% |
| [20] | Emotional | RAVDESS | GMM-DNN | 23.95% | 13.56% | 43.38% |
| [20] | Emotional | RAVDESS | HMM-DNN | 17.70% | 13.56% | 23.38% |
| [20] | Emotional | RAVDESS | DNN-HMM | 23.26% | 13.56% | 41.70% |
| [20] | Stressful | SUSAS | GMM-DNN | 10.02% | 9.59% | 4.29% |
| [20] | Stressful | SUSAS | DNN-HMM | 19.98% | 9.59% | 52.00% |
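The last column of Table 12 is consistent with the relative reduction of the previous EER, (EER_previous − EER_proposed) / EER_previous × 100. Recomputing it this way matches every row to within 0.01 percentage points. The following is a minimal sketch of that arithmetic; the function name `percentage_decrease` is hypothetical.

```python
def percentage_decrease(previous_eer, proposed_eer):
    """Relative EER reduction (%) of the proposed model versus previous work."""
    if previous_eer <= 0:
        raise ValueError("previous EER must be positive")
    return (previous_eer - proposed_eer) / previous_eer * 100.0


# (previous EER, proposed EER) pairs taken from Table 12, in percent.
for previous, proposed in [(29.0, 10.93), (20.83, 13.56), (10.02, 9.59)]:
    decrease = percentage_decrease(previous, proposed)
    print(f"{previous:.2f}% -> {proposed:.2f}%: {decrease:.2f}% decrease")
```

The reduction is relative rather than absolute. For example, going from 10.02% to 9.59% is an absolute drop of only 0.43 percentage points, which corresponds to a 4.29% relative decrease.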

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).