1. Introduction

Many modern applications involve vast amounts of data that need to be classified into increasingly complex categorization schemes in which one data instance may simultaneously belong to several topics. This task is typically termed multi-label learning [1,2]. In contrast with single-label classification, where each instance is associated with only one class, multi-label classification is concerned with learning from instances that can each be associated with multiple labels. Many multi-label learning algorithms have been developed and applied to diverse problems: text categorization [3,4], the automatic annotation of multimedia contents [5], web mining [6], rule mining [7], cheminformatics [8], bioinformatics [9], information retrieval [10] and scientific applications [11], among others.

Examples of multi-label problems appear in almost any application field. One example is extracting the aspects of restaurant reviews from social network comments, where the author of the text may mention none, some or all of the aspects in a preset list, such as service, food, anecdotes, price and ambiance. Similarly, when classifying text by topic, any text can contain one or several topics. In predicting the subcellular localization of proteins [12], each protein can be localized in more than one part of the cell. In sentiment analysis, a text can be classified as containing more than one sentiment at the same time [13].

The key challenge of multi-label learning is taking advantage of the correlations among labels to address the exponential growth of the label space as the number of distinct labels increases: when the number of distinct labels is $q$, the label space contains $2^q$ possible label sets. One of the best-performing methods in multi-label learning is the Multi-Label k-Nearest Neighbors (ML-kNN) method [14], an adaptation of the k-nearest neighbors rule to multi-label datasets. There are other adaptations of k-NN based on a binary relevance approach, where the base classifier is a k-NN rule [15]. Any advance in the ML-kNN method is relevant, as this method achieves very good performance, improving on the results of much more complex algorithms [16].

ML-kNN uses the k nearest neighbors of every instance to estimate the a posteriori probability of each label. However, labels are distributed very differently across a particular dataset [17]. The algorithm is less accurate when the label frequencies in the training set are imbalanced and when the training instances are unevenly distributed in space [18]. In those cases, using the same value of k for all labels might be suboptimal. The multi-label nature of ML-kNN does not depend on using the same k for all labels, so we propose the use of a different k for each label. We name this method “Multi-Label k’s-Nearest Neighbors” (ML-k’sNN).

In ML-k’sNN, instead of using a single k value for all labels, we use a vector $\mathbf{k} = (k_1, k_2, \dots, k_q)$, where $k_l$ is the number of neighbors used for label $l$. To derive a useful approach, a method for obtaining $\mathbf{k}$ must be devised. We state the problem as an optimization task and propose three different algorithms. The contribution of our paper is twofold. Firstly, we have devised a new definition of ML-kNN adapted to the use of a different value of k for each label. Secondly, we have developed a method for obtaining the optimal value of k for every label. On a large set of 40 problems, we compare our method with two different implementations of standard ML-kNN and achieve a significant improvement. A further experiment compares our proposal with ten different variants of ML-kNN. We have also studied the behavior of our proposal depending on characteristics of the datasets such as the number of features, the number of labels and label diversity.

Furthermore, we have combined ML-k’sNN with instance selection and shown that our proposal achieves better results than standard ML-kNN with a reduction above 95%. Finally, we have also shown that our method can be adapted to other instance-based multi-label methods, such as LAML-kNN, with very good results.

The remainder of this paper is organized as follows: Section 2 describes the existing related work; Section 3 describes our proposal in depth; Section 4 explains the experimental setup; Section 5 presents and discusses the experimental results; and, finally, Section 6 summarizes the conclusions of our work.

2. Related Work

Since ML-kNN was first developed [14], several modifications and improvements have been proposed. Xu [19] proposed an adaptive method based on quadratic programming to weight the votes of the different neighbors. Wang et al. [20] proposed a locally adaptive multi-label k-nearest neighbor (LAML-kNN) method, where the instances are first clustered into different groups and then each cluster is processed separately to obtain the a posteriori probabilities. Jiang et al. [18] developed a weighted modification of ML-kNN where different weights are assigned to each label according to the proportion of labels and the mutual information regarding the spatial distribution of unseen instances relative to training instances. The authors state that this method can reduce the probability of misjudging the label set of an unseen instance. This method can easily be adapted to our proposal by using a different value of k for each label together with the corresponding label weight. Wang et al. [21] proposed a multi-label classification algorithm based on k-nearest neighbors and random walk. This method builds a random walk graph whose vertex set consists of the k nearest training samples of a given test instance and whose edge set represents the correlations among the labels of those training samples. Although this method could also be adapted to our philosophy, its computational cost would be too high, as the original method already has serious scalability problems.

Other methods have been developed to improve ML-kNN. Younes et al. [22] proposed a dependent multi-label k-nearest neighbors (DML-kNN) algorithm to take label association into account. When predicting the relevance of a label $\lambda_l$ for a new instance $x$, DML-kNN uses not only label $\lambda_l$ but also all the other labels $\lambda_m$, $m \neq l$, of the instances in the neighborhood of $x$. As with the previous two methods, DML-kNN can be adapted to use label-dependent k values with almost no modifications. Pakrashi and Mac Namee [23] proposed Stacked-MLkNN, which follows the stacking methodology [24].

Using a fuzzy approach, Lin and Chen [25] developed Mr.KNN (Soft Relevance for Multi-label Classification), which combines a fuzzy c-means algorithm with a voting mechanism based on the k-nearest neighbors of every sample. The voting stage of this method can also be adapted to our label-dependent approach. Vluymans et al. [26] developed a fuzzy rough multi-label classifier, FRONEC (fuzzy rough neighborhood consensus).

On a different task, the concept of label specificity has also been applied to feature selection for multi-label datasets. Hang and Zhang [27] developed a method called Collaborative Learning of label semantIcs and deep label-specific Features (CLIF) for multi-label classification.

As shown above, one of the advantages of our proposal is that, as it modifies the basic definition of the standard ML-kNN method, it can be applied to any other implementation of the method, such as those cited above. In the experiments, we apply it to standard ML-kNN and to LAML-kNN. Although it could be applied to other ML-kNN methods, we restrict ourselves to these two implementations to avoid overly repetitive experiments.

3. Multi-Label k’s-Nearest Neighbors (ML-k’sNN)

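Formally, we define a multi-label problem as follows [2]. Let $T$ be a multi-label evaluation dataset consisting of $p$ multi-label instances $x_i$ and their associated label sets $Y_i$, $T = \{(x_i, Y_i) \mid 1 \le i \le p\}$, where $x_i \in \mathcal{X}$ and $Y_i \subseteq L$, with a label set $L = \{\lambda_1, \lambda_2, \dots, \lambda_q\}$, where $|L| = q$. Let $h$ be a multi-label classifier and $h(x_i) \subseteq L$ be the set of labels predicted by $h$ for the instance $x_i$. Let $f: \mathcal{X} \times L \to \mathbb{R}$ be a real-valued function, where $f(x_i, \lambda)$ expresses the confidence that label $\lambda$ is relevant for $x_i$. A successful learning system would tend to output larger values of $f$ for the labels in $Y_i$ than for those not in $Y_i$. The real-valued function $f$ can easily be transformed into a ranking function, $\mathrm{rank}_f(x_i, \lambda)$, where $\mathrm{rank}_f(x_i, \lambda)$ is the predicted rank of label $\lambda$ for instance $x_i$. For example, if $f$ represents the probability of every label being relevant for the instance, which is the case for many multi-label classifiers, $\mathrm{rank}_f(x_i, \lambda)$ can be obtained by assigning to $\lambda$ its position within the values of $f(x_i, \cdot)$ sorted in decreasing order. The prediction $h(x_i)$ can be obtained from $f(x_i, \cdot)$ when an appropriate threshold is set.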

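As an example, consider a problem with $q = 5$ labels, $L = \{\lambda_1, \dots, \lambda_5\}$, where each instance $x_i \in \mathbb{R}^d$ is a sample of a certain dimension $d$, and $Y_i = \{\lambda_2, \lambda_3\}$. This label set means that labels 2 and 3 are relevant for instance $x_i$ and labels 1, 4 and 5 are not relevant.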
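To make the relation between $f$, its ranking and the thresholded prediction $h$ concrete, the following Python sketch takes hypothetical scores for the five-label example, ranks the labels by decreasing score and applies a threshold. The function names and the 0.5 threshold are illustrative assumptions (0.5 is the natural choice when $f$ is a posterior probability, as in ML-kNN); ties in $f$ are broken by label index.

```python
import numpy as np

def rank_from_scores(f):
    """rank_f: 1 for the label with the largest score, q for the smallest.
    Ties are broken by label index (stable sort)."""
    order = np.argsort(-f, kind="stable")   # label indices, most relevant first
    ranks = np.empty(len(f), dtype=int)
    ranks[order] = np.arange(1, len(f) + 1)
    return ranks

def predict_labels(f, threshold=0.5):
    """h(x): 1-based indices of the labels whose score exceeds the threshold."""
    return [l + 1 for l in range(len(f)) if f[l] > threshold]

# Hypothetical scores f(x_i, lambda_l) for the q = 5 labels of the example
f = np.array([0.10, 0.85, 0.60, 0.30, 0.05])
print(rank_from_scores(f))   # [4 1 2 3 5]
print(predict_labels(f))     # [2, 3], i.e. Y_i = {lambda_2, lambda_3}
```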

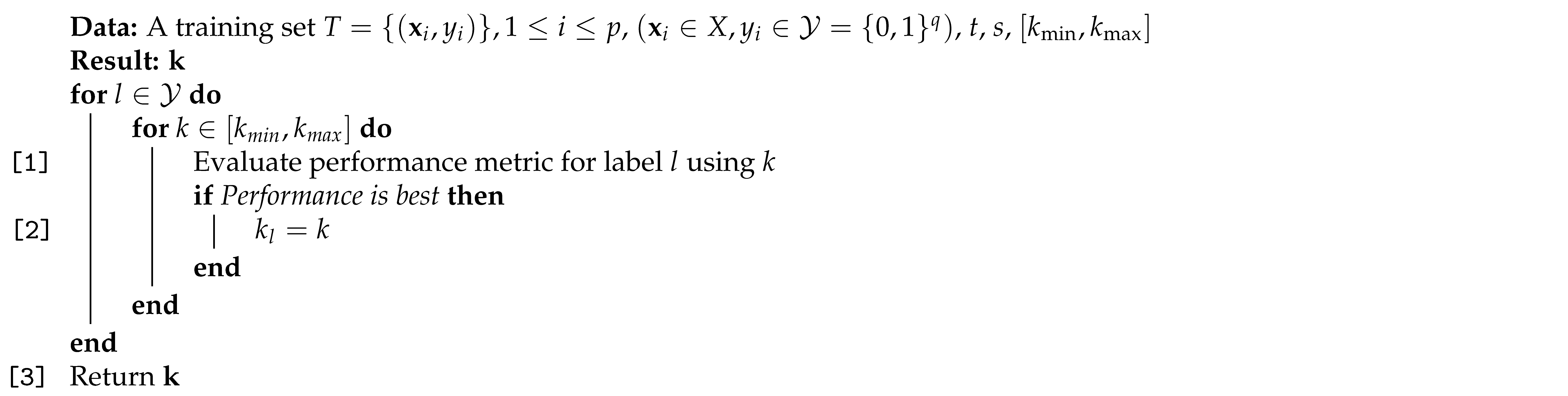

Our method is based on two different ideas. First, we modify ML-kNN to use a different value, $k_l$, for the prediction of the $l$-th label. The use of multiple values for the number of neighbors, one per label, does not modify the computational cost of the method at the testing stage, as both ML-kNN and ML-k’sNN must obtain the distance from the query instance to all the prototypes. The second idea is a way to obtain the value $k_l$ for each label. The first idea is rather straightforward: the modification affects only the number of neighbors considered when obtaining the different probabilities for each label. Algorithm 1 depicts the proposed approach. Thus, once the vector of values $\mathbf{k} = (k_1, \dots, k_q)$ is obtained, the algorithm is similar to ML-kNN. We term our method Multi-Label k’s-Nearest Neighbors (ML-k’sNN) because it uses a variety of k values instead of just one. Hence, there is no ML-4’sNN in the sense that there is an ML-4NN, as the number of different k’s depends on the number of labels.

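As in the standard ML-kNN algorithm, $P(H_1^l)$ ($P(H_0^l)$) is the prior probability of label $l$ being relevant (irrelevant), $P(H_1^l \mid E_C^l)$ ($P(H_0^l \mid E_C^l)$) is the posterior probability of label $l$ being relevant (irrelevant) given the event $E_C^l$ that exactly $C$ of the considered neighbors have label $l$, and $N_{k_j}(x)$ is the set of the $k_j$ nearest neighbors of $x$ used for label $j$.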

Algorithm 1: ML-k’sNN: Multi-label k’s nearest neighbors algorithm.
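The pseudocode of Algorithm 1 is not reproduced in this text, so the following is only a minimal NumPy sketch of the idea as described above, not the authors' C implementation. It assumes Euclidean distance, a binary label matrix and the Laplace smoothing constant $s = 1$ of the original ML-kNN; the class `MLksNN` and its methods are hypothetical names. The only change with respect to ML-kNN is that label $l$ counts neighbors among the first $k_l$ entries of a single distance ranking.

```python
import numpy as np

def sq_dists(A, B):
    """Squared Euclidean distances between the rows of A and the rows of B."""
    return ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=2)

class MLksNN:
    """Sketch of ML-kNN with one number of neighbors per label (ML-k'sNN)."""

    def __init__(self, ks, s=1.0):
        self.ks = np.asarray(ks, dtype=int)  # ks[l] = neighbors used for label l
        self.s = s                           # Laplace smoothing (s = 1 in ML-kNN)

    def fit(self, X, Y):
        self.X = np.asarray(X, dtype=float)
        self.Y = np.asarray(Y, dtype=int)    # n x q binary label matrix
        n, q = self.Y.shape
        s, kmax = self.s, int(self.ks.max())
        # Priors P(H_1^l): smoothed label frequencies
        self.prior1 = (s + self.Y.sum(axis=0)) / (2 * s + n)
        # One neighbor ranking per training instance (itself excluded)
        D = sq_dists(self.X, self.X)
        np.fill_diagonal(D, np.inf)
        nbrs = np.argsort(D, axis=1)[:, :kmax]
        # Likelihoods P(E_c^l | H_b^l) for c = 0..k_l neighbors having label l
        self.like1 = np.zeros((q, kmax + 1))
        self.like0 = np.zeros((q, kmax + 1))
        for l in range(q):
            k = self.ks[l]
            c = self.Y[nbrs[:, :k], l].sum(axis=1)  # only the first k_l neighbors
            c1 = np.bincount(c[self.Y[:, l] == 1], minlength=k + 1)
            c0 = np.bincount(c[self.Y[:, l] == 0], minlength=k + 1)
            self.like1[l, :k + 1] = (s + c1) / (s * (k + 1) + c1.sum())
            self.like0[l, :k + 1] = (s + c0) / (s * (k + 1) + c0.sum())
        return self

    def predict_proba(self, Xt):
        """Posterior P(H_1^l | E_c^l) for every test instance and label."""
        D = sq_dists(np.asarray(Xt, dtype=float), self.X)
        nbrs = np.argsort(D, axis=1)[:, :int(self.ks.max())]
        P = np.zeros((len(D), self.Y.shape[1]))
        for l, k in enumerate(self.ks):
            c = self.Y[nbrs[:, :k], l].sum(axis=1)
            num1 = self.prior1[l] * self.like1[l, c]
            num0 = (1 - self.prior1[l]) * self.like0[l, c]
            P[:, l] = num1 / (num1 + num0)
        return P

    def predict(self, Xt):
        """h(x): label l is relevant when its posterior exceeds 0.5."""
        return (self.predict_proba(Xt) > 0.5).astype(int)

# Toy data: two clusters, label 1 on the first one, label 2 on the second
X = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]])
Y = np.array([[1, 0], [1, 0], [1, 0], [0, 1], [0, 1], [0, 1]])
model = MLksNN(ks=[1, 2]).fit(X, Y)   # k_1 = 1, k_2 = 2
print(model.predict(np.array([[0.2, 0.2], [5.5, 5.5]])).tolist())  # [[1, 0], [0, 1]]
```

Sorting the neighbors once, up to $\max_l k_l$, and slicing that ranking per label is what keeps the testing cost equal to that of ML-kNN, as argued in the text.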

Once we have defined the new algorithm, we must establish how $\mathbf{k}$ is obtained. In standard ML-kNN, k is either fixed arbitrarily or obtained using cross-validation. Letting the expert fix an a priori value of $k_l$ for every label is clearly unreasonable, as there is no way of knowing the optimal value for every label. Thus, we must design a method that establishes the best values for $\mathbf{k}$. We define this task as an optimization problem.

This optimization problem is defined as follows. First, we set an interval from which the values of $k_l$ are chosen, $k_l \in [k_{\min}, k_{\max}]$. Then, a certain metric, $m$ (for multi-label performance metrics, refer to Zhang and Zhou [2]), is chosen to evaluate each vector of $k_l$s. Our algorithm must optimize $m$ over all possible values of $\mathbf{k}$. As a base algorithm, we first define a single-label approach to the problem. In this algorithm, we obtain $k_l$ as the optimal value of k for a standard single-label k-NN algorithm used to classify only label $\lambda_l$. This single-label approach is named ML-k’sNN.SL (shown in Algorithm 2). However, we must bear in mind that this algorithm does not optimize the value of metric $m$, as it is based on a single-label approach.

Algorithm 2: ML-k’sNN.SL: Multi-label k’s nearest neighbors algorithm, single-label approach.
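Algorithm 2 is likewise not reproduced in this text. One possible reading of the single-label approach is sketched below: for each label, a plain binary k-NN majority vote is evaluated with leave-one-out over a grid of candidate k values, and the best k becomes $k_l$. Leave-one-out accuracy as the selection criterion, the candidate grid and breaking ties toward the first candidate are all assumptions of this sketch, and the function names are hypothetical.

```python
import numpy as np

def loo_knn_accuracy(order, y, k):
    """Leave-one-out accuracy of a binary k-NN majority vote on one label.

    order: training indices sorted by distance for every instance (self excluded)
    y:     0/1 values of this label for the training instances
    """
    votes = y[order[:, :k]].mean(axis=1)
    pred = (votes > 0.5).astype(int)        # a tied vote counts as "irrelevant"
    return (pred == y).mean()

def select_ks_single_label(X, Y, k_values):
    """For each label, the k that maximizes single-label k-NN accuracy on it."""
    X = np.asarray(X, dtype=float)
    D = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    np.fill_diagonal(D, np.inf)             # an instance is not its own neighbor
    order = np.argsort(D, axis=1)[:, :-1]   # drop self, which is sorted last
    ks = []
    for l in range(Y.shape[1]):
        scores = [loo_knn_accuracy(order, Y[:, l], k) for k in k_values]
        ks.append(k_values[int(np.argmax(scores))])   # first best k on ties
    return ks

X = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]])
Y = np.array([[1, 0], [1, 0], [1, 0], [0, 1], [0, 1], [0, 1]])
print(select_ks_single_label(X, Y, [1, 3, 5]))   # [1, 1]
```

Because each $k_l$ is tuned only for its own binary problem, nothing in this loop looks at the multi-label metric $m$, which is the limitation noted above.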

Thus, we must devise a multi-label approach. However, when the optimization problem is addressed as a multi-label problem, the method for obtaining the vector $\mathbf{k}$ depends on the metric. For multi-label classification metrics, the prediction of whether a certain label $\lambda_l$ is relevant to a given instance depends only on the value of $k_l$. Thus, we can optimize the value of every $k_l$ independently, and this guarantees the optimal value. However, for label ranking, the position of a label for an instance depends on all the values of $\mathbf{k}$. In that case, we cannot obtain the optimal values of $k_l$ by searching independently over each label.

When the optimization target is a classification metric, we must bear in mind that, for every instance $x$, we use the classification given by $h(x)$ as defined in Algorithm 1. The result of the testing stage of the algorithm is the prediction of relevant and irrelevant labels for a test instance $x_t$, $h(x_t)$, and the ranking function for the query instance, $\mathrm{rank}_f(x_t, \cdot)$, as defined above. Algorithm 1 shows that $h(x_t)$ depends only on $k_l$ to predict whether label $l$ is relevant, and that this prediction does not depend on $k_j$ for $j \neq l$. This observation means that any classification metric used for multi-label problems can be optimized by obtaining the optimal value of every $k_l$ independently. Thus, a simple way to optimize the given metric is to carry out a search for every $k_l$ separately. This method is fast and has the additional advantage of being easy to parallelize. The method is outlined in Algorithm 3 and is named ML-k’sNN.ML, where ML stands for multi-label. The additional calculation of the optimal k for every label has a computational cost comparable to that of obtaining the optimal k for standard ML-kNN using cross-validation.

Algorithm 3: ML-k’sNN.ML: Multi-label k’s nearest neighbors algorithm, multi-label approach.

In several cases, different values of $k_l$ achieve the same value of the metric used. In such cases, we select, from among the equally best k’s, the one nearest to the best value for ML-kNN, obtained by 10-fold cross-validation.
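The independent search, including the tie-breaking rule just described, can be sketched as follows. Since Algorithm 3 is not reproduced here, the details are assumptions: validation predictions are precomputed for each candidate k, label $l$ is searched while the other labels keep the cross-validated ML-kNN value (`k_base`), and `hamming_score` is only a stand-in for the metric $m$. All names and the toy predictions are hypothetical.

```python
import numpy as np

def select_ks_per_label(pred_by_k, Y_val, metric, k_base):
    """Independent search of k_l for each label (sketch of the ML-k'sNN.ML idea).

    pred_by_k: dict k -> (n, q) 0/1 validation predictions obtained with that k
               for every label (label l only depends on the k used for label l)
    metric:    function(Y_true, Y_pred) -> score, higher is better
    k_base:    best single k of ML-kNN; the other labels keep it while label l
               is searched, and ties are broken toward the k closest to it
    """
    ks = []
    for l in range(Y_val.shape[1]):
        best_k, best_score = None, -np.inf
        for k in sorted(pred_by_k):
            pred = pred_by_k[k_base].copy()
            pred[:, l] = pred_by_k[k][:, l]      # only label l uses the candidate k
            score = metric(Y_val, pred)
            closer = best_k is None or abs(k - k_base) < abs(best_k - k_base)
            if score > best_score or (score == best_score and closer):
                best_k, best_score = k, score
        ks.append(best_k)
    return ks

# Hypothetical validation predictions for three candidate values of k
Y_val = np.array([[1, 0], [0, 1], [1, 1]])
pred_by_k = {
    1: np.array([[1, 0], [0, 0], [1, 1]]),
    3: np.array([[0, 0], [0, 1], [1, 1]]),
    4: np.array([[1, 0], [0, 1], [1, 0]]),
}
hamming_score = lambda Yt, Yp: (Yt == Yp).mean()   # fraction of correct label decisions
print(select_ks_per_label(pred_by_k, Y_val, hamming_score, k_base=3))   # [4, 3]
```

For label 1, k = 1 and k = 4 tie; k = 4 is kept because it is closer to `k_base` = 3. Each label's loop is independent of the others, which is what makes the search easy to parallelize.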

However, if our optimization target is, or contains, a ranking metric, this procedure cannot be used. Although the prediction of every label is independent of the prediction of the rest of the labels in ML-kNN, the ranking of the labels does depend on all the obtained values. In such a case, an independent search for each $k_l$ cannot be performed, and we must search for the optimal vector of k values, $\mathbf{k}$. That is, we must optimize metric $m$ over all possible vectors of k’s, $\mathbf{k} = (k_1, \dots, k_q)$, with $k_l \in [k_{\min}, k_{\max}]$. Due to the size of the search space, an exhaustive search such as the one used in ML-k’sNN.ML is not feasible.
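A quick count makes the infeasibility argument concrete. With $K = k_{\max} - k_{\min} + 1$ candidate values per label, the joint search ranges over every vector in $[k_{\min}, k_{\max}]^q$, whereas the independent search evaluates each label separately. The figures $K = 50$ and $q = 20$ below are illustrative assumptions, not values taken from the experiments:

$$
\underbrace{K^{q}}_{\text{joint search}} = 50^{20} \approx 9.5 \times 10^{33}
\qquad\text{vs.}\qquad
\underbrace{q\,K}_{\text{independent search}} = 20 \cdot 50 = 1000 .
$$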

In such complex optimization processes, evolutionary computation has proven to be a very efficient tool. We propose a simple evolutionary algorithm where each solution is encoded as a vector of integer values. The fitness of every individual is obtained using the method shown in Algorithm 1. New individuals were generated using BLX-$\alpha$ crossover [28], and no mutation was applied. We intentionally use a very simple evolutionary method to avoid obscuring the results of our approach with a very complex optimization process. This method is named ML-k’sNN.EC, where EC stands for evolutionary computation.
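BLX-α is defined for real-valued genes, so some adaptation is needed for integer k vectors. The sketch below shows one plausible adaptation: each child gene is drawn uniformly from the parents' interval extended by α times its width, then rounded and clipped to $[k_{\min}, k_{\max}]$. The rounding and clipping steps, the value α = 0.5 in the example and the function name are assumptions; the text only states that BLX-α was used without mutation.

```python
import numpy as np

def blx_alpha_int(parent1, parent2, alpha, k_min, k_max, rng):
    """BLX-alpha crossover for integer k vectors (rounded and clipped).

    Gene i of the child is drawn uniformly from
    [lo_i - alpha * I_i, hi_i + alpha * I_i], where lo_i and hi_i are the
    parents' minimum and maximum and I_i = hi_i - lo_i.
    """
    p1 = np.asarray(parent1, dtype=float)
    p2 = np.asarray(parent2, dtype=float)
    lo, hi = np.minimum(p1, p2), np.maximum(p1, p2)
    span = hi - lo
    child = rng.uniform(lo - alpha * span, hi + alpha * span)
    # Round to the nearest integer and keep k inside the allowed interval.
    return np.clip(np.rint(child), k_min, k_max).astype(int)

rng = np.random.default_rng(0)
child = blx_alpha_int([3, 10, 25], [5, 10, 40], alpha=0.5, k_min=1, k_max=50, rng=rng)
print(child)   # one integer k per label; the equal parent genes (10) are inherited
```

Because α > 0 lets children fall slightly outside the parents' range, the operator keeps some exploration even without mutation, which fits the choice of not applying any.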

4. Experimental Setup

To produce a fair comparison between the standard ML-kNN algorithm and our proposal, we considered 40 datasets whose characteristics are shown in Table 1. These datasets represent a varied set of problems with different numbers of instances, features and labels. Furthermore, label densities differ greatly among the datasets. The datasets were selected with the aim of covering a wide variety of problems in terms of instances, features, number of labels, label density and label cardinality. Datasets from different application fields were selected as well, such as text categorization, image classification and bioinformatics.

Table 1 gives a detailed description of the characteristics of the datasets: the number of instances, the number of inputs, the number of labels, the label cardinality, the label density, the label diversity, the proportion of distinct labels, and the MeanIR and CVIR measures [2,17]. The table also shows the source of every dataset.

Regarding comparisons, we used the Wilcoxon test [34] as the main statistical test for comparing pairs of algorithms. For groups of methods, we first carry out an Iman–Davenport test to ascertain whether there are significant differences among the methods. When the Iman–Davenport test rejects the null hypothesis, we proceed with a post hoc Nemenyi test [35], which compares all the methods of the group pairwise.
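The Iman–Davenport statistic is computed from the Friedman average ranks of the methods over the datasets. The sketch below follows the usual formulation: Friedman's $\chi^2_F$ is corrected as $F_F = (N-1)\chi^2_F / (N(k-1) - \chi^2_F)$ and compared with an F distribution with $k-1$ and $(k-1)(N-1)$ degrees of freedom. It uses NumPy only; the p-value would then come from that F distribution (for instance, `scipy.stats.f.sf(F, *dof)`), and pairwise Wilcoxon tests are available as `scipy.stats.wilcoxon`. The score matrix in the example is made up.

```python
import numpy as np

def average_ranks(scores):
    """Friedman average ranks R_j. scores: (N datasets, k methods), higher is better.
    Rank 1 is the best method on a dataset; tied methods share their mean rank."""
    N, k = scores.shape
    ranks = np.empty((N, k))
    for i in range(N):
        order = np.argsort(-scores[i], kind="stable")
        r = np.empty(k)
        r[order] = np.arange(1, k + 1)
        for v in np.unique(scores[i]):        # assign average ranks to ties
            tied = scores[i] == v
            r[tied] = r[tied].mean()
        ranks[i] = r
    return ranks.mean(axis=0)

def iman_davenport(scores):
    """Friedman chi-square, Iman-Davenport F_F and its degrees of freedom.
    (Here k is the number of methods, not a number of neighbors.)"""
    N, k = scores.shape
    R = average_ranks(scores)
    chi2 = 12 * N / (k * (k + 1)) * (np.sum(R ** 2) - k * (k + 1) ** 2 / 4)
    F = (N - 1) * chi2 / (N * (k - 1) - chi2)   # undefined if all rankings agree
    return chi2, F, (k - 1, (k - 1) * (N - 1))

# Made-up scores of 3 methods on 4 datasets
scores = np.array([[0.90, 0.80, 0.70],
                   [0.85, 0.80, 0.80],
                   [0.60, 0.70, 0.50],
                   [0.90, 0.60, 0.70]])
print(average_ranks(scores))      # R = (1.25, 2.125, 2.625)
print(iman_davenport(scores))     # chi2 = 3.875, F_F ~ 2.82 with (2, 6) d.o.f.
```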

Although there are several versions of ML-kNN [15,18,20], our proposal can be used to modify most of them. In our experiments, we used the original ML-kNN and LAML-kNN. The source code, which was written in C and licensed under the GNU General Public License, and the datasets are freely available from the authors upon request.

For evaluating the classification performance of the different methods, we used 10-fold cross-validation, which is one of the most common methods in machine learning. k-fold cross-validation involves randomly dividing the set of observations into k groups, or folds, of approximately equal size. The first fold is treated as a validation set, and the method is fit on the remaining $k - 1$ folds [36]. This process is repeated for each of the k folds, and the performance metrics reported are the average values over the k repetitions.
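The splitting procedure just described can be sketched in a few lines of NumPy. Here `n_folds` plays the role of the cross-validation k (10 in the experiments) and is unrelated to the number of neighbors; `fit_and_score` is a hypothetical callback that trains a model on the training folds and returns its metric on the held-out fold.

```python
import numpy as np

def kfold_indices(n, n_folds, seed=0):
    """Randomly split the indices 0..n-1 into n_folds folds of (almost) equal size."""
    perm = np.random.default_rng(seed).permutation(n)
    return np.array_split(perm, n_folds)

def cross_validate(X, Y, n_folds, fit_and_score, seed=0):
    """Each fold is held out once; the reported value is the mean over folds."""
    folds = kfold_indices(len(X), n_folds, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        scores.append(fit_and_score(X[train_idx], Y[train_idx],
                                    X[test_idx], Y[test_idx]))
    return float(np.mean(scores))

# Usage with a dummy callback that just reports the size of the held-out fold
X, Y = np.zeros((23, 4)), np.zeros((23, 3), dtype=int)
print(cross_validate(X, Y, 10, lambda Xtr, Ytr, Xte, Yte: len(Xte)))  # 2.3
```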

The evaluation of multi-label classification methods is more difficult because the prediction for an instance is a set of labels, and the result can be fully correct, partially correct (with different levels of correctness) or fully incorrect [37,38,39]. The metrics can be divided into two major groups: example-based (EB) metrics and label-based (LB) metrics. The former group evaluates the learning system on each instance (example) separately and then reports the average value across the test set, whereas the latter evaluates the system on each label separately and then averages over all labels.
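The difference between the two families, and between micro- and macro-averaging, can be seen in the following sketch of example-based accuracy and F1 and label-based F1, written after the usual definitions in the multi-label literature. True and predicted label sets are binary matrices (instances by labels). Treating 0/0 as 1, for instance when both the true and the predicted label sets are empty, is a convention assumed here; implementations differ on it.

```python
import numpy as np

def _ratio(num, den):
    """Element-wise num / den, with 0/0 taken as 1 (an assumed convention)."""
    num = np.atleast_1d(np.asarray(num, dtype=float))
    den = np.atleast_1d(np.asarray(den, dtype=float))
    out = np.ones_like(num)
    np.divide(num, den, out=out, where=den > 0)
    return out

def example_based(Y, H):
    """Example-based accuracy and F1: one value per instance, then averaged."""
    Y, H = np.asarray(Y, dtype=bool), np.asarray(H, dtype=bool)
    inter = (Y & H).sum(axis=1)
    acc = _ratio(inter, (Y | H).sum(axis=1)).mean()
    f1 = _ratio(2 * inter, Y.sum(axis=1) + H.sum(axis=1)).mean()
    return float(acc), float(f1)

def label_based_f1(Y, H):
    """Label-based F1: micro pools the counts of all labels, macro averages per label."""
    Y, H = np.asarray(Y, dtype=bool), np.asarray(H, dtype=bool)
    tp = (Y & H).sum(axis=0)
    fp = (~Y & H).sum(axis=0)
    fn = (Y & ~H).sum(axis=0)
    micro = _ratio(2 * tp.sum(), 2 * tp.sum() + fp.sum() + fn.sum())[0]
    macro = _ratio(2 * tp, 2 * tp + fp + fn).mean()
    return float(micro), float(macro)

Y = [[1, 1, 0], [0, 1, 0], [1, 0, 1]]   # true label sets (made up)
H = [[1, 0, 0], [0, 1, 1], [1, 0, 1]]   # predicted label sets (made up)
print(example_based(Y, H))    # accuracy 2/3, F1 7/9
print(label_based_f1(Y, H))   # micro F1 0.8, macro F1 7/9
```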

There are many metrics defined in the literature [2]. Among them, we have chosen accuracy and F1 to compare the results of the studied methods. The precision and recall metrics are not usually reported individually because each can be trivially maximized: recall by selecting all labels as relevant, and precision by selecting no (or very few) labels as relevant. Accuracy and F1 are devoted to classification. We also consider three metrics that measure the ability of a classifier to rank the labels: coverage, ranking loss and average precision [2]. Finally, as label-based metrics, we report micro-averaged F1 and macro-averaged F1.
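The three ranking metrics can be sketched as follows, using their standard definitions: coverage is the average depth in the ranking needed to cover all relevant labels (worst relevant rank minus one), ranking loss is the fraction of relevant/irrelevant label pairs that are ordered wrongly, and average precision averages, over the relevant labels, the fraction of relevant labels ranked at or above each one. Lower is better for the first two and higher for the third. Skipping instances without relevant labels, counting score ties as errors in the ranking loss and breaking rank ties by label index are assumptions of this sketch.

```python
import numpy as np

def ranking_metrics(Y, F):
    """Coverage, ranking loss and average precision (a larger F means more relevant).
    Instances without relevant labels are skipped (and, for ranking loss,
    instances where every label is relevant)."""
    Y, F = np.asarray(Y, dtype=bool), np.asarray(F, dtype=float)
    cov, rloss, ap = [], [], []
    for y, f in zip(Y, F):
        if not y.any():
            continue
        order = np.argsort(-f, kind="stable")
        rank = np.empty(len(f), dtype=int)
        rank[order] = np.arange(1, len(f) + 1)    # rank 1 = highest score
        cov.append(rank[y].max() - 1)             # depth needed to cover Y
        if not y.all():
            # relevant/irrelevant pairs in which the relevant label is not above
            wrong = (f[y][:, None] <= f[~y][None, :]).sum()
            rloss.append(wrong / (y.sum() * (~y).sum()))
        rel = rank[y]
        ap.append(np.mean([(rel <= r).sum() / r for r in rel]))
    return float(np.mean(cov)), float(np.mean(rloss)), float(np.mean(ap))

Y = [[1, 0, 1, 0], [0, 1, 0, 0]]                  # made-up relevant labels
F = [[0.9, 0.2, 0.4, 0.6], [0.3, 0.8, 0.1, 0.5]]  # made-up scores
print(ranking_metrics(Y, F))   # coverage 1.0, ranking loss 0.125, avg. precision 11/12
```

All three metrics depend on the relative order of every label's score, which is why they cannot be optimized one $k_l$ at a time.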

In the experiments, we show the results of using all of these metrics as well as the results of using a subset of them in certain cases. The use of different metrics is justified because they represent the performance of the models from different points of view.

5. Experimental Results

The first set of experiments was devoted to comparing ML-k’sNN and LAML-k’sNN against ML-kNN and LAML-kNN. As stated in Section 3, we follow a different procedure depending on whether the target metric is a classification metric or a ranking metric. The first set of experiments used classification metrics: as described above, accuracy, F1, macro-averaged F1 and micro-averaged F1.

Table 2, Table 3, Table 4 and Table 5 show the comparison among the different methods in terms of the Wilcoxon test. For every experiment, the tables show the average value of the performance metric (row Mean), the average Friedman’s rank (row Rank), the win/loss record of the method in the column against the method in the row (row w/l), the p-value of the Wilcoxon test (row p) and the values of the Wilcoxon test statistic. The average rank is defined as $R_j = \frac{1}{N}\sum_{i=1}^{N} r_i^j$, the average rank over $N$ datasets, with $r_i^j$ being the rank of the $j$-th algorithm on the $i$-th dataset; in case of ties, average ranks are assigned.

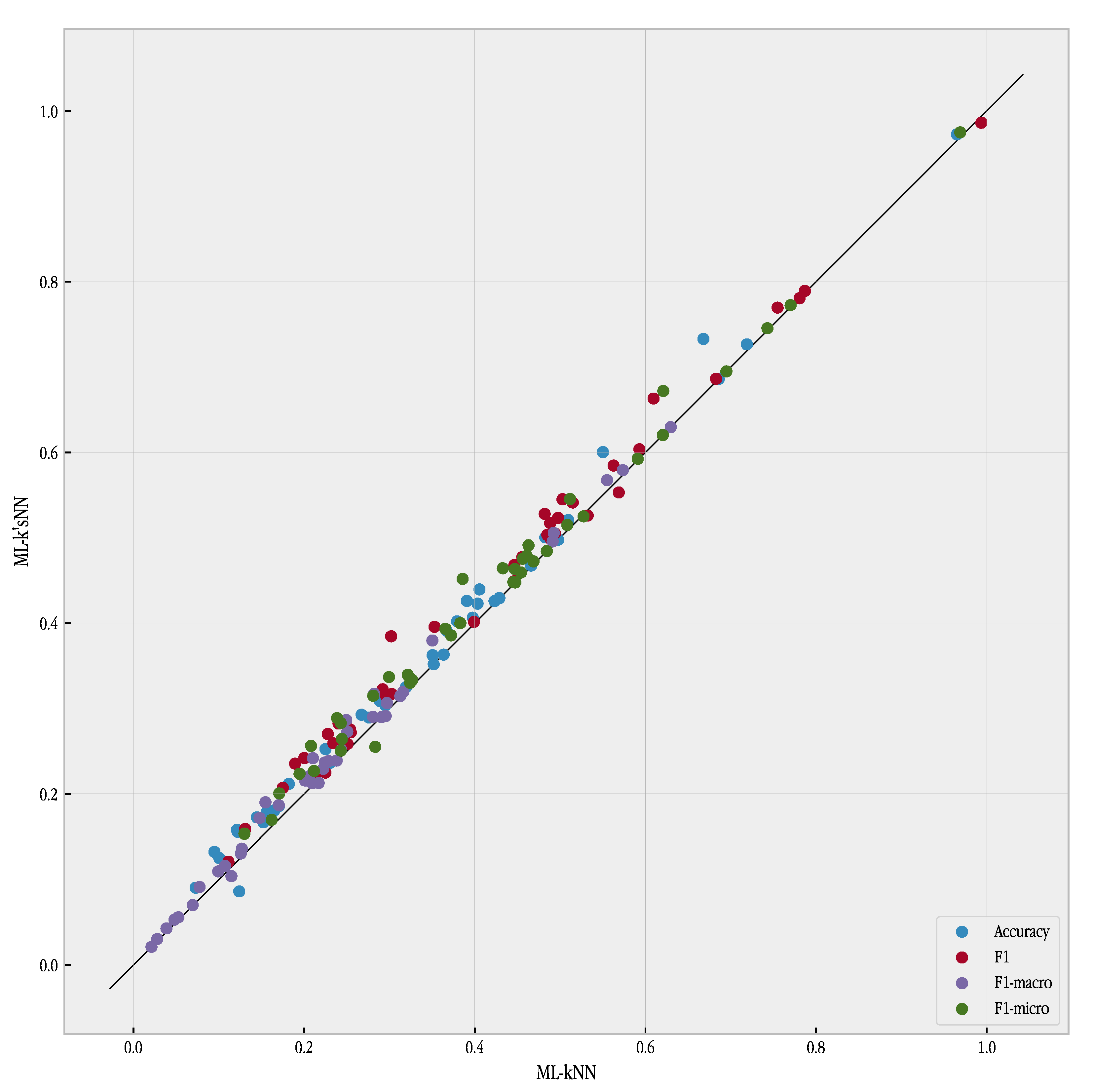

Individual results for each dataset are shown in Figure 1, which plots the results of ML-kNN against those of ML-k’sNN for every dataset. Points above the main diagonal correspond to problems where our method outperformed ML-kNN. Almost all points in the figure indicate a better performance of ML-k’sNN.

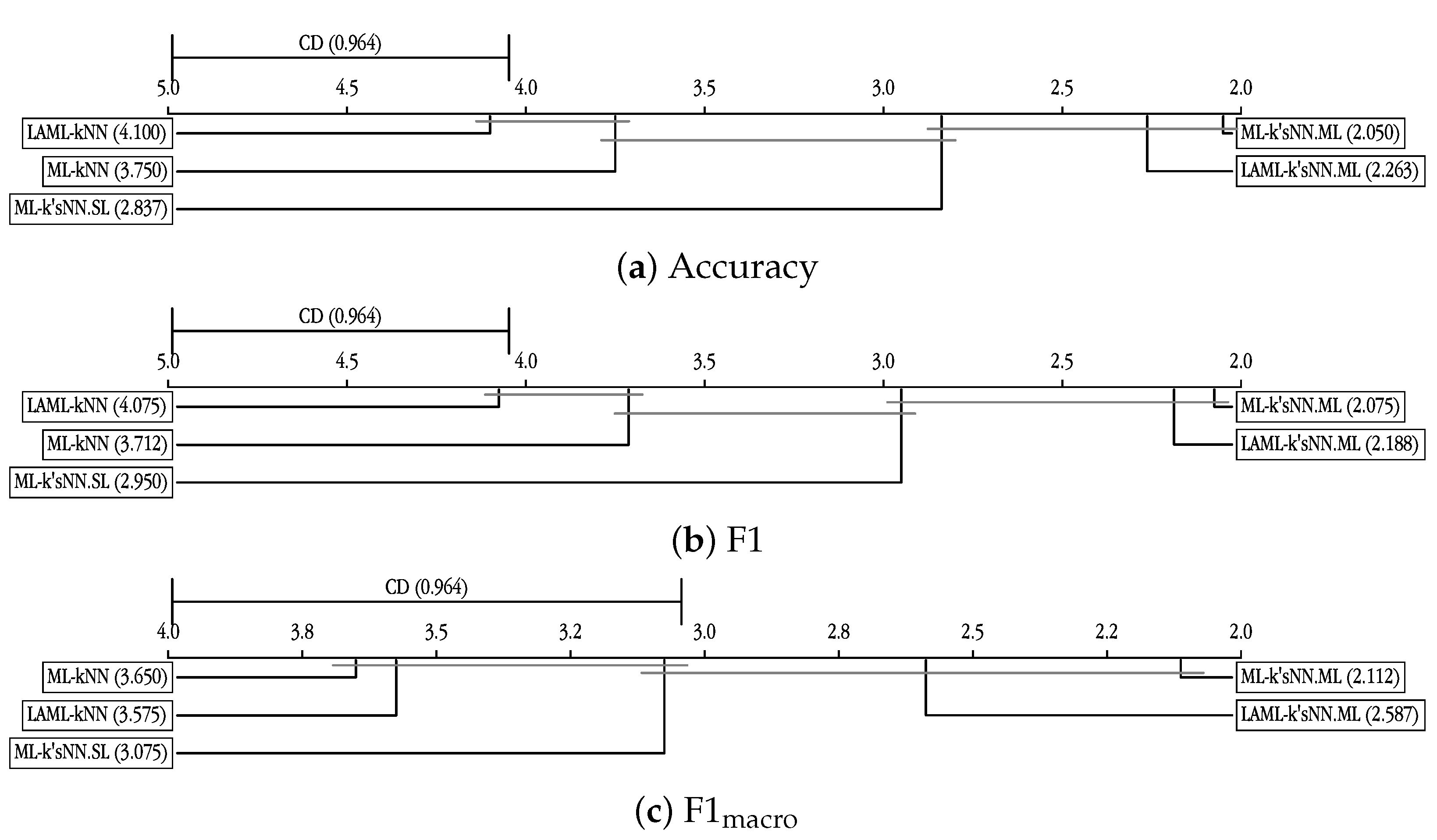

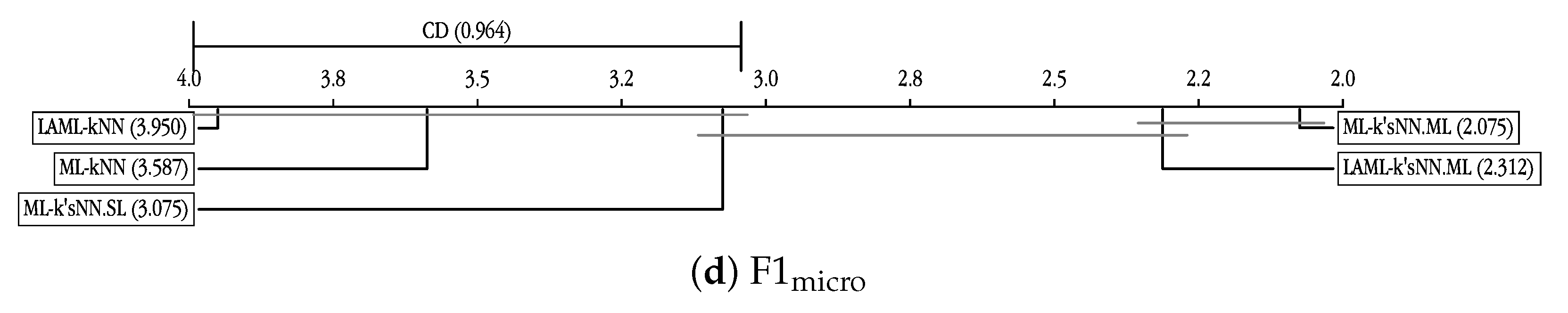

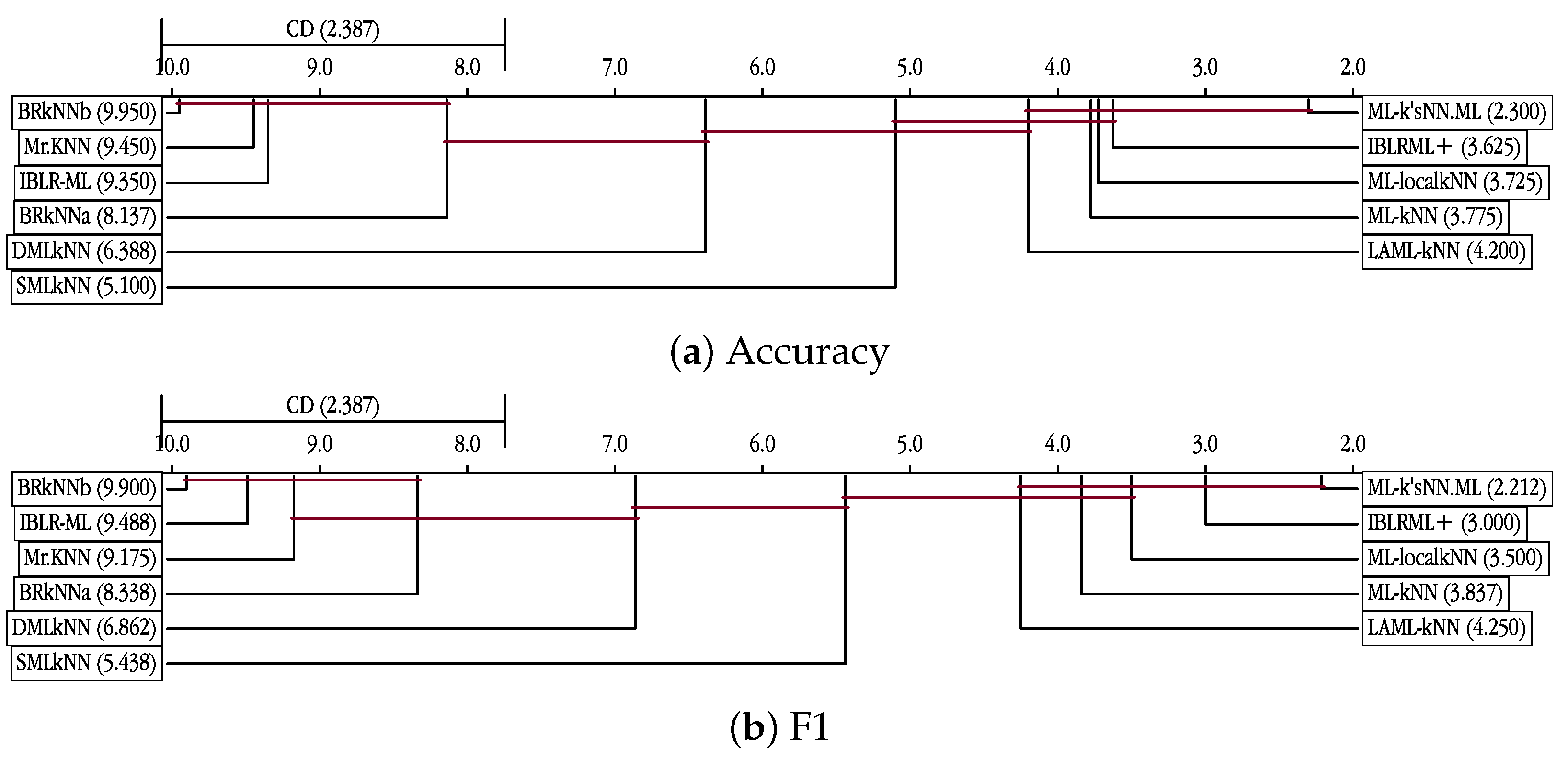

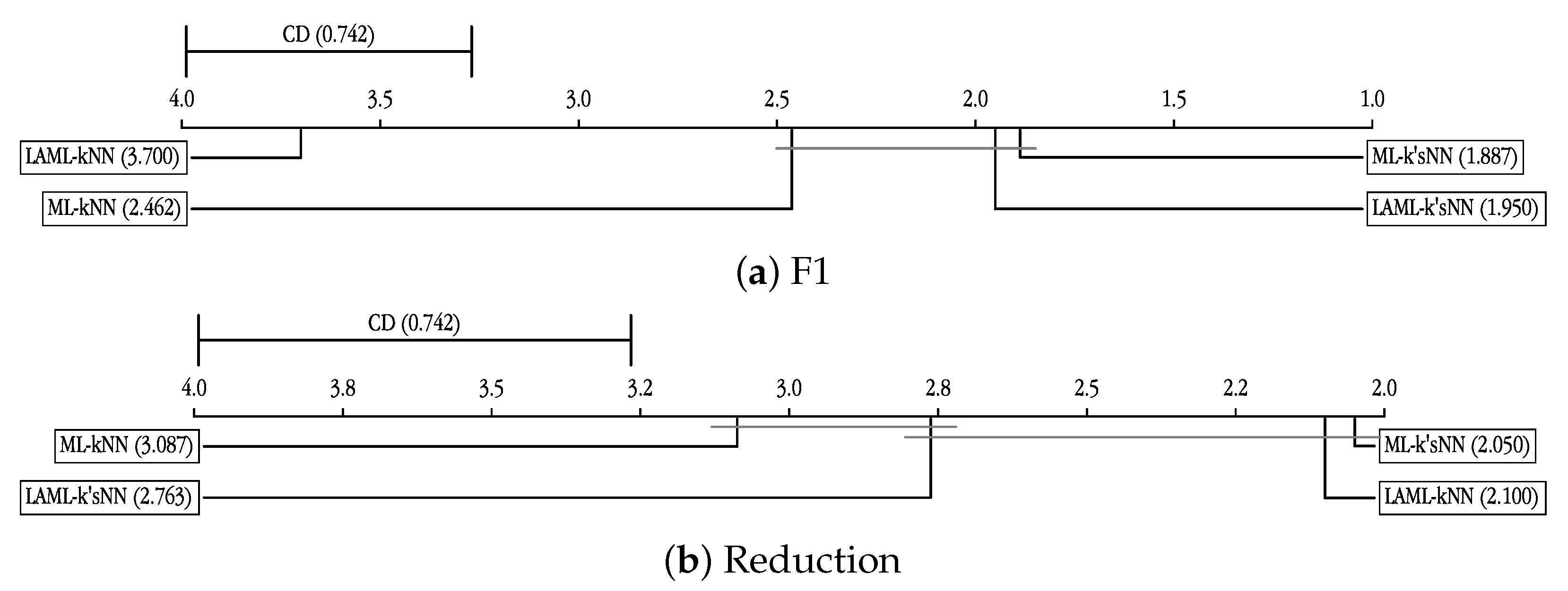

Figure 2 shows the Nemenyi test for those same metrics using a graph representation [34]. In this plot, we show the critical difference, CD, and, for every algorithm, its average rank, $R_j$. Two algorithms $i$ and $j$ can be considered significantly different if $|R_i - R_j| > CD$. To show this information, a horizontal line connects each group of algorithms that do not fulfill that condition and should be considered as performing equally. The Iman–Davenport tests for all four metrics obtained a p-value of 0.0000.
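The critical difference in these plots follows the standard Nemenyi formula $CD = q_\alpha \sqrt{k(k+1)/(6N)}$, where $k$ is the number of compared methods and $N$ the number of datasets. With five methods and 40 datasets it gives CD ≈ 0.964, the value quoted for the accuracy comparison. The critical values below are the α = 0.05 values tabulated by Demšar (2006); a comparison with more methods, such as the eleven of Section 5.1, needs a value from an extended table, passed through `q_alpha`. Function names are illustrative.

```python
import math

# Critical values q_0.05 of the Nemenyi test, as tabulated by Demsar (2006),
# indexed by the number k of compared methods.
Q_005 = {2: 1.960, 3: 2.343, 4: 2.569, 5: 2.728, 6: 2.850,
         7: 2.949, 8: 3.031, 9: 3.102, 10: 3.164}

def nemenyi_cd(k, N, q_alpha=None):
    """Critical difference CD = q_alpha * sqrt(k (k + 1) / (6 N))."""
    q = Q_005[k] if q_alpha is None else q_alpha
    return q * math.sqrt(k * (k + 1) / (6 * N))

def significantly_different(R_i, R_j, cd):
    """Nemenyi decision for two average ranks."""
    return abs(R_i - R_j) > cd

cd = nemenyi_cd(k=5, N=40)
print(round(cd, 3))   # 0.964
```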

The first metric studied was accuracy. Table 2 shows the comparison using the Wilcoxon test for the five studied methods, and Figure 2a illustrates the Nemenyi test. The first piece of information we obtain from the figure is that the methods are sorted from best (left-hand side of the figure) to worst (right-hand side) according to their average ranks. This average rank is by itself a good indicator of the performance of any model. The second piece of information is given by the critical difference (CD = 0.964). If the ranks of two methods differ by more than 0.964, the Nemenyi test indicates a significant difference in their performance. That is why methods whose ranks differ by less than that value are linked with a horizontal line. Thus, according to the Nemenyi test, the single-label approach, ML-k’sNN.SL, was not able to significantly improve on standard ML-kNN: although it achieved a better average rank, the Nemenyi test did not find significant differences. However, the Wilcoxon test found significant differences between ML-kNN and ML-k’sNN.SL. A further study of the results showed that this approach tended to overfit: it was the best method in terms of training performance, but it did not keep that advantage in terms of testing accuracy. This behavior of ML-k’sNN.SL was reproduced for all the remaining metrics. ML-k’sNN.ML demonstrated better performance than ML-kNN according to both tests. This method had a clear advantage over the standard approach, improving on the results of ML-kNN for 35 of the 40 datasets. ML-k’sNN.ML and LAML-k’sNN.ML improved on both studied versions of ML-kNN.

Table 3 shows the comparison for the F1 metric. For this metric, our proposed method obtained very good results. The two studied versions of ML-k’sNN based on the multi-label approach outperformed both versions of ML-kNN. The differences are significant in all cases for both tests, Wilcoxon and Nemenyi. ML-k’sNN.SL improved on the results of ML-kNN according to the Wilcoxon test, although the Nemenyi test failed to find significant differences. The improvement of LAML-k’sNN.ML with respect to LAML-kNN is remarkable.

We also carried out experiments for the macro- and micro-averaged versions of the F1 metric. The results of the comparisons are shown in Table 4 and Table 5, respectively. For both metrics, the Iman–Davenport test obtained a p-value of 0.000. For these two metrics, ML-k’sNN.ML and LAML-k’sNN.ML improved on the results of the standard versions, ML-kNN and LAML-kNN. ML-k’sNN.SL achieved a better average rank than ML-kNN, but without significant differences according to all the tests.

Our second set of experiments was devoted to ranking metrics. The results of the tests are shown in Table 6, Table 7 and Table 8 for average precision, ranking loss and coverage, respectively. Figure 3 illustrates the results of ML-kNN and ML-k’sNN for the same three metrics. To obtain a homogeneous plot, ranking loss is represented as 1 − ranking loss, and coverage is represented as the relative coverage with respect to the optimal value. In that way, all the points above the main diagonal show the superior performance of our proposal. Nemenyi test results are shown in Figure 4.

As explained above, an evolutionary computation method was used to obtain the optimal k values when ranking metrics needed to be optimized. The single-label version of our method, ML-k’sNN.SL, performed poorly for the three ranking metrics. This was not an unexpected result, as this method does not consider any kind of multi-label information. On the other hand, ML-k’sNN.EC achieved remarkably good performance: for all three metrics, it improved on the results of the standard version according to both the Wilcoxon and Nemenyi tests. LAML-k’sNN.EC improved on the results of LAML-kNN for average precision and coverage. For ranking loss, however, it obtained a worse average rank than LAML-kNN, although the differences were not significant.

To gain a deeper understanding of our proposal, we studied the average value of the obtained k’s over all the labels. Figure 5a shows this average value when the datasets are sorted according to the number of features. The average values tended to be larger for datasets with fewer features. It is also interesting to point out that, although the values for the different labels in ML-k’sNN.ML show a large variation, the averaged values were very similar to the best value obtained for ML-kNN through cross-validation, as shown in the figure. ML-k’sNN.SL obtained systematically smaller values of k because, in its single-label approach, a very small value of $k_l$ was often the best one.

Both LAML-kNN and LAML-k’sNN obtained smaller values than ML-kNN and ML-k’sNN. As the former two methods work with smaller clusters, it is expected that the optimal k within each cluster would be smaller.

Figure 5b shows the same average value of k when the datasets are sorted according to the number of labels. Interestingly, ML-kNN and ML-k’sNN.ML obtained larger values when the number of labels was small or large, and lower values for the datasets with a medium number of labels.

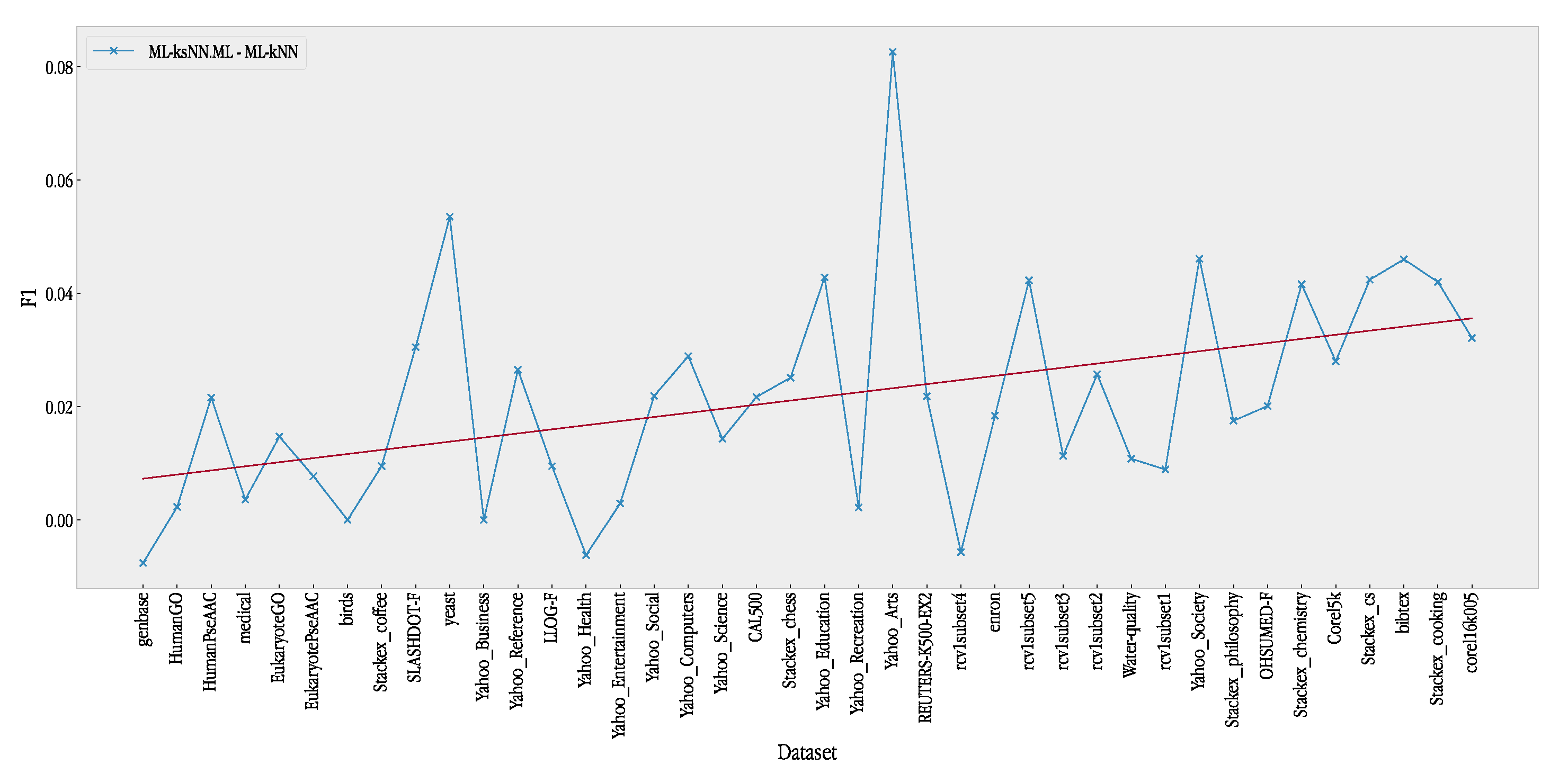

Another interesting issue is the relative behavior of ML-k’sNN with respect to ML-kNN depending on the characteristics of the datasets. This is always a very difficult task, as most algorithms do not show a clear trend of behavior with regard to particular characteristics of the datasets. We studied the performance of the standard method and our approach with respect to the label diversity of the datasets.

Figure 6 shows the difference in performance using the F1 metric between ML-k’sNN and ML-kNN when the datasets are sorted in terms of increasing diversity.

The figure displays a tendency of ML-k’sNN to improve its relative performance as the diversity of the datasets increases. To show the general trend of the differences more clearly, the figure includes a linear regression of the difference in terms of the F1 metric; the fitted line has a clear upward slope. This is an interesting property of our proposal, as datasets with higher diversity are usually more difficult to address. A similar study that considered the number of labels was carried out (see Figure 7). Again, our proposed method demonstrated better behavior as the number of labels increased. These two figures show that ML-k’sNN is more effective when the multi-label problem is more difficult, i.e., when there are many labels and many diverse sets of relevant labels.

5.1. Comparison with Other Instance-Based Learning Methods

In the previous section, we showed that our algorithm was able to beat the standard ML-kNN algorithm and another version of that algorithm, LAML-kNN. However, other modifications of ML-kNN have been proposed in the literature. In this section, we present a comparison with several versions of ML-kNN and other instance-based methods. We compared the best version of our proposal (ML-k’sNN.ML for classification metrics and ML-k’sNN.EC for ranking metrics) with ML-kNN; LAML-kNN; two binary relevance implementations of k-NN in the scikit-multilearn Python package [40], BRkNNa and BRkNNb; stacked ML-kNN [41] (SMLkNN); dependent ML-kNN (DMLkNN) [22]; soft relevance for multi-label classification [25] (Mr.KNN); the combination of instance-based learning and logistic regression for multi-label classification, IBLR-ML and IBLR-ML+ [42]; and a recent method for obtaining local k values for ML-kNN, ML-localkNN [43].

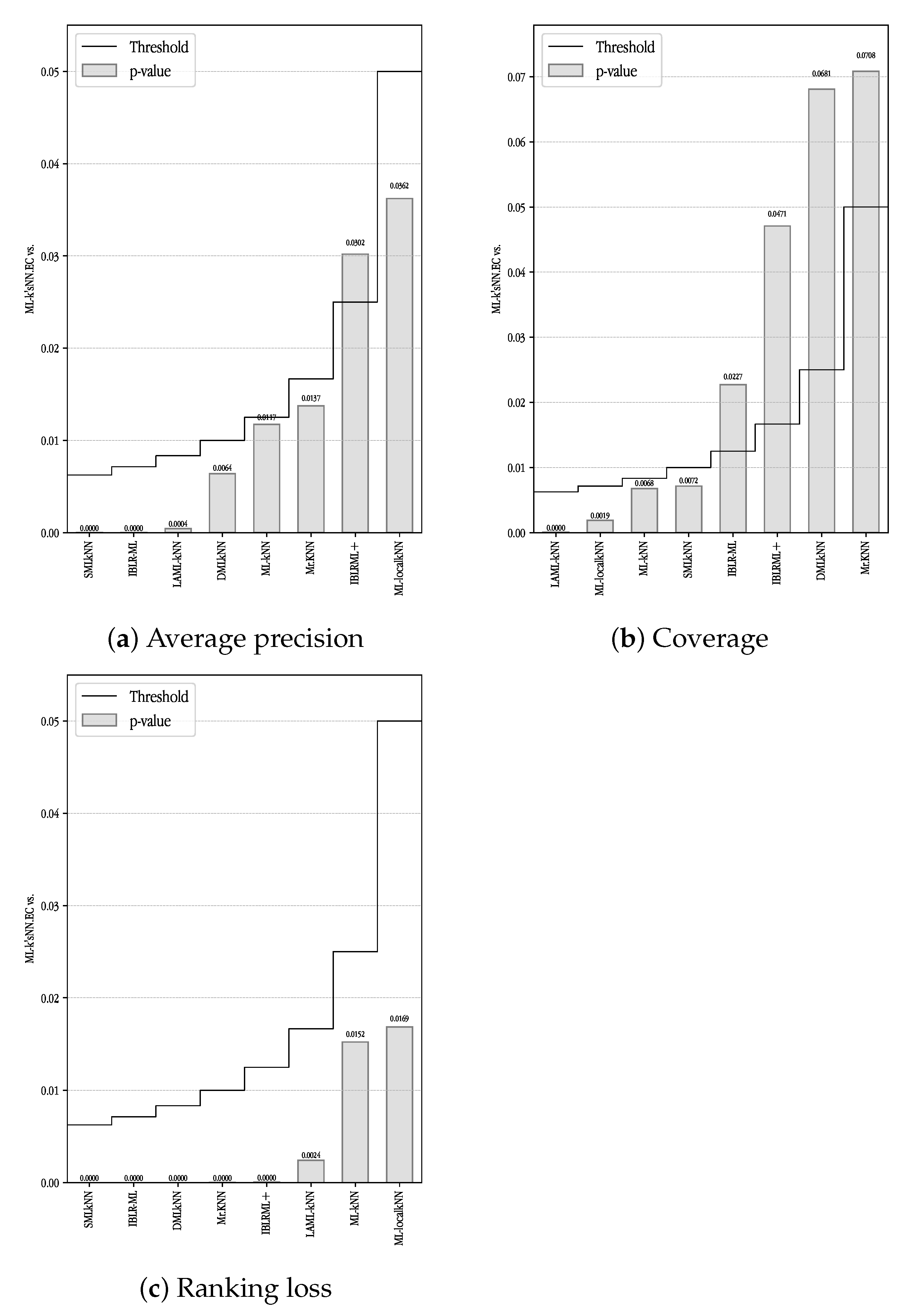

Figure 8 and Figure 9 show the Nemenyi test for classification and ranking metrics, respectively. BRkNNa and BRkNNb are not implemented for ranking metrics, so they are not shown in Figure 9. The Iman–Davenport test found significant differences for all seven metrics.

The first interesting result was that, in general, none of the modifications of ML-kNN showed a consistently better performance than the original proposal, with the exception of ML-localkNN and IBLR-ML+. The second interesting result was that our proposal always achieved the best average rank, regardless of the metric considered. The Nemenyi test found significant differences in most cases, and these differences are especially marked for ranking metrics.

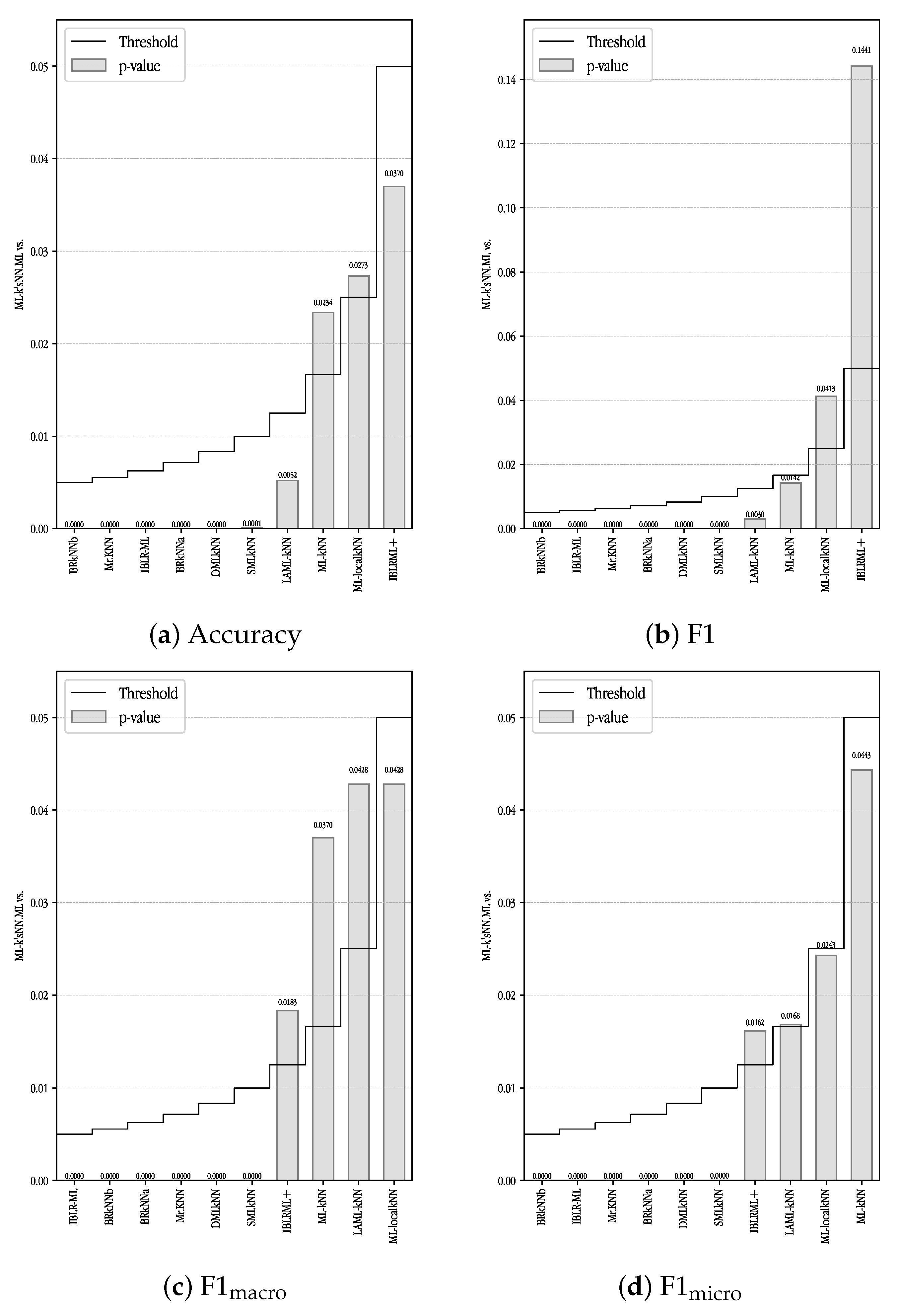

After applying the Iman–Davenport test, we carried out Holm’s procedure [34] to control the family-wise error in multiple hypothesis testing. In Holm’s procedure, the best-performing algorithm in terms of Friedman’s ranks is compared in a stepwise manner against the other methods. We carried out this procedure for the seven metrics.
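Holm's step-down procedure itself is short. Given the $m$ p-values of the comparisons between the best-ranked method and each of the others, it sorts them increasingly and rejects while $p_{(i)} \le \alpha / (m - i + 1)$, stopping at the first failure. In the Friedman setting these p-values are usually obtained from $z = (R_j - R_{\text{best}}) / \sqrt{k(k+1)/(6N)}$. The sketch below, with made-up p-values in the example, is an illustration rather than the authors' code.

```python
def holm(p_values, alpha=0.05):
    """Holm's step-down procedure.

    p_values: dict method -> p-value of its comparison with the best method.
    Returns dict method -> True if that null hypothesis is rejected.
    """
    items = sorted(p_values.items(), key=lambda kv: kv[1])   # increasing p
    m = len(items)
    rejected = {name: False for name in p_values}
    for i, (name, p) in enumerate(items):
        if p > alpha / (m - i):     # thresholds alpha/m, alpha/(m-1), ..., alpha
            break                   # retain this and every larger p-value
        rejected[name] = True
    return rejected

# Made-up p-values for three comparisons against the best-ranked method
print(holm({"A": 0.001, "B": 0.06, "C": 0.02}))   # {'A': True, 'B': False, 'C': True}
```

Stopping at the first non-rejection is what distinguishes Holm's procedure from testing each p-value against its own threshold independently.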

Figure 10 and Figure 11 show a graphical representation of the Holm test for classification and ranking metrics, respectively. The methods are shown in increasing order of average rank, and a bar plot shows the p-value of each statistical test. A horizontal line shows the critical value of the test; bars below this line indicate a significant difference.

In Figure 10, we can see the good performance of ML-k’sNN. For the four classification metrics, it significantly improved on most of the remaining methods. For accuracy, it showed significantly better performance than 7 of the 10 methods used for the comparison. For F1, only ML-localkNN and IBLR-ML+ were not significantly worse than ML-k’sNN. For micro- and macro-averaged F1, ML-k’sNN beat 6 of the 10 methods. As we cannot expect any algorithm to always be the best-performing one for every dataset and metric, the fact that ML-k’sNN obtained the overall best results for the four metrics is remarkable. Furthermore, we must take into account that most of these methods could also be improved by using our proposal.

For ranking metrics (see Figure 11), the performance of ML-k’sNN was even better. For average precision, it was significantly better than all the methods with the exception of IBLR-ML+ and ML-localkNN. For ranking loss, it improved on the results of all the methods. For coverage, it also achieved the best results, but the differences were not significant against the next four best-performing methods.

5.2. Instance Selection

Instance selection is a widely used method in single-label classification that improves the performance of instance-based methods and reduces the size of the training set [44]. For single-label problems, evolutionary algorithms have demonstrated better performance and good scalability [45,46]. For multi-label datasets, only a few methods have been developed [47,48,49,50,51].

An interesting aspect of this work is the study of whether our approach is able to maintain its superior performance when instance selection is applied [52,53]. As we are not proposing a new instance selection algorithm, we used, for both the standard ML-kNN method and ML-k’sNN, a very simple CHC evolutionary approach [54,55], which has proven its efficiency for instance selection in single-label problems [56].

We used populations of 100 individuals evolved for 1000 generations. This experiment was carried out using the F1 metric.
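The text does not detail the CHC configuration, so the following sketch only shows the two operators that characterize CHC (Eshelman's algorithm) when applied to instance selection, where a chromosome is a binary mask over the training instances: HUX crossover, which swaps exactly half of the bits in which the parents differ, and incest prevention, which only lets sufficiently different parents mate. Elitist survival, the threshold schedule, the restart mechanism and the fitness function are omitted, and the function names are hypothetical.

```python
import numpy as np

def hux(parent1, parent2, rng):
    """HUX crossover: the children exchange exactly half (rounded down) of the
    bits in which the parents differ, chosen at random."""
    c1, c2 = parent1.copy(), parent2.copy()
    diff = np.flatnonzero(parent1 != parent2)
    if len(diff) > 1:
        swap = rng.choice(diff, size=len(diff) // 2, replace=False)
        c1[swap], c2[swap] = parent2[swap], parent1[swap]
    return c1, c2

def can_mate(parent1, parent2, threshold):
    """Incest prevention: mate only if half the Hamming distance exceeds threshold."""
    return np.count_nonzero(parent1 != parent2) / 2 > threshold

rng = np.random.default_rng(1)
p1 = np.array([1, 1, 1, 1, 0, 0])   # 1 = training instance kept
p2 = np.array([0, 0, 1, 1, 1, 1])
if can_mate(p1, p2, threshold=1):
    c1, c2 = hux(p1, p2, rng)
    print(c1, c2)   # each child differs from its parent in 2 of the 4 differing bits
```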

Table 9 shows the comparison among the different methods in terms of the F1 metric and reduction. Comparisons in terms of the Nemenyi test are shown in Figure 12.

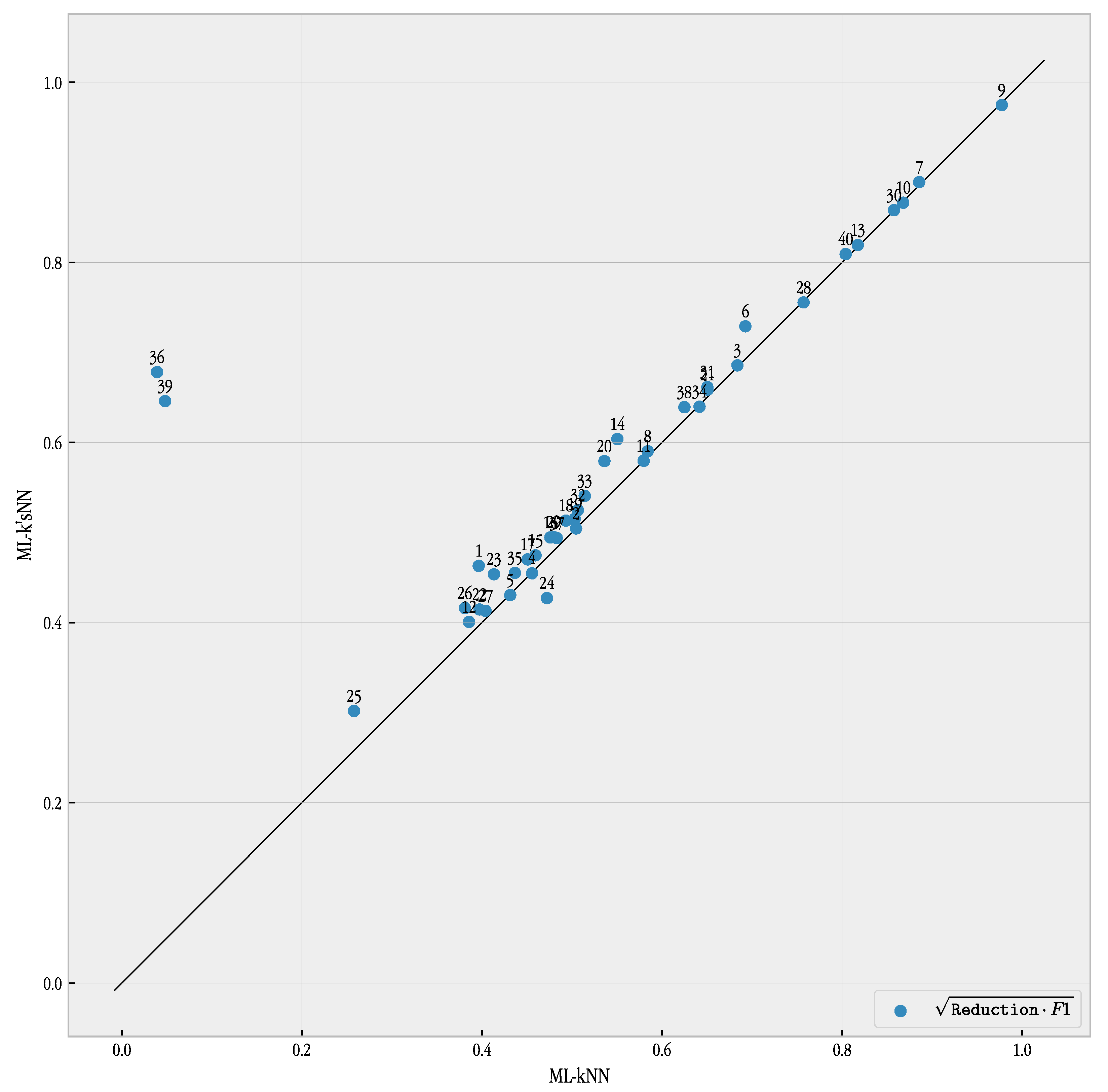

Regarding the comparison between ML-kNN and ML-k’sNN, Table 9 shows the superior performance of our proposed method. ML-k’sNN achieved better results in both reduction and the F1 metric, and these differences were significant according to the Wilcoxon test. This behavior is illustrated in Figure 13, where the geometric mean of F1 and reduction is plotted for both ML-kNN and ML-k’sNN. With the exception of dataset #24, Stackex_coffee, the combined performance in reduction and F1 is always better for ML-k’sNN.
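Reduction is usually measured as the fraction of the training set that instance selection removes, $1 - |S|/|T|$, with $S$ the selected subset of the training set $T$. Assuming that definition, the combined score plotted in Figure 13 is the geometric mean $\sqrt{F_1 \cdot \text{reduction}}$, which is high only when both quantities are. The numbers in the example are made up.

```python
import math

def reduction(n_selected, n_train):
    """Fraction of the training set removed by instance selection."""
    return 1 - n_selected / n_train

def f1_reduction_gmean(f1, red):
    """Geometric mean of F1 and reduction: high only if both are high."""
    return math.sqrt(f1 * red)

# Made-up example: 40 of 1000 training instances kept, test F1 of 0.64
print(round(f1_reduction_gmean(0.64, reduction(40, 1000)), 4))   # 0.7838
```

Unlike an arithmetic mean, the geometric mean drops to zero when either term does, so a large reduction cannot hide a collapse in F1.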

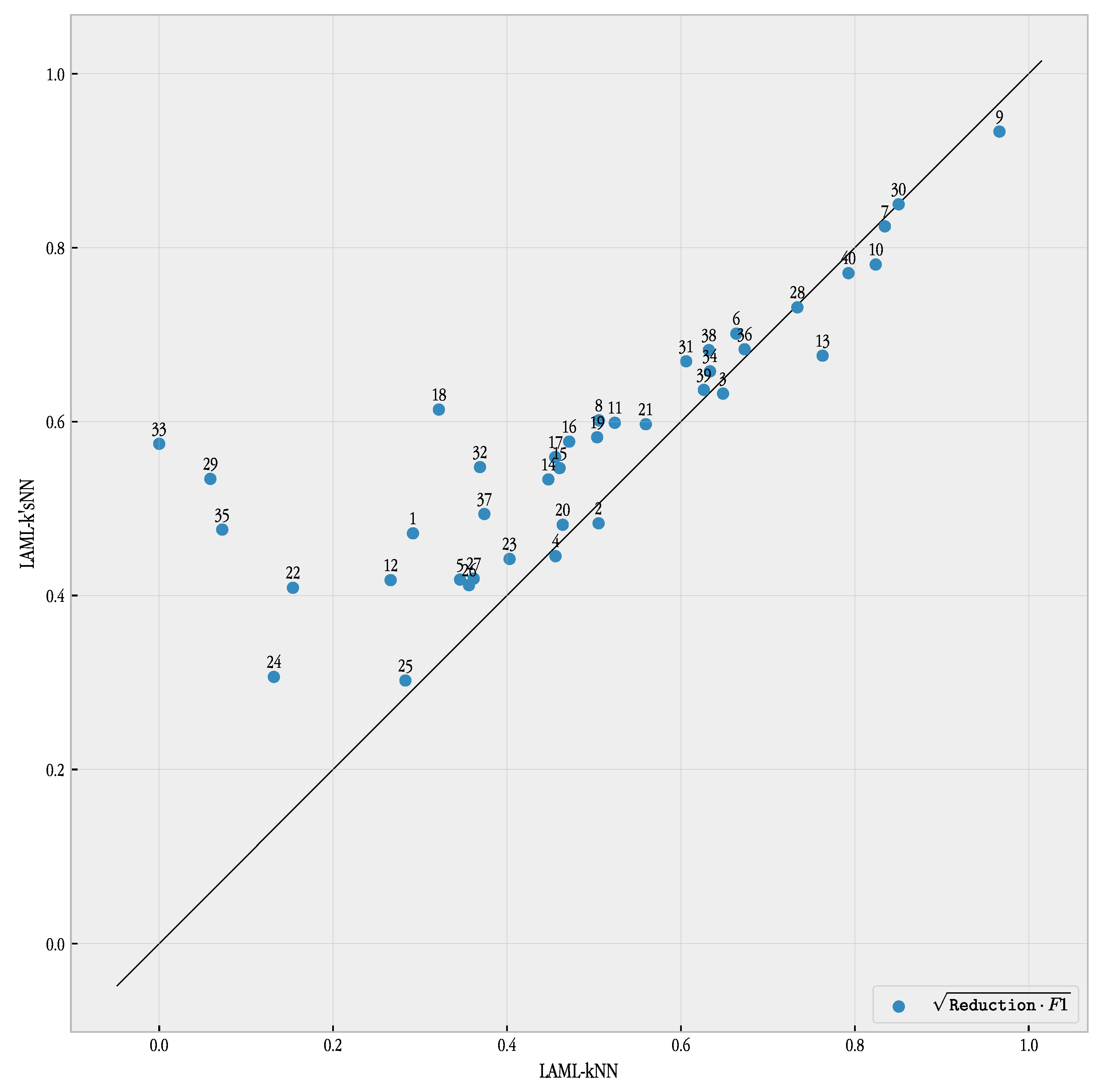

The comparison with LAML-kNN showed different results. LAML-k’sNN improved on the F1 performance of LAML-kNN but with a lower level of reduction. However, the combined reduction and F1 performance (see Figure 14) was very favorable to our proposed method. In most cases, the differences in performance are rather large, and the performance of LAML-k’sNN is clearly superior.

6. Conclusions

In this paper, we have proposed a new multi-label classification algorithm, ML-k’sNN, based on the ML-kNN algorithm, in which a different value of k is used for each label. Three different methods for obtaining the set of k’s are proposed and tested. Depending on the metric to be optimized, a label-independent or an evolutionary approach is used. The complexity of the three approaches varies, so choosing the best one for a specific task depends on the available resources and performance constraints.

The proposed method is studied using two different implementations of ML-kNN, the standard ML-kNN method and the locally adaptive ML-kNN method, LAML-kNN. However, the proposed method can be applied to almost any other version of ML-kNN. Using a large set of 40 problems with different characteristics, our proposed method demonstrated better performance when compared to both ML-kNN implementations. A further study has shown that, as a general rule, the relative performance of our method improves when there are more labels in the dataset or when the diversity of the labels increases, although this may not hold for some datasets. Furthermore, the improvement demonstrated by ML-k’sNN was maintained when the method was coupled with instance selection. In fact, the good performance of our approach was kept while achieving a large reduction in training set size.

A final experiment compared our algorithm with ten different ML-kNN variants. This comparison showed the overall best performance of ML-k’sNN for all four classification metrics and the three ranking metrics.

A promising research line is extending our approach to other methods based on ML-kNN. Almost any variant of ML-kNN, such as the one used in the experiments, can be adapted to work with a more appropriate k for every label. In this way, our proposal may benefit other instance-based multi-label learning methods.