1. Introduction

In this paper, we focus on the model proposed by Hosmer [1], which is used to study the halibut data. There are two different sources of halibut data. One is from the research cruises, where the sex, age and length of the halibut are available, while the other comes from the commercial catch, where only age and length can be obtained since the fish have been cleaned before the boats return to port. The length distribution of an age class of halibut is closely approximated by a mixture of two normal distributions, which is

where

and

are the probability density functions of the normal distributions and

is the proportion of the male halibut in the commercial catches. Hosmer [1] estimated the parameters of the two normal distributions using an iterative maximum likelihood method. Murray and Titterington [2] generalized the problem to higher dimensions and summarized a variety of possible techniques, such as maximum likelihood estimation and Bayesian analysis. Anderson [3] proposed a semiparametric modeling assumption known as the exponential tilt mixture model, in which the proportion is estimated by a general method based on direct estimation of the likelihood ratio. This semiparametric model was further studied by Qin [4], who extended Owen’s [5] empirical likelihood to the semiparametric model and gave the asymptotic variance formula for the maximum semiparametric likelihood estimator. However, empirical likelihood may suffer from computational difficulties. Therefore, Zou et al. [6] proposed the use of partial likelihood and showed that the asymptotic null distribution of the log partial likelihood ratio is chi-square. To estimate the mixing proportion, an EM algorithm was given by Zhang [7], who showed that the sequence of EM iterates, irrespective of the starting value, converges to the maximum semiparametric likelihood estimator of the parameters in the mixture model. Furthermore, Inagaki and Komaki [8] and Tan [9] each modified the profile likelihood function to provide better estimators for the parameters.

Besides the estimation of parameters, another important issue is testing the homogeneity of the model. The null hypothesis is

To test the null hypothesis, the classical results on the likelihood ratio test (LRT) may be invalid, because some nuisance parameters are not identifiable under the null hypothesis. To solve this problem, Liang and Rathouz [10] proposed a score test and applied it to genetic linkage analysis. They showed that the score test has a simple asymptotic distribution under the null hypothesis and maintains adequate power in detecting the alternatives. This idea was further generalized by Duan et al. [11] and Fu et al. [12]. On the other hand, Chen et al. [13,14] proposed modified likelihood functions to make the LRT available. They gave the asymptotic theory of the modified LRT and showed that its asymptotic null distribution is a mixture of $\chi^2$-type distributions and that the test is asymptotically most powerful under local alternatives. Furthermore, Chen and Li [15] designed an EM-test for finite normal mixture models, which performed promisingly in their simulation study. To solve the problem of degeneration of the Fisher information, Li et al. [16] used a high-order expansion to establish a nonstandard convergence rate,

, for the odds ratio parameter estimator. The methods mentioned above have been applied successfully in many real applications, for example, genetic imprinting and quantitative trait locus mapping; see Li et al. [17] and Liu et al. [18].

Most of the mixture models described above mainly consider the case when

and

are normal density functions or have an exponential tilt. In this paper, we extend these results to more general cases. A similar question was studied by Ren et al. [19], who proposed a two-block Gibbs sampling method to obtain samples of the generalized pivotal quantities of the parameters. They studied both cases when

and

are normal and logistic density functions. In our paper, we assume that

and

are in a specified location-scale family with location parameter

and scale parameter

. We propose a posterior p-value based on the posterior distribution to test the homogeneity. We aim to give a p-value under the posterior distribution that has the same frequentist properties as the classical p-value. This means that the Bayesian p-value, under a proper definition, can play the same role as the classical one. To sample from the posterior distributions, we propose to use the approximate Bayesian computation (ABC) method for the case when

and

are normal density functions, which is different from the cases when

and

are general. This is because the posterior distribution in the normal case can be regarded as using the information contained in the first two samples as the prior distribution and updating it via the third one without loss of information. We find in our simulation that this method is promising and efficient, even though we use the simplest rejection sampling. For the general case, since the ABC method is no longer available, we use Markov chain Monte Carlo (MCMC) methods, such as the Metropolis–Hastings sampling method proposed by Hannig et al. [20] and the two-block Gibbs sampling proposed by Ren et al. [19], to sample from the posterior distribution.

The paper is organized as follows. In Section 2, we first define the regular location-scale family and give some properties of the family. We then propose our posterior p-value for testing the homogeneity and introduce the sampling methods for the different cases. Real halibut data are studied in Section 3 to illustrate the validity of our method. The simulation study is given in Section 4, and the conclusion is given in Section 5.

2. Test Procedure

In this section, we consider model (1), where the distributions are in a certain regular location-scale family. Thus, we first give the definition in the following subsection.

2.1. Regular Location-Scale Family

In this section, we first give the definition of the regular location-scale family.

Definition 1 (regular location-scale family). Let be a probability density function. If satisfies

- (1)

, ;

- (2)

is continuous;

- (3)

;

- (4)

.

Then is defined as a regular density function, and is defined as the regular location-scale family. It is easy to verify that many families of distributions are regular location-scale families. For example, let

Then

and

are regular density functions. The families of distributions that are constructed by

and

are regular, and they are the families of normal distributions and logistic distributions, respectively. The two families of distributions are included later in the paper.
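To make the construction concrete, the following Python sketch builds location-scale densities from the two base densities named above, the standard normal and the standard logistic. The function names and the explicit form σ⁻¹ f((x − μ)/σ) are the usual location-scale construction, written out here for illustration; they are assumptions and are not taken from the paper's notation.

```python
import math

def std_normal_pdf(z):
    """Standard normal base density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def std_logistic_pdf(z):
    """Standard logistic base density e^{-z} / (1 + e^{-z})^2, in a stable form."""
    return 0.25 / math.cosh(0.5 * z) ** 2

def location_scale_pdf(base_pdf, x, mu, sigma):
    """Density of mu + sigma * Z, where Z has density base_pdf."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return base_pdf((x - mu) / sigma) / sigma

def integrate(func, lo, hi, steps=20000):
    """Composite trapezoidal rule, sufficient for a sanity check."""
    h = (hi - lo) / steps
    total = 0.5 * (func(lo) + func(hi))
    for k in range(1, steps):
        total += func(lo + k * h)
    return total * h

# Each member of either family should integrate to approximately one.
for base in (std_normal_pdf, std_logistic_pdf):
    mass = integrate(lambda x: location_scale_pdf(base, x, 2.0, 1.5), -80.0, 80.0)
    print(base.__name__, mass)
```

Passing the base density as an argument keeps the rest of a procedure unchanged when switching between the normal and logistic cases.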

The following lemma highlights some properties of this family.

Lemma 1. If is a regular density function, then we have

- (1)

;

- (2)

;

- (3)

;

- (4)

;

- (5)

;

- (6)

.

We further calculate the Fisher information matrix of the regular location-scale family with the following proposition.

Proposition 1. Assume that is in the regular location-scale family, where . The parameter space is . Let be . Then

- (1)

The score function satisfies where is a two-dimensional vector. denotes the expectation under the distribution of parameters .

- (2)

The Fisher information matrix satisfies where

- (3)

The Fisher information matrix is given by

Proposition 2. Assume that and is regular. Then given by where and has the following properties.

We then give the Fisher information matrices of the normal and logistic distributions. For the normal distribution, we have

Thus, the Fisher information matrix of the normal distribution is

Similarly, for the logistic distribution,

Thus, the Fisher information matrix of the logistic distribution is
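As a numerical cross-check, the sketch below integrates the outer product of the score of a location-scale density with respect to (μ, σ). Under this parameterization, which is an assumption about the paper's convention, the standard closed forms are diag(1/σ², 2/σ²) for the normal family and diag(1/(3σ²), (π² + 3)/(9σ²)) for the logistic family. The function names are illustrative.

```python
import math

def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_dlog(z):
    """Derivative of the log standard normal density."""
    return -z

def logistic_pdf(z):
    return 0.25 / math.cosh(0.5 * z) ** 2

def logistic_dlog(z):
    """Derivative of the log standard logistic density."""
    return -math.tanh(0.5 * z)

def fisher_information(pdf, dlog, sigma, lim=40.0, steps=40000):
    """Fisher information of (mu, sigma) by trapezoidal integration over z = (x - mu) / sigma."""
    h = 2.0 * lim / steps
    i_mm = i_ms = i_ss = 0.0
    for k in range(steps + 1):
        z = -lim + k * h
        weight = 0.5 * h if k in (0, steps) else h
        psi = dlog(z)
        s_mu = -psi / sigma                 # score with respect to mu
        s_sigma = -(1.0 + z * psi) / sigma  # score with respect to sigma
        mass = pdf(z) * weight
        i_mm += s_mu * s_mu * mass
        i_ms += s_mu * s_sigma * mass
        i_ss += s_sigma * s_sigma * mass
    return [[i_mm, i_ms], [i_ms, i_ss]]

sigma = 2.0
print("normal:  ", fisher_information(normal_pdf, normal_dlog, sigma))
print("logistic:", fisher_information(logistic_pdf, logistic_dlog, sigma))
```

The off-diagonal entries vanish in both cases because the base densities are symmetric about zero.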

2.2. A Posterior p-Value

We now consider testing the homogeneity of model (1), where

and

are in

,

This is equivalent to testing the equality of the parameters of the two density functions, that is,

Consider the density function

where

is the unknown parameter. When

is the regular density function, then the Fisher information matrix is

where

When

,

the last row and column of

are zero, which means that

and is not positive definite. Thus, we may encounter difficulties when using traditional test methods, such as the likelihood ratio test.

We suggest a solution here. First we assume that

is known. There are then four parameters, and we still denote them by

. We use the estimate of

instead since

is unknown. This is because when the homogeneity hypothesis holds, the distribution of the population does not depend on

, so the level of the test does not depend on the estimate of

. We then give the inference on

below. For the first two samples, the fiducial density of

and

are

where “∝” denotes “proportional to”; see Example 3 of Hannig et al. [20]. To combine (4) with the third sample, we regard (4) as the prior distribution. By Bayes’ theorem,

Denote the probability measure on the parameter space determined by (5) by

, where

,

,

,

.

denotes the random variable. We can see from expression (5) that

is the posterior distribution under the prior distribution

Let

Then, hypothesis (3) is equivalent to

where

.

To establish the Bernstein–von Mises theorem for multiple samples, we first introduce some necessary assumptions. Let be the log-likelihood function of the ith sample, where .

Assumption 1. Given any , there exists , such that in the expansion where is the true value of the parameter and is the Fisher information matrix, the probability of the following event tends to 0 as , where is the Euclidean norm and denotes the largest absolute eigenvalue of a square matrix A.

Assumption 2. For any , there exists , such that the probability of the event tends to 1 as .

Assumption 3. Under the prior π, there exist , such that the integral of below exists,

Assumption 4. When ,

We then give the Bernstein–von Mises theorem for multiple samples as follows.

Theorem 1. Denote the posterior density of by , where If Assumptions 1, 2 and 4 hold, then Furthermore, if Assumption 3 holds, then

We can then define the posterior p-value as follows.

Definition 2. Let where is the probability under the posterior distribution, is the posterior mean and is the posterior covariance matrix. We call a posterior p-value.

It should be noted that is defined under the posterior distribution, which is the distribution of the parameters given the observation . However, when studying the properties of , we regard it as a random variable and denote it by . The theorem below guarantees the validity of the posterior p-value.

Theorem 2. Under the assumptions of Theorem 1, if the null hypothesis in (3) is true, that is, and , then the p-value defined by (7) satisfies where “” is the convergence in distribution and is the uniform distribution on the interval .

The proof is given in Appendix A. For a given significance level,

, we may reject the null hypothesis if the p-value is less than

.
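The decision rule can be sketched in Python once posterior draws are available. The sketch assumes that the posterior p-value takes a Mahalanobis-type form: the posterior probability that a draw lies at least as far from the posterior mean, in the metric of the posterior covariance, as the null value does. That form, the two-dimensional parameter (for example the differences in location and scale), and the name `posterior_p_value` are assumptions made for illustration.

```python
import random

def posterior_p_value(draws, null_value=(0.0, 0.0)):
    """Monte Carlo p-value from two-dimensional posterior draws (assumed form)."""
    n = len(draws)
    m0 = sum(d[0] for d in draws) / n
    m1 = sum(d[1] for d in draws) / n
    # Sample covariance matrix of the draws.
    s00 = sum((d[0] - m0) ** 2 for d in draws) / (n - 1)
    s11 = sum((d[1] - m1) ** 2 for d in draws) / (n - 1)
    s01 = sum((d[0] - m0) * (d[1] - m1) for d in draws) / (n - 1)
    det = s00 * s11 - s01 * s01

    def mahalanobis(v):
        a, b = v[0] - m0, v[1] - m1
        # Quadratic form with the inverse of the 2x2 covariance matrix.
        return (s11 * a * a - 2.0 * s01 * a * b + s00 * b * b) / det

    d_null = mahalanobis(null_value)
    return sum(1 for d in draws if mahalanobis(d) >= d_null) / n

# Hypothetical draws centred away from the null value (0, 0).
rng = random.Random(0)
draws = [(rng.gauss(5.0, 1.0), rng.gauss(5.0, 1.0)) for _ in range(2000)]
alpha = 0.05
p = posterior_p_value(draws)
print("p-value:", p, "reject:", p < alpha)
```

Estimating the posterior mean and covariance from the same draws mirrors the remark in Section 2.3 that both can be replaced by their sample versions.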

2.3. Sampling Method

The posterior mean,

, and the posterior variance,

, in Equation (7) can be estimated by the sample mean and variance, respectively. The remaining problem is how to sample from the posterior distribution. When

is unknown, we first propose an EM algorithm to estimate

, then we sample from the posterior distribution where

is fixed to the estimate of

. The Markov chain Monte Carlo (MCMC) method is commonly used. However, the MCMC method needs to discard a large number of samples in the burn-in period to guarantee that the retained samples are sufficiently close to draws from the target distribution. Fortunately, when

and

are normal density functions, we find that the posterior distribution can be transformed and sampled by using the approximate Bayesian computation (ABC) method. However, when

and

are more general, such as the logistic density functions, the two-block Gibbs sampling proposed by Ren et al. [19] can be an appropriate substitute. We discuss the details in the following subsections.

2.3.1. EM Algorithm for

In this subsection, we propose the EM algorithm for estimating .

The log-likelihood function of the model is

where

and

are in the same regular location-scale family,

, with parameters

and

, respectively. In the log-likelihood function of the third sample,

is

The EM algorithm was first proposed by Dempster et al. [21] and has been broadly applied to a wide variety of parametric models; see McLachlan and Krishnan [22] for a detailed review.

Assume that we have obtained the estimates of the parameters after m iterations, and denote them by

and

. We introduce the latent variable

; the component

indicates which distribution the sample

is drawn from.

when it is drawn from the first distribution

, otherwise,

. We then have

The density of the joint distribution of

is

Given

, the conditional distribution of

is

where

,

. Thus, the conditional expectation of

is

When

and

, the conditional expectation of

can be the estimate of

.

.

The log likelihood function is

Since the latent variable is unknown, we use its conditional expectation. Furthermore, the MLE of

is

Then, in the E-step, we calculate the expectation of new parameters conditional on

,

Let

, then

In the M-step, we compute the simultaneous equations below to maximize

. The solutions are the new parameters

. We give the equations of

; similarly, we can obtain

.

In the simulation study, we consider the normal and logistic cases. The maximization step of the normal case can be simplified as

while that of the logistic case is

The two steps are repeated until convergence. We can then obtain the MLE of the parameters.
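The E-step and M-step for the normal case can be sketched as follows. The sketch assumes the three-sample setting described above: two labelled samples, one from each component, and a third sample from the mixture. It uses the usual closed-form weighted updates, which may differ in detail from the paper's simplified equations. All names (`em_three_sample_normal`, `lam`, and so on) are illustrative.

```python
import math
import random

def normal_pdf(x, mu, sigma):
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def em_three_sample_normal(x1, x2, x3, lam=0.5, n_iter=100):
    """EM for lam, (mu1, sigma1), (mu2, sigma2) with labelled samples x1, x2 and mixture sample x3."""
    n1, n2, n3 = len(x1), len(x2), len(x3)
    mu1, mu2 = sum(x1) / n1, sum(x2) / n2
    s1 = math.sqrt(sum((x - mu1) ** 2 for x in x1) / n1)
    s2 = math.sqrt(sum((x - mu2) ** 2 for x in x2) / n2)
    for _ in range(n_iter):
        # E-step: probability that each mixture observation comes from component 1.
        w = []
        for z in x3:
            a = lam * normal_pdf(z, mu1, s1)
            b = (1.0 - lam) * normal_pdf(z, mu2, s2)
            w.append(a / (a + b) if a + b > 0.0 else 0.5)
        sw = sum(w)
        # M-step: the mixing proportion is the mean responsibility.
        lam = sw / n3
        # Labelled points have weight one; mixture points have fractional weight.
        mu1 = (sum(x1) + sum(wi * z for wi, z in zip(w, x3))) / (n1 + sw)
        mu2 = (sum(x2) + sum((1.0 - wi) * z for wi, z in zip(w, x3))) / (n2 + n3 - sw)
        ss1 = sum((x - mu1) ** 2 for x in x1) + sum(wi * (z - mu1) ** 2 for wi, z in zip(w, x3))
        ss2 = sum((x - mu2) ** 2 for x in x2) + sum((1.0 - wi) * (z - mu2) ** 2 for wi, z in zip(w, x3))
        s1 = math.sqrt(ss1 / (n1 + sw))
        s2 = math.sqrt(ss2 / (n2 + n3 - sw))
    return lam, (mu1, s1), (mu2, s2)

# Hypothetical data: component 1 is N(0, 1), component 2 is N(4, 1), true proportion 0.3.
rng = random.Random(0)
x1 = [rng.gauss(0.0, 1.0) for _ in range(200)]
x2 = [rng.gauss(4.0, 1.0) for _ in range(200)]
x3 = [rng.gauss(0.0, 1.0) if rng.random() < 0.3 else rng.gauss(4.0, 1.0) for _ in range(1000)]
print(em_three_sample_normal(x1, x2, x3))
```

A fixed number of iterations is used for simplicity; in practice one would stop when the change in the log-likelihood or in the parameters falls below a tolerance.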

2.3.2. Normal Case

When the estimate of

is obtained, the posterior distribution (5) can be rewritten as

This means that the posterior distribution is equivalent to using the first two terms on the right side of the equation as the “prior distribution” and the third term as the likelihood function. For the first term, we have

By denoting the sample mean and variance by

and

, respectively, we have

which follow a normal and a $\chi^2$ distribution, respectively; that is,

Let

and

be two independent random variables. Then

Given

and

, then

and

can be regarded as functions of U and V

The joint distribution of

is

Then the joint distribution of

can be calculated as

where

. This coincides with the joint fiducial density proposed by Fisher [23], which means that the fiducial distribution of

is

Similarly, we can obtain

where

and

are the sample mean and variance of the second sample and

.
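A draw from the fiducial distribution of the normal parameters can be generated by inverting the two pivots. The sketch assumes the standard pivots U = √n (x̄ − μ)/σ, standard normal, and V = (n − 1)S²/σ², chi-square with n − 1 degrees of freedom; the function name is illustrative. The chi-square draw uses the fact that it is a gamma distribution with shape (n − 1)/2 and scale 2.

```python
import math
import random

def sample_fiducial_normal(xbar, s2, n, rng):
    """One draw of (mu, sigma) given the sample mean xbar, sample variance s2 and sample size n."""
    u = rng.gauss(0.0, 1.0)                    # U ~ N(0, 1)
    v = rng.gammavariate(0.5 * (n - 1), 2.0)   # V ~ chi-square with n - 1 degrees of freedom
    sigma = math.sqrt((n - 1) * s2 / v)        # solve V = (n - 1) s2 / sigma^2 for sigma
    mu = xbar - u * sigma / math.sqrt(n)       # solve U = sqrt(n) (xbar - mu) / sigma for mu
    return mu, sigma

# Hypothetical summary statistics.
rng = random.Random(0)
draws = [sample_fiducial_normal(10.0, 4.0, 30, rng) for _ in range(5)]
for mu, sigma in draws:
    print(mu, sigma)
```

Because U and V are independent of the data, each draw costs two random numbers and no burn-in, which is what makes the normal case cheap.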

With the conclusion above, sampling from the posterior distribution (5) can be conducted by first sampling from the fiducial distribution of the parameters and then combining the information with the likelihood function of the third sample from the mixture model (1). This can be carried out simply using the approximate Bayesian computation (ABC) method. In this case, we regard the fiducial distributions of

as the prior distribution. After we have drawn samples of parameters from (10) and (11), denoted by

, we generate simulations from the model below and denote them by

,

where

is the MLE of

, estimated beforehand using the EM algorithm proposed in the last subsection. We then calculate the distance between the simulations and the observation and accept those whose distance is below a given threshold,

. The algorithm is given below.

Compute the sample mean and variance of the first two samples and denote them by , , and . Calculate the MLE of using the EM algorithm and denote it by .

Sample

and

from the standard normal distribution,

from the

distribution and

from

, respectively. To sample from the fiducial distributions of the parameters, we calculate

,

,

and

using

We denote the samples of the parameters by

.

Generate a simulation of size

from

The simulation is represented by

.

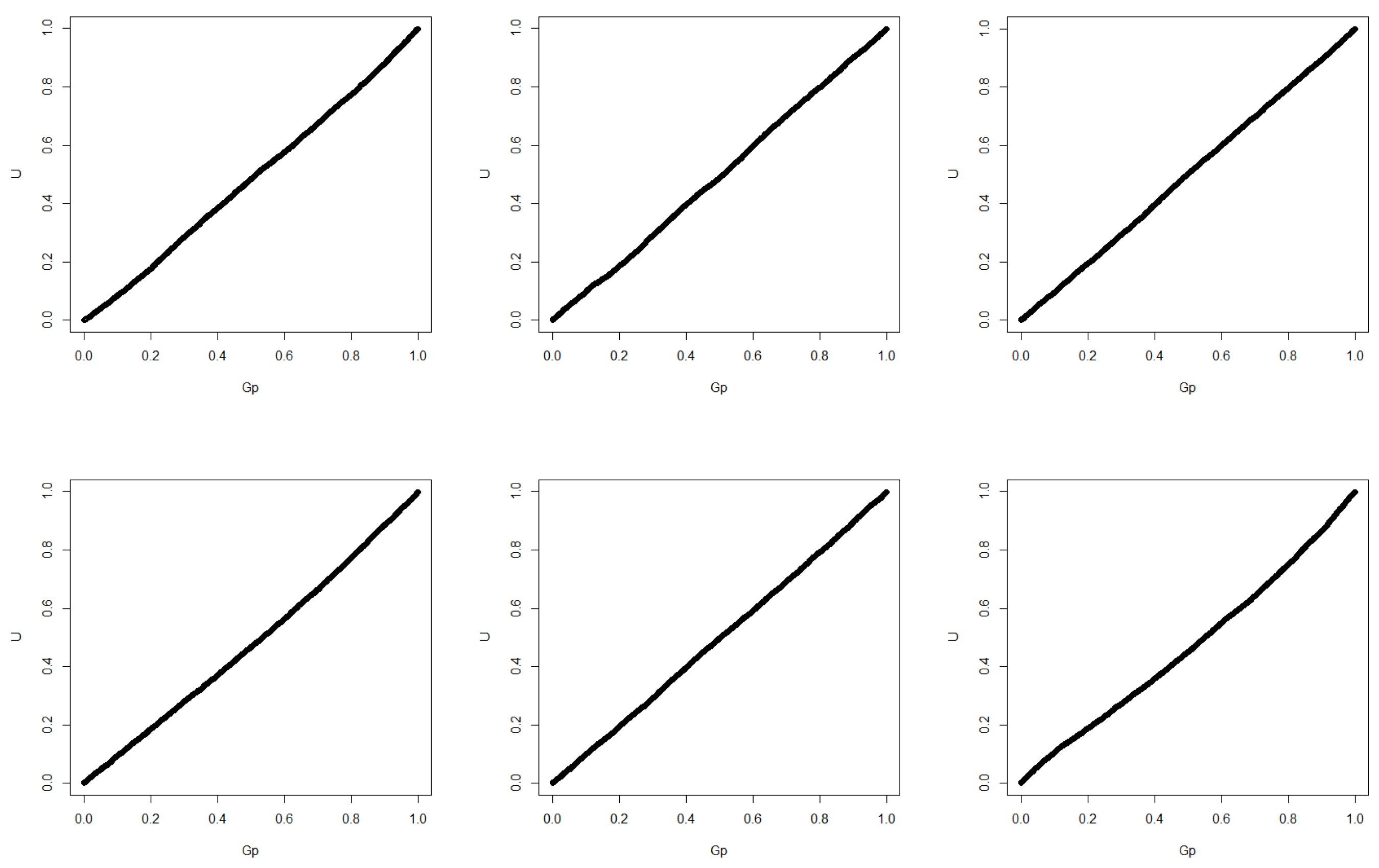

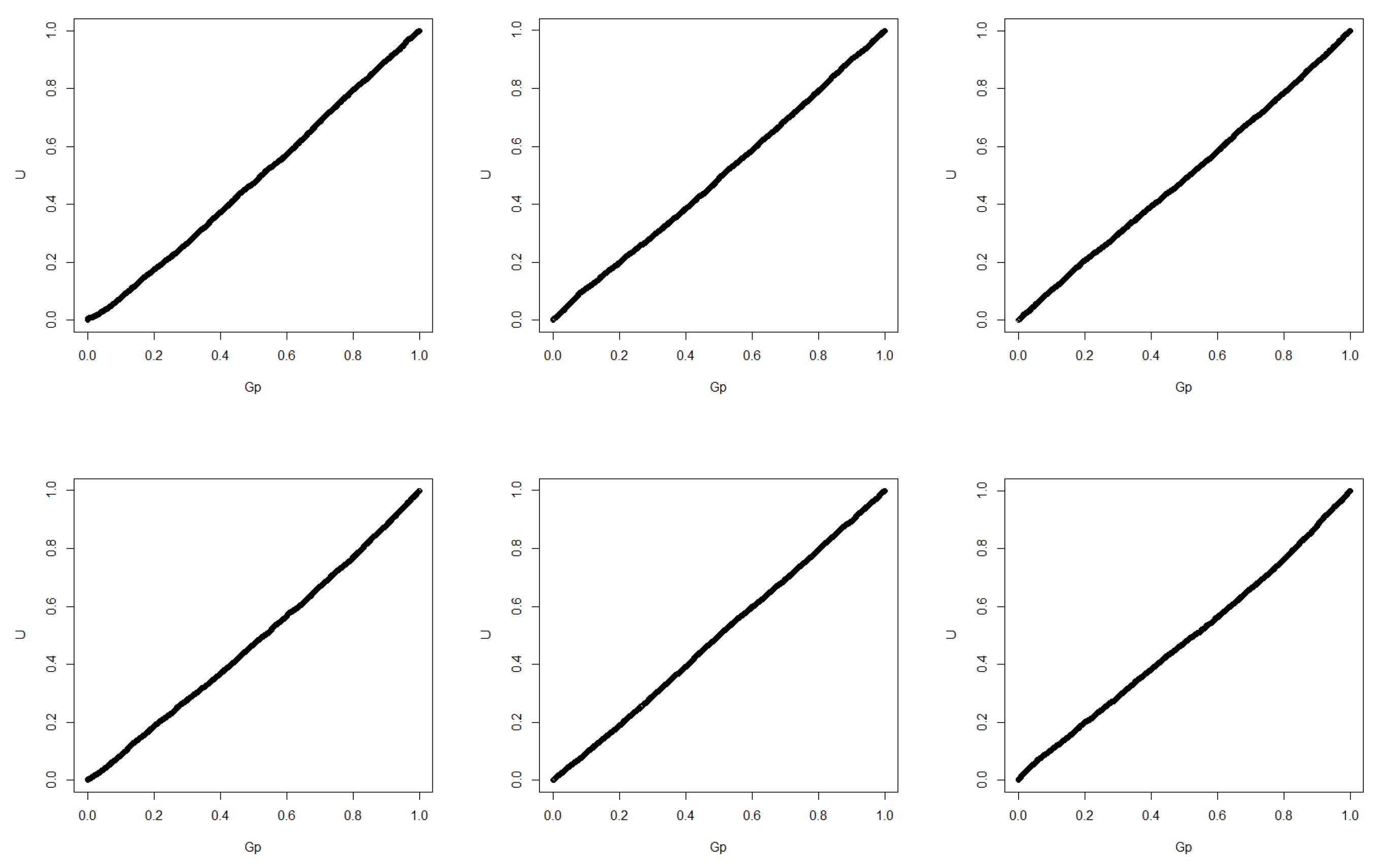

Calculate the Euclidean distance between the order statistics of the observation and the simulation . We accept the parameters if the distance is below a given threshold, . Otherwise, we reject the parameters.

The procedure is repeated until we accept a certain number of parameters.
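The steps above can be sketched as a rejection-ABC loop in Python. The sketch assumes the standard normal-theory pivots for the fiducial draws and takes the estimate of the mixing proportion as an input; the names `abc_reject`, `lam_hat` and `eps`, and the `max_tries` safeguard, are illustrative additions.

```python
import math
import random

def mean_var(xs):
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

def fiducial_draw(xbar, s2, n, rng):
    """Draw (mu, sigma) from the fiducial distribution of a normal sample."""
    u = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(0.5 * (n - 1), 2.0)  # chi-square with n - 1 degrees of freedom
    sigma = math.sqrt((n - 1) * s2 / v)
    return xbar - u * sigma / math.sqrt(n), sigma

def abc_reject(x1, x2, x3, lam_hat, eps, n_accept, rng, max_tries=100000):
    """Keep parameter draws whose simulated mixture sample is within eps of x3."""
    (m1, v1), (m2, v2) = mean_var(x1), mean_var(x2)
    observed = sorted(x3)
    accepted, tries = [], 0
    while len(accepted) < n_accept and tries < max_tries:
        tries += 1
        mu1, s1 = fiducial_draw(m1, v1, len(x1), rng)
        mu2, s2 = fiducial_draw(m2, v2, len(x2), rng)
        # Simulate a mixture sample of the same size as the third sample.
        simulated = sorted(
            rng.gauss(mu1, s1) if rng.random() < lam_hat else rng.gauss(mu2, s2)
            for _ in observed
        )
        # Euclidean distance between the two sets of order statistics.
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(observed, simulated)))
        if dist < eps:
            accepted.append((mu1, s1, mu2, s2))
    return accepted

# Hypothetical small data set; the threshold is chosen only for illustration.
rng = random.Random(0)
x1 = [rng.gauss(0.0, 1.0) for _ in range(20)]
x2 = [rng.gauss(3.0, 1.0) for _ in range(20)]
x3 = [rng.gauss(0.0, 1.0) if rng.random() < 0.4 else rng.gauss(3.0, 1.0) for _ in range(40)]
print(len(abc_reject(x1, x2, x3, lam_hat=0.4, eps=5.0, n_accept=10, rng=rng, max_tries=5000)))
```

Comparing order statistics rather than raw values makes the distance invariant to the order in which the observations are listed.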

Note that the samples obtained from this algorithm only approximate the posterior distribution (5). We actually obtain samples from

where

is the indicator function.

controls the proximity of (12) to (5) and can be adjusted to balance the accuracy and computational cost.

2.3.3. General Case

When

and

are not normal, to sample from the posterior (8), it is natural to use the Markov chain Monte Carlo (MCMC) method. The Metropolis–Hastings (MH) sampling method and the Gibbs sampling method are commonly used. An early version of the MH algorithm was given by Metropolis et al. [24] in a statistical physics context, with subsequent generalization by Hastings [25], who focused on statistical problems. Further computational problems and solutions are discussed in Owen and Glynn [26].

The initial values of the parameters can be determined by the EM algorithm mentioned above. For the proposal distribution, we choose

where

and

denote the gamma distribution and normal distribution, respectively.

and

,

denotes the parameters accepted in the

th loop. After we obtain

, we can further obtain

via the following two-step algorithm.

Sample

respectively from the proposal distribution (13). Compute

Accept

with probability

and let

. Otherwise, we reject the parameters and return to the first step.
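A generic Metropolis–Hastings sampler for the logistic case can be sketched as follows. Two substitutions are made and should be read as assumptions: the gamma and normal proposal (13) is replaced by a symmetric Gaussian random walk on (μ₁, log σ₁, μ₂, log σ₂), and the prior is taken to be proportional to 1/(σ₁σ₂). Under that prior the Jacobian of the log transform cancels the prior, so the log target in the transformed coordinates is just the log-likelihood of the three samples. A rejected proposal repeats the current state, as in the textbook algorithm.

```python
import math
import random

def logistic_logpdf(x, mu, sigma):
    z = abs((x - mu) / sigma)  # the logistic density is symmetric about mu
    return -z - 2.0 * math.log1p(math.exp(-z)) - math.log(sigma)

def log_target(theta, x1, x2, x3, lam):
    """Log-likelihood of the three samples; theta = (mu1, log sigma1, mu2, log sigma2)."""
    mu1, t1, mu2, t2 = theta
    s1, s2 = math.exp(t1), math.exp(t2)
    total = sum(logistic_logpdf(x, mu1, s1) for x in x1)
    total += sum(logistic_logpdf(x, mu2, s2) for x in x2)
    for z in x3:
        a = math.log(lam) + logistic_logpdf(z, mu1, s1)
        b = math.log(1.0 - lam) + logistic_logpdf(z, mu2, s2)
        m = max(a, b)  # log-sum-exp for numerical stability
        total += m + math.log(math.exp(a - m) + math.exp(b - m))
    return total

def metropolis_hastings(x1, x2, x3, lam, start, n_iter, step, rng):
    """Random-walk MH; start = (mu1, sigma1, mu2, sigma2), lam fixed in (0, 1)."""
    theta = [start[0], math.log(start[1]), start[2], math.log(start[3])]
    current = log_target(theta, x1, x2, x3, lam)
    chain, n_accepted = [], 0
    for _ in range(n_iter):
        proposal = [t + step * rng.gauss(0.0, 1.0) for t in theta]
        candidate = log_target(proposal, x1, x2, x3, lam)
        # Symmetric proposal, so the acceptance ratio is the ratio of targets.
        if math.log(1.0 - rng.random()) < candidate - current:
            theta, current = proposal, candidate
            n_accepted += 1
        chain.append((theta[0], math.exp(theta[1]), theta[2], math.exp(theta[3])))
    return chain, n_accepted / n_iter

def rlogistic(mu, sigma, rng):
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
    return mu + sigma * math.log(u / (1.0 - u))

# Hypothetical data with the mixing proportion treated as known.
rng = random.Random(1)
x1 = [rlogistic(0.0, 1.0, rng) for _ in range(50)]
x2 = [rlogistic(5.0, 1.0, rng) for _ in range(50)]
x3 = [rlogistic(0.0, 1.0, rng) if rng.random() < 0.4 else rlogistic(5.0, 1.0, rng) for _ in range(100)]
start = (sum(x1) / len(x1), 1.0, sum(x2) / len(x2), 1.0)
chain, rate = metropolis_hastings(x1, x2, x3, 0.4, start, 1500, 0.1, rng)
print("acceptance rate:", rate)
```

In practice the first part of the chain would be discarded as burn-in, and the step size tuned so that the acceptance rate is neither close to zero nor close to one.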

The algorithm must be run for sufficiently many iterations before samples from the posterior distribution are obtained. This costs much more time than the ABC algorithm for the normal case. Moreover, in our simulation we found that the MH algorithm may be too conservative. A better alternative could be the two-block Gibbs sampling proposed by Ren et al. [19]. In this sampling method,

is first estimated using the EM algorithm, then for each loop, the parameters are updated by the conditional generalized pivotal quantities.

3. Real Data Example

In this section, we apply the proposed posterior p-value to the real halibut dataset studied by Hosmer [1], which was provided by the International Halibut Commission in Seattle, Washington. This dataset consists of the lengths of 208 halibut caught on one of their research cruises, of which 134 are female and the remaining 74 are male. The data are summarized by Karunamuni and Wu [27]. We follow their method and randomly select 14 males and 26 females from the samples and regard them as the first and second samples of the mixture model (1). The male proportion among the remaining fish, 60/168 ≈ 0.3571, is approximately equal to the original male proportion, 74/208 ≈ 0.3558. One hundred replications are generated with the same procedure. Hosmer [1] pointed out that the components of the dataset can be fitted by normal distributions. A question of interest is whether sex affects the length of the halibut.

To test the homogeneity, for each replication we first use the EM algorithm to estimate

, then we use the rejection-ABC method to generate 8000 samples. We choose a moderate threshold,

, to balance the accuracy and the computational cost. For the 100 replications, the mean estimate of the male proportion,

, is 0.3381, with a mean squared error of 0.0045, which illustrates the accuracy of our EM algorithm. The estimates of the location and scale parameters of the male halibut are

and

, while those of the female ones are

and

. This is close to the estimates of Ren et al. [19]. As for the hypothesis test

, we calculate the posterior p-value for each of the 100 replications. Given the significance level,

, all the p-values are less than

. Thus, the null hypothesis is rejected, which indicates that there exists an association between the sex and length of the halibut.