Multi-Corpus Learning for Audio–Visual Emotions and Sentiment Recognition

Abstract

1. Introduction

- We propose a novel audio affective feature extractor based on a pretrained transformer model and two Gated Recurrent Unit (GRU) layers. It is trained to recognize affective states at the segment level using the Multi-Corpus Learning (MCL) approach.

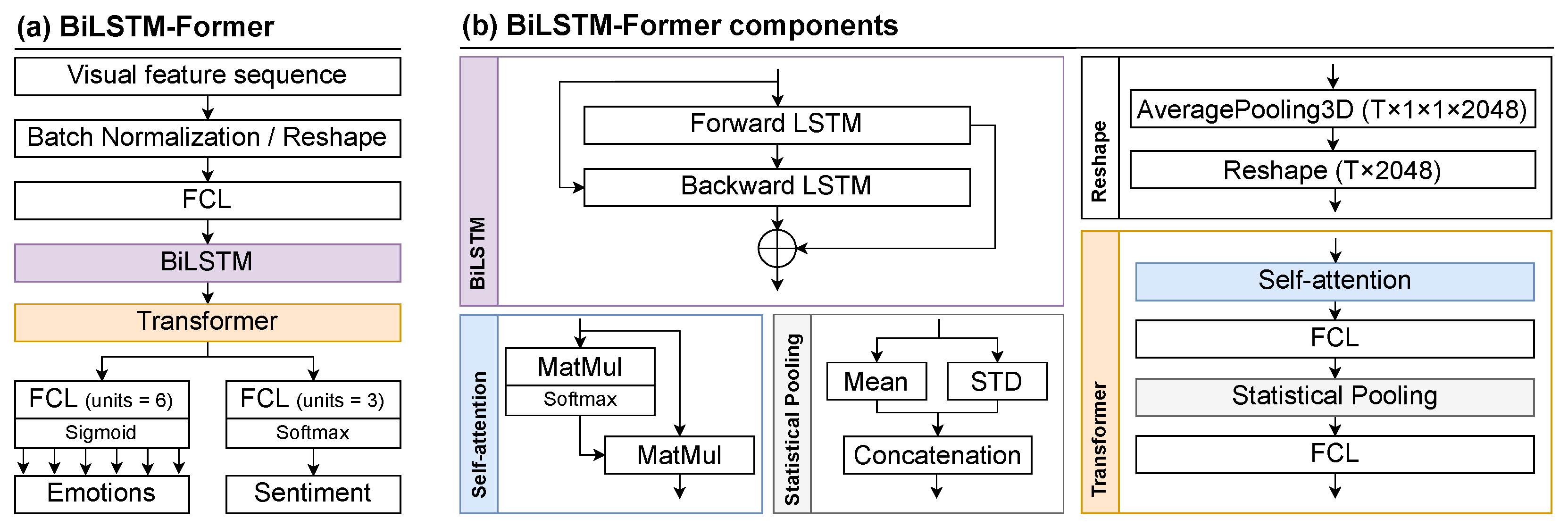

- We propose a novel visual affective feature extractor based on a pretrained 3D Convolutional Neural Network (CNN) model and two Long Short-Term Memory (LSTM) layers with a self-attention mechanism. It is trained to recognize affective states at the segment level using the MCL approach.

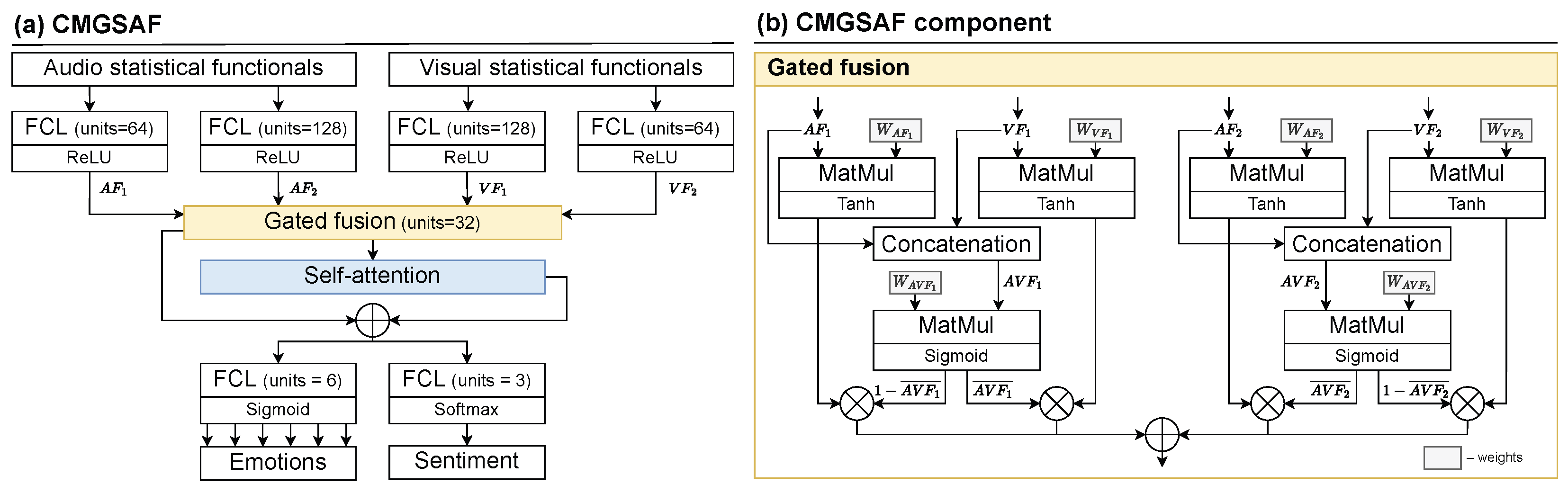

- We propose a novel Cross-Modal Gated Self-Attention Fusion (CMGSAF) strategy at the feature level, trained to recognize affective states at the instance level using the Single-Corpus Learning (SCL) approach. The proposed fusion strategy outperforms State-of-the-Art (SOTA) approaches in emotion recognition.

- We conduct exhaustive experiments comparing SCL and MCL with and without a speech style recognition task, and evaluate the effects of the speech style recognition task on affective states recognition performance.

2. Related Work

2.1. State-of-the-Art Approaches for Multi-Corpus Learning

2.2. State-of-the-Art Approaches for Affective States Recognition

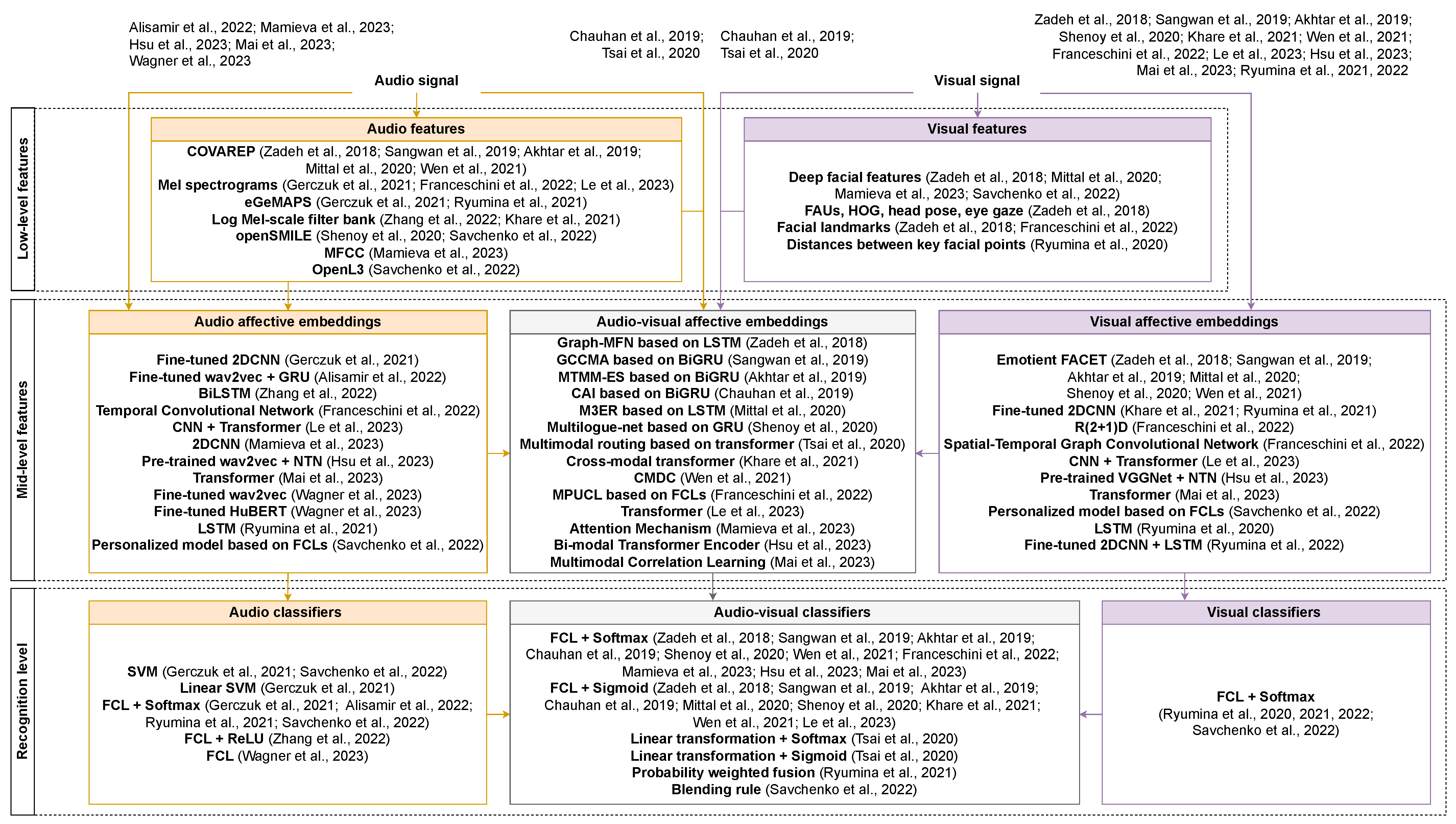

- The features used are classified as low-level features (hand-crafted, spectral, and non-specific deep features) and mid-level features (affective deep features). Deep features are predominantly extracted using CNNs and temporal models.

- To analyze visual signals, most of the proposed approaches use raw images (mostly face regions) and mid-level features extracted from them. Exceptions include approaches [18,32,40] using low-level (hand-crafted) features and their deep representations, with additional extraction of mid-level features from raw images.

- The SOTA approaches considered above use (1) single-label [21,22,34,35,38,39,40,41] or multi-label [18,24,25,26,27,28,29,30,31,32,33] emotion recognition; (2) regression [37] or categorical [18,24,25,26,28,29,31,36] sentiment recognition; and (3) categorical [20] or regression [37] valence, activation, and dominance recognition. Predictions are obtained with a Fully Connected Layer (FCL) followed by one of several activation functions (Softmax, Sigmoid, ReLU).
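
The difference between single-label and multi-label prediction heads mentioned above can be illustrated with a minimal sketch. It assumes a hypothetical vector of FCL outputs (logits); the function names and the 0.5 decision threshold are illustrative choices, not taken from any of the cited approaches.

```python
import math

def softmax(logits):
    """Single-label head: probabilities sum to 1 across classes."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(logits):
    """Multi-label head: each class gets an independent probability."""
    return [1.0 / (1.0 + math.exp(-x)) for x in logits]

def single_label_decision(logits):
    """Index of the single most probable class."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)

def multi_label_decision(logits, threshold=0.5):
    """One binary decision per class (threshold is an assumed value)."""
    return [int(p >= threshold) for p in sigmoid(logits)]

logits = [2.0, -1.0, 0.5]  # hypothetical FCL outputs for three classes
```

With Softmax exactly one class is selected per sample, whereas with Sigmoid several classes can be active at once, which suits multi-label emotion annotation.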

3. Research Corpora

3.1. RAMAS Corpus

3.2. CMU-MOSEI Corpus

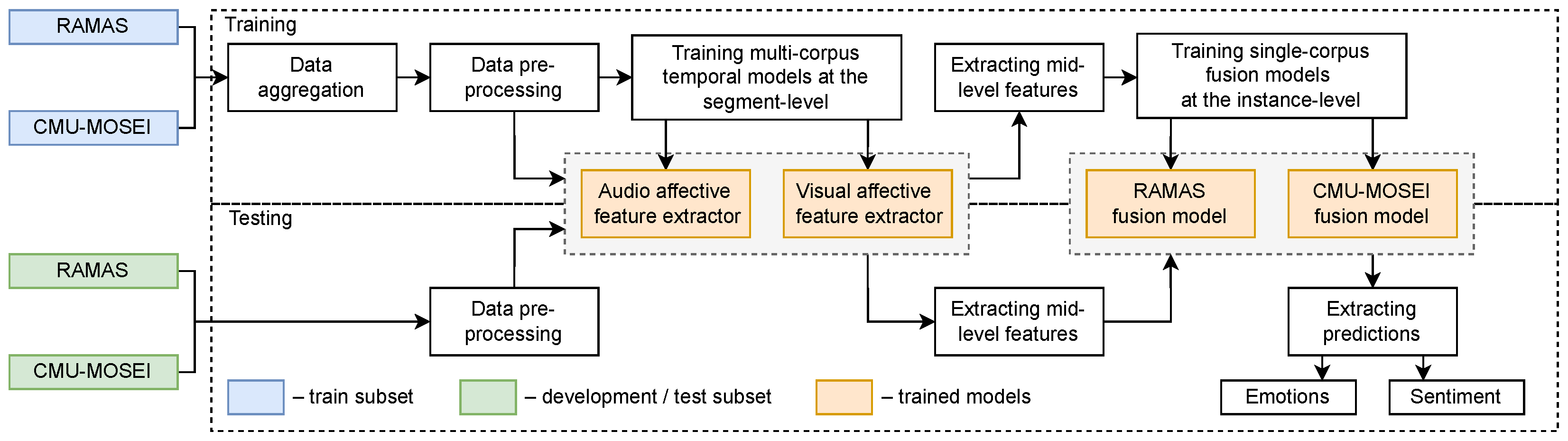

4. Methodology

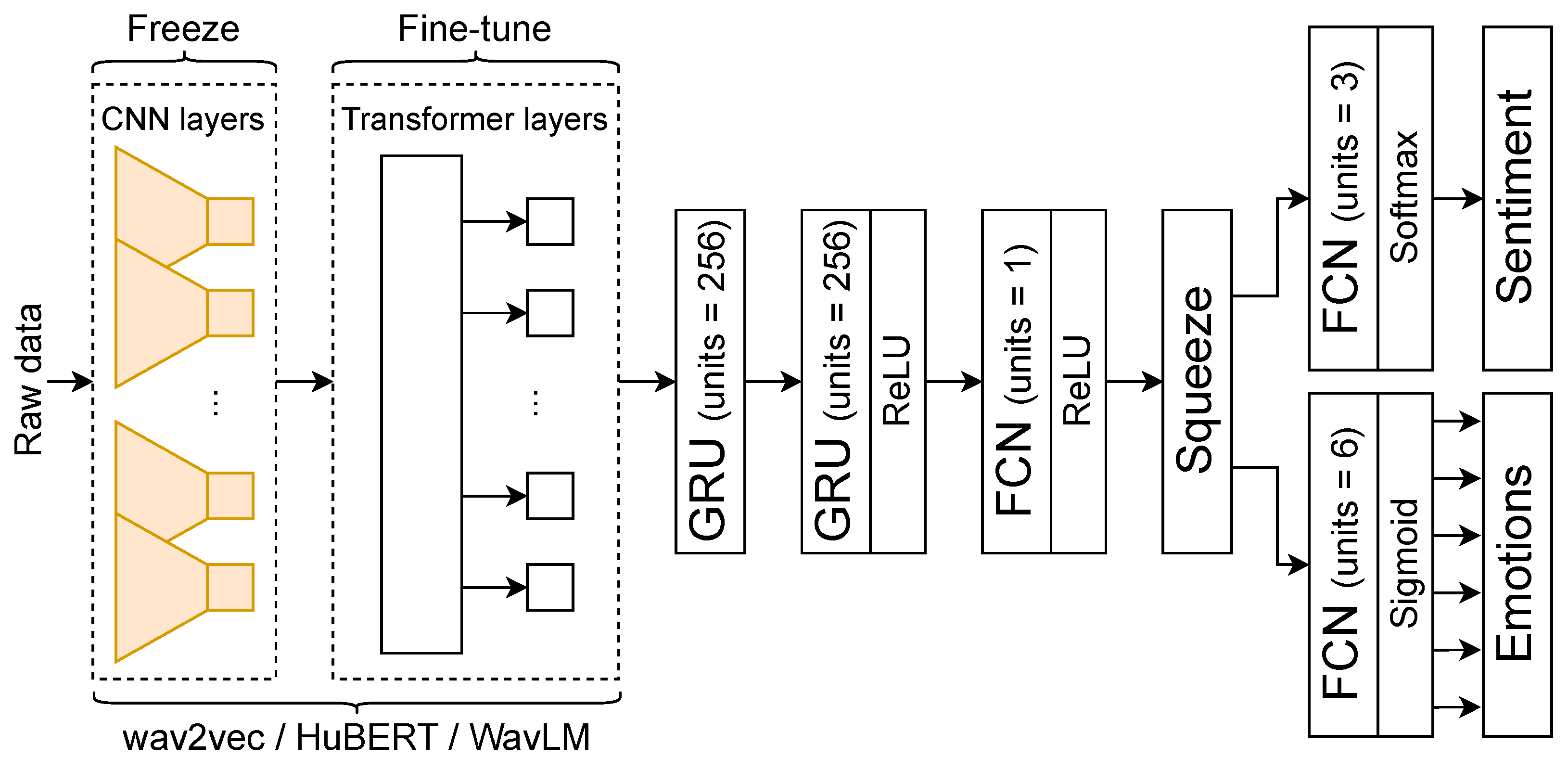

4.1. Audio-Based Affective States Recognition

- The wav2vec (RW2V), HuBERT (RHuBERT), and WavLM (RWavLM) models published at https://github.com/Aniemore/Aniemore (accessed on 13 August 2023) were pre-trained on categorical emotions from the Russian Emotional Speech Dialogues (RESD) corpus. This corpus contains 4 h of studio-quality audio dialogues in Russian, which makes these models a useful starting point for training on the RAMAS corpus.

4.2. Visual-Based Affective States Recognition

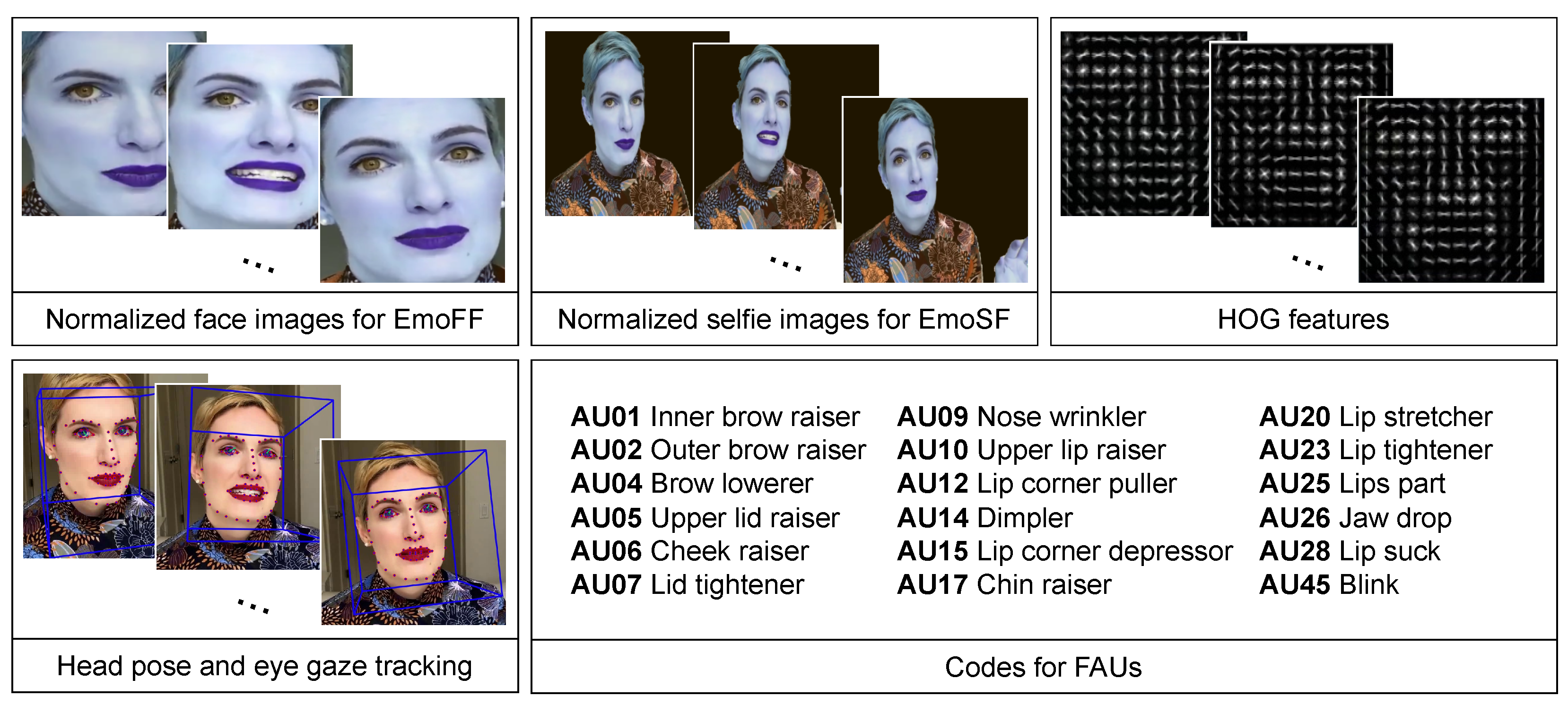

- Emotional Facial Features (EmoFF). Extracted using our EmoAffectNet model based on 2D ResNet-50 for frame-level static emotion recognition [41]. We converted 2D ResNet-50 to 3D ResNet-50 and froze the weights of four blocks (one convolutional and three residual blocks). The output size of the fifth block is (where T is the number of images in the sequence). We trained this block with a temporal model. Sequences of face region images were used as input data for 3D ResNet-50. The face regions were detected using the popular FaceMesh model [49] from the MediaPipe [50] open source library. We resized the face region images to 224 × 224 and applied channel normalization.

- Emotional Selfies Features (EmoSF). Extracted in the same way as EmoFF. By selfies, we mean the upper part of a human’s body down to the waist. Selfie regions were detected using the Selfie-Segmentation model from the MediaPipe library. The background in selfie images was filled in with black to prevent the model from focusing on non-human objects. The images were resized and normalized similarly to EmoFF.

- Histograms of Oriented Gradients (HOG). Extracted using the OpenFace [51] open source tool. HOG splits an image into small cells, then computes the directions and magnitudes of the changes in intensity values between the adjacent pixels. Thus, HOG represents information about the texture and edges of an image. The size of this feature set was .

- Head Pose, Eye Gaze and Action Units (PGAU). OpenFace can track head pose (translation and orientation) and eye gaze, and is able to detect Facial Action Units (FAUs). We combined these features into one vector. The size of this feature set was . FAUs are specific movements or muscle contractions of the face related to the expression of emotions. These movements are categorized and labeled according to a standardized coding system called the Facial Action Coding System (FACS).
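
The HOG description above (cells, gradient directions, and magnitudes) can be made concrete with a small sketch. This is not the OpenFace implementation: it is a simplified pure-Python version that assumes a grayscale image given as a list of rows, unsigned orientations, and no block normalization. The cell size and number of bins are illustrative values.

```python
import math

def hog_cells(image, cell=4, bins=9):
    """Return one orientation histogram per cell (no block normalization)."""
    h, w = len(image), len(image[0])
    rows, cols = h // cell, w // cell
    hist = [[[0.0] * bins for _ in range(cols)] for _ in range(rows)]
    for y in range(rows * cell):
        for x in range(cols * cell):
            # Central differences between adjacent pixels, clamped at the border.
            gx = image[y][min(x + 1, w - 1)] - image[y][max(x - 1, 0)]
            gy = image[min(y + 1, h - 1)][x] - image[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            # Unsigned orientation in [0, 180) degrees.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = min(int(angle / (180.0 / bins)), bins - 1)
            # Each pixel votes for its orientation bin, weighted by magnitude.
            hist[y // cell][x // cell][b] += magnitude
    return hist

# Hypothetical 8x8 image with a vertical edge: left half dark, right half bright.
image = [[0.0] * 4 + [1.0] * 4 for _ in range(8)]
cells = hog_cells(image)
```

For this toy image, all gradient energy falls into the first orientation bin of each cell, reflecting the single vertical edge; flattening `cells` gives the feature vector.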

4.3. Audio–Visual-Based Affective States Recognition

- PWF. To fuse the predictions of the two models, which provide probabilities for three sentiment and six binary emotion classes, we generate two weight matrices $W$ of size $2 \times C$ (where $C$ is the number of classes), i.e., of sizes $2 \times 3$ and $2 \times 6$. These weights are generated randomly using the Dirichlet distribution. The final prediction vector for emotion/sentiment recognition is calculated as follows: $$P = W_{1} \odot P^{a} + W_{2} \odot P^{v},$$ where $P^{a}$ and $P^{v}$ are the prediction vectors obtained by the audio and visual modalities, respectively, and $W_{1}$ and $W_{2}$ are the corresponding rows of $W$. This strategy has been successfully applied in [38,55,56]. Before performing PWF, we calculate the average value of the prediction vectors for each segment.

- PCF. In the research corpora, the duration of instances varies from less than one second to more than 100 s. For this reason, the number of prediction/feature vectors is different for each instance. To address this problem, we extract statistical functionals such as the mean and standard deviation from the four prediction matrices. This allows a single prediction vector to be created for each instance, for each modality, and for each recognition task. We then concatenate these audio and video statistical functionals of predictions for emotions and sentiment and use them as input to classifiers based on one FCL.

- FCF. Unlike the previous strategies, this approach is based on the mid-level features obtained by audio and video models at the segment level. Similar to the PCF strategy, we compute statistical functionals, except this time only for two feature matrices. This allows a single feature vector to be created for each instance and each modality. We then use two FCLs for emotion and sentiment recognition.

- CMGSAF. Unlike the previous strategies, this one (see Figure 8) requires large computational resources, as it uses deep machine learning. To fuse modalities, we transform the statistical functionals of both feature vectors into four feature sets (two for each modality) using FCLs. The feature sets are used as input data for two successive attention layers: (1) gated fusion [57], which is responsible for fusing features of different modalities, and (2) self-attention (similar to the BiLSTM-Former model), which enhances the most informative features. CMGSAF takes four feature sets F and a number of units U as inputs, which together determine the size of the weight matrix.
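
The two simpler ideas in the list above, Dirichlet-weighted prediction fusion (PWF) and statistical functionals over a variable number of segments (PCF/FCF), can be sketched as follows. This is a hedged illustration, not the authors' code: it assumes that the weights of the two modalities sum to one for each class, uses a symmetric Dirichlet distribution (sampled via normalized gamma variates), and all function names and input values are hypothetical.

```python
import random
import statistics

def dirichlet_weights(n_models, n_classes, rng, alpha=1.0):
    """Sample an n_models x n_classes matrix whose columns each sum to 1."""
    columns = []
    for _ in range(n_classes):
        # Normalized gamma variates follow a symmetric Dirichlet distribution.
        gammas = [rng.gammavariate(alpha, 1.0) for _ in range(n_models)]
        total = sum(gammas)
        columns.append([g / total for g in gammas])
    # Transpose so that row m holds the per-class weights of model m.
    return [[col[m] for col in columns] for m in range(n_models)]

def pwf(p_audio, p_video, weights):
    """Class-wise weighted sum of audio and video prediction vectors."""
    return [weights[0][c] * p_audio[c] + weights[1][c] * p_video[c]
            for c in range(len(p_audio))]

def functionals(segment_vectors):
    """Mean and standard deviation of every column over all segments."""
    columns = list(zip(*segment_vectors))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]
    return means + stds

rng = random.Random(0)
weights = dirichlet_weights(2, 3, rng)            # 2 modalities, 3 sentiment classes
fused = pwf([0.7, 0.2, 0.1], [0.5, 0.3, 0.2], weights)

# Instances with different numbers of segments still yield fixed-size vectors.
audio_segments = [[0.6, 0.3, 0.1], [0.8, 0.1, 0.1]]
video_segments = [[0.5, 0.3, 0.2], [0.4, 0.4, 0.2], [0.6, 0.2, 0.2]]
instance_vector = functionals(audio_segments) + functionals(video_segments)
```

The concatenated `instance_vector` has a fixed length regardless of instance duration, which is what allows it to be fed to an FCL-based classifier.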

5. Experimental Results

5.1. Performance Measures

5.2. Audio-Based Affective States Recognition

5.3. Visual-Based Affective States Recognition

5.4. Audio–Visual-Based Affective States Recognition

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SOTA | State-of-the-Art |
| MTL | Multi-Task Learning |
| SCL | Single-Corpus Learning |
| MCL | Multi-Corpus Learning |
| DFG | Dynamic Fusion Graph |
| Graph-MFN | Graph Memory Fusion Network |
| MTMM-ES | Multi-Task Multimodal Emotion and Sentiment |
| CAM | Context-aware Attention Module |
| CMDC | Cross-Modal Dynamic Convolution |
| MPUCL | Modality-Pairwise Unsupervised Contrastive Loss |
| CNN | Convolutional Neural Network |
| GRU | Gated Recurrent Units |
| BiLSTM | Bidirectional LSTM |
| BiGRU | Bidirectional GRU |
| FCL | Fully Connected Layer |
| RAMAS | Russian Acted Multimodal Affective Set |
| CMU-MOSEI | CMU Multimodal Opinion Sentiment and Emotion Intensity |
| EmoFF | Emotional Facial Features |
| EmoSF | Emotional Selfies Features |
| HOG | Histograms of Oriented Gradients |
| LSTM | Long Short-Term Memory |
| MELD | Multimodal EmotionLines Dataset |
| GCCMA | Gated Contextual Cross-Modal Attention |
| PGAU | Head Pose, Eye Gaze, and Action Units |
| FAUs | Facial Action Units |
| FACS | Facial Action Coding System |
| PWF | Probability-Weighted Fusion |
| CMGSAF | Cross-Modal Gated Self-Attention Fusion |
| UAR | Unweighted Average Recall |
| WA | Weighted Accuracy |
| WF1 | Weighted F1-score |
| SER | Speech Emotion Recognition |
| IEMOCAP | Interactive Emotional Dyadic Motion Capture |
| SGD | Stochastic Gradient Descent |
| RESD | Russian Emotional Speech Dialogues |
| VAD | Voice Activity Detector |
| PCF | Probability Concatenation Fusion |
| FCF | Feature Concatenation Fusion |
| MCM | Multitask learning and Contrastive learning for Multimodal sentiment analysis |
| NTN | Neural Tensor Network |

References

1. Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 2000.
2. Bojanić, M.; Delić, V.; Karpov, A. Call redistribution for a call center based on speech emotion recognition. Appl. Sci. 2020, 10, 4653.
3. van der Haar, D. Student Emotion Recognition Using Computer Vision as an Assistive Technology for Education. Inf. Sci. Appl. 2020, 621, 183–192.
4. Tripathi, U.; Chamola, V.; Jolfaei, A.; Chintanpalli, A. Advancing remote healthcare using humanoid and affective systems. IEEE Sens. J. 2021, 22, 17606–17614.
5. Blom, P.M.; Bakkes, S.; Tan, C.; Whiteson, S.; Roijers, D.; Valenti, R.; Gevers, T. Towards personalised gaming via facial expression recognition. In Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, Raleigh, NC, USA, 3–7 October 2014; Volume 10, pp. 30–36.
6. Marín-Morales, J.; Higuera-Trujillo, J.L.; Greco, A.; Guixeres, J.; Llinares, C.; Scilingo, E.P.; Alcañiz, M.; Valenza, G. Affective computing in virtual reality: Emotion recognition from brain and heartbeat dynamics using wearable sensors. Sci. Rep. 2018, 8, 13657.
7. Beedie, C.; Terry, P.; Lane, A. Distinctions between emotion and mood. Cogn. Emot. 2005, 19, 847–878.
8. Quoidbach, J.; Mikolajczak, M.; Gross, J.J. Positive interventions: An emotion regulation perspective. Psychol. Bull. 2015, 141, 655.
9. Verkholyak, O.; Dvoynikova, A.; Karpov, A. A Bimodal Approach for Speech Emotion Recognition using Audio and Text. J. Internet Serv. Inf. Secur. 2021, 11, 80–96.
10. Gebhard, P. ALMA: A layered model of affect. In Proceedings of the 4th International Joint Conference on Autonomous Agents and Multiagent Systems, Utrecht, The Netherlands, 25–29 July 2005; pp. 29–36.
11. Lim, N. Cultural differences in emotion: Differences in emotional arousal level between the East and the West. Integr. Med. Res. 2016, 5, 105–109.
12. Perlovsky, L. Language and emotions: Emotional Sapir–Whorf hypothesis. Neural Netw. 2009, 22, 518–526.
13. Mankus, A.M.; Boden, M.T.; Thompson, R.J. Sources of variation in emotional awareness: Age, gender, and socioeconomic status. Personal Individ. Differ. 2016, 89, 28–33.
14. Samulowitz, A.; Gremyr, I.; Eriksson, E.; Hensing, G. “Brave men” and “emotional women”: A theory-guided literature review on gender bias in health care and gendered norms towards patients with chronic pain. Pain Res. Manag. 2018, 2018, 6358624.
15. Fang, X.; van Kleef, G.A.; Kawakami, K.; Sauter, D.A. Cultural differences in perceiving transitions in emotional facial expressions: Easterners show greater contrast effects than westerners. J. Exp. Soc. Psychol. 2021, 95, 104143.
16. Pell, M.D.; Monetta, L.; Paulmann, S.; Kotz, S.A. Recognizing emotions in a foreign language. J. Nonverbal Behav. 2009, 33, 107–120.
17. Poria, S.; Hazarika, D.; Majumder, N.; Naik, G.; Cambria, E.; Mihalcea, R. MELD: A Multimodal Multi-Party Dataset for Emotion Recognition in Conversations. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 527–536.
18. Zadeh, A.B.; Liang, P.P.; Poria, S.; Cambria, E.; Morency, L.P. Multimodal language analysis in the wild: Cmu-mosei dataset and interpretable dynamic fusion graph. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 2236–2246.
19. Kollias, D.; Zafeiriou, S. Aff-wild2: Extending the aff-wild database for affect recognition. arXiv 2018, arXiv:1811.07770.
20. Gerczuk, M.; Amiriparian, S.; Ottl, S.; Schuller, B.W. Emonet: A transfer learning framework for multi-corpus speech emotion recognition. IEEE Trans. Affect. Comput. 2021, 33, 1472–1487.
21. Alisamir, S.; Ringeval, F.; Portet, F. Multi-Corpus Affect Recognition with Emotion Embeddings and Self-Supervised Representations of Speech. In Proceedings of the 10th International Conference on Affective Computing and Intelligent Interaction (ACII), Nara, Japan, 18–21 October 2022; pp. 1–8.
22. Zhang, H.; Mimura, M.; Kawahara, T.; Ishizuka, K. Selective Multi-Task Learning For Speech Emotion Recognition Using Corpora Of Different Styles. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 7707–7711.
23. Busso, C.; Bulut, M.; Lee, C.C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335–359.
24. Sangwan, S.; Chauhan, D.S.; Akhtar, M.S.; Ekbal, A.; Bhattacharyya, P. Multi-task gated contextual cross-modal attention framework for sentiment and emotion analysis. In Proceedings of the ICONIP, Sydney, NSW, Australia, 12–15 December 2019; pp. 662–669.
25. Akhtar, M.S.; Chauhan, D.; Ghosal, D.; Poria, S.; Ekbal, A.; Bhattacharyya, P. Multi-task Learning for Multi-modal Emotion Recognition and Sentiment Analysis. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 370–379.
26. Chauhan, D.S.; Akhtar, M.S.; Ekbal, A.; Bhattacharyya, P. Context-aware interactive attention for multi-modal sentiment and emotion analysis. In Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 5647–5657.
27. Mittal, T.; Bhattacharya, U.; Chandra, R.; Bera, A.; Manocha, D. M3er: Multiplicative multimodal emotion recognition using facial, textual, and speech cues. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 1359–1367.
28. Shenoy, A.; Sardana, A.; Graphics, N. Multilogue-Net: A Context Aware RNN for Multi-modal Emotion Detection and Sentiment Analysis in Conversation. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Seattle, WA, USA, 5–10 July 2020; pp. 19–28.
29. Tsai, Y.H.H.; Ma, M.Q.; Yang, M.; Salakhutdinov, R.; Morency, L.P. Multimodal routing: Improving local and global interpretability of multimodal language analysis. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Online, 16–20 November 2020; pp. 1823–1833.
30. Khare, A.; Parthasarathy, S.; Sundaram, S. Self-supervised learning with cross-modal transformers for emotion recognition. In Proceedings of the IEEE Spoken Language Technology Workshop (SLT), Shenzhen, China, 19–22 January 2021; pp. 381–388.
31. Wen, H.; You, S.; Fu, Y. Cross-modal dynamic convolution for multi-modal emotion recognition. J. Visual Commun. Image Represent. 2021, 78, 103178.
32. Franceschini, R.; Fini, E.; Beyan, C.; Conti, A.; Arrigoni, F.; Ricci, E. Multimodal Emotion Recognition with Modality-Pairwise Unsupervised Contrastive Loss. In Proceedings of the International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 2589–2596.
33. Le, H.D.; Lee, G.S.; Kim, S.H.; Kim, S.; Yang, H.J. Multi-Label Multimodal Emotion Recognition With Transformer-Based Fusion and Emotion-Level Representation Learning. IEEE Access 2023, 11, 14742–14751.
34. Mamieva, D.; Abdusalomov, A.B.; Kutlimuratov, A.; Muminov, B.; Whangbo, T.K. Multimodal Emotion Detection via Attention-Based Fusion of Extracted Facial and Speech Features. Sensors 2023, 23, 5475.
35. Hsu, J.H.; Wu, C.H. Applying Segment-Level Attention on Bi-modal Transformer Encoder for Audio-Visual Emotion Recognition. IEEE Trans. Affect. Comput. 2023, 1–13.
36. Mai, S.; Sun, Y.; Zeng, Y.; Hu, H. Excavating multimodal correlation for representation learning. Inf. Fusion 2023, 91, 542–555.
37. Wagner, J.; Triantafyllopoulos, A.; Wierstorf, H.; Schmitt, M.; Burkhardt, F.; Eyben, F.; Schuller, B.W. Dawn of the transformer era in speech emotion recognition: Closing the valence gap. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10745–10759.
38. Ryumina, E.; Verkholyak, O.; Karpov, A. Annotation confidence vs. training sample size: Trade-off solution for partially-continuous categorical emotion recognition. In Proceedings of the Interspeech, Brno, Czechia, 30 August–3 September 2021; pp. 3690–3694.
39. Savchenko, A.; Savchenko, L. Audio-Visual Continuous Recognition of Emotional State in a Multi-User System Based on Personalized Representation of Facial Expressions and Voice. Pattern Recognit. Image Anal. 2022, 32, 665–671.
40. Ryumina, E.; Karpov, A. Facial expression recognition using distance importance scores between facial landmarks. In Proceedings of the CEUR Workshop Proceedings, St. Petersburg, Russia, 22–25 September 2020; Volume 2744, pp. 1–10.
41. Ryumina, E.; Dresvyanskiy, D.; Karpov, A. In search of a robust facial expressions recognition model: A large-scale visual cross-corpus study. Neurocomputing 2022, 514, 435–450.
42. Dvoynikova, A.; Markitantov, M.; Ryumina, E.; Uzdiaev, M.; Velichko, A.; Ryumin, D.; Lyakso, E.; Karpov, A. Analysis of infoware and software for human affective states recognition. Inform. Autom. 2022, 21, 1097–1144.
43. Perepelkina, O.; Kazimirova, E.; Konstantinova, M. RAMAS: Russian multimodal corpus of dyadic interaction for affective computing. In Proceedings of the International Conference on Speech and Computer, Leipzig, Germany, 18–22 September 2018; pp. 501–510.
44. Ryumina, E.; Karpov, A. Comparative analysis of methods for imbalance elimination of emotion classes in video data of facial expressions. Sci. Tech. J. Inf. Technol. Mech. Opt. 2020, 20, 683–691.
45. Dvoynikova, A.; Karpov, A. Bimodal sentiment and emotion classification with multi-head attention fusion of acoustic and linguistic information. In Proceedings of the International Conference “Dialogue 2023”, Online, 14–16 June 2023; pp. 51–61.
46. Lotfian, R.; Busso, C. Building naturalistic emotionally balanced speech corpus by retrieving emotional speech from existing podcast recordings. IEEE Trans. Affect. Comput. 2017, 10, 471–483.
47. Andayani, F.; Theng, L.B.; Tsun, M.T.; Chua, C. Hybrid LSTM-transformer model for emotion recognition from speech audio files. IEEE Access 2022, 10, 36018–36027.
48. Huang, J.; Tao, J.; Liu, B.; Lian, Z.; Niu, M. Multimodal transformer fusion for continuous emotion recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 3507–3511.
49. Ivanko, D.; Ryumin, D.; Karpov, A. A Review of Recent Advances on Deep Learning Methods for Audio-Visual Speech Recognition. Mathematics 2023, 11, 2665.
50. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. Mediapipe: A framework for building perception pipelines. arXiv 2019, arXiv:1906.08172.
51. Baltrusaitis, T.; Zadeh, A.; Lim, Y.C.; Morency, L.P. Openface 2.0: Facial behavior analysis toolkit. In Proceedings of the 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG), Xi’an, China, 15–19 May 2018; pp. 59–66.
52. Febrian, R.; Halim, B.M.; Christina, M.; Ramdhan, D.; Chowanda, A. Facial expression recognition using bidirectional LSTM-CNN. Procedia Comput. Sci. 2023, 216, 39–47.
53. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 1–11.
54. Clark, K.; Luong, M.T.; Manning, C.D.; Le, Q.V. Semi-Supervised Sequence Modeling with Cross-View Training. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 1914–1925.
55. Markitantov, M.; Ryumina, E.; Ryumin, D.; Karpov, A. Biometric Russian Audio-Visual Extended MASKS (BRAVE-MASKS) Corpus: Multimodal Mask Type Recognition Task. In Proceedings of the Interspeech, Incheon, Korea, 18–22 September 2022; pp. 1756–1760.
56. Ryumin, D.; Ivanko, D.; Ryumina, E.V. Audio-Visual Speech and Gesture Recognition by Sensors of Mobile Devices. Sensors 2023, 23, 2284.
57. Liu, P.; Li, K.; Meng, H. Group Gated Fusion on Attention-Based Bidirectional Alignment for Multimodal Emotion Recognition. In Proceedings of the Interspeech, Shanghai, China, 25–29 October 2020; pp. 379–383.
58. Dresvyanskiy, D.; Ryumina, E.; Kaya, H.; Markitantov, M.; Karpov, A.; Minker, W. End-to-End Modeling and Transfer Learning for Audiovisual Emotion Recognition in-the-Wild. Multimodal Technol. Interact. 2022, 6, 11.
59. Hennequin, R.; Khlif, A.; Voituret, F.; Moussallam, M. Spleeter: A fast and efficient music source separation tool with pre-trained models. J. Open Source Softw. 2020, 5, 2154.
60. Wang, Y.; Boumadane, A.; Heba, A. A fine-tuned wav2vec 2.0/hubert benchmark for speech emotion recognition, speaker verification and spoken language understanding. arXiv 2021, arXiv:2111.02735.
61. Loshchilov, I.; Hutter, F. Sgdr: Stochastic gradient descent with warm restarts. arXiv 2016, arXiv:1608.03983.
62. Cao, Q.; Shen, L.; Xie, W.; Parkhi, O.M.; Zisserman, A. Vggface2: A dataset for recognising faces across pose and age. In Proceedings of the 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG), Xi’an, China, 15–19 May 2018; pp. 67–74.

| Model | RAMAS Emotion | | RAMAS Sentiment | | CMU-MOSEI Emotion | | CMU-MOSEI Sentiment | | Average |
|---|---|---|---|---|---|---|---|---|---|
| Single-Corpus trained model | | | | | | | | | |
| RW2V | 47.0/– | 73.3/– | 55.4/– * | 45.8/– | 45.0/– | 73.4/– | 55.1/– | 47.9/– | 55.4/– |
| RHuBERT | 45.9/−1.1 | 73.1/−0.2 | 53.5/−1.9 | 46.8/+1.0 | 45.6/+0.6 | 73.6/+0.2 | 57.3/+2.2 | 49.6/+1.7 | 55.7/+0.3 |
| RWavLM | 46.1/−0.9 | 73.4/+0.1 | 51.9/−3.5 | 42.6/−3.2 | 50.2/+5.2 | 74.8/+1.4 | 59.2/+4.1 | 51.6/+3.7 | 56.2/+0.9 |
| EW2V | 57.2/+10.2 | 72.7/−0.6 | 53.1/−2.3 | 46.8/+1.0 | 55.7/+10.7 | 75.3/+1.9 | 59.2/+4.1 | 51.5/+3.6 | 58.9/+3.5 |
| Multi-Corpus trained model | | | | | | | | | |
| EW2V | 48.3/+1.3 | 78.1/+4.8 | 63.6/+8.2 | 65.7/+19.9 | 50.4/+5.4 | 74.9/+1.5 | 59.9/+4.8 | 56.0/+8.1 | 62.1/+6.7 |
| Multi-Corpus trained model + speech style recognition task | | | | | | | | | |
| EW2V | 43.9/−3.1 | 76.4/+3.1 | 49.6/−5.8 | 33.3/−12.5 | 45.8/+0.8 | 74.1/+0.7 | 59.2/+4.1 | 51.3/+3.4 | 54.2/−1.1 |
| Single-Corpus trained model based on fine-tuned Multi-Corpus model | | | | | | | | | |
| EW2V | 46.8/−0.2 | 78.3/+5.0 | 59.3/+3.9 | 64.4/+18.6 | 61.0/+14.0 | 75.6/+2.3 | 59.4/+4.0 | 53.0/+7.2 | 63.5/+8.1 |
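
As a reading aid for the table above: judging from the values themselves, the second number in each cell appears to be the difference from the first row (RW2V), and the last column appears to be the mean of the eight preceding measures. This interpretation is inferred from the numbers, not stated in the surrounding text; the short check below reproduces the RW2V average and the RHuBERT differences under that assumption.

```python
# First values of each cell, copied from the RW2V and RHuBERT rows.
rw2v = [47.0, 73.3, 55.4, 45.8, 45.0, 73.4, 55.1, 47.9]      # baseline row
rhubert = [45.9, 73.1, 53.5, 46.8, 45.6, 73.6, 57.3, 49.6]

def average(row):
    """Mean over the eight measures of one row."""
    return sum(row) / len(row)

def gains(row, baseline):
    """Per-measure difference from the baseline row, rounded to one decimal."""
    return [round(value - base, 1) for value, base in zip(row, baseline)]

baseline_avg = round(average(rw2v), 1)
rhubert_gains = gains(rhubert, rw2v)
```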

| Number of Units | EmoFF | EmoSF | HOG | PGAU |
|---|---|---|---|---|
| FCL, {128, 256, 512, 1024} | 256 | 512 | 512 | 1024 |
| LSTM layers, {64, 128, 256, 512} | 256 | 256 | 256 | 256 |
| FCLs of Transformer block, {128, 256, 512, 1024} | 256 | 512 | 512 | 256 |

| Feature Set | RAMAS Emotion | | RAMAS Sentiment | | CMU-MOSEI Emotion | | CMU-MOSEI Sentiment | | Average |
|---|---|---|---|---|---|---|---|---|---|
| Single-Corpus trained model | | | | | | | | | |
| PGAU | 69.0/– | 81.5/– | 87.2/– | 89.2/– | 56.6/– | 70.9/– | 50.9/– | 48.3/– | 69.2/– |
| EmoSF | 72.8/+3.8 | 84.9/+3.4 | 89.2/+2.0 | 89.7/+0.5 | 57.7/+1.1 | 72.4/+1.5 | 48.9/−2.0 | 43.7/−4.6 | 69.9/+0.7 |
| HOG | 73.3/+4.3 | 85.7/+4.2 | 93.8/+6.6 | 94.3/+5.1 | 56.9/+0.3 | 72.1/+1.2 | 48.8/−2.1 | 47.2/−1.1 | 71.5/+2.3 |
| EmoFF | 78.6/+9.6 | 89.6/+8.1 | 94.2/+7.0 | 94.4/+5.2 | 62.5/+5.9 | 75.0/+4.1 | 48.9/−2.0 | 47.7/−0.6 | 73.9/+4.7 |
| Multi-Corpus trained model | | | | | | | | | |
| EmoFF | 81.7/+12.7 | 90.6/+9.1 | 91.1/+3.9 | 91.9/+2.7 | 65.4/+8.8 | 77.0/+6.1 | 49.5/−1.4 | 48.3/+0.0 | 74.4/+5.2 |
| Multi-Corpus trained model + speech style recognition task | | | | | | | | | |
| EmoFF | 76.8/+7.8 | 88.0/+6.5 | 93.0/+5.8 | 93.0/+3.8 | 63.8/+7.2 | 76.7/+5.8 | 54.6/+3.7 | 49.5/+1.2 | 74.4/+5.2 |
| Single-Corpus trained model based on fine-tuned Multi-Corpus model | | | | | | | | | |
| EmoFF | 83.6/+14.6 | 89.1/+7.6 | 91.5/+4.3 | 92.4/+3.2 | 65.0/+8.4 | 77.1/+6.2 | 52.4/+1.5 | 49.8/+1.5 | 75.1/+5.9 |

| Strategy | RAMAS Emotion | | RAMAS Sentiment | | CMU-MOSEI Emotion | | CMU-MOSEI Sentiment | | Average |
|---|---|---|---|---|---|---|---|---|---|
| Single-Corpus trained model | | | | | | | | | |
| PWF | 79.9/– | 86.6/– | 92.3/– | 91.9/– | 65.8/– | 76.5/– | 55.1/– | 56.8/– | 75.6/– |
| PCF | 87.9/+8.0 | 90.4/+3.8 | 93.4/+1.1 | 93.0/+1.1 | 62.2/−3.6 | 78.1/+1.6 | 56.1/+1.0 | 58.0/+1.2 | 77.4/+1.8 |
| FCF | 85.9/+6.0 | 90.5/+3.9 | 94.4/+2.1 | 94.1/+2.2 | 61.7/−4.1 | 78.4/+1.9 | 55.2/+0.1 | 57.3/+0.5 | 77.2/+1.6 |
| CMGSAF | 86.5/+6.6 | 92.1/+5.5 | 93.4/+1.1 | 93.0/+1.1 | 78.1/+12.3 | 78.7/+2.2 | 55.8/+0.7 | 57.5/+0.7 | 79.4/+3.8 |
| w/o audio | 84.9/+5.0 | 91.3/+4.7 | 93.4/+1.1 | 93.0/+1.1 | 61.6/−4.2 | 75.8/−0.7 | 44.0/−11.1 | 45.9/−10.9 | 73.7/−1.9 |
| w/o video | 57.3/−22.6 | 77.4/−9.2 | 34.2/−58.1 | 50.0/−41.9 | 62.5/−3.3 | 76.8/+0.3 | 60.1/+5.0 | 60.3/+3.5 | 59.8/−15.8 |
| CMGSAF * | 87.3/+7.4 | 92.3/+5.7 | 93.8/+1.5 | 93.4/+1.5 | 72.6/+6.8 | 78.5/+2.0 | 56.1/+1.0 | 58.2/+1.4 | 79.0/+3.4 |
| w/o audio | 81.8/+1.9 | 90.7/+4.1 | 93.0/+0.7 | 92.6/+0.7 | 68.1/+2.3 | 76.9/+0.4 | 45.5/−9.6 | 47.7/−9.1 | 74.5/−1.1 |
| w/o video | 52.7/−27.2 | 79.8/−6.8 | 32.5/−59.8 | 49.2/−42.7 | 61.8/−4.0 | 76.4/−0.1 | 59.3/+4.2 | 57.8/+1.0 | 58.7/−16.9 |
| Multi-Corpus trained model | | | | | | | | | |
| PWF | 76.0/−3.9 | 89.9/+3.3 | 92.7/+0.4 | 92.2/+0.3 | 77.8/+12.0 | 76.5/+0.0 | 53.4/−1.7 | 57.7/+0.9 | 77.0/+1.4 |
| PCF | 86.1/+6.2 | 88.0/+1.4 | 91.9/−0.4 | 91.1/−0.8 | 62.3/−3.5 | 78.0/+1.5 | 55.7/+0.6 | 57.4/+0.6 | 76.3/+0.7 |
| FCF | 86.5/+6.6 | 91.8/+5.2 | 93.3/+1.0 | 93.0/+1.1 | 64.1/−1.7 | 78.2/+1.7 | 54.5/−0.6 | 57.1/+0.3 | 77.3/+1.7 |
| CMGSAF | 84.9/+5.0 | 91.3/+4.7 | 92.1/−0.2 | 91.9/+0.0 | 74.7/+8.9 | 78.7/+2.2 | 56.0/+0.9 | 57.4/+0.6 | 78.4/+2.8 |
| w/o audio | 85.1/+5.2 | 91.1/+4.5 | 92.9/+0.6 | 92.6/+0.7 | 67.7/+1.9 | 77.0/+0.5 | 45.4/−9.7 | 47.5/−9.3 | 74.9/−0.7 |
| w/o video | 51.8/−28.1 | 77.8/−8.8 | 54.0/−38.3 | 43.8/−48.1 | 63.5/−2.3 | 76.2/−0.3 | 53.4/−1.7 | 52.0/−4.8 | 59.1/−16.6 |
| CMGSAF * | 90.3/+10.4 | 91.2/+4.6 | 93.4/+1.1 | 93.0/+1.1 | 62.6/−3.2 | 78.0/+1.5 | 54.8/−0.3 | 56.6/−0.2 | 77.5/+1.9 |
| w/o audio | 87.6/+7.7 | 91.4/+4.8 | 94.1/+1.8 | 93.8/+1.9 | 58.0/−7.8 | 76.7/+0.2 | 46.4/−8.7 | 47.5/−9.3 | 74.4/−1.2 |
| w/o video | 55.2/−24.7 | 77.6/−9.0 | 50.7/−41.6 | 45.7/−46.2 | 63.8/−2.0 | 76.4/−0.1 | 53.8/−1.3 | 57.0/+0.2 | 60.0/−15.6 |

| Approach | Sentiment | | Emotion | |
|---|---|---|---|---|
| MPUCL [32] | – | – | 55.5 | 27.8 |
| Multilogue-net [28] | 75.2 | 74.0 | – | – |
| MTMM-ES [25] | 77.4 | 75.8 | 59.3 | 77.0 |
| CAI [26] | 77.4 | 74.8 | 59.5 | 76.6 |
| GCCMA [24] | 78.0 | 75.0 | 59.9 | 77.1 |
| CMGSAF | 68.8 | 67.0 | 78.1 | 78.7 |
| BCMGSAF | 66.1 | 66.6 | 72.6 | 78.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ryumina, E.; Markitantov, M.; Karpov, A. Multi-Corpus Learning for Audio–Visual Emotions and Sentiment Recognition. Mathematics 2023, 11, 3519. https://doi.org/10.3390/math11163519
