Resolving the Doubts: On the Construction and Use of ResNets for Side-Channel Analysis

Abstract

1. Introduction

1.1. Related Work

1.2. Our Contributions

- We empirically investigate several constructions of deep ResNets and provide recommendations on which type of residual block to use and how deep the networks should be to reach state-of-the-art side-channel analysis performance.

- Based on these recommendations, we construct a novel architecture that is significantly deeper than the architectures previously used for SCA. This architecture performs competitively with architectures obtained by state-of-the-art model search strategies across several datasets. In several settings, it achieves the best-known results (compared with other deep learning architectures using the same number of features).

2. Background

2.1. Deep Learning

2.2. Residual Neural Networks

2.3. Profiling Side-Channel Analysis

SCA Metrics

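- Guessing Entropy: Guessing entropy (GE) [32] is the average key rank over a number of attacks. It is defined as $GE = \mathbb{E}[\mathrm{rank}(k^*)]$, where $\mathrm{rank}(k^*)$ is the position of the correct key $k^*$ among all key candidates, sorted by likelihood, after a single attack, and $\mathbb{E}$ is the mean function. As is commonly done, we estimated it over 100 attacks.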

- NoT: The NoT (number of traces) metric is the number of attack measurements required to reduce GE to one, i.e., until the correct key is ranked first, so that our best guess is also the correct guess. It is defined as $\mathrm{NoT} = \min\{Q : GE_Q = 1\}$, where $GE_Q$ denotes the guessing entropy obtained with $Q$ attack traces. The NoT metric thus estimates how many measurements are required to recover the key.
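Both metrics can be computed from a model's outputs along the lines of the minimal NumPy sketch below. It assumes that the per-trace log-likelihood of every key candidate has already been derived from the network's predictions (that mapping depends on the leakage model and is not shown) and is stored in an array of shape `(n_attacks, n_traces, n_keys)`. Ranks are 1-indexed, so GE = 1 means the correct key is ranked first in every attack, matching the NoT definition above; ties are resolved in favor of the correct key. The function names are illustrative and are not taken from the paper or from any framework.

```python
import numpy as np


def key_ranks(log_probs: np.ndarray, correct_key: int) -> np.ndarray:
    """Return the 1-indexed rank of correct_key after Q = 1..n_traces traces.

    log_probs: shape (n_attacks, n_traces, n_keys), assumed per-trace
    log-likelihoods of each key guess. Output shape: (n_attacks, n_traces).
    """
    # Accumulate evidence over attack traces (sum of log-likelihoods).
    scores = np.cumsum(log_probs, axis=1)
    correct = scores[..., correct_key:correct_key + 1]
    # Rank = 1 + number of candidates scoring strictly higher than the correct key.
    return 1 + np.sum(scores > correct, axis=-1)


def guessing_entropy(log_probs: np.ndarray, correct_key: int) -> np.ndarray:
    """GE_Q for Q = 1..n_traces: the mean key rank over all attacks."""
    return key_ranks(log_probs, correct_key).mean(axis=0)


def number_of_traces(ge: np.ndarray):
    """Smallest Q (1-indexed) with GE_Q == 1, or None if GE never reaches 1."""
    hits = np.flatnonzero(ge == 1.0)
    return int(hits[0]) + 1 if hits.size else None


# Synthetic usage: 100 attacks, 50 traces, 256 key candidates; key 42 gets a
# small per-trace advantage so that it eventually ranks first.
rng = np.random.default_rng(0)
log_probs = rng.normal(size=(100, 50, 256))
log_probs[..., 42] += 1.0
ge = guessing_entropy(log_probs, correct_key=42)
print(number_of_traces(ge))
```

Averaging over 100 attacks, as in the text, corresponds to the first axis of `log_probs`.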

2.4. Datasets

3. Construction of Deep ResNets for SCA

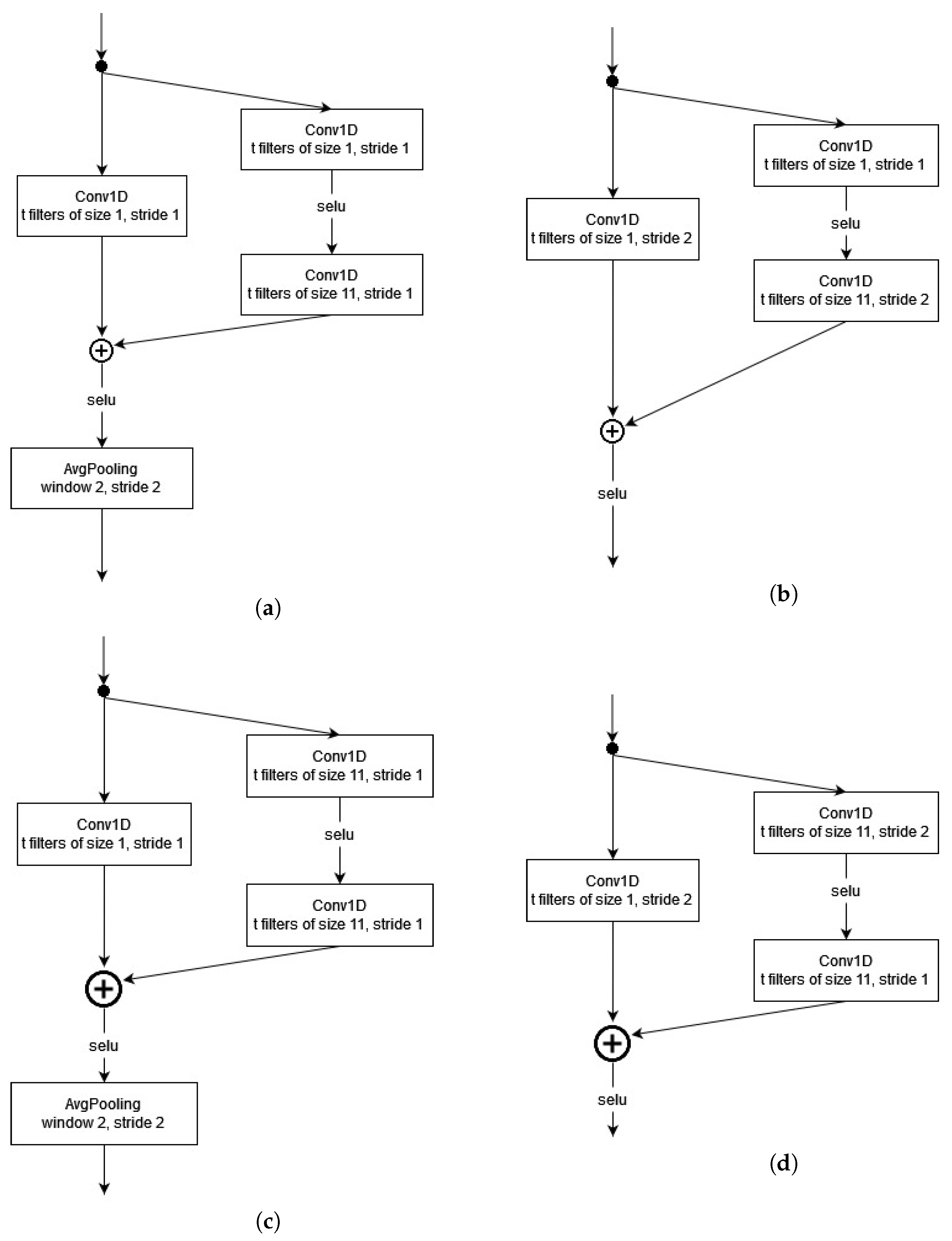

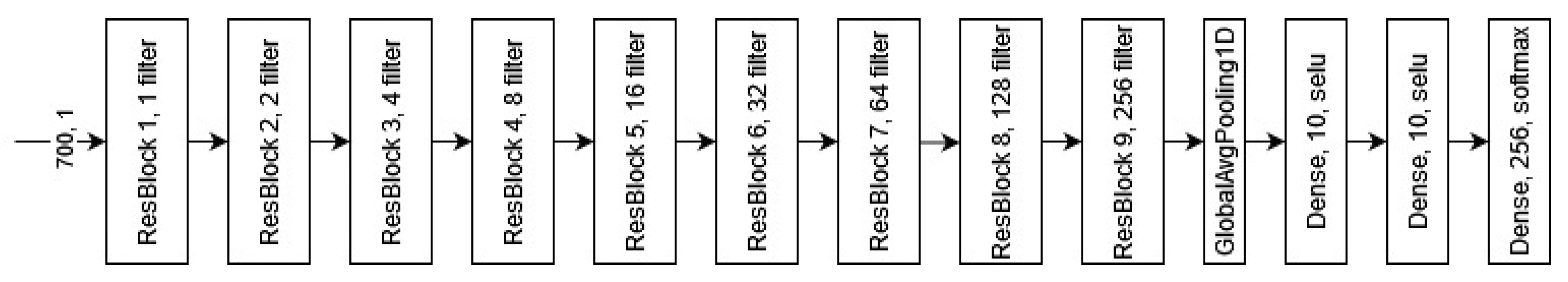

3.1. Residual Block Construction

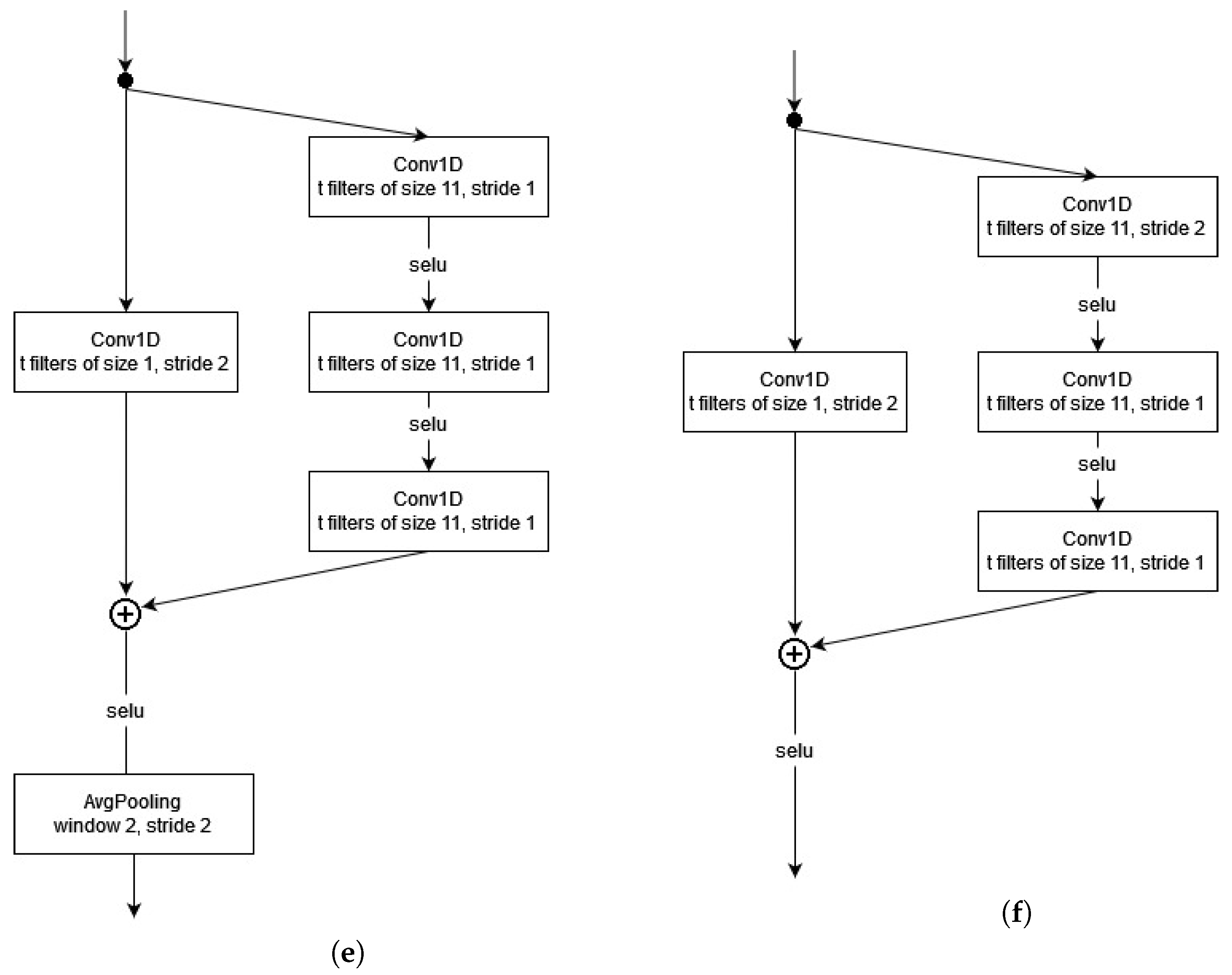

3.2. Depth of ResNets

4. Comparison With State-of-the-Art CNN and MLP Architectures

4.1. Results

4.2. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SCA | Side-channel Attack |
| SNR | Signal-to-Noise Ratio |
| AES | Advanced Encryption Standard |
| DL | Deep Learning |
| ML | Machine Learning |
| GE | Guessing Entropy |
| HW | Hamming Weight |
| ASCAD | ANSSI SCA Database |
| ASCADf | ASCAD with a fixed key |
| ASCADr | ASCAD with random keys |
| ASCADv2 | ASCAD version 2 |
| CTF | Capture The Flag |
| CHES | Cryptographic Hardware and Embedded Systems |
| AES_HD | Advanced Encryption Standard dataset |
| CNN | Convolutional Neural Network |
| SELU | Scaled Exponential Linear Unit |

References

- Kocher, P.C. Timing attacks on implementations of Diffie-Hellman, RSA, DSS, and other systems. In Advances in Cryptology—CRYPTO’96: Proceedings of the 16th Annual International Cryptology Conference, Santa Barbara, CA, USA, 18–22 August 1996; Springer: Berlin/Heidelberg, Germany, 1996; pp. 104–113.

- Kocher, P.; Jaffe, J.; Jun, B. Differential power analysis. In Advances in Cryptology—CRYPTO’99: Proceedings of the 19th Annual International Cryptology Conference, Santa Barbara, CA, USA, 15–19 August 1999; Springer: Berlin/Heidelberg, Germany, 1999; pp. 388–397.

- Benadjila, R.; Prouff, E.; Strullu, R.; Cagli, E.; Dumas, C. Deep learning for side-channel analysis and introduction to ASCAD database. J. Cryptogr. Eng. 2020, 10, 163–188.

- Anand, S.A.; Saxena, N. A sound for a sound: Mitigating acoustic side channel attacks on password keystrokes with active sounds. In Financial Cryptography and Data Security: Proceedings of the 20th International Conference, FC 2016, Christ Church, Barbados, 22–26 February 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 346–364.

- Yarom, Y.; Falkner, K. FLUSH+RELOAD: A high resolution, low noise, L3 cache side-channel attack. In Proceedings of the 23rd USENIX Security Symposium (USENIX Security 14), San Diego, CA, USA, 20–22 August 2014; pp. 719–732.

- Maghrebi, H.; Portigliatti, T.; Prouff, E. Breaking cryptographic implementations using deep learning techniques. In Security, Privacy, and Applied Cryptography Engineering: Proceedings of the 6th International Conference, SPACE 2016, Hyderabad, India, 14–18 December 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 3–26.

- Kim, J.; Picek, S.; Heuser, A.; Bhasin, S.; Hanjalic, A. Make Some Noise. Unleashing the Power of Convolutional Neural Networks for Profiled Side-channel Analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2019, 2019, 148–179.

- Zaid, G.; Bossuet, L.; Habrard, A.; Venelli, A. Methodology for Efficient CNN Architectures in Profiling Attacks. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2020, 2020, 1–36.

- Rijsdijk, J.; Wu, L.; Perin, G.; Picek, S. Reinforcement Learning for Hyperparameter Tuning in Deep Learning-based Side-channel Analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2021, 2021, 677–707.

- Wu, L.; Perin, G.; Picek, S. I Choose You: Automated Hyperparameter Tuning for Deep Learning-based Side-channel Analysis. IEEE Trans. Emerg. Top. Comput. 2022, 1–12.

- Lu, X.; Zhang, C.; Cao, P.; Gu, D.; Lu, H. Pay Attention to Raw Traces: A Deep Learning Architecture for End-to-End Profiling Attacks. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2021, 2021, 235–274.

- Masure, L.; Strullu, R. Side-channel analysis against ANSSI’s protected AES implementation on ARM: End-to-end attacks with multi-task learning. J. Cryptogr. Eng. 2023, 13, 129–147.

- Won, Y.; Hou, X.; Jap, D.; Breier, J.; Bhasin, S. Back to the Basics: Seamless Integration of Side-Channel Pre-Processing in Deep Neural Networks. IEEE Trans. Inf. Forensics Secur. 2021, 16, 3215–3227.

- Zhou, Y.; Standaert, F. Deep learning mitigates but does not annihilate the need of aligned traces and a generalized ResNet model for side-channel attacks. J. Cryptogr. Eng. 2020, 10, 85–95.

- Acharya, R.Y.; Ganji, F.; Forte, D. Information Theory-based Evolution of Neural Networks for Side-channel Analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2023, 2023, 401–437.

- Wouters, L.; Arribas, V.; Gierlichs, B.; Preneel, B. Revisiting a Methodology for Efficient CNN Architectures in Profiling Attacks. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2020, 2020, 147–168.

- Schijlen, F.; Wu, L.; Mariot, L. NASCTY: Neuroevolution to Attack Side-Channel Leakages Yielding Convolutional Neural Networks. Mathematics 2023, 11, 2616.

- Perin, G.; Wu, L.; Picek, S. Exploring Feature Selection Scenarios for Deep Learning-based Side-channel Analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2022, 2022, 828–861.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Computer Vision–ECCV 2016: Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 630–645.

- Jin, M.; Zheng, M.; Hu, H.; Yu, N. An Enhanced Convolutional Neural Network in Side-Channel Attacks and Its Visualization. arXiv 2020, arXiv:2009.08898.

- Gohr, A.; Jacob, S.; Schindler, W. Efficient Solutions of the CHES 2018 AES Challenge Using Deep Residual Neural Networks and Knowledge Distillation on Adversarial Examples. IACR Cryptol. ePrint Arch. 2020, 2020, 165.

- Masure, L.; Belleville, N.; Cagli, E.; Cornélie, M.A.; Couroussé, D.; Dumas, C.; Maingault, L. Deep learning side-channel analysis on large-scale traces. In European Symposium on Research in Computer Security; Springer: Cham, Switzerland, 2020; pp. 440–460.

- Timon, B. Non-Profiled Deep Learning-based Side-Channel attacks with Sensitivity Analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2019, 2019, 107–131.

- Wu, L.; Perin, G.; Picek, S. Hiding in Plain Sight: Non-profiling Deep Learning-based Side-channel Analysis with Plaintext/Ciphertext. IACR Cryptol. ePrint Arch. 2023.

- Staib, M.; Moradi, A. Deep Learning Side-Channel Collision Attack. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2023, 2023, 422–444.

- Cunningham, P.; Cord, M.; Delany, S.J. Supervised learning. In Machine Learning Techniques for Multimedia; Springer: Berlin/Heidelberg, Germany, 2008; pp. 21–49.

- Targ, S.; Almeida, D.; Lyman, K. Resnet in resnet: Generalizing residual architectures. arXiv 2016, arXiv:1603.08029.

- Hochreiter, S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. Int. J. Uncertainty, Fuzziness Knowl.-Based Syst. 1998, 6, 107–116.

- Chari, S.; Rao, J.R.; Rohatgi, P. Template Attacks. In Cryptographic Hardware and Embedded Systems—CHES 2002, Proceedings of the 4th International Workshop, Redwood Shores, CA, USA, 13–15 August 2002; Revised Papers; Kaliski, B.S., Jr., Koç, Ç.K., Paar, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2002; Volume 2523, pp. 13–28.

- Standaert, F.; Malkin, T.; Yung, M. A Unified Framework for the Analysis of Side-Channel Key Recovery Attacks. In Advances in Cryptology—EUROCRYPT 2009, Proceedings of the 28th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Cologne, Germany, 26–30 April 2009; Joux, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5479, pp. 443–461.

- Picek, S.; Heuser, A.; Jovic, A.; Bhasin, S.; Regazzoni, F. The curse of class imbalance and conflicting metrics with machine learning for side-channel evaluations. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2019, 2019, 209–237.

- Köpf, B.; Basin, D.A. An information-theoretic model for adaptive side-channel attacks. In Proceedings of the 2007 ACM Conference on Computer and Communications Security, CCS 2007, Alexandria, VA, USA, 28–31 October 2007; Ning, P., di Vimercati, S.D.C., Syverson, P.F., Eds.; ACM: New York, NY, USA, 2007; pp. 286–296.

- Bhasin, S.; Jap, D.; Picek, S. AES HD Dataset—500,000 Traces. AISyLab Repository. 2020. Available online: https://github.com/AISyLab/AES_HD_2 (accessed on 1 June 2023).

- Perin, G.; Wu, L.; Picek, S. AISY—Deep Learning-Based Framework for Side-Channel Analysis. Cryptology ePrint Archive, Paper 2021/357. 2021. Available online: https://eprint.iacr.org/2021/357 (accessed on 1 June 2023).

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Singh, S., Markovitch, S., Eds.; AAAI Press: Washington, DC, USA, 2017; pp. 4278–4284.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015; Conference Track Proceedings; Bengio, Y., LeCun, Y., Eds.; 2015.

- Smith, L.N. Cyclical Learning Rates for Training Neural Networks. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision, WACV 2017, Santa Rosa, CA, USA, 24–31 March 2017; pp. 464–472.

- Perin, G.; Chmielewski, L.; Picek, S. Strength in Numbers: Improving Generalization with Ensembles in Machine Learning-based Profiled Side-channel Analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2020, 2020, 337–364.

| | ResNet | CNN [18] | MLP [18] |
|---|---|---|---|
| Nr. of traces to reach GE = 1 | 160 | 87 | 104 |
| Nr. of trainable parameters | 96,800 | 7728 | 10,266 |

| | ResNet | CNN [18] | MLP [18] |
|---|---|---|---|
| Nr. of traces to reach GE = 1 | 47 | 78 | 129 |
| Nr. of trainable parameters | 489,592 | 87,520 | 34,236 |

| Nr. of Features | Nr. of Residual Blocks | ASCADf | ASCADr |
|---|---|---|---|
| 1500 | 8 | 36 | 34 |
| 2000 | 8 | 64 | 37 |
| 2500 | 9 | 86 | 37 |
| 3000 | 9 | 42 | 29 |
| 3500 | 9 | 6 | 21 |
| 4000 | 9 | 10 | 14 |
| 4500 | 10 | 9 | 9 |
| 5000 | 10 | 10 | 8 |
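The number of residual blocks in the table above grows roughly with the logarithm of the number of input features. As an arithmetic check, and not as a rule stated in the text, the sketch below shows that every block count in the table equals the number of times the input length can be halved with integer division while at least 4 samples remain, i.e., $\lfloor \log_2 n \rfloor - 2$ for $n$ features. Whether the architecture actually downsamples by a factor of 2 per block is an assumption here, and the function name `max_halvings` and the threshold `min_len = 4` are hypothetical.

```python
# Feature counts -> residual-block counts, copied from the table above.
TABLE = {1500: 8, 2000: 8, 2500: 9, 3000: 9, 3500: 9, 4000: 9, 4500: 10, 5000: 10}


def max_halvings(n_features: int, min_len: int = 4) -> int:
    """Hypothetical rule (our reading of the table, not the authors' stated method):
    count how often the temporal length can be halved with integer division
    while the remaining length stays >= min_len."""
    halvings, length = 0, n_features
    while length // 2 >= min_len:
        length //= 2
        halvings += 1
    return halvings


for n, blocks in TABLE.items():
    # Second and third values agree on every row of the table.
    print(n, blocks, max_halvings(n))
```

If the real downsampling schedule differs, the agreement above is a coincidence of the chosen feature counts.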

| | ResNet | CNN [8] |
|---|---|---|
| Nr. of traces to reach GE = 1 | 4100 | 6060 |
| Nr. of trainable parameters | 111,491 | 3278 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karayalcin, S.; Perin, G.; Picek, S. Resolving the Doubts: On the Construction and Use of ResNets for Side-Channel Analysis. Mathematics 2023, 11, 3265. https://doi.org/10.3390/math11153265
