Abstract

This paper presents a solution for efficiently and accurately solving separable least squares problems with multiple datasets. These problems involve determining linear parameters that are specific to each dataset while ensuring that the nonlinear parameters remain consistent across all datasets. A well-established approach for solving such problems is the variable projection algorithm introduced by Golub and LeVeque, which effectively reduces a separable problem to its nonlinear component. However, this algorithm assumes that the datasets have equal sizes and identical auxiliary model parameters. This article is motivated by a real-world remote sensing application where these assumptions do not apply. Consequently, we propose a generalized algorithm that extends the original theory to overcome these limitations. The new algorithm has been implemented and tested using both synthetic and real satellite data for atmospheric carbon dioxide retrievals. It has also been compared to conventional state-of-the-art solvers, and its advantages are thoroughly discussed. The experimental results demonstrate that the proposed algorithm significantly outperforms all other methods in terms of computation time, while maintaining comparable accuracy and stability. Hence, this novel method can have a positive impact on future applications in remote sensing and could be valuable for other scientific fitting problems with similar properties.

Keywords:

separable least squares; nonlinear optimization; python; inverse problems; trace gas retrieval; atmospheric composition; carbon dioxide; infrared spectroscopy PACS:

02.60.-x; 02.30.Zz; 92.60.-e; 42.68.Ca

MSC:

01-08; 65K10; 65D10; 15A29

1. Introduction

One of the fundamental tasks in scientific computing is to find the unknown vector of parameters , which, for given data , , , and model function , solves the least squares problem

where the vectors and have the entries and , respectively. If the model function is nonlinear, the minimization problem is solved iteratively by using step-length or trust-region methods, such as the Levenberg–Marquardt algorithm [1].

In separable least squares problems, the model function is a linear combination of nonlinear functions , , i.e.,

and the vector of parameters is split into a vector of linear parameters , and a vector of nonlinear parameters, .

In 1973, Golub and Pereyra [2] proposed a powerful method for solving such problems, called variable projection (VP). Specifically, the problem is reduced to a nonlinear least squares problem involving only, and a linear least squares problem involving only. Thus, the dimension of the nonlinear problem to be solved is reduced from to p. Later on, Golub and LeVeque [3] extended the method to the case of multiple datasets with . In this type of problem, a vector of linear parameters is associated with a dataset , while the vector of nonlinear parameters corresponds to all datasets.

One example of this type of problem can be found in the retrieval of the atmospheric composition from passive remote sensing measurements (cf. [4]). Here, an atmospheric radiative transfer model with molecular composition parameters (nonlinear) and reflectivity parameters (linear) is fitted to spectral radiance measurements to determine the underlying atmospheric state parameters. For long-lived molecules, such as CO2 or CH4, which are homogeneously spread throughout the atmosphere, it is possible to fit several (nearby) observations for one concentration value while the surface reflectivity differs for each measurement, making this a separable problem with multiple right-hand sides.

Fitting, for example, radiance spectra with three distinct linear reflectivity variables simultaneously for two nonlinear molecular concentration variables, the total number of unknowns for conventional solvers adds up to 50 (=). However, with a separable approach, such as variable projection, one can reduce this size by a factor of 25.

However, the variable projection algorithm established by Golub and LeVeque [3] to solve such problems with multiple right-hand sides is based on the assumption that all datasets have the same lengths and corresponding nonlinear models.

In this article, the theory by Golub and LeVeque [3] is, therefore, elaborated and further enhanced for scenarios such as differently sized datasets or varying nonlinear model setups (see Section 2). The modifications were not only necessary in order to apply their method to the example described above, but this new algorithm could also be useful for other scientific fitting problems. The method was implemented in Python (see Section 3) and applied to the least squares problem arising in atmospheric trace gas retrieval (see Section 4). In Section 5, experimental results are discussed and concluded.

2. Theoretical Background

Assuming a model that is a linear combination of nonlinear functions, such as (2), one can define the arising separable least squares problem as follows:

where the matrix has the entries .

2.1. The Variable Projection Method

The minimization problem in (2), written as

is reduced to two least squares problems. Golub and Pereyra [2] pointed out that for any fixed , one considers the merely linear least squares problem

which is solved by

where is the generalized inverse of the matrix . Second, for the functional

which is obtained by substituting the expression of given by Equation (6) into the residual function, one considers the nonlinear least squares problem

where and is the orthogonal projection operator onto the column space of the matrix . Thanks to this formulation, the method is called variable projection (VP).

Thus, the method of solution involves, in the first step, the computation of the minimizer , and in the second step, the computation of the vector of linear parameters as . Golub and Pereyra [2] showed that this solution method yields the correct result, under the assumption that has a locally constant rank in the neighborhood of . In order to minimize a nonlinear problem, such as (7), the derivative of the objective function, with respect to the unknown variables, is needed. If the rank of matrix was not constant across the points throughout the iteration where its derivative is calculated, the pseudo-inverse would not be a continuous function and, therefore, not differentiable.

This separation technique has powerful advantages over conventional algorithms, which all follow from the fact that the nonlinear functional only depends on (see also the review by Golub and Pereyra [5]). Since is a vector of length p and of length n, the VP method effectively turns the original nonlinear problem of variables into one of only p. This reduction of the parameter space of the problem results in a smaller size of the problem’s Jacobian and, therefore, requires less time for its computation. Moreover, O’Leary and Rust [6] pointed out that the reduced parameter space may also lead to a reduced number of local minimizers, making it more likely to find the global minimum instead of a local one. To summarize, the VP solver is a lot more efficient and also converges better than conventional methods with no separation [5,7]. Moreover, the need for a smaller initial guess vector can generally lead to a better-conditioned and more stable problem.

For solving the nonlinear least squares problem (8), one needs to calculate the partial derivatives . In this regard, Golub and Pereyra [2] provide the computational formula

which is proved in Appendix A.

Kaufman [8] proposed a modification of the original method by making use of a QR decomposition of the matrix :

where , , and are matrices with orthonormal columns (i.e., , , ) and is an upper triangular, non-singular matrix. In this case, the generalized inverse , satisfying the relation , computes as

From the invariance of the 2-norm under orthogonal transformations and ), it follows that

Since the optimal for any given is

we find , showing that the nonlinear least squares problem reduces to

Moreover, for derivative calculations, Kaufman [8] proposed the simplified formula

which is justified in Appendix A. This was shown to save function and gradient evaluation costs and, therefore, reduce the computing time per iteration, which is why this simplified version of (9) became well established.

2.2. Multiple Right-Hand Sides (MRHS)

As Golub and LeVeque [3] pointed out, there can be optimization problems where multiple sets of data are to be fit to a model function, such as (2). If the model parameters are to vary for each set , this will just result in s distinct separable problems, such as (3). There are, however, cases where only the linear variables are specific to each dataset, while the nonlinear variables have to hold for all available data simultaneously. By exploiting separability, the sizes of such minimization problems can be reduced from to just p unknown variables, which is even greater than with only one dataset.

Separable problems with s right-hand side(s) (RHS) can be posed as the minimization of

where the nonlinear parameter vector is and the matrix is , containing the linear parameter vectors for each RHS with as its columns, using the Frobenius norm . Here, the data matrix is fit to a single model of the form , where is as before (cf. Equation (3)).

2.2.1. Naive Approach

The first intuitive approach, which was also mentioned by Golub and LeVeque [3], is to reformulate (16) using the matrix

and the vectors and , such that a problem of the original vectorial form

arises. This formulation allows for solving the separable problem with multiple RHS, by means of the variable projection algorithm already discussed. However, even for a moderate number of datasets, the matrix becomes overly large. Moreover, the sparse structure and the fact that all diagonal blocks in (17) are the same can be better utilized in the earlier formulation (16).

2.2.2. Golub–LeVeque Approach

Therefore, Golub and LeVeque [3] suggested a different approach: Starting from (16), one can, in the same manner as Equation (7), exploit the problem’s separability and reduce it to a purely nonlinear minimization problem of the form

with the orthogonal projector as before. Acknowledging that the Frobenius norm in (19) is equivalent to the 2-norm of a vector function , set up as

this can be minimized with any of the established methods for nonlinear least squares problems, such as the Levenberg–Marquardt algorithm, which is used in [3]. The Jacobian matrix of can be calculated analogously by defining its column as

where is exactly as in (9).

2.2.3. Kaufman Approach

Since the Frobenius norm is invariant under the orthogonal transformation, the above method can also be written in terms of the QR decomposition of outlined in Section 2.1. This approach based on Kaufman [8] can, therefore, be established by replacing the orthogonal projector in all of the above equations of the Golub–LeVeque method, with the smaller orthogonal matrix derived in (10). Both versions of the approach are included in the Python implementation outlined in Section 3 and are, therefore, the subjects of the numerical experiments performed in Section 4.

2.3. Extensions to the Golub–LeVeque Approach

Working with real measurements, which can be subject to errors or missing data points, it is not always possible to use datasets that all have the exact same number of data points. In this case, each dataset has a specific size , such that the matrix depiction used in (16) does not hold any longer.

Moreover, the nonlinear model functions stored in the matrix often depend on further auxiliary parameters, which may vary for each individual measurement (e.g., observation angle). Thus, there can be different of size .

None of these aspects were explicitly mentioned by Golub and LeVeque [3] or Kaufman and Sylvester [9], who later simplified the Golub–LeVeque method further, with the assumption of equal lengths of the . It was only mentioned by Kaufman [10] that the TIMP package by Mullen and van Stokkum [11] allows for differently sized data vectors, but not for differently constructed s.

For the implementation introduced in this paper, both of these changes are taken into account. It can be seen that the special structure of vector (20) and matrix (21) can be exploited to rewrite

as a stack of (differently sized) vectors and, likewise, the column of its Jacobian

This necessary modification naturally increases the computational expense compared to the original Golub–LeVeque method, as matrix and its derivative have to be calculated s times instead of once. This also reduces the gain in efficiency that one would have had from exchanging with , as the QR decomposition now needs to be calculated for every single matrix . However, this modified VP method for multiple right-hand sides is still significantly more efficient than using a standard nonlinear optimizer for the same problem, as will be shown in Section 4.

3. Implementation

The first FORTRAN implementation of a variable projection algorithm that allows for multiple right-hand sides was VARP2 [12], which was developed by Randy LeVeque. It is a modification of the subroutine VARPRO [13] for classical separable problems with only a single RHS. Both work with user-provided Jacobians. Another efficient implementation of a solver for multiple datasets is the TIMP package by Mullen and van Stokkum [11], written in the statistical computing language R, where the Jacobian is calculated by finite difference approximations.

This work was partly motivated by the fact that many scientific computing problems are nowadays implemented in Python. However, the only modern implementation of a VP algorithm is a MATLAB code by O’Leary and Rust [6], which does not allow for multiple right-hand sides, as discussed in Section 2.3. Here, an implementation is introduced where the standard VP algorithm by O’Leary and Rust [6] is enhanced for multiple datasets, as described in Section 2.

The MATLAB code by O’Leary and Rust [6] was specifically designed to be brief and easily understandable, so it would be well-suited for translation. O’Leary and Rust [6] argued that many of the established implementations, such as VARPRO [13], and PORT library [14] subroutines, such as NSF/NSG [8], are somewhat outdated today and lack readability; thus, they proposed an efficient present-day implementation written in an interpreted language, with the advantage that it can easily be enhanced or modified. One reason for their code’s brevity is that they used built-in MATLAB functions, such as lsqnonlin.m, to solve the nonlinear least squares problem (7), and svd.m to solve the remaining linear problems via singular value decomposition, instead of writing their own, making the algorithm modular. Another advantage of their code is the variety of statistical diagnostics it offers, some of which were also modified for multiple datasets and used for the assessment in Section 4.

3.1. Algorithm for a Single Right-Hand Side

A short outline of a standard VP algorithm (such as the one by O’Leary and Rust [6]) can be formulated as this:

- The user supplies the measurement vector , the number of linear parameters n, additional independent variables for the model, and an initial guess for the nonlinear variables . In addition, a subroutine/function which will be called ADA (cf. [6], Sections 2.3 and 2.4) needs to be provided, which, for the given input n and , calculates the nonlinear model matrix and its partial derivatives .

- Calculate the pseudo-inverse to generate the variable projection functional from (7), which is dependent on a given .

- Use the partial derivatives to generate the Jacobian matrix for a given , as in (9).

- Minimize the variable projection functional (8) by using the results from steps 2 and 3 and insert them into an already existing nonlinear least squares solver to obtain the final result .

- Take and calculate a final to solve the remaining linear parameters , as in (6).

In the original implementation by O’Leary and Rust [6], the linear solution, including the computation of , was calculated via a singular value decomposition, which is a very robust direct method for solving linear least squares problems. Moreover, instead of using the simplification by Kaufman [8] of dropping the second term in the Jacobian (9), O’Leary and Rust [6] argued that, in modern computers, the balance between the computing time for extra iterations vs. the second term has tipped. In 1975, when Kaufman [8] proposed simplification, it used to be more efficient to put up with more functional evaluations by saving matrix computations; this could have changed today [6]. To verify this argument, a simplification by Kaufman [8] is included as a subject for testing in Section 4.3.

To solve the nonlinear problem in step 4, the least_squares function [15] from the scipy.optimize package [16] is used in the implementation. Its default solving method is the so-called trust region reflective algorithm [17], which has been shown to work efficiently within the VP algorithm for the example discussed in Section 4 [18].

3.2. Modifications for Multiple Right-Hand Sides

In the following, the modifications required for multiple right-hand sides with possibly varying lengths , are going to be discussed. For the naive approach, the only necessary change of the above procedure arises in the user-supplied function ADA from step 1; instead of one matrix and its derivative, it has to return the sparse matrix as in (17), with the possibly different on its diagonal, and the corresponding derivatives are also sparse matrices with the Jacobians on the diagonal. After setting up and , as in Equation (18), the above steps can be performed unchanged to obtain the results and .

This is different from the Golub–LeVeque approach described in Section 2.2. Here, the user-defined method ADA remains the same as in step 1 of Section 3.1; however, instead of calculating (8) in step 2, the new vector function has to be set up, such as (22). The same holds for the new derivative matrix from (23) in step 3. After this, in step 4, is minimized for ; consequently, in step 5, the final linear parameter matrix can be derived by calculating its column as follows:

Finally, for the Kaufman approach [8], it is important to note that the pseudo-inverse computed in steps 2 and 5 is now calculated via the QR decomposition (10), as in (11), while the remaining matrix of the decomposition can be taken for setting up and , just as before, by replacing in (22) and (23) with . The simplified Jacobian (15) is also adopted in the implementation of this approach.

4. Numerical Experiments

The goal of this section is to outline an example of a separable least squares problem with multiple right-hand sides and use it to evaluate the performance of the suggested VP algorithms. The tests were also set up to establish a comparison to conventional nonlinear least squares (NLS) methods, which ignore separability. Those were implemented using the least_squares function from the scipy.optimize package [15].

Without separation, the minimization problem of multiple right-hand sides can be composed as follows:

which is easily extendable to the case of varying sizes of , and correspondingly different .

4.1. Application: Trace Gas Retrieval

A real-world example for the described problem set can arise in the area of remote sensing, more specifically, in the retrieval of atmospheric trace gas concentrations from spectral radiance measurements. The concentration of carbon dioxide (CO2) or methane (CH4)—both important greenhouse gases—can be inferred from spectra observed in the short-wave infrared (SWIR). Such measurements are often spaceborne in order to achieve global coverage of the atmospheric composition.

For this paper, observations from the OCO-2 (Orbiting Carbon Observatory-2) satellite by NASA [19,20,21] were used, which was designed to monitor CO2 by measuring its absorption bands in the SWIR. In this spectral region, a radiance measurement can be modeled by the radiative transfer model (based on the Beer–Lambert law for molecular absorption neglecting scattering) [22]

dependent on the wavenumber . The term wavenumber, which represents the inverse of the often used wavelength , has the common unit cm−1 for the SWIR region, corresponding to 104/μm. The two sets of fitting parameters are for linear parameters and for nonlinear parameters .

The first term is a polynomial that approximates the wavenumber-dependent surface reflectivity of the Earth at the measurement location. Factor corresponds to (with : solar zenith angle) and accounts for the geometry of the measurement, is the incoming solar radiation (at the top of the atmosphere), and

is the total optical depth of the molecule, which is the path integral over the number density , and its pressure- and temperature-dependent cross-section . In trace gas retrieval, is the most important measure, as it is directly related to a molecule’s concentration in the atmosphere on a given path s. Unfortunately, SWIR observations do not provide enough information to retrieve the concentration profile of a molecule. Therefore, the calculation of (27) is only possible under prior assumptions of the atmospheric state (i.e., the temperature and pressure profiles , , and the molecular number density, i.e., ). Hence, a simple scaling factor is fitted as

in the forward model (26) to retrieve the “real” optical depth at the time and place of measurement. Lastly, is the spectral response function of the sensor, which has to be convolved with the monochromatic radiance in order to mimic a real measurement.

For trace gas retrieval, one has to consider all p molecules that have non-negligible absorbance in the measured spectral region. In the case of OCO-2 observations, the only relevant molecule apart from CO2 is H2O (water vapor), meaning that there are two nonlinear fitting parameters. For the linear parameters, it is common to use approximately three reflectivity coefficients (depending on the size of the spectral interval). This means that for each spectrum, the necessary fitting parameters are

Note that even though it is physically necessary to use all of these variables in a fit, the only one of interest in this context is the molecular scaling factor of the molecule l under scrutiny, which in this case is ), as this alone contains the relevant information about its atmospheric concentration.

This together with (26) clearly fits the criteria for a separable problem, and a conventional VP algorithm from the PORT Mathematical Subroutine Library [14,23] has already been tested by Gimeno García et al. [22] and validated by Hochstaffl et al. [24].

How is this an example of problems with multiple right-handed sides? Many satellites have sensors that measure radiance simultaneously in several spectral windows, e.g., OCO-2 observes the strong (around 6250 cm−1) and weak absorption bands (around 5000 cm−1) of CO2 (cf. Figure 1). Assuming consistent model input, both spectra should deliver the same values for , but as surface reflectivity varies strongly for different wavelength regions, they each have a specific reflectivity polynomial and, therefore, . Thus, for every observation, two spectral measurement windows (of different lengths) should be fitted simultaneously.

Figure 1.

Four exemplary soundings of frame 1728 from the OCO-2 level 1b (L1B) measurement product [25], each displaying radiance spectra in units [erg/s cm sr cm] from both the strong and weak bands with 809 and 651 spectral pixels each.

This concept of multiple right-hand sides can also be transferred into a spatial dimension: Some molecules, such as carbon dioxide or methane, are very long-lived, so they are distributed relatively homogeneously in the atmosphere. This means that observations from nearby locations should all yield quite similar concentrations. Thus, they might as well be fit for one at once. Note that the assumption made about atmospheric carbon dioxide might not hold for all other absorbing molecules in the observed spectral region, such as H2O, which has rather variable concentrations across the globe. However, as their variations are less than that of surface reflectivity, and no physical insight is sought from the fit of the parameter, it can be seen as a mere auxiliary parameter for completing the model and, therefore, be treated as a “constant” nonlinear fitting variable for a group of neighboring spectra. Still, in this case, the reflectivity coefficients, , representative of the surface at the place of measurement, are distinct for every geolocation and, therefore, specific to each measured spectrum. Another possible linear model parameter, which is distinct for each spectrum, would be a constant baseline correction added to the model (26), as suggested by Gimeno García et al. [22].

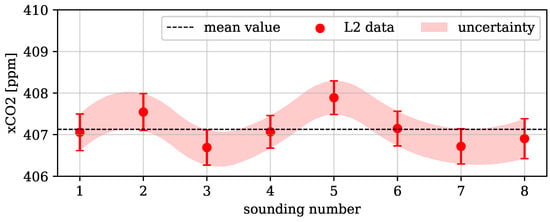

Finally, it is possible to combine both the spectral and spatial dimensions of multiple RHS fittings in trace gas retrieval. The OCO-2 satellite, for instance, always stores eight observations (“soundings”) in one so-called “frame”, with the spatial coverage not exceeding 24 km2. The concentration of carbon dioxide can be assumed to fluctuate only minimally within such an area on the globe. Figure 2 shows exemplary retrieval results of eight soundings from one OCO-2 frame. Most of the fluctuations in these results (all except for sounding number 5) could be merely due to noise in the measurements, as the mean value stays within the uncertainty for almost all of them. A fit over multiple observations, as proposed, could, therefore, help to constrain the fluctuation to a more reliable retrieval product.

Figure 2.

Total column average dry air mole fraction (i.e., “concentration”) of carbon dioxide, denoted as in the unit (parts per million), from the level 2 (L2) retrieval product of OCO-2 [26,27] for one exemplary frame (cf. Figure 1), including eight soundings.

To summarize, the OCO-2 product [25] allows for multiple RHS fits of a combined 8 (spatial) 2 (spectral) = 16 datasets (cf. Section 4.3).

The following tests were conducted with a Python version of BIRRA (Beer infrared retrieval algorithm) [22], which has been validated for the SWIR trace gas retrieval of CO by Hochstaffl et al. [24]. In this code, the Jacobian matrix of the model function (26) is set up analytically for the least squares fit, reducing numerical instabilities. BIRRA is an extension of the radiative transfer model Py4CAtS (Python for Computational Atmospheric Spectroscopy) [28], which is used to calculate the a priori total optical depths (27) needed in (26).

It must be noted that all retrievals conducted with the model described above are only supposed to evaluate the methodology and algorithms and in no way claim to represent full-fledged physical CO2 retrieval products, such as those by Crisp et al. [27]. Moreover, this technique of fitting multiple spectra measured within a certain spatial distance from each other is only reasonable as long as the assumption holds that, within an order of magnitude of the spatial resolution of the sensor, there are only small to no physically caused fluctuations/gradients in the sought trace gas concentration(s). This is, of course, not the case for localized emitters, such as power plants or biomass-burning events.

4.2. Tests with Synthetic Data

The goal of this subsection is to show the conceptual and effective differences of algorithms solving multiple RHS compared to the classical case of solving one. This analysis was conducted on the basis of synthetic spectra. Those are simulated radiance measurements generated with the radiative transfer model Py4CAtS [28]. The benefit of using this in tests is that, in the retrieval (i.e., the fitting process), there is no model error and the exact solution is known. The only deviation from a “perfect” fit is, therefore, controlled by adding noise to the modeled measurements.

In order to be representative of the later tests with real measurements, the same numbers, sizes, and types (distinct in spatial or spectral dimensions) of datasets were generated as the ones used by OCO-2. Moreover, for consistency, all test retrievals were conducted using the same number of fitting parameters, with linear ones per dataset and a total of nonlinear ones (cf. vectors in (29)). This allowed for the test cases summarized in Table 1.

Table 1.

Test cases for the analysis of fitting 1 (denoted as single) or 16 (denoted as MRHS) synthetically generated datasets, simultaneously, with both VP and NLS algorithms.

For the MRHS case, the Golub–LeVeque VP algorithm (introduced in Section 2.2) was used as a representative solver for multiple RHS problems. Tests with synthetic spectra indicated that all mentioned MRHS methods (see Table 2) yielded equal accuracy, confirming the theoretical proof offered by Golub and Pereyra [2] that the solutions found by a variable projection solver should be equivalent to those of conventional nonlinear solving methods.

Table 2.

Test cases for the analysis of real radiance spectra with both VP and NLS algorithms for multiple RHS.

For the single cases, a classical VP (based on O’Leary and Rust [6]) and a conventional NLS single-RHS solver [15] (based on Branch et al. [17]) were tested.

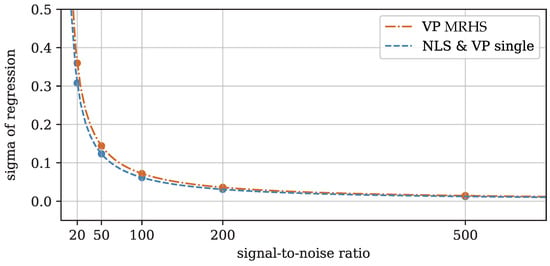

First, the fitting precision of the VP MRHS solver was compared to that of single solvers using spectra with signal-to-noise (SNR) ratios in the range of 20 to 500. One measure of the goodness of fitting results is the relative error,

compared to the true parameter values.

Figure 3 shows the distribution of these errors and the corresponding standard deviations for different SNR values. The signal-to-noise ratios achieved by satellites, including OCO-2, ranged between approximately 200 and 800 for the frames used [25]. This broad variation of OCO-2’s SNR comes from changes in the solar position and varying surface reflectivities across the orbit. As expected, both solvers achieved improved precision for increasing SNR values, since a fit becomes more accurate for less noisy data. While both single methods (NLS and VP) showed equal performances, the VP MRHS yielded standard deviations that were slightly worse. This trend is also reflected in the distributions of the relative errors, which are always more sharply distributed around zero for the single solvers than for the MRHS solver. This behavior is not surprising since a less-dimensional residual vector (coming from the shorter data vector) and fewer unknown parameters most generally leave less freedom in the fit and, therefore, lead to more precise fitting results. To phrase it differently: one can expect that, as the size of the least squares problem increases, the number of possible local minima that the fit can reach will behave accordingly.

Figure 3.

Distribution plots of the relative errors (difference between exact and fitted results) on the right and the corresponding standard deviations from the exact solution on the left for both fitting setups single and MRHS with increasing signal-to-noise ratios (SNRs).

Considering the similarly shaped distributions in Figure 3 and the fact that VP MRHS seems to improve at the same rate as NLS and VP single, MRHS fits can be viewed as equally effective. In particular, at higher SNRs, both methodologies achieve deviations from the exact results in such low orders of magnitude that the precisions of their fitted values are very comparable.

Another important measure for the accuracy of a fit is the standard deviation of the residuals (prediction errors with : vector of observations, and : fitted model), also known as the sigma of regression, defined as

where the numerator is the norm of the residual vector of the fit and the denominator represents the number of degrees of freedom (the number of data points minus the number of unknown variables). Of course, for the s right-hand side case, the number of linear parameters n becomes , and the number of data points becomes (or ), respectively.

Figure 4 shows the mean sigma of regression of all fits over the SNR. Here, the development of single and MRHS is similar to that of the errors discussed above. The mean sigmas produced by the MRHS fits are slightly higher than the ones achieved by the single solvers. Again, this is intuitive: A single solver is able to produce distinct nonlinear parameters for the noisy spectra and, therefore, has more degrees of freedom to mimic the noisy spectra. An MRHS solver, on the other hand, only has one set of nonlinear parameters for all the spectra, leading to an overall larger deviation between the “observed” and modeled data. This does not necessarily mean the latter is less accurate. On the contrary, since it is less prone to including the specific noise of the spectra into the fit, MRHS solvers could have a smoothing effect on otherwise fluctuating retrieval results (see Figure 2).

Figure 4.

Mean sigma of regression for both single and MRHS fits for different noisy spectra. The dashed lines correspond to fitted hyperbolas.

The effect of possible “overfitting” might, however, only be relevant for high noise levels (low SNR). As the sigma of regression is proportional to the noisy radiance, which is proportional to (with : normally distributed random value), it decreases by the inverse of the SNR value for both the single and MRHS fits (see fitted hyperbolas in Figure 4). In this way, as the noise increases, the sigmas of regression become more similar, such that for an SNR of 200 and higher (representative of OCO-2), the performance differences between the methodologies (single and MRHS) disappear. Thus, for reasonably good data, there is no sacrifice in the precision or accuracy of the produced results when fitting multiple RHS simultaneously instead of fitting one by one.

Now that the differences between MRHS solvers and classical single solvers are established, we need to analyze which MRHS algorithm (see Section 2.2) is the best.

4.3. Tests with Real Measurements

The goal of this subsection is to assess the performance of the new enhanced VP algorithms for multiple RHS described in Section 2.3 and Section 3.2 (VP naive, VP Golub–LeVeque, VP Kaufman) by comparing them to conventional NLS solvers.

The SciPy function used for the NLS reference approach allows the user to choose between three different nonlinear least squares algorithms (explored thoroughly for a single RHS VP algorithm by Bärligea [18]). In order to better judge the solvers’ performances, two of them were used in the tests: the trust region reflective method (‘TRF’) [17] and the Levenberg–Marquardt method (‘LM’) [1]. While the former is the most efficient, the latter can be considered the most robust [18], which could be helpful for an increasing number of variables.

In this subsection, an analysis of the test cases listed in Table 2 is conducted. For the assessment, a set of 18 OCO-2 frames was used (all measured on the 25 of May 2020 on orbit 31366a in the nadir (downward view) acquisition mode just above Australia, with a spacecraft altitude of approximately 711 km [29]); each included 8 observations in both spectral bands (cf. Figure 1), within an area of 24 km2 measured along a ground track no wider than 80 km, labeled as cloud-free (no scattering), above land (better reflectivity), and good quality, according to criteria defined by Crisp et al. [25]. With those, a few hundred test fits were performed, with the VP and NLS methods (see Table 2, with varying numbers of RHS ranging from 2 to the maximum available number of 16 (only even numbers due to the combination of the two spectral bands in one observation). Again, the fits used linear parameters per spectrum and nonlinear parameters.

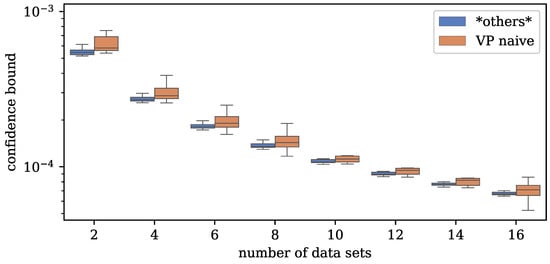

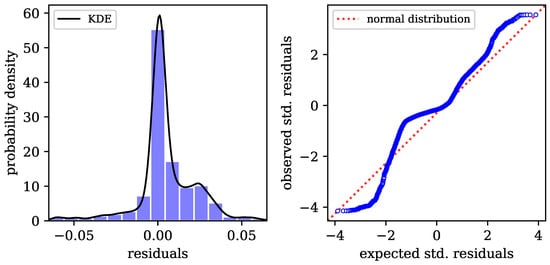

For the evaluation of accuracy, the sigma of regression, the R-Score measure, the confidence bounds of the results, and the fitted residuals, were analyzed. The sigma of regression defined in Equation (31) turned out to be equal for all of the tested methods (see the residual analysis below).

A second statistical quantity is the so-called R-score, defined as

indicating the amount of variance (the mean of the measurements ) accounted for by the fitted model . M means the cumulative size of all the datasets. R must be within , and the best possible score a fit can achieve would be 1. In the experiments, all of the discussed methods obtained R-scores of approximately 0.99. The only difference could be observed for VP GL and VP KM, which had average higher scores by 0.02% compared to all other methods, which is negligible.

In order to calculate the confidence bounds of the retrieval results, the covariance matrix

needs to be calculated, with containing the partial derivatives of the model function, with respect to the p nonlinear and linear parameters. For a VP method with multiple right-hand sides, it can be composed as follows:

Here, the first matrix is the Jacobian of the purely nonlinear function defined in (21) and (22) with respect to the nonlinear parameters , and the second matrix , defined in (17), is the Jacobian of all the linear parameters (cf. Equation (18)). For a confidence level of 95%, one can then calculate the confidence bound(s) (CB) of the retrieved parameters by

for which q represents the standard normal distribution quantile of , and the diagonal elements of are the variances of the estimated parameters .

Figure 5 shows the distribution and mean values of the calculated confidence bounds for the parameter for an increasing number of RHS. While the confidence bounds are already relatively small, they are decreasing for an increasing number of datasets, similar to before with the increasing SNR values (see Figure 4). This indicates that more data cause more accurate fitting results. However, a small difference can be observed in Figure 5 between the naive VP method and the “others” (including VP GL, VP KM, NLS TRF, and NLS LM, which all produced the same results). Apparently, the confidence bounds of the results from the naive method, though decreasing, are slightly worse than the rest. This is probably due to the different and more lavishly calculated Jacobian matrix of the naive problem (18). One can, therefore, argue that this is mainly a numerical issue and does not correspond to a lack of accuracy of the VP naive solver. In light of the measures considered above, the tested MRHS solvers all achieved equally accurate fits.

Figure 5.

Vertical box plots (with horizontal offset for better distinction) of the confidence bounds of the parameter achieved by each method for different numbers of datasets.

This was also confirmed when the residuals of the fits were analyzed, which turned out equally for all methods (cf. Table 2). The statistical diagnostics for the residuals of one exemplary VP GL fit (representative of all methods, including NLS) are shown in Figure 6. Ideally, the errors between the fitted model and measurements should be normally distributed. Due to noise and outliers in the spectral data, this distribution may, however, deviate slightly from a normal one. Yet, the fact that the residuals have their highest density around zero indicates that all the algorithms conducted reasonably good fits.

Figure 6.

Statistical diagnostics for one exemplary VP fit. The plot on the left shows the distribution of the residuals via a coarse histogram and the continuous kernel density estimate (KDE). The plot on the right is a normal probability plot, displaying the deviation of the residuals from a normal distribution.

As for the robustness, all algorithms yielded convergence rates of 100% for decent initial guesses. For a discussion on the impact of bad initial guesses, see O’Leary and Rust [6], who showed that the VP method ultimately converges more reliably than conventional NLS algorithms. This is mostly due to the fact that the former deal with a reduced nonlinear least squares problem needing only p instead of initial guesses, making the solver a lot more stable.

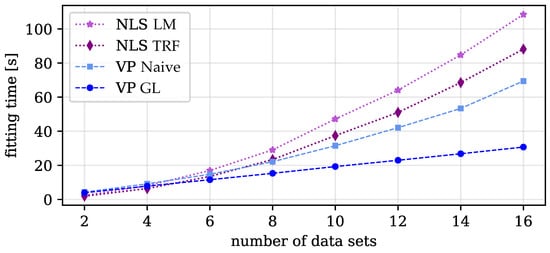

In the next step, the fitting times of all mentioned methods were analyzed to compare their computational efficiency. Figure 7 shows the mean running times for a fit for an increasing number of datasets. Here, the VP KM method is not shown since its performance is similar to VP GL. For fairly small numbers of datasets, the NLS algorithms were faster than the VP methods. This stems from the fact that these algorithms are part of the SciPy package [16], which is operationally optimized, whereas the proposed VP code was originally made as a proof of concept and is not yet optimized in the same manner. Still, this scheme changed drastically when more RHS were used. Table 3 shows the exact values for 2, 4, and 6 datasets.

Figure 7.

Comparison of the evolution of mean running times of NLS and VP methods for a fit over the number of used datasets.

Table 3.

Fitting times for VP methods compared to NLS methods for small numbers of datasets. The bold numbers mark the smallest mean values within a row.

For six RHS and more, the suggested VP GL algorithm not only becomes significantly faster than the rest, but it is also the only method with fitting times that increase linearly with the number of right-hand sides (see Figure 7), while all the other tested methods exhibit an almost quadratic evolution. This confirms that VP GL and VP KM are the most efficient methods when it comes to dealing with the rising complexity of multiple RHS problems. It also reveals the inferiority of the naive VP method compared to the ’good’ VP methods in every test. Even though the naive approach separates the problem and should, therefore, be just as stable as the good approaches, the time it needs for solving also rises quadratically with the number of fitting windows, similar to the slower (and inferior) NLS solvers. This must be due to the increasing size of the block diagonal matrix and the resulting extra costs for calculating and the Jacobian .

Comparisons of the two “good” VP algorithms (VP GL and VP KM) showed that, in all of the above categories, such as robustness or accuracy, the Kaufman approach did equally as well as the Golub–LeVeque one. The only difference could be found in the fitting times, for which the method by Kaufman [8], as predicted, was consistently faster. However, the relative improvements in the running times remained below 1% and are, therefore, almost negligible. This confirms the point made by O’Leary and Rust [6] that Kaufman’s simplification does not necessarily pose a computational benefit to modern computers anymore.

5. Discussion and Conclusions

Motivated by a real-world application in atmospheric remote sensing, a variable projection algorithm was extended to multiple right-hand sides of different sizes and nonlinear model setups. A modern MATLAB implementation by O’Leary and Rust [6] was translated into Python and modified according to the theory presented in this paper. It incorporates the ideas of Golub and LeVeque [3] and Kaufman [8] for solving separable nonlinear least squares problems with multiple RHS.

Numerical tests using synthetic data demonstrate that simultaneous fittings over multiple measurements maintain accuracy and precision compared to single dataset solvers, with potential benefits in reducing “overfitting” (with noise and outliers affecting the retrieval results) and fluctuations in the results.

A comprehensive comparison with conventional nonlinear least squares solvers using real measurements from NASA’s OCO-2 satellite [21] indicated similar accuracy among all algorithms. The most significant finding was that the variable projection methods based on Golub and LeVeque [3] and Kaufman [8] significantly outperformed all other methods in computing time, particularly as the number of datasets increased. Thus, these algorithms are deemed more efficient than conventional solvers. Furthermore, our experiments indicate that a popular simplification proposed by Kaufman [8] did not yield significant performance improvements.

The algorithm presented in this article proved to be highly effective and efficient. This indicates that the recommended modifications to the original algorithm by Golub and LeVeque [3] preserve its computational advantages. Note, that the benefits arising from a fast solver must always be considered in relation to the computational costs associated with the remaining part of the overall task. In trace gas retrieval applications, the computation time required for the forward model, i.e., radiative transfer Equation (26), can significantly exceed that of solving the inherent least squares problem. Our algorithm, thus, offers the most significant advantage in tasks where the overall performance heavily relies on the fitting process. Consequently, we endorse using this implementation not only for remote sensing, but also for other scientific problems implemented in Python with similar characteristics. The simultaneous fitting of more data can reduce fluctuations in the results, which is highly desirable in some applications.

Author Contributions

Conceptualization, A.B., P.H. and F.S.; methodology, A.B., P.H. and F.S.; software, A.B. and F.S.; validation, A.B.; formal analysis, A.B.; investigation, A.B.; data curation, A.B. and P.H.; writing—original draft preparation, A.B.; writing—review and editing, A.B., P.H. and F.S.; visualization, A.B.; supervision, P.H. and F.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The Python code for the presented variable projection algorithm, as well as examples, are available at https://atmos.eoc.dlr.de/tools/varPro/index.html (accessed on 16 May 2023). All data used for the tests with synthetic spectra can be reproduced with the radiative transfer model Py4CAtS (https://atmos.eoc.dlr.de/tools/Py4CAtS/ (accessed on 16 May 2023)) [28]. Data concerning NASA’s OCO-2 satellite are publically available at https://disc.gsfc.nasa.gov/ (accessed on 16 May 2023), provided by the Goddard Earth Sciences Data and Information Services Center (GES DISC) [26,29]. Any data arising from the numerical experiments that were used in the evaluations will be made available upon request.

Acknowledgments

We thank Thomas Trautmann and Adrian Doicu for the constructive criticism of the manuscript.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this article.

Abbreviations

The following abbreviations are used in this manuscript:

| VP | variable projection |

| RHS | right-hand side |

| MRHS | multiple right-hand sides |

| NLS | nonlinear least squares |

| OCO-2 | Orbiting Carbon Observatory 2 |

| NASA | National Aeronautics and Space Administration |

| SWIR | short-wave infrared |

| SNR | signal-to-noise ratio |

Appendix A

In this appendix, derivations for Equations (9) and (15) are presented (cf. proof by Golub and Pereyra [2]). Beginning with the full Jacobian (9): The generalized inverse satisfies the identities

Note that the second identity follows from the fact that the inverse of the symmetric matrix is also symmetric. From Equation (A2), we infer that the projector is symmetric; from Equation (A1), we infer that

By means of Equation (A3), we find

implying

with the short-hand notation . Then, using Equation (A6) and the relation , we compute the quantity as

On the other hand, because is symmetric, is also symmetric. Therefore, we have

further, in view of Equation (A7),

Finally, (A4), (A7), and (A9) yield

and the relation can be used to conclude Formula (9) presented in Section 2.1.

Regarding Equation (15): Using the orthogonality relation , we find

so that by differentiating, we have

Multiplying the above Equation from the right by and using the relation , we obtain

Now, using the identity

yields

References

- Moré, J. The Levenberg-Marquardt algorithm: Implementation and theory. In Numerical Analysis; Springer: Berlin/Heidelberg, Germany, 1978; pp. 105–116. [Google Scholar] [CrossRef]

- Golub, G.; Pereyra, V. The Differentiation of Pseudo-Inverses and Nonlinear Least Squares Problems Whose Variables Separate. SIAM J. Numer. Anal. 1973, 10, 413–432. [Google Scholar] [CrossRef]

- Golub, G.; LeVeque, R. Extensions and Uses of the Variable Projection Algorithm for Solving Nonlinear Least Squares Problems. In Proceedings of the 1979 Army Numerical Analysis and Computers Conference; Army Research Office: Durham, NC, USA, 1979. [Google Scholar]

- Hochstaffl, P.; Schreier, F.; Köhler, C.H.; Baumgartner, A.; Cerra, D. Methane retrieval from airborne HySpex observations in the short-wave infrared. Atmos. Meas. Tech. Discuss. 2022, 1–37. [Google Scholar] [CrossRef]

- Golub, G.; Pereyra, V. Separable nonlinear least squares: The variable projection method and its applications. Inverse Probl. 2003, 19, R1–R26. [Google Scholar] [CrossRef]

- O’Leary, D.; Rust, B. Variable projection for nonlinear least squares problems. Comput. Optim. Appl. 2013, 54, 579–593. [Google Scholar] [CrossRef]

- Ruhe, A.; Wedin, P. Algorithms for Separable Nonlinear Least Squares Problems. SIAM Rev. 1980, 22, 318–337. [Google Scholar] [CrossRef]

- Kaufman, L. A variable projection method for solving separable nonlinear least squares problems. BIT Numer. Math. 1975, 15, 49–57. [Google Scholar] [CrossRef]

- Kaufman, L.; Sylvester, G. Separable Nonlinear Least Squares with Multiple Right-Hand Sides. SIAM J. Matrix Anal. Appl. 1992, 13, 68–89. [Google Scholar] [CrossRef]

- Kaufman, L. Solving separable nonlinear least squares problems with multiple datasets. In Exponential Data Fitting and Its Applications; Pereyra, V., Scherer, G., Eds.; Bentham eBooks: Sharjah, United Arab Emirates, 2010; pp. 94–109. [Google Scholar] [CrossRef]

- Mullen, K.; van Stokkum, I. TIMP: An R Package for Modeling Multi-way Spectroscopic Measurements. J. Stat. Softw. 2007, 18, 1–46. [Google Scholar] [CrossRef]

- LeVeque, R. SUBROUTINE VARP2. 1985. Available online: https://www.netlib.org/opt/varp2 (accessed on 16 May 2023).

- Bolstad, J. SUBROUTINE VARPRO. 1977. Available online: https://www.netlib.org/opt/varpro (accessed on 16 May 2023).

- Fox, P.; Hall, A.; Schryer, N. The PORT Mathematical Subroutine Library. ACM Trans. Math. Softw. 1978, 4, 104–126. [Google Scholar] [CrossRef]

- SciPy v1.8.0 Manual. scipy.optimize.least_squares. Available online: https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.least_squares.html (accessed on 16 May 2023).

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 2020, 17, 261–272. [Google Scholar] [CrossRef] [PubMed]

- Branch, M.; Coleman, T.; Li, Y. A Subspace, Interior, and Conjugate Gradient Method for Large-Scale Bound-Constrained Minimization Problems. SIAM J. Sci. Comput. 1999, 21, 1–23. [Google Scholar] [CrossRef]

- Bärligea, A. Assessment of a Variable Projection Algorithm for Trace Gas Retrieval in the Short-Wave Infrared. Bachelor’s Thesis, Technical University of Munich, Munich, Germany, 2022. [Google Scholar]

- Crisp, D.; Atlas, R.; Breon, F.; Brown, L.; Burrows, J.; Ciais, P.; Connor, B.; Doney, S.; Fung, I.; Jacob, D.; et al. The Orbiting Carbon Observatory (OCO) mission. Adv. Space Res. 2004, 34, 700–709. [Google Scholar] [CrossRef]

- Eldering, A.; O’Dell, C.; Wennberg, P.; Crisp, D.; Gunson, M.; Viatte, C.; Avis, C.; Braverman, A.; Castano, R.; Chang, A.; et al. The Orbiting Carbon Observatory-2: First 18 months of science data products. Atmos. Meas. Tech. 2017, 10, 549–563. [Google Scholar] [CrossRef]

- Jet Propulsion Laboratory, California Institute of Technology. Orbiting Carbon Observatory-2. Available online: https://ocov2.jpl.nasa.gov/ (accessed on 16 May 2023).

- Gimeno García, S.; Schreier, F.; Lichtenberg, G.; Slijkhuis, S. Near infrared nadir retrieval of vertical column densities: Methodology and application to SCIAMACHY. Atmos. Meas. Tech. 2011, 4, 2633–2657. [Google Scholar] [CrossRef]

- Dennis, J.; Gay, D.; Welsch, R. Algorithm 573: NL2SOL-An Adaptive Nonlinear Least-Squares Algorithm. ACM Trans. Math. Softw. (TOMS) 1981, 7, 369–383. [Google Scholar] [CrossRef]

- Hochstaffl, P.; Schreier, F.; Lichtenberg, G.; Gimeno García, S. Validation of Carbon Monoxide Total Column Retrievals from SCIAMACHY Observations with NDACC/TCCON Ground-Based Measurements. Remote Sens. 2018, 10, 223. [Google Scholar] [CrossRef]

- Crisp, D.; Rosenberg, R.; Chapsky, L.; Keller Rodrigues, G.; Lee, R.; Merrelli, A.; Osterman, G.; Oyafuso, F.; Pollock, R.; Spiers, G.; et al. Level 1B Algorithm Theoretical Basis: Orbiting Carbon Observatory–2 & 3 (OCO-2 & OCO-3). 2021. Available online: https://docserver.gesdisc.eosdis.nasa.gov/public/project/OCO/OCO_L1B_ATBD.pdf (accessed on 16 May 2023).

- Gunson, M.; Eldering, A. OCO-2 Level 2 Geolocated XCO2 Retrieval Results and Algorithm Diagnostic Information, Retrospective Processing V10r. 2019. Available online: https://disc.gsfc.nasa.gov/datasets/OCO2_L2_Diagnostic_10r/summary (accessed on 16 May 2023).

- Crisp, D.; O’Dell, C.; Eldering, A.; Fisher, B.; Oyafuso, F.; Payne, V.; Drouin, B.; Toon, G.; Laughner, J.; Somkuti, P.; et al. Level 2 Full Physics Retrieval Algorithm Theoretical Basis: Orbiting Carbon Observatory–2 & 3 (OCO-2 & OCO-3). 2020. Available online: https://docserver.gesdisc.eosdis.nasa.gov/public/project/OCO/OCO_L2_ATBD.pdf (accessed on 16 May 2023).

- Schreier, F.; Gimeno García, S.; Hochstaffl, P.; Städt, S. Py4CAtS—PYthon for Computational ATmospheric Spectroscopy. Atmosphere 2019, 10, 262. [Google Scholar] [CrossRef]

- Gunson, M.; Eldering, A. OCO-2 Level 1B Calibrated, Geolocated Science Spectra, Retrospective Processing V10r. 2019. Available online: https://disc.gsfc.nasa.gov/datasets/OCO2_L1B_Science_10r/summary (accessed on 16 May 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).