Estimating Failure Probability with Neural Operator Hybrid Approach

Abstract

1. Introduction

2. Preliminaries

2.1. Problem Setting

2.2. Hybrid Method

Algorithm 1. Iterative Hybrid Method [7].

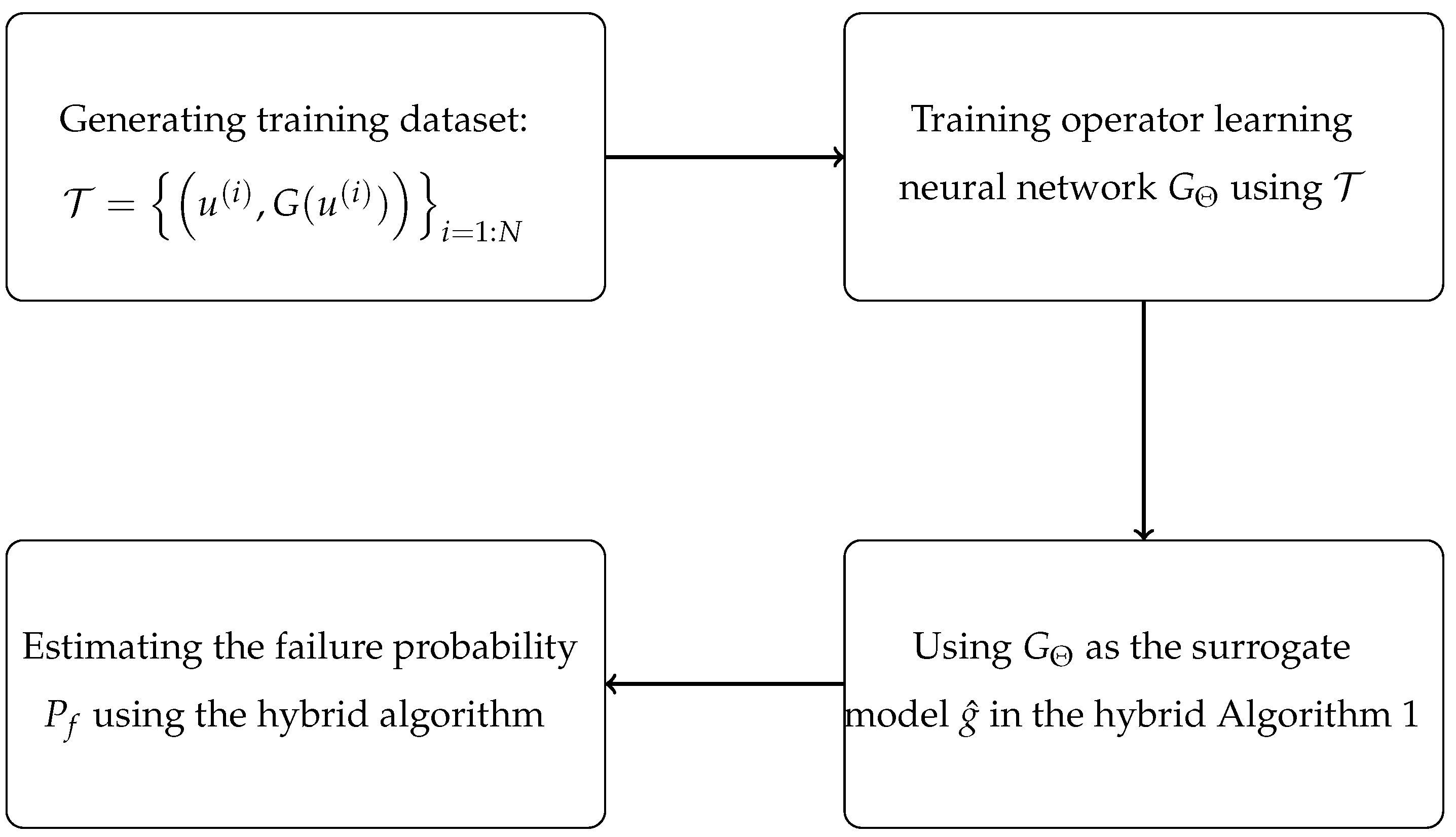
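The body of Algorithm 1 is only available as an image here, so the following is a minimal Python sketch of the iterative hybrid idea as it is commonly described for [7], not a transcription of the authors' listing. It assumes that failure corresponds to a negative limit-state value, that both models can be evaluated on an array of samples, and that the loop stops once a batch of true-model evaluations no longer changes the estimate by more than a tolerance. The names `iterative_hybrid`, `g_true`, `g_surrogate`, `batch_size`, and `tol` are hypothetical and chosen for illustration.

```python
import numpy as np


def iterative_hybrid(g_true, g_surrogate, samples, batch_size=50, tol=0.0):
    """Sketch of an iterative hybrid failure-probability estimate.

    Assumptions (not taken from the article text): failure means g < 0,
    and both callables accept an array of samples and return an array.
    """
    samples = np.asarray(samples)
    surrogate_vals = np.asarray(g_surrogate(samples))

    # Start from the surrogate-only classification of every sample.
    fail = surrogate_vals < 0.0

    # Samples whose surrogate value is closest to zero are the most likely
    # to be misclassified, so they are re-evaluated first.
    order = np.argsort(np.abs(surrogate_vals))

    p_prev = fail.mean()
    n_true = 0  # number of true-model evaluations spent so far
    for start in range(0, len(samples), batch_size):
        idx = order[start:start + batch_size]
        # Replace the surrogate labels of this batch with true-model labels.
        fail[idx] = np.asarray(g_true(samples[idx])) < 0.0
        n_true += len(idx)

        p_new = fail.mean()
        converged = abs(p_new - p_prev) <= tol
        p_prev = p_new
        if converged:
            break
    return p_prev, n_true


# Toy usage: a 1-D limit state with a slightly biased surrogate.
rng = np.random.default_rng(0)
z = rng.standard_normal(20000)
p_hybrid, n_evals = iterative_hybrid(
    g_true=lambda s: 3.0 - s,        # hypothetical "expensive" model
    g_surrogate=lambda s: 3.1 - s,   # hypothetical cheap approximation
    samples=z,
)
print(p_hybrid, n_evals)
```

In this toy case the surrogate only misclassifies samples in a thin band next to the limit state, so a small number of true-model evaluations should be enough to recover the plain Monte Carlo estimate on the same samples. The stopping rule is heuristic: a batch that happens to produce no change ends the loop even if misclassified samples remain further from the limit state.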

3. Neural Operator Hybrid Algorithm

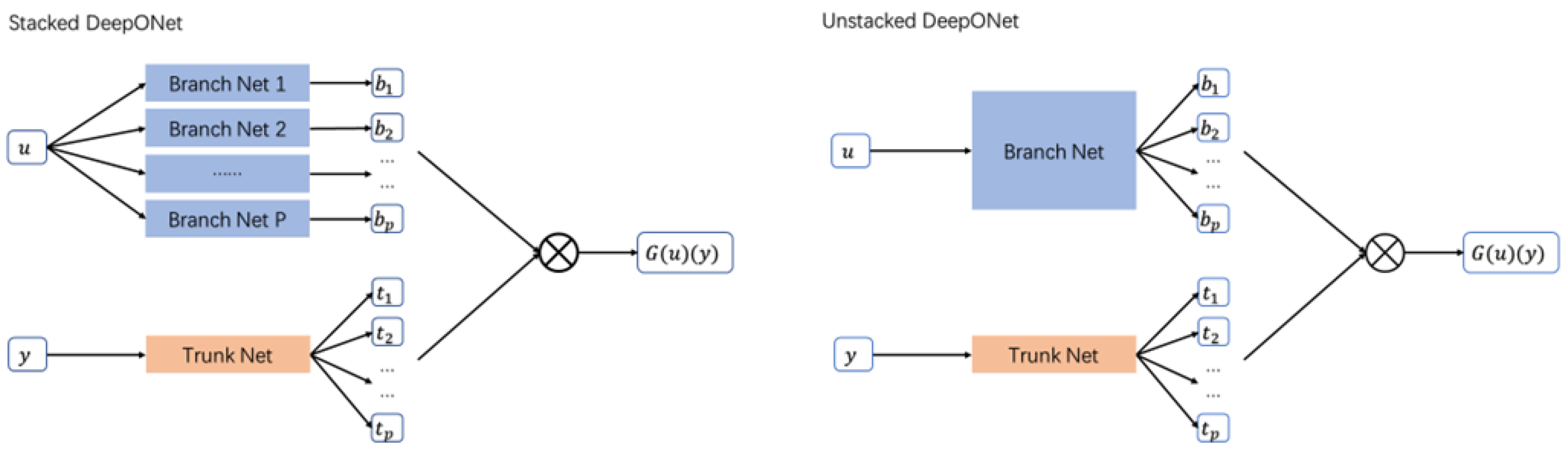

3.1. Neural Operator Learning

3.2. Neural Operator Hybrid Algorithm

4. Numerical Experiments

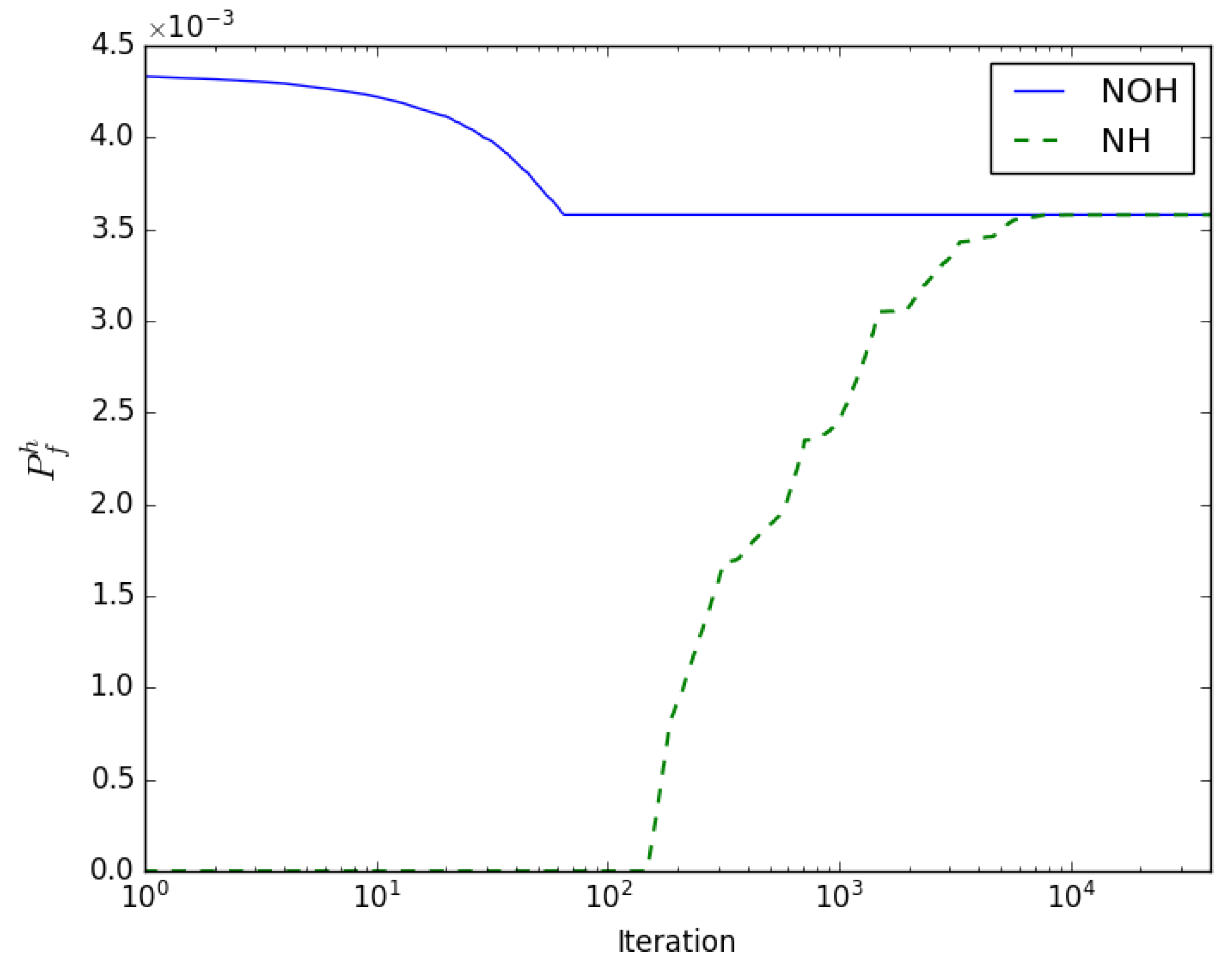

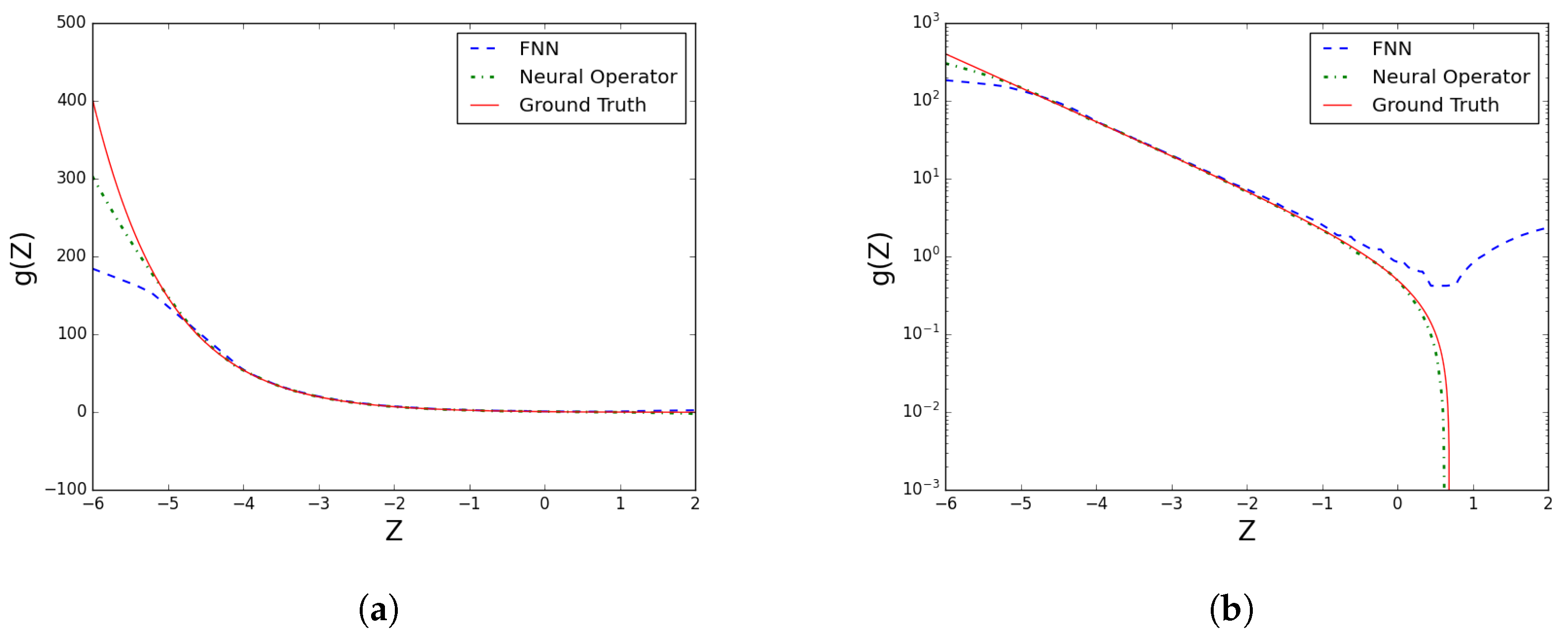

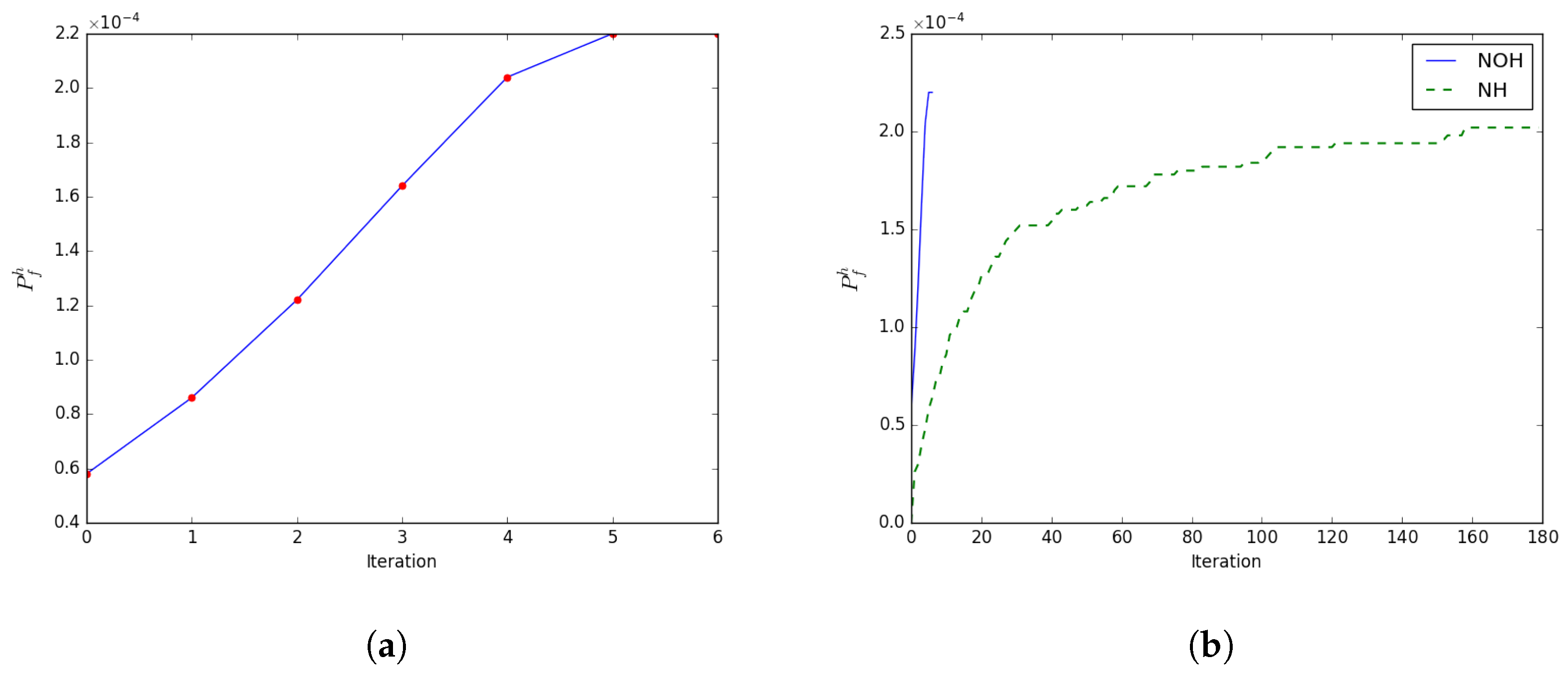

4.1. Ordinary Differential Equation

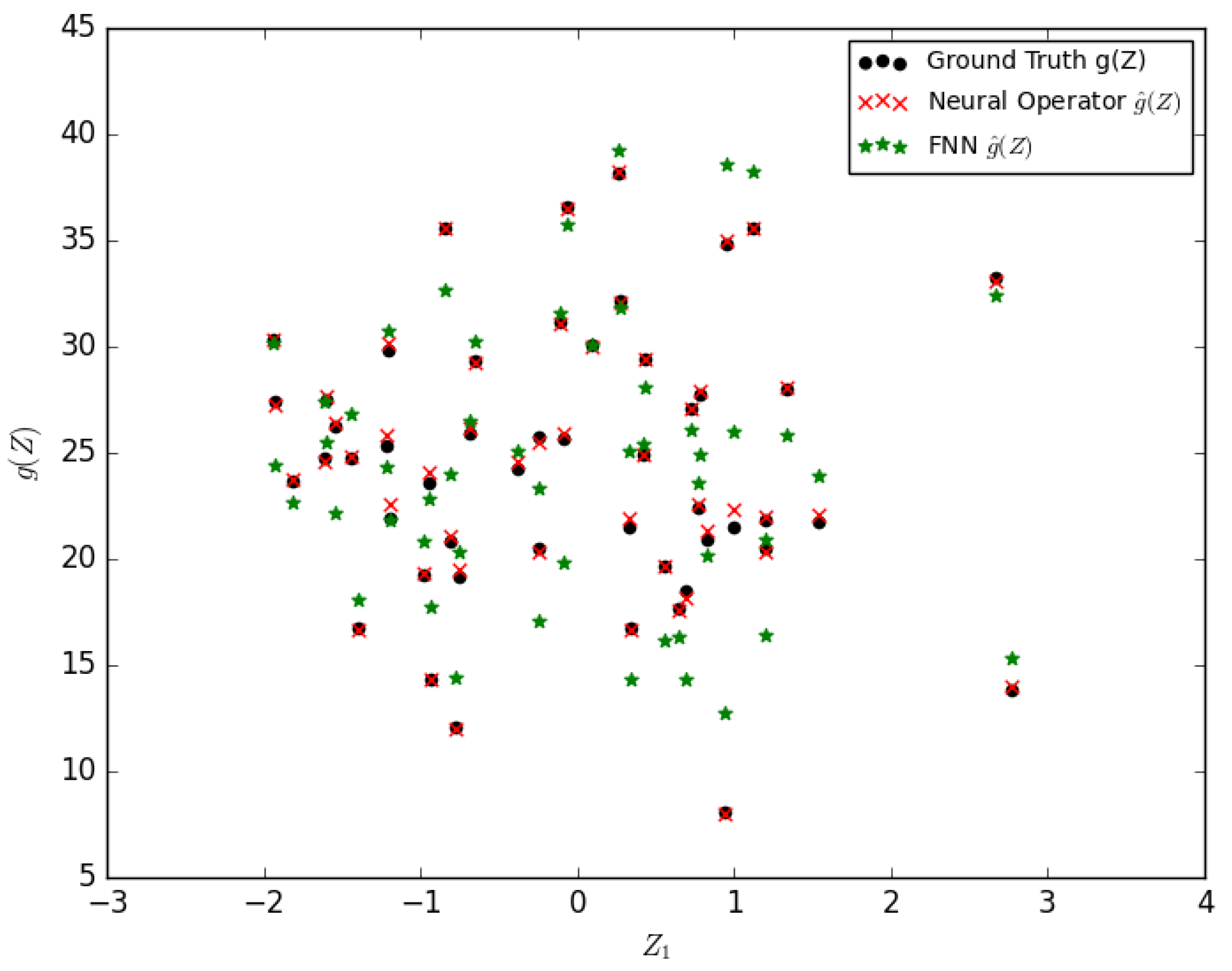

4.2. Multivariate Benchmark

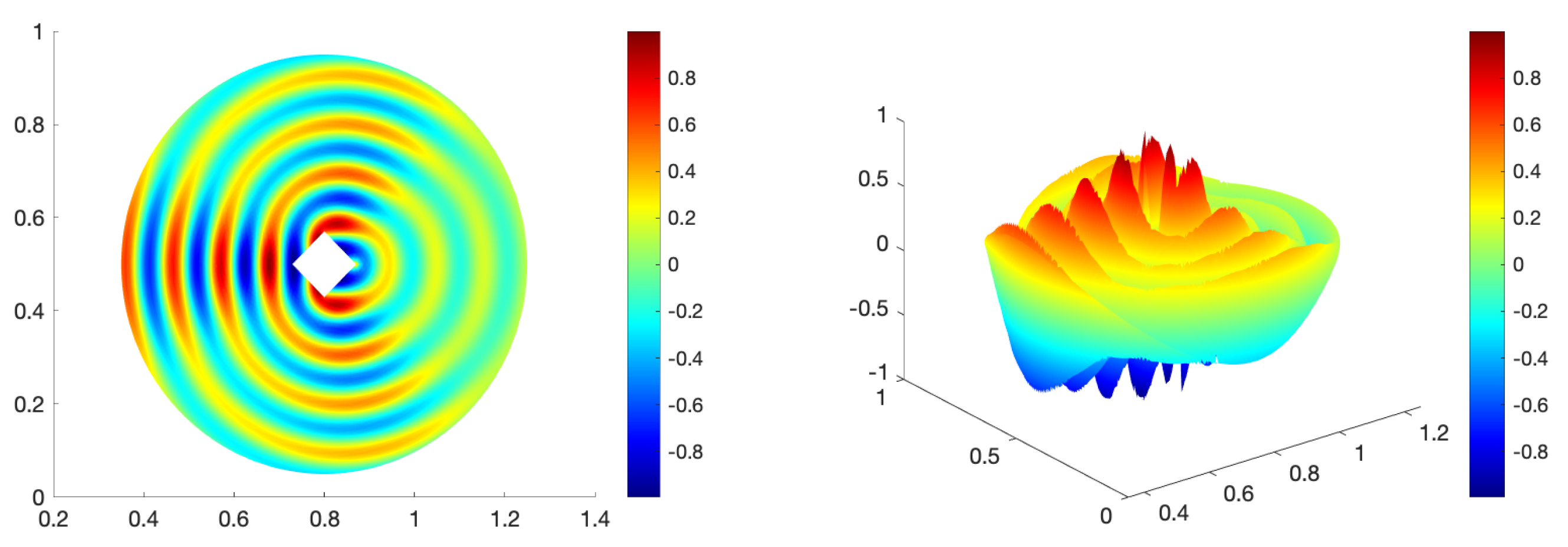

4.3. Helmholtz Equation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bjerager, P. Probability integration by directional simulation. J. Eng. Mech. 1988, 114, 1285–1302.

- Ditlevsen, O.; Bjerager, P. Methods of structural systems reliability. Struct. Saf. 1986, 3, 195–229.

- Der Kiureghian, A.; Dakessian, T. Multiple design points in first and second-order reliability. Struct. Saf. 1998, 20, 37–49.

- Hohenbichler, M.; Gollwitzer, S.; Kruse, W.; Rackwitz, R. New light on first- and second-order reliability methods. Struct. Saf. 1987, 4, 267–284.

- Rajashekhar, M.R.; Ellingwood, B.R. A new look at the response surface approach for reliability analysis. Struct. Saf. 1993, 12, 205–220.

- Khuri, A.I.; Mukhopadhyay, S. Response surface methodology. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 128–149.

- Li, J.; Xiu, D. Evaluation of failure probability via surrogate models. J. Comput. Phys. 2010, 229, 8966–8980.

- Li, J.; Li, J.; Xiu, D. An efficient surrogate-based method for computing rare failure probability. J. Comput. Phys. 2011, 230, 8683–8697.

- Ghanem, R.G.; Spanos, P.D. Stochastic Finite Elements: A Spectral Approach; Courier Corporation: Chelmsford, MA, USA, 2003.

- Xiu, D.; Karniadakis, G.E. The Wiener-Askey polynomial chaos for stochastic differential equations. SIAM J. Sci. Comput. 2002, 24, 619–644.

- Boyaval, S.; Bris, C.L.; Lelièvre, T.; Maday, Y.; Nguyen, N.C.; Patera, A.T. Reduced basis techniques for stochastic problems. Arch. Comput. Methods Eng. 2010, 4, 435–454.

- Quarteroni, A.; Manzoni, A.; Negri, F. Reduced Basis Methods for Partial Differential Equations: An Introduction; Springer: Berlin/Heidelberg, Germany, 2016.

- Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control. Signals Syst. 1989, 2, 303–314.

- Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

- Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

- Lu, L.; Meng, X.; Mao, Z.; Karniadakis, G.E. DeepXDE: A deep learning library for solving differential equations. SIAM Rev. 2021, 63, 208–228.

- Pang, G.; Lu, L.; Karniadakis, G.E. fPINNs: Fractional physics-informed neural networks. SIAM J. Sci. Comput. 2019, 41, A2603–A2626.

- Zhang, D.; Guo, L.; Karniadakis, G.E. Learning in modal space: Solving time-dependent stochastic PDEs using physics-informed neural networks. SIAM J. Sci. Comput. 2020, 42, A639–A665.

- Li, K.; Tang, K.; Li, J.; Wu, T.; Liao, Q. A hierarchical neural hybrid method for failure probability estimation. IEEE Access 2019, 7, 112087–112096.

- Lieu, Q.X.; Nguyen, K.T.; Dang, K.D.; Lee, S.; Kang, J.; Lee, J. An adaptive surrogate model to structural reliability analysis using deep neural network. Expert Syst. Appl. 2022, 189, 116104.

- Yao, C.; Mei, J.; Li, K. A Mixed Residual Hybrid Method For Failure Probability Estimation. In Proceedings of the 2022 17th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 11–13 December 2022; IEEE: New York, NY, USA, 2022; pp. 119–124.

- Chen, T.; Chen, H. Approximations of continuous functionals by neural networks with application to dynamic systems. IEEE Trans. Neural Netw. 1993, 4, 910–918.

- Chen, T.; Chen, H. Approximation capability to functions of several variables, nonlinear functionals, and operators by radial basis function neural networks. IEEE Trans. Neural Netw. 1995, 6, 904–910.

- Lu, L.; Meng, X.; Cai, S.; Mao, Z.; Goswami, S.; Zhang, Z.; Karniadakis, G.E. A comprehensive and fair comparison of two neural operators (with practical extensions) based on FAIR data. Comput. Methods Appl. Mech. Eng. 2022, 393, 1–42.

- Li, Z.; Kovachki, N.; Azizzadenesheli, K.; Liu, B.; Bhattacharya, K.; Stuart, A.; Anandkumar, A. Fourier neural operator for parametric partial differential equations. arXiv 2020, arXiv:2010.08895.

- Lu, L.; Jin, P.; Pang, G.; Zhang, Z.; Karniadakis, G.E. Learning nonlinear operators via DeepONet based on the universal approximation theorem of operators. Nat. Mach. Intell. 2021, 3, 218–229.

- Lin, C.; Li, Z.; Lu, L.; Cai, S.; Maxey, M.; Karniadakis, G.E. Operator learning for predicting multiscale bubble growth dynamics. J. Chem. Phys. 2021, 154, 104118.

- Cai, S.; Wang, Z.; Lu, L.; Zaki, T.A.; Karniadakis, G.E. DeepM&Mnet: Inferring the electroconvection multiphysics fields based on operator approximation by neural networks. J. Comput. Phys. 2021, 436, 110296.

- Mao, Z.; Lu, L.; Marxen, O.; Zaki, T.A.; Karniadakis, G.E. DeepM&Mnet for hypersonics: Predicting the coupled flow and finite-rate chemistry behind a normal shock using neural-network approximation of operators. J. Comput. Phys. 2021, 447, 110698.

- Papadrakakis, M.; Lagaros, N.D. Reliability-based structural optimization using neural networks and Monte Carlo simulation. Comput. Methods Appl. Mech. Eng. 2002, 191, 3491–3507.

- Kutyłowska, M. Neural network approach for failure rate prediction. Eng. Fail. Anal. 2015, 47, 41–48.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

- Engelund, S.; Rackwitz, R. A benchmark study on importance sampling techniques in structural reliability. Struct. Saf. 1993, 12, 255–276.

- Tabandeh, A.; Jia, G.; Gardoni, P. A review and assessment of importance sampling methods for reliability analysis. Struct. Saf. 2022, 97, 102216.

- Teixeira, R.; Nogal, M.; O’Connor, A. Adaptive approaches in metamodel-based reliability analysis: A review. Struct. Saf. 2021, 89, 102019.

| Method | | | |
|---|---|---|---|
| MCS | | | |
| NOH | 500 (Training) + 1750 (Evaluating) | 0.11% | |
| NH | - | - | - |

| Method | | | |
|---|---|---|---|
| MCS | - | | |
| NOH | 1000 (Training) + 150 (Evaluating) | 0.81% | |
| NH | 1000 (Training) + 4175 (Evaluating) | 8.92% | |

| Method | | | |
|---|---|---|---|
| MCS | - | | |
| NOH | 1000 (Training) + 100 (Evaluating) | 3.70% | |
| NH | 1000 (Training) + 875 (Evaluating) | 11.11% | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, M.; Feng, Y.; Wang, G. Estimating Failure Probability with Neural Operator Hybrid Approach. Mathematics 2023, 11, 2762. https://doi.org/10.3390/math11122762
