Abstract

Retrieval-based question answering in the automotive domain requires a model to comprehend and articulate relevant domain knowledge, accurately understand user intent, and effectively match the required information. Such systems typically employ an encoder–retriever architecture. However, existing encoders, which rely on general pretrained language models, suffer from limited specialization, insufficient awareness of domain knowledge, and biases in user intent understanding. To overcome these limitations, this paper constructs a Chinese corpus tailored to the automotive domain, comprising question–answer pairs, document collections, and multitask annotated data. A pretraining–multitask fine-tuning framework based on masked language models is then introduced to integrate domain knowledge and enhance semantic representations for downstream applications. To evaluate system performance, an evaluation dataset is created with ChatGPT, and a novel retrieval evaluation metric, mean linear window rank (MLWR), is proposed. Experimental results demonstrate that the proposed system (based on BERT-base) achieves accuracies of 77.5% and 84.75% for Hit@1 and Hit@3, respectively, on the automotive-domain retrieval-based question-answering task, with an MLWR of 87.71%. Compared to a system using a general encoder, the proposed multitask fine-tuning strategy improves Hit@1, Hit@3, and MLWR by 12.5, 12.5, and 28.16 percentage points, respectively. Compared to the best single-task fine-tuning strategy, the improvements are 0.5, 1.25, and 0.95 percentage points, respectively.

Keywords:

deep learning; pretrained language model; retrieval-based question answering; multitask learning; fine tuning

MSC:

68T50

1. Introduction

Retrieval-based question answering (retrieval-based QA) aims to understand query intents and retrieve relevant information from predefined knowledge bases or corpora to answer user inquiries. Before the emergence of language models, retrieval-based QA systems relied primarily on information retrieval techniques: users express their intents through queries, and relevant information is retrieved from large, prebuilt domain knowledge bases or document collections. The ASQ system [1] employs keyword matching to respond to user queries, while WebFountain [2] and open-domain question answering (ODQA) [3] use rules, templates, and classifiers for answer retrieval. However, traditional text-matching approaches have limited semantic comprehension and therefore struggle with semantically complex questions. The advent of the transformer architecture [4] and of the language models built on it, such as BERT [5] and the GPT series [6,7,8], has substantially improved models’ ability to capture and comprehend knowledge. Consequently, building specialized encoders from language models and domain knowledge has become the prevailing trend in retrieval-based QA.

In applications such as intelligent assistants, there is demand for specialized question answering in the automotive domain (e.g., troubleshooting procedures for specific car models and engine malfunctions). In these scenarios, the system needs to provide highly accurate and comprehensive professional answers. However, existing extractive question answering models [9,10,11] fail to meet the precision requirements of such specialized inquiries. These domain-specific answers often require compilation, revision, and continuous enrichment by domain experts and cannot be obtained solely from existing models. Recent retrieval-based QA systems commonly adopt an encoder–retriever architecture [12,13]. However, current encoders, which rely on general pretrained language models, suffer from low specialization, biases in user intent understanding, and inadequate perception of domain knowledge, while the retriever component faces the challenge of low query efficiency. To tackle these issues, our approach involves several key steps. First, we construct a comprehensive knowledge base for the automotive domain to organize domain-specific knowledge. Next, we introduce a pretraining–multitask fine-tuning framework based on masked language models. Through joint learning, we combine auxiliary task objectives and incorporate domain knowledge to enhance the model’s semantic representation and knowledge perception capabilities. Finally, we implement the retriever module of our system with the open-source library Faiss. This module performs similarity-based retrieval of user queries within the vector space of the question corpus, selects the answers corresponding to the top K most similar questions, and outputs them as the model’s response, yielding a retrieval-based QA system tailored to the automotive domain.
Additionally, to evaluate the performance of our system, we construct an evaluation dataset with ChatGPT (https://openai.com/blog/chatgpt/, accessed on 19 May 2023) through a semiautomated process, and we introduce a novel evaluation metric called mean linear window rank (MLWR).

In summary, the main contributions in this paper are as follows:

- We construct Chinese question-and-answer corpora, document corpora, and multitask annotated corpora specifically tailored to the automotive domain.

- We propose a joint learning framework with a pretraining–multitask fine-tuning architecture to incorporate domain knowledge and conduct a comparative analysis of the contributions of various auxiliary task objectives to model performance.

- We create an evaluation dataset with ChatGPT using a semiautomated approach and introduce the MLWR metric for evaluation.

2. Related Work

2.1. Encoder

In retrieval-based QA systems for the automotive domain, the goal of the encoder module is to comprehensively understand and represent user intentions while incorporating domain knowledge. Traditional text semantic representations typically rely on discrete symbolic representations, including one-hot encoding for words and the bag-of-words model and TF-IDF [14] for documents. These discrete representations are simple, concise, and cheap to compute. However, they treat features such as words in isolation, so they fail to capture underlying semantic information and cannot express the semantic relationships among different symbols. Certain approaches [15,16] use feature engineering to supplement the semantic dimensions. Nevertheless, these approaches have limitations: the required expert knowledge is difficult to acquire, demands extensive expertise, and transfers poorly across domains. Additionally, the resulting feature vectors are often extremely high-dimensional (in the millions of dimensions), giving rise to the curse of dimensionality, which restricts model complexity. These limitations motivated the development of distributed representation learning based on deep learning techniques.
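The limitation of discrete representations can be illustrated with a minimal TF-IDF sketch. It assumes pre-tokenized documents and the plain inverse document frequency log(N/df) (libraries often use smoothed variants); the toy English sentences are hypothetical. Two sentences describing the same fault with different words receive zero cosine similarity because they share no terms.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return one sparse TF-IDF vector (term -> weight) per tokenized document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in tf.items()
        })
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(term, 0.0) for term, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


docs = [
    ["engine", "will", "not", "start"],
    ["motor", "fails", "to", "ignite"],
    ["engine", "oil", "change", "interval"],
]
vecs = tfidf_vectors(docs)
print(cosine(vecs[0], vecs[1]))  # 0.0: no shared terms, despite similar meaning
```

Only exact term overlap counts: the first and third sentences get a positive score through "engine", while the semantically closer first and second sentences do not.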

Mikolov et al. proposed Word2Vec [17] based on the distributional hypothesis [18]; it learns distributed representations of words, called word vectors or word embeddings, by predicting their contexts. Pennington et al. presented GloVe [19], which directly factorizes the global word–word co-occurrence matrix to obtain word vectors and further improves training speed. However, Word2Vec, GloVe, and similar models assign each word a fixed vector. As a result, a word’s representation does not change with context, which limits their ability to capture polysemy. To address this limitation, McCann et al. proposed CoVe [20], and Peters et al. introduced the contextualized word embeddings known as ELMo [21]. These approaches condition word representations on the surrounding context, yielding dynamic representations of words in different contexts. Nevertheless, these models still have limited ability to model long-distance dependencies and to parallelize computation on lengthy texts. In recent years, pretrained language models based on the transformer architecture, such as GPT and BERT, have effectively addressed these issues. GPT uses an autoregressive approach to generate words sequentially, while BERT uses bidirectional encoding during pretraining, enabling it to model context on both sides of each token. These pretrained language models have demonstrated robust capabilities, yielding remarkable results in various natural language understanding and generation tasks [22,23]. In this paper, we propose a pretraining–multitask fine-tuning learning framework based on the BERT language model to incorporate domain knowledge and improve the performance of the encoder module.

2.2. Retriever

In retrieval-based QA systems, the encoder module transforms user queries into distributed vector representations, and a retriever then efficiently searches extensive QA corpora for matching questions and answers. Researchers have sought to optimize the index structures that organize text vectors, thereby enhancing the efficiency and accuracy of vector retrieval. Index structures such as KD-trees [24], ball trees [25,26], and K-nearest neighbor graphs [27] have been developed to expedite search in the vector space and reduce unnecessary computation. Researchers have also explored approximate search algorithms such as product quantization (PQ) [28] and locality-sensitive hashing (LSH) [29], which improve retrieval efficiency while keeping search errors small. More recent studies have introduced hierarchical approximate search algorithms, including hierarchical navigable small world (HNSW) [30], product neighbor search (PNS) [31], and HNSW-aided quantization (HAQ) [32], which further improve search accuracy and speed.

In this paper, we implement the retriever module of our system using the Faiss vector retrieval toolkit [33]. Faiss offers a variety of distance metrics for similarity computation, which can be chosen to suit specific application requirements. Regarding vector organization, Faiss supports multiple index structures, enabling faster search and efficient management of large-scale datasets. Faiss also provides several approximate search algorithms that improve retrieval speed while maintaining accuracy. Furthermore, Faiss uses parallelization techniques such as multithreading and distributed computing to accelerate search on large-scale datasets. Additionally, Faiss supports GPU acceleration, harnessing the parallel computing power of graphics processing units to expedite vector retrieval.

3. Our Approach

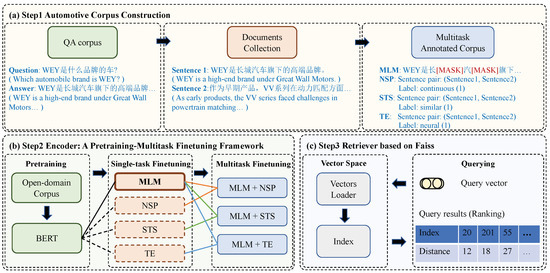

We propose a retrieval-based QA system tailored to the automotive domain. Our system consists of three key modules: an automotive domain corpus, an encoder module built on pretraining and multitask fine-tuning, and a vector retriever module that utilizes Faiss. The overall architecture of the system is illustrated in Figure 1.

Figure 1.

An overview of our retrieval-based QA system. (a) Step 1: Automotive corpus construction. (b) Step 2: The encoder module under a pretraining–multitask fine-tuning framework. (c) Step 3: The retriever module based on the Faiss library.

3.1. Corpus Construction

We constructed a QA dataset specific to the automotive domain by gathering question–answer pairs from authoritative sources, including professional databases, websites, and other relevant resources. The dataset comprises 7157 question–answer pairs, each consisting of a question and its corresponding answer. Table 1 provides an illustrative example.

Table 1.

The constructed Chinese QA corpus in the automotive domain.

Furthermore, each answer in the QA dataset is treated as an individual document, yielding a document collection focused on the automotive domain. This collection contains over 240K sentences covering a wide range of automotive knowledge, and it serves as the foundational resource (base corpus) for constructing the multitask annotated corpus in this paper. Based mainly on this base corpus, we built a multitask annotated corpus covering four tasks: masked language modeling (MLM), next sentence prediction (NSP), semantic textual similarity (STS), and textual entailment (TE). Examples for each task-oriented corpus are shown in Table 2. The construction process of each task-oriented corpus is outlined as follows:

Table 2.

Examples from the constructed multitask annotated corpus in the automotive domain.

- MLM Corpus: A subset of sentences is selected from the base corpus, and a certain proportion of words are randomly masked. The MLM corpus is created by annotating the original words in the masked positions.

- NSP Corpus: Each example consists of two sentences. A certain proportion of sentence pairs are randomly selected from the base corpus. Half of the pairs consist of consecutive sentences, labeled as 1, while the other half consist of randomly selected sentence pairs, labeled as 0.

- STS Corpus: The STS corpus is constructed by paraphrasing sentences from the base corpus. To keep the corpus balanced, positive and negative examples are generated in equal proportions. Half of the sentences in the document collection are randomly sampled and paraphrased into similar sentences using iFlytek tools. Annotators then filter the paraphrased sentences, and those judged similar to the originals are kept as positive examples (labeled 1, similar). Negative examples are generated by random matching: sentences of similar length to the positive examples are randomly sampled and paired to form negative examples (labeled 0, dissimilar). Keeping positive and negative examples consistent in length prevents sentence length from serving as a shortcut cue during model training.

- TE Corpus: To construct the TE corpus, we extracted 880,000 annotated examples from an existing Chinese textual entailment dataset. Each example includes a premise and a hypothesis and is labeled, according to the inference relationship between them, as entailment (0), neutral (1), or contradiction (2).
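The MLM, NSP, and STS construction steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors’ pipeline: tokens are single Chinese characters, the 15% masking rate is taken from Section 3.2, BERT’s 80/10/10 replacement scheme is deliberately not applied, and the relative length tolerance `tol` for STS negatives is a hypothetical parameter (the text says only "similar length"). The iFlytek paraphrasing and manual filtering are outside the sketch; `positives` stands in for their output.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, rate=0.15, rng=None):
    """MLM example: mask about `rate` of the tokens; labels keep the originals."""
    if rng is None:
        rng = random.Random(0)
    n_mask = max(1, round(len(tokens) * rate))
    positions = set(rng.sample(range(len(tokens)), n_mask))
    masked = [MASK if i in positions else t for i, t in enumerate(tokens)]
    # Labels hold the original token at masked positions and None elsewhere.
    labels = [t if i in positions else None for i, t in enumerate(tokens)]
    return masked, labels


def make_nsp_pairs(sentences, n_pairs, rng=None):
    """NSP examples: alternate consecutive pairs (label 1) and random pairs (label 0)."""
    if rng is None:
        rng = random.Random(0)
    pairs = []
    for k in range(n_pairs):
        i = rng.randrange(len(sentences) - 1)
        if k % 2 == 0:
            pairs.append((sentences[i], sentences[i + 1], 1))
        else:
            j = rng.randrange(len(sentences))
            while j == i + 1:  # never use the true next sentence as a negative
                j = rng.randrange(len(sentences))
            pairs.append((sentences[i], sentences[j], 0))
    return pairs


def make_sts_negatives(positives, sentences, tol=0.2, rng=None):
    """STS negatives: pair each original sentence with a random sentence whose
    length is within `tol` (relative) of its paraphrase's length (label 0)."""
    if rng is None:
        rng = random.Random(0)
    negatives = []
    for original, paraphrase, _ in positives:
        candidates = [s for s in sentences if s not in (original, paraphrase)]
        similar = [s for s in candidates
                   if abs(len(s) - len(paraphrase)) <= tol * len(paraphrase)]
        # Fall back to any other sentence if none has a similar length.
        negatives.append((original, rng.choice(similar or candidates), 0))
    return negatives
```

Seeding a private `random.Random` instance keeps corpus generation reproducible without touching the global random state.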

3.2. Encoder: A Pretraining–Multitask Fine-Tuning Framework

Based on the multitask corpus constructed in Section 3.1, we propose a joint learning framework with a pretraining–multitask fine-tuning architecture. The objective of this framework is to integrate auxiliary task objectives into a specialized encoder for the automotive domain. By incorporating domain knowledge, the framework adapts better to the requirements of downstream tasks in this domain.

Specifically, we propose a multitask fine-tuning strategy based on the pretrained BERT model, as illustrated in Figure 1b. First, we individually fine-tune the pretrained model on the MLM, NSP, TE, and STS tasks. We then evaluate the impact of each task on the model’s performance through comparative analysis. Based on this evaluation, we identify the fine-tuning tasks that have a positive influence on the model and designate them as main tasks. In the subsequent multitask fine-tuning stage, the main task is learned jointly with auxiliary tasks. We select auxiliary tasks that interact positively with the main task; the tasks share parameters and are trained collaboratively, which fosters mutual reinforcement among them. Through this joint learning, the model can leverage the correlations and complementarities among tasks, further improving its performance on domain-specific tasks.
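The text does not state how the main-task and auxiliary-task losses are combined. One common choice, assumed here purely for illustration, is a weighted sum over a shared encoder:

$$
\mathcal{L}(\theta_{\mathrm{enc}}, \theta_{\mathrm{MLM}}, \theta_{\mathrm{aux}}) = \mathcal{L}_{\mathrm{MLM}}(\theta_{\mathrm{enc}}, \theta_{\mathrm{MLM}}) + \lambda\,\mathcal{L}_{\mathrm{aux}}(\theta_{\mathrm{enc}}, \theta_{\mathrm{aux}})
$$

where $\theta_{\mathrm{enc}}$ denotes the shared BERT encoder parameters, $\theta_{\mathrm{MLM}}$ and $\theta_{\mathrm{aux}}$ are task-specific output heads (for example, an STS or NSP classifier), and $\lambda \ge 0$ is a hypothetical weighting coefficient. Because both terms depend on $\theta_{\mathrm{enc}}$, the encoder gradient $\nabla_{\theta_{\mathrm{enc}}}\mathcal{L} = \nabla_{\theta_{\mathrm{enc}}}\mathcal{L}_{\mathrm{MLM}} + \lambda\,\nabla_{\theta_{\mathrm{enc}}}\mathcal{L}_{\mathrm{aux}}$ carries signal from both tasks. This is the mechanism through which parameter sharing lets an auxiliary task reinforce (or, as with NSP later, interfere with) the main task.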

Regarding the specific parameter settings during the fine-tuning stage for each task, the following configurations are applied: a batch size of 16, a dropout rate of 0.1 for attention layers, a dropout rate of 0.1 for hidden layers, 768 hidden units, a learning rate of , linear decay as the gradient decay strategy, utilization of the BERTAdam optimizer with weight decay of 0.01, and a training duration of 3 epochs. For the MLM fine tuning, a masking rate of 15% is applied. The maximum sequence length is set to 512 for all tasks, except for TE, as the TE corpus contains shorter sentence pairs. Consequently, the maximum sequence length during fine tuning for TE is set to 256.
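The settings above, and the linear-decay schedule they imply, can be recorded compactly as sketched below. The dataclass and function names are hypothetical. The learning rate is deliberately left as a parameter because its value is missing from this copy of the text, and the absence of warmup is an assumption. The example shows the step count if all 880,000 TE examples were used at batch size 16 for 3 epochs.

```python
from dataclasses import dataclass


@dataclass
class FineTuneConfig:
    """Per-task settings transcribed from the text; the learning rate is not
    recoverable here and must be supplied separately."""
    batch_size: int = 16
    attention_dropout: float = 0.1
    hidden_dropout: float = 0.1
    hidden_size: int = 768
    weight_decay: float = 0.01
    epochs: int = 3
    mlm_mask_rate: float = 0.15
    max_seq_len: int = 512  # 256 for the TE task


def total_training_steps(n_examples, cfg):
    """Optimizer steps over all epochs (a final partial batch counts as a step)."""
    steps_per_epoch = -(-n_examples // cfg.batch_size)  # ceiling division
    return steps_per_epoch * cfg.epochs


def linear_decay_lr(step, total_steps, base_lr):
    """Learning rate decaying linearly from base_lr to 0; no warmup is assumed."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    return base_lr * max(0.0, 1.0 - step / total_steps)


te_cfg = FineTuneConfig(max_seq_len=256)
steps = total_training_steps(880_000, te_cfg)  # 55,000 steps/epoch x 3 epochs
```

Passing `base_lr` explicitly keeps the schedule independent of the missing learning-rate value; with `base_lr=1.0` the function returns the fraction of the initial rate remaining at each step.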

3.3. Retriever Based on Faiss

Faiss (Facebook AI Similarity Search) is an efficient open-source library for vector retrieval. It supports a variety of vector index structures and similarity calculation methods, and its efficient handling of large-scale vector collections makes it well suited to industrial-grade applications. To further enhance the retrieval efficiency of the system, we introduce a dedicated vector retrieval module built on Faiss, as depicted in Figure 1c. We first construct the vector space by encoding the questions of the QA corpus into supporting vectors with the encoder shown in Figure 1b. These vectors are then indexed with the Faiss IndexFlatL2 structure. Upon receiving a query, the retriever module converts it into a query vector and searches the vector space for the most similar targets. It then produces a ranking of the top K matching questions along with their corresponding answers.

When the IndexFlatL2 index is constructed, the squared L2 norm of each vector in the dataset is computed and stored in the index along with the vector itself. This enables fast computation of the L2 distance between the query vector and the stored vectors during the query phase. Denoting the query vector as $\mathbf{q}$ and any stored vector as $\mathbf{x}$, Equation (1) gives the squared L2 distance between $\mathbf{q}$ and $\mathbf{x}$:

$$\|\mathbf{q}-\mathbf{x}\|_2^2 = \|\mathbf{q}\|_2^2 - 2\,\mathbf{q}^{\top}\mathbf{x} + \|\mathbf{x}\|_2^2 \tag{1}$$

where $\|\mathbf{x}\|_2^2$ represents the square of the L2 norm of the stored vector, which is precomputed and stored in the index.

During the query phase, the precomputed norms are reused when calculating distances, eliminating redundant computation. After the index is constructed, the vector set forms a retrieval vector space. At query time, the vector representation of the query text is submitted to the index, and a search over the retrieval space computes the L2 distance between the query vector and every stored vector. With the IndexFlatL2 index, the system ranks the stored vectors by this distance and performs exact nearest neighbor search. It returns the indices of the top K most similar vectors and then extracts the corresponding question texts and related information based on these indices.
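The query procedure can be illustrated with a pure-Python stand-in for exact L2 search, so that it runs without Faiss installed. It is a sketch of the idea described above, not Faiss’s implementation, which relies on heavily optimized batched kernels. With the real library, the corresponding calls are `index = faiss.IndexFlatL2(d)`, `index.add(xb)`, and `D, I = index.search(xq, k)` on float32 NumPy arrays. The class name, the 2-D vectors, and the question and answer strings below are hypothetical.

```python
import heapq


class FlatL2Index:
    """Toy exact-search index: store vectors with their precomputed squared
    norms and rank stored vectors by squared L2 distance to the query."""

    def __init__(self, dim):
        self.dim = dim
        self.vectors = []
        self.sq_norms = []  # ||x||^2 per stored vector, computed once at add time

    def add(self, vectors):
        for x in vectors:
            if len(x) != self.dim:
                raise ValueError("dimension mismatch")
            self.vectors.append(list(x))
            self.sq_norms.append(sum(c * c for c in x))

    def search(self, q, k):
        """Return (distances, ids) of the k nearest stored vectors to q."""
        q_sq = sum(c * c for c in q)
        scored = []
        for idx, (x, x_sq) in enumerate(zip(self.vectors, self.sq_norms)):
            dot = sum(a * b for a, b in zip(q, x))
            scored.append((q_sq - 2.0 * dot + x_sq, idx))  # Equation (1)
        best = heapq.nsmallest(k, scored)
        return [d for d, _ in best], [i for _, i in best]


# Hypothetical 2-D "embeddings" of three indexed questions and their answers.
questions = ["How do I reset the oil light?", "Why won't the engine start?",
             "How often should tires be rotated?"]
answers = ["Answer A", "Answer B", "Answer C"]
index = FlatL2Index(dim=2)
index.add([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])

dists, ids = index.search([0.1, 0.9], k=2)
top_k = [(questions[i], answers[i]) for i in ids]  # map ids back to QA pairs
```

Because the search is exhaustive, its cost grows linearly with the number of indexed questions; for a corpus of a few thousand questions this is typically acceptable, and approximate indexes become attractive only at much larger scales.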

4. Experiments

In this section, we first create an evaluation dataset for the automotive domain. Next, we introduce evaluation metrics utilized in our experiments. Finally, we conduct a comparative analysis against competing models to substantiate the effectiveness of our system.

4.1. Evaluation Dataset

First, we created a retrieval corpus (shown in Table 3) from the question set described in Section 3.1. We then randomly sampled 100 questions from the retrieval corpus and paraphrased them with ChatGPT to create the evaluation dataset (shown in Table 4).

Table 3.

The retrieval corpus, consisting of all questions in the QA dataset.

Table 4.

The evaluation dataset in our experiments.

Specifically, we combined the rephrasing task description and the question text into prompts, which were fed into ChatGPT to generate rephrased results. These results were then manually screened to create the final evaluation dataset. The prompts were designed according to three principles: (1) role assignment: ChatGPT is assigned the role of an automotive engineer, for instance, with a prompt such as “Imagine you are an automotive engineer”; (2) rephrasing task description: the requirements of the rephrasing task are explained in detail, such as “You will receive a text related to the automotive domain and your task is to rewrite it, ensuring that the length remains similar to the original while preserving its meaning”; (3) result requirements: the desired output of ChatGPT is described, for example, with a prompt such as “Please provide 6 rephrased results for each data point and rank them in descending order based on their quality”. Human annotators then reviewed the rephrased results generated by ChatGPT, carefully examined the rephrased versions of each question, and selected the four highest-quality paraphrases. This process yielded a dataset of 400 user queries (100 questions × 4 paraphrases), as depicted in Table 4. Each user query appears in the queries field and is paired with its reference question from the retrieval corpus in the reference field. This user query dataset is used to evaluate the performance of the retrieval-based question-answering model.
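The prompt assembly and the final record layout can be sketched as follows. The three prompt strings are the English wordings quoted above. Placing the role in a system message and the rest in a user message (the message-list format used by chat-completion APIs) is an assumption, and no API call is made. `build_eval_records` is a hypothetical helper that keeps the first four paraphrases of each annotator-ranked selection, so 100 reference questions produce 400 evaluation queries.

```python
ROLE = "Imagine you are an automotive engineer."
TASK = ("You will receive a text related to the automotive domain and your task is to "
        "rewrite it, ensuring that the length remains similar to the original while "
        "preserving its meaning.")
RESULT = ("Please provide 6 rephrased results for each data point and rank them in "
          "descending order based on their quality.")


def build_messages(question):
    """Chat-style request combining role, task description, and result requirements."""
    return [
        {"role": "system", "content": ROLE},
        {"role": "user", "content": f"{TASK}\n{RESULT}\n\nText: {question}"},
    ]


def build_eval_records(references, kept_paraphrases, per_question=4):
    """Pair each manually kept paraphrase (queries) with its source question (reference)."""
    if len(references) != len(kept_paraphrases):
        raise ValueError("one list of kept paraphrases is needed per reference question")
    records = []
    for reference, paraphrases in zip(references, kept_paraphrases):
        if len(paraphrases) < per_question:
            raise ValueError(f"need {per_question} paraphrases for: {reference}")
        for query in paraphrases[:per_question]:
            records.append({"queries": query, "reference": reference})
    return records
```

Keeping the reference question alongside every query is what later allows the rank of the true target to be looked up for Hit@K, MRR, and MLWR.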

4.2. Evaluation Metrics

The hit rate at K (Hit@K) is a widely used metric for evaluating retrieval systems. It measures the ability to rank the correct target of a user query among the top K retrieval results: a query is counted as a hit if its true target appears in the top K results and as a miss otherwise. In our experiments, we used Hit@1, Hit@3, and Hit@5 to evaluate the retrieval model. Formally, Hit@K is computed as

$$\mathrm{Hit@}K = \frac{H_K}{T} \tag{2}$$

where $H_K$ is the total number of hits over all queries, and $T$ is the total number of true targets across all queries.
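A minimal sketch of the Hit@K definition above, assuming, as in the evaluation dataset of Section 4.1, that each query has exactly one true target (its reference question), so the number of true targets equals the number of queries. The ranked lists and identifiers are hypothetical.

```python
def hit_at_k(ranked_ids, targets, k):
    """Hit@K with one true target per query: hits in the top k / number of targets."""
    if len(ranked_ids) != len(targets) or not targets:
        raise ValueError("need one ranked list per target")
    hits = sum(1 for ranked, target in zip(ranked_ids, targets) if target in ranked[:k])
    return hits / len(targets)


ranked = [["q3", "q1", "q7"], ["q2", "q5", "q9"], ["q4", "q8", "q6"]]
targets = ["q1", "q2", "q0"]
print(hit_at_k(ranked, targets, 1), hit_at_k(ranked, targets, 3))
```

Here only the second query is a hit at K = 1, while the first also becomes a hit at K = 3, giving 1/3 and 2/3.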

While Hit@K is a straightforward metric, it solely focuses on the top-ranked results, disregarding other potentially valuable outcomes. To account for the ranking of all results, we utilized the mean reciprocal rank (MRR) as an evaluation metric. MRR is computed as follows:

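$$\mathrm{MRR} = \frac{1}{|Q|}\sum_{i=1}^{|Q|}\frac{1}{\mathrm{rank}_i} \tag{3}$$

where $|Q|$ represents the total number of queries, and $\mathrm{rank}_i$ denotes the rank of the true target corresponding to the $i$-th query in the retrieved results.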
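MRR can be sketched the same way. Treating a query whose true target is not retrieved at all as contributing 0 is a common convention and an assumption here; the text does not say how such queries are handled.

```python
def mean_reciprocal_rank(ranks):
    """ranks[i]: 1-based rank of query i's true target, or None if it was not retrieved."""
    if not ranks:
        raise ValueError("no queries")
    return sum(1.0 / r for r in ranks if r is not None) / len(ranks)


print(mean_reciprocal_rank([1, 2, None, 4]))  # (1 + 0.5 + 0 + 0.25) / 4 = 0.4375
```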

In addition, we present a novel evaluation metric designed around real user behavior. Because the result page of the QA system can display multiple results, the difference between a correct answer ranked first and one ranked fifth matters little to the user experience. However, MRR assigns a score difference of 0.8 between the first and fifth ranks (1 − 1/5, with a maximum score of 1). Moreover, users typically explore only the first pages of retrieval results, so a rank beyond a certain threshold means that the query has effectively failed to produce the correct result; yet MRR still assigns such a query a positive score. To address these concerns, we propose a new evaluation metric called mean linear window rank (MLWR). MLWR makes the score decrease linearly with rank and introduces a window size so that query samples whose rank falls outside the predefined window receive no credit. This approach better reflects the user experience requirements of the system and provides a comprehensive assessment of retrieval performance. Formally, MLWR is computed as follows:

where $|Q|$ represents the total number of samples, $\mathrm{rank}_i$ denotes the rank of the true target corresponding to the $i$-th query in the retrieved results, and $N$ is the window size.
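Because the MLWR formula itself is not reproduced in this copy of the text, the sketch below assumes one form consistent with the description: a score that decreases linearly from 1 at rank 1 and drops to 0 outside a window of size N. The authors’ exact normalization may differ; treat `mlwr` as a hypothetical illustration, shown next to MRR for comparison.

```python
def mlwr(ranks, window):
    """Hypothetical MLWR: a true target at rank r earns (window - r + 1) / window
    if r <= window and 0 otherwise; scores are averaged over all queries."""
    if window < 1 or not ranks:
        raise ValueError("window must be >= 1 and ranks non-empty")
    total = 0.0
    for r in ranks:
        if r is not None and 1 <= r <= window:
            total += (window - r + 1) / window
    return total / len(ranks)


def mrr(ranks):
    """Reference MRR; unretrieved targets (None) contribute 0."""
    return sum(1.0 / r for r in ranks if r is not None) / len(ranks)


for r in (1, 5, 11):
    print(r, mrr([r]), mlwr([r], window=10))
```

Under this assumed form with a window of 10, a target at rank 5 still earns 0.6 (versus 0.2 under MRR), while a target at rank 11 earns 0 (versus about 0.09 under MRR), which matches the two behaviors the metric is meant to capture.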

4.3. Comparison Results

In this section, we compare the performance of pretrained models, single-task fine-tuned models, and multitask fine-tuned models on the automotive domain QA retrieval task. By thoroughly examining the experimental results, we identify the most effective multitask fine-tuning strategy.

Using the multitask annotated corpus (Section 3.1) and the single-task fine-tuning strategy (Section 3.2), we fine-tuned the general pretrained model separately on the MLM, TE, NSP, and STS tasks. This process yielded four fine-tuned models: FT-MLM (BERT fine-tuned on the MLM task), FT-TE (BERT fine-tuned on the TE task), FT-NSP (BERT fine-tuned on the NSP task), and FT-STS (BERT fine-tuned on the STS task). We then compared these four fine-tuned models and the baseline model using the evaluation metrics introduced in Section 4.2; the results are presented in Table 5. Two text-encoding methods, CLS and MEAN, were used in the experiments. CLS encoding takes the [CLS] vector from the final layer of the encoder as the encoded text, while MEAN encoding average-pools the outputs of all tokens in the final layer and uses the pooled vector as the encoded text. Both encoding methods were applied to every fine-tuned model and compared in our experiments.
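The two encoding methods can be sketched on a toy matrix of final-layer outputs (one row per token). Whether the authors’ MEAN encoding excludes padding, or the [CLS] and [SEP] tokens, is not stated; averaging only the positions selected by the attention mask, as here, is an assumption. With Hugging Face Transformers, the corresponding inputs would be `outputs.last_hidden_state` and the tokenizer’s `attention_mask`.

```python
def cls_pool(hidden_states):
    """CLS encoding: the final-layer vector at position 0, where BERT places [CLS]."""
    return list(hidden_states[0])


def mean_pool(hidden_states, attention_mask):
    """MEAN encoding: average the final-layer vectors of non-padding tokens."""
    dim = len(hidden_states[0])
    total, count = [0.0] * dim, 0
    for vector, keep in zip(hidden_states, attention_mask):
        if keep:
            total = [t + v for t, v in zip(total, vector)]
            count += 1
    if count == 0:
        raise ValueError("attention mask selects no tokens")
    return [t / count for t in total]


# Toy final-layer outputs for [CLS], two text tokens, and one padding position.
hidden = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0], [9.0, 9.0]]
mask = [1, 1, 1, 0]
print(cls_pool(hidden))         # [1.0, 0.0]
print(mean_pool(hidden, mask))  # [3.0, 2.0]
```

Ignoring the padding row matters: including it would shift the mean toward [4.5, 3.75] and make the encoding depend on batch padding length.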

Table 5.

Comparison of Hit@K, MRR, and MLWR for single-task fine-tuning models based on BERT-base.

As shown in Table 5, the general baseline BERT model performs poorly on the automotive-domain retrieval QA task. With CLS encoding, its Hit@1 is a mere 30%, with an MLWR of 47.37%. With MEAN encoding, Hit@1 reaches 64.5%, with an MLWR of 79.97%, showing that average pooling of the encoder outputs yields better representations of domain-specific texts. Fine-tuning the BERT model on the MLM task alone, using the domain-specific corpus, yields a substantial performance boost. The FT-MLM model attains a Hit@1 of 47.25% and 77% under CLS and MEAN encoding, respectively, improvements of 17.25 and 12.5 percentage points over the BERT model. Its MLWR rises to 58.96% and 86.76%, respectively, gains of 11.59 and 6.79 percentage points over the BERT model. Thus, single-task MLM fine-tuning on the automotive domain corpus is highly effective in enhancing model performance. In contrast, Table 5 shows that fine-tuning the BERT model individually on the STS, TE, or NSP task leads to some decline in performance. Notably, the negative impact of fine-tuning on the NSP and STS tasks is relatively minor, suggesting that these tasks may still contribute positively when used as auxiliary tasks in the multitask fine-tuning framework.

This analysis guides the construction of the multitask fine-tuning strategies. Table 5 suggests that an effective multitask strategy is to select MLM as the main task and add appropriate auxiliary tasks for joint learning. To investigate potential positive interactions between MLM and other auxiliary tasks within the multitask fine-tuning framework, we fine-tuned the baseline model with the MLM+STS and MLM+NSP multitask learning methods and compared the results against the FT-MLM model to evaluate the actual contributions of the NSP and STS tasks in multitask fine-tuning. The performance of the multitask fine-tuned models is presented in Table 6.

Table 6.

Comparison of Hit@K, MRR, and MLWR for multitask fine-tuning models based on BERT-base.

As shown in Table 5 and Table 6, the multitask fine-tuning models (NSP+MLM and STS+MLM) perform substantially better than the baseline BERT model, further validating the effectiveness of fine-tuning on domain-specific task corpora. Table 6 also shows that, under MEAN encoding, the STS+MLM and NSP+MLM multitask fine-tuning models consistently outperform the corresponding STS and NSP single-task fine-tuning models, which supports selecting MLM as the main task during the multitask fine-tuning phase. However, the NSP+MLM model performs slightly worse than the single-task FT-MLM model (see Table 6), and even under single-task fine-tuning, the FT-NSP model is slightly inferior to the baseline BERT model (see Table 5). This indicates that including the NSP task in fine-tuning does not benefit downstream retrieval-based question-answering applications. Previous works, such as RoBERTa [34] and SpanBERT [35], have also found that using NSP as a pretraining objective degrades performance on question-answering tasks. This may be because NSP determines whether two sentences are consecutive, an objective not directly related to question answering, so jointly learning NSP and MLM fails to produce positive effects. For the STS task, under both CLS and MEAN encoding, the STS+MLM joint fine-tuning model consistently outperforms the single-task FT-MLM model, with Hit@1 reaching 74.25% and 77.5%, respectively, improvements of 27 and 0.5 percentage points over FT-MLM. Its MLWR reaches 87.12% and 87.71%, respectively, improvements of 28.16 and 0.95 percentage points over FT-MLM.

To examine whether our framework generalizes in mitigating the bias of pretrained models, we extended the evaluation beyond the BERT-base model to the BERT-large and RoBERTa models. As shown in Table 7, our framework generalizes effectively across these pretrained models. It is worth noting that RoBERTa, which is pretrained with the MLM objective alone, performs well with CLS encoding, achieving a Hit@1 of 69%. In contrast, in BERT-base and BERT-large, the CLS representation is trained primarily through the NSP task during pretraining, which results in suboptimal performance when the CLS encoding is used directly. These findings further suggest that NSP as a training objective hurts QA performance, whereas MLM benefits downstream QA tasks.

Table 7.

Comparison of Hit@K, MRR, and MLWR for the best single-task and multitask fine-tuning models based on BERT-large and RoBERTa.

4.4. Case Study

In this section, we conduct a case study to qualitatively analyze the competing models. Table 8 presents the answers retrieved by the competing models within the proposed framework for an example user query, “如何对高尔夫7系列车型进行自我检修?” (How to perform self-maintenance on the Volkswagen Golf 7 series?). The baseline BERT model returns a historical overview of the seventh-generation Volkswagen Golf, while the single-task FT-MLM model returns a comparison of the 308S and Golf 7 models. Neither answers the query. In contrast, the STS+MLM joint fine-tuning model responds with precise, specific steps for maintaining the FAW-Volkswagen Golf 7, correctly addressing the query. This example illustrates that the STS+MLM joint model captures query intentions more effectively than the other competing models.

Table 8.

Retrieved answers from the baseline model, the single-task FT-MLM model, and the multitask STS+MLM model.
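In an encoder–retriever pipeline, each candidate answer is encoded once, the query is encoded at search time, and candidates are ranked by vector similarity. The sketch below assumes cosine similarity and exhaustive (brute-force) search; the three-dimensional vectors and document IDs are invented stand-ins for the three answers in Table 8, not outputs of any model in the paper, and the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def retrieve(query_vec: Sequence[float], doc_vecs: Dict[str, Sequence[float]],
             k: int = 3) -> List[Tuple[str, float]]:
    """Score every candidate and return the k most similar (id, score) pairs."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Invented embeddings standing in for the three candidate answers of Table 8.
docs = {
    "golf7_history": [1.0, 0.1, 0.0],
    "308s_vs_golf7": [0.6, 0.6, 0.1],
    "golf7_maintenance_steps": [0.1, 0.2, 1.0],
}
query = [0.0, 0.1, 0.9]  # invented embedding of the self-maintenance query

for doc_id, score in retrieve(query, docs, k=3):
    print(f"{doc_id}: {score:.3f}")
```

The encoder determines where the query and answers land in the vector space; the ranking step itself is identical for all competing models. For large document collections, the exhaustive loop would typically be replaced by an approximate nearest-neighbour index (for example, HNSW or product quantization) without changing the ranking logic.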

5. Conclusions

In this paper, we first construct QA corpora, document sets, and multitask fine-tuning datasets tailored to the automotive domain. We then propose a pretraining–multitask fine-tuning framework for building an encoder that incorporates domain knowledge, enhancing the semantic representation of texts and enabling effective retrieval-based QA. The experimental results show that, within the multitask fine-tuning framework (based on ), the jointly fine-tuned STS+MLM model achieves the best performance, with Hit@1 and Hit@3 accuracies of 77.5% and 84.75%, respectively, and an MLWR of 87.71%. These results correspond to improvements of 12.5, 12.5, and 28.16 percentage points, respectively, over the baseline BERT model. Compared with the best-performing single-task fine-tuned model, FT-MLM, the improvements are 0.5, 1.25, and 0.95 percentage points, respectively.
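The gains above are absolute differences in percentage points, whereas the abstract writes them with a % sign. The short calculation below, assuming the reported differences are exact, recovers the baseline BERT and FT-MLM scores they imply and the corresponding relative gains over the baseline. These implied values are arithmetic consequences of the figures in this paragraph only, not values read from Tables 5 and 6, and may differ slightly from the tables because of rounding.

```python
# Figures stated in the paragraph above (scores in %, gains in percentage points).
best = {"Hit@1": 77.5, "Hit@3": 84.75, "MLWR": 87.71}  # STS+MLM
gain_vs_baseline = {"Hit@1": 12.5, "Hit@3": 12.5, "MLWR": 28.16}
gain_vs_ft_mlm = {"Hit@1": 0.5, "Hit@3": 1.25, "MLWR": 0.95}

# Implied scores: best score minus the stated absolute gain, rounded to 2 decimals.
implied_baseline = {m: round(best[m] - gain_vs_baseline[m], 2) for m in best}
implied_ft_mlm = {m: round(best[m] - gain_vs_ft_mlm[m], 2) for m in best}

# Relative gain over the baseline, as a percentage of the implied baseline score.
relative_gain = {m: round(100 * gain_vs_baseline[m] / implied_baseline[m], 2) for m in best}

for m in best:
    print(f"{m}: baseline {implied_baseline[m]:.2f}, FT-MLM {implied_ft_mlm[m]:.2f}, "
          f"relative gain over baseline {relative_gain[m]:.2f}%")
```

For example, a 12.5-point gain in Hit@1 on an implied baseline of 65.0% amounts to a relative improvement of roughly 19%, so the "percentage point" reading is the one consistent with the reported scores.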

Author Contributions

Conceptualization, Z.L.; methodology, Z.L. and S.Y.; writing—original draft, Z.L.; writing—review & editing, S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Natural Science Foundation of Zhejiang Province, China (Grant No. LQ22F020027) and the Key Research and Development Program of Zhejiang Province, China (Grant No. 2023C01041).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gupta, N.; Tur, G.; Hakkani-Tur, D.; Bangalore, S.; Riccardi, G.; Gilbert, M. The AT&T spoken language understanding system. IEEE Trans. Audio Speech Lang. Process. 2005, 14, 213–222. [Google Scholar]

- Ferrucci, D.G.; Brown, E.; Chu-Carroll, J.; Fan, J.; Gondek, D.; Kalyanpur, A.A.; Lally, A.; Murdock, J.W.; Nyberg, E.; Prager, J.; et al. Building Watson: An Overview of the DeepQA Project. AI Mag. 2010, 31, 59–79. [Google Scholar] [CrossRef]

- Sun, H.; Dhingra, B.; Zaheer, M.; Mazaitis, K.; Salakhutdinov, R.; Cohen, W.W. Open domain question answering using early fusion of knowledge bases and text. arXiv 2018, arXiv:1809.00782. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. [Google Scholar]

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 19 May 2023).

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners. Available online: https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (accessed on 19 May 2023).

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Proceedings of the Advances in Neural Information Processing Systems, Virtual-only Conference, 6–12 December 2020; Volume 33, pp. 1877–1901. [Google Scholar]

- Rajpurkar, P.; Zhang, J.; Lopyrev, K.; Liang, P. SQuAD: 100,000+ questions for machine comprehension of text. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Austin, TX, USA, 1–5 November 2016; pp. 2383–2392. [Google Scholar]

- Rajpurkar, P.; Jia, R.; Liang, P. Know what you don’t know: Unanswerable questions for SQuAD. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Melbourne, Australia, 15–20 July 2018; pp. 784–789. [Google Scholar]

- Li, H.; Tomko, M.; Vasardani, M.; Baldwin, T. MultiSpanQA: A dataset for multi-span question answering. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), Seattle, WA, USA, 10–15 July 2022; pp. 1250–1260. [Google Scholar]

- Yang, W.; Xie, Y.; Lin, A.; Li, X.; Tan, L.; Xiong, K.; Li, M.; Lin, J. End-to-end open-domain question answering with BERTserini. arXiv 2019, arXiv:1902.01718. [Google Scholar]

- Zhang, Q.; Chen, S.S.; Xu, D.K.; Cao, Q.Q.; Chen, X.J.; Cohn, T.; Fang, M. A Survey for Efficient Open Domain Question Answering. arXiv 2022, arXiv:2211.07886. [Google Scholar]

- Spärck Jones, K. A statistical interpretation of term specificity and its application in retrieval. J. Doc. 1972, 28, 11–21. [Google Scholar] [CrossRef]

- Baldwin, T.; De Marneffe, M.C.; Han, B.; Kim, Y.B.; Ritter, A.; Xu, W. Shared tasks of the 2015 workshop on noisy user-generated text: Twitter lexical normalization and named entity recognition. In Proceedings of the Workshop on Noisy User-Generated Text, Beijing, China, 31 July 2015; pp. 126–135. [Google Scholar]

- Derczynski, L.; Maynard, D.; Rizzo, G.; Van Erp, M.; Gorrell, G.; Troncy, R.; Petrak, J.; Bontcheva, K. Analysis of named entity recognition and linking for tweets. Inf. Process. Manag. 2015, 51, 32–49. [Google Scholar] [CrossRef]

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781. [Google Scholar]

- Harris, Z.S. Distributional structure. Word 1954, 10, 146–162. [Google Scholar] [CrossRef]

- Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543. [Google Scholar]

- McCann, B.; Bradbury, J.; Xiong, C.; Socher, R. Learned in translation: Contextualized word vectors. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 6297–6308. [Google Scholar]

- Peters, M.E.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; pp. 2227–2237. [Google Scholar]

- Devika, R.; Vairavasundaram, S.; Mahenthar, C.S.J.; Varadarajan, V.; Kotecha, K. A Deep Learning Model Based on BERT and Sentence Transformer for Semantic Keyphrase Extraction on Big Social Data. IEEE Access 2021, 9, 165252–165261. [Google Scholar] [CrossRef]

- Natarajan, B.; Rajalakshmi, E.; Elakkiya, R.; Kotecha, K.; Abraham, A.; Gabralla, L.A.; Subramaniyaswamy, V. Development of an End-to-End Deep Learning Framework for Sign Language Recognition, Translation, and Video Generation. IEEE Access 2022, 10, 104358–104374. [Google Scholar] [CrossRef]

- Bentley, J.L. Multidimensional binary search trees used for associative searching. Commun. ACM 1975, 18, 509–517. [Google Scholar] [CrossRef]

- Liu, T.; Moore, A.W.; Gray, A. New algorithms for efficient high-dimensional nonparametric classification. JMLR 2006, 7, 1135–1158. [Google Scholar]

- Omohundro, S.M. Five Balltree Construction Algorithms; International Computer Science Institute: Berkeley, CA, USA, 1989. [Google Scholar]

- Friedman, J.H.; Bentley, J.L.; Finkel, R.A. An algorithm for finding best matches in logarithmic expected time. ACM Trans. Math. Softw. 1977, 3, 209–226. [Google Scholar] [CrossRef]

- Jégou, H.; Douze, M.; Schmid, C. Product quantization for nearest neighbor search. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 117–128. [Google Scholar] [CrossRef] [PubMed]

- Indyk, P.; Motwani, R. Approximate nearest neighbors: Towards removing the curse of dimensionality. In Proceedings of the Thirtieth Annual ACM Symposium on Theory of Computing, New York, NY, USA, 24–26 May 1998; pp. 604–613. [Google Scholar]

- Malkov, Y.A.; Yashunin, D.A. Efficient and robust approximate nearest neighbor search using Hierarchical Navigable Small World graphs. arXiv 2018, arXiv:1603.09320. [Google Scholar] [CrossRef] [PubMed]

- Jégou, H.; Perronnin, F.; Douze, M.; Sánchez, J.; Pérez, P.; Schmid, C. Aggregating local descriptors into a compact image representation. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3304–3311. [Google Scholar]

- Wang, K.; Liu, Z.; Lin, Y.; Lin, W.; Han, S. HAQ: Hardware-Aware Automated Quantization with Mixed Precision. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1669–1678. [Google Scholar]

- Johnson, J.; Douze, M.; Jégou, H. Billion-scale similarity search with GPUs. IEEE Trans. Big Data 2019, 7, 535–547. [Google Scholar] [CrossRef]

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; et al. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692. [Google Scholar]

- Joshi, M.; Chen, D.; Liu, Y.; Weld, D.S.; Zettlemoyer, L.; Levy, O. SpanBERT: Improving Pre-training by Representing and Predicting Spans. Trans. Assoc. Comput. Linguist. 2020, 8, 64–77. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).