Abstract

Link prediction involves the use of entities and relations that already exist in a knowledge graph to reason about missing entities or relations. Different approaches have been proposed to date for performing this task. This paper proposes a combined use of the translation-based approach with the Convolutional Neural Network (CNN)-based approach, resulting in a novel model, called ConCMH. In the proposed model, first, entities and relations are embedded into the complex space, followed by a vector multiplication of entity embeddings and relational embeddings and taking the real part of the results to generate a feature matrix of their interaction. Next, a 2D convolution is used to extract features from this matrix and generate feature maps. Finally, the feature vectors are transformed into predicted entity embeddings by obtaining the inner product of the feature mapping and the entity embedding matrix. The proposed ConCMH model is compared against state-of-the-art models on the four most commonly used benchmark datasets and the obtained experimental results confirm its superiority in the majority of cases.

MSC:

68W11; 94-04

1. Introduction

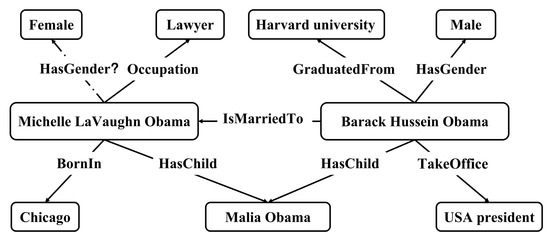

Knowledge graphs use nodes and edges to represent individuals in the real world and their relations to other individuals. A typical knowledge graph contains millions of individuals and hundreds of millions of relations. Knowledge graphs have been widely used in intelligent applications such as search engines, question-and-answer systems [1], and recommendation systems [2]. Since most knowledge graphs are either constructed collaboratively or (partially) automatically, they often suffer from incompleteness. For example, in Freebase [3] and DBpedia [4], the place of birth is missing in more than 66% of person entries. Moreover, in DBpedia, more than 58% of scientists are not linked to the predicate that describes what they are known for [5]. Therefore, many studies have focused on the knowledge graph complementation task. Link prediction, which is the prediction of missing triplets based on the existing complete triplets in a knowledge graph, is the main method to overcome the incompleteness of the knowledge graph. Figure 1 shows an example of link prediction, where a solid line represents an existing relationship and a dashed line represents a relationship that needs to be inferred. For example, the missing relation in (Michelle LaVaughn Obama, ?, female) can be inferred from (Barack Hussein Obama, hasGender, male) and (Barack Hussein Obama, isMarriedTo, Michelle LaVaughn Obama).

Figure 1.

A fragment of the knowledge graph of the YAGO3-10 dataset [6].

Knowledge graph embedding (KGE) models have been particularly successful in solving link prediction tasks [7]. Also known as Knowledge Representation Learning (KRL), KGE is inspired by the way the human brain thinks about problems and symbolizes knowledge to perform more complex reasoning and thinking by acquiring semantic information about knowledge. It is an upstream task in machine reading comprehension, intelligent question and answer generation, information retrieval, medical treatment, etc. The superior performance of KGE models can greatly improve the accuracy of generated intelligent questions and answers and can make the search engines more intelligent in returning more proper answers that people need.

Many existing KGE models obtain vector representations of entities and relations by embedding them into a low-dimensional vector space and performing complex mathematical operations on the vector representations to measure their plausibility in the real world. TransE [8] is the most classical KGE model, which embeds entities and relations into Euclidean space and measures the rationality of a triplet by computing the distance between the sum of the head entity vector $h$ and the relation vector $r$, and the tail entity vector $t$ in the Euclidean space. If the triplet is correct, the distance between $h + r$ and $t$ should be the smallest. TransE is simple and interpretable but cannot handle complex mapping patterns. In order to solve this problem, TransH [9] added the head entity to the relationship to generate their interaction features. More specifically, the head entity and tail entity are projected onto the hyperplane before the interaction to get the projection vector of the head entity $h_\perp$ and the projection vector of the tail entity $t_\perp$, such that, using the similar semantic information vector similarity principle, $h_\perp + r \approx t_\perp$ is made to hold as much as possible. This translation-based type of model, however, has limited depth and can only learn shallow features of the knowledge graph. Improving the learning ability of the model without changing the model structure can only increase the number of shallow features, which results in an inability to apply the model to large knowledge graphs. Subsequently, a Convolutional Neural Network (CNN) approach was introduced to overcome the limited depth of the shallow models. ConvE [10] was the first embedding model using a 2D convolution for knowledge graph complementation. It first embeds entities and relations into a low-dimensional vector space, then concatenates entity embeddings and relation embeddings into a 2D matrix, and finally extracts features from the matrix using 2D convolution operations.
However, this feature extraction is not very meaningful because the entities and relations are simply concatenated together without sufficient interaction. The internal elements of the entities and relations do not interact, and only a small number of elements at the connection points can interact locally. Thus, the model does not learn the deep semantic features and wastes the powerful learning ability of CNN. Therefore, in this paper, we introduce a new KGE model (ConCMH), based on a 2D convolution, which uses multiplication and inner product operations of matrices in the complex space for interaction. The innovation of ConCMH lies in the abandonment of the traditional Euclidean space and embedding entities and relationships into the complex space instead. Consisting of a real part and an imaginary part, the vector representation in the complex space can show different characteristics of entities and relationships from multiple aspects. Previously proposed KGE models all use a single interaction. For instance, ConvE simply concatenates entities and relationships, InteractE cross-concatenates the elements of entities and relationships, and TransH adds the head entity to the relationship to generate their interaction features. In contrast, the proposed ConCMH model uses a mixed interaction of addition and multiplication to further deepen the semantic connection between the head entity and relationship. Previous CNN-based models, such as ConvE, ConvKB, and InteractE, ultimately project the interaction features of the head entity and relationship into the entity embedding space through a multiplication operation to match all candidate entities. Working in the complex space, ConCMH replaces the traditional multiplication operation with an inner product operation, making the interaction features of the head entity and relationship closer to the correct tail entity.

The main contributions of this paper can be summarized as follows:

- A novel link-prediction model of a combined translation-based and CNN-based type is proposed, utilizing a mapping relationship in the complex space. This allows entity embeddings and relation embeddings to interact more fully at the element level and lets the model explore the interactions from different perspectives instead of being bound to a single additive or multiplicative interaction. In addition, the vector representation in the complex space allows the approach to take into account different properties of entities from many different aspects.

- The impact of the “test leakage” problem on model performance is numerically quantified and a practical way for reducing its effect is demonstrated.

2. Related Work

In recent years, many KGE models have been proposed, such as translation-based, CNN-based, geometry-based, path-based, etc. In this paper, we focus only on the former two types and propose a combined use of these in our ConCMH model, described in Section 4. Compared to the CNN-based models, the translation-based models have a simpler structure and use fewer parameters; these models only learn shallow features of a knowledge graph in order to be able to extend their operation to large knowledge graphs. In contrast, CNN-based models possess the ability to mine deep semantic information.

2.1. Translation-Based KGE Models

As a pioneering work in KGE, the TransE model paved the way for a variety of translation-based models. TransE embeds the triplet into a low-dimensional vector space and the relation is characterized as a translation operation between the head entity vector and the tail entity vector. The model is very efficient while also achieving very good link prediction performance. However, TransE encounters difficulties when dealing with complex relationships such as one-to-many, many-to-one, many-to-many, and symmetric relationships. For example, when dealing with the triplets (Obama, President, United States) and (Trump, President, United States), the TransE model identifies the head entity “Obama” and the head entity “Trump” as equal. However, in the real world, Obama and Trump seem to have nothing in common except that they have both served as Presidents of the United States. To solve this transfer problem, Wang et al. [9] proposed the TransH model, which introduced the concept of a hyperplane and obtained different entity representations by projecting entities onto the hyperplane.
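The limitation described above can be illustrated with a minimal sketch of a TransE-style distance. The two-dimensional vectors and the variable names below are hypothetical values chosen by hand, not embeddings from any trained model; the point is only that two head entities which both fit the same (relation, tail) pair perfectly must share the same vector.

```python
import math


def transe_distance(h, r, t):
    """Euclidean distance between the translated head (h + r) and the tail t."""
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


# Hypothetical 2-dimensional embeddings, chosen by hand for illustration only.
president_of = [1.0, 0.0]
united_states = [1.5, 0.5]
obama = [0.5, 0.5]
trump = [0.5, 0.5]  # a perfect fit to the same (relation, tail) forces the same vector
other = [0.0, 2.0]  # an unrelated head entity

d_obama = transe_distance(obama, president_of, united_states)
d_trump = transe_distance(trump, president_of, united_states)
d_other = transe_distance(other, president_of, united_states)
```

Here `d_obama` and `d_trump` are both zero, so the two heads cannot be told apart, while the unrelated entity lies farther from the tail.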

There are also tensor-decomposition-based models similar to the translation-based models, which also use a simple structure in exchange for high efficiency. RESCAL [11] is a typical representative of this subgroup. It encodes each triplet as a tensor and a matrix and handles the knowledge graph complementation problem by optimizing the bilinear product between the core tensor and the factor matrix. Although RESCAL is a powerful model, it can easily overfit during training due to the large number of parameters used. DistMult [12] reduces the number of operations in RESCAL by replacing the core tensor with a diagonal matrix, which reduces the possibility of overfitting. However, DistMult cannot encode antisymmetric relations, which is its fatal flaw. In order to solve this problem, ComplEx [13] embeds DistMult into the complex vector space and encodes the antisymmetric relations in the triplet through the anti-symmetry of the Hermitian inner product, which makes up for the deficiencies of DistMult.
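The symmetry argument above can be checked numerically. The following is a minimal sketch using the commonly stated forms of the two scoring functions (a trilinear product for DistMult and the real part of a Hermitian product for ComplEx); the embedding values are arbitrary illustrative numbers, and the function names are not taken from any library.

```python
def distmult_score(h, r, t):
    """Trilinear product sum_i h_i * r_i * t_i over real-valued embeddings."""
    return sum(hi * ri * ti for hi, ri, ti in zip(h, r, t))


def complex_score(h, r, t):
    """Real part of sum_i h_i * r_i * conj(t_i) over complex-valued embeddings."""
    return sum(hi * ri * ti.conjugate() for hi, ri, ti in zip(h, r, t)).real


# Arbitrary illustrative embeddings (assumed values, not trained ones).
h_real, r_real, t_real = [0.5, -1.0], [2.0, 1.0], [1.5, 0.25]
h_c = [1 + 2j, 0.5 - 1j]
r_c = [0 + 1j, 1 + 1j]
t_c = [2 - 1j, -1 + 0.5j]

# DistMult gives the same score when head and tail are swapped.
dm_forward = distmult_score(h_real, r_real, t_real)
dm_backward = distmult_score(t_real, r_real, h_real)

# ComplEx can give different scores for the two directions.
cx_forward = complex_score(h_c, r_c, t_c)
cx_backward = complex_score(t_c, r_c, h_c)
```

With these values the DistMult scores coincide in both directions, whereas the ComplEx scores differ, which is what lets a complex-valued model represent antisymmetric relations.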

2.2. CNN-Based KGE Models

Dettmers et al. [10] argue that previous models using simple structures and few parameters were shallow and fast models, designed to scale to large knowledge graphs. These models, however, are less expressive than deep multilayer models, and their simple structure and efficient operations potentially limit their performance. To this end, Dettmers et al. [10] proposed ConvE as a multilayer CNN model for link prediction. ConvE uses a 2D convolution and fully connected layers to handle the interactions between entities and relations, which allows it to outperform previous models. In addition, the model has high parameter efficiency, yielding the same performance as DistMult [12] and R-GCN [14] with 8-fold and 17-fold parameter reduction, respectively. Inspired by [10], Nguyen et al. [15] also realized that CNNs can capture the effective features of interactions between triplets. Based on ConvE, the input to the CNN in their model, called ConvKB, was changed to a concatenation of head entities, relations, and tail entities, and multiple filter operations were utilized in order to generate different feature mappings, which improved the model performance to some extent. Vashishth et al. [16] affirmed the application of convolutional filters to the 2D reshaping of entity and relational embeddings, but they found that the number of interactions that can be captured by ConvE and ConvKB is limited, which in turn restricts the feature-extraction capability of the CNN. Therefore, Vashishth et al. proposed the InteractE model [16] utilizing a feature permutation and a circular convolution, which alleviated this deficiency to some extent.

Table 1 summarizes the scoring functions, relational parameters, and space complexity of the described KGE models, including also the ConCMH model proposed in this paper, where and denote the entity embedding dimension and relational embedding dimension, respectively; and denote the number of entities and relations, respectively; denotes the convolution operation; denotes the conjugate of the tail entity embedding; and denote the tandem operation of the head entity embedding and relational embedding, respectively; denotes the tandem operation of head entity embedding, relational embedding, and tail entity embedding; denotes the real part of the result; denotes the nonlinear function; and denotes the inner product operation.

Table 1.

Comparison of the KGE models.

3. Background

3.1. Knowledge Graph

A knowledge graph can be represented by $G = (E, R, T)$, where $E$ denotes the set of entities, $R$ denotes the set of relations, and $T$ denotes the set of triplets $(h, r, t)$, each consisting of a head entity $h$ and a tail entity $t$, connected by a directed edge representing a relationship $r$.

3.2. Link Prediction

The goal of the link prediction task is to use the complete triplets that already exist in a knowledge graph to infer the missing triplets. Depending on the missing part, the link prediction is further divided into: (i) entity prediction, i.e., given $(h, r, ?)$ to reason about the missing tail entity, or given $(?, r, t)$ to predict the missing head entity; and (ii) relation prediction, i.e., given $(h, ?, t)$ to reason about the missing relation using the head entity and tail entity.

4. Proposed Model

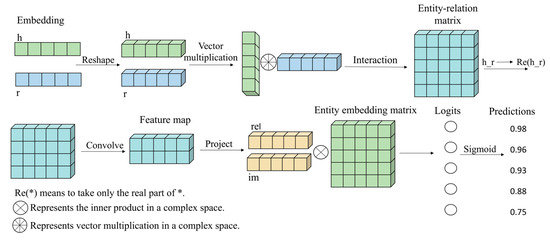

Inspired by the ConEx [17] and CTKGC [18] models, we named the proposed model ConCMH.

ConCMH includes an embedding layer, interaction layer, feature extraction layer, and output layer, as shown in Figure 2. At the embedding layer, the head entity and the relation are embedded into the complex space to obtain the corresponding head entity embedding and relation embedding. At the interaction layer, the interaction between the head entity and the relation is made at the element level, whereby the product $h \times r$ is computed in the complex space and the real part of $h \times r$ is taken to form the entity–relation interaction matrix. At the feature extraction layer, the entity–relation interaction matrix is subject to feature extraction to obtain different feature mappings, which are then transformed into vectors by a remodeling operation. At the output layer, the inner product of the feature vectors and the entity embedding matrix is computed in the complex space to match the feature vector with all candidate entity embeddings. Finally, scoring is performed with a Sigmoid function, and the score of each candidate entity is obtained.

Figure 2.

The ConCMH structure.

The ConCMH layers are described in more detail in the following sections.

4.1. Embedding Layer

Knowledge graphs describe real-world facts in the form of triplets, which are not directly mathematical, so first, we have to embed entities and relations into the complex space in order to obtain vector representations of entities and relations. Given a head entity and a relation , we generate the corresponding real part and imaginary part of the head entity embedding, and the real part and imaginary part of the relation embedding, as follows:

where denotes the number of entities, denotes the number of relations, denotes the number of head entities, denotes the entity embedding dimension, and denotes the relation embedding dimension. Each head entity embedding is represented by , and each relation embedding is represented by (, which are then sent to the interaction layer.

4.2. Interaction Layer

To establish a mapping relationship among head entity embedding, relation embedding, and tail entity embedding (so that the interaction feature between the head entity and relation is closer to the tail entity), we take the head entity embedding and relation embedding for a product operation in the complex space and then make the interaction between the real part and imaginary part of the head entity, and the real part and imaginary part of the relation embedding at the element level. The interaction matrix is generated by taking the real part of the results, as follows:

Each element in the interaction matrix is composed of head entity embedding and relation embedding, which reflects the full interaction between embeddings. Next, the interaction matrix is sent to the feature extraction layer, where effective features are extracted through a convolution operation to ensure that the model learns the deep semantic information.
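As a rough illustration of the interaction step, the sketch below assumes that the product in the complex space is an element-wise product of the two embeddings, so that each real part equals Re(h)Re(r) − Im(h)Im(r); the exact form used by ConCMH is defined by the interaction equation of the paper and may differ. The helper names, the embedding values, and the 2 × 2 target shape are hypothetical.

```python
def interaction_real_part(h, r):
    """Element-wise complex product of h and r, keeping only the real part."""
    return [(hi * ri).real for hi, ri in zip(h, r)]


def reshape_2d(values, rows, cols):
    """Arrange a flat list into a rows x cols matrix (list of lists)."""
    if rows * cols != len(values):
        raise ValueError("rows * cols must equal the number of values")
    return [values[i * cols:(i + 1) * cols] for i in range(rows)]


# Hypothetical 4-dimensional complex embeddings (real part + imaginary part).
h = [1 + 2j, 0.5 - 1j, -1 + 0j, 2 + 2j]
r = [0 + 1j, 1 + 1j, 3 - 2j, 0.5 + 0.5j]

features = interaction_real_part(h, r)
interaction_matrix = reshape_2d(features, 2, 2)
```

Each entry mixes a multiplication of the real parts with a multiplication of the imaginary parts, combined by a subtraction, which is one way to read the mixed additive and multiplicative interaction described in the text.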

4.3. Feature Extraction Layer

At the feature extraction layer, the interaction matrix is subject to feature extraction by a 2D convolution operation to obtain more effective features forming the feature mapping tensor , where is the number of feature mappings with dimensions and . Then, tensor is remodeled into a vector by . Finally, vector is sent to a fully connected network, and the feature vector is generated by the weight parameter and the offset parameter , as follows:

where denotes the convolution operation, denotes the convolution kernel, the outermost denotes the linear unit function (ReLU), and the inner denotes the interactive operation of the head entity embedding and relation embedding . To speed up the model convergence, we set the convolution kernel to 3 × 200, taking into account the experience obtained with the CTKGC model [18].
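The pipeline of this layer (2D convolution, non-linearity, remodeling into a vector, fully connected projection) can be sketched in plain Python as follows. This is a toy illustration under stated assumptions: the 3 × 3 input, the 2 × 2 kernels, and the weight and offset values are made up, ReLU is assumed after both the convolution and the linear map, and the kernel is far smaller than the 3 × 200 kernel used in the paper.

```python
def conv2d_valid(matrix, kernel):
    """2D cross-correlation without padding (stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(matrix) - kh + 1
    out_w = len(matrix[0]) - kw + 1
    return [
        [
            sum(matrix[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(x):
    return max(0.0, x)


def extract_features(matrix, kernels, weight, bias):
    """Convolve with every kernel, apply ReLU, flatten, then apply a linear map and ReLU."""
    flat = [relu(v) for k in kernels for row in conv2d_valid(matrix, k) for v in row]
    return [relu(sum(w * x for w, x in zip(w_row, flat)) + b)
            for w_row, b in zip(weight, bias)]


# Toy interaction matrix and two toy kernels (all values are assumptions).
interaction = [[1, 0, -1], [2, 1, 0], [0, -1, 3]]
kernels = [[[1, 0], [0, 1]], [[0, -1], [1, 0]]]
weight = [[1.0] * 8, [-1.0] * 8]  # 2 output features, 8 flattened inputs
bias = [0.5, 0.0]

feature_vector = extract_features(interaction, kernels, weight, bias)
```

Two kernels applied to a 3 × 3 input each give a 2 × 2 feature map, so the flattened vector has 8 entries before the fully connected projection.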

4.4. Output Layer

At the output layer, ConCMH divides the output of the feature extraction layer into two parts according to the size of the entity embedding dimension and relation embedding dimension , which are used as the real part and imaginary part of the feature vector, respectively. To reduce the training time of the model and improve its efficiency, the evaluation method of “K vs. N” is applied. The feature vector is projected into the entity embedding space by a parameterized linear transformation of matrix , where denotes the number of features output by the convolution operation, and denote the number of rows and columns of the feature mapping matrix, respectively, and denotes the sum of and . In addition, the inner product of the feature vectors is obtained with all entities to generate tail entity prediction objects . Finally, a Sigmoid function is used to calculate the score of each candidate triplet to find the candidate tail entity with the highest score. The scoring function of ConCMH is shown below:

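We use the Adam optimizer to train the proposed ConCMH model and optimize the loss generated by a given triplet by minimizing the following binary cross-entropy loss function $\mathcal{L}$:

$$\mathcal{L}(p, y) = -\frac{1}{N} \sum_{i=1}^{N} \left( y_i \log p_i + (1 - y_i) \log (1 - p_i) \right)$$

where $p$ denotes the vector of predicted scores, $y$ denotes the label vector, and $N$ is the number of scored candidates. When $y_i = 0$, the triplet is an incorrect triplet; and when $y_i = 1$, the triplet is a correct triplet reflecting the real world.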
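The scoring and loss steps described in this section can be sketched as follows: raw candidate scores are passed through a Sigmoid function and compared with 0/1 labels using binary cross-entropy averaged over the candidates. The raw scores and labels below are made-up values, and averaging over the candidates is an assumed normalization.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def bce_loss(probs, labels):
    """Binary cross-entropy averaged over all scored candidates."""
    total = 0.0
    for p, y in zip(probs, labels):
        total += y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return -total / len(probs)


# Hypothetical raw scores for three candidate tail entities; only the first is correct.
raw_scores = [2.0, -1.0, 0.0]
labels = [1.0, 0.0, 0.0]

probs = [sigmoid(s) for s in raw_scores]
loss = bce_loss(probs, labels)
best_candidate = max(range(len(probs)), key=lambda i: probs[i])
```

The candidate with the highest Sigmoid score (`best_candidate`) is the predicted tail entity, and the loss shrinks as the correct candidate's score approaches 1 and the others approach 0.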

5. Experiments and Results

5.1. Datasets

Four datasets, namely, WN18 [8], FB15k [8], the Unified Medical Language System (UMLS) [19], and YAGO3-10 [10], were considered for use in the experiments conducted for performance comparison of the proposed ConCMH model with the state-of-the-art (SOTA) models.

WN18 is a subset of WordNet, a broad-coverage English lexical-semantic network aggregated by researchers at Princeton University’s Cognitive Science Laboratory. There are 151,442 triplets in this strict-hierarchy dataset, containing 40,943 entities and 18 relations (the majority of which are hypernym and hyponym relations). FB15k is a subset of Freebase, consisting of 14,951 entities and 1345 relations mainly describing facts about movies, actors, awards, sports, and sports teams. UMLS is dedicated to the medical field, whereby 135 entities mainly describe a wide variety of diseases and 46 relations correspond to the means of treatment of diseases. YAGO3-10, a subset of YAGO3, contains 123,182 entities and 37 relations mainly used to describe various attributes of people, such as height, gender, and occupation.

As mentioned in [20], due to the “test leakage” problem, a large number of test triplets can be obtained in WN18 and FB15k simply by reversing the triplets in the training set and, because of this, simple models can get good results. To avoid this drawback, a large number of triplets possessing inverse relations in the WN18 and FB15k training sets were removed, resulting in the optimized subsets WN18RR [10] and FB15k-237 [21], respectively, whose details are shown in Table 2 along with details of the other datasets considered for use in the experiments.

Table 2.

Details of datasets considered for use in the experiments.

5.2. Evaluation Metrics

In the link prediction task, the model performance is generally measured by using four main metrics, namely, the mean reciprocal rank (MRR), Hits@10, Hits@3, and Hits@1, all having a range of values between 0 and 1. MRR is the average, over all test triplets, of the reciprocal of the rank assigned to the correct entity, whereas Hits@X is the proportion of test triplets for which the correct entity appears among the top X candidates. Higher values of MRR and Hits@X are indications of better model performance.
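The two metrics can be computed from the rank of the correct entity in each test query, as in the following minimal sketch. The list of ranks is a made-up example, and the function names are chosen for illustration.

```python
def mean_reciprocal_rank(ranks):
    """Average of 1 / rank over all test queries (ranks start at 1)."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at(ranks, k):
    """Fraction of test queries whose correct entity is ranked in the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


# Hypothetical ranks of the correct entity for five test triplets.
ranks = [1, 2, 4, 10, 20]

mrr = mean_reciprocal_rank(ranks)
hits1 = hits_at(ranks, 1)
hits3 = hits_at(ranks, 3)
hits10 = hits_at(ranks, 10)
```

For these ranks, MRR is (1 + 1/2 + 1/4 + 1/10 + 1/20) / 5 = 0.38, while Hits@1, Hits@3, and Hits@10 are 0.2, 0.4, and 0.8, respectively.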

5.3. Experimental Setup

To limit the computational effort, a grid search over a restricted range of hyperparameter values was used. The search ranges, based on the optimal hyperparameter settings of CTKGC, were as follows: entity embedding dimension and relational embedding dimension d:{50,200,400}, learning rate Lr:{0.01,0.003,0.001,0.0005}, label smoothing ratio Ls:{0,0.1,0.2,0.3}, batch size Bs:{128,256,512,1000}, feature dropout DropOut1:{0.1,0.2,0.3}, and hidden layer dropout DropOut2:{0.1,0.2,0.3}. Drawing on the experience of CTKGC, in order to speed up the model convergence, the convolution kernel was set to 3 × 200. For obtaining the best possible results on each dataset, the following hyperparameter values were used: for FB15k-237: Lr = 0.001, Bs = 1000, DropOut1 = 0.2, and DropOut2 = 0.2; for WN18RR: Lr = 0.001, Bs = 1000, DropOut1 = 0.2, and DropOut2 = 0.5; for UMLS: Lr = 0.001, Bs = 128, DropOut1 = 0.2, and DropOut2 = 0.3; for YAGO3-10: Lr = 0.01, Bs = 1000, DropOut1 = 0.2, and DropOut2 = 0.3.
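To give a sense of the size of this search, the sketch below enumerates the Cartesian product of the listed ranges. The dictionary keys are hypothetical names, and training and evaluating each configuration is left out.

```python
from itertools import product

# Search ranges as listed in the text; the key names are illustrative.
search_space = {
    "embedding_dim": [50, 200, 400],
    "learning_rate": [0.01, 0.003, 0.001, 0.0005],
    "label_smoothing": [0, 0.1, 0.2, 0.3],
    "batch_size": [128, 256, 512, 1000],
    "feature_dropout": [0.1, 0.2, 0.3],
    "hidden_dropout": [0.1, 0.2, 0.3],
}


def iter_configs(space):
    """Yield one dict per combination of hyperparameter values."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


configs = list(iter_configs(search_space))
num_configs = len(configs)
```

The full grid contains 3 × 4 × 4 × 4 × 3 × 3 = 1728 configurations per dataset, which is why restricting the ranges matters for the computational cost.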

5.4. Link Prediction: Results and Analysis

The link prediction performance of the proposed ConCMH model was compared to that of SOTA models, based on the UMLS, YAGO3-10, FB15k-237, and WN18RR datasets. Because of the “test leakage” problem, described in Section 5.1, the link prediction experiments were carried out on the optimized subsets FB15k-237 and WN18RR (instead of the full datasets FB15k and WN18), which were constructed by deleting the inverse relations. The obtained results are shown in Table 3, Table 4, Table 5 and Table 6 (the best value achieved among the models for a particular metric is shown in bold). Except where noted, the results in these tables are taken from the indicated reference sources. Some data in Table 4, Table 5 and Table 6 are missing because these are not found in the corresponding reference source.

Table 3.

Link prediction performance of models on the UMLS dataset.

Table 4.

Link prediction performance of models on the YAGO3-10 dataset.

Table 5.

Link prediction performance of models on FB15k-237 dataset.

Table 6.

Link prediction performance of models on the WN18RR dataset.

On the FB15k-237 dataset (Table 5), the proposed ConCMH model outperforms all SOTA models based on all evaluation metrics. On the UMLS dataset (Table 3), ConCMH outperforms all SOTA models in terms of Hits@10 (reaching the maximum possible value of 1) and Hits@3, but it takes second and fifth places according to Hits@1 and MRR, respectively. On the WN18RR dataset (Table 6), in terms of Hits@3 and Hits@1, the proposed ConCMH model is the leader among all models considered, but it takes second (shared) place according to Hits@10 and drops to ninth place according to MRR. On the YAGO3-10 dataset (Table 4), ConCMH is the leader according to Hits@10 and Hits@1, but it takes fifth and eighth places according to Hits@3 and MRR, respectively.

The reason for the ConCMH’s average performance, according to some evaluation metrics, on the UMLS, WN18RR, and YAGO3-10 datasets is that it uses a mixed interaction of addition and multiplication to deepen the semantic connection between entities and relationships, generating interaction features with deep semantic information. In addition, the 2D convolution is good at learning subtle differences between interaction features but ignores the surface information of the knowledge graph. In our future research, we will explore how ConCMH can learn surface information in knowledge graphs through convolution operations.

Generally, the proposed ConCMH model is not only superior to the translation-based models, such as TransE, DistMult, and ComplEx, but compared to the CNN-based models, such as ConvE, ConvKB, and CTKGC, its performance is also much better. The reason for this is that ConCMH combines the advantages of these two types of models so that it can not only learn the shallow features of the triplet surface but also can learn the semantic information hidden in the depth of a knowledge graph thanks to the use of a CNN connected deep structure. The biggest difference between ConCMH and CTKGC is that the former embeds entities and relations in the complex space, whereas the latter embeds entities and relations in the Euclidean space. As noted in [10], the only way to improve the expressiveness of shallow models by increasing the number of features is to increase the embedding size. Compared with that in Euclidean space, the vector embedded in the complex space is composed of a real part and an imaginary part, which actually increases the embedding size of the model invisibly, so the real part and imaginary part can reflect the different attributes of an entity from different angles, making the entity rich in features. ConCMH uses convolution operators in an efficient and fast parameter manner to form a deep network, which lowers the computational burden caused by increasing the embedding size. At its interaction layer, ConCMH combines addition, which is common for the translation-based models, with multiplication interaction, which is common for the CNN-based models, which not only retains the principle of similar semantic vectors in the Euclidean space but also gives full play to the nature of parameter sharing of convolution operators, thus linking the entities and relations in the triplets more closely. 
In addition, the entity–relation interaction matrix is subject to the real part operation Re(·), and some irrelevant feature interactions are discarded, so that the performance and efficiency of the model can be further improved, making it applicable to large-scale knowledge graphs.

5.5. “Test Leakage” Problem: Results and Analysis

Before the “test leakage” problem was found, the WN18 and FB15k datasets were used to evaluate the performance of the KGE models. In 2015, Toutanova and Chen explained for the first time that the inverse relations in WN18 and FB15k may lead to this problem. For example, if the test set contains a triplet $(h, r, t)$ and the training set contains its inverse triplet $(t, r^{-1}, h)$, then the test triplet can be easily mapped to the training triplet. In cases such as this, a model only learns which relations are inversions of other relations, rather than learning the semantic information of the triplets.
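A simple way to see how such leakage can be detected is sketched below: a test triplet counts as leaked when its reversed form, under a known inverse relation, already appears in the training set. The relation names, the `inverse_of` mapping, and the toy triplets are hypothetical; in practice the inverse pairs would first have to be identified from the data.

```python
def find_leaked(train, test, inverse_of):
    """Return test triplets (h, r, t) whose inverse (t, r_inv, h) is in the training set."""
    train_set = set(train)
    leaked = []
    for h, r, t in test:
        r_inv = inverse_of.get(r)
        if r_inv is not None and (t, r_inv, h) in train_set:
            leaked.append((h, r, t))
    return leaked


# Hypothetical inverse-relation pairs and toy triplets.
inverse_of = {"hypernym": "hyponym", "hyponym": "hypernym"}
train = [("animal", "hyponym", "dog"), ("cat", "hypernym", "animal")]
test = [
    ("dog", "hypernym", "animal"),   # inverse of the first training triplet
    ("animal", "hyponym", "cat"),    # inverse of the second training triplet
    ("dog", "hypernym", "mammal"),   # no inverse in the training set
]

leaked = find_leaked(train, test, inverse_of)
leak_ratio = len(leaked) / len(test)
```

In this toy example two of the three test triplets can be answered by reversing a training triplet, so a model could score well on them without learning any semantics.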

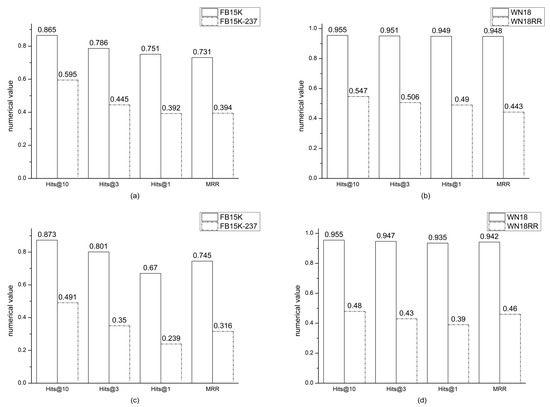

To investigate the seriousness of the “test leakage” problem, we conducted link prediction experiments on the full datasets WN18 and FB15k and their optimized versions WN18RR and FB15k-237 by comparing the performance of the ConCMH and ConvE models on each pair of the complete and optimized version of a dataset. The obtained results are shown in Figure 3. For the proposed ConCMH model, MRR is the performance metric whose values on WN18 and WN18RR differ the most (constituting a difference of 0.505 points). On FB15k and FB15k-237, the difference between the MRR values obtained for ConCMH is smaller at 0.337 points. The main reason for this is that the composition of relations in WN18 is different from that in FB15k. In WN18, 94% of the training set constitutes inverse relations, whereas in FB15k the number of inverse relations is 13% lower, resulting in a smaller number of deleted FB15k triplets when forming the FB15k-237 dataset, and in less difference between the MRR values. For ConvE, Hits@1 is the performance metric whose values on WN18 and WN18RR differ the most (constituting a difference of 0.545 points). On FB15k and FB15k-237, the biggest difference (equal to 0.451 points) is observed in the Hits@3 values obtained for ConvE. Comparing the two models, leaving “test leakage” aside, ConCMH and ConvE show almost equal performance on the WN18 dataset, with the biggest difference in the metric values of only 0.014 points (for Hits@1). However, on the optimized WN18RR dataset, the performance of the two models is quite different, with the biggest difference reaching 0.100 points (for Hits@1) and increased differences for other metric values as well. This demonstrates the importance of reducing the negative effect of the “test leakage” problem in order to come up with results that are as close to reality as possible.

Figure 3.

“Test leakage” results, using: (a) ConCMH on FB15k and FB15k-237; (b) ConCMH on WN18 and WN18RR; (c) ConvE on FB15k and FB15k-237; (d) ConvE on WN18 and WN18RR.

5.6. ConCMH Parameter Values: Results and Analysis

We also explored the influence of different parameter values on the performance of the proposed ConCMH model (based only on the WN18RR dataset). The obtained results are shown in Table 7 (the best value achieved among parameter values for a particular metric is shown in bold).

Table 7.

Influence of different parameter values on the ConCMH performance.

First, we experimented with using different sizes of the convolution kernel. When this size is expanded from 3 × 200 to 5 × 200, the values of all evaluation metrics decrease (to different degrees). This suggests that the larger the convolution kernel, the more subtle information is ignored during feature extraction.

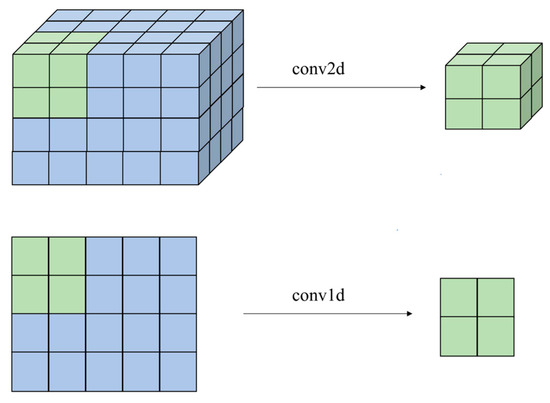

Then, we compared the use of two convolution types for feature extraction, namely 2D convolution (e.g., used by ConvE and CTKGC) and 1D convolution (e.g., used by ConvKB). In terms of computational nature, there is no difference between the two types; both move along the dimension of input data. However, the 1D convolution moves along a single dimension, while the 2D convolution moves along two dimensions. For the complementation of knowledge graphs, the essential difference between the two convolution types is related to the remodeling of input data. With the 1D convolution, the entity–relation interaction matrix needs to be remodeled into 2D, whereas with the 2D convolution, it is remodeled into 3D. In the case of the same embedding dimension, the 3D interaction matrix has more interaction points than the 2D interaction matrix, which deepens the connection of a triplet in its neighborhood, as illustrated in Figure 4. As can be seen from Table 7, when using 2D convolution, the values of all evaluation metrics for ConCMH are higher than those of 1D convolution.

Figure 4.

An illustration of the use of 1D and 2D convolution for feature extraction.

6. Conclusions and Future Work

This paper has proposed a novel link-prediction model, called ConCMH, constructed by applying a new mapping relation h × r ≈ t, along with fully interacted entity embedding and relation embedding, in the complex space. The real part of this interaction forms an entity–relation interaction matrix, from where features are extracted by means of a 2D convolution. In addition, the feature mapping is matched with the prediction entity embedding through the inner product in the complex space. Compared to the state-of-the-art (SOTA) models considered, the ConCMH model features a simpler structure and demonstrates a more powerful link-prediction ability in the majority of cases, based on experiments conducted on four popular datasets. In addition, the proposed model can be easily expanded to larger and more complex knowledge graphs.

A disadvantage of ConCMH is that, similarly to some SOTA models, it considers each triplet in isolation and ignores some special properties of the triplets themselves. Many entities and relations have properties such as transitivity and generalization, which can be taken into account only by combining multiple triplets. In our future work, we are going to study this issue in depth. In addition, compared with the Convolutional Neural Networks (CNNs) used in the field of computer vision, the CNN utilized by the proposed model is still shallow. Thus, in the future, we plan to investigate combining a variety of CNNs to increase network depth.

Compared with the SOTA contrastive-learning-enhanced knowledge graph representation methods [32,33], ConCMH scores each triplet with a scoring function and ignores the semantic similarity between related entities, and between related entity–relation pairs, across different triplets. As a result, the interaction between the target entity and its relations is too one-sided: other entities sharing the same relation with the target entity may also interact semantically with it. In our future research, we will also take this aspect into consideration.

Author Contributions

Conceptualization, L.S. and Z.J.; methodology, Z.Y.; validation, I.G. and Z.Y.; formal analysis, Z.Y.; writing—original draft preparation, Z.Y.; writing—review and editing, I.G., L.S. and Z.J.; supervision, L.S.; project administration, Z.J. and I.G. All authors have read and agreed to the published version of the manuscript.

Funding

This publication has emanated from research conducted with the financial support of the National Key Research and Development Program of China under Grant No. 2017YFE0135700, and Grant No. BG05M2OP001-1.001-0003, financed by the Science and Education for Smart Growth Operational Program (2014–2020) and co-financed by the European Union through the European Structural and Investment funds.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Mouromtsev, D.; Wohlgenannt, G.; Haase, P.; Pavlov, D.; Emelyanov, Y.; Morozov, A. A diagrammatic approach for visual question answering over knowledge graphs. In Proceedings of the Semantic Web: ESWC 2018 Satellite Events, Revised Selected Papers 15, Heraklion, Crete, Greece, 3–7 June 2018; pp. 34–39.

2. Saxena, S.; Sangani, R.; Prasad, S.; Kumar, S.; Athale, M.; Awhad, R.; Vaddina, V. Large-Scale Knowledge Synthesis and Complex Information Retrieval from Biomedical Documents. In Proceedings of the 2022 IEEE International Conference on Big Data (Big Data), Osaka, Japan, 17–20 December 2022; pp. 2364–2369.

3. Bollacker, K.; Evans, C.; Paritosh, P.; Sturge, T.; Taylor, J. Freebase: A collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, BC, Canada, 9–12 June 2008; pp. 1247–1250.

4. Auer, S.; Bizer, C.; Kobilarov, G.; Lehmann, J.; Cyganiak, R.; Ives, Z. DBpedia: A nucleus for a web of open data. In Proceedings of the Semantic Web: 6th International Semantic Web Conference, 2nd Asian Semantic Web Conference, ISWC 2007+ ASWC 2007, Busan, Republic of Korea, 11–15 November 2007; pp. 722–735.

5. Krompaß, D.; Baier, S.; Tresp, V. Type-constrained representation learning in knowledge graphs. In Proceedings of the Semantic Web-ISWC 2015: 14th International Semantic Web Conference, Part I 14, Bethlehem, PA, USA, 11–15 October 2015; pp. 640–655.

6. Mahdisoltani, F.; Biega, J.; Suchanek, F. YAGO3: A knowledge base from multilingual Wikipedias. In Proceedings of the 7th Biennial Conference on Innovative Data Systems Research, Asilomar, CA, USA, 4–7 January 2014; pp. 1–11.

7. Wang, X.; Gao, T.; Zhu, Z.; Zhang, Z.; Liu, Z.; Li, J.; Tang, J. KEPLER: A unified model for knowledge embedding and pre-trained language representation. Trans. Assoc. Comput. Linguist. 2021, 9, 176–194.

8. Bordes, A.; Usunier, N.; Garcia-Duran, A.; Weston, J.; Yakhnenko, O. Translating embeddings for modeling multi-relational data. Adv. Neural Inf. Process. Syst. 2013, 26, 1–9.

9. Wang, Z.; Zhang, J.; Feng, J.; Chen, Z. Knowledge graph embedding by translating on hyperplanes. In Proceedings of the AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; pp. 1112–1119.

10. Dettmers, T.; Minervini, P.; Stenetorp, P.; Riedel, S. Convolutional 2D knowledge graph embeddings. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 1811–1818.

11. Nickel, M.; Tresp, V.; Kriegel, H.-P. A three-way model for collective learning on multi-relational data. In Proceedings of the 28th International Conference on Machine Learning, Bellevue, WA, USA, 28 June–2 July 2011; pp. 3104482–3104584.

12. Yang, B.; Yih, S.W.-T.; He, X.; Gao, J.; Deng, L. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. In Proceedings of the International Conference on Learning Representations (ICLR) 2015, San Diego, CA, USA, 7–9 May 2015; pp. 1–12.

13. Trouillon, T.; Dance, C.R.; Gaussier, É.; Welbl, J.; Riedel, S.; Bouchard, G. Knowledge Graph Completion via Complex Tensor Factorization. J. Mach. Learn. Res. 2017, 18, 1–38.

14. Schlichtkrull, M.; Kipf, T.N.; Bloem, P.; Van Den Berg, R.; Titov, I.; Welling, M. Modeling relational data with graph convolutional networks. In Proceedings of the Semantic Web: 15th International Conference, ESWC 2018, Proceedings 15, Heraklion, Crete, Greece, 3–7 June 2018; pp. 593–607.

15. Dai Quoc Nguyen, T.D.N.; Nguyen, D.Q.; Phung, D. A Novel Embedding Model for Knowledge Base Completion Based on Convolutional Neural Network. In Proceedings of the NAACL-HLT, New Orleans, LA, USA, 1–6 June 2018; pp. 327–333.

16. Vashishth, S.; Sanyal, S.; Nitin, V.; Agrawal, N.; Talukdar, P. InteractE: Improving convolution-based knowledge graph embeddings by increasing feature interactions. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 3009–3016.

17. Demir, C.; Ngomo, A.-C.N. Convolutional complex knowledge graph embeddings. In Proceedings of the Semantic Web: 18th International Conference, ESWC 2021, Virtual Event, 6–10 June 2021; pp. 409–424.

18. Feng, J.; Wei, Q.; Cui, J.; Chen, J. Novel translation knowledge graph completion model based on 2D convolution. Appl. Intell. 2022, 52, 3266–3275.

19. McCray, A.T. An upper-level ontology for the biomedical domain. Comp. Funct. Genom. 2003, 4, 80–84.

20. Toutanova, K.; Chen, D. Observed versus latent features for knowledge base and text inference. In Proceedings of the 3rd Workshop on Continuous Vector Space Models and Their Compositionality, Beijing, China, 26–31 July 2015; pp. 57–66.

21. Toutanova, K.; Chen, D.; Pantel, P.; Poon, H.; Choudhury, P.; Gamon, M. Representing Text for Joint Embedding of Text and Knowledge Bases. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1499–1509.

22. Nathani, D.; Chauhan, J.; Sharma, C.; Kaul, M. Learning Attention-based Embeddings for Relation Prediction in Knowledge Graphs. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 4710–4723.

23. Balažević, I.; Allen, C.; Hospedales, T.M. Hypernetwork knowledge graph embeddings. In Proceedings of the Artificial Neural Networks and Machine Learning–ICANN 2019: Workshop and Special Sessions: 28th International Conference on Artificial Neural Networks, Munich, Germany, 17–19 September 2019; pp. 553–565.

24. Sun, Z.; Deng, Z.-H.; Nie, J.-Y.; Tang, J. RotatE: Knowledge Graph Embedding by Relational Rotation in Complex Space. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019; pp. 1–18.

25. Balažević, I.; Allen, C.; Hospedales, T. TuckER: Tensor Factorization for Knowledge Graph Completion. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 5185–5194.

26. Yu, J.; Cai, Y.; Sun, M.; Li, P. MQuadE: A unified model for knowledge fact embedding. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 3442–3452.

27. Bansal, T.; Juan, D.-C.; Ravi, S.; McCallum, A. A2N: Attending to neighbors for knowledge graph inference. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 4387–4392.

28. Zhang, S.; Tay, Y.; Yao, L.; Liu, Q. Quaternion knowledge graph embeddings. Adv. Neural Inf. Process. Syst. 2019, 32, 1–11.

29. Zhang, Q.; Wang, R.; Yang, J.; Xue, L. Structural context-based knowledge graph embedding for link prediction. Neurocomputing 2022, 470, 109–120.

30. Jiang, D.; Wang, R.; Yang, J.; Xue, L. Kernel multi-attention neural network for knowledge graph embedding. Knowl. Based Syst. 2021, 227, 107–188.

31. Zhou, Z.; Wang, C.; Feng, Y.; Chen, D. JointE: Jointly utilizing 1D and 2D convolution for knowledge graph embedding. Knowl. Based Syst. 2022, 240, 100–108.

32. Yang, Y.; Huang, C.; Xia, L.; Li, C. Knowledge graph contrastive learning for recommendation. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 1434–1443.

33. Cao, X.; Shi, Y.; Wang, J.; Yu, H.; Wang, X.; Yan, Z. Cross-modal Knowledge Graph Contrastive Learning for Machine Learning Method Recommendation. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; Association for Computing Machinery: Lisboa, Portugal, 2022; pp. 3694–3702.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).