On Structured Random Matrices Defined by Matrix Substitutions

Abstract

1. Introduction

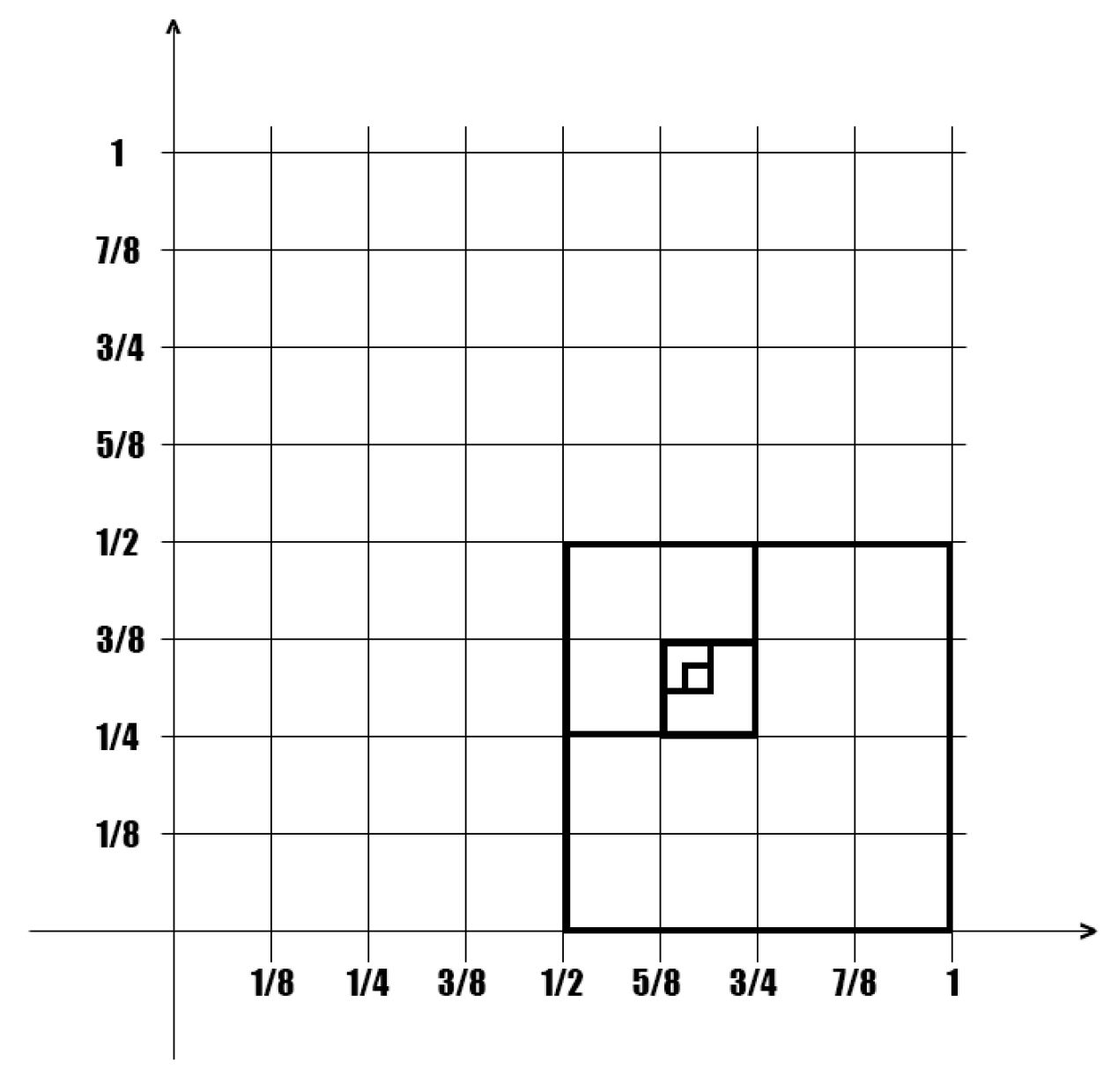

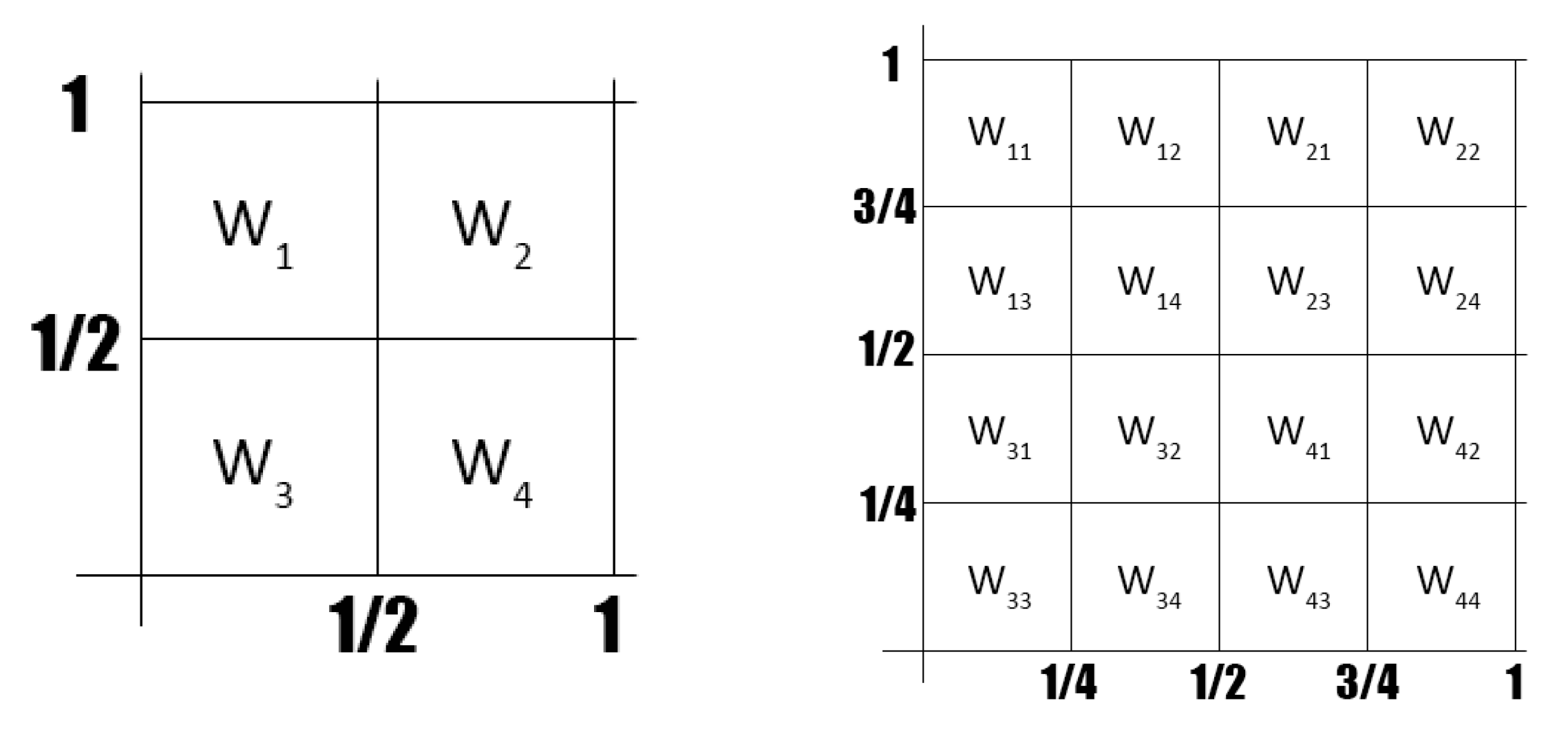

- In Section 2, we present two examples of the algorithm used to build structured matrices. The first is given by the iterative application of a matrix-valued substitution; the second uses Kronecker powers of a given matrix and is a particular case of the generic matrix substitution algorithm. A general procedure for constructing the sequence of structured matrices by substitutions is detailed in Section 3.1.

- In Section 3, we present results on the fixed points of matrix substitutions.

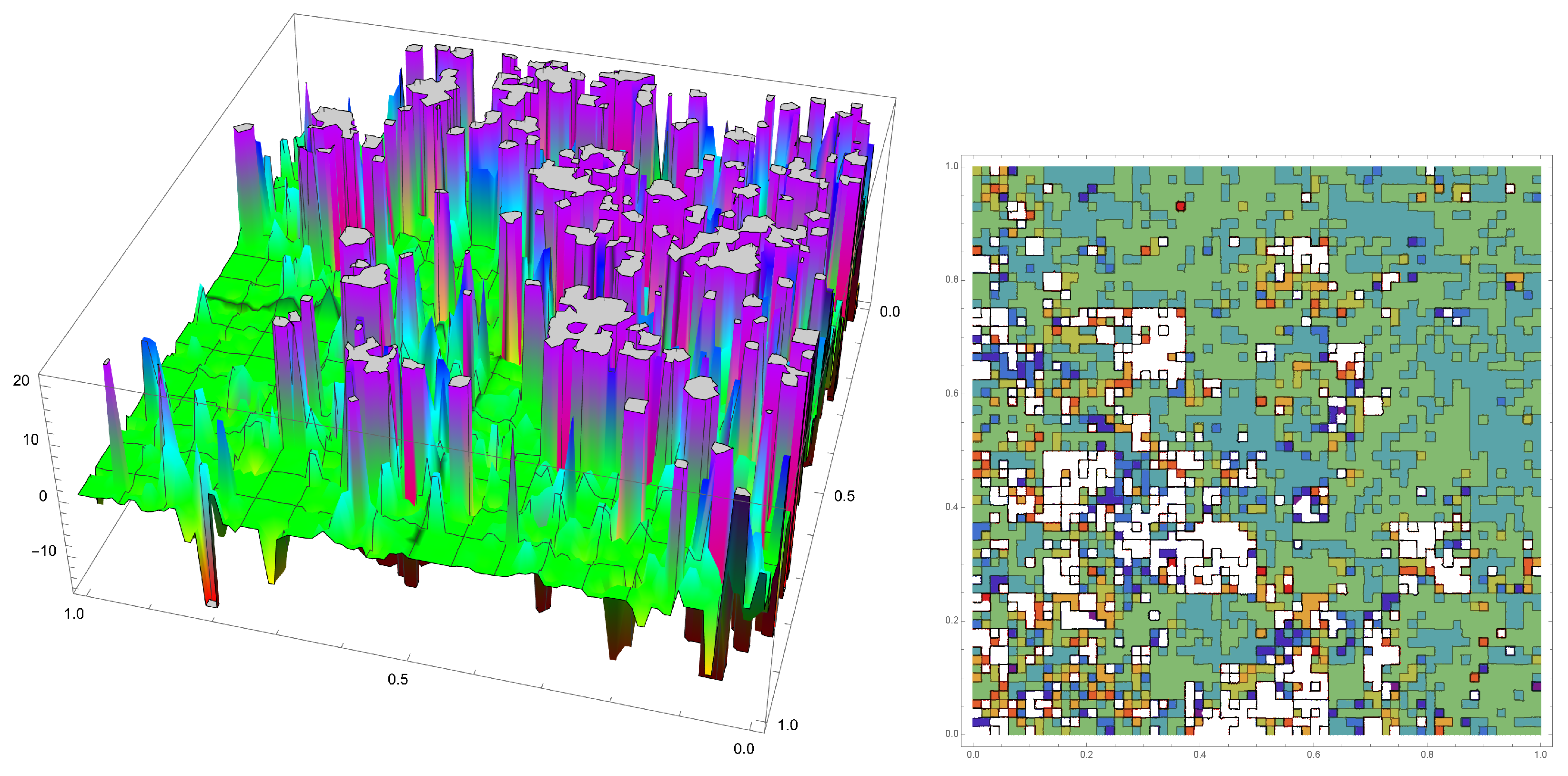

- The randomisation of structured matrices defined by matrix substitutions is studied in Section 4. Preliminary results on the spectral analysis of these random matrices are presented in Section 4.3. An application to modelling is detailed in Section 4.4, with an algorithm that associates a random field with an infinite random matrix of the kind studied in this work.
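The two constructions announced for Section 2 can be illustrated with a short sketch. It assumes that a substitution replaces every entry of a matrix by a d x d block and is iterated from a 1 x 1 initial matrix; the helper names (`apply_substitution`, `substitution_sequence`, `kron_power`) and the rule `SIGMA` are hypothetical and not taken from the paper. The last lines check the claim that Kronecker powers are a special case: iterating the scalar rule a -> a·K from [1] reproduces K ⊗ K ⊗ K.

```python
import numpy as np


def apply_substitution(m, rule):
    """Replace every entry a of the matrix m by the d x d block rule(a)."""
    return np.block([[rule(a) for a in row] for row in np.asarray(m)])


def substitution_sequence(initial, rule, steps):
    """Return [M_0, ..., M_steps] with M_0 = initial and M_{k+1} = rule applied to M_k."""
    seq = [np.asarray(initial)]
    for _ in range(steps):
        seq.append(apply_substitution(seq[-1], rule))
    return seq


def kron_power(k, n):
    """n-th Kronecker power of k, computed as repeated np.kron(previous, k)."""
    out = np.array([[1]])
    for _ in range(n):
        out = np.kron(out, k)
    return out


# Hypothetical rule on the symbols {0, 1} with d = 2 (illustration only).
SIGMA = {0: np.array([[0, 0], [0, 0]]), 1: np.array([[1, 1], [1, 0]])}
seq = substitution_sequence([[1]], lambda a: SIGMA[int(a)], 3)

# Kronecker powers as a special case: the rule a -> a * K acting on scalar entries.
K = np.array([[1, 2], [3, 4]])
kron_seq = substitution_sequence([[1]], lambda a: a * K, 3)
print(np.array_equal(kron_seq[-1], kron_power(K, 3)))  # True
```

The equality holds because `np.kron(M, K)` is exactly the block matrix whose (i, j) block is `M[i, j] * K`, that is, the substitution a -> a·K applied to every entry of M.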

2. Structured Matrices Built by Substitutions

2.1. A Matrix Sequence Built by Iterated Application of a Matrix Substitution

2.2. A Matrix Sequence Built by Kronecker Power Iterations

3. On the Fixed Points of Affine Matrix Substitutions

3.1. Some Spaces of Matrices

1. Let us consider the initial state as for some .

2. We associate with its leading principal matrix of order n, denoted by , which, we stress, is a finite matrix of order n. Let denote the set of the leading principal matrices of order n associated with the elements of , or .

3. For technical reasons, we restrict our study by assuming that we chose such that, for all , we have a finite matrix of order d, that is, such that . In the applications we may have . Let us define the global substitution rule , associated with , by:

4. We define the matrix substitution map, denoted by , by adding to the finite matrix infinitely many rows and columns of entries of in such a way that is an infinite matrix such that we have and such that the leading principal matrix of order n of is precisely .

5. We now extend the notion of a matrix substitution map from matrices in to the space of infinite matrices . Since we assumed that the global substitution takes values in a space of finite matrices of order d, we may define for , with , as the matrix , that is, an infinite matrix whose entries are the matrices , for with .

6. The matrix substitution sequence, denoted by , is defined by induction, for with or , by:

- (I) Given a sequence of matrices , satisfying some compatibility conditions, is it possible to determine conditions under which there exist an initial state and a matrix substitution map such that ?

- (II) A related and very important problem is to determine which properties of the eigenvalues of the matrices of the sequence may be derived from the properties of .
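For question (II), one case in which the answer is fully explicit is the Kronecker power sequence of Section 2.2: by a standard property of the Kronecker product, the eigenvalues of the n-th Kronecker power of K are all the n-fold products of eigenvalues of K, counted with multiplicity. The sketch below checks this numerically; the matrix `K` and the helper names are hypothetical, K is taken symmetric only so that `np.linalg.eigvalsh` applies, and for general substitutions no comparably simple rule should be assumed.

```python
import itertools

import numpy as np


def kron_power(k, n):
    """n-th Kronecker power of k (the 1 x 1 matrix [1] when n = 0)."""
    out = np.ones((1, 1))
    for _ in range(n):
        out = np.kron(out, k)
    return out


def predicted_spectrum(k, n):
    """Sorted n-fold products of the eigenvalues of k (k assumed symmetric)."""
    lam = np.linalg.eigvalsh(k)
    return np.sort([np.prod(c) for c in itertools.product(lam, repeat=n)])


# Hypothetical symmetric example with eigenvalues 1 and 3.
K = np.array([[2.0, 1.0], [1.0, 2.0]])
n = 3
observed = np.linalg.eigvalsh(kron_power(K, n))  # eigenvalues in ascending order
predicted = predicted_spectrum(K, n)
print(np.allclose(observed, predicted))  # True
```

Here the predicted spectrum is 1, 3, 3, 3, 9, 9, 9, 27, which is what a direct eigenvalue computation of the 8 x 8 matrix returns.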

3.2. On the Existence of Fixed Points for Matrix Substitution Maps

3.2.1. Fixed Points for Matrix Substitution Maps over Infinite Matrices

3.2.2. Fixed Points for Matrix Affine Substitutions Maps Defined over Finite Matrices

1. The restriction of τ to coincides with the norm topology .

2. is a Hausdorff space.

3. We have the Dieudonné–Schwartz lemma, that is, if a set B is bounded in , then there exists some such that .

4. A sequence converges in if and only if there exists some such that and the sequence converges in .

5. We have Köthe’s theorem, that is, is a complete space.

1. is a contraction from into for every .

2. is a contraction from into .

3. There exists and a fixed point of , that is, such that .
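The route suggested by the statements above (a contraction on a complete space has a fixed point, obtained as the limit of the iterates) can be sketched on finite matrices. The affine map T(X) = A X B + C used here is a hypothetical stand-in, not the paper's affine substitution map: with spectral norms ‖A‖·‖B‖ < 1 it is a contraction, so the Banach fixed-point iteration converges. The result is cross-checked against the closed form obtained from vec(A X B) = (Bᵀ ⊗ A) vec(X), with column-major vec.

```python
import numpy as np


def fixed_point_iteration(T, x0, tol=1e-12, max_iter=10_000):
    """Iterate x_{k+1} = T(x_k) until successive iterates are tol-close (spectral norm)."""
    x = x0
    for _ in range(max_iter):
        x_next = T(x)
        if np.linalg.norm(x_next - x, 2) < tol:
            return x_next
        x = x_next
    raise RuntimeError("fixed-point iteration did not converge")


# Hypothetical affine map T(X) = A X B + C, scaled so that ||A|| * ||B|| < 1.
rng = np.random.default_rng(0)
n = 4
A = rng.standard_normal((n, n))
A *= 0.6 / np.linalg.norm(A, 2)  # spectral norm 0.6
B = rng.standard_normal((n, n))
B *= 0.8 / np.linalg.norm(B, 2)  # spectral norm 0.8
C = rng.standard_normal((n, n))


def T(X):
    return A @ X @ B + C  # Lipschitz constant at most 0.6 * 0.8 = 0.48


X_iter = fixed_point_iteration(T, np.zeros((n, n)))

# Closed form: the fixed point solves (I - B^T kron A) vec(X) = vec(C).
X_exact = np.linalg.solve(
    np.eye(n * n) - np.kron(B.T, A), C.flatten(order="F")
).reshape((n, n), order="F")
```

Because the Lipschitz constant is at most 0.48, the stopping rule on successive iterates also bounds the distance to the unique fixed point by roughly the same tolerance.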

4. Random Matrices Associated to Structured Matrices

- Identification of a random matrix model (Section 4.1);

- Convergence in law of random matrices built on skeleton matrices derived from substitution maps having a fixed point (Section 4.2);

- Spectral analysis of some random structured matrices (Section 4.3);

- Random surfaces associated with random matrices built on skeleton matrices derived from substitution maps having a fixed point (Section 4.4).

4.1. Testing for a Given Matrix Structure in a Realisation of a Stochastic Matrix

- (A) The skeleton of the matrix , that is, a matrix with entries in , is a fixed point of the matrix substitution map. This assumption is justified on the grounds that the process that originated the skeleton is past its transient phase.

- (B) The random variables that are the entries of the random matrix form a set of independent random variables.

- (C) For each we have , that is, the corresponding random variable has a probability law with a parameter.
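Assumptions (A) to (C) can be illustrated by a small simulation. The sketch assumes a hypothetical substitution `SIGMA` on the two-element field with d = 2 and, for each field element a, a Gaussian law N(THETA[a], 1); the Gaussian choice and all names are illustrative assumptions, not the paper's model. Grouping the entries by their skeleton symbol and averaging is the most elementary way to recover the per-symbol parameter; the statistical procedure actually used in Section 4.1 may differ.

```python
import numpy as np

# Hypothetical ingredients: a substitution on Z_2 with d = 2 and a Gaussian
# law N(THETA[a], 1) attached to each field element a.
SIGMA = {0: np.array([[0, 1], [1, 0]]), 1: np.array([[1, 0], [1, 1]])}
THETA = np.array([-1.0, 2.0])  # THETA[a] is the parameter of the law on symbol a


def skeleton(a0, k):
    """k-th matrix of the substitution sequence started at [a0] (assumption (A))."""
    m = np.array([[a0]])
    for _ in range(k):
        m = np.block([[SIGMA[int(a)] for a in row] for row in m])
    return m


def random_matrix(skel, rng):
    """Independent entries (B) whose law depends only on the skeleton symbol (C)."""
    return rng.normal(loc=THETA[skel], scale=1.0)


def estimate_parameters(x, skel):
    """Estimate THETA[a] by the sample mean of the entries lying on symbol a."""
    return {int(a): float(x[skel == a].mean()) for a in np.unique(skel)}


rng = np.random.default_rng(1)
skel = skeleton(1, 6)  # 64 x 64 skeleton
x = random_matrix(skel, rng)
estimates = estimate_parameters(x, skel)
```

With this rule the 64 x 64 skeleton contains 1365 zeros and 2731 ones, so both class means are estimated with a standard error of a few hundredths.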

4.2. Convergence in Law of Random Structured Matrices Built by Arbitrary Substitutions

- A global substitution given by: ;

- The associated matrix substitution map defined on ;

- A fixed point of the substitution map .

- The entries in the random matrix corresponding to the same field element are identically distributed, with the law of a given random variable .
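The first three ingredients listed above (a global substitution, its matrix substitution map and a fixed point) can be illustrated as follows. The sketch assumes a hypothetical rule `SIGMA` on a three-element field with d = 2 and an initial symbol `A0` such that the top-left entry of its image is `A0` itself, a standard sufficient condition in the theory of substitutions; it is not necessarily the paper's exact hypothesis. Under it, each matrix of the sequence is the leading principal matrix of the next, so the sequence defines an infinite matrix fixed by the substitution map, whose entry (i, j) can be read directly from the base-d digits of i and j, as for two-dimensional automatic sequences.

```python
import numpy as np

# Hypothetical global substitution on Z_3 with d = 2 (illustration only).
D = 2
SIGMA = {
    0: np.array([[0, 1], [2, 0]]),
    1: np.array([[1, 2], [0, 1]]),
    2: np.array([[2, 0], [1, 2]]),
}
A0 = 1  # SIGMA[A0][0, 0] == A0, the condition used below for nesting


def substitute(m):
    """Matrix substitution map: each entry a becomes the block SIGMA[a]."""
    return np.block([[SIGMA[int(a)] for a in row] for row in m])


def entry(i, j, k):
    """Entry (i, j) of the k-th matrix of the sequence, read off the base-D
    digits of i and j (most significant digit first), without building it."""
    a = A0
    for t in range(k - 1, -1, -1):
        a = int(SIGMA[a][(i // D**t) % D, (j // D**t) % D])
    return a


seq = [np.array([[A0]])]
for _ in range(4):
    seq.append(substitute(seq[-1]))

# Each M_k is the leading principal matrix of order D**k of M_{k+1} ...
nested = all(np.array_equal(seq[k + 1][: D**k, : D**k], seq[k]) for k in range(4))
# ... so entry(i, j, k) no longer depends on k once D**k > max(i, j).
stable = all(entry(i, j, 4) == entry(i, j, 7) for i in range(16) for j in range(16))
print(nested, stable)  # True True
```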

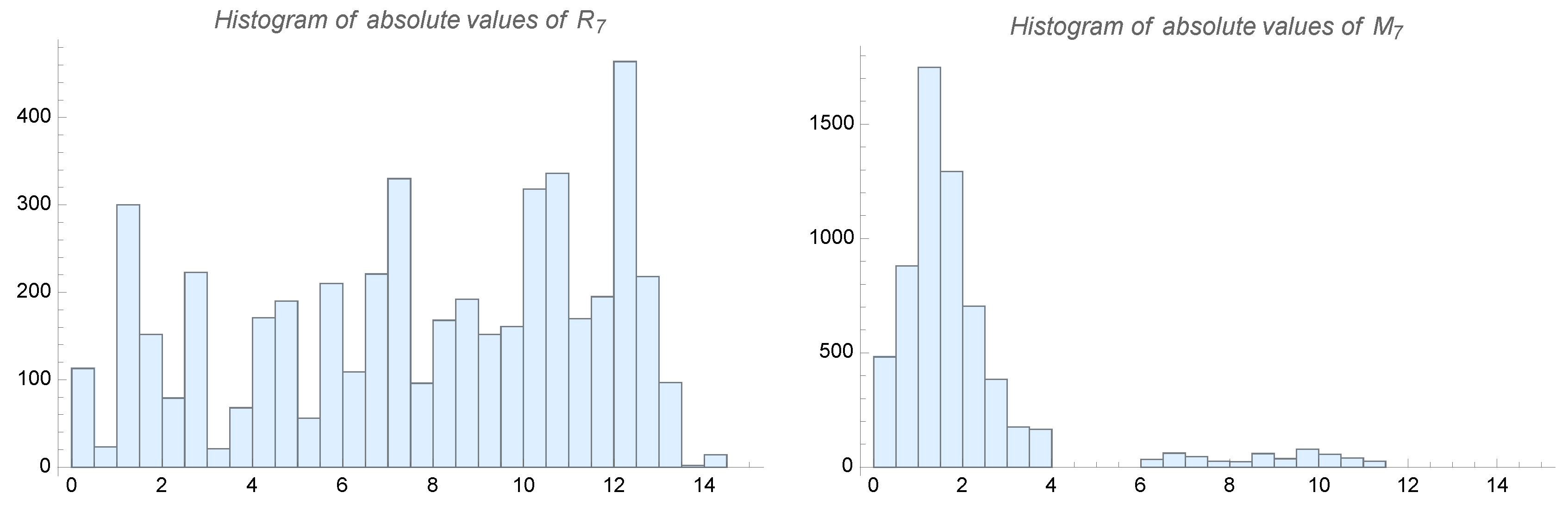

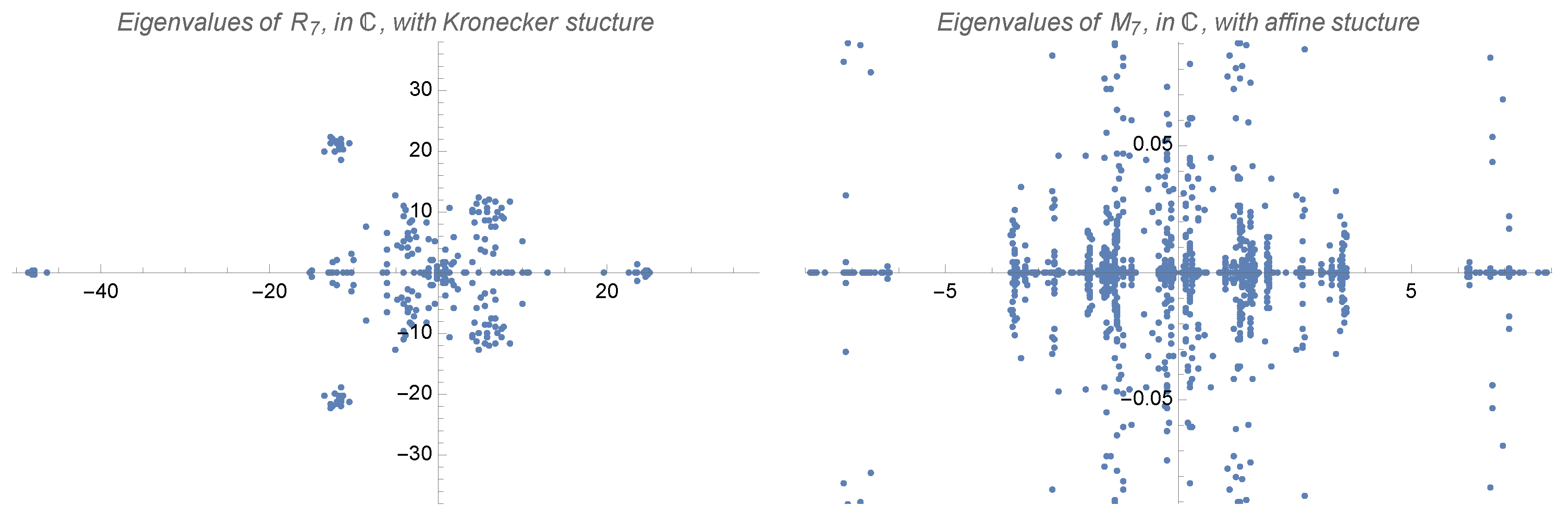

4.3. Spectral Analysis of Some Structured Random Matrices

4.4. Modelling: Random Surfaces Associated to Random Matrices

- (a) The left tail averages satisfy: for some constant m.

- (b) The variances of the random variables satisfy , for a certain to be determined later, and with such that:

5. Conclusions and Future Work

- The existence of a particular structure of matrix substitution type can be identified by simple statistical procedures;

- A sequence of random matrices converges in law when its skeletons, matrices with entries in a finite field and of matrix substitution type, converge to a fixed point;

- There is a generic result on the spectral analysis of the random matrices derived from a matrix substitution procedure;

- There is a canonical way to associate a nontrivial random field with interesting properties with a random matrix whose skeleton is a matrix, with entries in a finite field, of matrix substitution type.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Liu, S.; McGree, J.; Ge, Z.; Xie, Y. Computational and Statistical Methods for Analysing Big Data with Applications; Elsevier: Amsterdam, The Netherlands; Academic Press: Cambridge, MA, USA, 2016; pp. 11 + 194.

- Pytheas Fogg, N.; Berthé, V.; Ferenczi, S.; Mauduit, C.; Siegel, A. (Eds.) Substitutions in Dynamics, Arithmetics and Combinatorics; Springer: Berlin/Heidelberg, Germany, 2002; Volume 1794, pp. 15 + 402.

- Queffélec, M. Substitution Dynamical Systems. Spectral Analysis, 2nd ed.; Springer: Dordrecht, The Netherlands, 2010; Volume 1294, pp. 15 + 351.

- von Haeseler, F. Automatic Sequences; Walter de Gruyter: Berlin/Heidelberg, Germany, 2003; pp. 6 + 191.

- Allouche, J.P.; Shallit, J. Automatic Sequences; Theory, Applications, Generalizations; Cambridge University Press: Cambridge, UK, 2003; pp. 16 + 571.

- Frank, N.P. Multidimensional constant-length substitution sequences. Topol. Its Appl. 2005, 152, 44–69.

- Bartlett, A. Spectral theory of ℤ^d substitutions. Ergod. Theory Dyn. Syst. 2018, 38, 1289–1341.

- Jolivet, T.; Kari, J. Consistency of multidimensional combinatorial substitutions. Theor. Comput. Sci. 2012, 454, 178–188.

- Fogg, N.P.; Berthé, V.; Ferenczi, S.; Mauduit, C.; Siegel, A. (Eds.) Polynomial dynamical systems associated with substitutions. In Substitutions in Dynamics, Arithmetics and Combinatorics; Springer: Berlin/Heidelberg, Germany, 2002; pp. 321–342.

- Ginibre, J. Statistical ensembles of complex, quaternion, and real matrices. J. Math. Phys. 1965, 6, 440–449.

- Girko, V. Theory of Random Determinants; Translated from the Russian; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1988; pp. 25 + 677.

- Girko, V. Statistical Analysis of Observations of Increasing Dimension; Translated from the Russian; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1995; pp. 21 + 286.

- Bai, Z.D. Methodologies in Spectral Analysis of Large Dimensional Random Matrices, a review. Stat. Sin. 1999, 9, 611–662.

- Götze, F.; Tikhomirov, A. The circular law for random matrices. Ann. Probab. 2010, 38, 1444–1491.

- Alexeev, N.; Götze, F.; Tikhomirov, A. Asymptotic distribution of singular values of powers of random matrices. Lith. Math. J. 2010, 50, 121–132.

- Götze, F.; Naumov, A.; Tikhomirov, A. Distribution of linear statistics of singular values of the product of random matrices. Bernoulli 2017, 23, 3067–3113.

- Götze, F.; Naumov, A.; Tikhomirov, A.; Timushev, D. On the local semicircular law for Wigner ensembles. Bernoulli 2018, 24, 2358–2400.

- Götze, F.; Tikhomirov, A. Rate of convergence in probability to the Marchenko-Pastur law. Bernoulli 2004, 10, 503–548.

- Mehta, M.L. Random Matrices, 3rd ed.; Pure and Applied Mathematics (Amsterdam); Elsevier: Amsterdam, The Netherlands; Academic Press: Cambridge, MA, USA, 2004; Volume 142, pp. 18 + 688.

- Anderson, G.W.; Guionnet, A.; Zeitouni, O. An Introduction to Random Matrices; Cambridge Studies in Advanced Mathematics; Cambridge University Press: Cambridge, UK, 2010; Volume 118, pp. 14 + 492.

- Guionnet, A. Grandes matrices aléatoires et théorèmes d’universalité (d’après Erdos, Schlein, Tao, Vu et Yau). Astérisque 2011, 1, 203–237.

- Tao, T. Topics in Random Matrix Theory; Graduate Studies in Mathematics; American Mathematical Society: Providence, RI, USA, 2012; Volume 132, pp. 10 + 282.

- Vu, V.H. (Ed.) Modern aspects of random matrix theory. In Proceedings of the Symposia in Applied Mathematics, San Diego, CA, USA, 6–7 January 2013; Papers from the AMS Short Course on Random Matrices. American Mathematical Society: Providence, RI, USA, 2014; Volume 72, pp. 8 + 174.

- Akemann, G.; Baik, J.; Di Francesco, P. (Eds.) The Oxford Handbook of Random Matrix Theory; Paperback edition of the 2011 original [MR2920518]; Oxford University Press: Oxford, UK, 2015; pp. 31 + 919.

- Erdos, L.; Yau, H.T. A Dynamical Approach to Random Matrix Theory; Courant Lecture Notes in Mathematics; Courant Institute of Mathematical Sciences: New York, NY, USA; American Mathematical Society: Providence, RI, USA, 2017; Volume 28, pp. 9 + 226.

- Banerjee, D.; Bose, A. Patterned sparse random matrices: A moment approach. Random Matrices Theory Appl. 2017, 6, 1750011.

- Bose, A. Patterned Random Matrices; CRC Press: Boca Raton, FL, USA, 2018; pp. 21 + 267.

- Livshyts, G.V.; Tikhomirov, K.; Vershynin, R. The smallest singular value of inhomogeneous square random matrices. Ann. Probab. 2021, 49, 1286–1309.

- Jain, V.; Silwal, S. A note on the universality of ESDs of inhomogeneous random matrices. ALEA Lat. Am. J. Probab. Math. Stat. 2021, 18, 1047–1059.

- Tikhomirov, A.N. On the Wigner law for generalized random graphs. Sib. Adv. Math. 2021, 31, 301–308.

- Liu, Y.; Chen, A.; Lin, F. Threshold function of ray nonsingularity for uniformly random ray pattern matrices. Linear Multilinear Algebra 2022, 70, 5708–5715.

- Ali, M.S.; Srivastava, S.C.L. Patterned random matrices: Deviations from universality. J. Phys. A 2022, 55, 495201.

- Bernkopf, M. A history of infinite matrices. A study of denumerably infinite linear systems as the first step in the history of operators defined on function spaces. Arch. History Exact Sci. 1968, 4, 308–358.

- Shivakumar, P.N.; Sivakumar, K.C. A review of infinite matrices and their applications. Linear Algebra Appl. 2009, 430, 976–998.

- Williams, J.J.; Ye, Q. Infinite matrices bounded on weighted ℓ1 spaces. Linear Algebra Appl. 2013, 438, 4689–4700.

- Lindner, M. Infinite Matrices and Their Finite Sections; Frontiers in Mathematics; An introduction to the limit operator method; Birkhäuser Verlag: Basel, Switzerland, 2006; pp. 15 + 191.

- Warusfel, A. Structures Algébriques Finies. Groupes, Anneaux, Corps; Collection Hachette Université, Librairie Hachette: Paris, France, 1971; p. 271.

- Koan, V.K. Distributions, Analyse de Fourier, Opérateurs aux Dérivées Partielles; Number tome 1 in Cours et exercices résolus maîtrise de mathématiques: Certificat C2; Vuibert: Paris, France, 1972.

- Schaefer, H.H. Topological Vector Spaces; Springer: New York, NY, USA, 1971; Volume 3.

- Köthe, G. Topological Vector Spaces. I; Die Grundlehren der mathematischen Wissenschaften in Einzeldarstellungen. 159; Garling, D.J.H., Translator; Springer: Berlin/Heidelberg, Germany; New York, NY, USA, 1969; pp. 15 + 456.

- Skorohod, A.V. Random Linear Operators; Mathematics and its Applications (Soviet Series); Translated from the Russian; D. Reidel Publishing Co.: Dordrecht, The Netherlands, 1984; pp. 16 + 199.

- Guo, T.X. Extension theorems of continuous random linear operators on random domains. J. Math. Anal. Appl. 1995, 193, 15–27.

- Thang, D.H.; Thinh, N. Generalized random linear operators on a Hilbert space. Stochastics 2013, 85, 1040–1059.

- Quy, T.X.; Thang, D.H.; Thinh, N. Abstract random linear operators on probabilistic unitary spaces. J. Korean Math. Soc. 2016, 53, 347–362.

- Chiu, S.N.; Liu, K.I. Generalized Cramér-von Mises goodness-of-fit tests for multivariate distributions. Comput. Stat. Data Anal. 2009, 53, 3817–3834.

- Thas, O. Comparing Distributions; Springer: New York, NY, USA, 2010; pp. 18 + 353.

- McAssey, M.P. An empirical goodness-of-fit test for multivariate distributions. J. Appl. Stat. 2013, 40, 1120–1131.

- Fan, Y. Goodness-of-Fit Tests for a Multivariate Distribution by the Empirical Characteristic Function. J. Multivar. Anal. 1997, 62, 36–63.

- Shiryaev, A.N. Probability. 1, 3rd ed.; Boas, R.P., Chibisov, D.M., Eds.; Graduate Texts in Mathematics; Translated from the fourth (2007) Russian; Springer: New York, NY, USA, 2016; Volume 95, pp. 17 + 486.

- Kallenberg, O. Foundations of Modern Probability; Probability Theory and Stochastic Modelling; Third edition [of 1464694]; Springer: Cham, Switzerland, 2021; Volume 99, pp. 12 + 946.

- Gel’fand, I.M.; Vilenkin, N.Y. Generalized Functions. Vol. 4: Applications of Harmonic Analysis; Translated by Amiel Feinstein; Academic Press: New York, NY, USA; London, UK, 1964; pp. 14 + 384.

- Gohberg, I.; Goldberg, S. Basic Operator Theory; Birkhäuser: Boston, MA, USA, 1980; pp. 13 + 285.

- Billingsley, P. Probability and Measure, 3rd ed.; Wiley Series in Probability and Mathematical Statistics; A Wiley-Interscience Publication; John Wiley & Sons, Inc.: New York, NY, USA, 1995; pp. 14 + 593.

- Kahane, J.P.; Peyrière, J. Sur certaines martingales de Benoit Mandelbrot. Adv. Math. 1976, 22, 131–145.

- Lévy, P. Esquisse d’une théorie de la multiplication des variables aléatoires. Ann. Sci. École Norm. Sup. 1959, 76, 59–82.

- Zolotarev, V.M. General theory of the multiplication of random variables. Dokl. Akad. Nauk SSSR 1962, 142, 788–791.

- Simonelli, I. Convergence and symmetry of infinite products of independent random variables. Statist. Probab. Lett. 2001, 55, 45–52, Erratum in Statist. Probab. Lett. 2003, 62, 323.

- Shiryaev, A.N. Probability, 2nd ed.; Graduate Texts in Mathematics; Translated from the first (1980) Russian edition by R. P. Boas; Springer: New York, NY, USA, 1996; Volume 95, pp. 16 + 623.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Esquível, M.L.; Krasii, N.P. On Structured Random Matrices Defined by Matrix Substitutions. Mathematics 2023, 11, 2505. https://doi.org/10.3390/math11112505