Multimodal Interaction and Fused Graph Convolution Network for Sentiment Classification of Online Reviews

Abstract

1. Introduction

- (1) Previous work generally uses RNNs and their variants to encode text, which makes it difficult to capture long-range contextual dependencies among sentences, especially when a document contains a large amount of text.

- (2) In most document-level multimodal sentiment analysis (DLMSA) models, images serve only as a complement to the text, and the alignment between textual and visual information remains limited.

- (3) Fusing multimodal information with an attention mechanism or a gating mechanism not only fails to remove irrelevant visual information but also introduces additional noise during weighted summation.

- A cross-modal graph convolutional network is proposed to capture the long-range contextual dependencies of the text and to filter out visual noise that is irrelevant to the text, so that the multimodal information can be sufficiently integrated.

- Image descriptions are introduced into the proposed model, and each image is aligned with its corresponding description through a multi-head attention mechanism.

- Experiments are carried out on datasets from Yelp.com. The results verify the effectiveness of our model compared with state-of-the-art methods.
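
To make the first contribution more concrete, the following is a minimal NumPy sketch of one way a cross-modal graph with top-k filtering could be built and passed through a single graph convolution. It is an illustration under stated assumptions, not the authors' implementation: the function names `topk_cross_modal_adjacency` and `gcn_layer`, the fully connected sentence nodes, the cosine-similarity criterion, and the symmetric normalization are all hypothetical choices made for this example.

```python
import numpy as np

def topk_cross_modal_adjacency(text_feats, image_feats, k=1):
    """Build a symmetric adjacency over the node list [sentences; images].

    Assumptions (not taken from the paper): sentences are fully connected
    to each other, and each sentence keeps edges only to its k most
    cosine-similar images.
    """
    n_t, n_i = len(text_feats), len(image_feats)
    t = text_feats / np.linalg.norm(text_feats, axis=1, keepdims=True)
    v = image_feats / np.linalg.norm(image_feats, axis=1, keepdims=True)
    sim = t @ v.T  # (n_t, n_i) cosine similarities

    adj = np.zeros((n_t + n_i, n_t + n_i))
    adj[:n_t, :n_t] = 1.0  # sentence-sentence edges (assumed fully connected)
    top = np.argsort(-sim, axis=1)[:, :k]  # indices of the k best images per sentence
    for s, imgs in enumerate(top):
        for j in imgs:
            adj[s, n_t + j] = adj[n_t + j, s] = 1.0
    np.fill_diagonal(adj, 0.0)  # self-loops are added inside the GCN layer
    return adj

def gcn_layer(adj, h, w):
    """One propagation step: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    a_hat = adj + np.eye(len(adj))
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ h @ w, 0.0)

rng = np.random.default_rng(0)
text = rng.normal(size=(4, 8))    # 4 sentence vectors (toy data)
images = rng.normal(size=(3, 8))  # 3 image vectors (toy data)
adj = topk_cross_modal_adjacency(text, images, k=1)
h = gcn_layer(adj, np.vstack([text, images]), rng.normal(size=(8, 8)))
print(adj.shape, h.shape)
```

In this sketch, an image that no sentence selects ends up with only its self-loop, so it contributes nothing to the sentence representations; this is one simple way irrelevant visual content could be kept out of the fused representation.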

2. Related Work

2.1. Document-Level Sentiment Analysis

2.2. Document-Level Multimodal Sentiment Analysis

3. Methodology

3.1. Task Definition

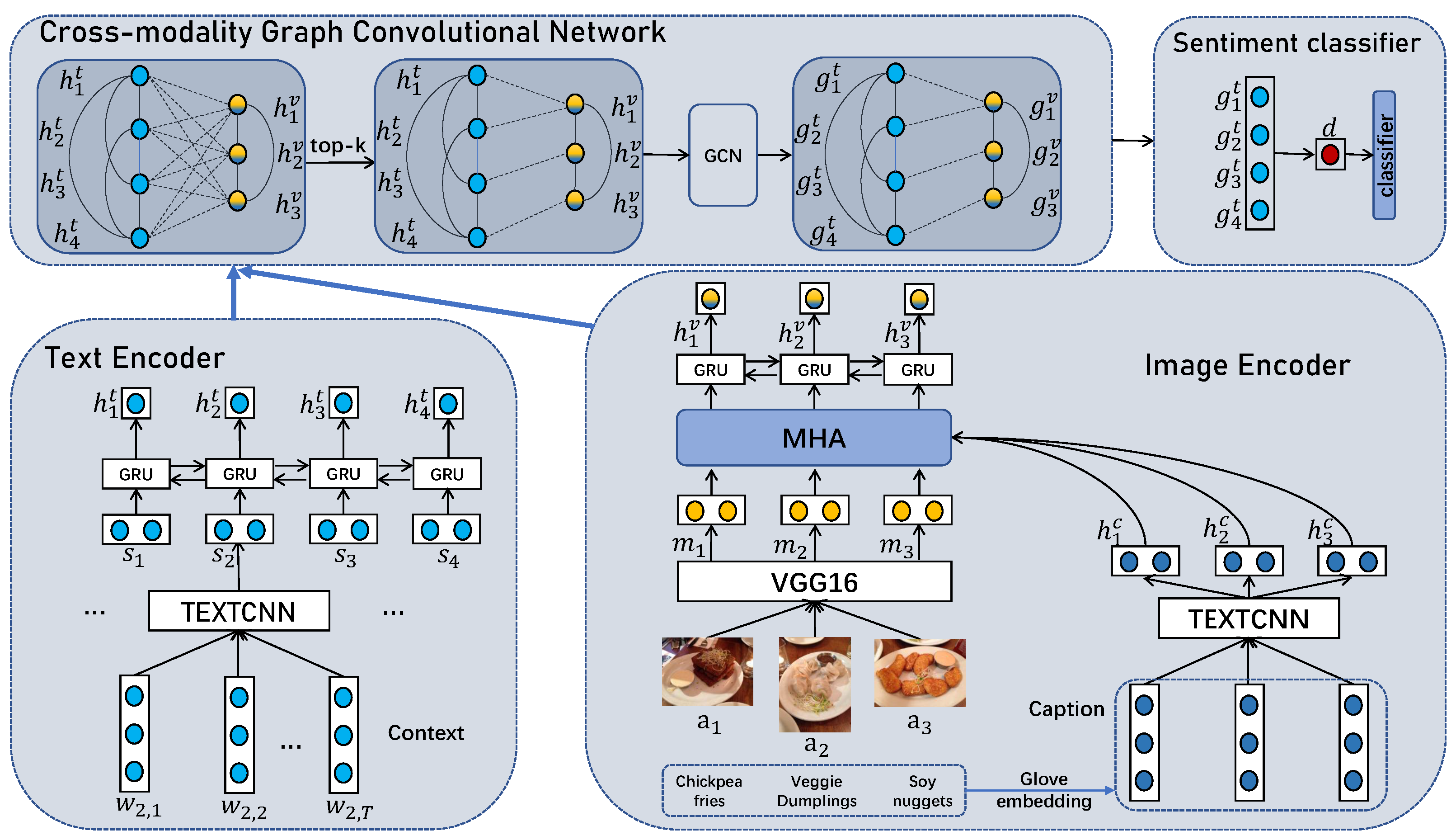

3.2. Model Architecture

3.2.1. Text Encoder

3.2.2. Image Encoder

3.2.3. Cross-Modal Graph Convolutional Network Module

- Graph construction

- Graph learning

3.2.4. Sentiment Classifier

3.2.5. Model Training

4. Experiment

4.1. Dataset

4.2. Experimental Settings

4.3. Baselines

- Bi-GRU [37]: A classical model based on bi-directional gated recurrent units that extracts word-level textual features and generates textual representations. Because a review contains multiple images, a pooling operation aggregates the visual features, which are then concatenated with the textual features for sentiment classification. Bi-GRU-a and Bi-GRU-m denote the variants that apply average pooling and maximum pooling to the images, respectively.

- HAN [5]: A hierarchical attention network that extracts word-level and sentence-level features separately and then generates the document representation by aggregating sentence features. For visual features, the variants HAN-a and HAN-m apply average pooling and maximum pooling, respectively.

- TFN [7]: A tensor fusion approach that calculates the correlations between the features of different modalities and fuses the multimodal information. For visual features, the variants TFN-a and TFN-m apply average pooling and maximum pooling, respectively.

- VistaNet [8]: A HAN-based approach that uses visual features as the query when computing attention over sentence representations, in order to highlight important sentences. Textual and visual information is fused via weighted summation.

- LD-MAN [29]: A HAN-based approach that models the textual layout as visual locations in order to align images with the corresponding text. The multimodal representation is learned with distance-based coefficients and a multimodal attention module.

- GAFN [9]: A gated attention network that fuses visual and textual information to generate vector representations for sentiment classification.

- HGLNET [38]: A hierarchical global–local feature fusion network that fuses the global features of the textual and visual modalities and also captures the fine-grained local semantic interactions between the two modalities.
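
The "-a" and "-m" variants above differ only in how the image features of a review are pooled before being concatenated with the text representation. A minimal sketch of that step follows; the function name `fuse_with_pooled_images` and the toy vectors are hypothetical and serve only to illustrate the two pooling modes.

```python
import numpy as np

def fuse_with_pooled_images(text_vec, image_feats, mode="avg"):
    """Pool a (num_images, dim) feature matrix and append it to the text vector."""
    if mode == "avg":
        pooled = image_feats.mean(axis=0)  # average pooling over images
    elif mode == "max":
        pooled = image_feats.max(axis=0)   # element-wise maximum over images
    else:
        raise ValueError(f"unknown pooling mode: {mode}")
    return np.concatenate([text_vec, pooled])

# Toy inputs: one 2-d text vector and three 2-d image vectors.
text_vec = np.array([0.5, -1.0])
image_feats = np.array([[1.0, 4.0],
                        [3.0, 0.0],
                        [2.0, 2.0]])

fused_a = fuse_with_pooled_images(text_vec, image_feats, "avg")  # image part: column means
fused_m = fuse_with_pooled_images(text_vec, image_feats, "max")  # image part: column maxima
print(fused_a, fused_m)
```

Either pooled vector has a fixed size regardless of how many images a review contains, which is what allows reviews with different numbers of images to share one classifier.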

4.4. Experimental Results and Analysis

4.5. Ablation Study

4.6. Impact of GCN Layers

4.7. Impact of Top-k in Cross-Modal Graph Convolutional Network

4.8. Hyperparameter Analysis

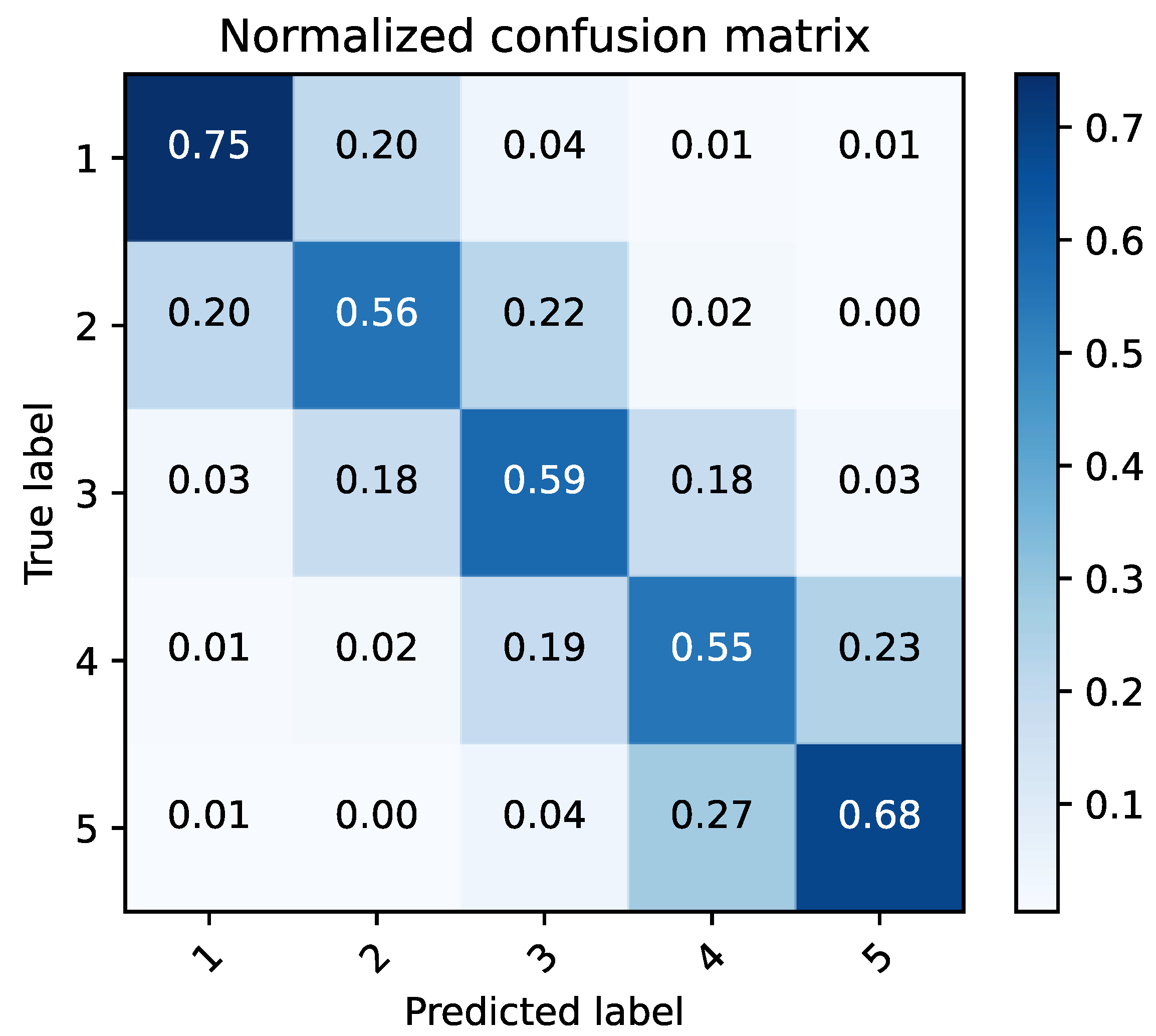

4.9. Visualization

4.10. Case Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Rhanoui, M.; Mikram, M.; Yousfi, S.; Barzali, S. A CNN-BiLSTM model for document-level sentiment analysis. Mach. Learn. Knowl. Extr. 2019, 1, 832–847.

2. Chambers, A. Statistical Models for Text Classification and Clustering: Applications and Analysis; University of California: Irvine, CA, USA, 2013.

3. Jiang, D.; He, J. Text semantic classification of long discourses based on neural networks with improved focal loss. Comput. Intell. Neurosci. 2021, 2021.

4. Zhou, C.; Sun, C.; Liu, Z.; Lau, F. A C-LSTM neural network for text classification. arXiv 2015, arXiv:1511.08630.

5. Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1480–1489.

6. Huang, L.; Ma, D.; Li, S.; Zhang, X.; Wang, H. Text level graph neural network for text classification. arXiv 2019, arXiv:1910.02356.

7. Zadeh, A.; Chen, M.; Poria, S.; Cambria, E.; Morency, L.P. Tensor fusion network for multimodal sentiment analysis. arXiv 2017, arXiv:1707.07250.

8. Truong, Q.T.; Lauw, H.W. VistaNet: Visual aspect attention network for multimodal sentiment analysis. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 305–312.

9. Du, Y.; Liu, Y.; Peng, Z.; Jin, X. Gated attention fusion network for multimodal sentiment classification. Knowl.-Based Syst. 2022, 240, 108107.

10. Xiong, H.; Yan, Z.; Zhao, H.; Huang, Z.; Xue, Y. Triplet Contrastive Learning for Aspect Level Sentiment Classification. Mathematics 2022, 10, 4099.

11. Chen, Y. Convolutional Neural Network for Sentence Classification. Master’s Thesis, University of Waterloo, Waterloo, ON, Canada, 2015.

12. Zhang, X.; Zhao, J.; LeCun, Y. Character-level convolutional networks for text classification. Adv. Neural Inf. Process. Syst. 2015, 28, 1–9.

13. Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent convolutional neural networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 29.

14. Liu, P.; Qiu, X.; Huang, X. Recurrent neural network for text classification with multi-task learning. arXiv 2016, arXiv:1605.05101.

15. Rao, G.; Huang, W.; Feng, Z.; Cong, Q. LSTM with sentence representations for document-level sentiment classification. Neurocomputing 2018, 308, 49–57.

16. Huang, Y.; Chen, J.; Zheng, S.; Xue, Y.; Hu, X. Hierarchical multi-attention networks for document classification. Int. J. Mach. Learn. Cybern. 2021, 12, 1639–1647.

17. Huang, W.; Chen, J.; Cai, Q.; Liu, X.; Zhang, Y.; Hu, X. Hierarchical Hybrid Neural Networks with Multi-Head Attention for Document Classification. Int. J. Data Warehous. Min. (IJDWM) 2022, 18, 1–16.

18. Choi, G.; Oh, S.; Kim, H. Improving document-level sentiment classification using importance of sentences. Entropy 2020, 22, 1336.

19. Sinha, K.; Dong, Y.; Cheung, J.C.K.; Ruths, D. A hierarchical neural attention-based text classifier. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 817–823.

20. Liu, F.; Zheng, J.; Zheng, L.; Chen, C. Combining attention-based bidirectional gated recurrent neural network and two-dimensional convolutional neural network for document-level sentiment classification. Neurocomputing 2020, 371, 39–50.

21. Wang, H.; Meghawat, A.; Morency, L.P.; Xing, E.P. Select-additive learning: Improving generalization in multimodal sentiment analysis. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 949–954.

22. Anastasopoulos, A.; Kumar, S.; Liao, H. Neural language modeling with visual features. arXiv 2019, arXiv:1903.02930.

23. Poria, S.; Chaturvedi, I.; Cambria, E.; Hussain, A. Convolutional MKL based multimodal emotion recognition and sentiment analysis. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 439–448.

24. Zadeh, A.; Liang, P.P.; Poria, S.; Vij, P.; Cambria, E.; Morency, L.P. Multi-attention recurrent network for human communication comprehension. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

25. Xu, N.; Mao, W. Multisentinet: A deep semantic network for multimodal sentiment analysis. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; pp. 2399–2402.

26. Xu, N.; Mao, W.; Chen, G. A co-memory network for multimodal sentiment analysis. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018; pp. 929–932.

27. Zhu, T.; Li, L.; Yang, J.; Zhao, S.; Liu, H.; Qian, J. Multimodal sentiment analysis with image-text interaction network. IEEE Trans. Multimed. 2022, 1.

28. Tian, Y.; Sun, X.; Yu, H.; Li, Y.; Fu, K. Hierarchical self-adaptation network for multimodal named entity recognition in social media. Neurocomputing 2021, 439, 12–21.

29. Guo, W.; Zhang, Y.; Cai, X.; Meng, L.; Yang, J.; Yuan, X. LD-MAN: Layout-driven multimodal attention network for online news sentiment recognition. IEEE Trans. Multimed. 2020, 23, 1785–1798.

30. Bengio, Y.; Ducharme, R.; Vincent, P. A neural probabilistic language model. Adv. Neural Inf. Process. Syst. 2000, 13, 1137–1155.

31. Hazarika, D.; Poria, S.; Mihalcea, R.; Cambria, E.; Zimmermann, R. Icon: Interactive conversational memory network for multimodal emotion detection. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 2594–2604.

32. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

33. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255.

34. Chen, M.; Wei, Z.; Huang, Z.; Ding, B.; Li, Y. Simple and deep graph convolutional networks. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; PMLR: Coventry, UK, 2020; pp. 1725–1735.

35. Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

36. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

37. Tang, D.; Qin, B.; Liu, T. Document modeling with gated recurrent neural network for sentiment classification. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1422–1432.

38. Wu, J.; Zhao, J.; Xu, J. HGLNET: A Generic Hierarchical Global-Local Feature Fusion Network for Multi-Modal Classification. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

39. Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Dataset | Train | Valid | Test (BO) | Test (CH) | Test (LA) | Test (NY) | Test (SF) |
|---|---|---|---|---|---|---|---|
| #Docs | 35,435 | 2215 | 315 | 325 | 3730 | 1715 | 570 |
| Avg. #Words | 225 | 226 | 211 | 208 | 223 | 219 | 244 |
| Max. #Words | 1134 | 1145 | 1099 | 1095 | 1103 | 1080 | 1116 |
| Min. #Words | 10 | 12 | 14 | 15 | 12 | 14 | 10 |
| Avg. #Images | 5.54 | 5.35 | 5.25 | 5.60 | 5.43 | 5.52 | 5.69 |
| Max. #Images | 147 | 38 | 42 | 97 | 128 | 222 | 74 |
| Min. #Images | 3 | 3 | 3 | 3 | 3 | 3 | 3 |

| Methods | BO | CH | LA | NY | SF | Avg. |
|---|---|---|---|---|---|---|
| TFN-a | 46.35 | 43.69 | 43.91 | 43.79 | 42.81 | 43.89 |
| TFN-m | 48.25 | 47.08 | 46.70 | 46.71 | 47.54 | 46.87 |
| Bi-GRU-a | 51.23 | 51.33 | 48.99 | 49.55 | 48.60 | 49.32 |
| Bi-GRU-m | 53.92 | 53.51 | 52.09 | 52.14 | 51.36 | 52.20 |
| HAN-a | 55.18 | 54.88 | 53.11 | 52.96 | 51.98 | 53.16 |
| HAN-m | 56.77 | 57.02 | 55.06 | 54.66 | 53.69 | 55.01 |
| VistaNet | 63.81 | 65.74 | 62.01 | 61.08 | 60.14 | 61.88 |
| LD-MAN | 61.90 | 64.00 | 61.02 | 61.57 | 59.47 | 61.22 |
| GAFN | 61.60 | 66.20 | 59.00 | 61.00 | 60.70 | 60.10 |
| HGLNET | 65.47 | 69.58 | 60.78 | 63.43 | 60.35 | 62.07 |
| MIFGCN (Ours) | 62.86 | 67.38 | 62.01 | 61.17 | 64.04 | 62.29 |
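
The text does not state how the Avg. column is computed. The figures look consistent with an average weighted by the number of test documents per city rather than a plain mean of the five city accuracies; this is an inference from the numbers, not a statement from the authors. The short check below uses the VistaNet row and the test-set sizes from the dataset statistics; the helper name `weighted_average` is introduced only for this example.

```python
# Test-set sizes per city (from the "#Docs" row of the dataset table).
test_docs = {"BO": 315, "CH": 325, "LA": 3730, "NY": 1715, "SF": 570}

# Per-city accuracy (%) reported for VistaNet.
vistanet_acc = {"BO": 63.81, "CH": 65.74, "LA": 62.01, "NY": 61.08, "SF": 60.14}

def weighted_average(acc, sizes):
    """Average the accuracies, weighting each city by its number of test documents."""
    total = sum(sizes.values())
    return sum(acc[city] * sizes[city] for city in sizes) / total

weighted = weighted_average(vistanet_acc, test_docs)
unweighted = sum(vistanet_acc.values()) / len(vistanet_acc)

print(round(weighted, 2))    # 61.88, matching the reported Avg.
print(round(unweighted, 2))  # 62.56, which does not match
```

Because Los Angeles and New York hold most of the test documents, the average is dominated by those two columns. Other rows may differ from this calculation by about 0.01, since the per-city values are themselves rounded.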

| Model | BO | CH | LA | NY | SF | Avg. |
|---|---|---|---|---|---|---|
| MIFGCN (Full Model) | 62.86 | 67.38 | 62.04 | 61.17 | 64.04 | 62.29 |
|  | 65.08 | 67.08 | 61.39 | 61.05 | 58.77 | 61.53 |
|  | 57.46 | 65.54 | 61.96 | 60.58 | 61.05 | 61.49 |
|  | 64.13 | 65.54 | 61.47 | 60.76 | 59.12 | 61.41 |
|  | 62.22 | 66.46 | 60.70 | 60.52 | 61.58 | 61.08 |

| Layers | Avg. |
|---|---|
| 1 | 61.26 |
| 2 | 61.31 |
| 4 | 62.29 |
| 8 | 61.18 |
| 16 | 61.16 |
| 32 | 60.72 |

| k | Avg. |
|---|---|
| 3 | 61.43 |
| 2 | 61.60 |
| 1 | 62.29 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zeng, D.; Chen, X.; Song, Z.; Xue, Y.; Cai, Q. Multimodal Interaction and Fused Graph Convolution Network for Sentiment Classification of Online Reviews. Mathematics 2023, 11, 2335. https://doi.org/10.3390/math11102335
