Abstract

(1) Background: There was a need for 3D image reconstruction from a series of 2D projections in medical applications. However, additional exposure to X-ray projections may harm human health. To alleviate it, minimizing the projection numbers is a solution to reduce X-ray exposure, but this would cause significant image noise and artifacts. (2) Purpose: In this study, a method was proposed for the reconstruction of a 3D image from a minimal set of 2D X-ray projections using a B-spline-based deformable transformation. (3) Methods: The inputs of this method were a 3D image which was acquired in previous treatment and used as a prior image and a minimal set of 2D projections which were acquired during the current treatment. The goal was to reconstruct a new 3D image in current treatment from the two inputs. The new 3D image was deformed from the prior image via the displacement matrixes that were interpolated by the B-spline coefficients. The B-spline coefficients were solved with the objective function, which was defined as the mean square error between the reconstructed and the ground-truth projections. In the optimization process the gradient of the objective function was calculated, and the B-spline coefficients were then updated. For the acceleration purpose, the computation of the 2D and 3D image reconstructions and B-spline interpolation were implemented on a graphics processing unit (GPU). (4) Results: When the scan angles were more than 60°, the image quality was significantly improved, and the reconstructed image was comparable to that of the ground-truth image. As the scan angles were less than 30°, the image quality was significantly degraded. The influence of the scan orientation on the image quality was minor. With the application of GPU acceleration, the reconstruction efficiency was improved by hundred times compared to that of the conventional CPU. (5) Conclusions: The proposed method was able to generate a high-quality 3D image using a few 2D projections, which amount to ~ 20% of the total projections required for a standard image. The introduction of the B-spline-interpolated displacement matrix was effective in the suppressing noise in the reconstructed image. This method could significantly reduce the imaging time and the radiation exposure of patients under treatment.

MSC:

68U10

1. Introduction

A 3D image, such as computed tomography (CT) or cone-beam CT (CBCT), can be generated from a series of 2D projections acquired in a single X-ray scan. They have been widely used in radiation therapy for target localization [1,2,3,4]. CBCT imaging delivers a radiation dose to a large volume of the patient’s body and collects the residual dose on the detectors. As the accumulated imaging doses to patients could be significant and increase the risk of secondary cancer induction [5,6,7], there is a demand for low-dose CBCT with an adequate image quality in clinic. For reducing the imaging dose, two parameters (exposure level and projection number) were needed to be adjusted. However, reducing the exposure level will lead to increased noise in the reconstructed images, while reducing the projection number will violate the Nyquist–Shannon sampling theorem, leading to serious streak artifacts.

To solve these issues, compressed sensing methods based on the total variation (TV) have been developed to improve the image quality of low-dose CT/CBCT [8,9,10,11,12]. Although these methods successfully reduce the noise and streak artifacts, they also tend to over-smooth the edge information. Alternatively, other compressed-sensing-based methods used prior images for low-dose CBCT [13]. In the prior-image-constrained compressed sensing (PICCS) method, prior images were used as an additional constraint to minimize the image’s total variation [14]. Adaptive-prior-image-constrained compressed sensing (APICCS) was then proposed to enhance the image quality of PICCS [15,16]. The rigid or deformable registration between the prior image and the reconstructed volume was then incorporated to improve the image quality [17,18]. Moreover, a motion model was introduced to further reduce the scan angle needed for CBCT reconstruction [19,20,21].

Based on the compressed sensing theory, images can be reconstructed from limited-view and limited-angle projections [22]. However, these methods generally require the sparseness prior of the image, which may be not valid. Based on the deformable transform, images can be reconstructed from prior images [23,24,25,26,27]. Lei et al. proposed a deformable field map method to reconstruct 3D images from limited-angle 2D projections. The missing information was made up of highly correlated prior images. However, due to the high fluctuation of the gradient derived from the objective function, the resulted deformation field map comes with a higher deviation, which could result in the failure of the image transformation [28,29]. To solve this issue, the gradient or deformation field map should be smoothed in a rational way.

Several smoothing methods for deformable transformations can be found in image registration algorithms, including demons registration, viscous fluid registration, B-spline registration and thin-plate splines. Among them, B-spline is the most popular one because of its flexibility and robustness which provide the ability to represent the complex spatial distribution of the data and image in 3D [30,31]. B-spline is used to define the displacement matrixes which map voxels in a moving image to those in a reference image. The individual voxel movement between the two images is parameterized in terms of the uniformly spaced control points that are aligned with the voxel grid, and the displacement vectors are obtained via the interpolation of the control point coefficients using piecewise continuous B-spline basis functions. B-spline interpolation is computationally intensive and requires hours for higher image resolutions [32].

As there are many computation intensity components in CBCT reconstruction, it is more efficient to implement them on a graphics processing unit (GPU) which can handle multiple similar tasks through parallel computation [33]. Its effectiveness was already demonstrated in accelerating image reconstruction tasks, including DRR [34], CBCT [35], 4DCT [36], etc. The integrated GPU-CPU platform can potentially be applied to different medical image applications, such as medical image segmentation and registration [37,38]. Also, GPUs paved the way for various promising real-time and on-line clinical applications, such as tumor motion tracking [39], respiration motion tracking and cardiac motion tracking [40].

In this study, a B-spline-based deformable transformation method was proposed for 3D CBCT reconstructions. Instead of the real-value form of the displacement matrixes in traditional methods, the B-spline coefficients of the displacement matrixes were employed. These coefficients were optimized by minimizing the objective function and finally interpolated the real-value displacement matrixes. For acceleration purposes, the computation of 2D and 3D reconstructions were implemented on a GPU. The proposed method was evaluated through a clinical head-and-neck case and compared with the traditional method. Finally, the influence of the scan angle and scan orientation on the image quality was analyzed, and the advantage of this method was discussed.

2. Methods

2.1. Deformation-Based Reconstruction Method

The traditional method used the real-value displacement matrixes to transform the prior image into the target image. In the following, V0 and V are the prior image and target image, and D is the displacement matrix. The transformation from V0 to V can be formulated as

where are the displacements matrixes along x, y, and z axes, respectively. n is the image size, and ijk are the 3D indexes of voxel. V was transformed from V0 through trilinear interpolation as described below.

Here, are the three-dimensional indices of grid adjacent to the voxel coordinates, and are the corresponding fractional parts. , and are pairs of weights along three axes.

The fidelity of target image could be guaranteed by approximating its projections to the ground-truth projections. The 2D projections Y can be calculated from V as

where P is the projection operator implemented by ray-tracing algorithm. As there are not enough equations in (3), to solve this ill-posed problem, the regularization term was introduced. Here, the bending energy defined as below was employed to enforce the flatness of D.

The objective function was then established as

This problem could be transformed to an unconstraint problem with a weight µ

The gradient of f(D) could be derived as

where P−1 is the inverse projection operator which backprojects 2D projections onto 3D volume. could be calculated with respect to D along three axes.

could be calculated with respect to D as below.

When both and were obtained, in Equation (7) could be solved.

2.2. B-Spline Interpolation

The uniform cubic B-spline basis functions were employed in this study. The real-value displacement matrix was interpolated from B-spline coefficients. In 3D case, the deformation field at any given voxel was determined with the 64 control points in the immediate vicinity of the voxel’s housing tile. B-spline interpolation was performed for each voxel with respect to the 64 control point coefficients as follows

where Ixlmn is the spline coefficient defining the x component of the displacement vector for one of the 64 control points. Nx, Ny and Nz are voxels per region (VPR) defining the distance between control points in terms of voxels in the x, y and z directions, respectively. The volume was, therefore, segmented by the B-spline control point grid into many equal-sized tiles of dimensions Nx × Ny × Nz. The three-dimensional indices xt, yt and zt of the tile for the voxel at x, y and z was given by , respectively. The coordinates of the voxel within its tile were .

The uniform cubic B-spline basis functions were given by βl(u), βm(v) and βn(w) along the x, y and z axes, respectively.

VPR was an important parameter which controlled the approximation quality of B-spline curve for the target image. The fine VPR reduced the approximation error but caused higher computation costs. The coarse VPR increased the approximation error but was more time efficient. The balance between the accuracy and efficiency was investigated in the latter section.

2.3. B-Spline-Based Reconstruction Method

With B-spline-interpolated displacement matrix D, Equations (1) and (3) were reformulated as

and the objective function of Equation (5) was updated as

The gradient of the objective function was then expressed as:

The gradient could be calculated using chain rule as

Combining Equation (2) and Equation (11), could be calculated as

According to the definition of in Equation (10), is given by

The bending energy function could also be updated as

and its discrete form is

could be calculated using chain rule as

where

Combining Equations (13) and (15) into Equation (12), could be finally obtained as

2.4. Evaluation

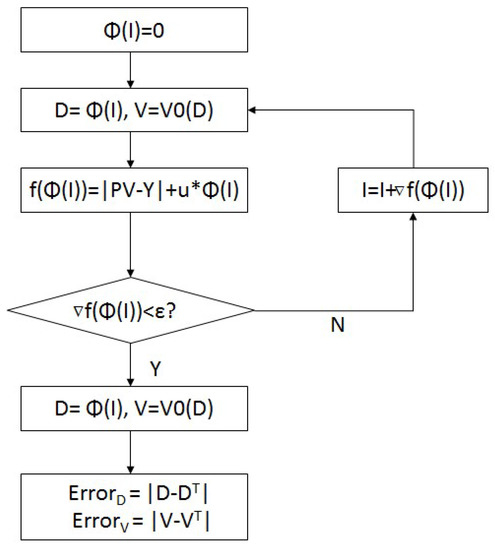

The proposed method was implemented in an iterative manner as shown in Figure 1. Initially, the B-spline coefficients and the displacement matrixes were set to zero. The gradient of the objective function was then calculated, and the B-spline coefficients were updated based on the resulting gradient. After certain iterations, the optimization process converged when the value of was less than the threshold or the maximum number of iterations reached. The real-value displacement matrixes were interpolated from the optimized B-spline coefficients. Then, the target image was transformed from the prior image via the resulted real-value displacement matrixes. Finally, the approximation errors of B-spline-interpolated displacement matrix and the reconstructed target image were calculated for analysis.

Figure 1.

The flowchart of B-spline-based reconstruction method.

To evaluate the similarity between the reconstructed target image (VR) and the ground-truth target image (VT), three intensity-based statistical metrics, normalized cross correlation, mutual information and mean absolute percentage error, were employed. Normalized cross correlation (NCC) was defined as

where µR and µT are means of VR and VT and where σR and σT are standard deviations of VR and VT. Mutual information (MI) was defined as

where p(xR) and p(xT) are the normalized histograms of VR and VT and where p(xR, xT) is the joint histogram of VR and VT. In order to calculate the intensity difference between two images, the mean absolute percentage error (MAPE) was defined as

where xRi and xTi are the voxels of images VR and VT and where n is the total number of voxels.

The proposed method was examined using a clinical head-and-neck patient. The CBCT image size was 256 × 256 × 256, and the cone-beam projection size was 512 × 384. The full-angle reference CBCT and target CBCT were reconstructed with FDK method using two sets of CB projections that were acquired on two separate days. The CB projections were acquired at every 0.55°. The influence of scan angle (30°, 45°, 60° and 90°) and scan orientation (central angle at 0°, 45° and 90°) were investigated. The accuracy of our method was evaluated by comparing the limited-angle CBCT (target image) to the full-angle CBCT (ground-truth) that was reconstructed with Feldkamp–Davis–Kress (FDK) method. The reconstruction and evaluation codes were built on the open-source platform, Plastimatch, which provides B-spline-based image registration and GPU-based image reconstruction toolkits.

3. Results

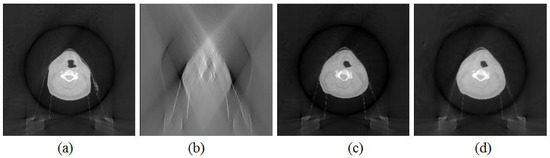

The images reconstructed using the proposed and FDK methods on the clinical head-and-neck case is shown in Figure 2. The projections were acquired with a 0° scan orientation and a 60° scan angle. The prior image that was reconstructed using a full-angle scan with the FDK method is shown in Figure 2a. The target image that was reconstructed with the FDK method using a limited-angle scan is shown in Figure 2b. The target image that was reconstructed with the B-spline-based reconstruction method using a limited-angle scan is shown in Figure 2c. As the ground-truth image, the target image that was reconstructed with the FDK method using a full-angle scan is shown in Figure 2d. The similarity between the images in Figure 2c,d was high, which indicated that the proposed method could generate comparable images, using a limited-angle scan, to the ground-truth image, using a full-angle scan.

Figure 2.

The comparison between images reconstructed with the different reconstruction methods. (a) Prior image acquired reconstructed with FDK method using full-angle scan. (b) The target image reconstructed with FDK method using limited-angle scan. (c) The target image reconstructed with B-spline-based reconstruction method using limited-angle scan. (d) The target image reconstructed with FDK method using full-angle scan.

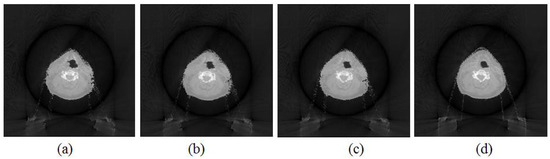

The images that were reconstructed with the deformation-based reconstruction method as described in Section 2.1 are shown in Figure 3. The projections were acquired with a 0° scan orientation and 30°/45°/60°/90° angles. It was noticed that there was strong noise in the target images that were reconstructed with 30°, 45° and 60° scan angles. The image quality of the reconstructed images became worse as the scan angle decreased. Among the four images, the image that was reconstructed with a 90° scan angle (Figure 3d) showed the best quality and was closer to the ground-truth image (Figure 2d).

Figure 3.

The images reconstructed with the deformation-based reconstruction method as described in Section 2.1. (a) Image reconstructed with 30° scan angle. (b) Image reconstructed with 45° scan angle. (c) Image reconstructed with 60° scan angle. (d) Image reconstructed with 90° scan angle.

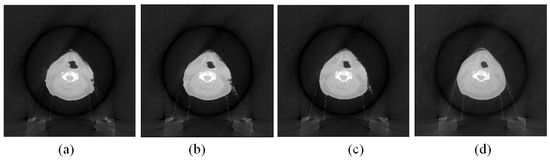

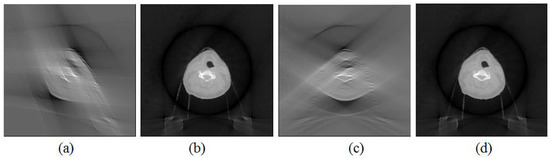

The images that were reconstructed with the proposed B-spline-based reconstruction method using a limited-angle scan as described in Section 2.3 are shown in Figure 4. The setting of this reconstruction was the same as the one used in Figure 3. It was noticed that the noise in the images as shown in Figure 4 was greatly suppressed comparing to that in the images in Figure 3. The image quality with the 60° and 90° scan angles was better and was closer to that of the ground-truth image (Figure 2d). The image quality with the 90° scan angle (Figure 4d) was the best but required 50% more projections than that with the 60 ° scan angle (Figure 4c).

Figure 4.

The images reconstructed with the B-spline-based reconstruction method as described in Section 2.3. (a) Image reconstructed with 30° scan angle. (b) Image reconstructed with 45° scan angle. (c) Image reconstructed with 60° scan angle. (d) Image reconstructed with 90° scan angle.

The influence of the scan orientation on the image quality was also investigated. The images that were reconstructed with the FDK method and the proposed method with a 0° scan orientation and a 60° scan angle are shown in Figure 2b,c. The images that were reconstructed with both methods with a 45° scan orientation and a 60° scan angle are shown in Figure 5a,b, while the images that were reconstructed with both methods with a 90° scan orientation and a 60° scan angle are shown in Figure 5c,d. The images as shown in Figure 3a–c that were reconstructed with the FDK method with three scan orientations were significantly different. The images as shown in Figure 2c and Figure 5b,d that were reconstructed with the proposed method with three scan orientations were similar to each other. This indicated that the influence of the scan orientation on the image quality of the reconstructed image was minor.

Figure 5.

The image reconstructed with different scan orientations with the FDK and proposed methods. (a) Image reconstructed with 45° scan orientation with FDK method. (b) Image reconstructed with 45° scan orientation with the proposed method. (c) Image reconstructed with 90° scan orientation with FDK method. (d) Image reconstructed with 90° scan orientation with the proposed method.

The accuracy of the B-spline-interpolated displacement matrix and the reconstructed target image with respect to the different settings of the VPR and scan angles were investigated. In Table 1, the final B-spline-interpolated displacement matrix was compared to the ground-truth displacement matrix which was obtained from the deformable transformation between the prior and ground-truth images. For a combination of the scan angle and VPR, three similarity metrics (NCC, MI and MAPE) were calculated. The result showed that, with a smaller VPR and a larger scan angle, the similarity between the resulted and ground-truth displacement matrixes was higher. In Table 2, the final reconstructed target image was compared to the ground-truth target image. For a combination of the scan angle and VPR, the same three similarity metrics were calculated. The result showed that, with a smaller VPR and a larger scan angle, the similarity between the reconstructed and ground-truth images was higher.

Table 1.

The accuracy of the B-spline-interpolated displacement matrix.

Table 2.

The accuracy of the reconstructed target image.

The running time on the CPU and GPU platforms were compared in Table 3. The inputs were the 2D projections acquired with the 30° or 60° scan angle and the 3D prior image. The dimensions of the 2D projections were 512 × 384, and the dimensions of the 3D image was 256 × 256 × 256. The output was the 3D image in the dimensions of 256 × 256 × 256. The computation of the 2D and 3D image reconstructions and B-spline coefficients were all accomplished on a GPU. Two popular graphics cards, Nvidia Geforce GTX 1080 and Nvidia Geforce GTX 2080, were tested. The computer was equipped with a Intel Xeon 2609 CPU and a 64GB DRR4 memory. It showed that the running time on a GPU was hundred times faster than that on a traditional CPU.

Table 3.

Running time on CPU and GPU for different reconstruction tasks.

4. Discussion

With a limited-angle scan, the proposed method could reconstruct images comparable to the standard images reconstructed with a full-angle scan. This will significantly reduce the time and dose during multiple data acquisition and would be beneficial for patients under treatment. With the introduction of the B-spline interpolation, the image noise and artifacts were suppressed, and the edge of the anatomical structures was improved. The VPR had a certain effect on the image quality, but it could be minimized with smaller values. With the acceleration of a GPU, the iterative CBCT reconstruction could be mostly accomplished within 10 min. This would make it possible for several clinical scenarios.

There are many factors that could affect the image quality and accuracy of the reconstructed images. Among them, the scan orientation and scan angle are the most important ones. Our experiments showed that the scan orientation had a small influence on the images, but the scan angle had a large influence on the resulting images. When the scan angle was less than 30°, there was strong noise and a larger structure discontinuity in the reconstructed images. A larger scan angle would result in a better image but would take more time for reconstruction computation. Therefore, to balance accuracy and efficiency, a 60° scan angle would be favorable for clinical use.

The introduction of the B-spline interpolation was effective for noise suppression. It could also be feasible in the other types of limited-angle reconstruction methods, such as total-variation-based and optical-flow-based reconstruction methods. In addition, this method could also be used for the reconstruction of 4D-CT and 4D-CBCT with limited-angle projections. In these 4D reconstruction tasks, the 3D volume in one phase could be used as a prior image to predict the 3D volume of another phase. As a large amount of projections is usually required in 4D applications, the reduction of the time and dose resulted by the proposed method on data acquisition would be considerable.

It is also possible to extend this method to the other industrial domains, such as magnetic resonance imaging, radar interferometry, electromagnetism, etc. As these fields differ from medical imaging, it would be important to identify the proper setting and parameters for them. For example, the imaging principle of MRIs would differ from that of CT/CBCTs substantially. The former is generated by detecting the direction of the change of protons in the water, while the latter is generated from the X-ray interaction with the material on its path and finally deposing the residual radiation dose on the detectors. The proper preprocessing and adjustments would be necessary.

5. Conclusions

A new B-spline-based limited-angle reconstruction method was developed and demonstrated its feasibility in reconstructing high-quality images comparable to the standard images. With the introduction of the B-spline interpolation, the image noise was significantly suppressed, and the contour of the anatomical structures was improved. With the GPU acceleration, the limited clinical time of image reconstruction could be greatly alleviated. Potentially, this reconstruction method could be used in the other industrial domains, but proper preprocessing and adjustments would be needed.

Author Contributions

Conception and design: H.Y., Administrative support: J.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of China (No. 11975312) and the Beijing Municipal Natural Science Foundation (7202170).

Conflicts of Interest

The authors have no conflict of interest to declare.

References

- Nasseh, I.; Al-Rawi, W. Cone Beam Computed Tomography. Dent. Clin. North Am. 2018, 62, 361–391. [Google Scholar] [CrossRef] [PubMed]

- Ruschin, M.; Komljenovic, P.T.; Ansell, S.; Ménard, C.; Bootsma, G.; Cho, Y.B.; Chung, C.; Jaffray, D. Cone beam computed tomography image guidance system for a dedicated intracranial radiosurgery treatment unit. Int. J. Radiat. Oncol. Biol. Phys. 2013, 85, 243–250. [Google Scholar] [CrossRef] [PubMed]

- Létourneau, D.; Wong, J.W.; Oldham, M.; Gulam, M.; Watt, L.; Jaffray, D.A.; Siewerdsen, J.H.; Martinez, A.A. Cone-beam-CT guided radiation therapy: Technical implementation. Radiother. Oncol. 2005, 75, 279–286. [Google Scholar] [CrossRef]

- Efird, J.T.; Jindal, C.; Podder, T.K.; Kang, K.H.; Biswas, T. Cone beam computed tomography-the need for future guidance. J. Thorac. Dis. 2022, 14, 3681–3683. [Google Scholar] [CrossRef] [PubMed]

- Hioki, K.; Araki, F.; Oono, T.; Tomiyama, Y.; Nakaguchi, Y. Monte Carlo-calculated patient organ doses from kV-cone beam CT in image-guided radiation therapy. Biomed. Phys. Eng. Express 2015, 1, 025203. [Google Scholar] [CrossRef]

- Ohno, T.; Araki, F.; Onizuka, R.; Hioki, K.; Tomiyama, Y.; Yamashita, Y. New absorbed dose measurement with cylindrical water phantoms for multidetector CT. Phys. Med. Biol. 2015, 60, 4517. [Google Scholar] [CrossRef]

- Santoso, A.P.; Song, K.H.; Qin, Y.; Gardner, S.J.; Liu, C.; Chetty, I.J.; Movsas, B.; Ajlouni, M.; Wen, N. Evaluation of gantry speed on image quality and imaging dose for 4D cone-beam CT acquisition. Radiat. Oncol. 2016, 11, 98. [Google Scholar] [CrossRef]

- Jia, X.; Lou, Y.; Li, R.; Song, W.Y.; Jiang, S.B. GPU-based fast cone beam CT reconstruction from undersampled and noisy projection data via total variation. Med. Phys. 2010, 37, 1757–1760. [Google Scholar] [CrossRef]

- Sidky, E.Y.; Pan, X. Image reconstruction in circular cone-beam computed tomography by constrained, total-variation minimization. Phys. Med. Biol. 2008, 53, 4777–4807. [Google Scholar] [CrossRef]

- Shouliang, Q.; Teng, Y.; Kang, Y.; Qi, S. A separable quadratic surrogate total variation minimization algorithm for accelerating accurate CT reconstruction from few-views and limited-angle data. Med. Phys. 2018, 45, 535–548. [Google Scholar]

- Song, J.; Liu, Q.H.; Johnson, G.A.; Badea, C.T. Sparseness prior based iterative image reconstruction for retrospectively gated cardiac micro-CT. Med. Phys. 2007, 34, 4476–4483. [Google Scholar] [CrossRef] [PubMed]

- Niu, S.; Gao, Y.; Bian, Z.; Huang, J.; Chen, W.; Yu, G.; Liang, Z.; Ma, J. Sparse-view x-ray CT reconstruction via total generalized variation regularization. Phys. Med. Biol. 2014, 59, 2997. [Google Scholar] [CrossRef] [PubMed]

- Qi, Z.; Chen, G.-H. Extraction of tumor motion trajectories using PICCS-4DCBCT: A validation study. Med. Phys. 2011, 38, 5530–5538. [Google Scholar] [CrossRef] [PubMed]

- Chen, G.-H.; Tang, J.; Leng, S. Prior image constrained compressed sensing (PICCS): A method to accurately reconstruct dynamic CT images from highly undersampled projection data sets. Med. Phys. 2008, 35, 660–663. [Google Scholar] [CrossRef] [PubMed]

- Lee, H.; Xing, L.; Davidi, R.; Li, R.; Qian, J.; Lee, R. Improved compressed sensing-based cone-beam CT reconstruction using adaptive prior image constraints. Phys. Med. Biol. 2012, 57, 2287. [Google Scholar] [CrossRef]

- Huang, J.; Zhang, Y.; Ma, J.; Zeng, D.; Bian, Z.; Niu, S.; Feng, Q.; Liang, Z.; Chen, W. Iterative image reconstruction for sparse-view CT using normal-dose image induced total variation prior. PLoS ONE 2013, 8, e79709. [Google Scholar] [CrossRef]

- Stayman, J.W.; Dang, H.; Ding, Y.; Siewerdsen, J.H. PIRPLE: A penalized-likelihood framework for incorporation of prior images in CT reconstruction. Phys. Med. Biol. 2013, 58, 7563. [Google Scholar] [CrossRef]

- Dang, H.; Wang, A.S.; Sussman, M.S.; Siewerdsen, J.H.; Stayman, J.W. dPIRPLE: A joint estimation framework for deformable registration and penalized likelihood CT image reconstruction using prior images. Phys. Med. Biol. 2014, 59, 4799. [Google Scholar] [CrossRef]

- Ren, L.; Zhang, Y.; Yin, F.F. A limited-angle intrafraction verification (LIVE) system for radiation therapy. Med Phys. 2014, 41, 020701. [Google Scholar] [CrossRef]

- Zhang, Y.; Yin, F.F.; Pan, T.; Vergalasova, I.; Ren, L. Preliminary clinical evaluation of a 4D-CBCT estimation technique using prior information and limited-angle projections. Radiother. Oncol. 2015, 115, 22–29. [Google Scholar] [CrossRef]

- Zhang, Y.; Yin, F.-F.; Zhang, Y.; Ren, L. Reducing scan angle using adaptive prior knowledge for a limited-angle intrafraction verification (LIVE) system for conformal arc radiotherapy. Phys. Med. Biol. 2017, 62, 3859. [Google Scholar] [CrossRef] [PubMed]

- Ertas, M.; Yildirim, I.; Kamasak, M.; Akan, A. Digital breast tomosynthesis image reconstruction using 2D and 3D total variation minimization. Biomed. Eng. 2013, 12, 112. [Google Scholar] [CrossRef] [PubMed]

- Badea, C.T.; Schreibmann, E.; Fox, T. A registration based approach for 4D cardiac micro-CT using combined prospective and retrospective gating. Med. Phys. 2008, 35, 1170–1179. [Google Scholar] [CrossRef] [PubMed]

- Isola, A.A.; Grass, M.; Niessen, W.J. Fully automatic nonrigid registration-based local motion estimation for motion-corrected iterative cardiac CT reconstruction. Med. Phys. 2010, 37, 1093–1109. [Google Scholar] [CrossRef]

- Ehrhardt, J.; Werner, R.; Säring, D.; Frenzel, T.; Lu, W.; Low, D.; Handels, H. An optical flow based method for improved reconstruction of 4D CT data sets acquired during free breathing. Med. Phys. 2007, 34, 711–721. [Google Scholar] [CrossRef] [PubMed]

- Lustig, M.; Donoho, D.; Pauly, J.M. Sparse MRI: The application of compressed sensing for rapid MR imaging. Magn. Reson. Med. 2007, 58, 1182–1195. [Google Scholar] [CrossRef] [PubMed]

- Nie, X.; Saleh, Z.; Kadbi, M.; Zakian, K.; Deasy, J.; Rimner, A.; Li, G. A super-resolution framework for the reconstruction of T2-weighted (T2w) time-resolved (TR) 4DMRI using T1w TR-4DMRI as the guidance. Med. Phys. 2020, 47, 3091–3102. [Google Scholar] [CrossRef]

- Ren, L.; Zhang, J.; Thongphiew, D.; Godfrey, D.J.; Wu, Q.J.; Zhou, S.M.; Yin, F.F. A novel digital tomosynthesis (DTS) reconstruction method using a deformation field map. Med. Phys. 2008, 35, 3110–3115. [Google Scholar] [CrossRef]

- Ren, L.; Chetty, I.J.; Zhang, J.; Jin, J.-Y.; Wu, Q.J.; Yan, H.; Brizel, D.M.; Lee, W.R.; Movsas, B.; Yin, F.-F. Development and clinical evaluation of a three-dimensional cone-beam computed tomography estimation method using a deformation field map. Int. J. Radiat. Oncol. Biol. Phys. 2012, 82, 1584–1593. [Google Scholar] [CrossRef]

- Tustison, N.J.; Avants, B.B. Directly manipulated free-form deformation image registration. IEEE Trans. Image Process. 2009, 18, 624–635. [Google Scholar] [CrossRef]

- Lu, X.; Suo, S.; Liu, H.; Zhang, S. Three-dimensional multimodal image non-rigid registration and fusion in a High Intensity Focused Ultrasound system. Comput. Aided Surg. 2012, 17, 1–12. [Google Scholar] [CrossRef] [PubMed]

- Rohde, G.K.; Aldroubi, A.; Dawant, B.M. The adaptive bases algorithm for intensity-based non-rigid image registration. IEEE Trans. Med. Imaging 2003, 22, 1470–1479. [Google Scholar] [CrossRef] [PubMed]

- Després, P.; Jia, X. A review of GPU-based medical image reconstruction. Phys. Med. 2017, 42, 76–92. [Google Scholar] [CrossRef] [PubMed]

- Dorgham, O.M.; Laycock, S.D.; Fisher, M.H. GPU accelerated generation of digitally reconstructed radiographs for 2-D/3-D image registration. IEEE Trans. Biomed. Eng. 2012, 59, 2594–2603. [Google Scholar] [CrossRef]

- de Molina, C.; Serrano, E.; Garcia-Blas, J.; Carretero, J.; Desco, M.; Abella, M. GPU-accelerated iterative reconstruction for limited-data tomography in CBCT systems. BMC Bioinform. 2018, 15, 171. [Google Scholar] [CrossRef] [PubMed]

- Tian, Z.; Jia, X.; Dong, B.; Lou, Y.; Jiang, S.B. Low-dose 4DCT reconstruction via temporal nonlocal means. Med. Phys. 2011, 38, 1359–1365. [Google Scholar] [CrossRef] [PubMed]

- Satpute, N.; Naseem, R.; Pelanis, E.; Gómez-Luna, J.; Cheikh, F.A.; Elle, O.J.; Olivares, J. GPU acceleration of liver enhancement for tumor segmentation. Comput. Methods Programs Biomed. 2020, 184, 105285. [Google Scholar] [CrossRef]

- Haghighi, B.; DEllingwood, N.; Yin, Y.; Hoffman, E.A.; Lin, C.L. A GPU-based symmetric non-rigid image registration method in human lung. Med. Biol. Eng. Comput. 2018, 56, 355–371. [Google Scholar] [CrossRef]

- Peng, B.; Wang, Y.; Hall, T.J.; Jiang, J. A GPU-Accelerated 3-D Coupled Subsample Estimation Algorithm for Volumetric Breast Strain Elastography. IEEE Trans. Ultrason. Ferroelectr. Freq. Control. 2017, 64, 694–705. [Google Scholar] [CrossRef]

- Lebert, J.; Ravi, N.; Kensah, G.; Christoph, J. Real-Time Optical Mapping of Contracting Cardiac Tissues With GPU-Accelerated Numerical Motion Tracking. Front. Cardiovasc. Med. 2022, 9, 787627. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).