1. Introduction

The supply chain comprises different sequential activities, such as replenishment, production and distribution, which must all be planned and optimized. The main management function of companies is planning [1]. Planning activities aim to effectively coordinate and schedule a company’s available resources [2]. Planning involves a set of decisions to be made by the planning manager. For example, a planner must decide the quantity of materials needed for production by taking into account storage capacity and production batches to reduce production and inventory costs, must decide production scheduling and sequencing on machines, and must finally decide the delivery flow of finished products to customers or distribution centers [3].

Many real-world combinatorial optimization problems, such as those in transportation and logistics [4,5,6] and manufacturing [7,8,9], pose a huge challenge due to the high complexity of most companies’ operations given the type of industry to which they belong. They are subject not only to dynamic conditions, such as customer demands, processing times and returns on investment, but also to uncertainties, such as unavailability of items, changes in market conditions and shortages due to changes in demand [10].

Thus, planning problems seek to maximize profit or gain while minimizing costs and meeting market, environmental and societal constraints. For example, in supply planning problems, there is a direct relation between inventory costs and the costs associated with distribution planning, such as transportation costs and on-time delivery to customers [11]. Therefore, such problems are substantially difficult due to the amount of data they handle [12], nonlinearities and discontinuities, complex constraints, possibly conflicting objectives and uncertainty [13]. Hence, because of this computational difficulty, both different types of solvers and algorithms are used to solve these problems [14].

Given the large number of algorithms for solving replenishment [15], production [16] and distribution planning problems [17], how to effectively select an algorithm for a given task or a specific problem is an important, but also difficult issue. Peres and Castelli [18] highlight that rules which standardize the formulations of existing combinatorial optimization problems (COPs) in planning are lacking. As a result, researchers have to start building an algorithm from scratch, which limits the interoperability of this field because the algorithms in the literature must be adjusted to solve a specific problem. These authors conclude that COPs need to be consolidated for the field to reach a higher degree of maturity.

The algorithm selection problem (ASP) is an active research area in many fields, such as operations research [19,20,21] and artificial intelligence (AI) [22,23]. For many decades, researchers have developed increasingly sophisticated techniques and algorithms to solve difficult optimization problems [18]. These techniques include mathematical programming approaches, heuristics, metaheuristics, nature-inspired metaheuristics, matheuristics and various hybridizations [24]. Literature reviews illustrate this variety. Jamalnia et al. [25], who reviewed the aggregate production planning problem under uncertainty between 1970 and 2018, detailed the use of approximately 24 different techniques to solve this type of problem in 92 reviewed papers. Kumar et al. [26] presented a literature review covering the period from 2000 to 2019 of the quantitative approaches used to solve production and distribution planning problems. They found 13 different techniques and types of solvers, including CPLEX and LINGO, in 74 papers. Pereira et al. [27] analyzed the tactical sales and operations planning problem. To do so, they reviewed 103 papers with no restriction on publication year and detailed about 35 different techniques to solve this type of problem. Hussain et al. [28] conducted a literature review of the applications of metaheuristic algorithms and found 140 different metaheuristic algorithms in 1222 publications over a 33-year search period (1983 to 2016).

Different research papers have conducted experimental studies to determine the performance of an algorithm [29,30,31,32] or of several algorithms for a problem type with a collection of datasets available in the literature [33,34,35]. For example, Pan et al. [36] compared three constructive heuristics and four metaheuristics (discrete artificial bee colony, scatter search, iterated local search, iterated greedy algorithm) for the distributed permutation flowshop problem, for which they made extensive comparative evaluations based on 720 instances. However, the conclusions of such comparisons are hard to generalize because they are generally limited to a set of algorithms and to a specific problem set [24].

In practice, algorithm performance varies vastly from one problem instance to another. In many cases, heuristic [37], metaheuristic [28] and matheuristic [38] techniques involve randomization, such as the genetic algorithm, particle swarm optimization, bee swarm optimization, the bat algorithm, the artificial tribe algorithm and the firefly algorithm [39,40,41,42,43], which results in performance variability, even across repeated trials on a single problem instance [44]. Risk is an important additional feature of algorithms because the planner or the person in charge of selecting an algorithm for planning must be willing to settle for average or lower performance in exchange for a reasonable answer, or may also find a better solution than that expected in the same resolution time. This situation is often encountered in companies that attempt to maximize their profits because these problems are solved by constructing mixed strategies, i.e., strategies that meet the desired risk and return.

Nowadays, if a study demonstrates the superiority of one algorithm over other algorithms, it is tempting to expect that algorithm to be useful for other problem types for which it has not yet been tested. However, the no-free-lunch (NFL) theorems [45] imply that no single algorithm outperforms all other algorithms in all the instances of a problem [24].

Therefore, the selection of the most suitable algorithm to solve an optimization problem for replenishment, production and distribution planning is a very difficult task. Algorithm selection requires advanced knowledge of the efficiency of algorithms, the characteristics of the problem, as well as mathematical and statistical knowledge. However, having the necessary knowledge to find a solution with algorithms does not guarantee success [46].

Algorithm selection depends mainly on the expected results and the data that the company has at the time. Therefore, the properties or characteristics of the business problem must be examined. For this purpose, the linearity of the problem, the number of parameters and the characteristics that the solution supports must be analyzed.

Evaluating algorithms to solve a problem usually involves more than one criterion, such as problem type, problem knowledge, performance, computation time, the quality of the expected solution and programming knowledge. Therefore, algorithm selection can be modeled as a multicriteria decision making problem [22].

The objective of multicriteria decision making (MCDM) is to identify the most eligible alternatives from a set of alternatives based on qualitative and/or quantitative criteria with different units of measurement to select or rank them [47]. Different techniques such as AHP, ELECTRE, PROMETHEE, SAW, TOPSIS and VIKOR are used to solve MCDM problems [3]. Several studies have compared the performance of these techniques. For example, Zanakis et al. [48] compared eight MCDM techniques, including four variations of AHP, ELECTRE, TOPSIS and SAW. They concluded that the differences between techniques are affected mainly by the number of alternatives because, as the number of alternatives increases, methods tend to generate similar final rankings. Opricovic and Tzeng [49] performed a comparative analysis of the VIKOR and TOPSIS methods. Both these methods are based on an aggregation function that represents the closeness to the ideal. The study revealed that the main difference between the two methods was the type of normalization employed.

Opricovic and Tzeng [50] compared the extended VIKOR method to ELECTRE II, PROMETHEE and TOPSIS. The results showed that ELECTRE II, PROMETHEE and VIKOR gave similar results, while TOPSIS presented different results for some alternatives. Chu et al. [51] compared the VIKOR, TOPSIS and SAW methods. The study revealed that SAW and TOPSIS presented similar rankings, while VIKOR presented different results. These authors concluded that VIKOR and TOPSIS provided results that were close to reality. Ozcan et al. [52] presented a comparative analysis of the TOPSIS, ELECTRE and Grey Theory techniques for the warehouse location selection problem, where Grey Theory provided different results from TOPSIS and ELECTRE, whereas the last two obtained similar results.

In real-world problems, information may be unquantifiable, incomplete or imprecise, i.e., nondeterministic. In such situations, data can be represented in a fuzzy way by using linguistic variables to express decision makers’ preferences in complex or not well-defined situations. Imprecision in MCDM problems can be modeled using the Fuzzy Set Theory, which is used to extend different MCDM techniques. In this context, Ertuğrul and Karakaşoğlu [53] conducted a comparative study of the fuzzy AHP and fuzzy TOPSIS methods for the facility location selection problem. Both methods obtained the same results, i.e., the same rank order of alternatives.

Other studies have used an extension of the classical fuzzy set called the intuitionistic fuzzy set, as proposed by Atanassov [54]. Intuitionistic fuzzy sets have been applied in many fields, such as facility location selection [55], supplier selection [56], evaluation of project and portfolio management information systems [57,58], and personnel selection [59]. Büyüközkan and Güleryüz [60] compared the performance of ranked fuzzy TOPSIS and intuitionistic fuzzy TOPSIS and showed that the ranking of alternatives barely differed between the two approaches.

From the above comparisons, it is clear that many techniques are available for multicriteria decision making [61]. Each technique has advantages and limitations relative to the others depending on the type of problem [62].

Different MCDM techniques have been used for the classification algorithm selection problem. For example, Lamba et al. [63] used TOPSIS and VIKOR to evaluate 20 classification algorithms, and both methods obtained similar results. Peng et al. [22] used four different MCDM techniques (TOPSIS, VIKOR, PROMETHEE II and WSM) to select multiclass classification algorithms. The TOPSIS, VIKOR and PROMETHEE II methods achieved similar rankings, while WSM obtained slightly different ones. Peng et al. [64] evaluated ranking algorithms for financial risk prediction purposes. Using TOPSIS, PROMETHEE and VIKOR, they obtained similar results for the three main ranking algorithms. They concluded that the techniques followed were advantageous for choosing a classification algorithm.

Along these lines, TOPSIS stands out as a widely used technique that is efficient for selecting classification algorithms. It has been successful in different areas such as supply chain and logistics management, environment and energy management, health and safety management, business and marketing management, engineering and manufacturing, human resource management and transportation management [47,65,66,67] and, according to Chu et al. [51], is able to represent reality. It is also useful for companies because it can be run with a spreadsheet [68]. For all these reasons, and given that the choice of a solution method is subject to vagueness and uncertainty, we use the fuzzy TOPSIS method.

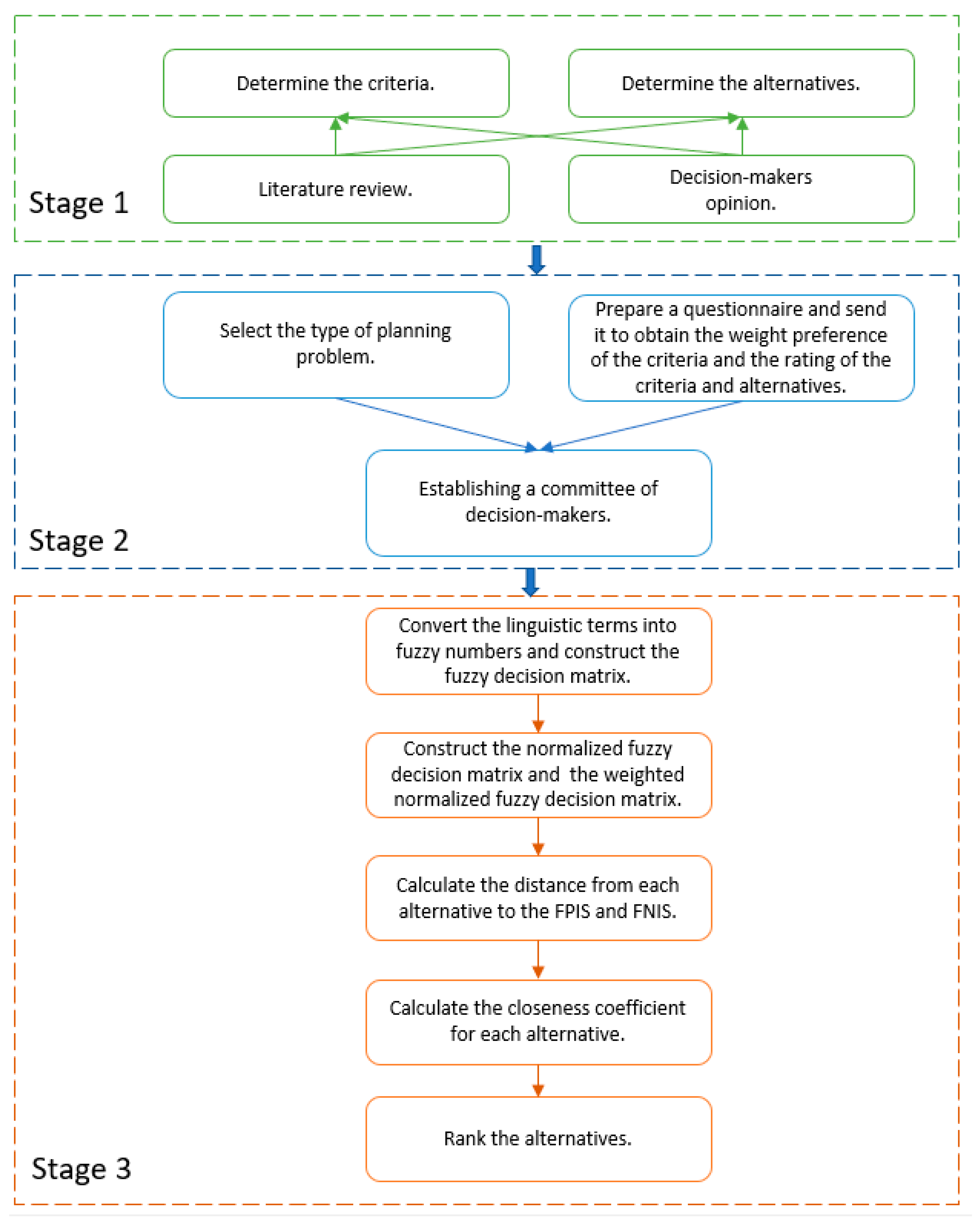

In this context, the present paper aims to answer this question: which solution method is suitable for a replenishment, production and distribution planning problem given a portfolio of algorithms or solvers?

To answer this question, and taking into account that no research to date has analyzed the selection of algorithms for planning with a multicriteria decision method, a decision-making tool based on fuzzy TOPSIS to select algorithms for a planning problem is presented. To validate the proposed tool, an illustrative example is presented, which has been validated by four different manufacturing companies.

This paper is organized as follows. Section 2 deals with the literature review. The adopted methodology is shown in Section 3, and the numerical application of the methodology is presented in Section 4. The sensitivity analysis of the results is provided in Section 5. Finally, Section 6 includes the conclusions and future research lines.

2. Algorithm Selection Problem Literature Review

Algorithm selection has been widely addressed by the scientific community in both the mathematics [69,70] and artificial intelligence (AI) [71,72] areas. In the mathematical area, Stützle and Fernandes [73] report how the performance of metaheuristics depends on the characteristics of problem instances. Therefore, it is necessary to explore the relation between algorithms and instances. In the AI area, different models have been developed to predict which algorithm is the best one for a problem instance by analyzing the relation between the characteristics of an instance and algorithm performance on a set of training data. In this way, given an algorithm portfolio, it is possible to predict which algorithm is most likely to work well on a new problem instance [74].

Growing interest has been shown in the ASP as a way to put previously developed algorithms to best use to solve a specific problem instead of developing new ones [75]. According to Leyton-Brown et al. [76], although some algorithms are better than others on average, there is rarely a best algorithm for a given problem. Instead, “it is often the case that different algorithms perform well on different problem instances. This phenomenon is most pronounced among algorithms for solving NP-Hard problems, because runtimes for these algorithms are often highly variable from instance to instance”. In this context, Rice [77] proposed the first description of methodologies to select algorithms. Kotthoff [75] states that the “task of algorithm selection involves choosing an algorithm from a set of algorithms on a per-instance basis in order to exploit the varying performance of algorithms over a set of instances”.

In this regard, algorithm selection approaches have been successfully applied in different problem domains [78]. Table 1 summarizes a literature review of the various papers that have approached the ASP from different perspectives.

In manufacturing environments, formulations are usually very complex [78] because they present a variety of specific constraints related to the company’s scope. Generally, these formulations can serve as blocks or subproblems for other formulations of other specific manufacturing environments. In this way, many formulations or algorithms can obtain similar results to those previously proposed. When selecting a formulation or algorithm, tuning the parameters of the different techniques is a very demanding task because each algorithm has different characteristics, and the number of parameter tuning runs needed across different instances of a problem can grow exponentially when performing a comparison [33]. Furthermore, to compare algorithms and select one, the feature set of the instances must be taken into account because the characterization of instances determines a solution approach’s performance. In practice, the information needed to establish the characteristics is not always available [89], and experimental results may show that there is no single best or worst algorithm for all problem instances [46]. In this context, and as shown in Table 1, several approaches have been proposed to address the algorithm selection challenge, including heuristic algorithms, metaheuristics, hybrid metaheuristics, hyperheuristics and machine-learning techniques. Many of these approaches share common elements, such as using a set of instances to learn, and measuring or predicting algorithm performance to identify the best algorithm. The success of algorithm selection approaches in some problem domains has motivated us to develop a decision making tool to support company planners in selecting a solution method (an algorithm or a solver) for replenishment, production and distribution planning problems.

3. Solution Methodology

For combinatorial optimization problems with discrete decision variables, such as scheduling, sequencing, distribution and transportation planning problems, exhaustively exploring the search space is not a realistic option even though the search space is finite. The literature includes several heuristic, metaheuristic and matheuristic algorithms, as well as tests with commercial and non-commercial high-performance solvers to solve such problems. So, this question arises: which algorithm should be chosen for a given combinatorial optimization problem?

Generally, one way of finding an algorithm to solve a combinatorial optimization problem is to exhaustively run all the available algorithms and choose the best solution [90]. However, this method requires substantial computational resources, and companies have limited computational, programming and mathematical resources, which makes it impractical to test all the algorithms or to use several solvers on one or several instances of a specific problem. Weise et al. [12] emphasize that a variety of methods solve different types of problems with acceptable performance, but they can be outperformed by very specialized methods.

Weise et al. [12] consider that no optimization method outperforms all others, which is consistent with the NFL theorem [45]. Roughly speaking, this theorem states that, when performance is averaged over all possible problems, no optimization algorithm outperforms any other.

In turn, the same authors mention that the efficiency of an optimization algorithm is based on knowledge of a problem. Radcliffe [91] emphasizes that an algorithm’s performance will improve with adequate knowledge of the problem. However, knowledge of one type of problem can be misleading for another type of problem [89] because no algorithm outperforms others in all instances of a problem. Therefore, an algorithm’s performance must be assessed on the basis of experience and empirical results.

Algorithm selection schemes are based mainly on approaches that either run a sequence of algorithms within a limited execution time [80,82] or predict the performance of each algorithm for a given instance and select the algorithm with the best predicted performance [92].
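The second family of schemes, prediction-based selection, can be illustrated with a minimal sketch. It assumes that each previously solved instance is described by a numeric feature vector and by the observed cost of each algorithm on it, and it predicts costs on a new instance from its nearest solved neighbor. The function name, the feature layout and the nearest-neighbor predictor are hypothetical choices made for illustration and are not taken from the cited works.

```python
from math import dist


def select_algorithm(instance_features, history):
    """Pick an algorithm for a new instance by 1-nearest-neighbor prediction.

    history is a list of (features, costs) pairs from already solved
    instances, where costs maps an algorithm name to its observed cost
    (lower is better). Both structures are illustrative assumptions.
    """
    # Find the solved instance whose features are closest (Euclidean).
    _, nearest_costs = min(
        history, key=lambda record: dist(record[0], instance_features)
    )
    # Predicted cost of each algorithm = its cost on that neighbor.
    return min(nearest_costs, key=nearest_costs.get)
```

In practice, the predictor would be replaced by a model trained on many instances, and the features would need scaling before distances are computed.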

Real-world planning problems are subject to inaccuracies and uncertainties, conflicts between constraints and objectives, discontinuities and nonlinearities [13]. Therefore, determining which algorithm is appropriate poses a challenge that can be analyzed using a multicriteria decision technique for ranking and prioritizing algorithms because algorithm selection involves multiple decisions that require the simultaneous assessment of the various advantages and disadvantages.

In most companies, the complexity of operations has several components that must be addressed at the same time. Evaluating an algorithm to solve a problem often involves more than one criterion, such as problem type, problem knowledge, performance, computation time, the quality of the expected solution and programming knowledge.

MCDM techniques integrate different criteria and an order of preference to evaluate and select the optimal option among multiple alternatives based on the expected outcome. The objective of these techniques is to obtain an ideal solution to a problem in which decision makers’ experience does not allow them to decide among the various considered parameters. As a result, a ranking is obtained according to the selected criteria, their respective values and the assigned weights [93].

There are many criteria in real-life problems that can directly or indirectly affect the outcome of different decisions. Decision making often involves inaccuracies and vagueness that can be effectively dealt with using fuzzy sets. This method is especially important for clarifying decisions that are difficult to quantify or compare, especially if decision makers have different perspectives, as in this study. Therefore, we herein adopt the fuzzy TOPSIS methodology to model an algorithm or solver selection given a solution methods portfolio to solve replenishment, production and distribution planning problems.

Fuzzy Set Theory was introduced by Zadeh [94] to handle the ambiguity and uncertainty of human thought and reasoning. In decision making problems, it allows linguistic terms to be used to represent decision makers’ choices.

The TOPSIS method was originally proposed by Hwang and Yoon [95]. It is based on choosing the alternative with the shortest distance to the positive ideal solution (PIS) and the farthest distance from the negative ideal solution (NIS). The main limitation of this technique is that it cannot capture ambiguity in the decision making process [96]. To overcome this limitation, Chen [97] developed the fuzzy TOPSIS method to quantitatively evaluate the score of different alternatives by assigning weights to the different criteria described with linguistic variables. This section briefly describes the Fuzzy Set Theory and the fuzzy TOPSIS method employed.

3.1. Fuzzy Set Theory and Fuzzy Numbers

Fuzzy Set Theory [94,98,99] is combined with the TOPSIS method through the degree of membership of elements in fuzzy sets. A fuzzy set is characterized by a membership function, which can take different forms, e.g., triangular, sigmoid or trapezoidal. The membership function assigns to each object a degree of membership between 0 and 1 according to its relevance. A fuzzy set is denoted by placing a tilde ‘∼’ over its symbol [68].

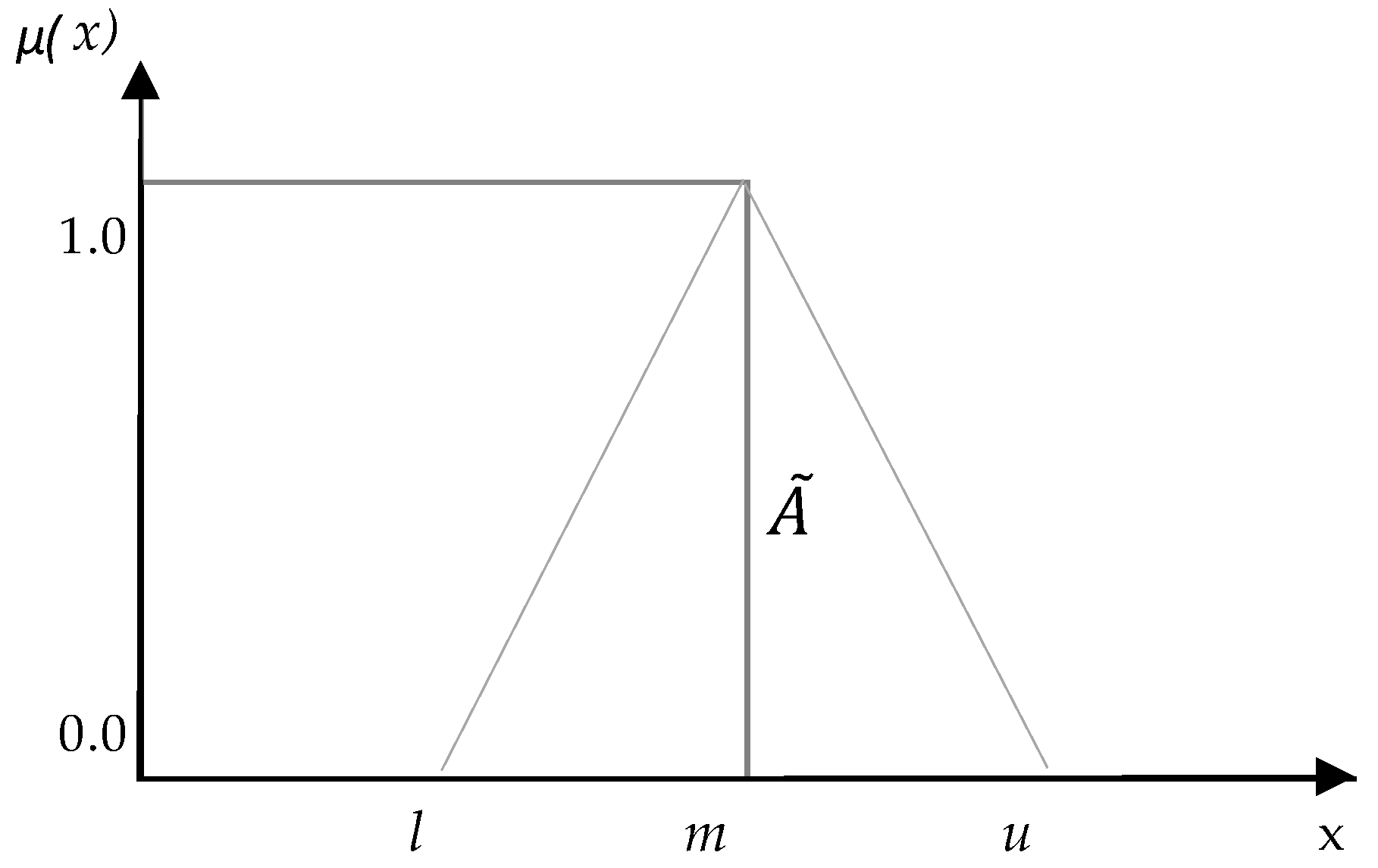

For our study, we consider a triangular fuzzy number $\tilde{a}$, which is denoted by its vertices ($l$, $m$, $u$), as shown in Figure 1. Triangular fuzzy numbers are used to capture the vagueness of decision makers’ linguistic evaluations, where $l$, $m$ and $u$ denote the lower bound, the crisp central value and the upper bound, respectively.

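The membership function $\mu_{\tilde{a}}(x)$ of triangular fuzzy number $\tilde{a} = (l, m, u)$ is defined as:

$$
\mu_{\tilde{a}}(x) =
\begin{cases}
\dfrac{x - l}{m - l}, & l \le x \le m \\
\dfrac{u - x}{u - m}, & m \le x \le u \\
0, & \text{otherwise}
\end{cases}
$$

Let $\tilde{a}$ and $\tilde{b}$ be two triangular fuzzy numbers, each described by its vertices ($l$, $m$, $u$). Then, the basic operational laws for triangular fuzzy numbers are defined as: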
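For positive triangular fuzzy numbers $\tilde{a} = (l_1, m_1, u_1)$ and $\tilde{b} = (l_2, m_2, u_2)$ and a positive scalar $k$, these laws are commonly stated as below. This is a sketch of the standard fuzzy arithmetic used with fuzzy TOPSIS, not necessarily the exact set of laws displayed in the original equations. Multiplication and division are the usual vertex-wise approximations, and the subscripted vertex names are notation assumed here.

$$
\begin{aligned}
\tilde{a} \oplus \tilde{b} &= (l_1 + l_2,\; m_1 + m_2,\; u_1 + u_2) \\
\tilde{a} \ominus \tilde{b} &= (l_1 - u_2,\; m_1 - m_2,\; u_1 - l_2) \\
\tilde{a} \otimes \tilde{b} &\approx (l_1 l_2,\; m_1 m_2,\; u_1 u_2) \\
\tilde{a} \oslash \tilde{b} &\approx (l_1 / u_2,\; m_1 / m_2,\; u_1 / l_2) \\
k \otimes \tilde{a} &= (k\,l_1,\; k\,m_1,\; k\,u_1)
\end{aligned}
$$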

The vertex method is used to calculate the distance between two triangular fuzzy numbers $\tilde{a} = (l_1, m_1, u_1)$ and $\tilde{b} = (l_2, m_2, u_2)$ (Equation (8)):

$$
d(\tilde{a}, \tilde{b}) = \sqrt{\frac{1}{3}\left[(l_1 - l_2)^2 + (m_1 - m_2)^2 + (u_1 - u_2)^2\right]} \tag{8}
$$

If $\tilde{a}$ and $\tilde{b}$ are real numbers, this distance measure is identical to the Euclidean distance. Although there are several ways of measuring distances between fuzzy numbers [100], the vertex method is simple and efficient [97,101].
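The definitions in this subsection can be made concrete with a short sketch. It represents a triangular fuzzy number by its three vertices and implements the membership function, vertex-wise addition and multiplication, and the vertex distance. The class name `TFN` and the function `vertex_distance` are hypothetical helpers introduced for illustration, and the multiplication is the usual approximation for positive fuzzy numbers.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass(frozen=True)
class TFN:
    """Triangular fuzzy number with vertices l <= m <= u (illustrative helper)."""
    l: float
    m: float
    u: float

    def membership(self, x: float) -> float:
        """Degree of membership of the crisp value x, in [0, 1]."""
        if self.l < x < self.m:
            return (x - self.l) / (self.m - self.l)
        if x == self.m:
            return 1.0
        if self.m < x < self.u:
            return (self.u - x) / (self.u - self.m)
        return 0.0

    def __add__(self, other: "TFN") -> "TFN":
        # Vertex-wise addition.
        return TFN(self.l + other.l, self.m + other.m, self.u + other.u)

    def __mul__(self, other: "TFN") -> "TFN":
        # Vertex-wise approximation, valid for positive fuzzy numbers.
        return TFN(self.l * other.l, self.m * other.m, self.u * other.u)


def vertex_distance(a: TFN, b: TFN) -> float:
    """Vertex-method distance between two triangular fuzzy numbers."""
    return sqrt(((a.l - b.l) ** 2 + (a.m - b.m) ** 2 + (a.u - b.u) ** 2) / 3.0)
```

For crisp inputs such as `TFN(2, 2, 2)` and `TFN(5, 5, 5)`, the distance reduces to the absolute difference of the two values, which matches the Euclidean case described above.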

3.2. The Fuzzy TOPSIS Method

The main idea of fuzzy TOPSIS is to define the fuzzy positive ideal solution (FPIS) and the fuzzy negative ideal solution (FNIS). The chosen alternative should have the shortest distance to the FPIS and the farthest distance to the FNIS. TOPSIS follows a systematic process that seeks to express the logic of human choice [102]. The basic steps of the fuzzy TOPSIS method are as follows (see [97,103,104]):

Step 1. Consider a set of $K$ decision makers ($D_1, D_2, \ldots, D_K$), $m$ alternatives ($A_1, A_2, \ldots, A_m$) and $n$ criteria ($C_1, C_2, \ldots, C_n$), for which the decision matrix is established:
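With $m$ alternatives as rows and $n$ criteria as columns, the fuzzy decision matrix and the vector of criteria weights take the following general form in Chen's formulation [97]. The symbols $\tilde{D}$, $\tilde{x}_{ij}$ and $\tilde{W}$ are the conventional ones and are assumed here, since the original display is not available.

$$
\tilde{D} =
\begin{bmatrix}
\tilde{x}_{11} & \tilde{x}_{12} & \cdots & \tilde{x}_{1n} \\
\tilde{x}_{21} & \tilde{x}_{22} & \cdots & \tilde{x}_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
\tilde{x}_{m1} & \tilde{x}_{m2} & \cdots & \tilde{x}_{mn}
\end{bmatrix},
\qquad
\tilde{W} = [\tilde{w}_1, \tilde{w}_2, \ldots, \tilde{w}_n]
$$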

Considering that the perception of algorithms and solvers varies according to knowledge of and experience with algorithms for planning, the average value method is applied, where $\tilde{x}_{ij}^{k}$ is the rating of alternative $A_i$ in relation to criterion $C_j$ evaluated by the $k$-th decision maker (Equation (10)). The weights of criteria are aggregated using Equation (11), where $\tilde{w}_{j}^{k}$ describes the weight of criterion $C_j$ according to decision maker $D_k$.

Step 2. Normalize the fuzzy decision matrix. The decision matrix $\tilde{D}$ with $m$ alternatives and $n$ criteria is normalized to eliminate inconsistencies caused by different units of measurement or scales and to keep the normalized triangular fuzzy numbers within a common range. $\tilde{R}$ represents the normalized decision matrix (Equation (12)). The normalization process is performed by Equations (13) and (14), where $B$ and $C$ represent the sets of benefit and cost criteria, respectively.
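With $K$ decision makers, the aggregation and normalization referenced as Equations (10) to (14) are usually written as follows. This is a reconstruction that follows Chen [97] and writes each aggregated rating as $\tilde{x}_{ij} = (l_{ij}, m_{ij}, u_{ij})$. The vertex names and the symbols $u_j^{+}$ and $l_j^{-}$ are notational assumptions.

$$
\tilde{x}_{ij} = \frac{1}{K}\left[\tilde{x}_{ij}^{1} \oplus \tilde{x}_{ij}^{2} \oplus \cdots \oplus \tilde{x}_{ij}^{K}\right],
\qquad
\tilde{w}_{j} = \frac{1}{K}\left[\tilde{w}_{j}^{1} \oplus \tilde{w}_{j}^{2} \oplus \cdots \oplus \tilde{w}_{j}^{K}\right]
$$

$$
\tilde{R} = [\tilde{r}_{ij}]_{m \times n}, \qquad i = 1, \ldots, m, \quad j = 1, \ldots, n
$$

$$
\tilde{r}_{ij} = \left(\frac{l_{ij}}{u_j^{+}},\; \frac{m_{ij}}{u_j^{+}},\; \frac{u_{ij}}{u_j^{+}}\right),
\qquad u_j^{+} = \max_i u_{ij}, \qquad j \in B
$$

$$
\tilde{r}_{ij} = \left(\frac{l_j^{-}}{u_{ij}},\; \frac{l_j^{-}}{m_{ij}},\; \frac{l_j^{-}}{l_{ij}}\right),
\qquad l_j^{-} = \min_i l_{ij}, \qquad j \in C
$$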

Step 3. Construct the weighted normalized fuzzy decision matrix $\tilde{V}$ (Equation (15)). $\tilde{V}$ is obtained by multiplying the weights of criteria $\tilde{w}_j$ by the values of the normalized fuzzy decision matrix $\tilde{r}_{ij}$:
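In Chen's formulation [97], the weighted normalized matrix and its element-wise product are commonly written as below. The symbols $\tilde{V}$, $\tilde{v}_{ij}$, $\tilde{r}_{ij}$ and $\tilde{w}_j$ follow the conventional notation and are assumed here.

$$
\tilde{V} = [\tilde{v}_{ij}]_{m \times n}, \qquad \tilde{v}_{ij} = \tilde{r}_{ij} \otimes \tilde{w}_j, \qquad i = 1, \ldots, m, \quad j = 1, \ldots, n
$$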

Step 4. Obtain the FPIS ($A^{+}$) and the FNIS ($A^{-}$), as shown in Equations (17) and (18), respectively. The ideal solutions can be defined according to Chen [97] as:
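In Chen's formulation [97], where criteria weights are also expressed on a $[0, 1]$ scale, the weighted normalized values lie in $[0, 1]$, so the ideal solutions are fixed reference points, as sketched below. Some later applications instead take the largest and smallest observed values per criterion. Which variant the original Equations (17) and (18) display is an assumption here.

$$
A^{+} = (\tilde{v}_1^{+}, \tilde{v}_2^{+}, \ldots, \tilde{v}_n^{+}), \qquad \tilde{v}_j^{+} = (1, 1, 1)
$$

$$
A^{-} = (\tilde{v}_1^{-}, \tilde{v}_2^{-}, \ldots, \tilde{v}_n^{-}), \qquad \tilde{v}_j^{-} = (0, 0, 0)
$$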

Step 5. Calculate the distances for each alternative, where $d_i^{+}$ indicates the distance from the scores of alternative $A_i$ to the FPIS (Equation (19)), $d_i^{-}$ denotes the distance from the scores of alternative $A_i$ to the FNIS (Equation (20)), and $d(\cdot, \cdot)$ represents the distance between two fuzzy numbers.
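Using the vertex distance $d(\cdot, \cdot)$ between two fuzzy numbers, the two distances are usually written as sums over the $n$ criteria, as in Chen [97]. The symbols $\tilde{v}_{ij}$, $\tilde{v}_j^{+}$ and $\tilde{v}_j^{-}$ denote the weighted normalized values and the components of the FPIS and FNIS, and are notation assumed here.

$$
d_i^{+} = \sum_{j=1}^{n} d\left(\tilde{v}_{ij}, \tilde{v}_j^{+}\right),
\qquad
d_i^{-} = \sum_{j=1}^{n} d\left(\tilde{v}_{ij}, \tilde{v}_j^{-}\right),
\qquad i = 1, \ldots, m
$$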

Step 6. Determine the proximity coefficient $CC_i$, which is used to rank all the alternatives $A_i$ according to their overall performance. The proximity coefficient is calculated as shown in Equation (21).
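In the standard formulation [97], the proximity coefficient relates the distance to the FNIS to the total distance, so that it lies between 0 and 1. The symbols $d_i^{+}$ and $d_i^{-}$ denote the distances of alternative $A_i$ to the FPIS and FNIS.

$$
CC_i = \frac{d_i^{-}}{d_i^{+} + d_i^{-}}, \qquad i = 1, \ldots, m
$$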

Step 7. Rank alternatives $A_i$ in decreasing order of their $CC_i$ values. A $CC_i$ value close to 1 indicates better overall performance because alternative $A_i$ is closer to the FPIS and farther away from the FNIS. Having obtained the ranking order, decision makers select the most suitable alternative $A_i$.
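The seven steps above can be sketched end to end in a few lines. The sketch below is a minimal illustration and not the authors' implementation. It assumes that triangular fuzzy numbers are plain `(l, m, u)` tuples with positive vertices, that ratings and weights are already converted from linguistic terms, and that the ideal solutions are Chen's fixed points (1, 1, 1) and (0, 0, 0). The function names `average`, `vertex_distance`, `fuzzy_topsis` and `rank` are hypothetical.

```python
from math import sqrt


def average(tfns):
    """Average value method: vertex-wise mean of several (l, m, u) tuples."""
    k = len(tfns)
    return tuple(sum(t[i] for t in tfns) / k for i in range(3))


def vertex_distance(a, b):
    """Vertex-method distance between two triangular fuzzy numbers."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / 3.0)


def fuzzy_topsis(ratings, weights, benefit):
    """Return the proximity coefficient CC_i of every alternative.

    ratings[k][i][j]: rating by decision maker k of alternative i on criterion j.
    weights[k][j]:    weight given by decision maker k to criterion j.
    benefit[j]:       True for a benefit criterion, False for a cost criterion.
    """
    K, m, n = len(ratings), len(ratings[0]), len(benefit)
    # Step 1: aggregate ratings and weights over the decision makers.
    x = [[average([ratings[k][i][j] for k in range(K)]) for j in range(n)]
         for i in range(m)]
    w = [average([weights[k][j] for k in range(K)]) for j in range(n)]
    # Step 2: normalize each criterion column (benefit or cost rule).
    r = [[None] * n for _ in range(m)]
    for j in range(n):
        if benefit[j]:
            u_max = max(x[i][j][2] for i in range(m))
            for i in range(m):
                r[i][j] = tuple(v / u_max for v in x[i][j])
        else:
            l_min = min(x[i][j][0] for i in range(m))
            for i in range(m):
                low, mid, up = x[i][j]  # vertices must be positive here
                r[i][j] = (l_min / up, l_min / mid, l_min / low)
    # Step 3: weighted normalized matrix (vertex-wise product).
    v = [[tuple(a * b for a, b in zip(r[i][j], w[j])) for j in range(n)]
         for i in range(m)]
    # Step 4: ideal solutions (assumption: Chen's fixed reference points).
    fpis, fnis = (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)
    # Steps 5 and 6: distances to both ideals and proximity coefficient.
    cc = []
    for i in range(m):
        d_plus = sum(vertex_distance(v[i][j], fpis) for j in range(n))
        d_minus = sum(vertex_distance(v[i][j], fnis) for j in range(n))
        cc.append(d_minus / (d_plus + d_minus))
    return cc


def rank(cc):
    """Step 7: indices of the alternatives in decreasing order of CC_i."""
    return sorted(range(len(cc)), key=lambda i: cc[i], reverse=True)
```

A call such as `rank(fuzzy_topsis(ratings, weights, benefit))` returns the alternative indices from best to worst. Keeping the fuzzy numbers as plain tuples keeps the sketch close to a spreadsheet implementation, where each vertex occupies its own column.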

6. Conclusions

The complexity of real-world problems should be seen not only as an obstacle, but also as a research challenge for effective solutions for large-scale planning problems. Relatively small companies often face very complex problems.

It is usually very difficult for production planners in companies to determine or choose an algorithm. The algorithm selection process normally involves the experimental evaluation of several algorithms with different dataset sizes. However, these sets of experiments require considerable computational resources and long processing times. This adds to the disadvantage of having fewer resources to invest in commercial solvers. In addition, efforts often have to be duplicated when attempting to replicate the algorithms or models available in the literature.

To overcome these drawbacks, the methodological approach based on fuzzy TOPSIS proposed herein is intended as a support tool to select a solution method for replenishment, production and distribution planning problems. To this end, 13 different criteria were defined and used to evaluate nine different algorithm types (heuristic, metaheuristic and matheuristic) and four solvers (commercial and non-commercial) that are often employed in planning problems. All these criteria address several important dimensions when solving a planning problem. These dimensions are related to the computational difficulty of the planning problem, programming skills, mathematical skills, algorithmic skills, mathematical modeling software skills, and also to the expected computational performance of the solution methods. These criteria were analyzed based on the linguistic values given by four planning experts from different manufacturing companies. The problem selected to apply the proposed approach was that of production planning. For this problem, the results of the methodology showed that the genetic algorithm (GA) was the best alternative, while Benders’ decomposition was the worst. Given our study results, it can be concluded that it is possible to select a set of suitable candidate algorithms for solving optimization problems with the proposed approach. In this way, not only can one algorithm be selected, but so can other algorithms that provide similar solutions. The results of this methodology can guide companies in choosing whether to use a commercial or non-commercial algorithm or solver. This can help companies to determine whether they should invest in a solver or use mathematical modeling or algorithm programming software and, at the same time, to understand planning staff’s training needs.

There are different approaches for algorithm selection [44,70,75,79]. These approaches are based on heuristics, metaheuristics and AI, and they offer benefits and disadvantages. However, these techniques can be restrictive for companies because they involve a large number of computational resources and experiments that can be affected by accuracy, the number of tested instances, instance generation, consistency, AI techniques and training time. The proposed approach requires very few resources, is very useful thanks to its simplicity and is easily replicable. The main limitation of this technique is the appropriate selection of criteria and the balance between them, which is a subjective issue that requires experts in the planning problems field, not to mention the personal bias of experts’ opinions.

Future research could test the proposed approach with the portfolio of algorithms and solvers defined in [107], where some 50 algorithms are identified, including optimizing, heuristic, metaheuristic and matheuristic algorithms, as well as different types of commercial solvers. Alternatives and criteria could be evaluated with more decision makers. Other MCDM techniques could also be used, such as ELECTRE, PROMETHEE, intuitionistic fuzzy TOPSIS, or novel methods such as the performance calculation technique of the integrated multiple multi-attribute decision making (PCIM-MADM) [120], which incorporates four techniques (COPRAS, GRA, SAW and VIKOR) into a single final classification index.