Abstract

Industries are constantly seeking ways to avoid corrective maintenance in order to reduce costs. Performing regular scheduled maintenance can help to mitigate this problem, but not necessarily in the most efficient way. In many real-life applications, one wants to predict the future failure time of equipment or devices that are expensive, or that have long lifetimes, to save costs and/or time. In this paper, statistical prediction is studied using the classical and Bayesian approaches based on a unified hybrid censoring scheme. Two prediction schemes are used: (1) a one-sample prediction scheme that predicts the unobserved future failure times of devices that did not complete the lifetime experiments; and (2) a two-sample prediction scheme that predicts the ordered values of a future independent sample based on past data from a certain distribution. The results are applied to the Burr-X model, owing to the importance of this model in many fields, such as engineering, health, agriculture, and biology. Point and interval predictors of unobserved failure times under one- and two-sample prediction schemes are computed based on simulated data sets and two engineering applications. The results demonstrate that the future failure of equipment can be predicted by statistical prediction based on data collected from an engineering system.

Keywords:

Burr-X distribution; maximum likelihood prediction; Bayesian prediction; one and two-sample prediction schemes; unified hybrid censoring; Gibbs sampler and Metropolis–Hastings algorithm

MSC:

62F10; 62F15; 62N01; 62N02

1. Introduction

Industries are constantly seeking ways to avoid corrective maintenance in order to reduce costs. Performing regular scheduled maintenance can help to mitigate this problem, but not necessarily in the most efficient way; see [1,2,3]. In condition-based maintenance, the main goal is to treat and transform data from an engineering system so that they can be used to build a data set for making statistical predictions about how the equipment will behave in the future and when it will break down.

In many practical situations, one desires to predict future observations from the same population as previously observed data. This may be done by constructing an interval that will include future observations with a certain probability.

The accuracy of a predictive interval depends on the sample size. However, testing all units until failure is impractical, because advances in industrial design and technology have produced very reliable products with long lifespans. Censoring is therefore used, for a variety of reasons that include a lack of available resources and the need to save costs. In general, only a small percentage of failure times are recorded when a censoring scheme (CS) is applied in a test environment.

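Let $X_{1:n} < X_{2:n} < \cdots < X_{n:n}$ be the ordered failure times of $n$ identical units placed on a life-test, from a certain distribution with probability density function (PDF) $f(x;\boldsymbol{\theta})$ and cumulative distribution function (CDF) $F(x;\boldsymbol{\theta})$, where $\boldsymbol{\theta}$ is the vector of parameters and $x > 0$. For fixed integers $k, r \in \{1, \dots, n\}$ and time points $T_1, T_2 \in (0, \infty)$ with $k < r$ and $T_1 < T_2$, and depending on the relation between $X_{k:n}$, $X_{r:n}$, $T_1$ and $T_2$, a unified hybrid censoring scheme (UHCS) is defined by Balakrishnan et al. [16] with six decisions, as follows:

- (1) Stopping the experiment at $T_1$ if $0 < X_{k:n} < X_{r:n} < T_1 < T_2$;

- (2) Stopping the experiment at $X_{r:n}$ if $0 < X_{k:n} < T_1 < X_{r:n} < T_2$;

- (3) Stopping the experiment at $T_2$ if $0 < X_{k:n} < T_1 < T_2 < X_{r:n}$;

- (4) Stopping the experiment at $X_{r:n}$ if $0 < T_1 < X_{k:n} < X_{r:n} < T_2$;

- (5) Stopping the experiment at $T_2$ if $0 < T_1 < X_{k:n} < T_2 < X_{r:n}$;

- (6) Stopping the experiment at $X_{k:n}$ if $0 < T_1 < T_2 < X_{k:n} < X_{r:n}$.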
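The six decisions can be sketched in code as follows. This is a minimal illustration rather than the authors' implementation: the function name `uhcs_stop` and its argument names are hypothetical, and the complete ordered sample is passed in only so that the rule can be demonstrated (in a real test, failures after the stopping time are never observed).

```python
from bisect import bisect_right


def uhcs_stop(x, k, r, t1, t2):
    """Classify a complete ordered sample under the six-case stopping rule.

    x      : sorted failure times of all n units (illustration only)
    k, r   : integers with 1 <= k < r <= n
    t1, t2 : time thresholds with 0 < t1 < t2
    Returns (case, stop_time, d), where d counts failures up to stop_time.
    """
    if not (1 <= k < r <= len(x)) or not (0 < t1 < t2):
        raise ValueError("need 1 <= k < r <= n and 0 < t1 < t2")
    xk, xr = x[k - 1], x[r - 1]
    if xk < t1:
        if xr < t1:
            case, stop = 1, t1
        elif xr < t2:
            case, stop = 2, xr
        else:
            case, stop = 3, t2
    elif xk < t2:
        case, stop = (4, xr) if xr < t2 else (5, t2)
    else:
        case, stop = 6, xk
    d = bisect_right(x, stop)  # number of failures observed by stop_time
    return case, stop, d


# Hypothetical ordered failure times, used only to exercise the rule.
sample = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
print(uhcs_stop(sample, 2, 4, 2.2, 3.0))  # (1, 2.2, 4)
```

Whatever the case, at least k failures are observed by the stopping time, which is the property that motivates the scheme.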

Let $D_j$ denote the number of failures until time $T_j$, $j = 1, 2$. Then, the likelihood function (LF) of this censored sample is as follows:
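For orientation, a likelihood of this type is usually written in the standard right-censored form below. This is a sketch under assumed notation rather than a reproduction of Equation (1): $D$ denotes the number of failures observed before the experiment stops, $T^{*}$ the stopping time, and $R = 1 - F$ the reliability function, with the pair $(D, T^{*})$ fixed by whichever of the six cases occurs.

```latex
L(\boldsymbol{\theta};\mathbf{x})
  = \frac{n!}{(n-D)!}
    \prod_{i=1}^{D} f\!\left(x_{i:n};\boldsymbol{\theta}\right)
    \left[R\!\left(T^{*};\boldsymbol{\theta}\right)\right]^{\,n-D}
```

For instance, when the experiment stops at the first time threshold, $D$ is the number of failures before that threshold and $T^{*}$ is the threshold itself; when it stops at an observed failure, $T^{*}$ is that failure time.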

Many well-known censoring schemes can be considered as special cases of the studied UHCS for particular choices of the integers and time thresholds that define it, such as the generalized type-I HCS and the generalized type-II HCS (see [4]), the type-I HCS and the type-II HCS (see [5]), and type-I censoring and type-II censoring (see [6]).

One advantage of the UHCS is that it is more flexible than the generalized type-I and generalized type-II HCSs; moreover, it guarantees more observations, which increases the accuracy of the predictive intervals.

The Burr-X distribution was proposed in [7] as a member of the Burr family of distributions. This model is widely used in statistics and operations research, with applications in fields such as engineering, health, agriculture, and biology.

A random variable X is said to have a Burr-X distribution with a vector of parameters if its PDF is given by

The corresponding CDF and reliability function (RF) are given, respectively, as:

For more details about some Burr models with related inferences using classical and Bayesian approaches, see [8,9,10,11,12,13,14,15,16,17,18].
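As a small illustration of the three functions referred to above, the following sketch codes a PDF, CDF, RF and quantile function for a Burr-X model. The parameterization is an assumption made for this example, namely the common two-parameter form $F(x) = (1 - e^{-(\lambda x)^2})^{\alpha}$ with shape `alpha` and scale `lam`; the symbols and function names are hypothetical and may differ from those used in Equations (2)–(4).

```python
import math


def burrx_cdf(x, alpha, lam):
    """Assumed CDF: F(x) = (1 - exp(-(lam*x)^2))^alpha for x > 0."""
    if x <= 0:
        return 0.0
    return (-math.expm1(-(lam * x) ** 2)) ** alpha


def burrx_pdf(x, alpha, lam):
    """Derivative of the assumed CDF with respect to x."""
    if x <= 0:
        return 0.0
    z = (lam * x) ** 2
    return (2.0 * alpha * lam ** 2 * x * math.exp(-z)
            * (-math.expm1(-z)) ** (alpha - 1.0))


def burrx_rf(x, alpha, lam):
    """Reliability function R(x) = 1 - F(x)."""
    return 1.0 - burrx_cdf(x, alpha, lam)


def burrx_quantile(p, alpha, lam):
    """Inverse of the assumed CDF for 0 < p < 1 (useful for simulation)."""
    return math.sqrt(-math.log1p(-(p ** (1.0 / alpha)))) / lam
```

The quantile function gives a direct way to simulate failure times by inverse-CDF sampling, which is convenient when generating censored samples.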

The main contributions of this paper are: studying the prediction problem under a UHCS using the classical and Bayesian approaches, comparing the two approaches, and analyzing two real engineering data sets using the Burr-X distribution, to which the obtained results are applied as illustrative examples.

This paper is organized as follows: the point and interval prediction problems under the one- and two-sample prediction schemes are studied using the classical and Bayesian approaches in Section 2 and Section 3, respectively. In Section 4, the obtained results are applied to simulated and real data sets. Our conclusions are summarized in Section 5.

2. One-Sample Prediction

Assume that n items are placed in a life-time experiment, that the experiment is terminated at a certain time, and that the number of failures observed up to this time is D. The observed ordered failure times $x_{1:n} < x_{2:n} < \cdots < x_{D:n}$, written for simplicity as $\mathbf{x} = (x_1, \dots, x_D)$, are called the informative sample. In Balakrishnan's UHCS, the termination time equals $T_1$ in the first case, $x_{r:n}$ in the second case, $T_2$ in the third case, $x_{r:n}$ in the fourth case, $T_2$ in the fifth case and $x_{k:n}$ in the sixth case. Moreover, D equals $D_1$ in the first case, r in the second case, $D_2$ in the third case, r in the fourth case, $D_2$ in the fifth case, and k in the sixth case. In the one-sample prediction scheme, the future failure time $x_{s:n}$, $s = D+1, \dots, n$, is predicted based on the informative sample.

In this section, the point predictors (PPs) and interval predictors (IPs) of the future unknown failure time will be computed using classical and Bayesian methods.

First, the conditional PDF of the future failure time given the vector of parameters is derived as follows:

Based on the informative sample, the PDF of the future failure time given the last observed failure is the PDF of the sth ordered value among the ordered values that follow it, which can be written as (see [15,19,20,21]):

Using this PDF, the conditional PDF of the future failure time given the vector of parameters, based on all cases of Balakrishnan's UHCS, is:
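As a point of reference, the standard order-statistics result behind this construction can be written as below. The notation is assumed for this sketch and is not taken from the source: $T^{*}$ is the time at which the experiment stops, $D$ the number of failures observed by then, and $R = 1 - F$; the $n - D$ surviving units are treated as a random sample from the lifetime distribution left-truncated at $T^{*}$, so the future failure $x_s$ is the $(s - D)$th ordered value among them.

```latex
f\!\left(x_s \mid \mathbf{x};\boldsymbol{\theta}\right)
  = \frac{(n-D)!}{(s-D-1)!\,(n-s)!}\,
    \frac{\left[R(T^{*}) - R(x_s)\right]^{s-D-1}
          \left[R(x_s)\right]^{n-s} f(x_s)}
         {\left[R(T^{*})\right]^{n-D}},
  \qquad x_s > T^{*},\quad s = D+1,\dots,n
```

When the experiment ends at an observed failure, $T^{*}$ coincides with the last observed failure time, so the same expression covers both kinds of termination.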

2.1. Classical Method (Maximum Likelihood Prediction)

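In this subsection, the maximum likelihood predictor (MLP) and the maximum likelihood predictive interval (MLPI) of the future failure time are obtained using the following predictive likelihood function (PLF) (see [22]):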

Substituting from (1) and (6) in (7), we have

Substituting from (2)–(4) in (8), we have

2.1.1. Point Predictor

In this subsection, the MLP of the future failure time will be obtained using two methods.

- Method (1): obtain the values of the two parameters and of the future failure time that jointly maximize the logarithm of the PLF. The maximizing parameter values are called the predictive maximum likelihood estimates (PMLEs), and the maximizing value of the future failure time is called the MLP. To maximize the logarithm of the PLF, differentiate it with respect to the two parameters and the future failure time, set the resulting derivatives to zero, and solve the resulting nonlinear equations; the solution gives the PMLEs and the MLP.

- Method (2): first, obtain the maximum likelihood estimates (MLEs) of the two parameters by differentiating the logarithm of the LF with respect to each parameter, setting the resulting derivatives to zero, and solving the resulting nonlinear equations. Then, replace the parameters by their MLEs in the conditional PDF of the future failure time to obtain the maximum likelihood predictive function (MLPF). Finally, the MLP of the future failure time equals its mathematical expectation under the MLPF.

Based on the studied UHCS, the MLPF can be written in the form:

where A is a normalizing constant and has the value

So, the MLP of the future failure time will be

where
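The first step of Method (2), obtaining the MLEs from a censored sample, can be illustrated numerically. The sketch below rests on several assumptions that are not taken from the source: the two-parameter Burr-X form $F(x) = (1 - e^{-(\lambda x)^2})^{\alpha}$, the standard right-censored log-likelihood, and a simple coordinate pattern search in place of solving the likelihood equations directly. All function names are hypothetical.

```python
import math


def censored_loglik(alpha, lam, x_obs, n, stop):
    """Log-likelihood (up to a constant) of a right-censored Burr-X sample.

    x_obs : failure times observed up to the termination time `stop`
    n     : number of units on test; n - len(x_obs) units are still alive
    """
    d = len(x_obs)
    total = d * math.log(2.0 * alpha * lam * lam)
    for x in x_obs:
        z = (lam * x) * (lam * x)
        u = -math.expm1(-z)              # 1 - exp(-z), accurate for small z
        if u <= 0.0:
            return -math.inf
        total += math.log(x) - z + (alpha - 1.0) * math.log(u)
    if n > d:                            # contribution of the survivors
        z = (lam * stop) * (lam * stop)
        surv = 1.0 - (-math.expm1(-z)) ** alpha
        if surv <= 0.0:
            return -math.inf
        total += (n - d) * math.log(surv)
    return total


def fit_mle(x_obs, n, stop, tol=1e-6):
    """Coordinate pattern search on (log alpha, log lam).

    A simple stand-in for solving the likelihood equations numerically.
    """
    a, b = 0.0, -math.log(stop)          # start at alpha = 1, lam = 1/stop
    best = censored_loglik(math.exp(a), math.exp(b), x_obs, n, stop)
    step = 1.0
    while step > tol:
        improved = False
        for da, db in ((step, 0.0), (-step, 0.0), (0.0, step), (0.0, -step)):
            val = censored_loglik(math.exp(a + da), math.exp(b + db),
                                  x_obs, n, stop)
            if val > best:
                a, b, best, improved = a + da, b + db, val, True
        if not improved:
            step *= 0.5
    return math.exp(a), math.exp(b), best
```

Searching on the logarithms of the parameters keeps both estimates positive without explicit constraints. A gradient-based or Newton-type solver would normally be preferred in practice; the pattern search is used here only to keep the sketch dependency-free.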

2.1.2. Interval Predictor

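A maximum likelihood predictive interval (MLPI) of the future failure time, at a chosen confidence level, can be obtained by solving the following two nonlinear equations:

Using (10) and (11) in (14), the two nonlinear equations in (14) can be rewritten in the form

By solving the previous system, the lower and upper bounds of the MLPI of the future failure time can be computed.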
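A numerical sketch of this step is given below. It assumes the usual equal-tailed construction, in which the lower and upper bounds are the points where the plug-in predictive survival function equals $(1+\tau)/2$ and $(1-\tau)/2$ for confidence level $\tau$, and it assumes the two-parameter Burr-X form $F(x) = (1 - e^{-(\lambda x)^2})^{\alpha}$. The function names, the bisection solver and the parameter values in the usage line are hypothetical.

```python
import math


def burrx_cdf(x, alpha, lam):
    """Assumed two-parameter Burr-X CDF: (1 - exp(-(lam*x)^2))^alpha."""
    return (-math.expm1(-(lam * x) ** 2)) ** alpha if x > 0 else 0.0


def future_survival(t, s, n, d, stop, alpha, lam):
    """P(X_{s:n} > t | d failures observed up to `stop`), plug-in parameters.

    The n - d surviving units are treated as i.i.d. draws from the fitted
    distribution left-truncated at `stop`; X_{s:n} exceeds t when fewer than
    s - d of them fail in (stop, t].
    """
    if t <= stop:
        return 1.0
    f_stop = burrx_cdf(stop, alpha, lam)
    w = (burrx_cdf(t, alpha, lam) - f_stop) / (1.0 - f_stop)
    m = n - d
    return sum(math.comb(m, j) * w ** j * (1.0 - w) ** (m - j)
               for j in range(s - d))


def solve_decreasing(fn, target, lo, hi, iters=200):
    """Bisection for fn(t) = target, with fn decreasing on [lo, hi]."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if fn(mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def predictive_interval(s, n, d, stop, alpha, lam, level=0.95):
    """Equal-tailed plug-in predictive interval for the sth failure time."""
    surv = lambda t: future_survival(t, s, n, d, stop, alpha, lam)
    hi = 2.0 * stop + 1.0
    while surv(hi) > (1.0 - level) / 2.0:  # expand until the tail is bracketed
        hi *= 2.0
    lower = solve_decreasing(surv, (1.0 + level) / 2.0, stop, hi)
    upper = solve_decreasing(surv, (1.0 - level) / 2.0, stop, hi)
    return lower, upper


# Hypothetical estimates and design values, used only to show the call.
low, up = predictive_interval(s=11, n=15, d=10, stop=6.5, alpha=1.0, lam=0.1)
```

Because the predictive survival function is monotone in the future failure time, bisection is sufficient and no derivative information is needed.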

2.2. Bayesian Method (Bayesian Prediction)

The following bivariate prior, suggested by [23,24], is used:

where the constants appearing in the prior are the prior parameters (also known as hyperparameters). Combining this prior with the LF (1), after replacing the PDF and RF by their definitions from (2) and (4), the posterior PDF of the two parameters can be written as:

Using this posterior PDF and the conditional PDF (6) of the future failure time given the parameters, after using the definitions of the PDF and RF from (2) and (4), the Bayesian predictive PDF of the future failure time given the informative sample is as follows (see [22]):

where

where are normalizing constants.

The Bayesian predictor (BP) of the future failure time equals (see [22]):

and the lower and upper bounds of the Bayesian predictive interval (BPI) of the future failure time can be obtained by solving the following two nonlinear equations:

It is clear that the previous system contains a double integration over the two parameters, which makes solving it very complicated. In this situation, the Gibbs sampler and the Metropolis–Hastings algorithm are used to generate a random sample from the posterior PDF; the system (21) then takes the form

By solving this system, the BPI of the future failure time is obtained.

For more details about the Gibbs sampler and Metropolis–Hastings algorithms, see, for example [25,26,27,28].
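To make the sampling step concrete, the following sketch draws from a posterior with a random-walk Metropolis–Hastings sampler. It is a simplified stand-in, not the scheme used in the paper: it updates both parameters jointly rather than within a Gibbs sampler, it assumes the two-parameter Burr-X form $F(x) = (1 - e^{-(\lambda x)^2})^{\alpha}$, and it replaces the bivariate prior (16) with independent gamma priors whose hyperparameters `hyper` are hypothetical.

```python
import math
import random


def log_posterior(alpha, lam, x_obs, n, stop, hyper):
    """Censored Burr-X log-likelihood plus independent gamma log-priors.

    The gamma priors are an assumption of this sketch, not the prior (16).
    """
    a1, b1, a2, b2 = hyper
    d = len(x_obs)
    total = d * math.log(2.0 * alpha * lam * lam)
    for x in x_obs:
        z = (lam * x) * (lam * x)
        u = -math.expm1(-z)
        if u <= 0.0:
            return -math.inf
        total += math.log(x) - z + (alpha - 1.0) * math.log(u)
    if n > d:
        z = (lam * stop) * (lam * stop)
        surv = 1.0 - (-math.expm1(-z)) ** alpha
        if surv <= 0.0:
            return -math.inf
        total += (n - d) * math.log(surv)
    total += (a1 - 1.0) * math.log(alpha) - b1 * alpha
    total += (a2 - 1.0) * math.log(lam) - b2 * lam
    return total


def mh_sample(x_obs, n, stop, hyper=(1.0, 1.0, 1.0, 1.0),
              n_draws=2000, burn_in=500, scale=0.3, seed=0):
    """Random-walk Metropolis-Hastings on (log alpha, log lam)."""
    rng = random.Random(seed)
    a, b = 0.0, -math.log(stop)          # start at alpha = 1, lam = 1/stop
    # "+ a + b" is the log-Jacobian of the log transform.
    cur = log_posterior(math.exp(a), math.exp(b), x_obs, n, stop, hyper) + a + b
    draws = []
    for it in range(burn_in + n_draws):
        a_new = a + rng.gauss(0.0, scale)
        b_new = b + rng.gauss(0.0, scale)
        new = log_posterior(math.exp(a_new), math.exp(b_new),
                            x_obs, n, stop, hyper) + a_new + b_new
        if rng.random() < math.exp(min(0.0, new - cur)):
            a, b, cur = a_new, b_new, new
        if it >= burn_in:
            draws.append((math.exp(a), math.exp(b)))
    return draws
```

The returned pairs can be substituted into the conditional predictive quantities and averaged, which is how a Monte Carlo version of a system such as (22) is typically evaluated. The proposal scale and burn-in length are tuning choices that would need checking against trace plots or acceptance rates in a real analysis.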

3. Two-Sample Prediction

Assume that $\mathbf{x} = (x_1, \dots, x_D)$ and $y_1 < y_2 < \cdots < y_m$ represent the informative sample from the studied UHCS and a future ordered sample of size m, respectively. It is assumed that the two samples are independent.

In this section, PPs and IPs of the observation $y_s$, $s = 1, \dots, m$, will be obtained using the classical and Bayesian methods. The conditional PDF of the observation $y_s$ given the vector of parameters is the PDF of the sth ordered value from the m ordered values, which can be written as (see [15,22]):

Using the definitions of the PDF and RF from (2) and (4) in (23), the conditional PDF of the observation $y_s$ given the vector of parameters will be:
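For reference, the density of the sth ordered value in a random sample of size $m$ has the standard form below. The notation is assumed for this sketch ($f$ for the PDF, $R = 1 - F$ for the reliability function, $g$ for the resulting density) and may differ from that of Equations (23) and (24).

```latex
g\!\left(y_s \mid \boldsymbol{\theta}\right)
  = \frac{m!}{(s-1)!\,(m-s)!}\,
    \left[1 - R(y_s)\right]^{s-1}
    \left[R(y_s)\right]^{m-s} f(y_s),
  \qquad y_s > 0,\quad s = 1,\dots,m
```

Unlike the one-sample case, no truncation appears here, because the future sample is independent of the informative sample and enters only through the parameters.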

Based on the two-sample scheme and the same prior (16), the PPs and IPs of $y_s$ are summarized in the following subsections.

3.1. Maximum Likelihood Prediction (Point and Interval Predictors)

The MLPF can be obtained from (24), after replacing each parameter by its MLE, in the form

where B is a normalizing constant that has the value

So, the MLP of $y_s$ will be

An MLPI of $y_s$, at a chosen confidence level, can be obtained by solving the following two nonlinear equations:

Using (25) and (26) in (28), the two nonlinear equations in (28) can be rewritten in the form

By solving the previous system, the lower and upper bounds of the MLPI of $y_s$ can be computed.
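The two-sample interval can be sketched numerically as follows. The sketch assumes an equal-tailed interval, plug-in parameter estimates, and the two-parameter Burr-X form $F(x) = (1 - e^{-(\lambda x)^2})^{\alpha}$; it uses the fact that the sth ordered value of m future units is at most y exactly when at least s of them fail by y. Function names are hypothetical.

```python
import math


def burrx_quantile(p, alpha, lam):
    """Inverse of the assumed CDF (1 - exp(-(lam*x)^2))^alpha."""
    return math.sqrt(-math.log1p(-(p ** (1.0 / alpha)))) / lam


def order_stat_cdf(p, s, m):
    """P(Y_s <= y) when F(y) = p: at least s of m future units fail by y."""
    return sum(math.comb(m, j) * p ** j * (1.0 - p) ** (m - j)
               for j in range(s, m + 1))


def solve_increasing(fn, target, lo=0.0, hi=1.0, iters=200):
    """Bisection for fn(p) = target, with fn increasing on [lo, hi]."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if fn(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def two_sample_interval(s, m, alpha, lam, level=0.95):
    """Equal-tailed plug-in interval for the sth ordered future value."""
    cdf = lambda p: order_stat_cdf(p, s, m)
    p_low = solve_increasing(cdf, (1.0 - level) / 2.0)
    p_high = solve_increasing(cdf, (1.0 + level) / 2.0)
    # Map the probability-scale bounds back to the time scale.
    return burrx_quantile(p_low, alpha, lam), burrx_quantile(p_high, alpha, lam)
```

Solving on the probability scale and then applying the quantile function separates the order-statistic part of the problem from the lifetime model, so the same solver works for any distribution with an invertible CDF.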

3.2. Bayesian Prediction (Point and Interval Predictors)

The Bayesian predictive PDF of $y_s$ given the informative sample will be as follows:

where

where are normalizing constants.

The BP of $y_s$ will equal

and the lower and upper bounds of the BPI of $y_s$ can be obtained by solving the following two nonlinear equations:

Using the parameter values generated from the posterior PDF, the system (33) takes the form

By solving this system, the BPI of $y_s$ is obtained.
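The Monte Carlo form of such a system can be sketched as follows: the conditional survival function of the future ordered value is averaged over the posterior draws, and the two bounds are the points where this average equals $(1+\tau)/2$ and $(1-\tau)/2$. The equal-tailed construction, the two-parameter Burr-X form $F(x) = (1 - e^{-(\lambda x)^2})^{\alpha}$, and all function names are assumptions of this sketch; `draws` is a list of (alpha, lam) pairs from any posterior sampler.

```python
import math


def burrx_cdf(x, alpha, lam):
    """Assumed two-parameter Burr-X CDF."""
    return (-math.expm1(-(lam * x) ** 2)) ** alpha if x > 0 else 0.0


def future_order_stat_survival(t, s, m, alpha, lam):
    """P(Y_s > t | alpha, lam): fewer than s of the m future units fail by t."""
    p = burrx_cdf(t, alpha, lam)
    return sum(math.comb(m, j) * p ** j * (1.0 - p) ** (m - j)
               for j in range(s))


def bayes_predictive_survival(t, s, m, draws):
    """Monte Carlo average of the conditional survival over posterior draws."""
    return sum(future_order_stat_survival(t, s, m, a, l)
               for a, l in draws) / len(draws)


def bayes_two_sample_interval(s, m, draws, level=0.95, iters=200):
    """Equal-tailed Bayesian predictive interval for the sth future value."""
    surv = lambda t: bayes_predictive_survival(t, s, m, draws)
    hi = 1.0
    while surv(hi) > (1.0 - level) / 2.0:  # expand until the tail is bracketed
        hi *= 2.0

    def solve(target):
        lo_t, hi_t = 0.0, hi
        for _ in range(iters):
            mid = 0.5 * (lo_t + hi_t)
            if surv(mid) > target:
                lo_t = mid
            else:
                hi_t = mid
        return 0.5 * (lo_t + hi_t)

    return solve((1.0 + level) / 2.0), solve((1.0 - level) / 2.0)
```

Averaging over draws replaces the double integral over the parameters, so the remaining problem is a one-dimensional root search for each bound.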

From the results of Sections 2 and 3, it is clear that the classical method of prediction, and of inference in general, namely the maximum likelihood approach, depends only on an informative sample from the studied distribution under a suggested censoring scheme, and does not use any additional information about the parameters of the population. The Bayes method depends on the same informative sample together with additional information about the population parameters, represented by the prior distribution of the parameters. When this prior information is accurate, it can be expected to lead to better results. The results obtained from the samples in the next section support this.

In the absence of information on the population parameters, there are two choices: the first is to use the Bayes approach under a vague prior, and the second is to use the classical method.

4. Results

In this section, one- and two-sample PPs and IPs using the classical and Bayesian approaches are obtained based on simulated and real data sets.

4.1. Simulated Results

The predictive process takes in historical data to predict which areas and parts of an asset will fail and at what time. The technician can receive relevant and accurate data points remotely. The collected data are then analyzed by predictive algorithms to determine which parts are more likely to fail. This information is communicated to workers via collaboration tools and data visualization, so that they can perform maintenance work only on the parts that require it. By implementing a predictive maintenance solution (Figure 1), organizations will know when to schedule a specific part replacement and will be alerted to future degradations due to faulty parts.

Figure 1. Reactive, periodic, proactive, and predictive: the four-stage engineering process.

In this section, the PPs and IPs of future failure times are computed, in one- and two-sample schemes, using the classical and Bayesian methods based on a generated UHCS informative sample for different values of the constants that define the scheme, as follows:

- (1) For a given set of prior parameters, the population parameters are generated from the joint prior (16).

- (2) Making use of the parameter values obtained in step 1, a sample of size n of upper ordered values from the Burr-X distribution is generated.

- (3) For different values of the scheme constants, a UHCS informative sample is generated from the complete sample in step 2.

- (4) For different values of the scheme constants, the PPs and IPs of the future failure times are computed using classical and Bayes methods in a one-sample scheme, as explained in Section 2.

- (5) The same is done in a two-sample scheme, as explained in Section 3.

- (6) For each future failure time, the MLP, the BP, the length of the IP, and the coverage probability (CP) of the IP are computed.
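Steps 2 and 3 of this procedure can be sketched as below. The sketch takes the population parameters as inputs instead of drawing them from the prior (16), assumes the two-parameter Burr-X form $F(x) = (1 - e^{-(\lambda x)^2})^{\alpha}$, and uses the compact form of the stopping rule; the function names and the design values in the usage line are hypothetical.

```python
import math
import random


def burrx_quantile(p, alpha, lam):
    """Inverse of the assumed CDF (1 - exp(-(lam*x)^2))^alpha."""
    return math.sqrt(-math.log1p(-(p ** (1.0 / alpha)))) / lam


def simulate_uhcs_sample(n, k, r, t1, t2, alpha, lam, seed=None):
    """Generate a complete ordered sample and censor it under the UHCS rule.

    Returns (complete, stop, informative): the full ordered sample, the
    stopping time, and the failures observed up to the stopping time.
    """
    rng = random.Random(seed)
    # Step 2: complete ordered sample by inverse-CDF sampling.
    complete = sorted(burrx_quantile(rng.random(), alpha, lam)
                      for _ in range(n))
    # Step 3: compact form of the six-case stopping rule.
    xk, xr = complete[k - 1], complete[r - 1]
    if xk < t1:
        stop = min(max(xr, t1), t2)
    elif xk < t2:
        stop = min(xr, t2)
    else:
        stop = xk
    informative = [x for x in complete if x <= stop]
    return complete, stop, informative


# Hypothetical design values, used only to show the call.
complete, stop, informative = simulate_uhcs_sample(
    n=15, k=5, r=10, t1=1.0, t2=2.0, alpha=1.5, lam=0.8, seed=1)
```

In a full study, this generator would be called repeatedly, the predictors and intervals of steps 4 and 5 computed on each informative sample, and the coverage probability of step 6 estimated as the fraction of replications in which the interval contains the corresponding value of `complete`.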

Table 1. PPs and IPs of the future failure time, based on the generated Balakrishnan UHCS informative sample.

Table 2. PPs and IPs of the future failure time, based on the generated Balakrishnan UHCS informative sample.

- (a) For fixed values of the scheme constants, the length and the CP of the IP increase with s, because the predicted element will be larger, which widens its predictive interval and, therefore, increases its CP.

- (b) In all six cases of the studied UHCS:

  - The length and the CP of the IP decrease as the ratio increases, which means that the results improve as the available information increases.

  - In the cases with a constant ratio and fixed values of the remaining constants, the length and the CP of the IP decrease as k increases, which shows that the results improve with increasing k.

- (c) In all cases, the IPs obtained by the Bayesian method are shorter than those computed by the classical method, which means that the Bayesian method performs better than the classical method.

- (d) In all cases, the CPs of the Bayesian IPs are smaller than those computed by the classical method, which is also a criterion indicating that the results obtained using the Bayes method are better than those obtained using the classical method.

- (e) The values of the scheme constants have been chosen so as to give all six cases of the studied UHCS.

4.2. Data Analysis

In this section, two real data sets are introduced and analyzed using the Burr-X distribution. The data sets are taken from [8]. The first data set represents the failure times, in hours, of 15 devices, and the second represents the times to first failure, in months, of 20 electronic cards. These real data sets are:

- Data I: 0.19, 0.78, 0.96, 1.31, 2.78, 3.16, 4.15, 4.76, 4.85, 6.5, 7.35, 8.01, 8.27, 12.06 and 31.75.

- Data II: 0.9, 1.5, 2.3, 3.2, 3.9, 5.0, 6.2, 7.5, 8.3, 10.4, 11.1, 12.6, 15.0, 16.3, 19.3, 22.6, 24.8, 31.5, 38.1 and 53.0.

Table 3 reports the MLEs of the two parameters and the corresponding Kolmogorov–Smirnov (K-S) test statistics, computed under the Burr-X model.

Table 3. MLEs of the parameters and the associated K-S test statistics based on the real data sets I and II.

At the chosen significance level, and using the Kolmogorov–Smirnov table, the critical values of the test statistic are greater than the computed test statistics for the two real data sets under the Burr-X model. This means that the studied model fits the two real data sets well.
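The test statistic used in this comparison can be computed as in the sketch below, which evaluates the one-sample Kolmogorov–Smirnov distance between a data set and a fitted CDF. The Burr-X form $F(x) = (1 - e^{-(\lambda x)^2})^{\alpha}$ and the parameter values in the usage line are assumptions made for illustration; they are not the estimates reported in Table 3.

```python
import math


def ks_statistic(data, cdf):
    """One-sample Kolmogorov-Smirnov distance between data and a CDF."""
    xs = sorted(data)
    n = len(xs)
    # Compare the CDF with the empirical CDF just after and just before
    # each observation, and keep the largest gap.
    return max(max((i + 1) / n - cdf(x), cdf(x) - i / n)
               for i, x in enumerate(xs))


def burrx_cdf(x, alpha, lam):
    """Assumed two-parameter Burr-X CDF."""
    return (-math.expm1(-(lam * x) ** 2)) ** alpha if x > 0 else 0.0


data_1 = [0.19, 0.78, 0.96, 1.31, 2.78, 3.16, 4.15, 4.76,
          4.85, 6.5, 7.35, 8.01, 8.27, 12.06, 31.75]

# Hypothetical parameter values, used only to show the call.
d_stat = ks_statistic(data_1, lambda x: burrx_cdf(x, 0.3, 0.1))
```

The statistic is then compared with the tabulated critical value for the sample size and significance level; the model is not rejected when the statistic is smaller than that critical value.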

The PPs and IPs of the remaining future failure times and of the first four observations from an independent ordered sample, based on a generated Balakrishnan UHCS informative sample from the given real data sets, were computed; they are summarized in Table 4, Table 5, Table 6 and Table 7.

Table 4. PPs and IPs of the future failure time, based on a generated Balakrishnan UHCS informative sample from real data set I.

Table 5. PPs and IPs of the future failure time, based on a generated Balakrishnan UHCS informative sample from real data set II.

Table 6. PPs and IPs of the future failure time, based on a generated Balakrishnan UHCS informative sample from real data set I.

Table 7. PPs and IPs of the future failure time, based on a generated Balakrishnan UHCS informative sample from real data set II.

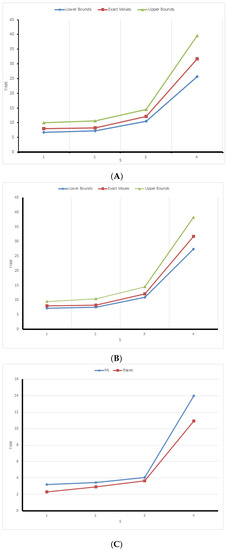

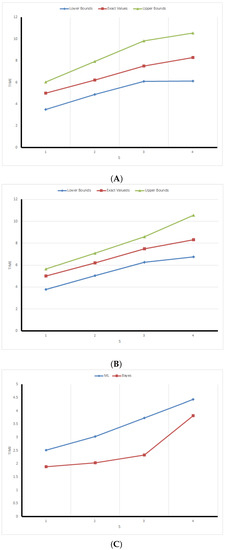

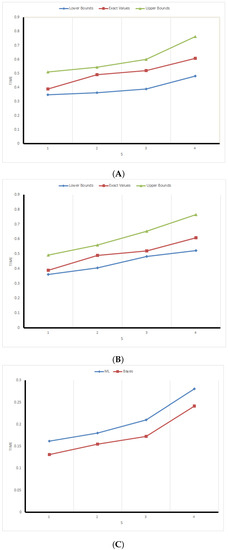

From the previous tables and figures, we can observe (for fixed values of the scheme constants):

- (1) The length of the predictive intervals increases with s because, as mentioned previously, the predicted element will be larger, which widens its predictive interval.

- (2) The predictive intervals computed by the Bayesian method are shorter than those computed by the classical method, which means that the Bayes technique performs better than the classical technique.

- (3) For both the Bayes and classical approaches, and for all values of s, the exact value of the predicted observation lies in its predictive interval.

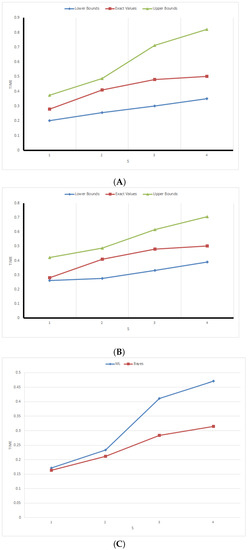

Figure 2. (A) ML one-sample predictive intervals based on sample I; (B) Bayesian one-sample predictive intervals based on sample I; (C) lengths of the one-sample predictive intervals based on sample I.

Figure 3. (A) ML one-sample predictive intervals based on sample II; (B) Bayesian one-sample predictive intervals based on sample II; (C) lengths of one-sample predictive intervals based on sample II.

Figure 4. (A) ML two-sample predictive intervals based on sample I; (B) Bayesian two-sample predictive intervals based on sample I; (C) lengths of two-sample predictive intervals based on sample I.

Figure 5. (A) ML two-sample predictive intervals based on sample II; (B) Bayesian two-sample predictive intervals based on sample II; (C) lengths of two-sample predictive intervals based on sample II.

In panels (A) and (B) of Figures 2–5:

- (a) The red broken line, which represents the true value of the observation to be predicted, lies between the two broken lines that represent the lower and upper bounds of the predictive intervals, which agrees with the third observation above.

- (b) The lower bounds increase with s.

- (c) The upper bounds also increase with s.

In panel (C) of Figures 2–5:

- (a) The length of the predictive interval increases with s, which agrees with the first observation above.

- (b) The predictive intervals obtained using the Bayes approach are shorter than those obtained by the classical approach, which agrees with the second observation above.

5. Conclusions

In this paper, the point predictors (PPs) and interval predictors (IPs) of the future failure times from the Burr-X distribution were computed based on an informative sample from a unified hybrid censoring scheme (UHCS), suggested by Balakrishnan et al. (2008), for different values of the scheme constants, using classical and Bayesian approaches, and the two approaches were compared. Two real engineering data sets were introduced and analyzed using the Burr-X model, which was found to fit them well. Based on a generated UHCS informative sample from the given real data sets, the PPs and IPs of the future failure times under one- and two-sample schemes were computed using classical and Bayesian approaches; it was found that the predictive intervals obtained using the Bayesian approach were shorter than those computed by the classical approach, which indicates that the Bayesian approach performs better. In addition to the tabular description of the results related to the real data sets, graphical descriptions were also introduced. The results of the work confirm that it is possible to use statistical prediction to perform predictive tasks in relation to the conditions of industrial equipment.

Author Contributions

Data curation, A.S.A.; Formal analysis, S.F.A. and A.A.A.M.; Funding acquisition, S.F.A. and A.S.A.; Investigation, S.F.A. and A.A.A.M.; Project administration, A.S.A.; Resources, S.F.A. and A.S.A.; Software, S.F.A.; Supervision, A.A.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education, Saudi Arabia, for funding this research work through project number IFPRP:373-662-1442 and King Abdulaziz University, DSR, Jeddah, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| BP | Bayesian predictor |
| BPI | Bayesian predictive interval |
| CS | Censoring scheme |
| CDF | Cumulative distribution function |
| CP | Coverage probability |
| HCS | Hybrid censoring scheme |
| IPs | Interval predictors |
| LF | Likelihood function |
| ML | Maximum likelihood |
| MLEs | Maximum likelihood estimates |
| MLP | Maximum likelihood predictor |
| MLPF | Maximum likelihood predictive function |
| MLPI | Maximum likelihood predictive interval |
| PDF | Probability density function |
| PLF | Predictive likelihood function |
| PMLEs | Predictive maximum likelihood estimates |
| PPs | Point predictors |
| RF | Reliability function |
| UHCS | Unified hybrid censoring scheme |

References

1. Calabria, R.; Guida, M.; Pulcini, G. Point estimation of future failure times of a repairable system. Reliab. Eng. Syst. Saf. 1990, 28, 23–34.

2. Scalabrini Sampaio, G.; de Aguiar Vallim Filho, A.R.; da Silva, L.S.; da Silva, L.A. Prediction of Motor Failure Time Using An Artificial Neural Network. Sensors 2019, 19, 4342.

3. Alghamdi, A.S. Partially accelerated model for analyzing competing risks data from Gompertz population under type-I generalized hybrid censoring scheme. Complexity 2021, 2021, 9925094.

4. Chandrasekar, B.; Childs, A.; Balakrishnan, N. Exact likelihood inference for the exponential distribution under generalized Type-I and Type-II hybrid censoring. Nav. Res. Logist. 2004, 51, 994–1004.

5. Childs, A.; Chandrasekar, B.; Balakrishnan, N.; Kundu, D. Exact likelihood inference based on Type-I and Type-II hybrid censored samples from the exponential distribution. Ann. Inst. Stat. Math. 2003, 55, 319–330.

6. Lawless, J.F. Statistical Models and Methods for Lifetime Data; John Wiley and Sons: New York, NY, USA, 1982.

7. Burr, I.W. Cumulative frequency functions. Ann. Math. Stat. 1942, 13, 215–232.

8. Zimmer, W.J.; Keats, J.B.; Wang, F.K. The Burr-XII distribution in reliability analysis. J. Qual. Technol. 1998, 30, 386–394.

9. Kim, C.; Chung, Y. Bayesian estimation of P(Y < X) from Burr-type X model containing spurious observations. Stat. Pap. 2006, 47, 643–651.

10. Rastogi, M.K.; Tripathi, Y.M. Inference on unknown parameters of a Burr distribution under hybrid censoring. Stat. Pap. 2013, 54, 619–643.

11. Abd EL-Baset, A.A.; Magdy, E.E.; Tahani, A.A. Estimation under Burr type X distribution based on doubly type II censored sample of dual generalized order statistics. J. Egypt. Math. Soc. 2015, 23, 391–396.

12. Arabi Belaghi, R.; Noori Asl, M. Estimation based on progressively type-I hybrid censored data from the Burr XII distribution. Stat. Pap. 2019, 60, 761–803.

13. Mohammadi, M.; Reza, A.; Behzadi, M.H.; Singh, S. Estimation and prediction based on type-I hybrid censored data from the Poisson-Exponential distribution. Commun. Stat. Simul. Comput. 2019, 1–26.

14. Rabie, A.; Li, J. Inferences for Burr-X Model Based on Unified Hybrid Censored Data. Int. J. Appl. Math. 2019, 49, 1–7.

15. Ateya, S.F.; Amein, M.M.; Mohammed, H.S. Prediction under an adaptive progressive type-II censoring scheme for Burr Type-XII distribution. Commun. Stat.-Theory Methods 2020, 1–13.

16. Balakrishnan, N.; Rasouli, A.; Sanjari Farsipour, N. Exact likelihood inference based on an unified hybrid censored sample from the exponential distribution. J. Stat. Comput. Simul. 2008, 78, 475–488.

17. Osatohanmwen, F.; Oyegue, F.O.; Ogbonmwan, S.M. The Weibull-Burr XII {log logistic} Poisson lifetime model. J. Stat. Manag. Syst. 2021, 1–36.

18. Aslam, M.; Usman, R.M.; Raqab, M.Z. A new generalized Burr XII distribution with real life applications. J. Stat. Manag. Syst. 2021, 24, 521–543.

19. David, H.A. Order Statistics, 2nd ed.; John Wiley and Sons, Inc.: New York, NY, USA, 1981.

20. Balakrishnan, N.; Shafay, A.R. One- and Two-Sample Bayesian Prediction Intervals Based on Type-II Hybrid Censored Data. Commun. Stat.-Theory Methods 2012, 41, 1511–1531.

21. Nigm, A.M.; Al-Hussaini, E.K.; Jaheen, Z.F. Bayesian one-sample prediction of future observations under Pareto distribution. Statistics 2003, 37, 527–536.

22. Ateya, S.F.; Mohammed, H.S. Prediction Under Burr-XII Distribution Based on Generalized Type-II Progressive Hybrid Censoring Scheme. J. Egypt. Math. Soc. 2018, 26, 491–508.

23. Ateya, S.F. Estimation under modified Weibull distribution based on right censored generalized order statistics. J. Appl. Stat. 2013, 40, 2720–2734.

24. Ateya, S.F. Estimation under Inverse Weibull Distribution based on Balakrishnan’s Unified Hybrid Censored Scheme. Commun. Stat. Simul. Comput. 2017, 46, 3645–3666.

25. Jaheen, Z.F.; Al Harbi, M.M. Bayesian estimation for the exponentiated Weibull model via Markov chain Monte Carlo simulation. Commun. Stat. Simul. Comput. 2011, 40, 532–543.

26. Press, S.J. Subjective and Objective Bayesian Statistics: Principles, Models and Applications; John Wiley and Sons: New York, NY, USA, 2003.

27. Upadhyaya, S.K.; Gupta, A. A Bayes analysis of modified Weibull distribution via Markov chain Monte Carlo simulation. J. Stat. Comput. Simul. 2010, 80, 241–254.

28. Upadhyaya, S.K.; Vasishta, N.; Smith, A.F.M. Bayes inference in life testing and reliability via Markov chain Monte Carlo simulation. Sankhya A 2001, 63, 15–40.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).