1. Introduction

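Let C be a closed, convex and nonempty subset of a real Hilbert space $H$. Let $F: C \times C \to \mathbb{R}$ be a bifunction and $A: C \to H$ be a mapping. The generalized equilibrium problem (GEP) is defined as:

$$\text{find } x^* \in C \text{ such that } F(x^*, y) + \langle Ax^*, y - x^* \rangle \ge 0 \quad \forall y \in C. \qquad (1)$$

Denote by $\mathrm{GEP}(F, A)$ the set of solutions of the GEP. If $A = 0$, then the GEP (1) becomes the equilibrium problem (EP):

$$\text{find } x^* \in C \text{ such that } F(x^*, y) \ge 0 \quad \forall y \in C. \qquad (2)$$

The solution set of (2) is denoted by $\mathrm{EP}(F)$.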

In the oligopolistic market equilibrium model [1], it is assumed that the cost functions are increasing, piecewise-linear and concave, and that the price function can change from firm to firm (see [1] for its explicit form). With the bifunction F, the mapping A and the two corresponding matrices chosen as in [1], the problem of finding a Nash equilibrium point becomes the GEP (1). The GEP is very general in the sense that it includes, as particular cases, optimization problems, Nash equilibrium problems, variational inequalities, and saddle point problems. Many problems of practical interest in economics and engineering involve equilibrium in their description; see [2,3,4,5,6,7,8,9,10,11,12,13,14,15] for examples.

If $F(x, y) = 0$ for all $x, y \in C$, then the GEP (1) becomes the variational inequality problem (VIP):

$$\text{find } x^* \in C \text{ such that } \langle Ax^*, y - x^* \rangle \ge 0 \quad \forall y \in C, \qquad (3)$$

whose solution set is denoted by $\mathrm{VI}(C, A)$. The VIP (3) was introduced by Stampacchia [16] in 1964. It provides a convenient, natural, and unified framework for the study of many problems in operations research, engineering and economics. It includes as special cases well-known problems in mathematical programming such as optimization and control problems, traffic network problems, and fixed point problems; see [6,7,17].
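A standard way to test whether a point solves the VIP (3) is the projection characterization: $x^* \in C$ solves (3) if and only if $x^* = P_C(x^* - \lambda Ax^*)$ for some (equivalently, every) $\lambda > 0$, where $P_C$ is the metric projection onto C. The Python sketch below computes the natural residual $\|x - P_C(x - \lambda Ax)\|$ for a box-shaped C; the operator, the box and the helper names `project_box` and `natural_residual` are hypothetical choices made only for illustration.

```python
import numpy as np

def project_box(x, lo, hi):
    """Metric projection P_C onto the box C = [lo, hi]^n (componentwise clipping)."""
    return np.clip(x, lo, hi)

def natural_residual(A, x, lam, lo, hi):
    """||x - P_C(x - lam * A(x))||, which is zero exactly at solutions of the VIP on the box."""
    return float(np.linalg.norm(x - project_box(x - lam * A(x), lo, hi)))

# Hypothetical monotone affine operator A(x) = M x + q on C = [0, 10]^2.
M = np.array([[2.0, 1.0], [-1.0, 2.0]])   # symmetric part 2I is positive definite
q = np.array([-4.0, -3.0])                # chosen so that A(1, 2) = 0
A = lambda x: M @ x + q

x_star = np.array([1.0, 2.0])
print(natural_residual(A, x_star, 1.0, 0.0, 10.0))       # 0.0: x_star solves the VIP
print(natural_residual(A, np.zeros(2), 1.0, 0.0, 10.0))  # 5.0: the origin does not
```

The residual is a convenient stopping quantity because it needs only one evaluation of A and one projection.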

Many iterative methods for solving VIPs have been proposed and studied; see [4,6,7,8]. Among them, two notable and general directions are the projection method and the regularization method. In order to solve monotone variational inequality problems, Thong and Hieu [5] recently introduced the following Tseng’s extragradient method (TEGM) (Algorithm 1).

Assuming that $A$ is monotone and Lipschitz continuous, they proved that the sequence $\{x_n\}$ generated by Algorithm 1 converges weakly to some solution of the VIP (3) under appropriate conditions. Based on Tseng’s extragradient method and the viscosity method, they also introduced the following Tseng-type viscosity algorithm (TEGMV) (Algorithm 2):

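| Algorithm 1: Tseng’s extragradient method (TEGM) |

Initialization: Given $\gamma > 0$, $l \in (0, 1)$ and $\mu \in (0, 1)$, set $n := 0$ and let $x_0 \in H$ be arbitrary. Step 1. Given $x_n$, compute

$$y_n = P_C(x_n - \lambda_n A x_n),$$

where $\lambda_n$ is chosen to be the largest $\lambda \in \{\gamma, \gamma l, \gamma l^2, \ldots\}$ satisfying $\lambda \|Ax_n - Ay_n\| \le \mu \|x_n - y_n\|$. If $y_n = x_n$, then stop: $x_n$ is a solution of the VIP (3). Otherwise, go to Step 2. Step 2. Compute $x_{n+1} = y_n - \lambda_n (Ay_n - Ax_n)$. Set $n := n + 1$ and return to Step 1. |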
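The steps of Algorithm 1 can be sketched in Python for a box-constrained problem, assuming the Armijo-like rule accepts the largest $\lambda \in \{\gamma, \gamma l, \gamma l^2, \ldots\}$ with $\lambda \|Ax_n - Ay_n\| \le \mu \|x_n - y_n\|$. The test operator, the parameter values and the function name `tegm` are hypothetical; this is an illustrative sketch, not the reference implementation of [5].

```python
import numpy as np

def tegm(A, proj, x0, gamma=1.0, l=0.5, mu=0.5, tol=1e-10, max_iter=10_000):
    """Sketch of Tseng's extragradient method with an Armijo-like step-size search.

    lambda_n is the largest lambda in {gamma, gamma*l, gamma*l**2, ...} such that
    lambda * ||A(x_n) - A(y_n)|| <= mu * ||x_n - y_n||, with y_n = P_C(x_n - lambda*A(x_n)).
    Returns the last iterate and the list of accepted step sizes.
    """
    x = np.asarray(x0, dtype=float)
    steps = []
    for _ in range(max_iter):
        Ax = A(x)
        lam = gamma
        while True:                                  # backtracking stops once lam <= mu / L
            y = proj(x - lam * Ax)
            if lam * np.linalg.norm(Ax - A(y)) <= mu * np.linalg.norm(x - y):
                break
            lam *= l
        steps.append(lam)
        if np.linalg.norm(x - y) <= tol:             # y_n == x_n up to tol: stop
            return y, steps
        x = y - lam * (A(y) - Ax)                    # Tseng's correction step
    return x, steps

# Hypothetical test problem: A(x) = M x + q on C = [0, 10]^2 with unique solution (1, 2).
M = np.array([[2.0, 1.0], [-1.0, 2.0]])
q = np.array([-4.0, -3.0])
A = lambda x: M @ x + q
proj = lambda x: np.clip(x, 0.0, 10.0)
x, steps = tegm(A, proj, [8.0, 8.0])
L = np.linalg.norm(M, 2)                             # Lipschitz constant, equal to sqrt(5)
print(np.round(x, 6), min(steps) >= min(1.0, 0.5 * 0.5 / L))
```

Because $M$ here is $\sqrt{5}$ times an orthogonal matrix, the backtracking accepts the same step $\lambda_n = 0.125$ at every iteration, which is above the lower bound $\min\{\gamma, \mu l / L\}$ that holds for this kind of search when A is $L$-Lipschitz.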

The mapping $f$ in Algorithm 2 is a contraction on $H$. By adding this viscosity term, they proved that the sequence $\{x_n\}$ generated by Algorithm 2 converges strongly to $p = P_{\mathrm{VI}(C,A)} f(p)$ under suitable conditions, where $P_{\mathrm{VI}(C,A)}$ denotes the metric projection from $H$ onto the solution set $\mathrm{VI}(C, A)$.

Most recently, inspired by the extragradient method and the regularization method, Hieu et al. [18] introduced the following double projection method (DPM),

for each

, where

. This method converges if $A$ is $L$-Lipschitz continuous and monotone.

Motivated by Thong and Hieu [5], Hieu et al. [18] and Tseng [19], we introduce a new numerical algorithm for solving a generalized equilibrium problem involving a monotone and Lipschitz continuous mapping. This method can be viewed as a combination of the regularization method and Tseng’s extragradient method. We prove that the sequences constructed by the proposed method converge in norm to the minimal-norm solution of the generalized equilibrium problem. Finally, we provide several numerical experiments to support the proposed method.

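| Algorithm 2: Tseng’s extragradient method with viscosity technique (TEGMV) |

Initialization: Given $\gamma > 0$, $l \in (0, 1)$, $\mu \in (0, 1)$ and a sequence $\{\alpha_n\} \subset (0, 1)$, set $n := 0$ and let $x_0 \in H$ be arbitrary. Step 1. Given $x_n$, compute

$$y_n = P_C(x_n - \lambda_n A x_n),$$

where $\lambda_n$ is chosen to be the largest $\lambda \in \{\gamma, \gamma l, \gamma l^2, \ldots\}$ satisfying $\lambda \|Ax_n - Ay_n\| \le \mu \|x_n - y_n\|$. If $y_n = x_n$, then stop: $x_n$ is a solution of the VIP. Otherwise, go to Step 2. Step 2. Compute $z_n = y_n - \lambda_n (Ay_n - Ax_n)$ and $x_{n+1} = \alpha_n f(x_n) + (1 - \alpha_n) z_n$. Set $n := n + 1$ and return to Step 1. |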
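The viscosity mechanism of Algorithm 2 can be illustrated with a short Python sketch. To keep it brief, it assumes a fixed step $\lambda \in (0, 1/L)$ instead of the Armijo-like search and takes $\alpha_n = 1/\sqrt{n+2}$; the test operator, the contraction `f` and the function name `tegmv` are hypothetical. On a problem whose solution set $S$ contains infinitely many points, the iterates approach the particular solution $p$ satisfying $p = P_S(f(p))$.

```python
import numpy as np

def tegmv(A, proj, f, x0, lam, alphas):
    """Sketch of a Tseng-type viscosity iteration.

    Simplification (assumption): a fixed step lam in (0, 1/L) replaces the Armijo-like search.
    """
    x = np.asarray(x0, dtype=float)
    for a in alphas:
        Ax = A(x)
        y = proj(x - lam * Ax)            # projection step
        z = y - lam * (A(y) - Ax)         # Tseng's correction step
        x = a * f(x) + (1.0 - a) * z      # viscosity step with the contraction f
    return x

# Hypothetical problem: A(x) = (x1 - 1, 0) is monotone and 1-Lipschitz; on C = [-5, 5]^2
# the solution set S of the VIP is the segment {x1 = 1, -5 <= x2 <= 5}.
A = lambda x: np.array([x[0] - 1.0, 0.0])
proj = lambda x: np.clip(x, -5.0, 5.0)
f = lambda x: 0.5 * x + np.array([0.0, 1.5])            # contraction with constant 1/2
alphas = [1.0 / np.sqrt(n + 2) for n in range(10_000)]  # alpha_n -> 0, sum = infinity
x = tegmv(A, proj, f, [4.0, -4.0], lam=0.5, alphas=alphas)
print(x)   # close to p = (1, 3), the point satisfying p = P_S(f(p))
```

Changing the constant shift inside `f` changes which point of $S$ is selected; the first coordinate lags slightly behind 1 because $\alpha_n$ is still positive after finitely many steps.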

2. Preliminaries

In this section, we use $x_n \to x$ (respectively, $x_n \rightharpoonup x$) to denote the strong (respectively, weak) convergence of the sequence $\{x_n\}$ to $x$ as $n \to \infty$. We denote by $\mathrm{Fix}(T)$ the set of fixed points of the mapping $T$, that is, $\mathrm{Fix}(T) = \{x \in H : Tx = x\}$. Let $\mathbb{R}$ stand for the set of real numbers, and let C denote a nonempty, convex and closed subset of a Hilbert space $H$.

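Definition 1. The equilibrium bifunction $F: C \times C \to \mathbb{R}$ is said to be monotone if $F(x, y) + F(y, x) \le 0$ for all $x, y \in C$. Definition 2. A mapping $A: C \to H$ is said to be:

- (1)

monotone on C, if $\langle Ax - Ay, x - y \rangle \ge 0$ for all $x, y \in C$;

- (2)

$L$-Lipschitz continuous on C, if there exists $L > 0$ such that $\|Ax - Ay\| \le L \|x - y\|$ for all $x, y \in C$.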
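For affine maps, both properties in Definition 2 can be checked directly: for $A(x) = Mx + q$ on $\mathbb{R}^n$ one has $\langle Ax - Ay, x - y \rangle = (x - y)^\top M (x - y)$, so A is monotone exactly when the symmetric part $(M + M^\top)/2$ is positive semidefinite, and the smallest Lipschitz constant is the spectral norm $\|M\|_2$. The helper name `affine_monotone_and_lipschitz` below is hypothetical.

```python
import numpy as np

def affine_monotone_and_lipschitz(M, tol=1e-12):
    """For A(x) = M x + q on R^n:
    - A is monotone iff the symmetric part (M + M^T)/2 is positive semidefinite,
      because <Ax - Ay, x - y> = (x - y)^T M (x - y);
    - the smallest Lipschitz constant of A is the spectral norm ||M||_2."""
    M = np.asarray(M, dtype=float)
    sym = 0.5 * (M + M.T)
    monotone = np.linalg.eigvalsh(sym).min() >= -tol
    L = np.linalg.norm(M, 2)               # largest singular value
    return bool(monotone), float(L)

print(affine_monotone_and_lipschitz([[2.0, 1.0], [-1.0, 2.0]]))  # monotone, L = sqrt(5)
print(affine_monotone_and_lipschitz([[0.0, 1.0], [1.0, 0.0]]))   # not monotone
```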

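Assumption 1. Let C be a nonempty, convex and closed subset of a Hilbert space $H$ and let $F: C \times C \to \mathbb{R}$ be a bifunction satisfying the following restrictions:

- (A1)

$F(x, x) = 0$, $\forall x \in C$;

- (A2)

$F$ is monotone;

- (A3)

for all $x \in C$, $F(x, \cdot)$ is convex and lower semicontinuous;

- (A4)

for all $x, y, z \in C$, $\limsup_{t \downarrow 0} F(tz + (1 - t)x, y) \le F(x, y)$;

- (A4′)

for every $x, y \in C$ and every sequence $\{x_n\} \subset C$ satisfying $x_n \rightharpoonup x$, $\limsup_{n \to \infty} F(x_n, y) \le F(x, y)$;

- (A4″)

$F$ is jointly weakly upper semicontinuous on $C \times C$ in the sense that, if $x, y \in C$ and $\{x_n\}, \{y_n\} \subset C$ converge weakly to $x$ and $y$, respectively, then $\limsup_{n \to \infty} F(x_n, y_n) \le F(x, y)$ (see, e.g., [20]).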

Obviously, the condition (A4″) implies (A4′), and the condition (A4′) implies (A4) (see, e.g., [21] for more details).

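Lemma 1 ([2,3]). Let $F: C \times C \to \mathbb{R}$ be a bifunction satisfying Assumption 1 (A1)–(A4). For $r > 0$ and $x \in H$, define a mapping $T_r^F: H \to C$ by:

$$T_r^F(x) = \left\{ z \in C : F(z, y) + \frac{1}{r} \langle y - z, z - x \rangle \ge 0, \ \forall y \in C \right\}.$$

Then, it holds that:

- (i)

$T_r^F$ is single-valued;

- (ii)

$T_r^F$ is a firmly nonexpansive mapping, i.e., for all $x, y \in H$, $\|T_r^F x - T_r^F y\|^2 \le \langle T_r^F x - T_r^F y, x - y \rangle$;

- (iii)

$\mathrm{Fix}(T_r^F) = \mathrm{EP}(F)$;

- (iv)

$\mathrm{EP}(F)$ is nonempty, closed and convex.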
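A bifunction with an explicit resolvent makes Lemma 1 concrete. For the hypothetical choice $F(x, y) = |y| - |x|$ on $C = \mathbb{R}$ (which satisfies (A1)–(A4)), the defining inequality of $T_r^F$ is the optimality condition of $\min_y \{|y| + \frac{1}{2r}(y - x)^2\}$, so $T_r^F$ is the soft-thresholding map. The Python sketch below checks the defining inequality on a grid of test points and the firm nonexpansiveness of Lemma 1 (ii) on random pairs; it is a numerical illustration, not a proof, and `resolvent_abs` is a hypothetical name.

```python
import numpy as np

def resolvent_abs(x, r):
    """T_r^F(x) for the hypothetical bifunction F(x, y) = |y| - |x| on C = R.

    The resolvent inequality |y| - |z| + (1/r)(y - z)(z - x) >= 0 for all y says that
    z minimizes |y| + (y - x)^2 / (2r), so T_r^F is soft-thresholding at level r."""
    return np.sign(x) * np.maximum(np.abs(x) - r, 0.0)

r = 0.7
rng = np.random.default_rng(0)
xs = rng.uniform(-3.0, 3.0, size=200)
ys = np.linspace(-5.0, 5.0, 1001)             # grid of test points y in C
for x in xs:
    z = resolvent_abs(x, r)
    # defining inequality of T_r^F, checked on the grid
    assert np.all(np.abs(ys) - abs(z) + (ys - z) * (z - x) / r >= -1e-12)
for x, y in zip(xs[:100], xs[100:]):
    d = resolvent_abs(x, r) - resolvent_abs(y, r)
    assert d * d <= d * (x - y) + 1e-12       # firm nonexpansiveness, Lemma 1 (ii)
print(resolvent_abs(0.0, r))                  # 0 is the unique fixed point, i.e. EP(F) = {0}
```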

Remark 1. Suppose $A: C \to H$ is monotone and Lipschitz continuous and $F$ is a bifunction satisfying Assumption 1 (A1)–(A4). It is easy to check that the bifunction $G(x, y) := F(x, y) + \langle Ax, y - x \rangle$ satisfies Assumption 1. Hence, from Lemma 1, we find that:

- (i)

$T_r^G$ is single-valued;

- (ii)

$T_r^G$ is a firmly nonexpansive mapping;

- (iii)

$\mathrm{Fix}(T_r^G) = \mathrm{GEP}(F, A)$;

- (iv)

$\mathrm{GEP}(F, A)$ is nonempty, closed and convex.

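Lemma 2 ([22]). Let $\{x_n\}$ be a sequence in $H$. If $x_n \rightharpoonup x$ and $\|x_n\| \to \|x\|$, then $x_n \to x$.

Lemma 3 ([23,24]). Assume $\{a_n\}$ is a sequence of nonnegative numbers satisfying the following inequality:

$$a_{n+1} \le (1 - \gamma_n) a_n + \gamma_n \delta_n, \quad n \ge 0,$$

where $\{\gamma_n\}$ and $\{\delta_n\}$ satisfy the conditions:

- (i)

$\{\gamma_n\} \subset (0, 1)$,

- (ii)

$\sum_{n=0}^{\infty} \gamma_n = \infty$,

- (iii)

$\limsup_{n \to \infty} \delta_n \le 0$.

Then, $\lim_{n \to \infty} a_n = 0$.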
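Assuming the inequality of Lemma 3 has the standard form $a_{n+1} \le (1 - \gamma_n) a_n + \gamma_n \delta_n$ with $\gamma_n \in (0, 1)$, $\sum_n \gamma_n = \infty$ and $\limsup_n \delta_n \le 0$ (an assumption made here for illustration), the short Python experiment below runs the recursion with equality, which is the worst case. With $\gamma_n = \delta_n = 1/(n+2)$ the sequence tends to 0, whereas a summable choice of $\gamma_n$ violates the divergence condition and leaves $a_n$ far from 0. The function name `run_recursion` is hypothetical.

```python
def run_recursion(a0, gammas, deltas):
    """Iterate a_{n+1} = (1 - gamma_n) * a_n + gamma_n * delta_n (the recursion with equality)."""
    a = a0
    for g, d in zip(gammas, deltas):
        a = (1.0 - g) * a + g * d
    return a

N = 10_000
# gamma_n = 1/(n+2) lies in (0, 1) and is not summable; delta_n = 1/(n+2) -> 0.
a_div = run_recursion(5.0, [1.0 / (n + 2) for n in range(N)], [1.0 / (n + 2) for n in range(N)])
# Summable gamma_n = 0.5/(n+2)^2 with delta_n = 0: the divergence condition fails.
a_sum = run_recursion(5.0, [0.5 / (n + 2) ** 2 for n in range(N)], [0.0] * N)
print(a_div, a_sum)   # a_div is about 1.4e-3, a_sum stays above 3
```

For the first choice one can check by hand that $(n+1)a_n = a_0 + \sum_{k=2}^{n+1} 1/k$, so $a_n$ decays like $\ln n / n$.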

3. Main Results

In this section, we focus on the strong convergence analysis for the minimal-norm solution of the GEP (1) by using the Tikhonov-type regularization technique. It is well known that the Tikhonov-type regularization technique has been applied effectively to ill-posed convex optimization problems.

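In the sequel, we assume that $F: C \times C \to \mathbb{R}$ is a bifunction satisfying (A1)–(A3) and (A4′), and that $A: C \to H$ is monotone and $L$-Lipschitz continuous. For each $\alpha > 0$, we associate the GEP (1) with the so-called regularized generalized equilibrium problem (RGEP):

$$\text{find } x_\alpha \in C \text{ such that } F(x_\alpha, y) + \langle Ax_\alpha + \alpha x_\alpha, y - x_\alpha \rangle \ge 0 \quad \forall y \in C.$$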

We deduce from the following Lemma 4 that the RGEP has a unique solution $x_\alpha$ for each $\alpha > 0$. On the other hand, by Remark 1 (iv), $\mathrm{GEP}(F, A)$ is nonempty, closed and convex. Hence there exists a unique point $x^\dagger$ which has the smallest norm in the solution set $\mathrm{GEP}(F, A)$, namely $x^\dagger = P_{\mathrm{GEP}(F,A)}(0)$. The relationship between $x_\alpha$ and $x^\dagger$ is described in the following lemma.

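Lemma 4. Let $A: C \to H$ be monotone and $L$-Lipschitz continuous, and let $F$ be a bifunction satisfying Assumption 1 (A1)–(A3) and (A4′). Then it holds that:

- (i)

for each $\alpha > 0$, the RGEP has a unique solution $x_\alpha$;

- (ii)

$\|x_\alpha\| \le \|x^\dagger\|$ for all $\alpha > 0$, and $x_\alpha \to x^\dagger$ as $\alpha \to 0^+$;

- (iii)

$\|x_\alpha - x_\beta\| \le \frac{|\alpha - \beta|}{\alpha} \|x^\dagger\|$ for all $\alpha, \beta > 0$.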

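Proof. (i) Since $A$ is monotone and Lipschitz continuous, $A + \alpha I$ is also monotone and Lipschitz continuous. From Remark 1 (iv), we find that the solution set of the RGEP is nonempty. For $\alpha > 0$, if $x_\alpha$ and $\bar{x}_\alpha$ are two solutions of the RGEP, then one has:

$$F(x_\alpha, y) + \langle Ax_\alpha + \alpha x_\alpha, y - x_\alpha \rangle \ge 0, \quad \forall y \in C, \qquad (6)$$

and

$$F(\bar{x}_\alpha, y) + \langle A\bar{x}_\alpha + \alpha \bar{x}_\alpha, y - \bar{x}_\alpha \rangle \ge 0, \quad \forall y \in C. \qquad (7)$$

Taking $y = \bar{x}_\alpha$ in (6) and $y = x_\alpha$ in (7) and adding them up, we have:

$$F(x_\alpha, \bar{x}_\alpha) + F(\bar{x}_\alpha, x_\alpha) - \langle Ax_\alpha - A\bar{x}_\alpha, x_\alpha - \bar{x}_\alpha \rangle - \alpha \|x_\alpha - \bar{x}_\alpha\|^2 \ge 0. \qquad (8)$$

In view of (8) and the monotonicity of $F$ and $A$, we obtain:

$$\alpha \|x_\alpha - \bar{x}_\alpha\|^2 \le 0,$$

which implies $x_\alpha = \bar{x}_\alpha$. This completes the proof of (i).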

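(ii) Taking any $p \in \mathrm{GEP}(F, A)$, we have $F(p, y) + \langle Ap, y - p \rangle \ge 0$ for all $y \in C$, which, with $y = x_\alpha$, implies that

$$F(p, x_\alpha) + \langle Ap, x_\alpha - p \rangle \ge 0. \qquad (10)$$

Since $x_\alpha$ is the solution of the RGEP, we then find:

$$F(x_\alpha, y) + \langle Ax_\alpha + \alpha x_\alpha, y - x_\alpha \rangle \ge 0, \quad \forall y \in C. \qquad (11)$$

Substituting $y = p$ into (11), we obtain:

$$F(x_\alpha, p) + \langle Ax_\alpha + \alpha x_\alpha, p - x_\alpha \rangle \ge 0. \qquad (12)$$

Summing up inequalities (10) and (12), we get:

$$F(p, x_\alpha) + F(x_\alpha, p) - \langle Ap - Ax_\alpha, p - x_\alpha \rangle + \alpha \langle x_\alpha, p - x_\alpha \rangle \ge 0. \qquad (13)$$

Noticing (13) and using the monotonicity of $F$ and $A$, we obtain:

$$\langle x_\alpha, p - x_\alpha \rangle \ge 0,$$

which implies $\|x_\alpha\|^2 \le \langle x_\alpha, p \rangle \le \|x_\alpha\| \|p\|$. Thus we have

$$\|x_\alpha\| \le \|p\|, \quad \forall p \in \mathrm{GEP}(F, A). \qquad (14)$$

In particular, we also obtain

$$\|x_\alpha\| \le \|x^\dagger\|, \quad \forall \alpha > 0. \qquad (15)$$

Therefore, we deduce that $\{x_\alpha\}$ is bounded.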

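Since C is closed and convex, C is weakly closed. Hence, since $\{x_\alpha\}$ is bounded, there are a subsequence $\{x_{\alpha_k}\}$ of $\{x_\alpha\}$ with $\alpha_k \to 0^+$ and some point $x^* \in C$ such that

$$x_{\alpha_k} \rightharpoonup x^* \quad \text{as } k \to \infty.$$

In view of the monotonicity of $A$, we deduce, for all $y \in C$, that:

$$F(x_{\alpha_k}, y) + \langle Ay + \alpha_k x_{\alpha_k}, y - x_{\alpha_k} \rangle \ge F(x_{\alpha_k}, y) + \langle Ax_{\alpha_k} + \alpha_k x_{\alpha_k}, y - x_{\alpha_k} \rangle \ge 0.$$

Since $\alpha_k \to 0$ and $\{x_{\alpha_k}\}$ is bounded by (14), we infer that $\alpha_k \langle x_{\alpha_k}, y - x_{\alpha_k} \rangle \to 0$, while $x_{\alpha_k} \rightharpoonup x^*$ gives $\langle Ay, y - x_{\alpha_k} \rangle \to \langle Ay, y - x^* \rangle$. Letting $k \to \infty$ and noticing (A4′), we obtain:

$$F(x^*, y) + \langle Ay, y - x^* \rangle \ge 0, \quad \forall y \in C.$$

For $t \in (0, 1]$ and $y \in C$, substituting $y_t = ty + (1 - t)x^* \in C$ into the above inequality and using $y_t - x^* = t(y - x^*)$, we have:

$$0 \le F(x^*, y_t) + t \langle Ay_t, y - x^* \rangle \le t F(x^*, y) + t \langle Ay_t, y - x^* \rangle,$$

where the last inequality follows from (A1) and (A3). Dividing by $t > 0$ gives $F(x^*, y) + \langle Ay_t, y - x^* \rangle \ge 0$. Since $A$ is $L$-Lipschitz continuous on C, by taking $t \to 0^+$, we have:

$$F(x^*, y) + \langle Ax^*, y - x^* \rangle \ge 0, \quad \forall y \in C,$$

which implies

$$x^* \in \mathrm{GEP}(F, A).$$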

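From (15) and the weak lower semicontinuity of the norm, we obtain:

$$\|x^*\| \le \liminf_{k \to \infty} \|x_{\alpha_k}\| \le \|x^\dagger\|.$$

Further, since $x^\dagger$ is the unique point of $\mathrm{GEP}(F, A)$ with the smallest norm, we derive $x^* = x^\dagger$. This means $x_{\alpha_k} \rightharpoonup x^\dagger$ as $k \to \infty$. Since every weakly convergent subsequence has the same limit $x^\dagger$, the whole family $\{x_\alpha\}$ converges weakly to $x^\dagger$ as $\alpha \to 0^+$.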

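Next we show that $x_{\alpha_k} \to x^\dagger$. Indeed, noticing the weak lower semicontinuity of the norm and (15), we obtain:

$$\|x^\dagger\| \le \liminf_{k \to \infty} \|x_{\alpha_k}\| \le \limsup_{k \to \infty} \|x_{\alpha_k}\| \le \|x^\dagger\|,$$

which means

$$\lim_{k \to \infty} \|x_{\alpha_k}\| = \|x^\dagger\|.$$

In view of Lemma 2 and the fact that $x_{\alpha_k} \rightharpoonup x^\dagger$, we derive that $x_{\alpha_k} \to x^\dagger$. Following the same lines as above, we obtain that the whole family $\{x_\alpha\}$ converges strongly to $x^\dagger$ as $\alpha \to 0^+$.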

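(iii) Assume that $x_\alpha$ and $x_\beta$ are the solutions of the RGEP with parameters $\alpha > 0$ and $\beta > 0$, respectively. Then we have

$$F(x_\alpha, y) + \langle Ax_\alpha + \alpha x_\alpha, y - x_\alpha \rangle \ge 0, \quad \forall y \in C,$$

and

$$F(x_\beta, y) + \langle Ax_\beta + \beta x_\beta, y - x_\beta \rangle \ge 0, \quad \forall y \in C.$$

Taking $y = x_\beta$ in the first inequality and $y = x_\alpha$ in the second one, adding them up and using the monotonicity of $F$ and $A$, one obtains:

$$\langle \alpha x_\alpha - \beta x_\beta, x_\beta - x_\alpha \rangle \ge 0.$$

Writing $\alpha x_\alpha - \beta x_\beta = \alpha(x_\alpha - x_\beta) + (\alpha - \beta) x_\beta$ and noticing Lemma 4 (ii), we find:

$$\alpha \|x_\alpha - x_\beta\|^2 \le (\alpha - \beta) \langle x_\beta, x_\beta - x_\alpha \rangle \le |\alpha - \beta| \, \|x^\dagger\| \, \|x_\alpha - x_\beta\|,$$

that is, $\|x_\alpha - x_\beta\| \le \frac{|\alpha - \beta|}{\alpha} \|x^\dagger\|$. This completes the proof. □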
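A small worked instance makes Lemma 4 concrete. In the hypothetical case $F \equiv 0$, $C = \mathbb{R}^2$ and $A(x) = Mx + q$ with $M = \mathrm{diag}(1, 0)$ and $q = (-1, 0)$, the regularized problem reduces to the linear system $(M + \alpha I)x = -q$, so $x_\alpha = (1/(1+\alpha), 0)$, while the solution set is the line $\{x_1 = 1\}$ with minimal-norm point $x^\dagger = (1, 0)$. The Python sketch below checks numerically that $\|x_\alpha\| \le \|x^\dagger\|$, that $x_\alpha \to x^\dagger$, and the estimate $\|x_\alpha - x_\beta\| \le \frac{|\alpha - \beta|}{\alpha}\|x^\dagger\|$, which follows from the monotonicity argument used in part (iii).

```python
import numpy as np

# Hypothetical instance with F = 0, C = R^2 and A(x) = M x + q, M singular and monotone.
# Then GEP(F, A) = {x : A(x) = 0} = {x : x1 = 1}, whose minimal-norm point is (1, 0).
M = np.diag([1.0, 0.0])
q = np.array([-1.0, 0.0])
x_dagger = np.array([1.0, 0.0])

def x_alpha(alpha):
    """Solution of the regularized problem; with F = 0 and C = R^2 it is the
    unique solution of the linear system (M + alpha I) x = -q."""
    return np.linalg.solve(M + alpha * np.eye(2), -q)

alphas = [1.0, 0.1, 0.01, 0.001]
for a in alphas:
    xa = x_alpha(a)
    print(a, xa, np.linalg.norm(xa - x_dagger))   # distance to x_dagger shrinks with alpha
```

Here the distance equals $\alpha/(1+\alpha)$, so the convergence in Lemma 4 (ii) is linear in $\alpha$ for this instance.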

In the following, by combining Tseng’s extragradient method with the regularization method, we propose a new numerical algorithm for solving the GEP. Assume that the following two conditions are satisfied:

- (C1)

and ;

- (C2)

.

An example for the sequence

satisfying conditions (C1) and (C2) is

with

. We now introduce the following Algorithm 3:

| Algorithm 3: Tseng’s extragradient method with regularization (TEGMR) |

Initialization: Set and let be arbitrary. Step 1. Given , compute

where is chosen to be the largest satisfying the following: Set and return to Step 1. |
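To illustrate how Tseng’s step and Tikhonov regularization can interact, the Python sketch below treats the VIP special case $F \equiv 0$ (so the resolvent reduces to the projection $P_C$) and applies Tseng’s step to the regularized operator $A + \alpha_n I$ with a fixed step size. This is an assumed simplification written for illustration, not a transcription of Algorithm 3; the function name `tseng_regularized`, the test operator and all parameter values are hypothetical.

```python
import numpy as np

def tseng_regularized(A, proj, x0, lam, alphas):
    """Hypothetical sketch (VIP case F = 0): Tseng's step applied to the Tikhonov-regularized
    operator A_n(x) = A(x) + alpha_n * x with a fixed step lam < 1 / (L + max alpha_n)."""
    x = np.asarray(x0, dtype=float)
    for a in alphas:
        Ax = A(x) + a * x                    # regularized operator at x_n
        y = proj(x - lam * Ax)               # projection step
        Ay = A(y) + a * y                    # regularized operator at y_n
        x = y - lam * (Ay - Ax)              # Tseng's correction step
    return x

# Hypothetical problem: on C = [-5, 5]^2 the solutions of the VIP for A(x) = (x1 - 1, 0)
# form the segment {x1 = 1}; the minimal-norm one is (1, 0).  Here L = 1.
A = lambda x: np.array([x[0] - 1.0, 0.0])
proj = lambda x: np.clip(x, -5.0, 5.0)
x0 = [3.0, 4.0]
alphas = [1.0 / np.sqrt(n + 1) for n in range(5000)]   # alpha_n -> 0 slowly, sum = infinity
x_reg = tseng_regularized(A, proj, x0, lam=0.4, alphas=alphas)
x_plain = tseng_regularized(A, proj, x0, lam=0.4, alphas=[0.0] * 200)
print(x_reg, x_plain)   # x_reg is near (1, 0); x_plain stops at another solution near (1, 4)
```

With $\alpha_n \equiv 0$ the loop is plain Tseng’s method, and the solution it reaches depends on the starting point; the vanishing regularization instead steers the iterates toward the minimal-norm solution, which is the behavior Theorem 1 establishes for Algorithm 3.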

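Lemma 5 ([5]). The Armijo-like search rule (17) is well defined and $\min\{\gamma, \frac{\mu l}{L}\} \le \lambda_n \le \gamma$.

Theorem 1. Let C be a nonempty, convex and closed subset of a real Hilbert space $H$, let $F: C \times C \to \mathbb{R}$ be a bifunction satisfying (A1)–(A3) and (A4′), and let $A: C \to H$ be monotone and $L$-Lipschitz continuous. Then, under conditions (C1) and (C2), the sequence $\{x_n\}$ constructed by Algorithm 3 converges in norm to the minimal-norm solution $x^\dagger$ of the GEP (1).

Proof. By (C1) and Lemma 4 (ii), we obtain $x_{\alpha_n} \to x^\dagger$ as $n \to \infty$. Therefore, it is sufficient to prove that:

$$\lim_{n \to \infty} \|x_n - x_{\alpha_n}\| = 0.$$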

Since $x_{\alpha_n}$ is a solution of the RGEP for all $n$, we obtain that:

Using Lemma 1 (ii), we derive:

Substituting (

20) into (

18) and noticing the monotone property of

and (

17), we derive:

The last term in (

21) is estimated as follows:

Substituting (

22) into (

21), one finds:

Since

,

and

(by Lemma 5), there exists

such that

Thus, we get from (

23) that

For each

, from the Cauchy–Schwarz inequality and Lemma 4 (iii), we infer

Therefore, it follows from (

24) and (

25) that:

We deduce from (C1), (C2) and Lemma 3 that $\lim_{n \to \infty} \|x_n - x_{\alpha_n}\| = 0$, which means $x_n \to x^\dagger$. This completes the proof. □

4. Application to Split Minimization Problems

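Let C be a nonempty closed convex subset of $H$, and let $\phi: H \to \mathbb{R}$ be a convex and continuously differentiable function. Consider the constrained convex minimization problem:

$$\min_{x \in C} \phi(x). \qquad (26)$$

Since $\phi$ is differentiable, its convexity is equivalent to the monotonicity of its gradient $\psi = \nabla \phi$. A point $x^* \in C$ is a solution of the minimization problem (26) if and only if it is a solution of the following variational inequality:

$$\langle \psi(x^*), y - x^* \rangle \ge 0, \quad \forall y \in C.$$

Setting $F(x, y) = 0$ for all $x, y \in C$ and $A = \psi$, it is not difficult to check that $F$ satisfies (A1)–(A3) and (A4′) and that the solution set of the GEP (1) coincides with the solution set of (26). From Theorem 1, we have the following result.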

Theorem 2. Let $\phi: H \to \mathbb{R}$ be a convex and continuously differentiable function whose gradient $\psi$ is $L$-Lipschitz continuous. Suppose that the optimization problem (26) is consistent, i.e., its solution set is nonempty. Then, under conditions (C1) and (C2), the sequence $\{x_n\}$ constructed by Algorithm 4 converges to the unique minimal-norm solution of the minimization problem (26).

| Algorithm 4: Tseng’s extragradient method with regularization for minimization problems |

Initialization: Set and let be arbitrary. Step 1. Given , compute

where is chosen to be the largest satisfying the following: Set and return to Step 1. |
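For the minimization setting, the Python sketch below applies Tseng’s step to $\psi + \alpha_n I$, the gradient of $\phi + \frac{\alpha_n}{2}\|\cdot\|^2$, for the hypothetical underdetermined least-squares objective $\phi(x) = \frac{1}{2}\|Bx - b\|^2$ on $C = \mathbb{R}^2$, whose gradient is $\psi(x) = B^\top(Bx - b)$. It uses a fixed step instead of a line search and is a sketch under these assumptions, not the authors’ Algorithm 4; the result is compared with the minimal-norm minimizer given by `numpy.linalg.pinv`.

```python
import numpy as np

# Hypothetical instance: phi(x) = 0.5 * ||B x - b||^2 on C = R^2 (so P_C is the identity).
# Every point with x1 + x2 = 2 is a minimizer; the minimal-norm one is (1, 1).
B = np.array([[1.0, 1.0]])
b = np.array([2.0])

def psi(x):
    """Gradient of phi; it is monotone and Lipschitz with L = ||B^T B||_2 = 2."""
    return B.T @ (B @ x - b)

def regularized_tseng_min(x0, lam, alphas):
    """Hypothetical sketch: Tseng's step on psi + alpha_n * I, i.e. on the gradient of
    phi + (alpha_n / 2) * ||x||^2, with a fixed step lam < 1 / (L + max alpha_n)."""
    x = np.asarray(x0, dtype=float)
    for a in alphas:
        gx = psi(x) + a * x
        y = x - lam * gx                     # projection onto C = R^2 is the identity
        gy = psi(y) + a * y
        x = y - lam * (gy - gx)              # Tseng's correction step
    return x

alphas = [1.0 / np.sqrt(n + 1) for n in range(5000)]
x = regularized_tseng_min([5.0, -1.0], lam=0.3, alphas=alphas)
x_min_norm = np.linalg.pinv(B) @ b           # minimal-norm minimizer, equal to (1, 1)
print(x, x_min_norm)
```

Without the regularization term, the component of the starting point along the null space of $B$ would never change, so the iteration would stop at a minimizer other than $(1, 1)$.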

In this subsection, we provide some numerical examples to illustrate the behavior and performance of our Algorithm 3 (TEGMR) and to compare it with the DPM (4) of Hieu et al. [18], Algorithm 1 (TEGM of Thong and Hieu [5]) and Algorithm 2 (TEGMV of Thong and Hieu [5]).

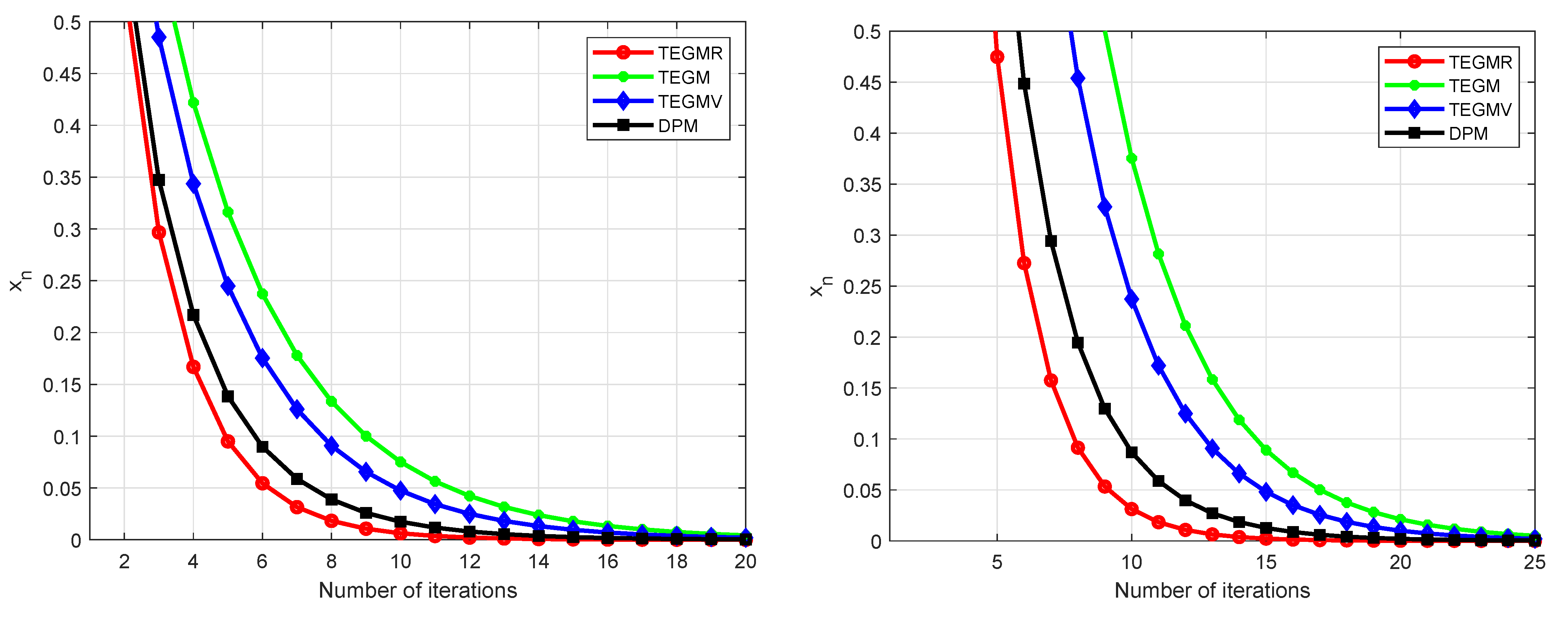

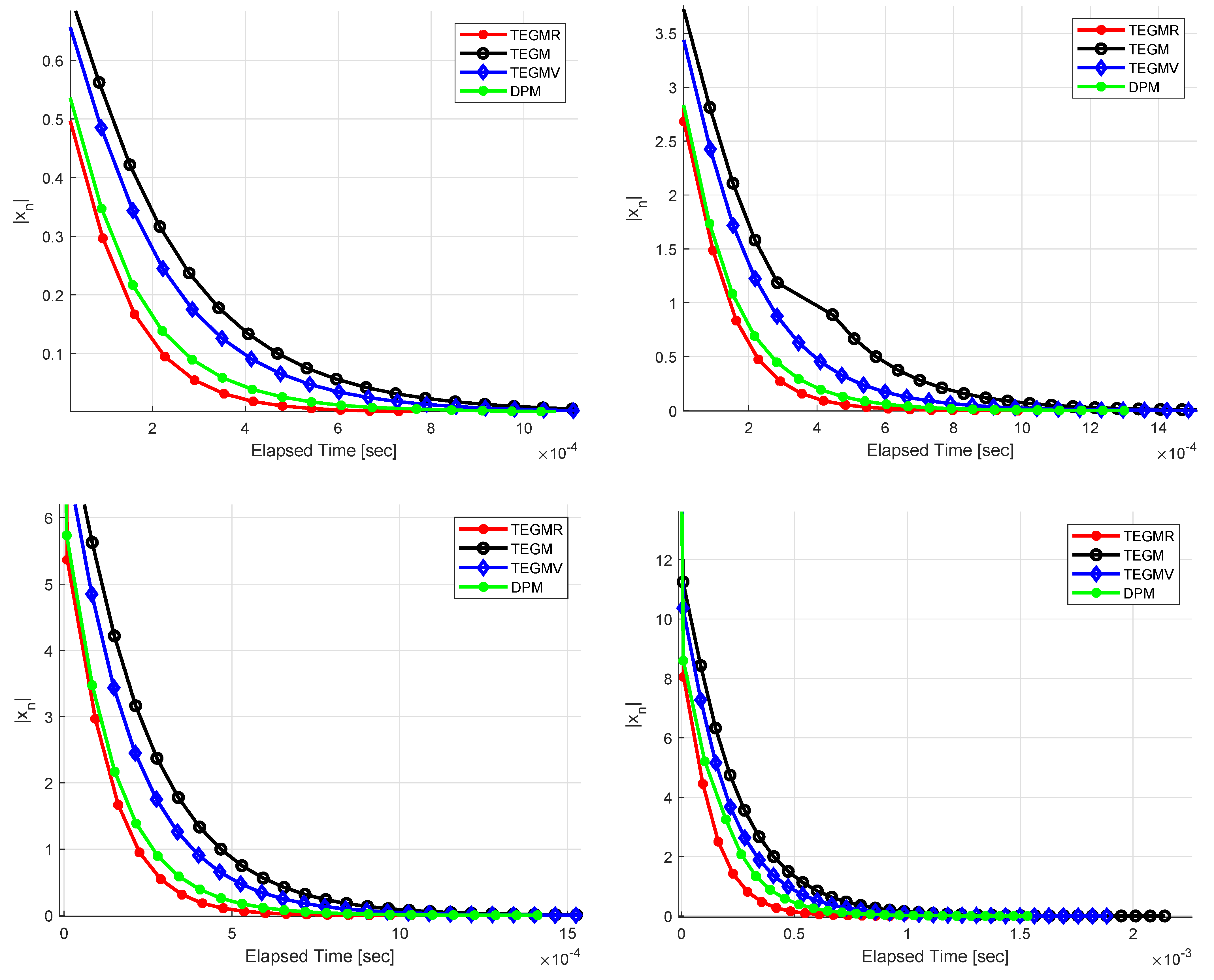

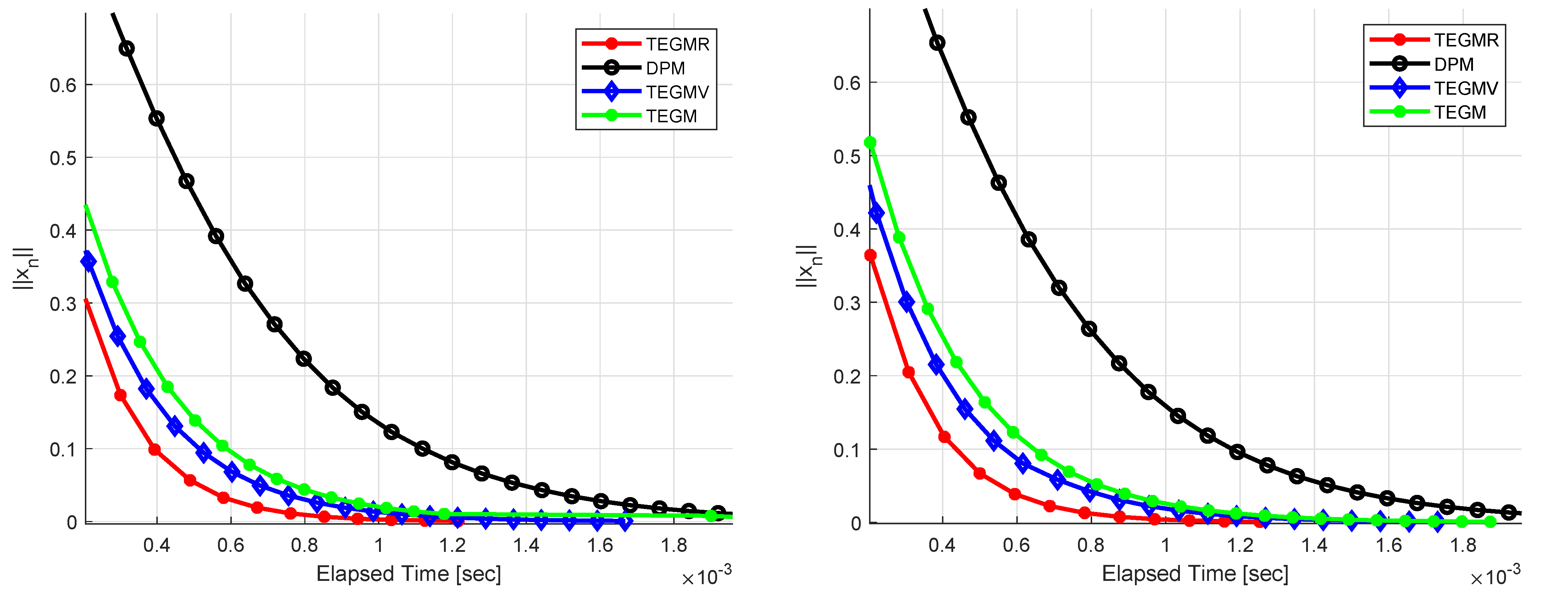

Example 1. Let $H = \mathbb{R}$ be the set of real numbers. Define the bifunction for all . Let be given by , be given by for all . We get that A is monotone and 1-Lipschitz continuous. It is easy to check that It is also not difficult to check . Let us choose , , and . We test Algorithm 3 for different values of ; see Figure 1, Figure 2 and Figure 3.

Example 2. Let be given by for all , be given by , be given by for all . The feasible set C is given by The maximum number of iterations, 300, is used as the stopping criterion, and the initial values are generated randomly by the MATLAB function rand. Let us choose , , and . Figure 4 describes the numerical results for Example 2 in and and , respectively.

According to all graphical representations in Figure 1, Figure 2, Figure 3 and Figure 4, one finds that Algorithm 3 performs better than the DPM (4) and Algorithms 1 and 2 in terms of the number of iterations and the CPU time taken for computation.