Abstract

We consider the usual random allocation model of distinguishable particles into distinct cells in the case when there is an even number of particles in each cell. For inhomogeneous allocations, we study the numbers of particles in the first K cells. We prove that, under some conditions, this K-dimensional random vector with centralised and normalised coordinates converges in distribution to the K-dimensional standard Gaussian law. We obtain both local and integral versions of this limit theorem. The above limit theorem implies a further limit theorem, which leads to a χ²-test. The parity bit method does not detect even numbers of errors in binary files; therefore, our model can be applied to describe the distribution of errors in those files. For the homogeneous allocation model, we obtain a limit theorem when both the number of particles and the number of cells tend to infinity. In that case, we prove convergence to the finite dimensional distributions of the Brownian bridge. This result also implies a χ²-test. To handle the mathematical problem, we embed our model into the framework of Kolchin’s generalized allocation scheme.

Keywords:

random allocation; generalized allocation scheme; Poisson distribution; Gaussian distribution; limit theorem; local limit theorem; Brownian bridge; χ²-test

MSC:

60C05; 60F05; 62G10

1. Introduction and Notation

In this paper, we study the usual random allocation model.

The random variables $\eta_1, \dots, \eta_N$ represent a non-homogeneous allocation scheme of n distinguishable particles into N distinct cells if their joint distribution has the form

$$\mathbf{P}\{\eta_1 = k_1, \dots, \eta_N = k_N\} = \frac{n!}{k_1! \cdots k_N!}\, p_1^{k_1} \cdots p_N^{k_N}, \qquad (1)$$

where $k_1, \dots, k_N$ are non-negative integers with $k_1 + \dots + k_N = n$, $p_1 + \dots + p_N = 1$, and $p_i > 0$ for $i = 1, \dots, N$. Here, $p_i$ is the probability that a particle is inserted into the ith cell, and the random variable $\eta_i$ is the number of particles in the ith cell after allocating n particles into the cells. When $p_1 = \dots = p_N = 1/N$, scheme (1) is called a homogeneous allocation scheme of n distinguishable particles into N distinct cells. In [1], homogeneous and non-homogeneous allocation schemes of n distinguishable particles into N distinct cells were considered.

Our goal is to study allocations with an even number of particles in each cell. Thus, let be the set of even non-negative integers, i.e., ; let be the allocation scheme of distinguishable particles into N different cells; and let be the allocation scheme of distinguishable particles into N different cells with an even number of particles in each cell. Then, has the distribution

where are non-negative integer numbers, such that .

To describe the results of the paper, we need the following notation. $\xrightarrow{d}$ denotes convergence in distribution. $\zeta_1, \zeta_2, \dots$ are independent, identically distributed Gaussian random variables with mean 0 and variance 1.

In [2], it was proved that

if K is a fixed number and such that , for .

The first aim of this paper is to obtain an analogue of the above result for an allocation scheme of distinguishable particles into distinct cells having an even number of particles in each cell. We shall prove that, under some conditions,

as , see Theorems 2 and 3.

It is well known that the multinomial distribution (1) is asymptotically normal when N is fixed and $n \to \infty$. This result serves as a basis for the proof that the limit of the empirical process is the Brownian bridge, see [3]. In this paper, we study this problem for allocations having an even number of particles in each cell. To this end, we introduce the following two random processes:

and

Observe that and , .

The Gaussian random process $B(t)$, $t \in [0, 1]$, is called a Brownian bridge if its mean value function is 0 and its covariance function is $\mathbf{E}\, B(s) B(t) = \min(s, t) - st$, $s, t \in [0, 1]$.

For the homogeneous allocation scheme, we shall prove that the finite dimensional distributions of converge to the finite dimensional distributions of , if , such that ; see Theorem 4.

Both Theorems 3 and 4 imply χ²-tests.

Our mathematical approach is based on the well-known notion of the generalized allocation scheme introduced by V. F. Kolchin in [4]. We recall its definition. The random variables $\eta_1, \dots, \eta_N$ obey the generalized allocation scheme of n particles into N cells if their joint distribution has the form

$$\mathbf{P}\{\eta_1 = k_1, \dots, \eta_N = k_N\} = \mathbf{P}\{\xi_1 = k_1, \dots, \xi_N = k_N \mid \xi_1 + \dots + \xi_N = n\} \qquad (5)$$

for non-negative integer numbers $k_1, \dots, k_N$ such that $k_1 + \dots + k_N = n$, and for some independent non-negative integer valued random variables $\xi_1, \dots, \xi_N$.

The simplest particular case of the generalized allocation scheme is the usual allocation of particles into cells. Indeed, let $\xi_1, \dots, \xi_N$ be independent Poisson random variables with parameters $\lambda_i = \lambda p_i$, $i = 1, \dots, N$, for some $\lambda > 0$ and $p_1 + \dots + p_N = 1$. Then the generalized allocation scheme (5) is the usual allocation scheme (1) of n distinguishable particles into N different cells. In other words, a generalized allocation scheme defined by independent Poisson random variables with parameters $\lambda_1, \dots, \lambda_N$ is the usual allocation scheme of n distinguishable particles into N different cells with $p_i = \lambda_i / (\lambda_1 + \dots + \lambda_N)$, $i = 1, \dots, N$. Thus, in a general sense, we can consider the value $\eta_i$ in Equation (5) as the number of particles in the ith cell.
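This paragraph relies on a fact: independent Poisson variables, conditioned on their sum, follow the multinomial law (1) with $p_i = \lambda_i/(\lambda_1+\dots+\lambda_N)$. That fact can be checked numerically. The following Python sketch is only an illustration. The helper names and parameter values are our own hypothetical choices and are not taken from the paper.

```python
import math
from itertools import product


def multinomial_pmf(k, p):
    """Probability (1): n!/(k_1!...k_N!) * p_1^k_1 ... p_N^k_N with n = sum(k)."""
    coef = math.factorial(sum(k))
    for ki in k:
        coef //= math.factorial(ki)  # each intermediate quotient is an integer
    prob = float(coef)
    for ki, pi in zip(k, p):
        prob *= pi ** ki
    return prob


def poisson_pmf(k, lam):
    return math.exp(-lam) * lam ** k / math.factorial(k)


def conditioned_poisson_pmf(k, lams):
    """P{xi_1 = k_1, ..., xi_N = k_N | xi_1 + ... + xi_N = sum(k)} for independent Poisson xi_i."""
    joint = 1.0
    for ki, li in zip(k, lams):
        joint *= poisson_pmf(ki, li)
    # A sum of independent Poisson variables is Poisson with the summed parameter.
    return joint / poisson_pmf(sum(k), sum(lams))


lams = [0.5, 1.0, 2.5]               # arbitrary Poisson parameters
p = [l / sum(lams) for l in lams]    # p_i = lambda_i / (lambda_1 + ... + lambda_N)
n = 6
max_diff = max(abs(conditioned_poisson_pmf(k, lams) - multinomial_pmf(k, p))
               for k in product(range(n + 1), repeat=len(lams)) if sum(k) == n)
print(max_diff)
```

The value printed is a floating-point rounding error. The parameter $\lambda$ in $\lambda_i = \lambda p_i$ cancels in the conditioning, which is why any $\lambda > 0$ may be used.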

In the original paper [4], Kolchin obtained the basic properties of the generalized allocation scheme; moreover, he proved limit theorems for the number of cells containing precisely r particles. In Equation (5), the distributions of the random variables $\xi_1, \dots, \xi_N$ can be arbitrary. By fixing these distributions in various ways, several models of discrete probability theory, such as random forests, random permutations, random allocations, and urn schemes, are obtained as particular cases of the generalized allocation scheme, see [5].

In our paper, we shall not use known limit theorems for the generalized allocation scheme; we shall only use representation (7), which is a consequence of the generalized allocation scheme. To this end, we show that, when there is an even number of particles in each cell, the usual allocation can be described by a generalized allocation scheme in the following way. Let $\cosh x = (e^x + e^{-x})/2$, $x \in \mathbb{R}$, be the hyperbolic cosine function.

Theorem 1.

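Let be a generalized allocation scheme of particles into N cells defined by independent Poisson random variables with parameters $\lambda_1, \dots, \lambda_N$. Then, defined by (2) can be represented as a generalized allocation scheme of particles into N cells defined by independent random variables $\xi_1, \dots, \xi_N$ with distributions

$$\mathbf{P}\{\xi_i = k\} = \frac{\lambda_i^{k}}{k!\,\cosh \lambda_i}, \qquad k \in \{0, 2, 4, \dots\}, \quad i = 1, \dots, N.$$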

That is

for non-negative integer numbers , such that .

For identically distributed random variables, Theorem 1 was proved in [6]. In the general case, Theorem 1 can be proved by elementary calculations similar to those in [6].
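The elementary calculation behind Theorem 1 can be checked by brute force on a small instance. Take the usual allocation (1), condition it on every cell being even, and compare the result with the generalized allocation scheme built from variables with distribution $\lambda_i^k/(k!\cosh\lambda_i)$, $k$ even, where $p_i = \lambda_i/(\lambda_1+\dots+\lambda_N)$. The Python sketch below normalizes both sides by direct summation. Its helper names and parameter values are hypothetical and used only for illustration.

```python
import math
from itertools import product


def even_compositions(total, cells):
    """All tuples of even non-negative integers of length `cells` summing to `total`."""
    for k in product(range(0, total + 1, 2), repeat=cells):
        if sum(k) == total:
            yield k


def multinomial_pmf(k, p):
    """Usual allocation probability (1)."""
    coef = math.factorial(sum(k))
    for ki in k:
        coef //= math.factorial(ki)
    prob = float(coef)
    for ki, pi in zip(k, p):
        prob *= pi ** ki
    return prob


def even_allocation_pmf(k, p):
    """Usual allocation conditioned on every cell holding an even number of particles."""
    norm = sum(multinomial_pmf(j, p) for j in even_compositions(sum(k), len(p)))
    return multinomial_pmf(k, p) / norm


def cosh_poisson_pmf(k, lam):
    """lam^k / (k! cosh lam) for even k, and 0 for odd k."""
    if k % 2:
        return 0.0
    return lam ** k / (math.factorial(k) * math.cosh(lam))


def gas_pmf(k, lams):
    """Generalized allocation scheme: P{xi = k | xi_1 + ... + xi_N = sum(k)}."""
    def joint(j):
        out = 1.0
        for ji, li in zip(j, lams):
            out *= cosh_poisson_pmf(ji, li)
        return out
    # The xi_i only take even values, so P{sum = total} is a sum over even compositions.
    norm = sum(joint(j) for j in even_compositions(sum(k), len(lams)))
    return joint(k) / norm


lams = [0.7, 1.3, 3.0]                # arbitrary parameters lambda_i
p = [l / sum(lams) for l in lams]     # p_i = lambda_i / (lambda_1 + ... + lambda_N)
total = 8                             # an even total number of particles
max_diff = max(abs(even_allocation_pmf(k, p) - gas_pmf(k, lams))
               for k in even_compositions(total, len(lams)))
print(max_diff)
```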

From Theorem 1 and (5), it follows that

where , , and the independent random variables have the distributions

Equation (7) plays a crucial role in our paper. The proof of Theorem 2 will be based on approximations of the fraction and of the multipliers in (7).

The structure of our paper is as follows. In Section 2, further notation is given and the main results are presented. Theorem 2 is the integral version of the central limit theorem for the allocation scheme in which each cell contains an even number of particles. Theorem 2 is formulated in terms of the generalized allocation scheme, but the underlying distribution is the Poisson distribution, so the result concerns the usual allocation scheme. The general setting is nevertheless important, because the proof relies on the general framework given in Theorem 1. Corollary 1 is the local version of Theorem 2. Theorem 3 is a variant of Theorem 2 that is more convenient for practical applications. Then, we turn to the homogeneous case and present Theorem 4, which states the convergence of the finite dimensional distributions to those of the Brownian bridge. In Section 3, two χ²-tests are proposed. The first one tests the allocation probabilities when the sample comes from the random allocation with an even number of particles in each cell; we propose applying it to check binary files with parity bits. The second χ²-test can be applied when we observe only the numbers of particles in some unions of the cells. Examples 3 and 4 offer numerical evidence for our limit theorems. In Section 4, some auxiliary results are given. In Section 5, the proofs of the main results are presented. For the proofs, we use both known approximation theorems and direct calculations.

We shall apply the following usual notation. $\mathbb{R}$ is the set of real numbers, $\mathbb{N}$ is the set of positive integers, $\mathbf{E}$ stands for the expectation, and $\mathbf{D}$ denotes the variance. $o(1)$ is a quantity converging to 0, and $a_n = O(b_n)$ if $a_n / b_n$ is bounded as $n \to \infty$.

2. Main Results

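First, we study the non-homogeneous allocation scheme. Consider the scheme (6) and representation (7). Consider the generic random variable $\xi(\lambda)$ with parameter $\lambda > 0$, having the distribution

$$\mathbf{P}\{\xi(\lambda) = k\} = \frac{\lambda^{k}}{k!\,\cosh \lambda}, \qquad k \in \{0, 2, 4, \dots\}.$$

The expectation and the variance of $\xi(\lambda)$ (see later in Equations (21) and (25)) are

$$\lambda \tanh \lambda \qquad \text{and} \qquad \lambda \tanh \lambda + \lambda^2 \left(1 - \tanh^2 \lambda\right),$$

respectively, where $\tanh x = \sinh x / \cosh x$ is the hyperbolic tangent function. Therefore, the expectation and the variance of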

are

In our main theorem, we will use the following condition: for some ,

as .

Our first main results in this paper are the following theorems:

Theorem 2.

During the proof of Theorem 2, we shall obtain the following local limit theorem.

Corollary 1.

Under the conditions of Theorem 2, if , then, we have

uniformly for the values of , such that , , for any fixed numbers , .

In the following theorem, will denote a discrete probability distribution depending on n and N.

Theorem 3.

Let be the usual allocation scheme of distinguishable particles into N different cells with an even number of particles in each cell. Assume that the allocation probabilities are , which depend on n and N. Suppose that, for some ,

as . Then, we have

as .

Theorem 3 can be obtained from Theorem 2 if we use , .

Now, we turn to the homogeneous allocation scheme; that is, we assume that all the parameters $p_i$ in (1) are equal. If there is an even number of particles in each cell, then this allocation is described by Equation (6), and, because of homogeneity, the random variables in (6) are independent and identically distributed with distribution

where . From (6), it follows that

where are non-negative integer numbers, such that . We shall need this formula in the proof of our Theorem 4.

Theorem 4.

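The idea of the proof for the particular case of two-dimensional distributions is the following. Let $0 < t_1 < t_2 < 1$, and put $\Delta_1 = t_1$, $\Delta_2 = t_2 - t_1$, $\Delta_3 = 1 - t_2$. The vector of two increments of the Brownian bridge

$$\left(B(t_1),\; B(t_2) - B(t_1)\right)$$

has the covariance matrix

$$\Sigma = \begin{pmatrix} \Delta_1 (1 - \Delta_1) & -\Delta_1 \Delta_2 \\ -\Delta_1 \Delta_2 & \Delta_2 (1 - \Delta_2) \end{pmatrix}.$$

The determinant of $\Sigma$ is

$$\det \Sigma = \Delta_1 \Delta_2 (1 - \Delta_1 - \Delta_2) = \Delta_1 \Delta_2 \Delta_3,$$

and its inverse is

$$\Sigma^{-1} = \begin{pmatrix} \dfrac{1}{\Delta_1} + \dfrac{1}{\Delta_3} & \dfrac{1}{\Delta_3} \\[2mm] \dfrac{1}{\Delta_3} & \dfrac{1}{\Delta_2} + \dfrac{1}{\Delta_3} \end{pmatrix}.$$

During the proof of Theorem 4, we shall show that the distribution of the vector of the corresponding increments of the normalized allocation process converges to the two-dimensional Gaussian distribution with mean 0 and covariance matrix $\Sigma$.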

3. Applications of the Main Results to χ²-Tests and Numerical Examples

Using our main results, we can construct some analogues of the well-known χ²-test.

The first one is a consequence of Theorem 3, so we assume the conditions of that theorem.

Theorem 5.

Let be an allocation scheme of distinguishable particles into N different cells with an even number of particles in each cell. Assume that the allocation probabilities are which depend on n and N. Suppose that conditions (14) are valid. Then, we have

as , where $\chi^2_K$ denotes the χ²-distribution with K degrees of freedom.

The proof of Theorem 5 is a simple application of Theorem 3 and the definition of the χ²-distribution.

Now, we turn to an application of the above χ²-test to a well-known coding method, namely, parity checking.

Example 1.

We can apply our χ²-test to test a transmission channel for messages that use parity bits. Parity bits are widely used for error detection. First, we briefly describe the use of parity bits in the case of the so-called even parity bit. Consider a binary message containing N blocks. If a fixed block contains an odd number of bits having value 1, then we append a parity bit having value 1. If the block contains an even number of bits having value 1, then we set the value of the parity bit to 0. Thus, in each block extended by its parity bit, the number of bits having value 1 is always even. This method is sometimes called a checksum.

After transmission of the binary message through a noisy channel, one can check the parity of each block. If the parity is odd, it shows an error. More precisely, the parity check shows an odd number of errors. However, if a block contains an even number of errors, then this check does not show an error. We are interested in finding the error rate of a transmission channel, assuming that the parity check does not show any error.
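The even-parity mechanism described above, and its blindness to an even number of errors, can be illustrated with a few lines of Python. The function names and the sample block are hypothetical.

```python
def even_parity_bit(bits):
    """Parity bit that makes the total number of 1s in bits + [parity] even."""
    return sum(bits) % 2


def parity_check_passes(block):
    """True if a block (data bits followed by its parity bit) has an even number of 1s."""
    return sum(block) % 2 == 0


def flip(block, positions):
    """Transmission errors: replace each bit at the given positions by its opposite value."""
    return [b ^ 1 if i in positions else b for i, b in enumerate(block)]


data = [1, 0, 1, 1, 0, 0, 1]
block = data + [even_parity_bit(data)]
print(parity_check_passes(block))               # True: no errors
print(parity_check_passes(flip(block, {2})))    # False: one error is detected
print(parity_check_passes(flip(block, {2, 5}))) # True: two errors go unnoticed
```

Any even number of flips leaves the parity unchanged. This is why the error counts of blocks that pass the check are even, which is the situation modelled in this paper.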

Our statistical model is as follows. Consider a file which contains N blocks. The mth block, , is a sequence , where or , , and . represents the parity bit. An error in a block is the replacement of an element of the block by its opposite value, that is, the true value 1 is replaced by 0, or the true value 0 is replaced by 1.

We consider the following statistical model for the errors. The file contains a binary message and is divided into N blocks, each of which has a parity bit. After the transmission of the file through a channel, the parity check does not show any error.

To check the quality of the channel, we should obtain the original file and compare it with the transmitted one to identify the errors. We can test the hypothesis : is the probability that an error occurs in the ith block, where , . For example, we can test whether the probability that an error happens in the ith block is proportional to the length of the block by using

The numbers of errors in the N blocks are with the following properties:

(1) The number of errors in the whole file is (i.e., );

(2) Errors can occur in the blocks independently, and the probability that an error occurs in the ith block is ;

(3) The parity check does not find any block with an error (that is, each block has an even number of errors).

Then, the numbers of errors can be considered as the allocation of distinguishable particles into N different cells with an even number of particles in each cell.

We calculate the statistic

from Theorem 5; if its value exceeds the critical value of the χ²-distribution with K degrees of freedom at the chosen significance level, then we reject the hypothesis.
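A minimal Python sketch of how such a test could be carried out in practice follows. It rests on two assumptions that are ours, not the paper's. First, each of the K counts is standardized by the expectation $\lambda\tanh\lambda$ and variance $\lambda\tanh\lambda + \lambda^2(1-\tanh^2\lambda)$ of $\xi(\lambda)$ with $\lambda_i = n p_i$, where n is the total number of errors. The exact normalization is the one fixed in Theorem 5. Second, the p-value is computed from the standard closed-form tail of the χ²-distribution with an integer number of degrees of freedom. All names and numbers are hypothetical.

```python
import math


def xi_mean_var(lam):
    """Expectation and variance of the variable with P{k} = lam^k/(k! cosh lam), k even."""
    th = math.tanh(lam)
    return lam * th, lam * th + lam ** 2 * (1.0 - th ** 2)


def chi2_sf(x, k):
    """Upper tail P{chi^2_k > x} for an integer number k >= 1 of degrees of freedom."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if k % 2 == 0:
        # Even k: exp(-x/2) * sum_{j < k/2} (x/2)^j / j!
        return math.exp(-half) * sum(half ** j / math.factorial(j) for j in range(k // 2))
    # Odd k: erfc(sqrt(x/2)) plus a finite correction series.
    total = math.erfc(math.sqrt(half))
    term = math.sqrt(x) * math.exp(-half) * math.sqrt(2.0 / math.pi)
    for j in range(1, (k - 1) // 2 + 1):
        total += term
        term *= x / (2 * j + 1)
    return total


def chi2_statistic(counts, probs, total):
    """Sum of squared standardized counts of the first K blocks (assumed normalization)."""
    stat = 0.0
    for eta, p in zip(counts, probs):
        mean, var = xi_mean_var(total * p)  # assumption: lambda_i = total * p_i
        stat += (eta - mean) ** 2 / var
    return stat


counts = [38, 44, 82]    # hypothetical (even) error counts in the first K = 3 blocks
probs = [0.1, 0.1, 0.2]  # hypothetical error probabilities p_i under the hypothesis
total = 400              # hypothetical total number of errors
stat = chi2_statistic(counts, probs, total)
p_value = chi2_sf(stat, len(counts))
print(stat, p_value)
```

The hypothesis is rejected at level α when `p_value < α`, which is equivalent to the statistic exceeding the critical value.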

Now, we turn to an application of Theorem 4 to mathematical statistics. Our next example is similar to Example 1. Consider again a binary file containing N blocks, each of which contains a parity bit. Assume that the parity check does not show any error in the blocks. Thus, each block can contain only an even number of errors. We are not able to find the numbers of errors in the individual blocks, but we can find the numbers of errors in m super blocks (i.e., in some unions of the original N blocks). Using the following procedure, we can either test the sizes of the super blocks or, when the super block sizes are known, test whether the errors are uniformly distributed among the original N blocks. In the next example, we describe the statistical procedure in a general mathematical setting.

Example 2.

Consider the homogeneous allocation model. Let be the numbers of particles in the cells after allocating distinguishable particles into N different cells having an even number of particles in each cell. However, these numbers are not known to us; only the total numbers of particles in certain groups of neighbouring cells are known.

Let , where each has the form . So we suppose that the numbers of particles in certain sets of the cells are known, more precisely , , are known. Let be some fixed known numbers, where again each has the form of . We will check the null hypothesis , , against the alternative hypothesis for some .

To this end, we propose the following χ²-test. Let

be the test statistic.

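Let $0 < \alpha < 1$. Choose the critical value $x_\alpha$ such that $\mathbf{P}\{\chi^2_{m-1} > x_\alpha\} = \alpha$, where $\chi^2_{m-1}$ is a random variable having χ²-distribution with $m - 1$ degrees of freedom. The null hypothesis is accepted if the value of the test statistic is less than $x_\alpha$, and it is rejected otherwise. By Theorem 4, if the null hypothesis holds, then, under the conditions of that theorem, the probability of the type I error converges to $\alpha$.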

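Above, besides Theorem 4, we used the following known fact from the statistical theory of χ²-tests. If $0 = t_0 < t_1 < \dots < t_m = 1$, then, for the increments of the Brownian bridge $B(t)$, the distribution of

$$\sum_{j=1}^{m} \frac{\left(B(t_j) - B(t_{j-1})\right)^2}{t_j - t_{j-1}}$$

is $\chi^2_{m-1}$.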
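The known fact quoted above can be checked by Monte Carlo simulation. The Python sketch samples the Brownian bridge at fixed points through the standard representation $B(t) = W(t) - tW(1)$, where $W$ is a Wiener process. It then checks that the average of the statistic is close to $m - 1$, the mean of $\chi^2_{m-1}$. The grid and the sample size are arbitrary choices.

```python
import math
import random


def bridge_at(times, rng):
    """Sample a Brownian bridge at increasing interior times via B(t) = W(t) - t * W(1)."""
    w, prev, values = 0.0, 0.0, []
    for t in times:
        w += rng.gauss(0.0, math.sqrt(t - prev))  # independent Gaussian increments of W
        values.append(w)
        prev = t
    w1 = w + rng.gauss(0.0, math.sqrt(1.0 - prev))
    return [v - t * w1 for v, t in zip(values, times)]


def pearson_type_statistic(times, rng):
    """sum_j (B(t_j) - B(t_{j-1}))^2 / (t_j - t_{j-1}) with t_0 = 0, t_m = 1."""
    grid = [0.0] + list(times) + [1.0]
    b = [0.0] + bridge_at(times, rng) + [0.0]  # B(0) = B(1) = 0
    return sum((b[j] - b[j - 1]) ** 2 / (grid[j] - grid[j - 1])
               for j in range(1, len(grid)))


rng = random.Random(2022)
times = [0.1, 0.35, 0.5, 0.8]  # interior points, so m = 5 intervals
samples = [pearson_type_statistic(times, rng) for _ in range(20000)]
mean = sum(samples) / len(samples)
print(round(mean, 2))  # should be close to m - 1 = 4
```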

Example 3.

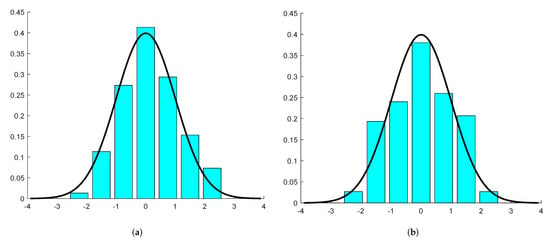

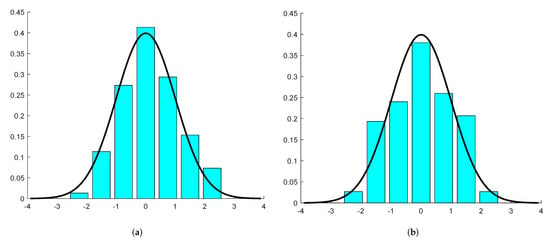

We carried out computer experiments to illustrate our theorems numerically. We simulated the allocations using random numbers. We considered a homogeneous allocation; that is, when allocating a particle, we chose a cell uniformly at random from the N cells. We allocated particles into cells. We repeated this experiment several times and saved only those results in which there was an even number of particles in each cell. So we saved times the results of the allocations. In this way, we obtained a sample of size for our -dimensional random vector . Then, we constructed histograms for the first two coordinates of this -dimensional sample. On the left-hand side of Figure 1, the histogram of the observations of , together with the standard normal probability density function, is shown. On the right-hand side of Figure 1, the histogram for and the standard normal probability density function can be seen. The fit to the normal distribution appears to be very good. On the left-hand side of Figure 2, the joint histogram of the sample for the variables and is given. This figure supports the joint normality of the two coordinates. Therefore, we obtained numerical evidence for Theorems 2 and 3. Finally, we performed principal component analysis for the observations of the vector

Figure 1.

The histograms of the first and the second coordinates in Example 3. (a) First coordinate; (b) Second coordinate.

The first 19 principal component variances were between and , but the last one was zero; this supports the claim that the χ²-statistic in Example 2 has $m - 1$ degrees of freedom.
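The rejection procedure of Example 3 can be sketched in Python as follows: allocate uniformly, and keep only allocations with an even number of particles in every cell. The sketch also shows why one principal component has zero variance. The super-block totals always add up to the number of particles, so the centred super-block counts are linearly dependent. The values n = 60, N = 6, the grouping into three super blocks, and the helper names are hypothetical. They are much smaller than the parameters used in Examples 3 and 4, because the acceptance rate of the rejection step decreases quickly as N grows.

```python
import random


def even_allocation(n, N, rng, max_tries=100000):
    """Allocate n particles uniformly into N cells; retry until every cell count is even."""
    for _ in range(max_tries):
        counts = [0] * N
        for _ in range(n):
            counts[rng.randrange(N)] += 1
        if all(c % 2 == 0 for c in counts):
            return counts
    raise RuntimeError("no allocation with all-even cells found")


rng = random.Random(7)
n, N = 60, 6
groups = [[0, 1], [2, 3], [4, 5]]  # hypothetical super blocks, m = 3
sample = [even_allocation(n, N, rng) for _ in range(200)]
super_counts = [[sum(c[i] for i in g) for g in groups] for c in sample]
# Centred super-block counts nu_j - n * |I_j| / N. Their sum is always 0, so their
# covariance matrix is singular and one principal component has variance 0.
centred = [[nu - n * len(g) / N for nu, g in zip(row, groups)] for row in super_counts]
print(max(abs(sum(row)) for row in centred))
```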

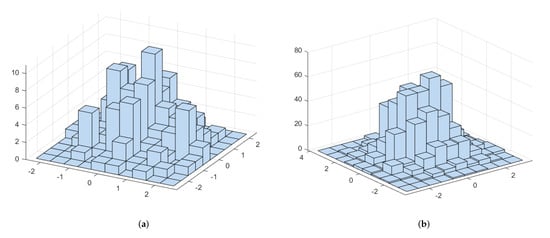

Example 4.

We carried out the same computer experiment as in Example 3, but with other parameters. We allocated particles into cells. We saved times the results of those allocations in which there was an even number of particles in each cell. In this way, we obtained a sample of size for the -dimensional random vector . On the left-hand side of Figure 3, the histogram of the observations of , together with the standard normal probability density function, is presented. On the right-hand side of Figure 3, the histogram for and the standard normal probability density function are given. The fit to the normal distribution is again very good. On the right-hand side of Figure 2, the joint histogram of the sample for the variables and is given. This figure also supports the joint normality of the two coordinates. Therefore, we obtained another numerical confirmation of Theorems 2 and 3. Then, we performed principal component analysis for the observations of the vector

The first 9 principal component variances were between and , but the last one was zero. This again supports the claim that the χ²-statistic in Example 2 has $m - 1$ degrees of freedom and not m.

We mention that for relatively small values of n, e.g., for and , the numerical results show a poor fit to the normal distribution. It is also worth mentioning that a large sample size, i.e., , is needed to demonstrate the goodness of fit to the normal distribution numerically.

Figure 2.

The joint histograms of the first two coordinates in Examples 3 and 4. (a) Histogram for Example 3; (b) Histogram for Example 4.

Figure 3.

The histograms of the first and the second coordinates in Example 4. (a) First coordinate; (b) Second coordinate.

4. Auxiliary Results

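We shall use the following notation. Let $\pi(\lambda)$ denote a Poisson random variable with parameter $\lambda$, and let $\pi_2(\lambda)$, where $\lambda > 0$, be a random variable with the distribution

$$\mathbf{P}\{\pi_2(\lambda) = k\} = \frac{\lambda^{k}}{k!\,\cosh \lambda}, \qquad k \in \{0, 2, 4, \dots\}.$$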

Recall that this distribution appears in Theorem 1. We see that the distribution of is the same as the distribution of .

Lemma 1.

Let be fixed. Then, we have

as , uniformly for those values of k for which .

Proof.

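We need the following approximation of the Poisson distribution by the normal density function, see p. 43 of [7]. Let $\pi(\lambda)$ have Poisson distribution with parameter $\lambda$, and let $x = (k - \lambda)/\sqrt{\lambda}$. Then, as $\lambda \to \infty$,

$$\mathbf{P}\{\pi(\lambda) = k\} = \frac{1}{\sqrt{2\pi\lambda}}\, e^{-x^2/2}\,(1 + o(1))$$

uniformly for $|x| \le c$, where c is an arbitrary fixed positive number.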

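The variable $\pi_2(\lambda)$ has the distribution of $\pi(\lambda)$ conditioned on being even, and $\mathbf{P}\{\pi(\lambda) \in \{0, 2, 4, \dots\}\} = e^{-\lambda}\cosh\lambda = (1 + e^{-2\lambda})/2$. Using the above approximation for $\mathbf{P}\{\pi(\lambda) = k\}$, we therefore obtain, for even k,

$$\mathbf{P}\{\pi_2(\lambda) = k\} = \frac{\mathbf{P}\{\pi(\lambda) = k\}}{e^{-\lambda}\cosh\lambda} = \frac{2}{\sqrt{2\pi\lambda}}\, e^{-x^2/2}\,(1 + o(1))$$

as $\lambda \to \infty$, uniformly for k such that $|x| \le c$. □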
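The factor-2 normal approximation $\mathbf{P}\{\pi_2(\lambda) = k\} \approx 2(2\pi\lambda)^{-1/2} e^{-x^2/2}$ for even k, with $x = (k-\lambda)/\sqrt{\lambda}$, can be observed numerically. The Python sketch below computes the worst relative error over $|x| \le c$. It works in logarithms to avoid overflow of $\lambda^k$ and $\cosh \lambda$. The values $c = 2$ and the chosen $\lambda$ are arbitrary. The function names are hypothetical.

```python
import math


def cosh_poisson_pmf(k, lam):
    """lam^k / (k! cosh lam) for even k, evaluated in logarithms."""
    log_cosh = lam + math.log1p(math.exp(-2.0 * lam)) - math.log(2.0)
    return math.exp(k * math.log(lam) - math.lgamma(k + 1) - log_cosh)


def worst_error(lam, c=2.0):
    """max |P{pi_2(lam) = k} / (2 phi(x) / sqrt(lam)) - 1| over even k with |x| <= c."""
    worst = 0.0
    for k in range(0, int(lam + c * math.sqrt(lam)) + 1, 2):
        x = (k - lam) / math.sqrt(lam)
        if abs(x) <= c:
            approx = 2.0 / math.sqrt(2.0 * math.pi * lam) * math.exp(-x * x / 2.0)
            worst = max(worst, abs(cosh_poisson_pmf(k, lam) / approx - 1.0))
    return worst


for lam in (100.0, 400.0, 1600.0):
    print(lam, worst_error(lam))  # the error shrinks roughly like 1 / sqrt(lam)
```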

Lemma 2.

For the moments of , we have

as .

Proof.

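By simple calculation, one can obtain that the characteristic function of $\pi_2(\lambda)$ is

$$\varphi(t) = \frac{\cosh z}{\cosh \lambda}, \qquad t \in \mathbb{R},$$

where $z = \lambda e^{it}$. Using the hyperbolic sine function $\sinh x = (e^x - e^{-x})/2$, we can obtain the derivatives of the characteristic function

$$\varphi'(t) = \frac{i z \sinh z}{\cosh \lambda}$$

and

$$\varphi''(t) = -\frac{z \sinh z + z^2 \cosh z}{\cosh \lambda}.$$

Therefore, we obtain

$$\mathbf{E}\,\pi_2(\lambda) = -i\varphi'(0) = \lambda \tanh \lambda, \qquad \mathbf{E}\,\pi_2^2(\lambda) = -\varphi''(0) = \lambda \tanh \lambda + \lambda^2.$$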

Moreover,

and

It implies that . Then

Since

for , we obtain

Thus, (19) is proved. □
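The first two moments obtained from $\varphi'(0)$ and $\varphi''(0)$ are $\lambda\tanh\lambda$ for the mean and $\lambda\tanh\lambda + \lambda^2(1 - \tanh^2\lambda)$ for the variance. They can be cross-checked by summing the probability mass function directly. In the Python sketch below, the truncation point `kmax` and the test values of $\lambda$ are arbitrary. The neglected tail is negligible for these $\lambda$.

```python
import math


def cosh_poisson_pmf(k, lam):
    """lam^k / (k! cosh lam) for even k, evaluated in logarithms."""
    log_cosh = lam + math.log1p(math.exp(-2.0 * lam)) - math.log(2.0)
    return math.exp(k * math.log(lam) - math.lgamma(k + 1) - log_cosh)


def moments(lam, kmax):
    """Mean and variance by direct summation over even k < kmax."""
    mean = sum(k * cosh_poisson_pmf(k, lam) for k in range(0, kmax, 2))
    second = sum(k * k * cosh_poisson_pmf(k, lam) for k in range(0, kmax, 2))
    return mean, second - mean ** 2


diffs = []
for lam in (0.3, 1.0, 5.0, 30.0):
    mean, var = moments(lam, kmax=400)
    th = math.tanh(lam)
    diffs.append((mean - lam * th, var - (lam * th + lam ** 2 * (1.0 - th ** 2))))
print(diffs)  # both differences are at the level of rounding error
```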

We shall use the following general Berry–Esseen-type inequality. Note that Lemma 3 makes no assumption on the distributions of the summands.

Lemma 3.

Let , , be independent random variables with variances and expectations , . Let , and let be its variance and let be its expectation. Then, we have

Here, Φ is the standard normal distribution function and c is the constant from the Berry–Esseen inequality.

Lemma 3 was proved in [2]. Now, we shall apply Lemma 3 to our model.

Lemma 4.

In the following lemma, we shall need the characteristic functions of the random variables in (9). Thus, let

be the characteristic function of , let be the characteristic function of the centralized version of , , and let be the characteristic function of the standardized sum .

Lemma 5.

Proof.

We shall need the notation

is an integer valued random variable, so its distribution can be expressed by the following inverse Fourier transform

where is the characteristic function of . However,

So, substituting into the integral in (30), we obtain

Let and . Using the characteristic function of the standard normal law, we have, for any real z, that

Since

from Lemma 4, it follows that

for all fixed .

Since

we have

We know that

Therefore, we obtain that

as , where we applied that . Moreover,

. Here, we used that , the shape of and condition (12). Therefore, we obtain

Consequently,

, . Therefore,

for . Therefore, we obtain that
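The proof above uses an inverse Fourier transform for an integer-valued random variable. Such formulas are of the standard type $\mathbf{P}\{\zeta = k\} = \frac{1}{2\pi}\int_{-\pi}^{\pi} e^{-itk}\varphi_\zeta(t)\,dt$. The Python sketch below checks this numerically for the distribution $\lambda^k/(k!\cosh\lambda)$, whose characteristic function is $\cosh(\lambda e^{it})/\cosh\lambda$. The integral is evaluated with the trapezoidal rule, which is very accurate for smooth periodic integrands. The value of $\lambda$ and the number of nodes are arbitrary choices.

```python
import cmath
import math


def char_fn(t, lam):
    """phi(t) = cosh(lam * e^{it}) / cosh(lam)."""
    return cmath.cosh(lam * cmath.exp(1j * t)) / math.cosh(lam)


def pmf_by_inversion(k, lam, points=512):
    """(1 / 2pi) * integral over [-pi, pi] of e^{-itk} phi(t) dt, trapezoidal rule."""
    h = 2.0 * math.pi / points
    total = sum(cmath.exp(-1j * (-math.pi + j * h) * k) * char_fn(-math.pi + j * h, lam)
                for j in range(points))
    return (total * h / (2.0 * math.pi)).real


def exact_pmf(k, lam):
    return lam ** k / (math.factorial(k) * math.cosh(lam)) if k % 2 == 0 else 0.0


lam = 3.0
max_err = max(abs(pmf_by_inversion(k, lam) - exact_pmf(k, lam)) for k in range(12))
print(max_err)
```

For odd k, the inversion returns (numerically) zero. This reflects the fact that the characteristic function has period π for an even-valued variable.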

5. Proofs of the Main Theorems

Proof of Theorem 2.

During the proof, we represent in the form of (7). First, we prove a local version of our limit theorem. To this end, we study the case when the standardized random variables are inside some bounded intervals. Therefore, we need the following notation. Let , . Let

Let

be the expectation and the variance of . By Lemma 1, we have

uniformly for , , such that

Since

Therefore, we have

Let , , be such that for . Using (12) and the above calculation, we have

Therefore, by Lemma 5, we obtain

Using the above calculations, we have

Now, using (36) and (39) in formula (7), we obtain

uniformly for , such that , . Thus, we obtained Corollary 1.

Now, we can apply the well-known method of obtaining the integral version of the de Moivre–Laplace theorem from its local version. Thus, using the notation , for , we obtain

Here, the right-hand side is a term of the approximating sum of the integral of the K-dimensional standard normal probability density function; hence, we obtain

This implies Theorem 2. □
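The passage from the local to the integral version can be illustrated in one dimension: sum the local probabilities of $\pi_2(\lambda)$ over the even lattice up to $\lambda + x\sqrt{\lambda}$ and compare with $\Phi(x)$. This Python sketch illustrates the same approximating-sum argument. It is not the K-dimensional statement itself, and the values of $\lambda$ and x are arbitrary.

```python
import math


def cosh_poisson_pmf(k, lam):
    """lam^k / (k! cosh lam) for even k, evaluated in logarithms."""
    log_cosh = lam + math.log1p(math.exp(-2.0 * lam)) - math.log(2.0)
    return math.exp(k * math.log(lam) - math.lgamma(k + 1) - log_cosh)


def Phi(x):
    """Standard normal distribution function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


lam = 2500.0
rows = []
for x in (-1.5, 0.0, 1.0):
    upper = lam + x * math.sqrt(lam)
    # Sum of local probabilities over even k <= upper (a lattice of span 2).
    cdf = sum(cosh_poisson_pmf(k, lam) for k in range(0, int(upper) + 1, 2))
    rows.append((x, cdf, Phi(x)))
print(rows)  # the discrepancy is of order 1 / sqrt(lam)
```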

Now, we turn to the proof of Theorem 4. Thus, we consider the homogeneous allocation scheme and assume that there is an even number of particles in each cell. Therefore, we consider Equation (6) with independent and identically distributed random variables , with distribution

As

so

are the expectation and the variance of . Let

be the sum of our random variables. We need the following corollary of Lemma 5.

Corollary 2.

Consider the homogeneous allocation scheme. Let . Then, we have

as uniformly for for any .

Proof of Theorem 4.

First, we give a detailed proof for the two-dimensional distributions; then, we sketch the proof for the arbitrary finite dimensional distributions.

Since , is a bounded function, from (44) and from condition , we obtain that . Therefore,

as . Condition implies that

Consequently, from Corollary 2, it follows that

uniformly for . Similarly,

uniformly for , and

uniformly for . Since

so we have

uniformly for , .

For brevity, let , .

uniformly for , .

From (49) and (4), using the same argument as in the proof of the de Moivre–Laplace theorem, we obtain

Thus, the two-dimensional distributions of converge to the two-dimensional distributions of .

Now, we sketch the proof for the l-dimensional distributions. Let . Then,

where , , and the quadratic form has the following shape

Here, we used the notation

Now, by some algebra, we see that

We need the $l \times l$ matrix

and its inverse

We can see that we obtain the covariance matrix of the increments

of the Brownian bridge if we insert , , into the matrix D. Denote this matrix by (for any fixed value of l). The determinant of D is , so the determinant of is . We can also check that the matrix of the quadratic form is .

Remark 1.

Relation (52) is a local limit theorem for the random allocation.

6. Discussion

The random allocation of particles into cells is a well-known model in probability theory. There are limit theorems for the cases in which the number of particles, the number of cells, or both tend to infinity, see [1]. The errors in the blocks of a binary file can be modelled as a random allocation. However, if parity bits are used, then any odd number of errors in a block is always detected, but an even number of errors is never detected.

Therefore, it is an interesting problem to describe the behaviour of the allocation model when there is an even number of particles in each cell.

In this paper, we consider the numbers of particles in cells when we allocate distinguishable particles into N distinct cells having an even number of particles in each cell. For the non-homogeneous case, we study the numbers of particles in the first K cells. We proved the asymptotic normality of this K-dimensional random vector when . For the homogeneous allocation model, we proved convergence to the finite dimensional distributions of the Brownian bridge if . To handle the mathematical problem, we embedded our model into the framework of Kolchin’s generalized allocation scheme. Using the above limit theorems, we obtained two χ²-tests. As the parity bit method does not detect any even number of errors in the blocks of a binary file, we suggest applying our model to study the distribution of errors in such files.

Author Contributions

Conceptualization, A.N.C.; methodology, A.N.C.; software, I.F.; formal analysis, A.N.C. and I.F.; investigation, A.N.C. and I.F.; writing—original draft preparation, A.N.C.; writing—review and editing, I.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

The authors would like to thank the referees for the helpful remarks.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kolchin, V.F.; Sevast’yanov, B.A.; Chistiakov, V.P. Random Allocations; Scripta Series in Mathematics; V. H. Winston & Sons: Washington, DC, USA, 1978.

- Chikrin, D.E.; Chuprunov, A.N.; Kokunin, P.A. Gaussian limit theorems for the number of given value cells in the non-homogeneous generalized allocation scheme. J. Math. Sci. 2020, 246, 476–487.

- Billingsley, P. Convergence of Probability Measures; Wiley: New York, NY, USA, 1968.

- Kolchin, V.F. A class of limit theorems for conditional distributions. Lith. Math. J. 1968, 8, 53–63. (In Russian)

- Kolchin, V.F. Random Graphs; Cambridge University Press: Cambridge, UK, 1998.

- Abdushukurov, F.A.; Chuprunov, A.N. Poisson limit theorems in an allocation scheme with even number of particles in each cell. Lobachevskii J. Math. 2020, 41, 289–297.

- Timashev, A.M. Asymptotic Expansions in Probabilistic Combinatorics; TVP Science Publishers: Moscow, Russia, 2011. (In Russian)

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).