2.1. Methodology

The basic Vector Autoregression (VAR) models the stochastic part of the Data Generation Process (DGP) of a set of $k$ time series variables ($y_t = (y_{1t}, \ldots, y_{kt})'$) as a linear process of order $p$. This is the well-known VAR($p$) model [25]. Formally,

$$y_t = \nu + A_1 y_{t-1} + \cdots + A_p y_{t-p} + u_t,$$

where $A_i$ ($i = 1, \ldots, p$) are the $k \times k$ parameter matrices, $\nu$ is the $k \times 1$ vector of constant terms and $u_t$ is the vector of errors, such that $E(u_t) = 0$ and $E(u_t u_t') = \Sigma_u$, where $\Sigma_u$ is the variance-covariance matrix. These errors may include a conditional heteroskedastic process to capture volatility, not only in high-frequency financial frameworks but also in macrofinancial settings [26]. The most common estimators for this model are:

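The maximum likelihood (ML) estimator ($\hat{\theta}_{ML}$), given by:

$$\hat{\theta}_{ML} = \arg\max_{\theta} \; l(\theta \mid y_1, \ldots, y_T),$$

where $l$ stands for the likelihood function and $\theta$ for the vector of every parameter of interest.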

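The ordinary least squares (OLS) estimator, which is by far the most widely used:

$$\hat{B} = [\hat{\nu}, \hat{A}_1, \ldots, \hat{A}_p] = Y Z' (Z Z')^{-1},$$

where $\hat{\nu}$ is the vector of estimated constant terms, $Y = [y_1, \ldots, y_T]$ and $Z = [Z_0, \ldots, Z_{T-1}]$, with $Z_t = (1, y_t', \ldots, y_{t-p+1}')'$.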
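To make the estimator concrete, the sketch below simulates a bivariate VAR(1) with intercept and recovers its parameters with $\hat{B} = Y Z'(ZZ')^{-1}$, using the regressor vectors $Z_{t-1} = (1, y_{t-1}')'$. The coefficient values, the $N(0, I)$ errors, the burn-in length and the helper names (`simulate_var1`, `solve`, `ols_var1`) are illustrative assumptions, not part of the study. Under Gaussian errors the OLS coefficients coincide with the (conditional) ML estimates, so for that case the sketch covers both estimators listed above.

```python
import random


def simulate_var1(nu, A, T, seed=0, burn_in=200):
    """Simulate y_t = nu + A y_{t-1} + u_t with u_t ~ N(0, I) (illustrative error law)."""
    rng = random.Random(seed)
    k = len(nu)
    y = [0.0] * k
    path = []
    for t in range(T + burn_in):
        y = [nu[i] + sum(A[i][j] * y[j] for j in range(k)) + rng.gauss(0.0, 1.0)
             for i in range(k)]
        if t >= burn_in:  # drop start-up values so the sample is close to stationary
            path.append(y)
    return path


def solve(M, b):
    """Solve M x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(n):
            if r != c:
                f = aug[r][c] / aug[c][c]
                aug[r] = [x - f * z for x, z in zip(aug[r], aug[c])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def ols_var1(data):
    """OLS for a VAR(1) with intercept, B_hat = Y Z' (Z Z')^{-1}, solved row by row."""
    Z = [[1.0] + data[t - 1] for t in range(1, len(data))]  # (1, y_{t-1}')'
    Y = data[1:]
    m, k = len(Z[0]), len(Y[0])
    ZZt = [[sum(z[a] * z[b] for z in Z) for b in range(m)] for a in range(m)]
    B_hat = []
    for i in range(k):
        ZYi = [sum(z[a] * y[i] for z, y in zip(Z, Y)) for a in range(m)]
        B_hat.append(solve(ZZt, ZYi))  # row i = (nu_i, a_i1, ..., a_ik)
    return B_hat


nu = [0.1, -0.1]
A = [[0.5, 0.1], [0.0, 0.3]]
data = simulate_var1(nu, A, T=5000, seed=1)
for row in ols_var1(data):
    print([round(v, 2) for v in row])
```

With 5000 observations the printed rows should lie close to $(\nu_i, a_{i1}, a_{i2})$; dedicated software would of course be used for real applications.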

Forecasts and structural analysis tools such as Granger causality tests, Impulse Response Function (IRF) analysis, and Forecast Error Variance Decompositions (FEVD) can be constructed easily from the estimated model [9,11,25,27].

Nevertheless, the estimation of VARs becomes problematic in high-dimensional or data-rich environments. Degrees of freedom are consumed rapidly as new variables are added to the VAR, because the number of parameters to estimate grows with the square of the number of variables in the model [9]. For example, a nine-variable VAR with four lags has 333 unknown coefficients, including the intercepts [9]. The literature proposes Bayesian methods to overcome this problem [19].
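The figure of 333 can be checked directly: with an intercept in every equation, a $k$-variable VAR($p$) has $k(kp + 1)$ coefficients, so $9 \times (9 \times 4 + 1) = 333$. The short sketch below (the function name is hypothetical) makes the quadratic growth in $k$ visible; it does not count the $k(k+1)/2$ free elements of $\Sigma_u$.

```python
def var_param_count(k, p, intercept=True):
    """Coefficients in the mean equations of a k-variable VAR(p).

    Each of the k equations has k * p slope coefficients plus, optionally,
    one intercept. The k(k+1)/2 free elements of Sigma_u are not counted.
    """
    return k * (k * p + (1 if intercept else 0))


for k in (3, 9, 18, 36):
    print(f"k={k:2d}, p=4: {var_param_count(k, 4)} coefficients")
```

Doubling the number of variables roughly quadruples the number of coefficients.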

This paper explores how so-called machine learning regularization methods can overcome this problem. In particular, it analyzes the relative performance of three of these methods, proposed and implemented in the R programming language by [28], against traditional estimation procedures in a high-dimensional monetary and financial setting.

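Given the forward model $g = Hf + \epsilon$ and the data $g$, the unknown source $f$ can be estimated via a deterministic method known as generalized inversion, $\hat{f} = H^{\dagger} g$. However, a more general method is regularization, defined as:

$$\hat{f} = \arg\min_{f} \{ J(f) \}, \qquad J(f) = \|g - Hf\|_2^2 + \lambda \, \mathcal{R}(f),$$

where $\mathcal{R}(f)$ is the regularizer and $\lambda > 0$ is the regularization parameter. The main issues in such a regularization method are the choice of the regularizer and the choice of an appropriate optimization algorithm [23].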
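A toy instance illustrates why regularization is preferred when the forward model is ill-conditioned. Purely for illustration, assume a diagonal forward operator $H = \mathrm{diag}(h)$ and the Tikhonov regularizer $\mathcal{R}(f) = \|f\|_2^2$, so that both solutions have closed forms: generalized inversion gives $\hat{f}_i = g_i / h_i$, while the regularized solution is $\hat{f}_i = h_i g_i / (h_i^2 + \lambda)$. The values of $h$, the noise and $\lambda$ are hypothetical.

```python
def generalized_inverse(h, g):
    """f = H^+ g for a diagonal forward operator H = diag(h), assuming every h_i != 0."""
    return [gi / hi for hi, gi in zip(h, g)]


def tikhonov(h, g, lam):
    """argmin_f ||g - H f||^2 + lam * ||f||^2 for H = diag(h): f_i = h_i g_i / (h_i^2 + lam)."""
    return [hi * gi / (hi * hi + lam) for hi, gi in zip(h, g)]


def sq_error(est, truth):
    return sum((e - t) ** 2 for e, t in zip(est, truth))


f_true = [1.0, 1.0, 1.0]
h = [1.0, 0.1, 0.001]        # the last singular value is nearly zero (ill-conditioned)
eps = [0.01, -0.01, 0.01]    # small, fixed measurement noise
g = [hi * fi + ei for hi, fi, ei in zip(h, f_true, eps)]

f_gi = generalized_inverse(h, g)
f_tk = tikhonov(h, g, lam=0.01)
print("generalized inversion:", [round(v, 3) for v in f_gi], round(sq_error(f_gi, f_true), 3))
print("Tikhonov, lam=0.01:   ", [round(v, 3) for v in f_tk], round(sq_error(f_tk, f_true), 3))
```

Generalized inversion divides the noise in the third component by $0.001$ and amplifies it a thousandfold; the regularized solution accepts some bias in the poorly identified components but keeps the total error small.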

Regularization is one of the most widely used techniques to avoid overfitting. Any model, including the VAR, can overfit the available data. This typically happens with high-dimensional data when the number of training examples is relatively small [29]. In this case, a linear learning algorithm may produce a model that assigns non-zero values to many dimensions of the feature vector in an attempt to capture a complex relationship. In other words, by trying to predict the labels of the training examples perfectly, the model also fits the noise in the feature values, sampling imperfections (due to the small dataset size), and other idiosyncrasies of the training set. Regularization forces the learning algorithm to build a less complex model. The price is a slightly higher bias in exchange for a lower variance, which is why the problem is also known as the bias–variance tradeoff.
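The bias–variance tradeoff can be seen in the simplest possible setting. The sketch below assumes a scalar regression $y = \beta x + e$ without intercept, a fixed design and standard normal noise (all illustrative choices), and compares by Monte Carlo the L2-penalized (ridge) slope $\hat{\beta}_\lambda = \sum x y / (\sum x^2 + \lambda)$ for several penalty values.

```python
import random
import statistics


def ridge_slope(x, y, lam):
    """Ridge estimate of beta in y = beta * x + e (no intercept)."""
    return sum(a * b for a, b in zip(x, y)) / (sum(a * a for a in x) + lam)


def bias_variance(lam, beta=1.0, n=10, reps=2000, seed=42):
    """Monte Carlo bias and variance of the ridge slope with a fixed design and N(0, 1) noise."""
    rng = random.Random(seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # design kept fixed across replications
    estimates = []
    for _ in range(reps):
        y = [beta * xi + rng.gauss(0.0, 1.0) for xi in x]
        estimates.append(ridge_slope(x, y, lam))
    return statistics.fmean(estimates) - beta, statistics.pvariance(estimates)


for lam in (0.0, 1.0, 5.0):
    bias, var = bias_variance(lam)
    print(f"lambda={lam}: bias={bias:+.3f}, variance={var:.3f}")
```

As $\lambda$ grows, the estimates are pulled toward zero (larger bias) while their spread shrinks (smaller variance); $\lambda = 0$ reproduces the unbiased OLS slope.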

The basic idea of regularization is to modify the objective function by adding a penalty term whose value increases with the complexity of the model [29]. There are two main types of regularization. To present them, consider the OLS estimation of the VAR written as a minimization problem:

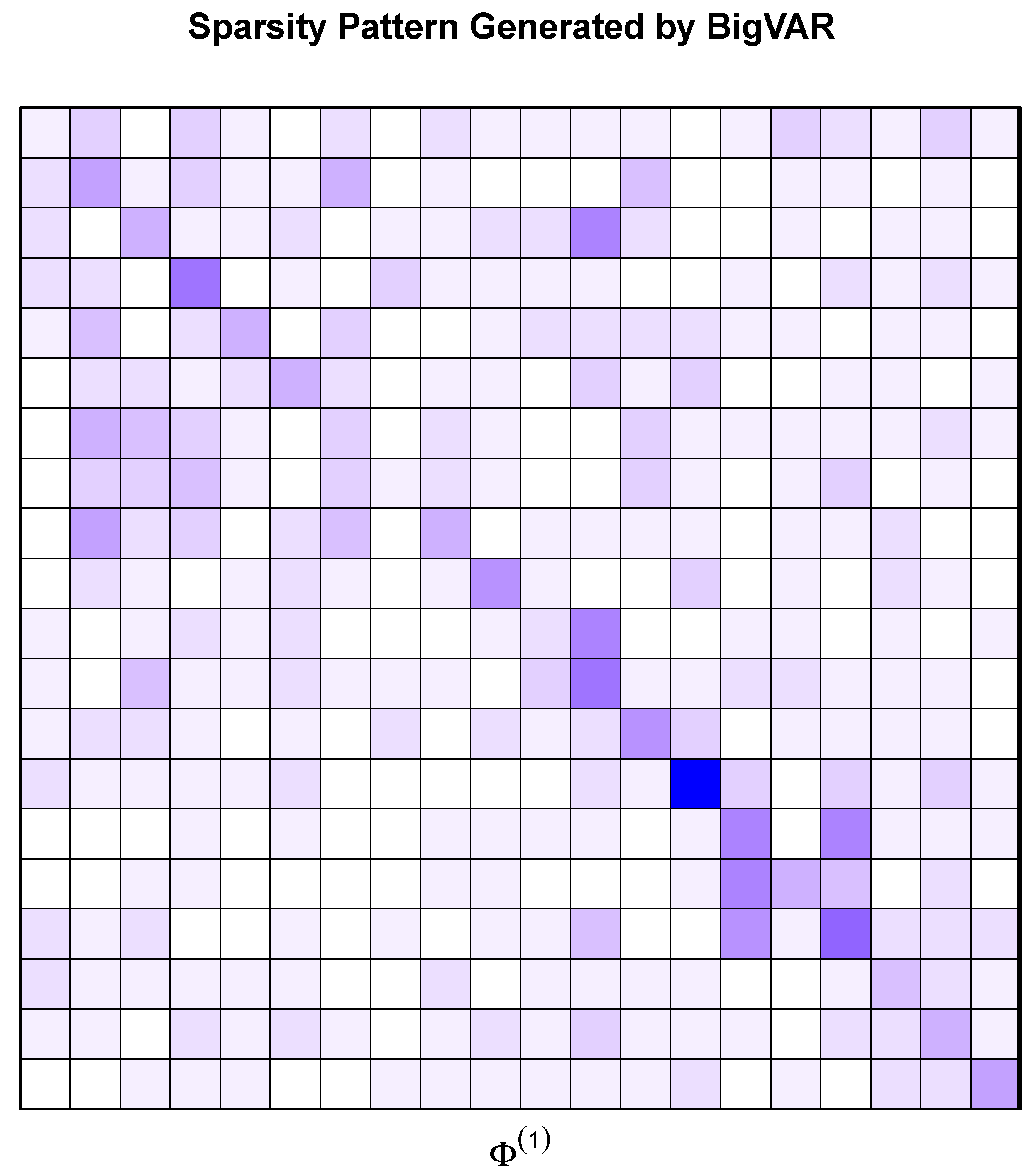

The machine learning regularization methods enter the equation by imposing sparsity and so reducing and partitioning the parameter space of the VAR by applying structural convex penalties to it [

28]. Mathematically,

where

is a penalty parameter and

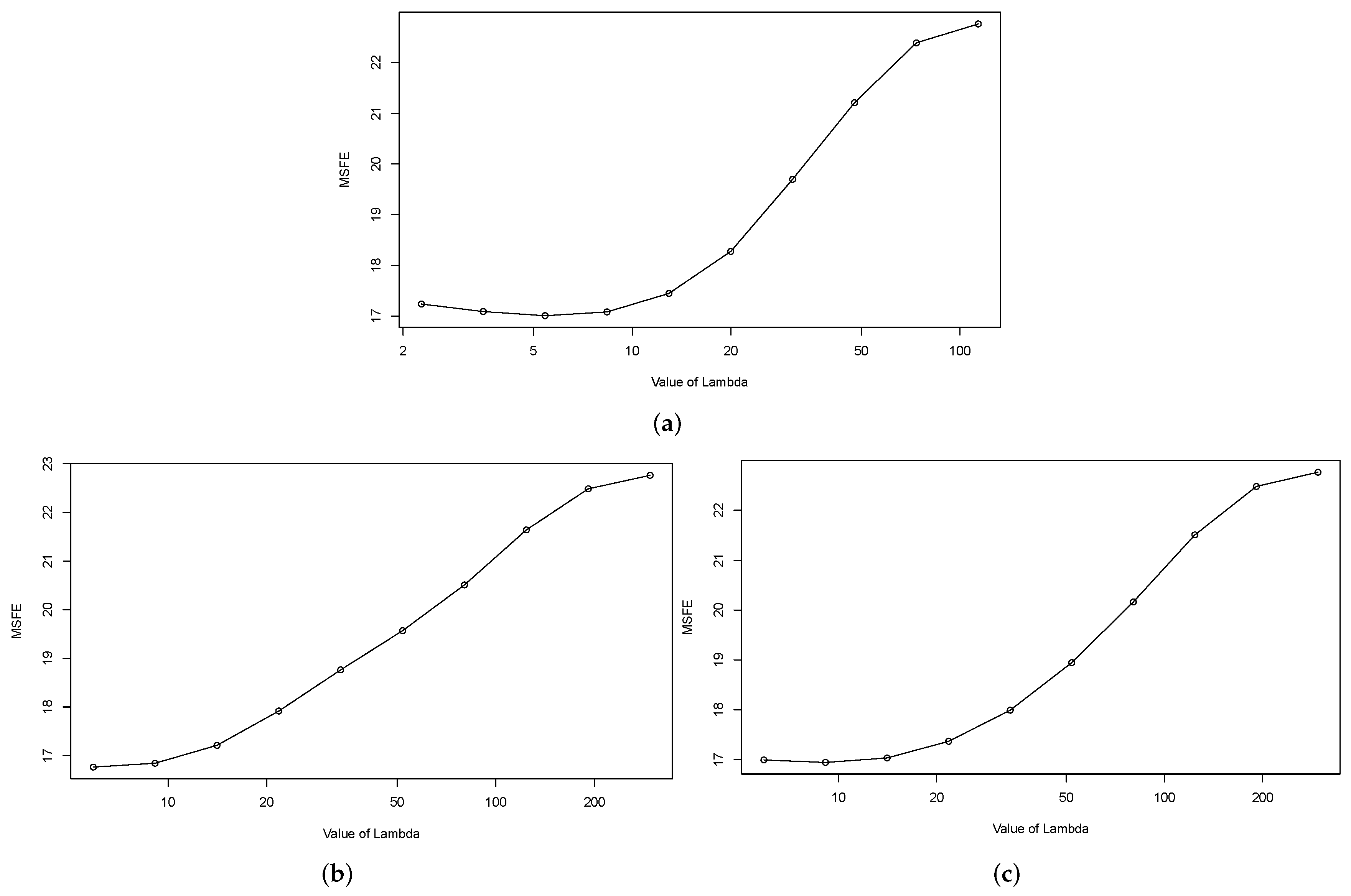

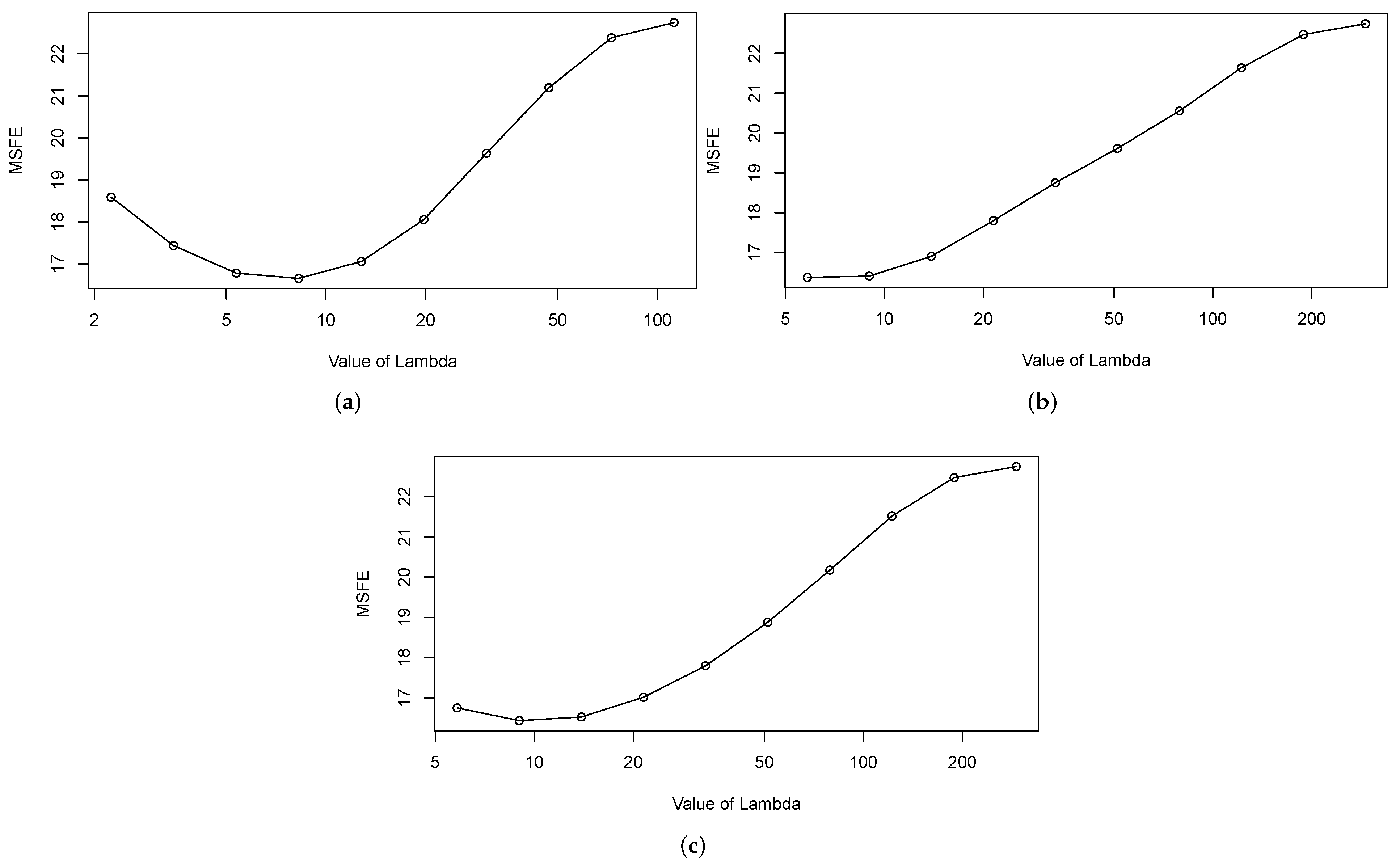

is the group penalty structure. The value of

is selected by sequential cross-validation as the minimum Mean Squared Forecast Error (

MSFE), defined as:

where

is the partition of the data used to train the model and

is the partition of the data used to evaluate the out of sample forecasting performance of it. The two main types of regularization are:

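L1-regularization. In this case, the L1-regularized objective is:

$$\min_{\nu, \mathbf{A}} \sum_{t=1}^{T} \Big\| y_t - \nu - \sum_{\ell=1}^{p} A_\ell \, y_{t-\ell} \Big\|_F^2 + \lambda \sum_{\ell=1}^{p} \| A_\ell \|_1,$$

where $\|A_\ell\|_1$ is the sum of the absolute values of the entries of $A_\ell$ and $\lambda \geq 0$ is a parameter that controls the importance of the regularization. Thus, if $\lambda = 0$, one recovers the standard non-regularized linear regression model. On the contrary, if $\lambda$ is very high, the learning algorithm will assign a very small value or zero to most coefficients when minimizing the objective, probably leading to underfitting.

L2-regularization. In this case, the L2-regularized objective is:

$$\min_{\nu, \mathbf{A}} \sum_{t=1}^{T} \Big\| y_t - \nu - \sum_{\ell=1}^{p} A_\ell \, y_{t-\ell} \Big\|_F^2 + \lambda \sum_{\ell=1}^{p} \| A_\ell \|_F^2.$$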

On the one hand, L1-regularization produces a sparse model (most of its parameters are exactly zero) and therefore performs feature selection. It is thus useful when the goal is to increase the explainability of the model. On the other hand, L2-regularization usually gives better results when the goal is to maximize the predictive performance of the model. L2-regularization is also known as ridge or Tikhonov regularization, and L1-regularization as the LASSO (for an updated review of ridge regularization methods, see [30,31,32]).
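The contrast between sparsity (L1) and shrinkage (L2) is easiest to see in the textbook case of an orthonormal design, $X'X = I$, where both problems have closed-form solutions. With objectives $\tfrac{1}{2}\|y - X\beta\|_2^2 + \lambda\|\beta\|_1$ and $\tfrac{1}{2}\|y - X\beta\|_2^2 + \tfrac{\lambda}{2}\|\beta\|_2^2$, the L1 solution soft-thresholds each OLS coefficient at $\lambda$, while the L2 solution rescales it by $1/(1+\lambda)$. The sketch assumes this idealized design, which a VAR does not satisfy, and uses hypothetical coefficient values.

```python
def soft_threshold(b, lam):
    """L1 (LASSO) solution for one coefficient under an orthonormal design."""
    if b > lam:
        return b - lam
    if b < -lam:
        return b + lam
    return 0.0  # small coefficients are set exactly to zero


def ridge_shrink(b, lam):
    """L2 (ridge) solution for one coefficient under an orthonormal design."""
    return b / (1.0 + lam)  # every coefficient shrinks, none becomes zero


ols = [2.0, -0.8, 0.3, -0.1]  # hypothetical unpenalized (OLS) coefficients
lam = 0.5
lasso = [soft_threshold(b, lam) for b in ols]
ridge = [ridge_shrink(b, lam) for b in ols]
print("lasso:", [round(v, 3) for v in lasso])
print("ridge:", [round(v, 3) for v in ridge])
```

The two small coefficients vanish under L1 but merely shrink under L2, which is the feature-selection property described above.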

In particular, this paper analyzes the performance of the following four machine learning regularization structures proposed by [33] (where $\|\cdot\|_o$ denotes the Frobenius norm of order $o$):

The performance of a model is measured by the relative MSFE of the regularized model against the alternative models:

$$P_{M_i, M_j} = \frac{\mathrm{MSFE}_{M_i}}{\mathrm{MSFE}_{M_j}},$$

where $M$ stands for model and $M_1, \ldots, M_q$ are the $q$ estimated models. Therefore, $P_{M_i, M_j}$ is the relative performance ($P$) of the machine learning model $M_i$ against the alternative $M_j$; a value below one means that $M_i$ forecasts more accurately than $M_j$. The Bayesian VAR is estimated as in [19,28]. The Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC) models are the VARs whose lag order minimizes the respective criterion.
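The relative performance measure is a ratio of MSFEs, $P_{M_i, M_j} = \mathrm{MSFE}_{M_i} / \mathrm{MSFE}_{M_j}$. The sketch below assumes that the $h$-step forecasts and the realized values are already available as lists of $k$-vectors; the model labels and numbers are hypothetical and only show the computation.

```python
def msfe(forecasts, actuals):
    """Mean over forecast dates of the squared Euclidean (Frobenius) forecast error."""
    errors = [sum((f - a) ** 2 for f, a in zip(fv, av)) for fv, av in zip(forecasts, actuals)]
    return sum(errors) / len(errors)


def relative_performance(msfe_by_model, ml_model):
    """P = MSFE(ml_model) / MSFE(alternative) for every alternative; P < 1 favours ml_model."""
    base = msfe_by_model[ml_model]
    return {name: base / value for name, value in msfe_by_model.items() if name != ml_model}


actuals = [[1.0, 0.5], [0.8, 0.4], [1.2, 0.7]]
forecasts = {  # hypothetical forecasts from two models
    "lasso_var": [[0.9, 0.5], [0.8, 0.5], [1.1, 0.6]],
    "var_aic": [[0.7, 0.6], [1.0, 0.2], [1.5, 0.9]],
}
scores = {name: msfe(f, actuals) for name, f in forecasts.items()}
print(relative_performance(scores, "lasso_var"))
```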

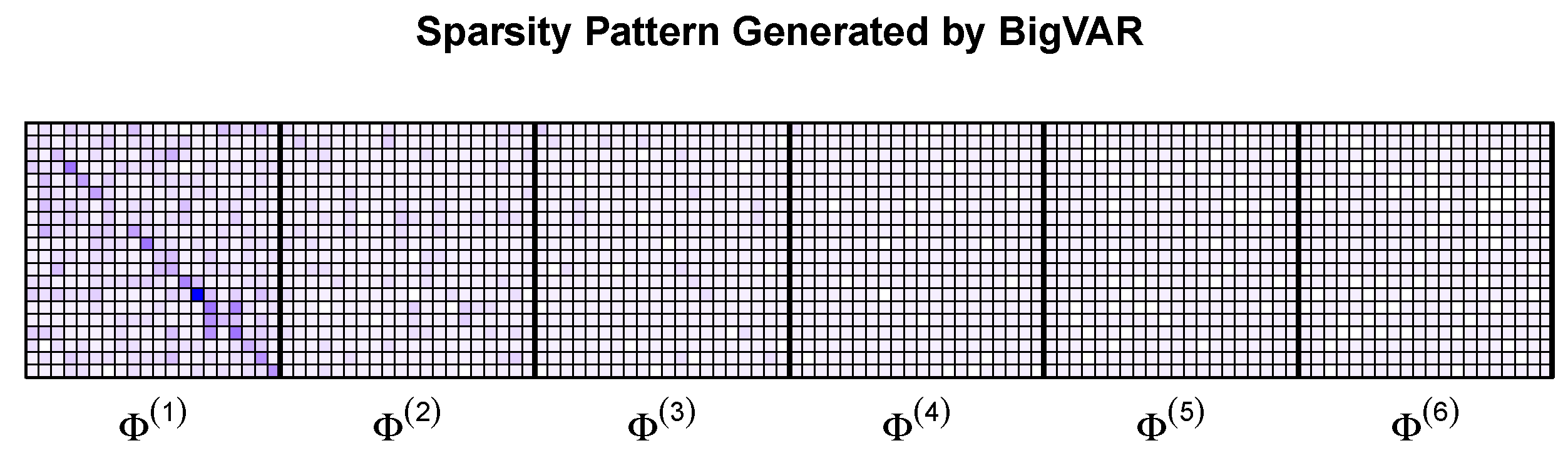

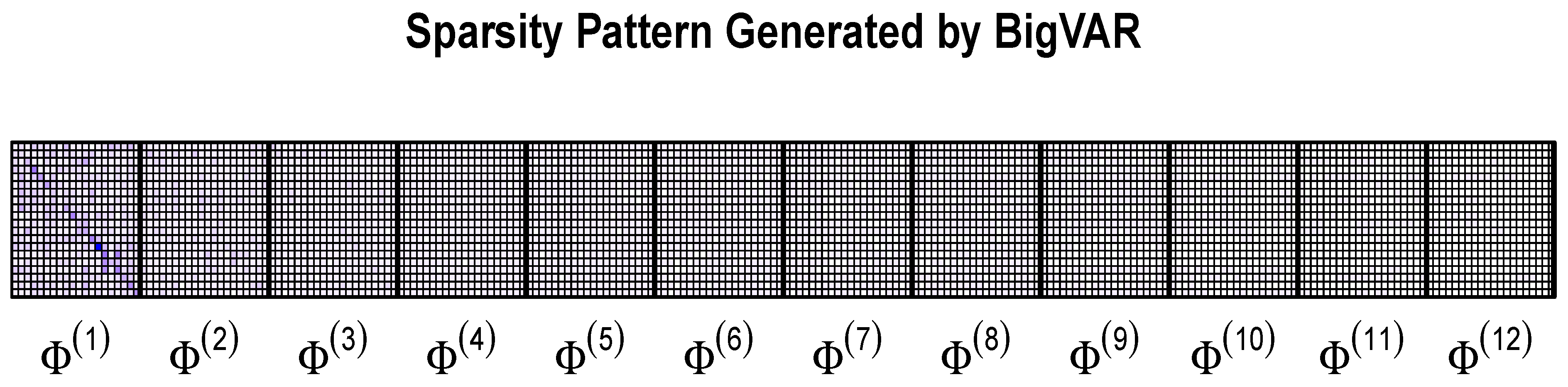

An analysis of the cross-validation (CV) behavior and of the sparsity matrices of the regularization methods is also provided. Ideally, the selected value of $\lambda$ would increase with the forecast horizon ($h$), reflecting the U shape of the CV curve. Similarly, sparsity should increase with the horizon.
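The logic of sequential (rolling-origin) cross-validation can be sketched in a deliberately small setting. The code below assumes a univariate, zero-mean AR(1) fitted with an L1 penalty (for a single regressor the LASSO has a closed form), a hypothetical penalty grid and a simulated series. For each $\lambda$ it refits the model on the data up to $t$, forecasts $y_{t+h}$, averages the squared errors over $t = T_1, \ldots, T_2 - h$, and selects the minimizer; a sufficiently large $\lambda$ sets the coefficient exactly to zero. It is not the multivariate procedure of [28], only an illustration of it.

```python
import random


def soft_threshold(z, lam):
    return max(abs(z) - lam, 0.0) * (1.0 if z >= 0 else -1.0)


def lasso_ar1(y, lam):
    """argmin_phi 0.5 * sum (y_t - phi * y_{t-1})^2 + lam * |phi| (closed form, one regressor)."""
    sxy = sum(y[t] * y[t - 1] for t in range(1, len(y)))
    sxx = sum(y[t - 1] ** 2 for t in range(1, len(y)))
    return soft_threshold(sxy, lam) / sxx


def sequential_cv(y, lambdas, T1, T2, h=1):
    """MSFE for each lambda: fit on y[0..t], forecast y[t+h] = phi^h * y[t], t = T1..T2-h."""
    curve = {}
    for lam in lambdas:
        errors = []
        for t in range(T1, T2 - h + 1):
            phi = lasso_ar1(y[: t + 1], lam)
            errors.append((phi ** h * y[t] - y[t + h]) ** 2)
        curve[lam] = sum(errors) / len(errors)
    return min(curve, key=curve.get), curve


rng = random.Random(7)
y = [0.0]
for _ in range(399):
    y.append(0.6 * y[-1] + rng.gauss(0.0, 1.0))  # simulated AR(1), phi = 0.6

grid = [0.0, 5.0, 20.0, 80.0, 320.0]  # hypothetical penalty grid
best, curve = sequential_cv(y, grid, T1=200, T2=399, h=1)
for lam, value in curve.items():
    print(f"lambda={lam:6.1f}  MSFE={value:.3f}")
print("selected lambda:", best)
```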

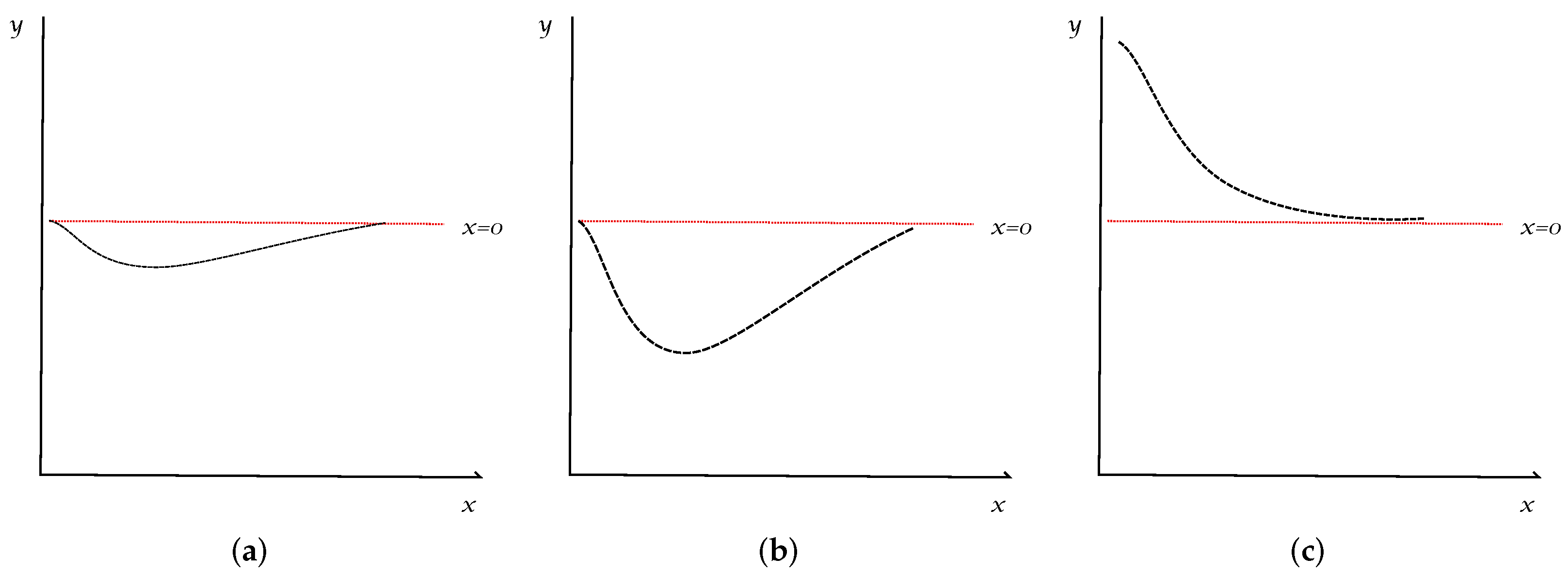

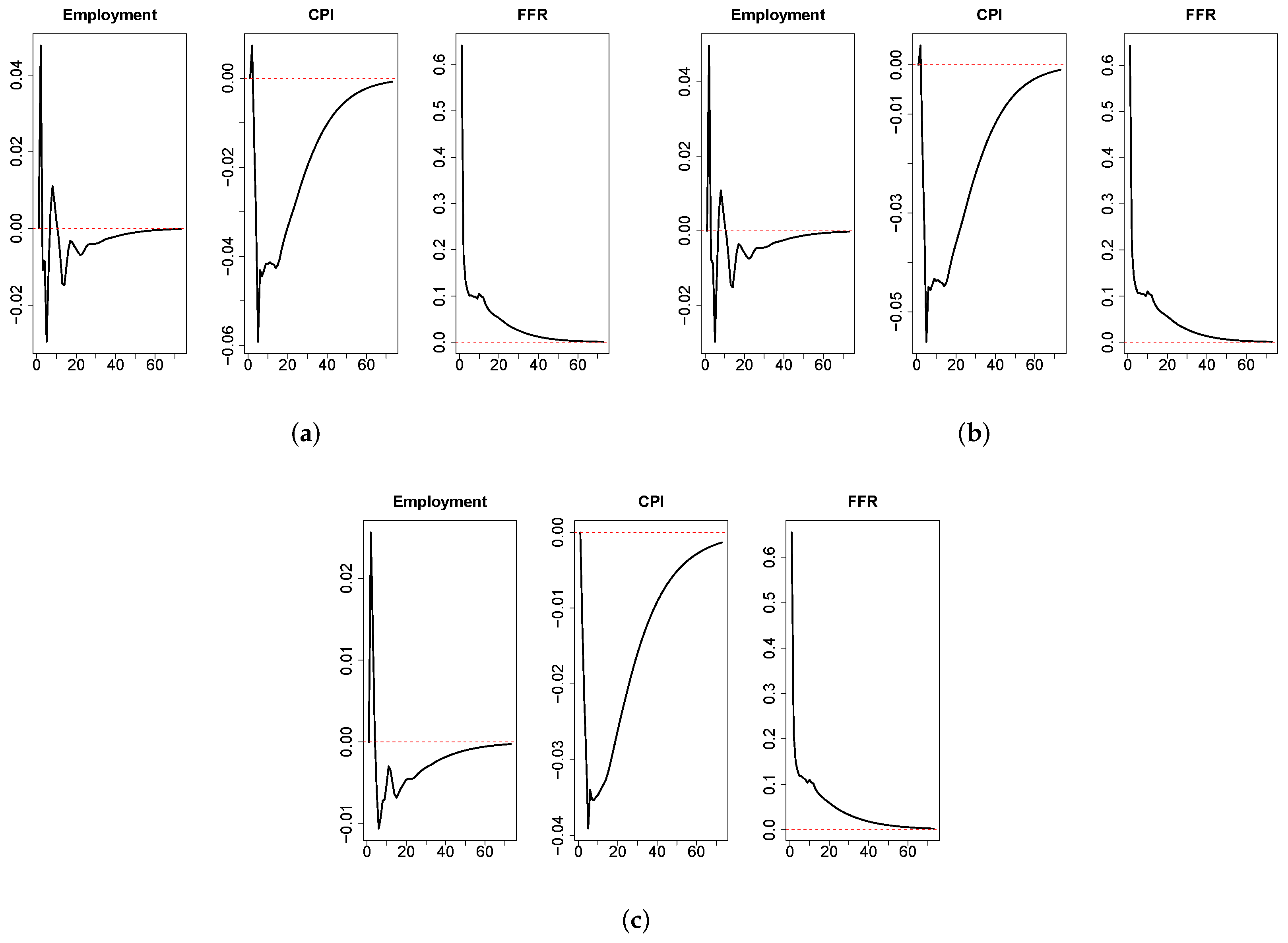

Another fundamental task of VAR models in macroeconomics, financial economics and applied time series econometrics is structural analysis. To analyze the robustness of the regularized VARs in this field, we report the Impulse Response Functions (IRFs) of the models. For the sake of simplicity, we report the response of a variable of economic activity (employment) and a variable of inflation (the consumer price index) to a central bank interest rate shock (a positive one-standard-deviation shock to the federal funds rate). Based on economic theory and evidence, we expect the IRFs to behave as in Figure 1.

Therefore, if the empirical IRFs behave similarly to Figure 1, there is evidence that the structural analysis methods of machine learning regularized VARs are consistent with economic theory and evidence. To check robustness, the IRFs are estimated one hundred times via bootstrap methods.
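Orthogonalized impulse responses can be sketched for the simplest case. Assuming a VAR(1) (a VAR($p$) can be handled the same way through its companion form) and a recursive (Cholesky) identification, which the text does not specify, the responses at horizon $h$ are $\Theta_h = A_1^h P$ with $PP' = \Sigma_u$; column $j$ of $\Theta_h$ gives the responses to a one-standard-deviation shock in variable $j$. The coefficients and the ordering (employment, CPI, federal funds rate) below are hypothetical, and the bootstrap replications are not reproduced.

```python
import math


def cholesky(S):
    """Lower-triangular P with P P' = S (S symmetric positive definite)."""
    n = len(S)
    P = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = S[i][j] - sum(P[i][m] * P[j][m] for m in range(j))
            P[i][j] = math.sqrt(s) if i == j else s / P[j][j]
    return P


def matmul(X, Y):
    return [[sum(X[i][m] * Y[m][j] for m in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def orthogonal_irf(A, Sigma, horizons):
    """Theta_h = A^h P, h = 0..horizons, for a VAR(1) with Cholesky factor P of Sigma."""
    P = cholesky(Sigma)
    Ah = [[1.0 if i == j else 0.0 for j in range(len(A))] for i in range(len(A))]  # A^0 = I
    irfs = []
    for _ in range(horizons + 1):
        irfs.append(matmul(Ah, P))
        Ah = matmul(Ah, A)  # A^(h+1)
    return irfs


A = [[0.7, 0.0, -0.1],
     [0.1, 0.8, -0.05],
     [0.2, 0.1, 0.9]]
Sigma = [[1.0, 0.2, 0.1],
         [0.2, 0.5, 0.1],
         [0.1, 0.1, 0.3]]
irfs = orthogonal_irf(A, Sigma, horizons=12)
shock = 2  # hypothetical ordering: index 2 is the policy rate
for h, theta in enumerate(irfs):
    print(h, [round(theta[i][shock], 3) for i in range(3)])
```

Under a Cholesky identification the results depend on the variable ordering, so a different ordering would in general give different responses.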