Collective Intelligence in Design Crowdsourcing

Abstract

1. Introduction and Background

1.1. The Design Process

1.2. Crowdsourcing Production Methods

1.2.1. Crowdsourcing Workflows

1.2.2. Crowdsourcing Tasks

1.2.3. Crowd Selection

1.2.4. Incentives

1.2.5. Crowdsourcing of Architecture and Urban Design

1.3. Collective Intelligence

1.3.1. Wisdom of the Crowd

1.3.2. Emergence of Collective Intelligence

2. Materials and Methods

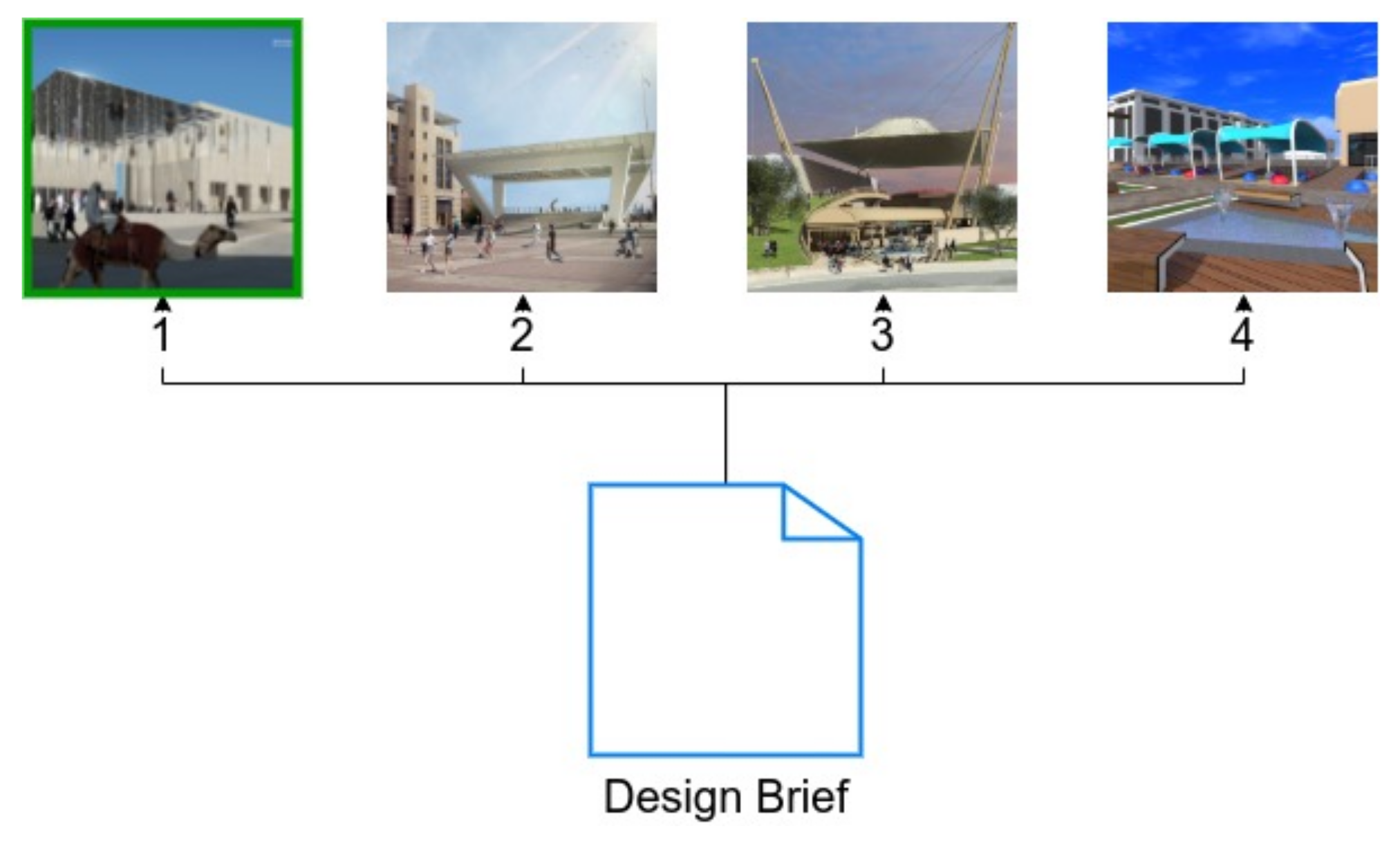

2.1. Contest-Based System

2.2. Network-Based System

2.3. Data Analysis

2.3.1. Collective Intelligence Genome

2.3.2. Collective Intelligence Complex System

2.3.3. Collective Design Diversity

2.3.4. Collective Design Measurement

3. Results

3.1. Collective Intelligence Genome

3.2. Collective Intelligence System

3.3. Collective Design Diversity

3.4. Collective Design Measurement

4. Discussion

4.1. Design Discussions

4.2. Sequential and Parallel Design Development

4.3. Evaluation and Selection

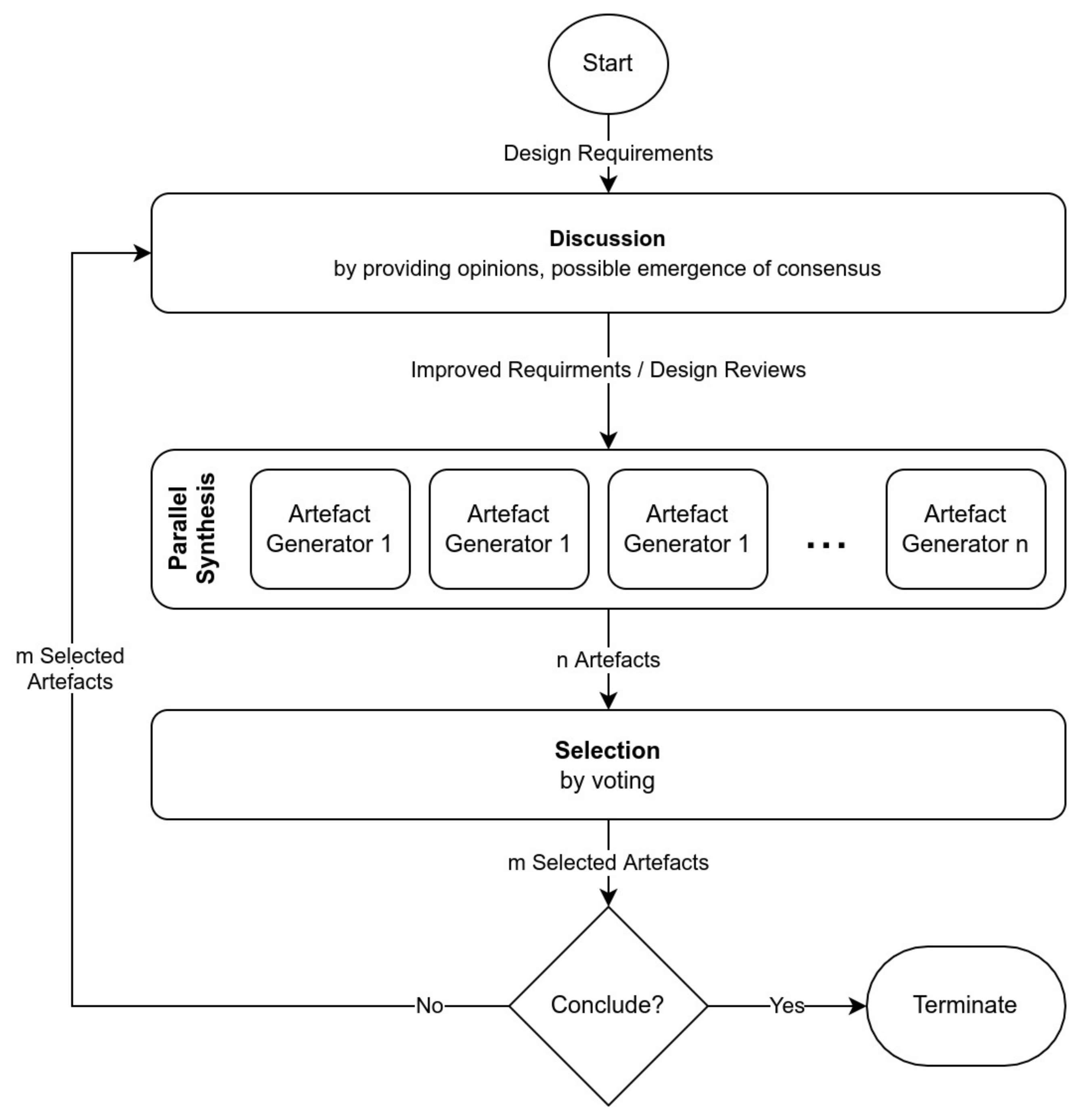

4.4. A New Crowdsourcing Design Workflow

- In the discussion stage, designers discuss the design requirements and propose potential ideas. Because the discussion is conducted in natural language rather than through sketches, it helps project stakeholders and clients articulate the design requirements more clearly with the assistance of the participating designers. The output of this stage is a conversation that can be summarised into an improved design brief.

- In the parallel synthesis stage, designers produce sketches and diagrams of artefacts that propose solutions to the design problem set out in the design brief. This exploration should yield a diverse set of preliminary design sketches for further discussion and elaboration. To ensure the diversity of the proposed design solutions, the designers should work in parallel, i.e., with limited communication among them. The outcome of this stage is a collection of design artefacts.

- In the selection stage, the project stakeholders and designers subjectively evaluate the design artefacts to identify the most promising designs. The most straightforward selection method is voting for the best designs. The aggregated votes then identify the designs to be removed from the process, leaving a subset of the fittest designs that still preserves diversity.
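The selection stage can be illustrated with a minimal approval-voting sketch in Python: each participant submits a ballot listing the designs they consider best, the ballots are tallied, and only the `keep` most-voted designs stay in the process. The ballot format, the function name `select_fittest`, the `keep` parameter and the tie-breaking rule (submission order) are illustrative assumptions, not the voting procedure used in the study.

```python
from collections import Counter


def select_fittest(designs: list[str], ballots: list[list[str]], keep: int) -> list[str]:
    """Tally approval ballots and return the `keep` most-voted designs.

    Hypothetical helper: each ballot is the list of design ids one voter selected.
    Keeping more than one design preserves some diversity for the next stage.
    """
    tally = Counter({design: 0 for design in designs})  # unvoted designs still score 0
    for ballot in ballots:
        for design in set(ballot):  # count each design at most once per voter
            if design in tally:     # ignore votes for unknown designs
                tally[design] += 1
    # sorted() is stable, so tied designs keep their original submission order
    ranked = sorted(designs, key=lambda design: -tally[design])
    return ranked[:keep]


designs = ["A", "B", "C", "D"]
ballots = [["A", "C"], ["C"], ["B", "C"], ["A"]]
print(select_fittest(designs, ballots, keep=2))  # ['C', 'A']
```

Choosing `keep` greater than one reflects the point above: pruning the weakest designs while retaining several candidates keeps the design space diverse.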

4.5. Limitations

4.6. Future Research

5. Conclusions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Phase | What (goal) | What (output) | Who | Why | How |
|---|---|---|---|---|---|
| 1. Challenge | Create | Brief | Hierarchy | Extrinsic | Hierarchy |
| 2. Q&A | Create | Challenge clarification | Crowd and Hierarchy | Extrinsic | Collection |
| 3. Design | Create | Designs | Crowd | Extrinsic | Collection |
| 4. Rating | Create | Average scores | Crowd | Intrinsic | Averaging |
| 5. Winner selection | Decide | Improved artefacts | Hierarchy | Extrinsic | Hierarchy |
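In the contest-based system, the crowd's ratings are aggregated by averaging. A minimal Python sketch of that step follows, assuming each design receives a list of numeric scores (the rating scale is an assumption). The resulting ranking is only an input to the winner-selection phase, where the hierarchy (the client or jury) still makes the decision. The function name `rank_by_average` is hypothetical.

```python
from statistics import mean


def rank_by_average(ratings: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Rank designs by the mean of their crowd ratings, highest first.

    Hypothetical helper; designs that received no ratings are left out.
    """
    averages = {design: mean(scores) for design, scores in ratings.items() if scores}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


ratings = {"A": [4.0, 5.0, 3.0], "B": [2.0, 3.0], "C": [5.0, 5.0, 4.0], "D": []}
for design, score in rank_by_average(ratings):
    print(f"{design}: {score:.2f}")  # C: 4.67, then A: 4.00, then B: 2.50
```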

| Phase | What (goal) | What (output) | Who | Why | How |
|---|---|---|---|---|---|
| 1. Challenge | Create | Brief | Hierarchy | Extrinsic | Hierarchy |
| 2. Artefact generation | Create | Artefacts | Crowd | Extrinsic | Collection |
| 3. Selection | Decide | Selection count | Crowd | Extrinsic | Voting |
| 4. Review | Create | How to improve the artefacts | Crowd | Extrinsic | Collection |
| 5. Stopping condition | Decide | Best design | Hierarchy | Extrinsic | Hierarchy |
| 6. Improve artefact | Create | Improved artefacts | Crowd | Extrinsic | Collection |
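The network-based phases form a loop: artefacts are generated once, then selected, reviewed and improved until the hierarchy's stopping condition is met. The Python sketch below shows only that control flow. Every callable (`generate`, `select`, `review`, `should_stop`, `improve`) and the `max_iterations` cap are hypothetical placeholders for work done by people, not components of the system described in the paper.

```python
from typing import Callable, TypeVar

A = TypeVar("A")  # type of a design artefact (sketch, model, ...)


def run_network_workflow(
    generate: Callable[[], list[A]],
    select: Callable[[list[A]], list[A]],
    review: Callable[[A], str],
    should_stop: Callable[[list[A], int], bool],
    improve: Callable[[A, str], A],
    max_iterations: int = 10,
) -> list[A]:
    """Control flow of phases 2 to 6 of the network-based system (illustrative only)."""
    artefacts = generate()                           # 2. crowd generates artefacts
    for iteration in range(1, max_iterations + 1):
        selected = select(artefacts)                 # 3. crowd votes on the artefacts
        reviews = [review(a) for a in selected]      # 4. crowd reviews each selected artefact
        if should_stop(selected, iteration):         # 5. hierarchy checks the stopping condition
            return selected
        # 6. crowd improves each selected artefact according to its review
        artefacts = [improve(a, r) for a, r in zip(selected, reviews)]
    return artefacts  # iteration cap reached without meeting the stopping condition


# Toy usage: artefacts are integers and "improving" one adds 1.
result = run_network_workflow(
    generate=lambda: [1, 5, 3, 8],
    select=lambda xs: sorted(xs, reverse=True)[:2],
    review=lambda x: "add one",
    should_stop=lambda xs, i: max(xs) >= 12,
    improve=lambda x, note: x + 1,
)
print(result)  # [12, 9]
```

Passing the human steps in as functions keeps the sketch focused on the ordering of phases; in practice each call would correspond to a round of crowd or client activity.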

| Local | Global | Emergence | Kind |
|---|---|---|---|
| 1. Q&A | Question and Answers collection | Consensus | Collective Intelligence |
| 2. Design generation | Design collection | No | |
| 3. Individual vote | Aggregated votes | Crowd opinion | Wisdom of the Crowd |

| Local | Global | Emergence | Kind |
|---|---|---|---|
| 1. Artefact generation | Artefact collection | No | |
| 2. Review generation | Review collection | No | |
| 3. Artefact improvement | Collection of sequential artefact improvements | Consensus | Collective Intelligence |
| 4. Individual selection | Aggregated selections | Consensus | Wisdom of the Crowd |

| Design Iteration (i) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Number of produced designs in each iteration | 9 | 12 | 10 | 8 | 6 | 6 | 6 | 8 | 8 | 8 |
| Participation index (D) | 0 | 1 | 2 | 3 | 3 | 4 | 5 | 5 | 5 | 5 |

| Iteration | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Designer 2 | 1.926 | 1.926 | 1.926 | 2.071 | 1.117 | 0.899 | 0.764 | 0.799 | 0.813 | 0.738 |
| Designer 3 | 0.000 | 0.000 | 0.000 | 0.144 | 0.154 | 0.000 | 0.138 | 0.144 | 0.107 | 0.366 |
| Designer 4 | 0.963 | 0.963 | 0.963 | 0.722 | 0.539 | 0.578 | 0.523 | 0.385 | 0.608 | 0.527 |
| Designer 5 | 0.193 | 0.193 | 0.193 | 0.433 | 0.443 | 0.482 | 0.427 | 0.722 | 0.926 | 0.848 |
| Designer 6 | 2.697 | 2.697 | 2.697 | 1.686 | 1.310 | 1.204 | 1.024 | 1.236 | 1.208 | 1.064 |
| Designer 7 | | | | 0.530 | 0.539 | 0.578 | 0.523 | 0.385 | 0.608 | 0.751 |
| Designer 8 | 1.252 | 1.252 | 1.252 | 1.236 | 1.069 | 1.040 | 0.876 | 0.888 | 0.926 | 0.896 |
| Designer 9 | 0.963 | 0.963 | 0.963 | 0.915 | 0.668 | 0.819 | 0.754 | 0.690 | 0.795 | 0.751 |
| Designer 10 | 0.578 | 0.578 | 0.578 | 0.337 | 0.250 | 0.289 | 0.234 | 0.222 | 0.203 | 0.352 |
| Designer 11 | | 0.899 | 0.899 | 1.044 | 1.053 | 1.092 | 1.037 | 1.044 | 1.006 | 0.976 |
| Designer 12 | | 0.963 | 0.867 | 1.011 | 1.021 | 1.060 | 1.005 | 1.011 | 0.974 | 0.944 |
| Designer 13 | | 1.349 | 1.349 | 1.493 | 1.503 | 1.541 | 1.486 | 1.493 | 1.456 | 1.426 |
| Designer 14 | | 0.096 | 0.433 | 0.578 | 0.588 | 0.626 | 0.571 | 0.578 | 0.540 | 0.511 |
| Designer 15 | | 1.541 | 1.541 | 1.686 | 1.695 | 1.734 | 1.679 | 1.686 | 1.648 | 1.618 |
| Designer 16 | | | 1.252 | 1.397 | 1.406 | 1.445 | 1.390 | 1.397 | 1.359 | 1.329 |
| Designer 17 | | | 1.220 | 1.365 | 1.374 | 1.413 | 1.358 | 1.365 | 1.327 | 1.297 |
| Designer 18 | | | 0.289 | 0.433 | 0.443 | 0.482 | 0.427 | 0.433 | 0.396 | 0.366 |
| Collective distance | 0.514 | 0.321 | 0.214 | 0.353 | 0.321 | 0.375 | 0.349 | 0.353 | 0.314 | 0.283 |
| High performing individuals | 2 | 3 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 0 |
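The last two rows of the distance table can be related by a simple rule: counting, in each iteration, the designers whose distance is strictly lower than the collective distance reproduces the "High performing individuals" row, provided that designers who joined the process later have empty cells in the earlier iterations. The Python sketch below checks this reading. The rule is an inference from the tabulated values rather than a definition quoted from the method, and the distance measure itself is taken as given; `count_high_performers` is a hypothetical name.

```python
from typing import Optional

# Distances per designer for iterations 1-10, copied from the table above.
# None marks iterations before a designer joined (assumption: late joiners'
# values occupy the last columns of their rows).
DISTANCES: dict[int, list[Optional[float]]] = {
    2: [1.926, 1.926, 1.926, 2.071, 1.117, 0.899, 0.764, 0.799, 0.813, 0.738],
    3: [0.000, 0.000, 0.000, 0.144, 0.154, 0.000, 0.138, 0.144, 0.107, 0.366],
    4: [0.963, 0.963, 0.963, 0.722, 0.539, 0.578, 0.523, 0.385, 0.608, 0.527],
    5: [0.193, 0.193, 0.193, 0.433, 0.443, 0.482, 0.427, 0.722, 0.926, 0.848],
    6: [2.697, 2.697, 2.697, 1.686, 1.310, 1.204, 1.024, 1.236, 1.208, 1.064],
    7: [None, None, None, 0.530, 0.539, 0.578, 0.523, 0.385, 0.608, 0.751],
    8: [1.252, 1.252, 1.252, 1.236, 1.069, 1.040, 0.876, 0.888, 0.926, 0.896],
    9: [0.963, 0.963, 0.963, 0.915, 0.668, 0.819, 0.754, 0.690, 0.795, 0.751],
    10: [0.578, 0.578, 0.578, 0.337, 0.250, 0.289, 0.234, 0.222, 0.203, 0.352],
    11: [None, 0.899, 0.899, 1.044, 1.053, 1.092, 1.037, 1.044, 1.006, 0.976],
    12: [None, 0.963, 0.867, 1.011, 1.021, 1.060, 1.005, 1.011, 0.974, 0.944],
    13: [None, 1.349, 1.349, 1.493, 1.503, 1.541, 1.486, 1.493, 1.456, 1.426],
    14: [None, 0.096, 0.433, 0.578, 0.588, 0.626, 0.571, 0.578, 0.540, 0.511],
    15: [None, 1.541, 1.541, 1.686, 1.695, 1.734, 1.679, 1.686, 1.648, 1.618],
    16: [None, None, 1.252, 1.397, 1.406, 1.445, 1.390, 1.397, 1.359, 1.329],
    17: [None, None, 1.220, 1.365, 1.374, 1.413, 1.358, 1.365, 1.327, 1.297],
    18: [None, None, 0.289, 0.433, 0.443, 0.482, 0.427, 0.433, 0.396, 0.366],
}
COLLECTIVE = [0.514, 0.321, 0.214, 0.353, 0.321, 0.375, 0.349, 0.353, 0.314, 0.283]
REPORTED = [2, 3, 2, 2, 2, 2, 2, 2, 2, 0]  # "High performing individuals" row


def count_high_performers(
    distances: dict[int, list[Optional[float]]], collective: list[float]
) -> list[int]:
    """Per iteration, count participating designers below the collective distance."""
    counts = []
    for i, threshold in enumerate(collective):
        counts.append(sum(1 for row in distances.values()
                          if row[i] is not None and row[i] < threshold))
    return counts


print(count_high_performers(DISTANCES, COLLECTIVE))  # [2, 3, 2, 2, 2, 2, 2, 2, 2, 0]
```

If the late joiners' values were instead placed in the first columns, iteration 1 would count four designers below 0.514 rather than the reported two, which is why the sketch uses the right-aligned reading.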


© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dortheimer, J. Collective Intelligence in Design Crowdsourcing. Mathematics 2022, 10, 539. https://doi.org/10.3390/math10040539