Transfer Learning Analysis of Multi-Class Classification for Landscape-Aware Algorithm Selection

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Problem Portfolio Representation

3.2. Machine Learning

- COCO (Instance Split): The model is trained and tested on the COCO instances using instance-based stratified cross-validation. In each fold, the training data contains 14 instances of each of the 24 base problems, and the testing data contains the remaining 15th instance of each base problem. The model can therefore learn from all 24 base problems.

- COCO (Problem Split): The model is trained and tested on the COCO instances using problem-based stratified cross-validation. In each fold, the training data contains all 15 instances of 23 base problems, and the testing data contains the 15 instances of the remaining 24th base problem. The model therefore learns from only 23 base problems and must predict on a base problem it has not seen.

- Artificial: The model is trained and tested on the artificial instances. Because the artificial set cannot be split by base problem, the training and testing sets are split randomly using cross-validation.

- Combined: The model is trained and tested on a combined set of both artificial and COCO instances. Cross-validation is used to split the training and testing sets.

- Transfer: The model is trained on the artificial instances and tested on the COCO instances. The full artificial set is used for training, and the full COCO set is used for testing.
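The difference between the two COCO splits can be illustrated with a minimal sketch. It assumes that each fold holds out exactly one instance index (Instance Split) or one base problem (Problem Split); the helper `leave_one_group_out` and the index layout are hypothetical and are not taken from the authors' code.

```python
from itertools import product

N_PROBLEMS, N_INSTANCES = 24, 15  # 24 base problems, 15 instances each


def leave_one_group_out(groups):
    """Yield (held_out_value, train_idx, test_idx), one fold per distinct group value."""
    for value in sorted(set(groups)):
        train = [i for i, g in enumerate(groups) if g != value]
        test = [i for i, g in enumerate(groups) if g == value]
        yield value, train, test


# One row per (base problem, instance) pair: 24 * 15 = 360 rows.
pairs = list(product(range(1, N_PROBLEMS + 1), range(1, N_INSTANCES + 1)))
problem_ids = [p for p, _ in pairs]
instance_ids = [i for _, i in pairs]

# Instance Split: hold out one instance index, so every base problem stays in training.
instance_folds = list(leave_one_group_out(instance_ids))

# Problem Split: hold out one base problem, so the test problem is unseen in training.
problem_folds = list(leave_one_group_out(problem_ids))

for name, folds in [("instance", instance_folds), ("problem", problem_folds)]:
    _, train, test = folds[0]
    print(f"{name} split: {len(folds)} folds, {len(train)} train / {len(test)} test rows")
```

Under these assumptions, an Instance Split fold trains on 336 rows and tests on 24, while a Problem Split fold trains on 345 rows and tests on 15. The Transfer scenario needs no fold logic, since the whole artificial set is the training data and the whole COCO set is the test data.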

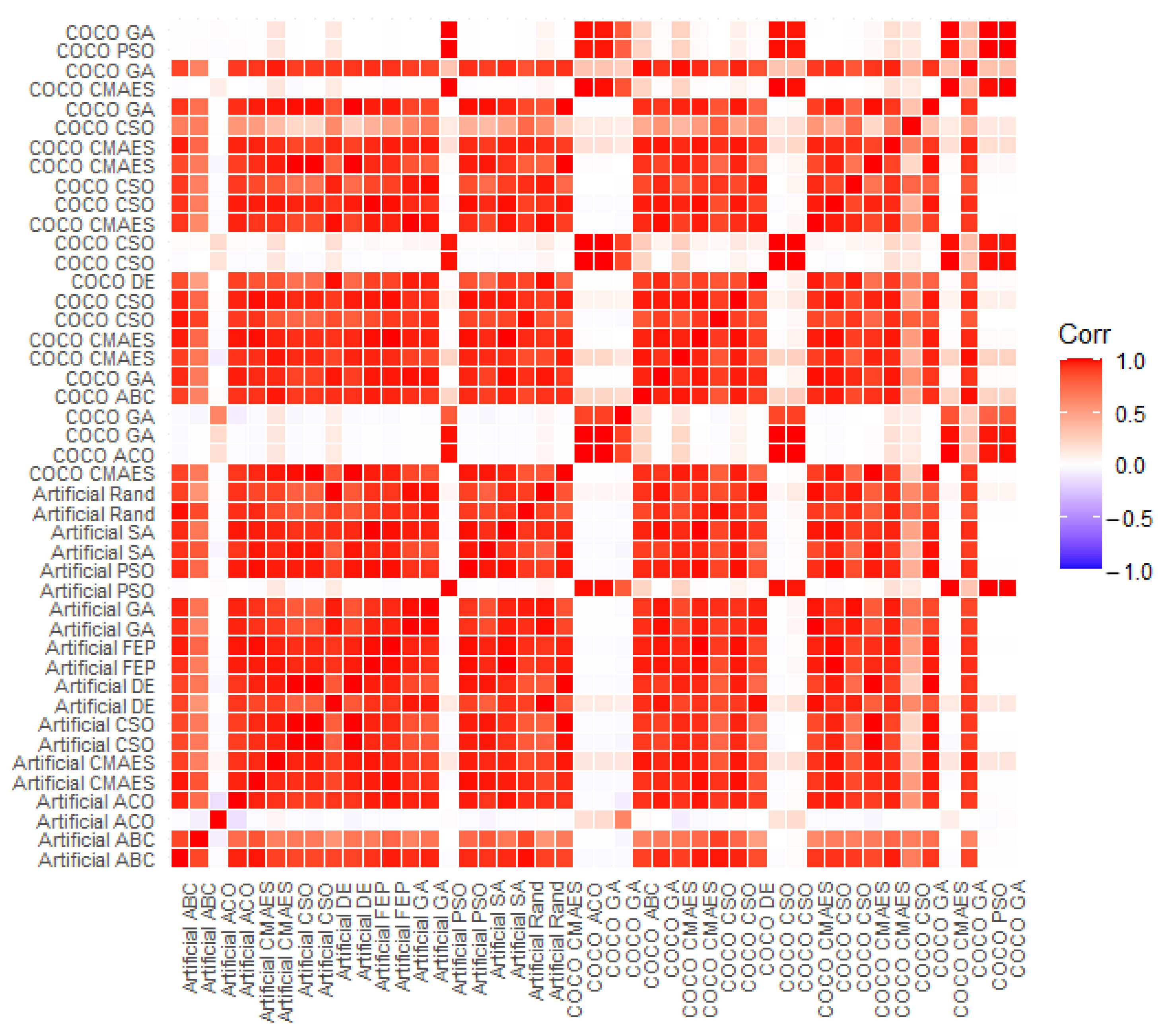

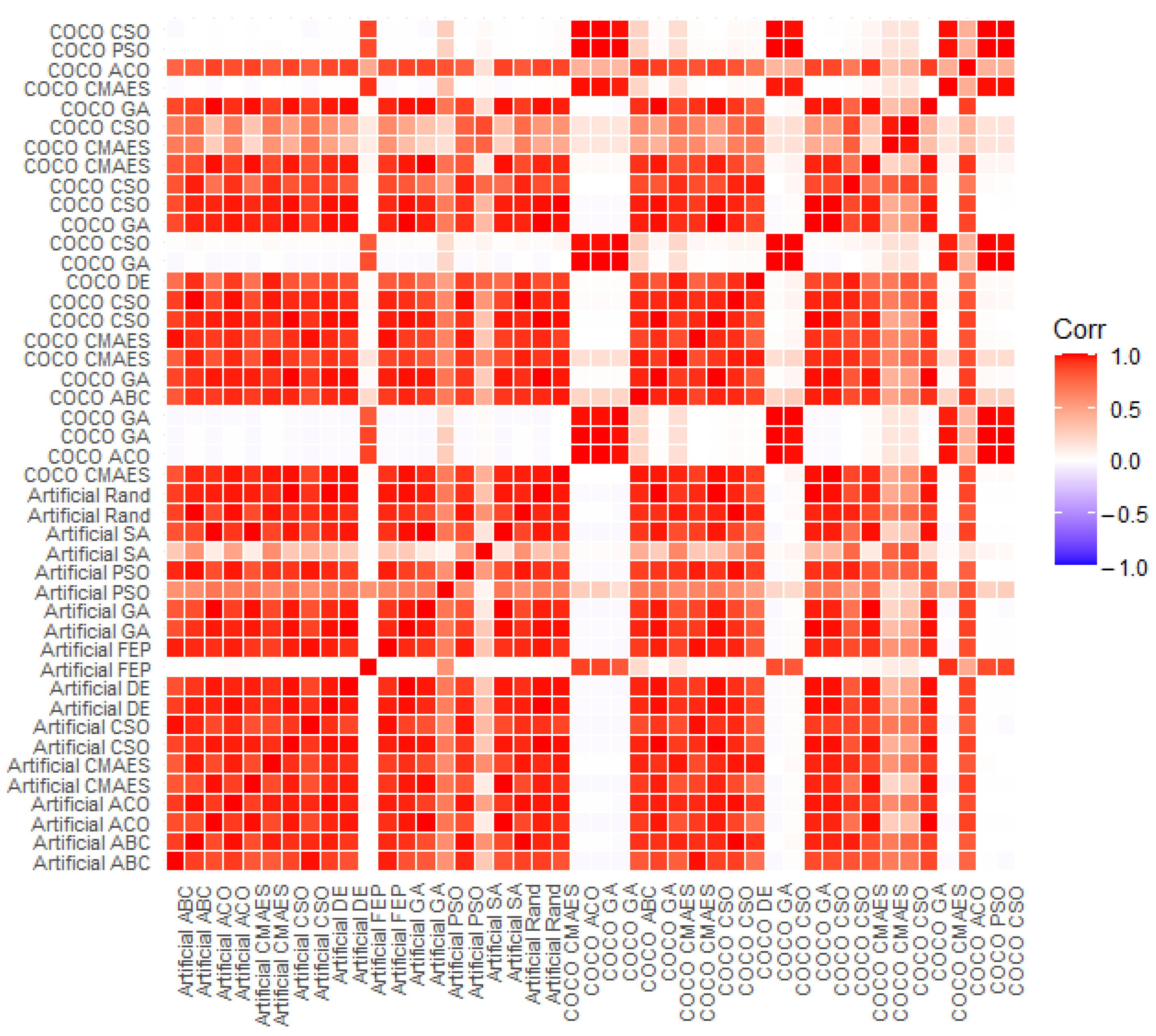

3.3. Complementarity Analysis

4. Results

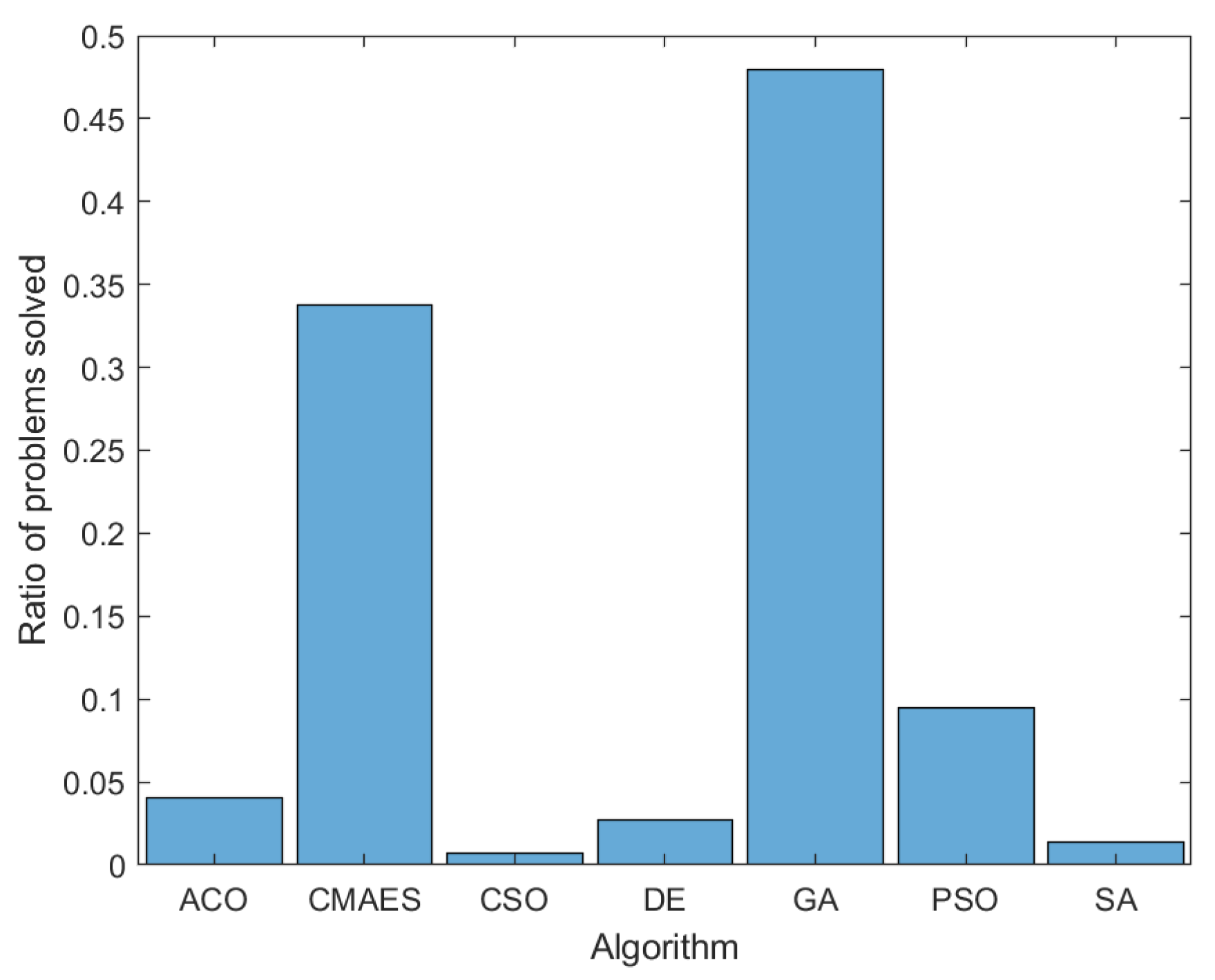

4.1. Problem Selection and Feature Calculation

4.2. Machine Learning

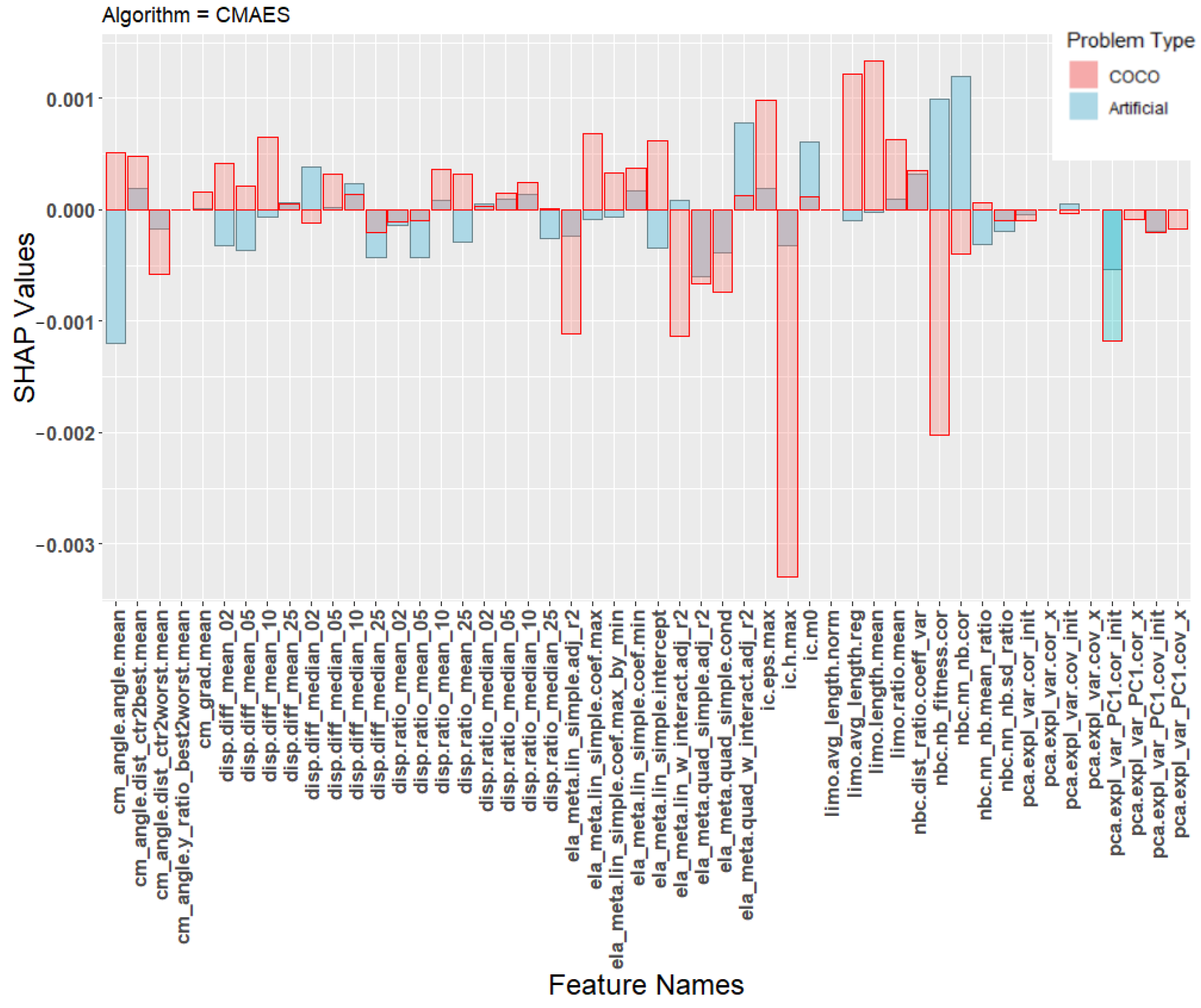

4.3. Complementarity Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| COCO | Comparing Continuous Optimizers |

| ELA | Exploratory Landscape Analysis |

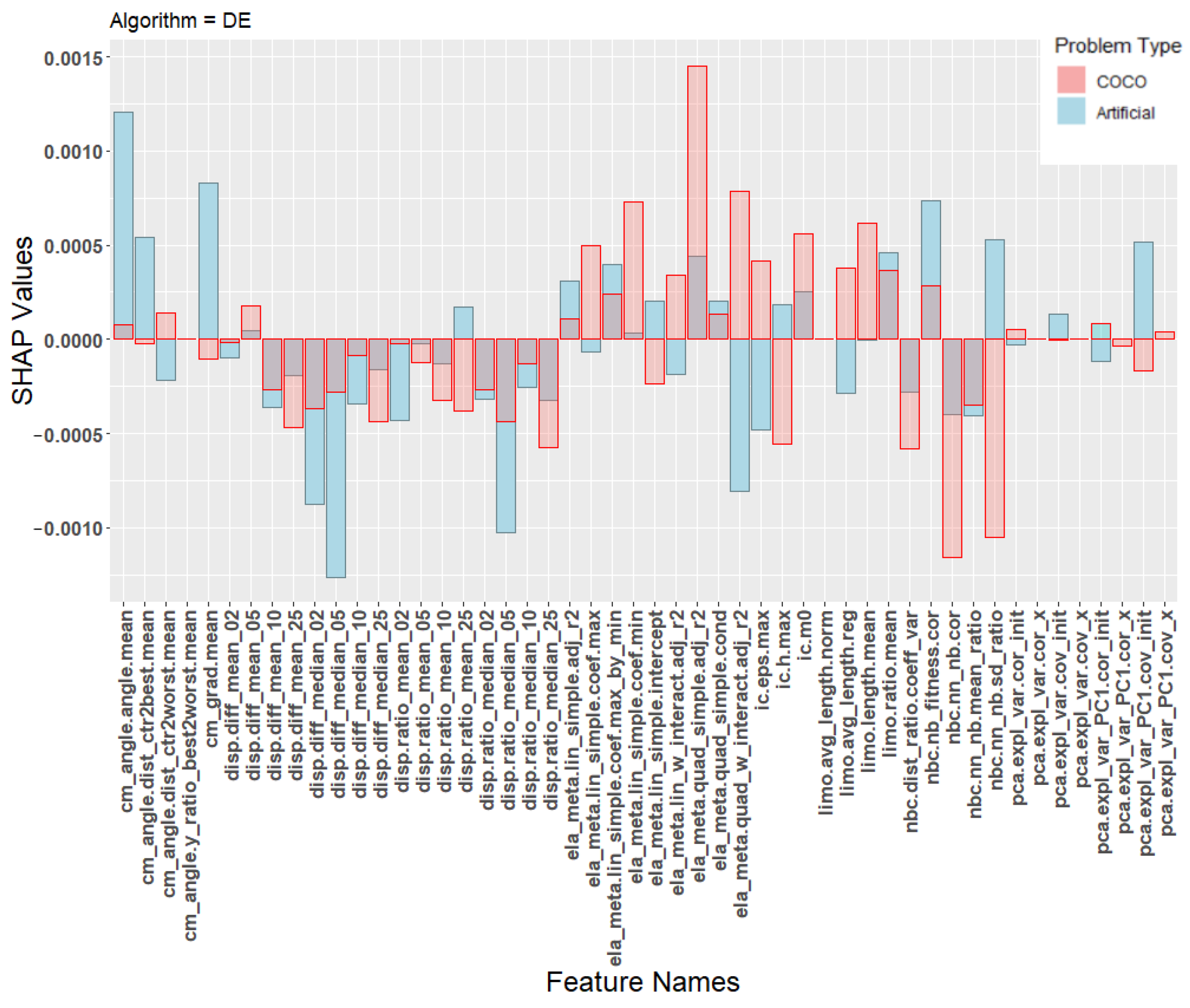

| SHAP | Shapley Additive Explanations |

| ICOP | Interpolated Continuous Optimisation Problems |

| ABC | Artificial Bee Colony |

| ACO | Ant Colony Optimization |

| CSO | Competitive Swarm Optimizer |

| DE | Differential Evolution |

| FEP | Fast Evolutionary Programming |

| GA | Genetic Algorithm |

| PSO | Particle Swarm Optimization |

| SA | Simulated Annealing |

| Rand | Random Search |

| CMA-ES | Covariance matrix adaptation |

| SVD | Singular Value Decomposition |

References

1. Rice, J.R. The algorithm selection problem. In Advances in Computers; Elsevier: Amsterdam, The Netherlands, 1976; Volume 15, pp. 65–118.

2. Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

3. Tian, Y.; Peng, S.; Zhang, X.; Rodemann, T.; Tan, K.C.; Jin, Y. A Recommender System for Metaheuristic Algorithms for Continuous Optimization Based on Deep Recurrent Neural Networks. IEEE Trans. Artif. Intell. 2020, 1, 5–18.

4. Hansen, N.; Auger, A.; Ros, R.; Mersmann, O.; Tušar, T.; Brockhoff, D. COCO: A platform for comparing continuous optimizers in a black-box setting. Optim. Methods Softw. 2021, 36, 114–144.

5. Mersmann, O.; Preuss, M.; Trautmann, H. Benchmarking Evolutionary Algorithms: Towards Exploratory Landscape Analysis. In Parallel Problem Solving from Nature, PPSN XI; Schaefer, R., Cotta, C., Kołodziej, J., Rudolph, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 73–82.

6. Mersmann, O.; Bischl, B.; Trautmann, H.; Preuss, M.; Weihs, C.; Rudolph, G. Exploratory landscape analysis. In Proceedings of the 2011 Annual Conference on Genetic and Evolutionary Computation, Dublin, Ireland, 12–16 July 2011; pp. 829–836.

7. Kerschke, P.; Trautmann, H. Automated algorithm selection on continuous black-box problems by combining exploratory landscape analysis and machine learning. Evol. Comput. 2019, 27, 99–127.

8. Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 4768–4777.

9. Kerschke, P.; Hoos, H.H.; Neumann, F.; Trautmann, H. Automated algorithm selection: Survey and perspectives. Evol. Comput. 2019, 27, 3–45.

10. Jankovic, A.; Eftimov, T.; Doerr, C. Towards Feature-Based Performance Regression Using Trajectory Data. In Applications of Evolutionary Computation (EvoApplications 2021); Springer: Berlin/Heidelberg, Germany, 2021; Volume 12694, pp. 601–617.

11. Belkhir, N.; Dreo, J.; Savéant, P.; Schoenauer, M. Per instance algorithm configuration of CMA-ES with limited budget. In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO’17), Berlin, Germany, 15–19 July 2017; pp. 681–688.

12. Muñoz, M.A.; Sun, Y.; Kirley, M.; Halgamuge, S.K. Algorithm selection for black-box continuous optimization problems: A survey on methods and challenges. Inf. Sci. 2015, 317, 224–245.

13. Derbel, B.; Liefooghe, A.; Vérel, S.; Aguirre, H.E.; Tanaka, K. New features for continuous exploratory landscape analysis based on the SOO tree. In Proceedings of the Foundations of Genetic Algorithms (FOGA’19), Potsdam, Germany, 27–29 August 2019; pp. 72–86.

14. Jankovic, A.; Doerr, C. Landscape-aware fixed-budget performance regression and algorithm selection for modular CMA-ES variants. In Proceedings of the 2020 Genetic and Evolutionary Computation Conference, Cancún, Mexico, 8–12 July 2020; pp. 841–849.

15. Jankovic, A.; Popovski, G.; Eftimov, T.; Doerr, C. The Impact of Hyper-Parameter Tuning for Landscape-Aware Performance Regression and Algorithm Selection. arXiv 2021, arXiv:2104.09272.

16. Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

17. Lacroix, B.; McCall, J. Limitations of benchmark sets and landscape features for algorithm selection and performance prediction. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Prague, Czech Republic, 13–17 July 2019; pp. 261–262.

18. Muñoz, M.A.; Smith-Miles, K. Generating new space-filling test instances for continuous black-box optimization. Evol. Comput. 2020, 28, 379–404.

19. Lacroix, B.; Christie, L.A.; McCall, J.A. Interpolated continuous optimisation problems with tunable landscape features. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Berlin, Germany, 15–19 July 2017; pp. 169–170.

20. Fischbach, A.; Bartz-Beielstein, T. Improving the reliability of test functions generators. Appl. Soft Comput. 2020, 92, 106315.

21. Eftimov, T.; Popovski, G.; Renau, Q.; Korošec, P.; Doerr, C. Linear Matrix Factorization Embeddings for Single-objective Optimization Landscapes. In Proceedings of the 2020 IEEE Symposium Series on Computational Intelligence (SSCI), Canberra, ACT, Australia, 1–4 December 2020; pp. 775–782.

22. Škvorc, U.; Eftimov, T.; Korošec, P. Understanding the problem space in single-objective numerical optimization using exploratory landscape analysis. Appl. Soft Comput. 2020, 90, 106138.

23. Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report; Citeseer: Kayseri, Turkey, 2005.

24. Socha, K.; Dorigo, M. Ant colony optimization for continuous domains. Eur. J. Oper. Res. 2008, 185, 1155–1173.

25. Cheng, R.; Jin, Y. A competitive swarm optimizer for large scale optimization. IEEE Trans. Cybern. 2014, 45, 191–204.

26. Storn, R.; Price, K. Differential evolution – A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

27. Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

28. Deb, K.; Agrawal, R.B. Simulated binary crossover for continuous search space. Complex Syst. 1995, 9, 115–148.

29. Deb, K.; Goyal, M. A combined genetic adaptive search (GeneAS) for engineering design. Comput. Sci. Inform. 1996, 26, 30–45.

30. Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the Sixth International Symposium on Micro Machine and Human Science (MHS’95), Nagoya, Japan, 4–6 October 1995; pp. 39–43.

31. Van Laarhoven, P.J.; Aarts, E.H. Simulated annealing. In Simulated Annealing: Theory and Applications; Springer: Berlin/Heidelberg, Germany, 1987; pp. 7–15.

32. Zabinsky, Z.B. Random search algorithms. In Wiley Encyclopedia of Operations Research and Management Science; Wiley: Hoboken, NJ, USA, 2010.

33. Hansen, N.; Ostermeier, A. Completely derandomized self-adaptation in evolution strategies. Evol. Comput. 2001, 9, 159–195.

34. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

35. Kerschke, P.; Trautmann, H. Comprehensive Feature-Based Landscape Analysis of Continuous and Constrained Optimization Problems Using the R-Package flacco. In Applications in Statistical Computing: From Music Data Analysis to Industrial Quality Improvement; Bauer, N., Ickstadt, K., Lübke, K., Szepannek, G., Trautmann, H., Vichi, M., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 93–123.

36. Ying, X. An overview of overfitting and its solutions. J. Phys. Conf. Ser. 2019, 1168, 022022.

37. Liaw, A.; Wiener, M. Classification and Regression by randomForest. R News 2002, 2, 18–22.

38. Friedman, J.; Hastie, T.; Tibshirani, R. The Elements of Statistical Learning; Springer Series in Statistics New York; Springer: Berlin/Heidelberg, Germany, 2001; Volume 1.

39. Lundberg, S.M. Shap. Available online: https://github.com/slundberg/shap (accessed on 30 December 2021).

| Name | Abbreviation | Reference |
|---|---|---|
| Artificial Bee Colony | ABC | [23] |
| Ant Colony Optimization | ACO | [24] |
| Competitive Swarm Optimizer | CSO | [25] |
| Differential Evolution | DE | [26] |
| Fast Evolutionary Programming | FEP | [27] |
| Genetic Algorithm | GA | [28,29] |
| Particle Swarm Optimization | PSO | [30] |
| Simulated Annealing | SA | [31] |
| Random Search | Rand | [32] |
| Covariance matrix adaptation evolution strategy | CMA-ES | [33] |

| Algorithm | Parameters |
|---|---|
| ABC | swarm_size = 100; limit = num_onlookers · dim; num_onlookers = 0.5 · swarm_size; num_employed_bees = 0.5 · swarm_size; num_scouts = 1 |
| ACO | ants used in an iteration = 100; q = 0.3 |
| CSO | Acceleration constant = 1 |
| DE | F = 0.5; CR = 0.1; strategy = rand/1/bin |
| FEP | tournament size = 10 |
| GA | T_max = 200 Crossover probability = 1.0 = 0.5 |
| PSO | Acceleration constant = 1; Inertia weight = 0.4 |
| SA | Initial temperature = 0.1; Cooling factor = 0.99 |
| Rand | No parameters |
| CMA-ES | |

| Number of Problems | 500 | 1000 | 5000 | 10,000 |
|---|---|---|---|---|
| 500 | 0.9995 | 0.9998 | 0.9715 | 0.9987 |
| 1000 | - | 0.9926 | 0.9922 | 0.9987 |
| 5000 | - | - | 0.9973 | 0.9989 |
| 10,000 | - | - | - | 0.9302 |

| cm_angle.dist_ctr2best.mean | cm_angle.dist_ctr2worst.mean |
| cm_angle.angle.mean | cm_angle.y_ratio_best2worst.mean |
| cm_grad.mean | ela_meta.lin_simple.adj_r2 |
| ela_meta.lin_simple.intercept | ela_meta.lin_simple.coef.min |
| ela_meta.lin_simple.coef.max | ela_meta.lin_simple.coef.max_by_min |
| ela_meta.lin_w_interact.adj_r2 | ela_meta.quad_simple.adj_r2 |
| ela_meta.quad_simple.cond | ela_meta.quad_w_interact.adj_r2 |
| ic.h.max | ic.eps.max |
| ic.m0 | disp.ratio_mean_02 |
| disp.ratio_mean_05 | disp.ratio_mean_10 |
| disp.ratio_mean_25 | disp.ratio_median_02 |
| disp.ratio_median_05 | disp.ratio_median_10 |
| disp.ratio_median_25 | disp.diff_mean_02 |
| disp.diff_mean_05 | disp.diff_mean_10 |
| disp.diff_mean_25 | disp.diff_median_02 |
| disp.diff_median_05 | disp.diff_median_10 |
| disp.diff_median_25 | limo.avg_length.reg |
| limo.avg_length.norm | limo.length.mean |
| limo.ratio.mean | nbc.nn_nb.sd_ratio |
| nbc.nn_nb.mean_ratio | nbc.nn_nb.cor |
| nbc.dist_ratio.coeff_var | nbc.nb_fitness.cor |
| pca.expl_var.cov_x | pca.expl_var.cor_x |
| pca.expl_var.cov_init | pca.expl_var.cor_init |
| pca.expl_var_PC1.cov_x | pca.expl_var_PC1.cor_x |
| pca.expl_var_PC1.cov_init | pca.expl_var_PC1.cor_init |

| Parameter | Value |
|---|---|
| Number of Trees (ntree) | 1000 |
| Number of sampled variables (mtry) | sqrt(number of variables) |
| Sampling with replacement (replace) | True |
| Cutoff (cutoff) | |
| Sampling Size (sampsize) | Number of instances |
| Minimum size of terminal nodes (nodesize) | 1 |
| Maximum number of terminal nodes (maxnodes) | No limit |
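The parameter names in this table (ntree, mtry, sampsize, nodesize, maxnodes) are those of the R randomForest package. For readers working in Python, the sketch below shows what a roughly comparable scikit-learn configuration might look like. This is a swapped-in library and not the authors' setup: the mapping in the comments is an assumption, the two implementations differ in details, and `random_state=0` is only an illustrative choice.

```python
from sklearn.ensemble import RandomForestClassifier

# Assumed scikit-learn counterparts of the R randomForest settings in the table.
RF_PARAMS = {
    "n_estimators": 1000,     # ntree = 1000
    "max_features": "sqrt",   # mtry = sqrt(number of variables)
    "bootstrap": True,        # replace = True (sample with replacement)
    "max_samples": None,      # sampsize = number of instances (draw n_samples per tree)
    "min_samples_leaf": 1,    # nodesize = 1 (minimum size of terminal nodes)
    "max_leaf_nodes": None,   # maxnodes = no limit
}
# The cutoff row has no value in the table, so no counterpart is set here.

# random_state is an illustrative assumption for reproducibility, not a value from the table.
model = RandomForestClassifier(random_state=0, **RF_PARAMS)
print(model.get_params()["n_estimators"])
```

The model would then be trained with `model.fit(X, y)`, where `X` holds the landscape features of each problem instance and `y` the label of its best-performing algorithm.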

| Problem ID | Best Algorithm |
|---|---|
| 1 | CMAES (GA) |
| 2 | ACO |
| 3 | GA |
| 4 | GA |
| 5 | CMAES |
| 6 | GA |
| 7 | DE (CSO, ABC, CMAES) |
| 8 | CMAES (ACO, GA, CSO) |
| 9 | CSO (CMAES) |
| 10 | CSO |
| 11 | DE (CSO) |
| 12 | GA |
| 13 | GA (CSO, CMAES) |
| 14 | CMAES (GA, CSO) |
| 15 | CSO |
| 16 | CSO |
| 17 | CSO (GA, CMAES, ACO) |
| 18 | CSO (GA, CMAES) |
| 19 | PSO (CSO, GA) |
| 20 | GA |
| 21 | FEP (CSO, GA, CMAES) |
| 22 | FEP (ACO, CSO, GA, ABC) |
| 23 | PSO (GA) |
| 24 | GA (CSO) |

| Model | Accuracy | Precision | Recall |
|---|---|---|---|
| COCO (Instance Split) | 0.68 | 0.69 | 0.60 |
| COCO (Problem Split) | 0.21 | 0.24 | 0.32 |
| Artificial | 0.54 | 0.52 | 0.54 |
| Combined | 0.61 | 0.60 | 0.59 |
| Transfer | 0.20 | 0.13 | 0.14 |

| Pred. / True | ABC | ACO | CMAES | CSO | DE | FEP | GA | PSO | SA | Rand |
|---|---|---|---|---|---|---|---|---|---|---|
| ABC | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| ACO | 0 | 2 | 2 | 5 | 0 | 3 | 3 | 2 | 0 | 0 |
| CMAES | 0 | 2 | 10 | 12 | 12 | 0 | 43 | 11 | 0 | 0 |
| CSO | 1 | 12 | 17 | 47 | 2 | 9 | 44 | 1 | 0 | 0 |
| DE | 1 | 4 | 9 | 27 | 6 | 1 | 16 | 8 | 0 | 0 |
| FEP | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| GA | 0 | 0 | 0 | 0 | 0 | 0 | 9 | 0 | 0 | 0 |
| PSO | 0 | 4 | 8 | 16 | 0 | 0 | 9 | 0 | 0 | 0 |
| SA | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| Rand | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
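As a worked check of how an overall accuracy follows from a confusion matrix like the one above, the sketch below sums the diagonal (correct predictions) and divides by the total count. It assumes that rows are predicted labels and columns are true labels, which is one reading of the "True" and "Pred." header cells; the variable names are illustrative.

```python
LABELS = ["ABC", "ACO", "CMAES", "CSO", "DE", "FEP", "GA", "PSO", "SA", "Rand"]

# Rows: predicted algorithm; columns: true best algorithm (assumed orientation).
CM = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0],       # ABC
    [0, 2, 2, 5, 0, 3, 3, 2, 0, 0],       # ACO
    [0, 2, 10, 12, 12, 0, 43, 11, 0, 0],  # CMAES
    [1, 12, 17, 47, 2, 9, 44, 1, 0, 0],   # CSO
    [1, 4, 9, 27, 6, 1, 16, 8, 0, 0],     # DE
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0],       # FEP
    [0, 0, 0, 0, 0, 0, 9, 0, 0, 0],       # GA
    [0, 4, 8, 16, 0, 0, 9, 0, 0, 0],      # PSO
    [0, 0, 0, 1, 0, 0, 0, 0, 0, 0],       # SA
    [0, 0, 0, 0, 0, 0, 1, 0, 0, 0],       # Rand
]

correct = sum(CM[i][i] for i in range(len(LABELS)))  # diagonal entries
total = sum(sum(row) for row in CM)                  # all test instances
accuracy = correct / total

# Per-class recall: correct predictions divided by the true count (column sum).
true_counts = [sum(row[j] for row in CM) for j in range(len(LABELS))]
recall = {
    label: CM[j][j] / true_counts[j]
    for j, label in enumerate(LABELS)
    if true_counts[j] > 0  # skip classes that never occur as the true label
}

print(f"{correct} of {total} correct, accuracy = {accuracy:.3f}")
```

The total of 360 entries equals 24 base problems times 15 instances, and 74 correct predictions give an accuracy of about 0.206.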

| Model | Accuracy | Precision | Recall |
|---|---|---|---|
| COCO (Instance Split) | 0.68 | 0.68 | 0.62 |
| COCO (Problem Split) | 0.26 | 0.28 | 0.31 |
| Artificial | 0.53 | 0.53 | 0.54 |
| Combined | 0.59 | 0.60 | 0.59 |
| Transfer | 0.22 | 0.13 | 0.12 |

| cm_angle.angle.mean | ela_meta.lin_simple.adj_r2 |
| ela_meta.lin_simple.intercept | ela_meta.lin_simple.coef.min |
| ela_meta.quad_w_interact.adj_r2 | ela_meta.quad_simple.adj_r2 |
| ela_meta.lin_w_interact.adj_r2 | disp.ratio_mean_02 |
| disp.ratio_median_25 | nbc.nb_fitness.cor |
| pca.expl_var_PC1.cov_init | pca.expl_var.cov_init |
| pca.expl_var.cor_init | |

| Pred. / True | ABC | ACO | CMAES | CSO | DE | FEP | GA | PSO | SA | Rand |
|---|---|---|---|---|---|---|---|---|---|---|
| ABC | 0 | 0 | 0 | 0 | 0 | 0 | 10 | 0 | 0 | 0 |
| ACO | 0 | 2 | 6 | 7 | 0 | 3 | 6 | 0 | 0 | 0 |
| CMAES | 0 | 2 | 9 | 12 | 12 | 0 | 42 | 7 | 0 | 0 |
| CSO | 1 | 8 | 7 | 47 | 3 | 10 | 23 | 4 | 0 | 0 |
| DE | 1 | 0 | 3 | 6 | 2 | 0 | 2 | 4 | 0 | 0 |
| FEP | 0 | 0 | 4 | 3 | 3 | 0 | 2 | 7 | 0 | 0 |
| GA | 0 | 0 | 8 | 12 | 0 | 0 | 22 | 0 | 0 | 0 |
| PSO | 0 | 12 | 8 | 17 | 0 | 0 | 15 | 0 | 0 | 0 |
| SA | 0 | 0 | 1 | 3 | 0 | 0 | 0 | 0 | 0 | 0 |
| Rand | 0 | 0 | 0 | 1 | 0 | 0 | 3 | 0 | 0 | 0 |

| Model | Accuracy (All) | Accuracy (Invariant) |
|---|---|---|
| COCO (Instance Split) | 0.90 | 0.88 |
| COCO (Problem Split) | 0.47 | 0.49 |
| Transfer | 0.40 | 0.43 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Škvorc, U.; Eftimov, T.; Korošec, P. Transfer Learning Analysis of Multi-Class Classification for Landscape-Aware Algorithm Selection. Mathematics 2022, 10, 432. https://doi.org/10.3390/math10030432
