Abstract

Deep learning is expanding and continues to evolve toward greater accuracy, speed, and cost-effectiveness. The core ingredients for its promising results are appropriate data, sufficient computational resources, and the best use of a particular algorithm. Applied to medical image analysis tasks, these algorithms have achieved outstanding results compared with classical machine learning approaches. Localizing the area of interest is a challenging task of vital importance in computer aided diagnosis. Generally, radiologists interpret radiographs based on their knowledge and experience. However, they can sometimes overlook or misinterpret findings for various reasons, e.g., workload or judgmental error. This creates the need for specialized AI tools that assist radiologists by highlighting abnormalities, if they exist. To develop a deep learning driven localizer, several alternatives are available in terms of architectures, datasets, performance metrics, and approaches. An informed choice among these alternatives can lead to better outcomes with fewer resources. This paper describes in detail the components, along with explainable AI, required to develop an abnormality localizer for X-ray images. Moreover, strongly supervised versus weakly supervised approaches are discussed at length in light of the limited availability of annotated data. Likewise, other related challenges are presented along with recommendations based on a review of relevant literature and similar studies. This review is helpful in streamlining the development of an AI based localizer for X-ray images and is extendable to other radiological images.

Keywords:

deep learning; supervised learning; weakly supervised learning; computer aided diagnosis; X-ray; class activation map; explainable AI

MSC: 68T07

1. Introduction

Chest X-ray (CXR) imaging is one of the most common methods radiologists use to diagnose lung diseases. To assist radiologists in diagnosis, researchers have proposed computer aided diagnosis (CAD) systems since the 1970s [1]. These systems are intended to minimize the risk of false negative cases while improving the speed of diagnosis [2]. Initially, CAD relied on rule-based systems built from if-then rules. The rule-based approach became limited as use cases, complexity, and unstructured data grew. Thus, the trend shifted toward data mining by the 1990s [3]. Now, with the rise of big data and the availability of computational resources, the focus of research has shifted toward machine learning to achieve excellence in CAD.

Machine learning became a de facto approach that learns diagnostic patterns from data without explicitly coding if-then rules. This approach requires data of suitable quality and quantity, together with the appropriate use of a learning algorithm. Over the past five decades, classical machine learning algorithms have performed well on lower-complexity tasks with structured data [4]. However, they become inefficient for complex unstructured data, e.g., in image analysis, classification, object detection, and segmentation. This creates the need for a more advanced machine learning subfield called deep learning.

Over the past ten years, deep learning has outperformed earlier approaches across vision tasks on non-medical images. For medical images, state-of-the-art deep learning techniques have also achieved human expert-level performance in diagnosing certain abnormalities in dermatology, cardiology, and radiology.

One of the main reasons for such outstanding results is the acquisition of labeled data. Labeled data comprise two parts, i.e., an image and a tag. For an X-ray image, the abnormality tag can be, e.g., normal, pneumonia, or cardiomegaly. Furthermore, the tag (also referred to as a label or annotation) may contain limited or extended information about the image. For instance, a classification task requires only the class label, while detection requires additional information such as the x, y, width, and height of the target object. The annotation becomes even richer for segmentation tasks, where pixel-level separation is the target.
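To make the difference in label richness concrete, the sketch below models one annotation record per task in Python. The class and function names (`ClassificationLabel`, `DetectionLabel`, `SegmentationLabel`, `values_per_annotation`) are hypothetical and do not reflect the schema of any particular dataset; the box uses the (x, y, width, height) convention mentioned above.

```python
from dataclasses import dataclass
from typing import List, Tuple


# Hypothetical annotation records; names are illustrative, not a dataset schema.
@dataclass
class ClassificationLabel:
    image_id: str
    labels: List[str]  # image-level tags, e.g. ["cardiomegaly"]; may be multi-label


@dataclass
class DetectionLabel:
    image_id: str
    label: str
    box: Tuple[float, float, float, float]  # (x, y, width, height) in pixels


@dataclass
class SegmentationLabel:
    image_id: str
    label: str
    mask: List[List[int]]  # one 0/1 value per pixel of the image


def values_per_annotation(ann) -> int:
    """Rough count of values stored in one annotation record."""
    if isinstance(ann, ClassificationLabel):
        return len(ann.labels)
    if isinstance(ann, DetectionLabel):
        return 1 + len(ann.box)  # class tag + 4 box values
    if isinstance(ann, SegmentationLabel):
        return 1 + sum(len(row) for row in ann.mask)  # class tag + every pixel
    raise TypeError(f"unknown annotation type: {type(ann).__name__}")


cls_ann = ClassificationLabel("cxr_0001", ["cardiomegaly"])
det_ann = DetectionLabel("cxr_0001", "cardiomegaly", (310.0, 420.0, 380.0, 260.0))
seg_ann = SegmentationLabel("cxr_0001", "cardiomegaly", [[0] * 64 for _ in range(64)])

print(values_per_annotation(cls_ann))  # 1
print(values_per_annotation(det_ann))  # 5
print(values_per_annotation(seg_ann))  # 4097
```

Even for a toy 64 × 64 mask, the segmentation record stores thousands of values, whereas real radiographs are far larger.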

Alongside classification, practitioners want CAD systems to highlight abnormalities [5,6,7] as a second opinion [5]. Such highlights better assist physicians in reaching a diagnostic conclusion and help reduce false negative cases. According to the literature, deep learning has established a good reputation for medical image classification [6], bounding box formation [7], and segmentation [8]. Nevertheless, deep learning research on medical images confronts many challenges [9,10]. The availability of quality data in large volume, lack of interpretability, resource (memory, speed, space) management, and hyperparameter selection are some of the major bottlenecks [11].

Several works discuss state-of-the-art image classification models from generic to medical perspectives. For instance, [9,12,13,14] provide in-depth details about deep learning architectures, their strengths, and their challenges in general. A good deal of literature, including [15,16,17,18,19], discusses these architectures for medical image analysis. The focus of these efforts is on classification and prediction at the image level [14]. For localization with bounding boxes and segmentation, Refs. [6,7,15,16,17,18,19,20,21] provide brief details for X-ray images. For instance, the survey [6] examined articles on the application of deep learning to chest radiographs published prior to March 2021, covering publicly available datasets together with localization, segmentation, and image-level prediction techniques. Another study [17] mainly focused on techniques based on salient object detection and highlighted challenges in the area. To the best of our knowledge, little discussion is available in the literature that addresses the challenges of weakly supervised learning from an explainable AI perspective. Furthermore, class activation mapping has forged a new branch that offers interpretable modeling while providing localization as a by-product. The primary focus of this paper is to explore approaches that overcome the need to acquire richly labeled data and that enhance the interpretability of results for medical images. To date, the best results have been reported with supervised learning [9], where training data are labeled with rich information such as class labels, box labels (x, y, width, height), and/or masks. Such labels are expensive to generate for medical images in terms of time and effort. Furthermore, deep learning models trained on such annotations are not interpretable enough for human inspection [11,22].
Subject matter experts (SMEs) often need to debug a model's learned deficiencies in order to optimize it. Without interpretability, this analysis is performed without knowing how the model generated its output from a given input, so the model remains a black box and may harbor bias that leads to skewed decisions.

Approaches that detect objects without strong annotation are referred to as weakly supervised learning. They leverage image-level class labels to infer localization through heatmaps, saliency maps, or attention. We observed a growing trend toward weakly supervised techniques for localization in medical images. Recently, class activation map (CAM)-based approaches [23,24,25,26,27,28,29,30,31,32,33] have gained popularity in deep learning, offering (1) interpretability and (2) weakly supervised localization. CAMs contain sufficient information to derive bounding boxes and segmented regions. In this research, we explore deep learning approaches that offer the best performance for classification, localization, and interpretability of medical images, in a generic form applicable to diagnosis.
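The following sketch illustrates how a CAM can yield a bounding box. It assumes the CAM formulation in which the map for class c is the weighted sum of the last convolutional layer's K feature maps, $M_c(x, y) = \sum_k w_k^c f_k(x, y)$, with $w_k^c$ taken from the classifier layer that follows global average pooling. The box step (keeping cells at or above 20% of the peak value, a commonly used setting) is a simplifying assumption: it encloses every retained cell instead of selecting the largest connected component. Function names are hypothetical, and plain Python lists stand in for tensors.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]


def class_activation_map(feature_maps: List[Grid], class_weights: List[float]) -> Grid:
    """CAM for one class: M_c(x, y) = sum_k w_k^c * f_k(x, y)."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for fmap, wk in zip(feature_maps, class_weights):
        for i in range(h):
            for j in range(w):
                cam[i][j] += wk * fmap[i][j]
    return cam


def cam_to_box(cam: Grid, rel_threshold: float = 0.2) -> Optional[Tuple[int, int, int, int]]:
    """Box (x, y, width, height) enclosing all cells >= rel_threshold * peak.

    Simplification: no connected-component analysis; returns None if the
    map has no positive activation.
    """
    peak = max(max(row) for row in cam)
    if peak <= 0:
        return None
    cut = rel_threshold * peak
    ys = [i for i, row in enumerate(cam) for v in row if v >= cut]
    xs = [j for row in cam for j, v in enumerate(row) if v >= cut]
    x0, y0 = min(xs), min(ys)
    return x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1


# Two toy 4x4 feature maps and the classifier weights for one class.
f1 = [[0, 0, 0, 0], [0, 4, 2, 0], [0, 2, 1, 0], [0, 0, 0, 0]]
f2 = [[0, 0, 0, 1], [0, 0, 0, 1], [0, 0, 0, 0], [0, 0, 0, 0]]
cam = class_activation_map([f1, f2], [1.0, 0.1])
print(cam_to_box(cam))  # (1, 1, 2, 2)
```

In practice, the CAM is upsampled to the input resolution before the box is extracted, so the box coordinates would be scaled accordingly.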

The rest of the paper is organized from generic to specific. Section 2 presents a generic background on deep learning and its evolution from shallow artificial neural networks to deeper architectures such as convolutional neural networks. Section 3 describes metrics for evaluating the performance of deep learning models. Section 4 briefly discusses chest X-ray datasets. Using these datasets, Section 5 discusses the most common state-of-the-art classification and localization approaches based on supervised learning. Since supervised learning demands rich labels, which are difficult to obtain in large volumes, weakly supervised approaches become the next choice for localization. Section 6 describes weakly supervised learning approaches for localization in the context of medical applications. Based on the literature review and available options, Section 7 lists the observed gaps and challenges along with recommendations.

2. Background

Deep learning is a machine learning approach that uses artificial neural networks (ANNs) as its principal component. ANNs are loosely inspired by the human brain and are used to solve general learning problems. Although ANNs were equipped with the back-propagation algorithm [34] for learning in the 1980s and 1990s, they remained largely out of practice because suitable data and computational resources were unavailable. With the advancement of parallel computing and GPU technology, they gained popularity in the 2000s and became a de facto approach in machine learning.

At its most basic, deep learning teaches a computer how to map inputs to outputs via hidden layers, making predictions based on training data. Predictions can be made for many tasks, e.g., regression, classification, object detection, and segmentation.

2.1. Artificial Neural Network

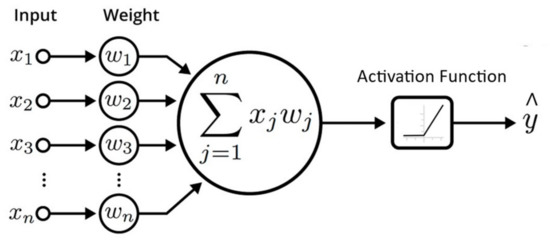

Artificial neurons are interconnected units, or nodes, that serve as the foundation of an ANN and are meant to mimic the function of biological neurons. Each artificial neuron receives inputs and generates a single output that can be transmitted to numerous other neurons (see Figure 1). The input $X = (x_1, x_2, x_3, \dots, x_n)$ is weighted with learnable parameters $W = (w_1, w_2, w_3, \dots, w_n)$. Their dot product $W \cdot X = \sum_{i=1}^{n} w_i x_i$ is computed first, and then an activation function, e.g., tanh, sigmoid, or ReLU, is applied. In the training phase, the output of the activation function is compared with the actual label, and the difference (error) is backpropagated to update W in proportion to its contribution to the error. This process is repeated over the whole dataset multiple times until the difference between the activation output and the actual label reaches its minimum possible value.

Figure 1.

Representation of Artificial Neural Network as Shallow Neuron.
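The training loop described above can be sketched for a single sigmoid neuron in plain Python. As assumptions beyond the text, the sketch adds a bias term b, uses binary cross-entropy as the measure of the difference between prediction and label (so the error signal is simply prediction minus label), and updates the weights after every sample (stochastic gradient descent). The toy task is the logical OR function.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    # Weighted sum (dot product of W and X) plus bias, then the activation.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_neuron(samples, labels, lr=0.5, epochs=2000, seed=0):
    """Train one sigmoid neuron with per-sample gradient descent on cross-entropy."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in range(len(samples[0]))]
    b = 0.0
    for _ in range(epochs):  # one epoch = one pass over the whole dataset
        for x, y in zip(samples, labels):
            delta = predict(w, b, x) - y  # dLoss/dz for sigmoid + cross-entropy
            w = [wi - lr * delta * xi for wi, xi in zip(w, x)]
            b -= lr * delta
    return w, b


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
Y = [0, 1, 1, 1]  # logical OR
w, b = train_neuron(X, Y)
print([round(predict(w, b, x)) for x in X])  # [0, 1, 1, 1]
```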

2.2. Multilayer Perceptron

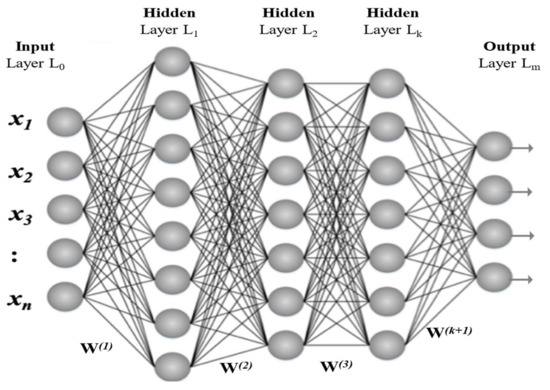

Deep learning architectures can be formed by stacking artificial neurons into multiple hidden layers. Adding more hidden layers makes the architecture deeper, increasing the potential for better performance. Figure 2 illustrates a deep learning architecture with three hidden layers, called a multilayer perceptron (MLP). This kind of architecture is expensive in terms of computational resources because every neuron is connected to every neuron in the next layer. Therefore, such networks are modified in many ways, e.g., by dropping out connections or reducing the number of neurons in hidden layers.

Figure 2.

Visualization of Deep Learning Model using Multilayer Perceptron.

MLPs are useful in classification and regression tasks for structured data. However, they cannot perform well on unstructured data, e.g., images and sound streams.
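A minimal forward pass through an MLP with three hidden layers, like the one in Figure 2, is sketched below. The layer sizes, ReLU activations, and random initialization are illustrative assumptions. The parameter count at the end shows why fully connected layers become expensive for image input: every pixel of a flattened 224 × 224 image connects to every neuron of the first hidden layer.

```python
import math
import random


def init_mlp(layer_sizes, seed=0):
    """Random weights and zero biases for each fully connected layer."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(layers, x):
    """ReLU in hidden layers; the last layer returns raw scores (logits)."""
    for idx, (weights, biases) in enumerate(layers):
        z = [sum(wij * xj for wij, xj in zip(row, x)) + bi
             for row, bi in zip(weights, biases)]
        x = z if idx == len(layers) - 1 else [max(0.0, v) for v in z]
    return x


def count_params(layer_sizes):
    # Each layer has n_in * n_out weights plus n_out biases.
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


sizes = [4, 8, 8, 8, 3]  # input, three hidden layers, output
mlp = init_mlp(sizes)
print(len(forward(mlp, [0.5, -1.0, 2.0, 0.0])))  # 3
print(count_params(sizes))  # 211
print(count_params([224 * 224, 512, 512, 512, 2]))  # 26216962
```

Roughly 26 million parameters are needed before the network has learned anything about spatial structure, which motivates the convolutional architectures discussed next.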

2.3. Convolutional Neural Network

The convolutional neural network (CNN) is another type of deep learning architecture, which replaces general matrix multiplication with the convolution operator [12]. The CNN architecture was inspired by the functioning of the visual cortex. Its design is specialized for pixel data, and it is mostly applied in image and sound analysis tasks. The convolution operator is the core of a CNN; because the same kernel is applied at every position, it makes CNNs shift equivariant, i.e., a shifted input yields a correspondingly shifted feature map. The convolution kernels (filters) slide along the input features and extract useful information into compact feature maps.
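The sliding-kernel operation can be sketched in a few lines of Python. Like most deep learning libraries, this sketch actually computes cross-correlation (the kernel is not flipped); it also assumes "valid" padding and a single input channel, and a Sobel-style edge kernel stands in for a learned filter. Shifting the input edge by one column shifts the response by one column, which illustrates the shift property of convolution.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix, stride: int = 1) -> Matrix:
    """'Valid' 2-D convolution as used in CNNs (cross-correlation, no kernel flip).

    The same kernel weights are reused at every position of the image.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    return [
        [
            sum(kernel[u][v] * image[i * stride + u][j * stride + v]
                for u in range(kh) for v in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


sobel_x = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # responds to vertical edges
img = [[0, 0, 1, 1, 1] for _ in range(5)]        # dark-to-bright edge
shifted = [[0, 0, 0, 1, 1] for _ in range(5)]    # same edge, one column right

print(conv2d(img, sobel_x))      # [[4, 4, 0], [4, 4, 0], [4, 4, 0]]
print(conv2d(shifted, sobel_x))  # [[0, 4, 4], [0, 4, 4], [0, 4, 4]]
```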

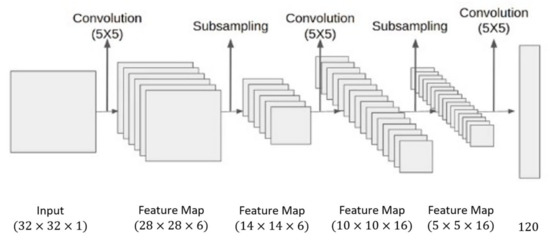

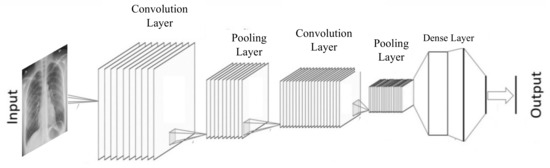

Pooling is another operator that is used in conjunction with convolution in almost all CNN architectures. Pooling reduces the spatial dimensions of the feature map, making the features more general and less dependent on their exact location in the image. Unlike convolution, however, the pooling operator is fixed: it has no parameters to learn during training, and it helps reduce overfitting. CNNs also use other operations to achieve better performance, such as dropout, batch normalization, and skip connections. There are many varieties of convolution-based neural network architectures. The two most common are end-to-end convolutional networks and hybrid networks that add non-convolutional components. End-to-end convolutional networks begin with a large spatial resolution and one or three channels and end with a 1 × 1 resolution but many channels (see Figure 3). Hybrid convolutional networks use their convolutional part for feature extraction, while the remaining part performs the final task, such as classification (see Figure 4). The convolutional neural network first gained popularity when Yann LeCun and colleagues developed LeNet for recognizing handwritten digits in 1989, later refined into LeNet-5 [35].

Figure 3.

LeNet-5 architecture.

Figure 4.

An illustration of Convolutional Neural Network with convolution and pooling layers for feature extraction and dense layer for classification.

This architecture consisted of 5 layers and employed a backpropagation algorithm for training. Motivated by its success, more scholars explored the approach and developed more robust CNN architectures.
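The pooling operator described earlier in this subsection can be sketched as 2 × 2 max pooling with stride 2, a common configuration assumed here. The function has no learnable parameters, and moving the strongest response to a different position inside the same window leaves the output unchanged, which is the small-scale location tolerance that pooling provides.

```python
def max_pool2d(fmap, size=2, stride=2):
    """Max pooling: keep the largest value in each size x size window."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [
        [max(fmap[i * stride + u][j * stride + v]
             for u in range(size) for v in range(size))
         for j in range(out_w)]
        for i in range(out_h)
    ]


fmap = [
    [1, 3, 0, 2],
    [4, 2, 1, 0],
    [0, 1, 5, 6],
    [2, 0, 7, 1],
]
# Same map, but the 4 in the top-left window has moved one row up.
moved = [
    [4, 3, 0, 2],
    [1, 2, 1, 0],
    [0, 1, 5, 6],
    [2, 0, 7, 1],
]
print(max_pool2d(fmap))   # [[4, 2], [2, 7]]
print(max_pool2d(moved))  # [[4, 2], [2, 7]]
```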

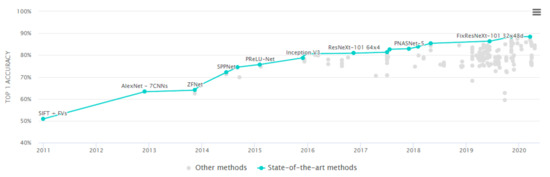

Meanwhile, during the 2000s, researchers including Fei-Fei Li were working on a project called ImageNet to create a large image dataset. The project crowdsourced its labeling process and launched the ImageNet challenge, in which the task was to recognize object categories in everyday images of the kind found on the Internet. The challenge became popular in the machine learning community, and various approaches competed in it. Classical methods hit a plateau in performance. In 2012, Krizhevsky et al. ranked first with their CNN-based network, AlexNet. Since then, the leaderboard has been dominated by CNN models (see Figure 5).

Figure 5.

ImageNet Challenge Leaderboard from 2011 to 2020.

ImageNet competitions promoted research in deep learning architectures, and these architectures remain a first choice for image classification tasks. Xception [36], VGG [37], ResNet [38,39], Inception [40], MobileNet [41,42], DenseNet [43], NASNetMobile [44], and EfficientNet [45] are just a few of them that are available in TensorFlow and PyTorch as ready-to-use modules. Since they can predict 1000 classes of everyday objects, they require only a few changes to be adapted to similar domains.

In the medical domain, these models have commonly been adapted starting from ImageNet weights, although a noticeable gap exists between natural images and medical images. For instance, to detect COVID-19 cases, the authors of [46] used the pretrained ImageNet models MobileNetV2, NASNetMobile, and EfficientNetB1. The same strategy was also adopted in [47]. These works used the pretrained networks as base models, which were later fine-tuned on medical images.

Most common deep learning models are available with pre-trained weights in TensorFlow, PyTorch, Caffe2, and MATLAB. Taking advantage of their availability and respective performance, we include them in our experimental setup. Based on their results in our research, they will be used for transfer and ensemble learning. Table 1 lists popular TensorFlow architectures.

Table 1.

Popular Deep learning models trained on ImageNet for classification.

3. Method for Performance Analysis

An evaluation metric is a key component used to gauge the performance of a machine learning model. Several metrics exist, and they must be understood and selected appropriately for a given task. The use of multiple metrics is widely observed in the medical domain [48]. This section briefly discusses various performance metrics.

3.1. Accuracy

Classification accuracy (CA), or simply accuracy, is the basic metric used to gauge the performance of a classification model in machine learning. It is the ratio of the number of correct predictions to the total number of input samples.

Accuracy is the simplest metric, but it can give a false sense of high performance, e.g., on imbalanced data where one class dominates. Other metrics give a clearer picture of performance by using the following components in their equations:

- True Positive: output that correctly indicates the presence of a condition.

- True Negative: output that correctly indicates the absence of a condition.

- False Positive: output that wrongly indicates the presence of a condition.

- False Negative: output that wrongly indicates the absence of a condition.

3.2. Precision

Precision, also known as positive predictive value (PPV), is the proportion of cases predicted as positive that are actually positive, i.e., true positives divided by the sum of true positives and false positives.

3.3. Sensitivity

Sensitivity or recall is the proportion of actual positive cases which are correctly identified.

3.4. Specificity

Specificity is the proportion of actual negative cases which are correctly identified.
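The four metrics above can be computed from the true positive (TP), true negative (TN), false positive (FP), and false negative (FN) counts, using accuracy = (TP + TN)/(TP + TN + FP + FN), precision = TP/(TP + FP), sensitivity = TP/(TP + FN), and specificity = TN/(TN + FP). The sketch below, with hypothetical function names, evaluates a trivial classifier that labels every radiograph as normal on an imbalanced toy test set, showing how accuracy alone can be misleading. Returning 0.0 when a denominator is zero is an assumed convention.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels, 1 = abnormal, 0 = normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def ratio(num, den):
    return num / den if den else 0.0  # assumed convention for empty denominators


def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }


y_true = [0] * 95 + [1] * 5  # 95 normal, 5 abnormal radiographs
always_normal = [0] * 100    # a classifier that never flags anything
print(classification_metrics(y_true, always_normal))
# {'accuracy': 0.95, 'precision': 0.0, 'sensitivity': 0.0, 'specificity': 1.0}
```

The 95% accuracy hides a sensitivity of zero: every abnormal case is missed.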

3.5. Jaccard Index

Jaccard index is also known as intersection over union (IoU). Almost all object detection (bounding-box) methods use IoU as the core evaluation measure. For two sets A and B, it is defined as the size of their intersection divided by the size of their union:

$$ J(A, B) = \frac{|A \cap B|}{|A \cup B|} \qquad (5) $$

In computer vision, IoU evaluates the overlap between two bounding boxes, typically a predicted box and a ground-truth box. The key difficulty with IoU in weakly supervised learning is that ground-truth boxes are unavailable, which makes it challenging to validate the performance of a given model. One alternative is to quantify IoU on a smaller test set that has ground-truth annotations. Such a test set can be taken from the same distribution and annotated by field experts, e.g., radiologists. Another option is to apply the same model to a richly annotated dataset from another domain, where the ground-truth localization is not exposed or used during training but only for validation and testing.
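For boxes in the (x, y, width, height) format used by the datasets discussed in Section 4, IoU can be computed as in the sketch below. The helper `localization_accuracy` (a hypothetical name) reflects the first option described above: it scores only the images that have expert-drawn ground truth, counting an image as correctly localized when IoU reaches a threshold. The 0.5 default is an assumption, although it is a widely used convention in object detection.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x, y, width, height), (x, y) = top-left corner."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def localization_accuracy(pred_boxes, gt_boxes, threshold=0.5):
    """Share of annotated images whose predicted box reaches the IoU threshold.

    Images without a prediction count as failures; images without
    ground truth are not scored at all.
    """
    hits = [k in pred_boxes and iou(pred_boxes[k], gt) >= threshold
            for k, gt in gt_boxes.items()]
    return sum(hits) / len(hits) if hits else 0.0


gt = {"cxr_1": (100, 100, 200, 200), "cxr_2": (50, 60, 80, 80)}    # expert boxes
pred = {"cxr_1": (150, 150, 200, 200), "cxr_2": (55, 60, 80, 80),  # e.g. from CAMs
        "cxr_3": (0, 0, 10, 10)}                                    # no ground truth
print(round(iou(gt["cxr_1"], pred["cxr_1"]), 3))  # 0.391
print(localization_accuracy(pred, gt))            # 0.5
```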

3.6. Evaluation Metrics for Medical Diagnosis

In medical diagnosis, the cost of failing to diagnose a fatal disease in a sick person is much higher than the cost of sending a healthy person for more tests. Therefore, sensitivity and specificity are the most suitable metrics for classification tasks.
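One practical consequence is that the decision threshold of a classifier is often lowered to favor sensitivity. The sketch below, with hypothetical function names and toy scores, picks the highest threshold that reaches a target sensitivity (95% here, an arbitrary choice) and reports the specificity paid for it.

```python
import math


def sens_spec(scores, labels, thr):
    """Sensitivity and specificity when scores >= thr are flagged abnormal."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= thr)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < thr)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < thr)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= thr)
    return tp / (tp + fn), tn / (tn + fp)


def threshold_for_sensitivity(scores, labels, target=0.95):
    """Highest threshold whose sensitivity on (scores, labels) is >= target."""
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    if not positives:
        raise ValueError("at least one positive case is required")
    # Minimum number of positives that must score at or above the threshold;
    # the small epsilon guards against floating-point error in target * n.
    needed = math.ceil(target * len(positives) - 1e-9)
    return positives[needed - 1]


scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1, 0.4, 0.3, 0.05, 0.7]
labels = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]

print(sens_spec(scores, labels, 0.5))       # (0.75, 0.8333333333333334)
thr = threshold_for_sensitivity(scores, labels)
print(thr, sens_spec(scores, labels, thr))  # 0.35 (1.0, 0.6666666666666666)
```

Lowering the threshold from 0.5 to 0.35 catches every abnormal case in this toy set, at the price of two more healthy cases sent for further tests.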

4. Chest X-ray Datasets

Deep learning performs best on large volumes of data. With digitization technologies, medical institutions can collect radiographs in large volumes. Alongside, researchers have extracted the textual reports associated with the radiographs and applied natural language processing (NLP) to categorize them for further research [49]. Computer aided labeling tools, e.g., LabelMe, LabelImg, VIA, and ImageTagger [50], have also enabled faster data preparation. Similarly, Snorkel [51] supports generating masks for segmentation tasks with limited human supervision. Using some of these facilities, X-ray datasets with large numbers of images have been formed for research purposes.

Some of the most cited datasets are listed in Table 2. With the formation of large datasets, e.g., ChestXray8 [49], CheXpert [52], and VinDr-CXR [53], deep learning models became sufficiently trainable to achieve better performance.

Table 2.

Popular Chest X-ray datasets.

4.1. ChestXray8

ChestXray8 [49] consists of 112,120 chest radiographs from 30,805 patients, collected between 1992 and 2015 at the National Institutes of Health (Northeast USA). Each CXR is an 8-bit grayscale image of 1024 × 1024 pixels and can have multiple labels. NLP was applied to the associated reports to label the images with 14 types of abnormalities.

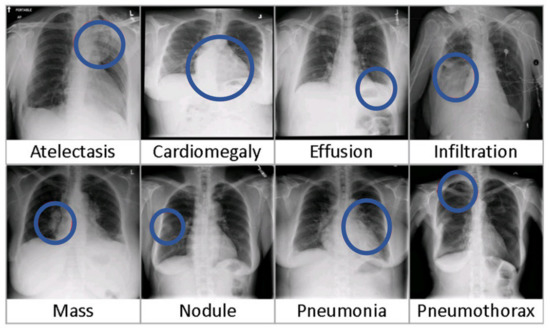

The dataset also includes 880 images with hand-labeled bounding boxes for localization. Some CXR images have more than one bounding box, giving 984 box labels in total. Only eight of the 14 disease types were marked with bounding-box annotations. Figure 6 illustrates sample images for some classes. Because its image-level labels were obtained largely without manual annotation, this dataset has known issues regarding label quality [54].

Figure 6.

Illustration of Eight common thoracic diseases from ChestXray8.

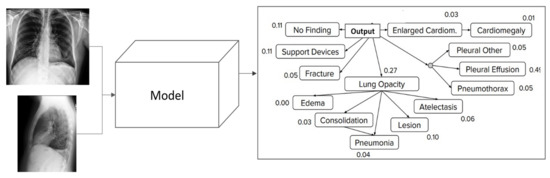

4.2. CheXpert

The CheXpert [52] dataset was formed at Stanford Hospital and consists of 224,316 chest radiographs from 65,240 unique patients. The images were collected between 2002 and 2017 and cover 12 abnormalities (see Figure 7). Each image is 8-bit grayscale at its original resolution.

Figure 7.

Predicting abnormality (pneumonia) from CheXpert.

The dataset was annotated using a rule-based labeler applied to the radiology reports, which specified the presence, absence, uncertainty, or lack of mention of each of the 12 abnormalities.

4.3. PadChest

The PadChest [55] dataset contains 160,868 images from 67,000 patients. It was created at San Juan Hospital (Spain) from images acquired between 2009 and 2017. The images are at their original resolution with 16-bit grayscale. The annotations for these images were created in a two-step process. First, a portion of 27,593 images was manually labeled by a group of physicians. Then, using these labels, an attention-based RNN was trained to annotate the rest of the dataset. The labels were mapped onto a hierarchical taxonomy based on the UMLS (Unified Medical Language System) standard.

4.4. VinDr-CXR

The VinDr-CXR [53] dataset was created from images collected at two of Vietnam's largest hospitals, i.e., Hospital 108 and the Hanoi Medical University Hospital. The creators followed a three-step process to build the dataset. First, data were collected from the hospitals between 2018 and 2020. Second, the data were filtered to remove outliers, such as images of body parts other than the chest. Lastly, the annotation step was executed. The dataset consists of 18,000 CXRs (15,000 for training and 3,000 for testing), which were manually annotated by a group of 17 experienced radiologists with classification and localization labels for 22 common thoracic diseases.

4.5. Montgomery County and Shenzhen Set

The Montgomery County and Shenzhen set [56] consists of two CXR datasets produced by the U.S. National Library of Medicine. The Montgomery County (MC) set contains manually segmented lung masks and offers a benchmark for evaluating automatic lung segmentation methods. It has 138 radiographs, of which 58 are TB-positive cases. They were collected in collaboration with the Department of Health and Human Services, Montgomery County, Maryland (USA). The radiographs are 12-bit grayscale images of either 4020 × 4892 or 4892 × 4020 pixels. The Shenzhen set contains 662 CXRs, including 335 cases with manifestations of TB. They were collected in collaboration with Shenzhen No. 3 People's Hospital, Guangdong Medical College, Shenzhen, China. The images are in PNG format with a resolution of 3000 × 3000 pixels. The datasets offer high-quality lung segmentation masks, making them good candidates for test or validation sets.

4.6. JSRT Database

The JSRT database [57] was developed by the Japanese Society of Radiological Technology. It comprises 154 CXRs with lung nodules, of which 100 are malignant and 54 are benign. Each image has a 2048 × 2048 matrix size, a 0.175 mm pixel size, and 12-bit depth (4096 gray levels). The lung nodule images are divided into five groups according to their degree of subtlety. Moreover, nodule locations are provided as X and Y coordinates. Although small, this dataset is still useful for research and educational purposes. Classical machine learning methods can be applied to it, but it is likely too small for training deep learning models effectively.

4.7. MIMIC-CXR

MIMIC-CXR [58] is a CXR dataset containing 371,920 images from 64,588 patients. The radiographs were collected from the emergency department of Beth Israel Deaconess Medical Center between 2011 and 2016. The images are 8-bit grayscale at full resolution and were labeled using a rule-based labeler applied to the associated reports.

The datasets described above are not an exhaustive list of available datasets; they are among the most cited and publicly available to date. They have been included in this review to cover the breadth of available dataset types. For instance, Montgomery, Shenzhen, and JSRT offer rich annotations for segmentation [56,57,58]. Other notable datasets, e.g., PadChest [55], VinDr-CXR [53], Tuberculosis [56], and Kaggle [17], contribute to data diversity. Together with others [19,59], they form a sound benchmark for weakly supervised localization via heatmaps, bounding boxes, and segmentation [7,60,61,62,63]. A major gap can be observed in the interoperability of models across datasets: a model that is trained, validated, and tested on one dataset has generally not been reported to remain valid on another dataset from the same domain. We refer to this gap as a lack of domain sharing. For example, if a COVID-19 classifier achieves 90% accuracy on dataset A, it should achieve comparable performance on a dataset B from the same domain.

5. Diagnosis Using Chest Radiographs

Detecting signs of disease in X-ray images has been widely studied [54,62,64]. Deep learning methodologies in this area have demonstrated their value for localization and classification [64]. This advancement was fueled by the availability of large datasets and computational resources, which encouraged researchers to design deeper and wider deep learning architectures [37]. Some architectures became more popular because they are general-purpose rather than tied to a specific domain [9].

Medical diagnosis is a highly sensitive area where precision and reliability are key requirements for any CAD system [3]. A patient with an abnormal condition must be flagged even when the likelihood of abnormality is slight.

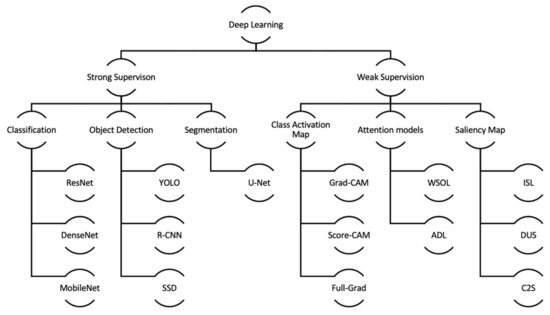

Figure 8 illustrates a taxonomy, drawn from the literature, of deep learning approaches that can be used for object detection and segmentation. This taxonomy can also guide the development or training of a localizer for medical images such as X-ray images. Strong supervision, as explained in Section 5.1, should be preferred if the given dataset contains all the required ground-truth information. In such cases, classification tasks should be performed with a strongly supervised approach, and the same approach should be used for object detection and segmentation when richly annotated information is available. However, most datasets in the medical domain may not contain the required spatial information. For such scenarios, weak supervision can be considered.

Figure 8.

Taxonomy of Localization Approaches within Deep Learning.

This section briefly discusses trends in medical diagnosis using deep learning in general, while keeping the focus on localization. The first subsection highlights supervised deep learning methods that deal with image-level prediction and localization. The same tasks under weakly supervised learning are discussed in Section 6.

5.1. Classification and Localization Using Supervised Learning

A large body of literature has highlighted the strengths of supervised deep learning in classification and localization [9,13,21]. Formally, supervised learning refers to a task that learns $f: \mathcal{X} \rightarrow \mathcal{Y}$ from a training dataset $D = \{(x_1, y_1), \dots, (x_m, y_m)\}$, where $\mathcal{X}$ is the feature space, $\mathcal{Y}$ is the label space, $x_i \in \mathcal{X}$, and $y_i \in \mathcal{Y}$. The pairs $(x_i, y_i)$ are assumed to be drawn independently and identically from an unknown distribution $\mathcal{D}$. In this approach, predictive models are constructed by learning from a large number of training examples, each of which has at least one label indicating its ground-truth output [65].

Deep learning has achieved top-ranking performance in classification tasks with supervised learning. In computer vision, classification is also known as image-level prediction: a trained model predicts labels by analyzing the entire image. This performance stems from the availability of data, which encouraged researchers to experiment with sophisticated deep learning architectures even when they are computationally expensive. For image-level prediction, the training dataset requires semantic organization, such as subdivision into classes. This introduces new challenges, e.g., class imbalance, missing labels, incorrect labels, and poor generalization. Various methodologies have been proposed to deal with all or some of these challenges. Table 3 summarizes some of these efforts from the past three years.
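Class imbalance, the first challenge listed above, is commonly mitigated by weighting the loss. The sketch below assumes the inverse-frequency ("balanced") heuristic $w_c = N / (K \cdot n_c)$, where N is the number of training images, K the number of classes, and $n_c$ the count of class c; it is one option among many and not necessarily the scheme used by the works in Table 3. The class counts are made up for illustration.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """w_c = N / (K * n_c): rarer classes receive proportionally larger weights."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * n_c) for c, n_c in counts.items()}


def weighted_cross_entropy(probs, label, weights):
    """Cross-entropy of one sample, scaled by the weight of its true class."""
    return -weights[label] * math.log(probs[label])


labels = ["normal"] * 800 + ["pneumonia"] * 150 + ["covid19"] * 50
weights = balanced_class_weights(labels)
print({c: round(w, 3) for c, w in weights.items()})
# {'normal': 0.417, 'pneumonia': 2.222, 'covid19': 6.667}

probs = {"normal": 0.7, "pneumonia": 0.2, "covid19": 0.1}  # model output for one image
print(round(weighted_cross_entropy(probs, "covid19", weights), 2))  # 15.35
```

With these weights, every class contributes the same total weight (N/K, about 333 here), so the rare COVID-19 class is not drowned out by the many normal images.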

Similar to image-level classification, localization has also gained attention in deep learning over the past decade [66,67]. Localization refers to highlighting the area of interest within an image with a bounding box, segmented contour, heatmap, or segmentation mask. In medical diagnosis, classification without localization answers only half of the question [10]. Medical practitioners expect assistance not only with abnormality detection at the radiograph level, but also in visualizing the signs and their location. The literature has addressed this requirement by drawing bounding boxes or segmentations. The associated challenge is acquiring a rich dataset with annotations beyond the image level: for the bounding-box task, each image must have x, y, width, and height, and the segmentation task requires a mask as annotation data. Some of the listed datasets (see Table 2) provide such ground-truth labels for bounding boxes or segmentation. Studies that leverage these annotations in combination with specialized networks and pre- and post-processing [14] are included in Table 3.

Object detection and segmentation techniques use various approaches to overcome data, computation, and performance bottlenecks. In these techniques, object detection and localization are performed either in two stages or in a single stage.

5.2. R-CNN

Ross Girshick et al. proposed R-CNN [68], which performs object detection in two stages. First, multiple candidate regions are extracted and proposed using selective search [69] in a bottom-up manner. A CNN then extracts features from each candidate region, and these are fed into an SVM that classifies whether the object is present in that region proposal. R-CNN also predicts four bounding-box values, which are offsets that refine the localization. The problems with R-CNN are its long training and prediction times. Moreover, selective search is a fixed algorithm with no learning, so it can generate poor proposals.
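The four predicted values are offsets relative to the proposal box. The sketch below follows the parameterization introduced with R-CNN's bounding-box regression, with boxes expressed as (center x, center y, width, height): center shifts are scaled by the proposal size, and width and height changes are log-space ratios. Function names and example boxes are hypothetical.

```python
import math


def encode(proposal, target):
    """Regression targets (t_x, t_y, t_w, t_h) mapping a proposal onto a target box."""
    px, py, pw, ph = proposal
    gx, gy, gw, gh = target
    return ((gx - px) / pw, (gy - py) / ph, math.log(gw / pw), math.log(gh / ph))


def decode(proposal, offsets):
    """Apply predicted offsets to a proposal to obtain the refined box."""
    px, py, pw, ph = proposal
    tx, ty, tw, th = offsets
    return (px + pw * tx, py + ph * ty, pw * math.exp(tw), ph * math.exp(th))


proposal = (120.0, 150.0, 80.0, 100.0)      # e.g. a selective-search region
ground_truth = (130.0, 140.0, 100.0, 90.0)
t = encode(proposal, ground_truth)           # what the regressor is trained to output
refined = decode(proposal, t)                # recovers the ground-truth box
print([round(v, 3) for v in t])        # [0.125, -0.1, 0.223, -0.105]
print([round(v, 1) for v in refined])  # [130.0, 140.0, 100.0, 90.0]
```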

5.3. SPP-Net

SPP-Net [70] was introduced shortly after R-CNN. SPP-Net made the network agnostic to the input image size and, by computing convolutional features once per image rather than once per region, predicted bounding boxes considerably faster than R-CNN without compromising mAP. A spatial pyramid pooling layer was placed after the last convolutional layer of the network, which removed the fixed-size input constraint.
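The fixed-length output of spatial pyramid pooling can be sketched as follows for a single feature channel. The pyramid levels (1 × 1, 2 × 2, and 4 × 4 bins) are an assumed configuration; whatever the map's height and width, the output always has 1 + 4 + 16 = 21 values per channel, which is what allows fully connected layers to follow inputs of varying size.

```python
def ceil_div(a, b):
    return -(-a // b)


def spatial_pyramid_pool(fmap, levels=(1, 2, 4)):
    """Max-pool a single-channel map into n x n bins per level and concatenate."""
    h, w = len(fmap), len(fmap[0])
    out = []
    for n in levels:
        for i in range(n):
            r0, r1 = (i * h) // n, ceil_div((i + 1) * h, n)  # bin rows [r0, r1)
            for j in range(n):
                c0, c1 = (j * w) // n, ceil_div((j + 1) * w, n)  # bin cols [c0, c1)
                out.append(max(fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)))
    return out


small = [[r * 5 + c for c in range(5)] for r in range(7)]    # 7 x 5 map
large = [[r * 9 + c for c in range(9)] for r in range(13)]   # 13 x 9 map
print(len(spatial_pyramid_pool(small)), len(spatial_pyramid_pool(large)))  # 21 21
print(spatial_pyramid_pool(small)[0])  # 34 (level 1 = global max)
```

A real SPP layer applies the same pooling to each of the C channels, producing C × 21 values.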

5.4. Fast R-CNN

To overcome the limitations of R-CNN, Ross Girshick built Fast R-CNN [71]. Instead of feeding each proposed region to the CNN separately, it computes a single convolutional feature map from the whole input image and extracts the features of each proposed region from that shared map. This approach significantly reduced the training time. At test time, however, the region proposal step remained a bottleneck that required further improvement.
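The idea of computing one feature map per image and pooling each proposal from it can be sketched as RoI max pooling, the layer Fast R-CNN uses for this purpose. The sketch assumes a single channel, a backbone stride of 16 (image pixels per feature-map cell), boxes given as (x0, y0, x1, y1) in integer image pixels with exclusive right and bottom edges, and a 2 × 2 output grid for brevity (Fast R-CNN itself uses a larger grid, e.g., 7 × 7 with VGG16); the function name is hypothetical.

```python
def ceil_div(a, b):
    return -(-a // b)


def roi_max_pool(fmap, roi, stride=16, out_size=2):
    """Project an image-space box onto the shared feature map and max-pool it
    into an out_size x out_size grid (single channel)."""
    h, w = len(fmap), len(fmap[0])
    x0, y0, x1, y1 = roi
    c0, r0 = min(x0 // stride, w - 1), min(y0 // stride, h - 1)
    c1 = min(max(ceil_div(x1, stride), c0 + 1), w)  # at least one column
    r1 = min(max(ceil_div(y1, stride), r0 + 1), h)  # at least one row
    rh, rw = r1 - r0, c1 - c0
    pooled = []
    for i in range(out_size):
        ra, rb = r0 + (i * rh) // out_size, r0 + ceil_div((i + 1) * rh, out_size)
        row = []
        for j in range(out_size):
            ca, cb = c0 + (j * rw) // out_size, c0 + ceil_div((j + 1) * rw, out_size)
            row.append(max(fmap[r][c] for r in range(ra, rb) for c in range(ca, cb)))
        pooled.append(row)
    return pooled


# One 8 x 8 feature map, computed once for a 128 x 128 image (stride 16).
fmap = [[r * 8 + c for c in range(8)] for r in range(8)]
proposals = [(32, 16, 96, 80), (0, 0, 128, 128)]
for box in proposals:  # every proposal reuses the same feature map
    print(roi_max_pool(fmap, box))
# [[19, 21], [35, 37]]
# [[27, 31], [59, 63]]
```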

Table 3.

List of popular Techniques for Classification and Localization using Weak Supervised Learning.


| S.No | Ref No | Methodology | Dataset |
|---|---|---|---|
| 1 | [72] | Using a lung-cropped CXR model with a CXR model to improve model performance | ChestX-ray14, JSRT + SCR |
| 2 | [73] | Use of image-level prediction of cardiomegaly and application to segmentation models | ChestX-ray14 |
| 3 | [74] | Classification of cardiomegaly using a network with DenseNet and U-Net | ChestX-ray14 |
| 4 | [75] | Employing a lung-cropped CXR model with a CXR model using the segmentation quality | MIMIC-CXR |
| 5 | [76] | Improving pneumonia detection by using lung segmentation | Pneumonia |
| 6 | [77] | Segmentation of pneumonia using a U-Net based model | RSNA-Pneumonia |
| 7 | [78] | A database of intermediate ResNet-50 features used to find similar studies | Montgomery, Shenzhen |
| 8 | [79] | Detection and localization of COVID-19 through various networks and ensembling | COVID |
| 9 | [80] | GoogLeNet trained with CXR patches, correlated with the COVID-19 severity score | ChestX-ray14 |
| 10 | [81] | A segmentation and classification model proposed and compared with a radiologist cohort | Private |
| 11 | [82] | A CNN model proposed for identification of abnormal CXRs and localization of abnormalities | Private |
| 12 | [83] | Localizing COVID-19 opacity and detecting severity on CXRs | Private |
| 13 | [84] | Use of lung-cropped CXR in DenseNet for cardiomegaly detection | Open-I, PadChest |
| 14 | [85] | Applied multiple models and combinations of CXR datasets to detect COVID-19 | ChestX-ray14, JSRT + SCR, COVID-CXR |
| 15 | [86] | Multiple architectures evaluated for two-stage classification of pneumonia | Ped-pneumonia |
| 16 | [87] | Inception-v3 based pneumoconiosis detection and evaluation against two radiologists | Private |
| 17 | [88] | VGG-16 architecture adapted for classification of pediatric pneumonia types | Ped-pneumonia |
| 18 | [89] | ResNet-50 used as the backbone of a segmentation model to detect healthy, pneumonia, and COVID-19 cases | COVID-CXR |
| 19 | [90] | CNN employed to detect the presence of subphrenic free air on CXR | Private |
| 20 | [91] | Binary classification vs. one-class identification of viral pneumonia cases | Private |
| 21 | [92] | Applied a weighting scheme to improve abnormality classification | ChestX-ray14 |
| 22 | [93] | A lesion detection network employed to improve image-level classification | Private |
| 23 | [94] | An ensemble of DenseNet-121 networks used for COVID-19 classification | ChestX-ray14 |

5.5. Faster R-CNN

R-CNN [68] and Fast R-CNN [71] both used selective search [69] to create region proposals, which slowed the networks down. Shaoqing Ren et al. identified and fixed this shortcoming in Faster R-CNN [95] by replacing selective search with a region proposal network (RPN) that shares convolutional features with the detection network. This enabled the network to learn the region proposals.

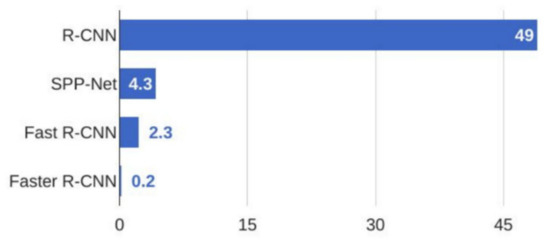

Among the two-stage networks, Faster R-CNN is the fastest, as can be observed in Figure 9.

Figure 9.

Comparison of Test-Time Speed between R-CNN, SPP-Net, Fast R-CNN and Faster R-CNN.
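The RPN slides over the shared feature map and, at each position, scores a fixed set of reference boxes called anchors. The sketch below generates those anchors, assuming the three scales (128, 256, 512 pixels), three aspect ratios (1:2, 1:1, 2:1), and feature stride of 16 reported for Faster R-CNN with a VGG-16 backbone. The function name is hypothetical, and the RPN's objectness and regression heads are omitted.

```python
import numpy as np

def make_anchors(feat_h, feat_w, stride=16, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Anchors (x0, y0, x1, y1) in image pixels, len(scales) * len(ratios) per feature-map cell."""
    base = []
    for s in scales:
        for r in ratios:                          # r = height / width; area stays s * s
            w, h = s / np.sqrt(r), s * np.sqrt(r)
            base.append([-w / 2, -h / 2, w / 2, h / 2])
    base = np.array(base)                         # (A, 4) boxes centered at the origin
    cx = (np.arange(feat_w) + 0.5) * stride       # cell centers in image coordinates
    cy = (np.arange(feat_h) + 0.5) * stride
    xx, yy = np.meshgrid(cx, cy)                  # both (feat_h, feat_w)
    shifts = np.stack([xx, yy, xx, yy], axis=-1).reshape(-1, 1, 4)
    return (shifts + base[None]).reshape(-1, 4)   # (feat_h * feat_w * A, 4)
```

For every anchor, the RPN predicts an objectness score and four box offsets. Because these proposals come from a learned network that shares features with the detector, proposal generation no longer depends on selective search.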

5.6. YOLO

Joseph Redmon et al. designed YOLO (You Only Look Once) [96] in 2015 as a single-shot object detection network. A single convolutional network predicts both the bounding boxes and the class probabilities. YOLO gained popularity for its much higher speed compared with earlier two-stage object detection techniques. The model divides the input image into an S × S grid, and each grid cell predicts bounding boxes, a confidence score for each box, and class probabilities. Using non-max suppression (NMS), lower-scoring predictions that overlap a higher-scoring prediction of the same object are discarded. During training, the grid cell that contains the center of an object is responsible for predicting that object, and the weights are adjusted according to the difference between the prediction and the ground truth. In subsequent years, multiple improvements were made to the architecture and released in successive versions, i.e., YOLOv2 [97], YOLOv3 [98], YOLOv4 [99], and YOLOv5 [100].
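Non-max suppression, mentioned above, can be sketched in a few lines of NumPy. This is the common greedy variant: keep the highest-scoring box, drop the remaining boxes whose IoU with it exceeds a threshold, and repeat. The threshold of 0.5 and the function names are illustrative assumptions, and boxes are given as (x0, y0, x1, y1).

```python
import numpy as np

def _area(b):
    return (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])

def iou_one_to_many(box, boxes):
    """IoU between one box and an (N, 4) array of boxes."""
    x0 = np.maximum(box[0], boxes[:, 0])
    y0 = np.maximum(box[1], boxes[:, 1])
    x1 = np.minimum(box[2], boxes[:, 2])
    y1 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x1 - x0, 0, None) * np.clip(y1 - y0, 0, None)
    return inter / (_area(box) + _area(boxes) - inter)

def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS; returns indices of kept boxes, highest score first."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]              # best-scoring box first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # discard boxes that overlap the kept box too much
        order = rest[iou_one_to_many(boxes[best], boxes[rest]) <= iou_thresh]
    return keep
```

For two heavily overlapping boxes and one distant box, only the better of the overlapping pair survives, along with the distant box.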

5.7. SSD

As its name suggests, the single shot detector (SSD) [101] takes a single shot to detect multiple objects within the input image. It was designed by Wei Liu et al. in 2016 and combines key capabilities of Faster R-CNN (the anchor approach) and YOLO (the one-step structure) to perform faster and with greater accuracy. SSD employs VGG-16 as a backbone and adds four more convolutional layers to form the feature extraction network. SSD300 has been reported to achieve 74.3% mAP at 59 FPS. Similarly, SSD500 achieves 76.9% mAP at 22 FPS, outperforming Faster R-CNN and YOLOv1 by clear margins.
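SSD's default boxes play the role of Faster R-CNN's anchors, but they are attached to several feature maps of decreasing resolution. The SSD paper spaces their scales linearly, s_k = s_min + (s_max − s_min)(k − 1)/(m − 1) for k = 1, …, m, with s_min = 0.2 and s_max = 0.9, and sets width s_k·√a_r and height s_k/√a_r for aspect ratio a_r. The sketch below computes these relative sizes. The function names are hypothetical, and the extra square box of scale √(s_k·s_{k+1}) is omitted.

```python
import numpy as np

def ssd_scales(m=6, s_min=0.2, s_max=0.9):
    """Default-box scale (relative to image size) for each of the m feature maps."""
    k = np.arange(1, m + 1)
    return s_min + (s_max - s_min) * (k - 1) / (m - 1)

def default_box_sizes(s_k, aspect_ratios=(1.0, 2.0, 0.5, 3.0, 1.0 / 3.0)):
    """(width, height) of each default box at scale s_k; the area stays s_k ** 2."""
    return [(s_k * np.sqrt(a), s_k / np.sqrt(a)) for a in aspect_ratios]
```

Shallow, high-resolution feature maps get small boxes and deep, coarse maps get large ones, which is how a single forward pass covers objects of different sizes.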

6. Localization Using Weak-Supervised Learning

The localization task requires more processing effort and resources than image-level classification. Supervised learning is usually the first approach to try. However, its major challenge is acquiring the required annotations. This is even harder for medical imaging because the labeler must generally be a medical professional [60]. Producing a large volume of correct annotations therefore becomes very expensive in terms of time and cost. Table 4 outlines various weakly supervised alternatives for localization.

Table 4.

Summary of Weak Supervised based Deep Learning approaches for object detection.

When acquiring BBox or segmentation-mask annotations is not feasible, weak supervision can play a vital role as an alternative to supervised learning. Weakly supervised learning involves learning from incomplete, inexact, or inaccurate labels: predictive models learn the task (e.g., BBox detection) indirectly from noisy or incomplete labels [65]. In this work, we explore three main classes of weakly supervised localization: class activation maps, attention models, and saliency maps.

In addition to the approaches given in Figure 8 and Table 4, other techniques have shown promise for localization. For instance, self-taught object localization masks out image regions to identify those that cause the maximal activations, and thereby localizes objects [102]. Similarly, objects have been localized by combining multiple-instance learning with CNN features [103]. In [104], the authors proposed transferring mid-level image representations and argued that some object localization can be realized by evaluating the output of CNNs on multiple overlapping patches. However, these methods did not actually evaluate their localization abilities. Moreover, because they are not trained end-to-end and require multiple forward passes, they are harder to scale to real-world datasets [28,29,30].
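The idea of localizing by masking out regions, and the cost of the many forward passes it needs, can be seen in a minimal occlusion-style sketch. This is a generic illustration, not the exact procedure of [102]. Here `score_fn` is a hypothetical stand-in for a trained classifier's score for the class of interest, and the patch size, stride, and fill value are assumptions.

```python
import numpy as np

def occlusion_map(image, score_fn, patch=8, stride=4, fill=0.0):
    """Average drop in score_fn(image) when each square patch is masked out.
    Larger values mark regions the classifier relies on."""
    h, w = image.shape[:2]
    base_score = score_fn(image)
    heat = np.zeros((h, w))
    counts = np.zeros((h, w))
    for y in range(0, h - patch + 1, stride):
        for x in range(0, w - patch + 1, stride):
            masked = image.copy()
            masked[y:y + patch, x:x + patch] = fill      # one extra forward pass per patch
            heat[y:y + patch, x:x + patch] += base_score - score_fn(masked)
            counts[y:y + patch, x:x + patch] += 1
    return heat / np.maximum(counts, 1)
```

On a 32 × 32 image with these settings, the loop already calls the classifier 49 times. This per-image cost is why such methods scale poorly compared with approaches that need a single pass.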

6.1. Class Activation Map (CAM) Based Localization

Class activation mapping (CAM) is an effective approach for obtaining the discriminative image regions that a CNN uses to identify a certain class in an image. The aim of CAM-based techniques is to produce a visual explanation map. These maps are displayed as heatmaps that assign weights to the vital areas of an input image that contribute to the model's conclusions at the pixel level. The vanilla version of CAM emerged in 2016 and has since evolved into the multiple variants listed in Table 5.

Table 5.

Illustration of popular CAM Variants.

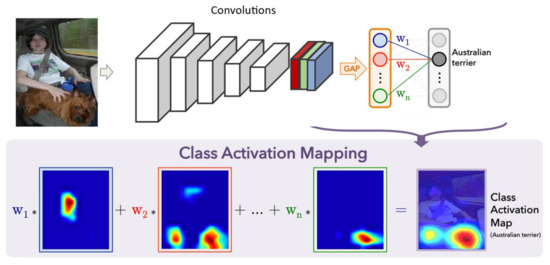

6.1.1. CAM (Vanilla Version)

The idea of class-based maps was inspired by global max pooling (GMP) [105], which was applied to localize an object by a single point. That localization was limited to pointing at a target object with a single point rather than bounding the full extent of the object. The work was extended in [23] by replacing GMP with global average pooling (GAP) (see Figure 10). The intuition was that average pooling rewards the network for identifying all discriminative regions of an object, whereas max pooling rewards only the single most discriminative one. This approach, known as class activation mapping (CAM), is generic enough to localize objects even though the network was not trained with localization labels. CAM was among the first methods to identify discriminative regions using GAP.

Figure 10.

Highlighting class-wise discriminative regions using Class Activation Mapping.

Although CAM inspired the community with its visualization idea, it involves trade-offs between model complexity and performance. It applies only to CNN architectures whose last layer is a GAP layer or can be altered to include one. In the latter case, the altered model must be retrained to learn the new layer's weights.
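The CAM computation itself is a weighted sum of the last convolutional feature maps, using the weights of the linear layer that follows GAP: M_c(x, y) = Σ_k w_k^c f_k(x, y). The NumPy sketch below assumes the feature maps and the classifier weights have already been extracted from a trained model. The function names are hypothetical, and upsampling the map to the input resolution is omitted.

```python
import numpy as np

def class_activation_map(features, fc_weights, class_idx):
    """features: (K, H, W) activations of the last conv layer.
    fc_weights: (num_classes, K) weights of the linear layer that follows GAP.
    Returns M_c(x, y) = sum_k w_k^c * f_k(x, y) at feature-map resolution."""
    return np.tensordot(fc_weights[class_idx], features, axes=1)

def normalize_map(m):
    """Min-max scale a map to [0, 1] for display as a heatmap."""
    span = m.max() - m.min()
    return (m - m.min()) / span if span > 0 else np.zeros_like(m)
```

Because GAP and the linear layer are both linear, the spatial mean of M_c equals the class score (ignoring the bias). This identity is what ties CAM to the GAP-plus-linear head and explains why other architectures must be altered and retrained.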

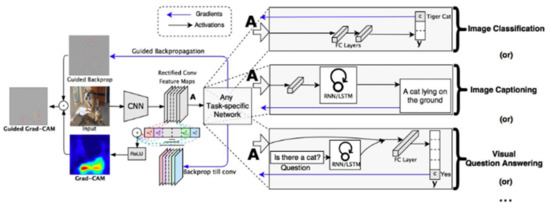

6.1.2. Grad-CAM

The main limitation of CAM, the need to alter the architecture, was soon resolved by subsequent variants. The first such variant, Grad-CAM [29], uses the gradients of any target class to produce a coarse localization map (see Figure 11). To weight each feature map's contribution to the target class, it uses the average of the gradients over that feature map. This eliminates the need for architectural modification and model retraining. Grad-CAM highlights the salient pixels in the given input image and generalizes CAM to a wide range of off-the-shelf CNN-based image classifiers.

Figure 11.

Overview of Grad-CAM for Image classification, captioning, and Visual question answering.

Since Grad-CAM takes an unweighted average of the gradients, the localized area often covers only bits and parts of an object instead of the entire object. This reduces its ability to properly localize objects of interest when the same class occurs multiple times in an image. The main reason is that averaging emphasizes global information, so local differences vanish.
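The Grad-CAM weighting described above can be sketched in NumPy as follows. It assumes the activations of the chosen convolutional layer and the gradients of the target class score with respect to them have already been obtained from an automatic-differentiation framework. The function name is hypothetical.

```python
import numpy as np

def grad_cam(activations, gradients):
    """activations: (K, H, W) feature maps of the chosen conv layer;
    gradients: (K, H, W) d(score of target class) / d(activations)."""
    weights = gradients.mean(axis=(1, 2))                # alpha_k^c: averaged gradients
    cam = np.tensordot(weights, activations, axes=1)     # weighted sum of feature maps
    return np.maximum(cam, 0)                            # ReLU keeps positive evidence only
```

For a GAP-plus-linear head with class weights v, the gradient at every location equals v_k / (H·W), so Grad-CAM reduces to a scaled, rectified CAM. The single averaged weight per channel is also what blurs local differences when several instances are present.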

6.1.3. Grad-CAM++

As its name suggests, Grad-CAM++ [24] can be thought of as a generalized formulation of Grad-CAM. Like Grad-CAM, it uses the gradients of a convolutional layer to generate a localization map of the salient regions in the image. The main contribution of Grad-CAM++ is an improved output map when the same object occurs multiple times in a single image. Specifically, it emphasizes the positive influences of neurons by taking higher-order derivatives into account.

Because both variants rely on gradients, they suffer from vanishing gradients when the network output saturates. As a result, the area of interest is either missed or highlighted with values too small to be noticed. The issue becomes worse when the classifier itself does not achieve high accuracy.
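The Grad-CAM++ weighting can be sketched as below. This sketch assumes the class score is passed through an exponential, as in the Grad-CAM++ derivation. Under that assumption the higher-order derivatives become powers of the first-order gradient g, giving pixel-wise coefficients α = g² / (2g² + Σ_ab A_ab · g³) and channel weights w_k = Σ_ij α_ij · ReLU(g_ij). As before, activations and gradients are assumed to come from an autodiff framework, and the function name is hypothetical.

```python
import numpy as np

def grad_cam_plus_plus(activations, gradients, eps=1e-7):
    """Grad-CAM++ map, assuming an exponential on the class score so that the
    second- and third-order derivatives reduce to powers of the first-order gradient."""
    g2 = gradients ** 2
    g3 = g2 * gradients
    sum_a = activations.sum(axis=(1, 2), keepdims=True)           # sum over locations per map
    alpha = g2 / (2 * g2 + sum_a * g3 + eps)                       # pixel-wise coefficients
    alpha = np.where(gradients != 0, alpha, 0.0)
    weights = (alpha * np.maximum(gradients, 0)).sum(axis=(1, 2))  # only positive gradients count
    return np.maximum(np.tensordot(weights, activations, axes=1), 0)
```

Unlike Grad-CAM's plain average, the coefficients α let each location contribute differently to a channel's weight, which helps when several instances of a class are present.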

6.1.4. Score-CAM

To address the limitations of gradient-based variants, Score-CAM was proposed in [30]. In general, Score-CAM favors global encoding features over local ones. It is a perturbation-based method: parts of the input are masked, and the effect on the target score is observed. It extracts the activations of the last convolutional layer during a forward pass. Each activation map is upsampled to the input image size and normalized to the [0, 1] range. The original input image is then multiplied by each normalized, upsampled map, which acts as a mask. Lastly, each masked image is passed through the CNN, and the softmax output for the target class is used as the weight of the corresponding activation map.

Score-CAM has been described as a post-hoc visual explainer that does not use gradients. However, its pipeline of subtasks, which needs one forward pass per activation map, makes it computationally expensive compared with other methods in its class. Moreover, it usually performs well in visual comparisons, but its localization results remain coarse, which can leave some cases uninterpretable.
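The Score-CAM steps can be sketched in NumPy for a single-channel image. The sketch makes several simplifying assumptions: `model_logits` is a hypothetical stand-in for a trained CNN that returns class logits, upsampling is nearest-neighbor rather than bilinear, and the baseline-score subtraction used in the original method is omitted. All function names are hypothetical.

```python
import numpy as np

def upsample_nearest(m, out_h, out_w):
    """Nearest-neighbor resize of a 2D map."""
    ys = np.arange(out_h) * m.shape[0] // out_h
    xs = np.arange(out_w) * m.shape[1] // out_w
    return m[np.ix_(ys, xs)]

def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()

def score_cam(image, activations, model_logits, class_idx):
    """image: (H, W); activations: (K, h, w) from the last conv layer;
    model_logits: callable mapping an (H, W) image to a logits vector."""
    h, w = image.shape
    ups, weights = [], []
    for a in activations:                           # one extra forward pass per channel
        up = upsample_nearest(a, h, w)
        span = up.max() - up.min()
        mask = (up - up.min()) / span if span > 0 else np.zeros_like(up)
        weights.append(softmax(model_logits(image * mask))[class_idx])
        ups.append(up)
    cam = np.tensordot(np.array(weights), np.stack(ups), axes=1)
    return np.maximum(cam, 0)
```

The loop makes the cost explicit: a layer with K channels requires K additional forward passes, which is the computational burden noted above.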

6.1.5. Layer-CAM

Layer-CAM generates class activation maps by taking different CNN layers into account [31]. It first multiplies the activation at each location of a feature map by a location-specific weight and then combines the weighted maps linearly. This makes it possible to generate reliable class activation maps from shallow layers as well. By combining such maps hierarchically, Layer-CAM uses information from several levels to capture fine-grained details of target objects. It can also be applied to off-the-shelf CNN-based classifiers without altering the network architecture or the way back-propagation works.

Layer-CAM is an effective method for improving the resolution of the generated maps. In some cases, however, map quality drops because of noise in the gradients. This could be overcome by avoiding gradients altogether or by suppressing the responsible noise.
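The location-specific weighting of Layer-CAM can be sketched for a single layer. Following the Layer-CAM paper, this sketch assumes that the weight at each location is the positive part of the gradient there. Activations and gradients are assumed to come from an autodiff framework, and the cross-layer fusion step is omitted. The function name is hypothetical.

```python
import numpy as np

def layer_cam(activations, gradients):
    """Layer-CAM map for one layer. Each location gets its own weight,
    the positive part of the gradient there, instead of one weight per channel."""
    weights = np.maximum(gradients, 0)                     # (K, H, W) location-specific weights
    return np.maximum((weights * activations).sum(axis=0), 0)
```

When the gradients are constant within each channel, this reduces to Grad-CAM's channel-wise weighting. The benefit appears when gradients vary across locations, which is common in shallow layers.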

6.1.6. Eigen-CAM

Eigen-CAM eliminates the dependence on backpropagated gradients, class relevance scores, and maximum activation locations [28]. In short, it does not rely on any form of feature weighting. It computes and visualizes the principal components of the features learned by the convolutional layers. It performs well in creating visual explanations for multiple objects in an image.

Like the other variants, Eigen-CAM requires neither alteration of the CNN model nor retraining, and in addition it does not depend on gradients. It is agnostic to the classification layers because it requires only the representations learned at the final convolutional layer.
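A minimal Eigen-CAM sketch projects the feature vector at each spatial location onto the first principal component of the final convolutional activations. Two choices here are assumptions rather than details confirmed by [28]: the features are mean-centered before the SVD, as in common open-source implementations, and the arbitrary SVD sign is resolved so that the map agrees with the total activation. The function name is hypothetical.

```python
import numpy as np

def eigen_cam(activations):
    """Project each location's K-dim feature vector onto the first principal component."""
    k, h, w = activations.shape
    flat = activations.reshape(k, h * w).T          # (H*W, K): one feature vector per location
    centered = flat - flat.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    proj = centered @ vt[0]                         # coordinate along the first component
    if np.dot(proj, flat.sum(axis=1)) < 0:          # SVD sign is arbitrary; align with activations
        proj = -proj
    return np.maximum(proj, 0).reshape(h, w)
```

No gradients or class scores appear anywhere in the computation, which is why the method is agnostic to the classification layers.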

6.1.7. XGrad-CAM

The authors of [27] observed that the methods above lack sufficient theoretical support and attempted to address this. They proposed XGrad-CAM and devised two axioms, sensitivity and conservation. The method is an extension of Grad-CAM that scales the gradients by the normalized activations. Their goal was to satisfy both axioms as closely as possible in order to make the visualization method more reliable and theoretically sound. Since these axioms describe self-evident properties, satisfying them should make the CAM outcome more reliable. XGrad-CAM meets the constraints of both axioms as far as possible while maintaining a linear combination of feature maps.
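The XGrad-CAM weighting described above, with gradients scaled by activations normalized over each feature map, can be sketched as follows. Activations and gradients are again assumed to come from an autodiff framework, and the function name is hypothetical.

```python
import numpy as np

def xgrad_cam(activations, gradients, eps=1e-7):
    """XGrad-CAM: gradients are weighted by activations normalized over each feature map."""
    norm_act = activations / (activations.sum(axis=(1, 2), keepdims=True) + eps)
    weights = (norm_act * gradients).sum(axis=(1, 2))          # one weight per channel
    return np.maximum(np.tensordot(weights, activations, axes=1), 0)
```

When the activations are uniform within each map, the normalized activations equal 1/(H·W), and the weights reduce to Grad-CAM's averaged gradients.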

6.1.8. Other Variants

The research community is actively enhancing class activation maps and has proposed many other variants. For instance, Ablation-CAM [26] measures how much the output drops after zeroing out activations. Full-Grad [25] takes the gradients of the biases from across the network and sums them to generate maps. Poly-CAM [33] combines earlier and later network layers to generate high-resolution CAMs. Likewise, Reciprocal CAM [32] (Recipro-CAM) is a lightweight, gradient-free method that applies spatial masks to the extracted feature maps to exploit the correlation between activation maps and network outputs.

Table 5 lists some recent and enhanced techniques of interest. They were primarily designed and trained on non-medical images to achieve higher accuracy under weak supervision. Their transparency and configurability motivate us to leverage their capabilities for medical images.

In the recent literature, we found some CAM-based work on X-ray imaging tasks. In [22], a deep learning model with Grad-CAM-based visualization was presented to detect COVID-19 cases, and experiments were conducted to visualize the signs using Grad-CAM.

Similarly, domain extension transfer learning (DETL) [106] has been proposed for COVID-19 using Grad-CAM. DeepCOVID-XR [107] employed Grad-CAM while distinguishing pneumonia, COVID-19, and normal classes in chest X-rays. Grad-CAM++, a variant of Grad-CAM that uses higher-order gradients, has been utilized in [58,59]. Beyond COVID-19, X-ray images have been used to identify tuberculosis [108]; the authors used small, strongly annotated datasets with a compact architecture. The authors of [109] leveraged transfer learning for diagnosing lung diseases. These approaches have tried to highlight areas of interest in chest X-rays using heatmaps. However, no further attention has been paid to extracting bounding boxes or segmentation masks, and the quality of the results was presented only through visual observation.

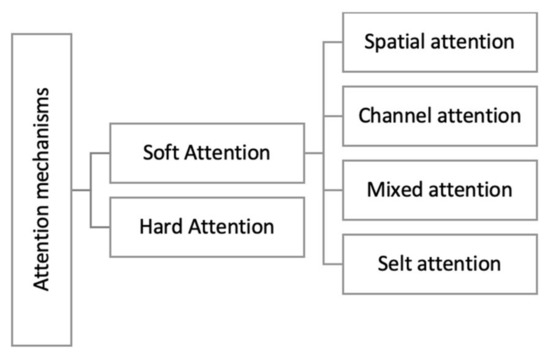

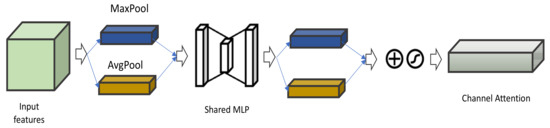

6.2. Attention Models

Weakly supervised learning for localization mostly follows a two-stage model. The first stage answers where to look [110], and the second estimates the mask or bounding area. Attention methods simulate cognitive attention, enhancing the key parts of the input while fading out non-relevant information [111]. These mechanisms primarily assign different weights to different pieces of information. During the past decade, attention mechanisms have evolved alongside other computer vision tasks. As illustrated in Figure 12, they can be broadly grouped into two classes, i.e., soft and hard attention [111].

Figure 12.

Subgroups of Attention models.

6.2.1. Soft Attention

Soft attention is the most popular branch that offers flexibility and ease of implementation [111]. Its applications can be found in many fields of computer vision, e.g., classification [112,113], object detection [114], segmentation [115,116], model generation [111], etc. The mechanism can be further divided into sub-fields.

Spatial attention: Spatial attention aims to address the limited ability of CNNs to be spatially invariant to the input data in an efficient manner [117]. The spatial transformer network (STN) [118] proposes a processing module that handles spatial transformations explicitly. It is designed to be inserted into a CNN architecture, giving the CNN the ability to actively transform feature maps spatially without extra training supervision.

The spatial transformer [118,119] can be designed as a separate layer for seamless implementation without any change to the loss function. Figure 13 illustrates spatial transformation in four steps: (a) the input image, (b) prediction of the object, (c) application of the transformation, and (d) classification.

Figure 13.

Illustration of STN model.

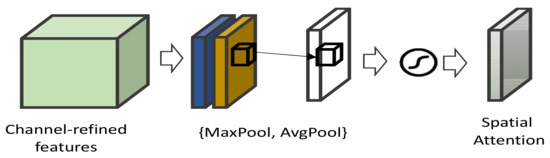
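The transformation step of a spatial transformer can be sketched with an affine sampling grid and bilinear interpolation. In an STN, the 2 × 3 matrix `theta` would be predicted by a small localization network. Here it is given directly, which is an assumption for illustration. The sketch also assumes normalized coordinates in [−1, 1] with corner alignment and replicates border pixels for out-of-range samples. Both function names are hypothetical.

```python
import numpy as np

def affine_grid(theta, out_h, out_w):
    """Source (x, y) coordinates, normalized to [-1, 1], for each output pixel;
    theta is the 2x3 affine matrix a localization network would predict."""
    ys, xs = np.meshgrid(np.linspace(-1, 1, out_h), np.linspace(-1, 1, out_w), indexing="ij")
    homogeneous = np.stack([xs, ys, np.ones_like(xs)], axis=-1)   # (H, W, 3)
    return homogeneous @ np.asarray(theta).T                       # (H, W, 2)

def bilinear_sample(image, grid):
    """Bilinearly sample a 2D image at normalized grid locations (borders are replicated)."""
    h, w = image.shape
    x = np.clip((grid[..., 0] + 1) * (w - 1) / 2, 0, w - 1)
    y = np.clip((grid[..., 1] + 1) * (h - 1) / 2, 0, h - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0
    top = image[y0, x0] * (1 - wx) + image[y0, x1] * wx
    bottom = image[y1, x0] * (1 - wx) + image[y1, x1] * wx
    return top * (1 - wy) + bottom * wy
```

The identity matrix reproduces the input, a negated x-scale flips it horizontally, and scales below 1 zoom into the center. Because bilinear sampling is differentiable with respect to the sampling coordinates, the localization network can be trained end to end with the classifier's loss alone.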

Channel attention: In a CNN, the channel attention module produces an attention map by exploiting the inter-channel relationships of features [120,121]. For a given input image or video frame (see Figure 14), channel attention focuses on ‘what’ is meaningful [122]. For instance, a CNN applies convolutional kernels to an RGB image, which results in many more channels, each containing different information.

Figure 14.

Illustration of Channel Attention module.

Similarly, channels whose feature maps have a greater mean response can be identified as the channels requiring more attention.
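A minimal sketch of channel attention in the style of CBAM [121] is shown below. Each channel is summarized by its average-pooled and max-pooled responses, both descriptors pass through a shared two-layer MLP, and a sigmoid turns the summed result into one weight per channel. The MLP weights are supplied directly here, biases are omitted, and the function names are hypothetical. All of these are simplifications for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(features, w1, w2):
    """CBAM-style channel attention on a (C, H, W) feature map.
    w1 (C // r, C) and w2 (C, C // r) are the weights of a shared two-layer MLP."""
    def shared_mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)           # ReLU hidden layer
    avg_desc = features.mean(axis=(1, 2))             # (C,) average-pooled descriptor
    max_desc = features.max(axis=(1, 2))              # (C,) max-pooled descriptor
    scale = sigmoid(shared_mlp(avg_desc) + shared_mlp(max_desc))   # one weight per channel
    return features * scale[:, None, None], scale
```

The returned scale vector tells which channels, that is, ‘what’ features, are emphasized. A spatial attention map computed afterwards would add the ‘where’ component.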

Mixed attention: The combination of multiple attention mechanisms into one framework has been discussed in CBAM [121]. This combination offers better performance at the cost of implementation complexity. It guides the network on ‘where’ to look as well as ‘what’ to pay attention to. Such mechanisms can also be used in conjunction with supervised learning methods for improved results [123].

6.2.2. Hard Attention

Hard attention can be considered an efficient approach because important features are selected directly from the input [111]. It has shown improved performance in classification [124] and localization [125,126]. It mimics the inattentional blindness [127] of the brain, in which the brain temporarily ignores other (surrounding) signals while engaged in a demanding (stressful) task [128]. Hard attention models are capable of making decisions by considering only a subset of pixels in the input image, typically in the form of a series of glimpses. Training such attention models is challenging because supervision from class labels alone is weak, which makes them difficult to scale to complex datasets. To overcome this deficiency, Saccader [125] was proposed to improve accuracy using a pretraining step. It requires only class labels to produce the initial attention locations for policy gradient optimization.

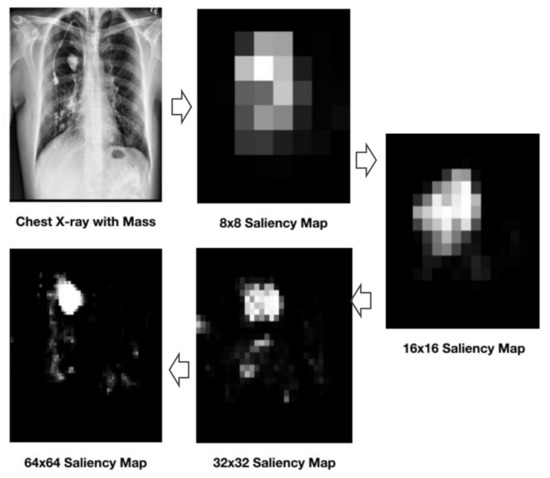

6.3. Saliency Map

A saliency map is a form of image in which the region of interest is emphasized. The goal of saliency map generation techniques is to make each pixel's value reflect its importance for the presence of the target object. For instance, Figure 15 shows an example CXR image that highlights the presence of a mass as a more opaque cloud than the rest of the image.

Figure 15.

Illustration of CXR with saliency maps of increasing resolutions.

OpenCV offers three classes of classical saliency estimation algorithms [129] that are readily available for applications, i.e., static saliency, motion saliency, and objectness. Static saliency [130] combines image features and statistics to localize salient regions. Motion saliency [131] detects saliency from movement in a given video using optical flow. Objectness [132] generates bounding boxes and computes the likelihood that the target object lies within them.
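As an illustration of a classical static saliency method, the sketch below implements the spectral residual approach of Hou and Zhang, one of the static saliency algorithms available in OpenCV's contrib saliency module. It is written from scratch in NumPy rather than calling OpenCV, and it simplifies some details. A box filter replaces the Gaussian smoothing, and the image is not downsampled first. The function names are hypothetical.

```python
import numpy as np

def box_blur(x, k):
    """Mean filter with an odd k x k window and edge padding."""
    pad = k // 2
    padded = np.pad(x, pad, mode="edge")
    out = np.zeros(x.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + x.shape[0], dx:dx + x.shape[1]]
    return out / (k * k)

def spectral_residual_saliency(gray):
    """Saliency map in [0, 1] from the spectral residual of a 2D grayscale image."""
    spectrum = np.fft.fft2(gray.astype(float))
    log_amplitude = np.log(np.abs(spectrum) + 1e-8)
    phase = np.angle(spectrum)
    residual = log_amplitude - box_blur(log_amplitude, 3)   # remove the smooth, "expected" part
    saliency = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    saliency = box_blur(saliency, 5)                         # stand-in for Gaussian smoothing
    saliency -= saliency.min()
    return saliency / (saliency.max() + 1e-12)
```

The method needs no training data, which makes it a cheap bottom-up baseline. However, it has no notion of which abnormality matters clinically.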

Various saliency map estimation techniques also exist in deep learning. TASED-Net [133] consists of two parts, an encoder network and a prediction network. STAViS [134] employs one network that combines spatiotemporal visual and auditory information to generate a final saliency map.

A variety of approaches can be found in the literature for generating saliency maps using weakly supervised learning [21,135]. According to Zhao Q. et al. [21], saliency-based localization can be divided into two branches, namely bottom-up (BU) and top-down (TD). The bottom-up approach [136] takes local feature contrast as the central element, irrespective of the scene's semantic content. Various local and global features, including edges and spatial information, can be extracted to learn local feature contrast. Because this approach relies on low-level features, it cannot exploit high-level and multi-scale semantic information, and it tends to generate low-contrast saliency maps rather than salient objects. The top-down salient object detection approach [137,138] is task-oriented. It uses prior knowledge about the object in its context, which helps in generating the saliency maps. For instance, in semantic segmentation, TD generates a saliency map by assigning pixels to object categories. Following the top-down approach, an image-level supervision (ILS) method was proposed in [139] with two stages: first, a classifier is trained with foreground features, and then it generates saliency maps. The authors also developed an iterative conditional random field to refine the spatial labels and improve overall performance.

In [140], the authors proposed deep unsupervised saliency detection using a latent saliency prediction module and a noise modeling module. They also used a probabilistic module to deal with noisy saliency maps. Cuili Y. et al. [141] generated saliency maps with their technique called Contour2Saliency, whose coarse-to-fine architecture generates saliency maps and contour maps simultaneously. Hermoza R. et al. [61] proposed a weakly supervised localization architecture for CXR using saliency maps. Their two-stage approach first performs classification and then generates a saliency map. They refine the localization information using a straight-through Gumbel-Softmax estimator.

7. Challenges and Recommendations

This section presents the takeaways of this review, in light of the cited literature, in the following subsections. Although they are equally useful for non-medical computer vision tasks, they remain closely tied to visual tasks on radiology images.

7.1. Disclosure of Training Data

The availability of datasets plays an important role in advancing medical research with machine learning. Two important uses of these datasets are (1) validating proposed work and (2) enabling further advancements. However, several studies, e.g., [81,82,83,87,90,91,93,107], trained their models on private data, so their results may not be reproducible by other researchers. This can become an obstacle to extending those models with further improvements. One obvious reason for such non-disclosure is patient privacy. Research focus is another reason: the effort was mainly directed at developing architectures rather than at data management. Similarly, access to data-sharing platforms for large volumes of data can be a challenge for some researchers. Furthermore, complying with legal frameworks that cover patients' personal and health-care information is another major challenge. Examples of such frameworks are the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA). Abouelmehdi K. et al. highlighted similar concerns in [142] and proposed addressing them through specialized approaches that support decision-making and planning strategies. Likewise, van Egmond et al. [143] suggested an inner-join secure protocol for training the model while preserving patient privacy. Dyda A. et al. [144] discussed differential privacy, which can preserve confidentiality during data sharing. We believe that medical image datasets should be made available by following a data privacy and confidentiality compliance checklist.

7.2. Source Code Sharing

Like data, source code sharing has a positive impact on accelerating machine learning research in the medical domain. However, it does not face the critical challenges that data sharing does. Many platforms can be used for storing and sharing source code, including but not limited to GitHub, Bitbucket, and GitLab. Among these, GitHub has been the most widely used since its launch in 2008. Papers with Code is another such platform that offers free sharing of machine learning artifacts, e.g., papers, code, datasets, methodologies, and evaluation tables.

Although source code sharing is becoming more common in the machine learning community, many articles do not share their code, e.g., [71,109,145]. Sharing source code saves each interested party the time needed to reproduce the same outputs. Researchers have complained that a lack of sufficient detail prevents them from re-implementing the same technique. This highlights the strong need to publish the relevant code so that the same results can be reproduced and further contributions can be made in the field.

7.3. Diversity in Data

Data diversity is an important factor that affects a machine learning model's ability to generalize at the prediction phase [146]. A model trained mostly on a specific class, race, or geography can suffer from the corresponding bias [49,52], and such a narrow view can bias algorithmic decisions. Robust datasets play a key role in avoiding bias in the outcome. Including sufficient real-world samples for each class contributes to the robustness of a dataset. In medical diagnosis, data diversity can be achieved by including representative samples from different parts of the world. The datasets discussed in this work are also tagged with their geographical locations (see Table 2). Efforts can be made to combine multiple datasets, either completely or partially, to increase volume and generalization. Diversity in the training data makes learning more challenging for a model but improves its generalization in the real world.

7.4. Domain Adaptation

Domain adaptation is another way to extend diversity. It further trains an already trained model on another dataset from the same domain [147]. For instance, a DenseNet trained on ChestX-ray14 can be fine-tuned incrementally on CheXpert and PadChest to generalize pneumonia detection. The end-to-end process can be conducted in two steps. First, run a validation test on the new dataset. Second, analyze the results and fine-tune the selected classes as needed. Domain adaptation is a subcategory of transfer learning in which the source and target domains have the same feature space but different distributions [148]. The reviewed medical imaging literature lacks domain adaptation and has mostly opted to retrain on multiple datasets. Furthermore, models trained on one dataset for a specified task have not been tested and reported on another dataset for the same task.

7.5. Interpretability

Unlike decision trees or k-nearest neighbors, deep learning can be considered a black box because its results are not directly interpretable [149]. Its complexity makes it a flexible approach, but one with tight dependencies on learnable parameters and hyper-parameters [150]. However, its outcomes are harder to explain to humans. This is a challenging issue in the medical field, where a small incorrect decision may be life-threatening [151]. Image-level classification models that output probabilities for specified diseases are the most questioned. Localization models that highlight areas of interest via bounding boxes, masks, or heatmaps may attract less criticism for their outputs. Still, when model performance is poor, it may be necessary to analyze how the outputs are generated internally. Medical professionals are often curious about how the model learns, and this understanding would enable them to improve the model by providing appropriate training data. The literature reveals that saliency maps and class activation maps (CAM) have the potential to increase trust in ML [23]. Furthermore, CAM variants [24,29,30] have achieved better results that sufficiently explain what the model learned and how it perceived the given input.

7.6. Deriving Bounding Boxes and Segmentation Contour from Heatmaps

Heatmaps are one of the common ways to highlight critical regions of an image using distinct color schemes [152]. Low-resolution images are prone to producing incorrect and misleading highlights, while high-resolution images consume more data and computing resources. In medical diagnosis, weakly supervised localization approaches, e.g., saliency, attention, or CAM, have mostly been used to generate heatmaps. They highlight areas of interest, such as signs of abnormality, through color intensity. Interpreting such visuals is not easy and requires proper guidance and explanation. Sometimes, heatmap-painted X-ray images become distracting during visual inspection, and practitioners may have to switch between the original and the heatmap-painted images to understand the complete picture. This creates an opportunity for research to simplify visual inspection. One possible solution is to derive bounding boxes from the heatmap; another alternative is to extract segmentation contours. Hollow boxes and contours drawn around these regions present a clear localization scheme. We believe that deriving bounding boxes or segmentation contours may require post-processing iterations to optimize the quality of the visuals.
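One simple way to derive bounding boxes from a heatmap is to normalize it, threshold it, find connected regions, and take each region's enclosing box. The sketch below does this in plain NumPy using a breadth-first search over 4-connected pixels. The threshold of 0.5, the 4-connectivity, and the minimum-area filter are assumptions that would need tuning on real CAM or saliency outputs, and the function name is hypothetical.

```python
import numpy as np
from collections import deque

def heatmap_to_boxes(heatmap, thresh=0.5, min_area=1):
    """Min-max normalize a heatmap, threshold it, and return one box per 4-connected
    component as (x0, y0, x1, y1) in pixel indices, end-inclusive."""
    hm = heatmap - heatmap.min()
    if hm.max() > 0:
        hm = hm / hm.max()
    mask = hm >= thresh
    seen = np.zeros(mask.shape, dtype=bool)
    h, w = mask.shape
    boxes = []
    for sy, sx in zip(*np.nonzero(mask)):
        if seen[sy, sx]:
            continue
        queue = deque([(sy, sx)])                 # flood-fill one component
        seen[sy, sx] = True
        ys, xs = [], []
        while queue:
            y, x = queue.popleft()
            ys.append(y)
            xs.append(x)
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        if len(ys) >= min_area:                   # drop tiny, likely spurious blobs
            boxes.append((int(min(xs)), int(min(ys)), int(max(xs)), int(max(ys))))
    return boxes
```

The same thresholded mask could instead be traced into a segmentation contour. The threshold choice directly trades box tightness against missed low-intensity regions, which is one of the post-processing iterations mentioned above.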

7.7. Comparative Analysis with Strong Annotation

Weakly supervised learning approaches are applied to noisy, incomplete, and sometimes unlabeled data. In visual tasks, their performance is often evaluated by visual inspection. For a small number of samples, such visual assessment is adequate, but a quantitative metric such as intersection-over-union (IoU) is still needed. The problem with calculating IoU is the unavailability of ground-truth values. This may require two stages, which were not observed in the literature. First, establish baselines with strongly supervised learning models for bounding boxes [71,153] and segmentation [8,154]. Second, derive bounding boxes and segmentation masks from the heatmaps for comparative analysis. For instance, assume that ChestX-ray14 contains 1000 images with bbox annotations while the remaining images have only image-level labels. Using a CAM-based approach, we can train a model on the images without bbox labels and test it on the bbox-annotated data (i.e., the 1000 images). This enables us to analyze the performance of our weakly supervised model against hand-annotated ground-truth values.
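The quantitative comparison proposed above requires an IoU computation and a hit-rate summary over the annotated test images. The sketch below uses continuous (x0, y0, x1, y1) coordinates and counts a prediction as correct when its IoU with the paired ground-truth box is at least a threshold. The 0.5 threshold and the one-prediction-per-ground-truth pairing are simplifying assumptions, and the function names are hypothetical.

```python
def box_iou(a, b):
    """Intersection-over-union of two boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def localization_accuracy(pred_boxes, gt_boxes, thresh=0.5):
    """Fraction of ground-truth boxes whose paired prediction reaches the IoU threshold."""
    hits = sum(box_iou(p, g) >= thresh for p, g in zip(pred_boxes, gt_boxes))
    return hits / len(gt_boxes)
```

If predicted boxes come from pixel indices with inclusive ends, adding 1 to x1 and y1 before computing IoU keeps the areas consistent with the ground-truth convention.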

7.8. Infer Diagnosis from Classification and Localization

According to [155], diagnostic criteria refer to a set of signs, symptoms, and related tests that have been developed for use in routine clinical care. They guide medical practitioners in the care of individual patients. To reach a diagnosis, practitioners must consider the patient's profile, history, and lab tests in conjunction with diagnostic criteria. This definition is important to consider while developing CAD systems. A patient cannot be diagnosed with a certain condition based on just one X-ray image; other signs and symptoms are required to conclude the presence or absence of a disease. Image-level prediction is the least useful output, as it does nothing more than declare the presence or absence of a sign. Localization, however, highlights both the sign and the location of a condition, which can better guide a physician in the right direction.

7.9. Emerging Techniques

Deep learning has become a state-of-the-art approach for solving many problems, which creates opportunities to address even its own issues and challenges. Generally, deep learning performs well if the right combination of data, computing, and configurations is used. These requirements are not always easy to meet, and this becomes even more challenging for medical analysis tasks, as discussed in the sections above. To overcome such challenges, the following techniques can be employed.

Transfer Learning: Transfer learning is widely used in medical imaging because of the lack of training data. Instead of training from scratch, models pretrained on the ImageNet dataset are adopted and fine-tuned for the target task.

Ensemble Learning: Ensemble learning combines the predictions of multiple models to increase confidence in the predicted class. The consensus policy among members can be simple, e.g., the average or median, or more complex depending on the domain and task. However, the AI-driven literature in this area has not discussed the details of the aggregation or consensus policy.
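To make a consensus policy explicit, the NumPy sketch below aggregates per-class probabilities from several members using the mean, the median, or a majority vote. The array layout `(n_models, n_samples, n_classes)`, the policy names, the function name `ensemble_predict`, and the 0.5 threshold are illustrative assumptions rather than a policy reported in the reviewed literature.

```python
import numpy as np


def ensemble_predict(member_probs, policy="mean", threshold=0.5):
    """Combine probabilities of shape (n_models, n_samples, n_classes)
    into a single (n_samples, n_classes) score array."""
    p = np.asarray(member_probs, dtype=float)
    if policy == "mean":
        return p.mean(axis=0)
    if policy == "median":
        return np.median(p, axis=0)
    if policy == "vote":
        # Fraction of members whose probability reaches the threshold.
        return (p >= threshold).mean(axis=0)
    raise ValueError(f"unknown policy: {policy!r}")


if __name__ == "__main__":
    # Three members, one image, two findings.
    probs = [[[0.9, 0.2]], [[0.6, 0.4]], [[0.3, 0.7]]]
    for policy in ("mean", "median", "vote"):
        scores = ensemble_predict(probs, policy)
        print(policy, scores, scores >= 0.5)
```

With the vote policy, a score of at least 0.5 means that at least half of the members agree; with an even number of members this counts ties as positive, which is itself a policy decision worth reporting.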

Generative Adversarial Networks: Generative adversarial networks (GANs) represent a powerful approach for generating new images by learning patterns from training sets.
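For reference, the original GAN formulation trains a generator G, which maps a noise vector z drawn from a prior p_z to an image, against a discriminator D, which outputs the probability that its input is a real training image, through the minimax objective below. This is the generic formulation only; the GAN variants used for X-ray tasks may use different losses.

```latex
\min_{G}\max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

D is trained to maximize V by separating real from generated images, while G is trained to minimize it, which pushes its samples toward the training distribution.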

8. Conclusions

This paper presents a comprehensive review of the tools and techniques that have been adopted for localizing abnormalities in X-ray images using deep learning. The most cited publicly available datasets for these tasks have been discussed, and their challenges, e.g., privacy, diversity, and validity, have been highlighted. Using these datasets, supervised learning techniques have been discussed briefly for classification and localization. Supervised localization relies on rich annotations, e.g., (x, y, width, height) coordinates for bounding boxes, or segmentation masks. Such labels are hard to acquire, which opens directions for weakly supervised learning approaches. Three major categories of weakly supervised learning techniques were discussed briefly. Finally, gaps and possible improvements have been listed and discussed for further research.

Author Contributions

Conceptualization, M.A. and M.J.I.; methodology, M.A., M.J.I. and I.A.; software, M.A., M.J.I. and I.A.; validation, M.A., M.J.I. and I.A.; formal analysis, M.A. and M.J.I.; investigation, M.A. and M.J.I.; resources, M.A., M.J.I., I.A., M.O.A. and A.A.; data curation, I.A.; writing—original draft preparation, M.A. and M.J.I.; writing—review and editing, M.J.I., I.A., M.O.A. and A.A.; visualization, I.A.; supervision, M.J.I., I.A., M.O.A. and A.A.; project administration, M.O.A. and A.A.; funding acquisition, A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia, through project number “IF_2020_NBU_360”.

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

Data are available from authors on request.

Acknowledgments

The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia for funding this research work through the project number “IF_2020_NBU_360”.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shortliffe, E.H.; Buchanan, B.G. A model of inexact reasoning in medicine. Math. Biosci. 1975, 23, 351–379.

- Miller, R.A.; Pople, H.E.; Myers, J.D. Internist-I, an Experimental Computer-Based Diagnostic Consultant for General Internal Medicine. N. Engl. J. Med. 1982, 307, 468–476.

- Doi, K. Computer-aided diagnosis in medical imaging: Historical review, current status and future potential. Comput. Med. Imaging Graph. 2007, 31, 198–211.

- Hasan, M.J.; Uddin, J.; Pinku, S.N. A novel modified SFTA approach for feature extraction. In Proceedings of the 2016 3rd International Conference on Electrical Engineering and Information Communication Technology (ICEEICT), Dhaka, Bangladesh, 22–24 September 2016; pp. 1–5.

- Chan, H.; Hadjiiski, L.M.; Samala, R.K. Computer-aided diagnosis in the era of deep learning. Med. Phys. 2020, 47, e218–e227.

- Çallı, E.; Sogancioglu, E.; van Ginneken, B.; van Leeuwen, K.G.; Murphy, K. Deep learning for chest X-ray analysis: A survey. Med. Image Anal. 2021, 72, 102125.

- Wu, J.; Gur, Y.; Karargyris, A.; Syed, A.B.; Boyko, O.; Moradi, M.; Syeda-Mahmood, T. Automatic Bounding Box Annotation of Chest X-ray Data for Localization of Abnormalities. In Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 3–7 April 2020; IEEE: Iowa City, IA, USA, 2020; pp. 799–803.

- Munawar, F.; Azmat, S.; Iqbal, T.; Gronlund, C.; Ali, H. Segmentation of Lungs in Chest X-ray Image Using Generative Adversarial Networks. IEEE Access 2020, 8, 153535–153545.

- Ma, Y.; Niu, B.; Qi, Y. Survey of image classification algorithms based on deep learning. In Proceedings of the 2nd International Conference on Computer Vision, Image, and Deep Learning; Cen, F., bin Ahmad, B.H., Eds.; SPIE: Liuzhou, China, 2021; p. 9.

- Agrawal, T.; Choudhary, P. Segmentation and classification on chest radiography: A systematic survey. Vis. Comput. 2022, Online ahead of print.

- Amarasinghe, K.; Rodolfa, K.; Lamba, H.; Ghani, R. Explainable Machine Learning for Public Policy: Use Cases, Gaps, and Research Directions. arXiv 2020, arXiv:2010.14374.

- Bhatt, D.; Patel, C.; Talsania, H.; Patel, J.; Vaghela, R.; Pandya, S.; Modi, K.; Ghayvat, H. CNN Variants for Computer Vision: History, Architecture, Application, Challenges and Future Scope. Electronics 2021, 10, 2470.

- Shrestha, A.; Mahmood, A. Review of Deep Learning Algorithms and Architectures. IEEE Access 2019, 7, 53040–53065.

- Chen, C.; Wang, B.; Lu, C.X.; Trigoni, N.; Markham, A. A Survey on Deep Learning for Localization and Mapping: Towards the Age of Spatial Machine Intelligence. arXiv 2020, arXiv:2006.12567.

- Yang, R.; Yu, Y. Artificial Convolutional Neural Network in Object Detection and Semantic Segmentation for Medical Imaging Analysis. Front. Oncol. 2021, 11, 638182.

- Xie, X.; Niu, J.; Liu, X.; Chen, Z.; Tang, S. A Survey on Domain Knowledge Powered Deep Learning for Medical Image Analysis. arXiv 2020, arXiv:2004.12150.

- Maguolo, G.; Nanni, L. A Critic Evaluation of Methods for COVID-19 Automatic Detection from X-ray Images. arXiv 2020, arXiv:2004.12823.

- Solovyev, R.; Melekhov, I.; Lesonen, T.; Vaattovaara, E.; Tervonen, O.; Tiulpin, A. Bayesian Feature Pyramid Networks for Automatic Multi-Label Segmentation of Chest X-rays and Assessment of Cardio-Thoratic Ratio. arXiv 2019, arXiv:1908.02924.

- Ramos, A.; Alves, V. A Study on CNN Architectures for Chest X-rays Multiclass Computer-Aided Diagnosis. In Trends and Innovations in Information Systems and Technologies; Rocha, Á., Adeli, H., Reis, L.P., Costanzo, S., Orovic, I., Moreira, F., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2020; Volume 1161, pp. 441–451. ISBN 978-3-030-45696-2.

- Bayer, J.; Münch, D.; Arens, M. A Comparison of Deep Saliency Map Generators on Multispectral Data in Object Detection. arXiv 2021, arXiv:2108.11767.

- Zhao, Z.-Q.; Zheng, P.; Xu, S.; Wu, X. Object Detection with Deep Learning: A Review. arXiv 2019, arXiv:1807.05511.

- Panwar, H.; Gupta, P.K.; Siddiqui, M.K.; Morales-Menendez, R.; Bhardwaj, P.; Singh, V. A deep learning and grad-CAM based color visualization approach for fast detection of COVID-19 cases using chest X-ray and CT-Scan images. Chaos Solitons Fractals 2020, 140, 110190.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. arXiv 2015, arXiv:1512.04150.

- Chattopadhyay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Improved Visual Explanations for Deep Convolutional Networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

- Srinivas, S.; Fleuret, F. Full-Gradient Representation for Neural Network Visualization. arXiv 2019, arXiv:1905.00780.

- Desai, S.; Ramaswamy, H.G. Ablation-CAM: Visual Explanations for Deep Convolutional Network via Gradient-free Localization. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 972–980.

- Fu, R.; Hu, Q.; Dong, X.; Guo, Y.; Gao, Y.; Li, B. Axiom-based Grad-CAM: Towards Accurate Visualization and Explanation of CNNs. arXiv 2020, arXiv:2008.02312.

- Muhammad, M.B.; Yeasin, M. Eigen-CAM: Class Activation Map using Principal Components. arXiv 2020, arXiv:2008.00299.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Wang, H.; Wang, Z.; Du, M.; Yang, F.; Zhang, Z.; Ding, S.; Mardziel, P.; Hu, X. Score-CAM: Score-Weighted Visual Explanations for Convolutional Neural Networks. arXiv 2020, arXiv:1910.01279.

- Jiang, P.-T.; Zhang, C.-B.; Hou, Q.; Cheng, M.-M.; Wei, Y. LayerCAM: Exploring Hierarchical Class Activation Maps for Localization. IEEE Trans. Image Process. 2021, 30, 5875–5888.

- Byun, S.-Y.; Lee, W. Recipro-CAM: Gradient-free reciprocal class activation map. arXiv 2022, arXiv:2209.14074.

- Englebert, A.; Cornu, O.; De Vleeschouwer, C. Poly-CAM: High resolution class activation map for convolutional neural networks. arXiv 2022, arXiv:2204.13359.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. arXiv 2017, arXiv:1610.02357.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. arXiv 2016, arXiv:1603.05027.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. arXiv 2016, arXiv:1602.07261.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. arXiv 2019, arXiv:1801.04381.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. arXiv 2018, arXiv:1608.06993.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. arXiv 2018, arXiv:1707.07012.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2020, arXiv:1905.11946.

- Khan, E.; Rehman, M.Z.U.; Ahmed, F.; Alfouzan, F.A.; Alzahrani, N.M.; Ahmad, J. Chest X-ray Classification for the Detection of COVID-19 Using Deep Learning Techniques. Sensors 2022, 22, 1211.

- Ponomaryov, V.I.; Almaraz-Damian, J.A.; Reyes-Reyes, R.; Cruz-Ramos, C. Chest x-ray classification using transfer learning on multi-GPU. In Proceedings of the Real-Time Image Processing and Deep Learning 2021; Kehtarnavaz, N., Carlsohn, M.F., Eds.; SPIE: Houston, TX, USA, 2021; p. 16.

- Tohka, J.; van Gils, M. Evaluation of machine learning algorithms for health and wellness applications: A tutorial. Comput. Biol. Med. 2021, 132, 104324.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3462–3471.

- Sager, C.; Janiesch, C.; Zschech, P. A survey of image labelling for computer vision applications. J. Bus. Anal. 2021, 4, 91–110.

- Ratner, A.; Bach, S.H.; Ehrenberg, H.; Fries, J.; Wu, S.; Ré, C. Snorkel: Rapid training data creation with weak supervision. Proc. VLDB Endow. 2017, 11, 269–282.

- Irvin, J.; Rajpurkar, P.; Ko, M.; Yu, Y.; Ciurea-Ilcus, S.; Chute, C.; Marklund, H.; Haghgoo, B.; Ball, R.; Shpanskaya, K.; et al. CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison. arXiv 2019, arXiv:1901.07031.

- Nguyen, H.Q.; Pham, H.H.; Linh, L.T.; Dao, M.; Khanh, L. VinDr-CXR: An open dataset of chest X-rays with radiologist annotations. PhysioNet 2021.

- Oakden-Rayner, L. Exploring the ChestXray14 Dataset: Problems. Available online: https://laurenoakdenrayner.com/2017/12/18/the-chestxray14-dataset-problems/ (accessed on 8 August 2022).

- Bustos, A.; Pertusa, A.; Salinas, J.-M.; de la Iglesia-Vayá, M. PadChest: A large chest X-ray image dataset with multi-label annotated reports. Med. Image Anal. 2020, 66, 101797.

- Jaeger, S.; Candemir, S.; Antani, S.; Wáng, Y.-X.J.; Lu, P.-X.; Thoma, G. Two public chest X-ray datasets for computer-aided screening of pulmonary diseases. Quant. Imaging Med. Surg. 2014, 4, 475–477.