Abstract

The classical curve-fitting problem of relating two variables, x and y, typically uses polynomials. This problem is generally solved by the least squares (LS) method, whose minimization function considers the vertical errors from the data points to the fitting curve. Another curve-fitting method is total least squares (TLS), which takes into account errors in both the x and y variables. A further method is the orthogonal distances (OD) method, which minimizes the sum of the squares of the orthogonal distances from the data points to the fitting curve. In this work, we develop the OD method for polynomial fitting of degree n and compare the TLS and OD methods. The results show that the TLS and OD methods are not equivalent in general; however, both methods obtain the same estimates when a polynomial of degree 1 without an independent coefficient is considered. As examples, we consider the calibration curve-fitting problem of an R-type thermocouple by polynomials of degrees 1 to 4, with and without an independent coefficient, using the LS, TLS and OD methods.

Keywords:

polynomial fitting; parameter estimation; orthogonal distances; least squares; total least squares

MSC:

65D10; 65D15

1. Introduction

The curve-fitting or parameter estimation procedure consists of determining the parameters of a curve that give the best possible fit to observations. Depending on the curve, this problem can be classified as linear or nonlinear [1,2]. Polynomials are the most commonly used fitting curves in biology, physics, statistics and engineering [3,4,5,6,7]. Polynomial fitting is a linear problem, and the most popular method used to solve it is least squares (LS) [8,9,10]. LS minimizes the sum of the squares of the vertical errors from the data points to the polynomial curve. Another method that can be used is total least squares (TLS), which considers that both x and y are subject to errors. This method was developed mainly by Golub and Van Loan [11] and Van Huffel and Vandewalle [12], although the idea that both variables x and y are subject to errors dates back at least to 1943 in the work of Deming [13]. A third way to solve polynomial fitting is to minimize the sum of the squares of the orthogonal distances from the data points to the polynomial curve. In this article we call this approach the orthogonal distances (OD) method. Adcock [14] was the first to use the OD method for straight-line fitting. It has since been studied and used for fitting straight lines and planes in [15,16,17,18,19,20,21].

The remainder of this paper is structured as follows. Section 2 presents a brief description of the LS and TLS methods. The OD method is developed in Section 3, where it is also compared with the TLS method for the polynomial of degree 1. Section 4 approximates the output voltage of an R-type thermocouple [22] at different temperatures using polynomials of degrees 1 to 4 by the LS, TLS and OD methods. Finally, a discussion and concluding remarks are presented in Sections 5 and 6, respectively.

In general, this article studies the polynomial fitting problem by the OD method, and the main contributions are the following:

- Development of the OD method for polynomial fitting of degree n.

- Polynomial fitting of thermoelectric voltage data from an R-type thermocouple by the OD, LS and TLS methods.

- The numerical experiments show that the TLS and OD methods are not equivalent in general. However, both methods obtain the same estimates when the polynomial of degree 1 without an independent coefficient is used.

2. Polynomial Fitting by LS and TLS Methods

Polynomial fitting of degree n consists of determining the unknown parameters $a_0, a_1, \dots, a_n$ of the polynomial $f(x) = a_0 + a_1x + \cdots + a_nx^n$, defined for all real numbers $x$, such that $a_n \neq 0$. A brief description of the LS and TLS solutions for polynomial fitting is presented below.

2.1. The LS Method

The LS method is one of the most widely used minimization criteria for parameter estimation. The method was first published by A. M. Legendre in 1805; however, C. F. Gauss claimed that he had used it 10 years earlier [16]. The classical LS method seeks the curve $y = f(x)$ that best fits the observations $(x_i, y_i)$, $i = 1, \dots, m$. It does so by minimizing the sum of the squares of the vertical errors $e_i = y_i - f(x_i)$. There are several variants of the method, such as weighted least squares, generalized least squares and maximum likelihood, among others. These can be applied to different mathematical models, such as algebraic models and continuous and discrete dynamic models [23,24,25,26,27,28], using off-line and recursive schemes [20,29,30].

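Since this article considers the classical LS method under the off-line scheme, the vector of vertical errors for the polynomial of degree n and m observations is given by

$$e = \begin{bmatrix} y_1 - (a_0 + a_1x_1 + \cdots + a_nx_1^n) \\ \vdots \\ y_m - (a_0 + a_1x_m + \cdots + a_nx_m^n) \end{bmatrix}.$$

Defining

$$A = \begin{bmatrix} 1 & x_1 & \cdots & x_1^n \\ \vdots & \vdots & \ddots & \vdots \\ 1 & x_m & \cdots & x_m^n \end{bmatrix}, \qquad \theta = \begin{bmatrix} a_0 \\ \vdots \\ a_n \end{bmatrix}, \qquad b = \begin{bmatrix} y_1 \\ \vdots \\ y_m \end{bmatrix},$$

we obtain

$$e = b - A\theta, \qquad (1)$$

where $A \in \mathbb{R}^{m\times(n+1)}$, $\theta \in \mathbb{R}^{n+1}$ and $b \in \mathbb{R}^m$.

Taking the squared 2-norm of vector (1), we obtain

$$J_{LS}(\theta) = \|e\|_2^2 = \|b - A\theta\|_2^2. \qquad (2)$$

The LS solution is the vector $\hat\theta$ that minimizes the functional (2). Since (2) is a quadratic function of $\theta$, the LS solution can be found by elementary calculus [10,31,32]. The LS solution that minimizes (2) is unique if matrix $A$ has independent columns, that is, if it has rank $n+1$. In that case, the LS solution satisfies the normal equations $A^TA\hat\theta = A^Tb$ and is given by $\hat\theta = (A^TA)^{-1}A^Tb$.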
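The snippet below is a minimal sketch of the normal-equations route. It assumes NumPy and the column ordering $1, x, \dots, x^n$ for $A$, and it fits synthetic noise-free data. The function name `ls_fit_normal_equations` and the data are illustrative and do not come from the original study.

```python
import numpy as np


def ls_fit_normal_equations(x, y, n):
    """LS estimate [a_0, ..., a_n] from the normal equations A^T A theta = A^T b.

    Assumes A has independent columns (rank n + 1), so A^T A is invertible.
    """
    A = np.vander(x, n + 1, increasing=True)  # columns 1, x, ..., x^n
    return np.linalg.solve(A.T @ A, A.T @ y)


# Noise-free synthetic data from y = 1 + 2x - 0.5x^2 (illustrative only).
x = np.linspace(-1.0, 2.0, 20)
y = 1.0 + 2.0 * x - 0.5 * x**2
theta = ls_fit_normal_equations(x, y, 2)

# Cross-check against NumPy's SVD-based least-squares solver.
theta_ref = np.linalg.lstsq(np.vander(x, 3, increasing=True), y, rcond=None)[0]
```

Forming $A^TA$ squares the condition number of $A$. This is one reason library solvers such as `np.linalg.lstsq` rely on the SVD instead.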

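On the other hand, if matrix $A$ has dependent columns, so that $A^TA$ is not invertible, the minimum-norm LS solution that minimizes (2) is defined by

$$\hat\theta = A^+b, \qquad (3)$$

where $A^+$ is called the Moore–Penrose pseudoinverse of $A$, and it is defined by

$$A^+ = V\Sigma^+U^T, \qquad \Sigma^+ = \operatorname{diag}\left(\sigma_1^{-1}, \dots, \sigma_r^{-1}, 0, \dots, 0\right) \in \mathbb{R}^{(n+1)\times m},$$

with $r$ the rank of $A$. Equation (3) is called the pseudoinverse solution of the LS method; when $A$ has independent columns, it coincides with $(A^TA)^{-1}A^Tb$. The Moore–Penrose pseudoinverse is built from the matrices of the singular value decomposition (SVD) $A = U\Sigma V^T$, which is given by the following theorem. Throughout the text, the rank of any matrix $M$ is denoted by $\operatorname{rank}(M)$.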

Theorem 1.

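([10]). Let $M \in \mathbb{R}^{m\times p}$ be any matrix. The SVD of $M$ is

$$M = U\Sigma V^T,$$

where $U \in \mathbb{R}^{m\times m}$ and $V \in \mathbb{R}^{p\times p}$ are unitary (for real matrices, orthogonal) matrices and $\Sigma = \operatorname{diag}(\sigma_1, \dots, \sigma_q) \in \mathbb{R}^{m\times p}$, with $q = \min(m, p)$ and $\sigma_1 \geq \sigma_2 \geq \cdots \geq \sigma_q \geq 0$. The numbers $\sigma_i$ are called the singular values of $M$, and $\operatorname{rank}(M) = r$ is the number of nonzero singular values.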

More properties of the Moore–Penrose pseudoinverse can be found in [10,32].
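The following sketch builds $A^+ = V\Sigma^+U^T$ from the SVD of Theorem 1 and applies the pseudoinverse solution (3) to a rank-deficient case. It assumes NumPy. The helper `pinv_via_svd` and its relative threshold `rtol` are illustrative choices; NumPy's own `np.linalg.pinv` serves the same purpose.

```python
import numpy as np


def pinv_via_svd(A, rtol=1e-12):
    """Moore-Penrose pseudoinverse A^+ = V Sigma^+ U^T from the thin SVD of A.

    Singular values below rtol * sigma_1 are treated as zero, so the numerical
    rank r is the number of singular values above that threshold.
    """
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    s_inv = np.zeros_like(s)
    keep = s > rtol * s[0]
    s_inv[keep] = 1.0 / s[keep]
    return Vt.T @ np.diag(s_inv) @ U.T


# Only two distinct abscissae: the degree-2 matrix A has rank 2 < n + 1 = 3,
# so A^T A is singular and the normal equations have no unique solution.
x = np.array([0.0, 0.0, 1.0, 1.0])
y = np.array([1.0, 1.2, 2.9, 3.1])
A = np.vander(x, 3, increasing=True)
theta = pinv_via_svd(A) @ y  # pseudoinverse (minimum-norm) solution, Eq. (3)
```

Here the data only determine $a_0 = 1.1$ and $a_1 + a_2 = 1.9$. Among all minimizers of (2), the pseudoinverse returns the one of smallest norm, $a_1 = a_2 = 0.95$.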

2.2. The TLS Method

This section presents the most important results of the TLS method from [12] for polynomial fitting.

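Rewriting the system of linear algebraic equations $A\theta = b$ as

$$[A \;\; b]\begin{bmatrix}\theta \\ -1\end{bmatrix} = 0,$$

and since $A \in \mathbb{R}^{m\times(n+1)}$, $b \in \mathbb{R}^m$ and $\theta \in \mathbb{R}^{n+1}$, we can define $C = [A \;\; b] \in \mathbb{R}^{m\times(n+2)}$ and $z = [\theta^T, -1]^T \in \mathbb{R}^{n+2}$ to obtain

$$Cz = 0. \qquad (4)$$

Since Equation (4) represents a homogeneous system of linear algebraic equations, its solution $z$ lies in the null space of $C$. In other words, the parameters vector $\theta$ is obtained from a null vector of $C$ whose last entry is $-1$. If matrix $C$ in (4) has rank $n+2$, the SVD of $C$ is given by

$$C = U\Sigma V^T = \sum_{i=1}^{n+2}\sigma_iu_iv_i^T,$$

where the singular values $\sigma_i$ and the singular vectors $u_i$ and $v_i$ satisfy

$$\sigma_1 \geq \cdots \geq \sigma_{n+2} > 0, \qquad Cv_i = \sigma_iu_i, \quad i = 1, \dots, n+2. \qquad (5)$$

From (5), $Cv \neq 0$ for every nonzero $v$, so system (4) has no nonzero vector in the null space of $C$. Therefore, the TLS method searches for a matrix $\hat C$ with $\operatorname{rank}(\hat C) = n+1$ such that $\|C - \hat C\|_2$ is minimal. Once a minimizing $\hat C$ is found, any vector $\hat z$ satisfying $\hat C\hat z = 0$ is called a TLS solution, and $\Delta\hat C = C - \hat C$ is the TLS correction.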

The minimizing matrix is given by the Eckart–Young–Mirsky theorem. This theorem was initially proved by Eckart and Young [33] using the Frobenius norm. Later, Mirsky [34] proved it for unitarily invariant norms, which include the 2-norm. In this work, the theorem is used with the 2-norm, in the form presented below.

Theorem 2.

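([12]). Let the SVD of $C \in \mathbb{R}^{m\times p}$ be given by $C = \sum_{i=1}^{p}\sigma_iu_iv_i^T$ with $r = \operatorname{rank}(C)$, and let $k < r$; then the matrix

$$C_k = \sum_{i=1}^{k}\sigma_iu_iv_i^T$$

satisfies

$$\min_{\operatorname{rank}(D) = k}\|C - D\|_2 = \|C - C_k\|_2 = \sigma_{k+1}.$$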

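Applying the Eckart–Young–Mirsky Theorem 2 to (4) with $k = n+1$, the best rank-$(n+1)$ approximation to the matrix $C$ is given by

$$\hat C = \sum_{i=1}^{n+1}\sigma_iu_iv_i^T, \qquad \|C - \hat C\|_2 = \sigma_{n+2}.$$

Notice that $\hat C$ has rank $n+1$; hence, its singular values and singular vectors now satisfy

$$\hat Cv_i = \sigma_iu_i, \quad i = 1, \dots, n+1, \qquad \hat Cv_{n+2} = 0. \qquad (6)$$

From Equation (6), the vector $v_{n+2}$ is in the null space of $\hat C$. Therefore, a TLS solution satisfying $\hat C\hat z = 0$ is given by

$$\hat z = v_{n+2} = [v_{1,n+2}, \dots, v_{n+1,n+2}, v_{n+2,n+2}]^T. \qquad (7)$$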
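The sketch below illustrates Theorem 2 numerically. It assumes NumPy, uses synthetic data, and relies on a hypothetical helper `best_rank_reduced`. The helper removes the smallest singular triplet of $C = [A \;\; b]$. The checks confirm that the resulting $\hat C$ has rank $n+1$, lies at 2-norm distance $\sigma_{n+2}$ from $C$, and annihilates $v_{n+2}$.

```python
import numpy as np


def best_rank_reduced(A, b):
    """Return C = [A b], its best rank-(p-1) approximation C_hat in the 2-norm
    (Theorem 2), the singular values of C and the right singular vector v_{n+2}."""
    C = np.column_stack([A, b])
    U, s, Vt = np.linalg.svd(C, full_matrices=False)
    v_last = Vt[-1]  # right singular vector for the smallest singular value
    # Removing the last singular triplet leaves sum_{i <= n+1} sigma_i u_i v_i^T.
    C_hat = C - s[-1] * np.outer(U[:, -1], v_last)
    return C, C_hat, s, v_last


rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 15)
y = 0.5 + 1.5 * x + 0.05 * rng.standard_normal(x.size)  # synthetic noisy line
A = np.vander(x, 2, increasing=True)
C, C_hat, s, v_last = best_rank_reduced(A, y)
```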

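The TLS solution (7), $v_{n+2} = [v_{1,n+2}, \dots, v_{n+2,n+2}]^T$, is scaled to obtain $-1$ in its last row:

$$\begin{bmatrix}\hat\theta \\ -1\end{bmatrix} = -\frac{1}{v_{n+2,n+2}}\,v_{n+2};$$

then, if $v_{n+2,n+2} \neq 0$, the parameters vector is given by

$$\hat\theta = -\frac{1}{v_{n+2,n+2}}\,[v_{1,n+2}, \dots, v_{n+1,n+2}]^T. \qquad (8)$$

An alternative way to obtain (8) is as follows. The vector $v_{n+2}$ is also an eigenvector of $C^TC$, associated with the eigenvalue $\sigma_{n+2}^2$. Hence, the scaled solution satisfies the eigenvalue–eigenvector equation

$$C^TC\begin{bmatrix}\hat\theta \\ -1\end{bmatrix} = \sigma_{n+2}^2\begin{bmatrix}\hat\theta \\ -1\end{bmatrix}; \qquad (9)$$

substituting $C = [A \;\; b]$, we obtain

$$\begin{bmatrix}A^TA & A^Tb \\ b^TA & b^Tb\end{bmatrix}\begin{bmatrix}\hat\theta \\ -1\end{bmatrix} = \sigma_{n+2}^2\begin{bmatrix}\hat\theta \\ -1\end{bmatrix},$$

whose first block row gives

$$\hat\theta = \left(A^TA - \sigma_{n+2}^2I\right)^{-1}A^Tb. \qquad (10)$$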
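The following NumPy sketch computes the TLS parameters in two ways. The first scales the last right singular vector, as in (8). The second uses the closed form (10). On generic data the two should agree to rounding error. The function name `tls_polyfit` and the 1e-12 threshold for detecting $v_{n+2,n+2} = 0$ are illustrative assumptions.

```python
import numpy as np


def tls_polyfit(x, y, n):
    """TLS estimate of theta = [a_0, ..., a_n], computed by scaling the last
    right singular vector, Eq. (8), and by the closed form, Eq. (10)."""
    A = np.vander(x, n + 1, increasing=True)
    C = np.column_stack([A, y])
    _, s, Vt = np.linalg.svd(C, full_matrices=False)
    v = Vt[-1]  # v_{n+2}
    if abs(v[-1]) < 1e-12:
        raise ValueError("v_{n+2,n+2} is (numerically) zero: nongeneric TLS problem")
    theta_svd = -v[:-1] / v[-1]  # scale so that the last entry equals -1
    sigma2 = s[-1] ** 2
    theta_closed = np.linalg.solve(A.T @ A - sigma2 * np.eye(n + 1), A.T @ y)
    return theta_svd, theta_closed


rng = np.random.default_rng(1)
x = np.linspace(0.0, 1.0, 25)
y = 0.3 - 0.7 * x + 0.4 * x**2 + 0.02 * rng.standard_normal(x.size)
theta_svd, theta_closed = tls_polyfit(x, y, 2)
```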

The following theorem establishes the uniqueness and existence conditions of the TLS solution.

Theorem 3.

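([12]). Let the singular values of $A$ be $\sigma'_1 \geq \cdots \geq \sigma'_{n+1}$, and the singular values of $C = [A \;\; b]$ be $\sigma_1 \geq \cdots \geq \sigma_{n+2}$. If $\sigma'_{n+1} > \sigma_{n+2}$, then the TLS problem has a unique solution.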

As we can see, the TLS solution has two problems. One is the loss of uniqueness due to repeated singular values. The other is the division by zero in (8) when $v_{n+2,n+2} = 0$. These are called nongeneric TLS problems, and more details can be found in [35,36,37].
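The sufficient condition of Theorem 3 is cheap to check before computing a TLS solution. The NumPy sketch below uses a hypothetical helper `tls_unique`, with a small tolerance added so that a strict inequality between rounded values is not trusted. It flags a nongeneric case in which $b$ is orthogonal to the columns of $A$.

```python
import numpy as np


def tls_unique(A, b, tol=1e-10):
    """Sufficient condition of Theorem 3: sigma'_{n+1}(A) > sigma_{n+2}([A b]).

    tol avoids declaring uniqueness when both values agree only up to rounding.
    """
    s_A = np.linalg.svd(A, compute_uv=False)
    s_C = np.linalg.svd(np.column_stack([A, b]), compute_uv=False)
    return bool(s_A[-1] - s_C[-1] > tol * s_C[0])


x = np.array([-1.0, 0.0, 1.0])
A = np.vander(x, 2, increasing=True)  # columns 1 and x

# Nongeneric example: b is orthogonal to both columns of A, so the smallest
# singular value of [A b] equals that of A and v_{n+2} has last entry 0.
b_bad = np.array([1.0, -2.0, 1.0])
b_good = np.array([0.9, 2.1, 2.9])  # roughly y = 2 + x
```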

3. Polynomial Fitting by OD Method

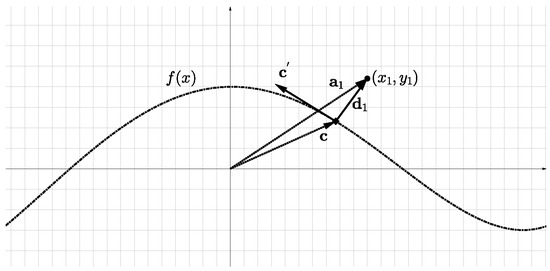

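The OD method minimizes the sum of the squares of the orthogonal distances from the observations $(x_i, y_i)$ to the polynomial curve $y = f(x)$. Consider Figure 1, with the following vectors:

- $p_i = [x_i, y_i]^T$ is the vector of the observation $(x_i, y_i)$.

- $q = [x, f(x)]^T$ is a vector on the curve $y = f(x)$.

- $t = [1, f'(x)]^T$ is the tangent vector at $q$.

The vector $d_i$ is then defined by

$$d_i = p_i - q = \begin{bmatrix}x_i - x \\ y_i - f(x)\end{bmatrix}. \qquad (11)$$

Figure 1.

Orthogonal distance from a point to a curve.

The vector $d_i$ in Figure 1 is orthogonal to the curve at $q$ if the dot product between vectors $d_i$ and $t$ satisfies

$$d_i \cdot t = 0. \qquad (12)$$

Substituting $d_i$ and $t$ in (12), we obtain

$$(x_i - x) + \left(y_i - f(x)\right)f'(x) = 0. \qquad (13)$$

Equation (13) is called the orthogonality condition. Its solution $x = z_i$ determines the point $q_i = [z_i, f(z_i)]^T$ on the curve where the vectors $d_i$ and $t$ are orthogonal. This point also determines the orthogonal distance vector $d_i = p_i - q_i$. The 2-norm $\|d_i\|_2$ of this vector is the orthogonal distance from the observation $(x_i, y_i)$ to the curve.

Consider the polynomial curve $f(x) = a_0 + a_1x + \cdots + a_nx^n$. By the orthogonality condition (13) and the squared 2-norm of vector (11), the squares of the orthogonal distances for the observations $(x_i, y_i)$, $i = 1, \dots, m$, are given by

$$\|d_i\|_2^2 = (x_i - z_i)^2 + \left(y_i - f(z_i)\right)^2,$$

with $z_i$ a real solution of (13) for the observation $(x_i, y_i)$. Defining the distances vector $\delta = [\|d_1\|_2, \dots, \|d_m\|_2]^T$ and taking its squared 2-norm, we obtain

$$J_{OD}(\theta) = \|\delta\|_2^2 = \sum_{i=1}^m\left[(x_i - z_i)^2 + \left(y_i - f(z_i)\right)^2\right], \qquad (14)$$

where $\theta = [a_0, \dots, a_n]^T$.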
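The sketch below computes a single orthogonal distance. It assumes NumPy's `Polynomial` class, forms the left-hand side of (13), keeps the real roots and selects the one nearest to the observation. Selecting the nearest root is our addition for the case where (13) has several real roots, and the function name is hypothetical.

```python
import numpy as np
from numpy.polynomial import Polynomial


def orthogonal_distance(theta, xi, yi, imag_tol=1e-9):
    """Orthogonal distance from (xi, yi) to y = f(x), f(x) = a_0 + a_1 x + ... + a_n x^n.

    Solves the orthogonality condition (13), (xi - x) + (yi - f(x)) f'(x) = 0,
    keeps its real roots and returns the root z_i nearest to the observation
    together with ||d_i||_2.
    """
    f = Polynomial(theta)
    g = Polynomial([xi, -1.0]) + (yi - f) * f.deriv()  # left-hand side of (13)
    roots = g.roots()
    real = roots[np.abs(roots.imag) <= imag_tol].real
    d2 = (xi - real) ** 2 + (yi - f(real)) ** 2  # squared 2-norm of d_i, Eq. (11)
    k = np.argmin(d2)
    return real[k], np.sqrt(d2[k])


# Point (0, 2) and parabola y = x^2: (13) becomes x(3 - 2x^2) = 0, with three
# real roots; the nearest are x = +-sqrt(1.5), at distance sqrt(1.75).
z, dist = orthogonal_distance([0.0, 0.0, 1.0], 0.0, 2.0)
```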

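Equation (14) is the functional of the sum of the squares of the orthogonal distances from the observations to the polynomial curve $y = f(x)$. The parameters that minimize (14) are derived below, starting with the straight line $f(x) = a_0 + a_1x$. In this case, (13) is linear in $x$, its solution is $z_i = \left(x_i + a_1(y_i - a_0)\right)/(1 + a_1^2)$, and (14) reduces to

$$J_{OD}(a_0, a_1) = \frac{1}{1 + a_1^2}\sum_{i=1}^m (y_i - a_0 - a_1x_i)^2. \qquad (15)$$

The critical points of (15) satisfy

$$\frac{\partial J_{OD}}{\partial a_1} = 0, \qquad (16)$$

$$\frac{\partial J_{OD}}{\partial a_0} = -\frac{2}{1 + a_1^2}\sum_{i=1}^m (y_i - a_0 - a_1x_i) = 0. \qquad (17)$$

From Equation (17) we obtain

$$a_0 = \bar y - a_1\bar x, \qquad (18)$$

where $\bar x$ and $\bar y$ are the averages of the $x_i$ and $y_i$. Next, substitute (18) in (16) and define $S_{xx} = \sum_{i=1}^m (x_i - \bar x)^2$, $S_{yy} = \sum_{i=1}^m (y_i - \bar y)^2$ and $S_{xy} = \sum_{i=1}^m (x_i - \bar x)(y_i - \bar y)$. This yields the quadratic equation

$$S_{xy}a_1^2 + (S_{xx} - S_{yy})a_1 - S_{xy} = 0, \qquad (19)$$

whose roots, for $S_{xy} \neq 0$, give

$$a_1^{\pm} = \frac{S_{yy} - S_{xx} \pm \sqrt{(S_{yy} - S_{xx})^2 + 4S_{xy}^2}}{2S_{xy}}, \qquad (20)$$

$$a_0^{\pm} = \bar y - a_1^{\pm}\bar x. \qquad (21)$$

Since the sets of values $(a_0^+, a_1^+)$ and $(a_0^-, a_1^-)$ are the critical points of function (15), the second derivative test is used to determine the minimum.

Definition 1.

Let $f(a_0, a_1)$ be a twice continuously differentiable function of $a_0$ and $a_1$, and let $D = f_{a_0a_0}f_{a_1a_1} - f_{a_0a_1}^2$. Let $(a_0^*, a_1^*)$ be a critical point of $f$, that is, $f_{a_0}(a_0^*, a_1^*) = 0$ and $f_{a_1}(a_0^*, a_1^*) = 0$. Then:

- If $D(a_0^*, a_1^*) > 0$ and $f_{a_0a_0}(a_0^*, a_1^*) > 0$, then $f(a_0^*, a_1^*)$ is a local minimum value of $f$.

- If $D(a_0^*, a_1^*) > 0$ and $f_{a_0a_0}(a_0^*, a_1^*) < 0$, then $f(a_0^*, a_1^*)$ is a local maximum value of $f$.

Using the second derivative test of Definition 1, the parameters $a_0$ and $a_1$ that minimize (15) are determined by (20) and (21).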
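The degree-1 case can be sketched with NumPy as follows. The sketch assumes that the critical points take the standard orthogonal-regression form: $a_0 = \bar y - a_1\bar x$, with $a_1$ a root of $S_{xy}a_1^2 + (S_{xx} - S_{yy})a_1 - S_{xy} = 0$. The Hessian entries of (15) were derived by hand for this illustration, and the function names and synthetic data are hypothetical. As an independent check, the resulting line coincides with the principal axis of the sample covariance matrix, a known property of orthogonal regression.

```python
import numpy as np


def od_hessian(a0, a1, x, y):
    """Second partial derivatives of J(a0, a1) = sum(r_i^2) / (1 + a1^2), r_i = y_i - a0 - a1 x_i."""
    r = y - a0 - a1 * x
    w = 1.0 + a1**2
    R, Rx = np.sum(r**2), np.sum(r * x)
    j00 = 2.0 * x.size / w
    j01 = 2.0 * np.sum(x) / w + 4.0 * a1 * np.sum(r) / w**2
    j11 = (2.0 * np.sum(x**2) / w + 8.0 * a1 * Rx / w**2
           - 2.0 * R / w**2 + 8.0 * a1**2 * R / w**3)
    return j00, j01, j11


def od_line_fit(x, y):
    """Degree-1 OD fit: evaluate both critical points and keep the one that
    passes the second derivative test for a local minimum."""
    xm, ym = x.mean(), y.mean()
    sxx = np.sum((x - xm) ** 2)
    syy = np.sum((y - ym) ** 2)
    sxy = np.sum((x - xm) * (y - ym))
    if sxy == 0.0:
        raise ValueError("S_xy = 0: the quadratic for a1 degenerates")
    root = np.sqrt((syy - sxx) ** 2 + 4.0 * sxy**2)
    for a1 in ((syy - sxx + root) / (2.0 * sxy), (syy - sxx - root) / (2.0 * sxy)):
        a0 = ym - a1 * xm
        j00, j01, j11 = od_hessian(a0, a1, x, y)
        if j00 * j11 - j01**2 > 0.0 and j00 > 0.0:
            return a0, a1
    raise RuntimeError("no critical point passed the local-minimum test")


rng = np.random.default_rng(2)
x = np.linspace(0.0, 10.0, 30)
y = 2.0 + 0.8 * x + 0.5 * rng.standard_normal(x.size)
a0, a1 = od_line_fit(x, y)
```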

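Now consider a polynomial of degree 2, $f(x) = a_0 + a_1x + a_2x^2$ with $a_2 \neq 0$. Substituting $f(x)$ and $f'(x) = a_1 + 2a_2x$ into the orthogonality condition (13), we obtain the cubic equation

$$c_3x^3 + c_2x^2 + c_{1,i}x + c_{0,i} = 0, \qquad (25)$$

with

$$c_3 = 2a_2^2, \qquad c_2 = 3a_1a_2, \qquad c_{1,i} = 1 + a_1^2 - 2a_2(y_i - a_0), \qquad c_{0,i} = -x_i - a_1(y_i - a_0).$$

The real solution of Equation (25) determines the point on the curve $y = f(x)$ at which the orthogonal distance of each observation is measured. Solving (25) by Cardano's formulas [38], the real solution for each observation is given by

$$z_i = \sqrt[3]{-\frac{\beta_i}{2} + \sqrt{\Delta_i}} + \sqrt[3]{-\frac{\beta_i}{2} - \sqrt{\Delta_i}} - \frac{c_2}{3c_3}, \qquad (26)$$

where

$$\alpha_i = \frac{3c_3c_{1,i} - c_2^2}{3c_3^2}, \qquad \beta_i = \frac{2c_2^3 - 9c_3c_2c_{1,i} + 27c_3^2c_{0,i}}{27c_3^3}, \qquad \Delta_i = \frac{\beta_i^2}{4} + \frac{\alpha_i^3}{27}.$$

Formula (26) gives the unique real root when $\Delta_i > 0$. When $\Delta_i < 0$, Equation (25) has three distinct real roots, and the one closest to $(x_i, y_i)$ must be taken. Hence, the squared 2-norm of vector (11) is

$$\|d_i\|_2^2 = (x_i - z_i)^2 + \left(y_i - a_0 - a_1z_i - a_2z_i^2\right)^2.$$

Taking the squared 2-norm of the distances vector, the functional of the sum of the squares of the orthogonal distances for the polynomial of degree 2 is

$$J_{OD}(a_0, a_1, a_2) = \sum_{i=1}^m\left[(x_i - z_i)^2 + \left(y_i - a_0 - a_1z_i - a_2z_i^2\right)^2\right]. \qquad (27)$$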

Notice that the polynomial fitting of degree 2 by OD method is a nonlinear minimization problem.
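A NumPy sketch of the degree-2 functional (27) follows. `cardano_real_root` implements the real-radical form of Cardano's formula, which applies when the discriminant term is nonnegative. When the cubic has three real roots, the code falls back to `np.roots` and selects the nearest root. This fallback is our assumption, not part of the original description, and both function names are hypothetical.

```python
import numpy as np


def cardano_real_root(c3, c2, c1, c0):
    """Real root of c3 x^3 + c2 x^2 + c1 x + c0 = 0 by Cardano's formula (26).

    Returns None when Delta < 0 (three distinct real roots), where this
    real-radical form of the formula does not apply.
    """
    alpha = (3.0 * c3 * c1 - c2**2) / (3.0 * c3**2)
    beta = (2.0 * c2**3 - 9.0 * c3 * c2 * c1 + 27.0 * c3**2 * c0) / (27.0 * c3**3)
    delta = beta**2 / 4.0 + alpha**3 / 27.0
    if delta < 0.0:
        return None
    sq = np.sqrt(delta)
    return np.cbrt(-beta / 2.0 + sq) + np.cbrt(-beta / 2.0 - sq) - c2 / (3.0 * c3)


def od_quadratic_functional(theta, x, y):
    """Functional (27) for f(x) = a0 + a1 x + a2 x^2, assuming a2 != 0."""
    a0, a1, a2 = theta

    def f(t):
        return a0 + a1 * t + a2 * t**2

    total = 0.0
    for xi, yi in zip(x, y):
        k = yi - a0
        coeffs = (2.0 * a2**2, 3.0 * a1 * a2, 1.0 + a1**2 - 2.0 * a2 * k, -xi - a1 * k)  # (25)
        z = cardano_real_root(*coeffs)
        if z is None:  # three real roots: keep the one nearest to (xi, yi)
            r = np.roots(coeffs)
            r = r[np.abs(r.imag) < 1e-9].real
            z = r[np.argmin((xi - r) ** 2 + (yi - f(r)) ** 2)]
        total += (xi - z) ** 2 + (yi - f(z)) ** 2
    return total
```

For example, the point $(1, 0)$ and the parabola $y = x^2$ lead to $2x^3 + x - 1 = 0$, which has a single real root handled by the Cardano branch.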

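Consider now a cubic polynomial curve, $f(x) = a_0 + a_1x + a_2x^2 + a_3x^3$ with $a_3 \neq 0$. The orthogonality condition (13) then yields a fifth-degree equation:

$$3a_3^2x^5 + 5a_2a_3x^4 + (2a_2^2 + 4a_1a_3)x^3 + c_{2,i}x^2 + c_{1,i}x + c_{0,i} = 0, \qquad (28)$$

with

$$c_{2,i} = 3\left(a_1a_2 - a_3(y_i - a_0)\right), \qquad c_{1,i} = 1 + a_1^2 - 2a_2(y_i - a_0), \qquad c_{0,i} = -x_i - a_1(y_i - a_0).$$

In general, Equation (28) cannot be solved analytically, so its roots must be obtained with a numerical method [31,39,40]. Once Equation (28) is solved for each observation, the points $[z_i, f(z_i)]^T$ on the curve determine the orthogonal distance vectors $d_i$. The squared 2-norm of the distances vector then gives the functional to be minimized:

$$J_{OD}(a_0, \dots, a_3) = \sum_{i=1}^m\left[(x_i - z_i)^2 + \left(y_i - f(z_i)\right)^2\right]. \qquad (29)$$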

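The general case is a polynomial curve of degree n, $f(x) = a_0 + a_1x + \cdots + a_nx^n$ with $a_n \neq 0$. From the orthogonality condition (13), an equation of degree $2n - 1$ is obtained, since the product $\left(y_i - f(x)\right)f'(x)$ has leading term $-na_n^2x^{2n-1}$. Because its degree is odd, it has at least one real root. When it has several, $z_i$ is the real root that gives the smallest distance to $(x_i, y_i)$. Hence, the functional of the sum of the squares of the orthogonal distances to be minimized is

$$J_{OD}(\theta) = \sum_{i=1}^m\left[(x_i - z_i)^2 + \left(y_i - f(z_i)\right)^2\right]. \qquad (30)$$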

As we can see, two numerical problems arise when the polynomial fitting problem of degree n is solved by the OD method. The first is a real-root-finding problem for the equation of degree $2n - 1$ given by the orthogonality condition (13). The second is a nonlinear minimization of the sum of the squares of the orthogonal distances (30). The real-root-finding problem can be solved with the fzero routine from MATLAB, whereas the fminsearch routine, also from MATLAB [41], can be used for the nonlinear minimization problem. The polynomial fitting problem of degree 1, in contrast, can be solved with the analytic Formulas (20) and (21) and the second derivative test of Definition 1.
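The two-stage procedure can be sketched in Python, assuming NumPy and SciPy. Here `numpy.polynomial.Polynomial.roots` stands in for MATLAB's fzero. `scipy.optimize.minimize` with the Nelder–Mead method, the simplex method on which fminsearch is also based, stands in for fminsearch. The search starts from the LS estimate. The function names and tolerances are illustrative assumptions, not the settings used in this work.

```python
import numpy as np
from numpy.polynomial import Polynomial
from scipy.optimize import minimize


def od_functional(theta, x, y):
    """Functional (30): sum of squared orthogonal distances to f(x) = sum_j theta[j] x^j."""
    f = Polynomial(theta)
    df = f.deriv()
    total = 0.0
    for xi, yi in zip(x, y):
        xi, yi = float(xi), float(yi)
        g = Polynomial([xi, -1.0]) + (yi - f) * df  # left-hand side of (13)
        r = g.roots()
        r = r[np.abs(r.imag) < 1e-8].real  # odd degree 2n - 1: at least one real root
        total += np.min((xi - r) ** 2 + (yi - f(r)) ** 2)  # nearest real root
    return total


def od_polyfit(x, y, n):
    """Degree-n OD fit: Nelder-Mead search on (30), started from the LS estimate."""
    A = np.vander(x, n + 1, increasing=True)
    theta0 = np.linalg.lstsq(A, y, rcond=None)[0]
    res = minimize(od_functional, theta0, args=(x, y), method="Nelder-Mead",
                   options={"xatol": 1e-10, "fatol": 1e-12,
                            "maxiter": 20000, "maxfev": 20000})
    return res.x, res.fun


rng = np.random.default_rng(3)
x = np.linspace(0.0, 5.0, 25)
y = 1.0 + 0.6 * x + 0.3 * rng.standard_normal(x.size)
theta_line, J_line = od_polyfit(x, y, 1)
```

For degree 1, the result can be checked against the closed-form orthogonal-regression line. For higher degrees, only the numerical route is available.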

3.1. Contrasting the OD and TLS Methods

In previous sections, the LS, TLS and OD methods for polynomial fitting were presented. However, Golub and Van Loan [11] and Van Huffel and Vandewalle [12] mentioned that the TLS method also minimizes the orthogonal distances. To review this statement, this section analyzes the parameter estimation by the TLS and OD methods for the simplest polynomial, the straight line $f(x) = a_0 + a_1x$.

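Applying the OD method, the functional of the sum of the squares of the orthogonal distances to be minimized for straight-line fitting is

$$J_{OD}(a_0, a_1) = \frac{\sum_{i=1}^m (y_i - a_0 - a_1x_i)^2}{1 + a_1^2}, \qquad (31)$$

with $\theta = [a_0, a_1]^T$.

On the other hand, the TLS solution for the straight line satisfies the eigenvalue–eigenvector equation

$$C^TC\hat z = \sigma_3^2\hat z, \qquad (32)$$

where $C = [A \;\; b]$, $A = [\mathbf{1} \;\; \mathbf{x}]$ with $\mathbf{1}$ the vector of ones and $\mathbf{x} = [x_1, \dots, x_m]^T$, and $\hat z = [\hat\theta^T, -1]^T$. Premultiplying Equation (32) by $\hat z^T$ and solving for $\sigma_3^2$, we obtain

$$\sigma_3^2 = \frac{\hat z^TC^TC\hat z}{\hat z^T\hat z},$$

and substituting $C = [A \;\; b]$ and $\hat z = [\hat\theta^T, -1]^T$ results in

$$\sigma_3^2 = \frac{\hat\theta^TA^TA\hat\theta - 2\hat\theta^TA^Tb + b^Tb}{1 + \hat\theta^T\hat\theta}. \qquad (33)$$

Notice that the numerator of Equation (33) is the LS functional (2), $\|b - A\hat\theta\|_2^2$, evaluated at $\hat\theta$. Substituting (2) in (33) gives

$$\sigma_3^2 = \frac{\|b - A\hat\theta\|_2^2}{1 + \|\hat\theta\|_2^2}, \qquad (34)$$

where $\hat\theta$ is the parameters vector. Because the TLS solution is the eigenvector of $C^TC$ associated with its smallest eigenvalue, it minimizes this ratio, which is a Rayleigh quotient. Since the parameters vector for the straight line is $\theta = [a_0, a_1]^T$, Equation (34) reduces to

$$\sigma_3^2 = \frac{\sum_{i=1}^m (y_i - a_0 - a_1x_i)^2}{1 + a_0^2 + a_1^2}. \qquad (35)$$

Notice that the functionals (35) and (31) are not equal, since

$$1 + a_0^2 + a_1^2 \neq 1 + a_1^2 \quad \text{whenever } a_0 \neq 0.$$

Thus, the TLS method does not minimize the orthogonal distances when the independent coefficient is included. However, for the straight line without an independent coefficient, $f(x) = a_1x$, Equations (35) and (31) are equal, and both methods minimize the orthogonal distances. Hence, the OD and TLS methods are not equivalent in general.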
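The contrast can be checked numerically. This NumPy sketch uses synthetic data, and its helper names are hypothetical. The TLS slope computed as in (8) matches the minimizer of the orthogonal-distance functional for a line through the origin, but not for a line with an independent coefficient. For the line through the origin, the OD slope uses the same quadratic with uncentered sums.

```python
import numpy as np


def tls_params(A, b):
    """TLS estimate via the last right singular vector of [A b], Eq. (8)."""
    v = np.linalg.svd(np.column_stack([A, b]))[2][-1]
    return -v[:-1] / v[-1]


def od_slope(sxx, syy, sxy):
    """Minimizing root of sxy a^2 + (sxx - syy) a - sxy = 0 (orthogonal regression slope)."""
    return (syy - sxx + np.sqrt((syy - sxx) ** 2 + 4.0 * sxy**2)) / (2.0 * sxy)


rng = np.random.default_rng(4)
x = np.linspace(1.0, 5.0, 20)
y = 3.0 + 0.7 * x + 0.2 * rng.standard_normal(x.size)

# With independent coefficient: TLS on A = [1 x] treats the column of ones as noisy.
a0_tls, a1_tls = tls_params(np.column_stack([np.ones_like(x), x]), y)
xm, ym = x.mean(), y.mean()
a1_od = od_slope(np.sum((x - xm) ** 2), np.sum((y - ym) ** 2), np.sum((x - xm) * (y - ym)))

# Without independent coefficient: lines through the origin, uncentered sums.
(b1_tls,) = tls_params(x[:, None], y)
b1_od = od_slope(np.sum(x * x), np.sum(y * y), np.sum(x * y))
```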

The next section presents the parameter estimation of polynomial functions of degree 1 to 4 with and without an independent coefficient by the LS, TLS and OD methods.

4. Polynomial Fitting of Thermoelectric Voltage by LS, TLS and OD Methods

This section uses observations of temperature and thermoelectric voltage of an R-type thermocouple for the temperature range −50 °C to 1064 °C, with an increment of 1 °C, obtained from [22]. With these data, it presents the calibration curve fitting of the R-type thermocouple by polynomials of degrees 1 to 4 with the LS, TLS and OD methods.

To evaluate the predictive performance of the calibration polynomials, four criteria were used [3,4,6]: the extreme values of the errors, the average of the absolute errors, the standard deviation and the coefficient of determination.

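The error of the calibration polynomial is defined as

$$e_i = E_i - \hat E_i, \qquad (36)$$

where $E_i$ is the thermoelectric voltage data and $\hat E_i$ is the thermoelectric voltage predicted by the calibration polynomial. The average of the absolute errors is given by

$$\overline{|e|} = \frac{1}{m}\sum_{i=1}^m |e_i|, \qquad (37)$$

where $|e_i|$ is the absolute value of $e_i$ and $m$ is the number of data. The standard deviation of the calibration polynomial is defined by

$$s = \sqrt{\frac{1}{m-1}\sum_{i=1}^m (e_i - \bar e)^2}, \qquad (38)$$

with $\bar e$ the mean error. The coefficient of determination is given by

$$R^2 = 1 - \frac{\sum_{i=1}^m (E_i - \hat E_i)^2}{\sum_{i=1}^m (E_i - \bar E)^2}, \qquad (39)$$

where $\bar E$ is the average of the thermoelectric voltage data.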
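Below is a small NumPy sketch of these criteria, applied to four made-up voltage values. The helper name is hypothetical, and the $m-1$ normalization of the standard deviation is an assumption.

```python
import numpy as np


def calibration_metrics(E, E_hat):
    """Performance criteria for a calibration polynomial.

    E: measured thermoelectric voltages; E_hat: values predicted by the polynomial.
    The standard deviation uses the sample (m - 1) normalization, an assumption here.
    """
    E, E_hat = np.asarray(E, dtype=float), np.asarray(E_hat, dtype=float)
    e = E - E_hat
    return {
        "max_error": e.max(),
        "min_error": e.min(),
        "mean_abs_error": np.mean(np.abs(e)),
        "std": np.std(e, ddof=1),
        "r2": 1.0 - np.sum(e**2) / np.sum((E - E.mean()) ** 2),
    }


m = calibration_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.0, 4.2])
```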

The calibration polynomial fitting with an independent coefficient is presented below.

4.1. Calibration Polynomial Fitting with Independent Coefficient

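The calibration polynomials for the R-type thermocouple with an independent coefficient are defined by

$$E(T) = a_0 + \sum_{j=1}^{n} a_jT^j, \qquad n = 1, \dots, 4, \qquad (40)$$

where $T$ is the temperature in °C and $E$ is the thermoelectric voltage. The polynomial fitting of functions (40) by the LS and TLS methods was solved by Equations (3) and (10), respectively.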

For the OD method, the polynomial fitting of degree $n = 1$ was carried out by applying Algorithm 1, which uses the analytic Formulas (20) and (21) and the second derivative test of Definition 1. For degree $n = 2$, the nonlinear minimization of functional (27) was solved by applying Algorithm 2, which uses the fminsearch routine from MATLAB. Finally, the polynomial fitting of degrees $n = 3$ and $n = 4$ was solved by applying Algorithm 3, which uses the fzero and fminsearch routines.

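Algorithm 1. Polynomial fitting of degree 1 by OD method.

- Input: data $(x_i, y_i)$, $i = 1, \dots, m$.

- Output: parameters $a_0$, $a_1$.

- Step 1. Compute the two critical points of (15) with Formulas (20) and (21).

- Step 2. Evaluate the second partial derivatives of (15) at each critical point.

- Step 3. If the conditions of Definition 1 for a local minimum hold at a critical point, that point is a local minimum.

- Step 4. Output $a_0$, $a_1$.

Algorithm 2. Polynomial fitting of degree 2 by OD method.

- Input: data $(x_i, y_i)$; initial approximation of the parameters; optimization options TolFun, TolX, MaxIter, MaxFunEvals.

- Output: parameters $a_0$, $a_1$, $a_2$.

- function fun: evaluates the functional (27), with $z_i$ given by Cardano's formula (26).

- Step 1. Call fminsearch.

- Step 2. Output $a_0$, $a_1$, $a_2$.

Algorithm 3. Polynomial fitting of degree $n \geq 3$ by OD method.

- Input: data $(x_i, y_i)$; initial approximation of the orthogonality condition solution; initial approximation of the parameters; optimization options TolFun, TolX, MaxIter, MaxFunEvals.

- Output: parameters P.

- function funZ: evaluates the orthogonality condition (13) for one observation.

- function fun: solves funZ = 0 with fzero for each observation and evaluates the functional (30).

- Step 1. Call fminsearch.

- Step 2. Output P.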

Using Algorithms 1–3 and the long output display format, the calibration polynomials of degrees 1 to 4 obtained by the LS, TLS and OD methods are presented in Table 1, Table 2, Table 3 and Table 4, respectively. The performance of the calibration polynomials is presented in Table 5.

Table 1.

Calibration polynomial of degree 1.

Table 2.

Calibration polynomial of degree 2.

Table 3.

Calibration polynomial of degree 3.

Table 4.

Calibration polynomial of degree 4.

Table 5.

Prediction performance of the calibration polynomials.

The sets of parameters in Table 1, Table 2, Table 3 and Table 4 are all distinct, so each method yields a different calibration polynomial. With a shorter output display format, however, some of the estimated parameters would appear identical.

The polynomial fitting without the independent coefficient is presented below.

4.2. Calibration Polynomial Fitting without Independent Coefficient

The calibration polynomials for the R-type thermocouple without an independent coefficient are defined by

$$E(T) = \sum_{j=1}^{n} a_jT^j, \qquad n = 1, \dots, 4. \qquad (41)$$

These polynomials were fitted to the observations of temperature and thermoelectric voltage for the temperature range −50 °C to 1064 °C. The calibration polynomials of degrees 1 to 4 without an independent coefficient obtained by the LS, TLS and OD methods are presented in Table 6, Table 7, Table 8 and Table 9, respectively. The performance of these calibration polynomials is shown in Table 10.

Table 6.

Calibration polynomial of degree 1 without independent coefficient.

Table 7.

Calibration polynomial of degree 2 without independent coefficient.

Table 8.

Calibration polynomial of degree 3 without independent coefficient.

Table 9.

Calibration polynomial of degree 4 without independent coefficient.

Table 10.

Prediction performance of the calibration polynomials without independent coefficient.

From Table 6, regardless of the output display format, the estimates of $a_1$ obtained by the TLS and OD methods are the same. On the other hand, the sets of parameters in Table 7, Table 8 and Table 9 are distinct. Hence, consistent with the analysis of the straight line in Section 3, the TLS method also minimizes the orthogonal distances only when a polynomial of degree 1 without an independent coefficient is considered.

5. Discussion

This article presents the OD method for the polynomial fitting problem, whose minimization criterion is the sum of the squares of the orthogonal distances from the data points to the polynomial curve. Hence, the resulting functional, and therefore the minimization problem, is nonlinear. Applying the OD method to fit calibration polynomials of an R-type thermocouple yielded polynomials different from those of the LS and TLS methods. However, for the calibration polynomial of degree 1 without an independent coefficient, the OD and TLS methods obtain the same estimates. An important aspect to consider is the output display format of the computations: with a short output format, some results of the OD and TLS methods would appear identical. On the other hand, from a practical point of view, polynomial fitting by the OD method requires computing the roots of equations of degree $2n - 1$ and solving a nonlinear minimization. The method can therefore be implemented with different minimization and root-finding algorithms.

6. Conclusions

This work presented two classical methods for solving the polynomial fitting problem, the least squares (LS) and total least squares (TLS) methods. Then, using elementary vector geometry, the orthogonal distances (OD) method for solving the polynomial fitting problem of degree n was developed. All the methods used in this work have advantages and disadvantages. The LS and TLS solutions are easier to obtain than the OD solution, since they are given by Equations (3) and (10), respectively. The OD solution for polynomial fitting of degree n > 1 requires solving two numerical problems. First, a real-root-finding routine is applied to the equation of degree 2n − 1 resulting from the orthogonality condition (13); since its degree is odd, it has at least one real root. Then, a nonlinear minimization routine is applied to the functional of the sum of the squares of the orthogonal distances (30). The polynomial fitting problem of degree 1 by the OD method is solved by applying the analytic Formulas (20) and (21) and the second derivative test of Definition 1.

On the other hand, the LS and TLS problems can be ill-conditioned [10,12,39], which can lead to inaccurate estimates; furthermore, the TLS method has nongeneric problems [35,36,37]. Since the OD method uses a different minimization criterion than the LS and TLS methods, it represents an alternative for polynomial fitting. Furthermore, the numerical experiments showed that the TLS and OD methods are not equivalent in general, and that the performance criteria of the LS and OD methods are similar. However, in the cases studied, the TLS and OD methods obtain the same estimates when the polynomial of degree 1 without an independent coefficient is considered, and therefore their performance criteria are also equal. When the independent coefficient is not considered, the performance criteria of the three methods are similar.

Finally, since the minimization of the OD functional is nonlinear and numerical computations of roots are also needed, the implementation of the OD method requires greater computational effort and efficient algorithms.

Finally, future works include applying the calibration curves obtained in practical applications and solving polynomial fitting problems from different disciplines with the OD method, using different minimization and root-finding algorithms.

Author Contributions

Conceptualization, C.V.-J.; methodology, C.V.-J.; software, L.A.C.-C.; validation, L.A.C.-C. and Y.L.-H.; formal analysis, L.A.C.-C.; investigation, S.I.P.-R. and C.M.M.-V.; data curation, Y.L.-H.; writing—original draft preparation, L.A.C.-C.; writing—review and editing, L.A.C.-C., S.I.P.-R. and C.M.M.-V.; visualization, S.I.P.-R., Y.L.-H. and C.M.M.-V.; supervision, C.V.-J.; project administration, L.A.C.-C.; funding acquisition, C.V.-J., L.A.C.-C., S.I.P.-R., Y.L.-H. and C.M.M.-V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Secretaría de Investigación y Posgrado SIP IPN under grants 20221223, 20227019, 20221135 and 20220039.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We are greatly indebted to the anonymous referees for their useful suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bard, Y. Nonlinear Parameter Estimation; Academic Press: New York, NY, USA, 1974.

- Lancaster, P.; Salkauskas, K. Curve and Surface Fitting: An Introduction; Academic Press: London, UK, 1986.

- Chen, A.; Chen, C. Evaluation of piecewise polynomial equations for two types of thermocouples. Sensors 2013, 13, 17084–17097.

- Chen, C.; Weng, Y.K.; Shen, T.C. Performance evaluation of an infrared thermocouple. Sensors 2010, 10, 10081–10094.

- Izonin, I.; Tkachenko, R.; Kryvinska, N.; Tkachenko, P. Multiple linear regression based on coefficients identification using non-iterative SGTM neural-like structure. In International Work-Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2019; pp. 467–479.

- Chen, A.; Chen, H.Y.; Chen, C. A software improvement technique for platinum resistance thermometers. Instruments 2020, 4, 15.

- Zheng, J.; Hu, G.; Ji, X.; Qin, X. Quintic generalized Hermite interpolation curves: Construction and shape optimization using an improved GWO algorithm. Comput. Appl. Math. 2022, 41, 1–29.

- Abdulle, A.; Wanner, G. 200 years of least squares method. Elem. Math. 2002, 57, 45–60.

- Lawson, C.L.; Hanson, R.J. Solving Least Squares Problems; SIAM: Philadelphia, PA, USA, 1995.

- Björck, Å. Numerical Methods for Least Squares Problems; SIAM: Philadelphia, PA, USA, 1996.

- Golub, G.H.; Van Loan, C.F. An analysis of the total least squares problem. SIAM J. Numer. Anal. 1980, 17, 883–893.

- Van Huffel, S.; Vandewalle, J. The Total Least Squares Problem: Computational Aspects and Analysis; SIAM: Philadelphia, PA, USA, 1991.

- Deming, W.E. Statistical Adjustment of Data; John Wiley & Sons: New York, NY, USA, 1943.

- Adcock, R.J. A problem in least squares. Analyst 1878, 5, 53–54.

- Pearson, K. LIII. On lines and planes of closest fit to systems of points in space. Lond. Edinb. Dublin Philos. Mag. J. Sci. 1901, 2, 559–572.

- Petras, I.; Podlubny, I. Least squares or least circles? A comparison of classical regression and orthogonal regression. Chance 2010, 23, 38–42.

- Scariano, S.M.; Barnett, W., II. Contrasting total least squares with ordinary least squares part I: Basic ideas and results. Math. Comput. Educ. 2003, 37, 141–158.

- Smith, D.; Pourfarzaneh, M.; Kamel, R. Linear regression analysis by Deming’s method. Clin. Chem. 1980, 26, 1105–1106.

- Linnet, K. Performance of Deming regression analysis in case of misspecified analytical error ratio in method comparison studies. Clin. Chem. 1998, 44, 1024–1031.

- Cantera, L.A.C.; Luna, L.; Vargas-Jarillo, C.; Garrido, R. Parameter estimation of a linear ultrasonic motor using the least squares of orthogonal distances algorithm. In Proceedings of the 16th International Conference on Electrical Engineering, Computing Science and Automatic Control, Mexico City, Mexico, 11–13 September 2019.

- Luna, L.; Lopez, K.; Cantera, L.; Garrido, R.; Vargas, C. Parameter estimation and delay-based control of a linear ultrasonic motor. In Robótica y Computación. Nuevos Avances; Castro-Liera, I., Cortés-Larinaga, M., Eds.; Instituto Tecnológico de La Paz: La Paz, Mexico, 2020; pp. 9–15.

- Garrity, K. NIST ITS-90 Thermocouple Database–SRD 60. 2000. Available online: https://data.nist.gov/od/id/ECBCC1C1302A2ED9E04306570681B10748 (accessed on 28 March 2022).

- Åström, K.J.; Eykhoff, P. System identification—A survey. Automatica 1971, 7, 123–162.

- Englezos, P.; Kalogerakis, N. Applied Parameter Estimation for Chemical Engineers; CRC Press: Boca Raton, FL, USA, 2000.

- Ding, F.; Shi, Y.; Chen, T. Performance analysis of estimation algorithms of nonstationary ARMA processes. IEEE Trans. Signal Process. 2006, 54, 1041–1053.

- Liu, P.; Liu, G. Multi-innovation least squares identification for linear and pseudo-linear regression models. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2010, 40, 767–778.

- Sun, X.; Ji, J.; Ren, B.; Xie, C.; Yan, D. Adaptive forgetting factor recursive least square algorithm for online identification of equivalent circuit model parameters of a lithium-ion battery. Energies 2019, 12, 2242.

- Li, M.; Liu, X. Maximum likelihood hierarchical least squares-based iterative identification for dual-rate stochastic systems. Int. J. Adapt. Control Signal Process. 2021, 35, 240–261.

- Zhang, X.; Ding, F. Adaptive parameter estimation for a general dynamical system with unknown states. Int. J. Robust Nonlinear Control 2020, 30, 1351–1372.

- Kang, Z.; Ji, Y.; Liu, X. Hierarchical recursive least squares algorithms for Hammerstein nonlinear autoregressive output-error systems. Int. J. Adapt. Control Signal Process. 2021, 35, 2276–2295.

- Burden, R.; Faires, J.; Burden, A. Numerical Analysis; Cengage Learning: Boston, MA, USA, 2016.

- Strang, G. Introduction to Linear Algebra; Wellesley-Cambridge Press: Wellesley, MA, USA, 2016.

- Eckart, C.; Young, G. The approximation of one matrix by another of lower rank. Psychometrika 1936, 1, 211–218.

- Mirsky, L. Symmetric gauge functions and unitarily invariant norms. Q. J. Math. 1960, 11, 50–59.

- Van Huffel, S.; Vandewalle, J. Analysis and solution of the nongeneric total least squares problem. SIAM J. Matrix Anal. Appl. 1988, 9, 360–372.

- Van Huffel, S. On the significance of nongeneric total least squares problems. SIAM J. Matrix Anal. Appl. 1992, 13, 20–35.

- Van Huffel, S.; Zha, H. The total least squares problem. In Handbook of Statistics; Elsevier: Amsterdam, The Netherlands, 1993; Volume 9, pp. 377–408.

- Uspensky, J.V. Theory of Equations; McGraw-Hill: New York, NY, USA, 1963.

- Atkinson, K.E. An Introduction to Numerical Analysis; John Wiley & Sons: New York, NY, USA, 2008.

- Dennis, J.E., Jr.; Schnabel, R.B. Numerical Methods for Unconstrained Optimization and Nonlinear Equations; SIAM: Philadelphia, PA, USA, 1996.

- MathWorks. Optimization Toolbox User’s Guide; MathWorks: Natick, MA, USA, 2020.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).