Deep Large-Margin Rank Loss for Multi-Label Image Classification

Abstract

1. Introduction

2. Related Works

2.1. Multi-Label Image Classification

2.2. Large-Margin Classification

3. Method

3.1. Notations

3.2. Large-Margin Ranking Loss

3.3. Negative Sampling

4. Discussion

4.1. Implementation Details and Baselines

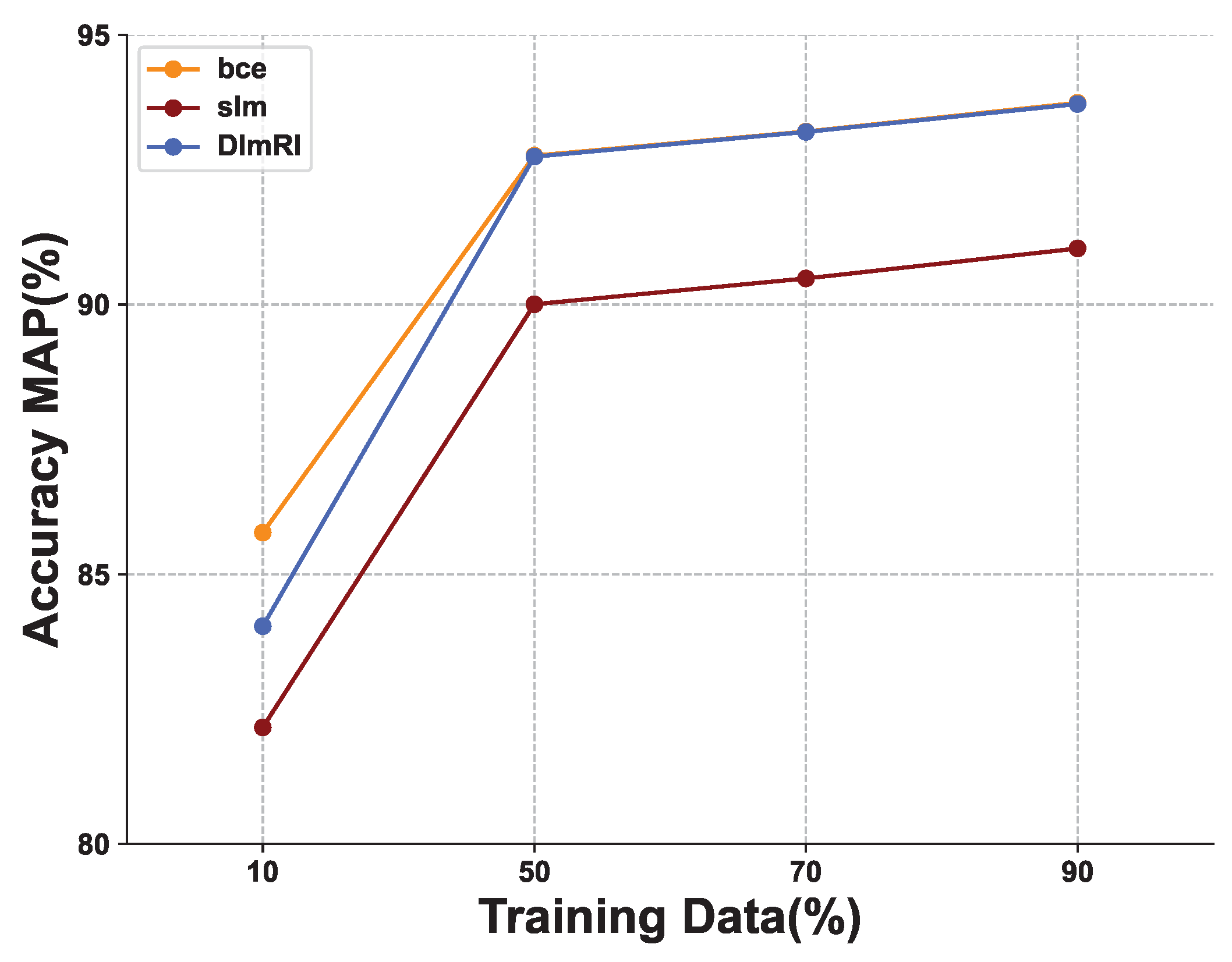

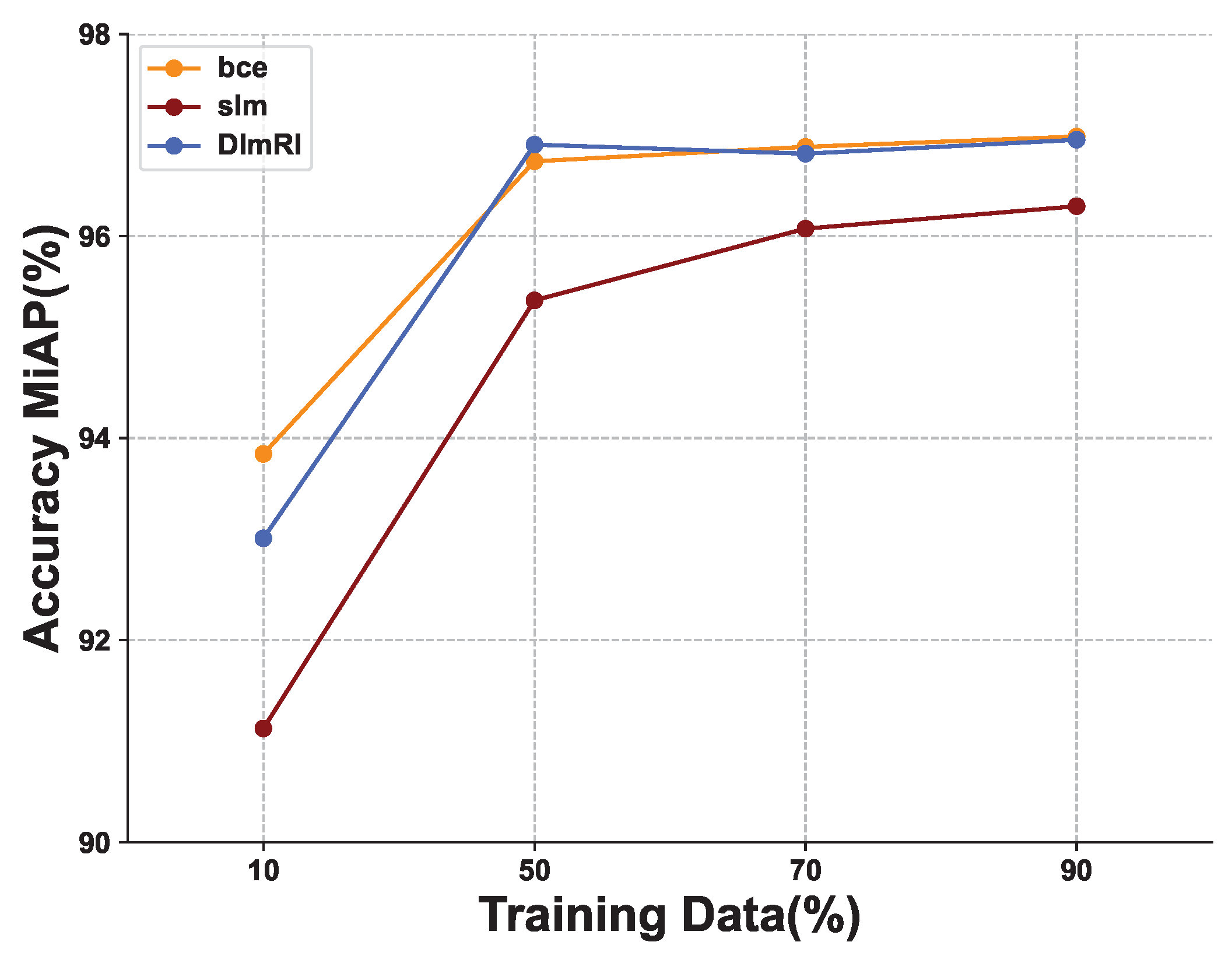

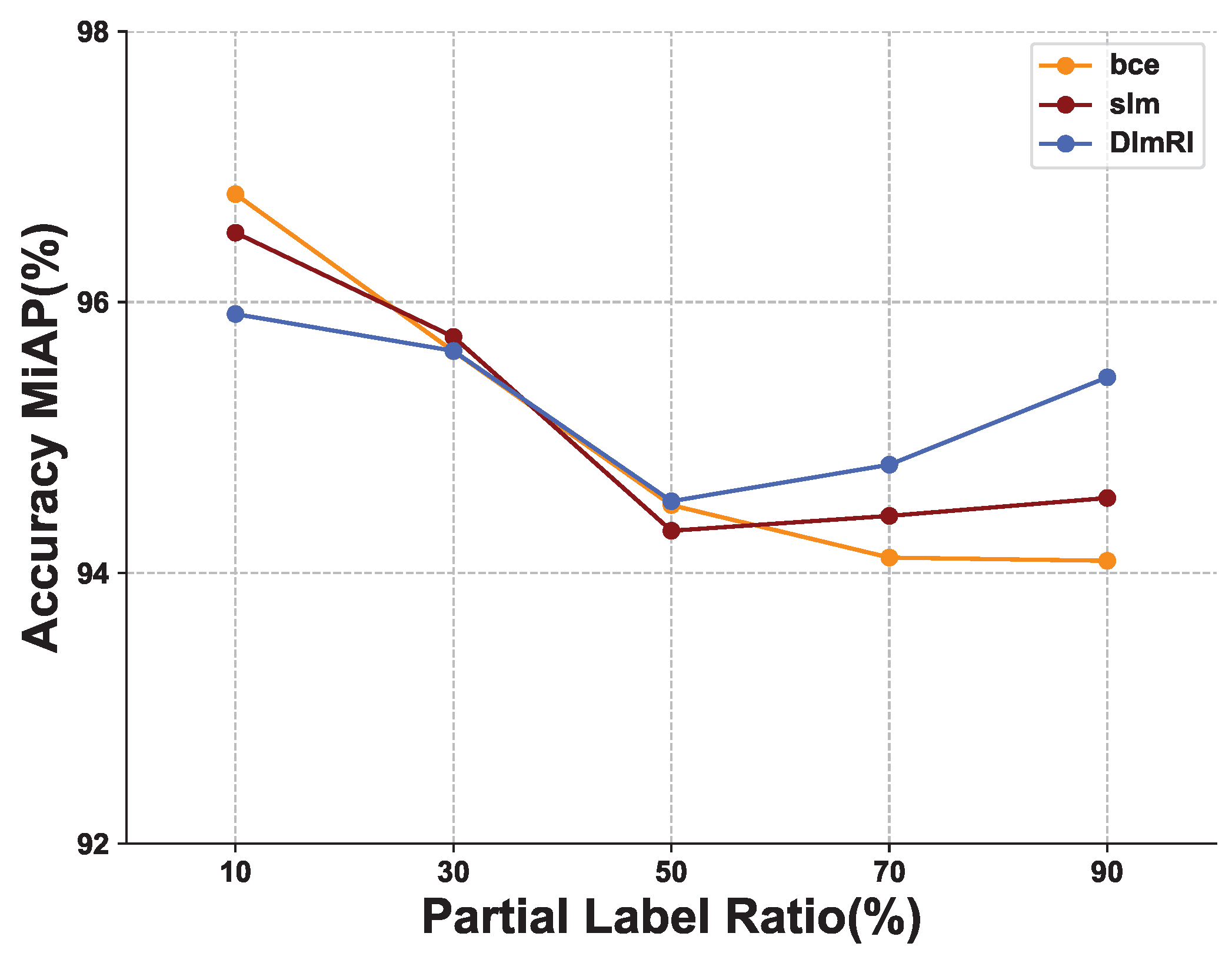

4.2. Results on VOC2007 Dataset

4.3. Results on MS-COCO Dataset

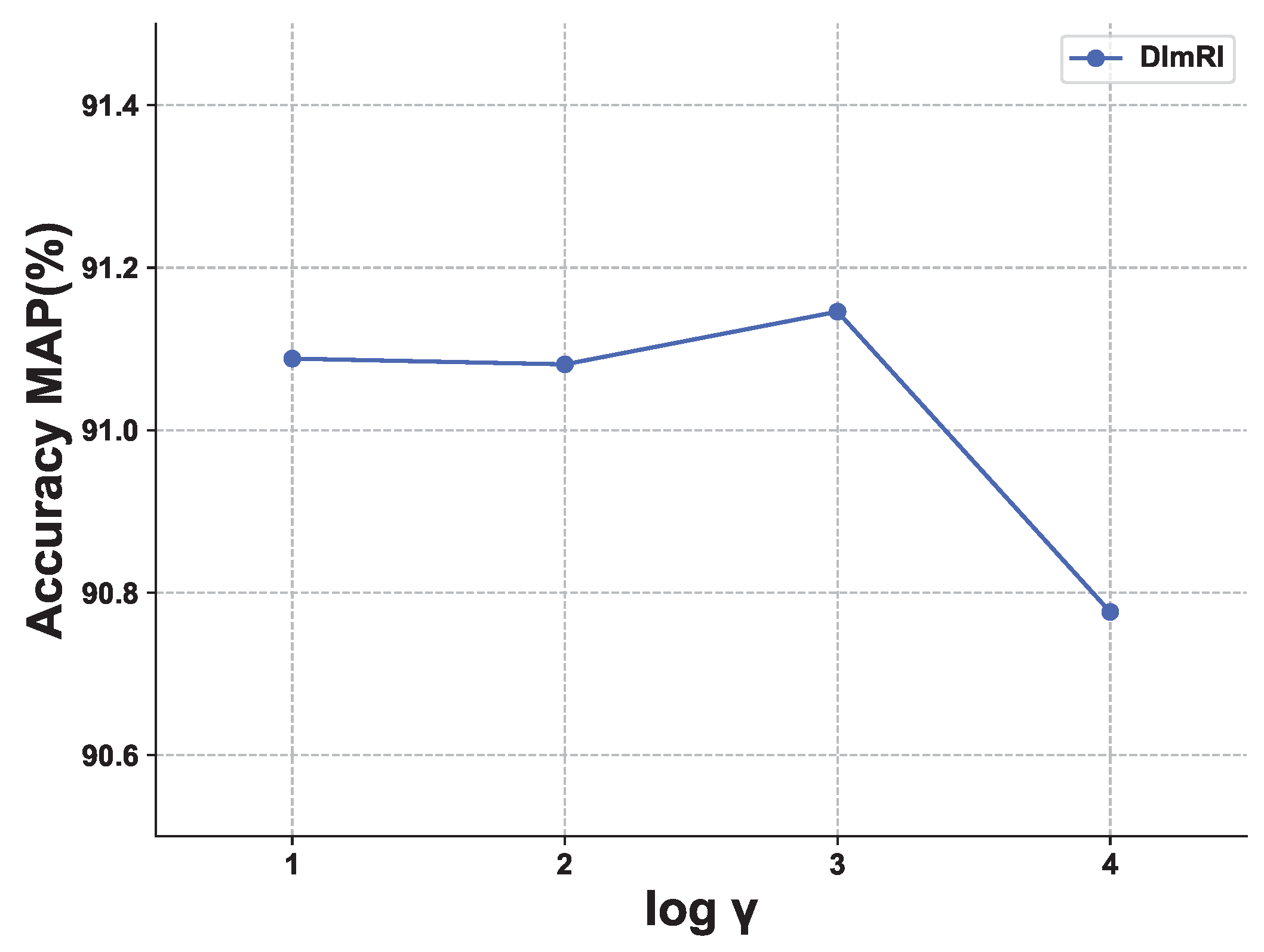

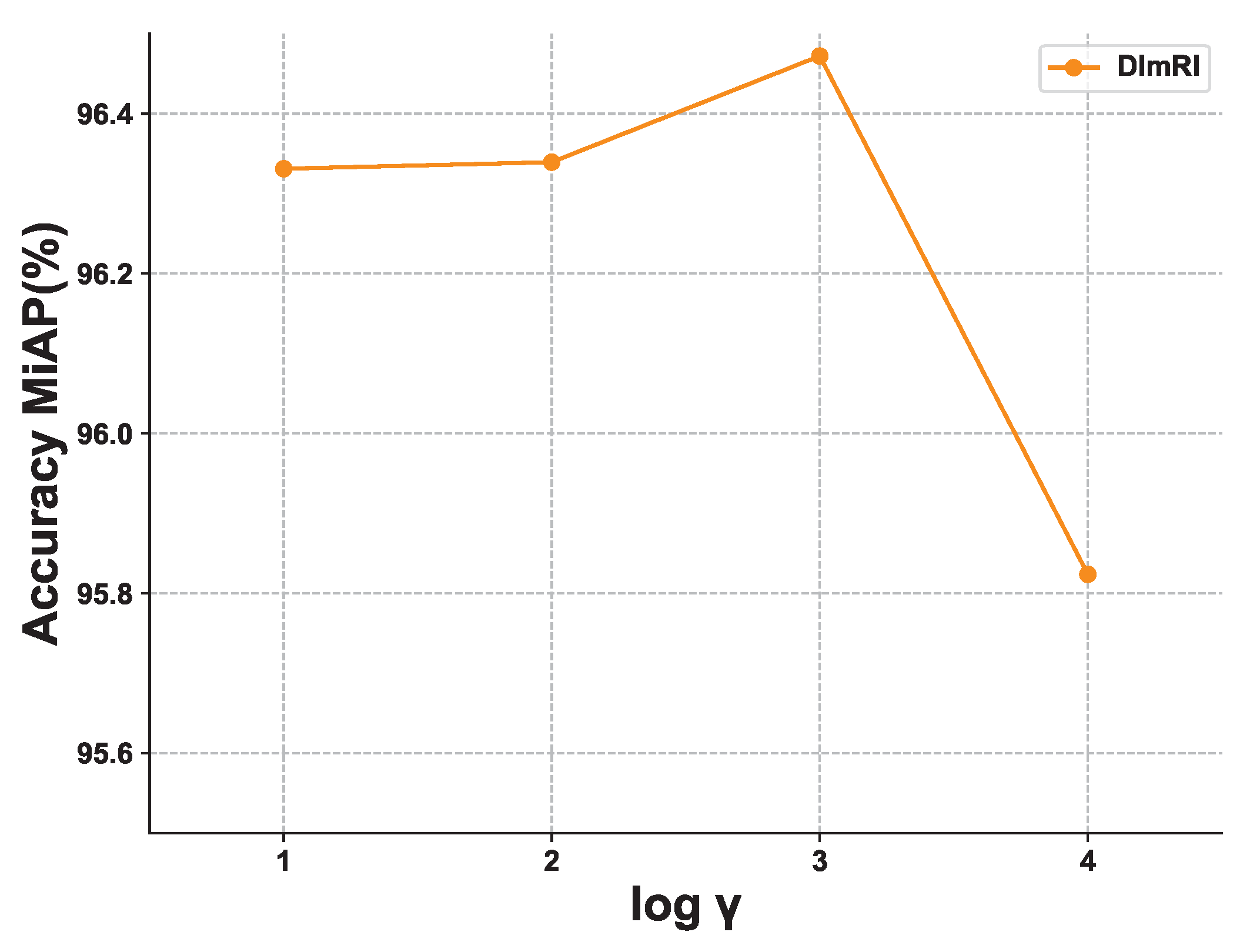

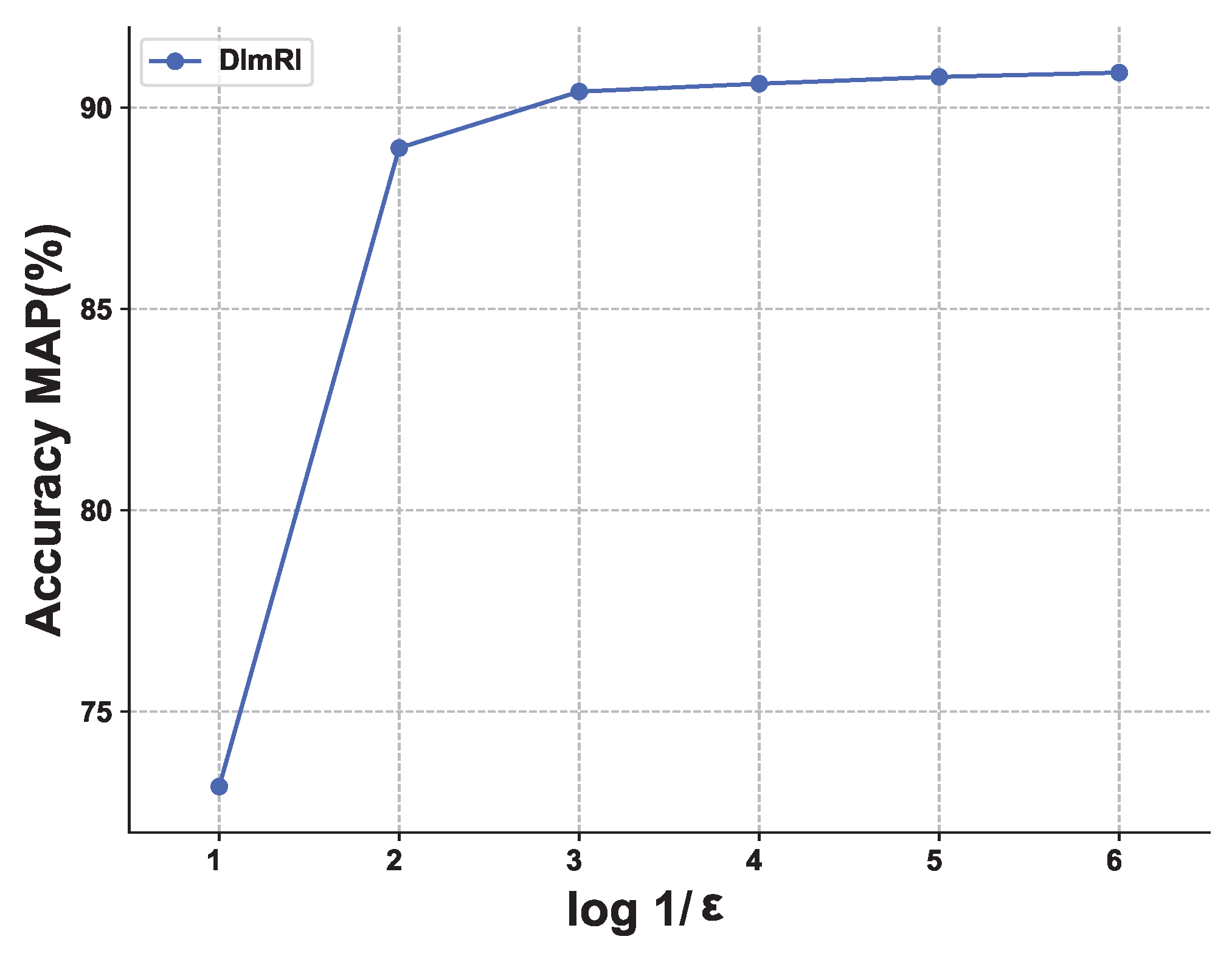

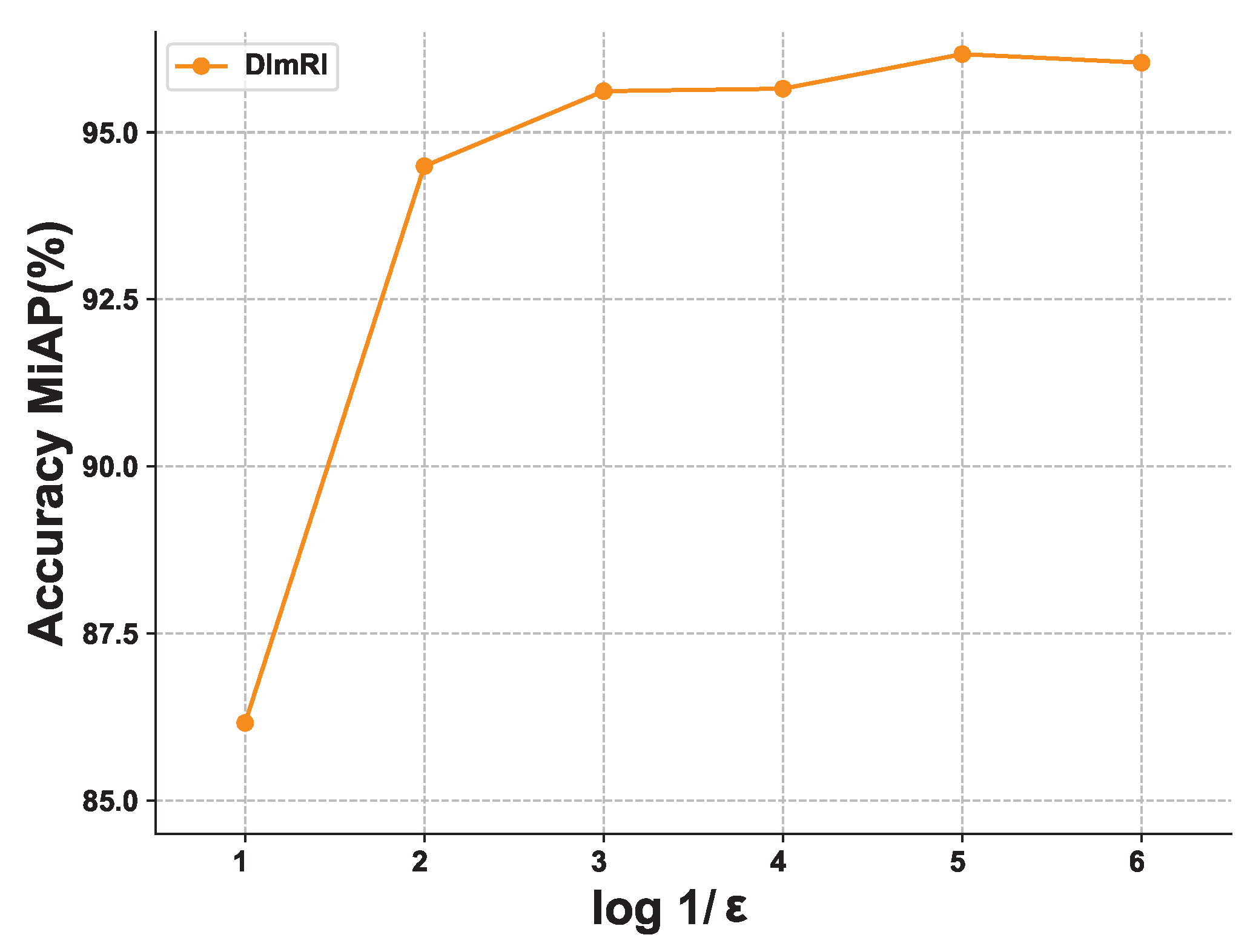

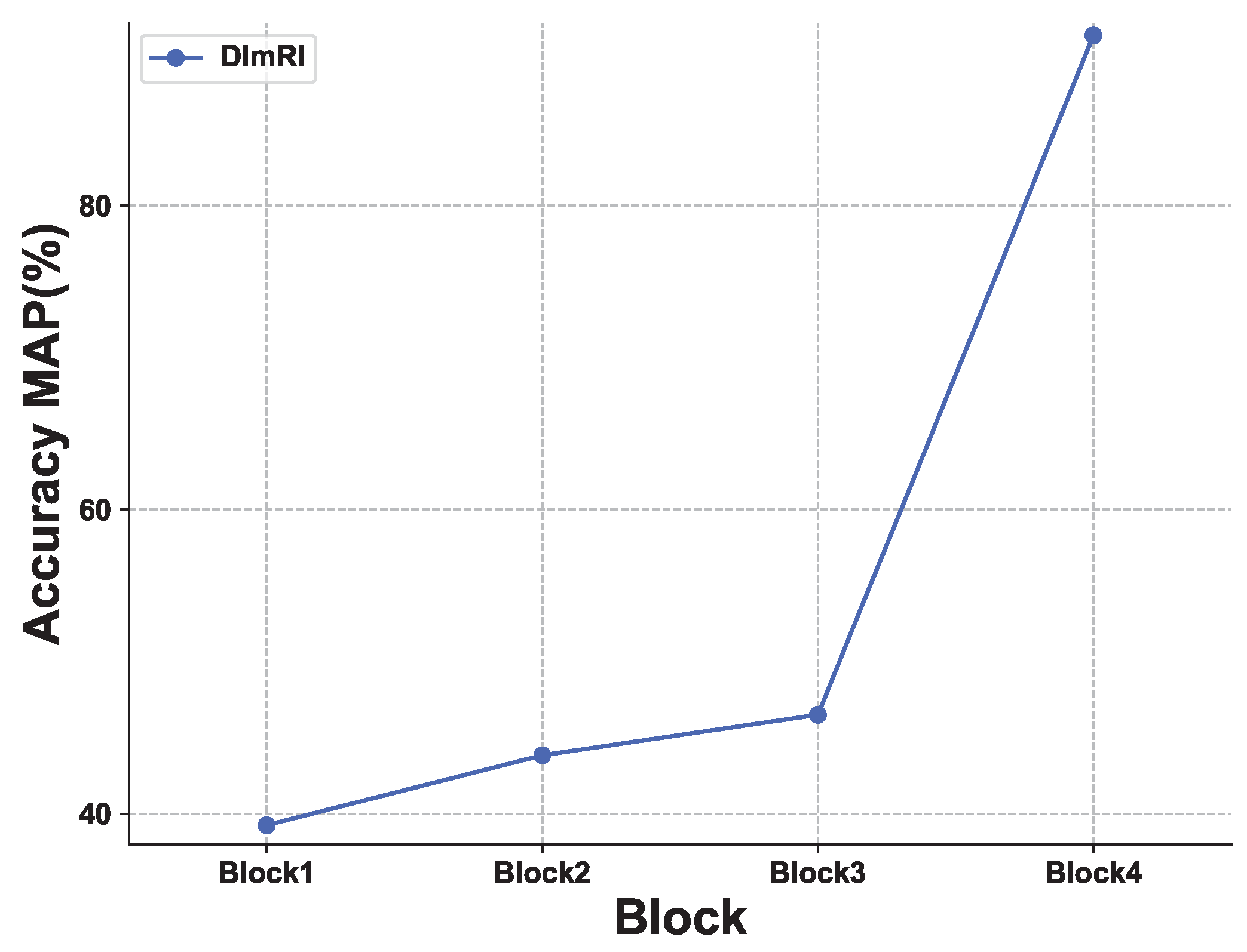

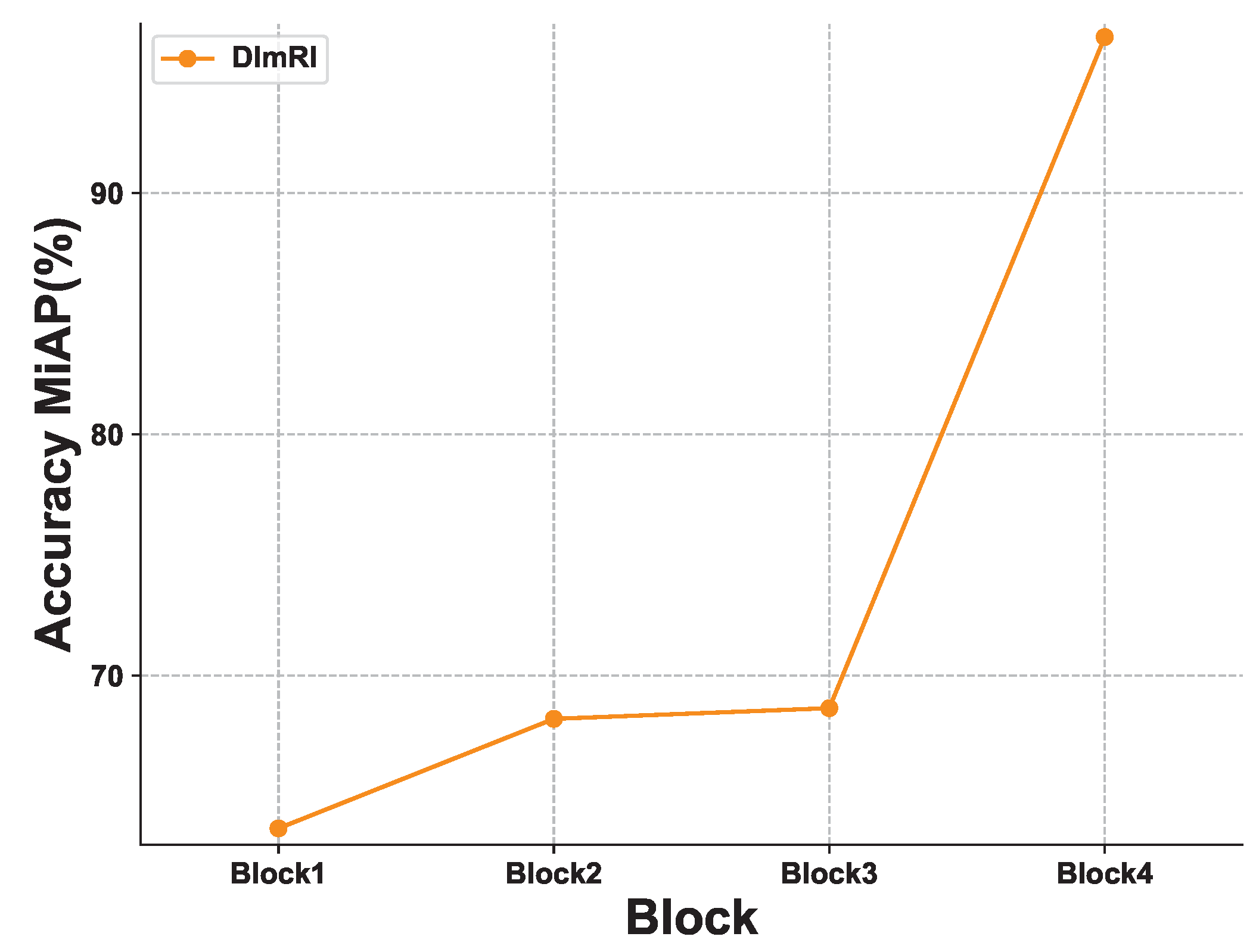

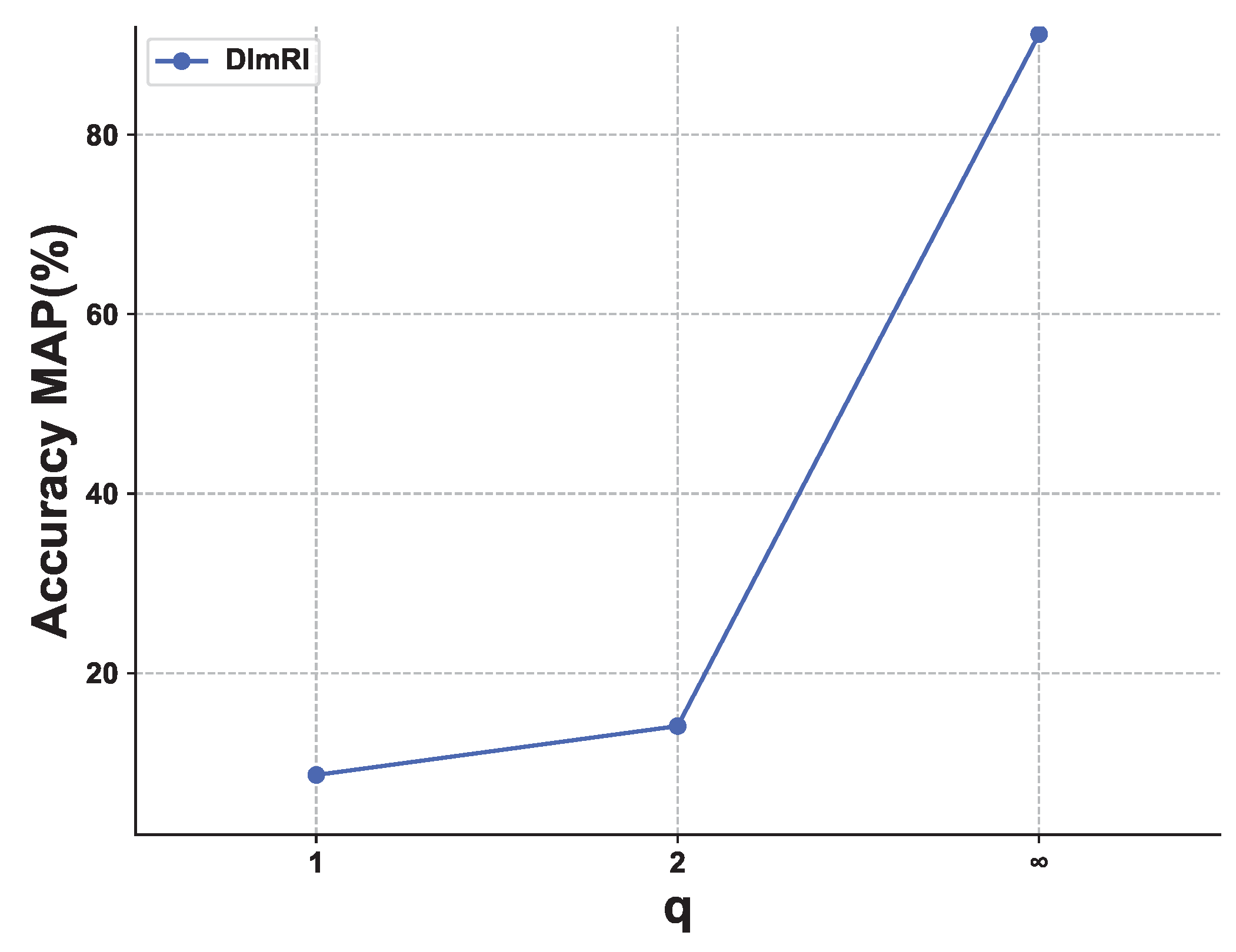

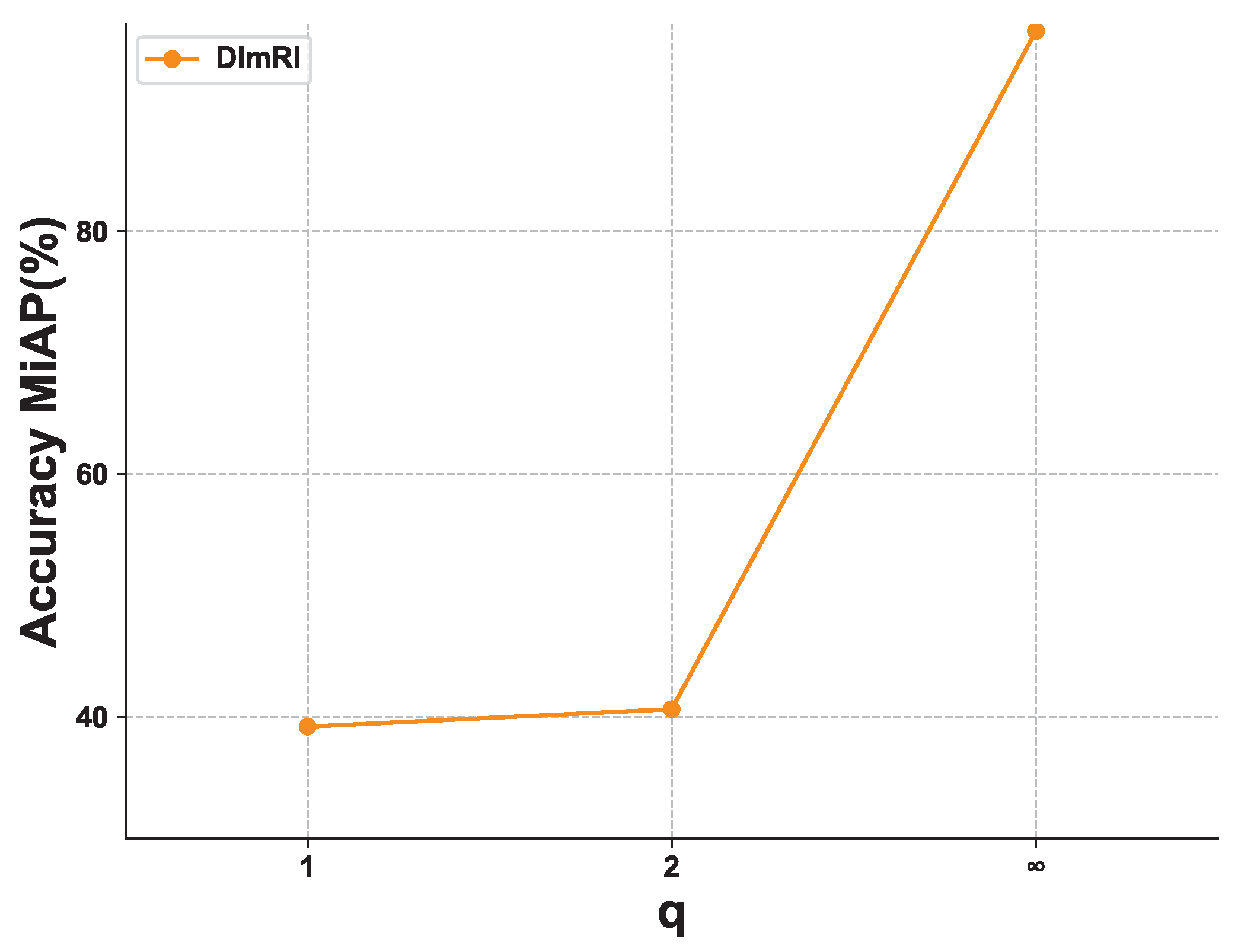

4.4. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chen, L.; Zhan, W.; Tian, W.; He, Y.; Zou, Q. Deep integration: A multi-label architecture for road scene recognition. IEEE Trans. Image Process. 2019, 28, 4883–4898.

- Chen, B.; Zhang, Z.; Lu, Y.; Chen, F.; Lu, G.; Zhang, D. Semantic-interactive graph convolutional network for multilabel image recognition. IEEE Trans. Syst. Man Cybern. Syst. 2021, 52, 4887–4899.

- Ge, Z.; Mahapatra, D.; Sedai, S.; Garnavi, R.; Chakravorty, R. Chest X-rays classification: A multi-label and fine-grained problem. arXiv 2018, arXiv:1807.07247.

- Gérardin, C.; Wajsbürt, P.; Vaillant, P.; Bellamine, A.; Carrat, F.; Tannier, X. Multilabel classification of medical concepts for patient clinical profile identification. Artif. Intell. Med. 2022, 128, 102311.

- Boutell, M.R.; Luo, J.; Shen, X.; Brown, C.M. Learning multi-label scene classification. Pattern Recognit. 2004, 37, 1757–1771.

- Tsoumakas, G.; Vlahavas, I. Random k-labelsets: An ensemble method for multilabel classification. In European Conference on Machine Learning; Springer: Berlin/Heidelberg, Germany, 2007; pp. 406–417.

- Zhang, M.L.; Zhou, Z.H. ML-KNN: A lazy learning approach to multi-label learning. Pattern Recognit. 2007, 40, 2038–2048.

- Elisseeff, A.; Weston, J. A kernel method for multi-labelled classification. Adv. Neural Inf. Process. Syst. 2001, 14, 681–687.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 886–893.

- Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 1996, 29, 51–59.

- Wang, J.; Yang, Y.; Mao, J.; Huang, Z.; Huang, C.; Xu, W. CNN-RNN: A unified framework for multi-label image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2285–2294.

- Chen, Z.M.; Wei, X.S.; Wang, P.; Guo, Y. Multi-label image recognition with graph convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5177–5186.

- Xu, Z.; Liu, Y.; Li, C. Distributed information-theoretic semisupervised learning for multilabel classification. IEEE Trans. Cybern. 2022, 52, 821–835.

- Ma, J.; Chiu, B.C.Y.; Chow, T.W.S. Multilabel classification with group-based mapping: A framework with local feature selection and local label correlation. IEEE Trans. Cybern. 2020, 52, 4596–4610.

- Elsayed, G.; Krishnan, D.; Mobahi, H.; Regan, K.; Bengio, S. Large margin deep networks for classification. Adv. Neural Inf. Process. Syst. 2018, 31, 842–852.

- Chen, L.; Wang, R.; Yang, J.; Xue, L.; Hu, M. Multi-label image classification with recurrently learning semantic dependencies. Vis. Comput. 2019, 35, 1361–1371.

- Liu, F.; Xiang, T.; Hospedales, T.M.; Yang, W.; Sun, C. Semantic regularisation for recurrent image annotation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2872–2880.

- Meng, Q.; Zhang, W. Multilabel image classification with attention mechanism and graph convolutional networks. In Proceedings of the ACM Multimedia Asia, Beijing, China, 16–18 December 2019; pp. 1–6.

- Wu, X.; Chen, Q.; Li, W.; Xiao, Y.; Hu, B. AdaHGNN: Adaptive hypergraph neural networks for multi-label image classification. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 284–293.

- Oquab, M.; Bottou, L.; Laptev, I.; Sivic, J. Is object localization for free? Weakly-supervised learning with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 685–694.

- Gou, J.; Sun, L.; Du, L.; Ma, H.; Xiong, T.; Ou, W.; Zhan, Y. A representation coefficient-based k-nearest centroid neighbor classifier. Expert Syst. Appl. 2022, 194, 116529.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

- Martins, A.; Astudillo, R. From softmax to sparsemax: A sparse model of attention and multilabel classification. In Proceedings of the International Conference on Machine Learning (PMLR 2016), New York, NY, USA, 19–24 June 2016; pp. 1614–1623.

- Zhu, K.; Wu, J. Residual attention: A simple but effective method for multi-label recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 184–193.

- Ma, Z.; Chen, S. A Similarity-based Framework for Classification Task. IEEE Trans. Knowl. Data Eng. 2022.

- Izadinia, H.; Russell, B.C.; Farhadi, A.; Hoffman, M.D.; Hertzmann, A. Deep classifiers from image tags in the wild. In Proceedings of the 2015 Workshop on Community-Organized Multimodal Mining: Opportunities for Novel Solutions, Brisbane, Australia, 26–30 October 2015; pp. 13–18.

- Xie, M.K.; Huang, S.J. Partial multi-label learning with noisy label identification. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3676–3689.

- Durand, T.; Mehrasa, N.; Mori, G. Learning a deep ConvNet for multi-label classification with partial labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 647–657.

- Huynh, D.; Elhamifar, E. Interactive multi-label CNN learning with partial labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9423–9432.

- Gong, Y.; Jia, Y.; Leung, T.; Toshev, A.; Ioffe, S. Deep convolutional ranking for multilabel image annotation. arXiv 2013, arXiv:1312.4894.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

- Sun, S.; Chen, W.; Wang, L.; Liu, T.-Y. Large margin deep neural networks: Theory and algorithms. arXiv 2015, arXiv:1506.05232.

- Sokolić, J.; Giryes, R.; Sapiro, G.; Rodrigues, M.R.D. Robust large margin deep neural networks. IEEE Trans. Signal Process. 2017, 65, 4265–4280.

- Li, Y.; Song, Y.; Luo, J. Improving pairwise ranking for multi-label image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3617–3625.

- Everingham, M.; Eslami, S.M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Baeza-Yates, R.; Ribeiro-Neto, B. Modern Information Retrieval; ACM Press: New York, NY, USA, 1999; Volume 463.

- Xie, M.K.; Huang, S.J. CCMN: A general framework for learning with class-conditional multi-label noise. IEEE Trans. Pattern Anal. Mach. Intell. 2022.

- Imambi, S.; Prakash, K.B.; Kanagachidambaresan, G.R. PyTorch. In Programming with TensorFlow; Springer: Cham, Switzerland, 2021; pp. 87–104.

| Methods | Noisy Ratio 5% | Noisy Ratio 10% | Noisy Ratio 15% | Noisy Ratio 20% |
|---|---|---|---|---|
| bce (MAP) [31] | 88.79 | 85.50 | 82.83 | 80.05 |
| slm (MAP) [41] | 88.70 | 85.48 | 83.13 | 79.96 |
| DlmRl (MAP) | 90.77 | 90.57 | 90.24 | 89.90 |
| bce (MiAP) [31] | 94.74 | 93.28 | 91.69 | 89.82 |
| slm (MiAP) [41] | 94.70 | 93.19 | 91.68 | 89.94 |
| DlmRl (MiAP) | 95.96 | 95.68 | 95.00 | 94.77 |
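The MAP rows in the table above can be read more easily with the metric spelled out. The following is a minimal sketch, assuming MAP denotes mean average precision in the usual retrieval sense: for each class, images are ranked by predicted score, precision is averaged over the ranks at which positive images occur, and the per-class values are then averaged. The function names `average_precision` and `mean_average_precision` are illustrative and do not come from the paper, and the exact evaluation protocol used there (including how MiAP is computed) may differ.

```python
from typing import List, Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AP for one class: mean of precision@k over the ranks k of positive images.

    Assumption: labels are 0/1 and ties in score keep their input order.
    """
    # Rank image indices from highest to lowest score.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits = 0
    precisions: List[float] = []
    for rank, idx in enumerate(order, start=1):
        if labels[idx] == 1:
            hits += 1
            precisions.append(hits / rank)
    # A class with no positive image contributes 0.0 in this sketch.
    return sum(precisions) / hits if hits else 0.0


def mean_average_precision(
    score_matrix: Sequence[Sequence[float]],
    label_matrix: Sequence[Sequence[int]],
) -> float:
    """Mean of per-class AP; row i is image i, column c is class c."""
    num_classes = len(score_matrix[0])
    aps = [
        average_precision(
            [row[c] for row in score_matrix],
            [row[c] for row in label_matrix],
        )
        for c in range(num_classes)
    ]
    return sum(aps) / num_classes


if __name__ == "__main__":
    # Toy example: 3 images, 2 classes (hypothetical values).
    toy_scores = [[0.9, 0.2], [0.8, 0.7], [0.1, 0.6]]
    toy_labels = [[1, 0], [0, 1], [1, 1]]
    print(mean_average_precision(toy_scores, toy_labels))
```

Multiplying the result by 100 gives a percentage on the same scale as the table entries.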

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, Z.; Li, Z.; Zhan, Y. Deep Large-Margin Rank Loss for Multi-Label Image Classification. Mathematics 2022, 10, 4584. https://doi.org/10.3390/math10234584


Chicago/Turabian Style: Ma, Zhongchen, Zongpeng Li, and Yongzhao Zhan. 2022. "Deep Large-Margin Rank Loss for Multi-Label Image Classification" Mathematics 10, no. 23: 4584. https://doi.org/10.3390/math10234584
