Abstract

Detecting vulnerabilities in programs is an important yet challenging problem in cybersecurity. Recent advances in natural language understanding enable data-driven research on automated code analysis to embrace Pre-trained Contextualized Models (PCMs). These models are pre-trained on large corpora and can be fine-tuned for various downstream tasks, but their feasibility and effectiveness for software vulnerability detection have not been systematically studied. In this paper, we explore six prevalent PCMs and compare them with three mainstream Non-Contextualized Models (NCMs) in terms of generating effective function-level representations for vulnerability detection. We found that, although the PCMs outperformed the NCMs in detection performance, training and fine-tuning PCMs were computationally expensive. The costs of deployment and inference are also considerable in practice, which may prevent the wide adoption of PCMs in this field. However, we discovered that, when the PCMs were compressed using knowledge distillation, they achieved similar detection performance with significantly improved efficiency compared with their uncompressed counterparts, using 40,000 synthetic C functions for fine-tuning and approximately 79,200 real-world C functions for training. Among the distilled PCMs, the distilled CodeBERT achieved the most cost-effective performance. Therefore, we propose a framework encapsulating the Distilled CodeBERT for end-to-end Vulnerable function Detection (named DistilVD). To examine the performance of the proposed framework in real-world scenarios, DistilVD was tested on four open-source real-world projects with a small amount of training data. Results showed that DistilVD outperformed the five baseline approaches. Further evaluations on multi-class vulnerability detection also confirmed the effectiveness of DistilVD for detecting various vulnerability types.

Keywords:

pre-trained contextualized embedding; function-level; vulnerability detection; model compression; knowledge distillation

MSC:

68T07

1. Introduction

Recent years have witnessed substantial growth in the number of disclosed software vulnerabilities [1]. Since 2017, the number of newly discovered vulnerabilities has exceeded 12,000 annually. In 2020, the number reached more than 18,000, the highest annual figure reported to date, and this excludes the vulnerabilities discovered internally in proprietary code [2,3]. This suggests that eliminating vulnerabilities prior to the release of software is challenging, and that effective and efficient vulnerability detection solutions are urgently needed. Once vulnerabilities are exploited by attackers, severe consequences such as data breaches may follow, leading to financial and social damage [3,4,5]. For example, in 2014, the “Heartbleed” vulnerability that existed in early versions of OpenSSL affected almost two-thirds of active websites worldwide. It is estimated that the cost of fixing this vulnerability could reach $500 million [6]. In 2017, a vulnerability in Apache Struts was exploited, compromising 143 million customers’ financial information.

To eliminate vulnerabilities before the deployment of software, researchers have proposed many approaches to automate the detection process. Among them, a line of studies utilizes data-driven and deep-learning techniques for vulnerability detection [7,8,9,10,11,12,13,14]. With deep-learning techniques, potentially vulnerable patterns can be extracted from source code or from code representations of different forms. Compared with conventional machine learning, deep-learning techniques have the potential to capture intrinsic patterns from complex data with improved generalization ability [1]. To generate meaningful representations for code tokens, word embedding models such as Word2Vec are applied. However, correctly capturing potentially vulnerable code patterns requires a deep learning algorithm to understand code semantics in context [1]. Conventional word embedding solutions, which are usually based on shallow neural networks, are incapable of generating rich representations of code tokens that reflect differences in their surrounding contexts [15]. To facilitate the understanding of code semantics, algorithms need to be contextual, capable of learning the meanings formed by identifier names and the code logic revealed by control flows and data flows. Thus, non-contextual neural models may fail to capture rich information for downstream code analysis tasks such as vulnerability detection.

Many recent advancements have been made by applying Pre-trained Contextualized Models (PCMs) to Natural Language Processing (NLP). Built on top of the deep contextualized embedding model Bidirectional Encoder Representations from Transformers (BERT) [16], a number of BERT-like models have been proposed to facilitate various language understanding tasks, achieving State-of-the-Art (SOTA) results. Motivated by these promising outcomes, the application of BERT-like models to code analysis has received much attention. However, the effectiveness and feasibility of applying PCMs to vulnerability detection have not been systematically studied. In this paper, we perform a comprehensive study to evaluate the performance and efficiency of six prevalent PCMs in terms of static function-level vulnerability detection. For performance evaluation, we explore three Non-Contextualized Models (NCMs), three PCMs, and three lightweight PCMs compressed using knowledge distillation, and compare their effectiveness in generating code representations for function-level vulnerability detection. These models are fine-tuned on 40,000 synthetic C functions and trained on around 79,200 real-world C function samples. For efficiency evaluation, we monitor the fine-tuning time and training time and examine how much efficiency is gained relative to the performance loss caused by compressing PCMs using knowledge distillation.

Finally, based on the performance and efficiency evaluation, we encapsulate the most cost-effective distilled PCM as a framework named the Distilled contextualized model for Vulnerability Detection (DistilVD). The framework is an end-to-end detector taking source code functions as the input, and the output is the probability of the corresponding input functions being vulnerable. To further evaluate the effectiveness of DistilVD on detecting vulnerable functions in real-world software projects and its capability of identifying multi-class vulnerabilities, we compare DistilVD with five existing vulnerability detection solutions. Results showed that DistilVD outperformed the baselines and achieved SOTA performance on multi-class vulnerability detection. Part of the dataset used in this paper is publicly available (https://cybercodeintelligence.github.io/CyberCI/, accessed on 26 November 2022).

In summary, the contributions of this paper are three-fold:

- We perform a comprehensive and systematic comparison of nine non-contextualized and contextualized models: three NCMs, namely Word2Vec [17], FastText [18], and Global Vectors for word representation (GloVe) [19]; three PCMs, namely Embeddings from Language Models (ELMo) [20,21], RoBERTa [22], and CodeBERT [23]; and three compressed PCMs, namely distilled RoBERTa, distilled CodeBERT, and TinyBERT [24]. Empirical results show that PCMs are much more effective than NCMs in producing useful representations, resulting in better performance on the downstream vulnerability detection task.

- Based on the evaluation results, we further compare PCMs and their distilled counterparts in terms of their cost-effectiveness for vulnerable function detection. We found that the distilled CodeBERT is faster in training and inference while maintaining performance similar to that of CodeBERT and the other models. Hence, we encapsulate the distilled CodeBERT as an end-to-end vulnerability detection framework, called DistilVD, which takes source code functions as inputs and outputs the probability of such functions being vulnerable. No code analysis is needed.

- Further performance evaluations are conducted to compare DistilVD with existing vulnerability detection systems for detecting vulnerabilities and identifying 10 different vulnerability types. Evaluation results show that DistilVD is the best-performing system for vulnerable function detection in most cases and achieves the best performance in identifying multi-class vulnerabilities.

The remainder of this paper is organized as follows: Section 2 presents related studies that applied deep contextual models for code analysis and recent studies applying deep learning for vulnerability detection. Section 3 presents the workflow of this study and the design decisions. The experiments for evaluating and comparing DistilVD with existing detection systems are presented in Section 4, and Section 6 concludes this paper.

2. Related Work

Many recent studies have demonstrated the potential and effectiveness of neural techniques for various code analysis tasks, including software vulnerability discovery. This section reviews existing studies that propose different embedding models for software code analysis and related work on vulnerability detection.

2.1. Embedding Models for Representing Software Code

The bimodality of software code, being executable by computers and readable by humans, enables the application of NLP techniques to code analysis [25]. Embedding techniques aim to convert software code tokens into meaningful vector representations, so that neural networks, which were originally designed to handle numeric data, can process software code that is “discrete” in nature. Mainstream code embeddings, such as Word2Vec, utilize distributed representations to capture the meanings of words distributed across the components of fixed-length vectors [26], allowing neural networks to learn rich meanings from code. Word2Vec has been applied by many existing studies [7,8,9,10,11,12,13,27,28] for learning vulnerable representations.

However, static or non-contextual embedding models are not able to generate different representations for the same code token appearing in different code contexts. To better learn contextual representations of words or code tokens, contextualized embedding methods have been proposed, enabling the embedding of a word to be generated according to its context. ELMo [20] is one of the representative contextual embeddings and utilizes multiple layers of bidirectional Long Short-Term Memory (LSTM) networks. Different LSTM layers can learn different representations and weights, allowing the model to generate various embeddings for words according to different contexts.

The Transformer [29] exhibits better potential for language understanding, particularly in dealing with long-range dependencies. BERT [16], which is equipped with multiple layers of the bidirectional Transformer encoder, has achieved SOTA performance in many NLP tasks. For code analysis, CodeBERT [23] was proposed, which trains a BERT-like model on a large code corpus covering six programming languages. To better utilize the structural information of data flow and control flow, GraphCodeBERT [30] was proposed. To facilitate code analysis tasks on a specific programming language, researchers proposed CuBERT [15], which is pre-trained on Python.

2.2. Software Vulnerability Detection

Data-driven and machine learning approaches have been widely adopted for detecting vulnerabilities. Conventional machine learning-based approaches usually require experts to extract features. For example, Lomio et al. [31] applied process metrics extracted from commit messages, such as the number of added lines, deleted lines, and added methods, as features. In contrast, deep-learning-based approaches can leverage the representation-learning capability of neural models for the automated extraction of high-level features, which can be purely data-driven. An early study applied a deep belief network to learn semantic patterns for detecting bugs in Java projects [32]. Later on, Bidirectional LSTM (Bi-LSTM) networks were applied for vulnerability detection on C projects [7,8,12,33,34,35], since an LSTM network in bidirectional form can better capture contextual dependencies in code sequences.

The convolutional structure of a Convolutional Neural Network (CNN) enables the network to extract features from small code contexts, which makes CNNs an alternative choice for extracting vulnerable features [11,36,37,38]. In addition, another line of studies uses customized network architectures for vulnerability detection. A Maximal Divergence Sequential Auto-Encoder (MDSAE) was proposed for extracting features from machine instruction sequences [39]. Choi et al. [40] proposed using a memory network, which has extra built-in memory blocks, to capture long-range dependencies for identifying buffer overflow vulnerabilities. Sestili et al. [41] later extended and improved the memory network so that it can operate on compilable code.

The aforementioned studies all work on sequences of code tokens, extracting flattened, sequential representations. Many researchers have argued that control and data flows are well-structured and can also reveal useful information for understanding the code semantics indicative of vulnerable patterns. Therefore, a number of studies utilize Graph Neural Networks (GNNs) for structural information extraction, including FUNDED [42], Devign [3], VulSPG [43], and Zhuang et al. [44]. However, these studies depend heavily on code analysis to obtain code graphs. In contrast, our work directly takes source code as input without involving any tools for code processing, which is more convenient in practice.

3. Background and Motivation

This section briefly presents the challenges faced by Deep Learning (DL)-based vulnerability detection approaches and motivations of the proposed framework.

3.1. Problem Formulation

A static vulnerable function detection problem can be formulated as a function-level vulnerability detector D, which takes a list of source code functions from a program as input and outputs a ranked list of the input functions based on their likelihood of being vulnerable. Let $F = \{f_1, f_2, \ldots, f_n\}$ be all C source code functions in a software project under detection. A detector achieves $D: f_i \mapsto [0, 1]$, where 1 refers to a detection result of being definitely vulnerable and 0 means being definitely non-vulnerable, such that $D(f_i)$ measures the probability of an input function $f_i$ containing a vulnerable code snippet. We treat $D(f_i)$ as a vulnerability score, which allows us to investigate only a small number of top-risk functions when time and resources are limited.

In this paper, we aim to find a detector D which contains a neural embedding model M and a feed-forward network C (e.g., a Multi-Layer Perceptron (MLP)). Given an input function $f_i$, a trained M generates a function representation $r_i = M(f_i)$ indicative of vulnerable patterns, which facilitates the feed-forward network to achieve: $C(r_i) \mapsto [0, 1]$.
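The composition of an embedding model and a feed-forward classifier, followed by ranking, can be illustrated with a minimal Python sketch. Everything in it is a hypothetical stand-in: `toy_embed` plays the role of M with two hand-made features, `toy_classifier` plays the role of C with made-up weights, and neither reflects the trained models evaluated in this paper.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def toy_embed(function_source: str) -> Vector:
    """Hypothetical stand-in for M: map source text to a 2-d feature vector."""
    tokens = function_source.split()
    n = max(len(tokens), 1)
    # Fraction of tokens that start with a (hand-picked) risky API name.
    risky = sum(tok.startswith(("strcpy", "memcpy", "gets")) for tok in tokens)
    return [risky / n, math.log1p(len(tokens))]


def toy_classifier(r: Vector) -> float:
    """Hypothetical stand-in for C: one linear layer followed by a sigmoid."""
    weights, bias = [8.0, 0.1], -1.0  # made-up parameters, not learned
    logit = sum(w * x for w, x in zip(weights, r)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


def detect(
    functions: Sequence[str],
    embed: Callable[[str], Vector] = toy_embed,
    classify: Callable[[Vector], float] = toy_classifier,
) -> List[Tuple[float, str]]:
    """Score every function with C(M(f)) and rank from most to least risky."""
    scored = [(classify(embed(f)), f) for f in functions]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


functions = [
    "int add ( int a , int b ) { return a + b ; }",
    "void copy ( char * d , char * s ) { strcpy ( d , s ) ; }",
]
ranked = detect(functions)
```

Because the output is a ranked list of scores in [0, 1], an analyst can inspect only the first few entries of `ranked` when time is limited.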

3.2. Vulnerable Pattern Learning

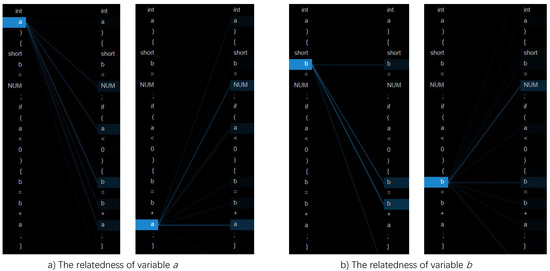

To capture potentially vulnerable patterns, a model needs to understand the different meanings of a code token based on its context in order to comprehend code semantics [1] and generate representations indicative of vulnerabilities. A code context can contain data flows and/or control flows with logical operations, variable declarations, assignments, calculations, etc. Therefore, it is important for the model to be contextual. A contextualized model can dynamically generate representations of polysemous words according to their contexts. In a code context, a variable can be assigned different values, and the assignment statements are crucial for analyzing a data flow. As Figure 1 [3] shows, the toy example presented in Figure 1b is vulnerable to integer overflow. One way to patch the vulnerability is to constrain variable a by placing an if statement so that the assignment to variable b is executed only when the value of a is non-positive. The meaning of variable a therefore changes after the conditional expression (i.e., its value can only be non-positive). Thus, an embedding model should be able to generate a different representation for variable a after the if statement is applied.

Figure 1.

Toy examples [3] of (b) an integer overflow and (a) one way to avoid it.

Identifying a vulnerability depends on the understanding of code semantics and code logic based on contexts. As shown in Figure 1a,b, the difference between the two code contexts (highlighted by the yellow background) is the existence of an if statement. A model should understand the meaning of type short and the value of 32767 to be able to judge the occurrence of the integer overflow vulnerability in the given code context. Hence, a comprehensive and contextualized model is required.
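The figure itself is not reproduced here, so the following Python sketch only emulates the kind of behavior the toy example describes. It assumes a 16-bit `short` holding 32767 and an update of the form `b = b + a;`; the function names and the exact guard are illustrative assumptions, and `ctypes.c_short` is used to mimic the usual two's-complement wrap-around of a C `short`.

```python
import ctypes

SHORT_MAX = 32767  # largest value representable in a 16-bit signed short


def add_as_short(b: int, a: int) -> int:
    """Emulate `b = b + a;` when the result is stored in a C short."""
    # ctypes performs no overflow checking, so out-of-range values wrap.
    return ctypes.c_short(b + a).value


def guarded_add(b: int, a: int) -> int:
    """Emulate the patched variant: only add when a is non-positive."""
    if a <= 0:
        return add_as_short(b, a)
    return b  # the assignment is skipped, so b cannot overflow upward


overflowed = add_as_short(SHORT_MAX, 1)  # wraps to -32768
patched = guarded_add(SHORT_MAX, 1)      # stays at 32767
```

The two calls differ only in the presence of the guard, which is the contextual difference a model has to pick up in order to separate the vulnerable variant from the patched one.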

3.3. From Context-Dependent to Context-Sensitive

3.3.1. Limitations of NCMs

The NCMs, such as Word2Vec and FastText, can generate word representations based on a small context. For example, the Continuous Bag Of Words (CBOW) implementation of Word2Vec considers the conditional probability of generating a word based on its surrounding words. However, once the model is trained, the word representation vector is assigned based on a fixed lookup table regardless of the context of the word. Hence, these models are unable to handle polysemous words because they fail to generate a different representation of a word when its context alters.
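The lookup-table limitation can be seen in a few lines of Python. The table below is hand-written and hypothetical; it stands in for the vectors a trained NCM would store, and the two token sequences are illustrative.

```python
from typing import Dict, List

# Hypothetical embedding table standing in for a trained NCM such as Word2Vec.
LOOKUP: Dict[str, List[float]] = {
    "a": [0.2, -0.1],
    "b": [0.5, 0.3],
    "if": [-0.4, 0.9],
    "<unk>": [0.0, 0.0],
}


def embed_static(tokens: List[str]) -> List[List[float]]:
    """Return one vector per token using a fixed lookup, ignoring context."""
    return [LOOKUP.get(tok, LOOKUP["<unk>"]) for tok in tokens]


unguarded = "b = b + a ;".split()
guarded = "if ( a <= 0 ) b = b + a ;".split()

# The vector for `a` is identical in both contexts, guarded or not.
vec_unguarded = embed_static(unguarded)[unguarded.index("a")]
vec_guarded = embed_static(guarded)[guarded.index("a")]
same_vector = vec_unguarded == vec_guarded
```

A contextualized model would instead compute the vector for `a` from the whole sequence, so the two occurrences could receive different representations.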

3.3.2. The LSTM-Based PCM

Many PCMs are designed for context-sensitive tasks [45]. ELMo is a representative PCM built on the Bi-LSTM network. Applying LSTM/Bi-LSTM used to be common practice for various NLP tasks because an LSTM node is equipped with a gate mechanism and a memory cell, making it capable of processing long-term sequential interactions in input sequences. According to Zaremba and Sutskever [46], the processing of an input at an LSTM node at the current time step t can be formulated by the following transition equations:

$$
\begin{aligned}
g_t &= \tanh(W_g x_t + U_g h_{t-1} + b_g) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t) \\
y_t &= W_y h_t + b
\end{aligned}
$$

where $i_t$, $f_t$, and $o_t$ denote the input, forget, and output gates, respectively. The four input weights $W_g$, $W_i$, $W_f$, and $W_o$ correspond to the unit input, the input gate, the forget gate, and the output gate, respectively. There are also four recurrent weights $U_g$, $U_i$, $U_f$, and $U_o$, and several bias terms $b_g$, $b_i$, $b_f$, $b_o$, and $b$. $c_t$ is the built-in memory cell of an LSTM node, which can maintain its internal state over multiple time steps; $h_t$ is a hidden state and $g_t$ is an input node. The weight matrix $W_y$ is the weight between the output and the next hidden layer. The symbols $\sigma$ and $\tanh$ represent the Sigmoid function and the Hyperbolic Tangent function, respectively, and ⊙ signifies element-wise multiplication. $y_t$ is the output based on the input $x_t$ and the hidden state $h_t$, while the hidden state depends both on the current state (i.e., the information stored in $c_t$) and the previous state ($h_{t-1}$). During the training phase, the gates and the memory cell selectively memorize or forget information from the current and previous states, so that information obtained from the preceding sequence can be kept for access when processing the succeeding sequence [47], which allows the network to learn long-range dependencies in a sequence.
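The LSTM transition described above can be traced with a small Python sketch. To keep it readable, it assumes a scalar input, hidden state, and memory cell, so every weight is a single float; the parameter names in the dictionary are illustrative and not taken from any library.

```python
import math
from typing import Dict, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(
    x_t: float, h_prev: float, c_prev: float, p: Dict[str, float]
) -> Tuple[float, float]:
    """One LSTM time step with scalar state (a simplifying assumption)."""
    # Input node: candidate content computed from the input and previous state.
    g = math.tanh(p["W_g"] * x_t + p["U_g"] * h_prev + p["b_g"])
    # Input, forget, and output gates, each squashed into (0, 1).
    i = sigmoid(p["W_i"] * x_t + p["U_i"] * h_prev + p["b_i"])
    f = sigmoid(p["W_f"] * x_t + p["U_f"] * h_prev + p["b_f"])
    o = sigmoid(p["W_o"] * x_t + p["U_o"] * h_prev + p["b_o"])
    # Memory cell: keep part of the old cell, write part of the candidate.
    c_t = f * c_prev + i * g
    # Hidden state: gated view of the updated memory cell.
    h_t = o * math.tanh(c_t)
    return h_t, c_t


# With all parameters at zero, every gate equals 0.5 and the candidate is 0,
# so the cell simply halves its previous content.
zero_params = {
    name: 0.0
    for name in (
        "W_g", "U_g", "b_g", "W_i", "U_i", "b_i",
        "W_f", "U_f", "b_f", "W_o", "U_o", "b_o",
    )
}
h_t, c_t = lstm_step(x_t=1.0, h_prev=0.0, c_prev=2.0, p=zero_params)
```

In a real network the scalars become vectors and matrices, and the products with the gates become element-wise multiplications.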

The bidirectional form of LSTM consists of two LSTM networks that learn forward and backward states, respectively, to assemble a complete state representation. This facilitates learning word dependencies in a sequence from both directions, forming the capability of learning contextual dependencies. Given the current time step t, let $\overrightarrow{h_t}$ and $\overleftarrow{h_t}$ be the values of the hidden layers in the forward and reverse directions, respectively. The output of a node in a Bi-LSTM network can be calculated by:

$$y_t = z(\overrightarrow{h_t}, \overleftarrow{h_t})$$

where function z can be a concatenation, summation, averaging, or multiplication function. Therefore, $y_t$ can be seen as a vector representation of a hidden state holding the contextual information of $x_t$.

To better learn a longer range of contextual dependencies in sequences and to understand word semantics, the LSTM-based model ELMo was proposed [20]. It has a char-level CNN as the input layer and a two-layer stacked Bi-LSTM network. A word $t_k$ is encoded by the char-level CNN into $x_k^{LM}$, which is passed to the Bi-LSTM networks. For a Bi-LSTM layer, the output representation is a concatenation of the outputs of the forward and backward LSTM networks: $h_{k,j}^{LM} = [\overrightarrow{h}_{k,j}^{LM}; \overleftarrow{h}_{k,j}^{LM}]$, where j refers to the j-th layer. Therefore, a word that passes through an L-layer Bi-LSTM network can have $2L + 1$ different representations, which can be formulated by the following [20]:

$$R_k = \{x_k^{LM}, \overrightarrow{h}_{k,j}^{LM}, \overleftarrow{h}_{k,j}^{LM} \mid j = 1, \ldots, L\} = \{h_{k,j}^{LM} \mid j = 0, \ldots, L\}$$

where $h_{k,0}^{LM} = x_k^{LM}$ denotes the token layer.

The highlights of ELMo are not only that it takes advantage of multiple stacked Bi-LSTM networks to learn contextual representations of a word, but also that it utilizes the representations generated by different layers. These representations reveal different levels of abstraction, which can be further adjusted by trainable weights to cater for various downstream tasks. According to Peters et al. [20], the layer-related representations of ELMo for the word $t_k$ can be obtained by:

$$\mathrm{ELMo}_k^{task} = E(R_k; \Theta^{task}) = \gamma^{task} \sum_{j=0}^{L} s_j^{task} h_{k,j}^{LM}$$

where $\mathrm{ELMo}_k^{task}$ refers to the task-specific representation computed for word $t_k$, $\Theta^{task}$ denotes the trainable parameters that are task-specific, $s_j^{task}$ is a trainable weight at the j-th layer, and $\gamma^{task}$ is a task-specific scaling parameter. Hence, equipped with the contextual learning capabilities of the Bi-LSTM network, ELMo is capable of learning more profound meanings of words by combining the representations generated by different layers according to the downstream task.
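As a rough illustration of the layer combination, the Python sketch below collapses per-layer vectors for one word into a single task-specific vector. It assumes, following Peters et al. [20], that the layer weights are softmax-normalized before use; the function names and the example vectors are hypothetical.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def elmo_combine(
    layer_vectors: List[List[float]], raw_weights: List[float], gamma: float = 1.0
) -> List[float]:
    """Weighted sum of per-layer vectors for one word, scaled by gamma."""
    s = softmax(raw_weights)  # one normalized weight per layer
    dim = len(layer_vectors[0])
    return [
        gamma * sum(s_j * layer[d] for s_j, layer in zip(s, layer_vectors))
        for d in range(dim)
    ]


# Token layer plus two Bi-LSTM layers (L = 2), each a 2-d vector for one word.
layers = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
combined = elmo_combine(layers, raw_weights=[0.0, 0.0, 0.0], gamma=3.0)
```

With equal raw weights every layer contributes one third, so the downstream task sees an average of the layers scaled by `gamma`; training would move the weights toward the layers that matter most for the task.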

3.3.3. The Transformer-Based PCMs

The Transformer utilizes the self-attention mechanism for modeling sequences and has achieved SOTA performance on many Natural Language Understanding (NLU) tasks. Instead of maintaining hidden states to incorporate contextual representations of words as in an LSTM, the self-attention of the Transformer facilitates the understanding of word relevance by calculating attention scores [48]. Firstly, it creates three vectors, namely the query vector $q$, the key vector $k$, and the value vector $v$, by multiplying the embedding of an input with three weight matrices $W^Q$, $W^K$, and $W^V$. Secondly, a score for the input is computed by taking the dot product of its query vector with the key vector of each word in the sequence. These scores indicate how relevant the other words in a sentence are to the target word, which helps to generate context-sensitive word representations. Thirdly, to achieve stable gradients, the scores are divided by $\sqrt{d_k}$, where $d_k$ denotes the dimension of the key vectors. Then, the results are passed through a softmax function so that all scores sum to 1. Finally, the attention output of each input is derived by multiplying the softmax scores with the value vectors and summing the results. With $Q$, $K$, and $V$ denoting the matrices that pack the query, key, and value vectors of all inputs, the process can be formulated by the following [29]:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$
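The steps above can be traced with a plain-Python sketch of scaled dot-product attention. It assumes the query, key, and value vectors have already been produced by their projections; the list-of-lists representation and the function names are illustrative choices.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(q: List[float], K: Matrix) -> List[float]:
    """Softmax over scaled dot products of one query with every key."""
    d_k = len(K[0])
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
    return softmax(scores)


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """For each query, return the weighted sum of the value vectors."""
    out = []
    for q in Q:
        w = attention_weights(q, K)
        out.append(
            [sum(w_j * v[d] for w_j, v in zip(w, V)) for d in range(len(V[0]))]
        )
    return out


# A query orthogonal to nothing in particular: both keys score 0,
# so both values receive equal weight.
Q = [[0.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[2.0, 0.0], [0.0, 4.0]]
context = attention(Q, K, V)
```

Note that the final weighted sum is taken over the value vectors, while the key vectors are used only to compute the weights.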

To pay attention to different positions of a sequence and form a larger attended context, the Transformer uses multiple self-attentions, called “multi-headed” attention. According to Alammar [48], “multi-headed” attention enables multiple sets of query, key, and value weight matrices to project input embeddings into different representational spaces, which improves the ability to focus on multiple positions of a sequence. Lastly, the resulting matrices are concatenated to produce a single matrix, which is passed to a feed-forward network. The process can be formulated as follows:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h) W^O, \quad \mathrm{head}_i = \mathrm{Attention}(Q W_i^Q, K W_i^K, V W_i^V)$$

where $W^O$ is a trainable weight matrix.

The encoder of a Transformer mainly consists of self-attention, a feed-forward network, and residual connections. The BERT architecture builds on the encoder part of a Transformer in a bidirectional form, which facilitates learning word representations contextually. In addition, BERT uses more attention heads than the default implementation of a Transformer, which enhances the learning of rich contextual semantics of words. Furthermore, compared with ELMo, which contains a two-layer Bi-LSTM, BERT-Base is equipped with 12 bidirectional Transformer encoder layers and can therefore represent multiple levels of contextualized representations. This enables the network to understand the deeper meaning of a context, from which a word’s meaning and semantics can be learned more accurately.

To evaluate how well a BERT-like model understands a code context and captures the long-range dependencies of variables, we feed the model with the code sequences of the toy example shown in Figure 1. We visualize the code that has been attended to by the attention mechanism of the Transformer encoder. Figure 2 shows the neuron views from four attention-heads, demonstrating that semantically related variables can be correctly recognized, as represented by the blue lines connecting them.

Figure 2.

Neuron views from four attention-heads of the second-last layer of the distilled CodeBERT when the model is fed the code sequence of the toy example shown in Figure 1a. The views show the captured long-range relatedness of variables a and b with other code tokens. The variables on the left are the target variables. Variables on the right that are connected by lines from the target variable are the related variables attended to by the attention-heads, showing that the model can correctly identify related variables. For example, Figure 2a shows that the highlighted target variable a is connected to a and b by light blue lines, indicating that a is related to itself in a conditional expression and to b in the assignment statement b = b + a;. In addition, variable a is also related to the value NUM because NUM is assigned to variable b. This figure is generated using BertViz [49], a tool for visualizing the attention mechanism in Transformer-based models.

3.3.4. The Pre-Training Strategy

It is common practice to pre-train both PCMs and NCMs and then fine-tune them for downstream tasks. The pre-training strategy allows a model to learn general, task-agnostic knowledge that facilitates the learning of task-specific knowledge. Pre-training a model on one task initializes the model parameters, which may speed up learning on a new but related task. The initialized parameters of a pre-trained model contain the knowledge learned from the pre-training task, which can be transferred to the new task.

For BERT-like models, two unsupervised pre-training tasks are usually performed: (1) masked language modeling, and (2) next sentence prediction. The first task trains the model to understand word contexts, while the second trains the model to comprehend the relationship between sentences, which requires the model to understand a very large context. Therefore, BERT-like models usually require a large natural language corpus for pre-training. This is attributed to their deep and complex architecture, whose many parameters are capable of holding sufficient domain-related knowledge. In contrast, the data used to pre-train NCMs is generally task-specific and smaller in size.
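To make the first pre-training task concrete, the Python sketch below shows a simplified way of preparing masked-language-model inputs from a token sequence. The 15% default mirrors the masking rate reported for BERT [16]; the simplification of always substituting `[MASK]` (rather than occasionally keeping or randomizing the token), the function name, and the seeded random generator are assumptions made for illustration.

```python
import random
from typing import Dict, List, Tuple


def mask_tokens(
    tokens: List[str],
    mask_prob: float = 0.15,
    seed: int = 0,
    mask_token: str = "[MASK]",
) -> Tuple[List[str], Dict[int, str]]:
    """Hide a random subset of tokens and remember what was hidden."""
    rng = random.Random(seed)  # seeded so that the example is repeatable
    masked: List[str] = []
    targets: Dict[int, str] = {}
    for idx, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(mask_token)
            targets[idx] = tok  # the model is trained to recover this token
        else:
            masked.append(tok)
    return masked, targets


code_tokens = "if ( a <= 0 ) b = b + a ;".split()
masked_tokens, prediction_targets = mask_tokens(code_tokens)
```

During pre-training, the model sees `masked_tokens` and is penalized when its predictions at the positions in `prediction_targets` differ from the hidden tokens, which forces it to use the surrounding context.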

The emerging PCMs have demonstrated their success in various NLP and NLU tasks [15,23,30,50]. Encouraged by the performance of BERT-like models in NLP tasks, researchers have further improved and optimized how BERT is trained, proposing BERT variants, such as RoBERTa, which further push the SOTA in the fields of NLP. Motivated by this, researchers pre-train these PCMs on large code corpus and apply them for both NLP and code analysis tasks [51], which opens a door for applying PCMs for code processing.

3.4. Knowledge Distillation

Knowledge distillation is a promising technique for model compression and acceleration [52,53,54]. It may be a feasible solution for compressing a large PCM for cost-effective vulnerability detection. The distillation process aims to train a compact model, called the student, to match the behavior of a large model, called the teacher. On the one hand, during distillation, a complex and large model is compressed to produce a less complex and smaller model, which facilitates adoption and deployment. On the other hand, knowledge distillation allows knowledge to be transferred (or distilled) from the teacher (the large model to be compressed) to the student (the compressed model), which enables the student to learn the generalization ability of the teacher. According to Hinton et al. [53], this is achieved by minimizing a loss function L during the training of the student so that it matches the softened target probabilities of the teacher. A “temperature” variable T is applied to the softmax function to control the softness of the output probability distribution. The process of knowledge distillation can be formulated as:

$$L = \alpha L_{soft} + \beta L_{hard}$$

$$L_{soft} = -\sum_{j=1}^{N} p_j^T \log q_j^T, \quad L_{hard} = -\sum_{j=1}^{N} c_j \log q_j^1$$

where $\alpha$ and $\beta$ are hyper-parameters; $L_{soft}$ denotes the softened cross-entropy loss function involving the temperature T; $L_{hard}$ refers to the hard cross-entropy loss function when $T = 1$, that is, using the ground truth labels; $c_j$ is the ground truth value of class j and we have $c_j \in \{0, 1\}$; N refers to the total number of labels; $p_j^T$ and $q_j^T$ are the values on class j output by the softmax function of the teacher and the student at temperature T, respectively. According to Hinton et al. [53], $p_j^T$ and $q_j^T$ can be calculated as follows:

$$p_j^T = \frac{\exp(v_j / T)}{\sum_{k=1}^{N} \exp(v_k / T)}, \quad q_j^T = \frac{\exp(z_j / T)}{\sum_{k=1}^{N} \exp(z_k / T)}$$

where $v_j$ and $z_j$ denote the logits of the teacher and the student for class j, respectively.

Please note that, when $T = 1$, we obtain the standard softmax function. As T increases, the probability distribution obtained by the softmax function becomes softer. Using the scenario of vulnerability detection as an example, with softened targets, given a vulnerable function, the teacher can tell that it is vulnerable, while the student should tell that it is very likely to be vulnerable and very unlikely to be non-vulnerable.
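The effect of the temperature and the combination of the soft and hard loss terms can be sketched in plain Python. The two weighting hyper-parameters are called `alpha` and `beta` here, and their values, the temperature, and the logits are arbitrary illustrative choices rather than the settings used in our experiments.

```python
import math
from typing import List


def softmax_t(logits: List[float], T: float = 1.0) -> List[float]:
    """Softmax with temperature T; T = 1 gives the standard softmax."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: List[float],
    student_logits: List[float],
    label: int,
    T: float = 2.0,
    alpha: float = 0.5,
    beta: float = 0.5,
) -> float:
    """Weighted sum of the softened and the hard cross-entropy terms."""
    p = softmax_t(teacher_logits, T)         # softened teacher targets
    q_soft = softmax_t(student_logits, T)    # softened student outputs
    q_hard = softmax_t(student_logits, 1.0)  # standard student outputs
    soft = -sum(p_j * math.log(q_j) for p_j, q_j in zip(p, q_soft))
    hard = -math.log(q_hard[label])          # one-hot ground truth label
    return alpha * soft + beta * hard


# Class 0 = vulnerable, class 1 = non-vulnerable (an illustrative convention).
sharp = softmax_t([2.0, 0.0], T=1.0)
soft = softmax_t([2.0, 0.0], T=4.0)
matching = distillation_loss([3.0, -3.0], [3.0, -3.0], label=0)
mismatching = distillation_loss([3.0, -3.0], [-3.0, 3.0], label=0)
```

Raising the temperature moves the distribution toward uniform, and a student whose logits agree with the teacher receives a smaller loss than one that disagrees.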

3.5. Motivations

Detecting vulnerabilities requires an understanding of code semantics, code logic, and contexts, which is where PCMs can be applied. Nevertheless, the effectiveness of applying PCMs to vulnerability detection has not been sufficiently studied. There are two major issues that may prevent these models from being widely adopted in practice. Firstly, compared with NCMs, PCMs are generally large and deep, containing significantly more parameters, as shown in Table 1. Therefore, they are computationally expensive to train, fine-tune, and deploy. Secondly, a large model generally requires more data to fit. Due to the scarcity of vulnerability data, it is expensive to obtain a large number of vulnerability labels for effective training. Therefore, there is a need for a systematic and comprehensive study to evaluate the effectiveness and efficiency of applying mainstream PCMs to detecting vulnerabilities. In particular, we aim to discover how much performance gain can be obtained using PCMs compared with NCMs and discuss whether the gained performance is worth the cost in efficiency. In addition, since a smaller model offers faster training, fine-tuning, and inference, we intend to evaluate whether PCMs compressed by knowledge distillation achieve a balance between performance and efficiency.

Table 1.

The features of the selected nine models, showing their number of layers, parameters, size, and the amount of data used for pre-training.

4. Evaluation Methodology

This section describes the dataset used and the experiments designed for evaluating the NCMs, PCMs, and distilled PCMs from the perspectives of detection performance and efficiency.

4.1. Evaluation Workflow

The evaluation process consists of two stages, as shown in Figure 3. The first stage evaluates the nine selected PCMs and NCMs in terms of their effectiveness in generating useful representations for vulnerable function detection. In the second stage, based on the evaluation result, the best-performing models are encapsulated in a framework for the efficiency evaluation, aiming to identify the most efficient PCMs, which balance performance and computational cost. To achieve this, firstly, the framework is fine-tuned with artificially constructed C function samples derived from the Software Assurance Reference Dataset (SARD) project [55] provided by the National Institute of Standards and Technology (NIST). Fine-tuning on synthetic C function samples is intended to let the model capture the syntax, semantics, and structure of C code. Secondly, after fine-tuning, our proposed framework is further trained and validated using a real-world dataset. Thirdly, the trained framework can be used for detecting vulnerable functions.

Figure 3.

The workflow of the evaluation and the design of the framework, consisting of two stages. Stage 1 evaluates NCMs, PCMs, and distilled PCMs in terms of their detection effectiveness. In Stage 2, based on the evaluation of Stage 1, the best-performing models are selected for efficiency analysis. Then, the most cost-effective model is encapsulated as a framework, called DistilVD, which is further evaluated for detecting vulnerable functions and vulnerability types.

4.2. Dataset

An end-to-end vulnerability detection solution can take source code functions as input without requiring any code analysis effort. Therefore, we choose two readily available data sources that provide C source code functions to form the dataset for our performance evaluation. The number of collected and labeled functions from each data source is listed in Table 2. Because data with vulnerability type labels are scarce, we also include part of the data provided by Wang et al. [42].

Table 2.

The data sources used for evaluations.

4.2.1. The Synthetic Dataset

The synthetic vulnerability dataset is collected from the SARD project. It contains artificially constructed test cases that simulate known vulnerable code settings and patterns. Test cases from the SARD project are compilable and executable, and are intended for testing the performance of static code analysis tools. In this paper, we randomly selected 20,000 vulnerable and 20,000 non-vulnerable C functions from the SARD project for evaluation and ensured that each selected synthetic function sample has at least 30 tokens.
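The selection step can be sketched as follows. This is a minimal illustration, not the authors' code: the regex tokenizer, the function names, and the fixed random seed are all assumptions, since the paper does not state how tokens were counted.

```python
import random
import re

# Hypothetical tokenizer (an assumption): identifiers, integer literals,
# or any single non-whitespace symbol each count as one token.
TOKEN_PATTERN = re.compile(r"[A-Za-z_]\w*|\d+|\S")


def count_tokens(source: str) -> int:
    """Count the tokens of a C function under the simple tokenizer above."""
    return len(TOKEN_PATTERN.findall(source))


def select_samples(functions, n, min_tokens=30, seed=0):
    """Randomly pick up to n functions that have at least min_tokens tokens."""
    eligible = [f for f in functions if count_tokens(f) >= min_tokens]
    rng = random.Random(seed)
    return rng.sample(eligible, min(n, len(eligible)))
```

Under these assumptions, calling `select_samples` once on the vulnerable pool and once on the non-vulnerable pool with `n=20000` would yield the balanced synthetic set described above.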

4.2.2. The Real-World Dataset

We use two real-world datasets for evaluation. The first is an extension of the vulnerability dataset provided by Lin et al. [27], consisting of 12 popular open-source C projects and libraries with 1984 vulnerable and 130,035 non-vulnerable functions (as shown in Table 2). We followed the methods described in [27] for manually labeling the vulnerable functions and collecting the non-vulnerable ones. The second is provided by Wang et al. [42]. Their dataset provides vulnerability type labels and is used only when evaluating the performance of multi-class vulnerability detection.

4.3. Evaluation Metrics

Due to the data imbalance issue, the metrics that we apply for performance evaluation are the top-k precision (denoted as P@K) and top-k recall (denoted as R@K). By measuring the proportion of vulnerable functions returned in a ranked function list, we can better examine the capability of a detector to identify potentially vulnerable candidates among a large number of non-vulnerable functions. These metrics are commonly used in the field of information retrieval, for example to measure the performance of search engines, since the relevant documents usually account for a small portion of the whole document set [56] and users view the most relevant documents instead of checking every one returned. The scenario of vulnerability detection is similar. In practice, vulnerable functions are considerably fewer than non-vulnerable ones, and verifying all functions returned by a detection system is time-consuming. Instead, code inspectors may check only the functions marked as most probably vulnerable because of time and resource constraints.

Hence, in our context, P@K refers to the proportion of the top-k retrieved functions that are vulnerable. R@K refers to the proportion of all vulnerable functions that appear among the top-k retrieved functions. We treat the vulnerable class as the positive class, and the P@K and R@K metrics can be calculated using the following equations:

$$\mathrm{P@K} = \frac{\mathrm{TP@k}}{\mathrm{TP@k} + \mathrm{FP@k}}, \qquad \mathrm{R@K} = \frac{\mathrm{TP@k}}{\mathrm{TP@k} + \mathrm{FN@k}}$$

where TP@k denotes the true positives, i.e., the vulnerable functions found among the k returned functions that are ranked most likely to be vulnerable; FP@k denotes the false positives, i.e., the non-vulnerable functions among the k returned functions; and FN@k denotes the false negatives, i.e., the vulnerable functions that are not among the k returned functions. For datasets without data imbalance issues, the conventional performance metrics, including accuracy, precision, recall, and F1-score, are used.
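The two metrics can be computed from a detector's output as in the sketch below. The function name and argument layout are illustrative assumptions. The sketch assumes that each function has a predicted vulnerability score and a binary ground-truth label (1 for vulnerable).

```python
def precision_recall_at_k(scores, labels, k):
    """Return (P@K, R@K) for predicted scores and binary labels (1 = vulnerable)."""
    if not 0 < k <= len(scores):
        raise ValueError("k must be between 1 and the number of functions")
    # Rank functions from most to least likely to be vulnerable.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = sum(label for _, label in ranked[:k])  # vulnerable functions in the top k
    fp = k - tp                                 # non-vulnerable functions in the top k
    fn = sum(labels) - tp                       # vulnerable functions outside the top k
    precision = tp / (tp + fp)
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall
```

Because TP@k + FP@k always equals k, P@K is bounded by the number of vulnerable functions divided by k, so P@K necessarily falls once k exceeds the number of vulnerable functions in the test set.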

4.4. Experiment Settings

This subsection presents the detailed experiment settings for the evaluation of PCMs and NCMs, and the performance comparison of DistilVD with existing detection systems.

4.4.1. Research Questions

The experiments are conducted to answer the following Research Questions (RQs):

- RQ1: Are the PCMs more effective than the NCMs in terms of generating useful representations which facilitate the downstream function-level vulnerability detection task?

- RQ2: Are distilled PCMs, being smaller and faster, more effective than their undistilled counterparts for detecting vulnerable functions?

- RQ3: In the downstream task of function-level vulnerability detection, how efficient are distilled PCMs compared with their undistilled counterparts in terms of fine-tuning, training, and inference?

- RQ4: How effective is DistilVD compared with some existing vulnerability detection systems for identifying vulnerable functions from non-vulnerable ones?

- RQ5: How effective is DistilVD compared with some existing vulnerability detection systems for detecting different types of vulnerabilities?

Among the above RQs, the first three aim to find the most cost-effective PCMs for vulnerable function detection. The last two examine the effectiveness of the proposed framework compared with existing detection solutions.

4.4.2. Experiment Setup

The experiments and the dataset are set differently to answer the aforementioned RQs.

For answering RQ1–3, we compare three groups of mainstream models in terms of their performance for generating representations for vulnerability detection. The three groups are: (1) the NCMs which are Word2Vec, FastText, and GloVe; (2) the PCMs which are ELMo, RoBERTa, and CodeBERT; and (3) the distilled PCMs which are distilled RoBERTa, distilled CodeBERT, and TinyBERT.

We use both the synthetic samples from the SARD project and the real-world samples from open-source projects for training Word2Vec, FastText, and GloVe. The PCMs and distilled PCMs have already been pre-trained, so in our experiments we fine-tune them on our dataset. ELMo, RoBERTa, and distilled RoBERTa have been pre-trained on a large natural language corpus. Therefore, we fine-tune them using the synthetic SARD dataset with 20,000 vulnerable and 20,000 non-vulnerable samples. CodeBERT and distilled CodeBERT have been pre-trained on both natural languages and programming languages. In particular, they have been trained on six programming languages, none of which is C. Hence, we also fine-tune them using the same number of synthetic C samples. Compared with the size of the datasets used for pre-training the PCMs, the synthetic SARD dataset used for fine-tuning is relatively small. We ensure that the fine-tuning of each PCM completes within 12 h on our hardware. This is because, in practice, software development teams may not be equipped with high-end GPUs that can handle training on a large amount of data. The pre-training and fine-tuning settings are listed in Table 3.

Table 3.

The experiment setting for answering RQ1-3, listing the data sources for pre-training/fine-tuning the non-contextualized and contextualized models.

Function samples collected and labeled from real-world open-source projects are used for training, validation, and test, since real-world samples better reflect realistic code patterns, which are diverse and complex. We mix the data of all open-source projects together and partition them into training, validation, and test sets with a ratio of 3:1:1. The numbers of vulnerable and non-vulnerable functions in each set are listed in Table 4. We do not balance the number of vulnerable and non-vulnerable samples, in order to simulate real-world detection scenarios where the two classes are highly imbalanced.
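A 3:1:1 partition of the pooled samples can be sketched as follows. The helper name, the fixed seed, and the integer rounding are assumptions. The split is a plain random shuffle with no class balancing, matching the description above.

```python
import random


def split_3_1_1(samples, seed=0):
    """Shuffle the pooled samples and split them 3:1:1 without class balancing."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = n * 3 // 5  # three fifths for training
    n_val = n // 5        # one fifth for validation
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder forms the test set
    return train, val, test
```

Applied to the 132,019 real-world functions (1984 vulnerable plus 130,035 non-vulnerable), this rounding gives about 79,200 training functions. The exact counts used in the experiments are those listed in Table 4.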

Table 4.

The experiment setting for answering RQ1-3, listing the partitioned data set of 12 real-world open-source projects for training, validation, and test.

To evaluate the effectiveness of all nine PCMs and NCMs for vulnerability detection, we append dense layers to each model to form a classifier network, so that the generated representations of code tokens pass through the dense layers for classification. In these groups of experiments, we do not address the data imbalance issue and keep the ratio of vulnerable to non-vulnerable functions unchanged.

To answer RQ4, we select the most cost-effective PCM based on the previous stage of evaluation and encapsulate it as a framework called DistilVD. Then, we compare DistilVD with five existing vulnerability detection systems on the selected real-world dataset for identifying vulnerable functions among non-vulnerable ones. The systems used for comparison include (1) unsupervised static analysis tools and (2) supervised deep learning-based detection methods. For the former, we choose two open-source tools, Flawfinder (version 2.0.7) [57] and Cppcheck (https://github.com/danmar/cppcheck, accessed on 27 November 2022). Both have been adopted as baselines in many related studies, such as [10,37,58]. For the latter, we choose three existing studies that focus on function-level detection granularity, because we have full access to the complete datasets adopted by these studies and their implementation code is publicly available. These studies are Cross-VD [8], Multi-VD [12], and DeepBalance [34].

The performance of deep learning-based vulnerability detection systems may be restricted by the shortage of vulnerability data, since for many mid-size open-source projects the number of disclosed vulnerabilities may not exceed 300. To simulate the practical case where labeled vulnerability data are limited, we use relatively small training and validation sets. For each project selected for testing, we partition the dataset of that project into training, validation, and test sets with a ratio of 3:2:5. To strengthen the learning, we add the remaining 11 open-source projects to the training set. This allows all deep learning-based detection systems to learn project-independent features from the 11 projects, which can help prevent the under-fitting caused by insufficient vulnerability data. In practice, practitioners can also add historical vulnerability data from other software projects to facilitate the learning of project-agnostic vulnerable patterns.
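The per-project partition described above can be sketched as follows. The function name, the dictionary layout, and the seed are hypothetical. The sketch only illustrates the idea that the target project is split 3:2:5 while every other project contributes to the training set alone.

```python
import random


def split_for_target_project(projects, target, seed=0):
    """Split one target project 3:2:5 and add all other projects to training.

    `projects` maps a project name to its list of labeled function samples.
    """
    target_samples = list(projects[target])
    random.Random(seed).shuffle(target_samples)
    n = len(target_samples)
    n_train = n * 3 // 10
    n_val = n * 2 // 10
    train = target_samples[:n_train]
    val = target_samples[n_train:n_train + n_val]
    test = target_samples[n_train + n_val:]
    # Samples from the remaining projects are used for training only, so the
    # validation and test sets contain functions of the target project alone.
    for name, samples in projects.items():
        if name != target:
            train.extend(samples)
    return train, val, test
```

Keeping the validation and test sets restricted to the target project means that the reported scores reflect detection on that project, while the auxiliary projects only enlarge the pool of training examples.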

Among the 12 open-source projects, we choose four for performance comparison: FFmpeg, LibTIFF, LibPNG, and OpenSSL. Based on the number of functions collected, FFmpeg and OpenSSL are mid-size projects, and LibTIFF and LibPNG are small ones. The number of vulnerable and non-vulnerable functions in each project used for comparison is listed in Table 5.

Table 5.

The experiment setting for answering RQ4, listing the partitioned data set of individual software projects for evaluation, including FFmpeg, OpenSSL, LibTIFF, and LibPNG. When one project is used for evaluation, the remaining 11 projects are used for training.

Cost-sensitive learning is applied to address the data imbalance between vulnerable and non-vulnerable functions in real-world projects. This is achieved by assigning different weights to the vulnerable and non-vulnerable classes, allowing the loss function of a classifier to balance the two classes by adjusting the misclassification cost. In this paper, we use the following equation to obtain the weight of each class:

Here, the number of classes is 2, referring to the vulnerable and non-vulnerable classes, and the weight of a class depends on the number of samples in that class. Therefore, the misclassification cost of the vulnerable samples is higher than that of the non-vulnerable ones, enabling classifiers to overcome the data imbalance issue during the training process.
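One weighting scheme consistent with this description is the inverse-frequency ("balanced") heuristic, in which the weight of class c is N / (C · n_c), with N the total number of samples, C the number of classes, and n_c the number of samples in class c. The sketch below assumes that form. It is an illustration only and may differ from the exact equation used in the experiments. The function name is hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Inverse-frequency class weights: n_samples / (n_classes * class_count).

    Assumed form (the common "balanced" heuristic), not necessarily the
    authors' exact equation.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}
```

With this heuristic, the rarer a class is, the larger its weight, so a misclassified vulnerable function contributes more to the loss than a misclassified non-vulnerable one.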

To answer RQ5, we compare DistilVD with three existing vulnerability detection systems for not only identifying vulnerable functions but also determining their types. Due to the shortage of vulnerability samples with type labels, we combine the datasets provided by Lin et al. [27], Wang et al. [42], and the SARD dataset to form a 20,000-sample dataset with 10,146 vulnerable and 9854 non-vulnerable functions. The vulnerable functions are further divided into ten categories corresponding to ten vulnerability types, as shown in Figure 5. Each type has roughly 2000 vulnerable and non-vulnerable functions in total (the non-vulnerable functions are either patched vulnerable functions or artificial code samples from the SARD project). We partition the dataset into training, validation, and test sets with a ratio of 3:1:1 and ensure that the proportion of each vulnerability type is the same across the three sets.
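Keeping the proportion of each vulnerability type equal across the three sets amounts to a stratified split. A minimal sketch follows, assuming each sample carries a type label that a hypothetical `key` function can extract. The names and the seed are illustrative.

```python
import random
from collections import defaultdict


def stratified_split_3_1_1(samples, key, seed=0):
    """Split 3:1:1 separately within each group so type proportions are kept."""
    groups = defaultdict(list)
    for sample in samples:
        groups[key(sample)].append(sample)
    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(groups):  # fixed group order keeps the split reproducible
        members = groups[label]
        rng.shuffle(members)
        n = len(members)
        n_train, n_val = n * 3 // 5, n // 5
        train += members[:n_train]
        val += members[n_train:n_train + n_val]
        test += members[n_train + n_val:]
    return train, val, test
```

Splitting within each type, rather than over the pooled data, prevents a rare type from being left out of the validation or test set by chance.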

4.5. Experiment Environment

The implementation of CodeBERT and distilled CodeBERT is based on PyTorch [59] (version 1.9.0). ELMo is provided by TensorFlow Hub (https://tfhub.dev/google/elmo/2, accessed on 27 November 2022) and is implemented in Keras [60] (version 2.2.4) with the TensorFlow [61] backend (version 1.14.0). The Word2Vec and FastText implementations are provided by the gensim package (version 3.8.3) [62] with all default settings. The Python implementation of GloVe is available on GitHub (https://github.com/maciejkula/glove-python, accessed on 27 November 2022). The PCMs and distilled PCMs used in this paper, together with their implementations, are available from the Hugging Face AI community: RoBERTa (https://huggingface.co/bert-base-uncased, accessed on 27 November 2022), CodeBERT (https://huggingface.co/microsoft/codebert-base, accessed on 27 November 2022), Distilled RoBERTa (https://huggingface.co/distilroberta-base, accessed on 27 November 2022), Distilled CodeBERT (https://huggingface.co/huggingface/CodeBERTa-small-v1, accessed on 27 November 2022), and TinyBERT (https://huggingface.co/huawei-noah/TinyBERT_General_4L_312D, accessed on 27 November 2022). Experiments were carried out on a Windows Server 2019 system equipped with 128 GB of RAM and an NVIDIA RTX 3090 GPU.

5. The Vulnerability Detection Framework and Evaluation Results

This section presents the evaluation and analysis of results. Based on the outcomes of the experiments, an end-to-end function-level vulnerability detection framework called DistilVD is proposed and further evaluated by comparing with five baseline systems.

5.1. Experiment Results for Answering RQ1 and 2

To find out whether the PCMs are more effective than the NCMs in detecting function-level vulnerabilities, we carry out nine groups of experiments based on the settings described in Section 4.4.2. Table 6 lists the number of vulnerable functions detected in the test set and the P@K and R@K calculated from the k retrieved functions, where k ranges from 50 to 400. We cap k at 400 because there are 394 vulnerable functions in the test set under the settings of this experiment.

Table 6.

The comparison results of using nine different embedding solutions for function-level vulnerability detection. The nine models are three NCMs (Word2Vec, FastText, and GloVe), three PCMs (ELMo, RoBERTa, and CodeBERT), and three distilled PCMs (distilled RoBERTa, distilled CodeBERT, and TinyBERT).

In summary, all PCMs, including the distilled ones, achieved significantly better performance than the NCMs on our dataset, as Table 6 shows. Among three NCMs, Word2Vec outperformed FastText and GloVe. However, among the returned 400 functions, the network with Word2Vec could only correctly find 150 vulnerable functions, accounting for 38% of the total (top-k recall 38%). ELMo, which achieved the worst performance among PCMs, outperformed Word2Vec. When retrieving 400 functions, the network with ELMo could identify 153 vulnerable functions.

Compared with the PCMs, their distilled counterparts slightly underperformed. When retrieving 400 functions, RoBERTa found 235 vulnerable functions while distilled RoBERTa found 227, a recall that is 2% lower. When retrieving 400 functions, the network with distilled CodeBERT found 235 vulnerable functions while the network with CodeBERT found 237, accounting for 60% of the total. When fewer than 200 functions were returned, distilled CodeBERT performed slightly better than CodeBERT. When more than 200 functions were returned, the network with CodeBERT achieved better performance.
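The percentages quoted above follow directly from the reported counts. The short sketch below recomputes R@400 and P@400 from the numbers of vulnerable functions found and the 394 vulnerable functions in the test set. The dictionary and helper names are illustrative, and only counts stated in the text are used.

```python
TOTAL_VULNERABLE = 394  # vulnerable functions in the test set
K = 400                 # number of functions retrieved

# Vulnerable functions found among the top 400, as reported in the text.
found_at_400 = {
    "Word2Vec": 150,
    "ELMo": 153,
    "distilled RoBERTa": 227,
    "RoBERTa": 235,
    "distilled CodeBERT": 235,
    "CodeBERT": 237,
}


def recall_and_precision(found, total_vulnerable=TOTAL_VULNERABLE, k=K):
    """Return (R@K, P@K) given the number of vulnerable functions found."""
    return found / total_vulnerable, found / k


for model, found in found_at_400.items():
    recall, precision = recall_and_precision(found)
    print(f"{model:>18}: R@400 = {recall:.1%}, P@400 = {precision:.1%}")
```

For example, 150 of 394 is about 38% and 237 of 394 is about 60%, while the gap of eight functions between RoBERTa and distilled RoBERTa corresponds to about two percentage points of recall.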

5.2. Efficiency Analysis for Answering RQ3

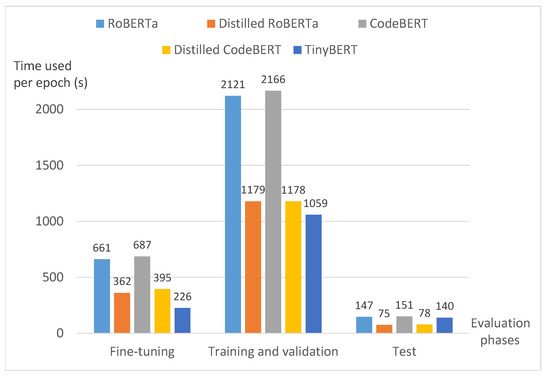

Since the performance difference between the PCMs and their distilled counterparts was within 5%, we examined their efficiency in the fine-tuning, training/validation, and test phases according to the experiment setting described in Section 4.4.2. Because ELMo shows an apparent performance gap relative to the other PCMs and the distilled PCMs, we exclude it from the efficiency analysis. For the remaining five models, we monitor the time consumed in the different evaluation phases and record the average time used per epoch, as shown in Figure 4.

Figure 4.

The comparison of the efficiency among five PCMs and distilled PCMs, measured by the time used in each epoch during the fine-tuning, training/validation, and test phases (the test phase is treated as one epoch). All models are evaluated with the training batch size being equal to 8 and validation batch size being 16.

As can be seen, CodeBERT consumed more time than the other models in the three evaluation phases. When fine-tuning on 40,000 synthetic functions, it took CodeBERT 487 s to finish one epoch. Similarly, RoBERTa needed 661 s in the fine-tuning phase. For their distilled counterparts, however, the time needed for fine-tuning was reduced to almost half, being 395 and 362 s, respectively. During the training and validation phase, both CodeBERT and RoBERTa required more than 2100 s to finish one epoch. Distilled CodeBERT and distilled RoBERTa saved almost 1000 s per epoch, making them about 84% and 80% faster, respectively. During the test phase, the time used by the distilled PCMs was also reduced to nearly half. Among the distilled PCMs, TinyBERT was the most efficient during the fine-tuning, training, and validation phases.
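Time saved and "percent faster" are different quantities, and it helps to keep them apart when reading these figures. The sketch below shows both calculations using the fine-tuning times reported above for RoBERTa (661 s) and distilled RoBERTa (362 s). The function names are illustrative.

```python
def time_reduction(t_full, t_distilled):
    """Fraction of per-epoch time saved by the distilled model."""
    return (t_full - t_distilled) / t_full


def relative_speedup(t_full, t_distilled):
    """How much faster the distilled model is, relative to its own epoch time."""
    return t_full / t_distilled - 1.0


# Fine-tuning time per epoch in seconds, as reported in the text.
roberta, distilled_roberta = 661.0, 362.0
print(f"time saved: {time_reduction(roberta, distilled_roberta):.1%}")
print(f"faster by:  {relative_speedup(roberta, distilled_roberta):.1%}")
```

A model whose epoch time is roughly halved therefore saves a little under 50% of the time while being roughly 80% to 100% faster, which is how both kinds of figure can describe the same measurement.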

Notably, the computational resources invested in adopting any PCM or distilled PCM should also include the pre-training and distillation phases. If the time and resources used for pre-training are not considered, distilled CodeBERT is the most cost-effective of all the models, since it outperformed the other distilled PCMs while achieving performance similar to that of CodeBERT.

5.3. Experiments for Answering RQ4

Since the distilled CodeBERT is more cost-effective than other models in our dataset, we encapsulate distilled CodeBERT as an end-to-end detection framework named DistilVD for detecting function-level vulnerabilities. Particularly, we aim to compare the detection performance of DistilVD with some selected baseline systems in simulated real-world scenarios where there are limited vulnerable samples for training.

To examine the effectiveness of DistilVD for identifying vulnerable functions, five existing function-level detection systems were used as baselines for comparison. The experiment setting is described in Section 4.4.2. Table 7 lists the results of DistilVD and the five baseline systems on four open-source projects: FFmpeg, OpenSSL, LibTIFF, and LibPNG. The number of functions retrieved ranges from 10 to 200, since there are fewer than 200 vulnerable functions in each test set, as Table 5 shows.

Table 7.

Comparative results of DistilVD with different function-level vulnerability detection systems on four open-source projects: FFmpeg, OpenSSL, LibTIFF, and LibPNG.

In general, DistilVD was the best-performing system on FFmpeg when retrieving more than 10 functions. As Table 7 shows, when returning 200 potentially vulnerable functions from the test set of 2900 functions, DistilVD correctly found 79 vulnerable functions, accounting for 65% of the total. On OpenSSL, DistilVD outperformed the other detection systems when k ranged from 30 to 150. On LibTIFF, when returning more than 50 functions, DistilVD also outperformed the other systems, correctly identifying 53 vulnerable functions, that is, 79% of the vulnerable functions in the test set. On LibPNG, DistilVD achieved performance similar to that of DeepBalance in the majority of cases and outperformed the other detection systems. The non-deep learning-based detection systems, Flawfinder and Cppcheck, suffered from a relatively high false-positive rate on our dataset.

Apart from Flawfinder, the other five systems performed well on LibPNG. Table 7 shows that DistilVD, Multi-VD, and DeepBalance identified all the vulnerable functions when 150 functions were retrieved. When returning 200 functions, all the deep learning-based detection systems achieved 100% recall. Notably, Cppcheck achieved a recall of 91% when returning 87 functions, making its performance comparable with that of the deep learning-based detection systems. In contrast, Flawfinder identified only 45% of the vulnerable functions.

The results suggest that, even with only tens or hundreds of labeled vulnerable samples for training, our framework built on distilled CodeBERT can achieve state-of-the-art detection performance. It is reasonable to expect that the performance can be further improved as more vulnerable data are added for pre-training and fine-tuning.

5.4. Experiments for Answering RQ5

The performance of DistilVD for detecting vulnerability types is evaluated by comparing with three deep learning-based systems which are Cross-VD, Multi-VD, and DeepBalance. Figure 5 presents the results of detecting overall vulnerabilities and each vulnerability type. Results are measured by accuracy, precision, recall, and F1-score, and are shown in four sub-figures.

Figure 5.

Performance comparison of the proposed framework (shown as DistilVD), Cross-VD, Multi-VD, and DeepBalance for detecting overall vulnerabilities and individual vulnerability types. (a) the accuracy achieved by four systems; (b) the precision achieved by four systems; (c) the recall achieved by four systems; (d) the F1-score achieved by four systems.

In general, all four detection systems perform better here than on the four open-source projects. This is because the majority of the samples in the 20,000-sample dataset used for evaluating vulnerability type detection derive from the SARD dataset. These samples are artificially constructed to simulate real-world vulnerable patterns. Naturally, such synthetically generated patterns are not as complex and diverse as the vulnerable patterns found in real-world software projects. Therefore, synthetic patterns are easier for deep learning algorithms to capture than real-world ones.

As shown in Figure 5, DistilVD outperformed the other detection systems in detecting almost all selected vulnerability types, particularly CWE-74, CWE-404, and CWE-704. It is the only method that achieved an overall accuracy of over 90%. For recall, DeepBalance slightly outperformed DistilVD when identifying five vulnerability types: CWE-191, CWE-369, CWE-573, CWE-665, and CWE-670 (see Figure 5c). On the other metrics, especially accuracy and F1-score, DeepBalance ranked second behind DistilVD, and obvious performance gaps can be observed.

The results reveal that DistilVD can be an ideal candidate for multi-class vulnerability detection tasks. The 6-layer distilled CodeBERT is deeper than the networks implemented by the baseline systems. Therefore, it can be seen as a bigger container, capable of holding more knowledge and having more potential of capturing the subtle differences among the vulnerable patterns of different types when more data are available. Nevertheless, how various vulnerable patterns of different vulnerability types affect the performance of DistilVD remains to be further studied.

In summary, compared with NCMs, PCMs are more effective at generating representations of vulnerable code, leading to improved detection performance on our dataset. Because identifying vulnerabilities requires the analysis of code context, we attribute the performance improvement to the ability of PCMs to capture long-range dependencies in code and to better understand contextual semantics. In addition, PCMs are usually deeper and larger than NCMs in terms of model structure, which gives them the potential to capture more diverse vulnerability patterns and richer meanings of code tokens. This may also contribute to more effective vulnerable function detection.

Applying PCMs for vulnerability detection can be computationally expensive. Thus, knowledge distillation is utilized for compressing the PCMs. The evaluation shows that the distilled PCMs are smaller in size and faster to train, validate, and test but achieve similar detection performance. DistilVD is based on distilled CodeBERT which achieves better performance compared with baseline systems in terms of detecting vulnerable functions and identifying vulnerability types.

5.5. Limitations and Future Work

The proposed framework, DistilVD, has several limitations that are worthy of further study. Firstly, DistilVD shares the limitations of static code analysis, which means that it may have a relatively high false-positive rate compared with dynamic methods. Furthermore, as DistilVD focuses on detection at the function level, vulnerabilities that span multiple functions or files cannot be identified by DistilVD. A feasible future direction is to detect patterns of unsanitized sinks and untrusted variables so that potentially vulnerable data flows can be identified.

Secondly, in contrast to static tools such as Flawfinder and Cppcheck, our proposed method is a supervised learning approach. Hence, the time and computational resources invested in model tuning and training need to be considered in practice. In addition, the performance of DistilVD depends heavily on the quality and quantity of labeled data. However, this issue is likely to ease, since more and more datasets are becoming publicly available online and computational resources are becoming cheaper and more accessible. PCMs for specific code analysis tasks, such as GraphCodeBERT [30] and CuBERT [15], are also becoming popular and can be added to the evaluation to examine their effectiveness in generating code representations for vulnerability detection.

A possible future direction is to construct a PCM solely for the analysis of C and C++ code. In this paper, the distilled CodeBERT used in our framework is not pre-trained on C/C++, and we fine-tune it on 40,000 synthetic C functions. It would be interesting to examine how much performance gain could be obtained for bug or vulnerability detection in C/C++ software projects if distilled CodeBERT were pre-trained on a large amount of C/C++ code.

Thirdly, the proposed framework processes source code sequences directly, obtaining only "flattened" representations of code while neglecting structural information. Considering that several program representations, such as Abstract Syntax Trees (ASTs) and Control Flow Graphs (CFGs), take a graph or tree form, the structural representations of code extracted from graphs may reveal useful information indicative of potentially vulnerable patterns. Therefore, another interesting research direction is to incorporate a graph neural network (GNN) for extracting structural representations from code graphs.

Furthermore, this paper can be extended with a more comprehensive and systematic performance comparison against more vulnerability detection approaches. Currently, we compare DistilVD only with function-level detection methods that extract representations from code sequences. Several recent studies focus on other detection granularities, e.g., Vuldeepecker [10], which is based on "code gadgets", and Devign [3] and FUNDED [42], which are based on graph representations derived from code. Comparing with a wider range of studies can help us better understand the limitations of our method and lead to further improvement.

6. Conclusions

We perform a comprehensive comparison of nine non-contextualized and contextualized models to systematically study their feasibility and effectiveness for software vulnerability detection. The empirical study revealed that PCMs were more effective than NCMs in detecting function-level vulnerabilities. Among the PCMs, the distilled PCMs slightly underperformed in detection performance, but they were approximately 80% faster to train than their undistilled counterparts. Based on the empirical results, we introduce DistilVD, a static vulnerability detection framework based on distilled CodeBERT, which was the most cost-effective model in our evaluation. Comparative experiments demonstrated the effectiveness of distilled CodeBERT in generating useful representations for downstream vulnerability detection. Further evaluations on four real-world open-source projects and on multi-class vulnerability detection confirmed that DistilVD is a competitive solution among many vulnerability detectors.

It is hoped that our benchmark can be a reference for researchers who work in the field of data-driven code analysis and bug/vulnerability detection. The proposed framework, DistilVD, which is an end-to-end detector, can be beneficial to developers who have a small number of vulnerable functions at hand and want to quickly test whether their source code contains unidentified vulnerabilities. For researchers, DistilVD can act as a baseline system that can be evaluated/compared with new detection methods and approaches. Additionally, part of our data used in this work is publicly available, and we will publish the whole dataset, hoping that it will contribute to this fast-developing field.

Author Contributions

Conceptualization, G.L. and H.J.; methodology, G.L. and H.J.; software, G.L., H.J. and D.W.; validation, G.L., H.J. and D.W.; formal analysis, G.L. and H.J.; investigation, G.L., H.J. and D.W.; resources, G.L. and H.J.; data curation, G.L. and H.J.; writing—original draft preparation, G.L. and H.J.; writing—review and editing, G.L. and D.W.; visualization, G.L. and D.W.; supervision, H.J. and D.W.; project administration, H.J.; funding acquisition, G.L. and H.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Fujian Natural Science Foundation Project, under Grant No. 2021J011131 and No. 2021J011128; and in part by the National Education Science Planning Key Topics of the Ministry of Education, “Research on the core quality of applied undergraduate teachers in the intelligent age”, under Grant No. DIA220374; and in part by the Research on Education and Teaching Reform Project in Sanming University, under Grant No. J2010305 and No. J2010307.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Part of the dataset used in this paper is publicly available at: https://cybercodeintelligence.github.io/CyberCI/, accessed on 27 November 2022.

Acknowledgments

The authors are thankful for the support of the University of Fujian Provincial Key Laboratory of Industrial Big Data Analysis and Application and the Fujian Key Lab of Agriculture IOT Application.

Conflicts of Interest

The authors declare no conflict of interest.
