1. Introduction

As coronavirus disease 2019 (COVID-19) spread rapidly around the world, the World Health Organization declared a pandemic in March 2020 [1]. COVID-19 is a respiratory syndrome caused by infection with the SARS-CoV-2 virus. It spreads through droplets from coughing or sneezing, or by touching objects contaminated with the virus and then touching the eyes, nose, or mouth. COVID-19 is a high-risk infectious disease that can cause severe respiratory illness, such as pneumonia, or death. It is particularly dangerous for people over 65 with underlying medical conditions, such as high blood pressure [2], diabetes [3], chronic cardiovascular disease [4], and obstructive pulmonary disease [5]. To protect against COVID-19, limiting face-to-face contact with others is the best way to reduce the spread of the virus.

However, social distancing has abruptly cut the elderly off from the outside environment and from social relationships [6]. As the COVID-19 situation continues, older adults experience more limitations in going out and in daily activities, and depression increases as a result [7]. Because the elderly are classified as a high-risk group for infection, they are more vulnerable to the virus [8]. Their daily activities are also more restricted because they are more fearful of COVID-19 than other groups, such as adolescents and adults. Thus, while physical containment is an effective way to prevent infectious diseases, social distancing increases psychological problems, for which older people need emotional support. Anxiety, stress, and life activities were all identified as contributing factors for geriatric depression during the COVID-19 epidemic [9]. However, there is a lack of research on how the spread of COVID-19 affects the elderly with regard to subjective health, stress levels, daily routines, and social distancing. Therefore, we propose a deep neural network (DNN) model that predicts depression in the elderly during the pandemic based on social factors related to stress, health status, daily changes, and physical distancing.

Artificial intelligence (AI) algorithms are frequently viewed as mysterious black boxes that make illogical choices. Explainability (also called “interpretability”) is the idea that a machine-learning model and its output can be explained in a way that “makes sense” to a human being at an acceptable level [10]. Some classes of algorithms, such as more conventional machine-learning algorithms, tend to be easier to understand, although they may be less effective. Others, such as deep-learning systems, are more effective but remain challenging to understand. How to better understand AI systems is still a topic of active research. There are numerous approaches for making the output of a black-box classification model more interpretable [11,12,13,14]. One strategy is to provide a global explanation by cataloging the features that are most often significant when generating a prediction [15]. In healthcare, however, an instance-specific explanation is preferable. Consequently, patients can receive more tailored treatment as a result of increased autonomy in decision making.

To obtain an explanation for one particular instance, any black-box classification model can be combined with a framework known as local interpretable model-agnostic explanations (LIME) [16]. This method provides a local justification for a classification by identifying the fewest features that contribute most to the likelihood of a single-class result for a single observation. Although LIME has previously been used with healthcare classification models, little is known about how well healthcare professionals can understand and accept LIME explanations.

In this paper, we propose a model that combines a LIME-based explainability model with a deep-learning model. The integrated model can explain a DNN classifier’s predictions understandably and accurately. Additionally, our explainability model may help psychologists and psychiatrists identify depression in seniors following the COVID-19 epidemic. The remainder of this paper is structured as follows: Section 2 describes the data sources, the analyzed variables, the model evaluation, and the model development procedure. Section 3 compares the results of the developed prediction model with those of ML classifier models, such as logistic regression, gradient-boosting classifier, extreme gradient-boosting classifier, K-neighbors classifier, and support vector machine with a radial basis function kernel. Lastly, Section 4 presents directions for future studies.

2. Materials and Methods

2.1. Materials

This study used raw data from the Korea Centers for Disease Control and Prevention’s 2020 Community Health Survey. Since 2008, the Community Health Survey has been conducted at public health centers across the country to produce regional health statistics that can be used to establish and evaluate local health and medical plans. The survey’s target population was adults aged 19 and older who resided in the Republic of Korea as of July 2020 and lived in a selected sample household at the time of the survey. A detailed description of the sampling in the Community Health Survey is given in Kang et al. [17]. The survey was conducted by trained researchers who visited the sample households directly from 16 August to 31 October 2020. The researchers went to 18 different locations to conduct face-to-face computer-assisted personal interviews consisting of 142 questions. In the 2020 Community Health Survey, 229,269 people took part, 97,230 of whom were over the age of 60. After preprocessing, 36,258 samples with 22 variables were used for the final analysis.

2.2. Data Preprocessing

The dataset needed to be preprocessed before the model could be fitted to it. In this study, preprocessing consisted of handling missing values, rebalancing the classes, and encoding labels. To handle missing values, rows with missing values and columns with 50% null values were removed from the dataset, which reduced it to 42,788 samples and 22 variables. As the target variable, we chose the feature called “dep,” which meant “experienced depression” (yes or no).

Only 2501 of the 42,788 samples in the dataset had experienced depression, whereas 40,287 samples (94% of the target feature) had not. As a result, we had to rebalance the dataset using both oversampling and undersampling. First, the minority class was randomly oversampled until its size reached 30% of the majority class. Then, the majority class was randomly undersampled until it was twice the size of the oversampled minority class. After rebalancing, the number of “experienced depression” samples was 12,086, and the number of “no experience with depression” samples was 24,172. Finally, the dataset had a total of 36,258 samples with 22 encoded variables, as shown in Table 1.
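The two-step rebalancing can be checked with a minimal sketch that uses only the Python standard library. The function name `rebalance`, the `over_ratio` parameter, and the use of row indices as placeholder records are assumptions made for illustration; the study's actual implementation is not specified in the text.

```python
import random

def rebalance(minority, majority, over_ratio=0.3, seed=0):
    """Random oversampling followed by random undersampling.

    over_ratio is the target minority size as a fraction of the majority size.
    After oversampling, the majority is cut to twice the minority size.
    """
    rng = random.Random(seed)
    target_minority = int(over_ratio * len(majority))
    # Oversample: keep every original minority row, draw the rest with replacement.
    n_extra = max(0, target_minority - len(minority))
    minority_out = list(minority) + rng.choices(minority, k=n_extra)
    # Undersample: draw majority rows without replacement.
    majority_out = rng.sample(list(majority), k=2 * len(minority_out))
    return minority_out, majority_out

# Row indices stand in for survey records (hypothetical placeholder data).
minority = list(range(2501))           # "experienced depression"
majority = list(range(2501, 42788))    # "no experience with depression"
dep, no_dep = rebalance(minority, majority)
print(len(dep), len(no_dep), len(dep) + len(no_dep))  # 12086 24172 36258
```

The class sizes reproduce the counts reported above: 30% of 40,287 is 12,086 after truncation, and twice that is 24,172.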

2.3. Development of Deep Neural Network Model

In this study, we created the DNN model as a Keras sequential model wrapped with the SciKeras API. Keras is a high-level neural network API for the Python programming language [18]. SciKeras wraps Keras with a Scikit-Learn interface so that Keras deep neural networks can be used in much the same way as Scikit-Learn estimators in machine learning (ML). We could therefore treat the wrapped instances as Scikit-Learn ML model instances and invoke methods such as fit(), predict(), and score(). In the following steps, we relied mainly on GridSearchCV() for tuning hyperparameters and on predict_proba() for the LIME explanation.

DNN design is inspired by the neurons in the human brain. A DNN is an artificial neural network with multiple layers: when an artificial neural network, also known as a feedforward neural network, has more than one hidden layer, it is referred to as being “deep” [19]. The term can also refer to a hierarchical structure in which the depth denotes the number of layers. Deep neural networks can reuse the features computed in one hidden layer in subsequent hidden layers. By taking advantage of the compositional structure of a function, a DNN can approximate many natural functions with fewer weights and units [20]. A shallow neural network must piece together the function it approximates, much like a lookup table, whereas a deep neural network can benefit from the function’s hierarchical structure. By increasing the precision with which a function can be approximated on a fixed budget of parameters, a deeper architecture can improve generalization to new examples after learning [20].

The architecture of a DNN model and the activation functions to be used must be decided before the model can be built. Hyperparameter optimization is therefore a significant part of deep learning: neural networks are complex and difficult to configure, they require a large number of parameters to be set, and training each individual model can take a long time.

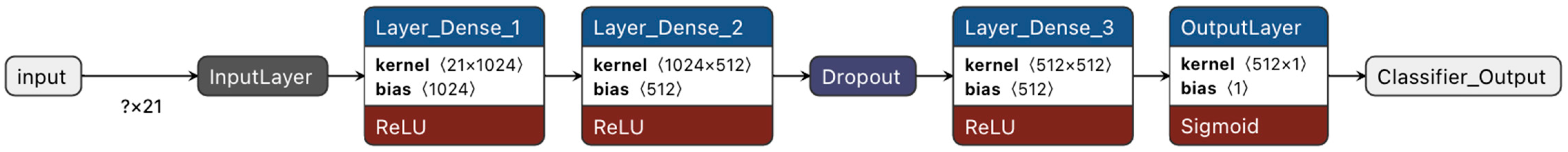

In this work, we tuned the hyperparameters of the DNN model using grid search, a method for optimizing model hyperparameters that is available in Scikit-Learn via the GridSearchCV class. Because the SciKeras wrapper exposes a Scikit-Learn-compatible interface, we could also apply GridSearchCV to the Keras sequential model. GridSearchCV built and assessed one model for each combination of parameters, using 5-fold cross-validation. After tuning, the best model used three hidden layers, a ReLU activation function for the hidden layers, a sigmoid activation function for the output layer, a dropout rate of 0.5, an Adam optimizer with a learning_rate of 0.001, and a “binary crossentropy” loss function, as shown in Figure 1.
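The grid search procedure with 5-fold cross-validation can be sketched in plain Python as follows. This is not the GridSearchCV implementation or the study's code: the helper names (`k_fold_indices`, `grid_search_cv`, `ridge_fit_and_score`), the toy data, and the use of a one-dimensional ridge regression in place of the Keras model are all assumptions, chosen so that the example runs without any deep-learning dependency.

```python
import itertools
import statistics

def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, val_idx) pairs for k contiguous folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size

def grid_search_cv(fit_and_score, param_grid, n_samples, k=5):
    """Score every parameter combination with k-fold CV and return the best one."""
    names = sorted(param_grid)
    results = []
    for values in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        scores = [fit_and_score(params, tr, va)
                  for tr, va in k_fold_indices(n_samples, k)]
        results.append((statistics.mean(scores), params))
    best_score, best_params = max(results, key=lambda r: r[0])
    return best_params, best_score, len(results)

# Toy data and model: hypothetical stand-ins for the survey data and the DNN.
X = [float(i) for i in range(20)]
Y = [2.0 * x + 1.0 for x in X]

def ridge_fit_and_score(params, train_idx, val_idx):
    """Fit 1-D ridge regression on the training fold; return negative validation MSE."""
    xs = [X[i] for i in train_idx]
    ys = [Y[i] for i in train_idx]
    x_mean = statistics.mean(xs) if params["intercept"] else 0.0
    y_mean = statistics.mean(ys) if params["intercept"] else 0.0
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / (sxx + params["l2"])
    bias = y_mean - slope * x_mean
    mse = statistics.mean([(Y[i] - (slope * X[i] + bias)) ** 2 for i in val_idx])
    return -mse

grid = {"l2": [0.0, 1.0, 10.0], "intercept": [True, False]}
best_params, best_score, n_candidates = grid_search_cv(ridge_fit_and_score, grid, len(X))
print(best_params, n_candidates)
```

With six parameter combinations and five folds, the search fits 30 models in total, which illustrates why the cost of grid search grows quickly as the grid is enlarged.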

As already indicated, a rectified linear unit (ReLU) activation function was employed for the hidden layers. A neuron with the ReLU activation function accepts any real value as input but becomes active only when the input is larger than 0. The sigmoid function, whose output ranges from 0 to 1, is an excellent choice for the output layer in binary classification. To prepare the data for model training, the dataset was split into a training set and a test set with an 80:20 ratio.
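The roles of the two activation functions can be illustrated with a small forward pass written in plain Python. The weights below are arbitrary illustrative values rather than trained parameters, the function names are assumptions, and dropout is omitted because it is only active during training.

```python
import math

def relu(z):
    """Pass positive inputs through unchanged; output 0 otherwise."""
    return max(0.0, z)

def sigmoid(z):
    """Squash any real input into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, hidden_layers, output_layer):
    """Forward pass through ReLU hidden layers and a single sigmoid output unit."""
    a = x
    for weights, biases in hidden_layers:
        a = [relu(sum(w * v for w, v in zip(row, a)) + b)
             for row, b in zip(weights, biases)]
    w_out, b_out = output_layer
    return sigmoid(sum(w * v for w, v in zip(w_out, a)) + b_out)

# Three hidden layers with hypothetical identity weights and zero biases.
identity = ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
hidden = [identity, identity, identity]
output = ([2.0, 2.0], -2.0)

p = forward([1.0, -1.0], hidden, output)
print(p)  # 0.5
```

The negative input is zeroed by the first ReLU layer, and the sigmoid output can be read as the predicted probability of the positive class.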

2.4. Model Evaluation

Evaluating the effectiveness of the proposed DNN model against competing methods for classification tasks is a crucial step after implementation. We used the loss function and the accuracy score to assess the model’s effectiveness. In addition to accuracy, the most widely used metrics for binary classification include precision, recall, and F1-score, all of which provide a statistical measurement of a classifier model’s efficacy. The calculations of the accuracy, precision, recall, and F1-scores are shown in Equations (1)–(4), respectively.
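In terms of confusion-matrix counts, the four metrics have the standard definitions below. The symbols $TP$, $TN$, $FP$, and $FN$ (true positives, true negatives, false positives, and false negatives, with depression taken as the positive class) are notation assumed here for illustration.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$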

A loss function, sometimes called a cost function, measures the degree of dissimilarity between a prediction and the actual value, which allows a model’s performance to be observed in more detail. Accuracy reflects the model’s capacity to distinguish between depression and non-depression instances and was calculated as the proportion of true-positive and true-negative results among all analyzed cases. Precision was determined by dividing the number of correctly identified positive samples by the total number of samples classified as positive; it gauges how reliable a model’s positive classifications are. Recall, on the other hand, was determined by dividing the number of correctly identified positive samples by the total number of actual positive samples; it gauges a model’s capacity to identify positive samples, so the more positive samples identified, the larger the recall. The F1-score is the harmonic mean of precision and recall and weights the two equally, which makes it useful when both are important. In addition to these measures, the model’s improvement percentage was computed by comparing the accuracy of the DNN model with that of the SVM model.
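The four metrics can be computed directly from predicted and true labels, as in the following sketch. The function name `classification_metrics` and the small label vectors are hypothetical and serve only to make the arithmetic concrete; label 1 denotes the positive (depression) class.

```python
def classification_metrics(y_true, y_pred):
    """Return accuracy, precision, recall, and F1-score for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    # Guard against empty denominators when no positives are predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels: 2 true positives, 1 false negative, 1 false positive, 4 true negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this example, accuracy is 6/8 = 0.75, while precision, recall, and F1-score are all 2/3, which shows how accuracy can look better than the positive-class metrics when negatives dominate.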

2.5. Developing Model Explanations Using LIME

Explaining the results of a machine-learning (ML) system means establishing a connection between the inputs to the system and the results it produces in a way that humans can understand. This topic has become quite important in recent years [21]. Modern machine-learning systems (such as deep-learning-based prediction models) have highly parametric designs that make it challenging to understand the reasoning behind a model’s conclusions. In this study, we used local interpretable model-agnostic explanations (LIME) to explain the output of the model and to indicate the relationship between depression and the other variables.

Using the LIME technique, any black-box machine-learning model can be approximated with a local, interpretable model to account for each individual prediction. The concept originated in a 2016 study in which the authors perturbed the original datapoint, fed the perturbed datapoints into the black-box model, and observed the corresponding outputs. The algorithm then weighted the new datapoints according to their proximity to the original point. Finally, a surrogate linear regression model was fitted to the perturbed dataset using these sample weights. Each original datapoint could then be explained using the trained explanation model. More precisely, let $g \in G$ be an explanation model drawn from a class $G$ of interpretable models, and let $\Omega(g)$ be a measure of its complexity. In classification, $f(x)$ represents the probability (or a binary indicator) that $x$ belongs to a certain class. To define the locality around $x$, we use $\pi_x(z)$ as a proximity measure between an instance $z$ and $x$. Let $\mathcal{L}(f, g, \pi_x)$ be a measure of how inaccurately $g$ approximates $f$ in the locality defined by $\pi_x$. To guarantee both local fidelity and interpretability, $\mathcal{L}(f, g, \pi_x)$ must be minimized while $\Omega(g)$ is kept low enough for the explanation to be interpretable by people. The LIME explanation is therefore obtained as

$$\xi(x) = \underset{g \in G}{\arg\min}\; \mathcal{L}(f, g, \pi_x) + \Omega(g).$$

This formulation can be used with different explanation families $G$, fidelity functions $\mathcal{L}$, and complexity measures $\Omega$ [22].
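The perturb, weight, and fit steps described above can be sketched in plain Python. This is an illustration of the idea, not the `lime` package and not the study's code: `black_box` is a hypothetical stand-in for the DNN's predict_proba(), the perturbations are Gaussian, the proximity measure is an exponential kernel with an assumed `kernel_width`, and the complexity term Ω(g) is ignored because all features are kept.

```python
import math
import random

def black_box(z):
    """Hypothetical stand-in for predict_proba: P(class = 1 | z)."""
    return 1.0 / (1.0 + math.exp(-(2.0 * z[0] - 1.0 * z[1])))

def solve(a, b):
    """Solve the linear system a @ beta = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    beta = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * beta[c] for c in range(r + 1, n))
        beta[r] = (m[r][n] - tail) / m[r][r]
    return beta

def lime_explain(f, x, n_samples=500, noise=0.5, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate to f around the instance x."""
    rng = random.Random(seed)
    d = len(x)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, noise) for xi in x]           # perturb the instance
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        rows.append([1.0] + z)                                  # intercept plus features
        targets.append(f(z))                                    # query the black box
        weights.append(math.exp(-dist2 / kernel_width ** 2))    # proximity pi_x(z)
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights))
             for j in range(d + 1)] for i in range(d + 1)]
    xtwy = [sum(w * r[i] * t for r, t, w in zip(rows, targets, weights))
            for i in range(d + 1)]
    beta = solve(xtwx, xtwy)
    return beta[0], beta[1:]

intercept, coefs = lime_explain(black_box, [0.0, 0.0])
print(coefs)
```

The signs and relative magnitudes of the surrogate coefficients indicate which features push the local prediction toward or away from the positive class; here the first feature has a positive contribution and the second a smaller negative one, matching the structure of the toy black box.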

4. Discussion

4.1. Risk of Depression

Depression manifests itself noticeably in times of disaster or calamity [23]. Because infectious diseases, such as COVID-19, have higher morbidity and mortality rates than natural catastrophes or acts of terrorism, they have greater negative effects on the overall populace. Researchers all over the world are gravely concerned about the rise in mental health problems in both vulnerable communities and the general population. Wang et al. (2020) [24] evaluated psychological impact, stress, anxiety, and depression in a study of the general population’s mental health during the COVID-19 outbreak in China; in the initial survey, 8.1% of respondents reported moderate-to-severe stress. In a different study from China, Liu et al. (2020) [25] examined 253 people from one of the areas most affected by the COVID-19 pandemic and found that 7% of them had post-traumatic stress symptoms one month after the outbreak. Özdin et al. (2020) [26], who examined the factors that contributed to depression in the Turkish population during the COVID-19 pandemic, reported that 45.1% and 23.6% of participants scored above the cut-off points for anxiety and depression, respectively. In line with this study’s concerns, Hyland et al. (2020) [27] found that residents aged 65 and older in the general Irish population experienced the highest levels of anxiety specifically related to the COVID-19 pandemic.

Previous studies have shown that COVID-19 has significantly affected the mental health of people in different countries. Because pandemics of new infectious diseases bring intensive national-level management policies, such as isolation, quarantine, social distancing, and cohort isolation, the levels of social and economic anxiety in local communities may be greater than those caused by natural disasters. In particular, the elderly are more likely than younger individuals to experience major mental health problems [23], which affect both their physical health and their quality of life. Thus, it is essential to preserve individuals’ mental health and to develop psychological interventions that can improve the mental health of vulnerable groups during the COVID-19 pandemic. However, little extensive epidemiological research is available on the anxiety and mental health of elderly community residents during the COVID-19 epidemic. Longitudinal studies focusing on the high-risk group for COVID-19-related depression reported in this study are necessary to determine the characteristics of depression.

4.2. Proposed Evaluation Framework

The accuracy of the DNN model was 89.92%, which exceeded the accuracies of the LR, GBM, XGB, KNN, and SVM models by margins ranging from 4% to 13%. This result supports the idea that a DNN is a good classifier for large amounts of data. However, the dataset used for this research initially had highly imbalanced class labels and a sizable number of missing values. Although random oversampling and random undersampling were used to mitigate the imbalance, these dataset limitations could still lead to bias. The fact that 66.67% of the samples belonged to the non-depression class likely explains why our best model performed better on the test set for the larger non-depression group than for the smaller depression group.

This research offered a methodology for objectively assessing LIME with healthcare-related black-box machine-learning models. The interdependence of LIME’s performance with that of the machine-learning model must be considered when assessing LIME or other model-agnostic interpretability methodologies. This is significant because the end user may regard LIME and the machine-learning model as one system, which means that, if the model performs poorly, LIME may be rated unfairly. The most obvious answer to this problem is to improve the model’s performance in order to reduce its detrimental impact on the LIME evaluation. We employed the GridSearchCV approach in this study to tune the DNN model’s hyperparameters. To improve the performance of the model further, we plan to test other hyperparameter-tuning techniques in the future, such as Bayesian optimization, Hyperband, and Hyperopt.

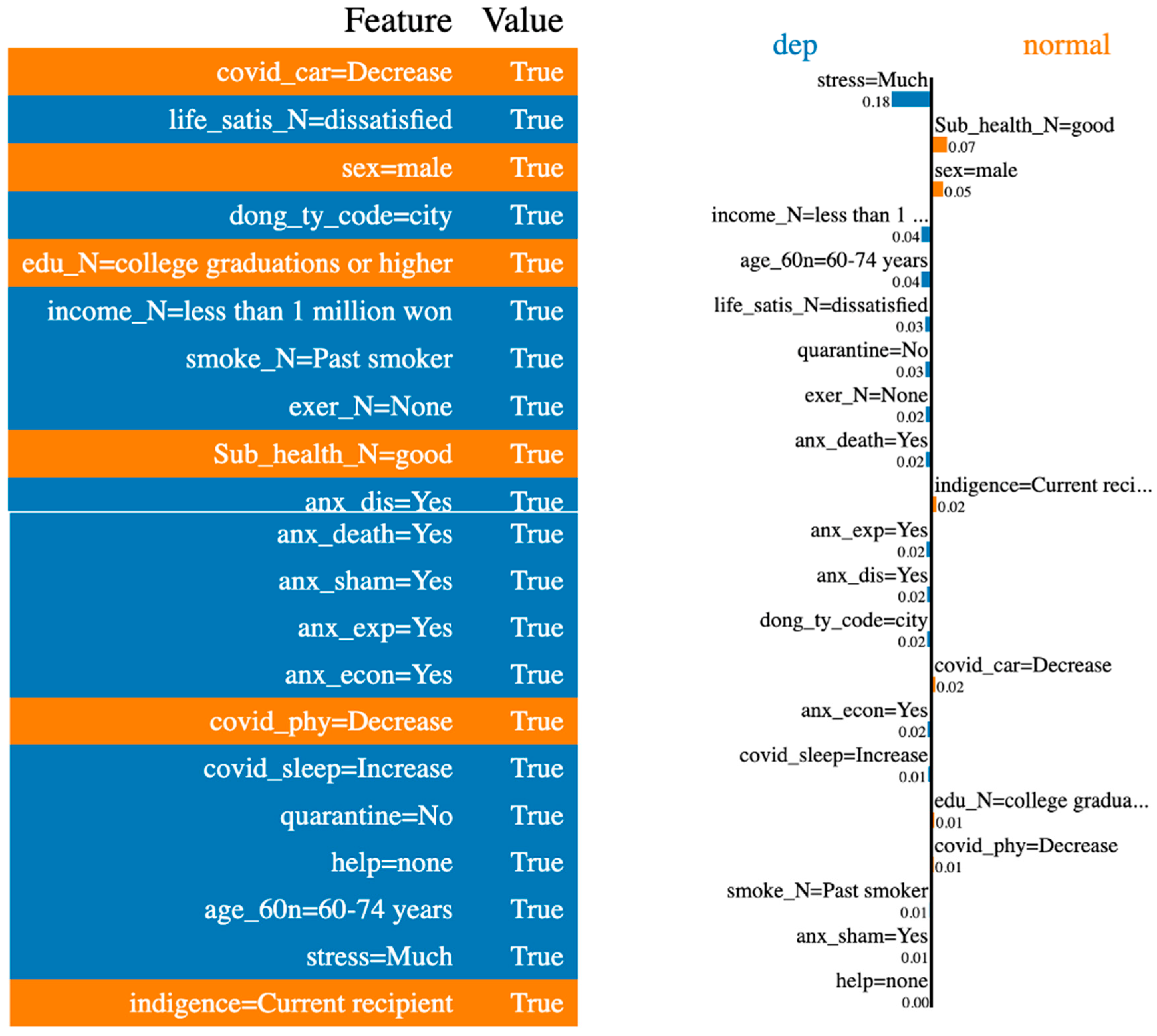

According to our LIME analysis, stress, subjective health, sex, changes in sleep-time activity after COVID-19, and age (in the order listed) made the greatest contributions to the prediction. In real life, these factors are quite likely to contribute to depression [28,29,30,31]. As a result, our DNN model could be used as a tool to help psychologists or psychiatrists treat patients, as well as to predict a patient’s status using large-scale surveys.

4.3. Limitations

LIME has many benefits. It is flexible enough to be used with various machine-learning models, simple to deploy, quick to compute, and good at giving concise explanations that appeal to busy physicians. However, it has several limitations, which are the focus of much of the current LIME research. First, LIME’s explanations are not always stable or consistent, because different samples may be drawn or different boundaries may be set for which local datapoints are included in a local model. Furthermore, for complicated datasets, LIME’s explanation from a simple, local linear model may not be consistent with the black-box model’s global logic. Additionally, it is unclear how to choose the kernel width or the number of features for a local model, which forces a compromise between the complexity and the interpretability of the local model.

Moreover, this study used raw data from the Korea Centers for Disease Control and Prevention’s 2020 Community Health Survey. It is difficult to perform diagnostic tests for depression in large-scale epidemiological studies. For this reason, our study, which analyzed secondary data, used depression experience as an outcome variable. In future studies, we intend to include a medical diagnosis of depression as a variable in the outcome.

In the current study, our DNN model’s hyperparameters were optimized to achieve the greatest performance. Ensemble learning, however, can combine several individual models to obtain a generalized performance [32]. As a result, we intend to improve our model in the future to increase its reliability for mental healthcare by developing an explainable deep-ensemble-learning model.