Abstract

Reporting the empirical results of swarm and evolutionary computation algorithms is a challenging task with many possible difficulties. These difficulties stem from the stochastic nature of such algorithms, as well as their inability to guarantee an optimal solution in polynomial time. This research deals with measuring the performance of stochastic optimization algorithms, as well as the confidence intervals of the empirically obtained statistics. Traditionally, the arithmetic mean is used for measuring average performance, but we propose quantiles for measuring average, peak and bad-case performance, and give their interpretations in a context relevant to measuring the performance of metaheuristics. In order to investigate the differences between the arithmetic mean and quantiles, and to confirm possible benefits, we conducted experiments with 7 stochastic algorithms and 20 unconstrained continuous variable optimization problems. The experiments showed that the median was a better measure of average performance than the arithmetic mean, based on the observed solution quality. Out of 20 problem instances, the arithmetic mean and the median disagreed in 6 instances, of which 5 were resolved in favor of the median, while 1 remained unresolved as a near tie. The arithmetic mean was completely inadequate for measuring average performance based on the observed number of function evaluations, while the 0.5 quantile (median) was suitable for that task. The quantiles also proved adequate for assessing peak performance and bad-case performance. In this paper, we also propose a bootstrap method for calculating the confidence intervals of the probabilities of the empirically obtained quantiles.
Considering the many advantages of using quantiles, including the ability to calculate probabilities of success in the case of multiple executions of the algorithm and the practically useful method of calculating confidence intervals, we recommend quantiles as the standard measure of peak, average and bad-case performance of stochastic optimization algorithms.

Keywords:

algorithmic performance; experimental evaluation; metaheuristics; quantile; confidence interval; stochastic algorithms; evolutionary computation; swarm intelligence; experimental methodology

MSC: 68T20

1. Introduction

Computers can solve many diverse problems quickly, accurately and efficiently thanks to good exact algorithms. However, for some problems, no such exact and efficient methods are known, despite decades of effort by the research community. For some problems, such as NP-hard problems [1], many of which are NPO problems [2], it is still an open theoretical question whether such algorithms can exist at all. For NP-hard and NPO problems, the existing exact algorithms are not efficient, i.e., solving large problem instances can take an unreasonable amount of time, e.g., hundreds of years, even on a modern supercomputer. Because of this, swarm and evolutionary computation methods offer a pragmatic approach: approximate algorithms that often give good results in a reasonable time.

There is also an important theoretical result, the no free lunch theorem [3], which applies to stochastic optimization algorithms such as swarm and evolutionary computation methods, and which states [4]:

That any two algorithms are equivalent when their performance is averaged across all possible problems.

Consequently, a stochastic optimization algorithm may achieve better results than another algorithm on one set of problems, but worse results on a different set. Since no single algorithm can be expected to suit all such problems, many different stochastic optimization methods exist, and all of them need to be evaluated experimentally.

In the area of swarm intelligence and evolutionary computation, the vast majority of scientific articles contain some sort of experimental research. Nevertheless, performing experiments and presenting the results correctly is not a trivial task. The main source of hardness is the stochastic nature of these algorithms, and their inability to guarantee an optimal solution in a reasonably short time. Although the stochastic nature of swarm and evolutionary computation algorithms creates a challenge for experimental work, the randomness should not be seen as a weakness of these algorithms, but their main strength. By rerunning the stochastic algorithms it is possible to obtain better solutions than would be possible if these algorithms were derandomized, e.g., by fixing an initial seed for the random number generator. Therefore, it is important to note that appropriate research methodology should embrace this randomness and not try to avoid it.

The shortcomings of common experimental practice are becoming more evident and recognized by the scientific community; thus, improving the experimental research methodology has become a hot topic. Many newly published works point out the pitfalls in current practice, or propose certain procedures or methodology [5,6,7,8,9,10,11,12,13,14].

When it comes to measures or indicators of algorithmic performance, the current practice is all about average performance. As Eiben and Jelasity [15] pointed out:

In EC (EC is the abbreviation for Evolutionary Computation), it is typical to suggest that algorithm A is better than algorithm B if its average performance is better. In practical applications, however, one is often interested in the best solution found in X runs or within Y days (peak performance), and the average performance is not that relevant…

Their proposal was to use the best obtained solution for assessing peak performance. It is worth noting that reporting the best obtained solutions and using them to compare algorithms was common long before Eiben and Jelasity recommended it as a measure of peak performance. Birattari and Dorigo [16] correctly observed that the best achieved result cannot be reproduced by another researcher and thus should not be used as a measure of algorithmic performance. However, they did acknowledge that

…it is perfectly legitimate to run (algorithm) A for N times and to use the best result found; in this sense, the authors of [15,17] are right when they maintain that a proper research methodology should take this widely adopted practice into account.

Thus, the problem of proper research methodology for peak performance and multiple runs remained an open question.

In [18], we proposed quantiles as a measure of algorithmic performance that easily resolves the problems of peak performance and multiple executions of swarm and evolutionary optimization algorithms. That paper argued theoretically that, besides this suitability, quantiles have other very important advantages over the arithmetic mean. However, that paper did not present empirical examples of using quantiles, nor did it elaborate procedures for quantifying the statistical errors caused by a limited number of algorithm repetitions in experiments.

In this paper, we compared arithmetic mean and quantiles on empirical examples, which confirmed some of our theoretical predictions. Moreover, we have found a practical way of dealing with statistical errors when using quantiles to assess algorithmic performance.

Although research methodology is a rather active area of swarm and evolutionary computation, other publications deal mostly with other aspects of empirical research. Derrac et al. (2021) [5] proposed the use of nonparametric procedures for pairwise and multiple comparisons of evolutionary and swarm intelligence algorithms. Omran et al. (2022) proposed the use of permutation tests for metaheuristic algorithms [14]. Osaba et al. (2021) covered various phases of research methodology in their tutorial [7], while Mernik et al. (2015) warned against mistakes that can make the comparison of metaheuristics unfair [8]. Črepinšek, Lie and Mernik presented guidelines that help researchers enable replications and comparisons of computational experiments when solving practical problems [9]. Lie et al. (2019) discovered and analyzed two possible paradoxes, namely “cycle ranking” and “survival of the nonfittest”, which can occur when multiple algorithms are compared [10], while Yan, Liu and Li (2022) proposed a procedure that prevents these paradoxes [11]. For many other related works, we refer the reader to the exhaustive review of performance assessment methodology published by Halim, Ismail and Das [6] in 2021, which cites more than 250 works on experimental methodology.

Our contributions to experimental methodology are: a suitable measure of peak and bad-case performance; an interpretation of the probability of achieving a solution after one or multiple executions of the algorithm, or of succeeding in finding the required solution within a specified number of function evaluations; experimental findings about discrepancies between the arithmetic mean and the median; and a suitable way of calculating confidence intervals of the probabilities associated with quantiles.

The remainder of this paper is structured as follows. In Section 2, we explain quantiles and their advantages for assessing algorithmic performance. In Section 3, we propose a method for dealing with statistical errors related to limited sample size. Section 4 contains a description of the experimental settings, and provides the obtained results and their analysis. The final conclusions are given in Section 5.

2. Quantiles or Percentiles in Measuring Algorithmic Performance

The arithmetic mean is a very basic statistical measure, and many schoolchildren know how to calculate the arithmetic mean of a sample. Quantiles in general are not nearly as widely used, although they are common in medicine, science and technology, especially certain specific quantiles.

Quantiles are associated with a proportion or probability $p$, and can be denoted by the symbol $Q_p$ or referred to as the $p$ quantile. By definition, for a random variable $X$ from the population and a probability $p$, it holds that

$$P(X \le Q_p) \ge p \quad \text{and} \quad P(X \ge Q_p) \ge 1 - p. \qquad (1)$$

For example, for $p = 0.3$, there is at least a 30% probability that a randomly chosen value $X$ from the population will be less than or equal to $Q_{0.3}$, and at least a 70% probability that $X$ will be greater than or equal to $Q_{0.3}$.
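To make the definition concrete for a finite sample, the following Python sketch (our illustration, not code from the paper) checks whether a candidate value q satisfies both defining inequalities when probabilities are replaced by relative frequencies in a hypothetical sample of ten runs. It also shows that, for a finite sample, the p quantile need not be unique: for p = 0.3, every value between the third and fourth smallest observations qualifies.

```python
from fractions import Fraction


def is_p_quantile(sample, q, p):
    """Return True if q satisfies P(X <= q) >= p and P(X >= q) >= 1 - p,
    with probabilities replaced by relative frequencies in `sample`.
    Fractions keep the boundary comparisons exact."""
    n = len(sample)
    frac_le = Fraction(sum(1 for x in sample if x <= q), n)
    frac_ge = Fraction(sum(1 for x in sample if x >= q), n)
    return frac_le >= p and frac_ge >= 1 - p


# Hypothetical solution qualities from ten runs (minimization).
sample = [1.2, 0.4, 3.3, 2.8, 0.9, 1.7, 5.0, 2.1, 0.6, 1.1]
p = Fraction(3, 10)

print(is_p_quantile(sample, 0.9, p))  # True: third smallest value
print(is_p_quantile(sample, 1.1, p))  # True: fourth smallest value also qualifies
print(is_p_quantile(sample, 1.2, p))  # False: only 6 of 10 values are >= 1.2
```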

Some quantiles have special names, such as the median, quartiles and percentiles. The median is the 0.5 quantile, i.e., $Q_{0.5}$. The first, second and third quartiles are the 0.25, 0.5 and 0.75 quantiles, respectively, i.e., $Q1 = Q_{0.25}$, $Q2 = Q_{0.5}$ and $Q3 = Q_{0.75}$. Similarly, the 10th, 50th and 90th percentiles are the 0.10, 0.50 and 0.90 quantiles, respectively, i.e., $Q_{0.10}$, $Q_{0.50}$ and $Q_{0.90}$.

Quantiles are commonly used when there is an interest in performance or the relative position of some data in comparison to others. Johnson and Kuby wrote in [19] that

Measures of position are used to describe the position a specific data value possesses in relation to the rest of the data when in ranked order. Quartiles and percentiles are two of the most popular measures of position.

One example of well-known usage of quartiles is for scientific journal rankings, where Q1 journals might generally be more respected than Q2, Q3 or Q4 journals. Physicians often use percentiles when they assess children’s height and weight according to their age, by comparing them against, e.g., World Health Organization (WHO) growth reference data [20]. Percentiles are especially common in medicine [21,22,23] and environmental science [24,25,26]. They are also used to analyze application performance and network resources’ monitoring [27,28,29,30], and in many other areas.

It is interesting to note the difference between the median (0.5 quantile) and the arithmetic mean. For example, the mean salary is more interesting for a government or a company owner, because multiplying the mean salary by the number of workers gives the total amount of money paid. In contrast, for a person who is considering working in a certain country, company or profession, the median (or some other percentile) salary is much more informative. This is especially important when the median and the mean differ substantially, e.g., when the distribution is asymmetric, because the arithmetic mean can then be rather misleading. Consider a small company with eight employees and an owner, where the owner pays himself 1990 and the employees 300, 310, 320, 320, 330, 330, 340 and 350 (in a particular currency). Advertising the mean salary, 510, as the average salary would be highly misleading, and the median, 330, would be much more appropriate. (A similar example is provided in the book Elementary Statistics [19]; the values here have been adjusted so that the mean-to-median ratio is roughly equal to that of US wages in 2020 [31].)
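The salary figures above can be checked with Python's standard statistics module. This short sketch only confirms the arithmetic of the example, using the nine values given in the text.

```python
from statistics import mean, median

# Salaries from the example: the owner first, then the eight employees.
salaries = [1990, 300, 310, 320, 320, 330, 330, 340, 350]

print(mean(salaries))    # 510
print(median(salaries))  # 330
```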

In the performance assessment of evolutionary computation and swarm intelligence optimization algorithms, there are significant advantages of using percentiles or other quantiles [18]:

- Percentiles can be used for peak performance, average performance and bad-case performance. For minimization problems, low quantiles, such as the first quartile $Q1 = Q_{0.25}$ and quantiles with smaller p, are suitable for peak performance. For average performance, the median ($Q_{0.5}$) is suitable instead of the arithmetic mean, and for bad-case performance, high quantiles, such as the third quartile $Q3 = Q_{0.75}$ and quantiles with larger p, are suitable.

- Percentiles have a useful interpretation that relates solution quality to the probability of achieving it. When computational resources such as time or the number of generated solutions are constrained and the solution quality is the observed value, a percentile gives a solution quality together with the probability of reaching it; e.g., there is at least a 50% probability of achieving a solution of quality $Q_{0.5}$ or better. Conversely, when the required solution quality is specified and time (or the number of function evaluations or some other computational resource) is the observed value, percentiles give a time together with the probability that a solution of the specified quality will be obtained within it; e.g., there is at least a 50% probability that a solution of the specified quality will be obtained within at most $Q_{0.5}$ time.

- Percentiles can take into account the common and useful practice of running an algorithm multiple times on the same problem instance, which exploits the stochastic nature of the algorithms. Table 1 contains the probabilities for given numbers of repetitions (n) and quantiles ($Q_p$). When the algorithm is run only once, there is at least a 0.75 probability of obtaining a solution of quality $Q_{0.75}$ or better. When the same algorithm is run 10 times, there is at least a 0.9437 probability of obtaining a solution of quality $Q_{0.25}$ or better, and at least a 0.99999905 probability of obtaining a solution of quality $Q_{0.75}$ or better. The probability of 0.99999905 is calculated with the equation in [18] and is rounded to 1.0000 in Table 1.

Table 1. Probabilities for multiple runs rounded to four digits.

- Percentiles can be used even when the arithmetic mean is impossible to calculate. This applies only when the required solution quality is specified and the needed time (number of generated solutions, number of iterations or some other computational resource) is the observed value, because the resources that interrupted, unsuccessful runs would have needed are unknown.

- Evolutionary computation and swarm intelligence algorithms can produce highly asymmetric distributions of results, in which case the 50th percentile (median) can be a more adequate measure of average performance than the arithmetic mean.
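The multiple-run probabilities quoted above follow from a simple bound: if a single run reaches quality $Q_p$ or better with probability at least $p$, then at least one of $n$ independent runs does so with probability at least $1 - (1 - p)^n$. We assume this is the equation from [18] referred to in the text, since it reproduces the three values quoted there. The following Python sketch computes such probabilities for an illustrative grid of $p$ and $n$, which is not necessarily the grid used in Table 1.

```python
def prob_at_least_one_success(p, n):
    """Lower bound on the probability that at least one of n independent runs
    reaches quality Q_p or better (minimization): each run misses with
    probability at most 1 - p, so all n runs miss with probability at most
    (1 - p) ** n."""
    return 1.0 - (1.0 - p) ** n


# Illustrative grid of quantile probabilities p and numbers of runs n.
for p in (0.25, 0.5, 0.75):
    row = [prob_at_least_one_success(p, n) for n in (1, 2, 5, 10)]
    print(f"p = {p:.2f}:", " ".join(f"{v:.4f}" for v in row))
```

For example, `prob_at_least_one_success(0.25, 10)` is about 0.9437, and `prob_at_least_one_success(0.75, 10)` is about 0.99999905, which rounds to 1.0000 at four digits.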

The median, quartiles, percentiles and other quantiles are easy to calculate manually, and readily available functions, MEDIAN(), QUARTILE() and PERCENTILE(), exist in spreadsheet software such as Microsoft Excel and LibreOffice Calc. Note that in Microsoft Excel and LibreOffice Calc, PERCENTILE() takes a probability that can represent a non-integer percentage, and can thus calculate any quantile, not only percentiles.
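For readers working outside a spreadsheet, the following Python sketch computes a quantile by linear interpolation between order statistics at the 0-based position $h = (n - 1)p$. To our understanding, this is the rule used by PERCENTILE() (PERCENTILE.INC() in newer Excel versions) and by NumPy's default quantile method, but the function `percentile_inc` is our own illustration. With $n = 101$ runs, $h = 100p$ is an integer for any $p$ that is a multiple of 0.01, so (up to floating-point rounding) the result is an actually observed value and no interpolation is needed.

```python
import math


def percentile_inc(data, p):
    """Quantile by linear interpolation between order statistics at the
    0-based position h = (n - 1) * p, for 0 <= p <= 1."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    xs = sorted(data)
    h = (len(xs) - 1) * p
    lo = math.floor(h)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (h - lo) * (xs[hi] - xs[lo])


# 101 hypothetical solution qualities 0.0, 0.1, ..., 10.0 in scrambled order
# (7 and 101 are coprime, so (7 * i) % 101 visits every integer 0..100 once).
runs = [((7 * i) % 101) / 10 for i in range(101)]

print(percentile_inc(runs, 0.10))         # 1.0, the 11th smallest value
print(percentile_inc(runs, 0.50))         # 5.0, the median
print(percentile_inc([1, 2, 3, 4], 0.5))  # 2.5, interpolated
```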

3. Statistical Errors

If one could repeat an experiment an infinite number of times, she or he could know the true distribution and calculate the true arithmetic mean, true median and other true quantiles. In practice, it is only possible to repeat an experiment a limited number of times, get some random sample and calculate the sample mean or chosen quantiles from that sample. The calculated mean and quantiles generally differ from their true values, and usually with a larger sample, one can expect to increase accuracy, i.e., to get calculated values that are closer to the true parameters.

To make the discussion about statistical errors more explicit, consider an algorithm and a problem instance with true arithmetic mean $\mu$ and true $p$ quantile $Q_p$, and let the experimentally obtained sample yield the sample mean $\bar{x}$ and the sample quantile $\hat{Q}_p$.

In this case, the error of the arithmetic mean is $\bar{x} - \mu$. For the quantile, two approaches are possible. One way to think about the empirical estimate of the quantile is to treat the probability $p$ as exact and attribute the error to the estimated value, $\hat{Q}_p - Q_p$. The other way is to treat the value $\hat{Q}_p$ as exact and attribute the error to the statement about the probability: the true probability $p' = P(X \le \hat{Q}_p)$ might differ somewhat from $p$, so the error made in the probability is $p' - p$.
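Because the true values are unknown in practice, both kinds of error can only be illustrated with a distribution whose parameters are known. The Python sketch below assumes, purely for illustration, an exponential distribution with rate 1 (true mean 1, true $p$ quantile $-\ln(1 - p)$, cumulative distribution function $F(x) = 1 - e^{-x}$). It draws 101 hypothetical runs and computes the error of the sample mean, the error of the sample median in value ($p$ treated as exact), and the error in probability $F(\hat{Q}_{0.5}) - 0.5$ (value treated as exact).

```python
import math
import random


def true_cdf(x):
    """CDF of the assumed exponential distribution with rate 1."""
    return 1.0 - math.exp(-x) if x > 0 else 0.0


def true_quantile(p):
    """True p quantile of the same assumed distribution."""
    return -math.log(1.0 - p)


rng = random.Random(2024)                      # fixed seed for repeatability
sample = sorted(rng.expovariate(1.0) for _ in range(101))

p = 0.5
q_hat = sample[round(p * (len(sample) - 1))]   # sample median (51st of 101)

mean_error = sum(sample) / len(sample) - 1.0   # sample mean minus true mean
value_error = q_hat - true_quantile(p)         # p fixed, error in the value
prob_error = true_cdf(q_hat) - p               # value fixed, error in p

print(f"error of the mean:       {mean_error:+.4f}")
print(f"error of Q_0.5 in value: {value_error:+.4f}")
print(f"error of Q_0.5 in p:     {prob_error:+.4f}")
```

Because $F$ is increasing, the value error and the probability error always have the same sign; how large the probability error is depends on how steep $F$ is near the quantile.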

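By using bootstrapping, it is possible to calculate confidence intervals based on the empirical distribution of the data, without relying on some presumed theoretical distribution, e.g., the normal distribution. From the experimentally obtained sample, it is easy to calculate the arithmetic mean, if it exists, or a desired quantile of the sample. The accuracy of this procedure can then be estimated with a bootstrapping technique, which provides a standard error, a bias and a confidence interval. The confidence interval for a statistic value $\hat{\theta}$ can be calculated with the help of the estimated bias $\widehat{\text{bias}}$ and standard error $\widehat{\text{se}}$, both obtained by bootstrap resampling. For example, the normal approximation of the 68% confidence interval is given by expression (2), and that of the 95% confidence interval by expression (3):

$$\left[\hat{\theta} - \widehat{\text{bias}} - \widehat{\text{se}},\ \hat{\theta} - \widehat{\text{bias}} + \widehat{\text{se}}\right] \qquad (2)$$

$$\left[\hat{\theta} - \widehat{\text{bias}} - 1.96\,\widehat{\text{se}},\ \hat{\theta} - \widehat{\text{bias}} + 1.96\,\widehat{\text{se}}\right] \qquad (3)$$

Only when the bias is equal to zero do expressions (2) and (3) simplify to (4) and (5), and expression (4) is often written in the shorter form (6):

$$\left[\hat{\theta} - \widehat{\text{se}},\ \hat{\theta} + \widehat{\text{se}}\right] \qquad (4)$$

$$\left[\hat{\theta} - 1.96\,\widehat{\text{se}},\ \hat{\theta} + 1.96\,\widehat{\text{se}}\right] \qquad (5)$$

$$\hat{\theta} \pm \widehat{\text{se}} \qquad (6)$$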

However, publications in evolutionary computation and metaheuristics currently often report the arithmetic mean and standard error without mentioning the bias, and without any statistical test that would confirm the implicit assumption that the bias is indeed equal or close to zero.
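The bootstrap standard error, bias and normal-approximation interval referred to in this section can be sketched in Python as follows. The sketch assumes the usual definitions: the standard error is the standard deviation of the statistic over bootstrap resamples, and the bias is the mean of those bootstrap values minus the statistic of the original sample. The interval is then $[\hat{\theta} - \widehat{\text{bias}} - z\,\widehat{\text{se}},\ \hat{\theta} - \widehat{\text{bias}} + z\,\widehat{\text{se}}]$ with $z = 1$ (about 68%) or $z = 1.96$ (95%). The data are hypothetical, and the default of 10,000 replications matches the number used in the experiments of this paper.

```python
import random
from statistics import fmean, median, stdev


def bootstrap_normal_ci(sample, statistic, z=1.96, replications=10_000, seed=1):
    """Bootstrap standard error and bias of `statistic`, plus the bias-corrected
    normal-approximation interval [theta - bias - z*se, theta - bias + z*se]."""
    rng = random.Random(seed)
    n = len(sample)
    theta = statistic(sample)
    # Resample with replacement and recompute the statistic each time.
    reps = [statistic(rng.choices(sample, k=n)) for _ in range(replications)]
    se = stdev(reps)
    bias = fmean(reps) - theta
    return theta, se, bias, (theta - bias - z * se, theta - bias + z * se)


# 101 hypothetical solution qualities with a skewed (log-normal) distribution.
data_rng = random.Random(42)
runs = [data_rng.lognormvariate(0.0, 1.0) for _ in range(101)]

for name, stat in (("mean", fmean), ("median", median)):
    theta, se, bias, (lo, hi) = bootstrap_normal_ci(runs, stat)
    print(f"{name:6s} {theta:.4f}  se={se:.4f}  bias={bias:+.4f}  "
          f"95% CI=[{lo:.4f}, {hi:.4f}]")
```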

4. Experimental Research

To demonstrate our proposals in practical research, two sets of experiments were carried out on the CEC2010 [32] benchmark, which contains unconstrained continuous optimization problems. It has 20 single-objective, unconstrained problem instances, named F01, F02, …, F20. For each problem instance, ten variables were used. The nature-inspired optimization algorithms, labeled A, B, C, D, E, F and G, are actual implementations of the artificial bee colony (ABC) algorithm [33], the coral reefs optimization (CRO) algorithm [34], adaptive differential evolution with an optional external archive (JADE) [35], self-adaptive differential evolution (JDE) [36], particle swarm optimization (PSO) [37], the random walk algorithm (RWSi) and a self-adaptive differential evolution algorithm with population size reduction and three strategies (jDElscop) [38], respectively. For these algorithms, we used implementations from the open-source EARS project [39], including RWSi, a simple random walk algorithm without a learning mechanism. Algorithm F (RWSi) was used only as a baseline that we expected to be the worst algorithm in practically every case. We made the source code of all the algorithms used in this research publicly available at https://github.com/UM-LPM/EARS/tree/master/src/org/um/feri/ears/algorithms/so to disclose all implementation details and to promote replication of the results by other researchers. These algorithms are just examples that we used to demonstrate the proposed methods and to capture some of the phenomena that occur in this kind of research. We chose rather different types of algorithms with completely different origins, as well as a few rather similar algorithms (three variants of differential evolution), because both situations occur in experimental research.
There are, of course, a very large number of other algorithms that could have been used, such as the social engineering optimizer (SEO) [40], an ant-inspired algorithm [41], the grasshopper optimization algorithm [42], the red deer algorithm (RDA) [43], the quantum fruit fly optimization algorithm [44] and the beetle antennae search (NABAS) algorithm [45], to name only a small fraction of the possibilities. Our experiment relied heavily on a random number generator; therefore, we used the well-tested Mersenne Twister random number generator [46]. We also used the recommended parameter settings for each implemented algorithm. We do not list them in this section, to avoid shifting the focus from the experimental methodology to the algorithms, but they are given in Appendix A to enable replication of the conducted experiments. The algorithms are labeled A to G to emphasize that this is not a study about finding the best algorithm, implementation or parameter settings for the problem instances used. This is a study about experimental methodology, and the algorithms are merely realistic examples. Each experiment was repeated 101 times, after which the median ($Q_{0.5}$) and the arithmetic mean were calculated to assess the average performance of each algorithm. The sample size of 101 was chosen to allow simple and unambiguous calculation of different quantiles, as explained in [18]. For peak performance we used a low quantile, and for bad-case performance a high quantile.

Two sets of experiments were performed: one with a restricted number of function evaluations, in which the solution quality was the observed value, and another in which a particular solution quality was required and the number of function evaluations was observed.

Quantiles alone can be used to evaluate or specify the performance of a stochastic algorithm on a particular problem instance, but, in addition, some statistical tests can be performed when the performance of multiple algorithms on many problem instances is of interest. For that purpose, the Friedman test can be used, along with post hoc procedures for pairwise comparison. In these procedures, quantile values can be used as input data. The Friedman test is a rank-based non-parametric statistical test that requires random data that can be ordered for each row (problem instance). This test does not require that data obey some specific distribution (such as normal distribution). Calculated percentiles, or other quantiles from a random sample, are random variables, and their values can be ordered; therefore, they can be used as the input for a Friedman test.
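The average ranks that feed a Friedman test, such as those in the bottom rows of Tables 2 and 3, can be computed from a table of quantile values as sketched below. This pure-Python illustration assumes minimization (smaller quantile values are better), gives tied values the average of the ranks they span, and uses the textbook Friedman statistic without a tie correction. Under the null hypothesis, the statistic is approximately chi-square distributed with k − 1 degrees of freedom. SciPy's `scipy.stats.friedmanchisquare` can be used instead to obtain a p-value; it applies a tie correction, so its statistic may differ slightly when ties occur.

```python
def rank_row(values):
    """Rank one row in ascending order (1 = best for minimization); tied values
    share the average of the 1-based positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg_rank
        i = j + 1
    return ranks


def friedman(table):
    """table[r][c] is a quantile (e.g., the median) of algorithm c on problem
    instance r. Returns the average rank of each algorithm and the Friedman
    statistic 12N / (k(k+1)) * (sum_j R_j^2 - k(k+1)^2 / 4), no tie correction."""
    n_rows, k = len(table), len(table[0])
    rank_rows = [rank_row(row) for row in table]
    avg_ranks = [sum(r[c] for r in rank_rows) / n_rows for c in range(k)]
    chi2 = 12 * n_rows / (k * (k + 1)) * (
        sum(a * a for a in avg_ranks) - k * (k + 1) ** 2 / 4)
    return avg_ranks, chi2


# Hypothetical medians: rows are problem instances, columns are three algorithms.
medians = [
    [0.10, 0.30, 0.20],
    [1.50, 2.00, 1.50],
    [7.00, 9.00, 8.00],
]
avg_ranks, chi2 = friedman(medians)
print("average ranks:", [round(a, 3) for a in avg_ranks])
print("Friedman statistic:", round(chi2, 3))
```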

Since quantiles provide some guarantees about the probability of getting a certain solution quality or succeeding after a certain number of function evaluations, it might be interesting to provide some confidence intervals to quantify possible statistical errors in the assessment of true quantiles. For this purpose, we have used the bootstrapping method with 10,000 replications, and the first-order normal approximation for a 95% equi-tailed two-sided confidence interval.
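One possible reading of a bootstrap interval for the probability attached to an empirical quantile is sketched below in Python. It is a hypothetical illustration, not necessarily the exact procedure used in this paper: the empirically obtained value $\hat{Q}_p$ is held fixed, and in each of the 10,000 bootstrap resamples the fraction of runs no worse than $\hat{Q}_p$ is recomputed. The standard error and bias of that fraction then give a first-order normal-approximation 95% interval for the probability. The interval is bias-corrected as in expression (3) and clipped to [0, 1]; the clipping is our own choice.

```python
import random
from statistics import fmean, stdev


def probability_ci(sample, q_hat, z=1.96, replications=10_000, seed=7):
    """Hypothetical sketch: bootstrap the empirical probability P(X <= q_hat)
    of a fixed value q_hat (minimization) and return the estimate with a
    bias-corrected normal-approximation interval clipped to [0, 1]."""
    rng = random.Random(seed)
    n = len(sample)

    def frac_no_worse(xs):
        return sum(1 for x in xs if x <= q_hat) / n

    p_hat = frac_no_worse(sample)
    reps = [frac_no_worse(rng.choices(sample, k=n)) for _ in range(replications)]
    se = stdev(reps)
    bias = fmean(reps) - p_hat
    lo = max(0.0, p_hat - bias - z * se)
    hi = min(1.0, p_hat - bias + z * se)
    return p_hat, (lo, hi)


# 101 hypothetical solution qualities; q_hat is their sample median.
data_rng = random.Random(3)
runs = [data_rng.gauss(10.0, 2.0) for _ in range(101)]
q_hat = sorted(runs)[50]

p_hat, (lo, hi) = probability_ci(runs, q_hat)
print(f"P(X <= {q_hat:.3f}) ~ {p_hat:.4f}, 95% CI [{lo:.4f}, {hi:.4f}]")
```

With 101 runs and no ties, the sample median is no worse than 51 of them, so the point estimate is 51/101, slightly above 0.5.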

The rest of this section is organized as follows. In Section 4.1, average performance is analyzed using the median and the arithmetic mean of the solution quality. The two measures gave broadly similar assessments of average performance, but with some discrepancies. Most importantly, based on the arithmetic mean, algorithm D had the best average performance, whereas based on the median, algorithm C was the best-performing algorithm. The differences between the performance of algorithms C and D are investigated further in Section 4.2, and the results reveal that the median was a better measure of average performance than the arithmetic mean. In Section 4.3, we report the results of the average performance assessment based on the required computational resources (number of function evaluations). In this case, the arithmetic mean was totally inadequate, whereas the median proved to be a usable approach. Section 4.4 contains the results of the peak and bad-case performance assessments for the observed solution quality and the observed number of function evaluations. In Section 4.5, we present the 95% confidence intervals for the arithmetic mean, the median and other quantile-based statistics, calculated with the bootstrap method.

4.1. Average Observed Solution Quality

In this section, we present the experiments and results obtained by limiting the number of function evaluations to 10,000 for each algorithm and observing the quality of the obtained solutions. The arithmetic means of the solutions and the median solutions ($Q_{0.5}$) for the conducted experiments are presented in Table 2 and Table 3, respectively. The number in parentheses is the rank that a particular algorithm achieved on the selected problem instance compared with the other algorithms on the same instance. The best algorithm for a particular problem instance, marked in bold in the Tables, is ranked 1, and the worst is ranked 7; e.g., algorithm C achieved rank 1 for F07 in terms of the mean solution, since it performed better than any other tested algorithm (Table 2). When several algorithms tie, they share the average of the ranks they occupy. For example, algorithms C and G shared the second and third places and therefore both received rank 2.5 for problem instance F18 (Table 3). The bottom row of each Table gives the average rank of each algorithm, which can be used in a Friedman test.

Table 2.

Arithmetic mean of solution quality with corresponding rank.

Table 3.

Quantiles of solution quality with corresponding rank.

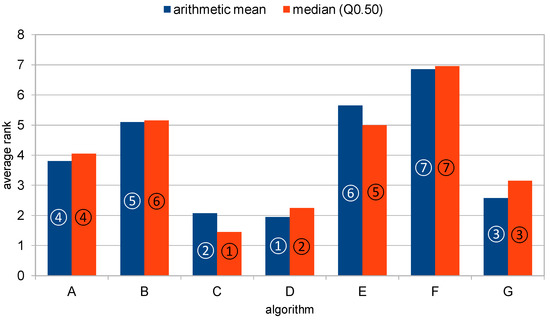

When it comes to the arithmetic mean, algorithm D was the best-ranked algorithm, followed by algorithms C, G, A, B, E and F. In the median-based ranking, the best algorithm was algorithm C, followed by algorithms D, G, A, E, B and F. These results are illustrated in Figure 1. As expected, algorithm F (RWSi), which was used as the baseline algorithm, was ranked last by both approaches. The results suggest rather high agreement between the arithmetic mean and the median ($Q_{0.5}$) in assessing average performance. However, there is an important disagreement about which algorithm achieved the best average performance: for the arithmetic mean, it was algorithm D, but for the median, it was algorithm C. This issue is investigated further in the following section.

Figure 1.

Comparison of arithmetic mean and median-based reasoning about algorithmic performance.

4.2. Disagreement of Mean and Median

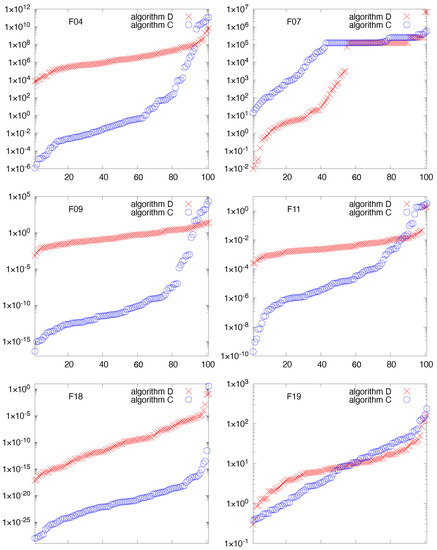

A deeper investigation of algorithmic performance was carried out because of the discrepancies between the arithmetic mean and the median ($Q_{0.5}$) for algorithms C and D. Comparing the data from Table 2 and Table 3 shows that the arithmetic mean and the median disagree about the relative performance of algorithms C and D on problem instances F04, F07, F09, F11, F18 and F19. For the other problem instances, the arithmetic mean and the median agreed on which of algorithms C and D was better. Thus, a deeper analysis was necessary only for the affected instances.

A pairwise comparison of algorithms C and D, based on the complete lists of obtained solutions sorted by solution quality for each problem instance, is shown in Figure 2. The numbers on the abscissa represent the position of a solution in the sorted list: the best solution is the first in the list (number 1) and the worst solution is the last (number 101). The ordinate shows the solution quality on a logarithmic scale, because of the huge differences between the best and the worst solutions.

Figure 2.

Solution quality for the sorted lists of solutions obtained by algorithms C and D for the F04, F07, F09, F11, F18 and F19 instances, respectively. The ordinate shows the quality of the solution (also known as fitness or cost), and the abscissa shows the position of the solution in the sorted list: number 1 is the best obtained solution and number 101 is the worst.

For problem instance F04, the arithmetic mean suggests that algorithm D was better than algorithm C, while the median suggests the opposite (Table 2 and Table 3). However, the results presented in Figure 2 reveal that algorithm C clearly outperformed algorithm D, in agreement with the median and in disagreement with the arithmetic mean. Of the 101 solutions obtained by algorithm C, 82 were better than the best solution obtained by algorithm D. When the two sorted lists are compared position by position, algorithm D has a better solution than algorithm C only at position 94. The arithmetic mean failed to rank algorithms C and D correctly only because of a few of the worst solutions obtained by algorithm C.

For four of the remaining problem instances, F07, F09, F11 and F18, a detailed analysis revealed that the median made a correct judgment and the arithmetic mean failed. In some cases, this was even more pronounced than for F04, but for F07 it is less visible in Figure 2, since the two lines are close to each other. In fact, for F07, algorithm D found 33 solutions that were better than the best solution found by algorithm C, and algorithm C had better solutions than algorithm D only at positions 100 and 101.
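The position-by-position comparison behind Figure 2 is easy to automate. The Python sketch below is our illustration with hypothetical data, assuming minimization and equally many runs per algorithm. It counts how many solutions of one algorithm beat the best solution of the other and lists the sorted positions at which the other algorithm is better. The toy data reproduce, in miniature, the F04 situation, in which a single very poor run reverses the verdict of the arithmetic mean.

```python
from statistics import mean, median


def compare_sorted_runs(a, b):
    """Compare two algorithms' runs on one problem instance (minimization);
    both lists are assumed to have the same length. Returns the number of
    solutions in `a` that beat the best solution in `b`, and the 1-based
    positions of the sorted lists at which `b` is strictly better."""
    sa, sb = sorted(a), sorted(b)
    beat_best_b = sum(1 for x in sa if x < sb[0])
    positions_b_better = [i + 1 for i, (x, y) in enumerate(zip(sa, sb)) if y < x]
    return beat_best_b, positions_b_better


# Hypothetical runs: algorithm C is better except for one very poor run.
runs_c = [1.0, 2.0, 3.0, 4.0, 1000.0]
runs_d = [5.0, 6.0, 7.0, 8.0, 9.0]

print(mean(runs_c), mean(runs_d))           # 202.0 7.0: the mean favors D
print(median(runs_c), median(runs_d))       # 3.0 7.0: the median favors C
print(compare_sorted_runs(runs_c, runs_d))  # (4, [5])
```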

The only problem instance for which a detailed analysis of the complete lists of solutions did not give an obvious verdict was F19. Based on the obtained lists of solutions, it is hard to decide which algorithm was better on this instance, algorithm C or algorithm D. Both showed similar performance, and the decision may depend on personal preference.

Considering that, for five of the six disputed problem instances, the median provided an adequate assessment and the arithmetic mean failed, and that in only one case the discrepancy between the median and the arithmetic mean could not be resolved, the median appears to have been more successful than the arithmetic mean in evaluating algorithmic performance.

4.3. Average Observed Number of Function Evaluations

In this section, we report the average performance based on the second set of experiments, in which a particular solution quality was specified (the optimal solution within a prescribed precision) and the number of function evaluations was the observed quantity. This was possible because the optimal solutions of all problem instances were known. When an algorithm did not reach such a solution within 100,000 function evaluations, it was interrupted and the run was considered unsuccessful.

The major issue with this approach is how to deal with executions of the algorithm that cannot find a solution of the required quality within an acceptable time. In these cases, the algorithm has to be interrupted, and these runs can be declared unsuccessful. If the algorithm was interrupted after 100,000 function evaluations, it obviously would have needed more than 100,000 function evaluations, and possibly an infinite number, to reach the desired solution quality.

Unfortunately, it is rather common to see published results that ignore this fact completely. Some researchers simply calculate the arithmetic mean from the successful runs only, or count the number of function evaluations at which an unsuccessful run was interrupted as if the run had been successful. To illustrate such inadequate practice, we present “results” obtained in the first way in Table 4. Of the 140 results in this Table, 61 are reported incorrectly; these are marked in bold. Had we instead used the limiting number of function evaluations (100,000) for the unsuccessful runs, 96 of the 140 results would have been reported incorrectly.

Table 4.

Arithmetic mean of the required number of function evaluations with corresponding rank when unsuccessful runs are simply ignored: the wrong way to report results.

The most absurd example of such malpractice occurred for the problem instance F01 in Table 4. Here, algorithm F (RWSi) had the best result! The arithmetic mean of the required number of function evaluations was one. This is a very puzzling result, given that the other algorithms needed thousands or tens of thousands of function evaluations to obtain the specified solution quality. The secret of “such success” is revealed in Table 5, where we report the success rate. Out of 101 runs, algorithm F (RWSi) succeeded only once, in the first iteration of the algorithm, so the arithmetic mean of the successful runs looks incredibly good. Knowing the whole story, hardly any researcher would consider algorithm F (RWSi) to be the best algorithm for F01. This situation also occurred for other problem instances; e.g., for F11, the mean number of function evaluations over successful runs was 4128 for algorithm E, which was ranked better than algorithm D with 4821, although the success rate was only 2% for algorithm E and 79% for algorithm D (Table 5).

Table 5.

Success rate (SR).

The correct way to report the arithmetic mean is shown in Table 6, where the mean is reported as n/a whenever at least one run was unsuccessful. However, n/a results must be ranked with special caution. In general, their values are unknown, but not necessarily worse than the other results for the same problem instance. This raises another serious problem: a single n/a result in a row (one problem instance) may compromise the whole ranking for that problem instance. Because Table 6 contains so many n/a results, it is impractical, if not impossible, to assess algorithmic performance correctly based on the arithmetic mean.
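The two inadequate practices and the correct rule can be contrasted in a few lines of Python. This is a minimal sketch under our own assumptions: each run is encoded as the number of function evaluations it needed, or `None` if it was interrupted at the budget, and all function names are hypothetical. The example data mimic the F01 case of algorithm F: a single success after one function evaluation among 101 runs.

```python
from typing import List, Optional, Sequence

BUDGET = 100_000  # function evaluations after which a run is interrupted

Run = Optional[int]  # evaluations needed, or None if the run was interrupted


def mean_successful_only(runs: Sequence[Run]) -> Optional[float]:
    """Inadequate: silently discards the interrupted runs."""
    ok = [r for r in runs if r is not None]
    return sum(ok) / len(ok) if ok else None


def mean_with_budget(runs: Sequence[Run], budget: int = BUDGET) -> float:
    """Inadequate: counts interrupted runs as if they succeeded at the budget."""
    return sum(budget if r is None else r for r in runs) / len(runs)


def mean_correct(runs: Sequence[Run]) -> Optional[float]:
    """Correct: the mean is unknown (n/a) as soon as one run was interrupted,
    because that run would have needed more evaluations, possibly infinitely many."""
    if any(r is None for r in runs):
        return None
    return sum(runs) / len(runs)


# One success after a single evaluation and 100 interrupted runs (101 in total).
runs: List[Run] = [1] + [None] * 100
print(mean_successful_only(runs))  # 1.0, the "best" result in the table
print(mean_with_budget(runs))      # about 99009.9, still an underestimate
print(mean_correct(runs))          # None, i.e., n/a
```

Both inadequate functions return a number, which is exactly why their output looks trustworthy in a results table.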

Table 6.

Arithmetic mean of the required number of solution evaluations: the correct way.

The medians (0.5 quantiles) of the required number of function evaluations needed to obtain the specified solution quality are presented in Table 7. When using quantiles, there are no n/a results, but some results are reported as >100,000. In these cases, the value is known to be larger than the prespecified limit, but its exact value cannot be calculated. Note that Table 6 contains 96 n/a results, whereas there are only 59 results of >100,000 for the median. More usable results were expected for the median than for the arithmetic mean, and this is easily explained by the success rates (Table 5): the median is known whenever at least half of the runs succeeded, whereas the arithmetic mean requires every run to succeed.
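A sample quantile of the number of function evaluations can be computed even when some runs were interrupted, as long as the quantile does not fall on an interrupted run. The sketch below assumes one common quantile definition (the k-th smallest value with k = ⌈pn⌉); other definitions exist, and the function name is hypothetical.

```python
import math
from typing import Optional, Sequence, Union

BUDGET = 100_000


def censored_quantile(runs: Sequence[Optional[int]], p: float,
                      budget: int = BUDGET) -> Union[int, str]:
    """Sample p-quantile of the number of function evaluations.

    Interrupted runs (None) are sorted after all successful runs, because
    their true values exceed the budget. If the quantile falls on such a run,
    only a lower bound is known and the string '>budget' is returned.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    # Assumed definition: the k-th smallest value with k = ceil(p * n);
    # the small tolerance guards against floating-point rounding.
    k = max(1, math.ceil(p * len(runs) - 1e-9))
    ordered = sorted(runs, key=lambda r: math.inf if r is None else r)
    value = ordered[k - 1]
    return f">{budget:,}" if value is None else value


runs = [1200, 1500, None, 1800, None]
print(censored_quantile(runs, 0.1))  # 1200
print(censored_quantile(runs, 0.5))  # 1800
print(censored_quantile(runs, 0.9))  # >100,000
```

The same function serves the 0.1 and 0.9 quantiles used later for peak and bad-case performance; only the argument `p` changes.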

Table 7.

Median (0.5 quantile) of the required number of solution evaluations with corresponding rank.

Note that, for quantiles, >100,000 is a more usable result than n/a is for the arithmetic mean. In addition, a >100,000 result cannot compromise the whole row (the results for one problem instance), as an n/a result for the arithmetic mean can. However, one subtle question remains: how should several >100,000 results for the same problem instance be ranked? Is it appropriate to rank them equally, even though the exact values of the quantiles are unknown? One option is for the researcher or practitioner to regard all >100,000 results as unacceptable and equally bad, in which case equal ranking is appropriate. Alternatively, additional criteria, such as the success rate or the quality of the obtained solutions, could be used to rank these results among themselves. In our report, we chose the first option.
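The first option, in which all >100,000 results are equally bad, can be sketched as a ranking of one Table row. The paper does not state how tied ranks are numbered; the sketch assumes fractional ranking (tied algorithms receive the mean of the positions they occupy), and the function name and example row are hypothetical.

```python
import math
from typing import Dict, Union

Result = Union[int, str]  # evaluations, or a censored result such as '>100,000'


def rank_row(results: Dict[str, Result]) -> Dict[str, float]:
    """Rank the algorithms on one problem instance (fewer evaluations is better).

    All censored results are treated as equally bad, so they share the worst
    positions. Tied algorithms get the mean of the positions they occupy
    (fractional ranking, an assumption of this sketch).
    """
    def key(value: Result) -> float:
        return math.inf if isinstance(value, str) else value

    ordered = sorted(results, key=lambda name: key(results[name]))
    ranks: Dict[str, float] = {}
    i = 0
    while i < len(ordered):
        j = i
        # Extend j over the block of algorithms tied with ordered[i].
        while j + 1 < len(ordered) and key(results[ordered[j + 1]]) == key(results[ordered[i]]):
            j += 1
        for name in ordered[i:j + 1]:
            ranks[name] = (i + j) / 2 + 1  # mean of 1-based positions i+1..j+1
        i = j + 1
    return ranks


row = {"A": 5000, "B": ">100,000", "C": 3000, "D": 5000, "F": ">100,000"}
print(rank_row(row))  # {'C': 1.0, 'A': 2.5, 'D': 2.5, 'B': 4.5, 'F': 4.5}
```

Using a secondary criterion instead (the second option) would amount to replacing `key` with a tuple such as (quantile, negative success rate).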

4.4. Peak Performance and Bad-Case Performance

To assess the peak performance of the algorithms, we calculated the 0.1 quantile, and for bad-case performance, we calculated the 0.9 quantile. The assessment was conducted on both sets of experiments. In the first set of experiments, the number of function evaluations was set to 10,000, and the solution quality was the observed value. In the second set of experiments, the optimal solution within the prespecified precision was required, and the number of function evaluations was the observed value.

The results for the 0.1 and 0.9 quantiles of the observed solution quality are presented in Table 8 and Table 9, respectively. As expected, the peak performance of the algorithms was better than their average (Table 3) or bad-case performance. Additionally, in the case of peak performance, the algorithms more often achieved optimal results and therefore more often had tied scores. The results for the observed number of function evaluations necessary to achieve the required solution quality are presented in Table 10 for the 0.1 quantile and in Table 11 for the 0.9 quantile. When the number of function evaluations was higher than the defined threshold of 100,000, we reported the value >100,000, and all such results received the worst rank. The worst rank was sometimes shared by several algorithms, since they all failed to obtain the required solution within the allowed resources. Naturally, the 0.1 quantile had the fewest such cases, while the 0.9 quantile had the most.

Table 8.

0.1 quantiles of the obtained solution quality.

Table 9.

0.9 quantiles of the obtained solution quality.

Table 10.

0.1 quantiles of the required number of solution evaluations with corresponding rank.

Table 11.

0.9 quantiles of the required number of solution evaluations with corresponding rank.

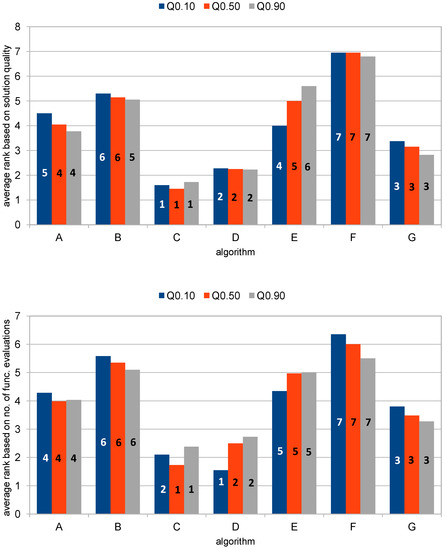

Figure 3 shows the relative positions of the algorithms based on solution quality (top) and on the number of function evaluations (bottom). Regarding solution quality, algorithm C has the best rank, algorithm D the second best, algorithm G the third best and algorithm F the worst, for all three quantiles (0.1, 0.5 and 0.9). Algorithms A, B and E have different relative positions for different quantiles. For example, algorithm E had better peak performance than algorithms A and B, but worse bad-case performance.

Figure 3.

Average ranks based on the 0.1, 0.5 and 0.9 quantiles of the observed solution quality and of the observed number of function evaluations.

Regarding the number of function evaluations, algorithms D and C shared the two best positions for all three quantiles, each being the best for at least one of them. The remaining algorithms are positioned in the order G, A, E, B and F for all three quantiles, where F is the worst. This is not surprising, since F is a simple random walk algorithm without any heuristics or learning capabilities (RWSi).

When choosing the best algorithm, it is important to know that the correct decision depends on the conditions in which the algorithm is used. Some algorithms might be greedier and converge faster to reasonably good solutions, while others converge slowly to much better solutions. Under different stopping criteria, different algorithms can achieve the best performance. Additionally, some algorithms can have better peak performance than other algorithms, but worse average and bad-case performance. If only one execution is intended, the choice between peak performance (e.g., the 0.1 quantile), average performance (the median) and bad-case performance (e.g., the 0.9 quantile) depends on the application and on personal preference. In the case of multiple executions of the algorithm, peak performance can be the best choice, since the probability of obtaining such solutions can be increased arbitrarily with the number of executions [18].
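The effect of multiple executions can be quantified under the standard assumption that the executions are independent: if a single run reaches the quality of the p-quantile or better with probability p, then at least one of n runs does so with probability 1 − (1 − p)^n. A minimal sketch with hypothetical function names:

```python
import math


def prob_at_least_one(p: float, n: int) -> float:
    """Probability that at least one of n independent runs reaches a quality
    that a single run reaches with probability p."""
    return 1.0 - (1.0 - p) ** n


def runs_needed(p: float, target: float) -> int:
    """Smallest n with prob_at_least_one(p, n) >= target, for 0 < p < 1."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))


# Peak performance measured by the 0.1 quantile:
print(round(prob_at_least_one(0.1, 10), 4))  # 0.6513
print(runs_needed(0.1, 0.95))                # 29
```

So, under the independence assumption, about 29 executions give a 95% chance of at least one solution as good as the 0.1 quantile.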

When different algorithms are combined, either manually or to assemble a hyper-heuristic, it is not enough to consider only the average ranks. Based on the results in Table 8, the combination of algorithms C, D and E would be much better than the combination of C, D and G, even though algorithm G had a better average rank (3.375) than algorithm E (4.00).
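Why average ranks can mislead here is easiest to see on a toy example. The numbers below are hypothetical and are not taken from Table 8: algorithm G never ranks last, whereas E is last on two of the four instances, yet E complements C and D far better because it excels exactly where they are weak. The sketch scores a combination by the best value any member reaches on each instance (minimization assumed).

```python
from typing import Dict, Iterable, List

# Hypothetical peak-performance values (lower is better) on four instances;
# illustrative only, not taken from the paper's Tables.
TABLE: Dict[str, List[float]] = {
    "C": [0.0, 0.0, 5.0, 9.0],
    "D": [0.0, 1.0, 4.0, 8.0],
    "E": [3.0, 6.0, 0.0, 0.0],
    "G": [0.0, 0.5, 3.0, 8.5],
}


def portfolio_best(table: Dict[str, List[float]], members: Iterable[str]) -> List[float]:
    """Best (minimum) value reached by any member of the combination on each instance."""
    members = list(members)
    n_instances = len(table[members[0]])
    return [min(table[m][i] for m in members) for i in range(n_instances)]


print(portfolio_best(TABLE, "CDG"))  # [0.0, 0.0, 3.0, 8.0]
print(portfolio_best(TABLE, "CDE"))  # [0.0, 0.0, 0.0, 0.0]
```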

4.5. Confidence Interval for Calculated Statistic

If one could repeat an experiment an infinite number of times, the true distribution would be observed, and it might be possible to calculate the true arithmetic mean, the true median and other true quantiles. In practice, an experiment can only be repeated a limited number of times, yielding a random sample from which the sample mean or a chosen sample quantile is calculated. The calculated mean or quantile generally differs from its true value, and with a larger sample, one can usually expect higher accuracy, i.e., calculated values that are closer to the true parameters.

By using the obtained sample and the bootstrapping technique, it is possible to provide a confidence interval for the arithmetic mean. Confidence intervals for the calculated arithmetic means are presented in Table 12; e.g., for algorithm A and problem instance F01, the calculated mean value (Table 2) lies inside the reported confidence interval.
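The paper does not specify the bootstrap variant, so the following is only a sketch of one common choice, the percentile bootstrap, with an assumed 10,000 resamples and a fixed seed for reproducibility. Each resample draws n runs with replacement from the observed sample, and the interval bounds are read off the sorted resample means.

```python
import math
import random
from typing import Sequence, Tuple


def bootstrap_mean_ci(sample: Sequence[float], level: float = 0.95,
                      n_boot: int = 10_000, seed: int = 1) -> Tuple[float, float]:
    """Percentile bootstrap confidence interval for the arithmetic mean."""
    rng = random.Random(seed)
    n = len(sample)
    # Resample n runs with replacement and record the mean of each resample.
    means = sorted(sum(rng.choices(sample, k=n)) / n for _ in range(n_boot))
    alpha = (1.0 - level) / 2.0
    lower = means[math.floor(alpha * (n_boot - 1))]
    upper = means[math.ceil((1.0 - alpha) * (n_boot - 1))]
    return lower, upper


sample = [float(i) for i in range(1, 101)]  # hypothetical solution qualities
print(bootstrap_mean_ci(sample))            # an interval around the mean 50.5
```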

Table 12.

Confidence intervals for sample mean of the solution quality.

In the case of quantiles, there are two possible options for providing confidence intervals. One option is to treat the probability of the quantile as exact and to attribute the error to the estimated value of the quantile, as in the case of the arithmetic mean. The other option is to treat the value of the quantile as exact and to attribute the error to its probability. For this study, we chose the second option and provide confidence intervals for the quantile probability, as presented in Table 13. It may be easier to accept a small statistical uncertainty in the probability of finding a solution than in the value of the solution, but the second option also has other, more important advantages over the first. So, instead of reporting only the calculated sample quantile, e.g., for algorithm D and problem F20 (Table 3), one can state, with 95% confidence, an interval for the probability of obtaining a solution of at least that quality.
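The second option can be sketched by keeping the sample quantile value q fixed and bootstrapping only the probability attached to it, i.e., the fraction of runs with a solution at least as good as q. This is our reading of the approach, not necessarily the exact procedure behind Table 13; it assumes minimization, a percentile bootstrap with 10,000 resamples, and hypothetical function names.

```python
import math
import random
from typing import Sequence, Tuple


def empirical_prob(sample: Sequence[float], q: float) -> float:
    """Fraction of runs with a solution at least as good as q (minimization)."""
    return sum(1 for x in sample if x <= q) / len(sample)


def bootstrap_prob_ci(sample: Sequence[float], q: float, level: float = 0.95,
                      n_boot: int = 10_000, seed: int = 1) -> Tuple[float, float]:
    """Percentile bootstrap interval for the probability attached to a fixed
    quantile value q: q is kept fixed and only the probability is resampled."""
    rng = random.Random(seed)
    n = len(sample)
    probs = sorted(empirical_prob(rng.choices(sample, k=n), q) for _ in range(n_boot))
    alpha = (1.0 - level) / 2.0
    lower = probs[math.floor(alpha * (n_boot - 1))]
    upper = probs[math.ceil((1.0 - alpha) * (n_boot - 1))]
    return lower, upper


sample = [float(x) for x in range(100)]  # hypothetical solution qualities
q = 49.0                                 # sample median under the k = ceil(p * n) definition
print(empirical_prob(sample, q))         # 0.5
print(bootstrap_prob_ci(sample, q))
```

Because the interval concerns a probability rather than a solution value, its width depends mainly on the number of runs and hardly at all on how widely the solution values are spread.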

Table 13.

Confidence intervals of the probability for sample medians (0.5 quantiles) of solution quality.

When the solution qualities around a particular quantile vary over a wide range, the confidence intervals for quantile values can be rather wide. In contrast, the confidence intervals obtained for quantile probabilities are very stable; e.g., in all cases in which the calculated probability was exactly 0.5, the confidence interval for the true probability was [0.40, 0.60] when the bounds are rounded to two decimal places. In other words, for each sample quantile for which p was calculated to be 0.5, the true p lies in [0.40, 0.60] with 95% equi-tailed two-sided confidence. There were, however, small differences between these cases, which become apparent when the bounds are written with more decimal places, e.g., [0.4029417, 0.5972663], [0.4030771, 0.5975189], [0.4016863, 0.5972697], etc.

As presented in Table 13, there are cases in which the interval bounds are noticeably higher than 0.40 and 0.60. This happens when many runs return exactly the same solution value, so that the sample quantiles for a whole range of probabilities coincide. For example, for problem F13 and algorithm E, 24 of the 101 runs returned the value 2.386298997, which is therefore the sample median; however, 69 of the 101 runs obtained a solution of this quality or better, so the calculated probability is 0.68, with the 95% confidence interval reported in Table 13. For a detailed inspection of this phenomenon, all solutions obtained by algorithm E for F13 are listed in Appendix B. Note that a higher probability (around 0.68) is better than the nominal minimum of 0.5. This is why we use the phrase “with probability at least p” rather than “with probability p”.

An interesting case occurred for F15 and algorithm C, where the calculated probability was 0.99 and the confidence interval calculated by the bootstrap extended above 1, because the bootstrap treats these values like any other values, without knowing that they are probabilities. Since these values are probabilities, and the solution was indeed obtained by the algorithm, the true probability must lie in the half-open interval (0, 1]; the confidence interval is therefore the intersection of the bootstrap interval with (0, 1]. For F15 and algorithm C, this caps the upper bound of the final interval at 1. In some cases, all bootstrap resamples have probability 1.00; the true probability is then either exactly 1.00 or very close to it, but the bootstrap method cannot provide a confidence interval. These cases are marked with a special notation in the Tables.

The 95% confidence intervals of the probabilities for the sample 0.1 and 0.9 quantiles are presented in Table 14 and Table 15, respectively.

Table 14.

Confidence intervals of the probability for sample 0.1 quantiles of solution quality.

Table 15.

Confidence intervals of the probability for sample 0.9 quantiles of solution quality.

A nice property of confidence intervals of probabilities, unlike the confidence intervals of arithmetic mean, is that values are easy to compare across algorithms and across problem instances (horizontally and vertically in the Tables).

5. Conclusions

Measuring the performance of stochastic optimization algorithms that are used in swarm and evolutionary computation is rather challenging. Their stochastic nature is often seen as a weakness, but we argue that this property can be exploited and can become an advantage with multiple executions of stochastic algorithms. This paper experimentally explored the benefits of using quantiles as a measure of the performance of such algorithms. Using quantiles solves the problem noted by Eiben and Jelasity [15] that, in practice, peak performance is usually more important than average performance. Peak performance cannot be measured by the best obtained solution, as warned by Birattari and Dorigo [16]. As a solution to this problem, we proposed quantiles such as the 0.05, 0.1 or 0.25 quantile. The experiments performed in this research confirmed that quantiles, in this case the 0.1 quantile, are suitable for this task. Similarly, the experiments demonstrated that quantiles are very useful for measuring average performance (median) and bad-case performance (e.g., the 0.9 quantile).

The experimental comparison revealed that the arithmetic mean and the median assessed average performance based on solution quality in a broadly similar way, but with some important discrepancies: in these cases, the arithmetic mean identified one algorithm as the best on average, while the median identified another. Detailed analysis revealed that the median was the more relevant measure of average performance.

When it came to average performance based on the number of function evaluations, the experiments confirmed that the arithmetic mean was completely inadequate, whereas quantiles were practically usable: the median for average performance, and other quantiles for peak performance and bad-case performance.

An important benefit of using quantiles to measure the performance of stochastic optimization algorithms is that they allow the probability of achieving high-quality solutions in multiple executions of the algorithm to be calculated. This probability can be increased arbitrarily by increasing the number of executions.

This research also demonstrated a practical method that uses bootstrapping to find the confidence intervals for the probabilities of empirically obtained quantiles.

Due to their numerous advantages, we propose that quantiles should be used as a standard measure of peak, average and bad-case performance for stochastic optimization algorithms.

Our contributions to the experimental methodology are: establishing adequate measures of peak and bad-case performance; applying quantiles in a way that links probability with single and multiple executions of the algorithm; the experimental findings on the discrepancy between the arithmetic mean and the median; and an adequate method for assigning confidence to sample quantiles.

In our future work, we plan to investigate how these methods can be adapted, while retaining their interpretations, to assess the performance of stochastic optimization algorithms on dynamic and stochastic optimization problems.

Author Contributions

Conceptualization, N.I., R.K. and M.Č.; methodology, N.I.; software, M.Č.; validation, N.I., R.K. and M.Č.; formal analysis, N.I.; investigation, N.I.; resources, M.Č.; data curation, M.Č. and N.I.; writing—original draft preparation, N.I., R.K. and M.Č.; visualization, N.I. and M.Č. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data used in this research are provided in this article.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Parameters of the Algorithms Used in this Research

Appendix A was added in the revised version of the paper.

- ABC (A)
  - population size (pop_size) = 60
  - food number (foodNumber) = 30
- CRO (B)
  - width of coral reef grid (n) = 10
  - height of coral reef grid (m) = 10
  - percentage of an initially occupied reef (rho) = 0.6
  - percentage of broadcast spawners (fbs) = 0.9
  - percentage of depredated corals (rho) = 0.1
  - percentage of broadcast spawners (fbs) = 0.1
  - attempts to settle (attemptsToSettle) = 3
- JADE (C)
  - population size (pop_size) = 30
  - CR mean adaptive control parameter (muCR) = 0.5
  - location adaptive control parameter (muF) = 0.5
  - elite factor (p) = 0.05
  - adaptive factor (c) = 0.1
- JDE (D)
  - population size (pop_size) = 30
- PSO (E)
  - population size (pop_size) = 30
  - inertia weight (omega) = 0.7
  - cognitive coefficient (C1) = 1.4
  - social coefficient (C2) = 1.4
- RWSi (F)
- jDElscop (G)
  - starting population size (variable_pop_size) = 100

Appendix B. All Solutions Obtained by Algorithm E on Problem Instance F13

These are all the solutions obtained by algorithm E on problem instance F13, sorted in ascending order: 0.000432, 0.000535, 0.000757, 0.003873072, 0.003951989, 0.004050086, 0.004051413, 0.004479071, 0.006448967, 0.006952159, 0.007619501, 0.007991934, 0.008536945, 0.011411089, 0.012323921, 0.018586634, 0.166896547, 0.166896547, 0.166896547, 0.166896547, 0.166896547, 0.166896547, 0.166896547, 0.166896547, 2.209658618, 2.209658618, 2.209658618, 2.209658618, 2.209658618, 2.209658618, 2.209658618, 2.209658618, 2.209658618, 2.296896922, 2.296896922, 2.296896922, 2.296896922, 2.296896922, 2.296896922, 2.296896922, 2.296896922, 2.296896922, 2.296896922, 2.296896922, 2.296896922, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 2.386298997, 34.42343704, 34.42343704, 34.42343704, 34.42343704, 34.42343704, 34.42343704, 34.42343704, 34.42343704, 40.30653622, 40.30653622, 40.30653622, 40.30653622, 40.30653622, 40.30653622, 40.30653622, 40.30653622, 93.57691902, 147.069254, 147.069254, 147.069254, 348.5550942, 348.5550942, 348.5550942, 501.7195706, 502.104965, 540.0252023, 1112.691799, 1112.691799, 1112.86844, 1112.86844, 1367.681491, 1369.724253.

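For this sample, many quantiles have the same calculated value: the value 2.386298997, obtained in 24 runs (positions 46 to 69 in the sorted list), is the sample quantile for a whole range of probabilities, including the median.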
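The probability of 0.68 discussed in Section 4.5 can be recomputed from the values listed above. The sketch compresses the list into (value, number of runs) pairs, assumes minimization, and uses the median from Python's `statistics` module; the variable names are our own.

```python
from statistics import median

# Solutions of algorithm E on F13 (this Appendix), as (value, number of runs) pairs.
F13_E = [
    (0.000432, 1), (0.000535, 1), (0.000757, 1), (0.003873072, 1),
    (0.003951989, 1), (0.004050086, 1), (0.004051413, 1), (0.004479071, 1),
    (0.006448967, 1), (0.006952159, 1), (0.007619501, 1), (0.007991934, 1),
    (0.008536945, 1), (0.011411089, 1), (0.012323921, 1), (0.018586634, 1),
    (0.166896547, 8), (2.209658618, 9), (2.296896922, 12), (2.386298997, 24),
    (34.42343704, 8), (40.30653622, 8), (93.57691902, 1), (147.069254, 3),
    (348.5550942, 3), (501.7195706, 1), (502.104965, 1), (540.0252023, 1),
    (1112.691799, 2), (1112.86844, 2), (1367.681491, 1), (1369.724253, 1),
]

sample = [value for value, count in F13_E for _ in range(count)]
med = median(sample)  # the 51st of the 101 sorted values
# Probability that a run is at least as good as the median (minimization).
p_hat = sum(1 for x in sample if x <= med) / len(sample)
print(len(sample), med, round(p_hat, 2))  # 101 2.386298997 0.68
```

Because 24 runs tie at the median value, 69 of the 101 runs reach it or better, which is why the calculated probability exceeds 0.5.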

References

- Arora, S.; Barak, B. Computational Complexity: A Modern Approach, 1st ed.; Cambridge University Press: Cambridge, UK, 2009.

- Hromkovic, J. Algorithmics for Hard Problems—Introduction to Combinatorial Optimization, Randomization, Approximation, and Heuristics, 2nd ed.; Texts in Theoretical Computer Science. An EATCS Series; Springer: Berlin/Heidelberg, Germany, 2004.

- Wolpert, D.; Macready, W. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Wolpert, D.; Macready, W. Coevolutionary free lunches. IEEE Trans. Evol. Comput. 2005, 9, 721–735.

- Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

- Halim, A.H.; Ismail, I.; Das, S. Performance assessment of the metaheuristic optimization algorithms: An exhaustive review. Artif. Intell. Rev. 2021, 54, 2323–2409.

- Osaba, E.; Villar-Rodriguez, E.; Del Ser, J.; Nebro, A.J.; Molina, D.; LaTorre, A.; Suganthan, P.N.; Coello Coello, C.A.; Herrera, F. A Tutorial On the design, experimentation and application of metaheuristic algorithms to real-World optimization problems. Swarm Evol. Comput. 2021, 64, 100888.

- Mernik, M.; Liu, S.H.; Karaboga, D.; Črepinšek, M. On clarifying misconceptions when comparing variants of the Artificial Bee Colony Algorithm by offering a new implementation. Inf. Sci. 2015, 291, 115–127.

- Črepinšek, M.; Liu, S.H.; Mernik, M. Replication and comparison of computational experiments in applied evolutionary computing: Common pitfalls and guidelines to avoid them. Appl. Soft Comput. 2014, 19, 161–170.

- Liu, Q.; Gehrlein, W.V.; Wang, L.; Yan, Y.; Cao, Y.; Chen, W.; Li, Y. Paradoxes in Numerical Comparison of Optimization Algorithms. IEEE Trans. Evol. Comput. 2020, 24, 777–791.

- Yan, Y.; Liu, Q.; Li, Y. Paradox-free analysis for comparing the performance of optimization algorithms. IEEE Trans. Evol. Comput. 2022, 1–14.

- LaTorre, A.; Molina, D.; Osaba, E.; Poyatos, J.; Del Ser, J.; Herrera, F. A prescription of methodological guidelines for comparing bio-inspired optimization algorithms. Swarm Evol. Comput. 2021, 67, 100973.

- Carrasco, J.; García, S.; Rueda, M.; Das, S.; Herrera, F. Recent trends in the use of statistical tests for comparing swarm and evolutionary computing algorithms: Practical guidelines and a critical review. Swarm Evol. Comput. 2020, 54, 100665.

- Omran, M.G.H.; Clerc, M.; Ghaddar, F.; Aldabagh, A.; Tawfik, O. Permutation Tests for Metaheuristic Algorithms. Mathematics 2022, 10, 2219.

- Eiben, A.E.; Jelasity, M. A critical note on experimental research methodology in EC. In Proceedings of the Evolutionary Computation, 2002, CEC ’02, Washington, DC, USA, 12–17 May 2002; IEEE Press: Honolulu, HI, USA, 2002; Volume 1, pp. 582–587.

- Birattari, M.; Dorigo, M. How to assess and report the performance of a stochastic algorithm on a benchmark problem: Mean or best result on a number of runs? Optim. Lett. 2007, 1, 309–311.

- Eiben, A.E.; Smith, J.E. Introduction to Evolutionary Computing; Springer: Berlin/Heidelberg, Germany; New York, NY, USA, 2003.

- Ivkovic, N.; Jakobovic, D.; Golub, M. Measuring Performance of Optimization Algorithms in Evolutionary Computation. Int. J. Mach. Learn. Comp. 2016, 6, 167–171.

- Johnson, R.; Kuby, P. Elementary Statistics, 11th ed.; Cengage Learning: Boston, MA, USA, 2012.

- WHO Multicentre Growth Reference Study Group. WHO Child Growth Standards: Length/Height-for-Age, Weight-for-Age, Weight-for-Length, Weight-for-Height and Body Mass Index-for-Age: Methods and Development; World Health Organization: Geneva, Switzerland, 2006.

- Port, S.; Demer, L.; Jennrich, R.; Walter, D.; Garfinkel, A. Systolic blood pressure and mortality. Lancet 2000, 355, 175–180.

- Kephart, J.L.; Sánchez, B.N.; Moore, J.; Schinasi, L.H.; Bakhtsiyarava, M.; Ju, Y.; Gouveia, N.; Caiaffa, W.T.; Dronova, I.; Arunachalam, S.; et al. City-level impact of extreme temperatures and mortality in Latin America. Nat. Med. 2022, 28, 1700–1705.

- Born, D.P.; Lomax, I.; Rüeger, E.; Romann, M. Normative data and percentile curves for long-term athlete development in swimming. J. Sci. Med. Sport 2022, 25, 266–271.

- Choo, G.H.; Seo, J.; Yoon, J.; Kim, D.R.; Lee, D.W. Analysis of long-term (2005–2018) trends in tropospheric NO2 percentiles over Northeast Asia. Atmos. Pollut. Res. 2020, 11, 1429–1440.

- Suzuki, T.; Hosoya, T.; Sasaki, J. Estimating wave height using the difference in percentile coastal sound level. Coast. Eng. 2015, 99, 73–81.

- Iglesias, V.; Balch, J.K.; Travis, W.R. U.S. fires became larger, more frequent, and more widespread in the 2000s. Sci. Adv. 2022, 8, eabc0020.

- Anjum, B.; Perros, H. Bandwidth estimation for video streaming under percentile delay, jitter, and packet loss rate constraints using traces. Comp. Commun. 2015, 57, 73–84.

- Use Percentiles to Analyze Application Performance. Available online: https://www.dynatrace.com/support/help/how-to-use-dynatrace/problem-detection-and-analysis/problem-analysis/percentiles-for-analyzing-performance (accessed on 23 July 2022).

- Application Performance and Percentiles. Available online: https://www.atakama-technologies.com/application-performance-and-percentiles/ (accessed on 23 July 2022).

- Application Performance and Percentiles. 2018. Available online: https://www.adfpm.com/adf-performance-monitor-monitoring-with-percentiles/ (accessed on 23 July 2022).

- Measures Of Central Tendency For Wage Data. Available online: https://www.ssa.gov/oact/cola/central.html (accessed on 28 July 2022).

- Tang, K.; Li, X.; Suganthan, P.N.; Yang, Z.; Weise, T. Benchmark functions for the cec’2010 special session and competition on large-scale global optimization. In Nature Inspired Computation and Applications Laboratory; Technical report; University of Science and Technology of China: Anhui, China, 2009.

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Global Optim. 2007, 39, 459–471.

- Salcedo-Sanz, S.; Del Ser, J.; Landa-Torres, I.; Gil-López, S.; Portilla-Figueras, J. The coral reefs optimization algorithm: A novel metaheuristic for efficiently solving optimization problems. Sci. World J. 2014, 2014.

- Zhang, J.; Sanderson, A.C. JADE: Adaptive Differential Evolution With Optional External Archive. IEEE Trans. Evol. Comp. 2009, 13, 945–958.

- Brest, J.; Greiner, S.; Boskovic, B.; Mernik, M.; Zumer, V. Self-Adapting Control Parameters in Differential Evolution: A Comparative Study on Numerical Benchmark Problems. IEEE Trans. Evol. Comp. 2006, 10, 646–657.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Brest, J.; Maučec, M.S. Self-adaptive differential evolution algorithm using population size reduction and three strategies. Soft Comp. 2010, 15, 2157–2174.

- EARS—Evolutionary Algorithms Rating System (Github). 2016. Available online: https://github.com/UM-LPM/EARS (accessed on 20 October 2022).

- Fathollahi-Fard, A.M.; Hajiaghaei-Keshteli, M.; Tavakkoli-Moghaddam, R. The Social Engineering Optimizer (SEO). Eng. Appl. Artif. Intell. 2018, 72, 267–293.

- Kudelić, R.; Ivković, N. Ant inspired Monte Carlo algorithm for minimum feedback arc set. Exp. Syst. Appl. 2019, 122, 108–117.

- Soto-Mendoza, V.; García-Calvillo, I.; Ruiz-y Ruiz, E.; Pérez-Terrazas, J. A Hybrid Grasshopper Optimization Algorithm Applied to the Open Vehicle Routing Problem. Algorithms 2020, 13, 96.

- Fathollahi-Fard, A.M.; Hajiaghaei-Keshteli, M.; Tavakkoli-Moghaddam, R. Red deer algorithm (RDA): A new nature-inspired meta-heuristic. Soft Comput. 2020, 24, 14637–14665.

- Zainel, Q.M.; Darwish, S.M.; Khorsheed, M.B. Employing Quantum Fruit Fly Optimization Algorithm for Solving Three-Dimensional Chaotic Equations. Mathematics 2022, 10, 4147.

- Liao, B.; Huang, Z.; Cao, X.; Li, J. Adopting Nonlinear Activated Beetle Antennae Search Algorithm for Fraud Detection of Public Trading Companies: A Computational Finance Approach. Mathematics 2022, 10, 2160.

- Matsumoto, M.; Nishimura, T. Mersenne Twister: A 623-dimensionally Equidistributed Uniform Pseudo-random Number Generator. ACM Trans. Modeling Comput. Simul. 1998, 8, 3–30.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).