Solving a Class of High-Order Elliptic PDEs Using Deep Neural Networks Based on Its Coupled Scheme

Abstract

1. Introduction

- Based on the coupled scheme of the fourth-order biharmonic Equation (1), we construct a CDNN architecture for solving (1) by means of the Deep Ritz method for variational problems; this architecture is composed of two independent DNNs. Compared with existing DNN methods, the CDNN reduces the complexity of the algorithm, saves computational resources, and makes the neural networks easier to train, while still producing accurate solutions.

- Motivated by the spectral bias (frequency preference) of DNNs, we introduce a Fourier mapping with sine and cosine as the activation function of the first layer of the DNN model; this mitigates the pathology of spectral bias. From the viewpoint of function approximation, the DNN model with Fourier mapping mimics a Fourier expansion: the first layer can be regarded as a set of Fourier basis functions, and the output of the DNN is a (nonlinear) combination of those basis functions.

- By comparing against DRM models with different activation functions that solve the original form of the biharmonic equation, we show that our CDNN model performs better when solving (1) in spaces of various dimensions.
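The Fourier-mapping idea in the second contribution can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the exact form of the mapping (Equation (16)) is not reproduced in this section, so the code assumes one common form, in which the first layer applies cosine and sine to a scaled linear transform of the input. The function names, the scale values, and the weight initialization are all hypothetical.

```python
import numpy as np

def fourier_first_layer(x, W, scales):
    """Assumed Fourier mapping: cos and sin of a scaled linear transform.

    x: (n, d) inputs, W: (d, m) weights, scales: (m,) frequency factors.
    Returns (n, 2m) features, which act like Fourier basis functions.
    """
    z = (x @ W) * scales
    return np.concatenate([np.cos(z), np.sin(z)], axis=1)

def init_params(dim, n_freq, widths, seed=0):
    """Hypothetical random initialization for a small fully connected net."""
    rng = np.random.default_rng(seed)
    sizes = [2 * n_freq, *widths]
    hidden = [
        (rng.normal(size=(a, b)) / np.sqrt(a), np.zeros(b))
        for a, b in zip(sizes[:-1], sizes[1:])
    ]
    out = (rng.normal(size=(sizes[-1], 1)) / np.sqrt(sizes[-1]), np.zeros(1))
    return {"W0": rng.normal(size=(dim, n_freq)), "hidden": hidden, "out": out}

def fourier_dnn(x, params, scales):
    """Fourier first layer, tanh hidden layers, linear output layer."""
    h = fourier_first_layer(x, params["W0"], scales)
    for W, b in params["hidden"]:
        h = np.tanh(h @ W + b)
    W_out, b_out = params["out"]
    return h @ W_out + b_out

# Illustrative inputs; the scale values below are placeholders, not the paper's.
x = np.random.default_rng(1).uniform(-1.0, 1.0, size=(5, 2))
scales = np.array([1.0, 2.0, 4.0, 8.0])
params = init_params(dim=2, n_freq=scales.size, widths=[16, 16])
features = fourier_first_layer(x, params["W0"], scales)
u = fourier_dnn(x, params, scales)
print(features.shape, u.shape)  # (5, 8) (5, 1)
```

The hidden tanh layers then combine the sine and cosine features nonlinearly, which is the sense in which the network output resembles a Fourier expansion.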

2. Deep Neural Network and ResNet Architecture

3. Unified coupled DNNs Architecture to Biharmonic Equation

4. Numerical Experiments

4.1. Training Setup

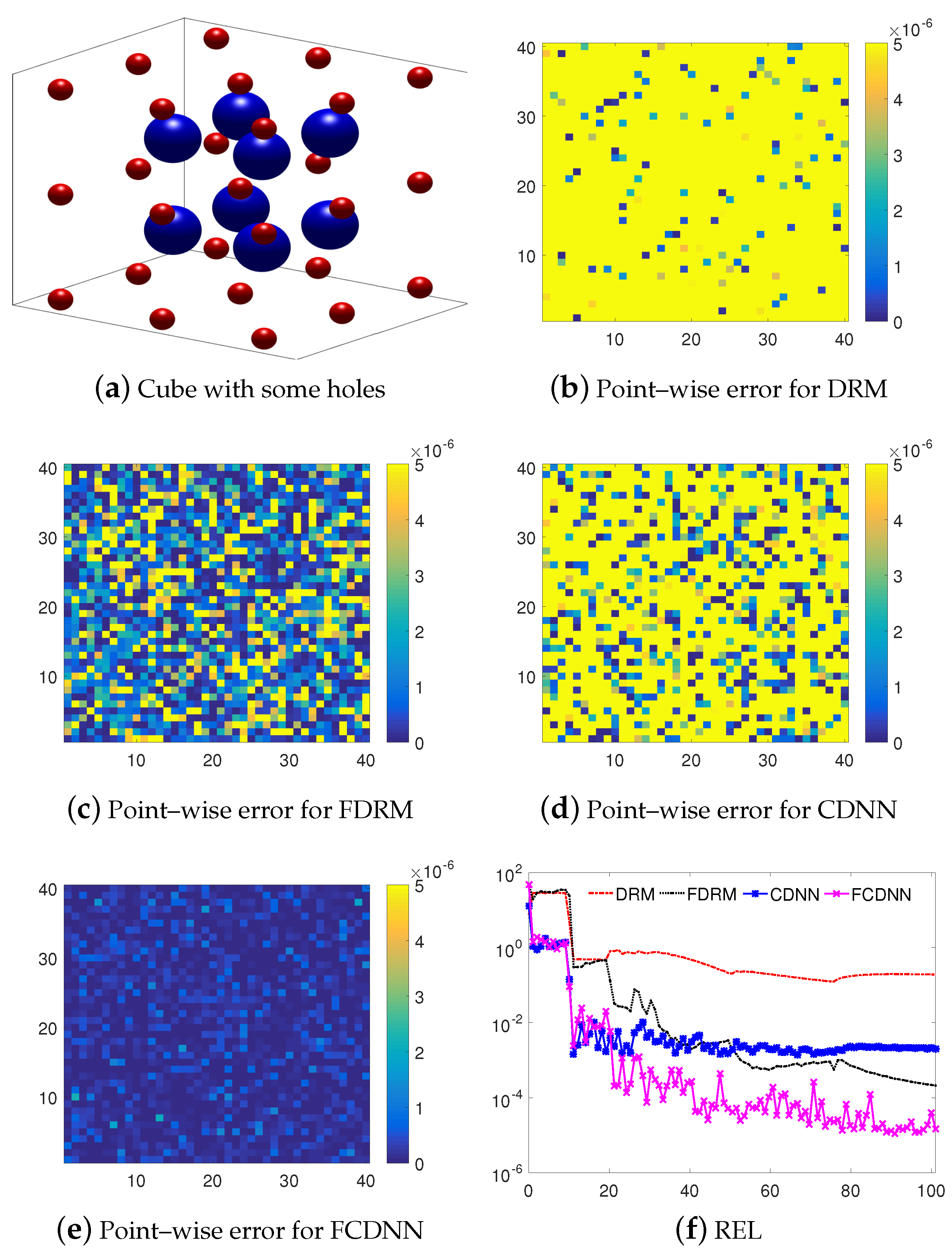

- DRM: A standard DNN model with tanh as the activation function for all hidden layers and a linear output layer.

- FDRM: A standard DNN model with Fourier mapping as the activation function for its first hidden layer, tanh for the remaining hidden layers, and a linear output layer. The vector as in (16) is set as , and it is repeated when the length of is less than the number of neural units in the first hidden layer.

- CDNN: A coupled DNN model with tanh as the activation function for all hidden layers and a linear output layer.

- FCDNN: A coupled DNN model with Fourier mapping as the activation function for its first hidden layer, tanh for the remaining hidden layers, and a linear output layer. The vector as in (16) is set as , and it is repeated when the length of is less than the number of neural units in the first hidden layer.
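The repetition rule for the vector in the FDRM and FCDNN setups can be sketched as follows. The actual vector values are not recoverable from this section, so the example uses placeholder values, and the helper name `repeat_to_width` is hypothetical. Truncating a vector that is longer than the layer width is an assumption of this sketch; the text above only covers the shorter case.

```python
import numpy as np

def repeat_to_width(scales, n_units):
    """Cyclically repeat `scales` until it has exactly `n_units` entries.

    Assumption: a vector longer than `n_units` is truncated.
    """
    scales = np.asarray(scales, dtype=float)
    if scales.size == 0:
        raise ValueError("scales must contain at least one value")
    reps = -(-n_units // scales.size)  # ceiling division
    return np.tile(scales, reps)[:n_units]

# Placeholder vector of length 3 stretched to a layer with 8 units.
stretched = repeat_to_width([1.0, 2.0, 3.0], 8)
print(stretched)  # [1. 2. 3. 1. 2. 3. 1. 2.]
```

With this rule every unit in the first hidden layer receives a factor, regardless of how short the supplied vector is.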

4.2. Numerical Examples

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | DRM | FDRM | CDNN | FCDNN |
|---|---|---|---|---|
| REL | | | | |
| Time | 1.05 h | 1.37 h | 0.42 h | 0.55 h |

| | DRM | FDRM | CDNN | FCDNN |
|---|---|---|---|---|
| REL | | | | |
| Time | 1.10 h | 1.26 h | 0.44 h | 0.55 h |

| | DRM | FDRM | CDNN | FCDNN |
|---|---|---|---|---|
| REL | 0.192 | | | |
| Time | 2.97 h | 4.16 h | 0.50 h | 0.54 h |

| | CDNN | FCDNN |
|---|---|---|
| REL | 0.0793 | 0.00042 |
| Time | 3.57 h | 4.03 h |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, X.; Wu, J.; Zhang, L.; Tai, X. Solving a Class of High-Order Elliptic PDEs Using Deep Neural Networks Based on Its Coupled Scheme. Mathematics 2022, 10, 4186. https://doi.org/10.3390/math10224186
