BreaST-Net: Multi-Class Classification of Breast Cancer from Histopathological Images Using Ensemble of Swin Transformers

Abstract

1. Introduction

- i. We investigated how well existing SwinT models perform both binary (benign vs. malignant) and eight-class (four benign and four malignant subtypes) classification, leaving their architectures unchanged to enable effective transfer learning.

- ii. We further investigated ensemble learning. The ensemble of the four SwinT models (Swin-T, Swin-S, Swin-B, and Swin-L), named BreaST-Net, generally performed better than the individual models on both the binary and the multi-class classification tasks.

- iii. For multi-class classification, model ensembling was implemented both on the dataset as a whole and on subsets stratified by the zoom factor at which the images were acquired (40×, 100×, 200×, and 400×).

- iv. Finally, five-fold cross-validation and testing were carried out for all individual models as well as for the ensemble models.
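
Contribution iii requires splitting the images by magnification. As a minimal sketch, assuming the public BreaKHis file-naming convention `SOB_<B|M>_<subtype>-<year>-<slide id>-<zoom>-<index>.png` (an assumption here, since the paper's data-loading code is not shown), the zoom factor can be read from each file name and the images grouped per zoom factor. The helpers `parse_name` and `stratify_by_zoom` are hypothetical.

```python
import re

# Assumed BreaKHis-style name: SOB_<B|M>_<subtype>-<year>-<slide id>-<zoom>-<index>.png
NAME_RE = re.compile(
    r"SOB_(?P<group>[BM])_(?P<subtype>[A-Z]+)-\d+-[A-Za-z0-9]+-(?P<zoom>40|100|200|400)-\d+\.png$"
)


def parse_name(name):
    """Return (group 'B' or 'M', subtype code, zoom factor) parsed from a file name."""
    match = NAME_RE.search(name)
    if match is None:
        raise ValueError(f"unrecognized file name: {name}")
    return match["group"], match["subtype"], int(match["zoom"])


def stratify_by_zoom(names):
    """Group file names into one list per zoom factor (40, 100, 200, 400)."""
    groups = {}
    for name in names:
        zoom = parse_name(name)[2]
        groups.setdefault(zoom, []).append(name)
    return groups
```

Each per-zoom list can then be split and used to train and ensemble the models separately, while the union of all lists corresponds to the "all zoom factors" setting.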

2. Related Work

2.1. Based on Mammography

2.2. Based on Ultrasonography

2.3. Based on Histopathology

3. Methods

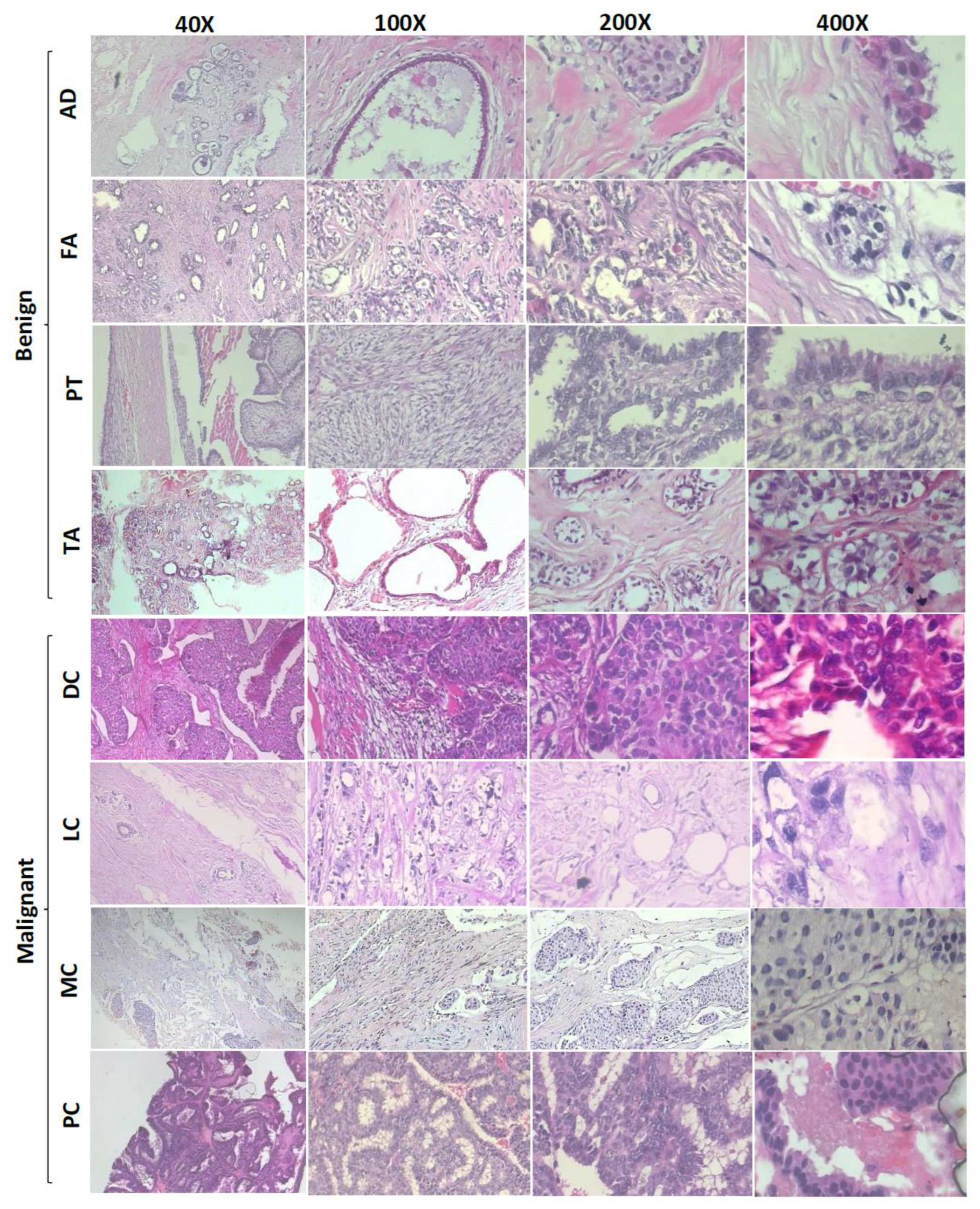

3.1. Dataset

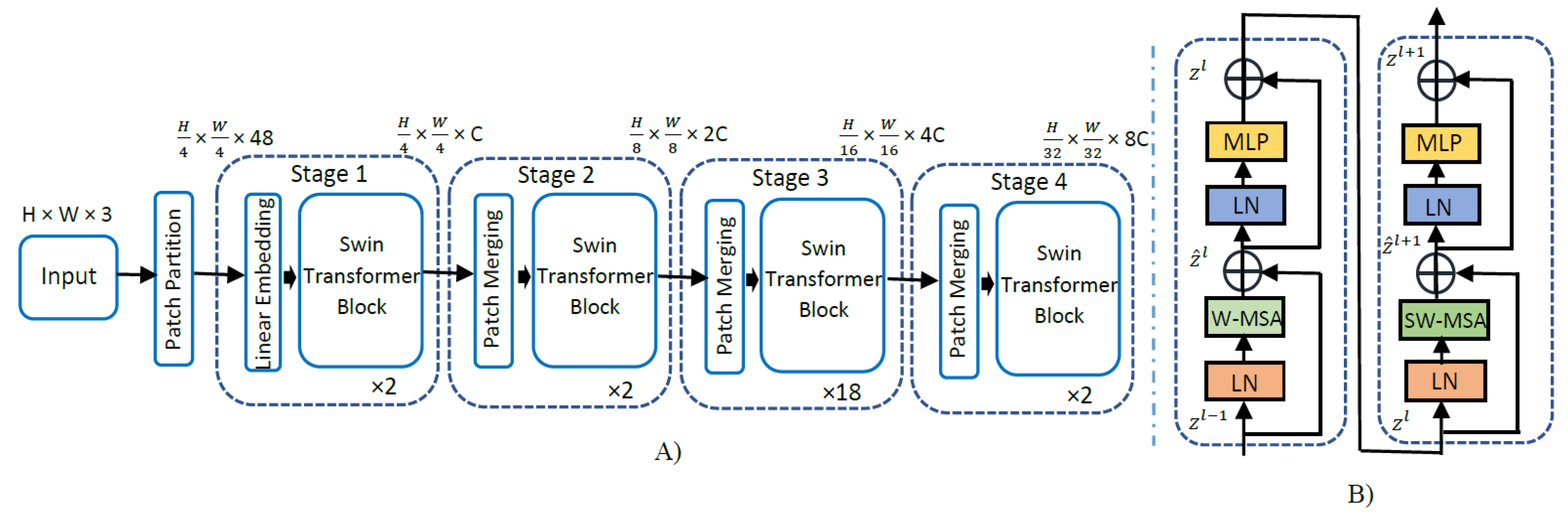

3.2. Swin Transformer
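
The Swin Transformer of Liu et al. [12] restricts self-attention to non-overlapping local windows and, in alternate blocks, cyclically shifts the feature map by half a window so that information can flow between neighboring windows. The NumPy sketch below shows only these two partitioning steps on an (H, W, C) feature map; attention, patch merging, and the attention mask applied after the shift are omitted, and the helper names are illustrative rather than taken from the paper or the official Swin code.

```python
import numpy as np


def window_partition(x, window_size):
    """Split an (H, W, C) map into windows of shape (window_size, window_size, C)."""
    H, W, C = x.shape
    if H % window_size or W % window_size:
        raise ValueError("H and W must be divisible by window_size")
    x = x.reshape(H // window_size, window_size, W // window_size, window_size, C)
    x = x.transpose(0, 2, 1, 3, 4)  # group the two window-grid axes together
    return x.reshape(-1, window_size, window_size, C)  # windows in row-major order


def shifted_window_partition(x, window_size):
    """Cyclically shift the map up and left by half a window, then partition it."""
    shift = window_size // 2
    shifted = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, window_size)
```

For an 8 × 8 map and a window size of 4, `window_partition` returns four 4 × 4 windows, and after the shift the first window starts at position (2, 2) of the original map, so it straddles what were four separate windows.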

3.3. Model Cross-Validation and Testing
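
Contribution iv and the comparison with previous studies indicate five-fold cross-validation together with a 70:30 split between cross-validation data and held-out test data. Assuming the 30% test set is held out first and the remaining 70% is divided into five folds, with class proportions preserved in every split (the stratification is an assumption), the indices could be generated as below. `stratified_holdout_and_folds` is a hypothetical helper that uses NumPy only.

```python
import numpy as np


def stratified_holdout_and_folds(labels, test_frac=0.3, n_folds=5, seed=0):
    """Hold out a stratified test set, then split the remainder into stratified folds.

    Returns (test_idx, folds), where folds is a list of (train_idx, val_idx) pairs.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    test_parts = []
    fold_parts = [[] for _ in range(n_folds)]
    for cls in np.unique(labels):
        # shuffle the indices of this class so every split is drawn at random
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = int(round(test_frac * len(idx)))
        test_parts.append(idx[:n_test])
        # split this class's remaining indices into n_folds near-equal chunks
        for k, chunk in enumerate(np.array_split(idx[n_test:], n_folds)):
            fold_parts[k].append(chunk)
    test_idx = np.sort(np.concatenate(test_parts))
    fold_idx = [np.concatenate(parts) for parts in fold_parts]
    folds = []
    for k in range(n_folds):
        train_idx = np.concatenate([fold_idx[j] for j in range(n_folds) if j != k])
        folds.append((np.sort(train_idx), np.sort(fold_idx[k])))
    return test_idx, folds


# toy labels: 70 benign (0) and 30 malignant (1) images
test_idx, folds = stratified_holdout_and_folds([0] * 70 + [1] * 30)
```

In each of the five rounds a model would be trained on `train_idx`, validated on `val_idx`, and finally evaluated on the same untouched `test_idx`.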

3.4. Performance Metrics
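
The result tables report accuracy, AUC, F1-score, balanced accuracy (BA), and the Matthews correlation coefficient (MCC), all scaled to percentages. The sketch below computes the four label-based metrics from a confusion matrix. It assumes a support-weighted F1-score and the multiclass generalization of MCC (Gorodkin's R_K), because the averaging used in the paper is not stated in this text; AUC requires predicted probabilities rather than labels and is left out. `classification_metrics` is a hypothetical helper.

```python
import numpy as np


def classification_metrics(y_true, y_pred, n_classes):
    """Accuracy, balanced accuracy, weighted F1 and MCC from integer class labels."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    # confusion matrix: rows = true class, columns = predicted class
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    t = cm.sum(axis=1).astype(float)  # true samples per class
    p = cm.sum(axis=0).astype(float)  # predictions per class
    tp = np.diag(cm).astype(float)    # correct predictions per class
    s = float(cm.sum())               # total number of samples

    accuracy = tp.sum() / s
    recall = np.divide(tp, t, out=np.zeros_like(tp), where=t > 0)
    precision = np.divide(tp, p, out=np.zeros_like(tp), where=p > 0)
    # balanced accuracy: mean recall over the classes present in y_true
    balanced_accuracy = recall[t > 0].mean()
    # per-class F1, averaged with weights equal to each class's support
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    weighted_f1 = (f1 * t).sum() / s
    # multiclass MCC (Gorodkin's R_K); equals the usual MCC for two classes
    num = tp.sum() * s - (p * t).sum()
    den = np.sqrt((s * s - (p * p).sum()) * (s * s - (t * t).sum()))
    mcc = num / den if den > 0 else 0.0
    return {"accuracy": accuracy, "balanced_accuracy": balanced_accuracy,
            "weighted_f1": weighted_f1, "mcc": mcc}


m = classification_metrics([0, 0, 1, 1], [0, 1, 1, 1], n_classes=2)
percent = {k: round(100 * v, 1) for k, v in m.items()}  # same scale as the tables
```

Balanced accuracy and MCC are less sensitive than plain accuracy to the dominance of malignant (especially ductal carcinoma) images in the dataset.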

3.5. Model Ensembling
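
The ensembling rule itself is not described in the text that survives here. One common choice, assumed purely for illustration, is soft voting: average the softmax outputs of Swin-T, Swin-S, Swin-B, and Swin-L for each image and predict the class with the highest mean probability. `soft_vote` is a hypothetical helper; passing `weights` gives a weighted instead of a plain average.

```python
import numpy as np


def soft_vote(prob_list, weights=None):
    """Combine per-model class probabilities by (weighted) averaging.

    prob_list: list of arrays of shape (n_images, n_classes), one per model.
    Returns the averaged probabilities and the predicted class index per image.
    """
    probs = np.stack([np.asarray(p, dtype=float) for p in prob_list])  # (n_models, n_images, n_classes)
    if weights is None:
        weights = np.ones(len(prob_list))
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()           # normalize so rows still sum to 1
    avg = np.tensordot(weights, probs, axes=1)  # weighted mean over the model axis
    return avg, avg.argmax(axis=1)


model_a = np.array([[0.6, 0.4], [0.2, 0.8]])
model_b = np.array([[0.3, 0.7], [0.4, 0.6]])
avg, pred = soft_vote([model_a, model_b])
```

In this toy case the first image is classified differently by the two models, and the averaged probabilities (0.45 vs. 0.55) settle it in favor of class 1.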

4. Results

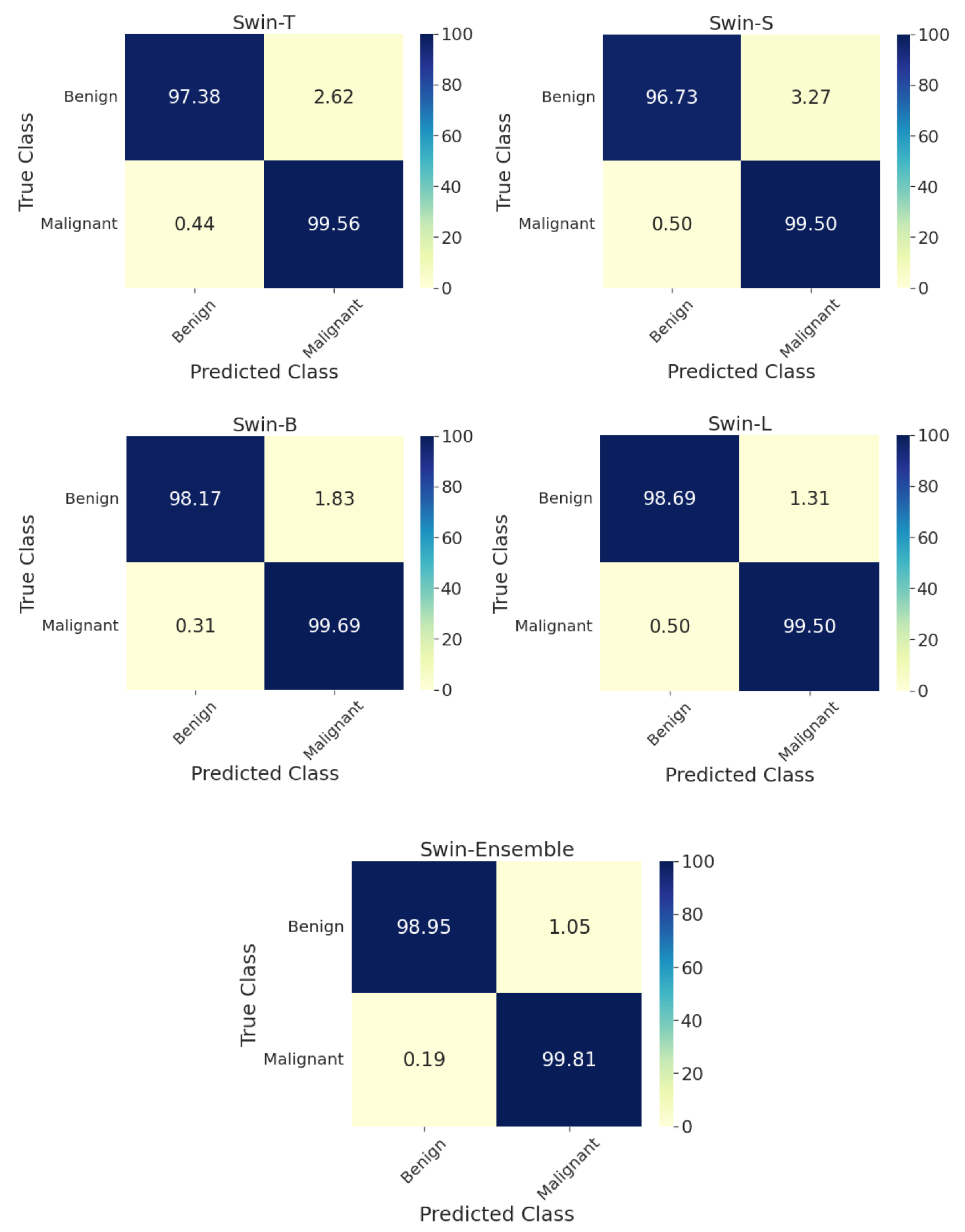

4.1. Two-Class Classification

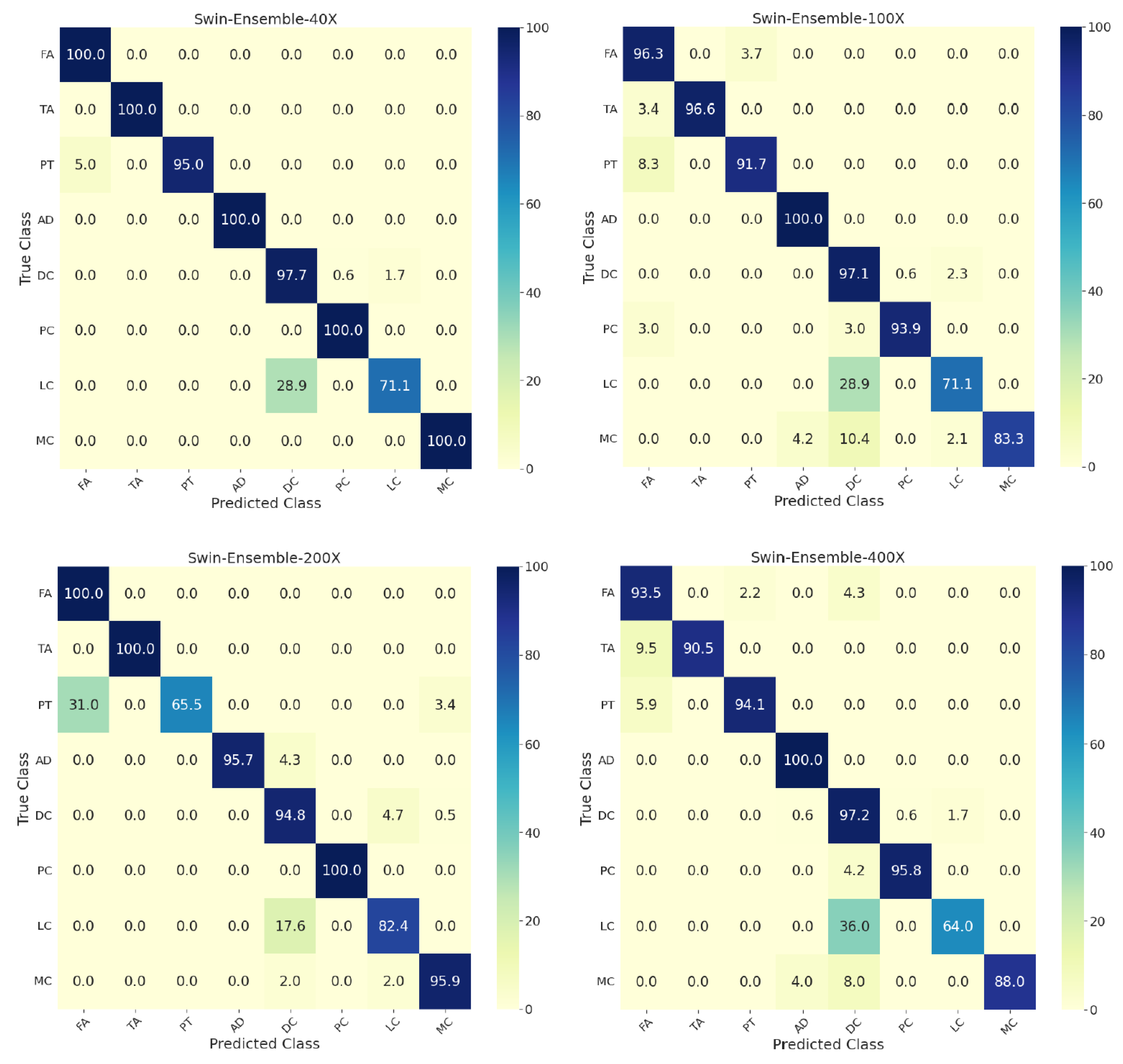

4.2. Eight-Class Classification

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Azamjah, N.; Soltan-Zadeh, Y.; Zayeri, F. Global Trend of Breast Cancer Mortality Rate: A 25-Year Study. Asian Pac. J. Cancer Prev. 2019, 20, 2015–2020.

- [2] Rosenberg, P.S.; Barker, K.A.; Anderson, W.F. Estrogen Receptor Status and the Future Burden of Invasive and In Situ Breast Cancers in the United States. JNCI J. Natl. Cancer Inst. 2015, 107, 159.

- [3] Pathak, P.; Jalal, A.S.; Rai, R. Breast Cancer Image Classification: A Review. Curr. Med. Imaging Former. Curr. Med. Imaging Rev. 2020, 17, 720–740.

- [4] Iranmakani, S.; Mortezazadeh, T.; Sajadian, F.; Ghaziani, M.F.; Ghafari, A.; Khezerloo, D.; Musa, A.E. A Review of Various Modalities in Breast Imaging: Technical Aspects and Clinical Outcomes. Egypt. J. Radiol. Nucl. Med. 2020, 51, 57.

- [5] Ying, X.; Lin, Y.; Xia, X.; Hu, B.; Zhu, Z.; He, P. A Comparison of Mammography and Ultrasound in Women with Breast Disease: A Receiver Operating Characteristic Analysis. Breast J. 2012, 18, 130–138.

- [6] Pereira, R.d.O.; da Luz, L.A.; Chagas, D.C.; Amorim, J.R.; Nery-Júnior, E.d.J.; Alves, A.C.B.R.; de Abreu-Neto, F.T.; Oliveira, M.d.C.B.; Silva, D.R.C.; Soares-Júnior, J.M.; et al. Evaluation of the Accuracy of Mammography, Ultrasound and Magnetic Resonance Imaging in Suspect Breast Lesions. Clinics 2020, 75, 1–4.

- [7] Alshafeiy, T.I.; Matich, A.; Rochman, C.M.; Harvey, J.A. Advantages and Challenges of Using Breast Biopsy Markers. J. Breast Imaging 2022, 4, 78–95.

- [8] Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A Guide to Deep Learning in Healthcare. Nat. Med. 2019, 25, 24–29.

- [9] Balkenende, L.; Teuwen, J.; Mann, R.M. Application of Deep Learning in Breast Cancer Imaging. Semin. Nucl. Med. 2022, 52, 584–596.

- [10] Bai, Y.; Mei, J.; Yuille, A.; Xie, C. Are Transformers More Robust than CNNs? Adv. Neural Inf. Process. Syst. 2021, 34, 26831–26843.

- [11] Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020.

- [12] Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. arXiv 2021.

- [13] Ribli, D.; Horváth, A.; Unger, Z.; Pollner, P.; Csabai, I. Detecting and Classifying Lesions in Mammograms with Deep Learning. Sci. Rep. 2018, 8, 4165.

- [14] Bektas, B.; Emre, I.E.; Kartal, E.; Gulsecen, S. Classification of Mammography Images by Machine Learning Techniques. In Proceedings of the 2018 3rd International Conference on Computer Science and Engineering (UBMK), Sarajevo, Bosnia and Herzegovina, 20–23 September 2018; pp. 580–585.

- [15] Alshammari, M.M.; Almuhanna, A.; Alhiyafi, J. Mammography Image-Based Diagnosis of Breast Cancer Using Machine Learning: A Pilot Study. Sensors 2021, 22, 203.

- [16] Gardezi, S.J.S.; Elazab, A.; Lei, B.; Wang, T. Breast Cancer Detection and Diagnosis Using Mammographic Data: Systematic Review. J. Med. Internet Res. 2019, 21, e14464.

- [17] Devolli-Disha, E.; Manxhuka-Kërliu, S.; Ymeri, H.; Kutllovci, A. Comparative Accuracy of Mammography and Ultrasound in Women with Breast Symptoms According to Age and Breast Density. Bosn. J. Basic Med. Sci. 2009, 9, 131.

- [18] Tan, K.P.; Mohamad Azlan, Z.; Choo, M.Y.; Rumaisa, M.P.; Siti’Aisyah Murni, M.R.; Radhika, S.; Nurismah, M.I.; Norlia, A.; Zulfiqar, M.A. The Comparative Accuracy of Ultrasound and Mammography in the Detection of Breast Cancer. Med. J. Malaysia 2014, 69, 79–85.

- [19] Sadad, T.; Hussain, A.; Munir, A.; Habib, M.; Khan, S.A.; Hussain, S.; Yang, S.; Alawairdhi, M. Identification of Breast Malignancy by Marker-Controlled Watershed Transformation and Hybrid Feature Set for Healthcare. Appl. Sci. 2020, 10, 1900.

- [20] Badawy, S.M.; Mohamed, A.E.N.A.; Hefnawy, A.A.; Zidan, H.E.; GadAllah, M.T.; El-Banby, G.M. Automatic Semantic Segmentation of Breast Tumors in Ultrasound Images Based on Combining Fuzzy Logic and Deep Learning—A Feasibility Study. PLoS ONE 2021, 16, e0251899.

- [21] Byra, M. Breast Mass Classification with Transfer Learning Based on Scaling of Deep Representations. Biomed. Signal Process. Control 2021, 69, 102828.

- [22] Jabeen, K.; Khan, M.A.; Alhaisoni, M.; Tariq, U.; Zhang, Y.D.; Hamza, A.; Mickus, A.; Damaševičius, R. Breast Cancer Classification from Ultrasound Images Using Probability-Based Optimal Deep Learning Feature Fusion. Sensors 2022, 22, 807.

- [23] Veta, M.; Pluim, J.P.W.; Van Diest, P.J.; Viergever, M.A. Breast Cancer Histopathology Image Analysis: A Review. IEEE Trans. Biomed. Eng. 2014, 61, 1400–1411.

- [24] Hameed, Z.; Zahia, S.; Garcia-Zapirain, B.; Aguirre, J.J.; Vanegas, A.M. Breast Cancer Histopathology Image Classification Using an Ensemble of Deep Learning Models. Sensors 2020, 20, 4373.

- [25] Gupta, V.; Vasudev, M.; Doegar, A.; Sambyal, N. Breast Cancer Detection from Histopathology Images Using Modified Residual Neural Networks. Biocybern. Biomed. Eng. 2021, 41, 1272–1287.

- [26] Kaplun, D.; Krasichkov, A.; Chetyrbok, P.; Oleinikov, N.; Garg, A.; Pannu, H.S. Cancer Cell Profiling Using Image Moments and Neural Networks with Model Agnostic Explainability: A Case Study of Breast Cancer Histopathological (BreakHis) Database. Mathematics 2021, 9, 2616.

- [27] Kausar, T.; Kausar, A.; Ashraf, M.A.; Siddique, M.F.; Wang, M.; Sajid, M.; Siddique, M.Z.; Haq, A.U.; Riaz, I. SA-GAN: Stain Acclimation Generative Adversarial Network for Histopathology Image Analysis. Appl. Sci. 2021, 12, 288.

- [28] Umer, M.J.; Sharif, M.; Kadry, S.; Alharbi, A. Multi-Class Classification of Breast Cancer Using 6B-Net with Deep Feature Fusion and Selection Method. J. Pers. Med. 2022, 12, 683.

- [29] Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A Dataset for Breast Cancer Histopathological Image Classification. IEEE Trans. Biomed. Eng. 2016, 63, 1455–1462.

- [30] Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014.

- [31] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [32] Chicco, D.; Jurman, G. The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation. BMC Genom. 2020, 21, 6.

- [33] Chicco, D.; Tötsch, N.; Jurman, G. The Matthews Correlation Coefficient (MCC) Is More Reliable than Balanced Accuracy, Bookmaker Informedness, and Markedness in Two-Class Confusion Matrix Evaluation. BioData Min. 2021, 14, 13.

- [34] Han, Z.; Wei, B.; Zheng, Y.; Yin, Y.; Li, K.; Li, S. Breast Cancer Multi-Classification from Histopathological Images with Structured Deep Learning Model. Sci. Rep. 2017, 7, 4172.

- [35] Bardou, D.; Zhang, K.; Ahmad, S.M. Classification of Breast Cancer Based on Histology Images Using Convolutional Neural Networks. IEEE Access 2018, 6, 24680–24693.

- [36] Alom, M.Z.; Yakopcic, C.; Nasrin, M.S.; Taha, T.M.; Asari, V.K. Breast Cancer Classification from Histopathological Images with Inception Recurrent Residual Convolutional Neural Network. J. Digit. Imaging 2019, 32, 605–617.

- [37] Jiang, Y.; Chen, L.; Zhang, H.; Xiao, X. Breast Cancer Histopathological Image Classification Using Convolutional Neural Networks with Small SE-ResNet Module. PLoS ONE 2019, 14, e0214587.

- [38] Yan, R.; Ren, F.; Wang, Z.; Wang, L.; Zhang, T.; Liu, Y.; Rao, X.; Zheng, C.; Zhang, F. Breast Cancer Histopathological Image Classification Using a Hybrid Deep Neural Network. Methods 2020, 173, 52–60.

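| Category | Tumor Type | 40× | 100× | 200× | 400× | All |
|---|---|---|---|---|---|---|
| Benign | Fibroadenoma (FA) | 253 | 260 | 264 | 237 | 1014 |
| Benign | Tubular adenoma (TA) | 149 | 150 | 140 | 130 | 569 |
| Benign | Phyllodes tumor (PT) | 109 | 121 | 108 | 115 | 453 |
| Benign | Adenosis (AD) | 114 | 113 | 111 | 106 | 444 |
| Malignant | Ductal carcinoma (DC) | 864 | 903 | 896 | 788 | 3451 |
| Malignant | Papillary carcinoma (PC) | 145 | 142 | 135 | 138 | 560 |
| Malignant | Lobular carcinoma (LC) | 156 | 170 | 163 | 137 | 626 |
| Malignant | Mucinous carcinoma (MC) | 205 | 222 | 196 | 169 | 792 |
| | Total (N) | 1995 | 2081 | 2013 | 1820 | 7909 |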
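
For the binary task the eight subtypes collapse into two classes: FA, TA, PT, and AD are benign, and DC, PC, LC, and MC are malignant. Summing the "All" column of the table above under that mapping makes the class imbalance explicit, which helps explain why balanced accuracy and MCC are reported alongside accuracy. The dictionary and the helper `binary_label` are illustrative.

```python
# Images per subtype over all zoom factors ("All" column of the table above).
ALL_COUNTS = {"FA": 1014, "TA": 569, "PT": 453, "AD": 444,
              "DC": 3451, "PC": 560, "LC": 626, "MC": 792}
BENIGN_SUBTYPES = {"FA", "TA", "PT", "AD"}


def binary_label(subtype):
    """Map an eight-class subtype code to 0 (benign) or 1 (malignant)."""
    if subtype not in ALL_COUNTS:
        raise ValueError(f"unknown subtype: {subtype}")
    return 0 if subtype in BENIGN_SUBTYPES else 1


benign = sum(n for s, n in ALL_COUNTS.items() if binary_label(s) == 0)
malignant = sum(n for s, n in ALL_COUNTS.items() if binary_label(s) == 1)
print(benign, malignant, round(malignant / (benign + malignant), 3))  # prints: 2480 5429 0.686
```

About 69% of the images are malignant, so a classifier that always predicted "malignant" would already reach roughly 69% accuracy while its balanced accuracy would be only 50%.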

| Model | Accuracy | AUC | F1-Score | BA | MCC |
|---|---|---|---|---|---|
| Swin-T | 98.9 | 98.5 | 98.9 | 98.5 | 97.4 |
| Swin-S | 98.6 | 98.1 | 98.6 | 98.1 | 96.8 |
| Swin-B | 99.1 | 98.9 | 99.2 | 98.9 | 98.2 |
| Swin-L | 99.2 | 99.1 | 99.2 | 99.1 | 98.3 |
| Ensemble | 99.6 | 99.4 | 99.5 | 99.4 | 98.9 |

| Zoom Factor | Model | Accuracy | AUC | F1-Score | BA | MCC |
|---|---|---|---|---|---|---|
| 40× | Swin-T | 94.7 | 99.7 | 94.7 | 94.4 | 93.1 |
| | Swin-S | 94.2 | 99.4 | 94.2 | 93.8 | 92.4 |
| | Swin-B | 94.2 | 99.6 | 94.0 | 93.7 | 92.4 |
| | Swin-L | 95.5 | 99.7 | 95.4 | 94.9 | 94.1 |
| | Ensemble | 96.0 | 99.7 | 95.8 | 95.5 | 94.7 |
| 100× | Swin-T | 89.7 | 99.1 | 89.5 | 87.2 | 86.8 |
| | Swin-S | 90.9 | 99.1 | 90.8 | 90.6 | 88.3 |
| | Swin-B | 91.3 | 99.2 | 91.1 | 89.7 | 88.9 |
| | Swin-L | 92.1 | 99.1 | 92.0 | 91.1 | 89.8 |
| | Ensemble | 92.6 | 99.5 | 92.4 | 91.2 | 90.4 |
| 200× | Swin-T | 90.6 | 99.4 | 90.7 | 88.7 | 87.3 |
| | Swin-S | 91.6 | 99.3 | 91.6 | 89.8 | 88.5 |
| | Swin-B | 92.3 | 99.5 | 92.6 | 91.0 | 89.6 |
| | Swin-L | 92.3 | 99.4 | 92.4 | 90.4 | 89.6 |
| | Ensemble | 93.5 | 99.5 | 93.6 | 91.8 | 91.3 |
| 400× | Swin-T | 90.4 | 98.9 | 90.3 | 87.8 | 86.5 |
| | Swin-S | 91.2 | 99.0 | 90.9 | 87.2 | 87.6 |
| | Swin-B | 91.5 | 99.1 | 91.4 | 88.8 | 88.0 |
| | Swin-L | 91.5 | 99.1 | 91.0 | 87.6 | 87.9 |
| | Ensemble | 93.4 | 99.3 | 93.2 | 90.4 | 90.7 |
| All zoom factors | Swin-T | 91.9 | 99.4 | 91.8 | 89.7 | 89.3 |
| | Swin-S | 91.6 | 99.4 | 91.6 | 89.4 | 88.9 |
| | Swin-B | 91.6 | 99.4 | 91.5 | 89.1 | 88.9 |
| | Swin-L | 92.8 | 99.4 | 92.8 | 92.2 | 90.5 |
| | Ensemble | 93.4 | 99.4 | 93.7 | 92.0 | 91.7 |

| Study | Dataset | Method | Cross-Validation:Testing Split | Performance (%) | Classification Type |
|---|---|---|---|---|---|
| Han Z et al. [34] | BreaKHis, N = 7909 | CSD-CNN | 74:26 | 8-class, 40×: Accuracy 89.4; 2-class, 40×: Accuracy 95.8 | 8-class; 2-class |
| Bardou D et al. [35] | BreaKHis, N = 7909 | CNN-SVM | 70:30 | 8-class, 40×: Accuracy 86.3, F1-score 83.7, BA 84.1; 2-class, 40×: Accuracy 94.6, F1-score 95.6, BA 95.7 | 8-class; 2-class |
| Alom MZ et al. [36] | BreaKHis, N = 7909 | IRR-CNN | 70:30 | 8-class, 40×: Accuracy 95.6, AUC 98.9; 2-class, 40×: Accuracy 97.2, AUC 98.8 | 8-class; 2-class |
| Jiang Y et al. [37] | BreaKHis, N = 7909 | Small SE-ResNet | 70:30 | 8-class, 40×: Accuracy 93.7, AUC 99.7, F1-score 95.4, BA 95.4, MCC 93.2; 2-class, 40×: Accuracy 98.9, AUC 99.9, F1-score 98.8, BA 98.8, MCC 97.7 | 8-class; 2-class |
| Hameed Z et al. [24] | WSI, N = 845 | Ensemble of VGG16 and VGG19 | 80:20 | 2-class, all data: Accuracy 95.3, F1-score 95.3 | 2-class |
| Gupta V et al. [25] | BreaKHis, N = 7909 | Modified residual networks | 70:30 | 2-class, all data: Accuracy 99.5, Recall 99.4, Precision 99.2 | 2-class |
| Kaplun D et al. [26] | BreaKHis, N = 7909 | Artificial neural network | 85:15 | 2-class, 40×: Accuracy 100 | 2-class |
| Umer MJ et al. [28] | BreaKHis, N = 7909; Dataset B [38], N = 3771 | 6B-Net | 70:30 | 8-class (BreaKHis), all data: Accuracy 90.1; 4-class (Dataset B), all data: Accuracy 94.2 | 8-class; 4-class |
| Present study | BreaKHis, N = 7909 | Ensemble of SwinTs | 70:30 | 8-class, all data: Accuracy 93.4, AUC 99.4, F1-score 93.7, BA 92.0, MCC 91.7; 8-class, 40×: Accuracy 96.0, AUC 99.7, F1-score 95.8, BA 95.5, MCC 94.7; 2-class, all data: Accuracy 99.6, AUC 99.4, F1-score 99.5, BA 99.4, MCC 98.9 | 8-class; 2-class |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tummala, S.; Kim, J.; Kadry, S. BreaST-Net: Multi-Class Classification of Breast Cancer from Histopathological Images Using Ensemble of Swin Transformers. Mathematics 2022, 10, 4109. https://doi.org/10.3390/math10214109
