Abstract

Job Shop Scheduling Problem (JSSP) is a well-known NP-hard combinatorial optimization problem. In recent years, many scholars have proposed various metaheuristic algorithms for JSSP, and these algorithms play an important role in solving small-scale instances. However, as the problem size increases, they usually take too much time to converge. In this paper, we propose a hybrid algorithm, namely EOSMA, which mixes the update strategy of Equilibrium Optimizer (EO) into Slime Mould Algorithm (SMA) and adds Centroid Opposition-based Computation (COBC) in some iterations. The hybridization of EO with SMA gives a better balance between exploration and exploitation. The addition of COBC strengthens both exploration and exploitation, increases the diversity of the population, improves the convergence speed and convergence accuracy, and helps avoid local optima. To solve discrete problems efficiently, a Sort-Order-Index (SOI)-based coding method is proposed. To solve JSSP more efficiently, a neighbor search strategy based on a two-point exchange is added to the iterative process of EOSMA to improve its exploitation capability. EOSMA is then used to solve 82 JSSP benchmark instances, and its performance is compared with that of EO, Marine Predators Algorithm (MPA), Aquila Optimizer (AO), Bald Eagle Search (BES), and SMA. The experimental results and statistical analysis show that the proposed EOSMA outperforms the competing algorithms.

Keywords:

slime mould algorithm; equilibrium optimizer; variable neighborhood search; job shop scheduling problem; metaheuristic algorithm

MSC:

68T20

1. Introduction

Job Shop Scheduling Problem (JSSP) has become a hot topic in the manufacturing industry; a reasonable JSSP solution can effectively help manufacturers improve productivity and reduce production costs. However, JSSP has been proved to be an NP-hard problem, which is among the most difficult problems to solve [1]. This means that exact solution methods cannot be guaranteed to find an optimal solution within a reasonable time, even for medium-sized JSSP instances [2]. Therefore, many researchers have turned their attention to metaheuristic algorithms. According to the algorithmic principle, metaheuristic algorithms may be divided into three categories: evolution-based, physics-based, and swarm-based [3]. The Genetic Algorithm (GA) [4] and Differential Evolution (DE) [5] are the two primary evolution-based algorithms, developed to simulate Darwinian biological evolution. The most common physics-based algorithms include Simulated Annealing (SA) [6], Gravitational Search Algorithm (GSA) [7], Multi-verse Optimizer (MVO) [8], Atom Search Optimization (ASO) [9], and Equilibrium Optimizer (EO) [10]; all are inspired by the principles of physics. Swarm-based algorithms mainly simulate the cooperative properties of natural biological communities. Typical algorithms include Particle Swarm Optimization (PSO) [11], Artificial Bee Colony (ABC) [12], Social Spider Optimization (SSO) [13], Grey Wolf Optimizer (GWO) [14], Whale Optimization Algorithm (WOA) [15], Seagull Optimization Algorithm (SOA) [16], Salp Swarm Algorithm (SSA) [17], Harris Hawks Optimization (HHO) [18], Teaching Learning-based Optimization (TLBO) [19], Aquila Optimizer (AO) [20], Bald Eagle Search (BES) [21], Slime Mould Algorithm (SMA) [22], Marine Predators Algorithm (MPA) [23], Chameleon Swarm Algorithm (CSA) [24], Adolescent Identity Search Algorithm (AISA) [25], etc.

In recent years, algorithms that have been used to solve JSSP include GA [26], Tabu Search Algorithm (TSA) [27], SA [28], PSO [29], Ant Colony Optimization (ACO) [30], ABC [31], TLBO [32], Bat Algorithm (BA) [33], Biogeography-based Optimization (BBO) [34], Harmony Search (HS) [35], WOA [36], HHO [37], etc. An increasing number of metaheuristic and hybrid algorithms have been developed and improved, providing new ideas and directions for solving the JSSP. However, to the authors’ knowledge, there are no published studies that apply EO or SMA to JSSP-related problems.

A novel bio-inspired optimization technique called Slime Mould Algorithm (SMA) was proposed by Li et al. in 2020 [22]. It is inspired by the oscillatory behavior of slime mould during foraging. Because it is easy to understand and implement, it has attracted the attention of many scholars and has been applied in various fields. For example, Wei et al. [38] proposed an improved SMA (ISMA) to solve the optimal reactive power dispatch problem in power systems. Abdel-Basset et al. [39] hybridized SMA with the WOA (HSMA-WOA) for X-ray image detection of COVID-19, evaluating HSMA-WOA on 12 chest X-ray images and comparing it with 5 algorithms. Liu et al. [40] proposed an SMA integrating the Nelder–Mead simplex strategy and chaotic mapping (CNMSMA) and applied it to the photovoltaic parameter extraction problem, testing it on three photovoltaic modules. Yu et al. [41] proposed an enhanced SMA (ESMA) based on an opposition-based learning strategy and an elite chaotic search strategy, which was used to predict the water demand of Nanchang city and tested on four models, showing a prediction accuracy of 97.705%. Hassan et al. [42] proposed an improved SMA (ISMA) combined with Sine Cosine Algorithm (SCA) and applied it to single and bi-objective economic and emission dispatch problems; tests on five systems showed that the proposed algorithm is more robust than other well-known algorithms. Zhao et al. [43] proposed an improved SMA (DASMA) based on a diffusion mechanism and an association strategy and applied it to Renyi’s entropy multilevel thresholding image segmentation based on a two-dimensional histogram with nonlocal means; the experimental results show that the proposed algorithm has good performance. Yu et al. [44] proposed an improved SMA (WQSMA), which employs a quantum rotation gate and a water cycle operator to improve the robustness of the basic SMA and to keep exploration and exploitation in balance. Rizk-Allah et al. [45] proposed a Chaos Opposition SMA (CO-SMA) to minimize the energy cost of wind turbines on high-altitude sites. Houssein et al. [46] proposed a hybrid algorithm called SMA-AGDE by mixing SMA with adaptive guided DE (AGDE), which integrates the exploitation capability of SMA with the exploration capability of AGDE; CEC2017 and three engineering design problems were used to validate the effectiveness of SMA-AGDE. Premkumar et al. [47] proposed a multi-objective SMA (MOSMA) to solve multi-objective engineering optimization problems.

Although SMA has been applied in many fields, researchers have found as the research progresses that it also has shortcomings, such as insufficient global search capability and a tendency to fall into local optima. In this paper, in order to broaden the application of SMA, we first hybridize the search strategy of EO with SMA (EOSMA), which can balance exploration and exploitation, increase the population diversity, improve the robustness, and enhance the generalization capability of the algorithm. Then, the Centroid Opposition-based Computation (COBC) [48] is introduced into the hybrid algorithm to strengthen its performance, help the search agents jump out of local optima, improve the probability of finding the global optimal solution, and accelerate the convergence rate. Because JSSP is a discrete problem with a large search space, a local search operator based on the Two-point Exchange Neighborhood (TEN) [31] is incorporated into EOSMA to solve JSSP quickly and efficiently. To handle the discrete problem efficiently, this research also designs a Sort-Order-Index (SOI)-based coding method.

The main contributions of this paper can answer the following questions:

- Whether the proposed SOI-based encoding method is effective for JSSP;

- How SMA can be efficiently combined with the EO algorithm and COBC strategy;

- Whether neighborhood structure combined with EOSMA is more efficient for JSSP;

- Whether EOSMA can solve high-dimensional JSSP instances quickly and efficiently.

In this paper, 82 JSSP instances from the Operations Research Library (OR Library) are used to test the performance of the proposed EOSMA in comparison with SMA, EO, and the recently proposed MPA, AO, and BES, all combined with the same neighborhood search. For ease of reading, Table 1 lists the abbreviations used in subsequent sections.

Table 1. List of abbreviations.

2. Preliminaries

2.1. Job Shop Scheduling Problem

JSSP can be described as follows: there is a set of machines and a set of jobs, and each job consists of a sequence of operations. Each operation has a specified processing time and a specified processing machine, each machine can only process one operation at a time, and each job must be produced according to its predefined production sequence. Each job has a completion time, namely the time at which its last operation finishes, and the largest of these over all jobs is the total completion time (makespan). The objective of JSSP is to generate a reasonable operation scheduling scheme that minimizes the maximum completion time when all jobs are completed. The explanation of the parameters is shown in Table 2.

Table 2. Detailed description of parameters [36].

In summary, the mathematical model of JSSP can be described by Equation (1), whose objective is to minimize the maximum completion time subject to three constraints. The constraints are stated in terms of the predecessor of each operation, the completion time of each operation, and the set of processing machines. The first constraint expresses the precedence relationship between operations: the completion time of the preceding operation plus the processing time of the current operation must be less than or equal to the completion time of the current operation. The second constraint states that a machine can process at most one operation at a time. The third constraint states that the completion time of every operation must be a non-negative number.
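For reference, a model consistent with the three constraints described above can be sketched as follows. The notation is an assumption made for this sketch and is not taken from Table 2: $O$ is the set of operations, $M$ the set of machines, $p_o$ and $\mu_o \in M$ the processing time and machine of operation $o$, $\mathrm{pre}(o)$ the operation that precedes $o$ in the same job (if any), and $C_o$ the completion time of $o$.

$$
\begin{aligned}
\min\ & C_{\max} = \max_{o \in O} C_o \\
\text{s.t.}\ & C_{\mathrm{pre}(o)} + p_o \le C_o, && \forall o \in O \text{ that has a predecessor}, \\
& C_o - p_o \ge C_{o'} \ \ \text{or}\ \ C_{o'} - p_{o'} \ge C_o, && \forall o \ne o' \in O \text{ with } \mu_o = \mu_{o'}, \\
& C_o \ge 0, && \forall o \in O.
\end{aligned}
$$

The second line is the usual disjunctive way of stating that two operations on the same machine cannot overlap. In practice the start time $C_o - p_o$ is also required to be non-negative.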

2.2. Encoding and Decoding Mapping

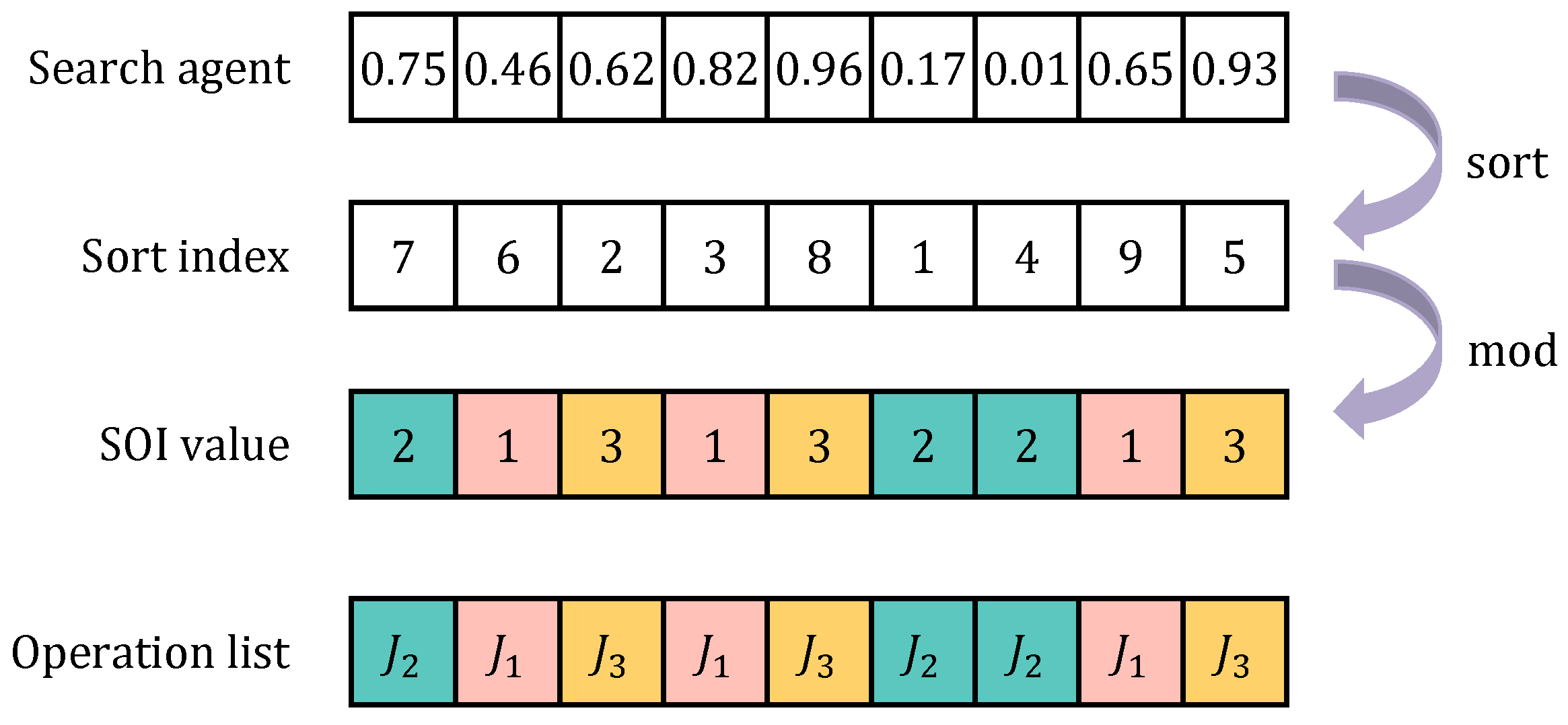

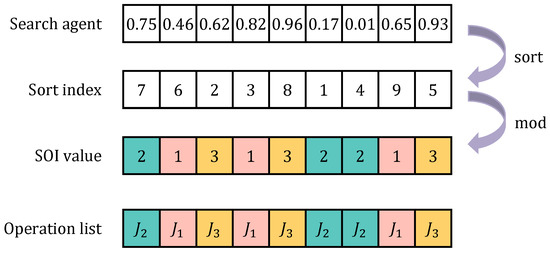

Since both SMA and EO were proposed for continuous problems, they cannot be directly used to solve the discrete JSSP. Therefore, in this paper, we propose a novel heuristic rule called the Sort-Order-Index (SOI)-based encoding method, which maps the real-number encoding to an integer encoding, making the proposed EOSMA applicable to JSSP. The solution vector of EOSMA does not itself represent the processing order of the jobs, but the magnitudes of its components define an order relationship. The SOI-based encoding method uses this ordering relationship to map the continuous location of the slime mould into a discrete processing order, i.e., the processing order of all operations for all jobs, as shown in Figure 1. The SOI-based encoding method is described as follows: firstly, the components of the search agent are sorted in ascending order, and the position index that each sorted component occupied before sorting is recorded to form the sort index; then the sort index is taken modulo the number of jobs to obtain the integer-encoded solution vector, as shown in Equation (2).
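The mapping just described can be illustrated with a minimal sketch. It assumes that the sort index is the 0-based argsort of the position vector and that job numbers are reported 1-based; the function name `soi_encode` and the sample vector are hypothetical and are not taken from Figure 1.

```python
import numpy as np

def soi_encode(position, n_jobs):
    """Map a continuous position vector to a job-number sequence.

    Assumption: the sort index is the argsort of the position (the original
    index of each component once sorted in ascending order), and the job
    number is (sort index mod n_jobs) + 1.
    """
    sort_index = np.argsort(position, kind="stable")
    return sort_index % n_jobs + 1

# Hypothetical example: 3 jobs with 2 operations each, so the vector has length 6.
position = np.array([0.7, 0.1, 0.5, 0.9, 0.3, 0.2])
sequence = soi_encode(position, n_jobs=3)
print(sequence)  # [2 3 2 3 1 1]
```

Because the indices 0 to 5 cover every residue modulo 3 equally often, each job number appears exactly twice, so the sequence is a feasible operation order regardless of the real values in the position vector.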

Figure 1. SOI-based encoding mapping.

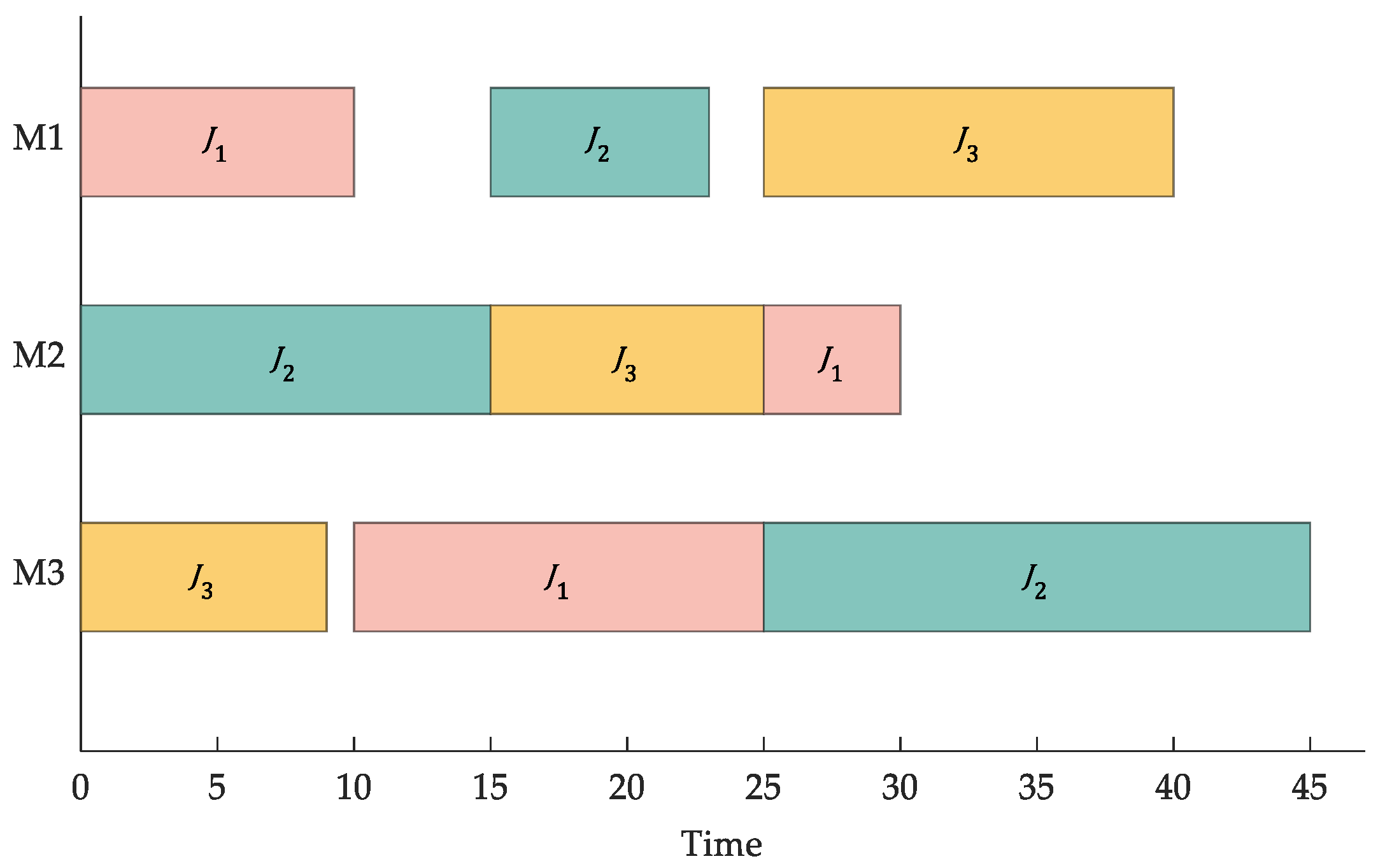

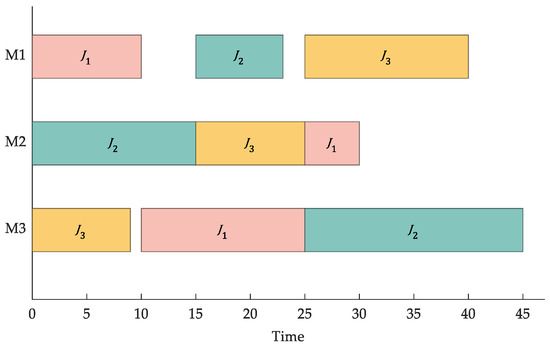

Each solution vector corresponds to a processing order, which is a scheduling scheme. By this transformation, the feasibility of the scheduling scheme can be guaranteed without modifying the evolutionary operation of the algorithm. For example, in the scheduling sequence shown in Figure 1, there are three jobs, each containing three operations, and the k-th occurrence of a job number, counted from left to right, represents the k-th operation of that job. Since the processing machine and time for each operation are pre-specified (as shown in Table 3), the sequence of operations in Figure 1 can be decoded into the scheduling Gantt chart shown in Figure 2.

Table 3. A case of JSSP [36].

Figure 2. SOI-based decoding mapping.
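The decoding step can be sketched as follows, under the assumption that each operation is started as early as its job and its machine allow (a semi-active schedule). The function name `decode_makespan` and the two-job instance are hypothetical; they are not the data of Table 3.

```python
def decode_makespan(sequence, machines, times):
    """Decode a job-number sequence into a schedule and return its makespan.

    sequence: 1-based job numbers; the k-th occurrence of a job is its k-th operation.
    machines[j][k], times[j][k]: machine and duration of operation k of job j (0-based).
    """
    n_jobs = len(machines)
    next_op = [0] * n_jobs      # index of the next unscheduled operation per job
    job_ready = [0] * n_jobs    # time at which each job's previous operation ends
    machine_ready = {}          # time at which each machine becomes free
    for job_number in sequence:
        j = job_number - 1
        k = next_op[j]
        m = machines[j][k]
        start = max(job_ready[j], machine_ready.get(m, 0))
        finish = start + times[j][k]
        job_ready[j] = finish
        machine_ready[m] = finish
        next_op[j] = k + 1
    return max(job_ready)

# Hypothetical instance: 2 jobs, 2 machines.
machines = [[0, 1], [1, 0]]
times = [[3, 2], [2, 4]]
print(decode_makespan([1, 2, 1, 2], machines, times))  # 7
print(decode_makespan([1, 1, 2, 2], machines, times))  # 11
```

The two calls show that different operation orders for the same instance can give different makespans, which is the quantity the search minimizes.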

3. Related Works

3.1. Slime Mould Algorithm

Slime Mould Algorithm (SMA) is a swarm-based metaheuristic algorithm developed by Li et al. in 2020 [22]. It simulates the behavioral and morphological changes of slime mould during foraging to find the best food source. The mathematical model for updating the location of slime mould is given in Equation (3):

$$
X(t+1) =
\begin{cases}
rand \cdot (UB - LB) + LB, & rand < z \\
X_b(t) + vb \cdot \left(W \cdot X_A(t) - X_B(t)\right), & r < p \\
vc \cdot X(t), & r \ge p
\end{cases}
\tag{3}
$$

where $LB$ and $UB$ denote the lower and upper bounds of the search range, $rand$ and $r$ denote random numbers in [0, 1], $z$ is an adjustable parameter, $X_b$ is the best location found so far, $vb$ and $vc$ are random parameter vectors, $vb$ takes values in $[-a, a]$, $vc$ decreases linearly from 1 to 0 as the number of iterations increases, $W$ is the thickness of the vein-like vessels, $X_A$ and $X_B$ are two randomly selected individual locations in the population, and $X$ indicates the location of slime mould.

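The value of $p$ is calculated as Equation (4):

$$
p = \tanh\left|S(i) - DF\right|
\tag{4}
$$

where $i$ indexes the individuals of the population, $S(i)$ denotes the fitness of $X$, and $DF$ denotes the best fitness value obtained so far.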

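The value of $a$ in the range $[-a, a]$ of $vb$ is calculated as Equation (5):

$$
a = \operatorname{arctanh}\left(-\frac{t}{t_{\max}} + 1\right)
\tag{5}
$$

where $t$ is the current iteration and $t_{\max}$ is the maximum number of iterations.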

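The formula of $W$ is calculated as Equation (6):

$$
W(SmellIndex(i)) =
\begin{cases}
1 + r \cdot \log\left(\dfrac{bF - S(i)}{bF - wF} + 1\right), & condition \\
1 - r \cdot \log\left(\dfrac{bF - S(i)}{bF - wF} + 1\right), & others
\end{cases}
\tag{6}
$$

$$
SmellIndex = sort(S)
\tag{7}
$$

where $condition$ denotes the individuals whose fitness ranks in the top half, $r$ denotes a random number in [0, 1], $bF$ denotes the best fitness of the current iteration, $wF$ denotes the worst fitness of the current iteration, and $SmellIndex$ denotes the result of ranking the fitness in ascending order (in the minimization problem). The pseudo-code of SMA is shown in Algorithm 1 [22].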

Algorithm 1: Pseudo-code of SMA
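As an illustration of how the SMA update rules fit together, the following is a minimal sketch of one position update for a minimization problem. It is not the authors' Algorithm 1: the function name `sma_step`, the default `z=0.03`, the base-10 logarithm, drawing `vc` uniformly from `[-b, b]` with `b = 1 - t/max_t`, and the final clipping to the bounds are all assumptions of this sketch.

```python
import numpy as np

def sma_step(X, fitness, best_pos, best_fit, t, max_t, lb, ub, z=0.03, rng=None):
    """One SMA position update (illustrative sketch, minimization)."""
    rng = np.random.default_rng() if rng is None else rng
    n, dim = X.shape
    order = np.argsort(fitness)                      # ascending fitness ranking
    bF, wF = fitness[order[0]], fitness[order[-1]]   # best / worst of this iteration
    # Fitness weight W: above 1 for the better half, below 1 for the rest
    scaled = (bF - fitness[order]) / (bF - wF - 1e-12)
    log_term = np.log10(scaled[:, None] + 1.0) * rng.random((n, dim))
    W = np.empty((n, dim))
    half = n // 2
    W[order[:half]] = 1.0 + log_term[:half]
    W[order[half:]] = 1.0 - log_term[half:]
    a = np.arctanh(1.0 - t / max_t)                  # requires 1 <= t <= max_t
    b = 1.0 - t / max_t                              # shrinks linearly towards 0
    p = np.tanh(np.abs(fitness - best_fit))
    X_new = np.empty_like(X)
    for i in range(n):
        if rng.random() < z:                         # random re-initialization branch
            X_new[i] = lb + rng.random(dim) * (ub - lb)
            continue
        vb = rng.uniform(-a, a, dim)
        vc = rng.uniform(-b, b, dim)
        A, B = rng.integers(0, n, size=2)            # two random individuals
        r = rng.random(dim)
        approach = best_pos + vb * (W[i] * X[A] - X[B])
        X_new[i] = np.where(r < p[i], approach, vc * X[i])
    return np.clip(X_new, lb, ub)
```

A caller would evaluate the returned positions, update the best location found so far, and repeat for `t = 1, ..., max_t`.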

3.2. Equilibrium Optimizer

Faramarzi et al. [10] proposed the Equilibrium Optimizer (EO) in 2020, a novel optimization algorithm inspired by the physical phenomenon of mass balance in a control volume. The mass balance equation is usually described by a first-order ordinary differential equation, as shown in Equation (8), which embodies the physical processes of mass entering, leaving, and being generated inside the control volume.

$$
V \frac{dC}{dt} = Q C_{eq} - Q C + G
\tag{8}
$$

Here, $V$ denotes the control volume, $C$ denotes the concentration within the control volume, $Q$ denotes the volume flow rate into or out of the control volume, $C_{eq}$ denotes the concentration when equilibrium is achieved, and $G$ denotes the mass generation rate in the control volume.

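Equation (9) can be obtained by solving the ordinary differential equation described by Equation (8):

$$
C = C_{eq} + (C_0 - C_{eq}) F + \frac{G}{\lambda V} (1 - F)
\tag{9}
$$

where $C_0$ is the concentration of the control volume at the initial start time $t_0$, $\lambda = Q/V$ is the flow rate, and $F$ is the exponential term coefficient, which can be calculated by Equation (10).

$$
F = \exp\left(-\lambda (t - t_0)\right)
\tag{10}
$$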

The EO performs its iterative optimization search mainly on the basis of Equation (9). For an optimization problem, the concentration represents an individual solution: $C$ is the solution generated by the current iteration, $C_0$ is the solution obtained in the previous iteration, and $C_{eq}$ is the best solution found so far.

To meet the optimization needs of different problems, the specific operation procedure and parameters of EO are designed as follows.

(1) Initialization: the algorithm performs random initialization within the upper and lower bounds of each optimization variable, as Equation (11):

$$
C_i^{0} = C_{\min} + r_i \cdot (C_{\max} - C_{\min})
\tag{11}
$$

where $C_{\min}$ and $C_{\max}$ are the lower and upper bound of the optimization variables, respectively, and $r_i$ represents the random number vector for individual $i$, with each element in [0, 1];

(2) Equilibrium pool: In order to improve the exploration capability of the algorithm and avoid falling into local optima, the equilibrium state (i.e., the optimal individual) in Equation (9) is selected from the five candidate solutions of the equilibrium pool, which is shown in Equation (12):

$$
C_{eq,pool} = \left\{ C_{eq(1)},\ C_{eq(2)},\ C_{eq(3)},\ C_{eq(4)},\ C_{eq(ave)} \right\}
\tag{12}
$$

where $C_{eq(1)}, \ldots, C_{eq(4)}$ are the four best solutions found so far, and $C_{eq(ave)}$ represents the average concentration of these four solutions. Each of the five solutions in the equilibrium pool is chosen as $C_{eq}$ with equal probability;

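(3) Exponential term factor $F$: In order to better balance the exploration and exploitation capabilities of the algorithm, Equation (10) is improved as Equation (13).

$$
F = a_1 \, \mathrm{sign}(r - 0.5) \left( e^{-\lambda t} - 1 \right), \qquad
t = \left( 1 - \frac{Iter}{Max\_iter} \right)^{a_2 \frac{Iter}{Max\_iter}}
\tag{13}
$$

where $a_1$ is the weight constant coefficient of the global search (the larger $a_1$, the stronger the exploration ability of the algorithm and the weaker the exploitation ability), $a_2$ is the corresponding constant that manages the exploitation ability, $\mathrm{sign}$ is the sign function, $r$ and $\lambda$ represent random number vectors with each element in [0, 1], $Iter$ is the number of the current iteration, and $Max\_iter$ is the maximum number of iterations;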

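(4) Mass generation rate $G$: In order to enhance the exploitation capability of the algorithm, the generation rate is designed as Equation (14):

$$
G = G_0 F, \qquad
G_0 = GCP \cdot (C_{eq} - \lambda C), \qquad
GCP =
\begin{cases}
0.5\, r_1, & r_2 \ge GP \\
0, & r_2 < GP
\end{cases}
\tag{14}
$$

where $GCP$ is the vector of the generation rate control parameter, $r_1$ and $r_2$ are random numbers in [0, 1], and $GP$ is the generation probability.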

Finally, the individual solution can be updated as shown in Equation (15):

$$
C = C_{eq} + (C - C_{eq}) F + \frac{G}{\lambda V} (1 - F)
\tag{15}
$$

where $V$ is considered as a unit.

The pseudo-code of EO is shown in Algorithm 2 [10].

Algorithm 2: Pseudo-code of EO
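The four steps above can be put together in a short sketch of one EO concentration update. It is not the authors' Algorithm 2: the function names `build_pool` and `eo_step` are hypothetical, and the defaults `a1=2`, `a2=1`, and `GP=0.5` are the values commonly used with EO rather than values read from Table 4.

```python
import numpy as np

def build_pool(best_four):
    """Equilibrium pool: the four best solutions plus their average."""
    best_four = np.asarray(best_four, dtype=float)
    return np.vstack([best_four, best_four.mean(axis=0)])

def eo_step(C, pool, it, max_it, a1=2.0, a2=1.0, GP=0.5, V=1.0, rng=None):
    """One EO update of all concentrations (illustrative sketch)."""
    rng = np.random.default_rng() if rng is None else rng
    n, dim = C.shape
    t = (1.0 - it / max_it) ** (a2 * it / max_it)        # time term
    C_new = np.empty_like(C)
    for i in range(n):
        C_eq = pool[rng.integers(len(pool))]             # equal-probability choice
        lam = 1.0 - rng.random(dim)                      # in (0, 1], avoids division by zero
        r = rng.random(dim)
        F = a1 * np.sign(r - 0.5) * (np.exp(-lam * t) - 1.0)   # exponential term
        r1, r2 = rng.random(), rng.random()
        GCP = 0.5 * r1 if r2 >= GP else 0.0              # generation rate control
        G = GCP * (C_eq - lam * C[i]) * F                # generation rate
        C_new[i] = C_eq + (C[i] - C_eq) * F + (G / (lam * V)) * (1.0 - F)
    return C_new
```

In a full optimizer, the new concentrations would be evaluated, the four best solutions refreshed, and the pool rebuilt before the next iteration.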

3.3. Centroid Opposition-Based Computation

Centroid Opposition-based Computation (COBC) is an opposition-based computation scheme proposed by Rahnamayan et al. in 2014 [48]. Their experimental results show that the average performance of COBC improves by 15% over the conventional opposition-based computation method, which makes it an attractive improvement strategy. Interested readers can find a detailed description of COBC in [48]. The pseudo-code of COBC is shown in Algorithm 3.

Algorithm 3: Pseudo-code of COBC
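The idea behind centroid opposition can be sketched as follows. This is an illustration under assumptions, not a reproduction of Algorithm 3: the opposite of a point is taken as its reflection through the population centroid, and a component that leaves the current per-dimension range of the population is redrawn between the violated bound and the centroid. The function name `centroid_opposition` is hypothetical.

```python
import numpy as np

def centroid_opposition(X, rng=None):
    """Return the centroid-opposite population of X (illustrative sketch)."""
    rng = np.random.default_rng() if rng is None else rng
    M = X.mean(axis=0)                       # centroid of the population
    lo, hi = X.min(axis=0), X.max(axis=0)    # dynamic per-dimension range
    opp = 2.0 * M - X                        # reflection through the centroid
    rand = rng.random(X.shape)
    below, above = opp < lo, opp > hi
    opp = np.where(below, lo + rand * (M - lo), opp)   # repair below the range
    opp = np.where(above, M + rand * (hi - M), opp)    # repair above the range
    return opp

X = np.array([[0.0, 0.0], [2.0, 4.0], [4.0, 2.0]])
print(centroid_opposition(X))  # [[4. 4.] [2. 0.] [0. 2.]]
```

After computing the opposite points, a typical use is to evaluate them and keep whichever of each original and opposite pair has the better fitness.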

3.4. Variable Neighborhood Search

Variable Neighborhood Search (VNS) [49] is a local search algorithm that alternates between neighborhood structures composed of different moves to achieve a good balance between intensification and diversification. VNS is often used to solve combinatorial optimization problems and relies on the following facts: (1) a locally optimal solution of one neighborhood structure may not be a locally optimal solution of another neighborhood structure; (2) the globally optimal solution is a locally optimal solution of all possible neighborhoods. In order to enhance the local search capability of the metaheuristic algorithm for solving JSSP, a simplified VNS is introduced into the hybrid algorithm in this paper. The pseudo-code of the VNS is shown in Algorithm 4.

Algorithm 4: Pseudo-code of VNS

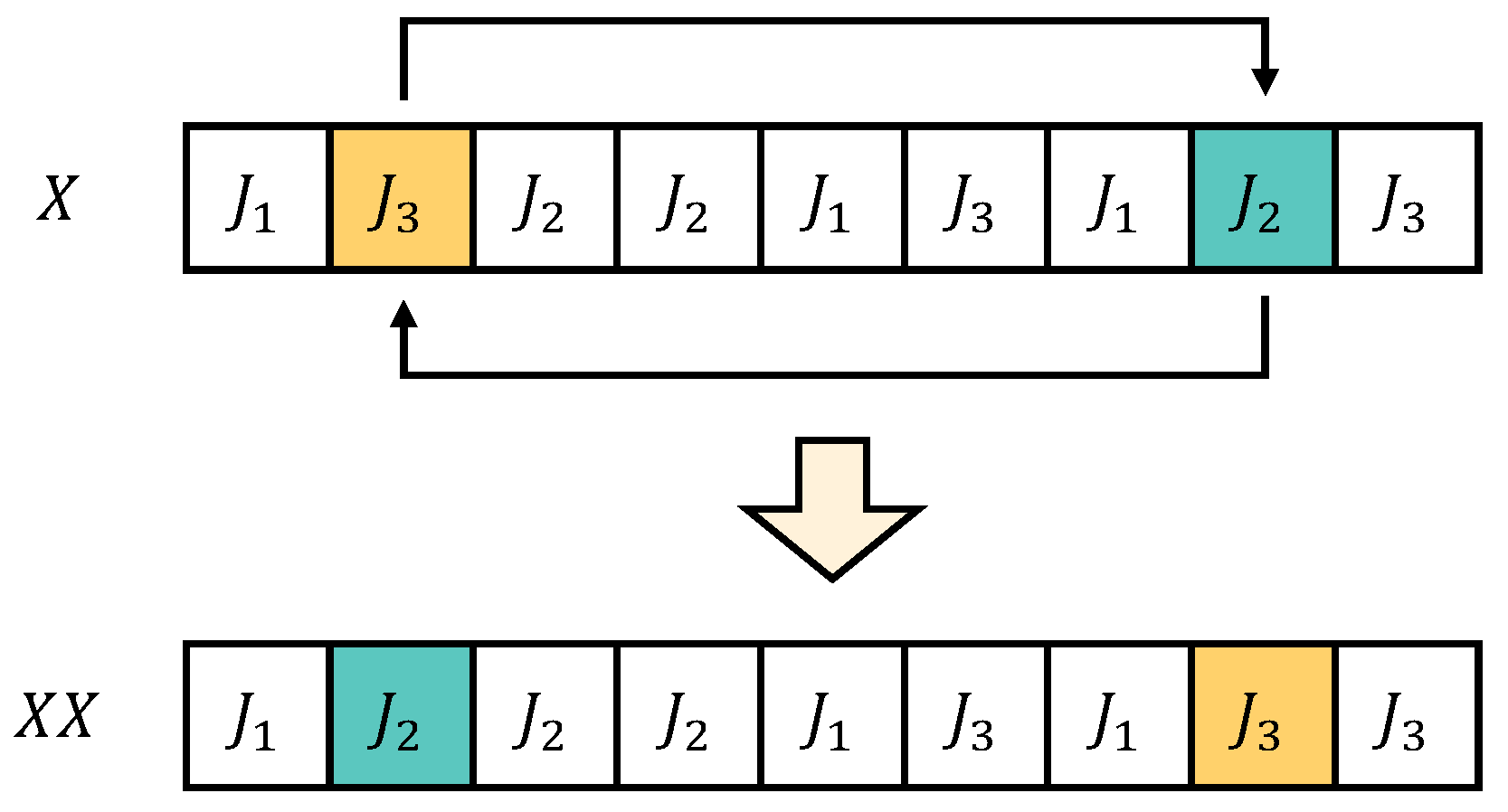

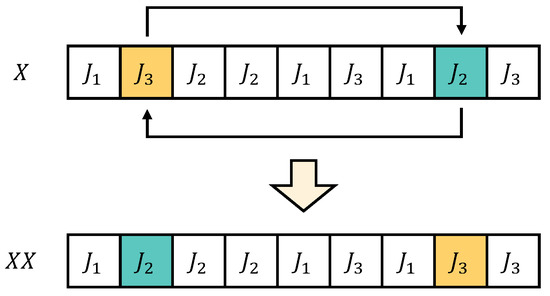

The local search step executes a Two-point Exchange Neighborhood (TEN) [50], which exchanges the job operations at the ith and jth dimensions of a solution; its pseudo-code is shown in [32]. An example of the exchanging process is shown in Figure 3. It is worth noting that, unlike the setup in [32], in order to reduce the time complexity of VNS, this paper does not evaluate all solutions of TEN; only a subset of them is randomly selected for evaluation, and then the best solution among them is chosen.

Figure 3. Exchanging process in VNS method [31].
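A sampled two-point exchange of the kind described above can be sketched as follows. The function name `sampled_two_point_exchange`, the parameter `n_samples`, and the choice to keep the current solution when no sampled neighbor improves on it are assumptions of this sketch; `evaluate` stands for any cost function, such as the makespan of the decoded schedule.

```python
import random

def sampled_two_point_exchange(sequence, evaluate, n_samples, rng=None):
    """Evaluate n_samples random two-point swaps and return the best solution seen."""
    rng = rng or random.Random()
    best_seq, best_cost = list(sequence), evaluate(sequence)
    for _ in range(n_samples):
        i, j = rng.sample(range(len(sequence)), 2)   # two distinct positions
        candidate = list(sequence)
        candidate[i], candidate[j] = candidate[j], candidate[i]
        cost = evaluate(candidate)
        if cost < best_cost:                         # keep the best neighbor so far
            best_seq, best_cost = candidate, cost
    return best_seq, best_cost
```

Swapping two entries never changes how often each job number occurs, so every neighbor remains a feasible operation sequence and no repair step is needed.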

4. Proposed EOSMA for JSSP

The shortcomings of the original SMA are unbalanced exploration and exploitation, weak exploration ability, and a tendency to fall into local optima. Changing the simple random search strategy in SMA to an equilibrium optimizer strategy can not only enhance the exploration ability but also improve the diversity of the population. The search agent of EOSMA performs a heuristic search based on Equation (16).

In Equation (16), one parameter is set to an empirical value, the equilibrium candidate is a solution randomly selected from the equilibrium pool, and the best location is the first solution in the equilibrium pool, i.e., the optimal solution found so far; the remaining parameters in Equation (16) use the settings of the original algorithms.

It is worth noting that, when the first equation of Equation (16) is used, all components of the solution vector are updated together, independently of the next two equations, whereas with the second and third equations each component is updated separately; i.e., different components of the same solution vector may be updated using the second or the third equation. Experiments show that this asynchronous update performs better than a synchronous update. In addition, EOSMA needs to update the equilibrium pool and the fitness weights of individuals at each generation, which increases the computational effort but does not increase the time complexity of the algorithm; updating the fitness weights requires sorting the fitness values. Finally, EOSMA uses greedy selection repeatedly during iterations to speed up convergence, while SMA does not use the greedy strategy.
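The two bookkeeping steps mentioned above, greedy selection and the equilibrium pool update, can be sketched as follows for a minimization problem. The function names and the pool size argument `k` are hypothetical, and the sketch makes no claim about how the authors implement these steps.

```python
import numpy as np

def greedy_select(X_old, f_old, X_new, f_new):
    """Keep each new solution only if it improves on the old one."""
    better = f_new < f_old
    X = np.where(better[:, None], X_new, X_old)
    f = np.where(better, f_new, f_old)
    return X, f

def update_pool(pool_X, pool_f, X, f, k=4):
    """Merge the pool with the population and keep the k best solutions."""
    all_X = np.vstack([pool_X, X])
    all_f = np.concatenate([pool_f, f])
    idx = np.argsort(all_f)[:k]
    return all_X[idx], all_f[idx]
```

With greedy selection, the fitness of each individual never gets worse from one generation to the next, which is one plausible reason for the faster convergence reported for EOSMA.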

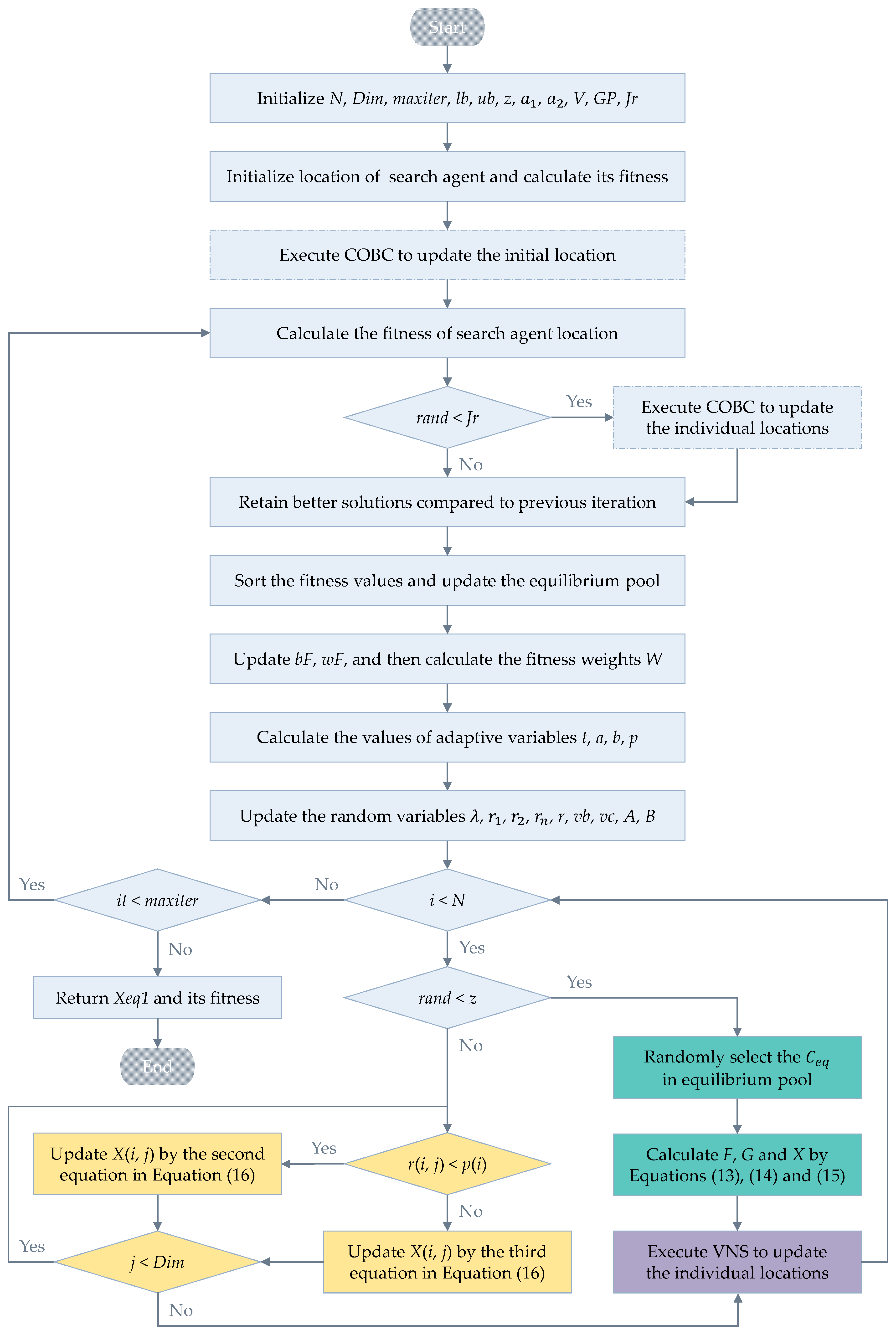

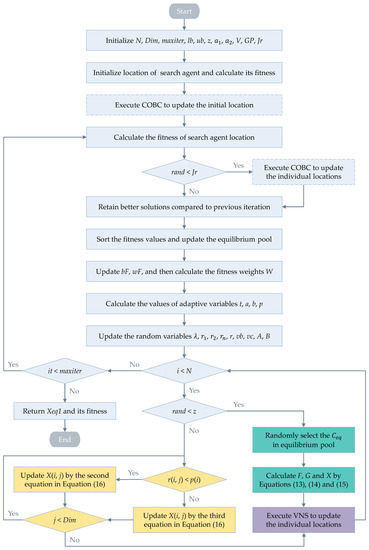

To further improve the performance of the hybrid algorithm for solving JSSP, the COBC strategy and the VNS strategy are introduced. The former enhances the exploration capability of the algorithm by selecting some individuals in each generation to perform the opposition-based computation; the latter is a local search framework, often used for combinatorial optimization problems, that enhances the exploitation capability of the algorithm. The flow chart of EOSMA for solving JSSP is shown in Figure 4 and its pseudo-code is shown in Algorithm 5.

Algorithm 5: Pseudo-code of EOSMA for JSSP

Figure 4. Flow chart of the EOSMA for JSSP.

5. Experimental Results and Discussions

In this paper, the performance of the EOSMA is evaluated by testing it on 82 test datasets taken from the OR Library. They are low-dimensional FT and ORB from [51,52], higher-dimensional LA and ABZ from [53,54], and high-dimensional YN and SWV from [55,56]. All experiments were executed on Win 10 Operating System and all algorithm codes were run in MATLAB R2019a with hardware details: Intel® Core™ i7-9700 CPU (3.00 GHz) and 16 GB RAM.

For a fair comparison, the population size of all comparison algorithms was set to 25, the maximum number of iterations was set to 100, and all comparison algorithms were run 20 times independently on each dataset. Five recently proposed algorithms with superior performance were selected for comparison with EOSMA, namely SMA [22], EO [10], MPA [23], AO [20], and BES [21]. All comparison algorithms also incorporate the VNS strategy described in Algorithm 4. The specific parameters of the comparison algorithms are kept consistent with the original papers, as shown in Table 4. The performance of the algorithms is evaluated using the best fitness and the average fitness. The performance metrics are then ranked and the Friedman mean rank of the algorithms on different test instances is tallied; the experimental results are shown in Table 5, Table 6 and Table 7. In these tables, Instance denotes the case name; Size denotes the problem size, i.e., the number of jobs and machines; BKS denotes the best-known solution for that instance as reported by Liu et al. [36]; Best denotes the best fitness obtained by the algorithm; and Mean denotes the average fitness.

Table 4. Parameter settings of algorithms.

Table 5. Comparison of solution results of algorithms on FT and ORB.

Table 6. Comparison of solution results of algorithms on ABZ and LA.

Table 7. Comparison of solution results of algorithms on YN and SWV.
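The mean-rank tally mentioned above can be sketched as follows: on each instance the algorithms are ranked by a metric (lower is better, ties share the average rank), and the ranks are averaged over the instances. The function name `mean_ranks` and the numbers in the example are hypothetical and are not taken from Tables 5 to 7.

```python
def mean_ranks(results):
    """results[i][a]: metric of algorithm a on instance i (lower is better)."""
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=lambda a: row[a])
        i = 0
        while i < n_alg:
            j = i
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1                       # extend the group of tied values
            average_rank = (i + j) / 2 + 1   # ranks are 1-based
            for k in range(i, j + 1):
                totals[order[k]] += average_rank
            i = j + 1
    return [total / len(results) for total in totals]

# Hypothetical metrics of three algorithms on two instances.
print(mean_ranks([[10, 20, 30], [10, 10, 30]]))  # [1.25, 1.75, 3.0]
```

A smaller mean rank indicates an algorithm that is more often among the best on the tested instances.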

From Table 5, it can be seen that EOSMA obtains better results on the low-dimensional JSSP (here and below, the prefix V denotes a comparison algorithm combined with VNS). For the FT instances, EOSMA achieves the best results on all three instances and finds the BKS on FT06. For the ORB instances, EOSMA achieves the best average performance on 10 instances, finds better solutions than the other algorithms on 8 instances, and obtains the BKS on ORB07, while VMPA and VBES obtain the best solutions on ORB2 and ORB10, respectively. Thus, EOSMA has good performance in solving JSSP-related problems compared to the recently proposed metaheuristic algorithms.

From Table 6, it can be seen that EOSMA can effectively solve JSSP. On the 45 instances of LA and ABZ, EOSMA obtains better performance metrics than the other algorithms on all instances except LA18, where the best result is obtained by VBES, and it finds the BKS on 24 instances of LA. This shows that EOSMA overcomes the exploration shortcomings of SMA and that its global search capability is stronger than that of the recently proposed algorithms, which helps it avoid falling into local optima.

Table 7 presents the solution results of the algorithms on the high-dimensional JSSP; the optimal solutions of these instances have not been found yet, so only the approximate solutions obtained by the different algorithms can be compared. The experimental results show that VAO has a competitive advantage on high-dimensional instances, achieving better solutions than EOSMA on six instances of SWV, and that VMPA achieves the best solutions on YN4 and SWV10; however, their average solution performance is inferior to that of EOSMA. Therefore, EOSMA still performs better than the other comparative algorithms on high-dimensional JSSP, verifying the effectiveness, accuracy, and robustness of EOSMA on the JSSP.

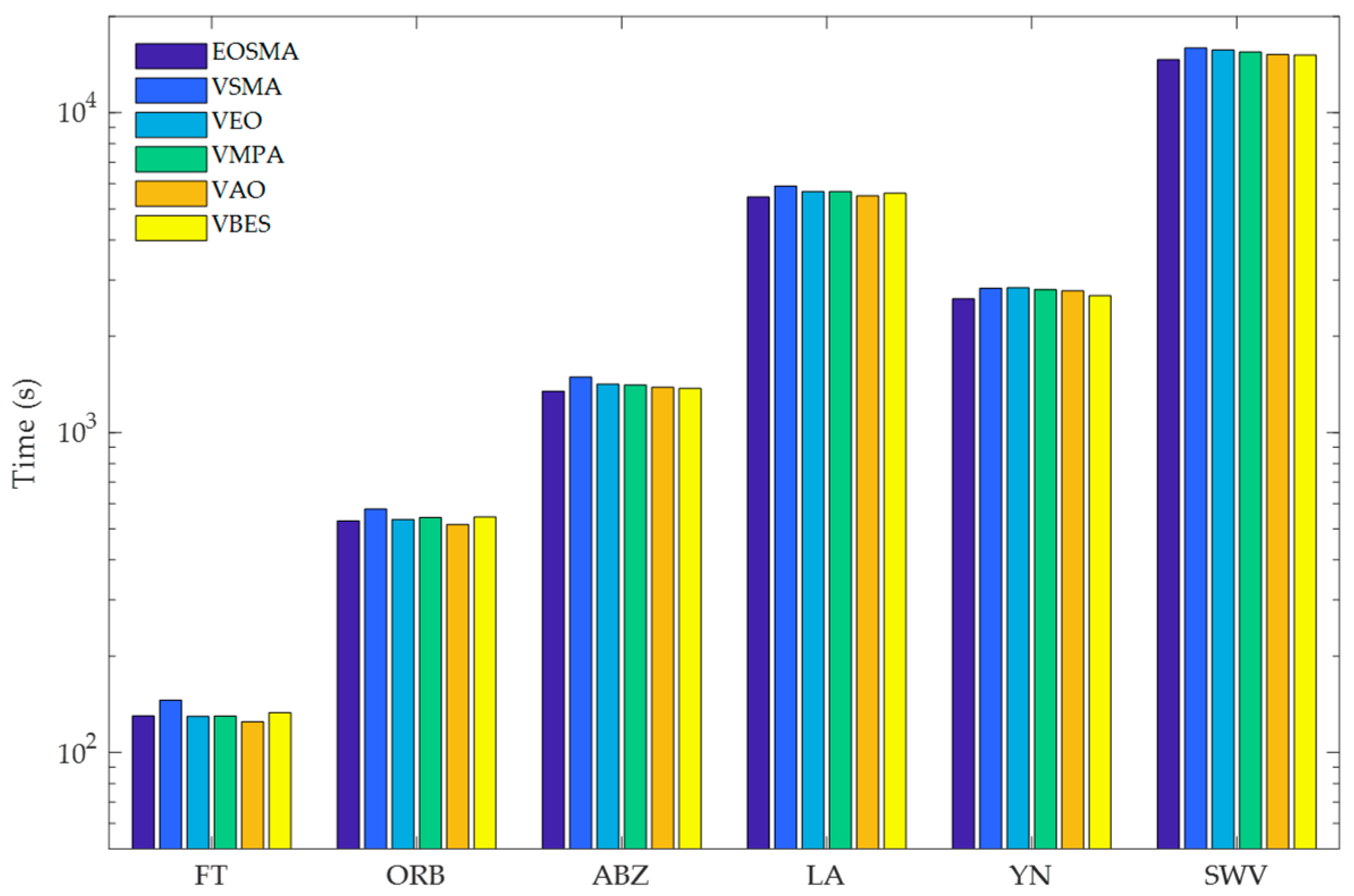

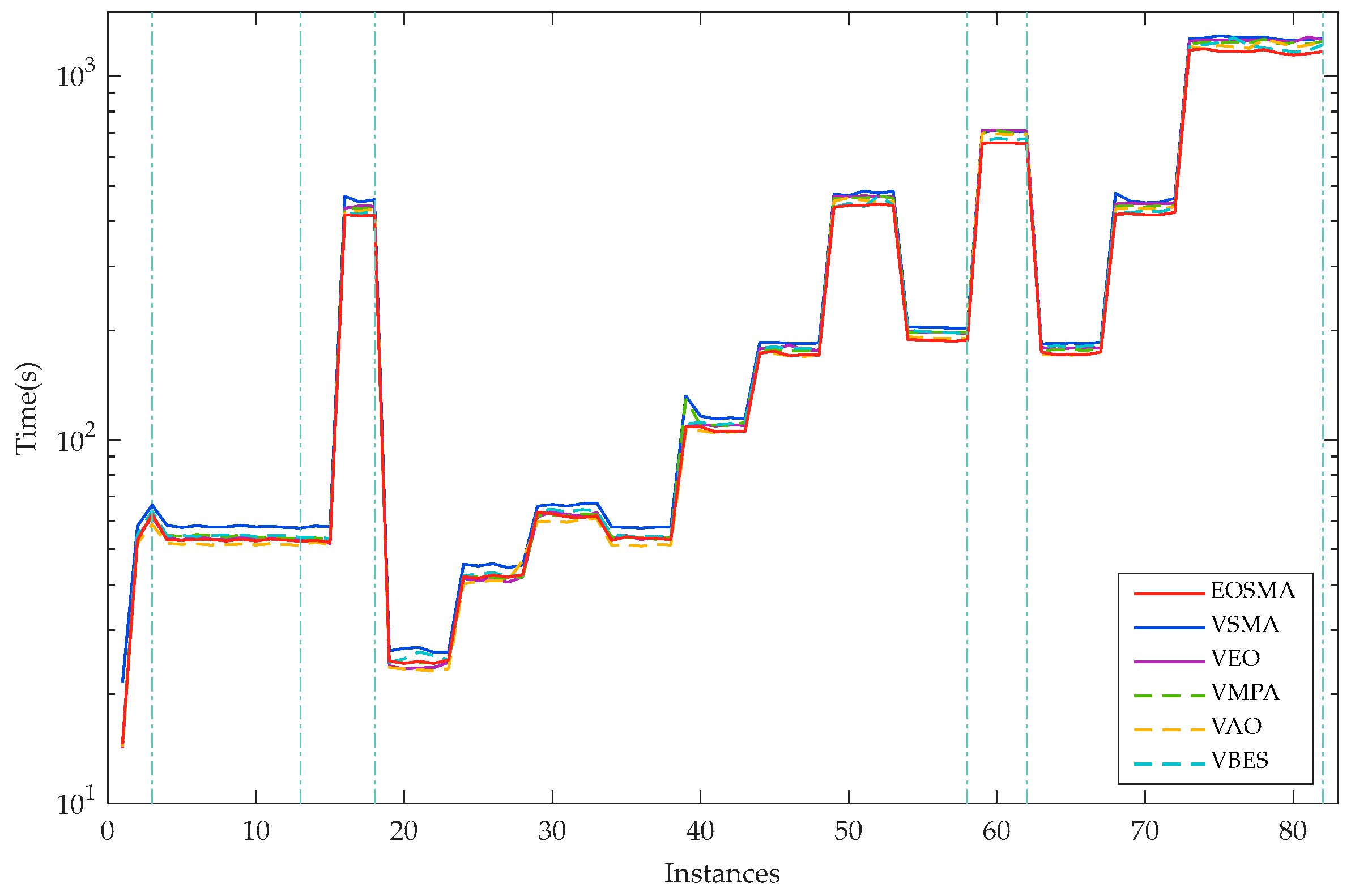

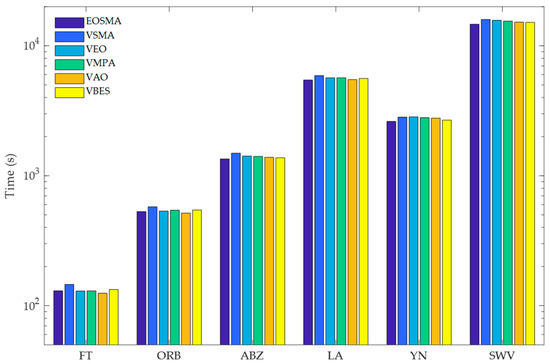

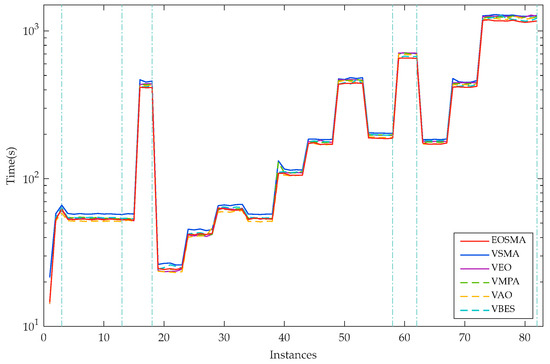

The execution times of the algorithms are shown in Figure 5 and Figure 6. Figure 5 represents the total time consumed by the six algorithms running 20 times on the six case datasets and Figure 6 shows the average single run times of the six algorithms on the 82 datasets.

Figure 5. Execution time of six algorithms running 20 times.

Figure 6. Average execution time of six algorithms on all instances.

As can be seen from Figure 5 and Figure 6, the execution time of EOSMA is the shortest among the six algorithms on ABZ, LA, YN, and SWV. VAO has the shortest execution time on FT and ORB, followed by EOSMA, VEO, and VMPA. Since all six algorithms include the neighborhood search strategy, most of the running time comes from VNS; nevertheless, the execution time of EOSMA is significantly lower than that of VSMA on all instances. In particular, the execution time of EOSMA is the shortest when solving the high-dimensional JSSP. This shows that EOSMA not only outperforms the comparison algorithms in terms of convergence accuracy and robustness, but also has a shorter execution time.

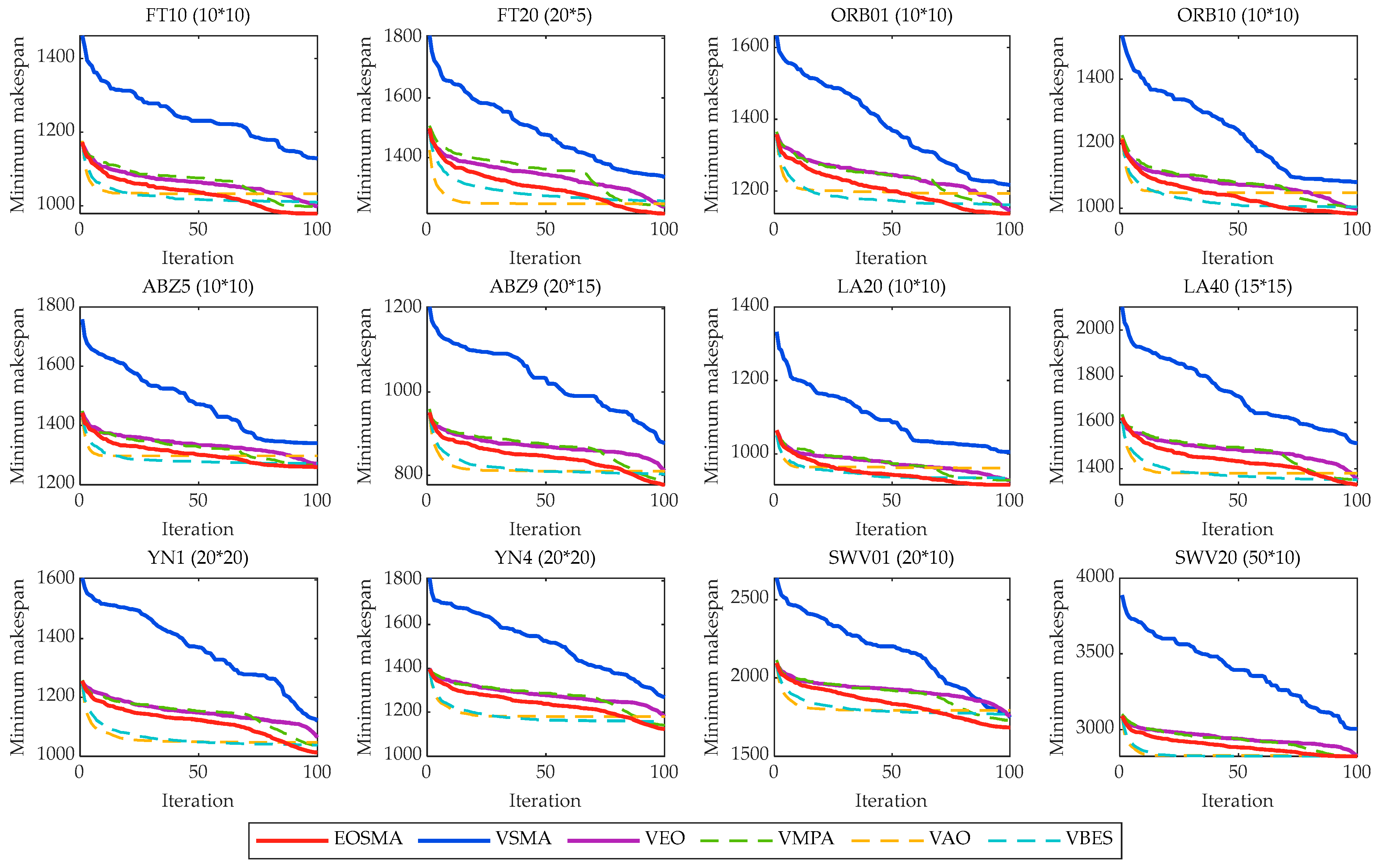

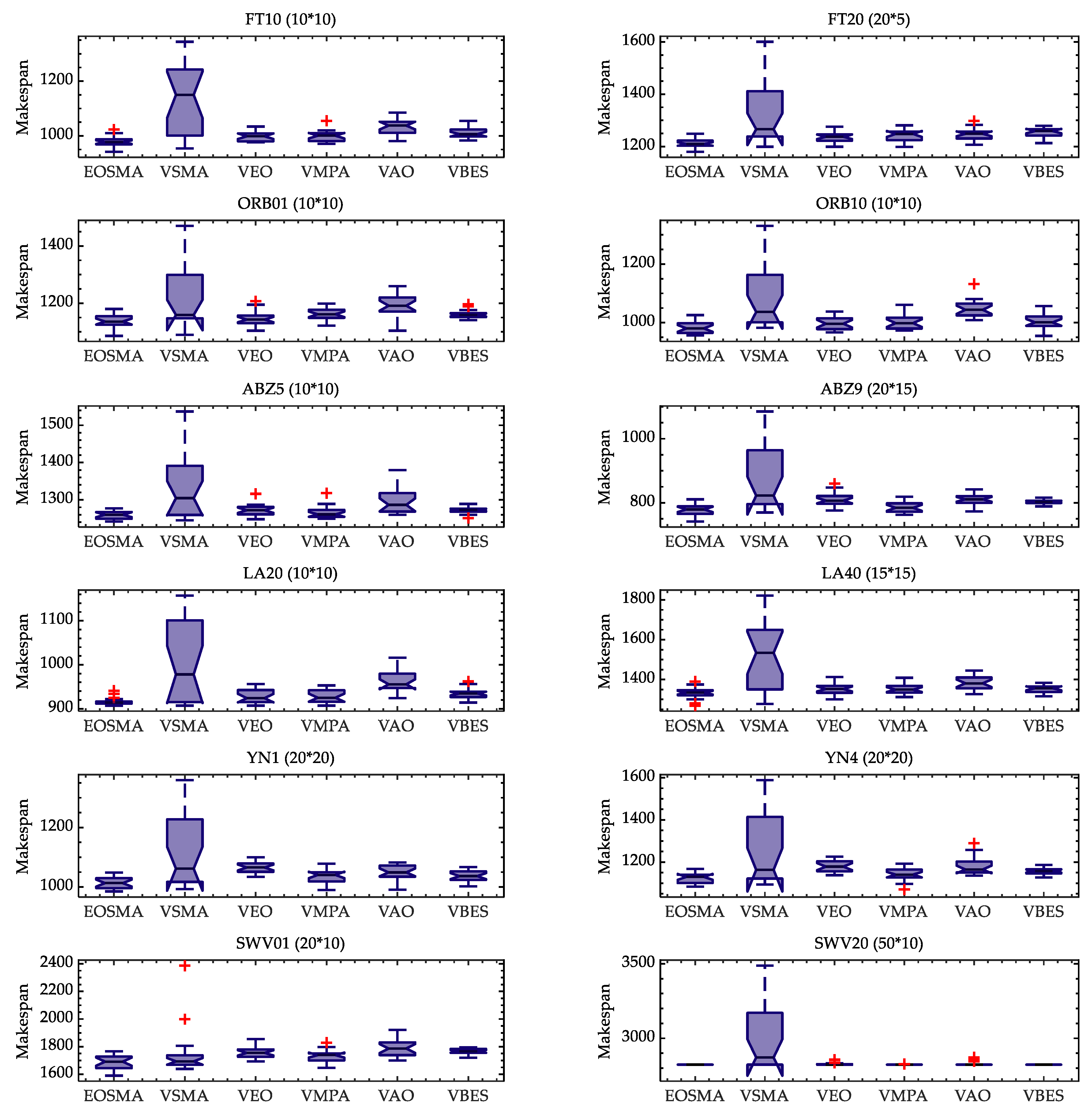

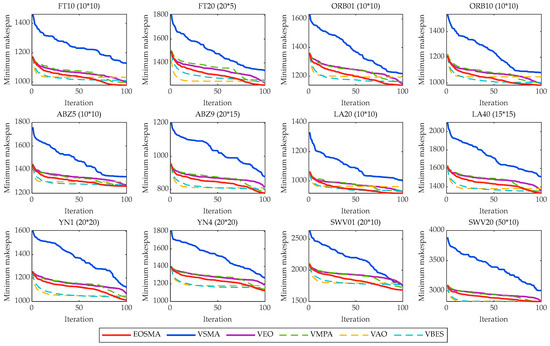

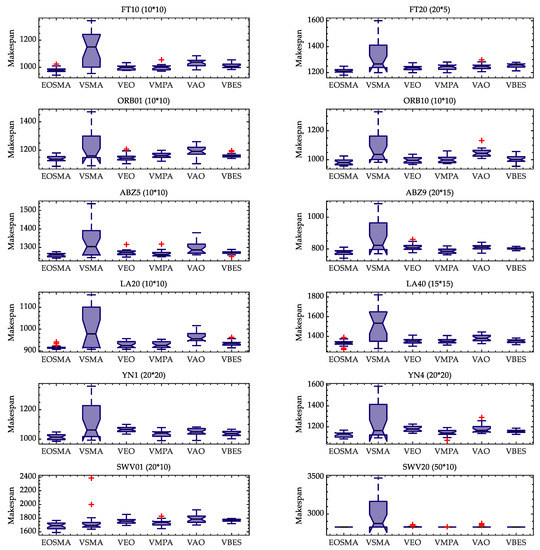

In order to further analyze the convergence process of EOSMA and the comparison algorithms, two instances are selected from each instance set, and their convergence curves and box plots are drawn in Figure 7 and Figure 8. It can be concluded that EOSMA converges faster than VSMA and VEO and that its final convergence accuracy is also better. It is worth noting that VSMA, which lacks the hybridized EO operator, has the slowest convergence speed, mainly because SMA does not use a greedy selection strategy during the iterative process and the second equation of Equation (3) uses the optimal solution of the current generation instead of the optimal solution found so far. Although EOSMA does not converge as fast as VAO and VBES in the early stages, the latter two tend to fall into local optima later in the iteration, suggesting that EOSMA strikes a better balance between exploration and exploitation. The box plots likewise show that EOSMA can find better solutions than the other algorithms, with an average performance better than that of the comparison algorithms and far better than that of VSMA.

Figure 7. Average convergence curves of all comparison algorithms.

Figure 8. Box plots of all algorithms executed 20 times on instances.

The Wilcoxon rank-sum test [37] was performed to examine whether there is a statistically significant difference between two sets of results, i.e., whether the differences between the algorithms could be explained by random factors. Such a statistical comparison is required to confirm the validity of the results because metaheuristic algorithms are stochastic [57]. The smaller the p-value, the stronger the evidence that the two data sets differ. A p-value below 0.05 indicates that the results obtained by the two algorithms are significantly different at the 5% significance level (95% confidence level). Table 8 exhibits the p-values of the Wilcoxon rank-sum test for EOSMA against the other comparison algorithms.

Table 8. The p-value generated by Wilcoxon rank-sum test (two-tailed).
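To make the test concrete, the following sketch computes a two-tailed rank-sum p-value with the normal approximation, without tie or continuity correction. It is an illustration only: statistical packages may use exact methods or corrections, so their p-values can differ, and the function name `rank_sum_p_value` and the sample data are hypothetical.

```python
import math

def rank_sum_p_value(x, y):
    """Two-tailed Wilcoxon rank-sum p-value (normal approximation)."""
    pooled = sorted((value, group) for group, sample in enumerate((x, y)) for value in sample)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1                               # extend the group of tied values
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1           # average 1-based rank of the group
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(rank for rank, (_, group) in zip(ranks, pooled) if group == 0)
    mean = n1 * (n1 + n2 + 1) / 2                # expected rank sum of x under H0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2))      # equals 2 * (1 - Phi(|z|))

# Hypothetical makespans from 20 runs of two algorithms on one instance.
runs_a = list(range(900, 920))
runs_b = list(range(950, 970))
print(rank_sum_p_value(runs_a, runs_b) < 0.05)   # True
```

In this example every run of the first algorithm beats every run of the second, so the p-value is far below 0.05 and the difference would be reported as significant.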

The results of the Wilcoxon rank-sum test indicate that there are few instances without significant differences (shown in bold), where NaN indicates that the two algorithms find exactly the same solution, in which case the optimal solution for that instance is usually found. Moreover, EOSMA significantly outperforms VSMA, VEO, VMPA, VAO, and VBES on 76, 60, 49, 69, and 53 instances, respectively, indicating that the algorithm has performance advantages on different instances of JSSP. In conclusion, the performance of EOSMA differs significantly from that of SMA, EO, and AO on JSSP at the 5% significance level, which indicates that the observed advantage of EOSMA is unlikely to be due to random factors.

6. Conclusions and Future Work

SMA is a novel swarm-based optimization algorithm inspired by the foraging behavior of slime mould; EO is a high-performance physics-based optimization algorithm inspired by the control volume mass balance equation. Although SMA has been applied in various fields due to the novelty of its metaheuristic rules, it still suffers from slow convergence, poor robustness, unbalanced exploration and exploitation, and a tendency to fall into local optima. To overcome these drawbacks, we propose a hybrid algorithm, EOSMA, which uses a centroid opposition-based computation and a VNS strategy combined with an SOI rule-based encoding method for the fast and efficient solution of job shop scheduling problems. In EOSMA, the random search strategy of SMA is first replaced by the concentration update operator of EO, and the third equation of Equation (3) is replaced by the third equation of Equation (16). Then, the centroid opposition-based computation is introduced into the hybrid algorithm. With these changes, the search agent has a higher probability of finding a better solution and performs fewer invalid searches, thus improving the exploration and exploitation capabilities of SMA. Finally, to solve JSSP more effectively, the two-point exchange neighborhood search strategy is added to EOSMA, which enhances its local search capability. The performance of EOSMA was tested on 82 JSSP datasets from the OR Library and compared with recently proposed algorithms with superior performance. The experimental results show that EOSMA exhibits better search capability than SMA, EO, MPA, AO, and BES in solving JSSP.

JSSP is one of the well-known NP-hard problems. As the problem size increases, the search space grows dramatically, and so does the execution time of many existing algorithms, which therefore cannot solve larger-scale job shop scheduling problems well. In contrast, EOSMA can effectively solve larger-scale JSSP in a reasonable running time. This is mainly because EOSMA not only relies on the local search capability of VNS but also has a strong global search capability before the local search, which can better guide the VNS strategy towards the optimal solution. Therefore, EOSMA is a promising algorithm. Future work will consider more practical scheduling problems, such as the flow shop scheduling problem considering material handling time and the permutation flow shop dynamic scheduling problem considering dynamic changes of processed raw materials.

Author Contributions

Conceptualization, methodology, Y.W. and S.Y.; software, Q.L.; writing—original draft preparation, Y.W.; writing—review and editing, Z.O., K.M.D. and Y.Z.; funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant Nos. U21A20464 and 62066005.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the editor and the three anonymous referees for their positive comments and useful suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Garey, M.R.; Johnson, D.S.; Sethi, R. The complexity of flowshop and jobshop scheduling. Math. Oper. Res. 1976, 1, 330–348.

- Gong, G.; Deng, Q.; Chiong, R.; Gong, X.; Huang, H. An effective memetic algorithm for multi-objective job-shop scheduling. Knowl.-Based Syst. 2019, 182, 104840.

- Tang, C.; Zhou, Y.; Tang, Z.; Luo, Q. Teaching-learning-based pathfinder algorithm for function and engineering optimization problems. Appl. Intell. 2021, 51, 5040–5066.

- Grefenstette, J.J. Genetic algorithms and machine learning. Mach. Learn. 1988, 3, 95–99.

- Storn, R.; Price, K. Differential evolution – A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Kirkpatrick, S. Optimization by simulated annealing: Quantitative studies. J. Stat. Phys. 1984, 34, 975–986.

- Rashedi, E.; Nezamabadi-pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2015, 27, 495–513.

- Zhao, W.; Wang, L.; Zhang, Z. A novel atom search optimization for dispersion coefficient estimation in groundwater. Future Gener. Comput. Syst. 2018, 91, 601–610.

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190.

- Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the Sixth International Symposium on Micro Machine and Human Science, MHS’95, Nagoya, Japan, 4–6 October 1995; IEEE: Nagoya, Japan, 1995; pp. 39–43.

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial Bee Colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471.

- Cuevas, E.; Cienfuegos, M.; Zaldívar, D.; Pérez-Cisneros, M. A swarm optimization algorithm inspired in the behavior of the social-spider. Expert Syst. Appl. 2013, 40, 6374–6384.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Dhiman, G.; Kumar, V. Seagull optimization algorithm: Theory and its applications for large-scale industrial engineering problems. Knowl.-Based Syst. 2018, 165, 169–196.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp swarm algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-qaness, M.A.A.; Gandomi, A.H. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Alsattar, H.A.; Zaidan, A.A.; Zaidan, B.B. Novel meta-heuristic bald eagle search optimisation algorithm. Artif. Intell. Rev. 2020, 53, 2237–2264.

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine predators algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Braik, M.S. Chameleon swarm algorithm: A bio-inspired optimizer for solving engineering design problems. Expert Syst. Appl. 2021, 174, 114685.

- Bogar, E.; Beyhan, S. Adolescent Identity Search Algorithm (AISA): A novel metaheuristic approach for solving optimization problems. Appl. Soft Comput. 2020, 95, 106503.

- Kurdi, M. An effective new island model genetic algorithm for job shop scheduling problem. Comput. Oper. Res. 2016, 67, 132–142.

- Song, X.Y.; Meng, Q.H.; Yang, C. Improved taboo search algorithm for job shop scheduling problems. Syst. Eng. Electron. 2008, 30, 93–96.

- Aydin, M.E.; Fogarty, T.C. A distributed evolutionary simulated annealing algorithm for combinatorial optimisation problems. J. Heuristics 2004, 10, 269–292.

- Zhang, J.; Wang, W.; Xu, X. A hybrid discrete particle swarm optimization for dual-resource constrained job shop scheduling with resource flexibility. J. Intell. Manuf. 2017, 28, 1961–1972.

- Huang, R.-H.; Yu, T.-H. An effective ant colony optimization algorithm for multi-objective job-shop scheduling with equal-size lot-splitting. Appl. Soft Comput. 2017, 57, 642–656.

- Banharnsakun, A.; Sirinaovakul, B.; Achalakul, T. Job shop scheduling with the best-so-far ABC. Eng. Appl. Artif. Intell. 2012, 25, 583–593.

- Keesari, H.S.; Rao, R.V. Optimization of job shop scheduling problems using teaching-learning-based optimization algorithm. OPSEARCH 2014, 51, 545–561.

- Dao, T.-K.; Pan, T.-S.; Nguyen, T.-T.; Pan, J.-S. Parallel bat algorithm for optimizing makespan in job shop scheduling problems. J. Intell. Manuf. 2018, 29, 451–462.

- Wang, X.; Duan, H. A hybrid biogeography-based optimization algorithm for job shop scheduling problem. Comput. Ind. Eng. 2014, 73, 96–114.

- Zhao, F.; Liu, Y.; Zhang, Y.; Ma, W.; Zhang, C. A hybrid harmony search algorithm with efficient job sequence scheme and variable neighborhood search for the permutation flow shop scheduling problems. Eng. Appl. Artif. Intell. 2017, 65, 178–199.

- Liu, M.; Yao, X.; Li, Y. Hybrid whale optimization algorithm enhanced with Lévy flight and differential evolution for job shop scheduling problems. Appl. Soft Comput. 2020, 87, 105954.

- Liu, C. An improved Harris hawks optimizer for job-shop scheduling problem. J. Supercomput. 2021, 77, 14090–14129.

- Wei, Y.; Zhou, Y.; Luo, Q.; Deng, W. Optimal reactive power dispatch using an improved slime mould algorithm. Energy Rep. 2021, 7, 8742–8759.

- Abdel-Basset, M.; Chang, V.; Mohamed, R. HSMA_WOA: A hybrid novel slime mould algorithm with whale optimization algorithm for tackling the image segmentation problem of chest X-ray images. Appl. Soft Comput. 2020, 95, 106642.

- Liu, Y.; Heidari, A.A.; Ye, X.; Liang, G.; Chen, H.; He, C. Boosting slime mould algorithm for parameter identification of photovoltaic models. Energy 2021, 234, 121164.

- Yu, K.; Liu, L.; Chen, Z. An improved slime mould algorithm for demand estimation of urban water resources. Mathematics 2021, 9, 1316.

- Hassan, M.H.; Kamel, S.; Abualigah, L.; Eid, A. Development and application of slime mould algorithm for optimal economic emission dispatch. Expert Syst. Appl. 2021, 182, 115205.

- Zhao, S.; Wang, P.; Heidari, A.A.; Chen, H.; Turabieh, H.; Mafarja, M.; Li, C. Multilevel threshold image segmentation with diffusion association slime mould algorithm and Renyi’s entropy for chronic obstructive pulmonary disease. Comput. Biol. Med. 2021, 134, 104427.

- Yu, C.; Asghar Heidari, A.; Xue, X.; Zhang, L.; Chen, H.; Chen, W. Boosting quantum rotation gate embedded slime mould algorithm. Expert Syst. Appl. 2021, 181, 115082.

- Rizk-Allah, R.M.; Hassanien, A.E.; Song, D. Chaos-opposition-enhanced slime mould algorithm for minimizing the cost of energy for the wind turbines on high-altitude sites. ISA Trans. 2021, 121, 191–205.

- Houssein, E.H.; Mahdy, M.A.; Blondin, M.J.; Shebl, D.; Mohamed, W.M. Hybrid slime mould algorithm with adaptive guided differential evolution algorithm for combinatorial and global optimization problems. Expert Syst. Appl. 2021, 174, 114689.

- Premkumar, M.; Jangir, P.; Sowmya, R.; Alhelou, H.H.; Heidari, A.A.; Chen, H. MOSMA: Multi-objective slime mould algorithm based on elitist non-dominated sorting. IEEE Access 2021, 9, 3229–3248.

- Rahnamayan, S.; Jesuthasan, J.; Bourennani, F.; Salehinejad, H.; Naterer, G.F. Computing opposition by involving entire population. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; IEEE: Beijing, China, 2014; pp. 1800–1807.

- Hansen, P.; Mladenović, N.; Moreno Pérez, J.A. Variable neighbourhood search: Methods and applications. 4OR 2008, 6, 319–360.

- Gao, L.; Li, X.; Wen, X.; Lu, C.; Wen, F. A hybrid algorithm based on a new neighborhood structure evaluation method for job shop scheduling problem. Comput. Ind. Eng. 2015, 88, 417–429.

- Fisher, H.; Thompson, G.L. Probabilistic Learning Combinations of Local Job-Shop Scheduling Rules. Industrial Scheduling; Prentice-Hall: Hoboken, NJ, USA, 1963; pp. 225–251.

- Applegate, D.; Cook, W. A computational study of the job-shop scheduling problem. ORSA J. Comput. 1991, 3, 149–156.

- Adams, J.; Balas, E.; Zawack, D. The shifting bottleneck procedure for job shop scheduling. Manag. Sci. 1988, 34, 391–401.

- Lawrence, S. Resource Constrained Project Scheduling: An Experimental Investigation of Heuristic Scheduling Techniques (Supplement); Graduate School of Industrial Administration, Carnegie-Mellon University: Pittsburgh, PA, USA, 1984.

- Yamada, T.; Nakano, R. A genetic algorithm applicable to large-scale job-shop problems. Parallel Problem Solving Nat. 1992, 2, 281–290.

- Storer, R.H.; Wu, S.D.; Vaccari, R. New search spaces for sequencing problems with application to job shop scheduling. Manag. Sci. 1992, 38, 1495–1509.

- Yin, S.; Luo, Q.; Du, Y.; Zhou, Y. DTSMA: Dominant swarm with adaptive t-distribution mutation-based slime mould algorithm. Math. Biosci. Eng. 2022, 19, 2240–2285.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).