Abstract

This article considers a box-constrained global optimization problem for Lipschitz continuous functions with an unknown Lipschitz constant. The well-known derivative-free global search algorithm DIRECT (DIvide RECTangle) is a promising approach for such problems. Several studies have shown that recent two-step (global and local) Pareto selection-based algorithms are very efficient among all DIRECT-type approaches. However, despite its encouraging performance, it was also observed that the candidate selection procedure has two possible shortcomings. First, there is no limit on how small the size of selected candidates can be. Secondly, a balancing strategy between global and local candidate selection is missing. Therefore, it may waste function evaluations by over-exploring the current local minimum and delaying finding the global one. This paper reviews and employs different strategies in a two-step Pareto selection framework (1-DTC-GL) to overcome these limitations. A detailed experimental study has revealed that existing strategies do not always improve and sometimes even worsen results. Since 1-DTC-GL is a DIRECT-type algorithm, the results of this paper provide general guidance for all DIRECT-type algorithms on how to deal with excessive local refinement more efficiently.

Keywords:

optimization; global optimization; derivative-free optimization; exact optimization algorithms; DIRECT-type algorithms MSC:

90C56; 90C26; 65K05

1. Introduction

In this paper, we consider a box-constrained global optimization problem of the form:

where suppose to be the Lipschitz-continuous objective function, is the input vector, and the feasible region is an n-dimensional hyper-rectangle . The objective function can be non-linear, non-differentiable, non-convex, multi-modal, and potentially a “black-box.” In a black-box case, analytical information is unavailable and can be obtained only by evaluating the function at feasible points. Therefore, traditional derivative-information based local optimization methods cannot be used in this situation.

Among derivative-free global optimization algorithms addressing the black-box problem, two main classes [1] are stochastic meta-heuristic algorithms [2,3,4] and deterministic ones [5,6]. The DIRECT algorithm developed by Jones [7] is a popular and widely used deterministic solution technique for various real-world optimization problems [8,9,10,11,12]. The proposed algorithm is an extension of the classical Lipschitz optimization [13,14,15], which no longer requires the knowledge of the Lipschitz constant. The DIRECT algorithm [7] seeks global optima by dividing the most promising hyper-rectangles and evaluating the objective function at their centers.

Since the original algorithm introduction, many researchers have suggested various modifications or extensions of the DIRECT algorithm in various directions. Recent extensive numerical studies [1,16,17] show that DIRECT-type algorithms are often among the most efficient derivative-free global optimization solution techniques. Various hybridized approaches [10,18,19] that are enriched with local search procedures are among the most effective [16,17,20]. However, on average, among traditional (non-hybridized) DIRECT-type algorithms, two-step (global and local) Pareto selection scheme-based approaches, DIRECT-GL [17], 1-DTC-GL [21], often showed the best performance [17]. Moreover, for complex multi-modal and non-convex problems, they often outperformed hybrid ones.

Nevertheless, in a recent survey [22], two possible shortcomings of such two-step Pareto selection were specified. First, these scheme-based algorithms have no protection against “over-exploring” a local minimum, e.g., no limitation of how small the size of selected potentially optimal hyper-rectangles (POHs) can be. Secondly, a balancing strategy between global and local candidate selection is missing. The two-step-based Pareto selection scheme performs global and local search-oriented selection at each iteration. If the current best solution has remained unchanged for several iterations, it can be deduced that the local selection step is potentially unnecessary.

We note that some proposals and studies have already been carried out in the context of the DIRECT algorithm. In [17], we have experimentally investigated a few strategies [7,23,24,25,26] devoted to preventing the original DIRECT algorithm from selecting tiny hyper-rectangles around current local minima. Moreover, the same study also investigated different strategies for balancing global and local phases [10,18,27,28]. However, we cannot generalize from these results which strategy is the most efficient, as individual algorithms were compared, which may have varied not only in the selection step but also in other steps such as partitioning and sampling. It is therefore unclear which of the proposed improvements has the most potential to prevent excessive local refinement.

Contributions and Structure

The main contributions of this work are summarized below:

- It reviews the proposed techniques for excessive local refinement reduction for DIRECT-type algorithms.

- It experimentally validates them on one of the fastest two-step Pareto selection based 1-DTC-GL algorithm.

- It accurately assesses the impact of each of them and, based on these results, makes recommendations for DIRECT-type algorithms in general.

- All six of the newly developed DIRECT-type algorithmic variations are freely available to anyone, ensuring complete reproducibility and re-usability of all results.

The rest of the paper is organized as follows. Section 2.1 reviews the original DIRECT algorithm. Section 2.2 describes a two-step Pareto selection-based DIRECT-GL algorithm. The review of existing local refinement reduction techniques for DIRECT-type algorithms is given in Section 2.3. New 1-DTC-GL algorithmic variations are given in Section 2.4. The numerical investigation using 287 DIRECTGOLib v1.2 test problems is presented and discussed in Section 3. Finally, Section 4 concludes the paper and highlights possible future directions.

2. Materials and Methods

This section introduces the original DIRECT and 1-DTC-GL algorithms. Additionally, existing strategies for preventing excessive refinement around the current minima are reviewed, summarizing their strengths and weaknesses.

2.1. Overview of the DIRECT Algorithm

The original DIRECT algorithm applies to box-constrained problems and works most of the time in the normalized domain. Therefore, the DIRECT algorithm initially normalizes the domain to the unit hyper-rectangle . The algorithm only refers to the original space when performing objective function evaluations. Therefore, when we say that the value of the objective function is evaluated at , where is the midpoint of the hyper-rectangle, it means that the corresponding midpoint of the original domain () is used, i.e.,

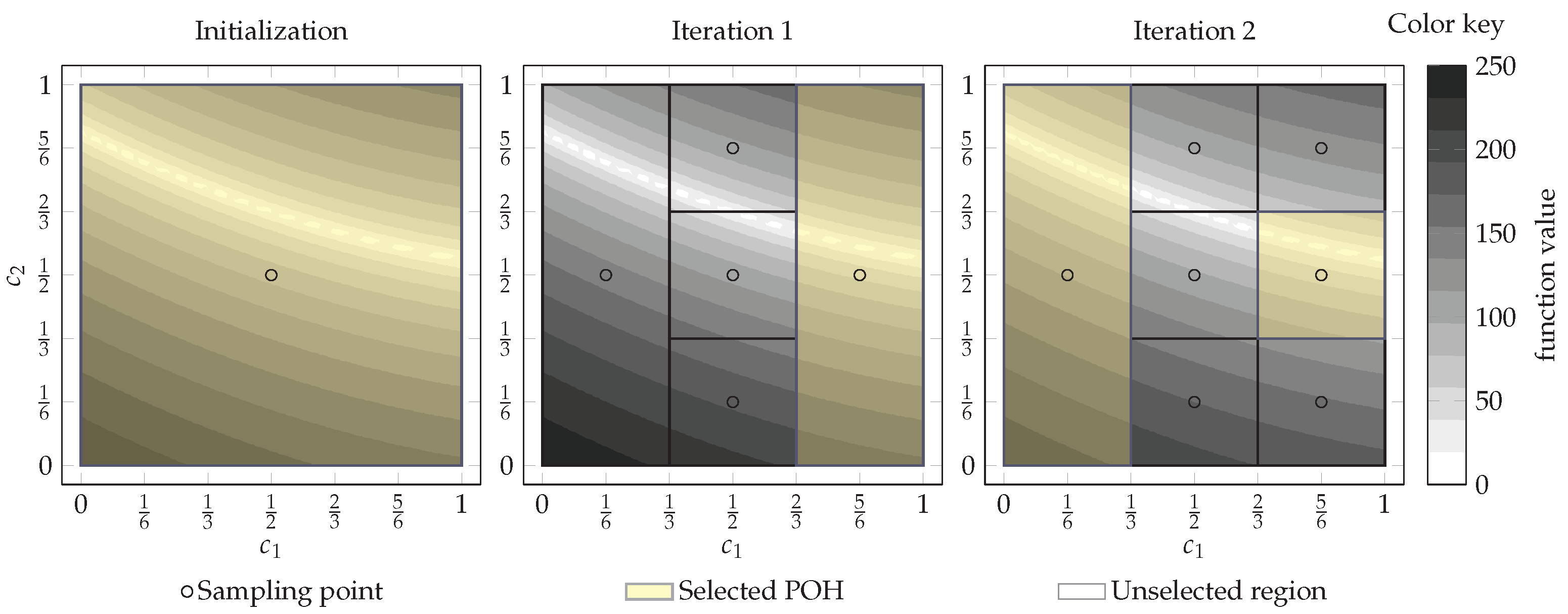

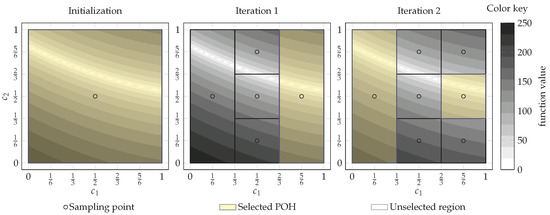

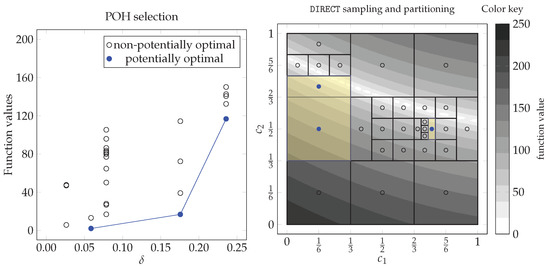

The DIRECT algorithm starts the search by evaluating the objective function at the midpoint of the initial unit hyper-rectangle . Then, the algorithm identifies and selects potentially optimal hyper-rectangles. Initially, only one hyper-rectangle is available (see the left panel in Figure 1). Therefore, selection is trivial. After this, DIRECT creates new midpoints at the positions

where is the maximum side length, M is a set of coordinates with the maximum side length, and is the jth unit vector. The DIRECT algorithm uses n-dimensional trisection, so the objective function is evaluated at each POH only once. The midpoint of selected POH becomes the midpoint of the new smaller “middle” third hyper-rectangle and does not requires additional re-evaluation.

Figure 1.

Two-dimensional illustration of trisection used in the original DIRECT algorithm [7], solving Bukin6 test problem.

If POH has more than one longest coordinate, i.e., (e.g., for the n-dimensional initial hyper-rectangle ), DIRECT starts trisection from the coordinate () with the lowest value

and continues to the highest [7,22]. By using this procedure, it is ensured that lower function values are placed in larger hyper-rectangles. It can be seen in the middle panel of Figure 1. If all coordinates are equal, new smaller non-overlapping hyper-rectangles of n distinct measures are created. Figure 1 demonstrates the selection, sampling, and partitioning process at the initialization and the first two DIRECT iterations on the two-dimensional Bukin6 test problem.

In contrast to initialization, from the first iteration onward (), the selection of the so-called “potentially optimal” hyper-rectangles that should be further explored is not trivial. Let us formalize this selection process.

Let the current partition in iteration k be defined as

where and is the index set identifying the current partition . The next partition, , is obtained by subdividing selected POHs from the current partition . Note that at the first iteration, there is only one candidate, , which is automatically potentially optimal. The formal requirement of potential optimality in future iterations is stated in Definition 1.

Definition 1.

Let denote the center sampling point and be a measure (equivalently, sometimes called distance or size) of the hyper-rectangle . Let be a positive constant and be the best currently found value of the objective function. A hyper-rectangle is said to be potentially optimal if there exists some rate-of-change (Lipschitz) constant such that

where the measure of the hyper-rectangle is

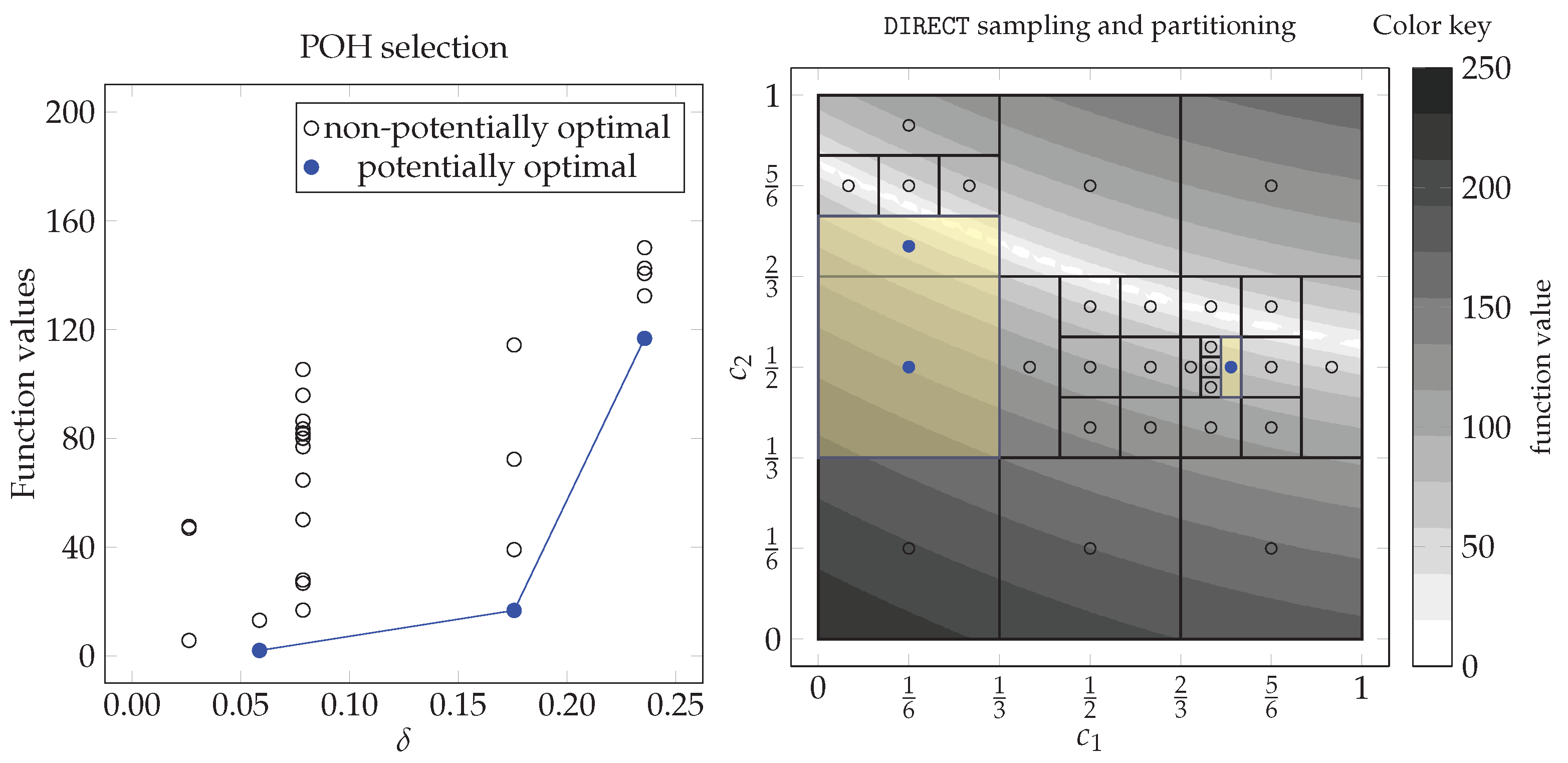

The hyper-rectangle is potentially optimal if the lower Lipschitz bound for the objective function computed by the left-hand side of (5) is the smallest one with some positive constant in the current partition . A geometrical interpretation of POH selection using Definition 1 is illustrated on the left side of Figure 2. Here, each hyper-rectangle is represented as a point whose horizontal coordinate is equal to the measure () and vertical coordinate is equal to the function value attained at the midpoint . The hyper-rectangles satisfying Definition 1 are marked in blue and correspond to the lower-right convex hull of the points. Selected hyper-rectangles are then sampled and subdivided. This process continues until some stopping condition is satisfied and is summarized in Algorithm 1.

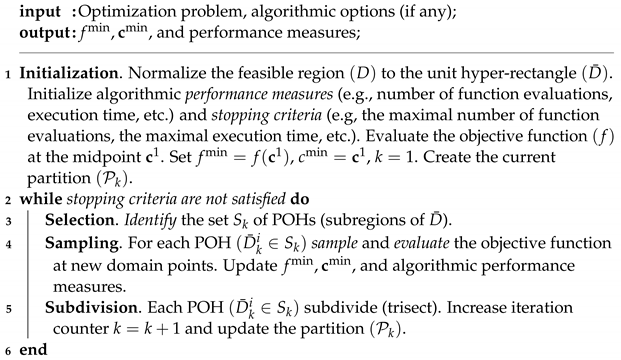

| Algorithm 1: Main steps of the DIRECT algorithm |

|

Figure 2.

Visualization of selected potentially optimal rectangles in the fifth iteration of the DIRECT algorithm solving two-dimensional Bukin6 test problem.

2.2. Two-Step Pareto Selection Based 1-DTC-GL Algorithm

Our previous work [29] introduced a new two-step Pareto selection [30] based algorithm DIRECT-GL. In [21], we investigated the most efficient partitioning schemes combined with a Pareto selection based on two steps. Hyper-rectangular partitioning based on 1-Dimensional Trisection and sampling at the Center points combined with two-step (Global and Local) Pareto selection (1-DTC-GL) was one of the most efficient DIRECT-type approaches. The POH selection in 1-DTC-GL is the most significant difference compared to the original DIRECT. In each iteration, 1-DTC-GL performs the identification of POHs twice. Both times, it selects only Pareto-optimal hyper-rectangles. In the following, we formally state two algorithms used to find them.

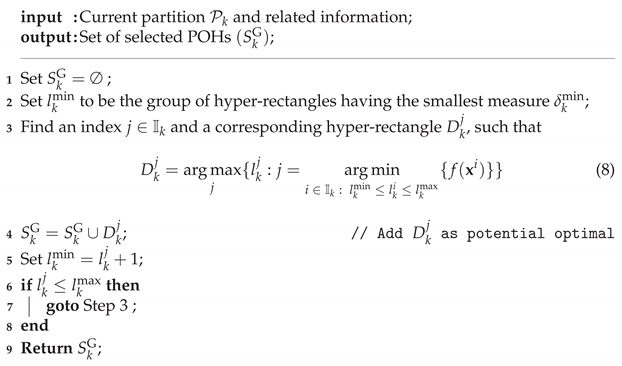

Let be the set of all different indices at the current partition , corresponding to the groups of hyper-rectangles having the same measure . Then, the minimum value corresponds to the group of hyper-rectangles having the smallest measure , while —having the largest measure , i. e., . Algorithm 2 presents the main steps for identifying Pareto optimal hyper-rectangles, i.e., non-dominated on measure (the higher, the better) and midpoint function value (the lower, the better).

| Algorithm 2: Pareto selection enhancing the global search |

|

Similarly, Algorithm 3 selects the hyper-rectangles that are non-dominated in measure and distance from the current minimum point (the closer, the better). When both steps are completed, the unique union of these two sets, from Algorithm 2 and from Algorithm 3, is used. This way, in 1-DTC-GL, the set of POHs is enlarged with various size hyper-rectangles nearest the current minimum point , ensuring a broader examination around the current minima.

| Algorithm 3: Pareto selection enhancing the local search |

| input: Current partition and related information; output: Set of selected POHs ; |

|

Let us summarize the differences between the two-step Pareto selection schemes and the original DIRECT. The selection of POH in 1-DTC-GL is performed twice, taking into account the objective function values and Euclidean distances from the current minima. This way, the set of POHs is enlarged by considering more medium-sized hyper-rectangles and may be more global. The local selection step includes more hyper-rectangles around current minima. In this way, refinement of the solution is accelerated. On the other hand, it can have the opposite effect, as this selection scheme does not protect against over-exploration in sub-optimal regions. In the original DIRECT, the parameter in Equation (6) is used to protect against this [7,28]. However, in 1-DTC-GL, even a tiny hyper-rectangle, where only negligible improvement can be expected, can be selected. Furthermore, the local selection step may be excessive when the current minima do not improve in a series of consecutive iterations.

2.3. Review of Excessive Local Refinement Reduction Techniques

This section reviews techniques introduced in the DIRECT literature for reducing excessive local refinement.

2.3.1. Replacing the Minimum Value with an Average and Median Values

In the original selection of POHs, Equation (6) in Definition 1 is used to protect against excessive refinement around the current local minima [7,28]. In [7], the authors obtained good results for values of ranging from to , while in [24,26,31,32], the authors introduced an adaptive scheme for the parameter .

However, in [23], it was observed that such a selection strategy is sensitive to additive scaling of the objective function. Additionally, the DIRECT algorithm performs poorly when the objective function values are large. To overcome this, the authors suggested scaling the values of the functions by subtracting the median value ) calculated from all the collected values. Formally, a new DIRECT variation, called DIRECT-m, replaces Equation (6) with:

A few years later, a similar idea was extended in [25]. Again, to reduce the sensitivity for additive scaling of the objective function, the authors of the DIRECT-a algorithm proposed using the average value instead:

Equations (10) and (11) also work also work as protection from over-exploration of the local minima.

2.3.2. Limiting the Measure of Hyper-Rectangles

In [33], the authors introduced the aggressive selection of POH. This strategy selects at least one hyper-rectangle from each group of different measures with the lowest function value. This way, the set of POHs enlarges by including non-potentially Lipschitz optimal hyper-rectangles. Although this solution positively affected parallelism [34], it did not have a strong positive effect on sequential algorithms of type DIRECT. In the sequential context, the apparent shortcoming is that hyper-rectangles proliferate as the number of iterations increases, often leading to a memory allocation failure.

To overcome it, the authors in [34] suggested limiting the refinement of the search space when the measure of hyper-rectangles reached some prescribed limit . It has been shown that memory usage may be reduced from to . The parameter has the same purpose as Equation (6), i.e., to avoid wasting function evaluations by “over-exploring” the local minimum. The authors of [21] have set to the size of a hyper-rectangle subdivided times. It is a relatively small and safe value since the choice of the parameter can be dangerous. More significant value can prevent the algorithm from reaching the minimum within the required tolerance.

2.3.3. Balancing the Local and Global Searches

Jones in [22] argued the need for the strategy to better balance emphasis on the local and global search. An approach could be to run a local search subroutine when there is an improvement in the current minima value [18]. However, this would already be a hybridized DIRECT-type algorithm requiring incorporating a separate local algorithm. This paper focuses on strategies without leaving the DIRECT algorithm framework.

Another approach for balancing the emphasis on the global and local search within the DIRECT-type method is the two-phase “globally-biased” technique. DIRECT-type globally-biased algorithms were proposed and experimentally investigated recently [10,28]. The suggested algorithms operate in the traditional phase until a sufficient number of subdivisions have been completed without improving . Once is explored well, the algorithm switches to the global phase and examines larger and far away hyper-rectangles. The algorithm switches back to the traditional phase when the value is improved. It also performs one “security” iteration of the traditional phase at every certain number of iterations. Therefore, the global phase reduces the number of POHs, excluding small hyper-rectangles around the well-explored minimum.

2.4. New Two-Step Pareto Selection Based Algorithmic Variations for Excessive Local Refinement Reduction

In this section, we define six novel 1-DTC-GL algorithmic variations to reduce the potentially excessive local refinement of the original two-step Pareto selection scheme.

2.4.1. 1-DTC-GL-min Algorithm

The 1-DTC-GL-min algorithm incorporates Equation (6) from Definition 1 into the original two-step Pareto selection scheme. Therefore, in Algorithm 2, Line 2, is set to the group of hyper-rectangles with the lowest such that Equation (6) still holds. When such a candidate is found, a traditional two-step Pareto selection scheme is applied between hyper-rectangles of larger diameters.

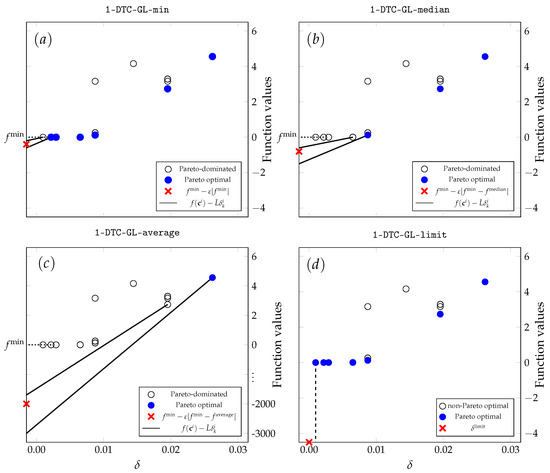

The candidate selection of 1-DTC-GL-min is illustrated in part of Figure 3. The illustrative example is given on a two-dimensional Csendes test problem in the thirteenth iteration of the algorithm. Since the considered techniques do not affect the selection of larger hyper-rectangles, the x-axis is limited to . The y-axis has also been reduced to demonstrate the effectiveness of the strategies under consideration.

Figure 3.

Comparison of two-step Pareto selection using four excessive local refinement reduction techniques implemented to 1-DTC-GL. All four graphs illustrate the selection in the thirteenth iteration of the two-dimensional Csendes test problem with . The selected hyper-rectangles using 1-DTC-GL-min are shown in part (a), 1-DTC-GL-median in part (b), 1-DTC-GL-average in part (c), and 1-DTC-GL-limit in part (d). For clarity, only small diameter hyper-rectangles are shown, as all excessive refinement reduction techniques have no impact on larger hyper-rectangles.

Here, the current minimum value is very close to zero . Equation (6) requires the Lipschitz lower bound to be less than . Therefore, 1-DTC-GL-min excludes tiny hyper-rectangles where the value of the objective function at their midpoint is close to the value. When the current best point is not the global one, selecting tiny hyper-rectangles could significantly delay the discovery of the global one.

2.4.2. 1-DTC-GL-median Algorithm

The 1-DTC-GL-median algorithm incorporates Equation (10) into the original two-step Pareto selection scheme. In 2 2, 1-DTC-GL-median sets to the group of hyper-rectangles with the lowest measure such that Equation (10) still holds. As for 1-DTC-GL-min, when such a hyper-rectangle is found, a traditional two-step Pareto selection scheme is applied between hyper-rectangles of larger diameters.

The result of 1-DTC-GL-median selection is illustrated in part of Figure 3. Equation (10) requires the Lipschitz lower bound to be less than . Since the median value is much higher than 0, 1-DTC-GL-median selects less small hyper-rectangles than 1-DTC-GL-min. This makes the search more global, but can also have a negative impact on the refinement of the global solution.

2.4.3. 1-DTC-GL-average Algorithm

The 1-DTC-GL-average algorithm incorporates Equation (11) into the original two-step Pareto selection scheme. In Algorithm 2, Line 2, 1-DTC-GL-average sets to the group of hyper-rectangles with the slightest measure such that Equation (11) still holds. The selection between larger hyper-rectangles is analogous to the 1-DTC-GL-min and 1-DTC-GL-median algorithms.

The result of 1-DTC-GL-average selection is illustrated in part of Figure 3. Equation (11) requires the Lipschitz lower bound to be less than . Since the Csende test problem has large extreme values, is much larger than . Thus, the 1-DTC-GL-average makes the search even more global 1-DTC-GL-median, which can hurt the refinement of the global solution, requiring the selection of smaller hyper-rectangles.

2.4.4. 1-DTC-GL-limit Algorithm

1-DTC-GL-limit limits the refinement of the search space when the measure of hyper-rectangles reaches some prescribed limit . In 1-DTC-GL-limit, is set to the size of a hyper-rectangle subdivided times. Furthermore, regardless of the hyper-rectangle measure, 1-DTC-GL-limit always selects the hyper-rectangle with .

The result of 1-DTC-GL-limit selection is illustrated in part of Figure 3. Since all hyper-rectangles have measures , the selected set is the same as for the 1-DTC-GL. The differences will appear in later iterations when the hyper-rectangles already subdivided more than 20 times appear.

2.4.5. 1-DTC-GL-gb Algorithm

1-DTC-GL-gb incorporates the “globally-biased” technique into a two-step Pareto selection scheme. The 1-DTC-GL-gb algorithm performs traditional two-step Pareto selection, using Algorithms 2 and 3, until a sufficient number of subdivisions have been completed without improving . Once has been well explored, the 1-DTC-GL-gb algorithm switches to the global phase and performs only Pareto selection improving the global search (Algorithm 2). The 1-DTC-GL-gb algorithm switches back to the traditional phase when the value is improved and during “security” iteration. Finally, we note that the recommended values [10,28] for the additional parameters necessary to switch between phases are used in 1-DTC-GL-gb.

2.4.6. 1-DTC-GL-rev Algorithm

1-DTC-GL-rev incorporates the idea from [18], i.e., it performs the local search selection using Algorithm 3 only when there is an improvement in . The rest of the time, it selects hyper-rectangles using only Algorithm 2 and reduces the impact on local search.

3. Results and Discussions

In this section, we compare the performance of six techniques for reducing the local refinement applied to the 1-DTC-GL algorithm, which showed promising results in our recent computational studies [17,21]. Six newly constructed algorithmic modifications are empirically evaluated and compared with the original 1-DTC-GL algorithm. The algorithmic variations are examined using the most up-to-date version of the DIRECTGOLib v1.2 [35] library. A summary and properties of all box-constrained optimization problems from DIRECTGOLib v1.2 [35] are given in Appendix A, Table A1 and Table A2. Table A1 provides the characteristics of 67 test problems with fixed dimensions, while Table A2 presents 55 test problems with varying dimensionality. In both tables, the main features are reported: problem number (#), problem name, source, dimension (n), default optimization domain (D), perturbed optimization domain (), problem type, and known minimum (). The default domains are taken from the literature and listed in the third column of Table A1 and Table A2. For some problems, the original domain is perturbed () so that the solutions are not located at their midpoints or other locations favorable for any tested algorithm. Some of these test problems have several variants, e.g. AckleyN, BiggsEXP, Bohachevsky, Hartman, ModSchaffer, and Shekel. All test problems listed in Table A2 can be tested for varying dimensionality. For the 55 test problems that can be used specifying any dimension size (n), we considered four different values, and 20, leading to the 287 test problems (see the summary in Table 1).

Table 1.

Characteristics of DIRECTGOLib v1.2 test problems.

Implementation and testing are performed using an Intel R Core i5-10400@2.90 GHz Processor, 16 GB of RAM, and MATLAB R2022a software running on the Windows 10 Education operating system. The results returned by the algorithms were compared with the solution for each problem. An algorithm was assumed to have solved the test problem if it returned a solution whose objective function value did not exceed error. For all analytical test cases where the global optimal value is known prior, a stopping criterion based on the percentage error was applied:

where is the known global optimum. The algorithms were stopped if the percentage error became smaller than the set value or if the number of function evaluations exceeded the prescribed .

Three criteria were recorded for each algorithm: the average number of function evaluations , the average number of iterations , and the average execution time measured in seconds. Table 2 summarizes experimental results on 287 test problems from DIRECTGOLib v1.2. Here, the first column gives the algorithm’s name, while the second column indicates the criteria. Average values are given in columns three to eleven, solving different subsets of test problems, such as low dimensional , higher-dimensionality , convex, non-convex, uni-modal, multi-modal, problems with global minimum value equal to zero, or only those with a non-zero global minimum. The twelfth column shows the median values, while the last column shows the success rate as the proportion of solved problems.

Table 2.

The average number of function evaluations (), iterations () and execution time () using 1-DTC-GL and six newly introduced variations, on the DIRECTGOLib v1.2 test problems set.

As can be seen from the success rate values, 1-DTC-GL-gb ranks first among the seven algorithmic variations tested. However, the difference between the first two places is minimal. The 1-DTC-GL-gb algorithm solved only one more problem (225) than the original 1-DTC-GL algorithm (224). It indicates that the original 1-DTC-GL, which does not require additional parameters as 1-DTC-GL-gb, can successfully handle the excessive local refinement. The third best is 1-DTC-GL-limit , and the fourth is 1-DTC-GL-min, based on the original DIRECT strategy for excessive local refinement prevention. 1-DTC-GL-rev is only in fifth place but works well on uni-modal test problems. Meanwhile, the worst algorithms are 1-DTC-GL-average and 1-DTC-GL-median. The [25] technique applied in the 1-DTC-GL-average algorithm worsened the overall average number of objective function evaluations by compared to 1-DTC-GL. Further, it was observed that the 1-DTC-GL-average algorithm had suffered the most on test problems with , but the opposite when .

These findings suggest that the restriction on selecting small hyper-rectangles may prevent the algorithm from converging to a solution, even with the relatively low accuracy used in this study. It is especially apparent when the solution is equal to zero. All tested local refinement reduction techniques hurt 1-DTC-GL performance.

Not surprisingly, the lowest overall average number of objective function evaluations is obtained again with the 1-DTC-GL-gb algorithm and is approximately lower than with the second best, 1-DTC-GL. As can be seen, ranking the algorithms in terms of success rate and overall average results is analogous, since the success rate depends directly on the number of functions.

Furthermore, although the lowest value median is obtained again with the 1-DTC-GL-gb algorithm, the second best is the 1-DTC-GL-rev. The median values mean that 1-DTC-GL-gb can solve at least half of these test problems with the best performance. Interestingly, 1-DTC-GL-rev was only in fifth place regarding the overall success rate but is second in median value. Like 1-DTC-GL-gb, it restricts local selection, and it seems this technique has the most potential to combat excessive local refinement. According to the median value, the original 1-DTC-GL is only in fifth place, and a value of around 30% is higher than 1-DTC-GL-gb. Moreover, the 1-DTC-GL-gb algorithm proved to be the most effective for non-convex, multi-modal, , , and subsets of test problems.

However, the improvement in the performance of 1-DTC-GL-gb also had some negative consequences. In general, the 1-DTC-GL-gb algorithm required more iterations than the best algorithm for this criterion, 1-DTC-GL. Since the 1-DTC-GL algorithm has no limitation on selecting extremely small and locally located hyper-rectangles, it results in more calculations of the objective functions being performed per iteration. Moreover, the average execution time is best with the original 1-DTC-GL algorithm. The local refinement reduction techniques increased the total number of iterations as well as the average running time of the algorithms. From this, we can summarize that in the case of cheap test functions, the original 1-DTC-GL is the best of all the algorithms tested, meaning that the local refinement reduction schemes are redundant. However, when the objective functions are expensive, the local refinement reduction techniques improve the performance, and 1-DTC-GL-gb is the best technique among all tested.

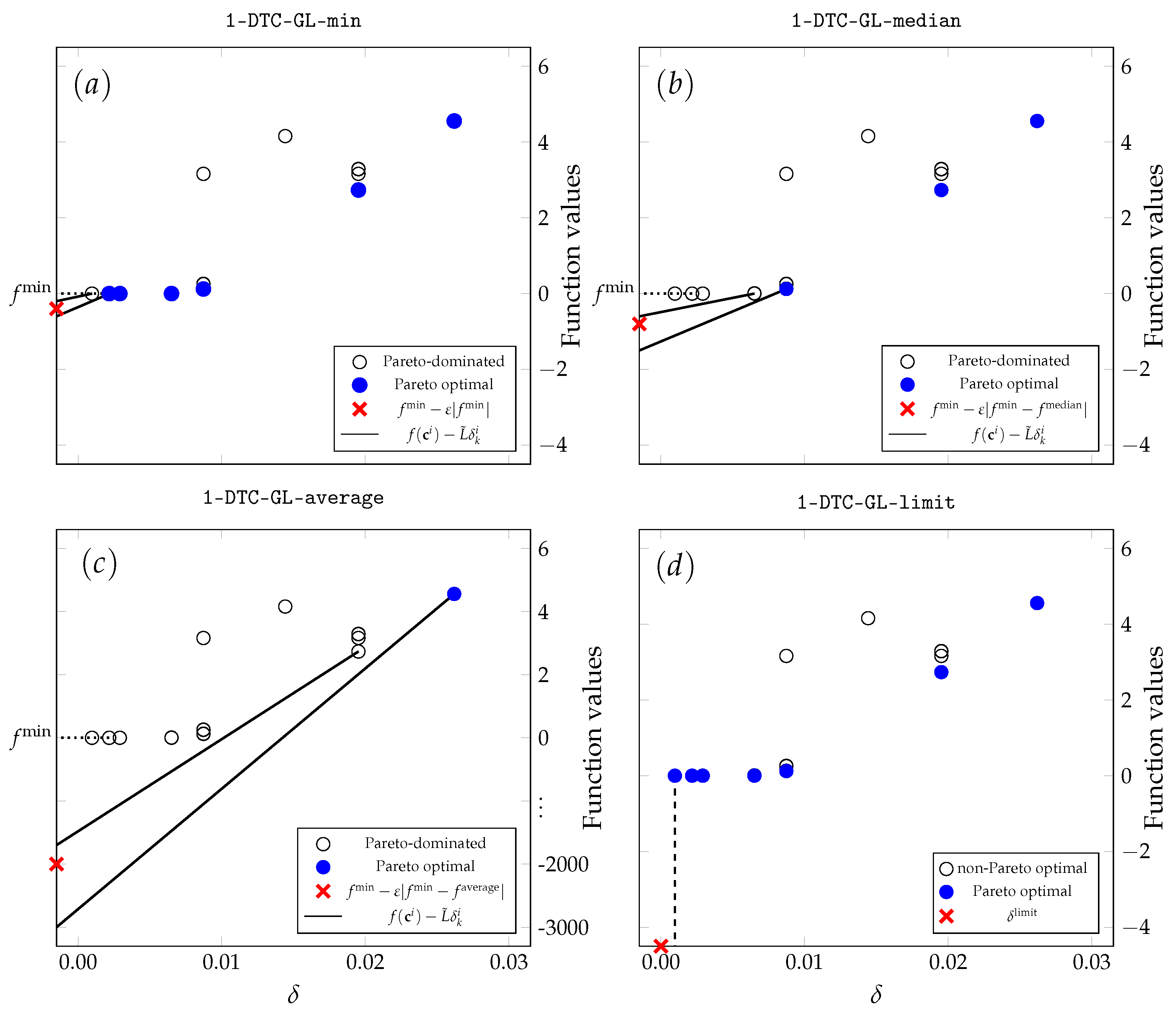

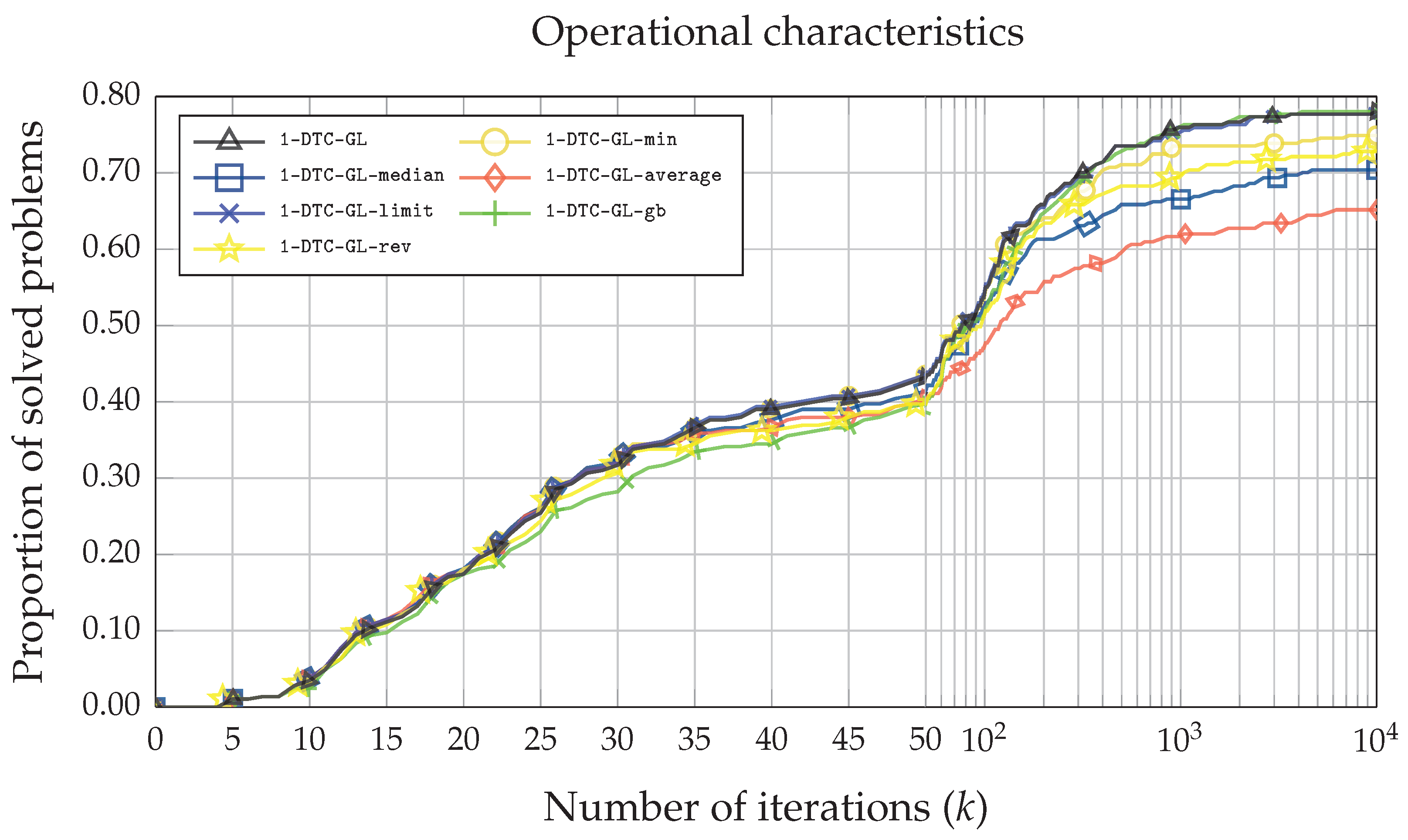

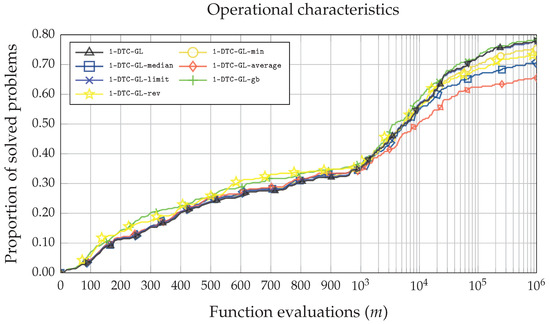

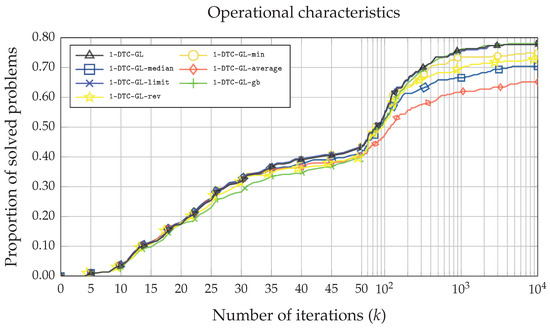

Furthermore, Figure 4 produces line plots of the operational characteristics [36,37], showing the relationship between the number of problems solved and the number of function evaluations. Four out of six techniques (1-DTC-GL-median, 1-DTC-GL-average, 1-DTC-GL-min, 1-DTC-GL-rev) implemented to restrict the selection of small hyper-rectangles had almost no impact on the original 1-DTC-GL algorithm solving the simplest test problems. All these four algorithms have almost identical performance curves to 1-DTC-GL. They were able to solve approximately (95 out of 287) test problems when the maximum allowed number of function evaluations was 1000. However, as the maximum allowed number of function evaluations increases, the performance efficiency of the four approaches starts to deteriorate compared to the original 1-DTC-GL algorithm. The algorithms with the worst performance are 1-DTC-GL-average and 1-DTC-GL-median. The best performance was achieved with the 1-DTC-GL-gb and 1-DTC-GL-rev algorithms. Moreover, while for simpler problems, 1-DTC-GL-rev performed well, for more complex problems, the efficiency deteriorates. The 1-DTC-GL-gb is the only algorithm with the same or better performance than the original 1-DTC-GL algorithm.

Figure 4.

Operational characteristics based on the number of function evaluations for all seven 1-DTC-GL algorithmic variations on the whole set of DIRECTGOLib v1.2 test problems.

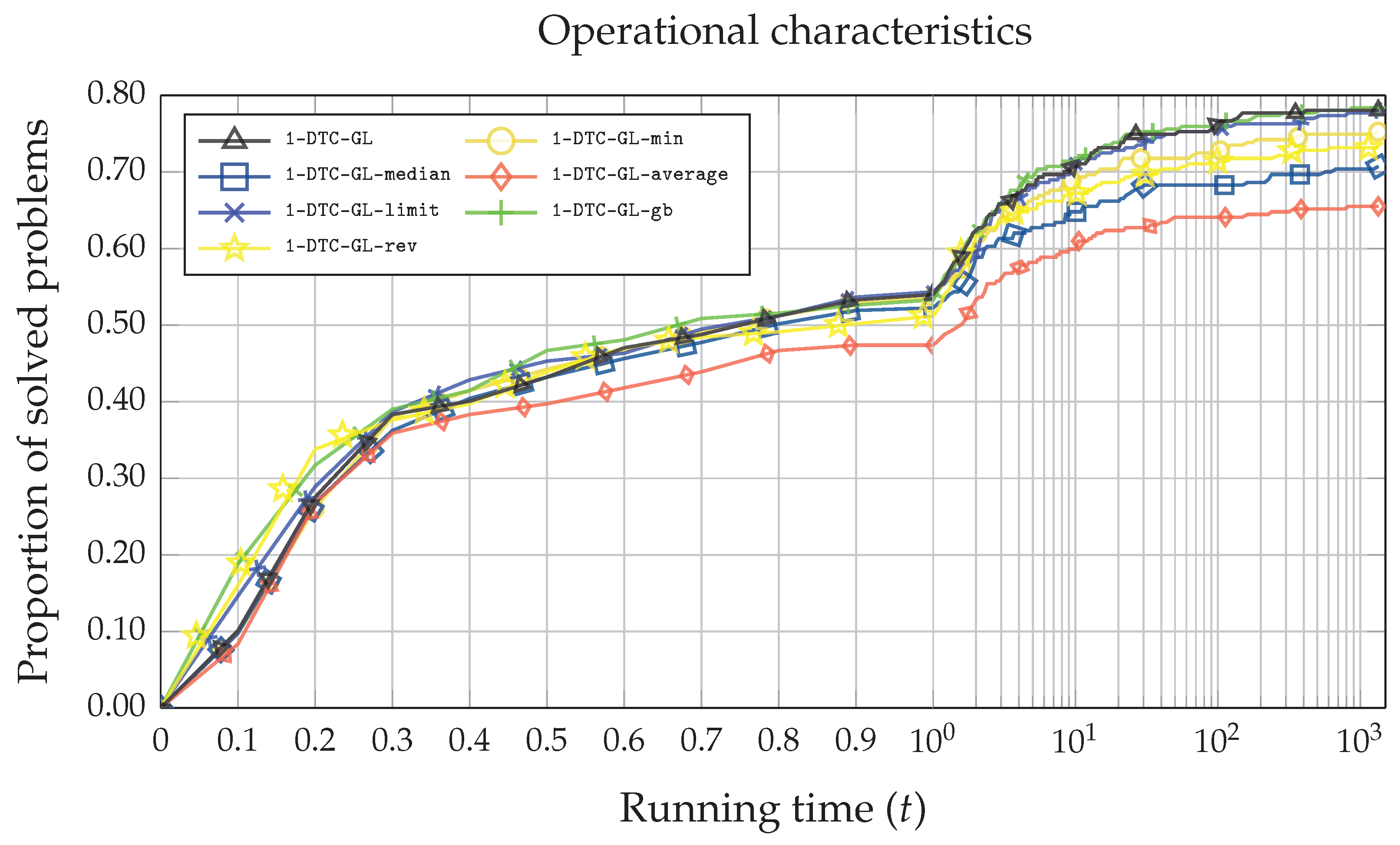

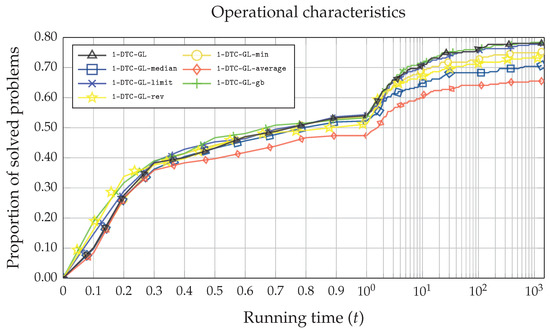

Finally, in Figure 5 and Figure 6, the operational characteristics based on the number of iterations of the function and the execution time are illustrated. Although the average number of iterations () using the 1-DTC-GL algorithm is the lowest (see Table 2), Figure 5 reveals that at least two other algorithms (1-DTC-GL-limit and 1-DTC-GL-gb) perform similarly. A similar situation can be seen in Figure 6, where the x-axis indicates the time in seconds while the y-axis represents the proportion of the problems solved. The most straightforward problems are solved slightly faster using 1-DTC-GL-rev and 1-DTC-GL-gb algorithms. However, as the execution time increases (), the performance efficiency of 1-DTC-GL and 1-DTC-GL-gb becomes almost identical, although the average time of the 1-DTC-GL algorithm is better (see in Table 2).

Figure 5.

Operational characteristics based on the number of iterations for all seven 1-DTC-GL algorithmic variations on the whole set of DIRECTGOLib v1.2 test problems.

Figure 6.

Operational characteristics based on the execution time in seconds for all seven 1-DTC-GL algorithmic variations on the whole set of DIRECTGOLib v1.2 test problems.

4. Conclusions and Potential Future Directions

This study reviewed existing excessive local refinement reduction techniques in the DIRECT context. The six identified techniques were applied to one of the fastest two-step Pareto selection-based algorithms (1-DTC-GL). As other algorithmic parameters were unchanged, this allowed us to assess the impact of each of them objectively.

The seven 1-DTC-GL algorithms were compared using three criteria: the average number of function evaluations, the average number of iterations and the average execution time. In terms of the number of objective functions, the 1-DTC-GL-gb algorithm performed the best, but only one less objective function solved 1-DTC-GL. The other five strategies tested hurt the speed of the original algorithm 1-DTC-GL. This finding made it clear that the restriction on selecting small hyper-rectangles may prevent the algorithms from converging to a solution, even with the relatively low accuracy used in this study. This is particularly evident when the solution to the problem equals zero. No strategy used in 1-DTC-GL has resulted in any noticeable improvements in this case, but instead has worsened it. Interestingly, in terms of iterations and execution time, the original algorithm 1-DTC-GL performed the best. That is because the local refinement reduction techniques increase the total number of iterations, as well as the average running time of the algorithms.

To sum up, the original 1-DTC-GL is the best of all tested algorithms for the cheap test functions, meaning that the local refinement reduction scheme is redundant. However, when objective functions are expensive, local refinement reduction techniques improve performance, and 1-DTC-GL-gb is the best algorithm among all tested. However, its effectiveness is also limited. Therefore, one of the potential future directions is the development of better-suited local refinement reduction techniques for two-step Pareto selection-based DIRECT-type algorithms. Another potential direction is the integration of all DIRECT-type algorithms into the new web-based tool for algebraic modeling and mathematical optimization [38,39]. Finally, since 1-DTC-GL is a DIRECT-type algorithm, the results of this paper can also be generalized to any DIRECT-type algorithm. We leave this as another promising future direction.

Author Contributions

L.S. and R.P. contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

DIRECTGOLib—DIRECT Global Optimization test problems Library is designed as a continuously-growing open-source GitHub repository to which anyone can easily contribute. The exact data underlying this article from DIRECTGOLib v1.2 can be accessed either on GitHub or at Zenodo: (accessed on 12 September 2022) https://github.com/blockchain-group/DIRECTGOLib and https://zenodo.org/record/6617799, and used under the MIT license. We welcome contributions and corrections to it. The original 1-DTC-GL algorithm and six new variations are available at the open-access GitHub repository (accessed on 27 September 2022): https://github.com/blockchain-group/DIRECTGO.

Acknowledgments

Vilnius University Institute of Data Science and Digital Technologies.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. DIRECTGOLib v1.2 Library

Table A1.

Key characteristics of box-constrained global optimization problems with fixed n from DIRECTGOLib v1.2 [35]. Data adapted from [20].

Table A1.

Key characteristics of box-constrained global optimization problems with fixed n from DIRECTGOLib v1.2 [35]. Data adapted from [20].

| # | Name | Source | n | D | Type | No. of Minima | ||

|---|---|---|---|---|---|---|---|---|

| 1 | AckleyN2 | [40] | 2 | uni-modal | convex | |||

| 2 | AckleyN3 | [40] | 2 | uni-modal | convex | |||

| 3 | AckleyN4 | [40] | 2 | non-convex | multi-modal | |||

| 4 | Adjiman | [40] | 2 | - | non-convex | multi-modal | ||

| 5 | BartelsConn | [40] | 2 | non-convex | multi-modal | |||

| 6 | Beale | [41,42] | 2 | - | non-convex | multi-modal | ||

| 7 | BiggsEXP2 | [40] | 2 | - | non-convex | multi-modal | ||

| 8 | BiggsEXP3 | [40] | 3 | - | non-convex | multi-modal | ||

| 9 | BiggsEXP4 | [40] | 4 | - | non-convex | multi-modal | ||

| 10 | BiggsEXP5 | [40] | 5 | - | non-convex | multi-modal | ||

| 11 | BiggsEXP6 | [40] | 6 | - | non-convex | multi-modal | ||

| 12 | Bird | [40] | 2 | - | non-convex | multi-modal | ||

| 13 | Bohachevsky1 | [41,42] | 2 | convex | uni-modal | |||

| 14 | Bohachevsky2 | [41,42] | 2 | non-convex | multi-modal | |||

| 15 | Bohachevsky3 | [41,42] | 2 | non-convex | multi-modal | |||

| 16 | Booth | [41,42] | 2 | - | convex | uni-modal | ||

| 17 | Brad | [40] | 3 | - | non-convex | multi-modal | ||

| 18 | Branin | [41,43] | 2 | - | non-convex | multi-modal | ||

| 19 | Bukin4 | [40] | 2 | - | convex | multi-modal | ||

| 20 | Bukin6 | [42] | 2 | - | convex | multi-modal | ||

| 21 | CarromTable | [44] | 2 | - | non-convex | multi-modal | ||

| 22 | ChenBird | [40] | 2 | - | non-convex | multi-modal | ||

| 23 | ChenV | [40] | 2 | - | non-convex | multi-modal | ||

| 24 | Chichinadze | [40] | 2 | - | non-convex | multi-modal | ||

| 25 | Cola | [40] | 17 | - | non-convex | multi-modal | ||

| 26 | Colville | [41,42] | 4 | - | non-convex | multi-modal | ||

| 27 | Cross_function | [44] | 2 | - | non-convex | multi-modal | ||

| 28 | Cross_in_Tray | [42] | 2 | - | non-convex | multi-modal | ||

| 29 | CrownedCross | [44] | 2 | - | non-convex | multi-modal | ||

| 30 | Crosslegtable | [44] | 2 | - | non-convex | multi-modal | ||

| 31 | Cube | [44] | 2 | - | convex | multi-modal | ||

| 32 | Damavandi | [44] | 2 | - | non-convex | multi-modal | ||

| 33 | Dejong5 | [42] | 2 | - | non-convex | multi-modal | ||

| 34 | Dolan | [40] | 5 | - | non-convex | multi-modal | ||

| 35 | Drop_wave | [42] | 2 | non-convex | multi-modal | |||

| 36 | Easom | [41,42] | 2 | non-convex | multi-modal | |||

| 37 | Eggholder | [42] | 2 | - | non-convex | multi-modal | ||

| 38 | Giunta | [44] | 2 | - | non-convex | multi-modal | ||

| 39 | Goldstein_and _Price | [41,43] | 2 | non-convex | multi-modal | |||

| 40 | Hartman3 | [41,42] | 3 | - | non-convex | multi-modal | ||

| 41 | Hartman4 | [41,42] | 4 | - | non-convex | multi-modal | ||

| 42 | Hartman6 | [41,42] | 6 | - | non-convex | multi-modal | ||

| 43 | HelicalValley | [44] | 3 | - | convex | multi-modal | ||

| 44 | HimmelBlau | [44] | 2 | - | convex | multi-modal | ||

| 45 | Holder_Table | [42] | 2 | - | non-convex | multi-modal | ||

| 46 | Hump | [41,42] | 2 | - | non-convex | multi-modal | ||

| 47 | Langermann | [42] | 2 | - | non-convex | multi-modal | ||

| 48 | Leon | [44] | 2 | - | convex | multi-modal | ||

| 49 | Levi13 | [44] | 2 | - | non-convex | multi-modal | ||

| 50 | Matyas | [41,42] | 2 | convex | uni-modal | |||

| 51 | McCormick | [42] | 2 | - | convex | multi-modal | ||

| 52 | ModSchaffer1 | [45] | 2 | non-convex | multi-modal | |||

| 53 | ModSchaffer2 | [45] | 2 | non-convex | multi-modal | |||

| 54 | ModSchaffer3 | [45] | 2 | non-convex | multi-modal | |||

| 55 | ModSchaffer4 | [45] | 2 | non-convex | multi-modal | |||

| 56 | PenHolder | [41,42] | 2 | - | non-convex | multi-modal | ||

| 57 | Permdb4 | [41,42] | 4 | - | non-convex | multi-modal | ||

| 58 | Powell | [41,42] | 4 | - | convex | multi-modal | ||

| 59 | Power_Sum | [41,42] | 4 | convex | multi-modal | |||

| 60 | Shekel5 | [41,42] | 4 | - | non-convex | multi-modal | ||

| 61 | Shekel7 | [41,42] | 4 | - | non-convex | multi-modal | ||

| 62 | Shekel10 | [41,42] | 4 | - | non-convex | multi-modal | ||

| 63 | Shubert | [41,42] | 2 | - | non-convex | multi-modal | ||

| 64 | TestTubeHolder | [44] | 2 | - | non-convex | multi-modal | ||

| 65 | Trefethen | [44] | 2 | - | non-convex | multi-modal | ||

| 66 | Wood | [45] | 4 | non-convex | multi-modal | |||

| 67 | Zettl | [44] | 2 | - | convex | multi-modal |

i—indexes used for variable bounds (1, …, n). α—domain D was perturbed. The sign “-” means that is the same as D.

Table A2.

Key characteristics of box-constrained global optimization problems with varying n from DIRECTGOLib v1.2 [35]. Data adapted from [20].

Table A2.

Key characteristics of box-constrained global optimization problems with varying n from DIRECTGOLib v1.2 [35]. Data adapted from [20].

| # | Name | Source | D | Type | No. of Minima | ||

|---|---|---|---|---|---|---|---|

| 1 | Ackley | [41,42] | non-convex | multi-modal | |||

| 2 | AlpineN1 | [44] | non-convex | multi-modal | |||

| 3 | Alpine | [44] | non-convex | multi-modal | |||

| 4 | Brown | [40] | - | convex | uni-modal | ||

| 5 | ChungR | [40] | - | convex | uni-modal | ||

| 6 | Csendes | [44] | convex | multi-modal | |||

| 7 | Cubic | [42] | - | convex | uni-modal | ||

| 8 | Deb01 | [44] | non-convex | multi-modal | |||

| 9 | Deb02 | [44] | non-convex | multi-modal | |||

| 10 | Dixon_and_Price | [41,42] | - | convex | multi-modal | ||

| 11 | Dejong | [42] | - | convex | uni-modal | ||

| 12 | Exponential | [40] | - | non-convex | multi-modal | ||

| 13 | Exponential2 | [42] | - | non-convex | multi-modal | ||

| 14 | Exponential3 | [42] | - | non-convex | multi-modal | ||

| 15 | Griewank | [41,42] | non-convex | multi-modal | |||

| 16 | Layeb01 | [46] | convex | uni-modal | |||

| 17 | Layeb02 | [46] | - | convex | uni-modal | ||

| 18 | Layeb03 | [46] | non-convex | multi-modal | |||

| 19 | Layeb04 | [46] | - | non-convex | multi-modal | ||

| 20 | Layeb05 | [46] | - | non-convex | multi-modal | ||

| 21 | Layeb06 | [46] | - | non-convex | multi-modal | ||

| 22 | Layeb07 | [46] | non-convex | multi-modal | |||

| 23 | Layeb08 | [46] | - | non-convex | multi-modal | ||

| 24 | Layeb09 | [46] | - | non-convex | multi-modal | ||

| 25 | Layeb10 | [46] | - | non-convex | multi-modal | ||

| 26 | Layeb11 | [46] | - | non-convex | multi-modal | ||

| 27 | Layeb12 | [46] | - | non-convex | multi-modal | ||

| 28 | Layeb13 | [46] | - | non-convex | multi-modal | ||

| 29 | Layeb14 | [46] | - | non-convex | multi-modal | ||

| 30 | Layeb15 | [46] | - | non-convex | multi-modal | ||

| 31 | Layeb16 | [46] | - | non-convex | multi-modal | ||

| 32 | Layeb17 | [46] | - | non-convex | multi-modal | ||

| 33 | Layeb18 | [46] | - | non-convex | multi-modal | ||

| 34 | Levy | [41,42] | non-convex | multi-modal | |||

| 35 | Michalewicz | [41,42] | - | non-convex | multi-modal | ||

| 36 | Pinter | [44] | non-convex | multi-modal | |||

| 37 | Qing | [44] | - | non-convex | multi-modal | ||

| 38 | Quadratic | [42] | - | convex | uni-modal | ||

| 39 | Rastrigin | [41,42] | non-convex | multi-modal | |||

| 40 | Rosenbrock | [41,43] | non-convex | uni-modal | |||

| 41 | Rotated_H_Ellip | [42] | convex | uni-modal | |||

| 42 | Schwefel | [41,42] | non-convex | multi-modal | |||

| 43 | SineEnvelope | [44] | - | non-convex | multi-modal | ||

| 44 | Sinenvsin | [45] | non-convex | multi-modal | |||

| 45 | Sphere | [41,42] | convex | uni-modal | |||

| 46 | Styblinski_Tang | [47] | non-convex | multi-modal | |||

| 47 | Sum_Squares | [47] | convex | uni-modal | |||

| 48 | Sum_Of_Powers | [42] | convex | uni-modal | |||

| 49 | Trid | [41,42] | - | convex | multi-modal | ||

| 50 | Trigonometric | [41,42] | non-convex | multi-modal | |||

| 51 | Vincent | [47] | - | non-convex | multi-modal | ||

| 52 | WWavy | [40] | non-convex | multi-modal | |||

| 53 | XinSheYajngN1 | [40] | non-convex | multi-modal | |||

| 54 | XinSheYajngN2 | [40] | non-convex | multi-modal | |||

| 55 | Zakharov | [41,42] | convex | multi-modal |

i—indexes used for variable bounds (1, …, n). χ—solution depend on problem dimension. α—domain D was perturbed. The sign “-” means that is the same as D.

References

- Sergeyev, Y.D.; Kvasov, D.E.; Mukhametzhanov, M.S. On the efficiency of nature-inspired metaheuristics in expensive global optimization with limited budget. Sci. Rep. 2018, 8, 453. [Google Scholar] [CrossRef] [PubMed]

- Lee, C.Y.; Zhuo, G.L. A Hybrid Whale Optimization Algorithm for Global Optimization. Mathematics 2021, 9, 1477. [Google Scholar] [CrossRef]

- Al-Shaikh, A.; Mahafzah, B.A.; Alshraideh, M. Hybrid harmony search algorithm for social network contact tracing of COVID-19. Soft Comput. 2021, 1–23. [Google Scholar] [CrossRef] [PubMed]

- Zhigljavsky, A.; Žilinskas, A. Stochastic Global Optimization; Springer: New York, NY, USA, 2008. [Google Scholar]

- Horst, R.; Pardalos, P.M.; Thoai, N.V. Introduction to Global Optimization; Nonconvex Optimization and Its Application; Kluwer Academic Publishers: Berlin, Germany, 1995. [Google Scholar]

- Sergeyev, Y.D.; Kvasov, D.E. Deterministic Global Optimization: An Introduction to the Diagonal Approach; SpringerBriefs in Optimization; Springer: Berlin, Germany, 2017. [Google Scholar] [CrossRef]

- Jones, D.R.; Perttunen, C.D.; Stuckman, B.E. Lipschitzian Optimization Without the Lipschitz Constant. J. Optim. Theory Appl. 1993, 79, 157–181. [Google Scholar] [CrossRef]

- Carter, R.G.; Gablonsky, J.M.; Patrick, A.; Kelley, C.T.; Eslinger, O.J. Algorithms for noisy problems in gas transmission pipeline optimization. Optim. Eng. 2001, 2, 139–157. [Google Scholar] [CrossRef]

- Cox, S.E.; Haftka, R.T.; Baker, C.A.; Grossman, B.; Mason, W.H.; Watson, L.T. A Comparison of Global Optimization Methods for the Design of a High-speed Civil Transport. J. Glob. Optim. 2001, 21, 415–432. [Google Scholar] [CrossRef]

- Paulavičius, R.; Sergeyev, Y.D.; Kvasov, D.E.; Žilinskas, J. Globally-biased BIRECT algorithm with local accelerators for expensive global optimization. Expert Syst. Appl. 2020, 144, 11305. [Google Scholar] [CrossRef]

- Paulavičius, R.; Žilinskas, J. Simplicial Global Optimization; SpringerBriefs in Optimization; Springer: New York, NY, USA, 2014. [Google Scholar] [CrossRef]

- Stripinis, L.; Paulavičius, R.; Žilinskas, J. Penalty functions and two-step selection procedure based DIRECT-type algorithm for constrained global optimization. Struct. Multidiscip. Optim. 2019, 59, 2155–2175. [Google Scholar] [CrossRef]

- Paulavičius, R.; Žilinskas, J. Analysis of different norms and corresponding Lipschitz constants for global optimization. Technol. Econ. Dev. Econ. 2006, 36, 383–387. [Google Scholar] [CrossRef]

- Piyavskii, S.A. An algorithm for finding the absolute minimum of a function. Theory Optim. Solut. 1967, 2, 13–24. (In Russian) [Google Scholar] [CrossRef]

- Sergeyev, Y.D.; Kvasov, D.E. Lipschitz global optimization. In Wiley Encyclopedia of Operations Research and Management Science (in 8 volumes); Cochran, J.J., Cox, L.A., Keskinocak, P., Kharoufeh, J.P., Smith, J.C., Eds.; John Wiley & Sons: New York, NY, USA, 2011; Volume 4, pp. 2812–2828. [Google Scholar]

- Rios, L.M.; Sahinidis, N.V. Derivative-free optimization: A review of algorithms and comparison of software implementations. J. Glob. Optim. 2007, 56, 1247–1293. [Google Scholar] [CrossRef]

- Stripinis, L.; Paulavičius, R. DIRECTGO: A New DIRECT-Type MATLAB Toolbox for Derivative-Free Global Optimization. ACM Trans. Math. Softw. 2022, 1–45. [Google Scholar] [CrossRef]

- Jones, D.R. The Direct Global Optimization Algorithm. In The Encyclopedia of Optimization; Floudas, C.A., Pardalos, P.M., Eds.; Kluwer Academic Publishers: Dordrect, The Netherlands, 2001; pp. 431–440. [Google Scholar]

- Holmstrom, K.; Goran, A.O.; Edvall, M.M. User’s Guide for TOMLAB 7. 2010. Available online: https://tomopt.com/docs/TOMLAB.pdf (accessed on 15 November 2021).

- Stripinis, L.; Paulavičius, R. An extensive numerical benchmark study of deterministic vs. stochastic derivative-free global optimization algorithms. arXiv 2022, arXiv:2209.05759. [Google Scholar] [CrossRef]

- Stripinis, L.; Paulavičius, R. An empirical study of various candidate selection and partitioning techniques in the DIRECT framework. J. Glob. Optim. 2022, 1–31. [Google Scholar] [CrossRef]

- Jones, D.R.; Martins, J.R.R.A. The DIRECT algorithm: 25 years later. J. Glob. Optim. 2021, 79, 521–566. [Google Scholar] [CrossRef]

- Finkel, D.E.; Kelley, C.T. Additive scaling and the DIRECT algorithm. J. Glob. Optim. 2006, 36, 597–608. [Google Scholar] [CrossRef]

- Finkel, D.; Kelley, C. An Adaptive Restart Implementation of DIRECT; Technical Report CRSC-TR04-30; Center for Research in Scientific Computation, North Carolina State University: Raleigh, NC, USA, 2004; pp. 1–16. [Google Scholar]

- Liu, Q. Linear scaling and the DIRECT algorithm. J. Glob. Optim. 2013, 56, 1233–1245. [Google Scholar] [CrossRef]

- Liu, Q.; Zeng, J.; Yang, G. MrDIRECT: A multilevel robust DIRECT algorithm for global optimization problems. J. Glob. Optim. 2015, 62, 205–227. [Google Scholar] [CrossRef]

- Sergeyev, Y.D.; Kvasov, D.E. Global search based on diagonal partitions and a set of Lipschitz constants. SIAM J. Optim. 2006, 16, 910–937. [Google Scholar] [CrossRef]

- Paulavičius, R.; Sergeyev, Y.D.; Kvasov, D.E.; Žilinskas, J. Globally-biased DISIMPL algorithm for expensive global optimization. J. Glob. Optim. 2014, 59, 545–567. [Google Scholar] [CrossRef]

- Stripinis, L.; Paulavičius, R.; Žilinskas, J. Improved scheme for selection of potentially optimal hyper-rectangles in DIRECT. Optim. Lett. 2018, 12, 1699–1712. [Google Scholar] [CrossRef]

- De Corte, W.; Sackett, P.R.; Lievens, F. Designing pareto-optimal selection systems: Formalizing the decisions required for selection system development. J. Appl. Psychol. 2011, 96, 907–926. [Google Scholar] [CrossRef]

- Liu, Q.; Cheng, W. A modified DIRECT algorithm with bilevel partition. J. Glob. Optim. 2014, 60, 483–499. [Google Scholar] [CrossRef]

- Liu, H.; Xu, S.; Wang, X.; Wu, X.; Song, Y. A global optimization algorithm for simulation-based problems via the extended DIRECT scheme. Eng. Optim. 2015, 47, 1441–1458. [Google Scholar] [CrossRef]

- Baker, C.A.; Watson, L.T.; Grossman, B.; Mason, W.H.; Haftka, R.T. Parallel Global Aircraft Configuration Design Space Exploration. In Practical Parallel Computing; Nova Science Publishers, Inc.: Hauppauge, NY, USA, 2001; pp. 79–96. [Google Scholar]

- He, J.; Verstak, A.; Watson, L.T.; Sosonkina, M. Design and implementation of a massively parallel version of DIRECT. Comput. Optim. Appl. 2008, 40, 217–245. [Google Scholar] [CrossRef]

- Stripinis, L.; Paulavičius, R. DIRECTGOLib—DIRECT Global Optimization Test Problems Library, Version v1.2, GitHub. 2022. Available online: https://github.com/blockchain-group/DIRECTGOLib/tree/v1.2 (accessed on 10 July 2022).

- Grishagin, V.A. Operating characteristics of some global search algorithms. In Problems of Stochastic Search; Zinatne: Riga, Latvia, 1978; Volume 7, pp. 198–206. (In Russian) [Google Scholar]

- Strongin, R.G.; Sergeyev, Y.D. Global Optimization with Non-Convex Constraints: Sequential and Parallel Algorithms; Kluwer Academic Publishers: Dordrecht, The Netherlands, 2000. [Google Scholar]

- Jusevičius, V.; Oberdieck, R.; Paulavičius, R. Experimental Analysis of Algebraic Modelling Languages for Mathematical Optimization. Informatica 2021, 32, 283–304. [Google Scholar] [CrossRef]

- Jusevičius, V.; Paulavičius, R. Web-Based Tool for Algebraic Modeling and Mathematical Optimization. Mathematics 2021, 9, 2751. [Google Scholar] [CrossRef]

- Jamil, M.; Yang, X.S. A literature survey of benchmark functions for global optimisation problems. Int. J. Math. Model. Numer. Optim. 2013, 4, 150–194. [Google Scholar] [CrossRef]

- Hedar, A. Test Functions for Unconstrained Global Optimization. 2005. Available online: http://www-optima.amp.i.kyoto-u.ac.jp/member/student/hedar/Hedar_files/TestGO.htm (accessed on 22 March 2017).

- Surjanovic, S.; Bingham, D. Virtual Library of Simulation Experiments: Test Functions and Datasets. 2013. Available online: http://www.sfu.ca/~ssurjano/index.html (accessed on 22 March 2017).

- Dixon, L.; Szegö, C. The Global Optimisation Problem: An Introduction. In Towards Global Optimization; Dixon, L., Szegö, G., Eds.; North-Holland Publishing Company: Amsterdam, The Netherlands, 1978; Volume 2, pp. 1–15. [Google Scholar]

- Gavana, A. Global Optimization Benchmarks and AMPGO. Available online: http://infinity77.net/global_optimization/index.html (accessed on 22 July 2021).

- Mishra, S.K. Some New Test Functions for Global Optimization and Performance of Repulsive Particle Swarm Method. 2006. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=926132 (accessed on 23 August 2006). [CrossRef]

- Abdesslem, L. New hard benchmark functions for global optimization. arXiv 2022, arXiv:2202.04606. [Google Scholar]

- Clerc, M. The swarm and the queen: Towards a deterministic and adaptive particle swarm optimization. In Proceedings of the 1999 Congress on Evolutionary Computation-CEC99 (Cat. No. 99TH8406), Washington, DC, USA, 6–9 July 1999; Volume 3, pp. 1951–1957. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).