Sentence-CROBI: A Simple Cross-Bi-Encoder-Based Neural Network Architecture for Paraphrase Identification

Abstract

1. Introduction

2. Related Work

3. Corpora

4. Methodology

4.1. Text Preprocessing

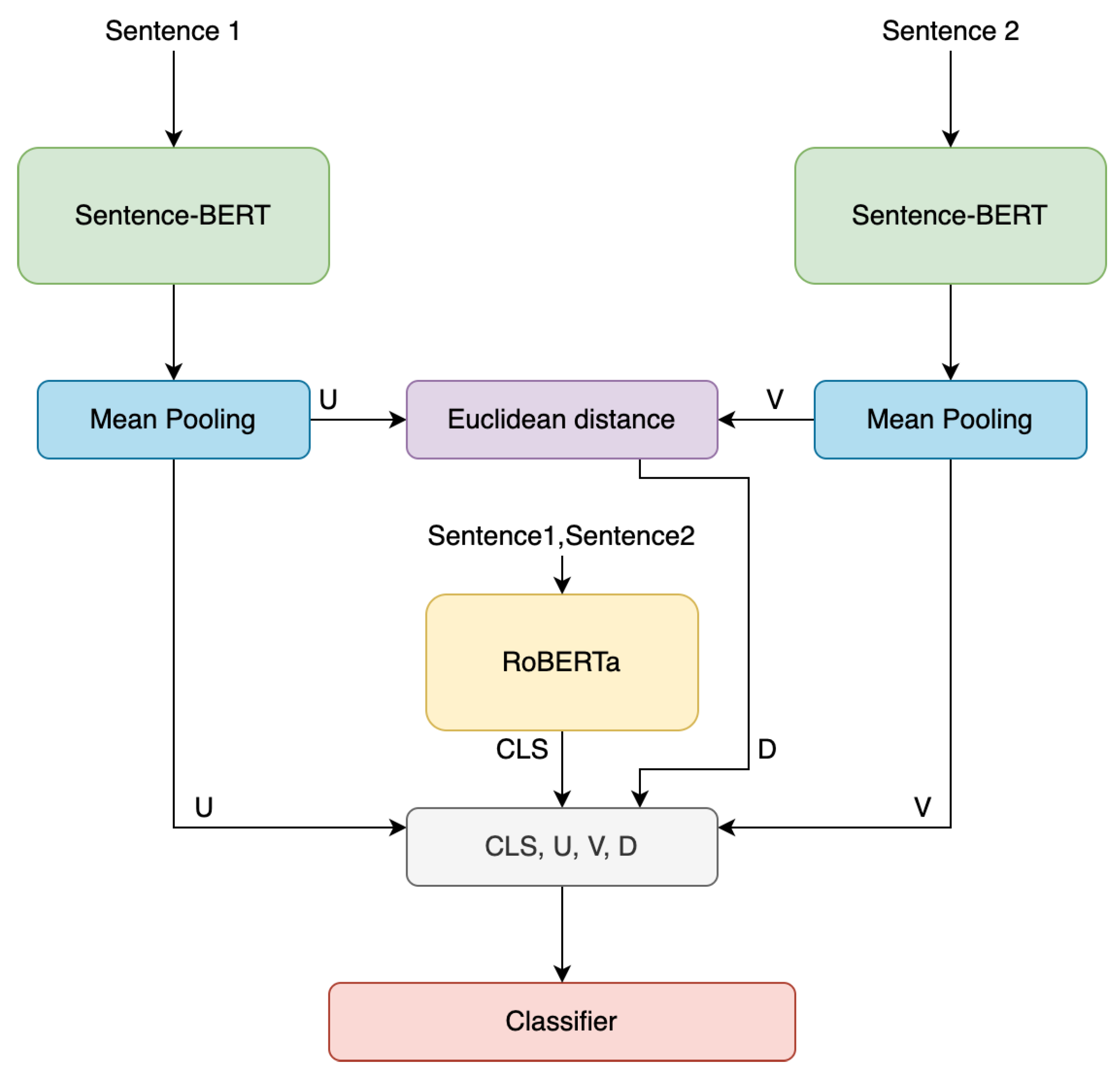

4.2. Model

- is the actual label;

- denotes the probability predicted by the model;

- N is the size of the test set.
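Read together, the three quantities above are the ingredients of a binary cross-entropy objective. Assuming that is the loss intended here, and writing $y_i$ for the actual label and $\hat{y}_i$ for the predicted probability of instance $i$ (both symbols are assumptions introduced for this sketch, not notation taken from the text), it would take the form:

```latex
\mathcal{L} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right]
```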

4.3. Fine-Tuning

4.4. Ensemble Learning

4.5. Training Details

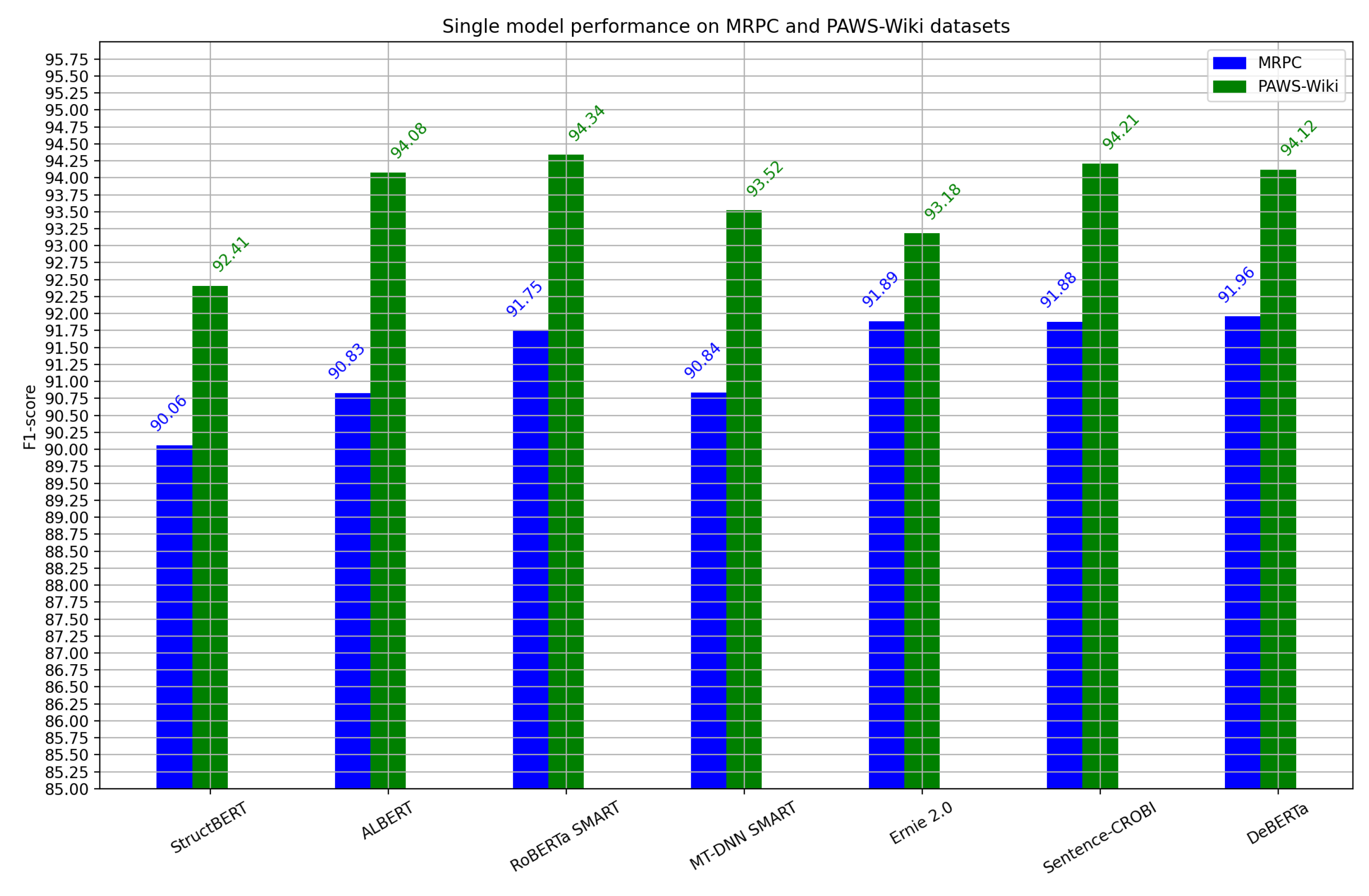

5. Results

5.1. Statistical Significance Tests

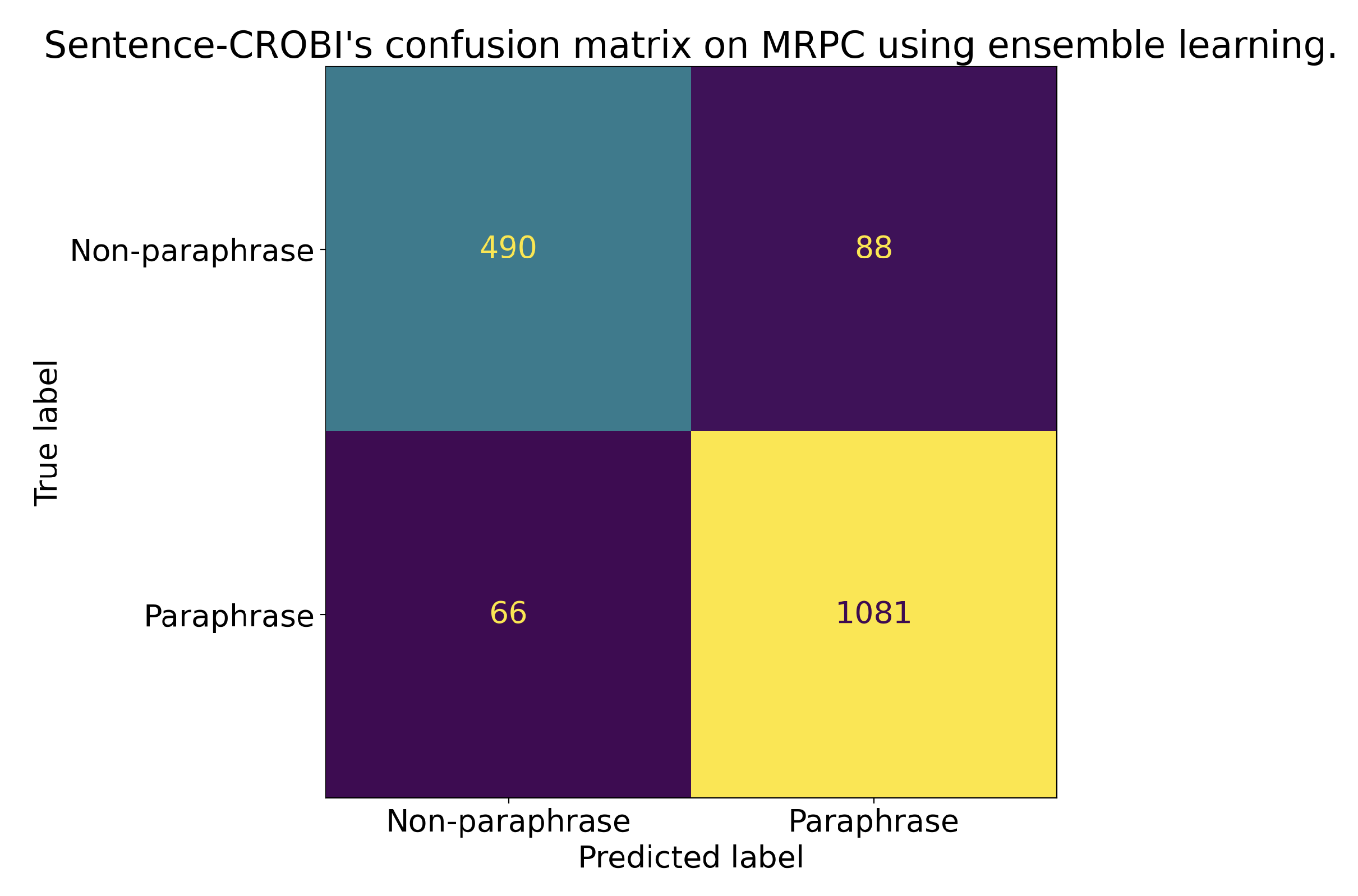

5.2. Error Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Bhagat, R.; Hovy, E. What is a Paraphrase? Comput. Linguist. 2013, 39, 463–472.

2. Montoya, M.M.; da Cunha, I.; López-Escobedo, F. Un corpus de paráfrasis en español: Metodología, elaboración y análisis. Rev. Lingüíst. Teor. Apl. 2016, 54, 85–112.

3. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

4. Qiu, X.; Sun, T.; Xu, Y.; Shao, Y.; Dai, N.; Huang, X. Pre-trained Models for Natural Language Processing: A Survey. Sci. China Technol. Sci. 2020, 63, 1872–1897.

5. Humeau, S.; Shuster, K.; Lachaux, M.A.; Weston, J. Poly-encoders: Architectures and pre-training strategies for fast and accurate multi-sentence scoring. arXiv 2019, arXiv:1905.01969.

6. Peng, Q.; Weir, D.; Weeds, J.; Chai, Y. Predicate-argument based bi-encoder for paraphrase identification. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; Association for Computational Linguistics: Dublin, Ireland, 2022; pp. 5579–5589.

7. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186.

8. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

9. He, P.; Liu, X.; Gao, J.; Chen, W. DeBERTa: Decoding-enhanced BERT with disentangled attention. arXiv 2020, arXiv:2006.03654.

10. de Wynter, A.; Perry, D.J. Optimal subarchitecture extraction for BERT. arXiv 2020, arXiv:2010.10499.

11. Rogers, A.; Kovaleva, O.; Rumshisky, A. A Primer in BERTology: What We Know about How BERT Works. Trans. Assoc. Comput. Linguist. 2020, 8, 842–866.

12. Wang, W.; Bi, B.; Yan, M.; Wu, C.; Xia, J.; Bao, Z.; Peng, L.; Si, L. StructBERT: Incorporating language structures into pre-training for deep language understanding. arXiv 2020, arXiv:1908.04577.

13. Sun, Y.; Wang, S.; Li, Y.; Feng, S.; Tian, H.; Wu, H.; Wang, H. Ernie 2.0: A continual pre-training framework for language understanding. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 8968–8975.

14. Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A lite BERT for self-supervised learning of language representations. arXiv 2020, arXiv:1909.11942.

15. de Wynter, A. An algorithm for learning smaller representations of models with scarce data. arXiv 2020, arXiv:2010.07990.

16. Jiang, H.; He, P.; Chen, W.; Liu, X.; Gao, J.; Zhao, T. SMART: Robust and efficient fine-tuning for pre-trained natural language models through principled regularized optimization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 2177–2190.

17. Liu, X.; He, P.; Chen, W.; Gao, J. Multi-task deep neural networks for natural language understanding. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Florence, Italy, 2019; pp. 4487–4496.

18. Dai, Z.; Lai, G.; Yang, Y.; Le, Q. Funnel-transformer: Filtering out Sequential Redundancy for Efficient Language Processing. Adv. Neural Inf. Process. Syst. 2020, 33, 4271–4282.

19. Xu, S.; Shen, X.; Fukumoto, F.; Li, J.; Suzuki, Y.; Nishizaki, H. Paraphrase Identification with Lexical, Syntactic and Sentential Encodings. Appl. Sci. 2020, 10, 4144.

20. Bromley, J.; Guyon, I.; LeCun, Y.; Säckinger, E.; Shah, R. Signature Verification using a “Siamese” Time Delay Neural Network. Adv. Neural Inf. Process. Syst. 1993, 6, 737–744.

21. Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Hong Kong, China, 2019; pp. 3982–3992.

22. Dolan, W.B.; Brockett, C. Automatically constructing a corpus of sentential paraphrases. In Proceedings of the Third International Workshop on Paraphrasing (IWP2005), Jeju Island, Korea, 14 October 2005.

23. Zhang, Y.; Baldridge, J.; He, L. PAWS: Paraphrase adversaries from word scrambling. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 1298–1308.

24. Wei, J.; Bosma, M.; Zhao, V.; Guu, K.; Yu, A.W.; Lester, B.; Du, N.; Dai, A.M.; Le, Q.V. Finetuned language models are zero-shot learners. arXiv 2022, arXiv:2109.01652.

25. Gao, T.; Yao, X.; Chen, D. SimCSE: Simple contrastive learning of sentence embeddings. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online, 7–11 November 2021; Association for Computational Linguistics: Punta Cana, Dominican Republic, 2021; pp. 6894–6910.

26. Sinha, K.; Jia, R.; Hupkes, D.; Pineau, J.; Williams, A.; Kiela, D. Masked language modeling and the distributional hypothesis: Order word matters pre-training for little. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online, 7–11 November 2021; Association for Computational Linguistics: Punta Cana, Dominican Republic, 2021; pp. 2888–2913.

27. Wang, A.; Singh, A.; Michael, J.; Hill, F.; Levy, O.; Bowman, S.R. GLUE: A multi-task benchmark and analysis platform for natural language understanding. In Proceedings of the 7th International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

28. Hambardzumyan, K.; Khachatrian, H.; May, J. WARP: Word-level adversarial reprogramming. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 4921–4933.

29. Izsak, P.; Berchansky, M.; Levy, O. How to train BERT with an academic budget. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online, 7–11 November 2021; Association for Computational Linguistics: Punta Cana, Dominican Republic, 2021; pp. 10644–10652.

30. Min, S.; Lewis, M.; Zettlemoyer, L.; Hajishirzi, H. MetaICL: Learning to learn in context. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; Association for Computational Linguistics: Seattle, WA, USA, 2022; pp. 2791–2809.

31. Perez-Almendros, C.; Espinosa-Anke, L.; Schockaert, S. SemEval-2022 task 4: Patronizing and condescending language detection. In Proceedings of the 16th International Workshop on Semantic Evaluation (SemEval-2022), Seattle, WA, USA, 14–15 July 2022; Association for Computational Linguistics: Seattle, WA, USA, 2022; pp. 298–307.

32. Dopierre, T.; Gravier, C.; Logerais, W. PROTAUGMENT: Unsupervised diverse short-texts paraphrasing for intent detection meta-learning. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, August 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 2454–2466.

33. Williams, A.; Nangia, N.; Bowman, S. A broad-coverage challenge corpus for sentence understanding through inference. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 1112–1122.

34. Phang, J.; Févry, T.; Bowman, S.R. Sentence encoders on STILTs: Supplementary training on intermediate labeled-data tasks. arXiv 2018, arXiv:1811.01088.

35. Chen, Y.; Kou, X.; Bai, J.; Tong, Y. Improving BERT with Self-Supervised Attention. IEEE Access 2021, 9, 144129–144139.

36. Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

37. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Available online: http://www.deeplearningbook.org (accessed on 15 June 2022).

38. Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 38–45.

39. Wilcoxon, F. Individual comparisons of grouped data by ranking methods. J. Econ. Entomol. 1946, 39, 269–270.

40. Dror, R.; Baumer, G.; Shlomov, S.; Reichart, R. The Hitchhiker’s guide to testing statistical significance in natural language processing. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; Association for Computational Linguistics: Melbourne, Australia, 2018; pp. 1383–1392.

41. Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 2020, 17, 261–272.

| Corpus | Paraphrase Instances | Non-Paraphrase Instances | Total Instances |
|---|---|---|---|
| MRPC (train) | 2753 | 1323 | 4076 |
| MRPC (test) | 1147 | 578 | 1725 |
| QQP (train) | 134,623 | 229,223 | 363,846 |
| QQP (val) | 14,959 | 25,471 | 40,430 |
| QQP (test) | - | - | 390,965 |
| PAWS-Wiki (train) | 21,829 | 27,572 | 49,401 |
| PAWS-Wiki (val) | 3539 | 4461 | 8000 |
| PAWS-Wiki (test) | 3536 | 4464 | 8000 |

| Model | Accuracy | F1-Score | Difference Compared with Sentence-CROBI (Accuracy/F1-Score) |
|---|---|---|---|
| BORT [10] | 92.30 | 94.10 | 1.23/0.75 |
| MT-DNN SMART [16] | 91.60 | 93.70 | 0.53/0.35 |
| RoBERTa SMART [16] | 91.60 | 93.70 | 0.53/0.35 |
| StructBERTRoBERTa [12] | 91.50 | 93.60 | 0.43/0.25 |
| Funnel-Transformer [18] | 91.20 | 93.40 | 0.13/0.05 |
| ALBERT [14] | 91.20 | 93.40 | 0.13/0.05 |
| Sentence-CROBI | 91.07 | 93.35 | - |
| Ernie 2.0 [13] | 87.40 | 90.20 | −3.67/−3.15 |
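The result tables report accuracy and F1-score side by side. As a reminder of how the two metrics relate, the following is a minimal sketch that computes both from binary predictions. It assumes the paraphrase class is encoded as the positive label 1; the function name and the toy labels are illustrative and are not taken from the paper.

```python
def accuracy_and_f1(y_true, y_pred):
    """Return (accuracy, F1) for binary labels, treating 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, f1


# Toy example (hypothetical labels): 4 TP, 1 FP, 1 FN, 2 TN.
gold = [1, 1, 1, 0, 0, 1, 0, 1]
pred = [1, 1, 0, 0, 1, 1, 0, 1]
acc, f1 = accuracy_and_f1(gold, pred)
print(f"accuracy={acc:.2f}, f1={f1:.2f}")
```

Because F1 ignores true negatives, the two numbers can diverge sharply on a corpus where non-paraphrases dominate, which is worth keeping in mind when comparing columns across corpora.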

| Model | Accuracy | F1-Score | Difference Compared with Sentence-CROBI (Accuracy/F1-Score) |
|---|---|---|---|
| Funnel-Transformer [18] | 90.70 | 75.40 | 0.6/1.6 |
| StructBERTRoBERTa [12] | 90.70 | 74.40 | 0.6/0.6 |
| ALBERT [14] | 90.50 | 74.20 | 0.4/0.4 |
| RoBERTa SMART [16] | 90.01 | 74.00 | −0.09/0.2 |
| MT-DNN SMART [16] | 90.20 | 73.90 | 0.1/0.1 |
| Ernie 2.0 [13] | 90.10 | 73.80 | 0.0/0.0 |
| Sentence-CROBI | 90.10 | 73.80 | - |
| BORT [10] | 85.90 | 66.00 | −4.2/−7.8 |

| Model | Accuracy | F1-Score | Difference Compared with Sentence-CROBI (Accuracy/F1-Score) |
|---|---|---|---|
| RoBERTa SMART [16] | 94.93 | 94.34 | 0.13/0.13 |
| Sentence-CROBI | 94.80 | 94.21 | - |
| DeBERTa [9] | 94.69 | 94.12 | −0.11/−0.09 |
| ALBERT [14] | 94.70 | 94.08 | −0.1/−0.13 |
| MT-DNN SMART [16] | 94.16 | 93.52 | −0.64/−0.69 |
| Ernie 2.0 [13] | 93.86 | 93.18 | −0.94/−1.03 |
| StructBERT [12] | 93.13 | 92.41 | −1.67/−1.8 |

| Model | Accuracy | F1-Score | Difference Compared with Sentence-CROBI (Accuracy/F1-Score) |
|---|---|---|---|
| DeBERTa [9] | 89.30 | 91.96 | 0.21/0.08 |
| Ernie 2.0 [13] | 89.11 | 91.89 | 0.02/0.01 |
| Sentence-CROBI | 89.09 | 91.88 | - |
| RoBERTa SMART [16] | 88.83 | 91.75 | −0.26/−0.13 |
| MT-DNN SMART [16] | 87.71 | 90.84 | −1.38/−1.04 |
| ALBERT [14] | 87.58 | 90.83 | −1.51/−1.05 |
| StructBERT [12] | 86.56 | 90.06 | −2.53/−1.82 |

| Model 1 | Model 2 | MRPC p-Value | PAWS-Wiki p-Value |
|---|---|---|---|
| Sentence-CROBI | ALBERT [14] | 0.0625 | 0.3125 |
| Sentence-CROBI | Ernie 2.0 [13] | 0.8125 | 0.0625 |
| Sentence-CROBI | StructBERT [12] | 0.0625 | 0.0625 |
| Sentence-CROBI | RoBERTa SMART [16] | 0.3125 | 0.3125 |
| Sentence-CROBI | MT-DNN SMART [16] | 0.0625 | 0.0625 |

| Model 1 | Model 2 | MRPC p-Value | PAWS-Wiki p-Value |
|---|---|---|---|
| ALBERT [14] | DeBERTa [9] | 0.0625 | 1.0 |
| ALBERT [14] | Ernie 2.0 [13] | 0.0625 | 0.0625 |
| ALBERT [14] | StructBERT [12] | 0.1875 | 0.0625 |
| ALBERT [14] | RoBERTa SMART [16] | 0.0625 | 0.0625 |
| ALBERT [14] | MT-DNN SMART [16] | 1.0 | 0.0625 |
| DeBERTa [9] | Ernie 2.0 [13] | 0.8125 | 0.0625 |
| DeBERTa [9] | StructBERT [12] | 0.0625 | 0.0625 |
| DeBERTa [9] | RoBERTa SMART [16] | 0.4375 | 0.125 |
| DeBERTa [9] | MT-DNN SMART [16] | 0.0625 | 0.0625 |
| Ernie 2.0 [13] | StructBERT [12] | 0.0625 | 0.0625 |
| Ernie 2.0 [13] | RoBERTa SMART [16] | 0.8125 | 0.0625 |
| Ernie 2.0 [13] | MT-DNN SMART [16] | 0.0625 | 0.125 |
| StructBERT [12] | RoBERTa SMART [16] | 0.0625 | 0.0625 |
| StructBERT [12] | MT-DNN SMART [16] | 0.0625 | 0.0625 |
| RoBERTa SMART [16] | MT-DNN SMART [16] | 0.0625 | 0.0625 |
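Every p-value in the two tables above is a multiple of 1/16, and the smallest is 0.0625. That pattern is what an exact two-sided Wilcoxon signed-rank test yields on five paired observations, where the most extreme outcome has probability 2/2^5 = 0.0625. The sketch below illustrates this under the assumption of five paired scores per model comparison, which the tables suggest but do not state. The function name and the scores are hypothetical. In practice, `scipy.stats.wilcoxon` from SciPy [41] offers the same test; the pure-Python version here only makes the counting explicit.

```python
from itertools import product


def wilcoxon_exact(x, y):
    """Exact two-sided Wilcoxon signed-rank test for paired samples.

    Assumes no zero differences and no ties among absolute differences.
    Returns (statistic, p_value), where statistic = min(W+, W-).
    """
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)

    # Rank the absolute differences from 1 (smallest) to n (largest).
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0] * n
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    stat = min(w_plus, w_minus)

    # Under the null hypothesis each rank is positive or negative with
    # equal probability, so enumerate all 2^n sign assignments.
    at_most = 0
    for signs in product((0, 1), repeat=n):
        total = sum(r for r, s in zip(range(1, n + 1), signs) if s)
        if total <= stat:
            at_most += 1
    p_value = min(1.0, 2 * at_most / 2 ** n)
    return stat, p_value


# Hypothetical F1 scores from five paired runs; model A wins every time.
model_a = [0.912, 0.905, 0.918, 0.909, 0.915]
model_b = [0.901, 0.897, 0.903, 0.900, 0.902]
stat, p = wilcoxon_exact(model_a, model_b)
print(stat, p)  # 0 0.0625
```

Under this reading, even a model that wins all five paired runs cannot reach p < 0.05, so a value of 0.0625 marks the strongest evidence the test can return at that sample size.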

| Text 1 | Text 2 |
|---|---|
| Ballmer has been vocal in the past warning that Linux is a threat to Microsoft. | In the memo, Ballmer reiterated the open-source threat to Microsoft. |
| She first went to a specialist for initial tests last Monday, feeling tired and unwell. | The star, who plays schoolgirl Nina Tucker in Neighbours, went to a specialist on 30 June feeling tired and unwell. |
| Garner said the self-proclaimed mayor of Baghdad, Mohammed Mohsen al-Zubaidi, was released after two days in coalition custody. | Garner said self-proclaimed Baghdad mayor Mohammed Mohsen Zubaidi was released 48 h after his detention in late April. |
| “It appears from our initial report that this was a textbook landing considering the circumstances,” Burke said. | Said Mr. Burke: “It was a textbook landing considering the circumstances.” |
| Powell recently changed the story, telling officers that Hoffa’s body was buried at his former home, where the search was conducted Wednesday. | Powell changed the story earlier this year, telling officers that Hoffa’s body was buried at his former home, where the aboveground pool now sits. |

| Text 1 | Text 2 |
|---|---|
| A Washington County man may have the county’s first human case of West Nile virus, the health department said Friday. | The county’s first and only human case of West Nile this year was confirmed by health officials on 8 September. |
| Snow’s remark “has a psychological impact”, said Hans Redeker, head of foreign-exchange strategy at BNP Paribas. | Snow’s remark on the dollar’s effects on exports “has a psychological impact”, said Hans Redeker, head of foreign-exchange strategy at BNP Paribas. |
| Another body was pulled from the water on Thursday and two seen floating down the river could not be retrieved due to the strong currents, local reporters said. | Two more bodies were seen floating down the river on Thursday, but could not be retrieved due to the strong currents, local reporters said. |
| Amgen shares gained 93 cents, or 1.45 percent, to $65.05 in afternoon trading on Nasdaq. | Shares of Allergan were up 14 cents at $78.40 in late trading on the New York Stock Exchange. |
| In his speech, Cheney praised Barbour’s accomplishments as chairman of the Republican National Committee. | Cheney returned Barbour’s favorable introduction by touting Barbour’s work as chair of the Republican National Committee. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ortiz-Barajas, J.-G.; Bel-Enguix, G.; Gómez-Adorno, H. Sentence-CROBI: A Simple Cross-Bi-Encoder-Based Neural Network Architecture for Paraphrase Identification. Mathematics 2022, 10, 3578. https://doi.org/10.3390/math10193578
