Abstract

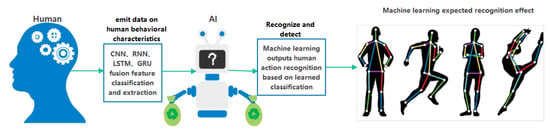

Human behavior arises in response to external stimuli, and the emotional response it triggers is a subjective reaction expressed through the body. Common human behaviors include lying, sitting, standing, walking, and running. In everyday life, negative emotions arising from family and work lead to a growing number of dangerous behaviors. With the arrival of the information age, Industry 4.0 smart devices make it possible to monitor behavior intelligently, operate remotely, and thereby understand and identify the characteristics of human behavior. According to the literature survey, current research on human behavior characteristics has not achieved a classification learning algorithm that handles both single and composite features when identifying and judging human behavior. For example, the feature changes that occur during a sitting transition cannot be classified and identified, and the overall detection rate also needs to be improved. To address this, this paper develops an improved machine learning method that identifies single and compound features. First, the HAPT dataset is used for sample collection and learning, and the samples are divided into 12 categories by single and composite features. Second, the convolutional neural network (CNN), recurrent neural network (RNN), long short-term memory (LSTM) network, and gated recurrent unit (GRU) algorithms are used, with the model graphs and the overall process designed from these existing algorithms. Third, the proposed fusion-feature machine learning algorithm and main control algorithm fuse the output features of each stage of HAPT-based human behavior recognition under wearable sensors. Finally, through SPSS data analysis and re-optimization of the fusion feature algorithm, the detection mechanism achieves an overall target sample recognition rate of about 83.6%. This realizes a machine learning algorithm mechanism for classifying human behavior features under the new algorithm.

Keywords:

human behavior; machine learning; convolutional neural networks; human–computer interaction; wearable sensors

MSC:

68; 68M25; 68M14

1. Introduction

1.1. Human Behavior Research Background

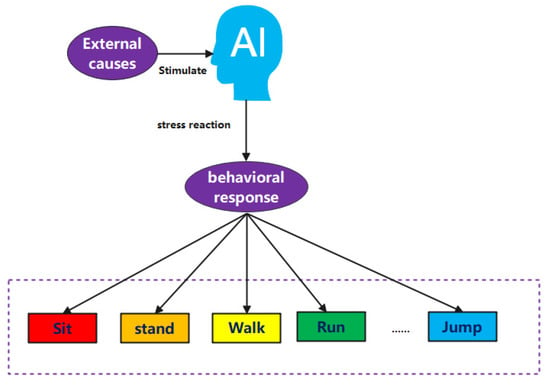

Human behavior was first discovered and defined by Alfred Espinas in 1890 [1]. As science and technology have developed, the study of human behavior has gradually become an effective entry point for researchers seeking to understand human emotions. The literature survey shows that human behavioral characteristics arise from people's subjective reactions, mediated by the cerebral cortex, to external stimuli, and are expressed through facial and limb behaviors [2]. Common human behaviors include sitting, standing, walking, running, jumping, laughing, and crying, and each of them stems from changes in human emotions. Early research, from the definition of human behavior to its classification and summary, contributed useful feature types and research directions to ethology. In real life, people experience negative mood swings due to external influences from family, study, and work. When these mood swings reach a certain level and are not dealt with correctly, they ultimately manifest as excessively risky behavior that causes human and economic losses. As researchers, we aim to understand the psychological state of the target in a timely manner through effective means, detect problems early, and take appropriate control measures to reduce hidden dangers [3]; this is the problem our current research needs to solve. Figure 1 illustrates the classification of human behavior proposed by researchers [3,4].

Figure 1.

The principles behind human behavior.

1.2. Research Significance (Analysis of Current Situation of Human Behavior Detection Technology at Home and Abroad)

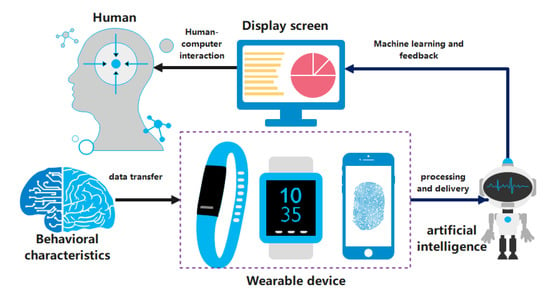

In recent years, with the development of Industry 4.0 technology, human–computer interaction (HCI) [5] has been increasingly favored by researchers in the field of machine learning. In principle, the emotional factors expressed by humans are transmitted as data and processed by machine learning, and the detection results are then fed back by smart devices. Researchers need to implement human–computer interaction and the machine learning of human limb features through corresponding algorithms [6]. At present, human action recognition (HAR) has become the main means by which researchers obtain target behaviors. According to the previous literature, HAR technology originated in the fields of international military, anti-terrorism, and national security, where the necessary work is carried out after the behavior of the person involved has been determined. Since the beginning of the 21st century, HAR technology has gradually come into wide use in medical treatment, monitoring, detection, and work assistance. In health care, patients can choose to seek treatment in the hospital or at home according to their own conditions [7]. HAR technology can also transmit the signals from the patient's body directly to the monitoring system, allowing doctors to understand the patient's situation in time. In addition, HAR technology can perform real-time data detection on the elderly population, so that guardians can remotely care for the physical and mental condition of the elderly and dangerous behaviors can be avoided. Taken together, this calls for a set of scientific detection mechanisms and research methods that effectively capture the behaviors exhibited by humans.
Understanding humans and conducting behavioral research effectively requires access to human emotional factors. What equipment, then, is needed to capture human emotional traits? Figure 2 is an idealized schematic diagram of human–computer interaction given by researchers [8]. As Figure 2 shows, data interaction in the human–computer interaction mode requires a sensor that receives human body data and transmits it to an artificial intelligence device for recognition and analysis. The final research output of this paper is the accurate detection of human behavior based on wearable technology. The following sections describe how the experiments with wearable sensors are conducted, through the analysis, use, and improvement of human behavior algorithms.

Figure 2.

Human–computer interaction model diagram.

2. Background (Algorithm Analysis in Human Behavior Detection Process)

2.1. Status of the Use of Human Behavior Detection Algorithms under Machine Learning (Introduction to IDT, C3D, TSN, DLVF, Accelerators, etc.)

A literature survey of human behavior identification shows the following. In 2016, the Ohnishi team proposed an algorithm based on improved dense optical flow trajectories, known as improved dense trajectories (IDT), which improves the dense trajectory (DT) method built on the original optical flow [9]. In 2014, Simonyan proposed a behavior extraction method named the Two-stream algorithm [10]. Its advantage is that it extracts human behavior features from the video space, and its accuracy is higher than that of the IDT algorithm. In 2015, the Tran team proposed the three-dimensional convolutional C3D algorithm, which processes video data more directly and changes the detection path from the traditional mode to a spatiotemporal mode [11]. It improves the detection rate, but does not significantly improve accuracy. In 2016, the research team of the Chinese scholar Wang Liming developed the temporal segment network (TSN) algorithm, which improves on the earlier Two-stream algorithm. It improves detection accuracy, but detection is slow because of the complex structure of the TSN algorithm [12]. In 2017, Lan's research team re-optimized the TSN algorithm to fuse different video clips more reasonably [13]. In 2020, the team of the Chinese researcher Ding Chongyang proposed a recognition method based on space–time-weighted gesture motion features [14], modeled with the Fourier pyramid algorithm, which again improved the detection accuracy of human behavior. In summary, researchers have optimized the structure of human behavior detection algorithms and realized behavior detection in video and space. However, the gains in detection accuracy came at the cost of a lower detection rate. Efficiency therefore needs to be improved, which is also part of our future work.

2.2. Current Situation Analysis of Wearable Technology in Human Behavior Detection Process (Algorithms, Detection Modes)

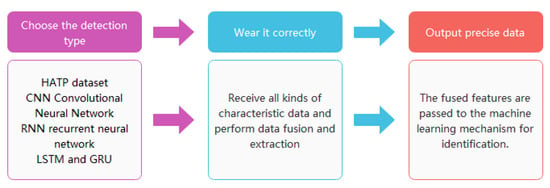

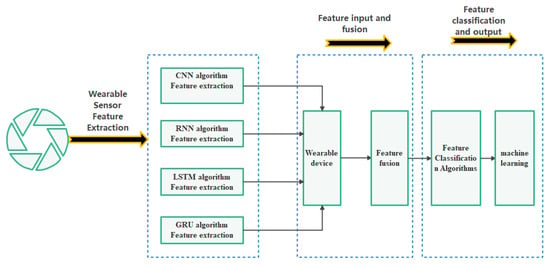

Wearable devices discover feature representations of raw sensor data through deep learning, and researchers need to extract these features to achieve the best results [15]. In recent years, the main algorithms used with wearable sensors in human behavior research include the restricted Boltzmann machine (RBM), deep autoencoder (DA), sparse coding (SC), convolutional neural network (CNN), and recurrent neural network (RNN) [16]. At present, these are the most important algorithms for acquiring human behavioral characteristics through wearable media [17]. This paper acquires human behavior characteristic data with an acceleration sensor. According to the previous survey, the data are preprocessed and passed to the individual feature extraction modules, each of which has different working characteristics. In the following work, we make corresponding improvements in complex behavior detection, data collection, and feature extraction. Wearable sensors receive the HAPT sample sets, and the deep convolutional neural network (CNN), recurrent neural network (RNN), LSTM, and gated recurrent unit (GRU) algorithms extract data features, which are fused and transmitted to machine learning equipment for detection to obtain human behavior features. Figure 3 is a model diagram of the working process of the wearable sensor [18].

Figure 3.

Model diagram of the working process of the wearable sensor.

3. Problem Statement

According to the literature investigation above, existing detection mechanisms still fall short in detection accuracy and timeliness. In this paper, the existing machine learning algorithm is improved, and the feature data from the wearable sensor and the existing human behavior algorithms are integrated in a more optimized way to obtain efficient and accurate detection results. The specific research contents are shown in Table 1 and Table 2.

Table 1.

Research questions.

Table 2.

Research objectives.

Research Objectives (Complete the Extraction and Recognition of Human Behavioral Features under Wearable Technology).

4. Research Methodology

4.1. Research Steps

The overall approach of this paper is to extract the detection data from each step of the human behavior recognition algorithm separately, fuse them using wearable sensor equipment, and transfer the fused feature values to an artificial intelligence device that has undergone machine learning. By identifying and detecting the fused data, the device finally feeds back the target behavior results [19]. The process is divided into 5 steps, as shown in Table 3.

Table 3.

Experimental steps in this paper.

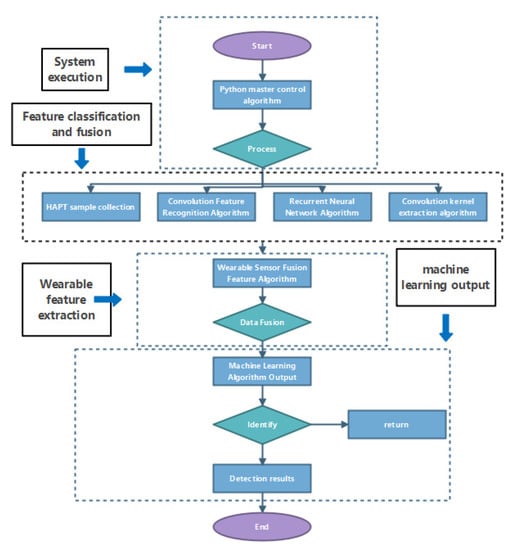

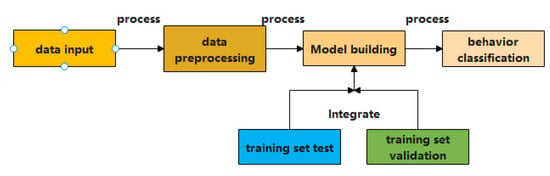

Following the 5 steps in Table 3, this paper refines the specific experimental process. The main program, from start to end, is represented by a flow chart, and the specific execution process is shown in Figure 4 (the figure comes from the research process of this article):

Figure 4.

Model diagram of the human behavior detection process.

4.2. Introduction of Experimental Equipment and Parameters

According to the research needs, this paper will use the corresponding software and hardware for research. The specific software and hardware parameters and functions are shown in Table 4.

Table 4.

The specific software and hardware in this experiment and its function description.

4.3. Research Steps (Algorithm Use in Human Behavior Detection Process)

4.3.1. Sample Data Collection (HAPT)

This paper selects different sample types according to the characteristics of different populations, with sample ages ranging from 20 to 60 years. The samples were collected comprehensively through the HAPT dataset [22], which contains axis data from the accelerometer inertial sensor. The collection covers the human behavior characteristics exhibited by the samples in different states, including sitting, standing, lying, walking, running, and other postures. For each sample, the acceleration information during the two movements is taken, and from the HAPT sample collection process the algorithm can determine whether the target is still (sitting, standing) or moving (walking, running) [23]. Depending on the actual situation, behaviors are defined either as ordinary definitions or as transformation definitions formed during limb transitions. According to existing research, there are 12 kinds in total. The data collected under the acceleration algorithm for the HAPT samples are shown in detail in Table 5.

Table 5.

HAPT sample data collection form.

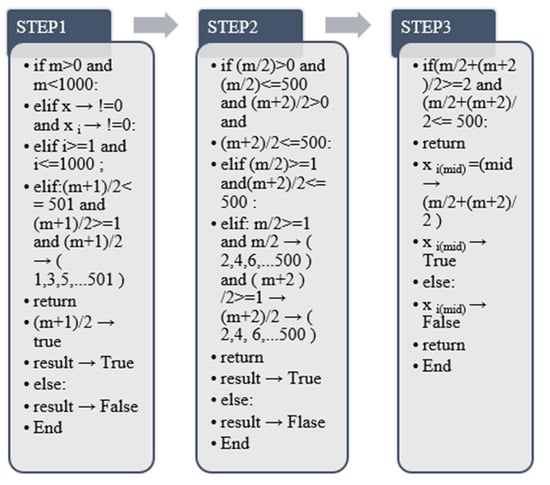

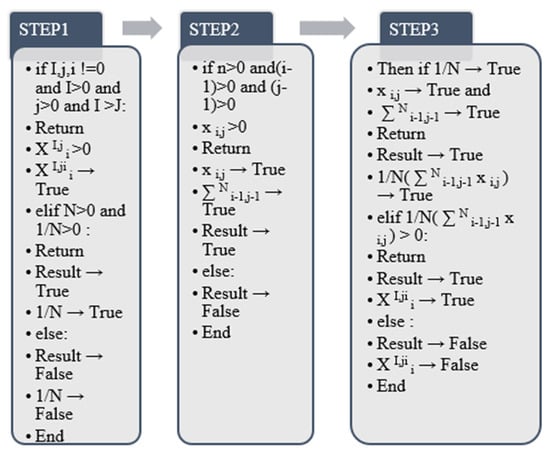

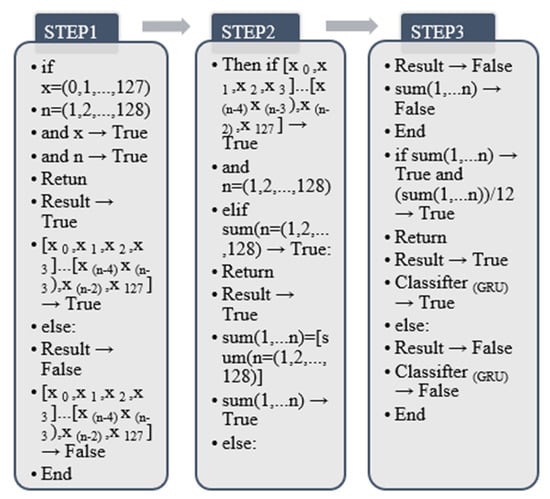

According to the type and number of definitions, this step processes 6 ordinary definitions and 6 transformation definitions together. The research method of this step is to draw the human pose as a line graph. An ordinary definition is a single behavior mode, whereas a transformation definition is a change between two behavior modes, formed by changing the limb posture. Based on the HAPT data collection and the different behavior changes, an effective label definition and processing time estimate are made for each collected sample, and the label definition uses the middle-value method. The algorithm contains a total of N sample sets, each of which is encoded and registered separately by unit. When human behavior changes, the sample is set as a binary feature; when it does not change, the sample is a unary feature. The final output of a unary or binary feature has only one true value. The purpose of this step is to obtain the sample output model graph. The steps above are shown in the model diagram in Figure 5 and the algorithm in Figure 6 [23].

Figure 5.

HAPT data collection process model diagram.

Figure 6.

HAPT sample collection algorithm output.

According to the algorithm output in Figure 6, the algorithm matches and calculates samples according to whether they are odd-numbered or even-numbered. The calculation range is limited to 0–1000. (m + 1)/2 is used for odd-numbered items, which are unary features after output, and m/2 + (m + 2)/2 is used for even-numbered items, which are binary features. Depending on the changes in human behavior, the values at m/2 and (m + 2)/2 of the even-numbered features appear accordingly. The algorithm accumulates the outputs in this way and finally forms a sample dataset.

The output process of the algorithm is divided into an odd-number judgment and an even-number judgment, each of which returns true or false. When the given dataset label falls in the odd range 1, 3, 5, …, 501, the judgment is true and the result of the odd item is returned. If the label is not in the odd sequence 1, 3, 5, …, 501, the output is false and there is no return value. Even-numbered labels are judged in the same way: when the label falls in the range 2, 4, 6, …, 500, the final label value is returned; otherwise, the output is false and there is no return value.
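One way to read the odd/even rule above is as the usual middle-value (median) position rule: an odd count m has a single middle position (m + 1)/2, and an even count has two middle positions, m/2 and (m + 2)/2. The following minimal sketch assumes that reading; the function names `middle_positions` and `middle_value` are hypothetical and do not come from the paper's program.

```python
def middle_positions(m):
    """Return the 1-based middle position(s) among m items.

    Assumption: the paper's "middle-value method" is the ordinary median rule.
    """
    if m < 1:
        raise ValueError("m must be a positive integer")
    if m % 2 == 1:
        # Odd count: one middle position, (m + 1) / 2 (the "unary" case).
        return [(m + 1) // 2]
    # Even count: two middle positions, m / 2 and (m + 2) / 2 (the "binary" case).
    return [m // 2, (m + 2) // 2]


def middle_value(sorted_values):
    """Median of an already sorted list, built from the positions above."""
    positions = middle_positions(len(sorted_values))
    picked = [sorted_values[p - 1] for p in positions]
    return sum(picked) / len(picked)
```

For example, `middle_positions(5)` selects position 3 only, while `middle_positions(6)` selects positions 3 and 4, whose values are then averaged by `middle_value`.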

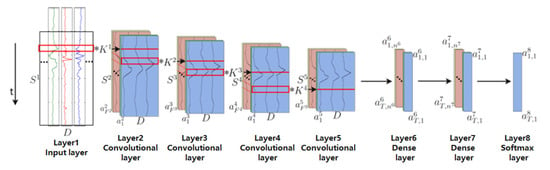

4.3.2. Convolution Feature Recognition Algorithm (One-Dimensional Convolution and Two-Dimensional Convolution)

This process identifies the dimension of the data itself through the convolution operation. For the selected samples, the processing method adopts a two-dimensional convolution operation [24], and the final goal is to form a two-dimensional convolution kernel. The two-dimensional convolution adds one dimension to the original one-dimensional case; it is a form of deep convolutional neural network that can also be used for facial emotional feature detection. In the process of human behavior recognition, the network is divided into 7 components: the data input layer, convolution layer (same dimension), pooling layer (same dimension), fully connected layer, classification output layer, convolution layer (different dimensions), and pooling layer (different dimensions) [25]. The one-dimensional convolution operation forms a one-dimensional convolution kernel. Features from both dimensions are extracted and combined to form the final fused features. The 1D convolution feature extraction algorithm is shown in Figure 7 [25], and the 2D convolution feature extraction algorithms for the convolutional layer and the pooling layer are shown in Figure 8 [25] and Figure 9 [26]:

Figure 7.

One-dimensional convolution recognition algorithm output.

Figure 8.

Two-dimensional Convolution Feature Extraction Algorithm (Convolutional Layer).

Figure 9.

Feature extraction algorithm for 2D convolution (pooling layer).

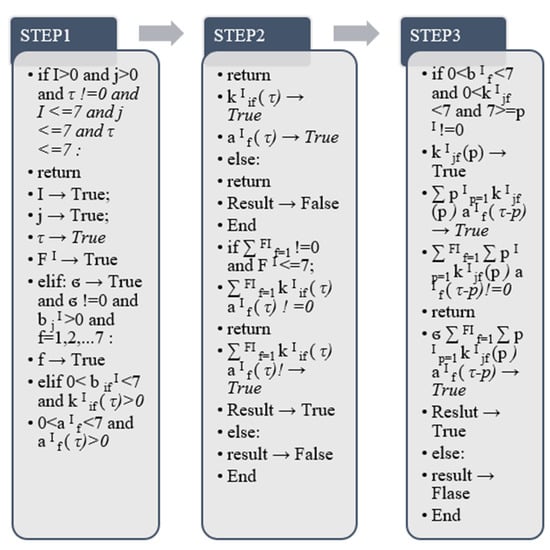

- One-dimensional convolution feature extraction algorithm:

In the above equation, $a_j^{l}(\tau)$ denotes feature map $j$ in layer $l$, and $\tau$ is the position index of the convolution. $\sigma$ is a nonlinear function, $F^{l}$ is the number of feature maps in layer $l$ (the sum starts from $f = 1$), and $K_{jf}^{l}$ is the kernel that is convolved over feature map $f$ in layer $l$ to create feature map $j$ in layer $(l + 1)$. $P^{l}$ is the length of the convolution kernels in layer $l$, and $p$ is the index within the kernel, so that $(\tau - p)$ selects the input value that each kernel weight multiplies. The bias vector of the convolution is denoted by $b^{l}$. The operation is computed for each channel separately [27].
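For reference, one form of the one-dimensional convolution that is consistent with the quantities described above (feature maps, a nonlinearity $\sigma$, the number of feature maps $F^{l}$, the kernel length $P^{l}$, the offset $\tau - p$, and a bias) is sketched here. It is a reconstruction from those descriptions rather than a copy of the equation in Figure 7, and the letters $a$ (feature map), $K$ (kernel), $b$ (bias), and $l$ (layer index) are assumed notation:

```latex
a_j^{(l+1)}(\tau) = \sigma\!\left( b_j^{l} + \sum_{f=1}^{F^{l}} \sum_{p=1}^{P^{l}} K_{jf}^{l}(p)\, a_f^{l}(\tau - p) \right)
```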

The algorithm output follows the conventions of the Python program: a value is returned when the check is true, and no value is returned when it is false. The algorithm first checks the layer index, the feature index j, the position τ, and the number of features; the equation is evaluated only when all of them are nonzero. When σ is not 0, the algorithm verifies that the convolution layers involved lie within the range 0–7. Once these ranges are satisfied, it evaluates the weighted sums in the equation and checks that the value for each group of convolutional layers is also true. Only when all of these checks are true is the final value output as the one-dimensional convolution result.
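A minimal pure-Python sketch of a one-dimensional convolution layer may make the weighted summation concrete. It is an illustration under stated assumptions, not the paper's program: the function name `conv1d_layer` and the argument layout are hypothetical, and the sliding window uses the cross-correlation indexing common in deep learning libraries (index `tau + p`), which differs from the `tau - p` form only by a flip of the kernel.

```python
import math


def sigmoid(x):
    """Logistic nonlinearity, used here as the default activation."""
    return 1.0 / (1.0 + math.exp(-x))


def conv1d_layer(feature_maps, kernels, biases, activation=sigmoid):
    """Valid 1D convolution over a list of input feature maps.

    feature_maps: F lists, each of length S (one per input feature map)
    kernels:      kernels[j][f] is a list of length P (input map f -> output map j)
    biases:       one bias per output feature map
    Returns one list per output map, each of length S - P + 1.
    """
    s = len(feature_maps[0])
    p_len = len(kernels[0][0])
    out_len = s - p_len + 1
    if out_len <= 0:
        raise ValueError("kernel is longer than the input")
    outputs = []
    for j, bias in enumerate(biases):
        out_map = []
        for tau in range(out_len):
            total = bias
            # Weighted sum over every input feature map and kernel position.
            for f, fmap in enumerate(feature_maps):
                for p in range(p_len):
                    total += kernels[j][f][p] * fmap[tau + p]
            out_map.append(activation(total))
        outputs.append(out_map)
    return outputs
```

Passing `activation=lambda x: x` exposes the raw weighted sums, which is convenient when checking a layer by hand.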

- Two-dimensional convolution feature extraction algorithm (convolution layer):

In the above formula, X is the base of the two-dimensional convolution feature, i is the index (starting value) of the ith convolutional layer, and I is the output matrix of the product (by default starting from i = 1) [26]. j selects the number of output matrices; in the model graph the matrices are ordered from left to right and numbered from 0 to N, where N denotes the Nth matrix. W represents the default parameters of the feature extraction algorithm, a is the initial value before feature extraction, and j is the final value of feature extraction. b represents the initial value of the two-dimensional convolutional layer, and f is a nonlinear function, for which we use the sigmoid.

In the above algorithm output, the judgment principle is the same as in the previous section: the detection result of the two-dimensional convolutional layer is returned when the value is true, and a false value is an error with no return value. The algorithm requires the initial value b to be nonzero. The weighted term combines the default two-dimensional feature parameters with the matrix features of the base X, which run from the initial position (i + a − 1) of the convolutional layer to the end of (I − 1), over the J matrices being computed. When both factors have actual eigenvalues, their product is true; otherwise, it is false. When the weighted sum is true, the algorithm calculates the final two-dimensional convolution feature result. Figure 9 shows the specific algorithm of feature extraction of the 2D convolutional network.
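The two-dimensional case can be sketched in the same spirit: a kernel slides over a matrix, each window is reduced to a weighted sum plus a bias, and the sigmoid is applied. This is an illustrative sketch only; the name `conv2d_valid` and the single-channel layout are assumptions, not the paper's implementation.

```python
import math


def conv2d_valid(image, kernel, bias=0.0):
    """Valid 2D sliding-window weighted sum followed by a sigmoid.

    image and kernel are lists of rows; the output has
    (rows - k_rows + 1) x (cols - k_cols + 1) entries.
    """
    rows, cols = len(image), len(image[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    out = []
    for i in range(rows - k_rows + 1):
        out_row = []
        for j in range(cols - k_cols + 1):
            total = bias
            # Weighted sum over the window whose top-left corner is (i, j).
            for a in range(k_rows):
                for b in range(k_cols):
                    total += kernel[a][b] * image[i + a][j + b]
            out_row.append(1.0 / (1.0 + math.exp(-total)))
        out.append(out_row)
    return out
```

A 3 × 3 input with a 2 × 2 kernel therefore yields a 2 × 2 output, and an all-zero input with zero bias yields 0.5 everywhere, since the sigmoid of 0 is 0.5.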

- Feature extraction algorithm for 2D convolution (pooling layer)

In this step, the algorithm selects the two parameters i and j for the pooling layer of the two-dimensional convolution, which represents the output of the pooling part after the two-dimensional convolution. (i − 1) and (j − 1) are the starting values of the two summations, and n is the ending value. The factor 1/N averages the result: after all values in the region are summed, their average is taken as the final output, where N is the number of values. The final output value is the final feature of the 2D convolution; it is obtained by averaging the matrix formed by the convolution of the previous layer. Following previous research, the convolution and pooling operations are followed by a global (fully connected) layer, and finally the features of each single classification are summed and divided by the number of corresponding classifications [28].

In the algorithm output of this process, the true and false values of the Python program are used to judge and return the result. The factor 1/N averages the region of the extracted two-dimensional convolution result covered by the pooling layer. The summation starts from the two initial values (i − 1) and (j − 1), and n is the upper bound of the sum when the result is true. The product of 1/N and the sum is the final pooled value. Figure 10 [28] shows the full process model diagram of a deep convolutional neural network.
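The averaging step described for the pooling layer can be sketched as plain average pooling. The sketch assumes non-overlapping square windows, which the text does not state; the name `average_pool2d` and the `size` parameter are hypothetical.

```python
def average_pool2d(feature_map, size):
    """Non-overlapping average pooling with a square window of side `size`."""
    rows, cols = len(feature_map), len(feature_map[0])
    n = size * size  # N: number of values in each pooling region
    pooled = []
    for i in range(0, rows - size + 1, size):
        pooled_row = []
        for j in range(0, cols - size + 1, size):
            # Sum the region, then multiply by 1/N to take the average.
            region_sum = sum(
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            )
            pooled_row.append(region_sum / n)
        pooled.append(pooled_row)
    return pooled
```

With a 4 × 4 input and `size=2`, each output entry is the mean of one 2 × 2 block, so the output is 2 × 2.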

Figure 10.

Model diagram of the output process of the two-dimensional convolutional layer.

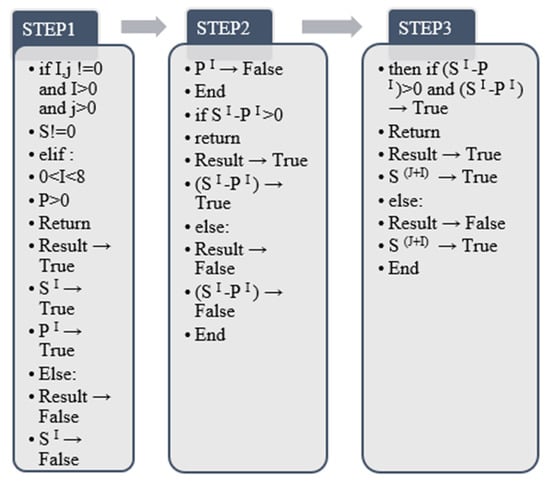

4.3.3. One-Dimensional Convolution and Recursive Feature Extraction Algorithms

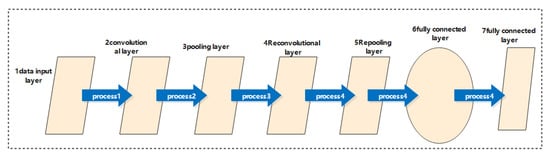

In the previous step, we used the HAPT sample collection method and the output algorithms of the one-dimensional and two-dimensional convolutional neural networks to obtain the corresponding probability values. In the next steps, we use a recurrent neural network. The recurrent neural network is used in two ways: combined with the one-dimensional convolutional network, and on its own for data feature extraction. For the combination with one-dimensional convolution, we use a model structure called Deep-Conv LSTM [29]. In this structure, the features extracted by the one-dimensional convolution are passed to the recurrent layers, which treat them as a time series and compute their activations. The first 4 processes of the 2D convolutional layer, marked with * in the figure, form the network architecture for top-to-bottom data transmission. The overall process [29] is shown in Figure 11, and Figure 12 is the algorithm output of this process:

Figure 11.

One-dimensional convolution and recursive feature extraction algorithms.

Figure 12.

One-dimensional convolution and recursive feature extraction algorithms.

From the above figure, we can see that the convolutional and recurrent layers together comprise 8 layers. The features in the model are transmitted in sequence according to the principle of time series [30], and each feature in the model has multiple channels. The model diagram is read from left to right, starting with the input data from the sensor. The first layer receives the input data from the sensor device, layers 2–5 are convolutional layers, layers 6–7 are the recurrent layers with nonlinear activations, and the eighth and last layer is the classification result of the acquired behavior data [31]. In the model diagram, the input layer has size $D \times S^{1}$, where $D$ is the number of data channels and $S^{l}$ is the data length in layer $l$. In layers 2–5, $K^{l}$ is the convolution kernel in layer $l$, $F^{l}$ is the number of features in layer $l$ [32], and $a_i^{l}$ is the activation of feature map $i$ in layer $l$. Layers 6 and 7 are hidden recurrent layers, where $a_{t,i}^{l}$ denotes the activation of unit $i$ in layer $l$ at time $t$ of the given time series.

$S^{(l+1)} = S^{l} - P^{l} + 1$

The above equation gives the data length after a one-dimensional convolution layer, on the path from the convolutional features to the recurrent features. $S^{(l+1)}$ is the length of the feature maps in layer $(l + 1)$, $S^{l}$ is the number of sample records in layer $l$, $P^{l}$ is the length of the convolution kernel in layer $l$, and the constant 1 accounts for the first kernel position. The result must be a positive integer to satisfy the output requirements of the algorithm, and $S^{(l+1)}$ is defined only when both $S^{l}$ and $P^{l}$ are greater than 0 [33].
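As a small worked example of the length formula, the sketch below applies "input length minus kernel length plus one" once and then across a stack of layers. The input length of 24 and the kernel length of 5 used in the usage note are illustrative values only, and both function names are hypothetical.

```python
def conv_output_length(input_length, kernel_length):
    """Length after one valid 1D convolution: S - P + 1."""
    if input_length <= 0 or kernel_length <= 0:
        raise ValueError("lengths must be positive")
    if kernel_length > input_length:
        raise ValueError("kernel is longer than the input")
    return input_length - kernel_length + 1


def stacked_output_lengths(input_length, kernel_lengths):
    """Apply the length formula layer by layer and return every length."""
    lengths = [input_length]
    for p in kernel_lengths:
        lengths.append(conv_output_length(lengths[-1], p))
    return lengths
```

For instance, `stacked_output_lengths(24, [5, 5, 5, 5])` shrinks the length by 4 at each of four convolutional layers.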

4.3.4. Recurrent Neural Network RNN Algorithm

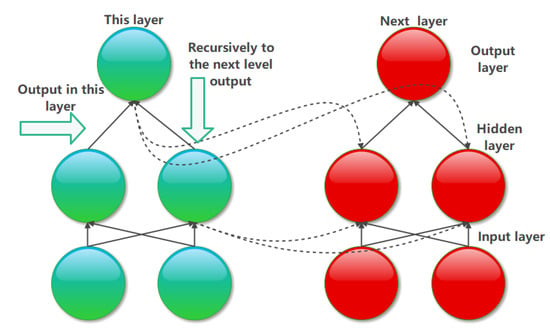

The RNN differs from the convolutional neural network (CNN) algorithm [34]. The literature survey shows that the structure of the RNN has a feedback function; that is, its output is not limited to the next layer of the structure. After a level finishes, it can recurse to the lower structure to transfer features. In addition to producing output in the current layer, the hidden layer in the RNN can also pass output to the next time point [35]. Figure 13 is the model structure diagram [35] drawn according to the characteristics of the RNN algorithm, and the algorithm of this process [35] is shown in Figure 14.

Figure 13.

RNN algorithm model diagram.

Figure 14.

RNN algorithm output.

The output of the algorithm is shown in Figure 14:

In Figure 13, the connection from the current layer to the next time point is drawn as a dotted line, and the connection within the layer is drawn as a solid line. This paper uses the weighted summation method in the algorithm output in Figure 14. The terms are combined by index: i is the starting index of the combination, and I is its final value. The outputs of all layers are calculated and then added up to obtain the final output of the RNN algorithm, as shown in Figure 14. The algorithm again proceeds by true and false value judgment. Once every characteristic value C of the RNN has a corresponding numerical output in each group, the program calculates the total eigenvalue by summation. Otherwise, an error occurs and no value is returned.
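The feedback behavior described above, where the hidden layer passes its output to the next time point, can be illustrated with a simple (Elman-style) recurrent step. This is a generic textbook sketch, not the algorithm in Figure 14; the weight names `w_xh`, `w_hh`, and `b_h` and the tanh activation are assumptions.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One step of a simple RNN: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        # Weighted sum of the current input.
        total += sum(w_xh[i][k] * x[k] for k in range(len(x)))
        # Weighted sum of the previous hidden state (the feedback connection).
        total += sum(w_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new


def rnn_forward(sequence, w_xh, w_hh, b_h):
    """Run the step over a sequence, starting from a zero hidden state."""
    h = [0.0] * len(b_h)
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states
```

Each hidden state depends on the current input and on the state produced at the previous time point, which is the recurrence the model diagram depicts.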

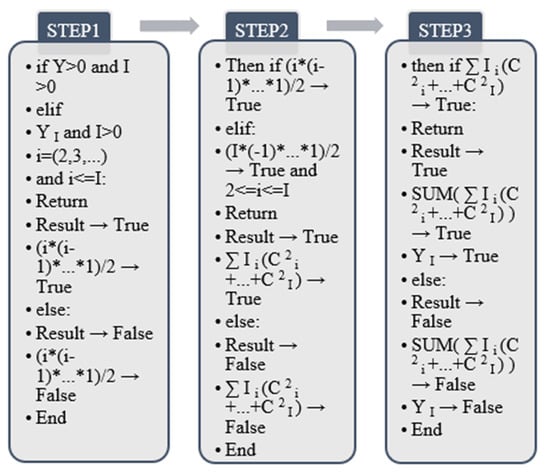

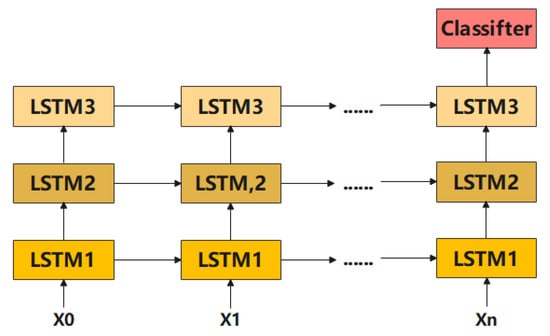

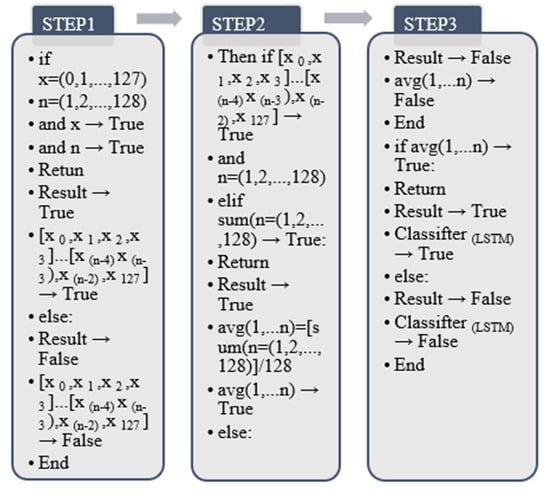

4.3.5. Long Short-Term Memory Network LSTM Algorithm

The LSTM algorithm is an important part of the memory network. This paper uses the LSTM algorithm to memorize and transmit data across earlier and later parts of the time series. The LSTM algorithm uses the matrix method with base 4 as the benchmark, and each matrix is exponentiated during the bottom-to-top transmission process [36]. The method used in this paper has three LSTM layers, and the LSTM in each layer transfers data in one direction. The data values of the 3 LSTM layers are calculated from bottom to top, giving a total of 128 eigenvectors. The final output is the probability of the corresponding action class. Figure 15 is the process model, and Figure 16 is the corresponding matrix algorithm output [36]:

Figure 15.

LSTM algorithm process model diagram.

Figure 16.

LSTM algorithm output diagram.

LSTM algorithm output:

It can be seen from the above formula that the output of this unit starts from X0 and ends at X127, passing through the calculation of 128 vector sets in between [37]. The algorithm accumulates the values in a single pass and then takes the average: after one iteration from the initial value X0 to X127, the final sum is divided by the number of values passed, which yields the average classifier output (LSTM) for this round [38]. In the algorithm output, 4 groups of eigenvalues are output in matrix form in sequence, with the eigenvalues starting from 0 and ending at 127. The architecture requires each set of vectors to output its eigenvalues as true. The Python program then calculates the LSTM result through the algorithm and returns it as true. If any set of vectors contains an abnormality (a data error or feature error) during output, the program produces no feature value and returns false.
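The accumulate-then-average step can be sketched as follows. The sketch assumes that each of the 128 outputs X0 to X127 is a single number and that "no return value" corresponds to returning `None`; the function name `average_lstm_outputs` is hypothetical.

```python
import math


def average_lstm_outputs(values, expected_count=128):
    """Average the output values X_0 ... X_127.

    Returns the mean, or None when the set is incomplete or contains an
    invalid value, mirroring the "no feature value" case in the text.
    """
    if len(values) != expected_count:
        return None
    for value in values:
        # Reject non-numeric entries as well as NaN and infinite values.
        if not isinstance(value, (int, float)):
            return None
        if math.isnan(value) or math.isinf(value):
            return None
    return sum(values) / expected_count
```

A complete, valid set of 128 values yields its mean, while a short or corrupted set yields `None`.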

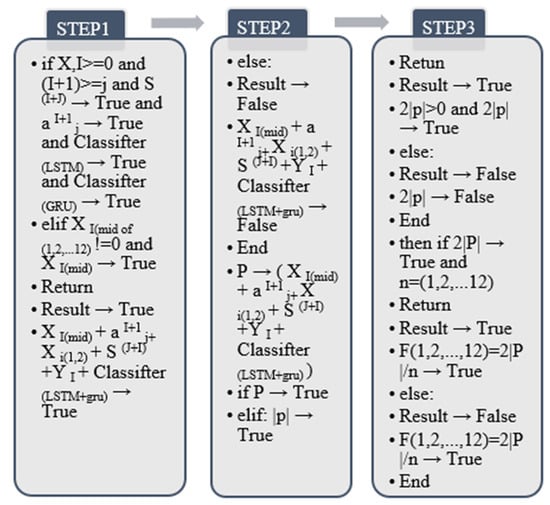

4.3.6. Gated Recurrent Unit GRU Algorithm

After the average output is obtained from the matrix output eigenvalues of the previous round, a step is needed to effectively eliminate the gradient effect of the recurrent neural network (RNN) algorithm. This paper sets this step according to the definition of the GRU. The logic model of the gated recurrent unit is similar to that of the LSTM. The same 128 vector sets are output by the three GRU layers, and finally the 12 kinds of human behaviors in the HATP sample collection library are output according to the actual human behavior recognition method. The output of the algorithm is shown in Figure 17 [38] below:

Figure 17.

GRU algorithm output graph.

GRU algorithm output:

The significance of this output is that, building on the core architecture of the previous LSTM, the gated recurrent algorithm supplements the execution process of the previous RNN algorithm in a single pass. The output eigenvalues are the values of the gated algorithm. The algorithm improves on the original LSTM: each matrix dataset is added using the SUM summation method, and the final result is divided by 12 to obtain the 12 feature sets of human behavior data. The division by 12 takes an average of the total eigenvalues of all GRUs, where 12 is the number of human behavior categories. The implementation of this algorithm is similar to that of the LSTM algorithm: the output and eigenvalue calculations are performed in matrix form through 4 sets of features. This process assists the previous link; its function is to prevent consolidation and to prevent abnormal eigenvalues at boundary values. The GRU data are returned when the final algorithm output contains feature output.
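As a rough illustration of the summation and division by 12 described above, the following sketch sums several groups of feature values and divides the total by the number of behavior categories. The function name `gru_average` and the sample values are hypothetical; the exact formula is given only in Figure 17.

```python
from typing import Sequence

NUM_BEHAVIORS = 12  # number of human behavior categories stated in the paper


def gru_average(feature_groups: Sequence[Sequence[float]]) -> float:
    """Sum every group of GRU feature values, then divide by 12.

    The grouping into separate lists stands in for the "4 sets of
    features" mentioned in the text; this is an assumption of the sketch.
    """
    total = sum(sum(group) for group in feature_groups)
    return total / NUM_BEHAVIORS


# Hypothetical values, chosen only so the arithmetic is easy to follow.
groups = [[1.0, 2.0, 3.0], [4.0, 5.0], [6.0], [3.0]]
print(gru_average(groups))  # 24.0 / 12 = 2.0
```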

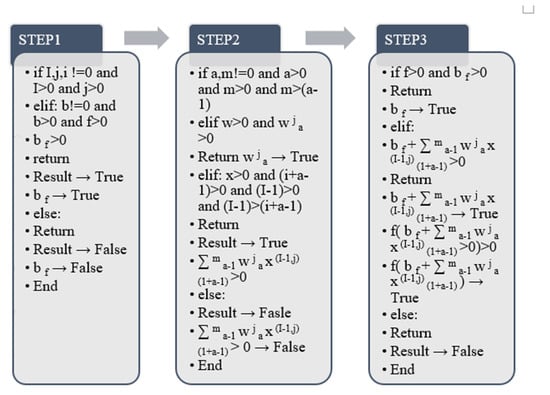

4.3.7. Fusion Algorithm Output of Wearable Devices (Core Part)

The wearable medium is used to uniformly extract the algorithmic feature data from all previous steps and transmit it to the detection mechanism for identification. The whole process includes two parts: feature extraction and feature fusion. The feature extraction part extracts all the output features of algorithms such as CNN, RNN, LSTM, and GRU. The feature fusion part integrates and classifies all the extracted features [39]. After machine learning, the classified human behavior data features are transmitted to the intelligent device, which recognizes and analyzes the fused data and test results according to the content of machine learning. The working principle model of the wearable sensor used in this paper is gyroscopic. The overall implementation process for wearable sensors falls into 3 broad categories. The method adopted in this paper [39] is shown in Figure 18:

Figure 18.

Input and output model diagram under the wearable sensor.
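The two-part process of feature extraction and feature fusion can be sketched as follows. The paper does not state how the features are combined, so simple concatenation in a fixed stage order is used here purely as an assumption; `STAGES` and `fuse_features` are hypothetical names.

```python
from typing import Dict, List

# Stage names taken from the text; the fixed ordering is an assumption.
STAGES = ("CNN", "RNN", "LSTM", "GRU")


def fuse_features(stage_outputs: Dict[str, List[float]]) -> List[float]:
    """Collect the output features of every stage and fuse them.

    Extraction: look up each stage's feature vector.
    Fusion: concatenate the vectors in stage order (illustrative choice,
    not necessarily the fusion rule used in the paper).
    """
    missing = [stage for stage in STAGES if stage not in stage_outputs]
    if missing:
        raise KeyError(f"missing stage outputs: {missing}")
    fused: List[float] = []
    for stage in STAGES:
        fused.extend(stage_outputs[stage])
    return fused


example = {"CNN": [1.0], "RNN": [2.0, 3.0], "LSTM": [4.0], "GRU": [5.0]}
print(fuse_features(example))  # [1.0, 2.0, 3.0, 4.0, 5.0]
```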

Given the particular input and output process of the wearable sensor and the summary of the algorithms in the previous research, the wearable medium sensor in this link needs to go through three processes at the same time: acquiring features, fusing features, and outputting features. To realize this operation, this paper combines the three parts of the algorithm to obtain the following calculation formula and algorithm output [40], as shown in Figure 19.

Figure 19.

Output diagram of wearable sensor algorithm.

In the above formula, this paper adds the contents of all the algorithm formulas used in the previous stages in sequence. The sequence range is 1–12, and there are positive and negative differences among the 12 human behavior identification features. First, the formula identifies and transfers the wearable sensor data and subtracts the sum of all acquired features from a standard value of 1 (the summation part is the sum of all the data of each behavioral feature). Second, because feature extraction can produce both positive and negative values, this paper takes the absolute value to obtain a positive number. Finally, the total difference is multiplied by 2 [40]. The value range of n is 1–12 (12 kinds of human behavior characteristic data). In the algorithm design, the features of the algorithms in each previous link are extracted and added one by one. After the algorithm data of one step are obtained, they are divided by n, where n represents the specific classification of the currently obtained fusion data. After the human behavior of the corresponding classification feature is acquired, the subsequent feature acquisition ends and is not repeated.
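Read literally, the steps described above (subtract the feature sum from 1, take the absolute value, multiply by 2, divide by n) can be sketched as below. The order in which the doubling and the division by n are applied is an assumption, since the original formula is available only as Figure 19; `wearable_fusion` is a hypothetical name.

```python
import math
from typing import Sequence

STANDARD_VALUE = 1.0   # the "standard value of 1" from the text
NUM_BEHAVIORS = 12     # n ranges over the 12 behavior categories


def wearable_fusion(features: Sequence[float], n: int) -> float:
    """Illustrative fusion value for behavior category n (1..12).

    Computes 2 * |1 - sum(features)| / n, one possible reading of the
    description in the text rather than the paper's exact formula.
    """
    if not 1 <= n <= NUM_BEHAVIORS:
        raise ValueError("n must be between 1 and 12")
    difference = abs(STANDARD_VALUE - math.fsum(features))  # always >= 0
    return 2.0 * difference / n


# Hypothetical feature values: sum is 1.5, so |1 - 1.5| = 0.5.
print(wearable_fusion([0.25, 0.5, 0.75], n=4))  # 2 * 0.5 / 4 = 0.25
```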

4.3.8. Machine Learning Model Algorithm Output (Core Part)

Machine learning is the last step and the most important part of the research in this paper. This operation associates the wearable sensor with the feature extraction of the previous algorithms. Based on the working principle of the wearable sensor and on the model structure and output method of the previous algorithms, this paper proposes the detection process model [41] shown in Figure 20 and the corresponding algorithm output shown in Figure 21.

Figure 20.

Model diagram of machine learning detection results.

Figure 21.

Machine learning algorithm output.

The algorithm performs the corresponding machine learning and recognition output according to the 12 human behavior characteristics of the above operation, using the standard value of 1 as a comparison parameter. In the machine learning process, x, y, and c are used as the eigenvalues of the output results: x corresponds to the HATP algorithm and the convolutional neural network (CNN) algorithm, y corresponds to the recurrent neural network (RNN) algorithm, and c corresponds to the eigenvalues of LSTM and GRU. The values in the remaining algorithms specify the eigenvalues. The weighted sum of the behavioral feature data from each preceding algorithm is subtracted from the standard value, and the corresponding positive value is obtained. The result, m, is used as the final identification basis of machine learning for later behavior detection. Machine learning is similar to the output design of the wearable sensor, which also uses a standard value of 1 minus the eigenvalues of each process. The difference is that in machine learning, a result is a correct learning mode whether it is positive or negative. The algorithm therefore squares the value first and then takes the square root to ensure that the calculation result of the original equation is a positive number. The weighted sum is taken over the 12 classifications of human behavior features, which are refined accordingly so that the mechanism can adapt to various learning contents and better identify and detect target features later.
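The square-then-square-root step described above can be sketched for a single behavior category as follows. The weights are not given in the text, so equal weights are assumed here, and `ml_output` is a hypothetical name; the paper's actual formula is shown in Figure 21.

```python
import math
from typing import Tuple

STANDARD_VALUE = 1.0  # the "standard value of 1" from the text


def ml_output(x: float, y: float, c: float,
              weights: Tuple[float, float, float] = (1.0, 1.0, 1.0)) -> float:
    """Illustrative identification value m for one behavior category.

    x: eigenvalue for (HATP, CNN); y: eigenvalue for RNN;
    c: eigenvalue for (LSTM, GRU). Equal weights are an assumption.
    Squaring and then taking the square root yields a non-negative
    result, equivalent to the absolute value of the difference.
    """
    weighted_sum = weights[0] * x + weights[1] * y + weights[2] * c
    return math.sqrt((STANDARD_VALUE - weighted_sum) ** 2)


# Hypothetical eigenvalues: weighted sum is 1.5, so 1 - 1.5 = -0.5.
print(ml_output(0.5, 0.25, 0.75))  # sqrt((-0.5) ** 2) = 0.5
```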

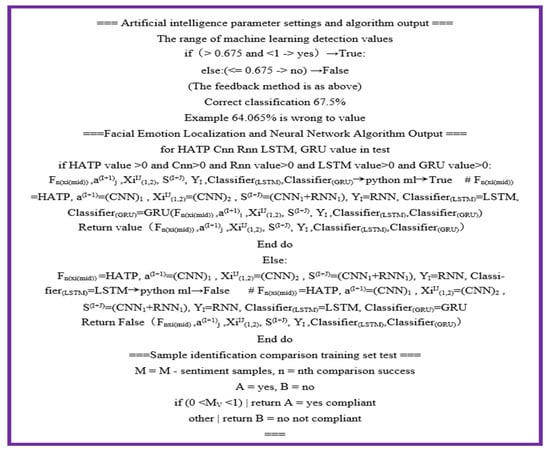

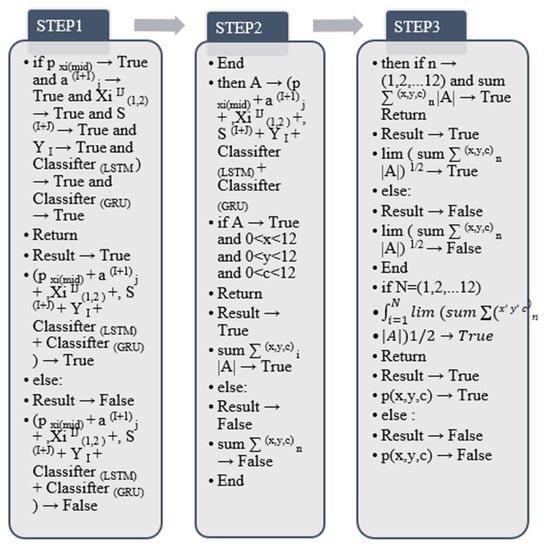

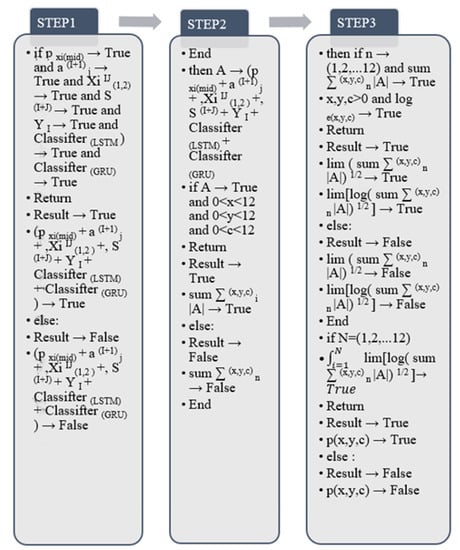

4.3.9. Python Main Control Output Mode

After all the above steps are compiled and completed, the overall program control output algorithm of the main program under the Python architecture is executed. The algorithm includes the input and output parts of all steps. The final algorithm [41] is shown in Figure 22.

Figure 22.

Python main program algorithm output.

In the design of the Python main program algorithm, the overall idea is similar to the process of machine learning, but the overall link needs to be effectively controlled in the output result. Here, p stands for Python, x controls the HATP algorithm and the CNN algorithm in the corresponding behavior category, y controls the RNN algorithm, and c corresponds to the LSTM and GRU algorithms. Because all control outputs correspond to the 12 human behavior categories given in the paper, the upper bound of the integral is 12. In this step, the eigenvalues of each group of algorithms are first added in sequence; that is, the classification features are the features of all algorithm steps in this paper under real and effective conditions, and there are 12 types. The square root is taken after the weighted summation to improve the detection output value and range of the mechanism. The limit of the square root value outputs the maximum value of each classification sample in the process of the corresponding behavior feature fusion. The final integral calculation outputs the effective range of the various feature fusion data within the corresponding range and controls the final algorithm to obtain effective accuracy.

5. Results

5.1. Analysis of Experimental Results (Analysis of Algorithm Execution Results in Each Step)

Based on the output of the machine learning algorithm and the other algorithms under the Python overall control algorithm, combined with the sample data collected during this research, the following experimental results are obtained [41].

5.1.1. Wearable Sensor Algorithm Analysis

Based on the working principle and algorithm output of the wearable sensors described above, this paper conducts the corresponding experiments on the collected sample data. The 12 human behavior features have been extracted and fused uniformly during the operation. The data from sample collection to fusion output [41] are shown in Table 6.

Table 6.

Using wearable sensor feature fusion.

From the test data in the table above, it can be seen that the overall recognition rate of compound actions is lower than that of single human actions. This shows that, in human behavior recognition, the transition between two postures places higher demands on the equipment. At the same time, in compound human action recognition, the parsing time of the mechanism per unit time is also longer than that of the six single actions.

5.1.2. Machine Learning Algorithm Output Analysis (Output Interface Diagram + Data Table)

According to the machine learning recognition algorithm specified in Chapter 3, Research Methods, and the classification data after the fusion of wearable sensors, we conduct centralized detection on the collected samples. The data analysis for the detection process is shown in Table 7, and the actual detection data are shown in Table 8 (12 types of human behaviors):

Table 7.

Machine learning detection data analysis table.

Table 8.

Distribution of human behavior detection results under machine learning.

It can be seen from the data in Table 8 that the overall detection of the mechanism after machine learning under the algorithm achieves the expected effect. The values of the regression index and quadratic root likelihood are relatively low, indicating that there are errors and deviations in the machine's recognition of some human behaviors, which results in a low overall value [42]. This is a specific area where we need to continue improving and testing. In the later improvement process, the eigenvalues from each preceding algorithm will be accurately matched in the output of the machine learning algorithm, and the corresponding control operations will continue to be added to the mathematical formula.

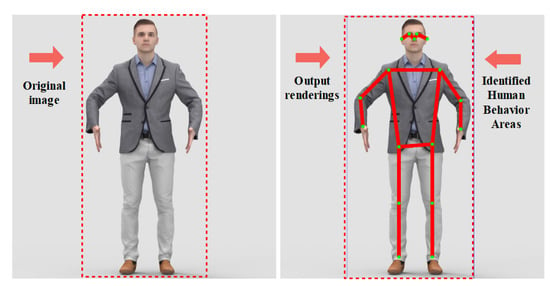

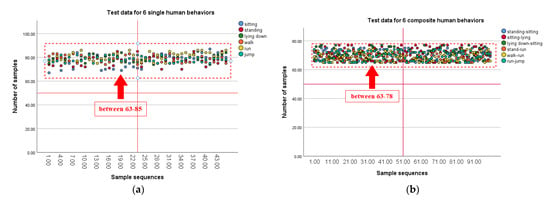

The above are the classification and detection results for the 12 human behavior characteristics under the control of the algorithm output by the machine learning mechanism. Overall, the detection rate for single human behavior postures is more than 80%, and the detection result for compound human behavior under machine learning is lower than that for the single type. This shows that the current mechanism needs to further improve the algorithm for capturing complex human behavior [43]. In the next step, this paper will carry out secondary sorting of the algorithm integration and output process to narrow the gap between the recognition rates of compound and single-type human behavior and, at the same time, improve the detection rate of the overall mechanism. The inspection diagram [43] is shown in Figure 23.

Figure 23.

Schematic diagram of machine learning detection results.

It can be seen from the machine learning detection output graph in Figure 23 that the mechanism realizes the preliminary detection of human behavior after the feature fusion and classification of the wearable sensor under machine learning. The subject in the detection result is standing, with the hand pointing downward. The mechanism judges the behavioral characteristics of the target through the movements of the face and limbs, and the red line area is the part that recognizes the human posture [44]. Our next step will be to refine the existing algorithm to add more detected regions from the identified points. Figure 24 analyzes the specific detection data through two sets of combined charts [45].

Figure 24.

(a) Line chart of machine learning detection under SPSS; (b) line chart of machine learning detection under SPSS.

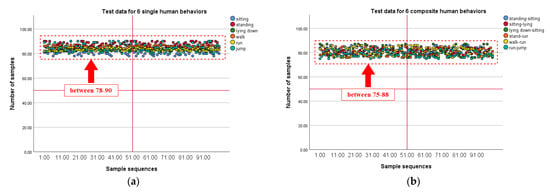

It can be seen from the above data that the machine can identify the human behavior data extracted and fused by wearable sensors under the existing algorithm. At present, the overall recognition and detection rate of machine learning is moderate. Figure 24 shows that the data can be identified by fusing the features. The detection values for some composite human behavior features are relatively low, concentrated between 65% and 70%, while the detection rate for single human behavior features is around 75% [45]. The output process of the algorithm needs to be re-optimized, with particular improvement for composite human behavior data.

5.2. Machine Learning Improvement Algorithms

Based on the above detection results, we optimize the output of some regions of the previous algorithm [45], as shown in Figure 25.

Figure 25.

The output of the improved machine learning algorithm.

The improvement retains the original calculation process and uses logarithms to control the differences of the eigenvalues of the previous algorithm relative to the bases x, y, and c. We take ex, ey, and ec as the bases of the logarithm and the difference between the standard value 1 and all feature algorithms as the argument of the logarithm, and calculate the value of each variable under its own logarithm [46]. In the machine learning process, this paper adopts true and false values: the detection result is returned under a true value, and no result is returned under a false value. The range 1–12 represents the 12 kinds of body behaviors, and the sum of the feature values of each kind of body behavior is the whole content to be processed by machine learning. By abbreviation, the bases ex, ey, and ec correspond to (HATP, CNN), RNN, and (LSTM, GRU), respectively. The eigenvalues above are subtracted from the standard value of 1, and the difference is squared and then square-rooted so that the final value is greater than 0. The final result is the sum of the 12 groups of corresponding behavioral feature values. Previous algorithm outputs failed to be computed by changes in their functions. This round of new algorithm operations reduces the error between different algorithms to a certain extent and, at the same time, enables the mechanism to match the algorithm output of each part more accurately. In addition, the improved machine learning algorithm raises the matching degree of the initial feature value to 72.5%.

5.3. Python Main Program Improvement Algorithm

While optimizing the output of the machine learning algorithm, we also improved the Python main program algorithm to better control the connection between the algorithms in each research step. The specific improvement [47] is shown in Figure 26.

Figure 26.

The output diagram of the improved algorithm of the Python main program.

Because the improved machine learning algorithm adopts logarithmic output for the variables x, y, and c to narrow the gap between the detection output eigenvalues in the calculation, the improved Python algorithm needs to correspond to that process [47]. The architecture of the improved Python main program algorithm is similar to the process of machine learning. The three bases x, y, and c are first weighted and summed and then squared before the logarithm is taken, as in machine learning. The limit method maximizes the log value based on the square root and calculates the sum of the characteristics that are closest to the 12 human behaviors. The final integration controls the output range, keeping the final output within the range of features 1–12. The output processes x, y, and c correspond to (HATP, CNN), RNN, and (LSTM, GRU), respectively. The improved Python main control algorithm therefore sums the three groups of variables over the area of the corresponding algorithm and calculates the result in logarithmic form, which reduces the impact of each algorithm's output process on the other algorithms.

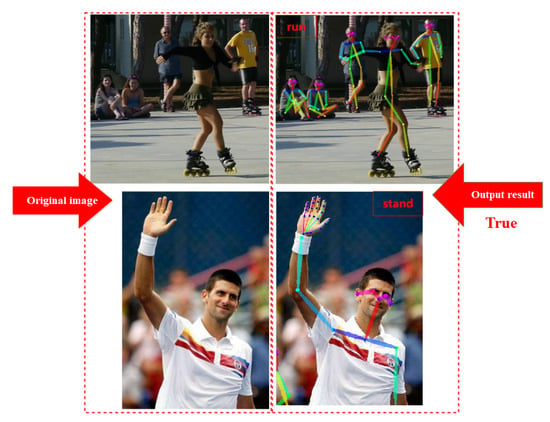

5.4. Improved Test (Test Effect Chart + SPSS Data Analysis Chart and Table)

In the detection results [48] of Figure 27, two sets of human behavior features are used for detection. The left side is the original image, and the right side is the detected result. Compared with the actual situation, the mechanism completes the detection of the target under the improved algorithm. The human behaviors in the two sets of pictures are “running” and “standing” [48]. The detection output of the mechanism after machine learning is consistent with the actual situation, so the detection is successful.

Figure 27.

Schematic diagram of improved algorithm detection under machine learning.

By re-improving the algorithm, this paper realizes the detection of human behavior characteristics from the wearable sensor by the current detection mechanism under machine learning [49]. Comparing the previous data with Figure 28 [49] shows that, after the improvement, the mechanism recognizes the behavioral features of the target considerably better than before. Under the new machine learning algorithm, the average detection rate of single behavior features is about 85%, and the detection rate of compound behavior features is about 82.5%, a significant improvement over the results before the improvement. Table 9 presents the final detection data under the new algorithm.

Figure 28.

(a) SPSS statistical chart of the detection results after the improved machine learning algorithm (b) SPSS statistical chart of the detection results after the improved machine learning algorithm.

Table 9.

The improved human behavior detection data statistics table.

From the data in the above table, we can see that after the logarithm method is used in the output control link of machine learning, the detection rate of the entire detection process improves to a certain extent. In particular, in the detection of compound human behavior, the recognition rate of each behavior improves relative to its value before optimization. The final algorithm output data are shown in Table 10.

Table 10.

Final algorithm output data table.

The final algorithm output statistics table reflects both the preliminary experiments of the existing research and the operation of the improved algorithm, and the current overall output is good. The mechanism can meet the current requirements for recognizing and judging single-type and compound-type postures in human behavioral characteristics.

5.5. Summary

Through the improvement of the machine learning algorithm and the data extraction of the previous CNN, RNN, LSTM, and GRU algorithms, data feature fusion is carried out in the wearable sensor, and the machine learning algorithm used in this paper meets the requirements. With the current detection requirements met overall, the overall detection rate has increased from about 79% to the current 83.6%, an increase of about 4.6 percentage points [50,51,52,53,54]. The research process of this paper designs the initial algorithm from the steps of several existing algorithms and finally integrates the features of multiple links to obtain a machine learning-based human behavior feature classification under wearable medium detection. Overall, there are still some detection errors in the design and experiments of this paper, and some targets have not been identified. We need to continue to organize the algorithm output in the research steps and optimize the machine learning in later research, in the hope of obtaining better detection results.
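The improvement quoted above can be checked with a short calculation that separates the absolute gain (in percentage points) from the relative gain. The variable names are illustrative, and the starting value of 79% is the approximate figure given in the text.

```python
# Detection rates quoted in the summary (the "before" value is approximate).
before = 79.0   # overall detection rate before the improvement, in percent
after = 83.6    # overall detection rate after the improvement, in percent

absolute_gain = after - before                 # difference in percentage points
relative_gain = absolute_gain / before * 100   # gain relative to the starting rate

print(f"{absolute_gain:.1f} percentage points")  # 4.6 percentage points
print(f"{relative_gain:.1f}% relative")          # 5.8% relative
```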

6. Conclusions

6.1. Dataset Usage Analysis

The dataset is used in two parts of this research: data collection and data detection. In the early stage, this paper uses the HATP dataset to classify human behavior characteristics into two types, single behavior and composite behavior. After classifying and storing the samples and outputting the experiments, this paper arranges the odd and even numbers according to the labels. In the final data extraction, the overall detection in the data collection process meets the requirements under the algorithm, and some unrecognized human behavior characteristics need to be adjusted in the next step.

For the data detection set, this paper tests the results of the CNN, RNN, LSTM, GRU, and other algorithms at one time and uses wearable technology to perform feature fusion. Because a distributed algorithm is used, the algorithm output is performed on each dataset in turn, the data fusion is completed under the action of the wearable sensor, and the detection of human behavior is realized. Although the mechanism after machine learning achieves the detection goal and realizes the data analysis after feature extraction of multiple sets of fusion data, further optimization is needed in the algorithm output of each link and in the fusion extraction of the global wearable sensors: in the final experiment, unrecognized targets still existed, which illustrates the limitations of the mechanism.

6.2. Analysis of the Research Results of This Paper

The research contribution of this paper builds on the summary, from the literature survey, of the algorithms used by researchers in human behavior detection. Following the research process required in this paper, a new theoretical framework is given, and existing algorithms are improved: wearable sensors are used to extract the fusion features of the various algorithms, realizing human behavior detection of single features and composite features under machine learning. This addresses the problem that the original mechanism could only detect a single posture type. This paper first uses the HATP algorithm to classify and mark all 12 human behavior samples and output them in binary mode. Second, the model graph design and algorithm output steps of the CNN, RNN, LSTM, and GRU algorithms are studied, and wearable sensors are used for feature value extraction, fusion, and classification, so that the detection mechanism after machine learning is given enough sample data. Finally, target detection is performed by the machine learning mechanism after training. The SPSS statistical analysis table after detection shows that the P value in wearable sensors and machine learning is small and that the overall detection rate of composite human behavior characteristics (composite posture) is low. Through the later improvement of the output algorithm, the overall detection accuracy of machine learning in compound human behavior detection has been greatly improved, and the overall average detection rate is about 83.6%.

Building on the existing Python-based algorithm for accurate artificial intelligence facial expression detection, the algorithm has been improved. With reference to the human behavior detection algorithm design in the above links, the CNN, RNN, GRU, and other algorithms are integrated under the wearable sensor, and human behavior detection under machine learning is finally realized. In later research, the existing algorithm architecture will be combined with human psychological emotions, and more complex algorithms will be used to realize a comprehensive artificial intelligence detection mechanism for human behavior and emotion under wearable technology.

At present, the limitation of the research is that the overall control process of machine learning needs further improvement. Because the artificial intelligence mechanism is used for machine learning for the first time, the most accurate use of the algorithm has not yet been achieved. The detection rate shows that there is still room for improvement.

The shortcoming of the research lies in how the paper extracts human behavior features and uses wearable sensors to fuse and classify the output. Although extensive screening was performed in algorithm selection and sample collection, some areas still need to be changed in practice. For example, in the detection of complex human behavior features, the detection range of machine learning is larger than that for single human behavior features, which shows that, in the later stage, we need to select more suitable samples for such features and have machine learning capture moving targets more precisely during posture transitions.

7. Future Work

The future work of this paper is divided into two parts. First, it is necessary to continue to test and improve the existing algorithm, to identify the control points of compound behavior recognition during machine learning detection of human behavior, and to determine how to improve the algorithm of the existing mechanism; accurate capture is a part that needs to be considered. Second, the previous results on the facial algorithm for human emotion detection will be connected with the currently used human behavior recognition algorithm so that, in the process of machine learning, the characteristics of human faces, psychological emotions, and so on can be analyzed together with behavior under the action of wearable sensors. This work is expected to start from the deep convolutional neural network (CNN) algorithm and the recurrent neural network (RNN) algorithm and use the matrix-vector output method to connect the two parts, emotion and behavior, so that machine learning can add the previous emotion detection algorithm to the original human behavior recognition process and form a unified detection mechanism.

Author Contributions

Conceptualization, J.Z.; Writing (original draft), J.Z.; Supervision, S.B.G.; Validation, J.Z.; Methodology (proposing the new method), J.Z. and S.B.G.; Formal analysis, J.Z.; Investigation, J.Z.; Resources, S.B.G. and T.C.M.; Software, J.Z. and S.B.G.; Writing (review and editing), C.V., M.S.R., and T.C.M. All authors have read and agreed to the published version of the manuscript.

Funding

Institutional performance-Projects to finance excellence in RDI, Contract No. 19PFE/30.12.2021 and a grant of the National Center for Hydrogen and Fuel Cells (CNHPC)—Installations and Special Objectives of National Interest (IOSIN) and BEIA projects: AISTOR, FinSESco, CREATE, I-DELTA, DEFRAUDIFY, Hydro3D, FED4FIRE—SO-SHARED, AIPLAN—STORABLE, EREMI, NGI-UAV-AGRO and by European Union’s Horizon 2020 research and innovation program under grant agreements No. 872172 (TESTBED2) and No. 777996 (SealedGRID); and partially supported by Shanghai Qiao Cheng Education Technology Co., Ltd. (Shanghai, China).

Institutional Review Board Statement

At the request of the author, this article is derived from the author’s existing research results and citing icons in other public publications on the Internet. All other content is the original work of the author. This article can guarantee that there is no infringement of any kind.

Informed Consent Statement

This paper collects data according to the needs of the experiment, and signs a confidentiality agreement with all patients to ensure that all data is only used in the experiment of this paper, and that no data is disclosed for other purposes.

Data Availability Statement

The data comes from the use and improvement of python code, and the system can function normally.

Acknowledgments

All authors of this article have participated in the research of the article and expressed their approval for the research content of this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mustaqeem; Kwon, S. A CNN-Assisted Enhanced Audio Signal Processing for Speech Emotion Recognition. Sensors 2019, 20, 183. [Google Scholar] [CrossRef]

- Richter, T.; Fishbain, B.; Fruchter, E.; Richter-Levin, G.; Okon-Singer, H. Machine learning-based diagnosis support system for differentiating between clinical anxiety and depression disorders. J. Psychiatr. Res. 2021, 141, 199–205. [Google Scholar] [CrossRef] [PubMed]

- Khan, A.N.; Ihalage, A.A.; Ma, Y.; Liu, B.; Liu, Y.; Hao, Y. Deep learning framework for subject-independent emotion detection using wireless signals. PLoS ONE 2021, 16, e0242946. [Google Scholar] [CrossRef] [PubMed]

- Haines, N.; Southward, M.W.; Cheavens, J.S.; Beauchaine, T.; Ahn, W.Y. Using computer-vision and machine learning to automate facial coding of positive and negative affect intensity. PLoS ONE 2019, 14, e0211735. [Google Scholar] [CrossRef]

- Hashemnia, S.; Grasse, L.; Soni, S.; Tata, M.S. Human EEG and Recurrent Neural Networks Exhibit Common Temporal Dynamics During Speech Recognition. Front. Syst. Neurosci. 2021, 15, 617605. [Google Scholar] [CrossRef] [PubMed]

- Wray, T.B.; Luo, X.; Ke, J.; Pérez, A.E.; Carr, D.J.; Monti, P.M. Using Smartphone Survey Data and Machine Learning to Identify Situational and Contextual Risk Factors for HIV Risk Behavior Among Men Who Have Sex with Men Who Are Not on PrEP. Prev. Sci. 2019, 20, 904–913. [Google Scholar] [CrossRef]

- Zang, S.; Ding, X.; Wu, M.; Zhou, C. An EEG Classification-Based MFethod for Single-Trial N170 Latency Detection and Estimation. Comput. Math. Methods Med. 2022, 2022, 6331956. [Google Scholar] [CrossRef]

- van Noord, K.; Wang, W.; Jiao, H. Insights of 3D Input CNN in EEG-based Emotion Recognition. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Mexico city, Mexico, 1–5 November 2021; pp. 212–215. [Google Scholar] [CrossRef]

- Wei, Y.X.; Liu, B.P.; Zhang, J.; Wang, X.T.; Chu, J.; Jia, C.X. Prediction of recurrent suicidal behavior among suicide attempters with Cox regression and machine learning: A 10-year prospective cohort study. J. Psychiatr. Res. 2021, 144, 217–224. [Google Scholar] [CrossRef]

- Shukla, S.; Arac, A. A Step-by-Step Implementation of DeepBehavior, Deep Learning Toolbox for Automated Behavior Analysis. J. Vis. Exp. 2020, 156, 60763. [Google Scholar] [CrossRef]

- Noor, S.T.; Asad, S.T.; Khan, M.M.; Gaba, G.S.; Al-Amri, J.F.; Masud, M. Predicting the Risk of Depression Based on ECG Using RNN. Comput. Intell. Neurosci. 2021, 2021, 1299870. [Google Scholar] [CrossRef]

- Ramzan, M.; Dawn, S. Fused CNN-LSTM deep learning emotion recognition model using electroencephalography signals. Int. J. Neurosci. 2021, 12, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Kasper, S. The future direction of psychopathology. World J. Biol. Psychiatry 2010, 11, 843. [Google Scholar] [CrossRef]

- Li, A.; Yi, S. Emotion Analysis Model of Microblog Comment Text Based on CNN-BiLSTM. Comput. Intell. Neurosci. 2022, 2022, 1669569. [Google Scholar] [CrossRef] [PubMed]

- Fysh, M.C.; Bindemann, M. Human-Computer Interaction in Face Matching. Cogn. Sci. 2018, 42, 1714–1732. [Google Scholar] [CrossRef]

- Feng, X.; Wei, Y.; Pan, X.; Qiu, L.; Ma, Y. Academic Emotion Classification and Recognition Method for Large-scale Online Learning Environment-Based on A-CNN and LSTM-ATT Deep Learning Pipeline Method. Int. J. Environ. Res. Public Health 2020, 17, 1941. [Google Scholar] [CrossRef]

- Albein-Urios, N.; Youssef, G.; Klas, A.; Enticott, P.G. Inner Speech Moderates the Relationship Between Autism Spectrum Traits and Emotion Regulation. J. Autism Dev. Disord. 2021, 51, 3322–3330. [Google Scholar] [CrossRef]

- Coronado, E.; Venture, G. Towards IoT-Aided Human-Robot Interaction Using NEP and ROS: A Platform-Independent, Accessible and Distributed Approach. Sensors 2020, 20, 1500. [Google Scholar] [CrossRef]

- Falowski, S.M.; Sharan, A.; Reyes, B.A.; Sikkema, C.; Szot, P.; Van Bockstaele, E.J. An evaluation of neuroplasticity and behavior after deep brain stimulation of the nucleus accumbens in an animal model of depression. Neurosurgery 2011, 69, 1281–1290. [Google Scholar] [CrossRef]

- Gill, S.; Mouches, P.; Hu, S.; Rajashekar, D.; MacMaster, F.P.; Smith, E.E.; Forkert, N.D.; Ismail, Z. Using Machine Learning to Predict Dementia from Neuropsychiatric Symptom and Neuroimaging Data. J. Alzheimers Dis. 2020, 75, 277–288. [Google Scholar] [CrossRef] [Green Version]

- Liu, M.; Jiao, L.; Liu, X.; Li, L.; Liu, F.; Yang, S. C-CNN: Contourlet Convolutional Neural Networks. In IEEE Transactions on Neural Networks and Learning Systems; IEEE: New York, NY, USA, 2021; Volume 32, pp. 2636–2649. [Google Scholar] [CrossRef]

- Graterol, W.; Diaz-Amado, J.; Cardinale, Y.; Dongo, I.; Lopes-Silva, E.; Santos-Libarino, C. Emotion Detection for Social Robots Based on NLP Transformers and an Emotion Ontology. Sensors 2021, 21, 1322. [Google Scholar] [CrossRef]

- He, J. Algorithm Composition and Emotion Recognition Based on Machine Learning. Comput. Intell. Neurosci. 2022, 2022, 1092383. [Google Scholar] [CrossRef]

- Hammam, N.; Sadeghi, D.; Carson, V.; Tamana, S.K.; Ezeugwu, V.E.; Chikuma, J.; van Eeden, C.; Brook, J.R.; Lefebvre, D.L.; Moraes, T.J.; et al. The relationship between machine-learning-derived sleep parameters and behavior problems in 3- and 5-year-old children: Results from the CHILD Cohort study. Sleep 2020, 43, zsaa117. [Google Scholar] [CrossRef]

- Gross, J.J. Emotion regulation: Affective, cognitive, and social consequences. Psychophysiology 2002, 39, 281–291. [Google Scholar] [CrossRef]

- Hoemann, K.; Xu, F.; Barrett, L.F. Emotion words, emotion concepts, and emotional development in children: A constructionist hypothesis. Dev. Psychol. 2019, 55, 1830–1849. [Google Scholar] [CrossRef]

- Hochheiser, H.; Valdez, R.S. Human-Computer Interaction, Ethics, and Biomedical Informatics. Yearb. Med. Inf. 2020, 29, 93–98. [Google Scholar] [CrossRef]

- Hogeveen, J.; Salvi, C.; Grafman, J. ‘Emotional Intelligence’: Lessons from Lesions. Trends Neurosci. 2016, 39, 694–705. [Google Scholar] [CrossRef]

- Jeong, I.C.; Bychkov, D.; Searson, P.C. Wearable Devices for Precision Medicine and Health State Monitoring. IEEE Trans. Biomed. Eng. 2019, 66, 1242–1258. [Google Scholar] [CrossRef]

- Kakui, K.; Fujiwara, Y. First in Situ Observations of Behavior in Deep-Sea Tanaidacean Crustaceans. Zool. Sci. 2020, 37, 303–306. [Google Scholar] [CrossRef]

- Lin, X.; Wang, C.; Lin, J.M. Research progress on analysis of human papillomavirus by microchip capillary electrophoresis. Se Pu 2020, 38, 1179–1188. [Google Scholar] [CrossRef]

- Haines, N.; Bell, Z.; Crowell, S.; Hahn, H.; Kamara, D.; McDonough-Caplan, H.; Shader, T.; Beauchaine, T.P. Using automated computer vision and machine learning to code facial expressions of affect and arousal: Implications for emotion dysregulation research. Dev. Psychopathol. 2019, 31, 871–886. [Google Scholar] [CrossRef] [PubMed]

- Kelly, J.T.; Campbell, K.L.; Gong, E.; Scuffham, P. The Internet of Things: Impact and Implications for Health Care Delivery. J. Med. Internet Res. 2020, 22, e20135. [Google Scholar] [CrossRef] [PubMed]

- Laureanti, R.; Bilucaglia, M.; Zito, M.; Circi, R.; Fici, A.; Rivetti, F.; Valesi, R.; Oldrini, C.; Mainardi, L.T.; Russo, V. Emotion assessment using Machine Learning and low-cost wearable devices. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 576–579. [Google Scholar] [CrossRef]

- Oh, G.; Ryu, J.; Jeong, E.; Yang, J.H.; Hwang, S.; Lee, S.; Lim, S. DRER: Deep Learning-Based Driver’s Real Emotion Recognizer. Sensors 2021, 21, 2166. [Google Scholar] [CrossRef] [PubMed]

- Liu, J.; Wu, G.; Luo, Y.; Qiu, S.; Yang, S.; Li, W.; Bi, Y. EEG-Based Emotion Classification Using a Deep Neural Network and Sparse Autoencoder. Front. Syst. Neurosci. 2020, 14, 43. [Google Scholar] [CrossRef]

- Macaluso, R.; Embry, K.; Villarreal, D.J.; Gregg, R.D. Parameterizing Human Locomotion Across Quasi-Random Treadmill Perturbations and Inclines. In IEEE Transactions on Neural Systems and Rehabilitation Engineering; IEEE: New York, NY, USA, 2021; Volume 29, pp. 508–516. [Google Scholar] [CrossRef]

- Chan, F.H.F.; Barry, T.J.; Chan, A.B.; Hsiao, J.H. Understanding visual attention to face emotions in social anxiety using hidden Markov models. Cogn. Emot. 2020, 34, 1704–1710. [Google Scholar] [CrossRef] [PubMed]

- Mai, N.D.; Lee, B.G.; Chung, W.Y. Affective Computing on Machine Learning-Based Emotion Recognition Using a Self-Made EEG Device. Sensors 2021, 21, 5135. [Google Scholar] [CrossRef]

- Pandeya, Y.R.; Bhattarai, B.; Lee, J. Deep-Learning-Based Multimodal Emotion Classification for Music Videos. Sensors 2021, 21, 4927. [Google Scholar] [CrossRef]

- Lightner, A.L. The Present State and Future Direction of Regenerative Medicine for Perianal Crohn’s Disease. Gastroenterology 2019, 156, 2128–2130.e4. [Google Scholar] [CrossRef]

- Powell, A.; Graham, D.; Portley, R.; Snowdon, J.; Hayes, M.W. Wearable technology to assess bradykinesia and immobility in patients with severe depression undergoing electroconvulsive therapy: A pilot study. J. Psychiatr. Res. 2020, 130, 75–81. [Google Scholar] [CrossRef]

- Reisenzein, R. Cognition and emotion: A plea for theory. Cogn. Emot. 2019, 33, 109–118. [Google Scholar] [CrossRef]

- Guo, J.J.; Zhou, R.; Zhao, L.M.; Lu, B.L. Multimodal Emotion Recognition from Eye Image, Eye Movement and EEG Using Deep Neural Networks. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 3071–3074. [Google Scholar] [CrossRef]

- Li, R.; Liang, Y.; Liu, X.; Wang, B.; Huang, W.; Cai, Z.; Ye, Y.; Qiu, L.; Pan, J. MindLink-Eumpy: An Open-Source Python Toolbox for Multimodal Emotion Recognition. Front. Hum. Neurosci. 2021, 15, 621493. [Google Scholar] [CrossRef]

- Mehta, D.; Siddiqui, M.F.H.; Javaid, A.Y. Recognition of Emotion Intensities Using Machine Learning Algorithms: A Comparative Study. Sensors 2019, 19, 1897. [Google Scholar] [CrossRef] [PubMed]

- Liu, S.; Zhang, M.; Fang, M.; Zhao, J.; Hou, K.; Hung, C.C. Speech emotion recognition based on transfer learning from the FaceNet framework. J. Acoust. Soc. Am. 2021, 149, 1338. [Google Scholar] [CrossRef] [PubMed]

- Kiel, E.J.; Price, N.N.; Premo, J.E. Maternal comforting behavior, toddlers’ dysregulated fear, and toddlers’ emotion regulatory behaviors. Emotion 2020, 20, 793–803. [Google Scholar] [CrossRef] [PubMed]

- Zhuang, M.; Yin, L.; Wang, Y.; Bai, Y.; Zhan, J.; Hou, C.; Yin, L.; Xu, Z.; Tan, X.; Huang, Y. Highly Robust and Wearable Facial Expression Recognition via Deep-Learning-Assisted, Soft Epidermal Electronics. Research 2021, 2021, 9759601. [Google Scholar] [CrossRef] [PubMed]

- Aledavood, T.; Torous, J.; Triana Hoyos, A.M.; Naslund, J.A.; Onnela, J.P.; Keshavan, M. Smartphone-Based Tracking of Sleep in Depression, Anxiety, and Psychotic Disorders. Curr. Psychiatry Rep. 2019, 21, 49. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jinnuo, Z.; Goyal, S.B.; Tesfayohanis, M.; Omar, Y. Implementation of Artificial Intelligence Image Emotion Detection Mechanism Based on Python Architecture for Industry 4.0. J. Nanomater. 2022, 2022, 5293248. [Google Scholar] [CrossRef]

- Diwan, T.D.; Choubey, S.; Hota, H.S.; Goyal, S.B.; Jamal, S.S.; Shukla, P.K.; Tiwari, B. Feature Entropy Estimation (FEE) for Malicious IoT Traffic and Detection Using Machine Learning. Mob. Inf. Syst. 2021, 2021, 8091363. [Google Scholar] [CrossRef]

- Moorthy, T.V.K.; Budati, A.K.; Kautish, S.; Goyal, S.B.; Prasad, K.L. Reduction of satellite images size in 5G networks using machine learning algorithms. IET Commun. 2022, 16, 584–591. [Google Scholar] [CrossRef]

- Bedi, P.; Goyal, S.B.; Rajawat, A.S.; Shaw, R.N.; Ghosh, A. A Framework for Personalizing Atypical Web Search Sessions with Concept-Based User Profiles Using Selective Machine Learning Techniques. Adv. Comput. Intell. Technol. 2022, 218, 279–291. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).