Abstract

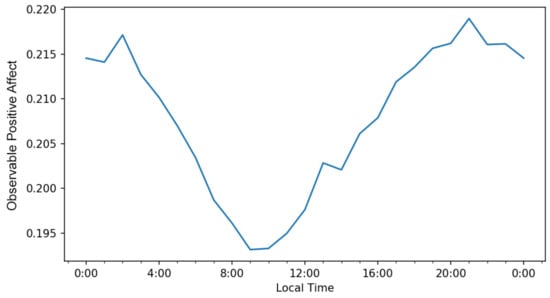

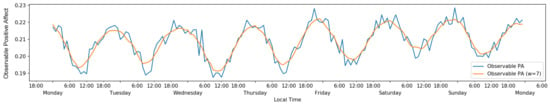

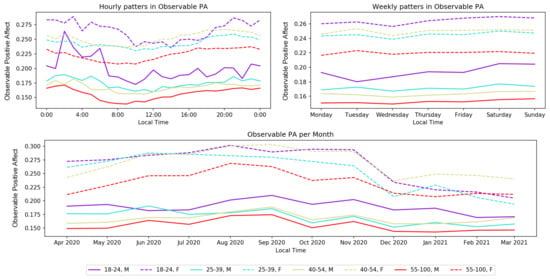

Policymakers and researchers worldwide are interested in measuring the subjective well-being (SWB) of populations. In recent years, new approaches to measuring SWB have begun to appear that use digital traces as the main source of information and show potential to overcome the shortcomings of traditional survey-based methods. In this paper, we propose a formal model for calculating an observable subjective well-being (OSWB) indicator based on posts from a social network, which utilizes demographic information and post-stratification techniques to make the data sample representative of the general population by selected characteristics. We applied the model to data from Odnoklassniki, one of the largest social networks in Russia, and obtained an OSWB indicator representative of the population of Russia by age and gender. For sentiment analysis, we fine-tuned several language models on RuSentiment and achieved state-of-the-art results. The calculated OSWB indicator demonstrated moderate to strong Pearson’s correlation and strong Spearman’s correlation with a traditional survey-based Happiness Index reported by the Russia Public Opinion Research Center, confirming the validity of the proposed approach. Additionally, we explored circadian (24 h) and circaseptan (7 day) patterns, and report several interesting findings for the population of Russia. Firstly, daily variations were clearly observed: the morning had the lowest level of happiness, and the late evening had the highest. Secondly, weekly patterns were clearly observed as well, with weekends being happier than weekdays. The lowest level of happiness occurs in the first three weekdays, and starting on Thursday, it rises and peaks during the weekend. Lastly, demographic groups showed different levels of happiness on a daily, weekly, and monthly basis, which confirms the importance of post-stratification by age group and gender in OSWB studies based on digital traces.

Keywords:

subjective well-being; observable subjective well-being; happiness index; social networks; user-generated content; digital traces; sentiment analysis; machine learning; language models; misclassification bias; computational social science

MSC:

68T50

1. Introduction

Throughout history, philosophers have considered happiness to be the highest good and the ultimate motivation of human action [1]. Subjective well-being (SWB), the scientific term for happiness and life satisfaction, describes the level of well-being people experience according to their subjective evaluations of their lives [2]. Recently, government agencies have also shown practical interest in SWB, considering SWB indicators as key guidelines for the development of the state in place of currently utilized indicators such as gross domestic product [3].

Individuals’ levels of SWB are influenced both by internal factors, such as personality [4] and outlook, and external factors, such as the society they live in or life events; thus, people’s SWB is subject to constant changes. Traditionally, SWB is measured through self-report surveys. Although these surveys are considered accurate and valid for measuring SWB [5], they also suffer from some considerable pitfalls. For example, self-reported answers may be exaggerated [6], various biases may affect the results (e.g., social desirability bias [7], question order bias [8], and demand characteristics [9]), momentary mood may influence the subjects’ responses to SWB questions [10], and people tend to recall past events that are consonant with their current affect [11]. Moreover, self-report surveys cannot provide constant updates of well-being to researchers and policymakers, and the cost of conducting them tends to be relatively high, thereby making it challenging for many countries to estimate well-being frequently [12,13,14]. In addition to the methodological and practical challenges of conducting self-report survey studies, there has been a recent decline in the level of trust in the results of such studies in several countries, particularly in Russia. According to a survey [15] conducted by the Russia Public Opinion Research Center in 2019, the index of trust in sociological data has continued to decline among Russians over the past three years. The total level of credibility of the results of social research was 58% (this is the total share of respondents who agree that the polls really reflect the real opinion of citizens). At the same time, 37% of citizens are skeptical about the results of opinion polls. Every second respondent (53%) thinks that the poll results are fabricated in order to influence people, persuading them to behave in a certain way. According to an opinion poll [16] by the Public Opinion Foundation in 2020, every third Russian (36%) does not trust the data of opinion polls.

Over the past few decades, there has been much progress in the measurement of SWB [17]. In particular, researchers across disciplines have proposed several innovative digital data sources, also called digital traces, and methods that have the potential to overcome the limitations of traditional survey-based methods [14], including measuring individual and collective well-being [13]. According to Howison et al. [18], digital trace data are found (rather than produced for research), event-based (rather than summary data), and longitudinal (since events occur over a period of time), and they are both produced through and stored by an information system. One of the most commonly used types of digital traces in SWB studies is user-generated content from social networks [12,19]. The most important epistemological advantage of digital trace data is that they present observed instead of self-reported behavior [20], which is also characterized by real-time observation with continuous follow-up. (In general, this issue can be debatable for different types of digital traces. For example, in the case of posts from social networks, the source of these data is still the subject with their subjective assessments, which are influenced by many factors. In the framework of this study, we still perceive these data as observable, since the data were originally generated by the subjects not for research, but for personal purposes.)

Moreover, because digital trace data are spread over time, they give researchers the opportunity to conduct studies that are otherwise impossible or at least difficult to conduct using traditional approaches [14]. Although there is still considerable controversy surrounding the classification, so far, most psychology research [21] has conceptualized SWB as either an assessment of life satisfaction or dissatisfaction (evaluative well-being measures) or as a combination of experienced affect (experienced well-being measures). At the same time, there is also a degree of uncertainty around the terminology in studies measuring SWB based on digital traces because they cannot be unambiguously attributed to either evaluative or experienced measures. We propose to use the term observable subjective well-being (OSWB), which explicitly characterizes the data source as observed (not self-reported) and does not make any assumptions about the evaluative or experienced nature of the data (both can be present in different proportions).

A growing body of literature [13,22,23] investigates different variations of OSWB indices calculated based on textual content from social media sites. For example, changes in the level of happiness and mood based on tweets were explored for the United States of America [24,25], the United Kingdom [26,27,28], China [29], Italy [30], the UAE [31], and Brazil [32]. However, one of the main challenges with existing studies is the lack of representative data—in terms of the data source, general population of internet users, or general population of the analyzed country. Although for many other languages, OSWB studies have already been conducted, the research of Russian-language content (e.g., [33,34,35]) remains quite limited and targets particular social networks, groups of users, or regions, but not the general population of Russia. For example, Panchenko [33] analyzed the Russian-language segment of Facebook by using a rule-based sentiment classification model with low classification quality. (Panchenko [33] used a dictionary based approach for sentiment analysis of Facebook posts, but tested it on the Books, Movies, and Cameras subsets of the ROMIP 2012 dataset [36]. The average accuracy for these 3 subsets was 32.16 and average was 26.06. At the same time, the classification metrics that the authors of the dataset were able to achieve when publishing it is higher [36].) He did not consider the demographics of the users, and did not measure the reliability of the proposed approach (although the last two items seem to be out of scope of Panchenko’s study). Shchekotin et al. [34] analyzed posts of 1350 of the most popular Vkontakte regional and urban communities, but they likewise did not consider any demographic characteristics and did not measure the reliability of the proposed approach. Kalabikhina et al. 
[35] explored the demographic temperature of 314 pro-natalist (with child-born reproductive attitudes) and 8 anti-natalist (with child-free reproductive attitudes) VKontakte groups. In general, all these studies were focused on a particular group of users or a sample of a social network audience, but they did not project the results with respect to the general population of Russia. Moreover, studies about Russian-language content suffer from a series of disadvantages, outlined in our recent review paper [37]. Furthermore, a recent poll [15] by the Russia Public Opinion Research Center (VCIOM) showed that the overwhelming majority (91%) of Russians are convinced that public opinion research is necessary. The majority of Russians (78%) believe that public opinion polls help to determine the opinion of people about the situation in their place of residence so that the authorities can take into account the opinion of the people when solving painful problems. Moreover, according to another recent survey [38] by VCIOM, welfare and well-being were most often cited by respondents as the main goals of Russia in the 21st century. Measures of SWB are likely to play an increasingly important role in policy evaluation and decisions because not only do both policymakers and individuals value subjective outcomes, but such outcomes also appear to be affected by major policy interventions [17].

In this paper, we propose a formal model for calculating the OSWB indicator based on posts from the chosen social network, which utilizes demographic information and post-stratification techniques to make the data sample representative of the general population by selected characteristics. We applied the model to data from Odnoklassniki, one of the largest social networks in Russia, and obtained an OSWB indicator representative of the population of Russia by age and gender. For sentiment analysis, we fine-tuned several language models on the RuSentiment dataset [39] and achieved state-of-the-art (SOTA) results of weighted (4.27 percentage points above existing SOTA) and macro (0.42 percentage points above existing SOTA). The calculated OSWB indicator demonstrated moderate to strong Pearson’s correlation and strong Spearman’s correlation with a traditional survey-based indicator reported by the Russia Public Opinion Research Center (VCIOM) [40], confirming the acceptable level of validity of the proposed indicator. Considering that the typical reliability of SWB scales is in the range of 0.50 to 0.84 [21,41,42,43,44,45] (and even between 0.40 and 0.66 for single-item measures, such as VCIOM Happiness [42]), the correlation corrected for unreliability is practically close to unity. Thus, we assume that the obtained correlation can be interpreted not as moderate, but as one of the highest correlations that can be achieved in behavioral sciences. Additionally, we explored circadian (24 h) and circaseptan (7 day) patterns, and report several interesting findings for the population of Russia (see Section 5.1 and Section 5.2).
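The correction for unreliability mentioned above is commonly computed with Spearman's correction for attenuation, which divides the observed correlation by the square root of the product of the two reliabilities. The following is a minimal sketch under stated assumptions: the function name `disattenuate` is hypothetical, the observed correlation of 0.6 and the OSWB reliability of 0.66 are illustrative placeholders rather than values reported in this paper, and 0.66 for the survey side is the upper bound quoted above for single-item measures.

```python
import math


def disattenuate(r_observed: float, rel_x: float, rel_y: float) -> float:
    """Spearman's correction for attenuation: r / sqrt(rel_x * rel_y)."""
    if not (0 < rel_x <= 1 and 0 < rel_y <= 1):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r_observed / math.sqrt(rel_x * rel_y)


# Hypothetical inputs for illustration only (not results from the paper).
r_corrected = disattenuate(r_observed=0.6, rel_x=0.66, rel_y=0.66)
print(round(r_corrected, 3))
```

With less reliable measures the same observed correlation maps to a larger corrected value, which is the reasoning behind reading a moderate observed correlation as a high one.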

The rest of the article is organized as follows. Section 2 describes related work, including existing SWB and OSWB studies, sentiment analysis, and comparisons of text analysis methods and traditional survey methods in sociological research. Section 3 presents a model for the calculation of the OSWB indicator based on posts from the social network. Section 4 describes the data from Odnoklassniki used for real-life application of the proposed model and sentiment classification models. Section 5 highlights key results of the Odnoklassniki data analysis. Section 6 provides the discussion of the results of the study. Section 7 describes the key limitations of the study. In Section 8, conclusions are drawn, and the main contributions of the study are articulated.

2. Related Work

2.1. Happiness and the Economy

National financial measures, such as gross domestic product (GDP), are typically used to assess policy effectiveness. However, GDP has been criticized as a weak indicator of well-being and, therefore, a misleading measure of national success and tool for public policies [13,46,47,48]. In 2011, the UN General Assembly, at the 65th session on the initiative of Bhutan and with the support of more than 50 states, adopted Resolution A/RES/65/309 entitled “Happiness: Towards a Holistic Approach to Development”. Recognizing that GDP by nature was not designed to, and does not adequately, reflect the happiness and well-being of individuals in a country, the UN General Assembly invited member states to pursue the elaboration of additional measures that can better capture the importance of the pursuit of well-being and happiness in development with a view to guide their public policies.

According to Yashina [49], the level of material well-being is an essential but not the decisive factor in the happiness of the inhabitants of Russia. SWB measurement is not yet used at the state level in Russia as a criterion for assessing the success of the socio-economic development of the country, although this issue is being discussed [50]. (For example, the Chairman of the Federation Council of the Federal Assembly of the Russian Federation supported the proposal to measure the happiness index of Russians to assess government decisions [51].) At the same time, a number of other countries have already created state bodies to deal with problems of happiness, for instance, the Gross National Happiness Center in Bhutan, the Gross National Happiness Center in Thailand, the Ministry of Higher Social Happiness in Venezuela, and the World Happiness Council in the United Arab Emirates. Thus, the issue of regularly measuring the well-being of Russians at the state level is relevant, and measuring SWB based on digital traces can become one of the tools due to the previously described advantages over classical survey approaches.

2.2. Subjective Well-Being

Well-being, happiness, and life satisfaction are an integral part of many cross-country comparative studies of the quality of life, which are carried out at the initiative of international organizations and governments [3]. Considering the subjective nature of happiness, researchers frequently measure it by self-report rating scales [13,19]. Despite some debate over the best way to conceptualize and measure the affective component of SWB, most researchers agree that the frequency of emotions, rather than their intensity, is the best indicator of the affective component [52]. In particular, there have been further studies confirming that it is the frequency of emotions that matters for measuring SWB, even in the absence of a psychometric survey, e.g., the emotional recall task is a mostly semantic/psycholinguistic task that has been shown to correlate strongly with PANAS indicators in English speakers [53]. Examples of self-reported surveys are the Gallup World Poll, the World Values Survey, the Eurobarometer, the European Quality of Life Surveys, and the British Household Panel Survey. Some of these organizations conduct surveys in many countries across the world, including Russia. (For example, according to the Gallup World Poll survey [54], in 2021, the Index of Russians’ Happiness fell to a ten-year low: only 41% of Russians consider themselves happy, and about a quarter are unhappy.) A growing number of Russian research organizations are becoming involved in the collection of SWB data. In particular, Almakaeva and Gashenina [3] highlighted the following research organizations: Russia Public Opinion Research Center, Public Opinion Foundation, Levada Center, the Institute of Psychology of Russian Academy of Sciences, the Russia Longitudinal Monitoring Survey of HSE University, the Center for Sociological Research of Russian Presidential Academy of National Economy and Public Administration, and the Ronald F. Inglehart Laboratory for Comparative Social Research of HSE University. In comparison with international organizations, Russian organizations tend to focus more on researching well-being in Russia and in different aspects thereof. (For example, one of the most famous indices in Russia is the Happiness Index, calculated by VCIOM. According to VCIOM data for November 2021 [55], the majority of Russians (84%) consider themselves happy, whereas 14% do not. Most often, Russians consider themselves happy due to the health and life of their own and those of their loved ones (29%), due to the fact that they have a family (27%), have children (22%), have general satisfaction with life (21%), or a good job (12%).)

Due to the widespread interest from research and government organizations, many methodological materials (e.g., [43,56,57,58]) have been developed for measuring SWB through surveys. Although self-report surveys have been considered accurate and valid for measuring SWB [5], they also suffer from some considerable pitfalls. One of the main limitations of classical survey polls is reactivity: respondents and subjects almost always know that they are participating in the study, and this, in turn, can have an effect on the results of the study [14]. Self-reported answers may be exaggerated [6], numerous biases may affect the results (e.g., question order bias [8], demand characteristics [9], and social desirability bias [7]), momentary mood may influence subjects’ responses to SWB questions [10], and respondents tend to recall past events that are consonant with their current affect [11]. Additionally, a recognition-based checklist may fail to capture sufficient breadth and specificity of an individual’s recalled emotional experiences and may therefore miss emotions that frequently come to mind [53]. The relatively small sample size in the surveys limits the search for heterogeneous relationships and patterns between the studied concepts [14]. Moreover, self-report surveys cannot provide constant updates of well-being to researchers and policymakers, and they incur relatively high costs to be conducted, thereby making it challenging for many countries to estimate well-being frequently [12,13,14]. Given the formidable list of limitations, over the past few decades, there has been much progress in the measurement of SWB [17]. Researchers across disciplines have proposed several innovative digital data sources, also called digital traces, and methods that have the potential to overcome the limitations of traditional survey-based methods [14], including measuring individual and collective well-being [13].

2.3. Observable Subjective Well-Being

In recent years, there has been an increasing amount of literature on OSWB indices based on digital sources, especially using posts from social networks [12,13,19]. The key idea of these studies is to replace people with texts as the unit of analysis and apply natural language processing (NLP) techniques to quantify expressed sentiment. From the sentiment expression point of view, all existing approaches for OSWB indices calculation can be divided in two groups: word-level (e.g., [59]) and text-level (e.g., [24]) approaches. Word-level approaches analyze texts at the word level, calculate the number of emotionally charged words based on dictionaries of tonal vocabulary, and then calculate indices based on the data obtained. This type of approach has been widely criticized in the literature. For example, it has been shown by Wang et al. [60] that one of the most famous such indices, Facebook’s Gross National Happiness [59], is a valid measure for neither mood nor well-being. Moreover, from an etymological point of view, word-level approaches suffer from the fact that they miss part of the sentiments expressed in a different way than just in words. Emotions in the text can be represented both at the level of form and at the level of content, expressed implicitly and explicitly [61]. In the recent survey on sentiment analysis of texts in Russian [62], it was also mentioned that sentiment can be expressed explicitly using specialized sentiment words and implicitly using sentiment facts [63] or words with connotations [64]. Thus, word-level approaches cannot display the full picture because they ignore part of the emotions presented in the text by definition; therefore, recent studies more often focus on text-level approaches. In contrast with word-level approaches, text-level approaches analyse the entire text, attempting to capture all sentiment presented, and for each text return a sentiment class or a group of sentiment classes presented. 
Thus, we decided to focus only on the text-level approaches.
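The limitation of word-level approaches described above can be illustrated with a toy example. This is a minimal sketch, not the method of any cited study: the lexicon, the function name `word_level_score`, and the English sample texts are hypothetical and chosen only for readability.

```python
import re

# Toy sentiment lexicon (hypothetical; real studies use curated dictionaries).
LEXICON = {"happy": 1, "great": 1, "love": 1, "sad": -1, "awful": -1, "hate": -1}


def word_level_score(text: str) -> int:
    """Sum the lexicon polarities of the tokens in a text (word-level approach)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(token, 0) for token in tokens)


explicit = "I am so happy, what a great day"
implicit = "My flight was cancelled and I lost my luggage"

print(word_level_score(explicit))  # 2
print(word_level_score(implicit))  # 0
```

The second text reports a clearly negative experience without using any lexicon word, so the word-level score is zero. A text-level classifier, which assigns a sentiment class to the text as a whole, is intended to capture such implicit cases.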

Extensive review of existing OSWB studies has been performed in recent articles [13,19,23], so we will not focus in detail on describing existing work and instead consider their challenges and limitations. Firstly, the lack of domain-specific training data and representative data for the analysis is a major challenge [23], including for the Russian language [37]. Secondly, very few studies (just over 1% according to [23]) use deep learning, which can help to achieve a higher quality sentiment classification in contrast with rule-based and traditional machine learning methods, as already demonstrated for the Russian language [65,66,67,68,69]. Thirdly, there are significant theoretical knowledge gaps regarding best practices and guidelines for calculating OSWB indices based on digital traces. For example, demographic information is rarely used (slightly less than 5% according to [23]), which may call into question the representativeness of the results obtained. In classical survey research, the sampling design is intended to accommodate different demographic groups in proportion to their distribution in the target population, so the results are representative of the target population. This is also the case for OSWB studies [24,25,26,27,28,29,30,31,32] mentioned earlier. In the context of digital traces, access to such information is usually difficult or impossible. According to the European Social Survey Sampling Guidelines [58], which are also used for measuring and reporting on Europeans’ well-being [70], individual-level variables (e.g., gender and age) tend to be more beneficial than regions or characteristics of small areas (e.g., local unemployment rate and population density). Thus, the presence of these demographic variables tends to be crucial for OSWB research to perform post-stratification and make results representative of the general population of the analyzed country. 
Lastly, there is still significant controversy about the correspondence of digital traces to survey data [19,60,71,72,73]. More specifically, it is often asked whether social media content really represents the state of affairs in the offline world. According to a study by Dudina [74], claiming that a social media discussion shows only the reactions of social media users is tantamount to believing that the answers to the survey questions reflect only the opinions of the people who answered those questions, without the possibility of extrapolating the results to wider groups. This, in turn, is tantamount to rejecting the idea of representativity in the social sciences. Supporting a similar idea, Schober et al. [75] stated that traditional population coverage may not be required for social media analysis to effectively predict social phenomena to the extent that social media content distills or summarizes broader conversations that are also measured by surveys. Despite the disagreements between the existing points of view, it should be noted that the issue of validating the results obtained is extremely important. We believe that some of the existing methods of the social sciences can be used to determine the validity of the results obtained on the basis of digital traces. Obviously, not all of them are suitable for working with digital traces due to the nature of the data. For example, it remains an open question how to perform face validity or test-retest reliability checks [43] given that OSWB researchers commonly lack additional information beyond the digital traces themselves and some information the users specified on their online profiles. However, we argue that a number of other checks outlined in the OECD Guidelines on Measuring SWB [43] can be used to check the results; for example, validity can be tested by comparing results with different measures.
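The post-stratification by individual-level variables discussed above can be sketched as follows. This is a simplified illustration under stated assumptions, not the formal model of this paper: the function name `post_stratified_mean`, the stratum labels, the scores, and the population shares are all hypothetical.

```python
from collections import defaultdict


def post_stratified_mean(sample, population_shares):
    """Weight each stratum's mean score by its share in the target population.

    sample: iterable of (stratum, score) pairs.
    population_shares: {stratum: share}, with shares summing to 1.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for stratum, score in sample:
        totals[stratum] += score
        counts[stratum] += 1
    missing = set(population_shares) - set(counts)
    if missing:
        raise ValueError(f"no observations for strata: {sorted(missing)}")
    return sum(
        share * totals[stratum] / counts[stratum]
        for stratum, share in population_shares.items()
    )


# Hypothetical data: one stratum is over-represented in the sample.
sample = [
    (("female", "18-34"), 1.0),
    (("female", "18-34"), 1.0),
    (("female", "18-34"), 1.0),
    (("male", "55+"), 0.0),
]
population_shares = {("female", "18-34"): 0.5, ("male", "55+"): 0.5}

naive_mean = sum(score for _, score in sample) / len(sample)
weighted_mean = post_stratified_mean(sample, population_shares)
print(naive_mean, weighted_mean)  # 0.75 0.5
```

The unweighted mean is pulled toward the over-represented stratum, whereas the post-stratified mean reflects the assumed population composition.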

As for the OSWB studies focused on Russian content, the literature remains quite limited and, as our previous survey [37] has shown, is one of the promising areas of research. For example, Panchenko [33] built several sentiment indicators for Russian-language Facebook. Shchekotin et al. [34] proposed a method for subjective assessment of well-being in Russian regions based on VKontakte data. Sivak and Smirnov [73] examined correlations between self-reports and digital trace proxies of depression, anxiety, mood, social integration, and sleep among high school students. Kalabikhina et al. [35] analyzed the demographic temperature (ratio of positive and negative comments) in certain sociodemographic groups of VKontakte users. As was highlighted in [37], some other studies (e.g., [76,77,78,79]) were dedicated to developing systems for sentiment monitoring of Russian social media users, but did not report any results of OSWB analysis. However, all of the above studies, despite their significant contribution to the field of study, focused on certain groups of users or individuals and neglected to consider the construction of an OSWB indicator representative of at least several sociodemographic characteristics for the whole population of Russia. Thus, the problem of constructing an OSWB indicator representative of the Russian population is a relevant one.

2.4. Sharing of Emotions Offline and Online

In many OSWB studies, the presence of an emotional component in posts from social networks is perceived as an axiom. Emotions are indeed an integral part of human communication, but from both a theoretical and a practical point of view, it is interesting to consider how much the transmission of emotions differs in the offline and online worlds. The groundbreaking study by Rime et al. [80] of private emotional experiences revealed that an emotion is generally followed by the social sharing of emotion (SSE), or evocation of the episode in a shared language to some addressee by the person who experienced it. The affected person will communicate with others about his or her experienced feelings and the event’s emotional circumstances. The first set of experiments revealed that 88–96% of emotional experiences are shared and discussed to some degree. These conversations can happen not only immediately after the moment of receiving the experience, but also during the hours, days, or even weeks and months following the emotional episode. Moreover, the intensity of sharing will depend on the intensity of experienced emotions. More intense emotions are commonly shared to a wider range of addressees with a higher degree of recurrence, and the urge to share them extends to a longer period. In the majority of cases, the process of social sharing was initiated immediately after the emotional event (52.8–67.5% respondents shared emotions the same day) and was repetitive (67.6–77.7% respondents shared emotions several or many times). SSE has been found to occur regardless of emotion type, age, gender, culture, and education level, though with slight variations between these [81].

Recent research has shown that interpersonal media, including social network sites, are widely used for SSE [82]. Moreover, despite the use of specific visual and written cues [83], emotional communication online and offline is surprisingly similar [84,85]. Interestingly, social norms, social media platform characteristics, and individual preferences have been found to influence social network site choice for sharing a particular type of emotion. Vermeulen et al. [86] reported that Facebook statuses, Snapchat, and Instagram are mostly used for sharing positive emotions, whereas Twitter and Messenger are also used for sharing negative emotions. Choi and Toma [82] found that media selection for the first instance of social sharing among undergraduates was driven by their perceptions of media affordances and by their habitual media use with the target of their disclosure. For sharing negative events, respondents articulated preferences for bandwidth (e.g., “This channel allows me to receive cues about how the other person is feeling” [87]) and privacy (e.g., “My communication is private via this channel” [87]) affordances, whereas for positive events, they preferred accessibility (e.g., “It is easy for me to access this channel” [87]).

As for the question of which type of valence is most prevalent in social network sites, the existing studies seem to suggest predominantly that positive emotional valence prevails in network-visible channels. For example, the study [88] of positive emotion capitalization on Facebook identified that user experience tends to be structured around positive emotions and ways of supporting them in case of public interactions in front of an audience (i.e., not private communication). Bazarova et al. [89] also reported a significant difference between network-visible and private channels on Facebook, where private messages expressed fewer positive emotions compared to posts on others’ timelines or status updates. Social sharing of negative emotions in social networking sites has been found to often occur via private messages, partially due to impression management concerns with sharing to a larger audience [90]. However, there are also studies that report negative posts outnumbering other types of emotional posts in public channels, supporting previous findings [82,86] that the platform for expression of a certain emotion is chosen situationally and depends on a number of platform characteristics and individual preferences. For example, Hidalgo et al. [91] reported that a large part of emotional blog posts on LiveJournal showed full initiation of social sharing, where the share of negative posts was the largest. Moreover, intensity of emotional support was stronger for negative posts than for positive or bivalent posts. The recent research also shows that negative emotions in online posts might flow or spread further not only because of the platform characteristics or individual preferences, but also because of the specific content and susceptibility to emotional contagion brought by the semantic information embedded in that post. For example, Stella et al. 
[92] found that more highly re-shared tweets about COVID-19 vaccines contained negative emotions of sadness and disgust that were not found in less re-shared tweets on the same topic. Ferrara and Yang [93] reported that tweets with negative valence could spread at faster rates than positive messages, but the latter could also reach larger audiences.

Based on the existing literature, the following conclusions and assumptions can be drawn. Firstly, the aggregation of expressed sentiment on an individual level before calculating an OSWB index is recommended since the same events can be reported by a user several times over a continuous period of time. Secondly, one can expect that there will be different proportions of positive and negative posts on different social networks, since different characteristics of the online communication channel are important for people when exchanging experiences about positive and negative events. Thirdly, one can expect that there will be different proportions of positive and negative posts across types of communications (e.g., private messages vs. public posts) within one social network since some groups of individuals prefer bandwidth and privacy affordances when sharing negative experiences. Lastly, until the identity of the characteristics and perceptions of the use of different social networks have been proven, one should not expect that the OSWB indices obtained from the analysis of posts from each of them will completely coincide.

2.5. Text Analysis Methods and Traditional Surveys

According to the recent survey by Németh et al. [20] on the potential of automated text analytics in social knowledge building, studies of large datasets can have the same shortcomings as surveys. As a consequence, such traditional factors as biased sample, sampling procedure composition, external validity, and coverage should be considered. However, replacing people with texts as the unit of analysis may cause several additional biases, such as different social media usage patterns among users, the digital divide, and socio-demographic information.

Usually, when conducting sociological research, researchers attempt to make their sample representative of their target population. The issue of representativeness, however, is not a new problem, nor is it unique to digital data sources [94]. For example, bias may arise when using standard survey procedures, such as phone-based sampling, which represents only non-institutionalized populations [95]. In the context of social media sites, it is challenging to obtain a sample that is representative of the users of the entire social network, let alone the internet users or the population of a particular country. Generally, algorithms used by social media sites or data aggregation platforms that provide researchers and developers with application programming interfaces (APIs) for sampling procedures of the data are not transparent [20]. Consequently, in the majority of cases, researchers cannot verify when the data collected through APIs are a representative sample of all available posts or simply a biased portion thereof. In our previous study [37], we indicated that one of the most reliable ways to receive access to representative historical data is to request access to these data directly from the data source. For instance, access to the historical data from Odnoklassniki, the second largest social network in Russia, can be requested directly through OK Data Science Lab (https://insideok.ru/category/dsl/, accessed on 1 June 2022).

In their investigation into data sources for public opinion studies, Dudina and Iudina [72] stated that analysis of text from the internet cannot as yet be considered as a full-fledged alternative to public opinion polls. The authors considered the lack of a theoretical basis for generalizing data to broader groups of the population as the main challenge for the dissemination of the conclusions obtained in social media research to non-digital reality. For example, the traditional mass survey model assumes linking opinions to sociodemographic groups, whereas when analyzing data from social media, there is a problem with obtaining reliable sociodemographic information. Moreover, there are other big data–driven, approach-specific issues related to the quality of data that are not present in surveys or interviews [20]. For example, the presence of irrelevant data, fake data and bots, and the lack of demographic variables make it impossible to complete routinely used post-stratification weighting. Theoretically, it is possible to obtain sociodemographic information from social networks, but in most cases, it depends on the functionality of the social network, the filling level of the user’s account and the user’s privacy settings. One of the most reliable solutions, as in the case of access to representative data, may be to obtain data from specialized research platforms managed by the analyzed social network.

In classical survey methods of conducting research, the desire of the respondent to make a favorable impression on the interviewer is usually seen as a biasing factor. In the digital space, users tend to attempt to impress each other; therefore, it is worth considering how people manage their experiences and present themselves when communicating online. In other words, social interactions within social media can be considered as mediated interactions, where people imagine an audience and build their self-representation accordingly [96]. According to Dudina [74], in the context of classical survey methods, the bias is toward socially approved responses, whereas in the digital space, there is often a bias toward socially unapproved or aggressive responses. At the same time, it is important to take into account that the opinions expressed on social networks directly depend not only on the characteristics and intentions of the authors, but also on the user agreement of a particular social network, as well as the level of freedom of speech, censorship, and regulatory legislation in a particular country [37]. Thus, in both cases, researchers are faced with the basis of perception, but in the case of a classic interview, it is directed toward the interviewer, and in the case of analyzing social networks, toward the potential audience of the author of the post.

Speaking of challenges specific to studies based on digital traces, it is first of all worth highlighting different usage patterns characteristic of different people. Firstly, more internet-active people are more likely to appear in digital corpora due to the amount of posted messages [20]. Assuming that, on average, users have no more than one active account for a particular social network, we assume that simple filtering or aggregation of expressed opinion may negotiate this bias. Secondly, the digital divide is a decreasing but still existing problem [20], so different social–demographic groups may be overrepresented or underrepresented in the data sample. By examining the association between user characteristics and their adoption of social media sites, Hargittai [97] suggested that several sociodemographic factors relate to who adopts such sites. The author discovered that big data derived from social media tend to oversample the views of more privileged people since those of higher socioeconomic status are more likely to be on several social media platforms. Moreover, internet skills are related to using such sites, proving that opinions visible on these sites do not represent all types of people from the general population equally. Since age appears to have a negative influence on internet skills [98] and the internet penetration generally is not equal between different age groups [99], it would be logical to continue the thought and also assume that user-generated content from the internet also does not take into account the opinions of people of different age categories equally. In Russia, sociodemographic and economic determinants still play a key role in the digital divide, despite its reduction. For example, low-income citizens, older citizens (over 65) and citizens with disabilities, as well as rural residents, are the most vulnerable social groups [99]. Thus, we assume that the usage of the post-stratification weighting may negotiate this bias. 
Another issue with using online data is the possibility of encountering posts produced by fake accounts, e.g., trolls or bots. Research based on Russian-language Twitter suggests that at least 50 messages per user are needed to detect trolls [100], and at least 10 to detect a bot [101].

Thus, a significant share of studies agree that the use of data from social media makes it possible to dispense with traditional survey methods. However, questions remain about the representativeness of the information obtained and about the possibility of extending the findings to a wider social context. The combination of traditional social science techniques, such as post-stratification based on demographic groups, and validation of results, such as comparison with other SWB measures [43], seems to be a good candidate for addressing the mentioned issues.

2.6. Sentiment Analysis

Sentiment analysis is an NLP task whose objective is to study subjective information and affective states from different types of content. In the context of OSWB studies, sentiment analysis tends to be a primary way of identifying emotions expressed in digital trace objects. According to Cambria et al. [102], there are (at least) 15 NLP problems that need to be solved to achieve human-like accuracy in sentiment analysis of texts (Sentiment analysis of media content (i.e., images and videos) is beyond the scope of this study), which are organized into three layers: Syntactics (e.g., microtext normalization, sentence boundary disambiguation, part-of-speech tagging, text chunking, and lemmatization), Semantics (e.g., word sense disambiguation, concept extraction, named entity recognition, anaphora resolution, and subjectivity detection), and Pragmatics (e.g., personality recognition, sarcasm detection, metaphor understanding, aspect extraction, polarity detection). Polarity detection (e.g., classifying text as positive or negative) is the key sentiment analysis task (and the most popular one [102]), so we will further refer to sentiment analysis in the meaning of polarity detection.

Recently, deep learning-based ML approaches have captured the attention of academics and practitioners because of their ability to notably outperform traditional ML approaches in the sentiment analysis task [103]. In our recent survey [37], we analyzed the applications of sentiment analysis for Russian-language texts and identified transfer learning of pretrained language models as one of the most relevant research opportunities, which can increase the quality of the applications of sentiment analysis for Russian-language texts. In our following study [65], we fine-tuned the Multilingual Universal Sentence Encoder [104], RuBERT [105], and Multilingual BERT [106], and we obtained strong (in some cases, even state of the art) results on seven sentiment datasets in Russian. Experiments on fine-tuning pretrained transformers on Russian-language sentiment datasets were also carried out by Golubev et al. [66], Kotelnikova [67], Moshkin et al. [68], and Konstantinov et al. [69]. In all cases, BERT-based models achieved better results compared to other approaches. Since the field of NLP is developing at a rapid pace, many other pretrained transformers have emerged since the publication of the above studies. If Multilingual BERT and RuBERT were already quite well studied in the context of Russian-language sentiment analysis, then, for example, such powerful models as XLM-RoBERTa [107] and MBART [108] have not yet been widely considered by academics. One of the main challenges in comparing the accuracy of different pretrained transformers on sentiment analysis tasks is that pretraining and fine-tuning of transformers are commonly extremely resource-intensive and time-consuming tasks that require a significant amount of computational resources.

Thus, in this study, we decided to evaluate the most recent pretrained language models on the sentiment analysis of texts in Russian following the methodology described in [65]. Based on recent studies [109,110,111] on language models fine-tuning for sentiment analysis and Russian SuperGLUE [112] leaderboard (https://russiansuperglue.com/leaderboard/2, accessed on 1 May 2022), we selected the following models: XLM-RoBERTa-Large [107], RuRoBERTa-Large [113], and MBART-Large [108]. As a baseline model, we decided to use RuBERT [105] because in previous studies (e.g., [65,67,69]) it consistently showed high or even state-of-the-art classification scores on Russian sentiment datasets.

3. Measuring Observable Subjective Well-Being

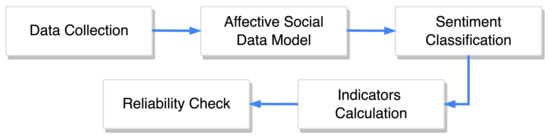

The pipeline of the proposed approach (see Figure 1) consists of the following stages: obtaining raw data for analysis, training the sentiment classifier, building an affective social data model, selecting the OSWB metrics of interest, and calculating the OSWB indicators.

Figure 1. Pipeline for measuring OSWB.

- Firstly, it is necessary to calculate the minimum sample size, and collect the required amount of data.

- Secondly, it is necessary to construct the affective social data model using collected data and sentiment classification model. The proposed affective social data model is based on the theory of socio-technical interactions (STI) [114] and the phenomenon of the social sharing of emotions (SSE) [80]. Online social network platforms involve individuals interacting with technologies and other individuals, thereby representing STI. When interacting, individuals tend to share their emotions (88–96% of emotional experiences are shared and discussed [80]) regardless of emotion type, age, gender, culture, and education level, though with slight variations between them [81]. Considering that emotional communication online and offline is surprisingly similar [84,85], we assumed both to be a good source for analyzing the affective state on the individual level and then aggregated it to capture the OSWB measure on the population level.

- Thirdly, the sentiment classification model should be trained to extract sentiment from the collected data. It is recommended to train the model on the training dataset from the same source as collected data. If the training dataset from the same source of data is not available, then it is recommended to select a training dataset from the most similar data source available.

- Fourthly, it is necessary to calculate OSWB indicators of interest using the constructed affective social data model. The proposed approach for calculation takes into account demographic characteristics of selected sample of users and maps this sample to the general population of the selected country via post-stratification.

- Lastly, the reliability of calculated indices must be verified. Among various available reliability measures, comparing the obtained OSWB indicators with existing survey-based SWB indicators tends to be the most straightforward option.

3.1. Data Sampling

A central idea behind data collection for computational social science research is collecting relatively inexpensive data, aiming at all the available data (i.e., big datasets are good, and bigger is better [115]). However, the question of determining the minimum sample size remains relevant. Following the standard approach from social sciences, the minimum sample size n and margin of error E are given by

$$
\begin{aligned}
x &= Z\left(\frac{c}{100}\right)^{2} r\,(100 - r), \\
n &= \frac{N x}{(N - 1)E^{2} + x}, \\
E &= \sqrt{\frac{(N - n)\,x}{n\,(N - 1)}},
\end{aligned}
$$

where N is the population size, r is the fraction of responses that you are interested in, and $Z(c/100)$ is the critical value for the confidence level c (with r, E, and c expressed in percent). When determining the sample size, one should also take into account the sample size used in classic survey-based SWB studies. For example, Gallup World Poll typically uses samples of around 1000 individuals aged 15 or over in each country [56,116,117], the minimum sample size of World Values Survey is 1200 respondents aged 18 and older [118], and the regular sample size in Standard and Special Eurobarometer surveys is 1000 respondents per country [119]. In the case of Russian SWB surveys, the VCIOM Happiness index typically has samples of 1600 respondents aged 18 or over [40], and the FOM Mood of Others index has samples of 1600 respondents [120].

Note that in the case of working with electronic traces, the initial unit of analysis is a digital trace, and there is often access not to the respondents directly, but to the traces that they left. The analysis of M electronic traces will not always mean that these traces were left by M users; this depends on how many traces users leave on average. As a result, to estimate the minimum number of digital traces, it is additionally required to multiply the minimum number of respondents n by the average number of digital traces left by a user during the analyzed time interval.

However, in practice, it can be expected that prior to gaining access to digital traces, it is impossible to estimate the number of traces per user. In this case, after gaining access to as much data as possible, it will be enough to verify that these traces were left by a number of users that is not less than the calculated minimum number of respondents n.
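The sample size reasoning above can be sketched in a few lines of Python. This is a minimal illustration, assuming the widely used finite-population formula (the one implemented by the Raosoft calculator referenced in Section 4.1) with r, E, and c given in percent; the function names are hypothetical and are not part of the proposed model.

```python
import math
from statistics import NormalDist


def critical_value(confidence_pct: float) -> float:
    """Two-sided standard normal critical value for a confidence level in percent."""
    return NormalDist().inv_cdf(0.5 + confidence_pct / 200.0)


def min_sample_size(population: int, margin_pct: float,
                    confidence_pct: float = 95.0,
                    response_pct: float = 50.0) -> int:
    """Minimum number of respondents n for a finite population of size N."""
    z = critical_value(confidence_pct)
    x = z ** 2 * response_pct * (100.0 - response_pct)
    n = population * x / ((population - 1) * margin_pct ** 2 + x)
    return math.ceil(n)


def margin_of_error(population: int, sample: int,
                    confidence_pct: float = 95.0,
                    response_pct: float = 50.0) -> float:
    """Margin of error E (in percent) reached with a given sample size."""
    z = critical_value(confidence_pct)
    x = z ** 2 * response_pct * (100.0 - response_pct)
    return math.sqrt((population - sample) * x / (sample * (population - 1)))


def min_trace_count(min_users: int, avg_traces_per_user: float) -> int:
    """Minimum number of digital traces: n times the average traces per user."""
    return math.ceil(min_users * avg_traces_per_user)


# Illustrative call: population of 40,000,000, 2.5% margin, 95% confidence.
n = min_sample_size(40_000_000, 2.5, 95.0)
```

When the average number of traces per user is unknown in advance, `min_trace_count` cannot be applied, and only the check on the number of distinct users remains.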

3.2. Affective Social Data Model

The affective social data model for socio-technical interactions (see Definition 10) consists of two elements: actors and interactions. The actors (see Definition 11) represent participants of STI generating digital traces. The interactions (see Definition 12) represent structural aspects of STI and generated digital traces representing SSE. As a basis for the formal description of the model, we took the online social data model for social indicators research that we proposed earlier [121] to analyze the influence of the misclassification bias on social indicators research. We applied classical set theory to develop our model since the recent literature [122,123] articulated a series of its advantages in the computational social sciences.

Definition 1.

is a finite set of all user types defined as where

- represents a user account which was created for personal use, and

- represents a user account which was created for business use.

It is important to delimit the types of accounts since the purpose of using a social network—and, as a result, the type of content—can strongly depend on them.

Definition 2.

is a finite set of all artifact types defined as where we have the following:

- represents text and (or) media posts or comments;

- represents the reactions to posted artifacts, such as likes or dislikes;

- represents digital photos, videos, and audio content.

Each artifact type represents a type of user-generated content (UGC). Basically, the first artifact type (posts and comments) represents all communications on users’ pages that occur in the social network, except private messages. (Our model does not consider private messages because not only are they extremely problematic to obtain, but their analysis can also raise a series of legal, privacy, and ethical questions.) Other UGC, such as digital photos, videos, and audio published in users’ albums, but not published on users’ pages, is represented by the third artifact type (media content). Reactions to both of these, such as likes or dislikes, are represented by the second artifact type (reactions).

Definition 3.

is a finite set of sexes defined as where

- represents male sex, and

- represents female sex.

Definition 4.

is a set of birth dates.

Definition 5.

G is a set of geographical information.

Definition 6.

is a finite set of marital statuses defined as where we have the following:

- represents a person who is in culturally recognized union between people called spouses;

- represents a person who is not in serious committed relationships, or is not part of a civil union;

- represents a person who is no longer married because the marriage has been dissolved;

- represents a person whose spouse has died.

Definition 7.

is a set of family types (i.e., classification of a person’s family unit) defined as where we have the following:

- represents a family which includes only the spouses and unmarried children who are not of age;

- represents a family of one parent (The parent is either widowed, divorced (and not remarried), or never married.) together with their children;

- represents a family with mixed parents (One or both parents remarried, bringing children of the former family into the new family.);

- represents a group of people in an individual’s life that satisfies the typical role of family as a support system.

Definition 8.

is the user’s numbers of children.

Definition 9.

is the number of people living in the user’s household.

The combination of sex, birth date, marital status, family type, and number of children represents demographics of the population and is of interest for conducting SWB studies [43]. This model does not consider other covariates (e.g., material conditions, quality of life, and psychological measures) recommended for collection alongside measures of SWB since there is virtually no access to them within social network data.

Definition 10.

The Affective Social Data Model for Socio-Technical Interactions is defined as a tuple where we have the following:

- A is the actors, representing the participants of socio-technical interactions generating UGC as defined further in Definition 11;

- I is the interactions, representing the structural aspects and UGC of as defined further in Definition 12.

As provided in the conceptual model and in Definition 10, the affective social data model for socio-technical interactions contains actors (those who are doing and interacting) and interactions (what is being done and interacted with).

Definition 11.

The Actors of is defined as a tuple where we have the following:

- U is a finite set of users ranged over by u;

- is a finite set of user types (as defined in Definition 1) ranged over by ;

- is a finite set of users’ sexes (as defined in Definition 3) ranged over by ;

- is a set of users’ birth dates ranged over by ;

- is a set of users’ marital statuses (as defined in Definition 6) ranged over by ;

- is a set of users’ family types (as defined in Definition 7) ranged over by ;

- is the user’s numbers of children (as defined in Definition 8) ranged over by ;

- is a set of numbers of people living in the users’ households (as defined in Definition 9) ranged over by ;

- G is a set of users’ geographical information (as defined in Definition 5) ranged over by g;

- is the user type function mapping each user to the user type;

- is the sex function mapping each user to the user’s sex if defined;

- is the birth date function mapping each user to the user’s birth date if defined;

- is the marital status function mapping each user to the user’s marital status if defined;

- is the family type function mapping each user to the user’s family type if defined;

- is the number of children function mapping each user to the user’s number of children if defined;

- is the household size function mapping each user to the user’s household size if defined;

- is the geographic information function mapping each user to the user’s geographic information if defined.

The formal definition of actors is provided in Definition 11. The first two items contain a set of users (U) and a set of user types, respectively. The next seven items contain demographic information, including sex, birth date, marital status, family type, the number of children, the number of people living in the household, and geographical information (G). The rest of the items are mapping functions from a user to the user’s type and all mentioned demographic characteristics if defined. The set of demographic characteristics was constructed based on existing guidelines on measuring SWB [43,56,58,70] to cover as many potentially useful demographic data as possible, although we understand that some of them can be unavailable in digital trace data (see Definition 8).

Definition 12.

The Interactions of is defined as a tuple where we have the following:

- is a finite set of artifacts ranged over by ;

- is a finite set of artifact types (as defined in Definition 2) ranged over by ;

- S is a finite set of sentiment classes ranged over by s. (The list of final classes is not specified within this model, since it is expected that it may differ both depending on the final task of building the index and depending on the markup of the training dataset that is used to train the model.)

- is a function mapping the artifact and the user on whose feed it was published;

- is a function mapping the artifact and the user who created it;

- is the artifact type function mapping each artifact to an artifact type;

- is a parent artifact function, which is a partial function mapping artifacts to their parent artifact if defined;

- is a relation defining mapping between artifact and sentiment;

- is a time function that keeps track of the timestamp of an artifact created by a user;

- is a time function that returns the age of the user at the time of the artifact creation if the user’s birthday is defined;

- is a partial function mapping users to mutually disjoint sets of their artifacts;

- is a partial function mapping users to the artifacts reacted by the users.
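To make the structure of Definitions 10 to 12 more tangible, the following is a minimal Python sketch of how actors and interactions could be represented in code. All class, field, and function names are hypothetical choices made for illustration (they are not the notation of the formal model), and only a subset of the demographic attributes is included. Optional fields mirror the partial functions that are only "defined if known".

```python
from dataclasses import dataclass
from datetime import date, datetime
from enum import Enum
from typing import Optional


class UserType(Enum):
    PERSONAL = "personal"   # account created for personal use
    BUSINESS = "business"   # account created for business use


class ArtifactType(Enum):
    POST = "post"           # text and/or media posts or comments
    REACTION = "reaction"   # likes, dislikes, and similar reactions
    MEDIA = "media"         # photos, videos, audio in users' albums


class Sex(Enum):
    MALE = "male"
    FEMALE = "female"


@dataclass(frozen=True)
class User:
    user_id: str
    user_type: UserType
    sex: Optional[Sex] = None            # None when not defined
    birth_date: Optional[date] = None    # None when not defined
    country: Optional[str] = None        # simplified geographical information


@dataclass(frozen=True)
class Artifact:
    artifact_id: str
    artifact_type: ArtifactType
    author_id: str                       # user who created the artifact
    feed_owner_id: str                   # user on whose feed it was published
    created_at: datetime
    text: Optional[str] = None
    parent_id: Optional[str] = None      # parent artifact, if any
    sentiment: Optional[str] = None      # filled in by a sentiment classifier


def age_at_creation(user: User, artifact: Artifact) -> Optional[int]:
    """Age of the user (full years) when the artifact was created, if known."""
    if user.birth_date is None:
        return None
    created = artifact.created_at.date()
    had_birthday = (created.month, created.day) >= (
        user.birth_date.month, user.birth_date.day)
    return created.year - user.birth_date.year - (0 if had_birthday else 1)
```

Frozen dataclasses are used here only to keep records hashable and immutable; any tabular representation with the same fields would serve equally well.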

3.3. Sentiment Classification

As can be seen from Definition 12, S represents a finite set of sentiment classes, and the sentiment relation represents a mapping between an artifact and a sentiment. From the sentiment classification perspective, S is the set of classes in a training sentiment dataset, and the sentiment relation is realized by a function that runs the sentiment classification model trained on that dataset and returns the sentiment of the artifact.

3.4. OSWB Indicator Calculation

The approach for calculating OSWB indicators consists of three steps.

- Select content of interest for the analysis; that is, textual posts published by users on their own pages.

- Make data sample representative of the target population by applying sampling techniques.

- Calculate selected OSWB measures based on the representative data sample.

3.4.1. Data Selection

Definition 13.

is a finite ordered set of T non-overlapping time intervals, such as .

Definition 14.

is a partial function mapping a timestamp of artifact creation to a time interval if the birthday of the user is defined.

Definition 15.

P is a finite set of textual posts published by users on their own pages and defined as follows:

Definition 16.

is a finite set of posts published by authors on their pages during time interval and is defined as follows:

We focus on the user’s own posts posted on their pages, as we assume that such posts are more likely to contain the emotional state of the author compared to posts elsewhere. We also believe that the users’ pages in most cases are not limited to a specific thematic domain, in comparison with the walls of groups and communities; therefore, these posts should contain a larger number of different topics and, on average, be general-domain sources of data.

Definition 17.

is a finite set of users who posted textual posts on their own profiles within time interval and is defined as follows:

After obtaining the set of users for each time interval, it is necessary to validate that the number of users in each time interval is not less than the minimum sample size n (see Equation (2)). If it is less than n for at least one time interval, then the calculation of the index with the selected confidence level and margin of error is not possible.
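The selection and validation steps of Definitions 13 to 17 could be implemented along the following lines. This is a sketch under stated assumptions: the `Post` record, the daily granularity of time intervals in `interval_key`, and the function names are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, NamedTuple, Set


class Post(NamedTuple):
    author_id: str        # user who created the post
    feed_owner_id: str    # user on whose page the post was published
    created_at: datetime
    is_text: bool         # True for textual posts


def interval_key(ts: datetime) -> str:
    """Map a timestamp to a time interval (assumed here to be one day)."""
    return ts.date().isoformat()


def users_per_interval(posts: Iterable[Post]) -> Dict[str, Set[str]]:
    """Users who published textual posts on their own pages, per interval."""
    users: Dict[str, Set[str]] = defaultdict(set)
    for post in posts:
        if post.is_text and post.author_id == post.feed_owner_id:
            users[interval_key(post.created_at)].add(post.author_id)
    return dict(users)


def undersized_intervals(users: Dict[str, Set[str]], min_users: int) -> List[str]:
    """Intervals whose number of distinct users is below the minimum sample size."""
    return sorted(k for k, members in users.items() if len(members) < min_users)
```

If `undersized_intervals` returns a non-empty list, the index cannot be reported for those intervals at the chosen confidence level and margin of error.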

3.4.2. Data Sampling

Definition 18.

is a finite set of demographics mapping functions with defined values over the given users set and is defined as follows.

Since not all of these characteristics can be obtained from social network data, in accordance with the European Social Survey Sampling Guidelines [58], it is recommended to use at least age and gender characteristics for the sampling design.

Definition 19.

is a finite set of users representative of the target population by applying stratification (Here, $N$ is the population size, $n$ is the total sample size, $k$ is the number of strata, $N_i$ is the number of sampling units in the $i$-th stratum such that $\sum_{i=1}^{k} N_i = N$, and $n_i$ is the number of sampling units to be drawn from the $i$-th stratum such that $\sum_{i=1}^{k} n_i = n$. Strata are constructed such that they are non-overlapping and homogeneous with respect to the characteristic under study. For fixed $k$, the proportional allocation of stratum size can be calculated as $n_i = n N_i / N$, where each $n_i$ is proportional to stratum size $N_i$.) by .

Definition 20.

is a finite set of posts created by representative sample of users on their own pages during time interval and defined as follows:
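Proportional allocation across strata, as used in stratified sampling, can be illustrated with a short Python sketch. The function name is hypothetical, and the largest-remainder rounding is an assumption added here so that the integer stratum sample sizes sum exactly to the requested total; the text above does not prescribe a rounding rule.

```python
import math
from typing import Dict, Hashable


def proportional_allocation(stratum_sizes: Dict[Hashable, int],
                            n: int) -> Dict[Hashable, int]:
    """Allocate a total sample of n units to strata proportionally to their sizes."""
    total = sum(stratum_sizes.values())
    if total <= 0:
        raise ValueError("population must be non-empty")
    # Exact (possibly fractional) share for every stratum.
    exact = {k: n * size / total for k, size in stratum_sizes.items()}
    alloc = {k: math.floor(v) for k, v in exact.items()}
    # Distribute the units lost to rounding by largest fractional remainder.
    leftover = n - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda k: exact[k] - alloc[k], reverse=True)
    for k in by_remainder[:leftover]:
        alloc[k] += 1
    return alloc
```

For example, strata of sizes 50, 30, and 20 with a total sample of 10 receive 5, 3, and 2 units, respectively.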

3.4.3. Index Calculation

Firstly, it is required to aggregate sentiment for users who posted several times during the considered time intervals.

Definition 21.

is the sentiment aggregation function which aggregates the sentiment of posts published during time interval by user u and is defined as follows:

The aggregation function can be defined in several ways (e.g., majority voting).

Definition 22.

is the aggregated user sentiment expressed in a post published during period of time.
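As one possible instance of the aggregation function, majority voting over the sentiment labels of a user's posts within a time interval can be sketched as follows. The function name and the tie-breaking rule (falling back to a `"neutral"` label) are assumptions made for illustration; other tie-breaking strategies are equally plausible.

```python
from collections import Counter
from typing import Iterable, Optional


def majority_vote(sentiments: Iterable[str],
                  tie_break: Optional[str] = "neutral") -> Optional[str]:
    """Aggregate the sentiment labels of one user's posts in one interval."""
    counts = Counter(sentiments)
    if not counts:
        return None  # the user did not post in this interval
    ranked = counts.most_common()
    top_count = ranked[0][1]
    winners = [label for label, count in ranked if count == top_count]
    # A unique most frequent label wins; ties fall back to the assumed default.
    return winners[0] if len(winners) == 1 else tie_break
```

Aggregating on the user level before computing the index keeps highly active users from dominating the indicator.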

Finally, the OSWB indicator can be calculated.

Definition 23.

is the OSWB indicator and is defined as follows:

where is an indicator formula, which can be defined in several ways depending on the study goals (see examples in Section 4.5).
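Since the indicator formula is left open at this point, the sketch below shows only one hypothetical choice: the (optionally weighted) share of users with positive aggregated sentiment minus the share with negative aggregated sentiment. It is not claimed to be one of the formulas used later in the paper; the function name, the label strings, and the optional per-user weights are assumptions.

```python
from typing import Mapping, Optional


def net_sentiment_indicator(user_sentiment: Mapping[str, str],
                            weights: Optional[Mapping[str, float]] = None) -> float:
    """Weighted share of positive users minus weighted share of negative users."""
    if not user_sentiment:
        raise ValueError("no users in the interval")
    total = positive = negative = 0.0
    for user_id, label in user_sentiment.items():
        # Users without an explicit weight count once.
        w = 1.0 if weights is None else weights.get(user_id, 1.0)
        total += w
        if label == "positive":
            positive += w
        elif label == "negative":
            negative += w
    return (positive - negative) / total
```

The result lies between -1 (all users negative) and 1 (all users positive).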

4. Observable Subjective Well-Being Based on Odnoklassniki Content

4.1. Odnoklassniki Data

According to the VCIOM survey [124] in 2017, the preferences among usage of particular social networks in Russia have age characteristics. The largest share of the audience of VKontakte users, 40% of the total audience, consists of people aged 25–34 years. Among Instagram users, 38% are between the ages of 18 and 24, and 37% are between the ages of 25 and 34. Among the daily audience of Odnoklassniki, the most common group is also 25–34 years old (28%). At the same time, the distribution of the Odnoklassniki audience by age is the closest among all social networks to the general distribution of the internet audience in Russia [124]. Similar findings were reported in the study by Brodovskaya [125], where the author concluded that Odnoklassniki is the most democratic social network in Russia because it is used by all categories of the population, including “traditional non-users”, that is, the elderly and people with a low level of education. In fact, according to Brodovskaya, the only network used by older Russians is Odnoklassniki, since Russians who have reached the age of 60 do not have accounts on any foreign social networks. This makes Odnoklassniki a great source of data for analysis since post-stratification weights are not expected to vary significantly. If some subgroups have either extremely small or extremely large weights, weighting can actually make the estimate worse by increasing the model’s variance and sensitivity to outliers [126].

We calculated the minimum sample size (see Section 3.1) using Raosoft (http://www.raosoft.com/samplesize.html, accessed on 1 May 2022) (population size of 40,000,000 [127], the same margin of error of 2.5% and confidence level of 95% as was used in VCIOM Happiness [40]) and obtained n = 1537. Considering that we did not have information about the average number of posts per user, we requested from the OK Data Science Lab as many posts as they could provide, but not fewer than 1537 per day. We requested only those posts which (1) contained textual content only, (2) were published by individual users on their own public pages, and (3) were published within the territory of Russia.

The OK Data Science Lab provided us with 7,200,000 randomly selected textual posts published in Russia by individual users on their public profiles between April 2020 and May 2021, for a total of 20,000 posts per day. Each post contained anonymized user identifiers (primary identifier of artifacts), date of birth if known, gender if known, time of publication, author’s time zone at the moment of publication, author’s country at the moment of publication (Russia for all posts; based on IP and other Odnoklassniki internal heuristics; the quality of determining geolocation by IP is outside of the scope of this work), text (required for the sentiment mapping function), and language used in the post. We then filtered out duplicates and posts of authors without date of birth or gender, and obtained 7,049,907 posts for further analysis. These posts were published by 3,610,891 unique users, or 1.95 posts per user on average. We checked the number of unique authors of posts for each day and confirmed that it exceeds 1537 unique authors for each day. All user data were provided in an anonymized format; therefore, it was impossible to identify the real author of the post. A more detailed description of the characteristics of the data (e.g., gender and age distribution) is not possible in accordance with the Non-Disclosure Agreement; however, it is available through official Odnoklassniki reports [127] (see Table 1). The core of the Odnoklassniki audience is women and men 25–44 [128]. All generations of people are represented in Odnoklassniki: children, teenagers, the core of the audience aged 25–44, and older people.

Table 1.

Gender distribution for Odnoklassniki audience in 2021. Source: [127].

The Odnoklassniki data are available from OK Data Science Lab, but restrictions apply to the availability of these data; they were used under license for the current study, and so they are not publicly available. Data are, however, available from the OK Data Science Lab upon reasonable request, https://insideok.ru/category/dsl/ (accessed on 1 May 2022).

4.2. Demographic Groups

While selecting demographic groups, in addition to general guidelines on measuring SWB mentioned earlier [43,56,57,58], we also relied on recommendations by Russian research agencies to cover country-specific aspects: the VCIOM SPUTNIK methodology [129] and RANEPA Eurobarometer methodology [130]. Thus, we selected the following demographic variables for post-stratification.

- Gender. The array reflects the sex structure of the general population: male and female.

- Age. The array is divided into four age groups, reflecting the general population: 18–24 years old, 25–39 years old, 40–54 years old, and 55 years old and older.

While the model contains many other demographic characteristics (e.g., , , , G from Definition 11), we were unable to use them to construct the OSWB indices because the Odnoklassniki data did not contain them.

The data on the real population characteristics were obtained from the Federal State Statistics Service of Russia (https://rosstat.gov.ru/compendium/document/13284, accessed on 1 May 2022).
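To illustrate how the selected variables could be used, the sketch below maps an author to one of the four age groups and computes generic post-stratification weights per gender and age cell (population share divided by sample share). It is not the formal model from the earlier sections: the function names are hypothetical, the exclusion of authors under 18 is an assumption based on the lowest age group, and any population shares passed in would come from the Rosstat data rather than from this example.

```python
from collections import Counter
from datetime import date


def age_group(birth: date, published: date):
    """Return the age group label at publication time, or None if under 18."""
    had_birthday = (published.month, published.day) >= (birth.month, birth.day)
    age = published.year - birth.year - (0 if had_birthday else 1)
    if age < 18:
        return None  # assumption: authors below the lowest group are excluded
    if age <= 24:
        return "18-24"
    if age <= 39:
        return "25-39"
    if age <= 54:
        return "40-54"
    return "55+"


def poststrat_weights(sample_cells, population_shares):
    """Weight of each cell = population share / sample share.

    sample_cells: iterable of cell labels, one per sampled author,
                  e.g. ("female", "25-39").
    population_shares: mapping from cell label to its share in the population.
    """
    counts = Counter(sample_cells)
    total = sum(counts.values())
    return {cell: population_shares[cell] / (count / total)
            for cell, count in counts.items()}
```

A cell that is over-represented in the sample relative to the population receives a weight below 1, and an under-represented cell receives a weight above 1.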

4.3. Sentiment Classification

4.3.1. Training Data

Manual annotation of a subset of the provided Odnoklassniki posts via crowdsourcing platforms was not possible under the non-disclosure agreement. Thus, to train a classifier, we chose the existing dataset whose data are most similar to posts from Odnoklassniki. Unfortunately, the Russian language is not as well resourced as the English language, especially in the field of sentiment analysis [65], so the selection options were quite limited. Based on the previously obtained list of available training datasets in Russian [37], we identified RuSentiment [39], which consists of posts from VKontakte (VKontakte is the largest national social network in Russia, with about 100M active users per month [131]), as the most appropriate dataset for the following reasons. Firstly, RuSentiment is the largest sentiment dataset of general-domain posts in Russian that was annotated manually (Fleiss’ kappa of 0.58) by native speakers with a linguistics background. Almost all other datasets are either domain-specific (e.g., SentiRuEval 2016 [132]) or annotated automatically (e.g., RuTweetCorp [133]), or both (e.g., RuReviews [134]). The only exception is the RuSentiTweet [135] dataset, but it consists of Russian-language tweets and, as a result, has different linguistic characteristics. Secondly, the corpora similarity measure proposed by Dunn [136] confirmed that RuSentiment and Odnoklassniki data are similar (see Appendix A for details). The similarity between texts from Odnoklassniki and VKontakte was intuitively expected since they are the two largest national social networks in Russia [137], very close in terms of the available functionality for communications [138], and used by Russians with approximately the same intensity [125].

RuSentiment contains 31,185 general-domain posts from VKontakte (28,218 in the training subset and 2967 in the test subset), which were manually annotated into five classes:

- Positive Sentiment Class represents explicit and implicit positive sentiment.

- Negative Sentiment Class represents explicit and implicit negative sentiment.

- Neutral Sentiment Class represents texts without any sentiment.

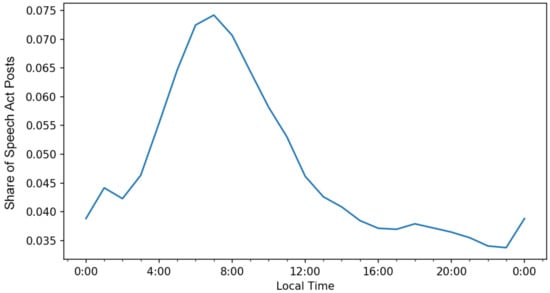

- Speech Act Class represents congratulatory posts, formulaic greetings, and thank-you posts.

- Skip Class represents noisy posts, unclear cases, and texts that were likely not created by the users themselves.

The dataset was labeled by native speakers with a linguistics background, with a Fleiss’ kappa of 0.58. The dataset consists of two subsets: a training subset (28,218 texts) and a test subset (2967 texts). We trained our models on the training subset and report classification metrics on the test subset so that our results are comparable with other studies on RuSentiment.

4.3.2. Classification Model

Based on the literature review, we selected the following pretrained language models for fine-tuning experiments to identify the most accurate one.

- XLM-RoBERTa-Large (https://huggingface.co/xlm-roberta-large, accessed on 1 June 2022) [107] by Facebook is a multilingual RoBERTa [139] model with BERT-Large architecture trained on 100 different languages.

- RuRoBERTa-Large (https://huggingface.co/sberbank-ai/ruRoberta-large, accessed on 1 June 2022) [113] by SberDevices is a version of the RoBERTa [139] model with BERT-Large architecture and BBPE tokenizer from GPT-2 [140] trained on Russian texts.

- mBART-large-50 (https://huggingface.co/facebook/mbart-large-50, accessed on 1 June 2022) [108] by Facebook is a multilingual sequence-to-sequence model pretrained using the multilingual denoising pretraining objective [141].

- RuBERT (https://huggingface.co/DeepPavlov/rubert-base-cased, accessed on 1 June 2022) [105] by DeepPavlov is a BERT model trained on news data and the Russian-language part of Wikipedia. The authors built a custom vocabulary of Russian subtokens and took weights from the Multilingual BERT-base as initialization weights.

The characteristics of the selected models, including information about tokenization, vocabulary, and configuration, can be found in Table 2.

Table 2.

Characteristics of selected models.

On top of the pretrained language model, we applied a simple softmax layer to predict the probability of classes c:

$$p(c \mid h) = \mathrm{softmax}(W h),$$

where h is the output representation of the pretrained model and W is the task-specific parameter matrix of the added softmax layer. The fine-tuning stage was performed on one Tesla V100 SXM2 32GB GPU with the following parameter values: number of training epochs in [4, 5, 6, 7, 8], maximum sequence length of 128, batch size in [16, 32, 64], and learning rate in [2e-6, 2e-5, 2e-4]. The hyperparameter value ranges were chosen based on values used in existing studies [65,105,135,142,143]. Fine-tuning was performed using the Transformers library [144]. Since the dataset was originally divided only into training and test subsets, we additionally split the existing training subset into new training (80%) and validation (20%) subsets. The models were evaluated in terms of macro F1 and weighted F1 measures:

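$$\text{macro } F_1 = \frac{1}{N} \sum_{i=1}^{N} F_{1,i}, \qquad \text{weighted } F_1 = \sum_{i=1}^{N} w_i \, F_{1,i},$$

where $F_{1,i}$ is the F1 score of class i, i is the class index, N is the number of classes, and $w_i$ is the weight of class i. The highest possible value of macro and weighted F1 is 1.0 and the lowest possible value is 0. We repeated each experiment three times and report the mean values of the measurements.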
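The two averaging schemes can be illustrated with a short sketch. It assumes that the per-class measure is F1 and that the weight of class i is its share among the true labels (the usual definition of weighted averaging); the function name and the toy labels are hypothetical.

```python
from collections import Counter


def macro_and_weighted_f1(y_true, y_pred):
    """Return (macro F1, weighted F1) computed from per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    per_class = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        per_class[label] = 2 * tp / denom if denom else 0.0
    support = Counter(y_true)  # number of true instances per class
    macro = sum(per_class.values()) / len(labels)
    # Assumed class weight: share of the class among the true labels.
    weighted = sum(per_class[label] * support[label] / len(y_true)
                   for label in labels)
    return macro, weighted


y_true = ["pos", "pos", "neg", "neu"]
y_pred = ["pos", "neg", "neg", "neu"]
macro_f1, weighted_f1 = macro_and_weighted_f1(y_true, y_pred)
```

Macro averaging treats all classes equally, whereas weighted averaging gives frequent classes more influence, which matters for an imbalanced dataset.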

According to the fine-tuning results presented in Table 3, RuRoBERTa-Large (, , ) demonstrated the best weighted F1 (4.27 percentage points above the existing SOTA) and macro F1 (0.42 percentage points above the existing SOTA), thereby achieving new state-of-the-art results on RuSentiment. XLM-RoBERTa-Large (, , ) showed slightly lower but still competitive results. However, because XLM-RoBERTa-Large is larger than RuRoBERTa-Large, RuRoBERTa-Large is the more efficient choice for sentiment analysis of RuSentiment data. Surprisingly, mBART-large-50 (, , ) did not outperform RuBERT (, , ).

Table 3.

Classification results of fine-tuned models. Random represents a random classifier. Weighted F1 is reported because it was used as the main quality measure in the original paper. The existing weighted F1 SOTA was achieved by a shallow-and-wide CNN with ELMo embeddings [145]. The existing macro F1 SOTA was achieved by fine-tuned RuBERT [65].

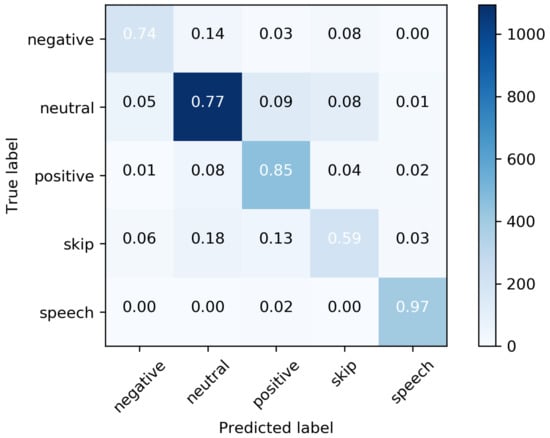

The most common misclassification errors of RuRoBERTa-Large (see Figure 2) were classifying the Skip Class as the Neutral or Positive Class, the Negative Class as the Neutral Class, and the Neutral Class as the Positive Class. The Speech Act Class was more clearly separated from the other classes because it consists of a well-defined group of speech constructs. Predictably, the Skip Class was one of the hardest to classify because it contains noisy and hard-to-interpret posts by design. Neutral sentiment is logically located between negative and positive sentiment, so it is expected to be confused with both. As mentioned in our previous study [65], this appears to be a general challenge of non-binary sentiment classification. For example, Barnes et al. [146] also reported that the most common errors come from the no-sentiment classes (i.e., the Neutral Class in our case).

Figure 2.

Normalized confusion matrix for RuRoBERTa-Large. The diagonal elements represent the share of objects for which the predicted label is equal to the true label (i.e., Recall), whereas off-diagonal elements are those that are mislabeled by the classifier. The higher the diagonal values of the confusion matrix, the better, indicating many correct predictions. The color bar represents the number of objects classified in a particular way, where light blue represents zero objects and dark blue represents the maximum number of objects.
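A row-normalized confusion matrix of the kind shown in Figure 2 can be computed as in the sketch below, where each row corresponds to a true class and the diagonal entry is that class's recall. The function name and the toy labels are hypothetical and are not taken from the study's data.

```python
def normalized_confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes, rows sum to 1."""
    index = {label: i for i, label in enumerate(labels)}
    counts = [[0] * len(labels) for _ in labels]
    for true_label, predicted_label in zip(y_true, y_pred):
        counts[index[true_label]][index[predicted_label]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # A class with no true instances yields an all-zero row.
        matrix.append([count / total if total else 0.0 for count in row])
    return matrix


labels = ["neg", "neu", "pos"]
matrix = normalized_confusion_matrix(
    ["pos", "pos", "neg", "neu"],
    ["pos", "neu", "neg", "neu"],
    labels,
)
```

In this toy example, half of the positive posts are predicted as neutral, so the last row contains 0.5 on the diagonal and 0.5 in the neutral column.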

We made the fine-tuned RuRoBERTa-Large model publicly available (https://github.com/sismetanin/sentiment-analysis-in-russian, accessed on 1 May 2022) to the research community.

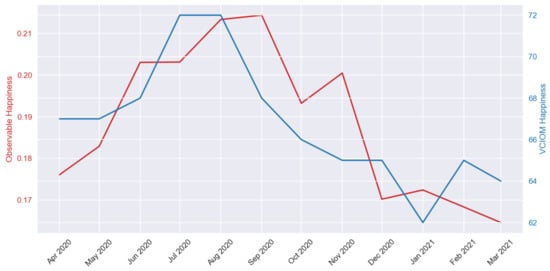

4.4. Validity Check

As mentioned in the literature review, according to the OECD Guidelines on Measuring SWB [43], validity can be verified by comparing results obtained with different measures at the individual level. However, this requires SWB values obtained by the classical survey method for at least some of the study participants. We do not have such data at our disposal; however, earlier literature [147] indicated that language-based assessment of social media posts can constitute a valid SWB measure. Thus, to verify the results in our case, we propose to check validity at the aggregate level by selecting an existing survey-based indicator that covers the same time period as our indicator. Because our time period is relatively short, we cannot use an indicator that is calculated once a year, since a correlation computed on a time series of two values is not meaningful. Among the SWB indices for Russia calculated by the organizations mentioned in the literature review, the VCIOM Happiness index is best suited to our time period because it is calculated monthly. Thus, for the validity check, we decided to use the VCIOM Happiness index. Validity checks at the aggregate level have also been used in other OSWB studies (e.g., [29,148]), so we followed their practice.
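An aggregate-level check of this kind amounts to correlating two monthly series. The sketch below implements Pearson's and Spearman's coefficients in plain Python. The function names are hypothetical, and the input series are toy values used only to show the call, not OSWB or VCIOM data.

```python
import math


def pearson(x, y):
    """Pearson's correlation coefficient of two equally long sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def ranks(values):
    """1-based ranks, with tied values receiving their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman's rank correlation: Pearson's coefficient of the ranks."""
    return pearson(ranks(x), ranks(y))


# Toy monthly series, for illustration only.
series_a = [1.0, 2.0, 3.0, 4.0]
series_b = [1.0, 4.0, 9.0, 16.0]
r, rho = pearson(series_a, series_b), spearman(series_a, series_b)
```

Reporting both coefficients is useful because Spearman's captures any monotonic relationship, while Pearson's measures only the linear one.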

4.5. Indicator Formula

Within our study, we explored two types of indicator formulas.

Definition 24.

is the observable positive affect indicator (experiencing pleasant emotions and moods) and is defined as follows:

where is the number of positive posts, is the number of negative posts, is the number of neutral posts, is the number of posts with greetings and speech acts, and is the number of ambiguous posts that cannot be unambiguously assigned to one of the other classes.

The indicator takes values from 0 to 1.

Definition 25.

is the observable negative affect indicator (experiencing unpleasant, distressing emotions and moods) and is defined as follows:

The indicator takes values from 0 to 1.
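The formulas of Definitions 24 and 25 are not reproduced in this copy of the text. One reading that is consistent with the listed terms and with the stated range from 0 to 1 is that each indicator is the share of positive (or negative) posts among the posts of all five classes. The sketch below follows that assumption. The function name and dictionary keys are hypothetical, and any post-stratification weighting of the counts is omitted.

```python
def affect_indicators(counts):
    """Return (positive affect, negative affect) as shares of all posts.

    Assumption: each indicator is the class count divided by the total
    number of posts over the five classes, which keeps it within [0, 1].
    """
    classes = ("positive", "negative", "neutral", "speech_act", "skip")
    total = sum(counts[name] for name in classes)
    if total == 0:
        raise ValueError("at least one post is required")
    return counts["positive"] / total, counts["negative"] / total


# Toy class counts, for illustration only.
example_counts = {"positive": 30, "negative": 10, "neutral": 40,
                  "speech_act": 15, "skip": 5}
positive_affect, negative_affect = affect_indicators(example_counts)
```

For the toy counts, the positive affect indicator is 0.3 and the negative affect indicator is 0.1.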

4.6. Misclassification Bias

Although we achieved new SOTA results on the RuSentiment dataset, the best classification model was still not error-free, which could introduce bias into our analysis results. To estimate the impact of misclassification bias on the OSWB indicators of interest, we applied the simulation approach for misclassification bias assessment introduced in our previous paper [121]. To generate synthetic time series, we applied the Nonlinear Autoregressive Moving Average model from the TimeSynth [149] library with random hyperparameters for each simulation run. We chose Pearson’s and Spearman’s correlation coefficients as the main metrics. For each indicator calculated later in this work (see Section 4.5), we ran 500,000 simulation iterations. According to the results of the simulation, the aggregated p-values are higher than 0.95, and both coefficients demonstrated almost perfect aggregated correlation scores. Thus, we can confirm that misclassification bias has a negligible impact on the calculation of all considered indices, allowing us to achieve an almost perfect level of correlation between the predicted and true underlying indicators.
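The intuition behind such a simulation can be shown with a much-simplified sketch. It does not reproduce the procedure from [121]: the synthetic series are drawn from uniform distributions rather than a Nonlinear Autoregressive Moving Average model, the error rates in `P_PRED_POSITIVE` are made-up values, and only the expected observed share is computed, without sampling noise. All names are hypothetical.

```python
import math
import random

# Made-up probabilities that a post of each true class is predicted "positive".
P_PRED_POSITIVE = {"positive": 0.80, "negative": 0.05, "other": 0.10}


def pearson(x, y):
    """Pearson's correlation coefficient of two equally long sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def simulate_run(n_points=200, seed=0):
    """Correlate a true positive-share series with its misclassified version."""
    rng = random.Random(seed)
    true_share, observed_share = [], []
    for _ in range(n_points):
        shares = {"positive": rng.uniform(0.2, 0.4),
                  "negative": rng.uniform(0.1, 0.2)}
        shares["other"] = 1.0 - shares["positive"] - shares["negative"]
        true_share.append(shares["positive"])
        # Expected share of posts that an imperfect classifier labels positive.
        observed_share.append(sum(shares[name] * P_PRED_POSITIVE[name]
                                  for name in shares))
    return pearson(true_share, observed_share)


correlation = simulate_run()
```

Even though the observed share is biased in level, it remains almost perfectly correlated with the true share in this toy setting, which is the kind of effect the full simulation quantifies.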

5. Results