Complex Noise-Resistant Zeroing Neural Network for Computing Complex Time-Dependent Lyapunov Equation

Abstract

1. Introduction

- This paper proposes and investigates a complex double-integral noise-resistant ZNN (CNRZNN) model and applies it, for the first time, to solving the CTDLE. The CNRZNN model is also more general than the existing NTZNN model: when one coefficient of the CNRZNN model is set to 0, it reduces to the NTZNN model, which is therefore a special case.

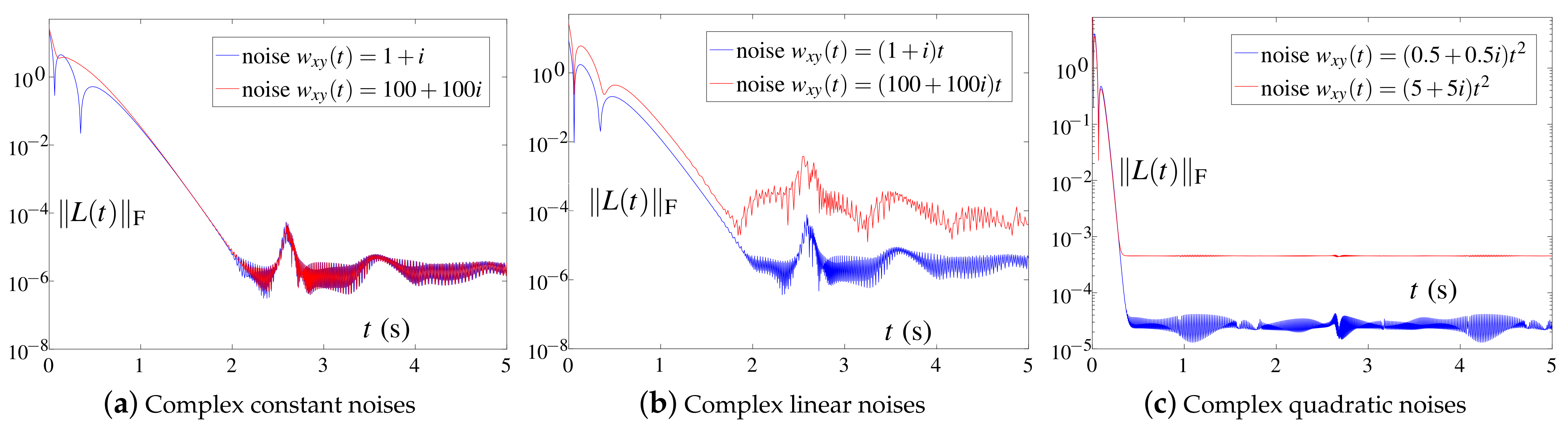

- The CNRZNN model is derived and analyzed, and its convergence and robustness are proved theoretically. The analysis shows that the model has an inherent tolerance to complex constant noise, complex linear noise, and complex quadratic noise.

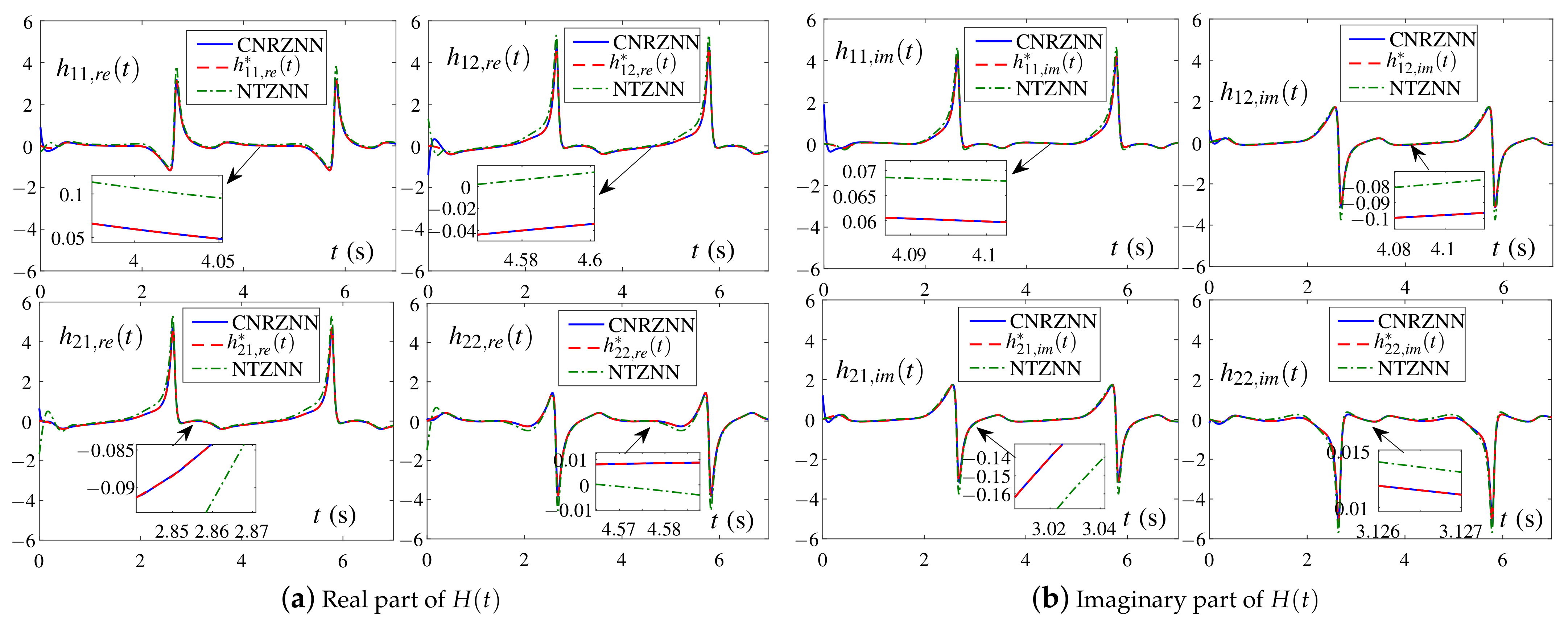

- Three experiments verify the effectiveness, convergence, and robustness of the CNRZNN model. The NTZNN model serves as a baseline for a robustness comparison under complex linear noise, complex quadratic noise, and various real noises.

- Compared with the NTZNN model, the CNRZNN model is markedly more robust when solving the CTDLE under complex linear noise and complex quadratic noise. Under complex linear noise, the residual error of the CNRZNN model converges stably to a much lower order of magnitude than that of the NTZNN model. Under complex quadratic noise, the residual of the CNRZNN model still converges stably, whereas the residual of the NTZNN model diverges.
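The robustness claims in the list above can be illustrated with a toy simulation. The sketch below is not the CNRZNN model itself: it assumes a scalar complex error `e(t)` in place of the matrix residual, and the gains `3*gamma`, `3*gamma**2`, and `gamma**3`, the noise amplitudes, the function names, and the forward-Euler integration are all illustrative assumptions. It only compares an error dynamic with a double-integral term against the same dynamic with that term switched off.

```python
def simulate_residual(double_gain, noise, gamma=10.0, t_end=10.0, dt=1e-3):
    """Return |e(t_end)| for a scalar complex error e(t) driven by additive noise.

    Assumed toy dynamics (not taken from the paper):
        e' = -3*gamma*e - 3*gamma**2 * I1 - double_gain * gamma**3 * I2 + noise(t)
    where I1 is the integral of e and I2 is the integral of I1.
    double_gain = 0 drops the double-integral term (single-integral design).
    """
    e = 1.0 + 1.0j   # arbitrary nonzero initial error
    i1 = 0.0j        # running single integral of e
    i2 = 0.0j        # running double integral of e
    for k in range(int(round(t_end / dt))):
        t = k * dt
        de = -3 * gamma * e - 3 * gamma**2 * i1 - double_gain * gamma**3 * i2 + noise(t)
        # Forward-Euler step; every update uses the values from the start of the step.
        i2 += dt * i1
        i1 += dt * e
        e += dt * de
    return abs(e)


def linear_noise(t):
    return (1 + 1j) * t        # hypothetical complex linear noise


def quadratic_noise(t):
    return (1 + 1j) * t**2     # hypothetical complex quadratic noise


if __name__ == "__main__":
    for name, noise in [("linear", linear_noise), ("quadratic", quadratic_noise)]:
        single = simulate_residual(0.0, noise)
        double = simulate_residual(1.0, noise)
        print(f"{name:9s} noise: single-integral |e| = {single:.3e}, "
              f"double-integral |e| = {double:.3e}")
```

In this toy setting, differentiating the assumed dynamics twice gives e''' + 3γe'' + 3γ²e' + γ³e = n''(t). Linear noise vanishes from the right-hand side, so the error decays to zero, and quadratic noise leaves only a constant, so the error stays bounded. With `double_gain = 0`, the error settles at a nonzero value under linear noise and keeps growing under quadratic noise, which mirrors the qualitative comparison described above.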

2. Problem Formulation and Models Design

2.1. Problem Formulation

2.2. CNRZNN Design Formula

2.3. CNRZNN Model

3. Theoretical Analysis and Results

3.1. Convergence of CNRZNN Model

3.2. Robustness of CNRZNN Model

4. Illustrative Examples and Results

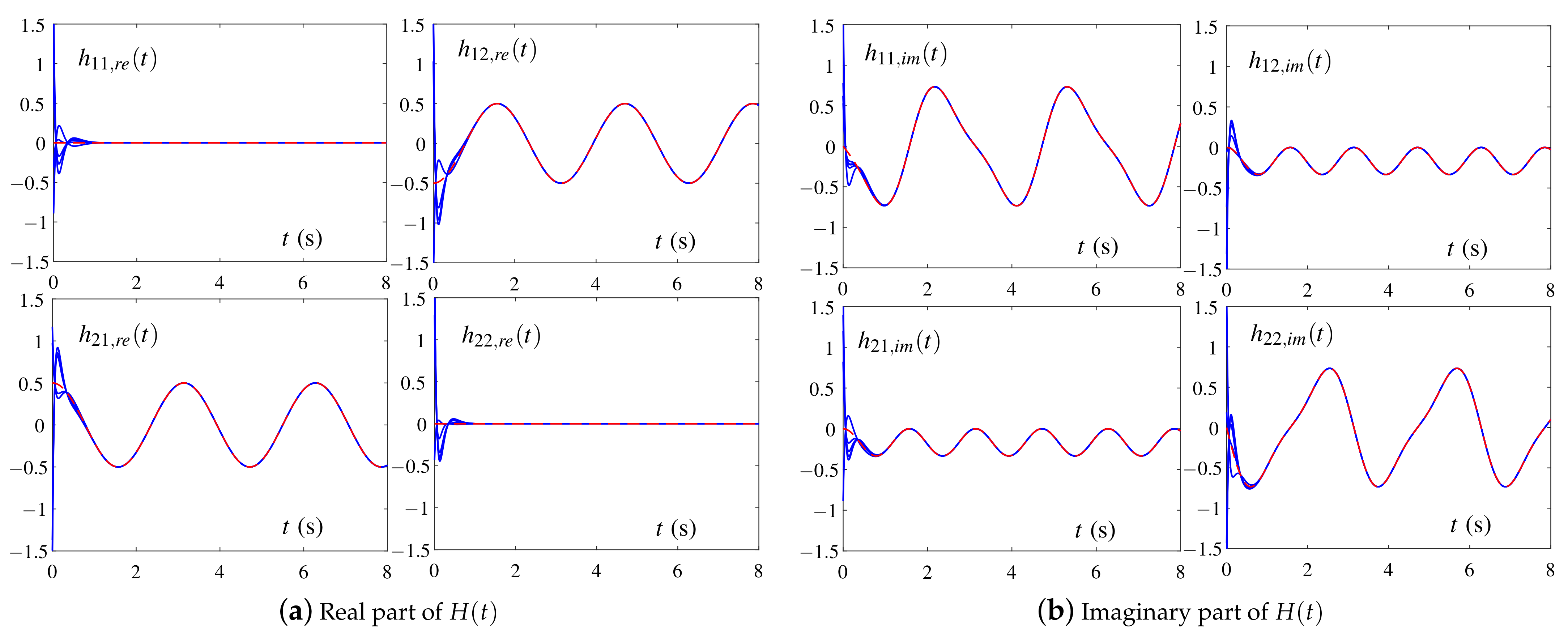

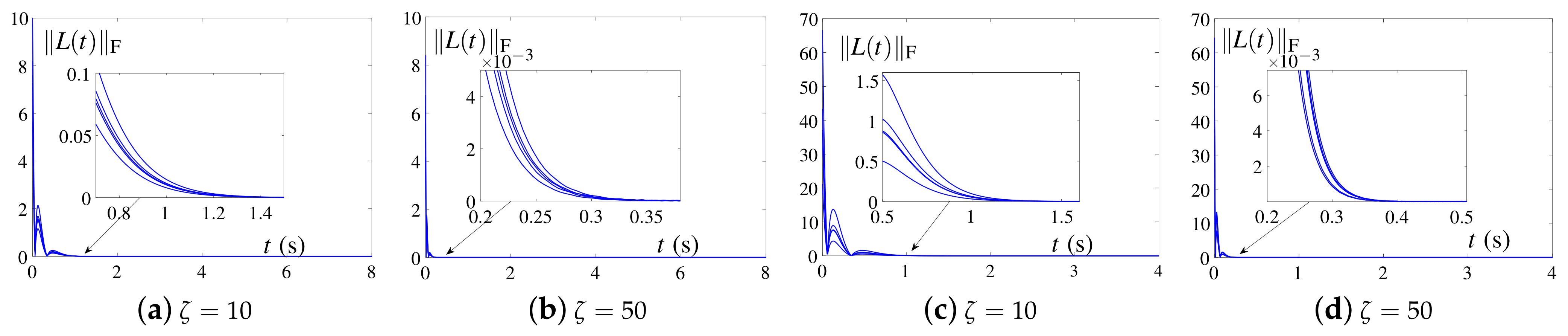

4.1. Example 1

4.2. Example 2

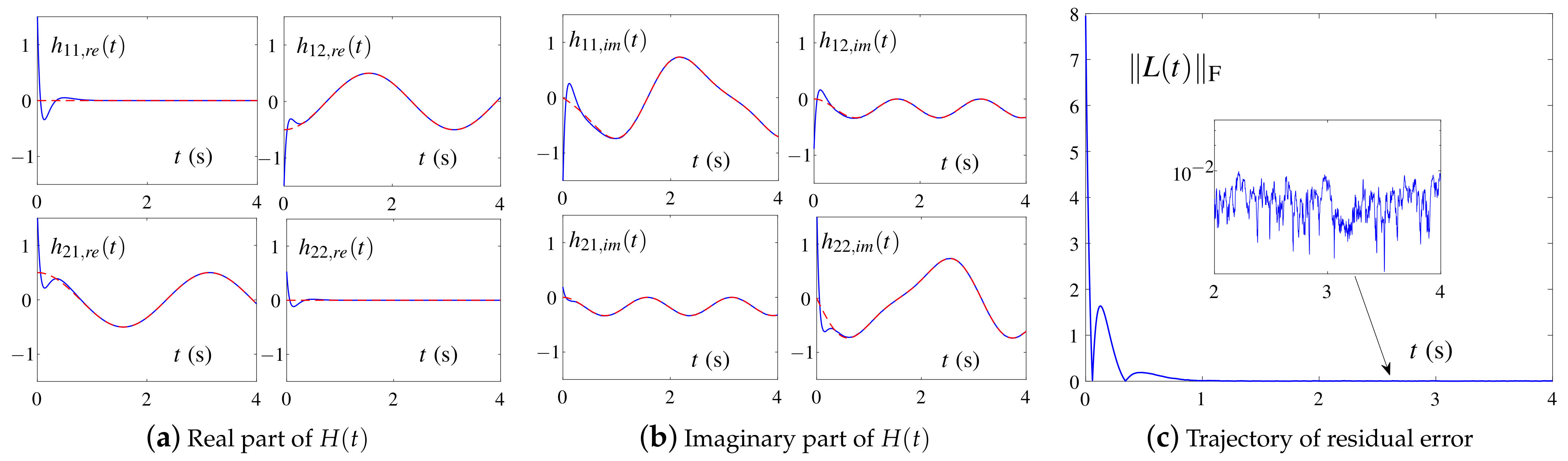

4.3. Experimental Comparison of Real Lyapunov Equation under Real Noises

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HNN | Hopfield neural network |
| RNN | recurrent neural network |
| ZNN | zeroing neural network |
| IEZNN | integral-enhanced ZNN |
| NTZNN | noise-tolerant ZNN |
| RNZNN | robust nonlinear ZNN |
| VPZNN | varying-parameter ZNN |
| CNRZNN | complex noise-resistant ZNN |
| TDLE | time-dependent Lyapunov equation |
| TDSE | time-dependent Sylvester equation |
| CTDLE | complex time-dependent Lyapunov equation |
| CTDSE | complex time-dependent Sylvester equation |
| AF | activation function |

References

1. Guo, D.; Zhang, Y. Zhang neural network, Getz-Marsden dynamic system, and discrete-time algorithms for time-varying matrix inversion with application to robots’ kinematic control. Neurocomputing 2012, 97, 22–32.

2. Shi, Y.; Zhang, Y. New discrete-time models of zeroing neural network solving systems of time-variant linear and nonlinear inequalities. IEEE Trans. Syst. Man Cybern. Syst. 2020, 50, 565–576.

3. Huang, H.; Fu, D.; Wang, G.; Jin, L.; Liao, S.; Wang, H. Modified Newton integration algorithm with noise suppression for online dynamic nonlinear optimization. Numer. Algorithms 2021, 87, 575–599.

4. Tanaka, N.; Iwamoto, H. Active boundary control of an Euler–Bernoulli beam for generating vibration-free state. J. Sound Vib. 2007, 304, 570–586.

5. Zhang, H.; Li, Z.; Qu, Z.; Lewis, F.L. On constructing Lyapunov functions for multi-agent systems. Automatica 2015, 58, 39–42.

6. Mathews, J.H.; Fink, K.D. Numerical Methods Using MATLAB; Prentice Hall: Hoboken, NJ, USA, 2004.

7. Penzl, T. Numerical solution of generalized Lyapunov equations. Adv. Comput. Math. 1998, 8, 33–48.

8. Stykel, T. Low-rank iterative methods for projected generalized Lyapunov equations. Electron. Trans. Numer. Anal. 2008, 30, 187–202.

9. Zhang, Y.; Li, Z.; Li, K. Complex-valued Zhang neural network for online complex-valued time-varying matrix inversion. Appl. Math. Comput. 2011, 217, 10066–10073.

10. Stanimirović, P.S.; Petković, M.D. Improved GNN models for constant matrix inversion. Neural Process. Lett. 2019, 50, 321–339.

11. Jiang, W.; Lin, C.L.; Katsikis, V.N.; Mourtas, S.D.; Stanimirović, P.S.; Simos, T.E. Zeroing neural network approaches based on direct and indirect methods for solving the Yang–Baxter-like matrix equation. Mathematics 2022, 10, 1950.

12. Li, X.; Xu, Z.; Li, S.; Su, Z.; Zhou, X. Simultaneous obstacle avoidance and target tracking of multiple wheeled mobile robots with certified safety. IEEE Trans. Cybern. 2021, online ahead of print.

13. Kornilova, M.; Kovalnogov, V.; Fedorov, R.; Zamaleev, M.; Katsikis, V.N.; Mourtas, S.D.; Simos, T.E. Zeroing neural network for pseudoinversion of an arbitrary time-varying matrix based on singular value decomposition. Mathematics 2022, 10, 1208.

14. Jin, L.; Yan, J.; Du, X.; Xiao, X.; Fu, D. RNN for solving time-variant generalized Sylvester equation with applications to robots and acoustic source localization. IEEE Trans. Ind. Inform. 2020, 16, 6359–6369.

15. Khan, A.T.; Li, S.; Cao, X. Control framework for cooperative robots in smart home using bio-inspired neural network. Measurement 2021, 167, 108253.

16. Stanimirović, P.S.; Srivastava, S.; Gupta, D.K. From Zhang neural network to scaled hyperpower iterations. J. Comput. Appl. Math. 2018, 331, 133–155.

17. Xiao, L. A finite-time convergent Zhang neural network and its application to real-time matrix square root finding. Neural Comput. Appl. 2019, 31, 793–800.

18. Zhang, Y.; Zhang, J.; Weng, J. Dynamic Moore-Penrose inversion with unknown derivatives: Gradient neural network approach. IEEE Trans. Neural Netw. Learn. Syst. 2022, online ahead of print.

19. Guo, D.; Zhang, Y. ZNN for solving online time-varying linear matrix–vector inequality via equality conversion. Appl. Math. Comput. 2015, 259, 327–338.

20. Stanimirović, P.S.; Petković, M.D. Gradient neural dynamics for solving matrix equations and their applications. Neurocomputing 2018, 306, 200–212.

21. Uhlig, F. Zhang neural networks for fast and accurate computations of the field of values. Linear Multilinear Algebra 2019, 68, 1894–1910.

22. Zhang, Y.; Chen, K.; Li, X.; Yi, C.; Zhu, H. Simulink modeling and comparison of Zhang neural networks and gradient neural networks for time-varying Lyapunov equation solving. Proc. Int. Conf. Nat. Comput. ICNC 2008, 3, 521–525.

23. Yi, C.; Zhang, Y.; Guo, D. A new type of recurrent neural networks for real-time solution of Lyapunov equation with time-varying coefficient matrices. Math. Comput. Simul. 2013, 92, 40–52.

24. Lv, X.; Xiao, L.; Tan, Z.; Yang, Z. Wsbp function activated Zhang dynamic with finite-time convergence applied to Lyapunov equation. Neurocomputing 2018, 314, 310–315.

25. Shi, Y.; Mou, C.; Qi, Y.; Li, B.; Li, S.; Yang, B. Design, analysis and verification of recurrent neural dynamics for handling time-variant augmented Sylvester linear system. Neurocomputing 2021, 426, 274–284.

26. Xiao, L.; Tao, J.; Li, W. An arctan-type varying-parameter ZNN for solving time-varying complex Sylvester equations in finite time. IEEE Trans. Ind. Inform. 2022, 18, 3651–3660.

27. Guo, D.; Yi, C.; Zhang, Y. Zhang neural network versus gradient-based neural network for time-varying linear matrix equation solving. Neurocomputing 2011, 74, 3708–3712.

28. Ding, L.; Xiao, L.; Zhou, K.; Lan, Y.; Zhang, Y.; Li, J. An improved complex-valued recurrent neural network model for time-varying complex-valued Sylvester equation. IEEE Access 2019, 7, 19291–19302.

29. Jin, L.; Zhang, Y.; Li, S. Integration-enhanced Zhang neural network for real-time-varying matrix inversion in the presence of various kinds of noises. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 2615–2627.

30. Yan, J.; Xiao, X.; Li, H.; Zhang, J.; Yan, J.; Liu, M. Noise-tolerant zeroing neural network for solving non-stationary Lyapunov equation. IEEE Access 2019, 7, 41517–41524.

31. Xiao, L.; Zhang, Y.; Hu, Z.; Dai, J. Performance benefits of robust nonlinear zeroing neural network for finding accurate solution of Lyapunov equation in presence of various noises. IEEE Trans. Ind. Inform. 2019, 15, 5161–5171.

32. Xiang, Q.; Li, W.; Liao, B.; Huang, Z. Noise-resistant discrete-time neural dynamics for computing time-dependent Lyapunov equation. IEEE Access 2018, 6, 45359–45371.

33. He, Y.; Liao, B.; Xiao, L.; Han, L.; Xiao, X. Double accelerated convergence ZNN with noise-suppression for handling dynamic matrix inversion. Mathematics 2021, 10, 50.

34. Wang, G.; Hao, Z.; Zhang, B.; Jin, L. Convergence and robustness of bounded recurrent neural networks for solving dynamic Lyapunov equations. Inf. Sci. 2022, 588, 106–123.

35. Xiao, L.; He, Y. A noise-suppression ZNN model with new variable parameter for dynamic Sylvester equation. IEEE Trans. Ind. Inform. 2021, 17, 7513–7522.

36. Liao, B.; Xiang, Q.; Li, S. Bounded Z-type neurodynamics with limited-time convergence and noise tolerance for calculating time-dependent Lyapunov equation. Neurocomputing 2019, 325, 234–241.

37. Sun, Z.; Wang, G.; Jin, L.; Cheng, C.; Zhang, B.; Yu, J. Noise-suppressing zeroing neural network for online solving time-varying matrix square roots problems: A control-theoretic approach. Expert Syst. Appl. 2022, 192, 116272.

38. Trefethen, L.N.; Bau, D., III. Numerical Linear Algebra; SIAM: Philadelphia, PA, USA, 1997; Volume 50.

39. Zhang, Y.; Jiang, D.; Wang, J. A recurrent neural network for solving Sylvester equation with time-varying coefficients. IEEE Trans. Neural Netw. 2002, 13, 1053–1063.

40. Durrett, R. Probability: Theory and Examples; Cambridge University Press: Cambridge, UK, 2019; Volume 49.

41. Zhang, Y.; Jin, L.; Guo, D.; Yin, Y.; Chou, Y. Taylor-type 1-step-ahead numerical differentiation rule for first-order derivative approximation and ZNN discretization. J. Appl. Math. Comput. 2015, 273, 29–40.

42. Oppenheim, A.V.; Willsky, A.S. Signals & Systems; Prentice-Hall: Englewood Cliffs, NJ, USA, 1997.

| Model | Problem | Type | Integral Term | Linear Noise Rejection | Quadratic Noise Rejection |
|---|---|---|---|---|---|
| This paper | time-dependent Lyapunov | complex-valued | double integral | strong | strong |
| [30] | time-dependent Lyapunov | real-valued | single integral | weak | weak |
| [32] | time-dependent Lyapunov | real-valued | single integral | weak | weak |
| [36] | time-dependent Lyapunov | real-valued | single integral | weak | weak |
| [31] | time-dependent Lyapunov | real-valued | none | weak | weak |
| [22] | time-dependent Lyapunov | real-valued | none | none | none |
| [28] | time-dependent Sylvester | complex-valued | none | none | none |
| [25] | time-dependent Sylvester | real-valued | none | none | none |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liao, B.; Hua, C.; Cao, X.; Katsikis, V.N.; Li, S. Complex Noise-Resistant Zeroing Neural Network for Computing Complex Time-Dependent Lyapunov Equation. Mathematics 2022, 10, 2817. https://doi.org/10.3390/math10152817
