Abstract

The Landweber iteration method is one of the most popular methods for solving linear discrete ill-posed problems. The diversity of physical problems, and of the operators that arise from them, motivates updating the main methods and algorithms to achieve the best results. In this work, we consider a linear operator equation and use a new version of the Landweber iterative method as an iterative solver. The main goal of updating the Landweber iteration is to make the iteration process faster and more accurate. We use a polar decomposition to obtain a symmetric positive definite operator that replaces the identity operator of the classical Landweber method. We carry out the convergence analysis and the other analyses needed to establish the usability of the new iteration method. The residual method is used to assess the convergence rate of the iteration. The modified iterative method is compared with the classical Landweber method. A numerical experiment illustrates the effectiveness of the method by applying it to the inverse boundary value problem (IBVP) of the heat equation.

MSC: 65F22; 15A29; 65F10; 90C20

1. Introduction

In the early twentieth century, Hadamard [1] formulated the conditions for well-posed problems for linear operator equations, stating that a problem is well-posed when it fulfills the following conditions:

- Existence: a solution exists;

- Uniqueness: this solution is unique;

- Stability: the solution depends continuously on the given data.

If at least one of the above conditions is not fulfilled, the problem is considered ill-posed. Violations of existence and uniqueness can often be remedied by a small reformulation of the problem. Violations of stability are much harder to remedy because they imply that a small disturbance in the data leads to a large disturbance in the estimated solution [2,3,4,5]. The inverse problem for the heat equation studied here can be solved by many methods; for example, the regularization method of Tikhonov A.N. [6], the method of Lavrentiev M.M. [7], the method of quasi-solutions of Ivanov V.K. [8], and many others.
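The loss of stability can be seen on a tiny example. The sketch below assumes NumPy and uses a hypothetical 2×2 diagonal operator `A` with one very small singular value; the names and numbers are chosen for illustration and do not come from the paper.

```python
import numpy as np

# Hypothetical operator: the second singular value is tiny, so inversion is unstable.
A = np.diag([1.0, 1e-6])
u_exact = np.array([1.0, 1.0])
f = A @ u_exact

# Perturb the data slightly in the direction of the tiny singular value.
perturbation = np.array([0.0, 1e-4])
u_perturbed = np.linalg.solve(A, f + perturbation)

data_change = np.linalg.norm(perturbation)
solution_change = np.linalg.norm(u_perturbed - u_exact)
amplification = solution_change / data_change

print(f"data change:     {data_change:.1e}")
print(f"solution change: {solution_change:.1e}")
print(f"amplification:   {amplification:.1e}")
```

Here the amplification factor is the reciprocal of the smallest singular value, so a data error of order 1e-4 produces a solution error of order 100.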

The Landweber iteration is a basic method for solving inverse problems or linear operator equations. Owing to its ease of implementation and relatively low cost per iteration, the Landweber method has attracted considerable attention in the study of inverse problems. Effective methods for solving inverse problems depend on deep insight into both the problems and the algorithms used to solve them [9,10,11,12,13,14].

Regarding Landweber iterations in Hilbert spaces, see [15,16]. In recent years, the Landweber method has been extended to solve inverse problems in other spaces, such as Banach spaces [17,18,19]. Many works have modified the Landweber iteration method. For example, [20] revisits the residual principle for the Landweber iteration in order to solve linear and non-linear inverse problems in Banach spaces, and the authors prove new convergence results. In [21], the authors estimate the error between the exact and estimated solutions, selecting the regularization parameter from the initial data. In [22], the author accelerates the Landweber iterative method by using a sparse Jacobian-based reconstruction method.

This article presents an update of the classical Landweber iteration. We use a new invertible operator to speed up the convergence of the iteration. We also compare the proposed iterative method with the classical Landweber iteration. A numerical experiment illustrates the effectiveness of the method by applying it to the inverse boundary value problem of the heat equation.

2. Problem Statement

In general, numerical methods for ill-posed problems are explained using the following linear operator equation of the first kind:

$$Au = f, \qquad (1)$$

where $A$ is a linear, positive, compact operator acting from a Hilbert space $H$ into itself. We also assume that $f \in H$ and that the operator $A$ is self-adjoint and positive definite, $A = A^{*} > 0$. In particular, the operator is severely ill-conditioned and may be rank-deficient. Operators of this kind arise from the discretization of linear ill-posed problems, such as Fredholm or Volterra integral equations of the first kind.

The right-hand side data are given with some error or measurement noise, which is typical of practical studies. To describe this situation, assume that the right-hand side of problem (1) is given with some error level $\delta$. Instead of $f$, we have $f_\delta$ satisfying the following norm bound:

$$\|f_\delta - f\| \le \delta. \qquad (2)$$
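In numerical experiments, data with a prescribed error level are often simulated by adding a random perturbation rescaled to a given norm. The following is a minimal sketch, assuming NumPy; the names `add_noise`, `f`, `f_delta`, and `delta` are hypothetical, and the Gaussian noise model is an assumption rather than the procedure of the authors' code.

```python
import numpy as np

def add_noise(f, delta, rng):
    """Return f_delta = f + e, where the noise e satisfies ||e||_2 = delta."""
    e = rng.standard_normal(f.shape)
    e *= delta / np.linalg.norm(e)   # rescale the noise to the requested level
    return f + e

rng = np.random.default_rng(0)            # fixed seed for reproducibility
f = np.sin(np.linspace(0.0, np.pi, 50))   # stand-in for exact data
delta = 1e-2
f_delta = add_noise(f, delta, rng)
noise_norm = np.linalg.norm(f_delta - f)
print(f"||f_delta - f|| = {noise_norm:.3e}")
```

Rescaling the perturbation makes the error level exactly `delta`, so the value that enters a stopping rule is known rather than estimated.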

The problem is to find an approximate solution of (1) and (2) from the input data given in the right-hand side $f_\delta$. We call $u_\alpha$ the estimated solution, where $\alpha$ is the regularization parameter. This parameter is linked to the error level $\delta$, i.e., $\alpha = \alpha(\delta)$, and we evaluate the error estimate $\|u_\alpha - u\|$.

Stable methods for solving ill-posed problems rely on a priori information about the inexactness of the input data. Since the right-hand side is given with some error, we now attempt to solve the following problem:

Variational methods, as an alternative way of solving problem (3), compute an approximate solution by minimizing the norm of the residual, i.e., the discrepancy functional, in the following form:

Many candidate solutions satisfy Equation (3) up to some discrepancy. Making the problem well-posed requires identifying the class of admissible solutions and stating explicit a priori restrictions on the solution. We are interested in bounded solutions of problem (1) and (2); hence, the a priori constraint takes the following form:

where

3. Method of Iterative Regularization

Iterative methods have been used successfully to solve ill-posed problems. Returning to problem (1), we discuss below the specific features of the updated Landweber iteration. The ill-posedness of the inverse problem is connected to the fact that the eigenvalues of the operator, arranged in decreasing order, tend to zero.

The general form of the iteration method for solving Equation (3) with approximately given input data can be written in the following form:

This method, called the two-layer iteration method, involves a relaxation parameter and a positive definite operator. When this operator is the identity operator, (6) reduces to the classical Landweber iteration method [23].

There are several well-known methods that can be written in the form of (3) by selecting the operator . Cimmino’s method [24] is , where represents the ith row of . The CAV method [25] uses the following form of operator , where is the number of non-zeroes in the jth column of . We define the new type of the Landweber iteration method by using as follows:

Here, Q is a unitary operator and , by using the polar decomposition of the operator :

Now the new version of the iteration method is shown in the following iterative equation:

Depending on the specific method, the iteration (9) can solve the variational problem by minimizing the discrepancy functional. We define the MLI algorithm (Algorithm 1), where MLI stands for Modified-Landweber iterative.

Algorithm 1. MLI (number of iterations)
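The polar decomposition on which the modified iteration relies can be computed from a singular value decomposition. The sketch below assumes NumPy and a small, hypothetical nonsingular matrix `A`; the function name `polar_decomposition` is an illustration. It shows only how the unitary factor and the symmetric positive definite factor are obtained, not how they enter iteration (9).

```python
import numpy as np

def polar_decomposition(A):
    """Right polar decomposition A = Q @ P of a square real matrix.

    Q is orthogonal (unitary in the real case) and P is symmetric
    positive semidefinite, with P = (A^T A)^(1/2).
    """
    U, s, Vt = np.linalg.svd(A)
    Q = U @ Vt                    # orthogonal factor
    P = Vt.T @ np.diag(s) @ Vt    # symmetric factor built from the singular values
    return Q, P

# Hypothetical nonsingular test matrix (determinant 7).
A = np.array([[2.0, 1.0, 0.0],
              [0.0, 1.0, 1.0],
              [1.0, 0.0, 3.0]])
Q, P = polar_decomposition(A)

print(np.allclose(Q @ P, A))            # the factors reproduce A
print(np.allclose(Q.T @ Q, np.eye(3)))  # Q is orthogonal
print(np.linalg.eigvalsh(P))            # eigenvalues of P are the singular values of A
```

For a nonsingular matrix the factor `P` is positive definite. SciPy offers the same factorization as `scipy.linalg.polar`, which could replace the hand-written function.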

The classical Landweber method can be defined by the following algorithm (Algorithm 2), which we call the Classical-Landweber iterative (CLI) method.

Algorithm 2. CLI (number of iterations)
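For reference, the classical Landweber iteration in its standard textbook form, $u_{k+1} = u_k + \tau A^{T}(f_\delta - A u_k)$, can be sketched as follows. This is an illustration under assumptions, not a transcription of Algorithm 2: the function name `landweber`, the 2×2 matrix, and the step size $\tau = 1/\sigma_{\max}^2$ are all hypothetical choices.

```python
import numpy as np

def landweber(A, f_delta, tau, n_iter, u0=None):
    """Classical Landweber iteration u_{k+1} = u_k + tau * A^T (f_delta - A u_k)."""
    u = np.zeros(A.shape[1]) if u0 is None else np.array(u0, dtype=float)
    for _ in range(n_iter):
        u = u + tau * (A.T @ (f_delta - A @ u))
    return u

# Hypothetical well-conditioned example with exact data.
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
u_exact = np.array([1.0, -1.0])
f = A @ u_exact

sigma_max = np.linalg.norm(A, 2)   # largest singular value of A
tau = 1.0 / sigma_max**2           # lies inside (0, 2 / sigma_max^2)
u_est = landweber(A, f, tau, n_iter=500)

print(u_est)
print(np.linalg.norm(u_est - u_exact))
```

With exact data and a well-conditioned matrix the iterates simply converge to the solution. With noisy data and an ill-conditioned operator the number of iterations has to be limited, which is the role of the stopping criteria discussed next.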

To make the iteration method (9) effective, it is critical to devise reliable stopping criteria. This amounts to reducing the given problem to the variational problem:

When the iteration is run for too long with noisy input data, the iterative solution loses resolution. This phenomenon is called semi-convergence and was described by Natterer [26].
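One common way to avoid iterating too long is Morozov's discrepancy principle, which stops as soon as the residual norm falls to the noise level. The sketch below applies it to the classical Landweber iteration. It is an assumption-laden illustration: the discrepancy principle is a standard rule substituted here and not necessarily the authors' criterion, and the test operator, the factor `rho = 2`, the 1% noise level, and all names are hypothetical.

```python
import numpy as np

def landweber_discrepancy(A, f_delta, delta, tau, rho=2.0, max_iter=50000):
    """Classical Landweber iteration stopped by the discrepancy principle.

    Stops at the first n with ||f_delta - A u_n|| <= rho * delta (rho > 1)
    and returns the iterate together with the number of steps taken.
    """
    u = np.zeros(A.shape[1])
    for n in range(max_iter):
        r = f_delta - A @ u
        if np.linalg.norm(r) <= rho * delta:
            return u, n
        u = u + tau * (A.T @ r)
    return u, max_iter

# Hypothetical test problem: midpoint-rule discretization of a first-kind
# integral operator with kernel min(x, t) * (1 - max(x, t)) on [0, 1].
m = 50
h = 1.0 / m
x = (np.arange(m) + 0.5) * h
X, T = np.meshgrid(x, x, indexing="ij")
A = h * np.minimum(X, T) * (1.0 - np.maximum(X, T))

u_exact = np.sin(np.pi * x)
f = A @ u_exact

rng = np.random.default_rng(0)
e = rng.standard_normal(m)
delta = 1e-2 * np.linalg.norm(f)             # 1% relative noise level
f_delta = f + delta * e / np.linalg.norm(e)

tau = 1.0 / np.linalg.norm(A, 2) ** 2
u_n, n_stop = landweber_discrepancy(A, f_delta, delta, tau)
residual = np.linalg.norm(A @ u_n - f_delta)

print(f"stopped after {n_stop} iterations, residual / delta = {residual / delta:.2f}")
```

The stopping index returned by the function depends on `delta`, which matches the idea that the number of iterations plays the role of the regularization parameter.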

4. Analysis of Convergence of Iterations

In this section, we consider Theorem 1 in [27]. To understand the convergence behavior of the iteration technique, we took a closer look at the errors between the estimated and exact solutions by using the constant parameter .

We called as the unique solution for Equation (4).

Theorem 1.

Let for . Then, the iterates of (9) converge to a solution (called) of (4) if and only if with the largest singular value of .

Proof of Theorem 1.

Assume . Let and .

Then using (9), we obtain:

We assume that the singular value decomposition (SVD) for . We represent in the following form:

where , , and

Using (11), we have

where

It follows that:

with SVD

where

Note that if , if , for , i.e., , we find

This completes the proof. □
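For orientation, the step-size condition can be worked out in the classical case, where the weighting operator is the identity. The symbols below ($A = U \Sigma V^{T}$, $\tau$, $\sigma_i$, $e_n$) are assumptions chosen for this sketch and need not match the notation of Theorem 1.

```latex
% Classical Landweber iteration with exact data f = A u:
%   u_{n+1} = u_n + \tau A^{T} (f - A u_n)
\begin{align*}
e_n &:= u_n - u, \\
e_{n+1} &= e_n - \tau A^{T} A e_n = (I - \tau A^{T} A)\, e_n, \\
e_n &= (I - \tau A^{T} A)^{n} e_0
     = V \operatorname{diag}\!\big( (1 - \tau \sigma_i^{2})^{n} \big) V^{T} e_0 .
\end{align*}
% The component of e_0 along a singular vector with \sigma_i > 0 decays
% if and only if |1 - \tau \sigma_i^{2}| < 1, i.e. 0 < \tau < 2 / \sigma_i^{2}.
% Requiring this for every i gives 0 < \tau < 2 / \sigma_1^{2},
% where \sigma_1 is the largest singular value.
```

Components of the initial error in the null space of $A$ are left unchanged by the iteration, which is why convergence is to a solution rather than to a specific one when the operator is rank-deficient.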

5. Number of Iterations

We need to formulate the conditions under which MLI (9) provides the estimated solution for problem (1) and (2) after the number of iterations .

Theorem 2.

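Let the number of iterations $n(\delta)$ in MLI (9) satisfy $n(\delta) \to \infty$ and $n(\delta)\,\delta \to 0$ as $\delta \to 0$. Then, $\|u_{n(\delta)} - u\| \to 0$ as $\delta \to 0$.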

Proof of Theorem 2.

We represent the inexactness at the nth iteration as . By (9), we obtain:

where is some initial estimate.

In order to find the exact solution, we can use the following representation:

This representation corresponds to the iterative solution of problem (1) in which the initial approximation coincides with the exact solution.

With equality (17) taken into account, for the inexactness, we obtain the following:

where , with being the inexactness. The first term in (18) is due to the iteration method itself, and the second term is related to the inexactness of the input data of (1).

Under , where , we take

In order to verify this variation, we have passed from difference (19) to the equivalent inequality

where is self-adjoint and positive definite. With the estimate taken into account, we obtain:

provided is satisfied.

Taking inequality (19), we obtained:

The estimate deserves a more detailed study. To begin with, assume that . Such a situation is met, for instance, with the initial approximation in the solution to problems (1) and (2) in class (5). Let us show that for . We use the representation:

For any small , we can find N such that

By , we obtain:

In case of sufficiently large N, for the first term we obtain the following:

The substitution of (20) into (18) yields the estimate

such that for . Estimating (21) completes the proof. □

In the updated iteration method (9), the number of iterations is matched to the inexactness of the input data; that is, the number of iterations serves as the regularization parameter.

6. Estimating the Rate of Convergence

So far, we have established convergence without determining its rate. By narrowing the class of a priori restrictions on the solution, we state the following theorem, which bounds the error of the estimated solution as an explicit function of the inexactness of the input data.

Consider the iterative method (9) under the more stringent constraints of the iterative parameter,

Theorem 3.

Suppose that the exact solution of problem (3) belongs to the class

Then, for the inaccuracy of the iteration method (9) with

there holds the estimate

Proof of Theorem 3.

By condition (23), it is necessary to know the quantity of in (21). The iterations number n determines the outcome.

With , we find , by using the above, we find:

After that, in view of (23) we find:

Under constraint (22) on the iteration parameter, we have and, hence,

where . The function attains its maximum at the point

and,

Substituting (25) into estimate (24) yields an estimate in which the constant

depends on and , and does not depend on . Minimizing the right-hand side of (24) lets us formulate the stopping criterion:

i.e., . Here, for the inexactness of the estimated solution, we obtain the following estimation

with

Estimate (27) shows explicitly how the rate at which the approximate solution converges to the exact solution depends on the inexactness δ and on the smoothness of the exact solution (parameter p). □

7. Numerical Results

We considered the inverse boundary value problem found in [28]:

supposing that is a function such that

and is a known number. By applying the separation of variables method, we obtain:

where and

Integrating by parts twice on the right-hand side of (34), we obtain:

where , by (32) and

From (33) and (37) we find the following integral equation of the first kind:

where . We define the operator by:

We used the discretization algorithm from [29], as applied in [30]. Specifying the derivative of the kernel, we obtain the following:

where .

The operator is an infinite-dimensional operator. The next step applies the discretization algorithm to the integral Equation (40) and converts the operator to the finite-dimension operator , where for and for operator , there is an operator , where for

In summary, we convert the system of differential Equations (28)–(32) to a linear operator equation in two steps: we first reformulate the differential equation as an integral equation by separation of variables, and then apply the discretization algorithm to convert the integral equation into a system of linear algebraic equations, i.e., a linear operator equation.

where

where

The operator in (43) is a non-injective operator, because it has the triangular property [28].
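The passage from an integral equation of the first kind to a finite-dimensional linear system can be illustrated with a simple quadrature rule. The sketch below assumes NumPy and a midpoint rule on [0, 1]. The kernel `green_kernel` is a stand-in chosen because its action on sin(πt) is known in closed form; it is not the kernel of Equation (40), and the rule is not claimed to be the discretization algorithm of [29].

```python
import numpy as np

def discretize_first_kind(kernel, m):
    """Midpoint-rule matrix for (K u)(x) = int_0^1 kernel(x, t) u(t) dt.

    Returns the m-by-m matrix A with A[i, j] = h * kernel(x_i, t_j)
    and the midpoint nodes, which serve as both x_i and t_j.
    """
    h = 1.0 / m
    nodes = (np.arange(m) + 0.5) * h
    X, T = np.meshgrid(nodes, nodes, indexing="ij")
    return h * kernel(X, T), nodes

def green_kernel(x, t):
    # Stand-in kernel: Green's function of -u'' on [0, 1] with zero boundary values.
    return np.minimum(x, t) * (1.0 - np.maximum(x, t))

A, nodes = discretize_first_kind(green_kernel, m=200)

# For this kernel, the integral of kernel(x, t) * sin(pi t) equals sin(pi x) / pi^2.
u = np.sin(np.pi * nodes)
f_discrete = A @ u
f_exact = np.sin(np.pi * nodes) / np.pi**2
max_error = np.max(np.abs(f_discrete - f_exact))

print(A.shape)
print(max_error < 1e-4)
```

The singular values of such a matrix decay quickly as the grid is refined, which is the discrete counterpart of the ill-posedness of the continuous problem.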

Considering the above problem, we need to find the function . The real solution, and the represented the input function, where the , and . In [31], we prepared a case study using MATLAB code. First, the discretization algorithm was applied to obtain the operator and, by using it, we obtained the data vector. We then added some noise, as explained in the code, to obtain the noisy data vector. Finally, we computed the SVD of the operator and used it in the MLI and CLI algorithms to obtain the approximate solutions.

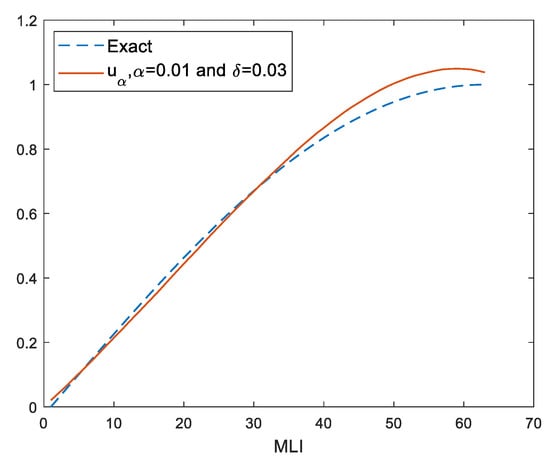

Algorithm 1 (MLI) was run with the chosen value of the regularization parameter for 1000 iterations (see Figure 1).

Figure 1. Approximate solution by MLI.

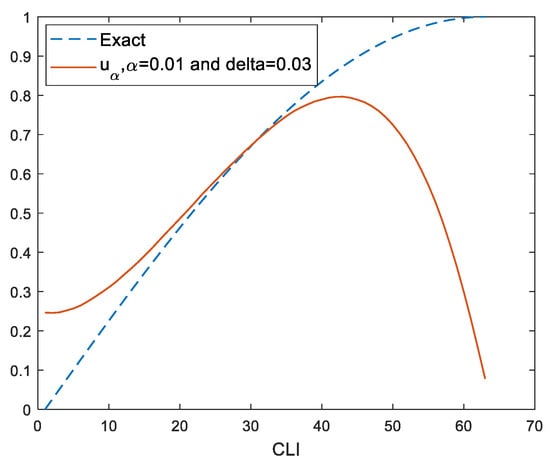

Algorithm 2 (CLI) was run with the chosen value of the regularization parameter for 1000 iterations (see Figure 2).

Figure 2. Approximate solution by CLI.

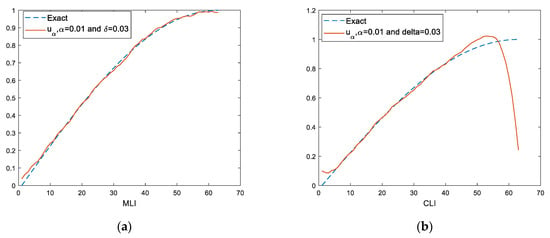

In Figure 3, the number of iterations was increased to 10,000.

Figure 3. Approximate solution with 10,000 iterations: (a) MLI algorithm; (b) CLI algorithm.

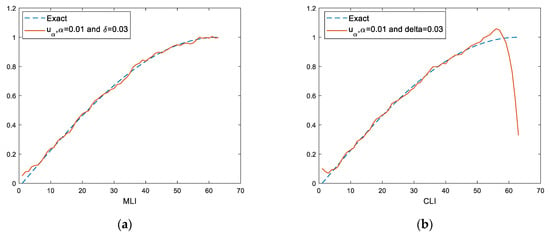

In Figure 4, the number of iterations was increased to 20,000.

Figure 4. Approximate solution with 20,000 iterations: (a) MLI algorithm; (b) CLI algorithm.

8. Concluding Remarks and Observations

This paper defined algorithms that solve ill-posed inverse problems by an iterative regularization method of Landweber type. The regularization parameter was chosen as the one whose iterates were most consistent with the real solution. The residual method was used to assess the convergence of the final iterate. We observed that the minimum of the discrepancy is extremely unstable with respect to data perturbations on the right-hand side of the linear operator equation. In the numerical experiment, the updated Landweber iteration produced a better approximate solution than the classical Landweber method, which indicates that the updated method mitigates this instability in the discrepancy method. The suggested algorithms successfully solve the inverse boundary value problem for heat conduction.

Author Contributions

Data curation, H.K.I.A.-M., M.A., H.A. and E.-S.M.E.-k.; Formal analysis, M.A., H.A. and A.K.; Funding acquisition, H.A. and M.A.; Investigation, H.K.I.A.-M., M.A. and H.A.; Methodology, H.K.I.A.-M., M.A. and E.-S.M.E.-k.; Project administration, M.A. and H.A.; Resources, M.A. and A.K.; Software, H.K.I.A.-M. and M.A.; Visualization, M.A. All authors have read and agreed to the published version of the manuscript.

Funding

The work was supported by Act 211 Government of the Russian Federation, contract No. 02.A03.21.0011. The work was supported by the Ministry of Science and Higher Education of the Russian Federation (government order FENU-2020-0022).

Data Availability Statement

The datasets used to support this study are included within the code on GitHub. We prepared the case study by using MATLAB code. First, the discretization algorithm was applied to obtain the operator and, by using it, we obtained the vector. We then added some noise as explained in the code to obtain the vector. Finally, we computed the SVD for the operator and used it in the MLI and CLI algorithms to obtain approximation solutions [31].

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Daniell, P.J.; Hadamard, J. Lectures on Cauchy’s Problem in Linear Partial Differential Equations. Math. Gaz. 1924, 12, 173.

2. Al-Mahdawi, H.K. Studying the Picard’s Method for Solving the Inverse Cauchy Problem for Heat Conductivity Equations. Bull. South Ural State Univ. Ser. Comput. Math. Softw. Eng. 2019, 8, 5–14.

3. Al-Mahdawi, H.K. Development the Regularization Computing Method for Solving Boundary Value Problem to Heat Equation in the Composite Materials. J. Phys. Conf. Ser. 2021, 1999, 12136.

4. Al-Mahdawi, H.K. Development the Numerical Method to Solve the Inverse Initial Value Problem for the Thermal Conductivity Equation of Composite Materials. J. Phys. Conf. Ser. 2021, 1879, 32016.

5. Al-Mahdawi, H.K.; Sidikova, A.I. An Approximate Solution of Fredholm Integral Equation of the First Kind by the Regularization Method with Parallel Computing. Turkish J. Comput. Math. Educ. 2021, 12, 4582–4591.

6. Tikhonov, A.N. On the Regularization of Ill-Posed Problems. Proc. USSR Acad. Sci. 1963, 153, 49–52.

7. Lavrentiev, M.M. The Inverse-Problem in Potential Theory. Dokl. Akad. Nauk SSSR 1956, 106, 389–390.

8. Ivanov, V.K. The application of Picard’s method to the solution of integral equations of the first kind. Bull. Inst. Politenn. Iasi. 1968, 14, 71–78.

9. Glasko, V.B.; Kulik, N.I.; Shklyarov, I.N.; Tikhonov, A.N. An inverse problem of heat conductivity. Zhurnal Vychislitel’noi Mat. i Mat. Fiz. 1979, 19, 768–774.

10. Belonosov, A.S.; Shishlenin, M.A. Continuation problem for the parabolic equation with the data on the part of the boundary. Siber. Electron. Math. Rep. 2014, 11, 22–34.

11. Kabanikhin, S.I.; Hasanov, A.; Penenko, A.V. A gradient descent method for solving an inverse coefficient heat conduction problem. Numer. Anal. Appl. 2008, 1, 34–45.

12. Yagola, A.G.; Stepanova, I.E.; Van, Y.; Titarenko, V.N. Obratnye zadachi i metody ikh resheniya: Prilozheniya k geofizike (Inverse Problems and Methods for Their Solution: Applications to Geophysics); Binom. Laboratoriya Znanii: Moscow, Russia, 2014.

13. Kabanikhin, S.I.; Krivorot’ko, O.I.; Shishlenin, M.A. A numerical method for solving an inverse thermoacoustic problem. Numer. Anal. Appl. 2013, 6, 34–39.

14. Tanana, V.P. On the order-optimality of the projection regularization method in solving inverse problems. Sib. Zhurnal Ind. Mat. 2004, 7, 117–132.

15. Clason, C.; Nhu, V.H. Bouligand-Levenberg-Marquardt iteration for a non-smooth ill-posed inverse problem. arXiv 2019, arXiv:1902.10596.

16. Clason, C.; Nhu, V.H. Bouligand–Landweber iteration for a non-smooth ill-posed problem. Numer. Math. 2019, 142, 789–832.

17. Jin, Q. Landweber-Kaczmarz method in Banach spaces with inexact inner solvers. Inverse Probl. 2016, 32, 104005.

18. Kaltenbacher, B.; Schöpfer, F.; Schuster, T. Convergence of some iterative methods for the regularization of nonlinear ill-posed problems in Banach spaces. Inverse Probl. 2009, 25, 19.

19. Schöpfer, F.; Louis, A.K.; Schuster, T. Nonlinear iterative methods for linear ill-posed problems in Banach spaces. Inverse Probl. 2006, 22, 311.

20. Real, R.; Jin, Q. A revisit on Landweber iteration. Inverse Probl. 2020, 36, 75011.

21. Li, D.-G.; Fu, J.-L.; Yang, F.; Li, X.-X. Landweber Iterative Regularization Method for Identifying the Initial Value Problem of the Rayleigh–Stokes Equation. Fractal Fract. 2021, 5, 193.

22. Wang, J. A two-step accelerated Landweber-type iteration regularization algorithm for sparse reconstruction of electrical impedance tomography. Math. Methods Appl. Sci. 2021, 1–12.

23. Landweber, L. An Iteration Formula for Fredholm Integral Equations of the First Kind. Am. J. Math. 1951, 73, 615.

24. Cimmino, G. Calcolo approssimato per le soluzioni dei sistemi di equazioni lineari. La Ric. Sci. 1938, 1, 326–333.

25. Censor, Y.; Gordon, D.; Gordon, R. Component averaging: An efficient iterative parallel algorithm for large and sparse unstructured problems. Parallel Comput. 2001, 27, 777–808.

26. Natterer, F. Computerized tomography. In The Mathematics of Computerized Tomography; Springer: Berlin/Heidelberg, Germany, 1986; pp. 1–8.

27. Mesgarani, H.; Azari, Y. Numerical investigation of Fredholm integral equation of the first kind with noisy data. Math. Sci. 2019, 13, 267–268.

28. Al-Mahdawi, H.K. Solving of an Inverse Boundary Value Problem for the Heat Conduction Equation by Using Lavrentiev Regularization Method. J. Phys. Conf. Ser. 2021, 1715, 12032.

29. Tanana, V.P.; Vishnyakov, E.Y.; Sidikova, A.I. An approximate solution of a Fredholm integral equation of the first kind by the residual method. Numer. Anal. Appl. 2016, 9, 74–81.

30. Al-Mahdawi, H.K. Development of a numerical method for solving the inverse Cauchy problem for the heat equation. Bull. South Ural State Univ. Ser. Comput. Math. Softw. Eng. 2019, 8, 22–31.

31. Al-Mahdawi, H.K. BVP_PDE_Heat; GitHub Inc.: San Francisco, CA, USA, 2022; Available online: https://github.com/hssnkd1/BVP_PDE_Heat/blob/main/Landweber (accessed on 25 May 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).