1. Introduction

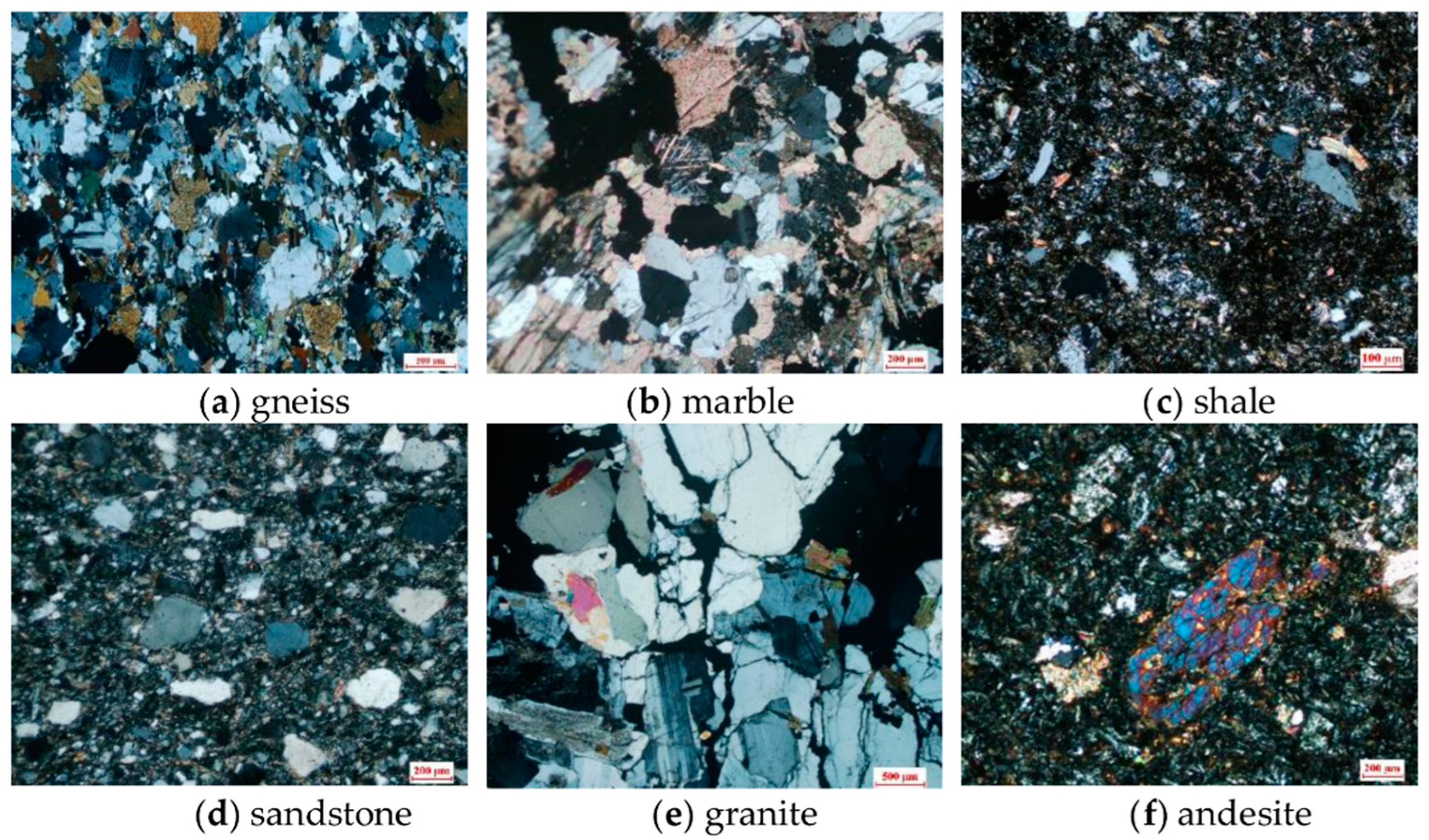

Rock type classification is an important task in geological engineering, rock mechanics, mining engineering, and resource exploration. In situ, rocks are mainly classified by examining their apparent features with auxiliary tools, such as a magnifying glass and a knife, although the appearance of rocks outdoors varies with illumination, shading, humidity, shape, etc. In contrast, because rocks contain different mineral compositions, features such as color, grain size, shape, internal cleavage, and structure are visible in rock thin-section images, which therefore carry specific petrographic information. In either case, classifying these two kinds of images from experience is challenging for geologists, as well as time-consuming and costly. Therefore, it is necessary to study how to classify rocks efficiently and accurately.

In the past, many scholars have studied different methods to identify rock types, which can be summarized into the following categories: physical test methods, numerical statistical analysis, and intelligent approaches.

X-ray diffraction (XRD) is a common physical test method that can quickly obtain rock mineral fractions, from which rock types can then be classified. Shao et al. [1] used X-ray powder crystal diffraction to accurately recognize feldspar, albite, and quartz in gneiss rock but could not identify metallic minerals, such as tourmaline, sphene, etc. Chi et al. [2] analyzed the whole-rock chemical composition by XRD and then calculated the rock impurity factor, magnesium factor, and calcium factor from the chemical composition to make the final classification of marble. However, due to the limitations of the XRD semiquantitative mineral analysis technique, such as inaccurate quantification of mineral components, its identification results still need to be verified by other methods.

Zhang et al. [3,4] utilized mathematical statistics theory to extract rock lithology features, and Sr and Yb were considered the classification characteristics of granite rock. Shaaban and Tawfik [5] adopted rough-set mathematical theory to classify six types of volcanic rock, and the proposed model prioritizes computation time and cost. Yin et al. [6] combined image processing and pattern recognition, investigated features of rock structures in FMI images, and developed a classification system with 81.11% accuracy. Młynarczuk et al. [7] evaluated four pattern recognition methods for classifying rock thin-section images and confirmed the nearest-neighbor algorithm with the CIELab data format as the best scheme. The methods mentioned above give good results for rock classification, but model performance depends on the knowledge level of the person applying them. With the convenience of digital image acquisition, it is now possible to accumulate large datasets. Thus, intelligent algorithms based on large datasets are widely applied to the classification of rock types. Unlike physical and numerical analysis methods, intelligent methods involve little or no human interaction and achieve better generalization.

Marmo et al. [8] introduced image-processing technology and an artificial neural network (ANN) to identify carbonate thin sections; the model showed 93.5% accuracy. Singh et al. [9] followed the same method as Marmo et al.: 27-dimensional numerical parameters were extracted as the neural network input, and the model reached 92.22% precision for classifying basaltic thin-section images. Chatterjee et al. [10] developed a support vector machine (SVM) algorithm in which 40 features were selected out of the original 189 as the model input, and six types of limestone were identified with 96.2% performance. Patel et al. [11] developed a robust model based on a probabilistic neural network (PNN) and nine color histogram features, and the overall classification error rate was below 6% on seven limestone rock types. Tian et al. [12] proposed an SVM identification model combined with principal component analysis (PCA) and obtained 97% classification accuracy. Khorram et al. [13] presented a limestone classification model in which six features obtained from segmented images were used as the input of the neural network, and the model achieved a higher R² value. Intelligent methods show advantages in rock type classification. However, it is worth noting that they rely heavily on the quality of the numerical features extracted by researchers, which directly determines the final performance of the model.

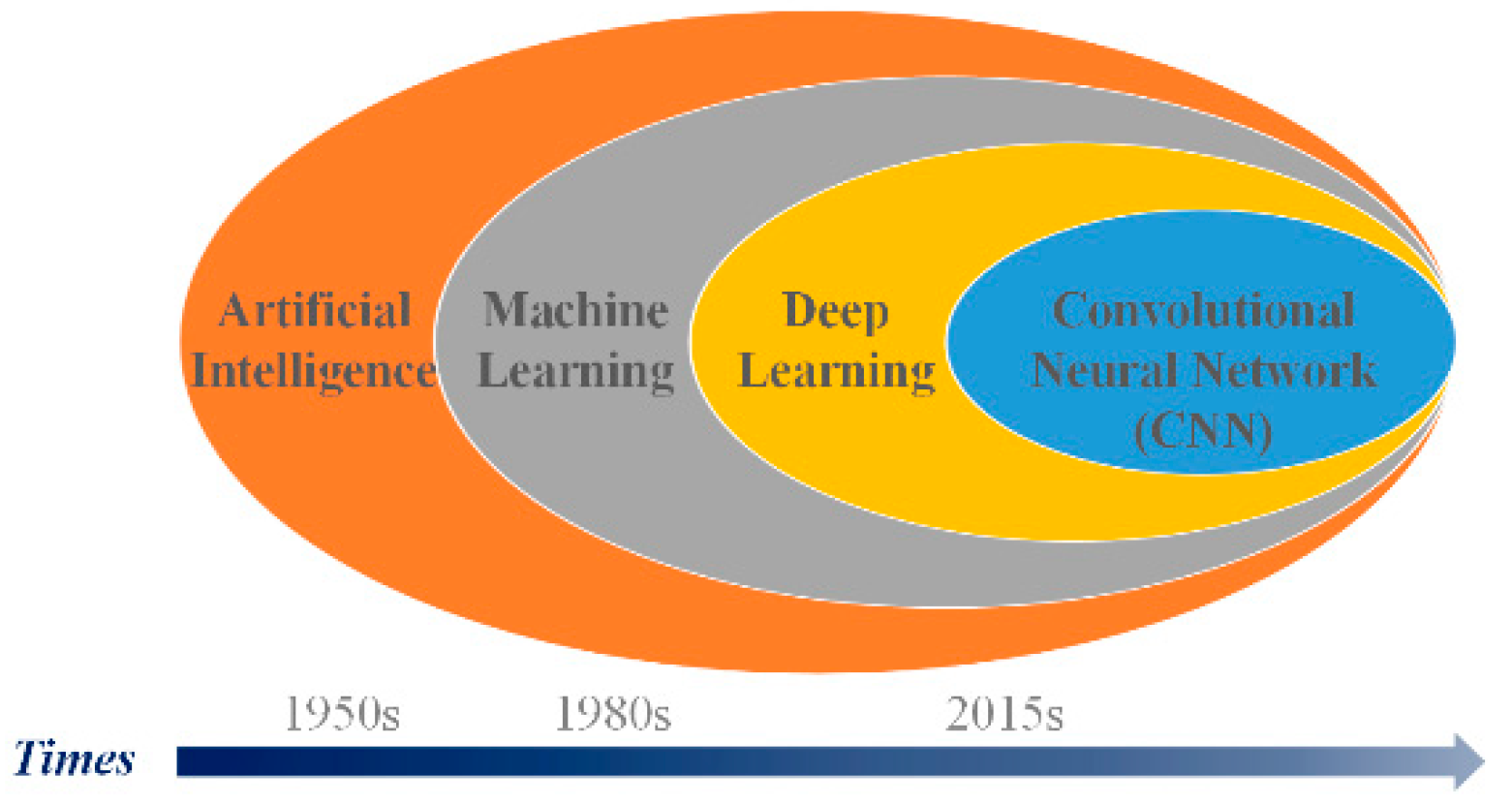

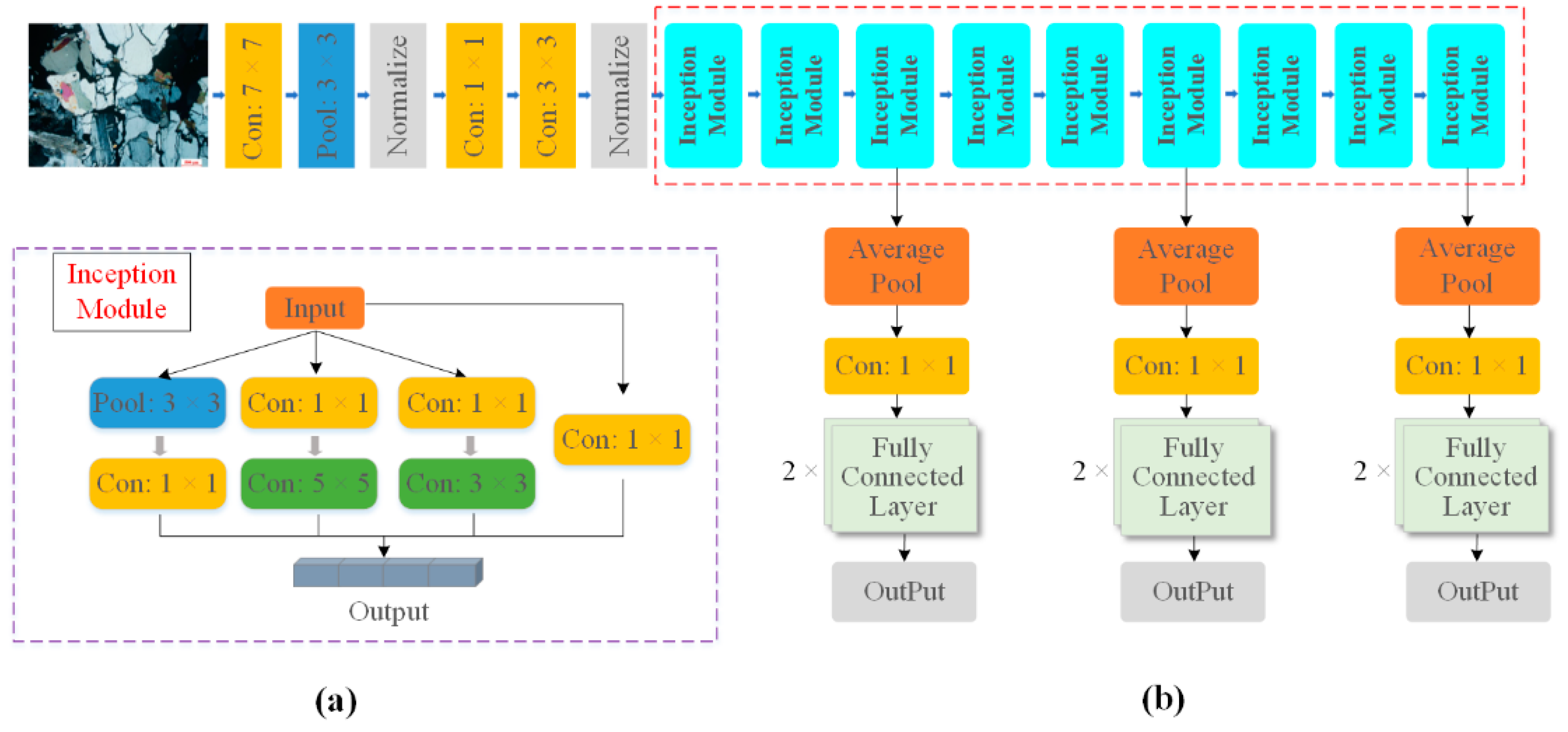

Convolutional neural networks (CNNs), another intelligent approach, also have great advantages in image processing. The earliest application of a CNN was designed to classify handwritten digits [14] and achieved remarkable success; since then, CNNs have been applied successfully in many fields, including object detection [15,16,17,18,19], face recognition [20], natural language processing [21,22], remote sensing [23,24], autonomous driving [25], and intelligent medicine [26,27,28].

Recently, many researchers have made great breakthroughs in transferring computer-based methods to rock class identification and classification. Li et al. [

29] used an enhanced TradaBoost algorithm to recognize microscopic sandstone images collected in different areas. Polat et al. [

30] transferred two CNNs to automatically classify six types of volcanic rocks and evaluated the effect of four different optimizers. Anjos et al. [

31] proposed four CNN models to identify three kinds of Brazilian presalt carbonate rocks using microscopic thin-section images. Samet et al. [

32] presented an image segmentation method based on the fuzzy rule, which used rock thin sections as input and returned mineral segmentation regions. Yang et al. [

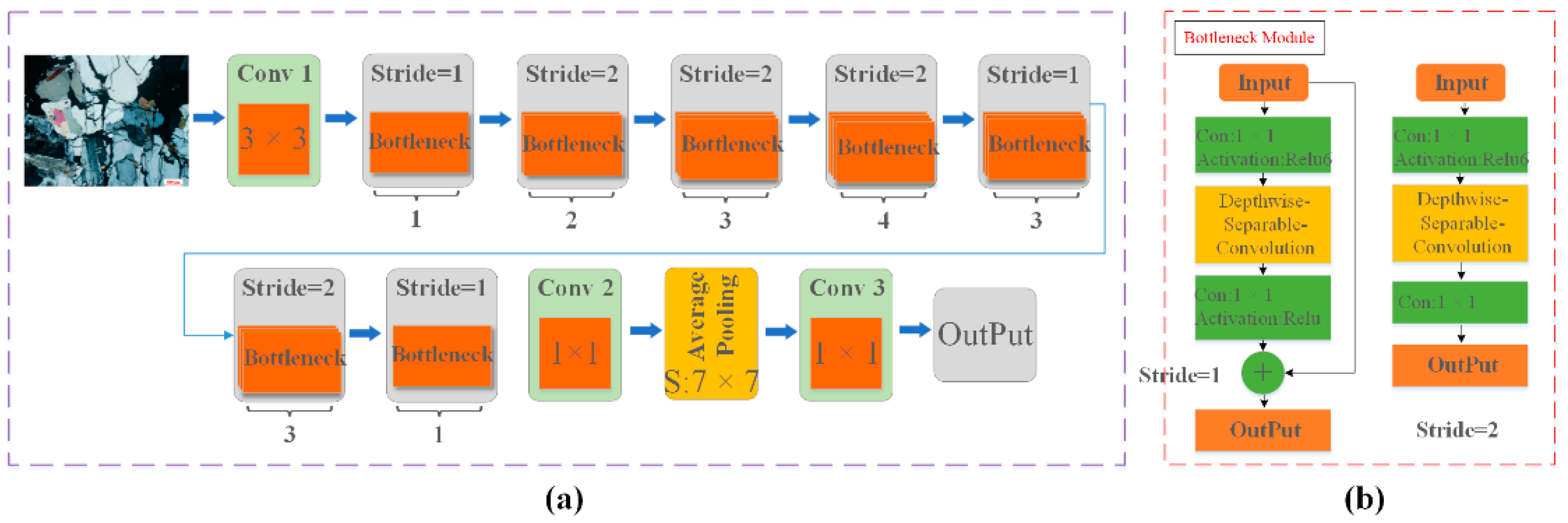

33] employed a ResNet50 neural network to classify five scales of rock thin-section images, and finally, the model obtained excellent performance. Xu et al. [

34] studied petroleum exploration and deep learning algorithms; the ResNet-18 convolutional neural network was selected to classify four types of rock thin-section images. Su et al. [

35] innovatively proposed a method that consisted of three CNNs, and the final prediction label was the combination of three CNN results. The proposed model performs well in classifying thirteen types of rock thin-section images. Gao et al. [

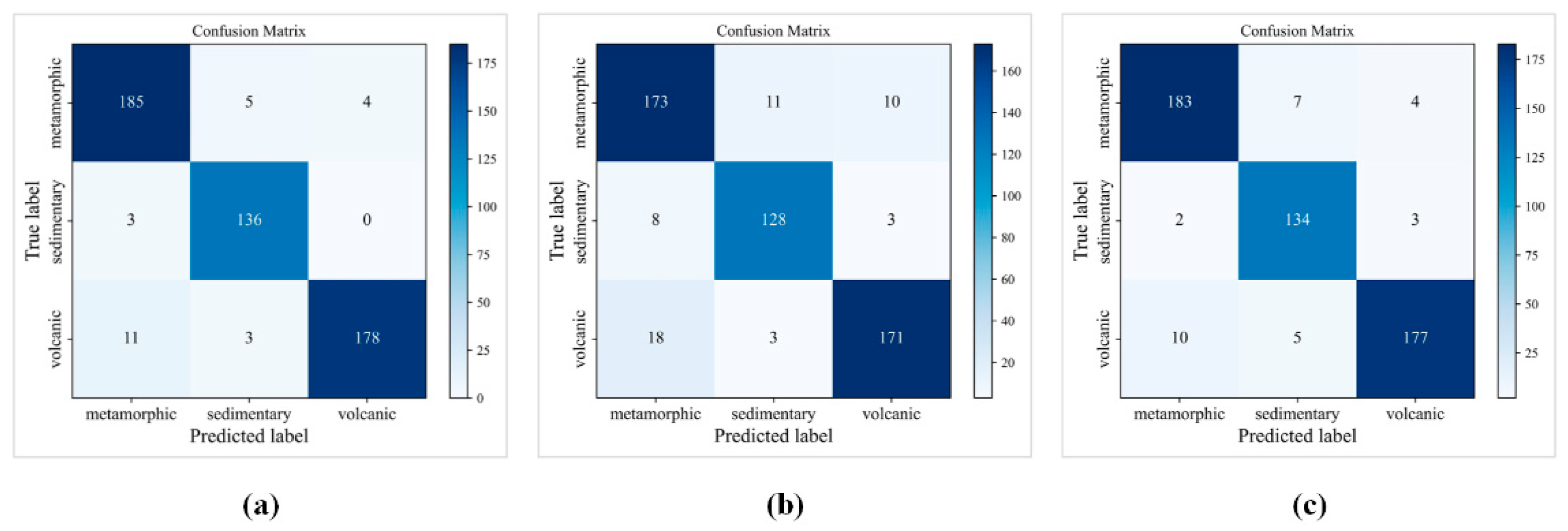

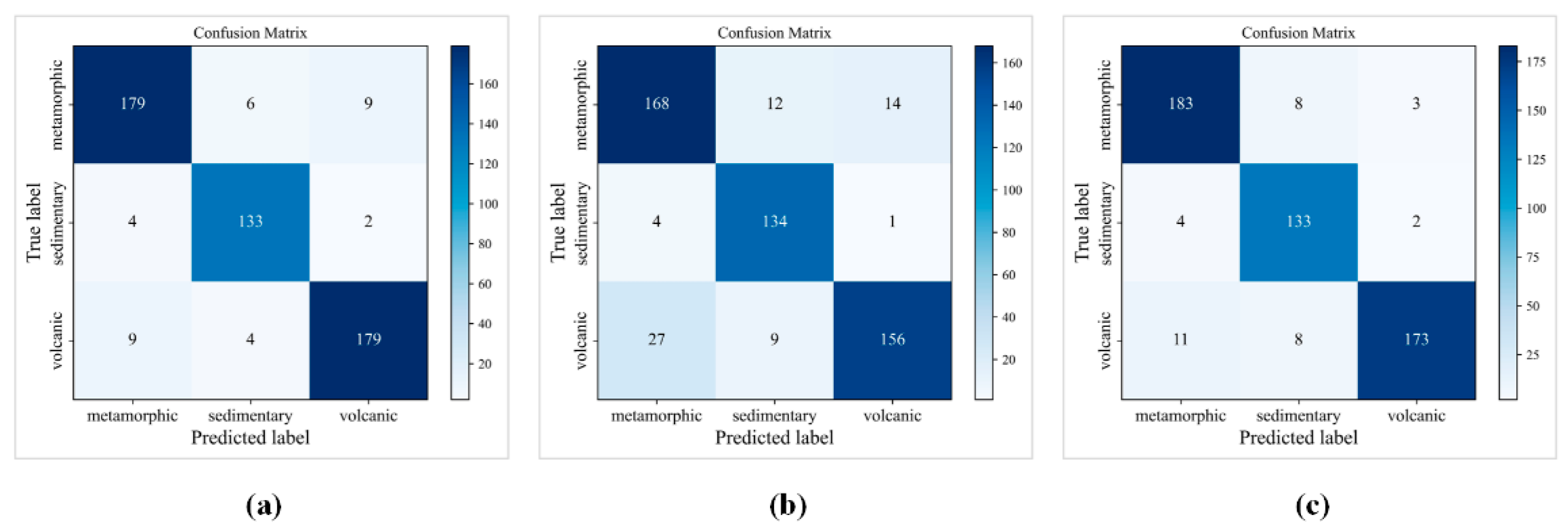

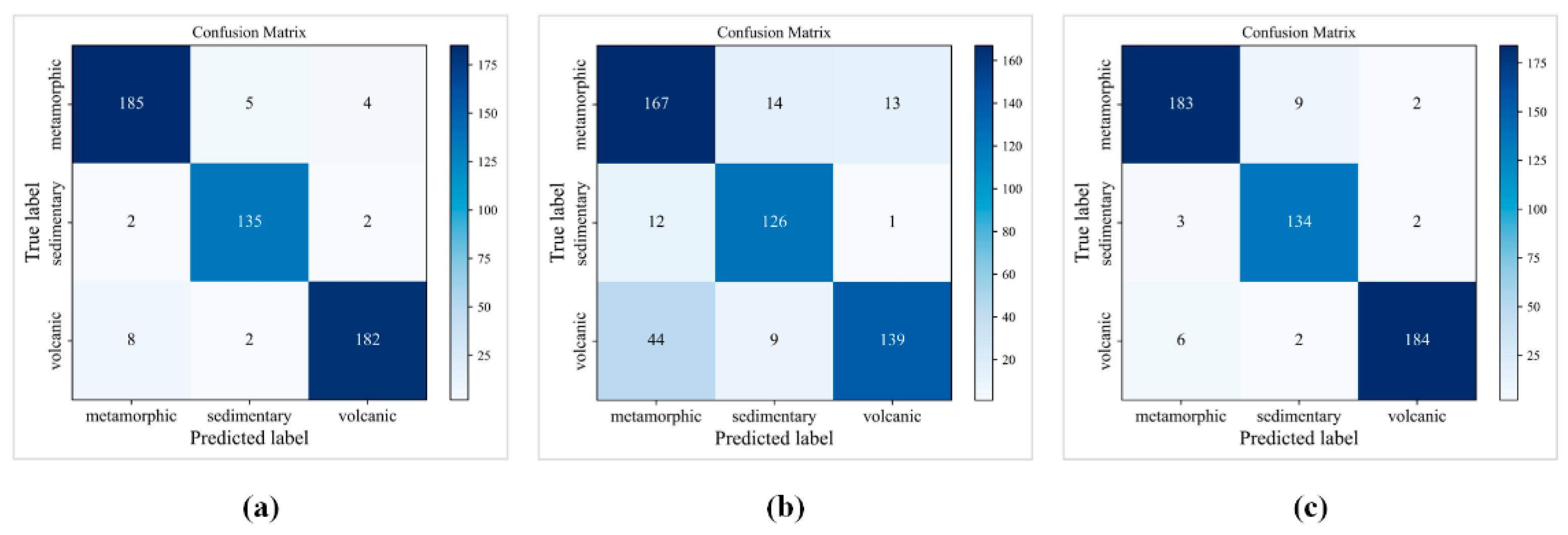

36] comprehensively compared shallow neural networks and deep neural networks on the classification of rock thin-section images, and the results show that deep neural networks outperform shallow networks. According to three main types of rock—metamorphic, sedimentary, and volcanic rock—Ma et al. [

37] studied an enhanced feature extraction CNN model based on SeNet [

38], and the model achieved 90.89% accuracy on the test dataset. Chen et al. [

39] introduced ResNet50 and ResNet101 neural networks to construct a classifier to complete the identification of rock thin-section images, reaching 90.24% and 91.63% performance, respectively. In addition, some other researchers have studied rock type classification based on datasets obtained by digital cameras instead of microscopic images [

40,

41,

42].

All the methods mentioned above provide valuable theoretical support for the automatic classification of rocks, but many focus on only a small number of rock classes or on the subclasses of the three major rocks. To the best of our knowledge, most existing studies have focused on the classification accuracy achieved by the neural network rather than on how to train networks to improve model performance. Additionally, compared with general images that are easily distinguished by a CNN, thin-section images of rocks are special: the mineral crystals in a thin-section image are not uniform in proportion, and semantic-level feature information, such as the particle size and shape contour of mineral crystals, has no clear definition. Moreover, mineral crystals fill the whole image, so there is no exact distinction between background and foreground. Thus, it is essential to study the training methodologies of CNN models.

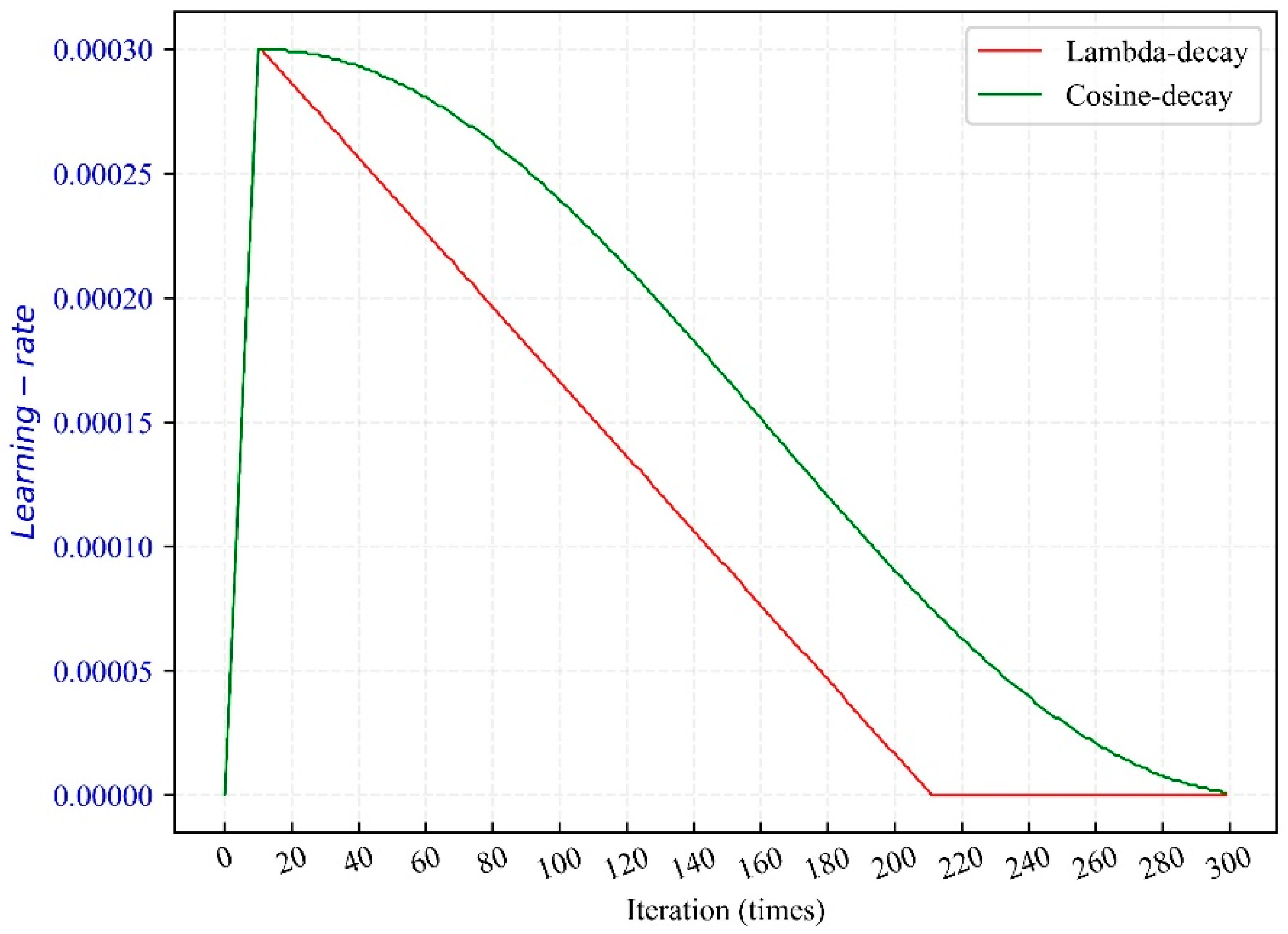

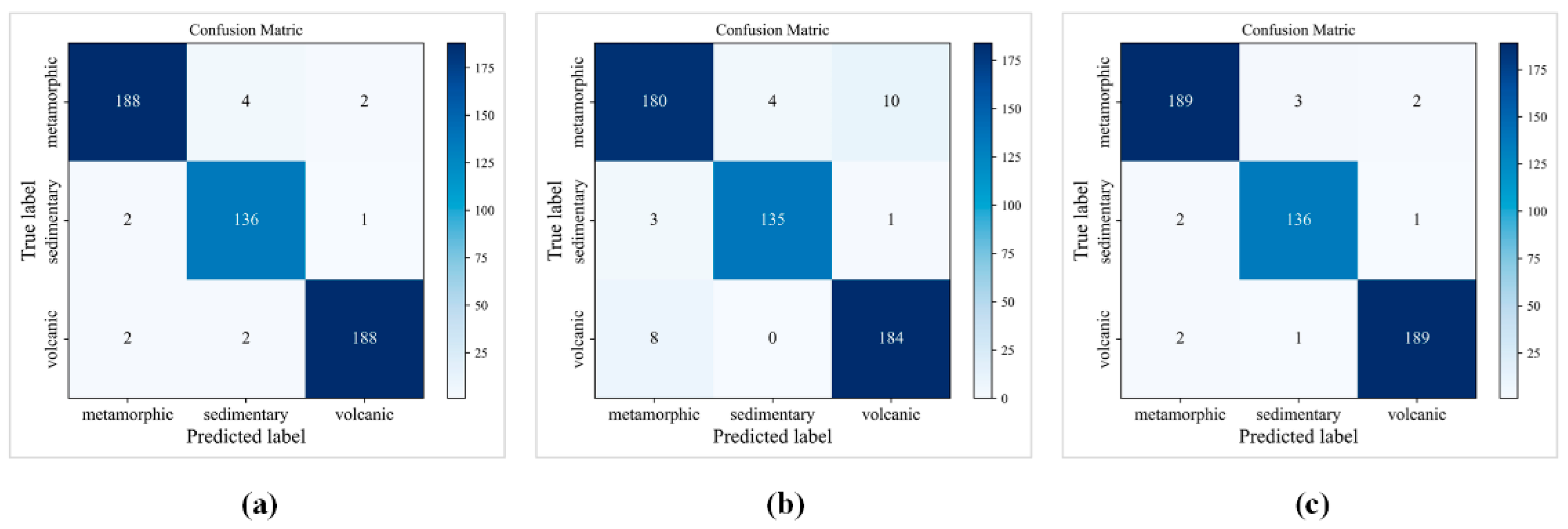

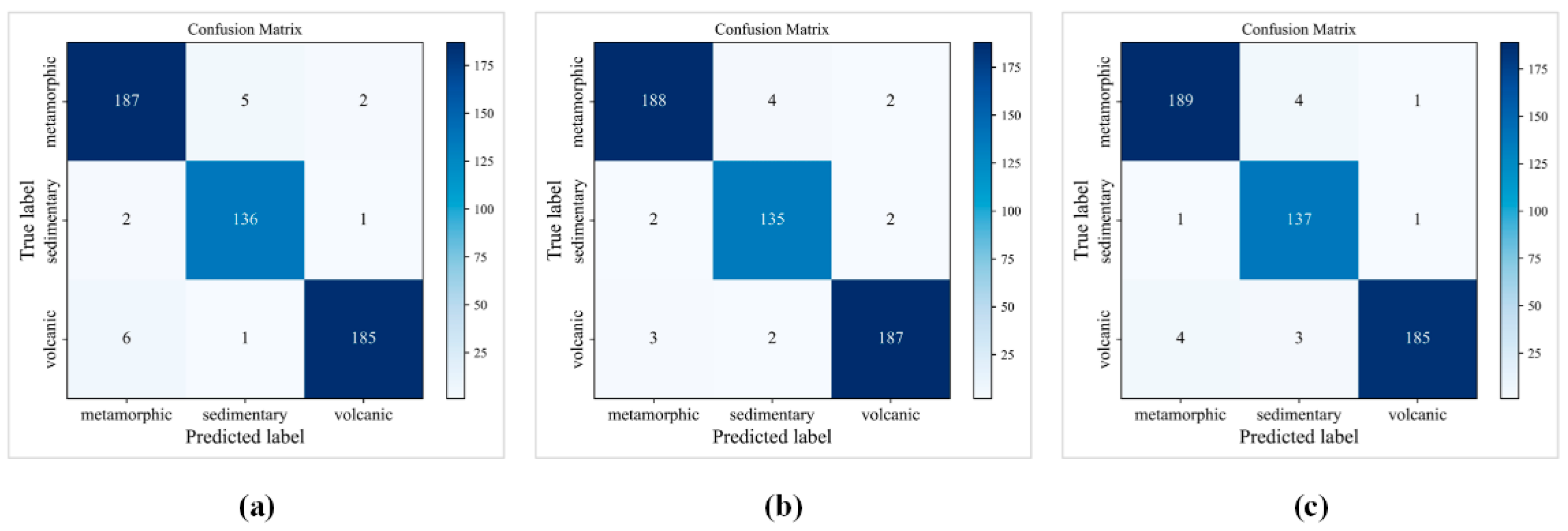

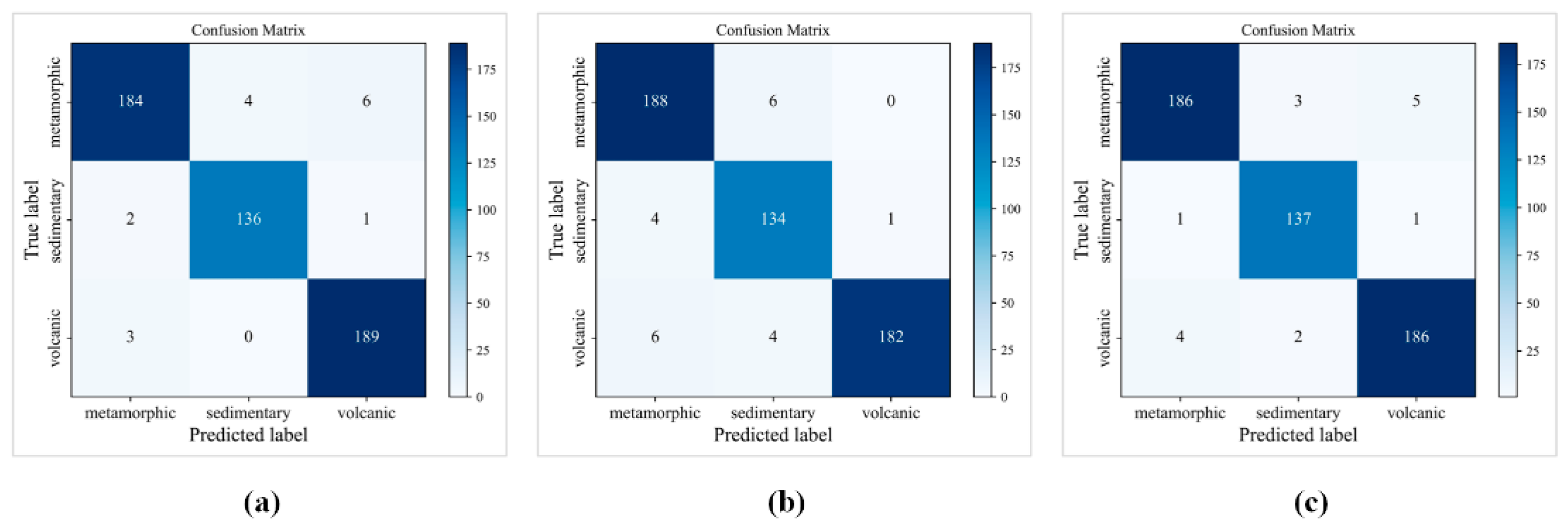

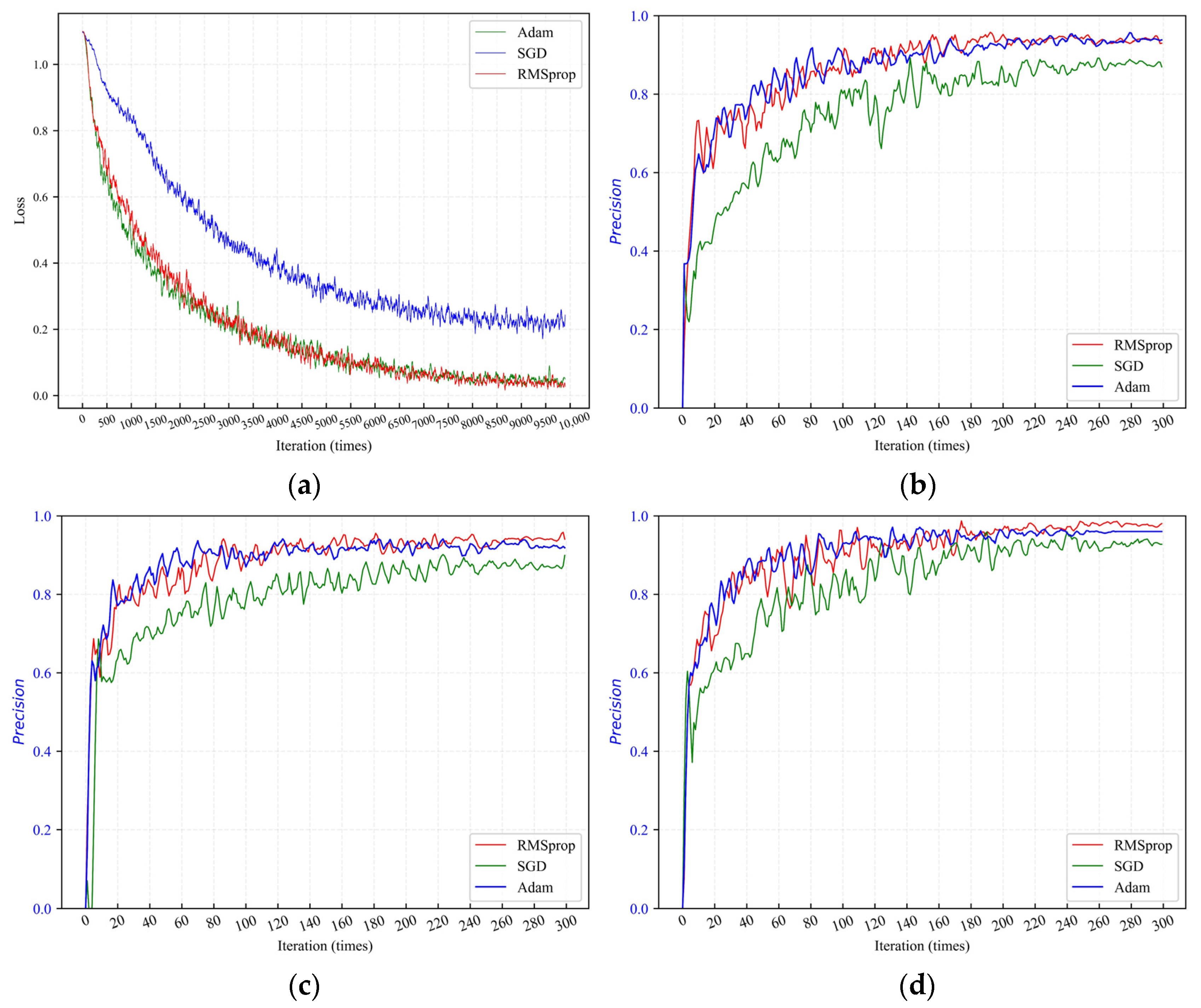

Therefore, in this paper, the three main kinds of rock and their subclasses were selected as the research objects, both to systematically evaluate the classification precision of four CNN models for the three rock types and to discuss the influence of the optimization algorithm (RMSprop, SGD, and Adam) and the learning-rate decay mode (cosine and lambda learning-rate decay schedules) on the model’s accuracy during network training. Finally, the optimal neural network model and the best training techniques are summarized, providing a reliable reference for automatic rock classification.
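To make the two learning-rate decay modes named above concrete, the following Python sketch computes the learning rate at a given training step under a cosine schedule and under a lambda-style schedule (a base rate multiplied by a user-supplied function of the step). It is only an illustration under stated assumptions, not the training code of this study: the function names, the base rate of 0.01, the 100-step horizon, and the 0.95 decay factor are hypothetical values chosen for the example.

```python
import math


def cosine_decay(step, total_steps, lr_max=0.01, lr_min=0.0):
    """Cosine decay: lr_max at step 0, falling smoothly to lr_min at total_steps.

    lr_max, lr_min, and total_steps are illustrative assumptions.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    # Clamp the step so the schedule stays at lr_min after the horizon.
    progress = min(max(step, 0), total_steps) / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


def lambda_decay(step, lr_base=0.01, factor_fn=lambda s: 0.95 ** s):
    """Lambda-style decay: the base rate scaled by factor_fn(step).

    The exponential factor 0.95 ** step is a hypothetical choice; any
    function of the step could be supplied instead.
    """
    return lr_base * factor_fn(step)


if __name__ == "__main__":
    # Print both schedules at a few steps to compare their shapes.
    for step in (0, 25, 50, 75, 100):
        print(step, cosine_decay(step, 100), lambda_decay(step))
```

The cosine schedule decreases slowly at the start and end of training and fastest in the middle, whereas the shape of the lambda schedule depends entirely on the function supplied.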

The structure of this study is as follows: Section 2 introduces detailed information about the dataset, the theoretical background of the four CNN algorithms, and the learning-rate adjustment methods. Section 3 describes the model training requirements and analyzes the results of the trained models. Section 4 evaluates the performance of the four algorithms, the optimizers, and the learning-rate decay modes. Furthermore, experimental verification on another database is carried out to validate the effect of the best-trained model. Finally, the optimum model, optimization algorithm, and learning-rate adjustment mode are obtained.