Abstract

Data representation is of significant importance in minimizing multi-label ambiguity. While most researchers intensively investigate label correlation, research on enhancing model robustness is still preliminary. Low-quality data is one of the main reasons that model robustness degrades. Targeting cases with noisy features and missing labels, we develop a novel method called robust global and local label correlation (RGLC). In this model, subspace learning reconstructs intrinsic latent features that are immune to feature noise. Manifold learning ensures that the outputs obtained by matrix factorization are similar in the low-rank latent label space if the latent features are similar. We examine the co-occurrence of global and local label correlation with the constructed latent features and latent labels. Extensive experiments demonstrate that the classification performance with integrated information is statistically superior to a collection of state-of-the-art approaches across numerous domains. Additionally, the proposed model shows promising performance on multi-label classification when noisy features and missing labels occur, demonstrating its robustness.

Keywords: multi-label classification; label correlations; noisy features; missing labels; robustness

MSC: 93B51; 14N20; 46T10

1. Introduction

Multi-label learning [1,2,3] aims at learning a mapping from features to labels and determines a group of associated labels for unseen instances. The traditional “is-a” relation between instances and labels has thus been upgraded to a “has-a” relation. With the advancement of automation and networking, the cost of continuously collecting data has decreased significantly, resulting in a collection of advanced algorithms to cope with concept cognition [4,5,6,7,8,9,10]. However, collecting massive high-quality data is a non-trivial task, as unexpected device failure or data falsification happens from time to time. Therefore, how to effectively cope with flawed multi-label data is frequently discussed [11,12,13,14].

Label correlations are frequently exploited as auxiliary information for classifier construction, as they approximately recover the joint probability density among labels. For example, the label “bee” is more likely to appear if the labels “flower” and “butterfly” are present in a picture. A label correlation is global if the label relation holds universally, and local otherwise. The label correlation of “flower, butterfly, and bee” is global if the pictures are natural scenes. It degenerates to a local correlation if the picture shows flowers in a house without butterflies and bees. As a result, the premise that global and local label correlations co-occur is more reasonable. However, both label correlations are vulnerable to feature noise and missing labels, as they are inherent in the observed data itself. Recent papers focus on label correlation. Guo et al. [15] utilized Laplacian manifold regularization to recover the missing labels. Sun et al. [16] imposed a sparse constraint and linear self-recovery to deal with weakly supervised labels and noisy features. Zhang et al. [17] addressed the noise in latent features and latent labels via bi-sparsity regularization. Lou et al. [18] introduced a fuzzy weighting strategy on both observed features and instances to resist the impact of noisy features. Fan et al. [19] adopted manifold learning to exploit both global and local label correlations with selected features. Nevertheless, most of them examine model robustness explicitly in either the feature space or the label space. The classification performance under the concurrence of noisy features and missing labels will be more reliable if we strengthen the robustness of both global and local label correlations in the latent data representation.

For multi-label classifiers, the evaluation metrics emphasize the accuracy and ranking of relevant labels [20]. Either from an instance perspective or a label perspective, the minority distributions of related labels amplify sensitivity to model parameter selections. In other words, inaccurate information, including feature noise [21,22] and label missing [23,24,25], may skew the estimation of model parameters. Subspace learning is an effective method to uncover the underlying structural representations. The label correlation estimation in subspace gains better credibility due to the removal of distractive information. Chen et al. [26] learned a shared latent projection via linear low-dimensional transformation, which is solved by a generalized eigenvalue problem. Chen et al. [27] adopted a neural factorization machine on both the feature side and label side to exploit feature and label correlations. Wang et al. [28] restrained the subspace dimension by relaxing the performance difference to a pre-trained model and preserving the largest parameters in the corresponding round. Huang et al. [29] sought a latent label space by minimizing the distance difference of pairwise label correlation constraint on explicit and implicit labels. However, these approaches neither examine the potentially flawed data nor take full advantage of the concurrence of global and local label correlation.

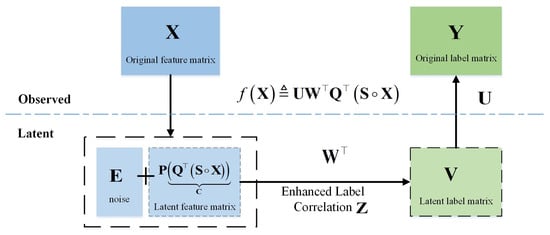

Based on subspace learning and manifold learning, we present a novel method called Robust Global and Local label Correlation (RGLC) to leverage both global and local label correlation extracted from the latent representation, as illustrated in Figure 1. Inspired by [30,31], subspace learning is employed to reconstruct a clean feature representation. As the evaluation metrics work in the observed label matrix, we generate latent label representation via matrix factorization and train a model from noise-free feature representation to latent label representation. During this training, we coupled with global and local label learning. Compared with existing multi-label algorithms, the contributions of RGLC are enumerated as follows:

Figure 1.

Framework of RGLC. Instead of directly learning global and local label correlations Z from the observed feature matrix X to observed label matrix Y, we attempt to train the RGLC (i.e., ) model from latent feature matrix (i.e., ) to latent label matrix (i.e., ). It exploits global and local label correlations Z on latent features and labels with linear mapping .

- For the first time, we exploit both global label correlations and local label correlations to regularize the learning from latent features to latent labels. The integrated intrinsic label correlation in RGLC robustly handles multi-label classification problems in different fields.

- The subspace learning and matrix decomposition are jointly incorporated to deduce latent features and latent labels from flawed multi-label data. The two modules strengthen the robustness of RGLC when completing multi-label classification tasks with noisy features and missing labels.

- We intensively examine RGLC from different aspects of modularity, complexity, convergence, and sensitivity. The satisfactory performance demonstrates the superiority of enhanced global and local label correlations.

The remainder of this paper is organized as follows. Section 2 reviews related work on robust subspace learning, manifold regularization, and global and local label correlation. Section 3 details the components of the proposed multi-label model (RGLC), and Section 3.2 explains its optimization. Extensive comparisons are reported and analyzed in Section 4. We discuss the characteristics of RGLC in Section 5. Finally, Section 6 summarizes the findings and value of the proposed algorithm and suggests future research directions.

2. Related Work

2.1. Robust Subspace Learning

Subspace learning is an effective strategy to strengthen model robustness. As far as the goal of subspace learning, there are roughly three solutions in single-view based multi-label learning [32]: (i) only reduce the feature matrix to ; (ii) only reduce the label matrix to ; and (iii) reduce the label matrix and feature matrix to and , respectively. The commonness of these methods is that the generated subspace is compact with the low-rank property. Recently, many approaches prefer the embedded strategy, which means subspace learning and classifier construction are optimized alternatively. For the first category, wrapping multi-label classification with label-specific features generation model (WRAP) [33] learnt the linear model from to and the embedded label-specific features simultaneously. For the second category, latent relative label importances for the multi-label learning model (RLIM) [34] learns the relative label importance while training the classifier from to . For the third category, the independent feature and label components model (IFLC) [35] learns the classifier from the latent feature subspace (i.e., ) to the latent label subspace (i.e., ) by maximizing the independence individually and maximizing correlations jointly. In this paper, we employ different strategies for latent representation learning on the feature and label sides. Concretely, the latent feature subspace learning takes an embedded strategy by constructing a linear regression from to without the consideration of label correlation. The latent label subspace learning takes an embedded method by constructing a linear regression from to .

2.2. Manifold Regularization

Manifold regularization [36] constrains the distribution of output similarity based on instance similarity. Cai proposed feature manifold learning and sparse regularization for the multi-label feature selection model (MSSL) [37], and it preserved feature geometric structure by feature manifold learning. Other scholars proposed a manifold regularized discriminative feature selection (MDFS) model [38]. It designed a feature embedding method that retains local label correlation via manifold regularization. There is also a Bayesian model with label manifold regularization and label confidence constraint (BM-LRC) [39]. It applied label manifold regularization on topic and document levels to estimate label similarity for multi-label text classification. Feng considered a regularized matrix factorization for the multi-label learning (RMFL) model [40]. It preserved topological information in local features through label manifold regularization. In this paper, we employ manifold regularization on latent label subspace learning to optimize the coefficients in the linear regression model.

2.3. Global and Local Label Correlation

Effective utilization of global and local label correlation is beneficial to improving multi-label classification if label information is assumed to be plausible. We explore accurate label correlations from the label subspace constrained by manifold regularization. Recent works [41,42,43] focus on local label correlations. Multi-label learning with global and local label correlation (GLOCAL) [31] is proposed for the first time, which attempts to deal with multi-label missing labels by exploiting both global and local label correlation in latent label subspace. The global and local label manifold regularizers use the pairwise label correlation. Global and local multi-view multi-label learning (GLMVML) [44] extended GLOCAL to a multi-view situation. Global–local label correlation (GLC) [45] solved the partial multi-label learning problem by simultaneously taking label coefficient matrix and consistency among the coefficient matrix as global and local label correlation regularizers, respectively. In this paper, we learn both global label correlations and local label correlations in latent label subspace, which constitutes a component of objective functions for the multi-label classifier.

3. Materials and Methods

We recall some essential notations here. Given multi-label instance , where is the original feature matrix, d is the feature count, and n is the instance count. constitutes the ground-truth label matrix, where denotes the label association information of across label space, with if is associated with the j-th label, and otherwise. l is the label count.

Let and denote two transformation matrices imposed on and , respectively. We explain the model components by learning a latent feature matrix () first (Section 3.1.1) and then learning a latent label matrix () (Section 3.1.2), which is regularized by global and local manifold (Section 3.1.3) and learning latent label correlations Z (Section 3.1.4). The model output for unseen instances is presented finally (Section 3.1.5). The pipeline also applies to the case with noisy features and missing labels. We explain the problem solving in Section 3.2.

3.1. Proposed Model

3.1.1. Learning Latent Features

Inspired by [30], we seek a latent feature matrix which is the low-rank representation of with both informative and discriminative information by imposing reconstruction constraints. We obtain informativeness by imposing the following reconstruction constraint:

where transforms the original feature matrix to the latent feature matrix and reconstructs the original feature matrix from latent feature matrix . To ensure a non-trivial solution, we add orthogonal constraint on , denoted as . separates the noise from feature matrix . The desired latent feature matrix should minimize the classification error, and we assume that the classifier from the latent feature matrix to label matrix is a linear regression model. The smaller the regression loss is, the better the reconstructed feature becomes. We require the simplicity of and , as they do not participate in the procedure of latent label learning. We use indicator matrix and to simulate the feature () and missing label, respectively. Hence, we have the objective function as:

where denotes the Frobenius norm, is the weight of the classifier and , are all tradeoff parameters balancing the complexity between and , . The elements and in and hold if they are not noisy features and missing labels, and otherwise. We do not consider label correlation here, as the original label matrix may contain the missing labels. We learn the latent features () in a label-by-label fashion. For simplicity, we denote:

3.1.2. Learning Latent Labels

The ground-truth label matrix is transformed to the latent label matrix by the transformation matrix satisfying , where . The classifier

is learnt from the latent feature matrix to the latent label matrix . We assume the mapping is also linear. Therefore, we obtain the latent label matrix by minimizing the reconstruction error of latent labels and square loss of the classifier prediction performance described as:

where if is observable, and otherwise. is a linear transformation from to , and is a transformation matrix from to . is the regularization item.

3.1.3. Global and Local Manifold Regularizer

Applying global and local manifold regularizers on label representation is conducive to distinguishing the similarity of different instances of latent features in both the global and local sense. The two regularization terms can guide the model to exploit global and local label correlation. Following [31], we compute global and local manifold regularizers in a pairwise way. is the output set of the classifier based on different groups of instances and the classifier is trained from the latent feature to the latent label . denotes a group of instance sets with latent features, where and are the th and th latent features containing and instances. and are corresponding latent label representations of and , respectively. We define the global manifold regularizer as:

which should be minimized. is the label outputs on all instances, where . is the Laplacian matrix of , where denotes the diagonal of matrix and denotes a vector of ones. is a global label correlation matrix measured by the cosine similarity among all latent labels (denoted as ), where . is the matrix trace. Similarly, for the ith local manifold regularizer, we have:

which should also be minimized. is the label output of ith groups of instances. For simplicity, we assume that each local similarity corresponds to only one set of instances, which means the corresponding intersection of instances between two arbitrary and is empty. is the Laplacian matrix of , where denotes the diagonal of the matrix and denotes a vector of ones. is a local label correlation matrix measured by the cosine similarity of ith local label subset (denoted as ), where . is the matrix trace. Thus, (5) can be rewritten as:

where is an indicator matrix with if is observable and otherwise.
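The pairwise construction described in this subsection (cosine similarity between labels, a Laplacian built from that similarity, and a trace regularizer on the classifier outputs) can be illustrated with a minimal NumPy sketch. The matrix orientation (labels × instances), the function names, and the toy data are assumptions made for illustration only; they are not taken from the RGLC implementation.

```python
import numpy as np

def cosine_similarity_rows(V, eps=1e-12):
    """Cosine similarity between the rows of V (one row per label)."""
    norms = np.linalg.norm(V, axis=1, keepdims=True)
    Vn = V / np.maximum(norms, eps)  # guard against all-zero rows
    return Vn @ Vn.T

def laplacian(S):
    """Graph Laplacian diag(S 1) - S of a symmetric similarity matrix S."""
    return np.diag(S @ np.ones(S.shape[0])) - S

def manifold_regularizer(F, L):
    """Trace regularizer tr(F^T L F) for outputs F (labels x instances)."""
    return float(np.trace(F.T @ L @ F))

# Toy data (hypothetical): 4 labels, 30 instances.
rng = np.random.default_rng(0)
Y = (rng.random((4, 30)) < 0.4).astype(float)  # binary label matrix
F = rng.normal(size=(4, 30))                   # classifier outputs

S = cosine_similarity_rows(Y)
L = laplacian(S)
reg = manifold_regularizer(F, L)

# Equivalent pairwise form: 0.5 * sum_jk S_jk * ||f_j - f_k||^2.
pairwise = 0.5 * sum(
    S[j, k] * np.sum((F[j] - F[k]) ** 2)
    for j in range(4) for k in range(4)
)
```

The pairwise form makes the intent of the regularizer visible: the outputs of two strongly correlated labels are pulled toward each other, while weakly correlated labels are left largely unconstrained.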

3.1.4. Learning Label Correlations

The cosine similarity measured on the original label matrix may be dubious when a missing label occurs. One alternative solution is to learn the Laplacian matrix on , which stores the fluctuations on pairwise label correlations when an arbitrary label changes. Considering the symmetric positive definite property of the Laplacian matrix, we decompose as , with . To avoid a trivial solution, we add the diagonal constraint for all . To unify the optimization processing on label correlation, we assume the global label correlation matrix is a linear combination of local label correlation matrices. Thus, the global label Laplacian matrix is the linear combination of the local label Laplacian matrix. Then, (8) is rewritten as:

where symbol ∘ denotes Hadamard (element-wise) product. , and are tradeoff parameters.

3.1.5. Predicting Labels for Unseen Instances

With the latent feature matrix , the latent label matrix and the classifier obtained from Section 3.1.1, Section 3.1.2, Section 3.1.3 and Section 3.1.4, we present the model output here. For an unseen instance , the label prediction in the original label space is denoted as:

3.2. Optimization

3.2.1. Solving (2)

We use the Alternating Direction Method of Multipliers (ADMM) to solve the objective function in (2). By introducing constraint , the objective function is renewed as:

The augmented Lagrangian function is:

where , are Lagrange multipliers, denotes the Frobenius inner product and is a penalty parameter.

Update A (Line 3 in Algorithm 1):

Let , we have:

Update P (Line 4 in Algorithm 1):

Problem (16) is a classic orthogonal Procrustes problem [46], given .
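For readers unfamiliar with the orthogonal Procrustes problem, the following sketch shows its standard SVD-based solution on a generic instance, min ‖A − BP‖_F subject to PᵀP = I. The matrices `A` and `B` and the function name are hypothetical stand-ins; the actual terms entering Problem (16) are not reproduced here.

```python
import numpy as np

def procrustes_orthonormal(C):
    """Return P with orthonormal columns that maximizes trace(P.T @ C).

    With the thin SVD C = U diag(s) Vt, the maximizer is P = U @ Vt.
    """
    U, _, Vt = np.linalg.svd(C, full_matrices=False)
    return U @ Vt

# Generic instance: recover an orthogonal P from A = B @ P_true.
rng = np.random.default_rng(0)
B = rng.normal(size=(50, 6))
P_true, _ = np.linalg.qr(rng.normal(size=(6, 6)))  # random orthogonal matrix
A = B @ P_true

# min ||A - B P||_F^2 over orthogonal P is equivalent to
# max trace(P.T @ (B.T @ A)), because ||B P||_F does not depend on P.
P_hat = procrustes_orthonormal(B.T @ A)
```

The same function also handles a tall `C`, in which case the returned matrix has orthonormal columns rather than being square.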

Update Q (Line 5 in Algorithm 1):

Let , we have:

where . Q is updated by solving a Sylvester equation [47].
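As an illustration of this step, a Sylvester equation of the generic form MQ + QN = C can be solved for small problems by vectorization with Kronecker products. The coefficient matrices `M`, `N`, and `C` below are random placeholders, not the quantities derived from the RGLC objective, and the dense approach is only a sketch.

```python
import numpy as np

def solve_sylvester_dense(M, N, C):
    """Solve M @ Q + Q @ N = C for Q via column-major vectorization.

    Uses vec(M Q) = (I kron M) vec(Q) and vec(Q N) = (N^T kron I) vec(Q).
    Suitable only for small matrices, since the linear system has
    (m * n) unknowns.
    """
    m, n = C.shape
    K = np.kron(np.eye(n), M) + np.kron(N.T, np.eye(m))
    q = np.linalg.solve(K, C.flatten(order="F"))
    return q.reshape((m, n), order="F")

rng = np.random.default_rng(0)
A = rng.normal(size=(4, 4))
B = rng.normal(size=(3, 3))
M = A @ A.T + np.eye(4)   # symmetric positive definite
N = B @ B.T + np.eye(3)   # symmetric positive definite
C = rng.normal(size=(4, 3))

Q = solve_sylvester_dense(M, N, C)
residual = np.linalg.norm(M @ Q + Q @ N - C)
```

For larger matrices, a dedicated routine such as `scipy.linalg.solve_sylvester` avoids forming the Kronecker system explicitly.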

Update R (Line 6 in Algorithm 1):

Let , we have:

where .

Update E (Line 7 in Algorithm 1):

which has the closed-form solution [48] shown below:

where , is the sign function, which returns 1 if the input is greater than 0, 0 if the input is 0, and −1 otherwise.
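A closed-form update that involves the sign function is typically the element-wise soft-thresholding (shrinkage) operator, which solves min_E τ‖E‖₁ + ½‖E − G‖²_F. The sketch below assumes that this ℓ1 form is the one intended; the threshold `tau` and the input `G` are illustrative placeholders.

```python
import numpy as np

def soft_threshold(G, tau):
    """Element-wise shrinkage: sign(g) * max(|g| - tau, 0)."""
    return np.sign(G) * np.maximum(np.abs(G) - tau, 0.0)

G = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
E = soft_threshold(G, tau=1.0)

# Brute-force check on a grid for a single entry g = 3.0, tau = 1.0:
# the minimizer of tau * |e| + 0.5 * (e - g)^2 should be e = 2.0.
grid = np.linspace(-5.0, 5.0, 100001)
objective = 1.0 * np.abs(grid) + 0.5 * (grid - 3.0) ** 2
e_best = grid[np.argmin(objective)]
```

Entries whose magnitude is below the threshold are set to zero, which is what lets the noise term stay sparse.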

Update , , and (Line 8 in Algorithm 1):

where .

3.2.2. Solving (9)

We employ the alternating minimization to solve the objective function in (9). We implement the solving for U, V, and W via manopt toolbox [49].

Update (Line 13 in Algorithm 1):

for each . We solve it with projected gradient descent. is calculated by solving the gradient of :

To satisfy the diagonal constraint, we project the jth row of Z onto the unit sphere, i.e., we divide it by its Euclidean norm.
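A projected gradient step of this kind can be sketched as follows, assuming that the constraint requires every row of Z to have unit Euclidean norm (equivalently, a unit diagonal of ZZᵀ). The gradient used here is a random placeholder rather than the gradient of the RGLC objective, and the function names are hypothetical.

```python
import numpy as np

def project_rows_to_unit_norm(Z, eps=1e-12):
    """Rescale every row of Z to unit Euclidean norm."""
    norms = np.linalg.norm(Z, axis=1, keepdims=True)
    return Z / np.maximum(norms, eps)  # eps guards against zero rows

def projected_gradient_step(Z, grad, step):
    """One gradient step followed by projection onto the constraint set."""
    return project_rows_to_unit_norm(Z - step * grad)

rng = np.random.default_rng(0)
Z = project_rows_to_unit_norm(rng.normal(size=(5, 3)))
grad = rng.normal(size=(5, 3))   # placeholder gradient
Z_new = projected_gradient_step(Z, grad, step=0.1)
```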

Update V (Line 15 in Algorithm 1):

is calculated by solving the gradient of :

Update U (Line 16 in Algorithm 1):

is calculated by solving the gradient of :

Update W (Line 17 in Algorithm 1):

is calculated by solving the gradient of :

3.3. Computational Complexity Analysis

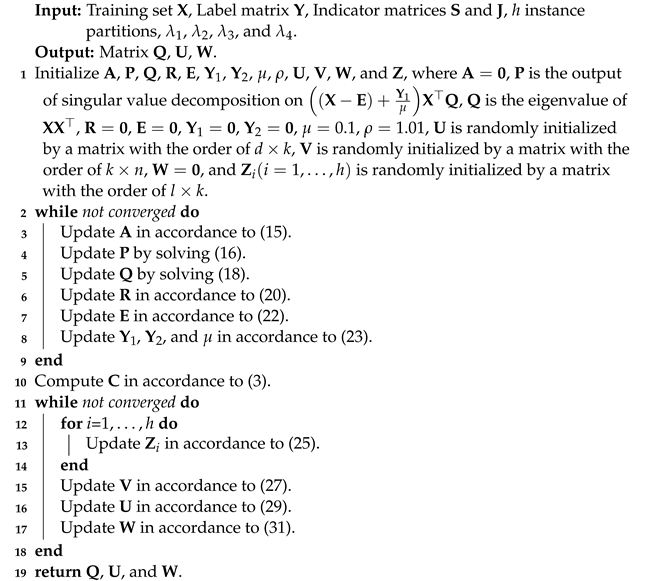

This section analyzes the computational complexity of proposed RGLC (see Algorithm 1).

Algorithm 1: RGLC

3.3.1. Complexity Analysis of Solving (2)

The most time-consuming steps are the calculation of matrix A and matrix Q. Firstly, the complexity of calculating A (step 3) is . Secondly, the complexity of calculating P (step 4) is . Thirdly, the complexity of calculating Q (step 5) is . Finally, the complexity of computing R (step 6) is . Since the dimensionality of d is usually larger than instance count n, and the instance count n is much larger than l, we have the complexity of solving (2) as , where is the iteration count.

3.3.2. Complexity Analysis of Solving (9)

The most time-consuming steps are the calculation of matrix Z. Firstly, the complexity of calculating Z (step 13) is , where denotes the complexity for the generation of local instance groups with K-means algorithms, and is the iteration counts. Secondly, the complexity of calculating V (step 15) is . Thirdly, the complexity of calculating U (step 16) is . Finally, the complexity of computing W (step 17) is . As we adopt gradient descent for Z, V, U, and W, the gradient decreases to zero at the rate of . Therefore, the complexity of solving (9) is , where is the iteration count.

In a nutshell, the complexity is .

4. Results

4.1. Experimental Settings

We conduct extensive experiments to examine the robustness of RGLC on twelve benchmarks, where bibtex, corel5k, enron, genbase, mediamill, medical, scene are from Mulan http://mulan.sourceforge.net/datasets.html (accessed on 21 January 2022) [50], languagelog and slashdot are from Meka http://waikato.github.io/meka/datasets/ (accessed on 21 January 2022) [51] and art, business, and health are from MDDM www.lamda.nju.edu.cn/code_MDDM.ashx (accessed on 21 January 2022) [52]. Table 1 summarizes the data characteristics from domains including images, text, video, and biology. For each dataset, we introduce the example count (#n), the feature dimensionality (#f), the label count (#q), the average number of associated labels per instance (#c) and the domain.

Table 1. Characteristics of datasets.

We adopt six evaluation metrics (Hamming Loss, Ranking Loss, One Error, Coverage, Average Precision, and Micro F1) [53] to evaluate the classification performance. The last two achieve better performance if the values are large, and the remaining metrics obtain better performance if the values are small. Let and denote the relevant and irrelevant label set in ground truth and n be the unseen instances count; then, the formulas of metrics are enumerated as:

- (1) Hamming Loss (abbreviated as Hl) evaluates the average difference between predictions and ground truth (see Formula (32)). The smaller the value of Hamming Loss is, the better the performance of an algorithm becomes. In Formula (32), $\Delta$ denotes the set symmetric difference and $|\cdot|$ denotes the set cardinality.

- (2) Ranking Loss (abbreviated as Rkl) evaluates the fraction of label pairs in which an irrelevant label ranks before a relevant label in the label predictions (see Formula (33)). The smaller the value of Ranking Loss is, the better the performance of an algorithm becomes. In Formula (33), the rank operator denotes the ranking position in ascending order for the j-th label on the i-th instance, and $|\cdot|$ denotes the set cardinality.

- (3) One Error (abbreviated as Oe) evaluates the fraction of instances whose top-ranked predicted label is irrelevant (see Formula (34)). The smaller the value of One Error is, the better the performance of an algorithm becomes. In Formula (34), the indicator function equals 1 if the condition holds and 0 otherwise, and the rank operator denotes the ranking position in ascending order for the j-th label on the i-th instance.

- (4) Coverage (abbreviated as Cvg) evaluates how far, on average, one must go down the ranked label predictions to include all ground-truth labels (see Formula (35)). The smaller the value of Coverage is, the better the performance of an algorithm becomes. In Formula (35), the rank operator denotes the ranking position in ascending order for the j-th label on the i-th instance.

- (5) Average Precision (abbreviated as Ap) evaluates the average fraction of relevant labels that rank before a given relevant label in the label predictions (see Formula (36)). The larger the value of Average Precision is, the better the performance of an algorithm becomes. In Formula (36), $|\cdot|$ denotes the set cardinality.
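The five metrics listed above can be sketched in NumPy using their common textbook definitions. The conventions below are assumptions for illustration and may differ in detail from Formulas (32) to (36): `Y` is a binary instances × labels matrix, `scores` holds real-valued outputs where a higher score means a more relevant label, rank 1 is the highest score, and Coverage is left unnormalized.

```python
import numpy as np

def ranks_descending(scores):
    """Rank labels per instance; rank 1 is the highest score."""
    order = np.argsort(-scores, axis=1, kind="stable")
    ranks = np.empty_like(order)
    rows = np.arange(scores.shape[0])[:, None]
    ranks[rows, order] = np.arange(1, scores.shape[1] + 1)
    return ranks

def hamming_loss(Y, Y_pred):
    """Fraction of instance-label pairs that are misclassified."""
    return float(np.mean(Y != Y_pred))

def ranking_loss(Y, scores):
    """Average fraction of (relevant, irrelevant) pairs ordered wrongly."""
    losses = []
    for y, s in zip(Y, scores):
        rel, irr = s[y == 1], s[y == 0]
        if rel.size == 0 or irr.size == 0:
            continue  # undefined for this instance; skipped by assumption
        losses.append(np.mean(rel[:, None] <= irr[None, :]))
    return float(np.mean(losses))

def one_error(Y, scores):
    """Fraction of instances whose top-ranked label is irrelevant."""
    top = np.argmax(scores, axis=1)
    return float(np.mean(Y[np.arange(len(Y)), top] == 0))

def coverage(Y, scores):
    """Average number of steps down the ranking to cover all relevant labels."""
    ranks = ranks_descending(scores)
    vals = [r[y == 1].max() - 1 for y, r in zip(Y, ranks) if y.any()]
    return float(np.mean(vals))

def average_precision(Y, scores):
    """Average precision over the relevant labels of each instance."""
    ranks = ranks_descending(scores)
    vals = []
    for y, r in zip(Y, ranks):
        rel = np.sort(r[y == 1])
        if rel.size == 0:
            continue
        # The i-th best relevant label has i relevant labels at or above it.
        vals.append(np.mean(np.arange(1, rel.size + 1) / rel))
    return float(np.mean(vals))

# Toy example (hypothetical): 2 instances, 4 labels.
Y = np.array([[1, 0, 1, 0], [0, 1, 0, 0]])
scores = np.array([[0.9, 0.8, 0.2, 0.1], [0.3, 0.6, 0.7, 0.1]])
Y_pred = (scores >= 0.5).astype(int)   # 0.5 threshold is an assumption

hl = hamming_loss(Y, Y_pred)
rkl = ranking_loss(Y, scores)
oe = one_error(Y, scores)
cvg = coverage(Y, scores)
ap = average_precision(Y, scores)
```

Ranking Loss, One Error, Coverage, and Average Precision depend only on the ordering of the scores, whereas Hamming Loss requires thresholded predictions.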

The configurations used for comparing algorithms are enumerated as follows:

- Multi-label learning with label-specific features (LIFT) http://palm.seu.edu.cn/zhangml/files/LIFT.rar (accessed on 21 January 2022) [54]: Generating cluster-based label-specific features for multi-label.

- Learning label-specific features (LLSF) https://jiunhwang.github.io/ (accessed on 21 January 2022) [55]: Learning label-specific features to promote multi-label classification performance.

- Multi-label twin support vector machine (MLTSVM) http://www.optimal-group.org/Resource/MLTSVM.html (accessed on 21 January 2022) [56]: Providing multi-label learning algorithm with twin support vector machine.

- Global and local label correlations (Glocal) http://www.lamda.nju.edu.cn/code_Glocal.ashx (accessed on 21 January 2022) [31]: Learning global and local correlation for multi-label.

- Hybrid noise-oriented multilabel learning (HNOML) [17]: A feature and label noise-resistance multi-label model.

- Manifold regularized discriminative feature selection for multi-label learning (MDFS) https://github.com/JiaZhang19/MDFS (accessed on 21 January 2022) [38]: Learning discriminative features via manifold regularization.

- Multilabel classification with group-based mapping (MCGM) https://github.com/JianghongMA/MC-GM (accessed on 21 January 2022) [43]: Group-based local correlation with local feature selection.

- Fast random k labelsets (fRAkEL) http://github.com/KKimura360/fast_RAkEL_matlab (accessed on 21 January 2022) [57]: A fast version of Random k-labelsets.

- RGLC: Proposed model. ,,, and are searched in . Cluster number and , as they have limited impacts on considered metrics.

Firstly, we explore the robustness of the baseline performance compared with eight state-of-the-art multi-label classification algorithms across different domains. Secondly, we examine the contribution of the included components through an ablation study. For the first two experiments, S and J are set to all ones. Thirdly, we examine the robustness of RGLC with noisy features and missing labels. Lastly, we analyze convergence and sensitivity with noisy features and missing labels. For fairness, we report results averaged over six runs of five-fold cross-validation. We conduct all experiments on a computer with an Intel(R) Core i7-10700 2.90 GHz CPU and 32 GB RAM running Windows 10.

To simulate noisy features and missing labels, we randomly replace 1 with 0 in each column of the indicator matrices S and J using the strategy in [17]. The replacement ratio is increased from 0% to 80% in steps of 20%. The experimental comparison is conducted on the datasets art, business, and health.
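The masking procedure can be sketched as follows. The details of the strategy in [17] are not given here, so uniform sampling without replacement within each column is an assumption, as are the function name and the matrix shapes.

```python
import numpy as np

def random_column_mask(n_rows, n_cols, ratio, rng):
    """All-ones indicator matrix with a fixed ratio of zeros per column."""
    mask = np.ones((n_rows, n_cols), dtype=int)
    n_drop = int(round(ratio * n_rows))
    for j in range(n_cols):
        # Choose distinct row positions to flip from 1 to 0 in column j.
        drop = rng.choice(n_rows, size=n_drop, replace=False)
        mask[drop, j] = 0
    return mask

rng = np.random.default_rng(0)
# Ratios from 0% to 80% in steps of 20%, as in the experimental protocol.
ratios = [0.0, 0.2, 0.4, 0.6, 0.8]
S_masks = [random_column_mask(10, 5, r, rng) for r in ratios]  # feature side
J_masks = [random_column_mask(10, 3, r, rng) for r in ratios]  # label side
```

With a ratio of 0 the masks stay all ones, which corresponds to the baseline setting used in the first two experiments.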

4.2. Learning with Benchmarks

Table 2 reports the experimental results of the selected algorithms across all considered datasets. For each dataset, we first rank the nine algorithms by performance; we then average each algorithm's ranks over the twelve datasets to obtain its “Avg rank”. The value in parentheses is the rank of that average among the nine algorithms.
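The ranking procedure can be sketched in a few lines. The handling of ties (tied algorithms share the average of the positions they occupy) is an assumption, and the scores below are made-up numbers rather than values from Table 2.

```python
import numpy as np

def average_ranks(scores, higher_is_better):
    """Per-dataset ranks (rows) and their mean over datasets.

    scores: array of shape (datasets, algorithms). Rank 1 is the best.
    """
    signed = -scores if higher_is_better else scores
    n_datasets, n_algorithms = signed.shape
    ranks = np.empty((n_datasets, n_algorithms))
    for i in range(n_datasets):
        row = signed[i]
        better = (row[None, :] < row[:, None]).sum(axis=1)    # strictly better
        ties = (row[None, :] == row[:, None]).sum(axis=1) - 1  # excluding self
        ranks[i] = 1 + better + 0.5 * ties
    return ranks, ranks.mean(axis=0)

# Hypothetical Hamming Loss values: 2 datasets, 3 algorithms (lower is better).
scores = np.array([[0.1, 0.2, 0.2],
                   [0.3, 0.1, 0.2]])
ranks, avg_rank = average_ranks(scores, higher_is_better=False)
```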

Table 2. Comparison of each algorithm (mean ± std). Best and second-best results are in bold and underlined, respectively (↓: the smaller the better; ↑: the larger the better).

We employ the Friedman test [58] to quantify the differences between all considered algorithms. We introduce four symbols to detail the Friedman statistic (see Formula (37)): the number of comparing algorithms ($k$), the number of datasets ($N$), the performance rank of the $j$-th algorithm on the $i$-th dataset ($r_i^j$), and the average rank of the $j$-th algorithm over all datasets ($R_j = \frac{1}{N}\sum_{i=1}^{N} r_i^j$). Under the null hypothesis (i.e., $H_0$) that all algorithms obtain identical performance, $F_F$ follows the F-distribution with $k-1$ degrees of freedom in the numerator and $(k-1)(N-1)$ degrees of freedom in the denominator:

$$F_F = \frac{(N-1)\,\chi_F^2}{N(k-1) - \chi_F^2}, \tag{37}$$

where

$$\chi_F^2 = \frac{12N}{k(k+1)}\left[\sum_{j=1}^{k} R_j^2 - \frac{k(k+1)^2}{4}\right]. \tag{38}$$

Table 3 presents the values of the Friedman statistic for all considered metrics and the corresponding critical value. As shown in Table 3, at the significance level $\alpha = 0.05$, we reject the null hypothesis (i.e., $H_0$) of statistically indistinguishable performance among the considered algorithms for all considered evaluation metrics. The result implies that it is meaningful to examine whether RGLC gains statistical superiority over the other algorithms by conducting a post hoc test such as the Holm test [58].
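The Friedman statistic can be computed directly from a datasets × algorithms matrix of ranks. The sketch below uses the standard chi-square form and its F-distributed correction; the rank matrices are hypothetical and are not the ranks behind Table 3.

```python
import numpy as np

def friedman_statistics(ranks):
    """Return (chi2_F, F_F, (dof_numerator, dof_denominator)).

    ranks: array of shape (N datasets, k algorithms), rank 1 = best.
    """
    N, k = ranks.shape
    R = ranks.mean(axis=0)  # average rank of each algorithm
    chi2 = 12.0 * N / (k * (k + 1)) * (np.sum(R ** 2) - k * (k + 1) ** 2 / 4.0)
    F = (N - 1) * chi2 / (N * (k - 1) - chi2)
    return float(chi2), float(F), (k - 1, (k - 1) * (N - 1))

# Small hand-checkable case: N = 2 datasets, k = 3 algorithms.
small = np.array([[1.0, 2.0, 3.0],
                  [1.0, 3.0, 2.0]])
chi2_small, F_small, dof_small = friedman_statistics(small)

# Same shape as the study (N = 12, k = 9), with random hypothetical ranks.
rng = np.random.default_rng(0)
random_ranks = np.array([rng.permutation(9) + 1.0 for _ in range(12)])
chi2_big, F_big, dof_big = friedman_statistics(random_ranks)
```

The null hypothesis is rejected when the F statistic exceeds the critical value of the F-distribution with the returned degrees of freedom, which are (8, 88) for nine algorithms and twelve datasets.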

Table 3. Summary of the Friedman statistics $F_F$ ($k = 9$, $N = 12$) and the critical value at significance level $\alpha = 0.05$ in terms of each evaluation measure ($k$: number of comparing algorithms; $N$: number of datasets).

Table 4 presents the statistical results of RGLC compared with the remaining algorithms by the Holm test (see Formula (39)) at significance level $\alpha = 0.05$. We use RGLC as the control algorithm and denote it as $A_1$. We denote the remaining comparing algorithms as $A_j$ in the order of average rank across all datasets in each evaluation metric, where $2 \le j \le k$. The test statistic for comparing $A_1$ with $A_j$ is

$$z_j = \frac{R_1 - R_j}{\sqrt{\dfrac{k(k+1)}{6N}}}. \tag{39}$$

Table 4. Comparison of RGLC (control algorithm) against the remaining approaches. The test statistics and p-values are determined by the Holm test at significance level $\alpha = 0.05$. Algorithms that are statistically inferior to RGLC are in bold.

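The p-value of $z_j$ (denoted as $p_j$) is calculated from the standard normal distribution. For significance level $\alpha = 0.05$, we examine whether $p_j$ is smaller than $\alpha/(k-j+1)$ for $j = 2, \ldots, k$. Specifically, the Holm test continues until there exists a $j^*$-th step, where $j^*$ denotes the first $j$ with $p_j \ge \alpha/(k-j+1)$. $j^*$ is set to $k+1$ if $p_j < \alpha/(k-j+1)$ holds for all $j$.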
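The step-down procedure can be sketched with the standard library alone. The sketch assumes the usual z statistic based on average-rank differences with standard error sqrt(k(k+1)/(6N)), two-sided p-values, and ordering of the comparisons by p-value; the algorithm names and average ranks are hypothetical.

```python
import math

def holm_test(avg_ranks, control, n_datasets, alpha=0.05):
    """Holm step-down test of a control algorithm against all others.

    avg_ranks: dict mapping algorithm name to its average rank.
    Returns (results, rejected): results is a list of (name, z, p) sorted
    by p-value; rejected maps each name to whether H0 is rejected.
    """
    k = len(avg_ranks)
    se = math.sqrt(k * (k + 1) / (6.0 * n_datasets))
    results = []
    for name, r in avg_ranks.items():
        if name == control:
            continue
        z = (avg_ranks[control] - r) / se
        p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p-value
        results.append((name, z, p))
    results.sort(key=lambda t: t[2])  # most significant comparison first

    rejected = {}
    still_rejecting = True
    for step, (name, _, p) in enumerate(results):
        threshold = alpha / (k - 1 - step)  # alpha/(k-1), ..., alpha/1
        # Once one comparison fails, all later ones are retained as well.
        still_rejecting = still_rejecting and p < threshold
        rejected[name] = still_rejecting
    return results, rejected

# Hypothetical average ranks for k = 4 algorithms over N = 12 datasets.
avg_ranks = {"control": 1.5, "B": 2.0, "C": 3.0, "D": 3.5}
results, rejected = holm_test(avg_ranks, control="control", n_datasets=12)
```

In this toy case the control is significantly better than C and D but not than B, because the procedure stops rejecting at the first comparison whose p-value exceeds its threshold.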

Accordingly, we have the following findings based on reported experimental comparisons:

- From Table 2, we observe that, according to the rank of “Avg rank”, RGLC achieves the best performance on four of the six evaluation metrics (66.67%) and the second-best performance on the remaining two (33.33%). It is inferior only to LIFT on Ranking Loss and to fRAkEL on One Error. Specifically, RGLC achieves 24 (33.33%) best results (values in bold) and 18 (25%) second-best results (underlined values) over 72 observations (12 datasets × 6 metrics). In contrast, the second-best method, LIFT, achieves 12 (16.67%) best and 18 (25%) second-best results over the same 72 observations.

- The Holm test in Table 4 shows that RGLC is statistically superior to other algorithms to varying degrees in all metrics, with the best performance in Average Precision (better than seven algorithms) and the worst performance in Ranking Loss (better than three algorithms). Concretely, RGLC significantly outperforms MCGM in terms of all metrics except Micro F1, significantly outperforms MLTSVM in terms of Hamming Loss, Ranking Loss, Coverage, and Average Precision, significantly outperforms Glocal in terms of Hamming Loss, Coverage, Average Precision, and Micro F1, significantly outperforms HNOML in terms of Hamming Loss, One Error, Average Precision, and Micro F1, significantly outperforms MDFS in terms of One Error, Coverage, Average Precision, and Micro F1, significantly outperforms fRAkEL in terms of Hamming Loss, Ranking Loss, Average Precision, and Micro F1, significantly outperforms LLSF in terms of Hamming Loss, One Error, and Average Precision and significantly outperforms LIFT in terms of Coverage.

4.3. Ablation Study

Table 5 shows the functionality of latent features, latent labels, global label correlation, and the local label correlation.

Table 5. Comparison of each component in RGLC. Best results are in bold. ↓: the smaller the better; ↑: the larger the better.

We devise four degenerate versions of RGLC: (i) RGLC-LF, which learns both global and local label correlation without latent features; (ii) RGLC-LL, which learns both global and local label correlation without latent labels; (iii) RGLC-GC, which learns only local label correlation on both latent features and latent labels; and (iv) RGLC-LC, which learns only global label correlation on both latent features and latent labels. The lower the rank is, the better the performance becomes. For a degenerate version, the larger its ranking gap to RGLC is, the more the removed component contributes to classification performance, and vice versa.

As shown in Table 5, RGLC achieves the best performance across all metrics on average. Latent feature learning contributes most among all components, and latent label learning contributes almost the same as local label correlation. The global label correlation has the least contribution to boosting performance.
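
The reading of the ablation ranks can be sketched as follows: each degenerate version is mapped to the component it removes, and the components are ordered by the gap between the rank of that version and the rank of the full model. The average ranks below are invented placeholders chosen only to illustrate the computation; they are not the values in Table 5.

```python
# Component removed by each degenerate version, as described in the text.
REMOVED_COMPONENT = {
    "RGLC-LF": "latent features",
    "RGLC-LL": "latent labels",
    "RGLC-GC": "global label correlation",
    "RGLC-LC": "local label correlation",
}


def component_importance(avg_rank):
    """Order components by the rank gap between a degenerate version and RGLC.

    avg_rank maps a model name to its average rank (lower is better).
    A larger gap means that removing the component hurts more.
    """
    full = avg_rank["RGLC"]
    gaps = {
        component: avg_rank[variant] - full
        for variant, component in REMOVED_COMPONENT.items()
    }
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


# Hypothetical average ranks (illustrative values only).
hypothetical_avg_rank = {
    "RGLC": 1.2,
    "RGLC-LF": 4.5,
    "RGLC-LL": 3.1,
    "RGLC-GC": 2.4,
    "RGLC-LC": 3.0,
}
ranked = component_importance(hypothetical_avg_rank)
for component, gap in ranked:
    print(f"{component}: rank gap {gap:.1f}")
```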

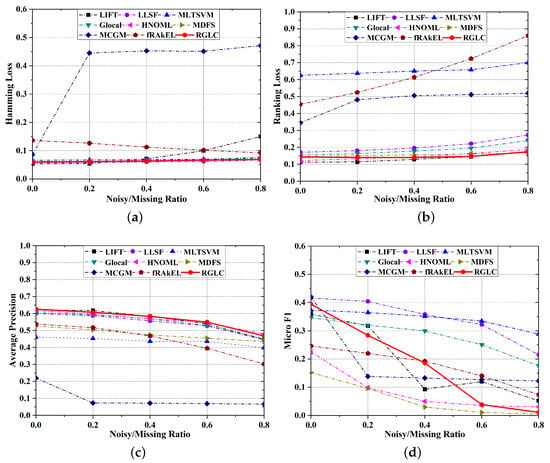

4.4. Learning with Noisy Features and Missing Labels

In this section, we evaluate how the performance of RGLC fluctuates when noisy features and missing labels occur together.
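
To make the experimental setting concrete, the sketch below shows one possible way to corrupt a dataset at a given ratio. The corruption scheme is an assumption made for illustration: a fraction of the feature entries receives additive Gaussian noise and the same fraction of the relevant labels is hidden by setting them to 0. The function name `corrupt` and the parameter `noise_std` are hypothetical, and the exact protocol used in the experiments may differ.

```python
import random


def corrupt(features, labels, ratio, noise_std=1.0, seed=0):
    """Return copies of the data with noisy features and missing labels.

    features: list of rows of floats; labels: list of rows of 0/1 values.
    ratio: fraction of feature entries to perturb and of positive labels to hide.
    """
    rng = random.Random(seed)
    noisy = [row[:] for row in features]
    masked = [row[:] for row in labels]

    # Add Gaussian noise to a random subset of the feature entries.
    cells = [(i, j) for i, row in enumerate(features) for j in range(len(row))]
    for i, j in rng.sample(cells, int(round(ratio * len(cells)))):
        noisy[i][j] += rng.gauss(0.0, noise_std)

    # Hide a random subset of the relevant (positive) labels.
    positives = [
        (i, j) for i, row in enumerate(labels) for j, y in enumerate(row) if y == 1
    ]
    for i, j in rng.sample(positives, int(round(ratio * len(positives)))):
        masked[i][j] = 0
    return noisy, masked


# Toy data: 10 instances, 5 features, 4 labels (all relevant).
toy_features = [[0.0] * 5 for _ in range(10)]
toy_labels = [[1] * 4 for _ in range(10)]
noisy_features, masked_labels = corrupt(toy_features, toy_labels, ratio=0.6)
```

Sweeping `ratio` over a grid and re-evaluating each method on the corrupted data gives curves of the kind reported in the robustness figures.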

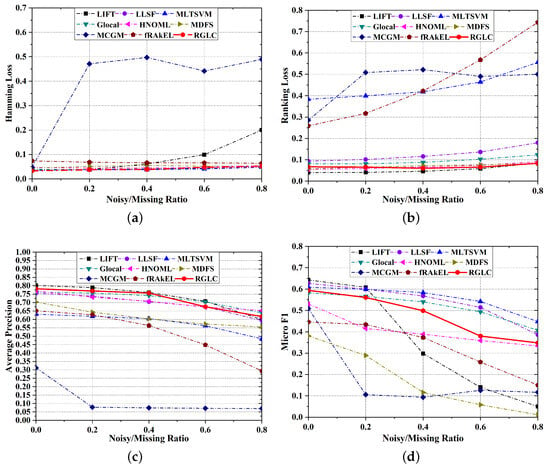

Figure 2 illustrates the classification performance on the art dataset.

Figure 2.

Robustness experiments with noisy features and missing labels on dataset art on metric (a) Hamming Loss, (b) Ranking Loss, (c) Average Precision, and (d) Micro F1.

As can be observed, performance on all metrics degrades as the ratio of noisy features and missing labels increases. In most cases, RGLC fluctuates the least as the proportion of noisy features and missing labels grows.

Figure 3 illustrates the classification performance on the business dataset.

Figure 3.

Robustness experiments with noisy features and missing labels on dataset business on metric (a) Hamming Loss, (b) Ranking Loss, (c) Average Precision, and (d) Micro F1.

As can be observed, performance on all metrics degrades as the ratio of noisy features and missing labels increases. In most cases, RGLC fluctuates the least as the proportion of noisy features and missing labels grows.

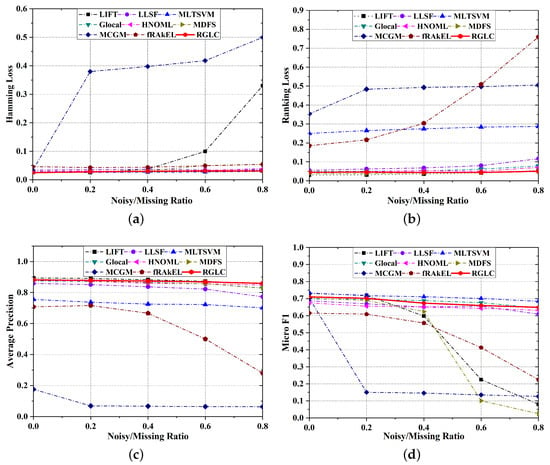

Figure 4 illustrates the classification performance on the health dataset.

Figure 4.

Robustness experiments with noisy features and missing labels on dataset health on metric (a) Hamming Loss, (b) Ranking Loss, (c) Average Precision, and (d) Micro F1.

As can be observed, performance on all metrics degrades as the ratio of noisy features and missing labels increases. In most cases, RGLC fluctuates the least as the proportion of noisy features and missing labels grows.

4.5. Convergence

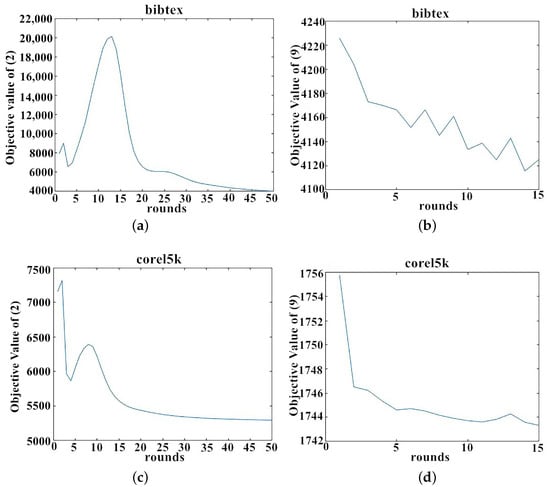

Furthermore, we study the convergence of RGLC. Without loss of generality, we report the variations of the two objective functions on the bibtex and corel5k datasets in Figure 5, with 60% noisy features and missing labels.

As can be observed, the values of both objective functions converge within a few iterations. Similar behavior is observed on the other datasets. The runtime comparison of all algorithms in Table 6 shows that the computational cost of RGLC is acceptable on large-scale datasets.
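
One simple way to quantify "converges within a few iterations" is to track the relative change of the objective value between consecutive iterations. The sketch below assumes such a stopping rule with a hypothetical tolerance `tol`; the stopping criterion actually used in the experiments is not stated in this section, and the objective values are invented.

```python
def iterations_to_converge(objective_values, tol=1e-4):
    """Return the first iteration whose relative objective change is below tol.

    objective_values[t] is the objective after iteration t (index 0 is the
    initial value). Returns None if the tolerance is never reached.
    """
    for t in range(1, len(objective_values)):
        previous, current = objective_values[t - 1], objective_values[t]
        relative_change = abs(previous - current) / max(abs(previous), 1e-12)
        if relative_change < tol:
            return t
    return None


# Hypothetical objective trace (illustrative values only).
trace = [100.0, 40.0, 25.0, 24.9, 24.8999]
print(iterations_to_converge(trace, tol=1e-2))
print(iterations_to_converge(trace, tol=1e-4))
```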

Table 6.

Runtime (in seconds) for learning with 60% noisy features and missing labels. Best results are in bold.

4.6. Sensitivity

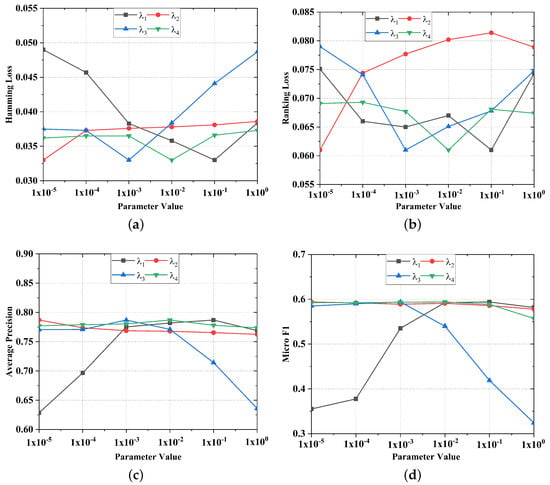

We also study the sensitivity of involved parameters (, , , and ) and report the fluctuations in the health dataset in Figure 6.

Figure 6.

Performance fluctuations of , , , and on the health dataset with 20% noisy features and missing labels on metric (a) Hamming Loss, (b) Ranking Loss, (c) Average Precision, and (d) Micro F1.

The larger is, the higher the significance of potential representation is, and the smaller and are, the lower the complexity of the model on the feature side and label side. The larger is, the smaller and are, and the more satisfactory the Average Precision, Hamming Loss, Micro F1, and Ranking Loss are. However, the results can deteriorate if is too small, which means the latent labels are less important than latent features. A larger means higher importance of the label manifold. The contribution of the label manifold is smaller than the latent feature and latent label, yet it is larger than the model parameters. Similar phenomena also apply to other datasets. Consequently, we recommend the settings of parameters , , , and in the order of .
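
A sensitivity study of this kind can be organized as a one-at-a-time sweep, in which one parameter varies over a grid while the others stay fixed. The sketch below is only illustrative: the names `p1` to `p4` stand in for the four parameters, the powers-of-ten grid and the default values are assumptions, and `toy_score` is a placeholder for training the model and computing a metric.

```python
def one_at_a_time_sweep(evaluate, defaults, grid):
    """Vary one parameter over `grid` while the others keep their defaults.

    Returns a dict mapping (parameter name, value) to the evaluation score.
    """
    scores = {}
    for name in defaults:
        for value in grid:
            setting = dict(defaults)
            setting[name] = value
            scores[(name, value)] = evaluate(**setting)
    return scores


def toy_score(p1, p2, p3, p4):
    # Placeholder only: a real study would train the model with these
    # parameters and return a metric such as Average Precision.
    return -sum((p - 1.0) ** 2 for p in (p1, p2, p3, p4))


defaults = {"p1": 1.0, "p2": 1.0, "p3": 1.0, "p4": 1.0}
grid = [10.0 ** exponent for exponent in range(-3, 4)]
scores = one_at_a_time_sweep(toy_score, defaults, grid)
best = max(scores, key=scores.get)
```

A one-at-a-time sweep is cheap but ignores interactions between parameters; a full grid search would capture them at a much higher cost.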

5. Discussion

This section discusses the robustness of RGLC. The experimental results on the benchmarks indicate that RGLC achieves the best average performance across different domains, which means that RGLC is competent for multi-label classification. The ablation study examines the contribution of the included modules (i.e., latent features, latent labels, global label correlation, and local label correlation). It reveals that all modules contribute to the performance improvement and that local label correlation is more helpful than global label correlation. This conclusion agrees with intuition, as global label correlation is more challenging than local label correlation when data are collected automatically. In real applications, data collection is inevitably affected by noise and missing values. The degradation of RGLC on the three large-scale datasets is negligible in terms of Hamming Loss, Ranking Loss, Coverage, and Average Precision. This suggests that RGLC is applicable in uncontrolled settings and demonstrates the robustness of the model. The convergence analysis implies that the objective values of RGLC converge within a limited number of iterations. Nevertheless, the computational complexity is not fully satisfactory, which means that the computation in each iteration is considerable. This is partly due to the optimization over a large number of intermediate matrices (A, P, Q, R, E, W, U, V, Z). Future work should focus on accelerating RGLC.

6. Conclusions

This paper formulated a robust multi-label classification method, RGLC, by exploiting enhanced global and local label correlations. Unlike existing multi-label approaches, which achieve robustness by either exploiting label correlations or reconstructing the data representation, we tackle both simultaneously by learning global and local label correlations from the low-rank latent space. We strengthen the reliability of the global and local label correlations by combining subspace learning and manifold regularization. Extensive studies on multiple domains demonstrate the robust performance of RGLC over a collection of state-of-the-art multi-label algorithms. We further demonstrate the robustness of RGLC in the presence of noisy features and missing labels, a setting that is more realistic in multi-label data collection. In the future, we intend to validate whether RGLC remains robust for more categories of noisy features and missing labels.

Author Contributions

Conceptualization, formal analysis, writing—original draft preparation, T.Z.; methodology, software, validation, writing—review and editing, Y.Z.; writing—review and editing, W.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the National Natural Science Foundation of China under grant numbers 61976158, 61976160, 62076182, 62163016, 62006172, and 61906137, and it is also partially supported by the Jiangxi “Double Thousand Plan” under grant number 20212ACB202001.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to thank D. Q. Miao for his constructive comments. We would also like to thank several people for their contributions to the datasets. Their names are listed in alphabetical order: M. R. Boutell, S. Diplaris, P. Duygulu, I. Katakis, J. P. Pestian, J. Read, C. G. M. Snoek, and Y. Zhang.

Conflicts of Interest

Y.J. Zhang is a post-doctoral researcher at China UnionPay Co., Ltd. The data and computing resources of this paper have no commercial relationship with the company.

Correction Statement

Due to the article publishing process standard, the academic editor should be updated. This information has been updated and this change does not affect the scientific content of the article.

References

- Zhang, M.L.; Zhou, Z.H. A review on multi-label learning algorithms. IEEE Trans. Knowl. Data Eng. 2014, 26, 1819–1837.

- Gibaja, E.; Ventura, S. A tutorial on multilabel learning. ACM Comput. Surv. 2015, 47, 1–38.

- Liu, W.W.; Shen, X.B.; Wang, H.B.; Tsang, I.W. The emerging trends of multi-label learning. IEEE Trans. Pattern Anal. Mach. Intell. 2021, in press.

- Xu, W.H.; Li, W.T. Granular computing approach to two-way learning based on formal concept analysis in fuzzy datasets. IEEE Trans. Cybern. 2016, 46, 366–379.

- Xu, W.H.; Yu, J.H. A novel approach to information fusion in multi-source datasets: A granular computing viewpoint. Inf. Sci. 2017, 378, 410–423.

- Zhang, Y.J.; Miao, D.Q.; Zhang, Z.F.; Xu, J.F.; Luo, S. A three-way selective ensemble model for multi-label classification. Int. J. Approx. Reason. 2018, 103, 394–413.

- Zhang, Y.J.; Miao, D.Q.; Pedrycz, W.; Zhao, T.N.; Xu, J.F.; Yu, Y. Granular structure-based incremental updating for multi-label classification. Knowl. Based Syst. 2020, 189, 105066:1–105066:15.

- Zhang, Y.J.; Zhao, T.N.; Miao, D.Q.; Pedrycz, W. Granular multilabel batch active learning with pairwise label correlation. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 3079–3091.

- Yuan, K.H.; Xu, W.H.; Li, W.T.; Ding, W.P. An incremental learning mechanism for object classification based on progressive fuzzy three-way concept. Inf. Sci. 2022, 584, 127–147.

- Xu, Y.H.; Yuan, K.H.; Li, W.T. Dynamic updating approximations of local generalized multigranulation neighborhood rough set. Appl. Intell. 2022, in press.

- Guo, Y.M.; Chung, F.L.; Li, G.Z.; Wang, J.C.; Gee, J.C. Leveraging label-specific discriminant mapping features for multi-label learning. ACM Trans. Knowl. Discov. Data 2019, 13, 24:1–24:23.

- Huang, D.; Cabral, R.; Torre, F.D.L. Robust regression. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 363–375.

- Ma, J.H.; Zhang, H.J.; Chow, T.W.S. Multilabel classification with label-specific features and classifiers: A coarse- and fine-tuned framework. IEEE Trans. Cybern. 2021, 51, 1028–1042.

- Zhang, J.; Lin, Y.D.; Jiang, M.; Li, S.Z.; Tang, Y.; Tan, K.C. Multi-label feature selection via global relevance and redundancy optimization. In Proceedings of the International Conference on Artificial Intelligence, Yokohama, Japan, 7–15 January 2020; pp. 2512–2518.

- Guo, B.L.; Hou, C.P.; Shan, J.C.; Yi, D.Y. Low rank multi-label classification with missing labels. In Proceedings of the International Conference on Pattern Recognition, Beijing, China, 21–24 August 2018; pp. 417–422.

- Sun, L.J.; Ye, P.; Lyu, G.Y.; Feng, S.H.; Dai, G.J.; Zhang, H. Weakly-supervised multi-label learning with noisy features and incomplete labels. Neurocomputing 2020, 413, 61–71.

- Zhang, C.Q.; Yu, Z.W.; Fu, H.Z.; Zhu, P.F.; Chen, L.; Hu, Q.H. Hybrid noise-oriented multilabel learning. IEEE Trans. Cybern. 2020, 50, 2837–2850.

- Lou, Q.D.; Deng, Z.H.; Choi, K.S.; Shen, H.B.; Wang, J.; Wang, S.T. Robust multi-label relief feature selection based on fuzzy margin co-optimization. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 387–398.

- Fan, Y.L.; Liu, J.H.; Liu, P.Z.; Du, Y.Z.; Lan, W.Y.; Wu, S.X. Manifold learning with structured subspace for multi-label feature selection. Pattern Recogn. 2021, 120, 108169:1–108169:16.

- Xu, M.; Li, Y.F.; Zhou, Z.H. Robust multi-label learning with pro loss. IEEE Trans. Knowl. Data Eng. 2020, 32, 1610–1624.

- Braytee, A.; Liu, W.; Anaissi, A.; Kennedy, P.J. Correlated multi-label classification with incomplete label space and class imbalance. ACM Trans. Intell. Syst. Technol. 2019, 10, 56:1–56:26.

- Dong, H.B.; Sun, J.; Sun, X.H. A multi-objective multi-label feature selection algorithm based on shapley value. Entropy 2021, 23, 1094.

- Jain, H.; Prabhu, Y.; Varma, M. Extreme multi-label loss functions for recommendation, tagging, ranking & other missing label applications. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 935–944.

- Qaraei, M.; Schultheis, E.; Gupta, P.; Babbar, R. Convex surrogates for unbiased loss functions in extreme classification with missing labels. In Proceedings of the World Wide Web Conference, Ljubljana, Slovenia, 19–23 April 2021; pp. 3711–3720.

- Wydmuch, M.; Jasinska-Kobus, K.; Babbar, R.; Dembczynski, K. Propensity-scored probabilistic label trees. In Proceedings of the International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 11–15 July 2021; pp. 2252–2256.

- Chen, Z.J.; Hao, Z.F. A unified multi-label classification framework with supervised low-dimensional embedding. Neurocomputing 2016, 171, 1563–1575.

- Chen, C.; Wang, H.B.; Liu, W.W.; Zhao, X.Y.; Hu, T.L.; Chen, G. Two-stage label embedding via neural factorization machine for multi-label classification. In Proceedings of the Association for the Advance in Artificial Intelligence, Hawaii, HI, USA, 27 December–1 January 2019; pp. 3304–3311.

- Wei, T.; Li, Y.F. Learning compact model for large-scale multi-label data. In Proceedings of the Association for the Advance in Artificial Intelligence, Hawaii, HI, USA, 27 December–1 January 2019; pp. 5385–5392.

- Huang, J.; Xu, L.C.; Wang, J.; Feng, L.; Yamanishi, K. Discovering latent class labels for multi-label learning. In Proceedings of the International Conference on Artificial Intelligence, Yokohama, Japan, 7–15 January 2020; pp. 3058–3064.

- Fang, X.Z.; Teng, S.H.; Lai, Z.H.; He, Z.S.; Xie, S.L.; Wong, W.K. Robust latent subspace learning for image classification. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2502–2515.

- Zhu, Y.; Kwok, J.T.; Zhou, Z.H. Multi-label learning with global and local label correlation. IEEE Trans. Knowl. Data Eng. 2018, 30, 1081–1094.

- Siblini, W.; Kuntz, P.; Meyer, F. A review on dimensionality reduction for multi-label classification. IEEE Trans. Knowl. Data Eng. 2021, 33, 839–857.

- Yu, Z.B.; Zhang, M.L. Multi-label classification with label-specific feature generation: A wrapped approach. IEEE Trans. Pattern Anal. Mach. Intell. 2021, in press.

- He, S.; Feng, L.; Li, L. Estimating latent relative labeling importances for multi-label learning. In Proceedings of the International Conference on Data Mining, Singapore, 17–20 November 2018; pp. 1013–1018.

- Zhong, Y.J.; Xu, C.; Du, B.; Zhang, L.F. Independent feature and label components for multi-label classification. In Proceedings of the International Conference on Data Mining, Singapore, 17–20 November 2018; pp. 827–836.

- Belkin, M.; Niyogi, P.; Sindhwani, V. Manifold regularization: A geometric framework for learning from labeled and unlabeled examples. J. Mach. Learn. Res. 2006, 7, 2399–2434.

- Cai, Z.L.; Zhu, W. Multi-label feature selection via feature manifold learning and sparsity regularization. Int. J. Mach. Learn. Cybern. 2018, 9, 1321–1334.

- Zhang, J.; Luo, Z.M.; Li, C.D.; Zhou, C.E.; Li, S.Z. Manifold regularized discriminative feature selection for multi-label learning. Pattern Recogn. 2019, 95, 136–150.

- Guan, Y.Y.; Li, X.M. Multilabel text classification with incomplete labels: A safe generative model with label manifold regularization and confidence constraint. IEEE Multimedia 2020, 27, 38–47.

- Feng, L.; Huang, J.; Shu, S.L.; An, B. Regularized matrix factorization for multilabel learning with missing labels. IEEE Trans. Cybern. 2020, in press.

- Huang, S.J.; Zhou, Z.H. Multi-label learning by exploiting label correlation locally. In Proceedings of the Association for Advanced Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012; pp. 945–955.

- Jia, X.Y.; Zhu, S.S.; Li, W.W. Joint label-specific features and correlation information for multi-label learning. J. Comput. Sci. Technol. 2020, 35, 247–258.

- Ma, J.H.; Chiu, B.C.Y.; Chow, T.W.S. Multilabel classification with group-based mapping: A framework with local feature selection and local label correlation. IEEE Trans. Cybern. 2020, in press.

- Zhu, C.M.; Miao, D.Q.; Wang, Z.; Zhou, R.G.; Wei, L.; Zhang, X.F. Global and local multi-view multi-label learning. Neurocomputing 2020, 371, 67–77.

- Sun, L.J.; Feng, S.H.; Liu, J.; Lyu, G.Y.; Lang, C.Y. Global-local label correlation for partial multi-label learning. IEEE Trans. Multimed. 2022, 24, 581–593.

- Cai, X.; Ding, C.; Nie, F.; Huang, H. On the equivalent of low-rank linear regressions and linear discriminant analysis based regressions. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Chicago, IL, USA, 11–14 August 2013; pp. 1124–1132.

- Sylvester, J. Sur l’equation en matrices px=xq. C. R. Acad. Sci. Paris 1884, 99, 67–71.

- Liu, G.C.; Lin, Z.C.; Yan, S.C.; Sun, J.; Yu, Y.; Ma, Y. Robust recovery of subspace structures by low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 171–184.

- Boumal, N.; Mishra, B.; Absil, P.A.; Sepulchre, R. Manopt, a matlab toolbox for optimization on manifolds. J. Mach. Learn. Res. 2014, 15, 1455–1459.

- Tsoumakas, G.; Katakis, I.; Vlahavas, I. Mining Multi-label Data. In Data Mining and Knowledge Discovery Handbook; Maimon, O., Rokach, L., Eds.; Springer: Boston, MA, USA, 2009; pp. 667–685.

- Read, J.; Reutemann, P.; Pfahringer, B.; Holmes, G. Meka: A multi-label/multi-target extension to weka. J. Mach. Learn. Res. 2016, 17, 21:1–21:5.

- Zhang, Y.; Zhou, Z.H. Multilabel dimensionality reduction via dependence maximization. ACM Trans. Knowl. Discov. Data 2010, 4, 14:1–14:21.

- Schapire, R.E.; Singer, Y. BoosTexter: A boosting-based system for text categorization. Mach. Learn. 2000, 39, 135–168.

- Zhang, M.L.; Wu, L. LIFT: Multi-label learning with label-specific features. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 107–120.

- Huang, J.; Li, G.R.; Huang, Q.M.; Wu, X.D. Learning label-specific features and class-dependent labels for multi-label classification. IEEE Trans. Knowl. Data Eng. 2016, 28, 3309–3323.

- Chen, W.J.; Shao, Y.H.; Li, C.N.; Deng, N.Y. MLTSVM: A novel twin support vector machine to multi-label learning. Pattern Recogn. 2016, 52, 61–74.

- Kimura, K.; Kudo, M.; Sun, L.; Koujaku, S. Fast random k-labelsets for large-scale multi-label classification. In Proceedings of the International Conference on Pattern Recognition, Cancun, Mexico, 4–8 December 2016; pp. 438–443.

- Demsar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 2006, 7, 1–30.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).