Comparing Multi-Objective Local Search Algorithms for the Beam Angle Selection Problem

Abstract

:1. Introduction

2. An Overview of IMRT Optimisation Problems

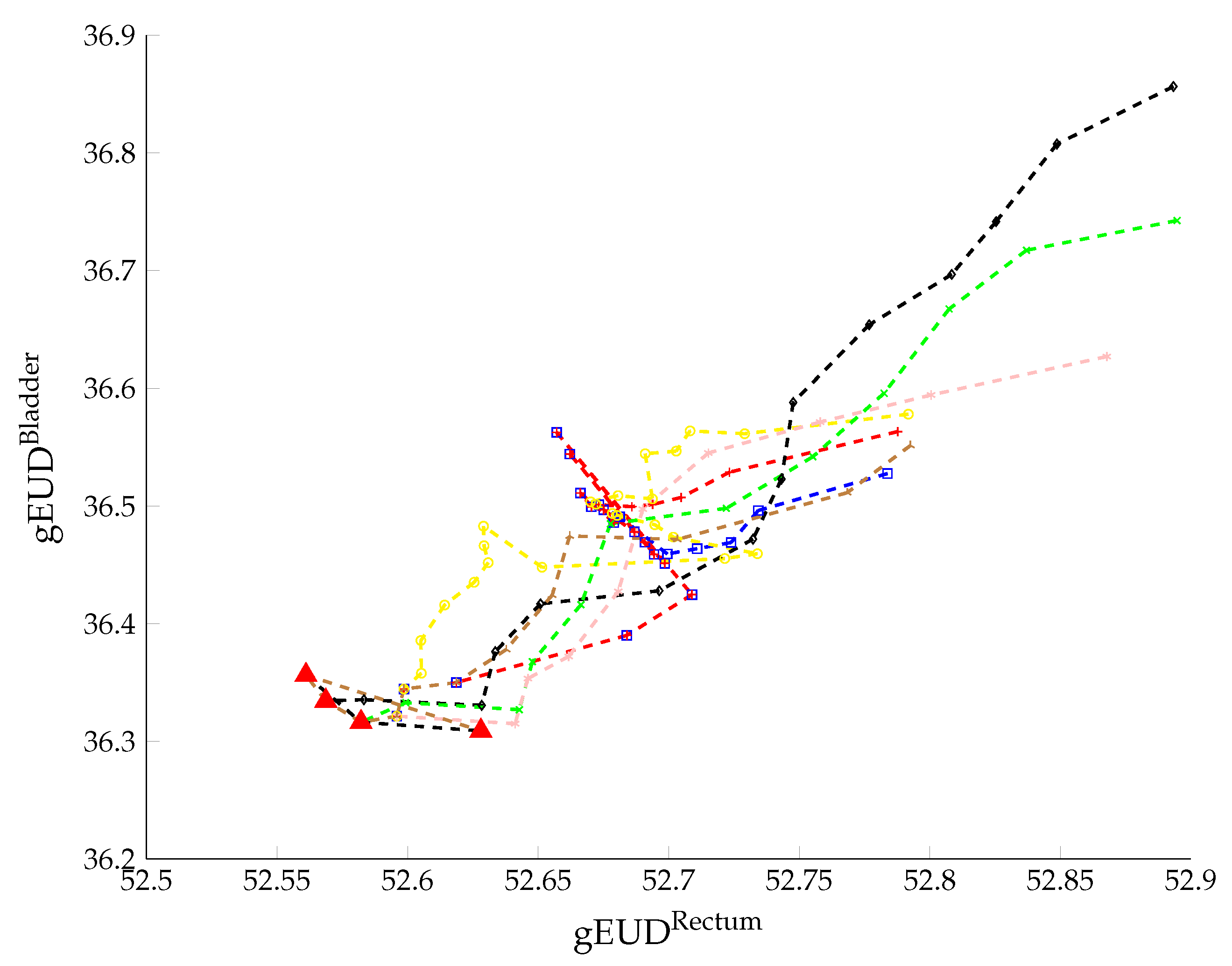

2.1. gEUD-Based MO-BAO: Mathematical Formulation

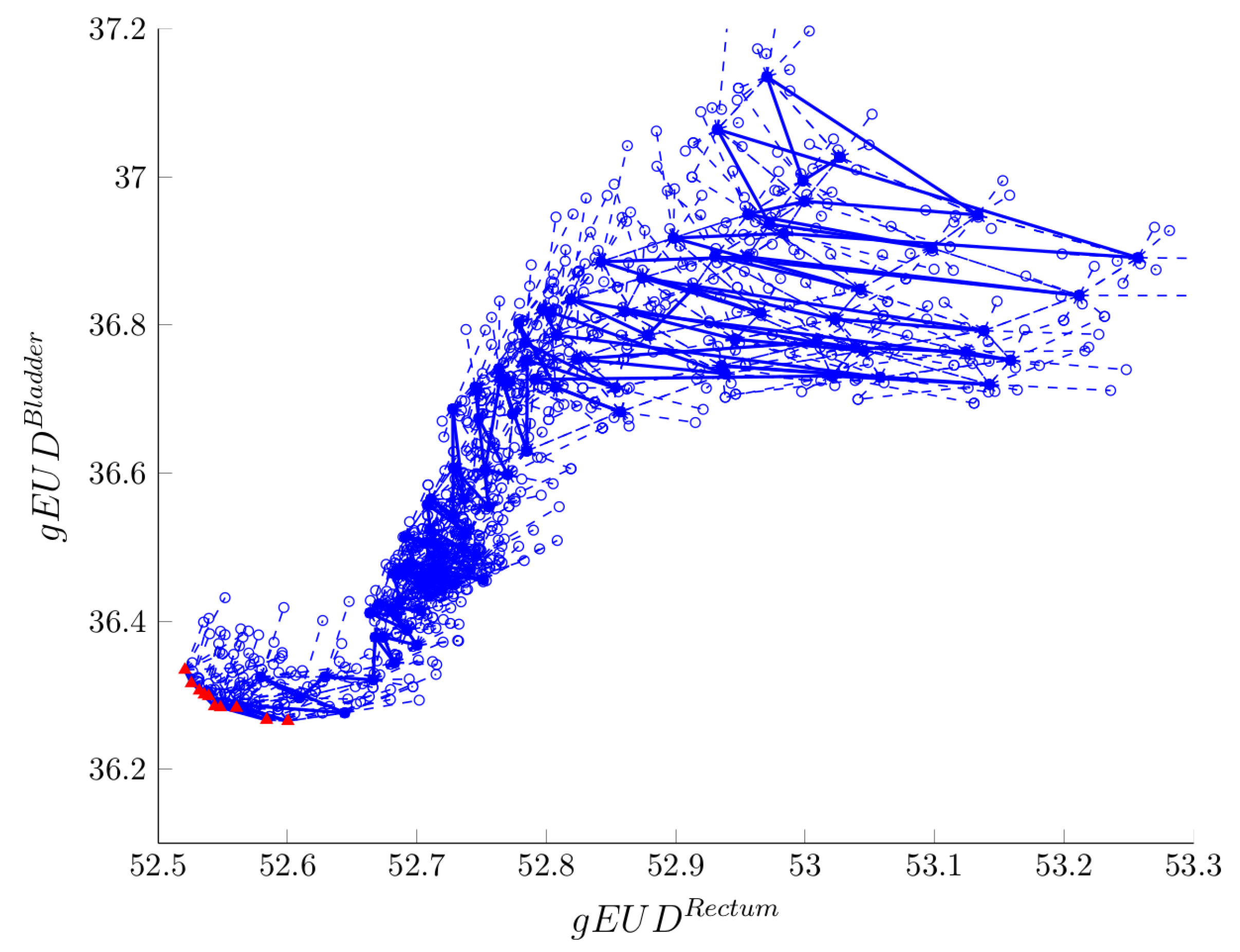

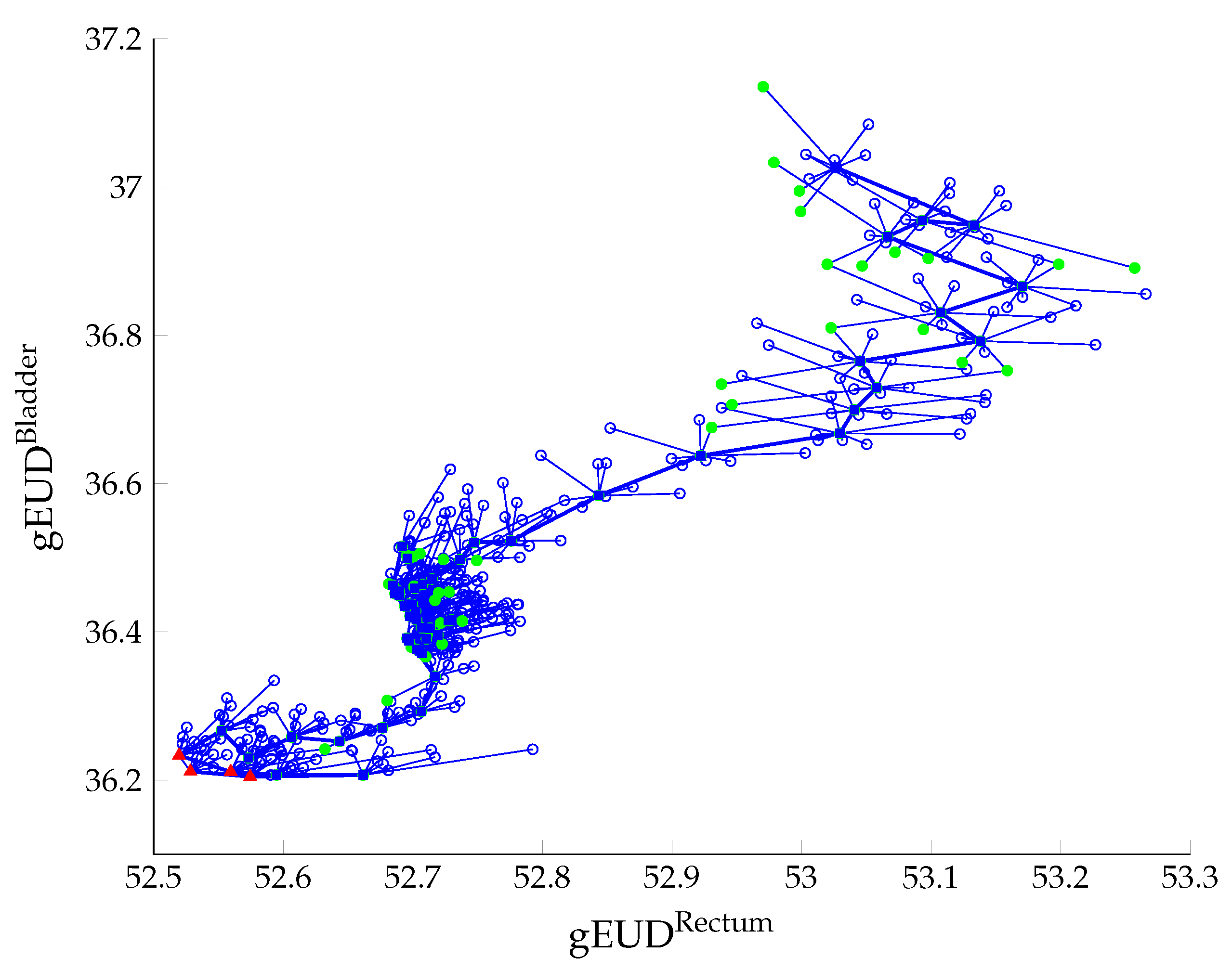

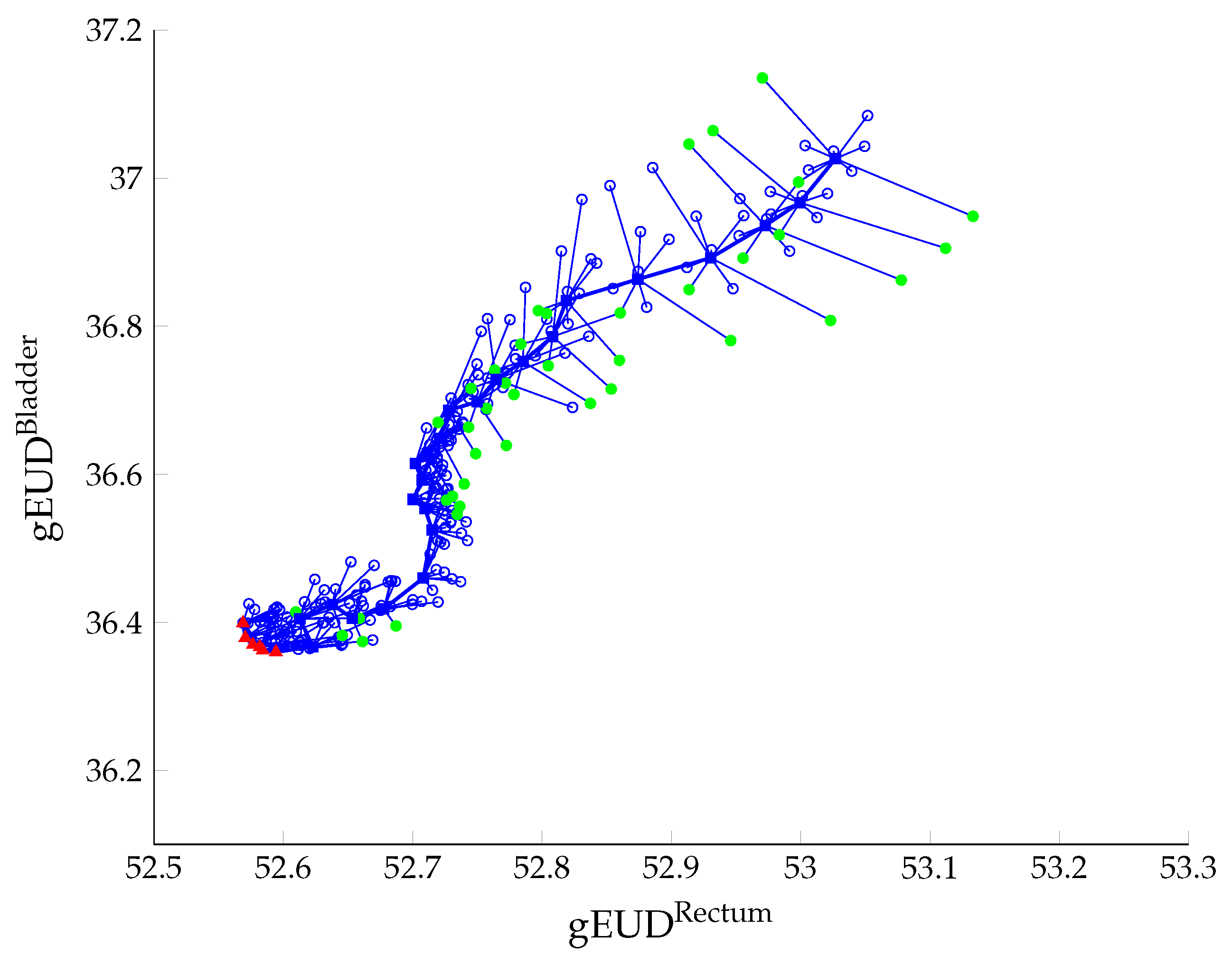

3. The Multi-Objective Beam Angle Optimisation Problem

MO-BAO: Literature Review

4. Multi-Objective Local Search

4.1. Pareto Local Search: General Framework

4.2. Pareto Local Search

| Algorithm 1: Pareto Local Search |

|

4.3. Random Pareto Local Search

4.4. Judgement-Function-Guided Pareto Local Search

4.5. Neighbours-First Pareto Local Search

4.6. Second Phase: Exact Optimisation of the MO-FMO Problem

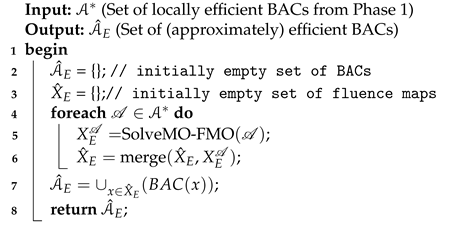

| Algorithm 2: Phase 2: Efficient Set Generation |

|

5. Computational Experiments

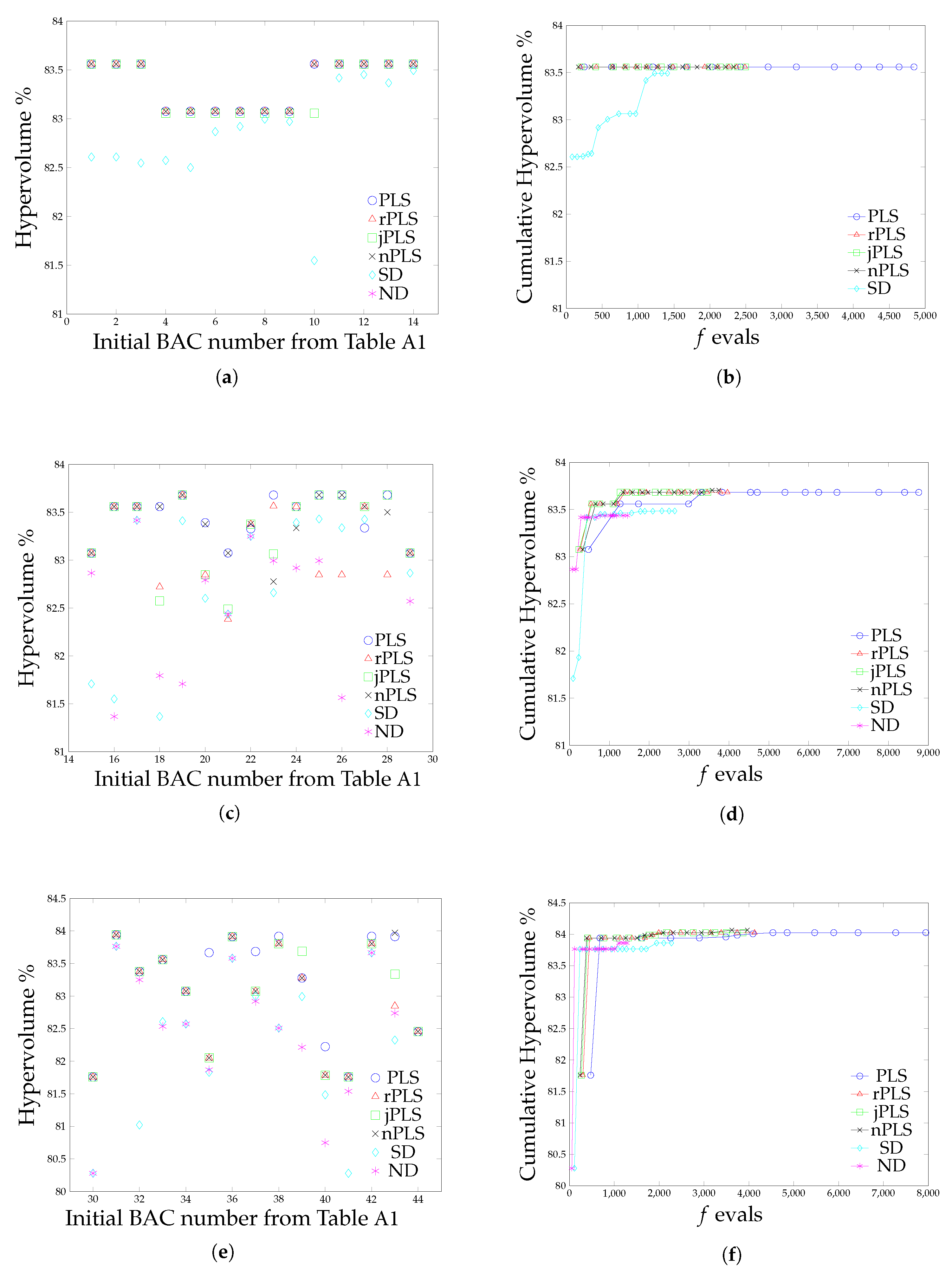

5.1. Hypevolume Quality Indicator

5.2. Results

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Equally distant BACs | 1 | 0 | 70 | 140 | 210 | 280 |

| 2 | 5 | 75 | 145 | 215 | 285 | |

| 3 | 10 | 80 | 150 | 220 | 290 | |

| 4 | 15 | 85 | 155 | 225 | 295 | |

| 5 | 20 | 90 | 160 | 230 | 300 | |

| 6 | 25 | 95 | 165 | 235 | 305 | |

| 7 | 30 | 100 | 170 | 240 | 310 | |

| 8 | 35 | 105 | 175 | 245 | 315 | |

| 9 | 40 | 110 | 180 | 250 | 320 | |

| 10 | 45 | 115 | 185 | 255 | 325 | |

| 11 | 50 | 120 | 190 | 260 | 330 | |

| 12 | 55 | 125 | 195 | 265 | 335 | |

| 13 | 60 | 130 | 200 | 270 | 340 | |

| 14 | 65 | 135 | 205 | 275 | 345 | |

| Semi-Random BACs | 15 | 55 | 95 | 205 | 250 | 305 |

| 16 | 50 | 135 | 175 | 240 | 335 | |

| 17 | 60 | 80 | 200 | 255 | 335 | |

| 18 | 40 | 115 | 190 | 230 | 355 | |

| 19 | 35 | 135 | 165 | 250 | 305 | |

| 20 | 70 | 120 | 150 | 240 | 350 | |

| 21 | 70 | 100 | 155 | 290 | 310 | |

| 22 | 60 | 100 | 150 | 220 | 335 | |

| 23 | 20 | 140 | 190 | 240 | 305 | |

| 24 | 60 | 80 | 185 | 250 | 330 | |

| 25 | 25 | 125 | 155 | 255 | 300 | |

| 26 | 35 | 130 | 175 | 275 | 300 | |

| 27 | 60 | 115 | 175 | 245 | 345 | |

| 28 | 35 | 145 | 170 | 280 | 320 | |

| 29 | 35 | 105 | 165 | 235 | 320 | |

| Completely Random BACs | 30 | 5 | 40 | 230 | 275 | 340 |

| 31 | 95 | 210 | 245 | 270 | 340 | |

| 32 | 30 | 120 | 130 | 170 | 345 | |

| 33 | 80 | 155 | 265 | 270 | 335 | |

| 34 | 20 | 125 | 185 | 220 | 305 | |

| 35 | 10 | 45 | 125 | 155 | 305 | |

| 36 | 80 | 140 | 205 | 245 | 330 | |

| 37 | 20 | 105 | 155 | 245 | 290 | |

| 38 | 155 | 210 | 275 | 315 | 325 | |

| 39 | 135 | 175 | 210 | 275 | 355 | |

| 40 | 80 | 115 | 130 | 320 | 345 | |

| 41 | 0 | 30 | 225 | 245 | 330 | |

| 42 | 215 | 230 | 245 | 285 | 310 | |

| 43 | 55 | 105 | 185 | 225 | 350 | |

| 44 | 0 | 15 | 40 | 215 | 275 |

References

- Ehrgott, M.; Güler, C.; Hamacher, H.W.; Shao, L. Mathematical optimization in intensity modulated radiation therapy. Ann. Oper. Res. 2009, 175, 309–365. [Google Scholar] [CrossRef]

- Cabrera-Guerrero, G.; Ehrgott, M.; Mason, A.; Raith, A. A matheuristic approach to solve the multiobjective beam angle optimization problem in intensity-modulated radiation therapy. Int. Trans. Oper. Res. 2018, 25, 243–268. [Google Scholar] [CrossRef]

- Cabrera-Guerrero, G.; Lagos, C.; Cabrera, E.; Johnson, F.; Rubio, J.M.; Paredes, F. Comparing Local Search Algorithms for the Beam Angles Selection in Radiotherapy. IEEE Access 2018, 6, 23701–23710. [Google Scholar] [CrossRef]

- Ehrgott, M.; Johnston, R. Optimisation of beam directions in intensity modulated radiation therapy planning. OR Spectr. 2003, 25, 251–264. [Google Scholar] [CrossRef]

- Pugachev, A.; Xing, L. Incorporating prior knowledge into beam orientaton optimization in IMRT. Int. J. Radiat. Oncol. Biol. Phys. 2002, 54, 1565–1574. [Google Scholar] [CrossRef]

- Pugachev, A.; Li, J.G.; Boyer, A.; Hancock, S.; Le, Q.; Donaldson, S.; Xing, L. Role of beam orientation optimization in intensity-modulated radiation therapy. Int. J. Radiat. Oncol. Biol. Phys. 2001, 50, 551–560. [Google Scholar] [CrossRef]

- Rowbottom, C.; Webb, S.; Oldham, M. Beam orientation optimization in intensity-modulated radiation treatment planning. Med. Phys. 1998, 25, 1171–1179. [Google Scholar] [CrossRef]

- Cabrera-Guerrero, G.; Mason, A.; Raith, A.; Ehrgott, M. Pareto local search algorithms for the multi-objective beam angle optimisation problem. J. Heur. 2018, 24, 205–238. [Google Scholar] [CrossRef]

- Cabrera-Guerrero, G.; Ehrgott, M.; Mason, A.; Raith, A. Bi-objective optimisation over a set of convex sub-problems. Ann. Oper. Res. 2021, in press. [Google Scholar] [CrossRef]

- Niemierko, A. Reporting and analyzing dose distributions: A concept of equivalent uniform dose. Med. Phys. 1997, 24, 103–113. [Google Scholar] [CrossRef]

- Perez-Alija, J.; Gallego, P.; Barceló, M.; Ansón, C.; Chimeno, J.; Latorre, A.; Jornet, N.; García, N.; Vivancos, H.; Ruíz, A.; et al. PO-1838 Dosimetric impact of the introduction of biological optimization objectives gEUD and RapidPlan. Radiother. Oncol. 2021, 161, S1567–S1568. [Google Scholar] [CrossRef]

- Fogliata, A.; Thompson, S.; Stravato, A.; Tomatis, S.; Scorsetti, M.; Cozzi, L. On the gEUD biological optimization objective for organs at risk in Photon Optimizer of Eclipse treatment planning system. J. Appl. Clin. Med. Phys. 2018, 19, 106–114. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Thomas, E.; Chapet, O.; Kessler, M.; Lawrence, T.; Ten Haken, R. Benefit of using biologic parameters (EUD and NTCP) in IMRT optimization for treatment of intrahepatic tumors. Int. J. Radiat. Oncol. Biol. Phys. 2005, 62, 571–578. [Google Scholar] [CrossRef]

- Wu, Q.; Mohan, R.; Niemierko, A. IMRT optimization based on the generalized equivalent uniform dose (EUD). In Engineering in Medicine and Biology Society, 2000, Proceedings of the 22nd Annual International Conference of the IEEE, Chicago, IL, USA, 23–28 July 2000; Enderle, J., Ed.; IEEE: Piscataway, NJ, USA, 2000; Volume 1, pp. 710–713. [Google Scholar]

- Wu, Q.; Djajaputra, D.; Wu, Y.; Zhou, J.; Liu, H.; Mohan, R. Intensity-modulated radiotherapy optimization with gEUD-guided dose-volume objectives. Phys. Med. Biol. 2003, 48, 279–291. [Google Scholar] [CrossRef]

- Cabrera-Guerrero, G.; Rodriguez, N.; Lagos, C.; Cabrera, E.; Johnson, F. Local Search Algorithms for the Beam Angles’ Selection Problem in Radiotherapy. Math. Probl. Eng. 2018, 2018, 4978703. [Google Scholar] [CrossRef] [Green Version]

- Cabrera G., G.; Ehrgott, M.; Mason, A.; Philpott, A. Multi-objective optimisation of positively homogeneous functions and an application in radiation therapy. Oper. Res. Lett. 2014, 42, 268–272. [Google Scholar] [CrossRef]

- Miettinen, K. Nonlinear Multiobjective Optimization. In International Series in Operations Research and Management Science; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1999; Volume 12. [Google Scholar]

- Dias, J.; Rocha, H.; Ferreira, B.; Lopes, M. A genetic algorithm with neural network fitness function evaluation for IMRT beam angle optimization. Cent. Eur. J. Oper. Res. 2014, 22, 431–455. [Google Scholar] [CrossRef] [Green Version]

- Lei, J.; Li, Y. An approaching genetic algorithm for automatic beam angle selection in IMRT planning. Comput. Methods Programs Biomed. 2009, 93, 257–265. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Yao, J.; Yao, D. Automatic beam angle selection in IMRT planning using genetic algorithm. Phys. Med. Biol. 2004, 49, 1915–1932. [Google Scholar] [CrossRef]

- Li, Y.; Yao, D.; Chen, W. A particle swarm optimization algorithm for beam angle selection in intensity-modulated radiotherapy planning. Phys. Med. Biol. 2005, 50, 3491–3514. [Google Scholar] [CrossRef]

- Li, Y.; Yao, D. Accelerating the Radiotherapy Planning with a Hybrid Method of Genetic Algorithm and Ant Colony System. In Advances in Natural Computation; Lecture Notes in Computer, Science; Jiao, L., Wang, L., Gao, X., Liu, J., Wu, F., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4222, pp. 340–349. [Google Scholar]

- Li, Y.; Yao, D.; Chen, W.; Zheng, J.; Yao, J. Ant colony system for the beam angle optimization problem in radiotherapy planning: A preliminary study. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Scotland, UK, 2–5 September 2005; Corne, D., Ed.; IEEE: Piscataway, NJ, USA, 2005; pp. 1532–1538. [Google Scholar]

- Bertsimas, D.; Cacchiani, V.; Craft, D.; Nohadani, O. A hybrid approach to beam angle optimization in intensity-modulated radiation therapy. Comput. Oper. Res. 2013, 40, 2187–2197. [Google Scholar] [CrossRef]

- Bortfeld, T.; Schlegel, W. Optimization of beam orientations in radiation therapy: Some theoretical considerations. Phys. Med. Biol. 1993, 38, 291–304. [Google Scholar] [CrossRef]

- Djajaputra, D.; Wu, Q.; Wu, Y.; Mohan, R. Algorithm and performance of a clinical IMRT beam-angle optimization system. Phys. Med. Biol. 2003, 48, 3191. [Google Scholar] [CrossRef] [PubMed]

- Stein, J.; Mohan, R.; Wang, X.; Bortfeld, T.; Wu, Q.; Preiser, K.; Ling, C.; Schlegel, W. Number and orientation of beams in intensity-modulated radiation treatments. Med. Phys. 1997, 24, 149–160. [Google Scholar] [CrossRef]

- Aleman, D.; Kumar, A.; Ahuja, R.; Romeijn, H.; Dempsey, J. Neighborhood search approaches to beam orientation optimization in intensity modulated radiation therapy treatment planning. J. Glob. Optim. 2008, 42, 587–607. [Google Scholar] [CrossRef]

- Craft, D. Local beam angle optimization with linear programming and gradient search. Phys. Med. Biol. 2007, 52, 127–135. [Google Scholar] [CrossRef] [PubMed]

- Das, S.; Cullip, T.; Tracton, G.; Chang, S.; Marks, L.; Anscher, M.; Rosenman, J. Beam orientation selection for intensity-modulated radiation therapy based on target equivalent uniform dose maximization. Int. J. Radiat. Oncol. Biol. Phys. 2003, 55, 215–224. [Google Scholar] [CrossRef]

- Lim, G.; Kardar, L.; Cao, W. A hybrid framework for optimizing beam angles in radiation therapy planning. Ann. Oper. Res. 2014, 217, 357–383. [Google Scholar] [CrossRef]

- Gutierrez, M.; Cabrera-Guerrero, G. A Reduced Variable Neighbourhood Search Algorithm for the Beam Angle Selection Problem in Radiation Therapy. In Proceedings of the 2020 39th International Conference of the Chilean Computer Science Society (SCCC), Coquimbo, Chile, 16–20 November 2020. [Google Scholar]

- Gutierrez, M.; Cabrera-Guerrero, G. A Variable Neighbourhood Search Algorithm for the Beam Angle Selection Problem in Radiation Therapy. In Proceedings of the 2018 37th International Conference of the Chilean Computer Science Society (SCCC), Santiago, Chile, 5–9 November 2018. [Google Scholar]

- Aleman, D.M.; Romeijn, H.E.; Dempsey, J.F. A Response Surface Approach to Beam Orientation Optimization in Intensity-Modulated Radiation Therapy Treatment Planning. INFORMS J. Comput. 2009, 21, 62–76. [Google Scholar] [CrossRef]

- Zhang, H.H.; Gao, S.; Chen, W.; Shi, L.; D’Souza, W.D.; Meyer, R.R. A surrogate-based metaheuristic global search method for beam angle selection in radiation treatment planning. Phys. Med. Biol. 2013, 58, 1933–1946. [Google Scholar] [CrossRef] [Green Version]

- Rocha, H.; Dias, J.M.; Ferreira, B.C.; Lopes, M.C. Beam angle optimization for intensity-modulated radiation therapy using a guided pattern search method. Phys. Med. Biol. 2013, 58, 2939–2953. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Ehrgott, M.; Holder, A.; Reese, J. Beam selection in radiotherapy design. Linear Algebra Its Appl. 2008, 428, 1272–1312. [Google Scholar] [CrossRef] [Green Version]

- Lim, G.; Cao, W. A two-phase method for selecting IMRT treatment beam angles: Branch-and-Prune and local neighborhood search. Eur. J. Oper. Res. 2012, 217, 609–618. [Google Scholar] [CrossRef]

- Zhang, H.H.; Shi, L.; Meyer, R.; Nazareth, D.; D’Souza, W. Solving Beam-Angle Selection and Dose Optimization Simultaneously via High-Throughput Computing. INFORMS J. Comput. 2009, 21, 427–444. [Google Scholar] [CrossRef]

- Sadeghnejad Barkousaraie, A.; Ogunmolu, O.; Jiang, S.; Nguyen, D. A fast deep learning approach for beam orientation optimization for prostate cancer treated with intensity-modulated radiation therapy. Med. Phys. 2020, 47, 880–897. [Google Scholar] [CrossRef]

- Sadeghnejad-Barkousaraie, A.; Bohara, G.; Jiang, S.; Nguyen, D. A reinforcement learning application of a guided Monte Carlo tree search algorithm for beam orientation selection in radiation therapy. Mach. Learn. Sci. Technol. 2021, 2, 035013. [Google Scholar] [CrossRef]

- Gerlach, S.; Fürweger, C.; Hofmann, T.; Schlaefer, A. Feasibility and analysis of CNN-based candidate beam generation for robotic radiosurgery. Med. Phys. 2020, 47, 3806–3815. [Google Scholar] [CrossRef]

- Gerlach, S.; Fürweger, C.; Hofmann, T.; Schlaefer, A. Multicriterial CNN based beam generation for robotic radiosurgery of the prostate. Curr. Dir. Biomed. Eng. 2020, 6, 20200030. [Google Scholar] [CrossRef]

- Schreibmann, E.; Lahanas, M.; Xing, L.; Baltas, D. Multiobjective evolutionary optimization of the number of beams, their orientations and weights for intensity-modulated radiation therapy. Phys. Med. Biol. 2004, 49, 747–770. [Google Scholar] [CrossRef]

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. Evol. Comput. IEEE Trans. 2002, 6, 182–197. [Google Scholar] [CrossRef] [Green Version]

- Fiege, J.; McCurdy, B.; Potrebko, P.; Champion, H.; Cull, A. PARETO: A novel evolutionary optimization approach to multiobjective IMRT planning. Med. Phys. 2011, 38, 5217–5229. [Google Scholar] [CrossRef]

- Breedveld, S.; Storchi, P.R.M.; Voet, P.W.J.; Heijmen, B.J.M. iCycle: Integrated, multicriterial beam angle, and profile optimization for generation of coplanar and noncoplanar IMRT plans. Med. Phys. 2012, 39, 951–963. [Google Scholar] [CrossRef]

- Azizi-Sultan, A.S. Automatic Selection of Beam Orientations in Intensity-Modulated Radiation Therapy. Electron. Notes Discret. Math. 2010, 36, 127–134. [Google Scholar] [CrossRef]

- Breedveld, S.; Craft, D.; van Haveren, R.; Heijmen, B. Multi-criteria optimization and decision-making in radiotherapy. Eur. J. Oper. Res. 2019, 277, 1–19. [Google Scholar] [CrossRef]

- Chankong, V.; Haimes, Y. Multiobjective Decision Making Theory and Methodology; Elsevier Science: New York, NY, USA, 1983. [Google Scholar]

- Haimes, Y.Y.; Lasdon, L.; Da, D. On a Bicriterion Formulation of the Problems of Integrated System Identification and System Optimization. IEEE Trans. Syst. Man Cybern. 1971, 1, 296–297. [Google Scholar]

- Paquete, L.; Chiarandini, M.; Stützle, T. Pareto Local Optimum Sets in the Biobjective Traveling Salesman Problem: An Experimental Study. In Metaheuristics for Multiobjective Optimisation; Lecture Notes in Economics and Mathematical, Systems; Gandibleux, X., Sevaux, M., Sörensen, K., T’kindt, V., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; Volume 535, pp. 177–199. [Google Scholar]

- Angel, E.; Bampis, E.; Gourvès, L. A Dynasearch Neighborhood for the Bicriteria Traveling Salesman Problem. In Metaheuristics for Multiobjective Optimisation; Lecture Notes in Economics and Mathematical, Systems; Gandibleux, X., Sevaux, M., Sörensen, K., T’kindt, V., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; Volume 535, pp. 153–176. [Google Scholar]

- Lust, T.; Teghem, J. Two-phase Pareto local search for the biobjective traveling salesman problem. J. Heuristics 2010, 16, 475–510. [Google Scholar] [CrossRef]

- Drugan, M.; Thierens, D. Stochastic Pareto local search: Pareto neighbourhood exploration and perturbation strategies. J. Heuristics 2012, 18, 727–766. [Google Scholar] [CrossRef] [Green Version]

- Liefooghe, A.; Humeau, J.; Mesmoudi, S.; Jourdan, L.; Talbi, E.G. On dominance-based multiobjective local search: Design, implementation and experimental analysis on scheduling and traveling salesman problems. J. Heuristics 2011, 18, 317–352. [Google Scholar] [CrossRef]

- Eichfelder, G. Adaptive Scalarization Methods in Multiobjective Optimization; Springer: Berlin/Heidelberg, Germany, 2008. [Google Scholar]

- Deasy, J.; Blanco, A.; Clark, V. CERR: A computational environment for radiotherapy research. Med. Phys. 2003, 30, 979–985. [Google Scholar] [CrossRef]

- Wächter, A.; Biegler, L. On the implementation of a primal-dual interior point filter line search algorithm for large-scale nonlinear programming. Math. Program. 2006, 106, 25–57. [Google Scholar] [CrossRef]

- Hansen, M.; Jaszkiewicz, A. Evaluating the Quality of Approximations to the Non-Dominated Set; Technical Report; IMM, Department of Mathematical Modelling, Technical University of Denmark: Lyngby, Denmark, 1998. [Google Scholar]

- Knowles, J.; Corne, D. On metrics for comparing nondominated sets. In Proceedings of the 2002 Congress on Evolutionary Computation, Washington, DC, USA, 12–17 May 2002; Volume 1, pp. 711–716. [Google Scholar]

- Zitzler, E. Evolutionary Algorithms for Multiobjective Optimization: Methods and Applications. Ph.D. Thesis, ETH Zurich, Zurich, Switzerland, 1999. [Google Scholar]

- Zitzler, E.; Thiele, L.; Laumanns, M.; Fonseca, C.M.; Grunert da Fonseca, V. Performance Assessment of Multiobjective Optimizers: An Analysis and Review. IEEE Trans. Evol. Comput. 2003, 7, 117–132. [Google Scholar] [CrossRef] [Green Version]

| MO or SO | Dominance Analysis per Iteration | All Neighbours Explored | Iteration Consists on | Next Solution Selection | No Neighbour Meets the Criterion to Be Chosen | Termination Criterion | |

|---|---|---|---|---|---|---|---|

| PLS | MO | Y | Y | Exploring the entire neighbourhood of all solutions in the set of non-dominated points (NDPs). | No choosing criterion needed | Algorithm ends | The neighbourhood of all the solutions in the set of NDPs has already been explored |

| jPLS | MO | Y | Y | Selecting the solution with the best single-objective function value within the set of NDPs and exploring its entire neighbourhood. | Solution with the best single-objective function value in the set of NDPs | If no neighbour has a better objective function value than the current solution, it chooses the solution with the best objective function value from the set of NDPs | The neighbourhood of all the solutions in the set of NDPs has already been explored |

| rPLS | MO | Y | Y | Randomly selecting a solution within the set of NDPs and exploring its entire neighbourhood. | Randomly among those solutions in the set of NDPs | Algorithm ends | The neighbourhood of all the solutions in the set of NDPs has already been explored |

| nPLS | MO | Y | Y | Selecting a solution within the set of NDPs that dominates the current solution and exploring its entire neighbourhood. | First neighbour that dominates the current solution. | If no neighbour dominates the current solution, it chooses one solution randomly from the set of NDPs | The neighbourhood of all the solutions in the set of NDPs has already been explored |

| PLS | rPLS | jPLS | nPLS | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| # | f Eval | f Eval | f Eval | f Eval | |||||||||||||

| Equally distant BACs | 1 | 83.560 | 4 | 33 | 250 | 83.560 | 4 | 24 | 197 | 83.560 | 4 | 26 | 206 | 83.560 | 4 | 20 | 168 |

| 2 | 83.560 | 4 | 55 | 379 | 83.560 | 4 | 27 | 221 | 83.560 | 4 | 25 | 202 | 83.560 | 4 | 21 | 177 | |

| 3 | 83.560 | 4 | 82 | 576 | 83.560 | 4 | 27 | 223 | 83.560 | 4 | 29 | 246 | 83.560 | 4 | 37 | 299 | |

| 4 | 83.076 | 8 | 35 | 260 | 83.076 | 8 | 21 | 171 | 83.056 | 8 | 22 | 178 | 83.074 | 8 | 22 | 178 | |

| 5 | 83.076 | 8 | 27 | 207 | 83.076 | 8 | 20 | 159 | 83.056 | 8 | 21 | 171 | 83.074 | 8 | 20 | 164 | |

| 6 | 83.076 | 9 | 54 | 358 | 83.076 | 8 | 18 | 147 | 83.056 | 8 | 18 | 148 | 83.074 | 8 | 16 | 130 | |

| 7 | 83.076 | 9 | 63 | 400 | 83.076 | 8 | 20 | 161 | 83.056 | 8 | 21 | 170 | 83.076 | 9 | 20 | 156 | |

| 8 | 83.076 | 9 | 58 | 383 | 83.076 | 8 | 27 | 206 | 83.056 | 8 | 22 | 178 | 83.074 | 8 | 21 | 167 | |

| 9 | 83.076 | 9 | 57 | 393 | 83.076 | 8 | 24 | 191 | 83.056 | 8 | 23 | 187 | 83.074 | 8 | 23 | 183 | |

| 10 | 83.560 | 4 | 75 | 529 | 83.560 | 4 | 31 | 251 | 83.056 | 8 | 39 | 313 | 83.560 | 4 | 24 | 200 | |

| 11 | 83.560 | 4 | 46 | 333 | 83.560 | 4 | 22 | 182 | 83.560 | 4 | 17 | 144 | 83.560 | 4 | 19 | 157 | |

| 12 | 83.560 | 4 | 43 | 297 | 83.560 | 4 | 19 | 155 | 83.560 | 4 | 15 | 125 | 83.560 | 4 | 15 | 126 | |

| 13 | 83.560 | 4 | 40 | 272 | 83.560 | 4 | 13 | 110 | 83.560 | 4 | 14 | 118 | 83.560 | 4 | 14 | 117 | |

| 14 | 83.560 | 4 | 28 | 208 | 83.560 | 4 | 14 | 115 | 83.560 | 4 | 13 | 112 | 83.560 | 4 | 13 | 110 | |

| Semi-Random BACs | 15 | 83.076 | 9 | 68 | 476 | 83.076 | 8 | 34 | 269 | 83.076 | 8 | 29 | 240 | 83.076 | 9 | 43 | 335 |

| 16 | 83.560 | 4 | 112 | 794 | 83.560 | 4 | 32 | 268 | 83.560 | 4 | 39 | 326 | 83.560 | 4 | 38 | 320 | |

| 17 | 83.560 | 4 | 72 | 461 | 83.560 | 4 | 30 | 234 | 83.560 | 4 | 23 | 190 | 83.560 | 4 | 23 | 190 | |

| 18 | 83.560 | 4 | 198 | 1257 | 82.722 | 10 | 55 | 428 | 82.575 | 4 | 41 | 343 | 83.560 | 4 | 32 | 262 | |

| 19 | 83.681 | 8 | 50 | 338 | 83.681 | 8 | 23 | 185 | 83.681 | 8 | 21 | 175 | 83.681 | 8 | 30 | 240 | |

| 20 | 83.392 | 10 | 67 | 505 | 82.849 | 4 | 16 | 130 | 82.849 | 6 | 19 | 157 | 83.377 | 6 | 28 | 232 | |

| 21 | 83.076 | 9 | 103 | 710 | 82.385 | 9 | 33 | 256 | 82.490 | 4 | 26 | 219 | 83.076 | 8 | 30 | 247 | |

| 22 | 83.328 | 6 | 21 | 170 | 83.377 | 6 | 19 | 157 | 83.377 | 6 | 19 | 162 | 83.377 | 6 | 17 | 142 | |

| 23 | 83.681 | 8 | 102 | 694 | 83.566 | 8 | 74 | 566 | 83.067 | 5 | 41 | 339 | 82.777 | 4 | 35 | 288 | |

| 24 | 83.560 | 4 | 77 | 509 | 83.560 | 4 | 28 | 237 | 83.560 | 4 | 26 | 217 | 83.337 | 6 | 46 | 371 | |

| 25 | 83.681 | 8 | 55 | 336 | 82.849 | 8 | 21 | 167 | 83.681 | 8 | 18 | 150 | 83.681 | 8 | 24 | 190 | |

| 26 | 83.681 | 8 | 69 | 459 | 82.849 | 8 | 38 | 284 | 83.681 | 8 | 29 | 239 | 83.681 | 8 | 30 | 242 | |

| 27 | 83.337 | 6 | 145 | 1051 | 83.560 | 4 | 33 | 271 | 83.560 | 4 | 25 | 214 | 83.560 | 4 | 31 | 254 | |

| 28 | 83.681 | 8 | 97 | 646 | 82.849 | 8 | 42 | 336 | 83.681 | 8 | 38 | 313 | 83.501 | 4 | 32 | 265 | |

| 29 | 83.076 | 9 | 52 | 355 | 83.076 | 8 | 22 | 175 | 83.076 | 8 | 23 | 189 | 83.076 | 8 | 21 | 170 | |

| Completely Random BACs | 30 | 81.758 | 6 | 67 | 473 | 81.758 | 6 | 37 | 301 | 81.758 | 6 | 32 | 265 | 81.758 | 6 | 29 | 237 |

| 31 | 83.940 | 7 | 30 | 206 | 83.940 | 7 | 18 | 146 | 83.940 | 7 | 17 | 138 | 83.940 | 7 | 18 | 144 | |

| 32 | 83.377 | 6 | 138 | 936 | 83.377 | 6 | 43 | 352 | 83.377 | 6 | 44 | 361 | 83.377 | 6 | 39 | 325 | |

| 33 | 83.560 | 4 | 95 | 642 | 83.560 | 4 | 41 | 331 | 83.560 | 4 | 36 | 289 | 83.560 | 4 | 37 | 299 | |

| 34 | 83.076 | 8 | 92 | 639 | 83.076 | 8 | 32 | 262 | 83.076 | 8 | 32 | 266 | 83.076 | 8 | 30 | 249 | |

| 35 | 83.667 | 12 | 89 | 591 | 82.050 | 12 | 37 | 291 | 82.050 | 12 | 35 | 289 | 82.050 | 12 | 31 | 252 | |

| 36 | 83.914 | 10 | 35 | 253 | 83.914 | 10 | 21 | 164 | 83.914 | 10 | 20 | 164 | 83.914 | 10 | 22 | 173 | |

| 37 | 83.685 | 9 | 54 | 351 | 83.076 | 9 | 18 | 143 | 83.076 | 8 | 17 | 138 | 83.076 | 8 | 18 | 146 | |

| 38 | 83.918 | 5 | 65 | 445 | 83.805 | 5 | 32 | 258 | 83.805 | 5 | 31 | 252 | 83.805 | 5 | 33 | 265 | |

| 39 | 83.278 | 14 | 43 | 318 | 83.278 | 14 | 30 | 232 | 83.688 | 4 | 43 | 355 | 83.278 | 14 | 30 | 222 | |

| 40 | 82.224 | 14 | 92 | 613 | 81.785 | 14 | 38 | 296 | 81.785 | 14 | 30 | 243 | 81.785 | 14 | 39 | 304 | |

| 41 | 81.758 | 6 | 55 | 425 | 81.758 | 6 | 46 | 366 | 81.758 | 6 | 32 | 269 | 81.758 | 6 | 40 | 327 | |

| 42 | 83.918 | 5 | 81 | 543 | 83.805 | 5 | 29 | 240 | 83.805 | 5 | 25 | 213 | 83.805 | 5 | 26 | 221 | |

| 43 | 83.914 | 10 | 122 | 839 | 82.849 | 4 | 45 | 356 | 83.337 | 6 | 30 | 255 | 83.972 | 4 | 56 | 456 | |

| 44 | 82.455 | 5 | 98 | 670 | 82.455 | 5 | 48 | 389 | 82.455 | 5 | 45 | 374 | 82.455 | 5 | 41 | 348 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cabrera-Guerrero, G.; Lagos, C. Comparing Multi-Objective Local Search Algorithms for the Beam Angle Selection Problem. Mathematics 2022, 10, 159. https://doi.org/10.3390/math10010159

Cabrera-Guerrero G, Lagos C. Comparing Multi-Objective Local Search Algorithms for the Beam Angle Selection Problem. Mathematics. 2022; 10(1):159. https://doi.org/10.3390/math10010159

Chicago/Turabian StyleCabrera-Guerrero, Guillermo, and Carolina Lagos. 2022. "Comparing Multi-Objective Local Search Algorithms for the Beam Angle Selection Problem" Mathematics 10, no. 1: 159. https://doi.org/10.3390/math10010159

APA StyleCabrera-Guerrero, G., & Lagos, C. (2022). Comparing Multi-Objective Local Search Algorithms for the Beam Angle Selection Problem. Mathematics, 10(1), 159. https://doi.org/10.3390/math10010159