How Digital and Oral Peer Feedback Improves High School Students’ Written Argumentation—A Case Study Exploring the Effectiveness of Peer Feedback in Geography

Abstract

1. Introduction

1.1. Research Background

1.2. Research Questions

- What do successful and effective peer feedback groups do differently or better than less effective groups?

- What deficits and potential in written argumentation and feedback can be identified?

- How are different media (digital or oral) used for feedback?

- To what extent do students accept feedback, and how do they revise their texts in response to their peers’ feedback?

2. Theoretical Perspectives

2.1. Argumentation (Competence) in Educational Contexts

2.2. A Disciplinary Literacy Approach: Written Argumentation Competence in Geography Education

2.3. Developing High School Students’ Argumentation Skills in Geography

2.4. Peer Feedback in the Context of Written Argumentation

3. Methods

3.1. Classroom Contexts: Participants, Procedure of the Teaching Unit

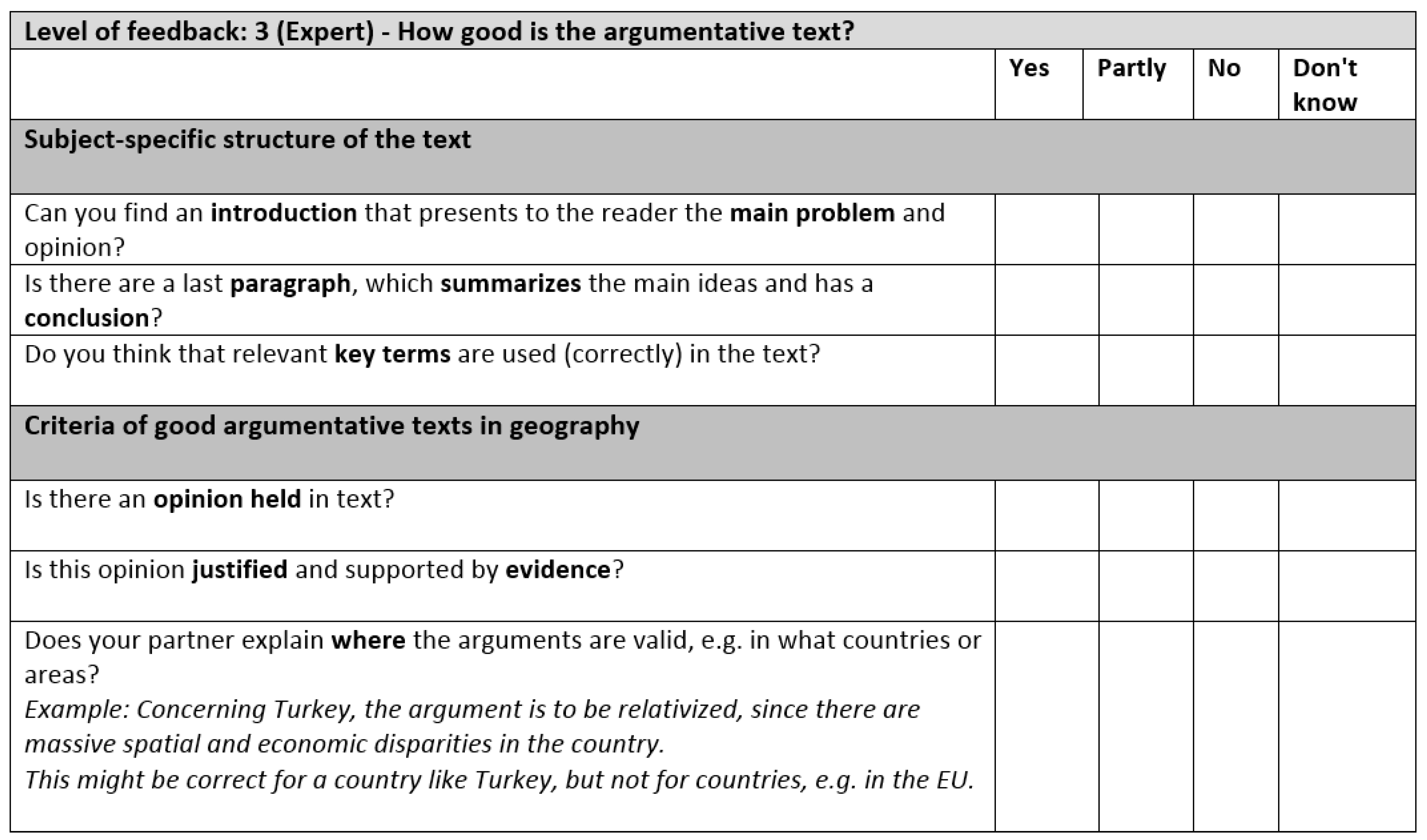

3.2. Theoretically and Empirically Guided Design of a Feedback Sheet for Written Argumentation in Geography

3.3. Methods of the Case Study: Quantitative and Qualitative Mixed-Method Approach and Data Analysis

- The second task was the handling of feedback in the text itself. The students were asked to write in Word because Word offers a “versions” function, which stores different versions of a text file and visualizes the changes between them. In addition, the students’ annotations could be saved and highlighted digitally. This allowed a procedural document analysis of the three stages of each text: the pre-text, the reviewed text, and the final post-text [119]. In this way, we could document which kinds of feedback appeared in the feedback sheet and in the texts, and which feedback suggestions appear to have been accepted, leading to changes or corrections in the final file.

- The final task was the analysis of the interactional stage of the feedback, in which the students presented their feedback to each other. First, we recorded the students’ interactions and transcribed them following the conventions of conversation analysis and sociolinguistic analysis [120,121,122]. We decided against video recording because the visual aspects of feedback were not the focus of our study and because video would have meant more organizational effort and more pressure on the students. Using the transcripts, we documented the number of speech acts [123], the number of speaker changes as an indicator of the degree of interaction, and the content of each speech act. We analyzed the content by assigning the speech acts that referred to content during the interactional feedback process to inductively derived, partially overlapping categories based on several fundamental frameworks (Table 1) [28,124,125].
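The procedural document analysis in the second task compares text versions and asks which marked passages were revised. The Python sketch below illustrates that comparison under stated assumptions. It is not the authors’ procedure, which relied on Word’s version function and manual coding. It assumes that each feedback suggestion is stored as a marked passage plus a comment (the `Suggestion` class is hypothetical). It lists word-level differences between the pre-text and the post-text using the standard library’s `difflib.SequenceMatcher`. It then applies a deliberately crude, hypothetical uptake heuristic: a suggestion counts as taken up if its marked passage no longer appears verbatim in the post-text. The example sentences are invented.

```python
import difflib
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    """Hypothetical record of one feedback suggestion from the reviewed text."""
    passage: str  # text span the reviewer marked
    comment: str  # the reviewer's annotation


def changed_segments(pre_text: str, post_text: str) -> list[tuple[str, str]]:
    """Return (old, new) word spans for every region that differs between versions."""
    pre_words, post_words = pre_text.split(), post_text.split()
    matcher = difflib.SequenceMatcher(a=pre_words, b=post_words, autojunk=False)
    return [
        (" ".join(pre_words[i1:i2]), " ".join(post_words[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"  # keep replacements, insertions and deletions
    ]


def uptake(suggestions: list[Suggestion], post_text: str) -> dict[str, bool]:
    """Hypothetical heuristic: a suggestion counts as taken up if its marked
    passage no longer occurs verbatim in the final post-text."""
    return {s.passage: s.passage not in post_text for s in suggestions}


if __name__ == "__main__":
    # Invented example texts, not data from the study.
    pre = "Turkey should join the EU because it is big."
    post = "Turkey should join the EU because, according to the text, it is a large economy."
    feedback = [
        Suggestion("because it is big", "Give evidence for this claim."),
        Suggestion("Turkey should join the EU", "Clear thesis."),
    ]
    for old, new in changed_segments(pre, post):
        print(f"{old!r} -> {new!r}")
    print(uptake(feedback, post))
```

When run as a script, it prints the two changed spans (`'because'` becoming `'because, according to the text,'` and `'big.'` becoming `'a large economy.'`), followed by the uptake dictionary. A verbatim-match heuristic misses partial revisions and cannot tell whether a change actually addresses the comment. At most, it could pre-sort passages for a human coder.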
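Once the speech acts have been segmented and coded by hand, the quantitative part of the final task reduces to simple counts. The Python sketch below assumes a hypothetical data format in which each coded speech act is a `SpeechAct` with a speaker ID and one or more discourse codes. The codes are stored as a tuple because the categories partially overlap. The sketch counts speech acts overall and per speaker, the number of speaker changes (used as an indicator of interaction), and the frequency of each code. The code labels are illustrative: two are snake_case versions of Table 1 categories, and the rest are invented. None of them reproduce the authors’ coding scheme.

```python
from collections import Counter
from typing import NamedTuple


class SpeechAct(NamedTuple):
    """One coded speech act from a transcript (hypothetical data format)."""
    speaker: str            # anonymized student ID, e.g. "NM12"
    codes: tuple[str, ...]  # one or more discourse codes; categories may overlap


def interaction_profile(acts: list[SpeechAct]) -> dict:
    """Count speech acts, speaker changes and code frequencies for one feedback talk."""
    per_speaker = Counter(act.speaker for act in acts)
    # A speaker change is any pair of consecutive acts by different speakers.
    speaker_changes = sum(
        1 for prev, cur in zip(acts, acts[1:]) if prev.speaker != cur.speaker
    )
    # Each act may carry several overlapping codes, so count every assignment.
    code_counts = Counter(code for act in acts for code in act.codes)
    return {
        "speech_acts": len(acts),
        "acts_per_speaker": dict(per_speaker),
        "speaker_changes": speaker_changes,
        "code_counts": dict(code_counts),
    }


if __name__ == "__main__":
    # Illustrative coding of the NM12/HH1 exchange quoted in Section 4.2;
    # "justification", "acceptance" and "revision_plan" are invented labels.
    talk = [
        SpeechAct("NM12", ("criticism_with_text_reference",)),
        SpeechAct("HH1", ("justification",)),
        SpeechAct("NM12", ("criticism_without_text_reference",)),
        SpeechAct("HH1", ("acceptance", "revision_plan")),
    ]
    print(interaction_profile(talk))
```

For this coded exchange, the profile reports four speech acts (two per student), three speaker changes, and five code assignments, because the last act carries two overlapping codes.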

4. Findings

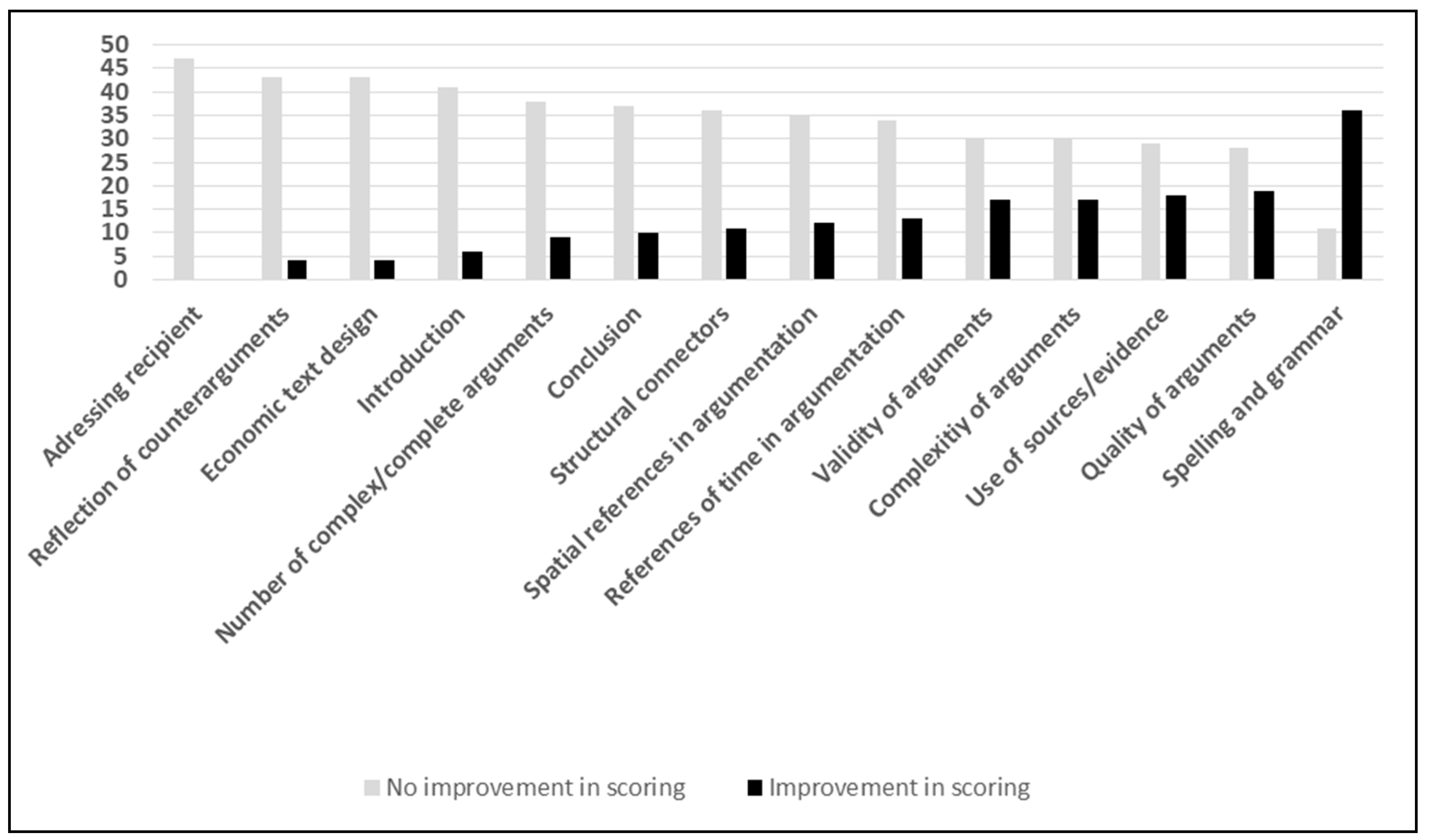

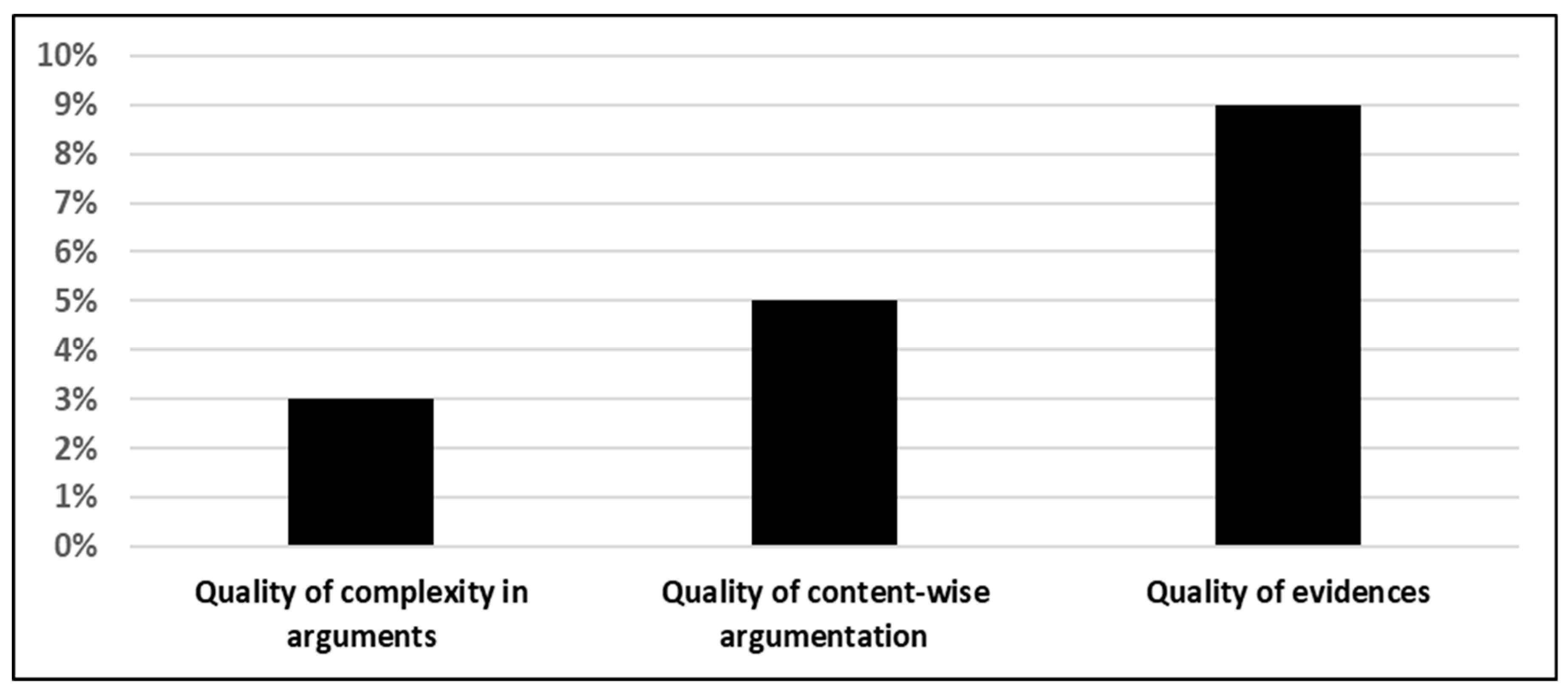

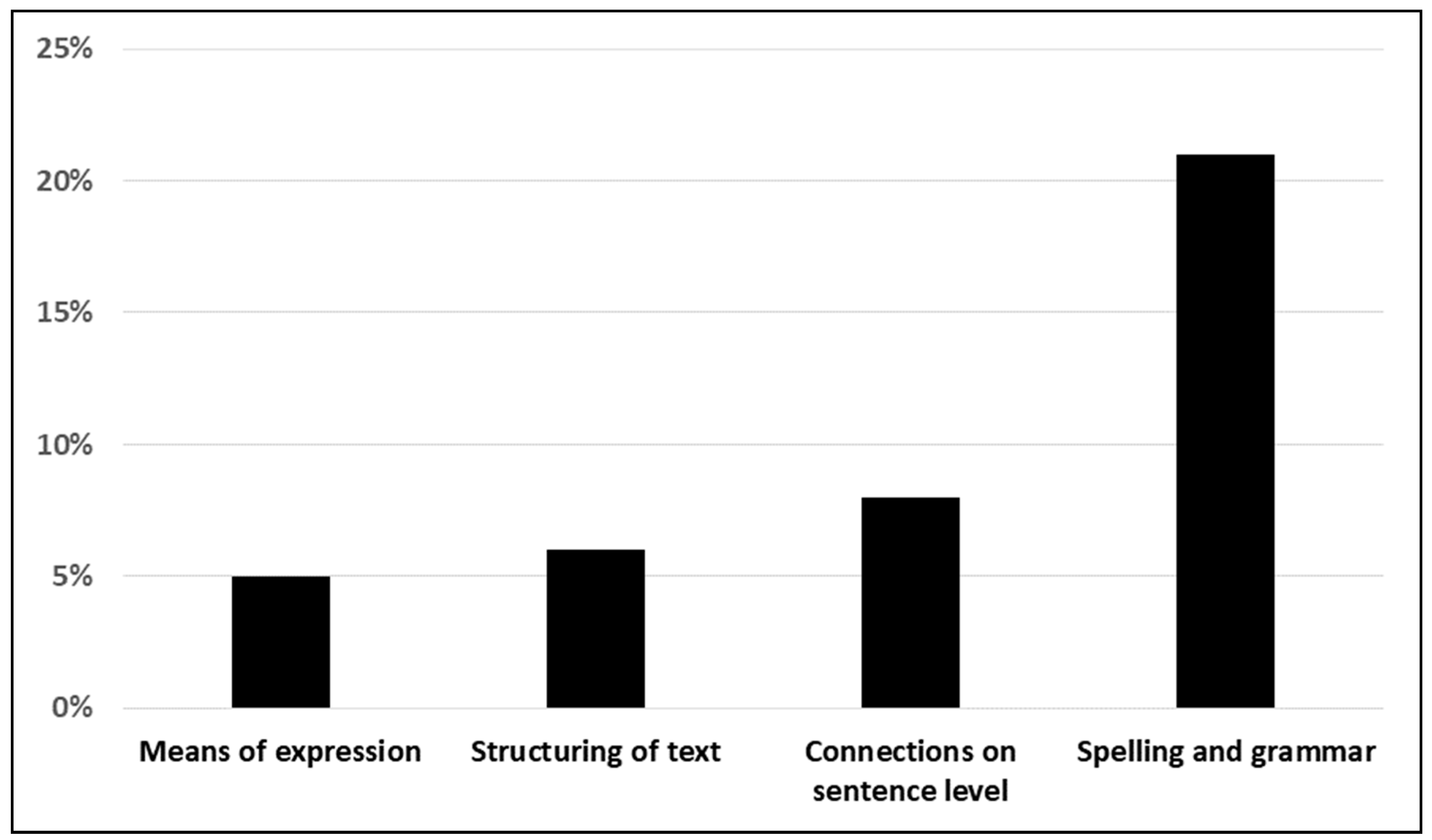

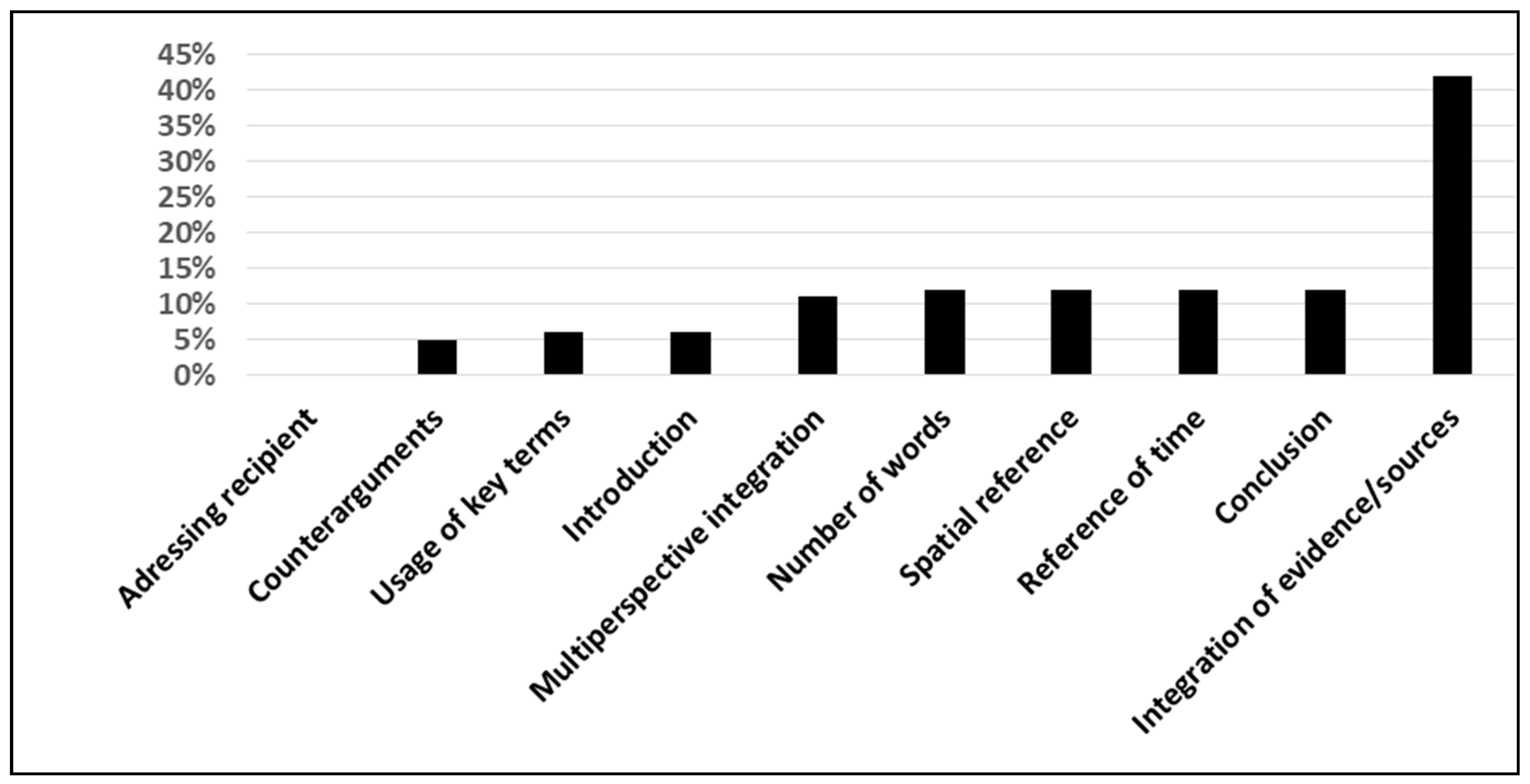

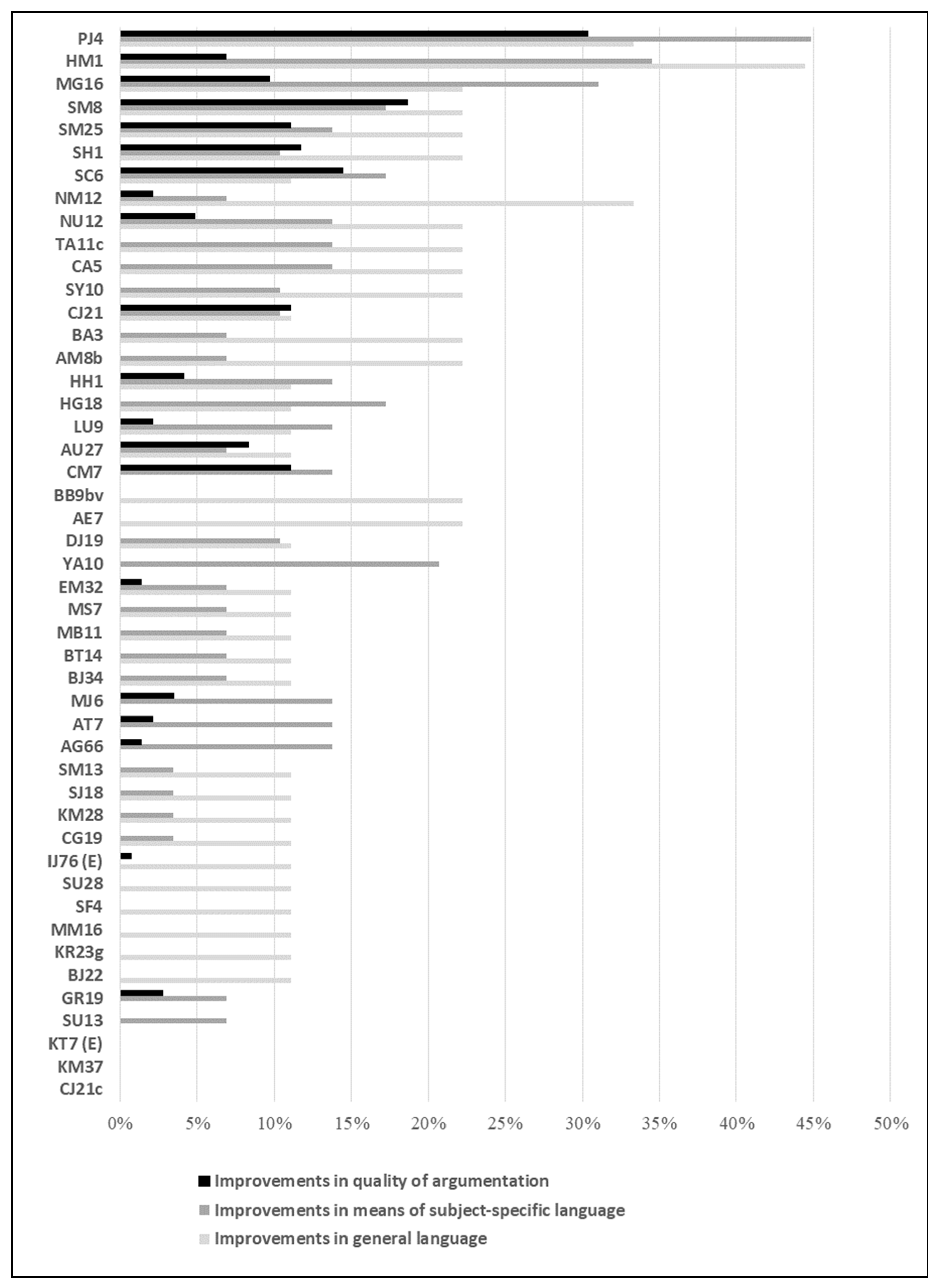

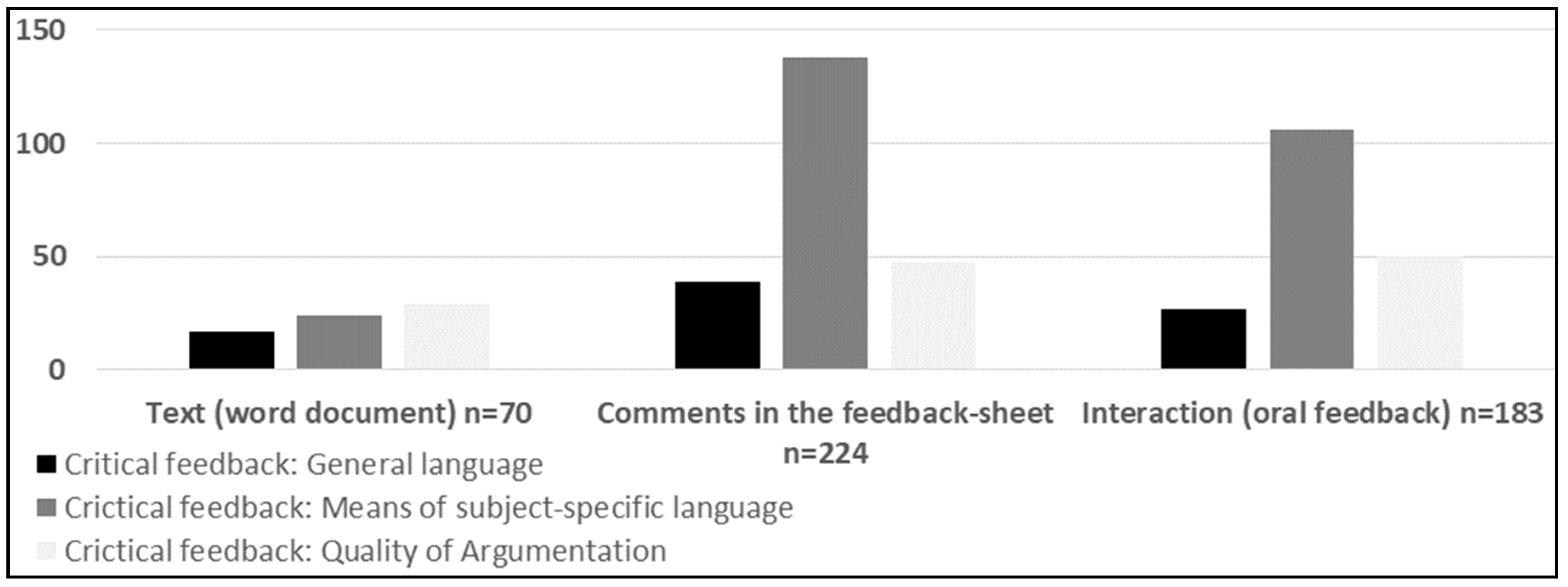

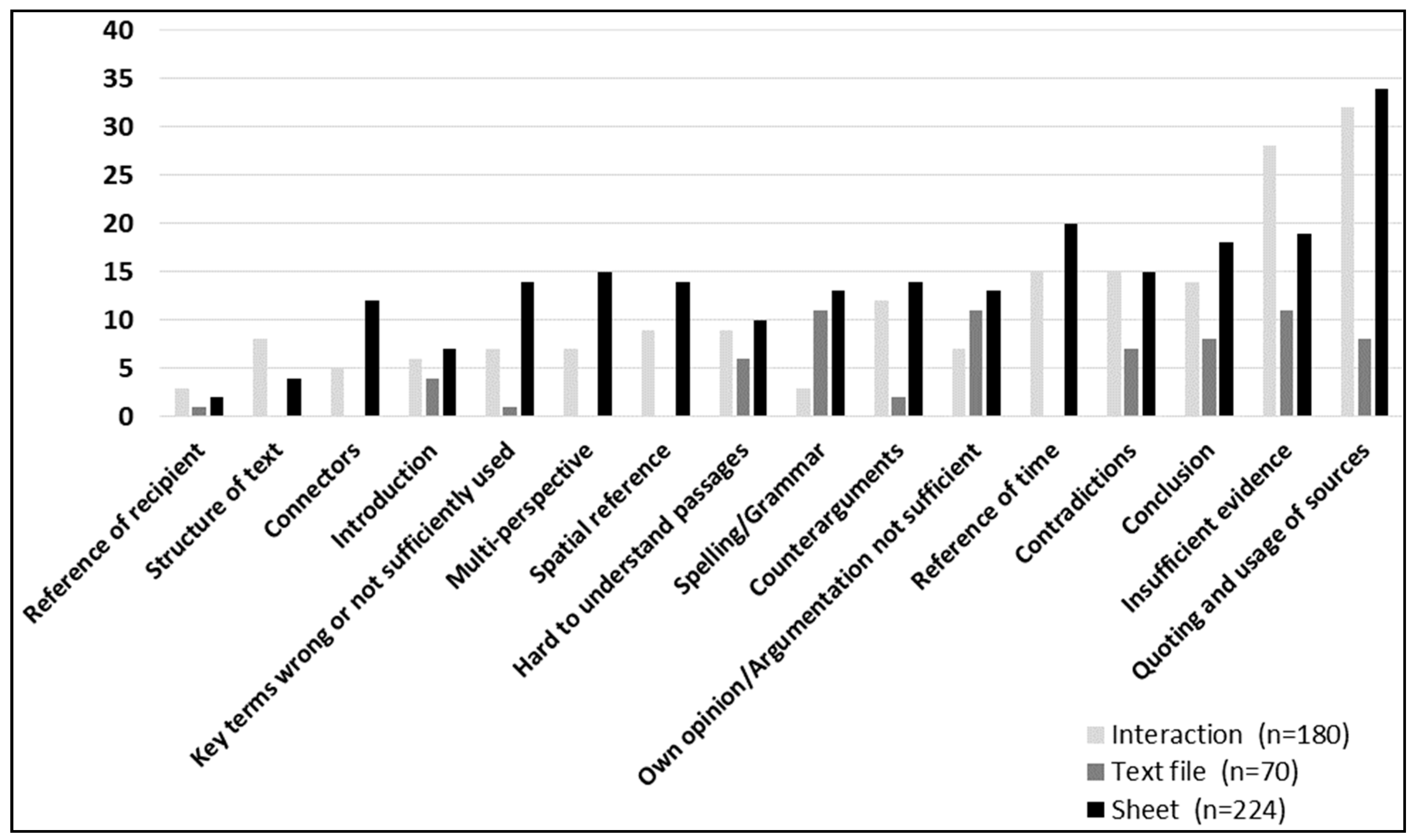

4.1. Descriptive Findings

4.2. Explanatory Findings

NM12: “And… (hesitating) your lines of argumentation were very good. What I missed were evidences, facts, you know, (unintelligible), so what you relate to. Here, he (Erdogan) never mentioned, this is why you have… too, and you have to give evidence for that. This is why I marked that passage, you understand?”

NM12: “That, here, he never said it that way, so I think…you have to give evidence for that. This is why I marked it, you know?”
HH1: “Yes, but, I thought…I used a different phrase. I can do that, can’t I?”
NM12: “Yeah, but still. You have to…you know… prove it somehow, give evidence.”
HH1: “Yes, I could have used a quote from the text. Ok, I agree. I will do that.”

KM28: “Well, I really liked how you justified and explained, when you write here, that the EU would be able to take action in the crisis. That’s a really good reason.”

MG16: “What you could have done is, you could’ve used more paragraphs, like, you know for structure.”

BJ22: “True, I think this would have helped to make the text easier to read. Yes, I haven’t thought of that, actually.”
MG16: “Yes, but we haven’t had enough time, I know. So it’s alright.”

AD185: “…ok, the text has many grammar and spelling errors, like generally, many errors, it is not written appropriately, many parts cannot be understood, because of spelling…ehm…the bad structure of the text and general a bad structure makes it hard to follow…ehm…it is difficult to follow ideas and points of the author.”

BJ22: “…and then there is the main part with arguments, which is badly structured, everything is mixed up, really bad.”

5. Discussion and Implications

5.1. Factors for Success in Feedback and the Role of Socio-Scientific References in Feedback

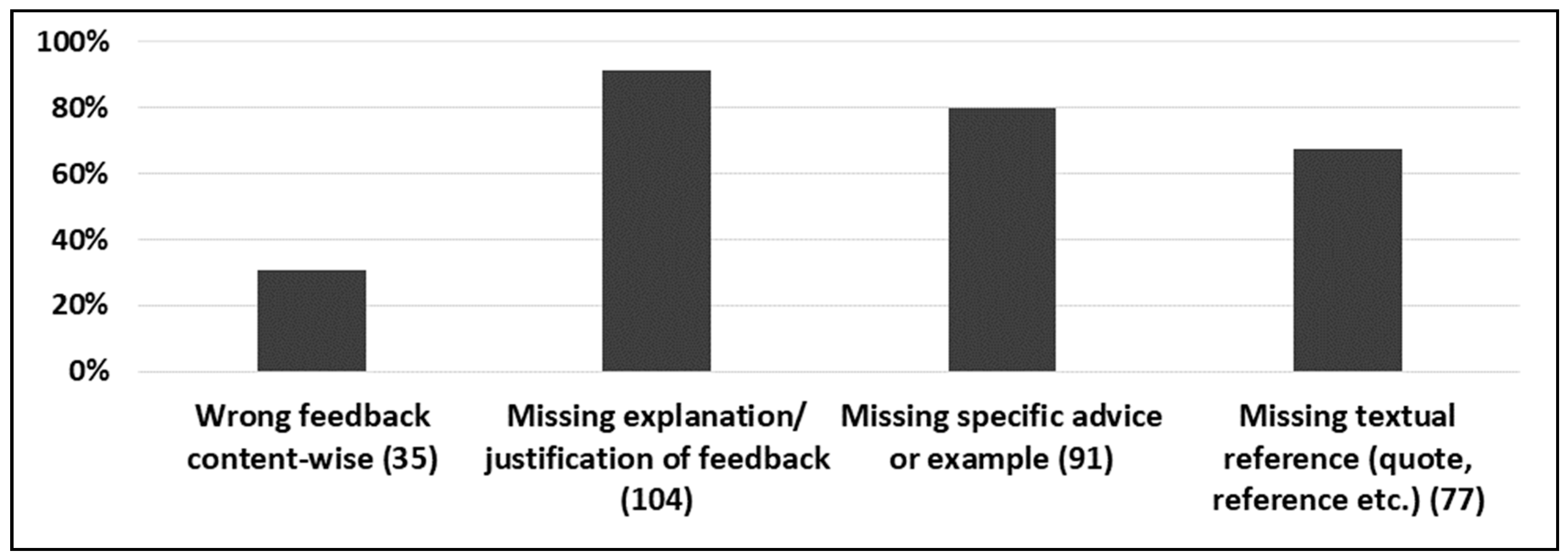

5.2. Deficits and Potential in Written Argumentation and Feedback in Terms of Disciplinary Literacy

5.3. Limitations

5.4. Implications and Prospects

Author Contributions

Funding

Conflicts of Interest

References

- Budke, A.; Meyer, M. Fachlich argumentieren lernen—Die Bedeutung der Argumentation in den unterschiedlichen Schulfächern. In Fachlich Argumentieren Lernen. Didaktische Forschungen zur Argumentation in den Unterrichtsfächern. Learning How to Argue in the Subjects; Budke, A., Kuckuck, M., Meyer, M., Schäbitz, F., Schlüter, K., Weiss, G., Eds.; Waxmann: Münster, Germany, 2015; pp. 9–31.

- Deutsche Gesellschaft für Geographie (DGfG)—German Association for Geography. Bildungsstandards im Fach Geographie für den Mittleren Schulabschluss mit Aufgabenbeispielen. Educational Standards in Geography for the Intermediate School Certificate; Selbstverlag DGfG: Bonn, Germany, 2014.

- National Research Council. New Research Opportunities in the Earth Sciences; The National Academies Press: Washington, DC, USA, 2012.

- Achieve. Next Generation Science Standards. 5 May 2012. Available online: http://www.nextgenerationscience.org (accessed on 16 June 2018).

- Osborne, J. Teaching Scientific Practices: Meeting the Challenge of Change. J. Sci. Teach. Educ. 2014, 25, 177–196.

- Jetton, T.L.; Shanahan, C. Preface. In Adolescent Literacy in the Academic Disciplines: General Principles and Practical Strategies; Jetton, T.L., Shanahan, C., Eds.; Guilford Press: New York, NY, USA, 2012; pp. 1–34.

- Norris, S.; Phillips, L. How literacy in its fundamental sense is central to scientific literacy. Sci. Educ. 2003, 87, 223–240.

- Leisen, J. Handbuch Sprachförderung im Fach. Sprachsensibler Fachunterricht in der Praxis. A Guide to Language Support in the Subjects. Language-Aware Subject Teaching in Practice; Varus: Bonn, Germany, 2010.

- Becker-Mrotzek, M.; Schramm, K.; Thürmann, E.; Vollmer, H.K. Sprache im Fach—Einleitung [Subject-language—An introduction]. In Sprache im Fach. Sprachlichkeit und Fachliches Lernen. Subject-Language and Learning in the Sciences; Becker-Mrotzek, M., Schramm, M.K., Thürmann, E., Vollmer, H.K., Eds.; Waxmann: Berlin, Germany, 2013; pp. 7–24.

- Budke, A.; Kuckuck, M. (Eds.) Sprache im Geographieunterricht. Bilinguale und sprachsensible Materialien und Methoden. Language in Geography Education. Bilingual and Language-Aware Material; Waxmann: Münster, Germany, 2017; Available online: https://www.waxmann.com/?eID=texte&pdf=3550Volltext.pdf&typ=zusatztext (accessed on 16 June 2018).

- Cummins, J. Interdependence of first- and second-language proficiency in bilingual children. In Language Processing in Bilingual Children; Bialystok, E., Ed.; Cambridge University Press: Cambridge, UK, 1991; pp. 70–89.

- Common Core State Standards Initiative. Common Core State Standards for English Language Arts and Literacy in History/Social Studies and Science. 2010. Available online: http://www.corestandards.org/ (accessed on 16 June 2018).

- Bazerman, C. Emerging perspectives on the many dimensions of scientific discourse. In Reading Science; Martin, J.R., Veel, R., Eds.; Routledge: London, UK, 1998; pp. 15–28.

- Halliday, M.A.K.; Martin, J.R. Writing Science: Literacy and Discursive Power; University of Pittsburgh Press: Pittsburgh, PA, USA, 1993.

- Pearson, J.C.; Carmon, A.; Tobola, C.; Fowler, M. Motives for communication: Why the Millennial generation uses electronic devices. J. Commun. Speech Theatre Assoc. N. D. 2010, 22, 45–55.

- Weiss, I.R.; Pasley, J.D.; Smith, P.; Banilower, E.R.; Heck, D.J. A Study of K–12 Mathematics and Science Education in the United States; Horizon Research: Chapel Hill, NC, USA, 2003.

- Evagorou, M.; Osborne, J. Exploring Young Students’ Collaborative Argumentation Within a Socioscientific Issue. J. Res. Sci. Teach. 2013, 50, 209–237.

- Budke, A. Förderung von Argumentationskompetenzen in aktuellen Geographieschulbüchern. In Aufgaben im Schulbuch; Matthes, E., Heinze, C., Eds.; Julius Klinkhardt: Bad Heilbrunn, Germany, 2011.

- Erduran, S.; Simon, S.; Osborne, J. TAPping into argumentation: Developments in the application of Toulmin’s Argument Pattern for studying science discourse. Sci. Educ. 2004, 88, 915–933.

- Bell, P. Promoting Students’ Argument Construction and Collaborative Debate in the Science Classroom. In Internet Environments for Science Education; Linn, M., Davis, E., Bell, P., Eds.; Lawrence Erlbaum: Hillsdale, NJ, USA, 2004; pp. 115–143.

- Newton, P.; Driver, R.; Osborne, J. The place of argumentation in the pedagogy of school science. Int. J. Sci. Educ. 1999, 21, 553–576.

- Budke, A.; Kuckuck, M.; Morawski, M. Sprachbewusste Kartenarbeit? Beobachtungen zum Karteneinsatz im Geographieunterricht. Language-aware analysis of teaching maps. GW-Unterricht 2017, 148, 5–15.

- Yu, S.; Lee, I. Peer feedback in second language writing (2005–2014). Lang. Teach. 2016, 49, 461–493.

- Diab, N.W. Assessing the relationship between different types of student feedback and the quality of revised writing. Assess. Writ. 2011, 14, 274–292.

- Berggren, J. Learning from giving feedback: A study of secondary-level students. ELT J. 2015, 69, 58–70.

- Lehnen, K. Gemeinsames Schreiben. Writing together. In Schriftlicher Sprachgebrauch/Texte Verfassen. Writing Texts. Written Usage of Language; Feilke, H., Pohl, T., Eds.; Schneider Hohengehren: Baltmannsweiler, Germany, 2014; pp. 414–431.

- Rapanta, C.; Garcia-Mila, M.; Gilabert, S. What Is Meant by Argumentative Competence? An Integrative Review of Methods of Analysis and Assessment in Education. Rev. Educ. Res. 2013, 83, 438–520.

- Mercer, N.; Dawes, L.; Wegerif, R.; Sams, C. Reasoning as a scientist: Ways of helping children to use language to learn science. Br. Educ. Res. J. 2004, 30, 359–377.

- Roschelle, J.; Teasley, S. The Construction of Shared Knowledge in Collaborative Problem Solving; Springer: Heidelberg, Germany, 1995.

- Michalak, M.; Lemke, V.; Goeke, M. Sprache im Fachunterricht: Eine Einführung in Deutsch als Zweitsprache und Sprachbewussten Unterricht. Language in the Subject: An Introduction to German as a Second Language and Language-Aware Teaching; Narr Francke Attempto: Tübingen, Germany, 2015.

- Duschl, R.A.; Schweingruber, H.A.; Shouse, A.W. Taking Science to School: Learning and Teaching Science in Grades K–8; National Academies Press: Washington, DC, USA, 2007.

- National Research Council. Inquiry and the National Science Education Standards; National Academy Press: Washington, DC, USA, 2000; Available online: http://www.nap.edu/openbook.php?isbn=0309064767 (accessed on 16 June 2018).

- European Union Recommendation of the European Parliament and of the Council of 18 December 2006 on Key Competences for Lifelong Learning. 2006. Available online: http://eur-lex.europa.eu/LexUriServ/LexUriServ.do?uri=OJ:L:2006:394:0010:0018:en:PDF (accessed on 16 June 2018).

- Asterhan, C.S.C.; Schwarz, B.B. Argumentation and explanation in conceptual change: Indications from protocol analyses of peer-to-peer dialog. Cogn. Sci. 2009, 33, 374–400.

- Weinstock, M.P. Psychological research and the epistemological approach to argumentation. Informal Logic 2006, 26, 103–120.

- Weinstock, M.P.; Neuman, Y.; Glassner, A. Identification of informal reasoning fallacies as a function of epistemological level, grade level, and cognitive ability. J. Educ. Psychol. 2006, 98, 327–341.

- Hoogen, A. Didaktische Rekonstruktion des Themas Illegale Migration Argumentationsanalytische Untersuchung von Schüler*innenvorstellungen im Fach Geographie. Didactical Reconstruction of the Topic “Illegal Migration”. Analysis of Argumentation among Pupils’ Concepts. Ph.D. Thesis, 2006. Available online: https://www.uni-muenster.de/imperia/md/content/geographiedidaktische-forschungen/pdfdok/band_59.pdf (accessed on 16 June 2018).

- Means, M.L.; Voss, J.F. Who reasons well? Two studies of informal reasoning among children of different grade, ability and knowledge levels. Cogn. Instr. 1996, 14, 139–178.

- Reznitskaya, A.; Anderson, R.; McNurlen, B.; Nguyen-Jahiel, K.; Archoudidou, A.; Kim, S. Influence of oral discussion on written argument. Discourse Process. 2001, 32, 155–175.

- Kuhn, D. Education for Thinking; Harvard University Press: Cambridge, MA, USA, 2005.

- Leitao, S. The potential of argument in knowledge building. Hum. Dev. 2000, 43, 332–360.

- Nussbaum, E.M.; Sinatra, G.M. Argument and conceptual engagement. Contemp. Educ. Psychol. 2003, 28, 573–595.

- Kuckuck, M. Konflikte im Raum—Verständnis von Gesellschaftlichen Diskursen Durch Argumentation im Geographieunterricht. Spatial Conflicts—How Pupils Understand Social Discourses through Argumentation. Ph.D. Thesis, Monsenstein und Vannerdat, Münster, Germany, 2014. Geographiedidaktische Forschungen, Bd. 54.

- Dittrich, S. Argumentieren als Methode zur Problemlösung Eine Unterrichtsstudie zur Mündlichen Argumentation von Schülerinnen und Schülern in kooperativen Settings im Geographieunterricht. Solving Problems with Argumentation in the Geography Classroom. Ph.D. Thesis, 2017. Available online: https://www.uni-muenster.de/imperia/md/content/geographiedidaktische-forschungen/pdfdok/gdf_65_dittrich.pdf (accessed on 16 June 2018).

- Müller, B. Komplexe Mensch-Umwelt-Systeme im Geographieunterricht mit Hilfe von Argumentationen erschließen. Analyzing Complex Human-Environment Systems in Geography Education with Argumentation. Ph.D. Thesis, 2016. Available online: http://kups.ub.uni-koeln.de/7047/4/Komplexe_Systeme_Geographieunterricht_Beatrice_Mueller.pdf (accessed on 16 June 2018).

- Leder, J.S. Pedagogic Practice and the Transformative Potential of Education for Sustainable Development. Argumentation on Water Conflicts in Geography Teaching in Pune, India. Ph.D. Thesis, Universität zu Köln, Cologne, Germany, 2016. Available online: http://kups.ub.uni-koeln.de/7657/ (accessed on 16 June 2018).

- Kopperschmidt, J. Argumentationstheorie. Theory of Argumentation; Junius: Hamburg, Germany, 2000.

- Kienpointner, M. Argumentationsanalyse. Analysis of Argumentation; Innsbrucker Beiträge zur Kulturwissenschaft 56; Institut für Sprachwissenschaften der Universität Innsbruck: Innsbruck, Austria, 1983.

- Angell, R.B. Argumentationsrezeptionskompetenzen im Vergleich der Fächer Geographie, Biologie und Mathematik. In Reasoning and logic; Budke, A., Ed.; Appleton-Century-Crofts: New York, NY, USA, 1964.

- Toulmin, S. The Uses of Argument; Cambridge University Press: Cambridge, UK, 1958.

- Walton, D.N. The New Dialectic: Conversational Contexts of Argument; University of Toronto Press: Toronto, ON, Canada, 1998.

- Perelman, C. The Realm of Rhetoric; University of Notre Dame Press: Notre Dame, IN, USA, 1982.

- Felton, M.; Kuhn, D. The development of argumentative discourse skills. Discourse Processes 2001, 32, 135–153.

- Kuhn, D. The Skills of Argument; Cambridge University Press: Cambridge, UK, 1991.

- Kuhn, D.; Shaw, V.; Felton, M. Effects of dyadic interaction on argumentative reasoning. Cogn. Instr. 1997, 15, 287–315.

- Zohar, A.; Nemet, F. Fostering students’ knowledge and argumentation skills through dilemmas in human genetics. J. Res. Sci. Teach. 2002, 39, 35–62.

- Erduran, S.; Jiménez-Aleixandre, M.P. Argumentation in Science Education: Perspectives from Classroom-Based Research; Springer: Dordrecht, The Netherlands, 2008.

- Garcia-Mila, M.; Andersen, C. Cognitive foundations of learning argumentation. In Argumentation in Science Education: Perspectives from Classroom-Based Research; Erduran, S., Jiménez-Aleixandre, M.P., Eds.; Springer: Dordrecht, The Netherlands, 2008; pp. 29–47.

- McNeill, K.L. Teachers’ use of curriculum to support students in writing scientific arguments to explain phenomena. Sci. Educ. 2008, 93, 233–268.

- Billig, M. Arguing and Thinking: A Rhetorical Approach to Social Psychology; Cambridge University Press: Cambridge, UK, 1987.

- Bachmann, T.; Becker-Mrotzek, M. Schreibaufgaben situieren und profilieren. To profile writing tasks. In Textformen als Lernformen. Text Forms as Learning Forms; Pohl, T., Steinhoff, T., Eds.; Gilles & Francke: Duisburg, Germany, 2010; pp. 191–210.

- Feilke, H. Schreibdidaktische Konzepte. In Forschungshandbuch Empirische Schreibdidaktik. Handbook on Empirical Teaching Writing Research; Becker-Mrotzek, M., Grabowski, J., Steinhoff, T., Eds.; Waxmann: Münster, Germany, 2017; pp. 153–173.

- Hayes, J.; Flower, L. Identifying the organization of writing processes. In Cognitive Processes in Writing; Gregg, L., Steinberg, E., Eds.; Erlbaum: Hillsdale, NJ, USA, 1980; pp. 3–30.

- Dawson, V.; Venville, G. High-school students’ informal reasoning and argumentation about biotechnology: An indicator of scientific literacy? Int. J. Sci. Educ. 2009, 31, 1421–1445.

- Toulmin, S. The Uses of Argument; Beltz: Weinheim, Germany, 1996.

- CEFR (2001)—Europarat (2001). Gemeinsamer Europäischer Referenzrahmen für Sprachen: Lernen, Lehren, Beurteilen. Common European Framework for Languages; Langenscheidt: Berlin, Germany; München, Germany, 2011; Available online: https://www.coe.int/en/web/portfolio/the-common-european-framework-of-reference-for-languages-learning-teaching-assessment-cefr- (accessed on 16 June 2018).

- Jimenez-Aleixandre, M.; Erduran, S. Argumentation in science education: An overview. In Argumentation in Science Education: Perspectives from Classroom-Based Research; Erduran, S., Jimenez-Aleixandre, M., Eds.; Springer: New York, NY, USA, 2008; pp. 3–27.

- Evagorou, M. Discussing a socioscientific issue in a primary school classroom: The case of using a technology-supported environment in formal and nonformal settings. In Socioscientific Issues in the Classroom; Sadler, T., Ed.; Springer: New York, NY, USA, 2011; pp. 133–160.

- Evagorou, M.; Jiménez-Aleixandre, M.; Osborne, J. ‘Should We Kill the Grey Squirrels?’ A Study Exploring Students’ Justifications and Decision-Making. Int. J. Sci. Educ. 2012, 34, 401–428.

- Nielsen, J.A. Science in discussions: An analysis of the use of science content in socioscientific discussions. Sci. Educ. 2012, 96, 428–456.

- Oliveira, A.; Akerson, V.; Oldfield, M. Environmental argumentation as sociocultural activity. J. Res. Sci. Teach. 2012, 49, 869–897.

- Kuhn, D. Teaching and learning science as argument. Sci. Educ. 2010, 94, 810–824.

- Kuckuck, M. Die Rezeptionsfähigkeit von Schüler*innen und Schülern bei der Bewertung von Argumentationen im Geographieunterricht am Beispiel von raum-bezogenen Konflikten. Z. Geogr. 2015, 43, 263–284.

- Budke, A.; Weiss, G. Sprachsensibler Geographieunterricht. In Sprache als Lernmedium im Fachunterricht. Theorien und Modelle für das Sprachbewusste Lehren und Lernen. Language as Learning Medium in the Subjects; Michalak, M., Ed.; Schneider Hohengehren: Baltmannsweiler, Germany, 2014; pp. 113–133.

- Budke, A.; Uhlenwinkel, A. Argumentieren im Geographieunterricht. Theoretische Grundlagen und unterrichtspraktische Umsetzungen. In Geographische Bildung. Kompetenzen in der didaktischer Forschung und Schulpraxis. Geography Education. Competences in Educational Research and Practical Implementation; Meyer, C., Henry, R., Stöber, G., Eds.; Westermann: Braunschweig, Germany, 2011; pp. 114–129.

- Budke, A.; Kuckuck, M. (Eds.) Politische Bildung im Geographieunterricht. Civic Education in Teaching Geography; Franz Steiner Verlag: Stuttgart, Germany, 2016.

- Uhlenwinkel, A. Geographisches Wissen und geographische Argumentation. In Fachlich Argumentieren Lernen. Didaktische Forschungen zur Argumentation in den Unterrichtsfächern. Learning to Argue. Empirical Research about Argumentation in the Subjects; Budke, A., Kuckuck, M., Meyer, M., Schäbitz, F., Schlüter, K., Weiss, G., Eds.; Waxmann: Münster, Germany, 2015; pp. 46–61.

- Budke, A.; Michalak, M.; Kuckuck, M.; Müller, B. Diskursfähigkeit im Fach Geographie—Förderung von Kartenkompetenzen in Geographieschulbüchern. In Befähigung zu Gesellschaftlicher Teilhabe. Beiträge der Fachdidaktischen Forschung. Enabling Social Participation; Menthe, J., Höttecke, J.D., Zabka, T., Hammann, M., Rothgangel, M., Eds.; Waxmann: Münster, Germany, 2016; Bd. 10; pp. 231–246.

- Lehnen, K. Kooperatives Schreiben. In Handbuch Empirische Schreibdidaktik. Handbook on Empirical Teaching Writing Research; Becker-Mrotzek, M., Grabowski, J., Steinhoff, T., Eds.; Waxmann: Münster, Germany, 2017; pp. 299–315.

- Dillenbourg, P.; Baker, M.; Blaye, A.; O’Malley, C. The Evolution of Research on Collaborative Learning; Elsevier: Oxford, UK, 1996.

- Andriessen, J. Arguing to learn. In The Cambridge Handbook of the Learning Sciences; Sawyer, R.K., Ed.; Cambridge University Press: New York, NY, USA, 2005; pp. 443–459.

- Berland, L.; Reiser, B. Classroom communities’ adaptations of the practice of scientific argumentation. Sci. Educ. 2010, 95, 191–216.

- Teasley, S.D.; Roschelle, J. Constructing a joint problem space: The computer as a tool for sharing knowledge. In Computers as cognitive tools; Lajoie, S.P., Derry, S.J., Eds.; Lawrence Erlbaum Associates, Inc.: Mahwah, NJ, USA, 1993; pp. 229–258.

- Berland, L.K.; Hammer, D. Framing for scientific argumentation. J. Res. Sci. Teach. 2012, 49, 68–94.

- Damon, W. Peer education: The untapped potential. J. Appl. Dev. Psychol. 1984, 5, 331–343.

- Hatano, G.; Inagaki, K. Sharing cognition through collective comprehension activity. In Perspectives on Socially Shared Cognition; Resnick, L., Levine, J.M., Teasley, S.D., Eds.; American Psychological Association: Washington, DC, USA, 1991; pp. 331–348.

- Chi, M.; De Leeuw, N.; Chiu, M.H.; Lavancher, C. Eliciting Self-Explanations Improves Understanding. Cogn. Sci. 1994, 18, 439–477.

- King, A. Enhancing peer interaction and learning in the classroom through reciprocal interaction. Am. Educ. Res. J. 1990, 27, 664–687.

- Coyle, D.; Hood, P.; Marsh, D. CLIL: Content and Language Integrated Learning; Cambridge University Press: Cambridge, UK, 2010.

- Morawski, M.; Budke, A. Learning with and by Language: Bilingual Teaching Strategies for the Monolingual Language-Aware Geography Classroom. Geogr. Teach. 2017, 14, 48–67.

- Breidbach, S.; Viebrock, B. CLIL in Germany—Results from Recent Research in a Contested Field of Education. Int. CLIL Res. J. 2012, 1, 5–16.

- Gibbs, G.; Simpson, C. Conditions under which Assessment supports Student Learning. Learn. Teach. High. Educ. 2004, 1, 3–31.

- Diab, N.W. Effects of peer- versus self-editing on students’ revision of language errors in revised drafts. Syst. Int. J. Educ. Technol. Appl. Linguist. 2010, 38, 85–95.

- Zhao, H. Investigating learners’ use and understanding of peer and teacher feedback on writing: A comparative study in a Chinese English writing classroom. Assess. Writ. 2010, 1, 3–17.

- Séror, J. Alternative sources of feedback and second language writing development in university content courses. Can. J. Appl. Linguist./Revue canadienne de linguistique appliquée 2011, 14, 118–143.

- Lundstrom, K.; Baker, W. To give is better than to receive: The benefits of peer review to the reviewer’s own writing. J. Second Lang. Writ. 2009, 18, 30–43.

- Pyöriä, P.; Ojala, S.; Saari, T.; Järvinen, K. The Millennial Generation: A New Breed of Labour? Sage Open 2017, 7, 1–14.

- Bresman, H.; Rao, V. A Survey of 19 Countries Shows How Generations X, Y, and Z Are—And Aren’t—Different. 2017. Available online: https://hbr.org/2017/08/a-survey-of-19-countries-shows-how-generations-x-y-and-z-are-and-arent-different (accessed on 16 June 2018).

- Baur, R.; Goggin, M.; Wrede-Jackes, J. Der c-Test: Einsatzmöglichkeiten im Bereich DaZ. C-test: Usability in SLT; proDaz; Universität Duisburg-Essen: Duisburg, Germany; Stiftung Mercator: Berlin, Germany, 2013.

- Baur, R.; Spettmann, M. Lesefertigkeiten testen und fördern. Testing and supporting reading skills. In Bildungssprachliche Kompetenzen Fördern in der Zweitsprache. Supporting Scientific Language in SLT; Benholz, E., Kniffka, G., Winters-Ohle, B., Eds.; Waxmann: Münster, Germany, 2010; pp. 95–114.

- Metz, K. Children’s understanding of scientific inquiry: Their conceptualization of uncertainty in investigations of their own design. Cogn. Instr. 2004, 22, 219–290.

- Sandoval, W.A.; Millwood, K. The quality of students’ use of evidence in written scientific explanations. Cogn. Instr. 2005, 23, 23–55.

- Gibbons, P. Scaffolding Language, Scaffolding Learning. Teaching Second Language Learners in the Mainstream Classroom; Heinemann: Portsmouth, NH, USA, 2002.

- Kniffka, G. Scaffolding. 2010. Available online: http://www.uni-due.de/prodaz/konzept.php (accessed on 11 October 2017).

- Hogan, K.; Nastasi, B.; Pressley, M. Discourse patterns and collaborative scientific reasoning in peer and teacher-guided discussions. Cogn. Instr. 1999, 17, 379–432.

- Light, P. Collaborative learning with computers. In Language, Classrooms and Computers; Scrimshaw, P., Ed.; Routledge: London, UK, 1993; pp. 40–56.

- Alexopoulou, E.; Driver, R. Small group discussions in physics: Peer interaction in pairs and fours. J. Res. Sci. Teach. 1996, 33, 1099–1114.

- Bennett, J.; Hogarth, S.; Lubben, F.; Campbell, B.; Robinson, A. Talking science: The research evidence on the use of small group discussions in science teaching. Int. J. Sci. Educ. 2010, 32, 69–95.

- Gielen, S.; De Wever, B. Structuring peer assessment: Comparing the impact of the degree of structure on peer feedback content. Comput. Hum. Behav. 2015, 52, 315–325.

- Morawski, M.; Budke, A. Förderung von Argumentationskompetenzen durch das “Peer-Review-Verfahren” Ergebnisse einer empirischen Studie im Geographieunterricht unter besonderer Berücksichtigung von Schüler*innen, die Deutsch als Zweitsprache lernen. Teaching argumentation competence with peer-review. Results of an empirical study in geography education with special emphasis on students who learn German as a second language. Beiträge zur Fremdsprachenvermittlung, Special Issue 2018, in press.

- Hovardas, T.; Tsivitanidou, O.; Zacharia, Z. Peer versus expert feedback: An investigation of the quality of peer feedback among secondary school students. Comput. Educ. 2014, 71, 133–152.

- Gielen, S.; Peeters, E.; Dochy, F.; Onghena, P.; Struyven, K. Improving the effectiveness of peer feedback for learning. Learn. Instr. 2010, 20, 304–315.

- Becker-Mrotzek, M.; Böttcher, I. Schreibkompetenz Entwickeln und Beurteilen; Cornelsen: Berlin, Germany, 2012.

- Neumann, A. Zugänge zur Bestimmung von Textqualität. In Handbuch Empirische Schreibdidaktik. Handbook on Empirical Teaching Writing Research; Becker-Mrotzek, M., Grabowski, J., Steinhoff, T., Eds.; Waxmann: Münster, Germany, 2017; pp. 173–187.

- Denzin, N.K. The Research Act. A Theoretical Introduction to Sociological Methods; Aldine Pub: Chicago, IL, USA, 1970.

- Rost, J. Theory and Construction of Tests. In Testtheorie Testkonstruktion; Verlag Hans Huber: Bern, Switzerland; Göttingen, Germany; Toronto, ON, Canada; Seattle, WA, USA, 2004.

- Bortz, J.; Döring, N. Forschungsmethoden und Evaluation für Human- und Sozialwissenschaftler. Empirical Methods and Evaluation for the Human and Social Sciences, 4th ed.; Springer: Berlin/Heidelberg, Germany; New York, NY, USA, 2006.

- Wirtz, M.; Caspar, F. Beurteilerübereinstimmung und Beurteilerreliabilität. Reliability of Raters; Hogrefe: Göttingen, Germany, 2002.

- Bowen, G. Document Analysis as a Qualitative Research Method. Qual. Res. J. 2009, 9, 27–40.

- Gumperz, J.; Berenz, N. Transcribing Conversational Exchanges. In Talking Data: Transcription and Coding in Discourse Research; Edwards, J.A., Lampert, M.D., Eds.; Psychology Press: Hillsdale, NJ, USA, 1993; pp. 91–121.

- Atkinson, J.M. Structures of Social Action: Studies in Conversation Analysis; Cambridge University Press: Cambridge, UK; Editions de la Maison des Sciences de l’Homme: Paris, France, 1984.

- Langford, D. Analyzing Talk: Investigating Verbal Interaction in English; Macmillan: Basingstoke, UK, 1994.

- Searle, J. The classification of illocutionary acts. Lang. Soc. 1976, 5, 1–24.

- Herrenkohl, L.; Guerra, M. Participant structures, scientific discourse, and student engagement in fourth grade. Cogn. Instr. 1998, 16, 431–473.

- Mayring, P. Qualitative Inhaltsanalyse. Qualitative Analysis of Content, 12th ed.; Beltz: Weinheim, Germany, 2015.

- Chiu, M. Effects of argumentation on group micro-creativity: Statistical discourse analyses of algebra students’ collaborative problem solving. Contemp. Educ. Psychol. 2008, 33, 382–402.

- Coleman, E.B. Using explanatory knowledge during collaborative problem solving in science. J. Learn. Sci. 1998, 7, 387–427.

- Mercer, N.; Wegerif, R.; Dawes, L. Children’s talk and the development of reasoning in the classroom. Br. Educ. Res. J. 1999, 25, 95–111.

- Resnick, L.; Michaels, S.; O’Connor, C. How (well-structured) talk builds the mind. In From Genes to Context: New Discoveries about Learning from Educational Research and Their Applications; Sternberg, R., Preiss, D., Eds.; Springer: New York, NY, USA, 2010; pp. 163–194.

- Chin, C.; Osborne, J. Supporting argumentation through students’ questions: Case studies in science classrooms. J. Learn. Sci. 2010, 19, 230–284.

- Ryu, S.; Sandoval, W. Interpersonal Influences on Collaborative Argument during Scientific Inquiry. In Proceedings of the American Educational Research Association (AERA), New York, NY, USA, 24–29 March 2008.

- Sampson, V.; Clark, D.B. A comparison of the collaborative scientific argumentation practices of two high and two low performing groups. Res. Sci. Educ. 2009, 41, 63–97.

- Van Amelsvoort, M.; Andriessen, J.; Kanselaar, G. Representational tools in computer-supported argumentation-based learning: How dyads work with constructed and inspected argumentative diagrams. J. Learn. Sci. 2007, 16, 485–522.

- Beach, R.; Friedrich, T. Response to writing. In Handbook of Writing Research; MacArthur, C., Graham, S., Fitzgerald, J., Eds.; Guilford: New York, NY, USA, 2006; pp. 222–234.

- Nelson, M.; Schunn, C. The nature of feedback: How different types of peer feedback affect writing performance. Instr. Sci. 2009, 37, 375–401.

| Discourse Code | Definition | Example |
|---|---|---|
| **Contribution to discourse by reviewer** | | |
| Criticism/negative feedback with text reference | A text passage is evaluated as needing improvement, and the passage is referenced. | “Unfortunately, here in the second paragraph you did not write whether the argument would be valid, like 100 years ago”. |
| Criticism/negative feedback without text reference | A text passage is evaluated as needing improvement, but the passage is not referenced. | “What makes the text less structured is that you do not use paragraphs really.” |
| Praise/positive feedback with text reference | A text passage is evaluated as good, and the passage is referenced. | “The reader gets a good overview, because you use a good structure, for example here in paragraph one and two.” |
| Praise/positive feedback without text reference | A text passage is evaluated as good, but the passage is not referenced. | “You give good reasons for your opinion. This makes the text professional.” |
| Precise suggestion without example | The student clearly suggests what should be changed but does not offer an example. | “You could use more connectors here.” |
| Precise suggestion with example | The student clearly suggests what should be changed and offers an example. | “It would be a good idea to use the connector ‘however’ here. This would make the structure clearer.” |
| Checking criteria of sheet without evaluation | The student states whether a criterion from the feedback sheet is met in the text but does not evaluate it. | “No sources are used. The opinion is justified.” |
| Question, demand by reviewer | The reviewer asks for more information about the writing process. | “No, where is that from? Are these your emotions?” |
| Favorable comment on mistake | Reasons are given as to why the mistake is excusable. | “But no worries, it is alright. We did not have enough time anyway.” |
| Request from reviewer to react to feedback | After giving feedback, the reviewer asks the author to respond to it. | “What you’re saying?” |
| **Contribution to discourse by author of the text being discussed** | | |
| Explanation or justification of text by author | The author explains his or her decisions in writing the text. | “Of course, I use counterarguments. Take a look...” |
| Approval of feedback | The feedback is accepted. | “Yes, you’re right. I will do that.” |
| Contradiction leads to discussion | A point is disputed and then discussed. | A1: Of course, I mention Erdogan. A2: No, where? |
| Contradiction does not lead to discussion | A point is disputed but not discussed. | “No, but go on.” |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Morawski, M.; Budke, A. How Digital and Oral Peer Feedback Improves High School Students’ Written Argumentation—A Case Study Exploring the Effectiveness of Peer Feedback in Geography. Educ. Sci. 2019, 9, 178. https://doi.org/10.3390/educsci9030178
