Exploring Chemistry Student Teachers’ Diagnostic Competence—A Qualitative Cross-Level Study

Abstract

1. Introduction

2. Theoretical Background

- Conditional knowledge: refers to teachers’ knowledge of students’ backgrounds that is relevant to chemistry teaching, including factors that affect teaching and learning [57]. It covers both the dimensions of the “Diversity Wheel” [10] and the influences and effects of heterogeneity or diversity on chemistry teaching.

- Technological knowledge: refers to the ability to select the most appropriate data collection method for the actional phase. This requires knowledge of methods and instruments, including their advantages and disadvantages. The domain also includes methods for analyzing the collected data [57].

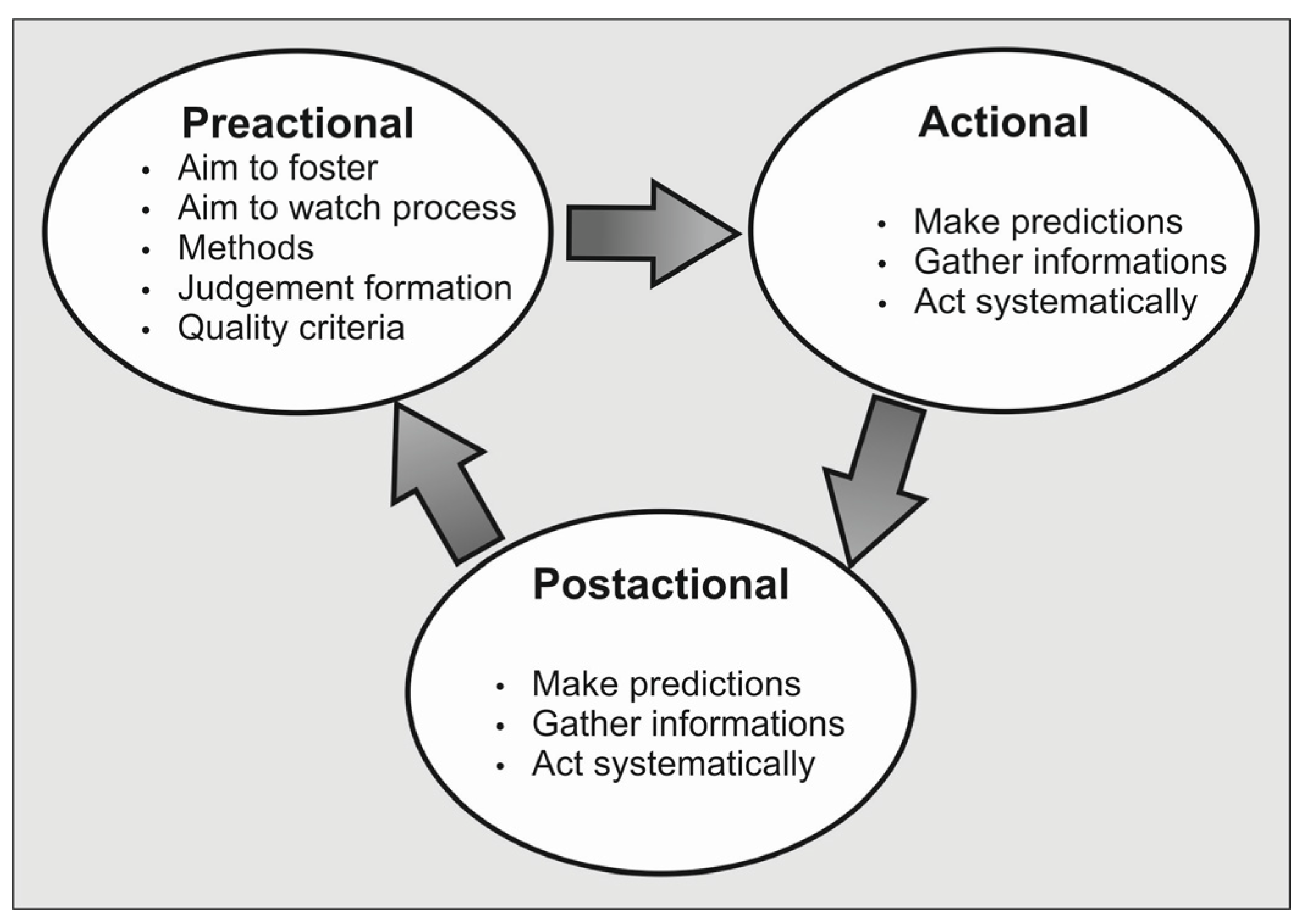

- Knowledge of change: refers to the pre-actional phase and thus to the further development of students. It comprises strategies for changing students’ resulting experience or behavior in chemistry teaching [57]. Relevant examples are knowledge of how to deal with misconceptions and knowledge of linguistically sensitive teaching [11].

- Competence knowledge: includes awareness of and attitudes towards diagnostics. In a school context, this means that teachers are able to integrate a diagnosis into their teaching and adapt their lesson plans accordingly. Jäger also described this knowledge as the ability to answer a given diagnostic question. If teachers lack these skills, they must either expand their own knowledge of the topic or seek assistance from a more competent person [57].

3. Methods

3.1. Research Question

- What level of diagnostic competence do student teachers possess at different stages of their university teacher training program in chemistry?

- How does diagnostic competence differ among student teachers in different semesters?

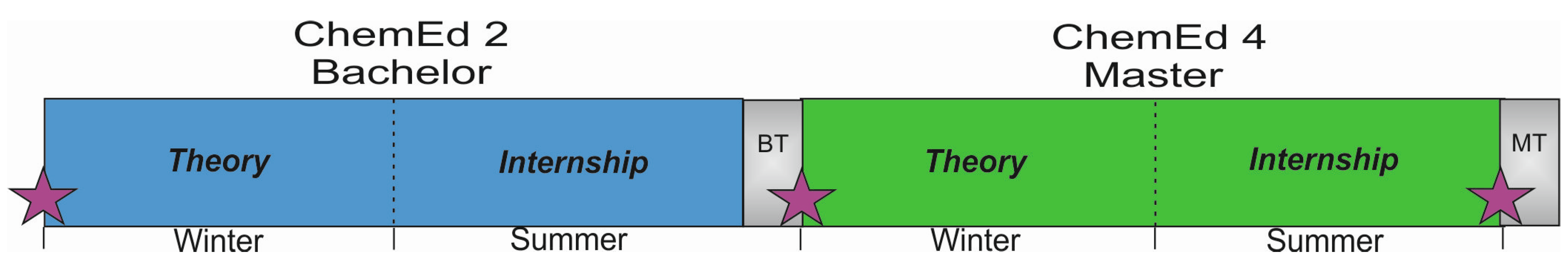

3.2. Context of the Research

3.3. Instrument and Evaluation Pattern

- How can learning group heterogeneity affect education?

- What methods would you use for diagnosis?

- What strategies would you use in the classroom to deal with heterogeneity?

- How (if at all) would you include heterogeneity in your lesson planning?

3.4. Sample

4. Results

4.1. Competence Knowledge

4.2. Conditional Knowledge

4.3. Technological Knowledge

4.4. Knowledge of Change

5. Discussion and Implications

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Ohle, A.; McElvany, N. Teachers’ diagnostic competences and their practical relevance, special issue editorial. J. Educ. Res. Online 2015, 7, 5–10.

- Klug, J.; Bruder, S.; Kelava, A.; Spiel, C.; Schmitz, B. Diagnostic competence of teachers: A process model that accounts for diagnosis learning behaviour tested by means of a case scenario. Teach. Teach. Educ. 2013, 30, 28–46.

- Vogt, F.; Rogalla, M. Developing adaptive teaching competency through coaching. Teach. Teach. Educ. 2009, 25, 1051–1060.

- Harrison, A.G.; Treagust, D.F. The Particulate Nature of Matter: Challenges in Understanding the Submicroscopic World. In Chemical Education: Towards Research-Based Practice; Gilbert, J.K., De Jong, O., Justi, R., Treagust, D.F., van Driel, J., Eds.; Kluwer: Dordrecht, The Netherlands, 2002; pp. 189–212. ISBN 1402011121.

- Taber, K. Developing Teachers as Learning Doctors. Teach. Dev. 2005, 9, 219–235.

- Brookhart, S.M. Educational assessment knowledge and skills for teachers. Educ. Meas. Issues Pract. 2011, 30, 3–12.

- Treagust, D.F. Development and use of diagnostic tests to evaluate students’ misconceptions in science. Int. J. Sci. Educ. 1988, 10, 159–169.

- Barke, H.-D.; Hazari, A.; Yitbarek, S. Misconceptions in Chemistry: Addressing Perceptions in Chemical Education; Springer: Berlin, Germany, 2009; ISBN 3540709886.

- Taber, K.S. Chemical Misconceptions—Prevention, Diagnosis and Cure: Theoretical Background; RSC: London, UK, 2002; Volume 1, ISBN 0854043861.

- Johns Hopkins University Diversity Leadership Council. Diversity Wheel. Available online: https://www.web.jhu.edu/dlc/resources/diversity_wheel/index.html (accessed on 22 August 2017).

- Tolsdorf, Y.; Markic, S. Dealing language in science classroom–Diagnosing students’ linguistic skills. In Science Education towards Inclusion; Markic, S., Abels, S., Eds.; Nova: New York, NY, USA, 2016.

- Florian, L.; Black-Hawkins, K. Exploring inclusive pedagogy. Br. Educ. Res. J. 2011, 37, 813–828.

- Morrison, J.A.; Lederman, N.G. Science Teachers’ Diagnosis and Understanding of Students’ Preconceptions. Sci. Educ. 2003, 87, 849–867.

- Loughran, J.; Mulhall, P.; Berry, A. Exploring pedagogical content knowledge in science teacher education. Int. J. Sci. Educ. 2008, 30, 1301–1320.

- Pellegrino, J.W.; Chudowsky, N.; Glaser, R. Knowing What Students Know: The Science and Design of Educational Assessment; National Academy Press: Washington, DC, USA, 2001; ISBN 0309072727.

- Ruiz-Primo, M.A.; Li, M.; Wills, K.; Giamellaro, M.; Lan, M.C.; Mason, H.; Sands, D. Developing and evaluating instructionally sensitive assessments in science. J. Res. Sci. Teach. 2012, 49, 691–712.

- Shepard, L.A. The role of classroom assessment in teaching and learning. In Handbook of Research on Teaching, 4th ed.; Richardson, V., Ed.; American Educational Research Association: Washington, DC, USA, 2001; pp. 1066–1101. ISBN 0935302263.

- Black, P. Formative and summative aspects of assessment: Theoretical and research foundations in the context of pedagogy. In Sage Handbook of Research on Classroom Assessment; McMillan, J.H., Ed.; Sage: Thousand Oaks, CA, USA, 2013; pp. 167–178. ISBN 1412995876.

- Ingenkamp, K.; Lissmann, U. Lehrbuch der Pädagogischen Diagnostik, 6th ed.; Beltz: Weinheim, Germany, 2008; ISBN 3407255039.

- Füchter, A. Pädagogische und didaktische Diagnostik—Eine schulische Entwicklungsaufgabe mit hohem Professionalitätsanspruch. In Diagnostik und Förderung, Teil I: Didaktische Grundlagen; Füchter, A., Moegling, K., Eds.; Prolog: Immenhausen, Germany, 2011; pp. 45–83. ISBN 3934575560.

- Bell, B. Classroom assessment of science learning. In Handbook of Research on Science Education; Abell, S.K., Lederman, N.G., Eds.; Lawrence Erlbaum: Mahwah, NJ, USA, 2007; pp. 965–1006. ISBN 0805847146.

- Schrader, F.-W. Diagnostische Kompetenz von Lehrpersonen. Beiträge zur Lehrerinnen- und Lehrerbildung 2013, 31, 154–165.

- Bennett, R.E. Formative assessment: A critical review. Assess. Educ. 2011, 18, 5–25.

- Nitko, A.J.; Brookhart, S.M. Educational Assessment of Students, 5th ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 2007; ISBN 0131719254.

- Shwartz, Y.; Dori, Y.J.; Treagust, D.F. How to outline objectives for chemistry education and how to assess them. In Teaching Chemistry—A Studybook; Eilks, I., Hofstein, A., Eds.; Sense: Rotterdam, The Netherlands, 2013; pp. 37–65. ISBN 9462091382.

- The Organisation for Economic Co-Operation and Development. Formative Assessment: Improving Learning in Secondary Classrooms; OECD Publishing: Paris, France, 2005; Available online: https://www.oecd.org/edu/ceri/35661078.pdf (accessed on 22 August 2017).

- Black, P.; Wiliam, D. Developing the theory of formative assessment. Educ. Assess. Eval. Account. 2009, 21, 5–31.

- Atkin, J.M.; Black, P.; Coffey, J. Classroom Assessment and the National Science Education Standards; National Academy Press: Washington, DC, USA, 2001; ISBN 030906998X.

- Hattie, J.; Yates, G. Visible Learning and the Science of How We Learn; Routledge: London, UK, 2014; ISBN 0415704995.

- Heidemeier, H. Self and Supervisor Ratings of Job-Performance: Meta-Analyses and a Process Model of Rater Convergence. Ph.D. Dissertation, Friedrich-Alexander-Universität, Erlangen-Nürnberg, Germany, 10 May 2005. Available online: https://opus4.kobv.de/opus4-fau/frontdoor/index/index/docId/143 (accessed on 22 August 2018).

- Klug, J. Modeling and Training a New Concept of Teachers’ Diagnostic Competence. Ph.D. Dissertation, Technische Universität Darmstadt, Darmstadt, Germany, 25 August 2011. Available online: http://tuprints.ulb.tu-darmstadt.de/2838/1/16.01.2012_Dissertation_Julia_Klug.pdf (accessed on 22 August 2018).

- Von Aufschnaiter, C.; Cappell, J.; Dübbelde, G.; Ennemoser, M.; Mayer, J.; Stiensmeier-Pelster, J. Diagnostische Kompetenz: Theoretische Überlegungen zu einem zentralen Konstrukt der Lehrerbildung. Z. Pädagogik 2015, 5, 738–758.

- Tan, K.-C.D.; Taber, K.S.; Goh, N.-K.; Chia, L.-S. The ionisation energy instrument: A two-tier multiple-choice instrument to determine high school students’ understanding of ionisation energy. Chem. Educ. Res. Pract. 2005, 6, 180–197.

- Peterson, R.F.; Treagust, D.F.; Garnett, P.J. Development and Application of a Diagnostic Instrument to Evaluate Grade 11 and 12 Students’ Concepts of Covalent Bonding and Structure following a Course of Instruction. J. Res. Sci. Teach. 1989, 26, 301–314.

- Tamir, P. Assessment and evaluation in science education—Opportunities to learn and outcomes. In International Handbook of Science Education; Fraser, B.J., Tobin, K.G., Eds.; Kluwer: Dordrecht, The Netherlands, 1998; pp. 762–789. ISBN 9780792335313.

- Shulman, L.S. Those Who Understand: Knowledge Growth in Teaching. Educ. Res. 1986, 15, 4–14.

- Shulman, L.S. Knowledge and Teaching: Foundations of the New Reform. Harv. Educ. Rev. 1987, 57, 1–22.

- Abell, S.K. Research on science teacher knowledge. In Handbook of Research on Science Education; Abell, S.K., Lederman, N.G., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2007; pp. 1105–1149. ISBN 0805847146.

- Abell, S.K. Twenty years later: Does pedagogical content knowledge remain a useful idea? Int. J. Sci. Educ. 2008, 30, 1405–1416.

- Park, S.; Oliver, J.S. Revisiting the Conceptualization of Pedagogical Content Knowledge (PCK): PCK as a Conceptual Tool to Understand Teachers as Professionals. Res. Sci. Educ. 2008, 38, 261–284.

- Loughran, J.; Berry, A.; Mulhall, P. Professional Learning: Understanding and Developing Science Teachers’ Pedagogical Content Knowledge; Sense: Rotterdam, The Netherlands, 2006; ISBN 9789087903657.

- Loughran, J.; Berry, A.; Mulhall, P. Understanding and Developing Science Teachers’ Pedagogical Content Knowledge, 2nd ed.; Sense: Rotterdam, The Netherlands, 2012; ISBN 9789460917882.

- Black, P.; Wiliam, D. Assessment and classroom learning. Assess. Educ. 1998, 5, 7–74.

- Feinberg, A.B.; Shapiro, E.S. Teacher Accuracy: An Examination of Teacher-Based Judgments of Students’ Reading with Differing Achievement Levels. J. Educ. Res. 2009, 102, 453–462.

- Perry, N.E.; Hutchinson, L.; Thauberger, C. Talking about teaching self-regulated learning: Scaffolding student teachers’ development and use of practices that promote self-regulated learning. Int. J. Educ. Res. 2008, 47, 97–108.

- Coladarci, T. Accuracy of teacher judgment of students’ responses to standardized test items. J. Educ. Psychol. 1986, 78, 141–146.

- Hoge, R.D.; Coladarci, T. Teacher-based judgments of academic achievement: A review of literature. Rev. Educ. Res. 1989, 59, 297–313.

- Partenio, I.; Taylor, R.L. The relationship of teacher ratings and IQ: A question of bias? Sch. Psychol. Rev. 1985, 14, 79–83.

- Demaray, M.K.; Elliott, S.N. Teachers’ judgements of students’ academic functioning: A comparison of actual and predicted performances. Sch. Psychol. Q. 1998, 13, 8–24.

- Feinberg, A.B.; Shapiro, E.S. Accuracy of teacher judgments in predicting oral reading fluency. Sch. Psychol. Q. 2003, 18, 52–65.

- Karing, C.; Matthäi, J.; Artelt, C. Genauigkeit von Lehrerurteilen über die Lesekompetenz ihrer Schülerinnen und Schüler in der Sekundarstufe I—Eine Frage der Spezifität? Z. Pädagogische Psychol. 2011, 25, 159–172.

- Südkamp, A.; Kaiser, J.; Möller, J. Accuracy of teachers’ judgements of students’ academic achievement: A Meta-analysis. J. Educ. Psychol. 2012, 104, 743–762.

- Begeny, J.C.; Eckert, T.L.; Montarello, S.A.; Storie, M.S. Teachers’ perceptions of students’ reading abilities: An examination of the relationship between teachers’ judgments and students’ performance across a continuum of rating methods. Sch. Psychol. Q. 2008, 23, 43–55.

- Bates, C.; Nettelbeck, T. Primary school teachers’ judgments of reading achievement. Educ. Psychol. 2001, 21, 177–187.

- Capizzi, A.M.; Fuchs, L. Effects of curriculum-based measurement with and without diagnostic feedback on teacher planning. Remedial Spec. Educ. 2005, 26, 159–174.

- Krauss, S.; Brunner, M.; Kunter, M.; Baumert, J.; Blum, W.; Neubrand, M.; Jordan, A. Pedagogical content knowledge and content knowledge of secondary mathematics teachers. J. Educ. Psychol. 2008, 100, 716–725.

- Jäger, R.S. Diagnostischer Prozess. In Handbuch der Psychologischen Diagnostik; Petermann, F., Eid, M., Eds.; Hogrefe: Göttingen, Germany, 2006; pp. 89–96. ISBN 9783801719111.

- Ohle, A.; McElvany, N.; Horz, H.; Ullrich, M. Text-picture integration—Teachers’ attitudes, motivation and self-related cognitions in diagnostics. J. Educ. Res. Online 2015, 7, 11–33.

- Tolsdorf, Y.; Markic, S. Development of an instrument and evaluation pattern for the analysis of chemistry student teachers’ diagnostic competence. Eur. J. Phys. Chem. Educ. 2017. accepted.

- Weisberg, H.E. The Total Survey Error Approach: A Guide to the New Science of Survey Research; The University of Chicago Press: Chicago, IL, USA, 2005; ISBN 9780226891286.

- Mayring, P. Qualitative Content Analysis: Theoretical Foundation, Basic Procedures and Software Solutions; Beltz: Klagenfurt, Germany, 2014.

- Tolsdorf, Y.; Markic, S. Exploring student teachers’ knowledge concerning diagnostics in science lessons. In Science Education Research: Engaging Learners for a Sustainable Future. Proceedings of the ESERA 2015 Conference, Helsinki, Finland, 31 August–4 September 2015; Lavonen, J., Juuti, K., Lampiselkä, J., Uitto, A., Hahl, K., Eds.; University of Helsinki: Helsinki, Finland, 2016.

- Swanborn, P.G. A common base for quality control criteria in quantitative and qualitative research. Qual. Quant. 1996, 30, 19–35.

- Corno, L.; Snow, R.E. Adapting teaching to individual differences among learners. In Third Handbook of Research on Teaching; Wittrock, M.C., Ed.; Macmillan: New York, NY, USA, 1986; pp. 605–629. ISBN 0029003105.

| Characteristic | Category | Group 1 Pre CemEd2 | Group 2 Pre CemEd4 | Group 4 Post CemEd4 |
|---|---|---|---|---|
| Number | | 43 | 37 | 28 |
| Sex | Female | 19 | 19 | 13 |
| | Male | 24 | 18 | 15 |
| Age | Under 21 | 12 | 0 | 0 |
| | 21–24 | 21 | 24 | 19 |
| | 25–29 | 6 | 11 | 8 |
| | More than 30 | 4 | 2 | 1 |
| Migration background | | 8 | 6 | 0 |
| School attended | Grammar school | 37 | 35 | 25 |
| | Comprehensive school | 5 | 1 | 3 |
| Second subject | Biology | 22 | 16 | 13 |
| | Mathematics | 13 | 12 | 8 |
| | Physics | 3 | 0 | 0 |
| | Geography | 0 | 3 | 3 |
| | Language | 2 | 3 | 2 |
| | Politics & History | 2 | 1 | 0 |
| | Music | 0 | 2 | 2 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tolsdorf, Y.; Markic, S. Exploring Chemistry Student Teachers’ Diagnostic Competence—A Qualitative Cross-Level Study. Educ. Sci. 2017, 7, 86. https://doi.org/10.3390/educsci7040086
